Abstract

The growing number of students in higher education institutions, along with students’ diverse educational backgrounds, is driving demand for more individual study support. Furthermore, online lectures increased due to the COVID-19 pandemic and are expected to continue, further accelerating the need for self-regulated learning. Individual digital study assistants (IDSA) address these challenges via ubiquitous, easy, automatic online access. This Action Design Research-based study entailed designing, developing, and evaluating an IDSA that aims to support self-regulated learning, study organization, and goal achievement for students in their early study phase with limited knowledge of higher education institutions. To this end, data from 28 qualitative expert interviews, a quantitative survey of 570 students, and a literature review were used to derive seven general IDSA requirements: functionalities, contact options, data-based responsiveness and individuality, a well-tested system, marketing strategies, data protection, and usability. The research team incorporated the identified requirements into an IDSA prototype, tested by more than 1000 students, that includes functionalities such as recommending lectures based on individual interests and competencies, matching students, and providing feedback about strengths and weaknesses in learning behaviors. The results and findings comprise a knowledge base for academics, support IDSA theory building, and illustrate IDSA design and development to guide system developers and decision-makers in higher education. This knowledge can also be transferred to other higher education institutions to support implementing IDSAs with limited adaptations. Further, this research introduces a feasible functional system to support self-organization.

Introduction and motivation

The increasingly heterogeneous nature of students resulting from the ongoing reforms being applied in the context of higher education institutions, such as the Bologna Process in Europe and the Bradley Report in Australia, has led to a growing need for personalized and individualized student counseling and support (Clarke et al., 2013; Van der Wende, 2000; Wong & Li, 2019). Furthermore, while student numbers are growing, the number of lecturers and administrators has remained almost static, making personal support and advising alone no longer feasible (Hornsby & Osman, 2014; Klammer, 2019; Marczok, 2016; Organisation for Economic Co-operation and Development [OECD], 2023). In addition, the COVID-19 pandemic has driven an increase in online lectures, intensifying the need for students to engage in self-organization and goal-oriented learning. Within the context of online-based learning, evidence suggests that low interaction rates can decrease learning effectiveness, increase the likelihood of student dropout, and decrease students’ overall satisfaction with their learning experiences (Eom et al., 2016; Hone & El Said, 2016). According to Traus et al. (2020), students often have intrinsic motivational difficulties and frequently perceive self-organization as challenging. Nevertheless, Wolters and Hussain (2015) concluded that self-regulation competencies for self-study significantly influence successful graduation in the context of higher education.

Digital assistants provide solutions to motivate individuals and organizations in dynamic conditions and requirements. They support the ability to respond to changes in higher education, assist students digitally, and provide personal advising and counseling (Abad-Segura et al., 2020). Several recent studies have demonstrated digital assistants’ ability to improve self-regulation, academic performance, and soft skills (Daradoumis et al., 2021; Lee et al., 2022; Wambsganss et al., 2021). Furthermore, digital support can counter wrong self-assessments and address student heterogeneity, while improving learning experiences (Marczok, 2016). Their ubiquitous access supports the benefit of immediate answers and suggestions anywhere and anytime, regardless of the number of requests and availability of personal advisors (Weber et al., 2021). In particular, individual digital study assistants (IDSA) support students by providing situation-specific, individualized recommendations (Karrenbauer et al., 2021; König et al., 2023). IDSAs can counteract growing student heterogeneity, a factor that is currently affecting higher education in terms of spawning more diverse individual needs and increasing the demand for timely, flexible support. In contrast to pedagogical conversational agents, an IDSA does not directly assist in various facets of learning; instead, it promotes self-organized, goal-oriented study by providing functionalities that support major- or course-specific suggestions and identify individual learning strategies matching students’ strengths and weaknesses. In light of the functionalities of IDSAs, higher education institutions can meet the challenge of rising student numbers by using IDSA technology to offer individually tailored add-ons alongside human counseling services (Karrenbauer et al., 2021; Weber et al., 2021).

In the context of higher education, changing conditions, increasing needs for individualized support, and diverse stakeholder perspectives underlie the need for a comprehensive investigation and reflection on the requirements for an IDSA. Accordingly, this research aimed to identify requirements for IDSA design and development through data collected from a student survey, expert interviews, and the literature. In turn, these requirements guided the development of an IDSA prototype as part of the current research. The target user group mainly comprised students in their early study phase at a higher education institution, representing a time when most such students would be relative newcomers with little or no experience concerning a higher education institution's organization and routines. In this context, the current research aimed to develop an IDSA that students could use regardless of background or academic performance. Following the development phase, a 3-month test phase allowed the evaluation of the IDSA based on user data. The resulting IDSA provides students with various functionalities that aim to enhance their self-regulatory abilities and provide individual recommendations. Complementing e-learning tools, the IDSA serves as an advisor to enhance study organization and networking.

This research provides prescriptive design knowledge for researchers, system developers, higher education decision-makers and stakeholders to design and develop a user-centric IDSA. Therefore, the research findings advance the knowledge base in this area. In particular, the implemented system introduces a first functionally feasible IDSA to foster self-regulated study. The IDSA differs from many educational studies in that it is not only tested and evaluated under controlled conditions but also in a real-world setting (Hobert, 2019a). As an added benefit, the system architecture and derived functionalities can be transferred to and implemented in other higher education institutions with only minor adaptations. In conducting this research, an Action Design Research (ADR; Sein et al., 2011) process that included design-oriented and empirical analyses was applied to address the following research questions (RQs):

RQ1

What requirements guide user-centric design and development of an IDSA?

RQ2

How can an IDSA be designed based on the identified requirements?

In a first step and to give an overview of current research, a review of the theoretical foundations of self-regulation, the student life cycle, and IDSA in higher education institutions is presented, followed by a description of the research design and methods used. In particular, an ADR-oriented process, which included qualitative and quantitative methodologies and a literature review, was followed to deduce theoretical and practical contributions and interact iteratively with researchers, students, lecturers, and other higher education stakeholders. Then, the identified IDSA requirements from the performed studies and the literature are described and the IDSA prototype development and its evaluation results are explained. Next, the paper highlights both specific and general implications and recommendations that emerged from the current research’s findings. To conclude, limitations, an outlook for further research, and conclusions are presented.

Theoretical background

Self-regulation theory

The understanding of self-regulated learning in this research follows Kizilcec et al. (2017), who based their work on definitions and models that Pintrich (2000) and Zimmerman (2000) had introduced earlier. These models, which are now well-established in the self-regulated learning field, provide different approaches for explaining the same process. Specifically, Pintrich’s (2000) model focuses on different types of self-regulated learning strategies. Pintrich (2000) defined self-regulated learning as “an active, constructive process in which learners set goals for their learning and then attempt to monitor, regulate, and control their cognition, intentions, and behavior, guided and constrained by their goals and the contextual features of the environment” (p. 453). In contrast, Zimmerman (2000) described self-regulated learning as “self-generated thoughts, feelings, and actions that are planned and cyclically adjusted to achieve personal goals” (p. 14).

In a later revision, Zimmerman and Moylan (2009) described a cyclical process comprising feedback loops to explain the self-regulated learning process, beginning with a personal feedback loop that incorporates information about performance. The authors divided student feedback loops into three phases: pre-thinking, performance, and reflection. Specifically, the pre-thinking phase refers to learning processes and motivational sources that precede learning efforts and guide students’ preparation and readiness to self-regulate their learning. Next is the performance phase, which occurs during learning and affects concentration and performance. The process comes full circle with a reflection phase, which depends on how often feedback is given and by whom. It is important that the person giving the feedback is recognized by the learner. Zimmerman and Moylan (2009) cited quiz grades or personal sources, such as keeping a journal, as feedback and reflection examples.

At the action level, the ability to self-regulate is manifested at different levels: micro (e.g., the reception of a particular text), meso (e.g., time management within a course), and macro (e.g., general study organization) (Sitzmann & Weinhardt, 2018). Vanslambrouk et al. (2017) grouped action-related skills under the term introspection, referring to observing individualized goal-related behavior. In this way, it can be determined whether the strategies used serve to achieve the goal in the sense of a target-actual comparison (Adam & Ryan, 2017; Heckhausen & Heckhausen, 2018; Pintrich, 2000; Schunk, 2005). Thus, self-observation can be understood as a metacognitive process linked to self-regulation that leads to engaging in working and learning activities to achieve a goal. Considering extrinsic factors and internal processes, such as intrinsic motivation and attention, is essential for goal-directed self-observation (Heckhausen & Heckhausen, 2018; Pintrich, 2000). Ryan and Deci (2000) defined intrinsic motivation as performing an activity for its inherent satisfaction rather than for some separable consequence. When intrinsically motivated, a person engages in action for fun or a challenge. In contrast, extrinsic motivation occurs when an activity is carried out to attain a separable outcome. However, in contrast to the perspective of some researchers that extrinsically motivated behavior is non-autonomous, Self-determination Theory proposes that extrinsic motivation can vary significantly in the degree to which it is autonomous (Ryan & Deci, 2000). An IDSA can offer functionalities that balance intrinsic and extrinsic factors. The Interactive-Constructive-Active–Passive (ICAP) didactic framework, which links cognitive engagement to active learning outcomes, provides a way to guide such functionalities (Chi & Wylie, 2014). In particular, the ICAP model categorizes student-observable learning activities associated with specific cognitive learning processes into four levels of quality: passive [p], active [a], constructive [c], and interactive [i]. The abbreviation ICAP should be read backward.
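The ordering of the four ICAP levels can be illustrated with a minimal sketch. The names `IcapLevel`, `EXAMPLE_ACTIVITIES`, and `acronym` are hypothetical and not part of the IDSA prototype; the example activities are illustrative assumptions rather than items taken from this study.

```python
from enum import IntEnum


class IcapLevel(IntEnum):
    """ICAP engagement levels, numbered from lowest to highest quality."""
    PASSIVE = 1
    ACTIVE = 2
    CONSTRUCTIVE = 3
    INTERACTIVE = 4


# Illustrative activities only (assumed examples, not study data).
EXAMPLE_ACTIVITIES = {
    "listening to a lecture": IcapLevel.PASSIVE,
    "highlighting a text": IcapLevel.ACTIVE,
    "writing a summary in one's own words": IcapLevel.CONSTRUCTIVE,
    "discussing a summary with a peer": IcapLevel.INTERACTIVE,
}


def acronym(levels):
    """Join the initials from the highest level down to the lowest."""
    return "".join(level.name[0] for level in sorted(levels, reverse=True))


print(acronym(IcapLevel))  # ICAP
```

Reading the levels from lowest to highest gives passive, active, constructive, interactive, which is the acronym in reverse order.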

Student life cycle

The student life cycle concept was initially introduced in response to the need to professionalize administrative and IT-supported study processes and to support the efficient management of interfaces with study organization and quality management systems (Schulmeister, 2007). This cycle also draws on organizational research models, combining stakeholder theories, strategic management theories, process-structured organizational systems, and higher education institutions’ service and customer relations functions (Sjöström et al., 2019; Smidt & Sursock, 2011). The student life cycle refers to the development of support and functionalities (see Table 1) (Kaklauskas et al., 2012; Sprenger et al., 2010). Lizzio and Wilson (2012) introduced the following phases: orientation, application for placement and matriculation, participation in courses and examinations, graduation and exmatriculation, and alumni activities. The structure and focus of a student life cycle may differ in terms of teaching (Schulmeister, 2007), quality management (Pohlenz et al., 2020), and the cost of a campus management system (Sprenger et al., 2010). Transition phases, for example, arriving at a university or moving from undergraduate to postgraduate studies, require intensive support (Bates & Hayes, 2017).

Wymbs (2016) noted that a large amount of electronic data is already collected during the enrollment phase. These data can be used to provide students with individualized support in choosing a suitable degree program, which can be achieved, for example, by matching self-assessment data with the campus management system and personal data collected at the beginning of the degree program. Because the study phases uncover essential needs and requirements, the student life cycle phases are suitable for student-centered actions, such as addressing needs at the beginning of a student’s studies, for example, questions about student financing or where to find specific locations on campus. This phase-specific focus provides an opportunity to support students individually by translating requirements for an IDSA into practical information (e.g., tests and assignments). In the higher education environment, the ongoing digital transformation has created a wide range of study programs, seminars, and lectures while also accommodating individual needs, such as study financing and psychological support, using different methodological-didactic and media designs.

As an organizational structure, a student life cycle provides a binding, market-oriented set of rules for students, teachers, and higher education administration, ensuring stability in diversity (Schulmeister, 2007). When applied dynamically, it can divide the organization of studies into specific phases by defining support, information, and services for each phase at the micro, meso, and macro levels (Gaisch & Aichinger, 2016). The current research used the student life cycle of Sprenger et al. (2010) to guide the development of support and functionalities. The study process comprised three phases (see Table 1), which included structured sub-dimensions that can be used to structure the development and implementation of IDSAs.

Individual digital study assistants in higher education institutions

Digital transformations enabled the development of various digital systems in the educational context. For example, chatbots, also known as virtual assistants or conversational agents, have become commonplace (Bouaiachi et al., 2014; Hobert, 2019b; Ranoliya et al., 2017). In an educational setting, pedagogical conversational agents are chatbots used in a learning-oriented context (Wellnhammer et al., 2020). Substantial research has emerged in this field over the last decade. Pedagogical conversational agents’ functions and purposes have been manifold and include developing argumentation skills (Wambsganss et al., 2020, 2021), idea generation (Vladova et al., 2019), math education (Cai et al., 2021), programming skills (Hobert, 2019b), information acquisition (Meyer von Wolff et al., 2020), and improving general learning (Winkler et al., 2020). According to Weber et al.’s (2021) taxonomy, pedagogical conversational agents can fulfill various roles (e.g., motivator, tutor, peer, or a combination). Furthermore, they can support different stages in the learning process, including preparation, actual learning, practice and repetition, and reflection. According to previous findings, virtual assistants can improve academic performance by engaging students in learning (Lee et al., 2022). Some of the more recent literature has also addressed the ethical aspects of such assistance (e.g., Spiekermann et al., 2022).

Knote et al. (2019) identified five archetypes of smart personal assistants: chatbots, adaptive voice (vision) assistants, embodied virtual assistants, passive pervasive assistants, and natural conversation assistants. The digital transformation has also led to the development of IDSAs. Following Karrenbauer et al. (2021), this research defines an IDSA as an efficient online student support tool that strengthens self-regulation skills, goal achievement, and study organization through suitable functionalities. In particular, an IDSA considers individual goals, interests, and the sensitization of individual competencies to provide support, recommendations, and reminders to students, for example, helping them plan and manage their studies more efficiently. Depending on its design, architecture, and functionalities, an IDSA can be categorized into one of Knote et al.’s (2019) five smart personal assistant archetypes. Unlike a pedagogical conversational agent, an IDSA does not fit the typical roles of such agents and is not directly involved in the different facets of the learning process (Weber et al., 2021). Instead, an IDSA provides individual study structuring, situation-specific guidance, and recommendations through interactive information gathering. In addition, it precedes the learning process and offers functionalities that deal with learning content on a reflective level. For example, it can support students with major and course selections and exam organization, in contrast to pedagogical conversational agents, which can then be employed to learn the content through quizzes (Ruan et al., 2019). Furthermore, an IDSA can provide individual learning strategies based on already-completed modules or self-assessments (Karrenbauer et al., 2021). Students can then use these strategies in the preparation stage of learning (Weber et al., 2021). Based on the functionalities, an IDSA can support students’ self-regulation and self-organization abilities to manage their studies individually. For its functionalities, an IDSA can access different information sources, including details that students may provide in a student–IDSA dialog, along with data retrieved automatically from campus or learning management systems, from completed and available modules, or from external resources, such as open educational resources (OER) platforms (Karrenbauer et al., 2021).

Digital assistants provide solutions to existing challenges in higher education institutions that have emerged due to increasing student heterogeneity and growing student numbers. Existing research has primarily focused on developing (personal) conversational agents to support direct learning (Cai et al., 2021; Wambsganss et al., 2020, 2021; Winkler et al., 2020). In contrast, the present research analyzed the requirements for an IDSA and implemented them in a system prototype (Hobert, 2019b) designed to provide functionalities that address the learning content on a reflective level. Accordingly, it followed Sein et al.’s (2011) ADR approach by connecting “theory with practice and thinking with doing” (p. 39). Thus, this research’s multi-perspective analysis combined the results of qualitative and quantitative analyses with scientific knowledge to conceptualize requirements for an IDSA and implemented and evaluated a prototype.

Research design and methods

Exploratory research to develop a user-centric IDSA for higher education institutions must take into consideration students’, lecturers’, and organizational leaders’ perspectives along with a diverse spectrum of models and theories. ADR lends itself to this type of design and development involving stakeholders and researchers who are working collaboratively (Moloney & Church, 2012). Sein et al. (2011) provide the necessary rigor through the structured ADR approach. An ADR process is guided by the research question(s) and creates requirements based on iterative cycles. As in Schütz et al. (2013), ADR was suitable for this endeavor because of the need to solve practical problems in a real-world setting requiring collaboration between researchers and stakeholders. Following ADR, the methodological research process included four stages, which are summarized in Table 2. Due to the iterations, some overlaps and additions occurred between the four stages.

Stage 1: Problem Formulation: The problem formulation in Sects. 1 and 2 led to the identification of a research opportunity to develop an IDSA in higher education institutions. With the IDSA, the focus is on further developing self-regulation and reflection skills in a specific task by providing appropriate cues, reminders, and recommendations. The research goal was part of a long-term project conducted by three higher education institutions with an interdisciplinary ADR team since early 2019.

Stage 2: Building, Intervention, and Evaluation: Beginning in February 2019, the researchers conducted an online survey (n = 570) and multiple expert interviews with lecturers (Lec.; n = 9) and employees from organizational units (OrgaU.; n = 19; see Table 2). The survey participants came from three German higher education institutions and the qualitative interviews were conducted with participants from one university. The questionnaire, expert profiles, and interview guides are available in Online Appendices 1 to 4. The questionnaire and interview guides were constructed based on the project goal and defined research questions. Both the survey and the interview guides were then pre-tested with target groups and experts and feedback was incorporated. The results of the qualitative and quantitative parts enabled the findings to be triangulated. For the current research, the in-between method was chosen, which accommodated a methodological mix of survey instruments (Flick, 2018). Following exploratory studies inspired by Myatt (2007), an intensive literature review in a fourth iteration was performed, which was conducted using Cooper’s (1988) taxonomy and the methods of vom Brocke et al. (2009, 2015), Webster and Watson (2002), and Watson and Webster (2020). The researchers derived IDSA design and development requirements from the qualitative, quantitative, and literature analysis results. Next, the ADR team commenced with the system and software design phase, where the developers incorporated functional and design requirements into a technical tool. Due to the ADR team’s interdisciplinary work and the involvement of many stakeholders, the requirement definitions and technical feasibility underwent continuous change. This challenging dynamic was addressed by agile software development (e.g., SCRUM; Schwaber, 1997). The IDSA prototype was launched in the local learning management systems of three German higher education institutions in a 3-month test phase to collect feedback from target users.

Stage 3: Reflection and Learning: Focusing on the requirements for an IDSA from a user-centric perspective by reflecting on the student survey was a first inductive-exploratory step. This process revealed the need to explore additional views through further interviews with stakeholders and lecturers from different organizational units, advisory offices, and faculties. After the exploratory studies provided first impressions, a thematically different, method-guided literature review was conducted to identify theory-guided requirements. The triangulation of all collected data allowed the research team to combine and reflect upon different perspectives, and the reflection was used, in turn, to derive further requirements. The chosen sequence from the inductive-explorative approach (to the theory-driven inquiry) allowed the researchers to be more open to the needs of the internal stakeholders. The results and findings were subsequently published and discussed with information system (IS) experts and higher education administration and management experts at several conferences and workshops. This latter step allowed the IDSA prototype to be developed and influenced the theoretical development through the general findings.

Stage 4: Learning Formalization: This stage entailed conducting a multi-perspective generalization of IDSA design and development recommendations based on the performed reviews, analysis, and software prototyping. Due to the simultaneous development of the prototype, there were visible discrepancies between “wish and reality” and between the stakeholders’ requirements and the theoretical findings. The results and findings were complemented with different perspectives through triangulation, and their relevance to the IDSA design and development requirements was considered.

Results and findings

IDSA requirements based on quantitative and qualitative analyses

All results and findings were merged into seven requirements with various IDSA design and development sub-items based on the quantitative student survey and qualitative expert interviews. An IDSA in higher education must address different functionalities (Requirement (R.1)), often with regard to pedagogical content. “If it does not have enough attractive functionalities, it will not be used because students are fed up” (OrgaU.12). The participants specified that they wanted functionalities to support learning organization (R.1.1), self-regulation (R.1.2), goal-setting and achievement (R.1.3), and course recommendations (R.1.4), including OER and teaching networks (R.1.5). In addition, they requested recommendations and suggestions based on their interests, competencies, and strengths (R.1.6). Concerning interest-based suggestions, one lecturer suggested, for example, “[…] if you provide the students with individualized offers, so to speak, and say, we have seen that you are interested in this and that also in your free time or in sports, we could then offer this and that course” (Lec.4). Students also wanted the IDSA to allow them to use recommendations without automatically anticipating changes (R.1.7). “It should have a supporting function, but you should not rely on it exclusively but also be encouraged to look into the content, objectives, study structure yourself” (student survey). Another necessary IDSA functionality entailed networking and exchanging experiences (R.1.8), including topics about workloads for single courses, examination experiences, higher education network building, and an exchange of general experiences. Students, lecturers, and higher education administration experts alike emphasized that an IDSA must provide support and simplification through useful functionalities to ensure its added value (R.1.9).

In addition, an IDSA must allow contact options and provide contact details (R.2), feedback opportunities (R.2.1), and technical support contacts (R.2.2) in case of problems and questions. Data-based responsiveness and individuality (R.3) were also highlighted as IDSA requirements. Many participants indicated their wish to be able to personalize the IDSA (R.3.1), including, for example, the ability to activate and deactivate individual functionalities or to have access to “detailed setting options of the different offers so that I can design my environment even more individually” (student survey). The responses also indicated that the IDSA must include knowledge of a student’s academic course (R.3.2) and provide interdepartmental information (R.3.3) to simplify study organization. Another requirement was that existing data, including the campus management system, learning management systems, and examination regulations, should be (semi-) automatically imported into the IDSA (R.3.4) to minimize the manual effort needed to enter core data and ensure low changeover costs (R.3.5). However, the timeliness of data, content, and dates was deemed crucial (R.3.6). “Keeping all the data completely up to date because every now and then, something pops up in the flourishing biotope, and you think, aha, that’s also there now. It’s also important for the students to find something up-to-date” (OrgaU.8).
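The personalization requirement (R.3.1), in which students activate and deactivate individual functionalities, can be sketched as a small settings object. This is a minimal illustration under stated assumptions, not the prototype's implementation: the class `StudentSettings` and the functionality names in `AVAILABLE` are hypothetical and only loosely mirror the functionalities listed under R.1.

```python
from dataclasses import dataclass, field

# Hypothetical functionality identifiers, loosely based on R.1.1 to R.1.8.
AVAILABLE = (
    "learning_organization",
    "self_regulation",
    "goal_setting",
    "course_recommendations",
    "networking",
)


@dataclass
class StudentSettings:
    """Per-student switches for IDSA functionalities (all on by default)."""
    enabled: set = field(default_factory=lambda: set(AVAILABLE))

    def _check(self, name):
        if name not in AVAILABLE:
            raise ValueError(f"unknown functionality: {name}")

    def deactivate(self, name):
        self._check(name)
        self.enabled.discard(name)

    def activate(self, name):
        self._check(name)
        self.enabled.add(name)

    def visible(self):
        """Return the enabled functionalities in a stable display order."""
        return [name for name in AVAILABLE if name in self.enabled]


settings = StudentSettings()
settings.deactivate("networking")
print(settings.visible())
```

Keeping the switches per student, rather than global, reflects the participants' wish to design their environment individually.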

Another requirement was that the IDSA must be exhaustively tested (R.4) before being rolled out. The participants identified the need for an intensive test phase to identify faults (R.4.1) and to prevent technical issues and user problems. “The important thing is that it works, so the technology is important. If it does not work once and a second time, the whole thing is off the table” (OrgaU.17). According to the participants, the system must also be continuously further improved. They also stipulated that any errors that should occur ought to be corrected (R.4.2) and that new updates should be made available (R.4.3). In the continuous testing and further development process, students’ feedback must be incorporated (R.4.4). “It is best to let students test the application to identify weaknesses” (student survey).

The results also highlight the importance of marketing strategies (R.5), including the pricing policy for the IDSA (R.5.1). Students stated that they would be unwilling to pay for an IDSA, and advertisements were also highlighted as a user barrier. The participants thought that high levels of awareness (R.5.2) are required to ensure widespread usage and added value, especially in the case of networking functionalities. “It must be made known that there is such a thing and that it is very much appreciated” (OrgaU.9) so that “many students would use it” (OrgaU.14). The participants also indicated a preference for target group-oriented communication and wording (R.5.3). “Write in simple language so that students understand it and that it is not too cumbersome” (OrgaU.12).

Generally, students, higher education administration experts, and lecturers recommended that an IDSA should also address data protection and security (R.6) and consider usability aspects (R.7). Specifically, the results highlight the importance of transparent data handling (R.6.1), anonymous data collection (R.6.2), and avoiding any misuse of data (R.6.3). “Before students agree to the use of their personal data, if applicable, it must be explained to them what they get in return (benefits) and why [an IDSA] then makes better recommendations” (OrgaU.12). Individualized personal data protection and security mean that an IDSA must feature detailed privacy settings (R.6.4). Furthermore, the results and findings reveal that intuitive, easy usability (R.7.1) is essential. “I think students would use that one if it were user-friendly, not that you need a manual” (OrgaU.10). Participants also mentioned that the IDSA should incorporate a modular design (R.7.2) to adjust the functionalities’ use, allowing uninteresting or irrelevant functionalities to be hidden. In addition, responses indicated that the registration procedure must be as simple and straightforward as possible (R.7.3). Lastly, optional introduction tutorials (R.7.4) were suggested to improve students’ understanding of the IDSA, its functionalities, purpose, and use. Table 3 illustrates all identified (sub-)requirements from the qualitative and quantitative studies.

IDSA requirements from theory

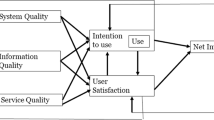

The literature review provided the theoretical framework for the requirements (Winkler & Söllner, 2020) and the basis for the IDSA prototype development. Five domains were identified: (a) user satisfaction, (b) pedagogical domain, (c) service quality, (d) perceived information quality, and (e) perceived system quality. (a) According to Zheng et al. (2013), user satisfaction is a subjectively perceived quality that varies from person to person. It is a perceived value and extends the IS success model to include net benefits (Petter & McLean, 2009). Net benefits refer to the perception-relevant variables discussed by Naveh et al. (2012) and Isaac et al. (2017), such as content completeness (R.3.6), knowledge acquisition (R.1.1–R.1.3 and R.1.5), and performance improvement (R.1.1–R.1.3). These variables were assigned to the pedagogical domain (b) based on the ICAP framework (see section on self-regulation theory). The individual criteria (e.g., passive learning, activating, constructing, interacting, and reflecting) are specifically adapted to online teaching and learning. This pedagogical framework undergirded the development of IDSA functionalities (Chi & Wylie, 2014; Winkler & Söllner, 2020). The technological domain of interest is divided into the requirements following Zheng et al. (2013): (c) Service quality depends on the service worker being empathetic, reliable, responsive, and available. These characteristics create a viable user experience (R.7) (Lee-Post, 2009; Uppal et al., 2017). (d) In the field of perceived information quality, Machado-Da-Silva et al. (2014) and Raspopovic et al. (2014) postulated that such variables as ease of use (R.7), interesting content (R.1), understandability (R.7.1), clear writing (R.5.3), relevance (R.1.9), and usefulness (R.1.9) contribute to user willingness. (e) In terms of subjectively perceived system quality, as described by Lin and Wang (2012) and Alla et al. (2013), ease of use (R.7.1) and correct navigation (R.7) also promote usability. Zheng et al. (2013) emphasized the importance of these requirements; specifically, the more motivated users are, the more they will use the IDSA.

IDSA prototype development

To ensure frequent communication in the development team and to respond to changes in the requirements, the research team performed weekly SCRUM planning and retrospectives. During these weekly meetings, which took place over a 6-month development phase, the software was continuously tested before being deployed to users (R.4). Figure 1 illustrates the overall system architecture of the IDSA prototype based on the identified requirements. The IDSA’s integration as a plugin in an existing learning management system represents a fundamental characteristic of the system architecture. This decision was intended to address several requirements: Access to the IDSA through the learning management system ensured easy access at no additional cost because the learning management system is freely available for all students (R.5.1). Additionally, it aimed to create high awareness among students, as the learning management system constituted an optimal dissemination channel (R.5.2). As the learning management system was a familiar platform for many students, this integration was anticipated to further enhance the IDSA’s user-friendliness and accessibility (R.7.1). No additional registration process was required, as students already had an account in the learning management system and could use the IDSA immediately (R.7.3). A subgroup of the ADR team ensured that the wording was appropriate and appealing to students (R.5.3).

In the prototype, linking the IDSA to a learning management system allowed data exchange. A data delivery plugin transferred learning management system data about lectures, courses, and seminars to the backend database. Furthermore, data from the students’ learning management system accounts contained information about their studies, semesters, and enrolled courses. The learning management system and IDSA data linkage addressed R.3 and associated sub-items (R.3.1–R.3.5). The backend architecture was developed with the Python-based Django framework, a commonly used full-stack web development framework. Full-stack refers to extensive functionalities, such as high-security standards (e.g., cross-site scripting protection, SQL injection protection, and HTTPS deployment; R.6.3). The core of the backend was the database. A cronjob (time-based job scheduler) performed a daily recurring database query and updated the database with learning management system data accordingly. The learning management system had a data interface accessible to the cronjob. Figure 1 displays the data delivery plugin connection to the database. The backend database was designed to interact with the frontend plugin of the IDSA through an application programming interface (API). Each IDSA user received a 32-digit user ID, guaranteeing the anonymization of personal data (R.6.2). Before using the IDSA for the first time, the students were directed to the data-sharing settings and were provided instruction on data transmission, processing, and storage, as well as data encryption (R.6.1). Generally, users could decide whether to share their data with the IDSA research team, other students, or no one. Additionally, users could choose which of their data to share, including courses, academic degrees, semesters, gender, and learning management system usage behavior. These sharing settings could be modified anytime (R.6.1 and R.6.4). 
The backend system encompassed the scripts representing the IDSA’s functionalities. Table 4 outlines the developed functionalities of the IDSA and associated descriptions. The entire IDSA prototype development culminated in a 3-month testing phase. Student feedback was incorporated into the development process through a feedback functionality (R.2.1, R.4.1, R.4.4).
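
The anonymized user ID and the sharing settings described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the prototype's actual code: it assumes the 32-digit ID is a random 32-character hexadecimal string (as produced by Python's `uuid.uuid4().hex`), and the names `SharingSettings`, `SHAREABLE_FIELDS`, `new_user_id`, and `visible_profile` are hypothetical.

```python
import uuid
from dataclasses import dataclass, field

# Data categories users could choose to share, as listed in the text.
# The key names themselves are hypothetical.
SHAREABLE_FIELDS = ("courses", "academic_degree", "semester", "gender", "lms_usage")


def new_user_id() -> str:
    """Return a random 32-character hexadecimal ID (assumed ID format)."""
    return uuid.uuid4().hex


@dataclass
class SharingSettings:
    """Hypothetical per-user privacy settings; the default shares nothing."""
    audiences: set = field(default_factory=set)  # e.g. {"research_team", "students"}
    fields: set = field(default_factory=set)     # subset of SHAREABLE_FIELDS


def visible_profile(profile: dict, settings: SharingSettings, audience: str) -> dict:
    """Return only the profile entries the user agreed to share with `audience`."""
    if audience not in settings.audiences:
        return {}
    return {
        key: value
        for key, value in profile.items()
        if key in settings.fields and key in SHAREABLE_FIELDS
    }


if __name__ == "__main__":
    profile = {"courses": ["Math I"], "semester": 2, "gender": "f"}
    settings = SharingSettings(audiences={"research_team"}, fields={"courses", "semester"})
    print(new_user_id())
    print(visible_profile(profile, settings, "research_team"))
    print(visible_profile(profile, settings, "students"))
```

Defaulting to empty sets mirrors the option to share data with no one; a user has to opt in per audience and per data category before anything becomes visible.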

IDSA prototype evaluation

After the design iteration, the implemented IDSA prototype was rolled out in three German higher education institutions to allow the research team to evaluate the requirements and IDSA prototype. The students had access to the IDSA through the local learning management system and could use and test the prototype, providing usage data for analysis. The IDSA prototype also featured a functionality that was included specifically for evaluation and presented quantitative questions on usability, added value, acceptance, and wording based on a 5-point Likert scale ranging from 1 (don’t agree) to 5 (fully agree). The scale and questions were adapted from Bruner (2012). The participants provided further feedback in open text fields. The questionnaire and usage data provided user feedback (R.2.1). System usage, testing, responding to the questionnaire, and other forms of feedback were voluntary. Out of 1,036 users, 643 allowed their data to be used for research purposes (R.5.2, R.6). Almost 50% of these participants came from one university, while 40% were from the second, and the remaining 10% were from the third university. Out of the 643 students who allowed their data to be used, 135 users had no data stored about their study degree and 482 (75%) were undergraduates. Of these, 33% were from the first university, 62% were from the second, and 5% were from the third. The difference between the actual users and available data illustrates the option given to students to opt out of any further use of their data. Many of the participating students decided not to share their data (R.6).
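
The participation figures above can be cross-checked with a few lines of arithmetic. The sketch below uses only the counts reported in the text; the helper `share` and the variable names are illustrative.

```python
def share(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


total_users = 1036      # all users of the prototype
consenting = 643        # users who allowed research use of their data
undergraduates = 482    # consenting users registered as undergraduates
no_degree_data = 135    # consenting users without stored degree information

consent_rate = share(consenting, total_users)         # share of users who consented
undergrad_rate = share(undergraduates, consenting)    # matches the reported 75%
no_degree_rate = share(no_degree_data, consenting)    # share without degree data

if __name__ == "__main__":
    print(f"Consented to research use: {consent_rate}%")
    print(f"Undergraduates among consenting users: {undergrad_rate}%")
    print(f"No degree data among consenting users: {no_degree_rate}%")
```

Roughly 62% of all users consented to research use, which quantifies the gap between actual users and available data noted above.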

The prototype allowed self-autonomy (R.1.7) by enabling each student to individualize (R.3.1) the IDSA, by allowing the choice between selecting a functionality and its recommendations or ignoring them (R.1, R.7.2). The usage data revealed that the functionalities “Organization of Learning” (R.1.1), “Interests” (R.1.4, R.1.6), “OER” (R.1.5), and “Personality” (R.1.2) were the most used in that each of these had between 509 and 555 users. Next in order of preference was the functionality “To-do” (R.1.1–R.1.3), which had 480 users. The participants used the remaining functions, “Get-Together” (R.1.1, R.1.8) and “Data Sovereignty” (R.1.7, R.6), between 310 and 387 times. However, the students did not always perceive that the functionalities added value, as indicated in the questionnaire in the functionality “Evaluation” (M = 2.79, \(\sigma\) = 1.6; R.2.1, R.4.4). Students were indecisive and could only partly imagine using the IDSA regularly (M = 3.1, \(\sigma\) = 1.6; R.1.9). With an average rating of 3.58 (\(\sigma\) = 1.71), the students indicated that the IDSA prototype’s functionalities encouraged them to think and reflect more on their individual study goals (R.1.3).

The functionality “Evaluation” included six questions that evaluated the usability and user interface (R.7) of the IDSA prototype. The students were requested to indicate, using the same 5-point Likert scale, whether they enjoyed using the IDSA or perceived the design and realization as boring. They were also asked to evaluate perceived ease and intuitive usage, design, usability, and comfort of use (Bruner, 2012). The first four questions prompted feedback from 271 students. However, because each of the last two questions received only 19 answers, they were excluded from the analysis. The average feedback rating was 3.3 in all categories. The students particularly enjoyed the usage (M = 3.75, \(\sigma\) = 1.79) and perceived using the IDSA as relatively easy and intuitive (M = 3.44, \(\sigma\) = 1.65). Students gave their lowest ratings to the design (M = 3.08, \(\sigma\) = 1.58) and its realization (M = 2.93, \(\sigma\) = 1.58), indicating that these characteristics require further improvement.
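
The overall rating of 3.3 is consistent with the unweighted mean of the four reported item means. The following sketch shows that calculation; it assumes the four reported values correspond to the four analyzed questions and that each item carries equal weight (all four were answered by 271 students). The dictionary keys are illustrative labels.

```python
from statistics import mean

# Item means as reported in the text for the four analyzed questions.
item_means = {
    "enjoyment": 3.75,
    "ease and intuitiveness": 3.44,
    "design": 3.08,
    "realization": 2.93,
}

# Unweighted average across the four items.
overall = round(mean(item_means.values()), 2)

# Item with the lowest mean rating.
lowest_item = min(item_means, key=item_means.get)

if __name__ == "__main__":
    print(f"Overall usability rating: {overall}")
    print(f"Lowest-rated item: {lowest_item}")
```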

The results align with the requirement of an instructional tutorial (R.7.4) to increase usability. Almost all students elected to receive basic (51%) or detailed information (40%) about the IDSA prototype, its functionalities, and its usage. The students could understand the text and content and rated understandability with an average of 3.52 (\(\sigma\) = 1.68; R.5.3). Forty-five students gave feedback via open text (R.2.1). For example, some students provided information about the “Organization of Learning” (R.1.1) functionality: “Tips are all mostly very helpful for subjects where you need to memorize, but for mathematical subjects, I find them less useful, unfortunately” (student feedback). Other students suggested improving the usability (R.7): “It would be perfect to be able to decide the arrangement of the functions yourself. In this way, the individually relevant functions can be arranged at the top” (student feedback). Students found the IDSA to add value (R.1.9): “The study assistant is a very useful tool for accessing sources of information that one did not know about before. A lot of useful tips are provided. Unfortunately, study daily life often offers little time to deal with the assistant extensively” (student feedback). Other students found the functionality “Data Sovereignty” (R.1.7) helpful. Lastly, 25 students identified system errors (R.4).

Discussion, implications, and recommendations

Before discussing results, barriers, and issues, the discussion will focus on answering RQ1 and RQ2. RQ1 was answered by collecting seven requirement groups with several sub-requirements based on the literature review and the qualitative and quantitative studies. Different stakeholders and the existing literature defined the requirements; specifically, they addressed technical (Zheng et al., 2013), content-related (Isaac et al., 2017; Naveh et al., 2012; Raspopovic et al., 2014), economic, and educational (Chi & Wylie, 2014) aspects. The requirements were implemented in an IDSA prototype and tested in the field with students. This procedure addressed RQ2 and outlined the development process for an IDSA prototype using multi-perspective requirements. Although the scientific literature on digital assistants at higher education institutions is limited, it has increased in recent years. Recent studies have featured the development of archetypes or taxonomies for pedagogical conversational agents (Knote et al., 2019; Weber et al., 2021) or chatbot requirements (Meyer von Wolff et al., 2020). In contrast, the current research collected requirements for a concrete manifestation of an IDSA, then tested and evaluated a prototype. Through this approach, this investigation established a link between the meta-level and practical implementations.

Previous research has also identified requirements and design principles for (pedagogical) conversational agents, including design principles for argumentative learning (Wambsganss et al., 2020), learning to program (Hobert, 2019b), or general learning (Winkler et al., 2020). Some studies provided a mock-up prototype (Wambsganss et al., 2021) or a functionally feasible one (Hobert, 2019b) that was evaluated through experiments (Wambsganss et al., 2020; Winkler et al., 2020) or in the field. However, many of them included only a limited number of participants (Hobert, 2019b). This research extends academic knowledge by identifying requirements for an IDSA and evaluating the IDSA prototype in a real-world setting with more than 1,000 participants (Hobert, 2019a). Through an ADR process, design principles can be derived in addition to practical insights, while the anatomy of IS design theory outlined by Jones and Gregor (2007) was followed to summarize and communicate the results (see Table 5). Systematically deriving requirements from multiple perspectives highlights IDSA attributes that lead to design patterns in an IDSA’s technical architecture and functionality.

The following section discusses results and findings that emerged during the ADR process, offering helpful insights for decision-makers and stakeholders in the practical implementation. According to the student survey responses, students expected a variety of functionalities when using an IDSA, in line with the existing literature (Chi & Wylie, 2014; Winkler & Söllner, 2020). Requirements, such as the functionalities summarized in Table 4, can be individually adapted to enable a transfer to create a refined IDSA. Practitioners can specifically modify the functionalities to address student groups within different life cycle phases. Therefore, the requirements form a foundation to conceptualize a multi-functional IDSA. Overall, the functionalities of the IDSA prototype were rated slightly above the satisfaction level (M = 3.0–4.0), and students only perceived limited added value from certain functionalities (M = 2.70, \(\sigma\) = 1.6). This feedback suggests the need to critically evaluate an IDSA prototype’s maturity before its deployment. Multi-functionality must not be achieved at the expense of the depth and content of individual functionalities (Hobert, 2019a). The derived requirements demonstrate that students and other higher education stakeholders ranked privacy protection and transparency highly, which is consistent with current research (Spiekermann et al., 2022). Allowing students to decide how their data is handled strengthens self-autonomy and builds trust.

Although integrating the IDSA prototype into an existing learning management system offered significant advantages, several shortcomings occurred during the ADR-guided development process. For instance, the ADR team had to incorporate management and IDSA developers and operators to ensure seamless integration in the learning management system and coordinate efforts in the development process. Furthermore, the learning management system partly predefined the frontend structure and navigation, precluding frequent change. Therefore, IDSA usability and user-friendliness are limited by the learning management system, or in the case of a campus management system integration, by the campus management system. According to the results of the prototype evaluation, the users found the visual design of the learning management system interface unappealing and tedious (M = 3.08, \(\sigma\) = 1.58). Therefore, researchers and higher education institution stakeholders must balance this trade-off. This outcome further highlights that good visibility and accessibility in a learning management system do not guarantee success. Organizational units and lecturers should also be incentivized to generate content for the IDSA (König et al., 2021). For example, they should provide student material from their subject-specific repertoire and courses, including recommendations concerning OER. The quantitative and qualitative data revealed that students and higher education institution administration experts focus on such requirements as attractive features, easy and intuitive usage, and accessible and up-to-date materials. These aspects align with previous theoretical findings (Alla et al., 2013; Isaac et al., 2017; Lin & Wang, 2012; Machado-Da-Silva et al., 2014; Zheng et al., 2013).

High standards in data protection and privacy can impede IDSA functionalities. As IDSAs partly rely on students’ curricula and course data to provide helpful recommendations, access to these data should be provided. If students do not provide the IDSA with their personal data, several functionalities (e.g., networking, course recommendations) will be unavailable. Privacy policies necessitate privacy-compliant texts that allow students to approve or reject options while, at the same time, educating them about the importance of protecting their personal data. It is critical to encourage students to reflect more deeply on their privacy-related behavior. Depending on the decision, evaluations of user data by stakeholders and IDSA developers to improve software quality are restricted or even impossible. Likewise, students’ ability to deny data-sharing for research purposes ultimately restricts further research. These trade-offs must be considered while developing an IDSA, and developers should carefully consider how to combine IDSA functionalities and data privacy protection.

The expert interviews with lecturers and organizational units revealed diverse requirements for an IDSA. This multi-perspective stakeholder analysis required prioritization by the ADR team, which was adequately communicated to the stakeholders. Further IDSA developments should consider the value of marketing an IDSA prototype or final IDSA. Although the learning management system at the three chosen higher education institutions was used to ensure the highest visibility and accessibility, only slightly more than 1,000 students out of over 60,000 used and evaluated the IDSA prototype. The data show that the usage rate is low and that this aspect must be strengthened in further IDSA developments. The fact that only 45 users provided written feedback created challenges in evaluating user behavior and indicates that students did not like the option of free-text feedback. Therefore, future research and developments should consider other feedback methods.

Limitations, further research, and conclusions

This research entailed the design, development, and evaluation of an IDSA prototype. The research’s aim was to strengthen higher education students’ self-regulation skills, improve study organization, and provide individual recommendations to support human advising. The requirements are intended as high-level guidance for higher education institution stakeholders and IDSA developers and offer the first step in developing new design theories for IDSA. Identifying and evaluating appropriate requirements for an IDSA is a dynamic process requiring concerted efforts from all stakeholders.

This research is limited by its focus on three typical higher education institutions in Germany, a narrow perspective that reduces the generalizability of the results and findings and cannot ensure completeness. Higher education institutions in different countries feature many context-specific aspects and differences. Therefore, further research should examine how cultural differences may impact the requirements, design, development, and introduction of an IDSA. Further research can also focus on more case studies of different and similar higher education institutions to increase global generalizability. Additionally, even though this research has demonstrated the IDSA’s functional feasibility (proof-of-concept), further research should establish the IDSA’s proof-of-value and proof-of-use (Nunamaker et al., 2015). For example, future studies should analyze the long-term impacts of IDSA usage in higher education, including efficiency, usefulness, and acceptance. Various adverse effects may be associated with IDSA usage and its impact on strengthening self-regulation skills, study organization, and goal achievement. Despite significant research on voice and natural language-based systems, these features were not included in the IDSA prototype because of restrictions imposed by the local learning management system. Further research can include voice and natural language-based systems, particularly to improve usability.

In conclusion, the multi-perspective research results, findings, and recommendations illustrate how to design and develop a user-centric IDSA and provide the foundation for new opportunities for further investigation of IDSA in higher education institutions.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Abad-Segura, E., González-Zamar, M.-D., Infante-Moro, J. C., & Ruipérez García, G. (2020). Sustainable management of digital transformation in higher education: Global research trends. Sustainability, 12(5), 2107. https://doi.org/10.3390/su12052107

Adams, N., Little, T. D., & Ryan, R. M. (2017). Self-determination theory. In M. Wehmeyer, K. Shogren, T. Little, & S. Lopez (Eds.), Development of self-determination through the life-course (pp. 416–436). Springer.

Alla, M. M. S. O., Faryadi, Q., & Fabil, N. B. (2013). The impact of system quality in e-learning system. Journal of Computer Science and Information Technology, 1(2), 14–23.

Bates, L., & Hayes, H. (2017). Using the student lifecycle approach to enhance employability: An example from criminology and criminal justice. Asia-Pacific Journal of Cooperative Education, 18(2) (Special Issue), 141–151.

Bouaiachi, Y., Khaldi, M., & Azmani, A. (2014). A prototype expert system for academic orientation and student major selection. International Journal of Scientific & Engineering Research, 5(11), 25–28.

Bruner, G. (2012). Marketing scales handbook. GCBII Productions.

Cai, W., Grossman, J., Lin, Z. J., Sheng, H., Wei, J.T.-Z., Williams, J. J., & Goel, S. (2021). Bandit algorithms to personalize educational chatbots. Machine Learning, 110(9), 2389–2418. https://doi.org/10.1007/s10994-021-05983-y

Chi, M. T. H., & Wylie, R. (2014). The ICAP framework: Linking cognitive engagement to active learning outcomes. Educational Psychologist, 49(4), 219–243. https://doi.org/10.1080/00461520.2014.965823

Clarke, J., Nelson, K., & Stoodley, I. (2013). The place of higher education institutions in assessing student engagement, success and retention: A maturity model to guide practice. In S. Frielick, N. Buissink-Smith, P. Wyse, J. Billot, J. Hallas, & E. Whitehead (Eds.), Research and development in higher education: The place of learning and teaching (pp. 91–101). Higher Education Research and Development Society of Australasia.

Cooper, H. M. (1988). Organizing knowledge syntheses: A taxonomy of literature reviews. Knowledge in Society, 1, 104–126.

Daradoumis, T., Marquès Puig, J. M., Arguedas, M., & Liñan, L. C. (2021). A distributed systems laboratory that helps students accomplish their assignments through self-regulation of behavior. Educational Technology Research and Development, 69, 1077–1099. https://doi.org/10.1007/s11423-021-09975-6

Eom, S. B., Wen, H. J., & Ashill, N. (2006). The determinants of students’ perceived learning outcomes and satisfaction in university online education: An empirical investigation. Decision Sciences Journal of Innovative Education, 4, 215–235. https://doi.org/10.1111/j.1540-4609.2006.00114.x

Flick, U. (2018). Triangulation and mixed method. Sage Publications.

Gaisch, M., & Aichinger, R. (2016). Pathways for the establishment of an inclusive higher education governance system: An innovative approach for diversity management. Proposal for the 38th EAIR Forum Birmingham 2016.

Heckhausen, H., & Heckhausen, J. (2018). Development of motivation. In J. Heckhausen & H. Heckhausen (Eds.), Motivation and action (pp. 679–743). Springer.

Hobert, S. (2019a). How are you, chatbot? Evaluating chatbots in educational settings—Results of a literature review. In N. Pinkwart & J. Konert (Eds.), DELFI 2019 (pp. 259–270). Gesellschaft für Informatik e.V.

Hobert, S. (2019b). Say hello to 'Coding Tutor'! Design and evaluation of a chatbot-based learning system supporting students to learn to program. In Proceedings of the international conference on information systems.

Hone, K. S., & El Said, G. R. (2016). Exploring the factors affecting MOOC retention: A survey study. Computers and Education, 98, 157–168. https://doi.org/10.1016/j.compedu.2016.03.016

Hornsby, D. J., & Osman, R. (2014). Massification in higher education: Large classes and student learning. Higher Education, 67, 711–719. https://doi.org/10.1007/s10734-014-9733-1

Isaac, O., Abdullah, Z., Ramayah, T., & Mutahar, A. M. (2017). Internet usage, user satisfaction, task-technology fit, and performance impact among public sector employees in Yemen. International Journal of Information and Learning Technology, 34(3), 210–241. https://doi.org/10.1108/IJILT-11-2016-0051

Jones, D., & Gregor, S. (2007). The anatomy of a design theory. Journal of the Association for Information Systems, 8, Article 19.

Kaklauskas, A., Daniūnas, A., Amaratunga, D., Urbonas, V., Lill, I., Gudauskas, R., D’Amato, M., Trinkūnas, V., & Jackutė, I. (2012). Life cycle process model of a market-oriented and student centered higher education. International Journal of Strategic Property Management, 16(4), 414–430. https://doi.org/10.3846/1648715X.2012.750631

Karrenbauer, C., König, C. M., & Breitner, M. H. (2021). Individual digital study assistant for higher education institutions: Status quo analysis and further research agenda. In Innovation through information systems: Volume III: A collection of latest research on management issues (pp. 108–124). Springer.

Kizilcec, R. F., Perez-Sanagustín, M., & Maldonado, J. J. (2017). Self-regulated learning strategies predict learner behavior and goal attainment in massive open online courses. Computers & Education, 104, 18–33. https://doi.org/10.1016/j.compedu.2016.10.001

Klammer, U. (2019). Diversity management und Hochschulentwicklung [Engl. Diversity management and higher education development]. In D. Kergel & B. Heidkamp (Eds.), Praxishandbuch Habitussensibilität und Diversität in der Hochschullehre (pp. 45–69). Springer.

Knote, R., Janson, A., Söllner, M., & Leimeister, J. M. (2019). Classifying smart personal assistants: An empirical cluster analysis. In Proceedings of the Hawaii international conference on system sciences.

König, C. M., Karrenbauer, C., & Breitner, M. H. (2023). Critical success factors and challenges for individual digital study assistants in higher education: A mixed methods analysis. Education and Information Technologies, 28(4), 4475–4503. https://doi.org/10.1007/s10639-022-11394-w

König, C. M., Reinken, C., Greiff, P., Karrenbauer, C., Hoppe, U. A., & Breitner, M. H. (2021). Incentives for lecturers to use OERs and participate in inter-university teaching exchange networks. In Proceedings of the Americas conference on information systems.

Lee, Y. F., Hwang, G. J., & Chen, P. Y. (2022). Impacts of an AI-based chabot on college students’ after-class review, academic performance, self-efficacy, learning attitude, and motivation. Educational Technology Research and Development, 70, 1843–1865. https://doi.org/10.1007/s11423-022-10142-8

Lee-Post, A. (2009). E-learning success model: An information systems perspective. Electronic Journal of e-Learning, 7(1), 61–70.

Lin, W. S., & Wang, C. H. (2012). Antecedences to continued intentions of adopting e-learning systems in blended learning instruction: A contingency framework based on models of information system success and task-technology fit. Computers & Education, 58(1), 88–99. https://doi.org/10.1016/j.compedu.2011.07.008

Lizzio, A., & Wilson, K. (2012). Student lifecycle, transition and orientation. Strategic Student Orientation. Griffith University.

Machado-da-Silva, F., Meirelles, F. S., Filenga, D., & Filho, M. B. (2014). Student satisfaction process in virtual learning system: Considerations based in information and service quality from Brazil’s experience. Turkish Online Journal of Distance Education, 15(3), 122–142.

Marczok, Y. M. (2016). Blended learning as a response to student heterogeneity. Managing innovation and diversity in knowledge society through turbulent time. In Proceedings of the MakeLearn and TIIM joint international conference.

Meyer von Wolff, R., Nörtemann, J., Hobert, S., & Schumann, M. (2020). Chatbots for the information acquisition at universities—A student’s view on the application area. In A. Følstad, T. Araujo, S. Papadopoulos, L.-C. Law, O.-C. Granmo, E. Luger, & P. B. Brandtzaeg (Eds.), Chatbot research and design—3rd international workshop (conversations) (pp. 231–244). Springer.

Moloney, M., & Church, L. (2012). Engaged scholarship: Action design research for new software product development. In Proceedings of the international conference on information systems.

Myatt, G. J. (2007). Making sense of data—A practical guide to exploratory data analysis and data mining. Wiley.

Naveh, G., Tubin, D., & Pliskin, N. (2012). Student satisfaction with learning management systems: A lens of critical success factors. Technology, Pedagogy and Education, 21(3), 337–350. https://doi.org/10.1080/1475939X.2012.720413

Nunamaker, J. F., Briggs, R. O., Derrick, D. C., & Schwabe, G. (2015). The last research mile: Achieving both rigor and relevance in information systems research. Journal of Management Information Systems, 32(3), 10–47. https://doi.org/10.1080/07421222.2015.1094961

OECD. (2023). Number of students (indicator). Tertiary 1995–2020. Retrieved May 11, 2023 from https://data.oecd.org/eduresource/number-of-students.htm.

Petter, S., & McLean, E. R. (2009). A meta-analytic assessment of the DeLone and McLean IS success model: An examination of IS success at the individual level. Information and Management, 46(3), 159–166. https://doi.org/10.1016/j.im.2008.12.006

Pintrich, P. R. (2000). The role of goal orientations in self regulated learning. In M. Boekaerts, P. R. Pintrich, & M. Zeidner (Eds.), Handbook of self regulation (pp. 451–502). Academic Press.

Pohlenz, P., Mitterauer, L., & Harris-Huemmert, S. (2020). Qualitätssicherung im student life cycle [Engl. Quality assurance in the student life cycle]. Waxmann.

Ranoliya, B. R., Raghuwanshi, N., & Singh, S. (2017). Chatbot for university related FAQs. In Proceedings of the international conference on advances in computing, communications and informatics.

Raspopovic, M., Jankulovic, A., Runic, J., & Lucic, V. (2014). Success factors for e-learning in a developing country: A case study of Serbia. The International Review of Research in Open and Distributed Learning, 15(3), 1–23.

Ruan, S., Jiang, L., Xu, J., Tham, B. J.-K., Qiu, Z., Zhu, Y., Murnane, E. L., Brunskill, E. & Landay, J. A. (2019). QuizBot: A dialogue-based adaptive learning system for factual knowledge. In Proceedings of the CHI conference on human factors in computing systems.

Ryan, R. M., & Deci, E. L. (2000). Self-determination theory and the facilitation of intrinsic motivation, social development, and well-being. American Psychologist, 55, 68–78. https://doi.org/10.1037/0003-066X.55.1.68

Schulmeister, R. (2007). Der „Student Lifecycle“ als Organisationsprinzip für E-Learning [Engl. The “Student Lifecycle” as an organizational principle for e-learning]. In R. Keil, M. Kerres, & R. Schulmeister (Eds.), eUniversity update Bologna (pp. 45–78). Waxmann.

Schunk, D. H. (2005). Self-regulated learning: The educational legacy of Paul R. Pintrich. Educational Psychologist, 40, 85–94. https://doi.org/10.1207/s15326985ep4002_3

Schütz, A., Widjaja, T., & Gregory, R. W. (2013). Escape from Winchester mansion—Toward a set of design principles to master complexity in IT architecture. In Proceedings of the international conference on information systems.

Schwaber, K. (1997). SCRUM development process. In J. Sutherland, C. Casanave, J. Miller, P. Patel, & G. Hollowell (Eds.), Business object design and implementation (pp. 117–134). Springer.

Sein, M., Henfridsson, O., Purao, S., Rossi, M., & Lindgren, R. (2011). Action design research. Management Information Systems Quarterly, 35(1), 37–56. https://doi.org/10.2307/23043488

Sitzmann, T., & Weinhardt, J. M. (2018). Training engagement theory: A multilevel perspective on the effectiveness of work-related training. Journal of Management, 44(2), 732–756. https://doi.org/10.1177/0149206315574596

Sjöström, J., Aghaee, N., Dahlin, M., & Agerfalk, P. (2019). Designing chatbots for higher education practice. In Proceedings of the international conference on information systems education and research.

Smidt, H., & Sursock, A. (2011). Engaging in lifelong learning: Shaping inclusive and responsive university strategies [SIRUS]. European University Association Publications.

Spiekermann, S., Krasnova, H., Hinz, O., Baumann, A., Benlian, A., Gimpel, H., Heimbach, I., Köster, A., Maedche, A., Niehaves, B., Risius, M., & Trenz, M. (2022). Values and ethics in information systems: A state-of-the-art analysis and avenues for future research. Business & Information Systems Engineering, 64, 247–264. https://doi.org/10.1007/s12599-021-00734-8

Sprenger, J., Klages, M., & Breitner, M. H. (2010). Cost-benefit analysis for the selection, migration, and operation of a campus management system. Business & Information Systems Engineering, 2, 219–231. https://doi.org/10.1007/s12599-010-0110-z

Traus, A., Höffken, K., Thomas, S., Mangold, K., & Schroer, W. (2020). Stu.diCo. Studieren digital in Zeiten von Corona [Engl. Stu.diCo. Studying digitally in times of corona]. Retrieved May 11, 2022, from https://doi.org/10.18442/150

Uppal, M. A., Ali, S., & Gulliver, S. R. (2017). Factors determining e-learning service quality. British Journal of Educational Technology, 49(3), 412–426. https://doi.org/10.1111/bjet.12552

Van der Wende, M. C. (2000). The Bologna declaration: Enhancing the transparency and competitiveness of European higher education. Journal of Studies in International Education, 4, 3–10. https://doi.org/10.1080/713669277

Vanslambrouck, S., Zhu, C., Lombaerts, K., Philipsen, B., & Tondeur, J. (2017). Students’ motivation and subjective task value of participating in online and blended learning environments. The Internet and Higher Education, 36, 33–40. https://doi.org/10.1016/j.iheduc.2017.09.002

Vladova, G., Haase, J., Rüdian, L., & Pinkwart, N. (2019). Educational chatbot with learning avatar for personalization. In Proceedings of the Americas conference on information systems.

Vom Brocke, J., Simons, A., Niehaves, B., Riemer, K., Plattfaut, R., & Cleven, A. (2009). Reconstructing the giant: On the importance of rigour in documenting the literature search process. In Proceedings of the European conference on information systems.

Vom Brocke, J., Simons, A., Riemer, K., Niehaves, B., Plattfaut, R., & Cleven, A. (2015). Standing on the shoulders of giants: Challenges and recommendations of literature search in information systems research. Communications of the Association for Information Systems, 37, 205–224.

Wambsganss, T., Söllner, M., & Leimeister, J. (2020). Design and evaluation of an adaptive dialog-based tutoring system for argumentation skills. In Proceedings of the international conference on information systems.

Wambsganss, T., Weber, F., & Söllner, M. (2021). Designing an adaptive empathy learning tool. In Proceedings of the international conference on Wirtschaftsinformatik.

Watson, R. T., & Webster, J. (2020). Analysing the past to prepare for the future: Writing a literature review a roadmap for release 2.0. Journal of Decision Systems, 29(3), 129–147.

Weber, F., Wambsganss, T., Rüttmann, D., & Söllner, M. (2021). Pedagogical agents for interactive learning: A taxonomy of conversational agents in education. In Proceedings of the international conference on information systems.

Webster, J., & Watson, R. T. (2002). Analyzing the past to prepare for the future: Writing a literature review. Management Information Systems Quarterly, 26(2), 13–23.

Wellnhammer, N., Dolata, M., Steigler, S., & Schwabe, G. (2020). Studying with the help of digital tutors: Design aspects of conversational agents that influence the learning process. In Proceedings of the Hawaii international conference on system sciences.

Winkler, R., Hobert, S., Salovaara, A., Söllner, M., & Leimeister, J. M. (2020). Sara, the lecturer: Improving learning in online education with a scaffolding-based conversational agent. In Proceedings of the CHI conference on human factors in computing systems.

Winkler, R., & Söllner, M. (2020). Towards empowering educators to create their own smart personal assistant. In Proceedings of the Hawaii international conference on system sciences.

Wolters, C. A., & Hussain, M. (2015). Investigating grit and its relations with college students’ self-regulated learning and academic achievement. Metacognition and Learning, 10, 293–311. https://doi.org/10.1007/s11409-014-9128-9

Wong, B. T. M., & Li, K. C. (2019). Using open educational resources for teaching in higher education: A review of case studies. In Proceedings of the international symposium on educational technology.

Wymbs, C. (2016). Make better use of data across the student life cycle. Enrollment Management Report, 20(9), 8.

Zheng, Y., Zhao, K., & Stylianou, A. (2013). The impacts of information quality and system quality on users’ continuance intention in information-exchange virtual communities: An empirical investigation. Decision Support Systems, 56, 513–524. https://doi.org/10.1016/j.dss.2012.11.008

Zimmerman, B. (2000). Self-efficacy: An essential motive to learning. Contemporary Educational Psychology, 25, 82–91. https://doi.org/10.1006/ceps.1999.1016

Zimmerman, B. J., & Moylan, A. R. (2009). Self-regulation: Where metacognition and motivation intersect. In D. J. Hacker, J. Dunlosky, & A. C. Graesser (Eds.), Handbook of metacognition in education (pp. 299–315). Routledge.

Funding

Open Access funding enabled and organized by Projekt DEAL. This work was supported by the German Federal Ministry of Education and Research, Bonn [Grant Number 16DHB2123]. The funding source had no involvement in the conduct of the research and/or preparation of the article.

Author information

Contributions

Conceptualization: CK; Methodology: CK, TB, CMK; Roles/writing—original draft: CK, TB, CMK; Writing—review and editing: CK, TB, CMK, MHB; Project administration: CK; Data curation: CK, CMK; Software development: TB; Funding acquisition: MHB; Supervision/conceptualization: MHB.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Karrenbauer, C., Brauner, T., König, C.M. et al. Design, development, and evaluation of an individual digital study assistant for higher education students. Education Tech Research Dev 71, 2047–2071 (2023). https://doi.org/10.1007/s11423-023-10255-8

DOI: https://doi.org/10.1007/s11423-023-10255-8