Abstract

Prior to this study, a testable model for the influence of contextual knowledge (XK) on teacher candidates’ intention to integrate technology into classroom instruction had not been established. We applied the Decomposed Theory of Planned Behavior (DTPB) to aid us in this effort. Our work (a) provided a theoretical conceptualization for factors of XK through application of the DTPB, (b) represented the synergistic effects among these factors, and (c) allowed us to explore their influences on teacher candidates’ intentions to teach with technology. To assess our model, which includes factors such as teacher candidates’ beliefs, attitudes, and efficacy, we developed an instrument, the Intention to Teach with Technology (IT2) Survey. Results from the structural equation model of the survey data indicated our model fit the data very well and readily accounted for various XK factors, the relations among these factors, and their influence on teacher candidates’ intentions to integrate technology into teaching. Given the complexity of the context in any teaching situation, its relation to and influence on technology integration, and the previously limited examination of context in research and teacher development, the results indicate the proposed model is quite plausible, accounting for 75% of the variation in intention. The study demonstrates the IT2 Survey is an effective instrument to examine factors associated with XK and their influences on technology integration. Our work extends theory about technology integration by including XK and has implications for researchers as well as practitioners who seek to advance technology integration in preparation programs.


Teaching always occurs within a learning environment, a context. The context where teaching and learning take place is already complex, and it becomes more complicated when technology is incorporated as a learning tool. Educators who teach with technology encounter widely varying contextual factors, including access to technology, attitudes about using technology, teacher training, teachers’ beliefs about the usefulness of technology, self-efficacy, and the ease with which teachers use technology. As we considered context, we wondered how we might bring together these and other disparate but related factors into a comprehensive model of context and apply that model to understand how contextual factors influence teacher candidates’ future use of technology.

The purpose of this study was to examine the influence of contextual knowledge on teacher candidates’ intention to integrate technology into classroom instruction. The role of context in shaping intention to integrate technology has been neglected in previous research (Kelly, 2010; Mishra, 2019; Rosenberg & Koehler, 2015a, 2015b). We developed and tested a model based on the Decomposed Theory of Planned Behavior in which we conceptualized contextual factors, represented their synergistic effects on one another, and explored the influences of these factors on teacher candidates’ intention to integrate technology into classroom instruction. We gathered data to assess our model using an instrument we created, the Intention to Teach with Technology (IT2) Survey. Three research questions (RQ) guided our study:

RQ1. How can we conceptualize factors of context with respect to technology integration?

RQ2. How are contextual factors related to one another?

RQ3. How do contextual factors influence intention to integrate technology into instruction?

Intention to use/integrate technology

Studies on the intention to use technology as well as research on using technology for teaching and learning have been prominent in the research literature during the past 10–12 years. Taherdoost (2018) offered a succinct summary of 14 models that have been employed to examine variables that influence the use of technology and/or intention to use technology. To illustrate the nature of this research work, we will briefly explain three models that assess the intention to use technology.

In the first, Teo, his colleagues, and other researchers have used the Technology Acceptance Model (TAM) to investigate intention to use technology (Cheng, 2019; Scherer et al., 2019; Teo, 2009, 2011, 2015; Teo et al., 2009, 2019; Teo & van Schaik, 2012). This model appealed to researchers because it included a limited number of variables, usually four to eight, and a limited number of items, fewer than 20 for simpler models. In the simplest models, the four variables were perceived ease of use, perceived usefulness, attitudes, and intention. To extend TAM, researchers have incorporated “external variables” such as computer self-efficacy, subjective norms, and facilitating conditions. TAM studies have accounted for between 27% and 60% of the variation in intention (Cheng, 2019; Scherer et al., 2019; Teo, 2009, 2011, 2015; Teo et al., 2009; Teo & Zhou, 2017).

The Theory of Planned Behavior (TPB) and variants such as the Decomposed Theory of Planned Behavior (DTPB) have served as a second model that has received considerable attention with respect to examining intention to use technology (Cheng, 2019; Sadaf & Gezer, 2020; Sadaf et al., 2012, 2016; Teo et al., 2016). In this research, investigators applied Ajzen’s (1985, n.d.) TPB work, which included variables such as behavioral beliefs, normative beliefs, control beliefs, attitudes, subjective norms, perceived behavioral control, and how they interrelate to determine intention to use or integrate technology. Generally, results have shown the models using the TPB accounted for between 45 and 65% of the variation in intention to use technology (Cheng, 2019; Teo et al., 2016). Researchers have compared the TAM and TPB models, the results of which showed the TAM and TPB models to be equivalent in terms of their abilities to account for variance in intention to use technology (Cheng, 2019; Teo & van Schaik, 2012). A more extensive explanation of the TPB and DTPB models is provided in a later section of the article because the DTPB served as the model for this study.

A third model receiving some attention with regard to studying intention to use technology has been the Unified Theory of Acceptance and Use of Technology (UTAUT; Chao, 2019; Dwivedi et al., 2019; Teo & Noyes, 2014; Venkatesh et al., 2003; Venkatesh et al., 2016). In the UTAUT, variables such as performance expectancy, effort expectancy, social influence, and facilitating conditions played central roles in predicting intention to use technology along with moderator variables such as gender, age, experience, and personal volition in technology use. By including the basic and moderator variables, this model has accounted for between 36 and 55% of the variation in intention (Chao, 2019; Dwivedi et al., 2019; Venkatesh et al., 2003). Dwivedi et al. (2019) suggested there were concerns with the UTAUT model because moderators did not seem to apply to all situations and the original UTAUT model did not include attitudes among the assessed variables. This was a substantial limitation as compared to the TAM and TPB. Although the notion of context was implicit in some of the research endeavors using these models, generally, there was no mention of context in the research work.

Contextual knowledge and its influence on using technology

Technological Pedagogical Content Knowledge (TPACK; Mishra & Koehler, 2006) has served as a theoretical framework for understanding the way technological knowledge, pedagogical knowledge, and content knowledge have been represented by those teaching with technology. The relations and interactive influences of the three knowledge bases were depicted by three overlapping circles in a Venn diagram. Because teaching never occurs in a vacuum, the TPACK diagram included a larger, dotted circle to represent the contexts where teaching and learning take place. In this way, TPACK provided a conceptual understanding of the complexities involved when teachers integrate technology into their instruction.

For researchers and practitioners alike, the TPACK diagram called attention to how content combines with technology and pedagogy within learning experiences. Yet the role of context has been overlooked, garnering much less attention than other aspects of the framework. For example, an examination of peer-reviewed journal articles that included TPACK from 2006 to 2009 revealed a complete absence of investigation of context (Kelly, 2010). Even as research progressed through 2013, a review of TPACK-relevant empirical studies showed that only 36% of 193 journal articles mentioned context (Rosenberg & Koehler, 2015a, 2015b). Moreover, even when context was incorporated, it was insufficiently theorized (Rosenberg & Koehler, 2015a). Reminding researchers that context is an essential component of the TPACK framework, Rosenberg and Koehler called for them to examine context more adequately by “focus[ing] on investigating the complexity of practice, the development of measures that include context, and aligning … educational technology research with other disciplines through greater attention to context” (2015a, p. 186).

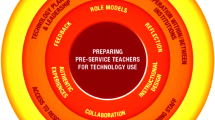

In 2019, Mishra clarified the intended role of context in the TPACK framework and, in a revised diagram, replaced contexts with contextual knowledge (XK). As Mishra noted: “Contextual knowledge becomes of critical importance to teachers, and a lack of it limits the effectiveness [of technology integration]” (2019, p. 77). The new version of the TPACK framework, shown in Fig. 1, reflects this emphasis on the importance of XK.

Fig. 1 TPACK image as of 2019. Note. This is the most recent update to the TPACK figure. From “Considering Contextual Knowledge: The TPACK Diagram Gets an Upgrade,” by Mishra (2019), Journal of Digital Learning in Teacher Education, 35(2), p. 77 (https://doi.org/10.1080/21532974.2019.1588611).

Although there has been limited work on context, several researchers have considered contextual factors in technology integration. For example, Koh et al. (2014) defined context using four distinguishable factors based on their qualitative work: physical/technological, cultural/institutional, interpersonal, and intrapersonal. Results showed that when teachers focused on cultural/institutional factors (e.g., logistics of lesson implementation), technology integration was inhibited, whereas when they focused on intrapersonal contextual factors (e.g., beliefs about teaching and students), technology integration was supported.

In other work on context, Porras-Hernández and Salinas-Amescua (2013) tried to account for the dynamics of classroom learning environments using a model that included micro, meso, and macro factors. Porras-Hernández and Salinas-Amescua (2015) extended their conceptualizations of context when they discussed the link between surrounding conditions (e.g., physical, economic, cultural) and issues of the digital divide, but their framework has not been empirically verified. To summarize, the research with respect to context and its influence on technology integration has been limited and has not offered models that are amenable to (dis)confirmation.

Developing a theoretical framework for context

Affordances

Based on the previous work on context, it was clear that context was complex and ill-defined. As a beginning point to tackle the complex construct of context, we drew upon Gibson’s (1979) concept of affordances. Gibson used affordances to mean the quality of an object that offered “an action possibility … in the environment to an individual” (McGrenere & Ho, 2000, p. 179). Gibson’s perspective on affordances necessitated (a) an actor, (b) a context that includes some affordance, and (c) an action that could be carried out by the actor in the context. For example, in the current study, a teacher candidate would be the actor and teaching settings that include technology would be the context. Using Gibson’s concept of affordances, the teacher candidate may engage in teaching actions that use technology. Researchers have used Gibson’s affordances to develop terms such as usefulness and use (McGrenere & Ho, 2000), which have substantially influenced other researchers’ work on technology and its use by humans (Taylor & Todd, 1995; Teo, 2009, 2011, 2015; Venkatesh et al., 2003).

Theory of planned behavior

Our investigation into the importance of XK when integrating technology led us to consider Ajzen’s (1985, n.d.) Theory of Planned Behavior (TPB) as a helpful paradigm. The TPB appeared to be a viable model for two reasons. First, the theory afforded an opportunity to assess the intention to integrate technology. Second, and most notably, the TPB accounted for selected contextual factors, such as teacher candidates’ behavioral beliefs, attitudes, perceptions of the usefulness of technology, and self-efficacy for integrating technology into instruction. Thus, from our initial perspective, the TPB offered a theoretical framework well situated to account for important contextual variables influencing teacher candidates’ perspectives about using technology in their classrooms.

Decomposed theory of planned behavior

As we continued to explore theoretical conceptualizations of context, we reviewed the Decomposed Theory of Planned Behavior (DTPB; Atsoglou & Jimoyiannis, 2012; Sadaf & Gezer, 2020; Sadaf et al., 2012, 2016; Taylor & Todd, 1995), a theory that extended Ajzen’s (1985, n.d.) TPB to assess factors related to technology use. In the DTPB, constructs have been focused on technology issues. For example, in their efforts, Taylor and Todd (1995) took the more general, broad categories such as behavioral beliefs from Ajzen’s TPB and deconstructed them to better consider technology-related aspects. In doing so, behavioral beliefs became three technology-focused categories: perceived usefulness of technology to their situation (PU), perceived ease of use of technology (PEU), and compatibility of technology with current values and needs (COMP).

Synthesizing a model of XK using DTPB

Prior to our work, a testable model for XK had not been established. Our thinking about XK, Ajzen’s TPB, and Taylor and Todd’s DTPB led to an amalgam of various kinds of XK and teacher candidates’ intentions to integrate technology into their instruction (see Fig. 2). Thus, we anticipated the DTPB would help us to assess a theoretically based model of context and examine the influence of context on intentions to integrate technology.

To examine the various constructs, their relations, and their influence on intention to teach with technology, we employed a structural equation model to examine our XK model, which is illustrated in Fig. 3. In the model, PU, PEU, and COMP, denoted by rectangles, serve as observed variables (i.e., parcels) composed of groups of items that comprise teacher candidates’ perspectives of XK. Together, the three parcels—PU, PEU, and COMP—constituted the latent variable of behavioral beliefs, denoted by an oval. Similarly, ATT1 and ATT2, observed parcels, constitute the latent variable of attitudes. The other portions of the figure can be understood in the same way.

In prior research employing the DTPB, investigators deconstructed control beliefs into facilitative conditions—technology (FCT), facilitative conditions—resources (FCR), and self-efficacy (SEff) (Sadaf et al., 2012, 2016; Taylor & Todd, 1995). FCT referred to the availability of technology resources, such as computers and software. By comparison, FCR represented resources such as support, time, and training. SEff signified the perceived ability to successfully employ technology. These three observed parcels, groups of survey items, constituted the latent variable control beliefs, which in the DTPB were more focused on factors associated with technology use as compared to Ajzen’s (1985, n.d.) TPB. Researchers employing the DTPB have particularized normative beliefs into smaller, more distinct referent groups, like peer influence and superior influence. These factors are reflected in our model as shown in Fig. 3.

Method

In this section, we describe how we conducted our study. We present information about the study sample, survey development, the IT2 Survey, procedure, and data analysis.

Participants

The study took place at Arizona State University, a large university in the southwestern United States. Participants were teacher candidates enrolled in certification programs. Teacher candidates who took the Intention to Teach with Technology (IT2) Survey included a diverse group of students majoring in elementary education, n = 50; early childhood education, n = 43; special education, n = 31; secondary education, n = 53; and master’s degree with certification, n = 13. Most participants were juniors and seniors, which meant that they had completed college coursework specifically related to technology integration theories and practices, understood what using technology to teach involved, and had gained experience in teaching with technology in PK-12 settings. All participants had completed at least one semester in their respective teacher education program that included a concurrent, semester-long field experience. This nonrandom sample of 190 students provided perceptions concerning the items on the survey and was sufficient to conduct the initial factor analysis and subsequent analyses. The gender distribution was representative of our student body: 84% female, 14% male, and 2% unreported. The average age of the respondents was 24.94 years (SD = 7.34), consistent with the average age of credential-seeking undergraduate students, which is 25 (National Center for Education Statistics, 2018).

Instrument

Survey development

Items for the IT2 Survey, which was based on the DTPB, were constructed to assess observed variables related to seven latent variables: behavioral, normative, and control beliefs, as well as attitudes, subjective norms, perceived behavioral control, and intention to use technology in the classroom. In a previous study (Foulger et al., 2019a), we collected teacher candidates’ perspectives on factors that influenced their technology integration abilities. As Ajzen (n.d.) noted, constructing survey items based on target audiences’ voices ensured that the IT2 Survey represented terminologies, languages, and discourses commonly used by teacher candidates. To begin the process, we gathered responses from 106 of our teacher candidates using an open-ended questionnaire.

Respondents provided responses to questions like, “When you are teaching, who will influence your integration of technology?” and “How would technology be useful in your teaching?” Individual responses were aggregated into larger, meaningful categories that represented students’ ideas and language, and reflected their perceptions about their future teaching with technology (Foulger et al., 2019a). Thus, we used Ajzen’s (n.d.) approach of gathering group norms using students’ voices, which directed the development of the items on the IT2 Survey. After the initial draft of the survey was completed, we conducted think-aloud interviews with teacher candidates to ensure all the items were clear and easy to understand. The research team made minor revisions based on suggestions offered by these participants.

The IT2 Survey

In all, 56 items were used in the final version of the IT2 Survey, grouped into 18 observed parcels of 2–6 items each. Example items include “Integrating technology into my classroom instruction will fit well with the way that I teach,” “When I am a certified classroom teacher, students will expect me to integrate technology into my classroom instruction,” and “Access to computer technology and software/apps will support my ability to integrate technology into my classroom instruction.” Consistent with Ajzen’s approach, participants responded to the items on a 7-point scale. Anchors for the scales varied and included pairs such as 7 = Extremely Easy to 1 = Extremely Difficult; 7 = Definitely True to 1 = Definitely False; 7 = Extremely Likely to 1 = Extremely Unlikely; and 7 = Strongly Agree to 1 = Strongly Disagree. The 18 parcels served as the observed variables in the exploratory and confirmatory factor analyses. For example, items 1–4 formed ATT1, attitude parcel 1, and items 5–7 formed ATT2, attitude parcel 2. The items, parcels, and latent variables to which the parcels belong are presented in Appendix 1, and the complete survey is provided in Appendix 2. A set of demographic questions is embedded at the end of the IT2 Survey.
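To make the item-to-parcel structure concrete, the sketch below computes parcel scores for one respondent. It assumes, for illustration only, that a parcel score is the mean of its items’ 1–7 ratings; the article does not state whether means or sums were used. The mapping covers only the ATT1 and ATT2 example given above (the full mapping is in Appendix 1), and the respondent’s ratings are hypothetical.

```python
from statistics import fmean

# Only the ATT1/ATT2 example from the text; the full item-to-parcel mapping is in Appendix 1.
PARCELS = {
    "ATT1": [1, 2, 3, 4],
    "ATT2": [5, 6, 7],
}


def parcel_scores(responses, parcels):
    """Average one respondent's 1-7 item ratings within each parcel (assumed scoring rule).

    responses: dict mapping item number -> rating
    parcels:   dict mapping parcel name -> list of item numbers
    """
    return {name: fmean([responses[i] for i in items]) for name, items in parcels.items()}


respondent = {1: 6, 2: 7, 3: 5, 4: 7, 5: 7, 6: 5, 7: 6}  # hypothetical ratings
print(parcel_scores(respondent, PARCELS))  # {'ATT1': 6.25, 'ATT2': 6.0}
```

Averaging rather than summing keeps each parcel on the original 1–7 metric, which makes parcel means comparable across parcels with different numbers of items.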

Procedure

First, we contacted program coordinators to identify potential courses and established a means for communicating with the instructors of these courses. We asked course instructors to distribute the URL for the survey to their students via email. Given the distribution method, we cannot determine how many students received the invitation to participate from their instructors. Based on program coordinators’ estimate of the number of students who received the request to participate, approximately 30% responded, which was typical for an online survey. Teacher candidates who completed the survey did so in 10–12 min on average.

Data analysis

In any analysis of structural equation models, two aspects of the model must be established. First, the measurement model must be confirmed, to ensure that the adequacy of the assessments of the latent constructs is demonstrated prior to assessing the relations among those constructs. Thus, the effectiveness of the parcels—for example, PU, PEU, and COMP, with respect to assessing the latent construct of behavioral beliefs—must be clearly demonstrated. Similarly, other parcels must demonstrate appropriate loadings on their respective latent variables. Second, the structural model must be confirmed. In this step, the adequacy of the relations among latent variables is assessed by examining the magnitudes of the path coefficients between latent variables. Doing so tests the plausibility of the model.

As noted above, we analyzed parcels rather than individual items, for two reasons. First, researchers using the DTPB have used parcels in their analyses (Sadaf et al., 2012, 2016; Taylor & Todd, 1995); for example, the DTPB suggests certain parcels such as PU, PEU, COMP, FCR, and FCT. Parceling of items for the other latent variables, such as attitude, subjective norms, perceived behavioral control, and intention, was random (Little et al., 2002, 2013). Thus, parcels were defined in two ways: for behavioral, normative, and control beliefs, parcels were defined by conceptual overlap based on the DTPB constructs from earlier research; for attitudes, subjective norms, perceived behavioral control, and intention, parcels were defined randomly. Second, the analysis conducted here focused on understanding the various constructs and their relations with other constructs in the model, not on the functioning of individual items, so parceling was appropriate for our situation (Little et al., 2002, 2013). Parceling also yielded a more parsimonious model for analysis (Little et al., 2013).
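Random parceling, as used here for attitudes, subjective norms, perceived behavioral control, and intention, can be sketched as shuffling a latent variable’s items and dealing them into parcels of near-equal size. The function name, the seed, and the round-robin allocation are illustrative assumptions, not the authors’ documented procedure.

```python
import random


def random_parcels(items, n_parcels, seed=None):
    """Randomly split item IDs into n_parcels parcels whose sizes differ by at most one."""
    items = list(items)
    if not 1 <= n_parcels <= len(items):
        raise ValueError("n_parcels must be between 1 and the number of items")
    rng = random.Random(seed)  # seeded generator so an allocation can be reproduced
    rng.shuffle(items)
    # Deal the shuffled items round-robin: parcel i gets positions i, i + k, i + 2k, ...
    return [sorted(items[i::n_parcels]) for i in range(n_parcels)]


# Hypothetical: seven items belonging to one latent variable, split into two parcels.
print(random_parcels(range(1, 8), n_parcels=2, seed=2024))
```

Fixing the seed documents one particular random allocation, so the parcels used in an analysis can be reconstructed later.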

Results

In this section, we describe the preliminary data analysis work, including an exploratory factor analysis. Following that, we provide the results of confirmatory factor analyses to test and modify the model.

Preliminary data analysis

To begin, we screened the data and replaced missing values. Fewer than 2% of all data points were missing; in most cases, a respondent with missing data had omitted an occasional item. Missing values were replaced with the group mean for the item.
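The item-mean replacement described above can be sketched as follows. We read “group mean for the item” as the mean of the observed responses to that item across the sample; that reading, the row-per-respondent layout, and the use of None for an omitted response are assumptions. Mean replacement is simple but shrinks item variances, which is one reason it is usually reserved for small amounts of missing data such as the < 2% reported here.

```python
from statistics import fmean


def impute_item_means(rows):
    """Replace None in each column (item) with the mean of that item's observed responses."""
    n_items = len(rows[0])
    means = []
    for j in range(n_items):
        observed = [row[j] for row in rows if row[j] is not None]
        means.append(fmean(observed))  # raises StatisticsError if an item has no responses
    return [[means[j] if v is None else v for j, v in enumerate(row)] for row in rows]


data = [  # hypothetical respondents x items; None marks an omitted item
    [7, 6, None],
    [5, None, 4],
    [6, 6, 5],
]
print(impute_item_means(data))  # [[7, 6, 4.5], [5, 6.0, 4], [6, 6, 5]]
```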

Consistent with typical structural equation modeling procedures, we conducted an exploratory factor analysis of the survey data with SPSS 24 to determine whether the data warranted further analysis (Byrne, 2010; Schumacker & Lomax, 2010). Results indicated the factors accounted for just over 75% of the variance in the observed parcels. The normative belief parcels, including peer influence, supervisor influence, and parent influence, showed modest cross-loadings on a third factor, ranging from .345 to .500. However, these parcels loaded predominantly on the first factor, knowledge of context in technology integration, with loadings ranging from .693 to .766. These outcomes were reflected in the lower loadings for these parcels in the confirmatory factor analyses. For the control beliefs parcels FCR and FCT, this sample of teacher candidates viewed the items as somewhat different from other items, so these parcels loaded on a second factor, facilitating conditions, in the exploratory factor analysis. These outcomes were also reflected in the lower parcel loadings in the confirmatory factor analyses.

We computed collinearity diagnostics for the latent variables and found no evidence of multicollinearity; all VIF statistics were below 5 (Kline, 2011). The data were approximately normally distributed, with no evidence of problematic skewness (all absolute values ≤ 1.222) or kurtosis (all absolute values ≤ 1.482); both were within established acceptable ranges (Byrne, 2010; Hair et al., 2010; Kline, 2011).
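The two screening checks can be sketched in plain Python. The VIF for variable j equals 1/(1 − R²ⱼ), where R²ⱼ comes from regressing j on the remaining variables; equivalently, the VIFs are the diagonal of the inverse of the correlation matrix, which is what this sketch computes. The skewness and excess kurtosis shown are the simple moment estimators g1 and g2; packages such as SPSS typically report bias-adjusted versions, so values from this sketch will differ slightly in small samples. All function names and the example data are hypothetical.

```python
from statistics import fmean


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def invert(m):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(m)
    a = [list(row) + [float(i == j) for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("correlation matrix is singular (perfect collinearity)")
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [v / p for v in a[col]]
        for r in range(n):
            if r != col:
                f = a[r][col]
                a[r] = [rv - f * cv for rv, cv in zip(a[r], a[col])]
    return [row[n:] for row in a]


def vifs(columns):
    """VIF for each variable: the diagonal of the inverse correlation matrix."""
    corr = [[pearson(a, b) for b in columns] for a in columns]
    inv = invert(corr)
    return [inv[i][i] for i in range(len(columns))]


def skew_kurtosis(x):
    """Moment-based skewness g1 and excess kurtosis g2 (not bias-adjusted)."""
    m, n = fmean(x), len(x)
    m2 = sum((v - m) ** 2 for v in x) / n
    m3 = sum((v - m) ** 3 for v in x) / n
    m4 = sum((v - m) ** 4 for v in x) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3.0


x = [1, 2, 3, 4, 5]
y = [2, 1, 4, 3, 5]
print(vifs([x, y]))      # both equal 1 / (1 - 0.8**2), about 2.78
print(skew_kurtosis(x))  # symmetric data: skewness 0.0, excess kurtosis about -1.3
```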

Consistent with structural equation modeling practice, three respondents were removed from further analyses because their data indicated they were outliers in this sample of teacher candidates, which resulted in a final sample of 187 (Byrne, 2010; Schumacker & Lomax, 2010).

Assessing the measurement model

We used AMOS, version 24, to conduct the confirmatory factor analyses of the covariances of the 18 observed parcels. In Table 1, for the 18 parcels, we have provided means, standard deviations, final standardized loadings, standard errors, and bias estimates of the factor loadings. The standard errors and bias estimates were based on a bootstrapping procedure. In Table 1, we also provided statistics for the latent variables, including the average variance extracted (AVE), composite reliability (CR), and Cronbach’s alpha reliability.

In general, the data in Table 1 suggested the means for respondents’ scores were at the higher ends of the scales for the parcels. Factor loadings were strong, with all but three exceeding .750 (see Table 1 and Fig. 4). Two were much lower: FCR and FCT loaded at .439 and .438, respectively. These results were consistent with those from the exploratory factor analysis and indicated teacher candidates in this sample viewed the items in these parcels differently from those in other parcels. Envisaging the availability of supportive resources and technology may have been more difficult for these teacher candidates than envisioning other contextual matters assessed on the IT2 Survey.

The standard errors for the factor loadings were reasonable for this sample of teacher candidates. We also obtained bias estimates of the factor loadings from a bootstrapping procedure in which 200 samples of n = 200 were drawn. These bias estimates indicated negligible bias in the factor loading estimates.
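A bootstrap bias estimate is the mean of a statistic across resamples minus its value in the original sample, and the bootstrap standard error is the standard deviation of the resampled values. The sketch below illustrates the idea with a Pearson correlation standing in for a factor loading; re-fitting the full confirmatory model in every resample, as SEM software does, is beyond a short example. The data are simulated, and the default of 200 resamples simply mirrors the number reported above.

```python
import random
from statistics import fmean, stdev


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def bootstrap_bias(x, y, statistic, n_boot=200, seed=0):
    """Return (bias, standard error) of statistic(x, y) over n_boot case resamples."""
    rng = random.Random(seed)
    n = len(x)
    original = statistic(x, y)
    estimates = []
    for _ in range(n_boot):
        # Resample respondents (cases) with replacement, keeping x and y paired.
        idx = [rng.randrange(n) for _ in range(n)]
        estimates.append(statistic([x[i] for i in idx], [y[i] for i in idx]))
    return fmean(estimates) - original, stdev(estimates)


# Simulated data for illustration only: 187 cases with a population correlation of .8.
rng = random.Random(42)
xs = [rng.gauss(0, 1) for _ in range(187)]
ys = [0.8 * v + 0.6 * rng.gauss(0, 1) for v in xs]
bias, se = bootstrap_bias(xs, ys, pearson)
print(f"bias = {bias:+.4f}, bootstrap SE = {se:.4f}")
```

A bias that is small relative to the bootstrap standard error is usually read as no practically meaningful bias.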

As shown in Table 1, average variance extracted (AVE) values, one means of assessing the adequacy of the measurement model of a structural equation model, were reasonable, ranging from .628 to .944, with one exception: the AVE for control beliefs, which included the FCR, FCT, and SEff parcels, was much lower (.374). Composite reliabilities (CRs) were all strong, ranging from .831 to .971, again with the exception of control beliefs (.616). For the latent variables, all Cronbach’s alpha coefficients exceeded .70, ranging from .748 to .971, which indicated strong reliability.
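The AVE and CR values in Table 1 follow standard formulas on standardized loadings λ: AVE is the mean of λ², and CR = (Σλ)² / [(Σλ)² + Σ(1 − λ²)], treating 1 − λ² as each parcel’s error variance. Cronbach’s α uses raw scores: α = k/(k − 1) · (1 − Σσ²ᵢ / σ²_total). The loadings below are hypothetical, not values from Table 1. A common rule of thumb, not stated in the article, treats AVE ≥ .50 and CR ≥ .70 as adequate.

```python
from statistics import variance


def ave(loadings):
    """Average variance extracted: the mean squared standardized loading."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    s = sum(loadings)
    error = sum(1 - lam ** 2 for lam in loadings)  # standardized error variance per parcel
    return s ** 2 / (s ** 2 + error)


def cronbach_alpha(items):
    """items: one list of scores per item, all the same length (one score per respondent)."""
    k = len(items)
    item_var = sum(variance(col) for col in items)
    totals = [sum(scores) for scores in zip(*items)]
    return k / (k - 1) * (1 - item_var / variance(totals))


hypothetical = [0.80, 0.85, 0.90]
print(f"AVE = {ave(hypothetical):.3f}, CR = {composite_reliability(hypothetical):.3f}")
# AVE = 0.724, CR = 0.887
```

Because AVE averages squared loadings, two weak parcels such as FCR and FCT pull a three-parcel AVE down sharply even when the third loading is high, while CR, which sums loadings before squaring, is less affected.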

Consistent with sound practice in reporting structural equation modeling results, we provide the correlation matrix for the 18 observed parcels in Table 2 (Schumacker & Lomax, 2010). The correlations varied widely, ranging from .143 to .943, and all were significant at the .05 level or less. Parcels tended to correlate more strongly with other parcels of the same latent variable than with parcels of other latent variables. The one clear exception involved control beliefs: the FCR and FCT parcels did not correlate highly with SEff.

Testing the structural model

Next, we tested our original model, which can be seen in Fig. 3, using AMOS. The original model was based on Taylor and Todd’s (1995) DTPB adaptation of Ajzen’s (1985, n.d.) TPB. As shown in Table 3, the data did not fit the original model very well. See Table 3 column 2 for the fit statistics on the original model. For example, the SRMR = .072, which was high, given that a more acceptable value is .05. Fit indices, such as IFI, TLI, and CFI, were below the more typical .95, which has constituted excellent fit for a model. The RMSEA = .112 was large and substantially exceeded the usual and acceptable range of values from .05 to .08.

We modified the model six times using modification indices from AMOS, adding one parameter, a correlation, at each step to arrive at our final model, which accounted for 75.2% of the variance in intention. Values for the factor loadings, path coefficients, and correlations for the final model are provided in Fig. 4, and fit statistics are provided in Table 3, column 3. The χ2(120) = 225.76, p < .001, was significant, which is typical when testing structural equation models. Nevertheless, other fit indices indicated the data fit the final model very well. Specifically, χ2/df = 1.881, which was < 2.00, indicating an excellent fit. Moreover, SRMR = .051, slightly higher than .05 but still indicating an excellent fit. Additionally, IFI, TLI, and CFI all equaled or exceeded .96, above the .95 criterion that has been used to indicate excellent fit. The RMSEA = .069 was within the acceptable range of .05 to .08, with a 90% confidence interval of .055–.083.
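The χ²/df ratio and the RMSEA point estimate can be recomputed from the reported values χ²(120) = 225.76 and the final sample of N = 187. The sketch uses the common formula RMSEA = √(max(χ² − df, 0) / (df · (N − 1))); some programs use N instead of N − 1, which here changes the estimate only in the fourth decimal place. The 90% confidence interval requires the noncentral χ² distribution and is not reproduced.

```python
import math


def chi2_df_ratio(chi2, df):
    """Normed chi-square: chi-square divided by its degrees of freedom."""
    return chi2 / df


def rmsea(chi2, df, n):
    """RMSEA point estimate, sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


chi2, df, n = 225.76, 120, 187  # reported final-model values and final sample size
print(f"chi2/df = {chi2_df_ratio(chi2, df):.3f}")  # chi2/df = 1.881
print(f"RMSEA = {rmsea(chi2, df, n):.3f}")         # RMSEA = 0.069
```

The max(·, 0) term sets RMSEA to zero whenever χ² falls below its degrees of freedom, that is, when the model fits at least as well as expected by chance.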

A description of the six correlations added to the final model is warranted. These correlations were added one at a time using the modification indices in the AMOS output. Four of the six were within-latent-variable correlations, i.e., correlations among parcels belonging to the same latent variable. For example, within the control beliefs latent variable, we added the correlation between the observed parcels FCR and FCT, which was .700. Similarly, we added three correlations among the peer, superior, and parental influence parcels, which belonged to the normative beliefs latent variable. As shown in Fig. 4, these correlations were .362, .374, and .391. Adding such within-latent-variable correlations is typical in structural equation model revisions.

Modifications involving the other two correlations were less intuitive but defensible. The correlation between PEU and SEff was reasonable because SEff, the perceived ability to engage in a course of action such as using technology during instruction, would likely be related to the PEU of technology for instruction. The correlation between student influence and behavioral beliefs was included because it had one of the largest modification indices and because behavioral beliefs included assessment of PU and COMP, which were rooted in how technology integration would benefit students’ learning. Thus, teacher candidates’ responses on the student influence parcel of normative beliefs were associated with their behavioral beliefs about the benefits that technology use in classroom teaching affords students.

Taken together, the data fit the final model very well, and the model accounted for just over 75% of the variation in intention. With the additional correlations included, the fit indices were consistent with an excellent-fitting model, as shown by comparing column 3 of Table 3 (fit indices for the final model) with column 4 or 5 (criterion levels for fit indices; Hooper et al., 2008; Hu & Bentler, 1999; Kline, 2011). Thus, for the model of contextual factors examined in this study, the IT2 Survey was effective in assessing XK factors, their connections, and their influences on teacher candidates’ intentions to integrate technology into their classroom instruction.

Discussion

The discussion consists of three parts. First, we discuss connections to the extant literature and implications for research. Second, we discuss implications for teacher preparation practice and for research on that practice. Third, we address limitations and generalizability. We close with conclusions.

Connections to the research literature and implications for research

In connecting our work to the literature, we discuss three issues: (a) the contextual turn, (b) defining XK using theoretical frameworks, and (c) attacking the “wickedness” of technology integration using XK.

Contextual turn

In the current study, we use the IT2 Survey to examine teacher candidates’ perceptions of XK, the connections among various XK factors, and how XK is related to participants’ intentions to integrate technology into their future classroom instruction. Our work responds to recent calls to strengthen research on XK (Mishra, 2019; Rosenberg & Koehler, 2015a, 2015b) and moves educational technology research toward the “contextual turn,” a shift from merely being aware of context to purposefully accounting for context as an influence on technology use in education. Rosenberg and Koehler (2015a) noted that educational technology researchers had not made the contextual turn, which hindered the exploration of context with respect to technology integration. Accordingly, we develop a model of context and use the IT2 Survey to examine context in the way other disciplines in education, such as child development, classroom ecology, and educational psychology, consider the importance of context (Rosenberg & Koehler, 2015a, 2015b).

Defining XK using theoretical frameworks

As we study context and its influence on technology integration, we draw on a theoretical perspective, the DTPB, to guide our investigation of XK. Rosenberg and Koehler (2015a) also argued that research on XK and its influence on technology use has progressed slowly because few frameworks exist to guide such efforts. The DTPB, grounded in Ajzen’s (1985, n.d.) theory of the factors that influence intention to engage in a behavior, provides a powerful framework for using the IT2 Survey to assess XK factors and their influence on teacher candidates’ intention to integrate technology into instruction. Guided by the DTPB, the IT2 Survey assesses three groups of XK factors. First, it asks about PEU, PU, and COMP to capture XK related to behavioral beliefs, measures ATT1 and ATT2 to capture XK related to attitudes, and evaluates how these factors influence intention to integrate technology into classroom instruction. Second, it assesses normative beliefs (student, peer, supervisor, and parental influences on technology use), subjective norms, and how this kind of XK affects intention. Third, it assesses XK about how FCT, FCR, and SEff affect behavioral control, XK about perceived behavioral control, and how these XK factors influence intention to integrate technology into teaching.
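The decomposition described in the preceding paragraph can be written down as a model specification. The Python sketch below assembles lavaan-style syntax (`=~` for loadings, `~` for regressions) from the parcel-to-latent mapping in Appendix 1 and the belief, determinant, and intention paths of the DTPB. It is an illustrative assumption, not the authors' AMOS setup: it omits the six correlations added during model modification and makes no claim about how scaling or exogenous covariances were handled.

```python
# Measurement model: latent variable -> parcels (names from Appendix 1).
MEASUREMENT = {
    "BB": ["PU", "PEU", "COMP"],                   # behavioral beliefs
    "NB": ["StInf", "PrInf", "SupInf", "ParInf"],  # normative beliefs
    "CB": ["FCR", "FCT", "SEff"],                  # control beliefs
    "ATT": ["ATT1", "ATT2"],                       # attitudes
    "SN": ["SN1", "SN2"],                          # subjective norms
    "PBC": ["PBC1", "PBC2"],                       # perceived behavioral control
    "INT": ["INT1", "INT2"],                       # intention
}

# Structural model: outcome -> predictors, following the DTPB paths.
STRUCTURAL = {
    "ATT": ["BB"],
    "SN": ["NB"],
    "PBC": ["CB"],
    "INT": ["ATT", "SN", "PBC"],
}


def to_lavaan_syntax(measurement, structural):
    """Render the model as lavaan-style text: '=~' for loadings, '~' for paths."""
    lines = ["# measurement model"]
    lines += [f"{lv} =~ {' + '.join(ind)}" for lv, ind in measurement.items()]
    lines.append("# structural model")
    lines += [f"{dv} ~ {' + '.join(iv)}" for dv, iv in structural.items()]
    return "\n".join(lines)


print(to_lavaan_syntax(MEASUREMENT, STRUCTURAL))
```

Keeping the mapping in plain dictionaries makes it easy to check that each belief reaches intention only through its own determinant (for example, BB to ATT to INT). Post hoc correlations could be appended as `~~` lines in the same syntax.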

Results from the analysis of the measurement model are consistent with the DTPB. Specifically, the latent variables in the model, such as behavioral beliefs, attitudes, and intention, are supported by the data from the observed variables, or parcels, and are consistent with the latent variables of the DTPB. Results from the analysis of the structural model indicate it is a viable model consistent with the DTPB. In particular, the path coefficients between the latent variables are all significant, and their magnitudes suggest the XK factors contribute to intention to integrate technology into classroom instruction.

Attacking the “wickedness” of technology integration using XK

Koehler and Mishra (2008) claimed that integrating technology into classroom instruction is a “wicked” problem because it is “ill-structured,” involving many factors, including “complex interdependencies among a large number of contextually bound variables” (pp. 10–11). In the current study, we begin to tackle this “wickedness” by using our DTPB-based model and the IT2 Survey to examine various XK factors, their relations with one another, and their influence on technology integration. XK has been largely missing from research on technology integration, and we believe this study offers substantial new insights into XK as a multifaceted construct.

Implications for research

With respect to implications for research, our DTPB-based model offers a way to examine teacher candidates’ perspectives on XK and the relation between XK and intention to integrate technology into classroom instruction. In the IT2 Survey items, we asked teacher candidates to envision themselves in their future classrooms, with items such as “Integrating technology into my classroom instruction will fit well with the way that I teach” and “When I am a certified classroom teacher, students will expect me to integrate technology into my classroom instruction.” Notably, our focus was on integrating technology into classroom instruction. Given the large-scale shift to online instruction in PK-12 settings during the COVID-19 pandemic, a productive new line of research could examine XK factors related to teaching in online settings.

Researchers studying XK could expand on the current efforts by including information about PK-12 students’ knowledge and background and about community influences on XK (Porras-Hernández & Salinas-Amescua, 2013). This work could also be extended to examine state and national influences on XK (see also Mishra, 2019), as well as how the standards and requirements of professional organizations influence XK as it relates to intention to integrate technology into classroom instruction. Closer examinations of XK could be well served by interviews that probe more deeply into the reciprocal influences among XK factors, participants’ understanding of XK, and how XK influences participants’ intentions to integrate technology into classroom instruction.

Implications for teacher preparation

In developing the IT2 Survey, we were initially driven by a shared, college-wide vision of graduating competent teachers who, upon entering the field, would be capable of and committed to leveraging the power of technology in teaching in varied PK-12 settings. Conversations in our college about this need led us to adopt a program-deep and program-wide approach to technology-related curriculum and instruction, which we call “technology infusion” (Foulger, 2020). With infusion as our guiding principle, we systematically examined the implementation of this vision (Buss et al., 2015, 2017, 2018; Foulger et al., 2012, 2015, 2019a, 2019b; Wetzel et al., 2014). We now plan to administer the IT2 Survey at strategic points across our preparation programs to help our teacher educators make informed decisions about how to improve the way their courses address XK. We believe this will also help us develop a more complete picture of our teacher candidates’ progress in learning to teach with technology. We anticipate that strategic, ongoing use of the IT2 Survey could help us identify areas for refinement in our program design, evaluate the effectiveness of coursework within a given semester, and pinpoint areas in which technology infusion might need improvement.

For other colleges of education, treating XK as an important factor in learning to teach with technology gives program leaders a new component of technology integration to consider and suggests research on curricula, instructors, and the school-based mentors of teacher candidates. First, as program leaders incorporate XK more fully into their curricula, they may wish to monitor teacher candidates’ XK growth and its relation to technology integration to determine how their curricula influence XK. Second, program leaders may explore how instruction in program courses influences teacher candidates’ XK. Third, research on the skill sets of teacher mentors and university supervisors for teaching with technology may be informative in considering how teacher candidates develop XK.

Limitations and generalizability

One limitation of our study is that all participants were volunteers. Volunteer bias in survey research can be reduced by increasing rates of volunteering (Salkind, 2010), so to increase participation we offered a lottery drawing for gift cards. An additional limitation concerns the contextual factors we examined. Although the model accounted for 75% of the variance in intention, other XK factors are undoubtedly associated with technology integration. For example, other XK factors might include teacher candidates’ content-related knowledge and background, their ability and dispositions toward using technology for learning, and elements of the PK-12 classroom environment, such as its appeal.

The findings of this study should generalize to other teacher candidates because our sample is representative of the larger population of teacher candidates. Specifically, our sample mirrors common demographics (e.g., gender, age, certification program) of juniors and seniors in other teacher preparation programs. To ensure items were valid for this group, unambiguous, and clearly written (Rea & Parker, 2014), we used teacher candidates’ own language and perceptions about technology integration, which we obtained in a prior study (Foulger et al., 2019a, 2019b). Finally, the findings have ecological validity for other preparation programs because the XK factors queried in the IT2 Survey are comprehensive in scope, theoretically based, and similar to those faced by candidates in other programs.

We anticipate that some cultural variation may exist. For instance, in other cultures, cultural norms may exert greater influence on normative beliefs and, in turn, on subjective norms and intention to integrate technology. This question is open to empirical examination, and such cross-cultural explorations would contribute important information about the effects of XK on technology integration.

Conclusions

This study extends theory about technology integration by incorporating XK as a foundational component. We can answer our three research questions in the affirmative. With respect to RQ1, we conceptualize context by drawing on the DTPB to develop a model of contextual factors that includes behavioral, normative, and control beliefs and also incorporates attitudes, subjective norms, perceived behavioral control, and intention to integrate technology. Results show the model is plausible for understanding contextual variables and their relation to the intention to integrate technology into classroom instruction. For RQ2, our DTPB-based model reveals positive, significant effects of contextual factors on other contextual components, such as the influence of (a) behavioral beliefs on attitudes, (b) normative beliefs on subjective norms, and (c) control beliefs on perceived behavioral control. With respect to RQ3, we find that attitudes, subjective norms, and perceived behavioral control all exert positive, significant influences on intentions to integrate technology.

Until now, the theoretical foundation for XK has been poorly defined (Rosenberg & Koehler, 2015b). As we show here, however, XK is an important concept in the conversation about developing teachers’ technology integration. We resituate XK within the theoretical framework of the DTPB, which is comprehensive, parsimonious, and practical for researchers and teacher educators. Further, we extend work on assessing technology integration (Chao, 2019; Cheng, 2019; Scherer et al., 2019; Teo et al., 2019) by systematically accounting for XK. Researchers and teacher educators will find value in using the IT2 Survey to examine how the development of XK influences technology integration.

References

Ajzen, I. (1985). From intentions to actions: A theory of planned behavior. In J. Kuhl & J. Beckmann (Eds.), Action control: From cognition to behavior (pp. 11–39). Springer. https://doi.org/10.1007/978-3-642-69746-3_2

Ajzen, I. (n.d.). Interactive TPB Model [Figure]. Retrieved from https://people.umass.edu/aizen/tpb.diag.html

Atsoglou, K., & Jimoyiannis, A. (2012). Teachers’ decisions to use ICT in classroom practice: An investigation based on decomposed theory of planned behavior. International Journal of Digital Literacy and Digital Competence, 3(2), 20–37. https://doi.org/10.4018/jdldc.2012040102

Buss, R. R., Wetzel, K., Foulger, T. S., & Lindsey, L. (2015). Preparing teachers to integrate technology into K-12 instruction: Comparing a stand-alone technology course with a technology-infused approach. Journal of Digital Learning in Teacher Education, 31(4), 160–172. https://doi.org/10.1080/21532974.2015.1055012

Buss, R. R., Lindsey, L., Foulger, T. S., Wetzel, K., & Pasquel, S. (2017). Assessing a technology infusion approach in a teacher preparation program. International Journal of Technology in Teaching and Learning, 13(1), 33–44. https://sicet.org/main/wp-content/uploads/2018/10/3_Ray_Buss.pdf

Buss, R., Foulger, T. S., Wetzel, K. A., & Lindsey, L. (2018). Preparing teachers to integrate technology into K-12 instruction II: Examining the effects of technology-infused methods courses and student teaching. Journal of Digital Learning in Teacher Education, 34(3), 134–150. https://doi.org/10.1080/21532974.2018.1437852

Byrne, B. M. (2010). Structural equation modeling with AMOS: Basic concepts, applications, and programming (3rd ed.). Routledge.

Chao, C.-M. (2019). Factors determining the behavioral intention to use mobile learning: An application and extension of the UTAUT model. Frontiers in Psychology, 10. https://doi.org/10.3389/fpsyg.2019.01652

Cheng, E. W. L. (2019). Choosing between the theory of planned behavior (TPB) and the technology acceptance model (TAM). Educational Technology Research and Development, 67, 21–37. https://doi.org/10.1007/s11423-018-9598-6

Council for the Accreditation of Educator Preparation. (2019). 2019 Accreditation Handbook. http://caepnet.org/~/media/Files/caep/accreditation-resources/publication-handbook-publiccomment-2019.pdf?la=en

Dwivedi, Y. K., Rana, N. P., Jeyaraj, A., Clement, M., & Williams, M. D. (2019). Re-examining the unified theory of acceptance and use of technology (UTAUT): Towards a revised theoretical model. Information Systems Frontiers, 21, 719–734. https://doi.org/10.1007/s10796-017-9774-y

Foulger, T. S. (2020). Designing technology infusion: Considerations for teacher preparation programs. In A. C. Borthwick, T. S. Foulger, & K. J. Graziano (Eds.), Championing technology infusion in teacher preparation: A framework for supporting future educators (pp. 3–28). International Society for Technology in Education.

Foulger, T. S., Buss, R. R., Wetzel, K., & Lindsey, L. (2012). Preservice teacher education: Benchmarking a stand-alone ed tech course in preparation for change. Journal of Digital Learning in Teacher Education, 29(2), 48–58. https://doi.org/10.1080/21532974.2012.10784704

Foulger, T. S., Buss, R. R., Wetzel, K., & Lindsey, L. (2015). Instructors’ growth in TPACK: Teaching technology-infused methods courses to pre-service teachers. Journal of Digital Learning in Teacher Education, 31(4), 134–147. https://doi.org/10.1080/21532974.2015.1055010

Foulger, T. S., Buss, R., Su, M., Donner, J., & Wetzel, K. (2019a). Predicting teacher candidates’ future use of technology: Developing a survey using the theory of planned behavior. In K. Graziano (Ed.), Proceedings of Society for Information Technology & Teacher Education International Conference (pp. 2177–2183). Las Vegas, NV, United States: Association for the Advancement of Computing in Education (AACE). https://www.learntechlib.org/primary/p/207991/

Foulger, T. S., Wetzel, K., & Buss, R. (2019b). Moving toward a technology infusion approach: Considerations for teacher preparation programs. Journal of Digital Learning in Teacher Education, 35(2), 79–91. https://doi.org/10.1080/21532974.2019.1568325

Gibson, J. J. (1979). The ecological approach to visual perception. Houghton Mifflin.

Hair, J. F., Jr., Black, W. C., Babin, B. J., & Anderson, R. E. (2010). Multivariate data analysis (7th ed.). Prentice Hall.

Hooper, D., Coughlan, J., & Mullen, M. R. (2008). Structural equation modelling: Guidelines for determining model fit. Electronic Journal of Business Research Methods, 6(1), 53–60.

Hu, L. T., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling, 6(1), 1–55.

Kelly, M. A. (2010). Technological pedagogical content knowledge (TPACK): A content analysis of 2006–2009 print journal articles. In D. Gibson & B. Dodge (Eds.), Proceedings of the Society for Information Technology and Teacher Education International Conference 2010 (pp. 3880–3888). Chesapeake, VA: Association for the Advancement of Computing in Education (AACE). https://learntechlib.org/primary/33985/

Kline, R. B. (2011). Principles and practice of structural equation modeling (3rd ed.). Guilford Press.

Koehler, M. J., & Mishra, P. (2008). Introducing TPCK. In AACTE Committee on Technology and Innovation (Ed.), Handbook of technological pedagogical content knowledge (TPCK) for educators (pp. 3–29). Routledge.

Koh, J. H. L., Chai, C. S., & Tay, L. Y. (2014). TPACK-in-Action: Unpacking the contextual influences of teachers' construction of technological pedagogical content knowledge (TPACK). Computers & Education, 78, 20–29. https://doi.org/10.1016/j.compedu.2014.04.022

Little, T. D., Cunningham, W. A., Shahar, G., & Widaman, K. F. (2002). To parcel or not to parcel: Exploring the question, weighing the merits. Structural Equation Modeling, 9(2), 151–173. https://doi.org/10.1207/S15328007SEM0902_1

Little, T. D., Rhemtulla, M., Gibson, K., & Schoemann, A. M. (2013). Why the items versus parcels controversy needn’t be one. Psychological Methods, 18(3), 285–300. https://doi.org/10.1037/a0033266

McGrenere, J., & Ho, W. (2000). Affordances: Clarifying and evolving a concept. In S. Fels & P. Poulin (Eds.), Proceedings of Graphics Interface 2000 (pp. 179–186). https://doi.org/10.20380/GI2000.24

Mishra, P. (2019). Considering contextual knowledge: The TPACK diagram gets an upgrade. Journal of Digital Learning in Teacher Education, 35(2), 76–78. https://doi.org/10.1080/21532974.2019.1588611

Mishra, P., & Koehler, M. J. (2006). Technological pedagogical content knowledge: A framework for teacher knowledge. Teachers College Record, 108(6), 1017–1055. http://citeseerx.ist.psu.edu/viewdoc/download? 10.1.1.523.3855&rep=rep1&type=p

National Center for Education Statistics. (2018). Average age of credential seeking undergraduates. Retrieved from https://nces.ed.gov/surveys/ctes/figures/fig_2018149-7.asp

Office of Educational Technology. (2017). Reimagining the role of technology in education: 2017 National Education Technology Plan update. http://tech.ed.gov/files/2017/01/NETP17.pdf

Porras-Hernández, L. H., & Salinas-Amescua, B. (2013). Strengthening TPACK: A broader notion of context and the use of teacher’s narratives to reveal knowledge construction. Journal of Educational Computing Research, 48(2), 223–244. https://doi.org/10.2190/EC.48.2.f

Porras-Hernández, L. H., & Salinas-Amescua, B. (2015). A reconstruction of rural teachers’ technology integration experiences: Searching for equity. In M. L. Niess & H. Gillow-Wiles (Eds.), Handbook of research on teacher education in the digital age (pp. 281–306). IGI Global.

Rea, L. M., & Parker, R. A. (2014). Designing and conducting survey research: A comprehensive guide. Wiley.

Rosenberg, J. M., & Koehler, M. J. (2015a). Context and teaching with technology in the digital age. In M. L. Niess & H. Gillow-Wiles (Eds.), Handbook of research on teacher education in the digital age (pp. 440–465). IGI Global.

Rosenberg, J. M., & Koehler, M. J. (2015b). Context and technological pedagogical content knowledge (TPACK): A systematic review. Journal of Research on Technology in Education, 47, 186–210. https://doi.org/10.1080/15391523.2015.1052663

Sadaf, A., & Gezer, T. (2020). Exploring factors that influence teachers’ intentions to integrate digital literacy using the decomposed theory of planned behavior. Journal of Digital Learning in Teacher Education, 36(2), 124–145.

Sadaf, A., Newby, T. J., & Ertmer, P. A. (2012). Exploring pre-service teachers’ beliefs about using Web 2.0 technologies in PK-12 classrooms. Computers & Education, 59(3), 937–945. https://doi.org/10.1016/j.compedu.2012.04.001

Sadaf, A., Newby, T. J., & Ertmer, P. A. (2016). An investigation of the factors that influence preservice teachers’ intentions and integration of Web 2.0 tools. Educational Technology Research and Development, 64(1), 37–64. https://doi.org/10.1007/s11423-015-9410-9

Salkind, N. J. (2010). Volunteer bias. In Encyclopedia of research design (Vol. 1, pp. 1609–1610). SAGE Publications, Inc. https://doi.org/10.4135/9781412961288.n492

Scherer, R., Siddiq, F., & Tondeur, J. (2019). The technology acceptance model (TAM): A meta-analytic structural equation modeling approach to explaining teachers’ adoption of digital technology in education. Computers & Education, 128(1), 13–35. https://doi.org/10.1016/j.compedu.2018.09.009

Schumacker, R. E., & Lomax, R. G. (2010). A beginner’s guide to structural equation modeling (3rd ed.). Routledge.

Taherdoost, H. (2018). A review of technology acceptance and adoption models and theories. Procedia Manufacturing, 22, 960–967. https://doi.org/10.1016/j.promfg.2018.03.137

Taylor, S., & Todd, P. (1995). Understanding information technology usage: A test of competing models. Information Systems Research, 6(2), 144–176. https://www.jstor.org/stable/pdf/23011007

Teo, T. (2009). Modelling technology acceptance in education: A study of preservice teachers. Computers & Education, 52(2), 302–312. https://doi.org/10.1016/j.compedu.2008.08.006

Teo, T. (2011). Factors influencing teachers’ intention to use technology: Model development and test. Computers & Education, 57(4), 2432–2440. https://doi.org/10.1016/j.compedu.2011.06.008

Teo, T. (2015). Comparing pre-service and in-service teachers’ acceptance of technology: Assessment of measurement invariance and latent mean differences. Computers & Education, 83(1), 22–31. https://doi.org/10.1016/j.compedu.2014.11.015

Teo, T., & van Schaik, P. (2012). Understanding the intention to use technology by pre-service teachers: An empirical test of competing theoretical models. International Journal of Human-Computer Interaction, 28(3), 178–188. https://doi.org/10.1080/10447318.2011.581892

Teo, T., & Noyes, J. (2014). Explaining the intention to use technology among pre-service teachers: A multi-group analysis of the Unified Theory of Acceptance and Use of Technology. Interactive Learning Environments, 22(1), 51–66. https://doi.org/10.1080/10494820.2011.641674

Teo, T., & Zhou, M. (2017). The influence of teachers’ conceptions of teaching and learning on their technology acceptance. Interactive Learning Environments, 25(4), 513–527. https://doi.org/10.1080/10494820.2016.1143844

Teo, T., Lee, C. B., Chai, C. S., & Wong, S. L. (2009). Assessing the intention to use technology among pre-service teachers in Singapore and Malaysia: A multi-group invariance analysis of the Technology Acceptance Model (TAM). Computers & Education, 53(3), 1000–1009. https://doi.org/10.1016/j.compedu.2009.05.017

Teo, T., Zhou, M., & Noyes, J. (2016). Teachers and technology: Development of an extended theory of planned behavior. Educational Technology Research and Development, 64, 1033–1052. https://doi.org/10.1007/s11423-016-9446-5

Teo, T., Sang, G., Mei, B., & Hoi, C. K. W. (2019). Investigating pre-service teachers’ acceptance of Web 2.0 technologies in their future teaching: A Chinese perspective. Interactive Learning Environments, 27(4), 530–546. https://doi.org/10.1080/10494820.2018.1489290

Venkatesh, V., Morris, M. G., Davis, G. B., & Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27(3), 425–478. http://www.jstor.org/stable/30036540

Venkatesh, V., Thong, J. Y. L., & Xu, X. (2016). Unified theory of acceptance and use of technology: A synthesis and the road ahead. Journal of the Association for Information Systems, 17(5), 328–376.

Wetzel, K., Buss, R. R., Foulger, T. S., & Lindsey, L. (2014). Infusing educational technology in teaching methods courses: Successes and dilemmas. Journal of Digital Learning in Teacher Education, 30(3), 89–103. https://doi.org/10.1080/21532974.2014.891877

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

We conducted this study in accordance with the ethical standards of the Arizona State University Institutional Review Board.

Informed consent

We obtained informed consent from all individual participants involved in the study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1

The Intention to Teach with Technology (IT2) Survey: administration and analysis guide

Use of the IT2 survey by others

Feel free to use the IT2 Survey for your research and/or program assessment needs. Please consider providing Dr. Teresa Foulger at Teresa.Foulger@asu.edu with a description of your usage (research questions, program assessment focus, population, etc.), and the circumstances of your research or program assessment. Our goal is to maintain an open archive of how the survey is used, including any translations of the survey that emerge.

Administration

The Intention to Teach with Technology (IT2) Survey may be administered using online or paper-and-pencil formats. In our administration, we employed an online format.

Data analysis

For data analysis, we used the following observed items and parcels, which were associated with the latent variables as noted.

| Questions | Observed parcel | Latent variable |
|---|---|---|
| 1–4 | ATT1 (Attitude 1) | Attitudes (ATT) |
| 5–7 | ATT2 (Attitude 2) | Attitudes (ATT) |
| 8–13 | PU (Perceived usefulness) | Behavioral beliefs (BB) |
| 14–16 | PEU (Perceived ease of use) | Behavioral beliefs (BB) |
| 17–19 | COMP (Compatibility) | Behavioral beliefs (BB) |
| 20–22 | StInf (Student influences) | Normative beliefs (NB) |
| 23–25 | PrInf (Peer [Teacher] influences) | Normative beliefs (NB) |
| 26–28 | SupInf (Supervisor influences) | Normative beliefs (NB) |
| 29–31 | ParInf (Parent influences) | Normative beliefs (NB) |
| 32–34 | FCR (Facilitating conditions resources) | Control beliefs (CB) |
| 35–37 | FCT (Facilitating conditions technology) | Control beliefs (CB) |
| 38–40 | SEff (Self-efficacy) | Control beliefs (CB) |
| 41–42 | SN1 (Subjective norms 1) | Subjective norms (SN) |
| 43–44 | SN2 (Subjective norms 2) | Subjective norms (SN) |
| 45–48 | PBC1 (Perceived behavioral control 1) | Perceived behavioral control (PBC) |
| 49–52 | PBC2 (Perceived behavioral control 2) | Perceived behavioral control (PBC) |
| 53–54 | INT1 (Intention 1) | Intention (INT) |
| 55–56 | INT2 (Intention 2) | Intention (INT) |
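A minimal Python sketch of turning one respondent's item responses into the 18 parcel scores listed in the table. It assumes each parcel score is the mean of its items and that responses are already numeric. The rating scale and any reverse-coding of negatively worded items (for example, Q16) are not specified in this guide, so the hypothetical `parcel_scores` function applies none.

```python
from statistics import mean

# Inclusive question ranges for each parcel, copied from the table above.
PARCEL_ITEMS = {
    "ATT1": (1, 4), "ATT2": (5, 7),
    "PU": (8, 13), "PEU": (14, 16), "COMP": (17, 19),
    "StInf": (20, 22), "PrInf": (23, 25), "SupInf": (26, 28), "ParInf": (29, 31),
    "FCR": (32, 34), "FCT": (35, 37), "SEff": (38, 40),
    "SN1": (41, 42), "SN2": (43, 44),
    "PBC1": (45, 48), "PBC2": (49, 52),
    "INT1": (53, 54), "INT2": (55, 56),
}


def parcel_scores(responses):
    """Average one respondent's numeric answers within each parcel.

    `responses` maps question number (1-56) to a rating. No reverse-coding
    is applied. A missing item raises KeyError rather than being skipped.
    """
    return {
        parcel: mean(responses[q] for q in range(first, last + 1))
        for parcel, (first, last) in PARCEL_ITEMS.items()
    }


# Hypothetical respondent whose rating equals the question number,
# used only to show which items feed each parcel.
example = parcel_scores({q: q for q in range(1, 57)})
print(example["PU"])  # mean of items 8-13 -> 10.5
```

Raising an error on a missing item, rather than averaging whatever is present, keeps incomplete records visible; how missing data should be handled is a separate analytic decision.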

Appendix 2

The Intention to Teach with Technology (IT2) Survey: survey questions

Technology is a broad concept that can mean a lot of different things. For the purpose of this survey, technology is referring to digital technology/technologies. That is, the digital tools we use such as computers, laptops, smart phones, iPads, projection systems, interactive whiteboards, applications (apps), etc.

Q1–7. (ATT) For me, integrating technology into classroom instruction is …

Q8–10. (BB-PU) Integrating technology into my classroom instruction will …

Q11–13. (BB-PU) Integrating technology into my classroom instruction will …

Q14. (BB-PEU) It will be easy for me to integrate technology into my classroom instruction.

Q15. (BB-PEU) The extra time it takes to integrate technology into my classroom instruction is worthwhile.

Q16. (BB-PEU) Potential technological glitches will prevent me from integrating technology into my classroom instruction.

Q17. (BB-COMP) Integrating technology into my classroom instruction will provide my students with learning resources aligned with my instruction.

Q18. (BB-COMP) Integrating technology into my classroom instruction is compatible with the way I will teach.

Q19. (BB-COMP) Integrating technology into my classroom instruction will fit well with the way that I teach.

Q20–21. (NB-STI) When I am a certified teacher …

Q22. (NB-STI) When I am a certified teacher, students will want me to integrate technology into my classroom instruction.

Q23–24. (NB-PI) When I am a certified teacher …

Q25. (NB-PI) When I am a certified teacher, my teacher colleagues will expect me to integrate technology into my classroom instruction …

Q26–27. (NB-SUPI) When I am a certified teacher …

Q28. (NB-SUPI) When I am a certified teacher, district leaders will expect me to integrate technology into my classroom instruction.

Q29. (NB-PARI) When I am a certified teacher, parents will expect me to integrate technology into my classroom instruction.

Q30. (NB-PARI) When I am a certified teacher, parents will hope I integrate technology into my classroom instruction.

Q31. (NB-PARI) When I am a certified teacher, parents will want me to integrate technology into my classroom instruction.

Q32. (CB-FCR) Funding at my school will affect my ability to integrate technology into my classroom instruction.

Q33. (CB-FCR) Support from others (e.g., principal, district, parent) at my school will affect my ability to integrate technology into my classroom instruction.

Q34. (CB-FCR) Professional development (e.g., workshops, online resources, instructional coaches) to which I have access will affect my ability to integrate technology into my classroom instruction.

Q35. (CB-FCT) Access to technology will affect my ability to integrate technology into my classroom instruction.

Q36. (CB-FCT) Access to computer technology and software/apps will support my ability to integrate technology into my classroom instruction.

Q37. (CB-FCT) Access to mobile devices for my students’ use will affect my ability to integrate technology into my classroom instruction.

Q38–40. (CB-SE) Please answer the following questions.

Q41–42. (SN) When I am a certified teacher …

Q43–44. (SN) When I am a certified teacher …

Q45. (PBC) When I am a certified teacher, integrating technology will be …

Q46–48. (PBC) When I am a certified teacher …

Q49. (PBC) When I am a certified teacher, I will integrate technology as I see fit.

Q50. (PBC) When I am a certified teacher, I will integrate technology consistently.

Q51–52. (PBC) When I am a certified teacher …

Q53–54. (INT) When I am a certified teacher …

Q55–56. (INT) When I am a certified teacher …

Demographics

Q60 Please create a unique identifier known only to you. Use the first three letters of your mother’s first name and the last four digits of your phone number. For example, Sar4567 would be the identifier if your mom’s first name was Sarah and your phone number is (623) 555-4567.

Q61 What is your Program/Major?

Q62 To which gender identity do you most identify?

Q63 What is your age?

Q64 What term/semester are you currently in?

Q65 What is your school/district location?
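The self-generated identifier in Q60 follows a simple rule, which matters if responses from repeated administrations are to be matched. The Python sketch below applies that rule through a hypothetical `make_identifier` function. Two choices are assumptions not stated in the survey: letter case is normalized so that "sarah" and "Sarah" yield the same identifier, and inputs with fewer than three letters or four digits are rejected.

```python
import re


def make_identifier(mother_first_name, phone_number):
    """First three letters of the mother's first name plus the phone number's last four digits.

    Case normalization and the length checks are assumptions for matching
    records; the survey itself only gives the example Sar4567.
    """
    letters = "".join(ch for ch in mother_first_name if ch.isalpha())[:3]
    digits = re.sub(r"\D", "", phone_number)  # drop spaces, parentheses, dashes
    if len(letters) < 3 or len(digits) < 4:
        raise ValueError("need at least three letters and four digits")
    return letters.capitalize() + digits[-4:]


print(make_identifier("Sarah", "(623) 555-4567"))  # Sar4567
```

Normalizing case and stripping punctuation means small differences in how a respondent types the same information at two time points still produce the same identifier.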

About this article

Cite this article

Foulger, T.S., Buss, R.R. & Su, M. The IT2 Survey: contextual knowledge (XK) influences on teacher candidates’ intention to integrate technology. Education Tech Research Dev 69, 2729–2760 (2021). https://doi.org/10.1007/s11423-021-10033-4

DOI: https://doi.org/10.1007/s11423-021-10033-4