Abstract

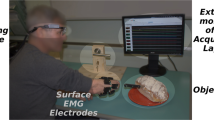

Upper limb and hand functionality is critical to many activities of daily living, and the amputation of a hand or arm can lead to a significant loss of function. From this perspective, advanced prosthetic hands of the future are anticipated to benefit from improved shared control between the robotic hand and its human user, but more importantly from an improved capability to infer human intent from multimodal sensor data, giving the robotic hand perception of its operational context. Such multimodal sensor data may include environment sensors such as vision, as well as human physiology and behavior sensors such as electromyography (EMG) and inertial measurement units (IMUs). A fusion methodology for environmental state and human intent estimation can combine these sources of evidence to support prosthetic hand motion planning and control. In this paper, we present a dataset of this type, gathered in anticipation of cameras being built into prosthetic hands, where computer vision methods will need to assess this hand-view visual evidence in order to estimate human intent. Specifically, paired eye-view and hand-view images of various objects placed at different orientations were captured at the initial state of each grasping trial, followed by paired video, EMG and IMU data from the participant's arm during a trial structured as grasp, lift, put-down and retract. For each trial, based on eye-view images of the scene showing the hand and the object on a table, multiple human annotators were asked to rank five grasp types appropriate for the object in its given configuration relative to the hand, in decreasing order of preference. The potential utility of paired eye-view and hand-view images was illustrated by training a convolutional neural network to process hand-view images and predict the eye-view labels assigned by the annotators.
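Two ideas in the abstract can be made concrete: turning several annotators' five-way grasp rankings into one training target for a network that sees only the hand-view image, and fusing evidence from several modalities. The Python sketch below is a hypothetical illustration, not the authors' method. It assumes (a) each ranking is a list of class indices ordered from most to least preferred, (b) a geometric rank weighting controlled by a hypothetical `decay` parameter, averaged over annotators, (c) a soft-label cross-entropy loss on the network's logits, and (d) naive-Bayes style fusion that treats modalities as conditionally independent given the grasp type under a uniform class prior, so per-modality posteriors can be multiplied and renormalized. All function names are made up for this example.

```python
import math
from typing import List, Sequence


def ranking_to_distribution(
    rankings: Sequence[Sequence[int]], num_classes: int, decay: float = 0.5
) -> List[float]:
    """Average annotators' rankings into one soft target distribution.

    Each ranking lists class indices from most to least preferred.
    Rank r (0-based) gets weight decay**r (a hypothetical weighting),
    normalized per annotator, then averaged over annotators.
    """
    target = [0.0] * num_classes
    for order in rankings:
        weights = [decay ** r for r in range(len(order))]
        total = sum(weights)
        for cls, w in zip(order, weights):
            target[cls] += w / total
    return [t / len(rankings) for t in target]


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax via the log-sum-exp trick."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - lse for x in logits]


def soft_cross_entropy(logits: Sequence[float], target: Sequence[float]) -> float:
    """Cross-entropy between a soft target and softmax(logits)."""
    return -sum(t * lp for t, lp in zip(target, log_softmax(logits)))


def fuse_posteriors(posteriors: Sequence[Sequence[float]], eps: float = 1e-12) -> List[float]:
    """Product-of-posteriors fusion, assuming conditionally independent
    modalities and a uniform class prior; computed in log space."""
    num_classes = len(posteriors[0])
    log_p = [sum(math.log(p[c] + eps) for p in posteriors) for c in range(num_classes)]
    m = max(log_p)
    unnorm = [math.exp(v - m) for v in log_p]
    z = sum(unnorm)
    return [u / z for u in unnorm]


if __name__ == "__main__":
    # Two hypothetical annotators who disagree on the top two grasp types.
    target = ranking_to_distribution([[0, 1, 2, 3, 4], [1, 0, 2, 3, 4]], num_classes=5)
    print(target)
    print(soft_cross_entropy([2.0, 1.5, 0.2, -0.5, -1.0], target))
    # Fuse a (hypothetical) vision posterior with an EMG posterior.
    print(fuse_posteriors([[0.5, 0.3, 0.2], [0.6, 0.1, 0.3]]))
```

In this sketch, a hand-view CNN's softmax output could be fused with an EMG classifier's output by calling `fuse_posteriors([p_vision, p_emg])`, where `p_vision` and `p_emg` are hypothetical names. The geometric weighting is only one way to map ranks to probabilities; linear, Borda-style weights would be an equally plausible choice.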

Acknowledgements

This work was supported by NSF (CPS-1544895, CPS-1544636, CPS-1544815) and NIH (R01DC009834).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The complete HANDS dataset can be found in the Northeastern University Digital Repository Service (DRS), under the CSL/2018 Collection: http://hdl.handle.net/2047/D20294524

About this article

Cite this article

Han, M., Günay, S.Y., Schirner, G. et al. HANDS: a multimodal dataset for modeling toward human grasp intent inference in prosthetic hands. Intel Serv Robotics 13, 179–185 (2020). https://doi.org/10.1007/s11370-019-00293-8

DOI: https://doi.org/10.1007/s11370-019-00293-8