Abstract

Background

In a previous work, the problem of identifying residual stresses through relaxation methods was demonstrated to be mathematically ill-posed. In practice, it means that the solution process is affected by a bias-variance tradeoff, where some theoretically uncomputable bias has to be introduced in order to obtain a solution with a manageable signal-to-noise ratio.

Objective

As a consequence, an important question arises: how can the solution uncertainty be quantified if a part of it is inaccessible? Additional physical knowledge could—in theory—provide a characterization of bias, but this process is practically impossible with presently available techniques.

Methods

A brief review of biases in established methods is provided, showing that ruling them out would require a piece of knowledge that is never available in practice. Then, the concept of average stresses over a distance is introduced, and it is shown that finding them generates a well-posed problem. A numerical example illustrates the theoretical discussion

Results

Since finding average stresses is a well-posed problem, the bias-variance tradeoff disappears. The uncertainties of the results can be estimated with the usual methods, and exact confidence intervals can be obtained.

Conclusions

On a broader scope, we argue that residual stresses and relaxation methods expose the limits of the concept of point-wise stress values, which instead works almost flawlessly when a natural unstressed state can be assumed, as in classical continuum mechanics (for instance, in the theory of elasticity). As a consequence, we are forced to focus on the effects of stress rather than on its point-wise evaluation.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

On Ill-Posedness

Relaxation methods identify residual stresses from measurements of the relaxed strains produced by cuts in a given specimen. In a previous work [1], it was shown that they are ill-posed problems. This property should not be confounded with that of being ill-conditioned. Many ill-posed problems are also ill-conditioned in practice, but the reverse is not implied. Ill-posed problems are often ill-conditioned precisely because of their ill-posedness. As a consequence, it is not a mistake to refer to relaxation methods as ill-conditioned problems—they certainly are—though it would leave out an important piece of the story.

Avoiding its rigorous definition (the interested reader can find the details in [1]), ill-posedness means absence of continuity in the solving operator, that is, arbitrarily small changes in the inputs can lead to enormous changes in the outputs. In most practical cases, ill-posedness arises in the inversion of an integral operator. The latter typically works as a low-pass filter in the spatial domain, so its inversion behaves as a high-frequency amplifier with a gain factor that diverges as the input gets more oscillatory. Since each measurement sample has some kind of independent error, inputs always include some white-like noise that spans the critical frequencies of the amplifier. As a result, the amplification factor through the inverse operator becomes high enough to completely dominate the solution.

The key to understanding the peculiar features of ill-posedness is that those big output changes can be produced by arbitrarily small input changes; in other words, no input accuracy can limit the maximum error that may affect the results. The intuitive explanation of continuity is that a function f(x) is continuous “when its graph can be drawn without ever lifting the pen”. When a function is continuous, any desired uncertainty on the output value \(y = f(x)\) can be achieved by making sure that the uncertainty on the input value x is small enough. Again, the pen metaphor is instructive: if one cannot lift the pen, then an infinitesimal advance in x must produce only an infinitesimal advance in y. If the function is not continuous—for instance, if it has a jump at a point—even an infinitesimal change in the input can produce a finite change in the output. The concept of “error sensitivity” fails; roughly speaking, it is an infinite value. Stretching the concept into the multidimensional setting, if a operator is continuous, a perturbed output is sufficiently close to the ideal one when the input is close enough to its true value. When continuity is lost, that extremely beneficial property does not hold anymore.

To escape the absence of continuity and obtain a meaningful solution, oscillatory noise has to be filtered out. Discretization—something that is actually required to numerically handle the problem —is a filter itself, as it bounds the number of degrees of freedom (DOFs) in the solution. Unless it is backed up by physical knowledge, discretization introduces a bias in the result; this bias is generally uncomputable. The rather trivial exception where the solution is known in advance is of little practical help.

As shown in [1]:

Proposition 1

Every practical solution of an ill-posed problem comes with an inherent bias-variance tradeoff, controlled by the regularization level.

Note that the lack of continuity does not prevent the possibility of obtaining a meaningful solution of the relaxation methods. As a matter of fact, the map between relaxed strains and residual stresses is definitely well-understood, owing to the great works of the last decades [2,3,4,5,6,7,8,9,10,11,12,13,14,15]. If anything, the process can be numerically cumbersome due to the number of FEM analyses needed and/or experimentally challenging due to the small strains that have to be measured, but a solution is seldom prevented by theoretical shortcomings—at least with the established methods. Nonetheless, continuity is a fundamental property for the evaluation of the uncertainties that come with the solution.

The biggest concern pivots on uncertainty quantification. Every experimental practitioner knows that inputs uncertainties can be propagated through the given model to obtain an estimate of the solution uncertainty. Regardless of the specific method used (mostly an explicit propagation of a covariance matrix or a Monte Carlo technique), a finite quantity is always reported for the obtained residual stresses, as every numerical implementation is implicitly regularized. Then, it is tempting to try to minimize that value, as this would apparently signal a more accurate solution. The optimization of the uncertainty of results has been targeted in many previous works, not only by means of improvements of input data, but also (and quite specifically) through changes in the mathematical post-processing of the experimental measurements.

Unsurprisingly, the uncertainty seems to be optimized when the solution DOFs are kept low, an operation which includes low-pass filtering of input and/or output data—that is, limiting DOFs in the frequency domain.

The problem lies in the fact that the uncertainty quantification is inevitably applied to a biased linear operator that is not the original ill-posed one, as the latter actually yields solutions that are completely dominated by an infinite-variance noise. When the bias introduced by the discretization is not considered, the obtained uncertainty actually becomes an observation of the solution variability, leaving out a potentially huge and dominant part of the total uncertainty. Any regularization scheme (including a naive discretization) implicitly assumes that the solution has some very specific properties, which may not be present in the true solution. This bias can be arbitrarily high and cannot be rigorously estimated without additional hypotheses (some exceptions are discussed later). Section “Uncertain Uncertainties” will expose some potentially dangerous and often counterintuitive consequences of this fact.

Considering the physical aspects of the problem, bias can be arbitrarily high only in theory, as residual stresses are obviously limited by the material strength properties—but, again, that is additional information with respect to the mathematical problem. Anyway, bias can eventually be high enough to completely change the results of a practical measurement.

For the sake of completeness, bias also affects well-posed problems. For instance, the numerical implementation of a problem whose mathematical model is a definite integral (usually a continuous operator) is subject to a bias that depends on the specific quadrature scheme and is bounded by the properties of the input and its derivatives between the known (namely, measured) integration points, something that is obviously missing and that requires additional knowledge. The important difference with respect to ill-posed problems is that, as long as it is computationally feasible, in that case the discretization scheme can be refined with a rational increase in DOFs at a level where bias is clearly negligible without compromising on variance. On the contrary, when facing ill-posed problems the best strategy is not to merely increase the DOFs, but to look for a balance. As a consequence, bias could even end up being higher in magnitude than the observed variance.

Motivation

This paper tries to answer the following questions:

-

1.

If uncertainty is potentially infinite in the general case, what does one actually obtain when an uncertainty quantification technique is applied?

-

2.

What are the hypotheses to which the validity of the uncertainties obtained through most common strategies is conditioned?

-

3.

Is there a minimal set of assumptions that allow one to obtain more reliable uncertainty bounds for a given practical problem?

-

4.

How should results and their uncertainty bounds be effectively communicated?

Regarding point 3, we will demonstrate that by giving up the pursuit of point-wise stress values—elusive even from a theoretical standpoint—a well-posed problem is obtained, to which conventional uncertainty quantification techniques can be rigorously applied without introducing any dangerous bias. It is here anticipated that no refined mathematical machinery can overcome an inherent and substantial limit of the underlying model. On the contrary, many advanced techniques turn out to be quite dangerous when applied to ill-posed problems, as their complexity hinders the clarity of their assumptions.

The discussion of the following sections is related to inverse problems arising from residual stress measurements with relaxation methods, whose general mathematical form is as follows:

where h denotes the cut length and z is used as a spatial coordinate. A(h, z) has been called the influence function, calibration function or kernel of the problem and depends on constitutive properties of the material, on the geometry of the specimen and on that of the cut. Note that the notations A(h, z) or A(z, h) are equivalent in practice.

It must be added that the same considerations hold for all problems described by equation (1), including but not limited to cases where differentiation of a measured quantity is needed. For example, equation (1) includes the formulation of the residual stress identification problem in terms of eigenstrains [16].

This matter is anything but mathematical sophistry. Ill-posed problems are rooted in functional analysis, and many of their defining properties are quite difficult to grasp without some background in the subject. However, their practical applicability is mostly an engineering matter. Simply speaking, math demonstrates that the problem is not continuous and that the total uncertainty cannot be estimated, unless something else is known or assumed. It all comes down to know something that mathematics alone does not know, and that is an application-specific piece of knowledge.

That is why, in terms of pure solution accuracy, experienced residual stress practitioners are probably already near-optimal, precisely because (even without a rigorous way to frame the problem) they use their personal engineering judgment to handle the bias-variance tradeoff and choose a rational (if not optimal) solution for their application. On the other hand, we think that there is room for improving the quantification of uncertainties: we are often quite puzzled by reproducibility tests where \(95\%\) confidence intervals consistently don’t match among different experiments.

Probably the main point of this work goes against chasing elusive targets that can be proved to be uncomputable in the general case, so that research efforts can be effectively directed to things that can actually be improved in the present state of the art. Just to mention one of them, the huge quantity of input data provided by full-field methods is very promising [17,18,19,20], yet the framework used to process them is still rather tied to the usual strain gauge formalism; some interesting ideas have been discussed in [21, 22].

The paper is organized as follows:

-

In Section “Continuum Bodies and Point-Wise Values”, the discussion is introduced by analyzing the basic problem of measuring density throughout a solid; this fundamental and scalar property is unrelated to residual stresses, but the discussion serves to highlight general considerations.

-

In Section “Preliminaries”, the well-established instruments that allow one to obtain the solution of the residual stress inverse problem are briefly recalled. Additionally, a numerical reference problem is presented, to be paired with the theoretical discussions.

-

In Section “Uncertain Uncertainties”, the hidden biases of the solution process are discussed by using well-established techniques as examples. The main focus is not on the discretization error caused by finite DOFs, but rather on the other deceptive biases that impair a reliable uncertainty quantification. A fundamental question is raised: what is the point of reporting an uncertainty value that is, in turn, highly uncertain?

-

In Section “A Constructive Proposal”, the findings of the previous Section are used to constructively build a proposal that is aimed at minimizing the amount of uncomputable biases, thereby yielding a more reliable uncertainty quantification.

As stated in [1], whose this paper is the continuation, this discussion is not by any means a collection of arguments against the use of relaxation methods. Other ones are way more susceptible to the material structure at the micro-scale, so a comprehensive evaluation of their uncertainty is possibly even more difficult than the present case. Again, the aim of this paper is to provide guidance on how to best squeeze out reliable information from experimental results.

Continuum Bodies and Point-Wise Values

The Density of a Mysterious Solid

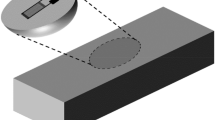

There exists a much more graspable physical analogue to the ill-posedness of relaxation methods (see Fig. 1). Assume that a company has realized a solid of given shape in a newly-engineered graded material where the density is made variable across the body. To verify the accuracy of the manufacturing process, the solid is given to an external lab, asking for a characterization of the mass density field—namely, the evaluation of the mass density as a function of the position in the solid, defined point-by-point. Nothing else is told to the laboratory, aiming at a truly blind verification.

(a) A mysterious solid whose unknown variable density is to be mapped throughout its volume. (b) To this aim, the solid is divided into smaller pieces, whose masses, volumes, and original locations in the specimen are recorded. The cutting scheme is arbitrary, but it profoundly affects the physical nature of the obtained result. By dividing the mass of a tiny piece by its volume, its average density is obtained. Unless some additional knowledge is available for assuming that a point-by-point evaluation of density is negligibly varying inside each small piece, nothing can be further inferred

First of all, laboratory technicians measure mass and volume of the whole specimen. By dividing those two quantities, the average density of the whole body is obtained. If the specimen material was known to be homogeneous, the experiment would stop here. Since no assumption can be made, more measurements are needed. The specimen is carefully cut into small sub-components, say, of approximately a hundredth of the initial size, whose masses, volumes, and original locations in the specimen are recorded. This process yields the average density of each piece. Altogether, the target density function is known through a map of its averaged values on some subsets of its domain.

The laboratory head may question whether the achieved resolution is enough for the customer’s needs and demands to increase the number of parts. Each sub-component is further divided into smaller sub-sub-components, whose average density is measured through mass and volume measurements. The process starts becoming quite challenging: the absolute precision of the laboratory instruments turns out to be quite poor in relative terms for these very tiny pieces. Moreover, the cut width stops being negligible. Anyway, by taking the uncertainties of the laboratory instruments into account, one can propagate them into uncertainty values of the obtained averaged densities. The smaller the parts, the more those densities are affected by high relative uncertainties, so at some point one must stop cutting, for example when the uncertainties on average densities exceed a certain threshold.

Regardless of the number of cut iterations, what is obtained is always a map of average densities over some given volumes. Here comes the main problem: are the point-wise values of the target density function constrained to fall within the obtained uncertainties? Without further assumptions (as in the present case), absolutely not. Indeed, a counterexample is trivial: each average density could arise from a few small but massive particles embedded in an almost vanishing matrix. There is no upper bound to the absolute error that could be made: as long as the massive particles are sufficiently small, their point-like density could be arbitrarily high. Ironically, that is actually the case.

At the atomic scale, matter is essentially a collection of tiny supermassive particles—nuclei—whose average density is in the order of \(10^{17}\, \mathrm {kg/m^3}\), embedded in low-density regions, which are filled only by electronic orbitals. At higher length scales, those two densities average out into the commonly known and used material density, which is fine for many practical applications.

The mathematical structure of this problem is akin to the one of relaxation methods. From a given density function \(\rho (\textbf{x})\), the mass m of a solid \(\Omega\) can be obtained as:

which is the simplest form of equation (1), obtained by setting a 3D unit function as kernel. One measures \(m(\Omega )\), characterizes \(\Omega\), and tries to identify \(\rho (\textbf{x})\). In other words, one wants to find a function through the results of some integrals over appropriate domains. Needless to say, this problem can be shown to be mathematically ill-posed.

What is literally ill-posed—even from a conceptual point of view—is the question itself. What is actually a point-wise mass density? Electrons do not occupy fixed positions in space, while thermal energy is stored in the vibrational motion of atoms in the crystal lattice, so strictly speaking density should be also a function of time. The point here is that density is almost always desired in its continuum mechanics definition, rather than in its strictly point-like value, and the two are substantially different. In fact, the first assumes that there exists an averaging volume that is sufficiently small for the averaged quantity to be considered practically point-wise, but sufficiently large to contain enough atoms so that density fluctuations due to individual motions can be neglected. The resulting averaged density becomes a function of spatial position only. The mass densities of materials, used in common engineering practice, fall within this definition, which—despite being openly artificial and missing the physical reality—serves as a valuable practical quantity. In fact, one can effectively use it to determine the mass of solids of any given shape through equation (2), provided that they are larger than the averaging volume; all while forgetting the discrete nature of matter and exploiting the advantages of calculus. In other words, we are trading a bit of physical rigor for invaluable practical convenience.

When trying to measure density in a mechanical component, finding a suitable averaging volume is not a particularly difficult task. For example, a \(1 \, \mu \mathrm {m^3}\) cube of iron contains about \(10^{11}\) atoms, while being definitely small for most applications in mechanical engineering. If the laboratory was able to provide the company with a characterization of the mass density averaged on \(1 \,\mu \mathrm {m^3}\) cubes, it is quite safe to say that all its practical needs would be satisfied. Note that this piece of information is just a reasonable bet, but it is not implied in any way by the properties of the problem. It is an assumption, not a deduction.

To sum up, the laboratory faces two issues when solving a problem like this one:

-

A theoretical issue. The solution of the problem equation looks for a point-wise quantity that actually does not exist or has significant conceptual shortcomings. Nonetheless, in many practical cases the point-wise values are not even needed. Density always presents itself inside integrals that yield measurable quantities (e.g., masses or moments of inertia). We are interested in predicting the results of those integrals, not in the mass density itself. Hence, why bother about the values and the (infinitely large) uncertainties of the point-wise density? All we need is an averaging volume that is small enough not to influence the results of integrals of the mass density, such as a refined discretization scheme.

-

A technological/metrological issue. For a fixed absolute error of the measuring instruments, smaller averaging volumes imply higher relative errors in the results. Clearly, results have a practical relevance if their uncertainties fall below a certain threshold, so the accuracy of the available instruments set some limitations on the minimum achievable averaging volume, which may be significantly larger than the one that would overcome the previous issue.

Eventually, the problem is solved if three conditions are met:

-

1.

there exists an averaging volume that is large enough to exclude the influence of matter discreteness;

-

2.

that volume is small enough to capture any spatially localized effect of the measured quantity that may affect the result being evaluated—usually an integral value such as mass;

-

3.

the accuracy of the available instruments allows one to obtain those integral values with reasonable uncertainties.

When at least one of these conditions is not met, the problem is unsolvable. Note that solvability is a user-dependent property and not an absolute one: the same unsolvable mass density measurement of a given material might be solvable (using the same instruments) for someone that will produce bigger parts out of that material, because the negligible influence of density variations at lower scales would allow a bigger averaging volume to be chosen. In our example, a \(1 \,\mu \mathrm {m^3}\) averaging volume would miserably fail the needs of the customer if the solid is made out of a nano-architected material whose density is intentionally designed to vary at the \(\textrm{nm}\) scale.

Ill-posedness is often caused by the theoretical issue and appears through the technological issue, as a coupling between the requirements set by conditions 2 and 3. In well-posed problems, the two requirements can be solved almost independently. In those cases, discretization schemes only marginally affect the sensitivity of results to the measurement uncertainties; measuring function values at more points with a given absolute precision does not decrease the overall precision in knowing the whole function. In ill-posed problems, the two requirements are instead coupled and define the bias-variance tradeoff [1].

Back to Residual Stresses

Let us apply the obtained results to residual stresses and relaxation methods. Again, what is a point-wise value of stress? If the whole problem of relaxation methods was reformulated in terms of inter-atomic forces, it would be well-posed—it has an extremely high number of degrees of freedom, though not an infinite one. Clearly, this approach is completely out of reach and probably more trouble than it is worth, so inter-atomic forces are averaged into the continuum mechanics formalism, which only works at some intermediate length scale.

By some manner, we are spoiled by the well-posedness of classical elasticity. In the ideal world of continuous homogeneous elastic solids, when suitable boundary conditions are applied, a unique solution in terms of point-wise stress fields is guaranteed and that solution is stable with respect to input variations. The boundary problem of classical elasticity is well-posed because of (not solely, of course) a rather strong mathematical assumption: a compatible natural state exists, in which the point-wise stress and strain fields are null throughout the body with no forces or displacements applied. When that holds, finding point-wise values of stresses and strains from suitable boundary conditions is a well-posed problem. Moreover, because of Saint Venant’s principle, boundary loads tend to produce regular stress and strain fields far from their application point, so that we consider regularity as a natural property of the stress field. Unfortunately, the above assumption must be dropped when considering residual stresses, because often it is the opposite of what actually generates them. Indeed, the latter are produced by incompatible permanent strain fields that aren’t necessarily regular (in terms of continuity or differentiability), and there are myriads of them that correspond to almost identical boundary conditions for the whole body. Even a simple prismatic bar under a single axial load could present an extremely intricate solution that satisfies compatibility and equilibrium, if the initial strain fields are not forced to be null.

Nonetheless, only integral effects of point-wise stresses can be observed and measured. We can measure forces, displacements, deformations of a given gauge length, etc., all of which depend on surface or volume integrals of the point-wise stress fields. Many failure criteria are defined—arguably for convenience—on point-wise values of stresses but are already known to cause some problems in relevant practical cases such as sharp notches, and most workarounds rely on switching to related integral quantities such as strain energy release rates [23], the Line Method in the Theory of Critical Distances [24], the Averaged Strain Energy Density criterion [25], etc. If it is not a bit of a stretch, we claim that stress in a continuum is a useful bridge quantity between other measurable quantities that depend on stress integrals over some finite spatial domain.

This is the same condition discussed in the mass density experiment, where it is necessary to give up on the evanescent concept of point-wise values of some intensive quantity and to settle for an averaged version that is refined enough to compute the desired integrals. Nonetheless, the theoretical and technological issues are arguably much more challenging than in the density problem, as the stress is a tensor and the averaging size that satisfies both requirements is likely to be confined in a very narrow interval.

Note that the very nature of relaxation methods forces some features of the averaging process. For instance, in the hole-drilling method, residual stresses are averaged over cylindrical surfaces with fixed diameter; for the slitting method, residual stresses are averaged over planar surfaces of given length; and so on. Except for the contour method, whose averaging surface could be freely chosen, in principle, other methods allow one to change the averaging size only over a single dimension. Regarding other directions, the bias introduced by the averaging size is simply inevitable and not measurable, requiring careful evaluation by the stress analyst. For instance, if stress fields exhibit fine patterns on the plane where the hole is drilled, nothing in the experiment can account for it, nor can it estimate the error we introduce by assuming a constant averaged value on the plane. It is important to remember that the assumption of constancy in the averaging dimensions imposed by the measurement process itself must be supported by other information and cannot be confirmed retrospectively.

As long as we want to avoid the effects of crystal grain discreteness and orientation, we are forced to average point-wise stresses over a significant number of grains. Then, at the very least, the averaging length is bounded below by the grain size. For a typical structural steel, that would be around \(10\,\mu \textrm{m}\) [26, 27]. It is worth noting that this limit changes drastically if the measurement is capable of analyzing residual stresses within a grain, while accurately modeling its specific anisotropy in space—that is the case with FIB-DIC measurements [28]; at that point, the lower limit is likely to become the breakdown of the continuum body assumption.

The averaging length is also limited from above by the requirements set by integrals that must be computed to evaluate some material performances of interest, and the problem becomes extremely application-specific: the following discussion should be raised before every residual stress measurement.

By trying to provide a typical example, it is reasonable to assume that residual stresses are often characterized because of their significance for structural integrity. The characteristic length used by common fatigue or fracture criteria is generally in the order of \(100\,\mu \textrm{m}\) for structural steels [24]. Then, the average residual stress fields over that length become the actual target of the measurement process.

Eventually, there remains the technological/metrological issue. The current state of the art is definitely not too far from the theoretical target—at least in this proposed example. A spatial resolution of \(100\,\mu \textrm{m}\) is not a tough request for a hole-drilling measurement at the present state of the art; for a slitting measurement it is much more of a challenge, although an impressive recent work proves that it is possible [29]. However, we are not simply interested in the average residual stresses over that length, since the whole point of this paper is to dig into their uncertainties. This fact sets additional requirements.

As shown in Section “Uncertain Uncertainties”, if one uses the Integral Method and a \(100\,\mu \textrm{m}\) step, the actual averaged solution over \(100\,\mu \textrm{m}\) steps is not achieved; instead, a biased result is obtained. The same applies to Tikhonov regularization. We should not rely on any of the methods that introduce uncomputable biases—in the sense that the true solution is needed to compute them. When solving ill-posed problems, obtaining results is deceptively much easier than computing their uncertainties.

A brief side point: as repeatedly remarked in [1], if some physical knowledge suggests that the solution has a specific form depending on few parameters, no bias is introduced at all when the problem is solved with respect to those parameters, so this whole discussion becomes pointless.

Preliminaries

A Reference Example

As done in [1], the discussion is aided by a reference example of an ideal and known but realistic residual stress distribution, hypothetically produced by a shot peening treatment on a thick steel component \((E=206\,\textrm{GPa},\) \(\nu = 0.3)\). The chosen residual stress distribution is inspired by the classic textbook of Schulze on shot peening [30]. Expressing the in-depth coordinate z in \(\textrm{mm}\) and \(\sigma (z)\) in \(\textrm{MPa}\), the following expression was assumed (plotted in Fig. 2):

For simplicity, having assumed a sufficiently thick specimen, tensile residual stresses for \(z > 0.25\, \textrm{mm}\) are neglected. In accordance with the ASTM E837 procedure [31] the relaxed strains measured by a standard type-A strain rosette with diameter \(D=5.13\, \textrm{mm}\) were numerically calculated by assuming a hole with diameter \(D_0=2.05\, \textrm{mm}\). The corresponding influence function was taken from [32].

To simulate the typical measurement in state-of-the-art conditions, 100 strain samples were generated, corresponding to a constant drilling step of \(10\,\mu \textrm{m}\). When measurement errors in relaxed strains had to be considered, a Gaussian noise having a standard deviation of \(\varsigma _\varepsilon =1\,\mu \varepsilon\) was superimposed on the calculated relaxed strain. A Monte Carlo simulation with \(10^4\) trials were employed to evaluate the corresponding distributions of results.

Besides its illustrative functions, a toy problem like this one has a much more fundamental purpose. The problem with residual stress measurements is that each method is kind of specialized in measuring residual stresses at a given length scale and for a range of depths from the specimen surface—see the useful chart in the first chapter of [14]. A reference laboratory measurement where most uncertainties are minimized to a negligible level (compared to practical applications) is arguably still missing. As a consequence, uncertainty quantification techniques are almost unverifiable: how can we be sure that a confidence interval evaluation strategy works, if true values cannot be obtained with a significantly higher accuracy? If two techniques yield different values, which one is wrong?

On the other hand, any proposed uncertainty quantification strategy must work at least in a controlled numerical setting, and here is where the toy problem comes in handy. It cannot prove that an uncertainty quantification works—the real world can always show errors that were not included in our computations—but it can effectively disprove it.

Degrees of Freedom cannot be Infinite

Ill-posedness manifests itself in a very subtle way. In practice, only a finite collection of relaxed strain readings is available; as a consequence, at most, the same number of DOFs can be assumed to represent the residual stress distributions. When the DOFs are made finite, the integral equation in equation (1) becomes a linear system and the operator is reduced to a suitable matrix.

A matrix is a representation of a linear finite-dimensional continuous operator: it sends infinitesimal inputs to infinitesimal outputs. Besides rigorous proofs, it can be directly verified by mere calculations. More interestingly, an input uncertainty cannot be amplified by the linear operator more than the maximum singular value of the matrix [33]. Did this fact overcome ill-posedness? Unfortunately no, even though the last fact is true.

What one observes is a bounded sensitivity of the finite-DOFs residual stress solution with respect to the input error. Unfortunately, the solution may have arbitrarily high frequency contents outside those specific DOFs that were considered in the calculations. Just to give the idea, the residual stress solution could present extremely high oscillations inside a given discretization step, as long as the integral in equation (1) yields the same relaxed strain values. In mathematical terms, discretization is itself a regularization technique. That is why it is often important to choose a basis for the residual stress functions which suitably represent a given experimental case—but that is engineering knowledge, not maths.

One may give up on the possibility to attain the true point-wise residual stress function and settle for its best approximation (in a least squares sense) in the chosen finite-dimensional space. For example, when the well-known Integral Method [5,6,7] is used, the results become the averaged residual stress along the given calculation intervals. Surprisingly, the following proposition holds, as shown in Section “Uncertain Uncertainties”:

Proposition 2

In general, a least squares approximation in the space of relaxed strains is not a least squares approximation in the space of residual stresses.

As a consequence, not only the obtained least squared solution could be arbitrarily far from the true one, it is not even the best one among the members of the chosen discretization scheme. In other words, the solution is also biased with respect to the element that best represents the true residual stress fields in terms of least-squares distance.

This last sentence is counter-intuitive and deserves an additional ideal reference example. Let us consider a thick specimen with an equi-biaxial residual stress distribution, assumed to be known with infinite accuracy, and a hole-drilling measurement performed in—say—ten equal steps. The relaxed strains are measured with no errors, too. The setup is so precise that the experiment could be repeated in any point of the specimen and the same relaxed strains are obtained. When the inverse problem is solved by the Integral Method, the same ten residual stress values are obtained for any hole. Given the accuracy of the setup, it is reasonable to expect that the obtained residual stress values are the average of the real stress distribution along each calculation step: in general, they are not. From a practical point of view, the solution obtained with the Integral Method is not an unbiased estimator of the average residual stresses in each interval. It is not at all a specific limit of the Integral Method; it is an inherent limit of equation (1).

Nonetheless, every bias vanishes as the discretization scheme is pushed to a high number of degrees of freedom. As that happens, the discretized operator better resembles the original (discontinuous) operator, and this fact manifests itself as an increase of the solution sensitivity to input errors towards unmanageable values, until the solution is practically dominated by noise. A trade-off between bias and variance becomes necessary; when it is not explicitly chosen, a hidden bias is inevitably being introduced.

Practical Solution

To solve equation (1), the residual stress is assumed to belong to the span of a n-dimensional basis \(\varvec{\beta }=\left[ \beta _1(z),\beta _2 (z) \ldots \beta _n (z) \right]\), while relaxed strains are sampled at a finite number of hole depths \(\textbf{h}=\left[ h_1,h_2 \ldots h_m \right]\). Linearity is exploited to evaluate the effect of every component of the residual stress basis to each relaxed strain measurement:

so that, for a given residual stress distribution \(\sigma (z) = \sum _{j=1}^{n} s_j \beta _j (z)\), the following relation holds:

By defining an array of measured strain samples \(\textbf{e}= \left[ \varepsilon (h_1),\varepsilon (h_2) \ldots \varepsilon (h_m) \right]\) and another array of residual stress components with respect to the chosen basis \(\textbf{s} = \left[ s_1,s_2 \ldots s_n \right]\), the usual linear system is obtained:

Depending on the specific choice of the basis \(\varvec{\beta }\) and on the solution strategy of equation (6), several approaches have been proposed to solve this inverse problem. The hidden biases introduced by those procedures are discussed in the following section.

Uncertain Uncertainties

Integral Method

The celebrated Integral Method [5,6,7] assumes that the residual stress distribution is a piecewise constant function (also known as staircase function). In mathematical terms, the interval \(\left[ 0,h_{\textrm{max}}\right]\) is divided into a finite number of subintervals \(H_j \triangleq \left[ h_{j-1},h_j\right]\), and their related indicator functions \(\chi _{H_j} (z)\) are used as a basis. Recall that \(\chi _{H_j} (z)\) is defined as:

The coordinates \(s_j\) of \(\sigma (z)\) with respect to that basis are the piecewise constant values of stresses in the chosen subintervals. The solution is obtained as:

Apart from the rather unreasonable case of knowing that \(\sigma (z)\) actually is a staircase function, there exists a representation error \(\sigma ^\perp (z)\) such that:

By plugging equation (9) into equation (1), for a given relaxed strain sample \(e_i\) one gets:

There is no guarantee that the second term at right-hand side is null. Depending on the specific \(h_i\), it could be either positive or negative, randomly perturbing the linear system.

Eventually, the coefficients \(s_j\) are found by inverting equation (6), but that is not the true model of physical reality, which is actually found in equation (10). A slightly wrong model is used both to find the solution and to compute its uncertainties. It is worth noting that this error is added to the representation error. Equation (9) already indicates that the best solution would anyway miss the component \(\sigma ^\perp (z)\). Additionally, the values of \(s_j\) are biased by a wrong inversion model. The correct one depends on \(\sigma ^\perp (z)\) and it is not accessible to the analyzer. This happens even if the input data is unaffected by any kind of error. In practice, even with perfect measurements, the results would not correspond to the average values of \(\sigma (z)\) over the subintervals \(H_j\). The only way to reasonably avoid this bias is to include the highest number of DOFs, so that \(\sigma ^\perp (z)\) can be deemed as physically negligible (this point is articulated in Section “A Constructive Proposal”). See Fig. 3 for a numerical example.

Another bias impairs the inversion process. When the regularization level is controlled by the size of the subintervals \(H_j\), the resulting DOFs are almost always less than the measurement points—that is, the matrix \(\textbf{A}\) is rectangular and has more rows than columns. The linear system in equation (6) becomes overdetermined, and \(\textbf{s}\) is evaluated in a least-squares sense.

Least-squares solutions of discrete inverse problems come with a fairly overlooked subtlety: the residuals are minimized in the only space where that operation is possible, which is the space of the measured relaxed strains. The least-squares solution \(\textbf{s}^\dagger\) is the one that best approximates \(\textbf{e}\) in terms of relaxed strains. In geometrical terms, it is the one such that \(\textbf{As}^\dagger\) is the orthogonal projection of \(\textbf{e}\) on the span of possible relaxed strains.

Unfortunately, the fact that \(\textbf{As}^\dagger\) is the best approximation of \(\textbf{e}\) does not imply that \(\textbf{s}^\dagger\) is the best approximation of the real \(\sigma (z)\), as that is hardly ever the case. In formal terms, the operator does not preserve orthogonality so, in general, an orthogonal projection in the space of measured relaxed strains is not an orthogonal projection in the space of residual stresses. As a consequence, not only the solution is affected by discretization error, it is not even the best one among those spanned by the chosen basis too.

Solutions obtained through the classic Integral Method. To achieve a reasonable output variance while avoiding additional regularization, the domain is divided into 10 subintervals, each having a \(100\,\mu \textrm{m}\) depth. The yellow solution corresponds to ideal errorless measurements and coincides with the expected value of experimental solutions (in blue), while the shading shows the corresponding \(\pm 2 \varsigma\) scatter band. Confidence intervals would have the same size, although they would be centered on a specific experimental solution. The actual average of the true solution inside each subinterval is shown in red. The red and the yellow curve do not correspond. In some subintervals, the actual average is even outside the \(\pm 2 \varsigma\) results band

When a technique for uncertainty quantification is applied to the usual linear system in equation (6), it is commonly assumed that a correct model of the physical reality is available. Then, the uncertainties of its constituents can be included. Uncertainties on relaxed strains can be considered in \(\textbf{e}\); all kinds of geometrical and constitutive uncertainties, in particular those on the cut depth measurements, can be included in \(\textbf{A}\); but the fact that equation (6) is not the true model of the physical process cannot be rigorously considered, since its associated error—exposed in equation (10)—depends on the unknown true value of the solution.

Eventually, the statistical distribution of \(\textbf{s}\) can be completely characterized but it is not possible to know where it is positioned with respect to the values of \(\sigma (z)\), neither to their averages over the calculation intervals, unless—as anticipated—some physical knowledge can rule out bias. Moreover, as a consequence of the bias-variance tradeoff, by varying the bias the variance of the solution actually changes and would give rise to different total uncertainties under the same estimation framework.

How can uncertainties be trusted, if they are themselves highly uncertain? A, say, \(95\%\) confidence interval on \(s_j\) is interpreted as a region in which the ideal values of \(s_j\)—namely, obtained with errorless input data— are included with a \(95\%\) probability. Nonetheless, the physical significance of being just an ideal result is rather questionable, as those ideal values are biased by an unknown quantity with respect to the actual average values of \(\sigma (z)\) over the subintervals \(H_j\), which are instead quantities tied to the physical reality. The \(95\%\) coverage holds only for those ideal values but not for the averages of \(\sigma (z)\), let alone for the point-like values of \(\sigma (z)\).

Power Series Method

Instead of indicator functions, a set of polynomials could be assumed as the basis \(\varvec{\beta }\) that spans the space of admissible solutions:

Equation (11) defines the so-called Power Series Method, which was also explored in Schajer’s pioneering paper [5].

All the biases discussed in the previous section still hold, though possibly in an even more underhand manner. The solutions of the Power Series Method \(s_j\) are not estimators of spatially averaged residual stress values; instead, they are the coefficients of a polynomial—each having a different dimension and measurement unit. As for the integral method, the observed variance only concerns the variability of the \(s_j\)’s with respect to their ideal errorless values. Nonetheless, the latter have a rather limited physical meaning: the, say, fifth coefficient itself of a polynomial stress distribution has hardly ever a direct influence on the structural behavior.

Solutions obtained through the Power Series Method and a Legendre polynomial basis. To achieve a reasonable output variance without additional regularization, sixth-order polynomials are employed. The yellow solution corresponds to ideal errorless measurements and coincides with the expected value of experimental solutions (in blue), while the shading shows the corresponding \(\pm 2 \varsigma\) dispersion. Confidence intervals would have the same size, although they would be centered on a specific experimental solution. The candidate that best approximates the true solution in a least-squares sense is reported in red. The red and the yellow curve do not correspond. The red curve is frequently outside the \(\pm 2 \varsigma\) results dispersion. Since the physical meaning of the red curve is definitely questionable, one may be tempted to compare the obtained solution with the true one itself, but the situation is even worse

As a consequence, the uncertainties of coefficients are frequently combined to obtain the point-like stress uncertainties, which are instead inherently biased by the fact that, in practice, a truncated polynomial expansion never represents exactly the true solution; in other words, there always exists a \(\sigma ^\perp (z)\) component as in equation (10). See Fig. 4 for a numerical example. In fact, no one can have a prior knowledge that a residual stress distribution is a polynomial function. There’s only a remarkable exception, which holds whenever the residual stress is known to have at most a linear trend. If a slender beam-like component is only subjected to far-field incompatibilities, as in the classical case of a straight beam in a two-dimensional statically indeterminate structure, then Navier’s formula holds, and residual stresses are described by a first-order polynomial. Note that in this favorable case the problem is well-posed and probably also sufficiently conditioned, so it is far form the “traps” of ill-posedness.

As a side note, it can be observed that a residual stress distribution could be considered better approximated by a polynomial than by a staircase function, at least in components made by a homogeneous material. The discontinuities of a staircase distribution would require the process that generated residual stresses to produce an intricate distribution of eigenstrains, which is not realistic for common manufacturing processes. Therefore, the disadvantages of the Power Series Method could be slightly compensated by the fact that \(\sigma ^\perp (z)\) is likely smaller than the one found with the Integral Method for a given number of DOFs. Nonetheless, this fact breaks down as soon as the residual stresses show high gradients that are better modeled by a discontinuity than by a smooth function.

The application of the Power Series Method exposes the core problem of ill-posedness: how many polynomial terms should be included? If too few are employed, the solution is very robust to input noise but highly biased; if too many are included, the contrary holds. Again, bias is not observable, so making this choice by evaluating the solution variance would actually demand a constant function—namely, a zero-order polynomial—to be used.

To the authors’ best knowledge, Prime and Hill were the first to acknowledge this effect in a now well-known paper [34], where they made clear the distinction between a “measurement uncertainty” and a “model uncertainty”, that is, respectively, variance and bias. They also proposed a heuristic method to evaluate the latter, which, despite not having a proven general validity, certainly raised awareness on the topic (see the recent works by Olson et al. [35, 36], where they call it “regularization uncertainty”), in addition to providing good results in many practical cases.

Smit and Reid [37,38,39] proposed another heuristic: the best order is the one with the lowest uncertainty among the ones that have converged. They actually seized a very important effect in ill-posed problems, called semiconvergence, which works as follows. Call n the number of DOFs for a given discretization scheme. If the true solution and input noise have markedly distinct features with respect to the given DOFs (for instance, one is very smooth while the other is rough and oscillatory), then the solution is seemingly observed to converge with increasing n to a given result, before starting to diverge again for much higher values of n. Needless to say, that convergent plateau is a sweet spot for the bias-variance tradeoff, and the strategy of choosing the least variant solution among results that are similarly biased is reasonably optimal (it is indeed quite similar to the well-established L-Curve criterion [40] and to the Quasi-Optimality principle [41]). Two problems arise. The first is that optimal does not mean unbiased: bias is null only if the solution is exactly modeled by a polynomial of the chosen order, and the solution may have some features that are only picked by very high-order terms—yet are lost in the noise sensitivity associated with that number of DOFs. The second is that semiconvergence may not even show up: especially if the residual stress distribution is not well modeled by a low-order polynomial, by increasing the maximum order one observes both a continuously changing solution and an ever increasing noise effect, without that useful “dead-zone”.

In a recent article, Brítez et al. [42] explored the use of nonconsecutive polynomial orders with the Power Series Method and proposed another heuristic to pick the best solution. When testing their algorithm and comparing it with other literature proposals, they asked what we believe to be the right question. If one constructs a \(95\%\) confidence interval, then it should have a \(95\%\) frequentist coverage, that is, the obtained bounds should include the true values \(95\%\) of the times that the procedure is applied. How does that figure compare with the actual coverage of the intervals provided by the methods? In other words, what is the accuracy of the given uncertainty? Results are extremely interesting, as none of the tested methods consistently achieves the right coverage for all datasets. Note that all tested methods include some heuristic estimation of the model error (namely, bias); if the same test was carried out on an uncertainty quantification strategy that does not even include this term, coverage would be shockingly low.

There is a minor statistical flaw in testing the coverage of confidence intervals by counting the fraction of residual stress values of a single solution that fall within the computed point-like intervals, as done in [35, 36, 42]. In fact, the entries of the obtained solution are correlated in space, and they are more and more correlated as the regularization level is increased. Confidence intervals come with a probability of including the underlying true value upon independent repetitions of the same bounds estimation procedure, something that does not hold for the intervals computed at different depths (being them correlated), while it does for a repetition of the whole experiment.

There is indeed an instructive example. Assume that the solution values are perfectly correlated in space, as when error is due to a constant shift from the true solution. In this case, either all or none of the confidence intervals at different depths include the corresponding true solution values. Nonetheless, upon repetition of the experiment, each confidence interval at a given depth will anyway include the corresponding true value with the desired probability. Clearly, this effect is not so dramatic in residual stress measurements, but in this work we will stick to the rigorous definition of confidence intervals coverage.

Tikhonov Regularization

Tikhonov regularization improves the ill-conditioning of refined discretization schemes, but, in exchange, it introduces a bias in the solution, whose form is anything but trivial. Its rigorous characterization requires some knowledge of functional analysis (see [43,44,45]), but an intuitive discussion can be provided by adopting a bit of linear algebra.

The coefficient matrix \(\textbf{A}\) can be written in terms of its Singular Value Decomposition (SVD) [33] as:

where \(\textbf{U}\) and \(\textbf{V}\) are orthogonal matrices, and \(\mathbf {\Sigma }\) is a diagonal matrix having the so-called singular values \(\lambda _i\) on the diagonal (usually arranged in descending order). The SVD provides a useful interpretation of how a finite-dimensional linear operator (namely, a matrix) acts on a vector. First, it finds its coordinates with respect to an orthogonal reference system \(\textbf{V}\), then it amplifies the i-th component by the singular value \(\lambda _i\), and finally it adjusts results through a rotation \(\textbf{U}\).

Hansen et al. [46] showed that low i’s (namely, high \(\vert {\lambda _i}\vert\)’s) are related to smoothly varying components, while high i’s (namely, low \(\vert {\lambda _i}\vert\)’s) are associated with rapidly oscillating ones, suggesting that the SVD of \(\textbf{A}\) somehow resembles a kind of Fourier transform in the spatial frequency domain. As a consequence, some terms and concepts in the following have been borrowed from signal processing, such as low-pass filter and high-frequency amplifier (see [1]).

The ill-posedness of equation (1) causes the singular values of \(\textbf{A}\) to accumulate at zero [45], that is, the more degrees of freedom are present, the more \(\lambda _i \rightarrow 0\) for high i. In other words, the forward problem (from residual stresses to relaxed strains) is a low-pass filter with respect to the basis \(\textbf{V}\).

A useful feature of the SVD is that it allows the least-squares solution of \(\textbf{As} = \textbf{e}\) to be obtained in a convenient form:

where \(\mathbf {\Sigma ^\dagger }\) is the diagonal matrix whose elements are given by the reciprocal of each singular value (null entries are left at zero). Its effect is the same: it projects on \(\textbf{U}\), amplifies by \(1/\lambda _i\), and rotates by \(\textbf{V}\).

If for high i \(\lambda _i \rightarrow 0\), then \(1/\lambda _i \rightarrow \infty\), and that is precisely the curse of ill-posedness. Some components receive an extremely high amplification, and if errors spans those components—which is almost always the case—noise ends up dominating the final result. The inverse problem is a high-frequency amplifier with respect to the basis \(\textbf{U}\).

Behind its practical implementation as a penalized least squares problem, Tikhonov regularization actually applies a filter on the terms \(1/\lambda _i\) [44]. Instead of amplifying by \(\frac{1}{\lambda _i}\), classical Tikhonov regularization substitutes that term with by \(\frac{1}{\lambda _i + \alpha }\), so that as \(\lambda _i \rightarrow 0\) the maximum amplification factor does not grow to infinity anymore and for large enough \(\lambda _i\) the solution is negligibly affected by the value of \(\alpha\). The second-order Tikhonov regularization included in the ASTM E837-20 standard [31] works similarly, although in a slightly more sophisticated mathematical context. This operation comes at the cost of a bias: by filtering high-order components, both noise and true content in that zone get distorted. This bias could be characterized, if some solution properties with respect to those components were known.

Nonetheless, a practical problem lies in the fact that \(\textbf{U}\) and \(\textbf{V}\) have hardly ever some physical meaning in relaxation methods. They are simply some orthogonal bases (in \(\mathbb {R}^n\), unrelated with geometrical orthogonality in the real world of the experiment) that allow one to write \(\textbf{A}\) as a SVD decomposition. As a consequence, it is practically impossible to have prior knowledge on how the solution behaves in those bases, such as the sparsity of its representation, so the corresponding bias cannot be known.

Tikhonov regularization is frequently coupled with some criteria on how to optimally choose the regularization parameter \(\alpha\). One of the most used strategy is the Morozov discrepancy principle, which is also suggested in the ASTM E837-20 standard [31]. Its details are covered in [1]; here, we should delve into the true meaning of the above-mentioned optimality.

A formal discussion of the Morozov discrepancy principle can be found in Chapter 7 of [44] and is way outside the scope of this paper, but some concepts are useful in the engineering practice too. A choice strategy of a regularization parameter is optimal if the obtained solution is the closest to the true one, among all possible values of the \(\alpha\) parameter. If this definition is assumed, the Morozov discrepancy principle is not optimal and, to our knowledge, a strategy that deterministically picks the optimal \(\alpha\) parameter does not exist. In a statistical discrete setting, the Morozov discrepancy principle is said to be convergent and order-optimal. Convergent means that the expected value of the root mean square solution error tends to zero as the degrees of freedom of the problem grow to infinity. Order-optimal means that the chosen parameter approaches the optimal one up to a multiplicative constant, as the degrees of freedom grow to infinity; strictly speaking, in the case of the Morozov criterion it requires some technical assumptions that will be omitted here. Note that both these properties are focused on the asymptotic behavior with respect to increasing the degrees of freedom. Similar considerations hold for other “optimal” parameter choice strategies.

From a practical point of view, the last paragraph leads to two important consequences:

-

1.

When Tikhonov regularization is used, the highest number of measurement points and the corresponding most refined discretization scheme should always be exploited. That experimental strategy ensures that the regularization parameter is chosen in the best possible way. It is impossible to know how much the obtained solution is better than another one coming from a less refined discretization scheme, but this ensures that the most favorable conditions are chosen for the method to work. Giving up on experimental data (such as reducing the number of physical drilling steps) makes no sense other than being a pure time-saving measure.

-

2.

Because of the previous point, for a given number of degrees of freedom there is no mathematical result that guarantees that the chosen parameter is close to the optimal one, even in a statistical sense. Depending on the problem and on the properties of the true solution, some methods can systematically over- or under-regularize the solution [47]. Sometimes the Morozov discrepancy principle yields a regularization parameter that is blatantly unreasonable, and there’s nothing wrong with this outcome. In practice, parameter choice criteria are just sound and well-grounded heuristics.

Strictly speaking, the Morozov discrepancy principle is agnostic to the specific regularization technique applied. For instance, it could be used to automatically choose also the size of the discretization step in the Integral Method or the maximum degree of polynomials in the Power Series Method.

Tikhonov regularization is particularly convenient because it allows one to easily play with its (single) regularization parameter and explore the bias-variance tradeoff without having to recompute the entire discretization scheme, thus avoiding a computationally burdensome procedure. Then, by mixing personal judgment and the outputs obtained by various parameter selection criteria, the analyst can choose a suitable solution. Nonetheless, a practical quantification of uncertainty is still an open—possibly unsolvable—problem. When uncertainty is not that big of a concern (for instance, in image deblurring, when only a nice reconstruction is needed), the upsides of this approach are particularly advantageous, but when its results are used to make safety-critical decisions (as when considering the effect of residual stresses in a failure assessment), it should be handled with extreme care.

As a side note, recall that the beneficial mathematical properties of the Morozov discrepancy principle hold under the assumption of deterministically known variance of the input, which is instead a not-so-trivial piece of information in the real world of measurements. The ASTM E837-20 provides a technique that estimates the input variance, but the variance of the estimated variance itself can be a cause for concern. See Figs. 5 and 6 for a numerical example. The interested reader can find a statistical analysis in Appendix A.

Solutions obtained through Tikhonov regularization and the Morozov discrepancy principle. Since DOFs are not limited by this approach, the best possible solution coincides with the true one, and it would be achieved with perfect measurements. A single realization of a solution is also reported, together with its computed \(95\%\) confidence interval. (a) Noise level known a priori and equal to \(\varsigma _\varepsilon = 1\,\mu \varepsilon\). (b) Noise level estimated through the procedure of ASTM E837-20 standard. Note that results are centered along a solution that is biased with respect to the true one, especially in areas where features vary on a small spatial scale. For example, the effect is rather pronounced at the surface. The fact that the noise level is not known a priori adds another layer of variability to the results, but it can have an apparently opposite effect on a single solution: when the noise level is overestimated, the same solution needs more regularization, and that decreases its perceived variability

Distribution of the ratio between the regularization parameter \(\alpha\) chosen by the Morozov discrepancy principle and the optimal value corresponding to the minimum solution error in a least squares sense. The two cases of known vs. estimated noise level are reported. Note that the picked value of \(\alpha\) is slightly overregularized on average, but prone to some cases of severe underregularization

Machine Learning Applications

The development of statistics and data science provided residual stress engineers with a plethora of tools to tackle the inverse problem of relaxation methods. Neural networks have been employed [48, 49], though mainly for the correction of non-linear effects on the measurements, such as plasticity. Anyway, the bias-variance tradeoff is a well-established concept in the context of neural networks [50], so the design and training of the network is generally planned accordingly.

Recently, a technique called Gaussian Process Regression (GPR) was employed to solve this inverse problem [51, 52]. A thorough analysis of its mathematical properties would require a whole separate paper, so the interested reader is referred to an impressive online article [53] and to a thorough yet not too technical review [54]. Nonetheless, its basic principles are discussed hereafter.

In summary, Gaussian processes are the functional equivalent of multivariate random variables: they establish a probability distribution over possible functions. The functions are distributed around a mean function, and their point-like values are correlated in space through a covariance kernel. The more values that are far from each other are positively correlated, the more the function is expected to be smooth; in other words, a function can hardly show abrupt changes if each time the points “immediately after” are highly correlated with the current one. The mean function is often assumed to be identically null, while the covariance kernel is assumed to follow a given mathematical law, controlled by a finite set of values called hyperparameters. A nice feature of GPR is that it provides a Bayesian framework to incorporate the observed measurements and obtain a posterior joint distribution of values, even outside of measurement points, from prior information about the Gaussian process. Then, one can propagate this distribution through any model of choice, obtaining the distributions of other quantities that depend on it.

When applied to residual stress measurements, a GPR application is straightforward: the relaxed strains (or the displacements, in the case of the Contour method) are assumed to be modeled by a Gaussian process; given the measured strains, a posterior distribution over the space of relaxed strains is obtained, which is propagated through the inverse operator to find the distribution of residual stresses. Being Gaussian, the mean of the posterior distribution is also the corresponding maximum a posteriori (MAP) estimator. Since the covariance kernel usually enforces smoothness, a sample from the posterior distribution typically appears as a filtered version of the input strains. Here is where regularization comes into play: highly oscillating inputs are filtered, so the inverse problem variance is tamed. The more the prior assumption of a covariance kernel incorporates smoothness assumptions, the more this will be reflected in the posterior distribution through Bayesian inference, and the problem will be regularized at the cost of bias—since the true solution may not be as smooth as expected.

If the input relaxed strains are actually a Gaussian process with that specific covariance kernel and hyperparameters, everything works, but, as usual, that piece of information cannot be available in a practical case. As a consequence, the procedure is carried out in two steps.

First, the hyperparameters of the assumed kernel are usually inferred from data via maximum likelihood estimation (MLE): the intrinsic smoothness level of data is chosen as the most statistically plausible given the observed measurements and the measurement error, if known. This rationale is the same of the Morozov discrepancy principle, although the math is a bit more sophisticated. Since there is measurement noise, it is more likely that the measured input strains be a slightly corrupted version of smooth true data, than them being very accurate observations of actually rough true data.

The regression phase is consequently carried out by assuming that the true data is a Gaussian process with that covariance kernel having the estimated hyperparameters.

Like the term says, the estimation of hyperparameters is done on a maximum likelihood basis, that is: among all possible models, the one under which the probability of obtaining the observed data is maximized (with respect to other models) is selected. Three concerns must be raised, as they actually hold for MLE in general, also when it is applied in other frameworks:

-

MLE looks for the “best” model among the ones indexed by the hyperparameters, but it provides no measure of the absolute probability of it being actually the true one. Although typical covariance kernels are fairly general, data may depart from the implied assumptions—for instance, discontinuities would be quite an issue for many established kernels.

-

MLE is likely to be statistically optimal in some sense, which means that the expected value of the results is probably closer to the true solution than with other choices of hyperparameters. However, again, optimal does not mean unbiased: it is the best with the given setup, but the bias introduced by the smoothness assumptions is active and affects the uncertainty quantification.

-

MLE is a statistical estimator, not a deterministic truth, in the sense that the values of the hyperparameters are uncertain, and ill-posedness is very punishing with respect to that kind of uncertainty. The uncertainty of residual stress can be computed by assuming that the posterior data distribution is exact, but there are some less-probable-though-not-so-unlikely hyperparameters (corresponding to lower data smoothness) that correspond to much greater variances in the residual stress solution; inevitably, uncertainty quantification is, in turn, quite uncertain.

For the sake of completeness, it is possible to avoid MLE and evaluate the posterior data distribution by marginalizing over the whole domain of hyperparameters (for instance, see Chapter 5 in [55]), although the operation is overly complex for the scope of a residual stress measurement. Anyway, that would leave the choice of a kernel family as the only remaining bias, at the cost of a rather sizable variance.

A common argument in favor of GPR claims that by using a so-called universal kernel, every continuous function can be approximated with infinite precision by a suitable mean of a posterior distribution predicted by GPR (formally, the reproducing kernel Hilbert space of a universal kernel is dense in the space of continuous functions). In simple words, regardless of the given kernel and hyperparameters, when fed with sufficient data the mean of the posterior distribution predicted by GPR can converge to any given solution. However, this concerns just an asymptotic behavior with respect to the size of the dataset: actually, for a given dataset, kernels inject a hugely influential prior in the Bayesian inference and favor some solutions over others at the expense of bias. Knowing that with increasing data the GPR estimate would converge to the truth—possibly in contrast to prior smoothness assumptions—is of little practical help, as convergence may be extremely slow [56]. Like the Morozov discrepancy principle, mathematics just states that having the greatest amount of data puts us in the most favorable conditions for GPR to work, though we don’t know how favorable they actually are.

Anyway, it is fair to say that GPR is likely to perform slightly better than the Tikhonov-Morozov approach, because of a combination of three factors: 1) since true relaxed strains are the output of a low-pass filter (equation (1)), a general smoothness assumption is reasonable; 2) GPR employs that prior assumption to build a MAP estimate of relaxed strains that is then propagated through an unbiased model (except for the bias induced by the discretization scheme, which should be kept as refined as possible); 3) GPR estimations are seemingly not so affected by lack of knowledge about the input noise. See Fig. 7 for a numerical example.

Solutions obtained through Gaussian Process Regression applied to relaxed strain data. Since DOFs are not limited by this approach, the best possible solution coincides with the true one, and it would be achieved with perfect measurements. A single realization of a solution is also reported, together with its computed \(95\%\) confidence interval. (a) Noise level known a priori and equal to \(\varsigma _\varepsilon = 1\,\mu \varepsilon\). (b) Noise level estimated by MLE as an additional hyperparameter. Note that results are centered along a solution that is biased with respect to the true one, especially in areas where features vary on a small spatial scale. For example, the effect is rather pronounced at the surface. The fact that the noise level is not known a priori has a seemingly negligible effect both on the dispersion of results and on a single realization of a solution. That is likely due to the fact that a higher estimated noise comes with a smoother kernel but at the same time it decreases the statistical power of observations, so the overall effect is less dramatic than with Tikhonov-Morozov approaches

To conclude, it is important to recall that ill-posedness cannot be solved with mathematical/statistical tricks; if anything, maybe what is missing right now is a convenient way to formally incorporate the physical knowledge about the engineering problem into its equations, and that is where the authors think should be directed our efforts. The following section is in attempt at facing this problem.

A Constructive Proposal

Main Idea

After having analyzed the shortcomings of the available techniques, it is now time to apply the conclusions of Section “Continuum Bodies and Point-Wise Values” in order to formulate a proposal on how relaxed strain measurements should be processed to obtain a residual stress distribution. The proposal is based on the fact that ill-posedness comes from the quest for point-wise stress values, while most likely what is requested is some integral value of residual stresses along a given “problem related” length. Therefore, it is suggested to give up on finding point-wise residual stresses with their uncertainties and to directly look for properly averaged quantities instead.

The procedure is grounded on the existence of three characteristic lengths, which depend on the particular phenomenon to be engineeringly faced and on the specific residual stress measurement:

-

1.

An averaging length \(l_\sigma\). This is the length at which residual stresses manifest their effects, being it required for fatigue models of crack initiation, fracture mechanics approaches for crack growth, distortion analyses, studies of tribological properties, or any other application of choice. In absence of application-specific information, \(l_\sigma\) is a length at which we accept that results will be averaged. Everything that happens at a scale under \(l_\sigma\) is assumed not to affect the considered phenomenon, and it is deemed as not technically relevant.

-

2.

A measuring step \(l_\textrm{m}\). This is the maximum resolution that can be technologically achieved by the adopted relaxation technique, regardless of the variance that would come with that resolution. In simple terms, it is the minimum step of the cutting process that can be performed consistently and whose effect can be reasonably captured by the chosen relaxed strain measuring method.

-

3.

A continuum length \(l_\textrm{c}\). This is the length scale where the chosen model of continuum—under which the influence functions for residual stress evaluation are deducted—starts failing at representing the local material properties. Typically, when measuring macro-stresses in a metallic component, considering that the models are based on homogeneous isotropic linear elastic materials, \(l_\textrm{c}\) is in the order of the average grain size.

If \(l_\textrm{c} > l_\sigma\) the mathematical framework used for the elaboration is inadequate, as the residual stress distribution would be significant at a length scale that is not modeled by a homogeneous isotropic material. Moreover, for the proposed procedure to work, \(l_\textrm{m}\) must be smaller than \(l_\sigma\) (ideally, one order of magnitude less).

The main result is the following. If \(l_\textrm{m}\) is approximately equal to or less than \(l_\textrm{c}\), the procedure yields virtually exact confidence intervals on residual stresses averaged along \(l_\sigma\). Otherwise, a technological/metrological limit of the process appears, which has to be reported as a result. To sum up, the following conditions are necessary:

When equation (14) holds, the procedure exploits the physical/technical knowledge that comes with \(l_\textrm{c}\). If everything that happens below the \(l_\textrm{c}\) scale falls outside the chosen continuum model, at that length scale the solution cannot be biased—the corresponding true solution does not even exist at lower length scales. As a matter of fact, it is the most refined solution one can get that still satisfies the continuum hypothesis.