Abstract

The tetrachoric correlation is a popular measure of association for binary data and estimates the correlation of an underlying normal latent vector. However, when the underlying vector is not normal, the tetrachoric correlation will be different from the underlying correlation. Since assuming underlying normality is often done on pragmatic and not substantial grounds, the estimated tetrachoric correlation may therefore be quite different from the true underlying correlation that is modeled in structural equation modeling. This motivates studying the range of latent correlations that are compatible with given binary data, when the distribution of the latent vector is partly or completely unknown. We show that nothing can be said about the latent correlations unless we know more than what can be derived from the data. We identify an interval constituting all latent correlations compatible with observed data when the marginals of the latent variables are known. Also, we quantify how partial knowledge of the dependence structure of the latent variables affect the range of compatible latent correlations. Implications for tests of underlying normality are briefly discussed.

Similar content being viewed by others

Change history

08 February 2021

The author missed to include the ESM files during the proof corrections stage.

References

Almeida, C., & Mouchart, M. (2014). Testing normality of latent variables in the polychoric correlation. Statistica, 74(1), 3–25. https://doi.org/10.6092/issn.1973-2201/4594.

Asparouhov, T., & Muthén, B. (2016). Structural equation models and mixture models with continuous nonnormal skewed distributions. Structural Equation Modeling, 23(1), 1–19. https://doi.org/10.1080/10705511.2014.947375.

Asquith, W. H. (2020). copBasic|General bivariate copula theory and many utility functions [Computer software manual]. Retrieved from https://CRAN.R-project.org/package=copBasic

Azzalini, A. (2013). The skew-normal and related families. Cambridge: Cambridge University Press. https://doi.org/10.1017/CBO9781139248891.

Bernard, C., Jiang, X., & Vanduffel, S. (2012). A note on ‘Improved Fréchet bounds and model-free pricing of multi-asset options’ by Tankov (2011). Journal of Applied Probability, 49(3), 866–875. https://doi.org/10.2139/ssrn.2003462.

Bollen, K. A. (2014). Structural equations with latent variables. New Jersey: Wiley. https://doi.org/10.1002/9781118619179.

Christoffersson, A. (1975). Factor analysis of dichotomized variables. Psychometrika, 40(1), 5–32. https://doi.org/10.1007/BF02291477.

Claeskens, G., & Hjort, N. L. (2008). Model selection and model averaging. Cambridge: Cambridge University Press. https://doi.org/10.1017/CBO9780511790485.

Foldnes, N., & Grønneberg, S. (2019a). On identification and non-normal simulation in ordinal covariance and item response models. Psychometrika, 84(4), 1000–1017. https://doi.org/10.1007/s11336-019-09688-z.

Foldnes, N., & Grønneberg, S. (2019b). Pernicious polychorics: The impact and detection of underlying non-normality. Structural Equation Modeling, 27(4), 525–543. https://doi.org/10.1080/10705511.2019.1673168.

Foldnes, N., & Grønneberg, S. (2020). The sensitivity of structural equation modeling with ordinal data to underlying non-normality and observed distributional forms. Psychological Methods. (Forthcoming).

Fréchet, M. (1958). Remarques de M. Fréchet au sujet de la note précédente. Comptes rendus hebdomadaires des séances de l’Académie des sciences(2), 2719–2720. Retrieved from https://gallica.bnf.fr/ark:/12148/bpt6k723q/f661.image

Fréchet, M. (1960). Sur les tableaux de corrélation dont les marges sont données. Revue de l’Institut International de Statistique, 28(1/2), 10–32. https://doi.org/10.2307/1401846.

Grønneberg, S., & Foldnes, N. (2017). Covariance model simulation using regular vines. Psychometrika, 82(4), 1035–1051. https://doi.org/10.1007/s11336-017-9569-60.

Höffding, W. (1940). Maßstabinvariante korrelationstheorie für diskontinuierliche verteilungen (Unpublished doctoral dissertation). Universität Berlin.

Joe, H. (1997). Multivariate models and multivariate dependence concepts. Boca Raton: CRC Press. https://doi.org/10.1201/b13150.

Jöreskog, K. G. (1994). Structural equation modeling with ordinal variables. In Multivariate analysis and its applications (pp. 297–310). Institute of Mathematical Statistics. https://doi.org/10.1214/lnms/1215463803

Jöreskog, K. G., & Sörbom, D. (1996). LISREL 8: User’s reference guide. Illinois: Scientific Software International.

Kallenberg, O. (2006). Foundations of modern probability (2nd ed.). Berlin: Springer Science. https://doi.org/10.1007/978-1-4757-4015-8.

Kolenikov, S., & Angeles, G. (2009). Socioeconomic status measurement with discrete proxy variables: Is principal component analysis a reliable answer? Review of Income and Wealth, 55(1), 128–165. https://doi.org/10.1111/j.1475-4991.2008.00309.x.

Lehmann, E. L. (1966). Some concepts of dependence. The Annals of Mathematical Statistics, 37(5), 1137–1153. https://doi.org/10.1214/aoms/1177699260.

Manski, C. F. (2003). Partial identification of probability distributions. Berlin: Springer Science. https://doi.org/10.1007/b97478.

Maydeu-Olivares, A. (2006). Limited information estimation and testing of discretized multivariate normal structural models. Psychometrika, 71(1), 57–77. https://doi.org/10.1007/s11336-005-0773-4.

Molenaar, D., & Dolan, C. V. (2018). Nonnormality in latent trait modelling. In P. Irwing, T. Booth, & D. J. Hughes (Eds.), The wiley handbook of psychometric testing (pp. 347–373). New Jersey: Wiley Online Library.

Muthén, B. (1978). Contributions to factor analysis of dichotomous variables. Psychometrika, 43(4), 551–560. https://doi.org/10.1007/BF02293813.

Muthén, B. (1984). A general structural equation model with dichotomous, ordered categorical, and continuous latent variable indicators. Psychometrika, 49(1), 115–132. https://doi.org/10.1007/BF02294210.

Muthén, B., & Hofacker, C. (1988). Testing the assumptions underlying tetrachoric correlations. Psychometrika, 53(4), 563–577. https://doi.org/10.1007/BF02294408.

Muthén, L. K., & Muthén, B. O. (1998–2017). Mplus user’s guide (8th ed., pp. 204–215). Los Angeles, CA: Muthén & Muthén.

Narasimhan, B., Johnson, S. G., Hahn, T., Bouvier, A., & Kiêu, K. (2020). cubature: Adaptive multivariate integration over hypercubes [Computer software manual]. Retrieved from https://CRAN.R-project.org/package=cubature

Nelsen, R. B. (2007). An introduction to copulas. Berlin: Springer Science. https://doi.org/10.1007/978-1-4757-3076-0.

Olsson, U. (1979). Maximum likelihood estimation of the polychoric correlation coefficient. Psychometrika, 44(4), 443–460. https://doi.org/10.1007/BF02296207.

Owen, D. B. (1980). A table of normal integrals. Communications in Statistics - Simulation and Computation, 9(4), 389–419. https://doi.org/10.1080/03610918008812164.

Pearl, J. (2009). Causality. Cambridge: Cambridge University Press. https://doi.org/10.1017/cbo9780511803161.

Pearson, K. (1900). I. Mathematical contributions to the theory of evolution.|VII. on the correlation of characters not quantitatively measurable. Philosophical Transactions of the Royal Society of London. Series A, 195, 1–47. https://doi.org/10.1098/rsta.1900.0022.

Pearson, K. (1909). On a new method of determining correlation between a measured character a, and a character b, of which only the percentage of cases wherein B exceeds (or falls short of) a given intensity is recorded for each grade of a. Biometrika, 7(1/2), 96–105. https://doi.org/10.2307/2345365.

Pearson, K., & Heron, D. (1913). On theories of association. Biometrika, 9(1/2), 159–315. https://doi.org/10.2307/2331805.

Pearson, K., & Pearson, E. S. (1922). On polychoric coefficients of correlation. Biometrika, 14(1/2), 127–156. https://doi.org/10.2307/2331858.

R Core Team. (2020). R: A language and environment for statistical computing [Computer software manual].

Rosseel, Y. (2012). lavaan: An R package for structural equation modeling. Journal of Statistical Software, 48(2), 1–36. https://doi.org/10.18637/jss.v048.i02.

Satorra, A., & Bentler, P. (1988). Scaling corrections for statistics in covariance structure analysis (Tech. Rep.). Retrieved from https://escholarship.org/content/qt3141h70c/qt3141h70c.pdf

Shapiro, A. (1983). Asymptotic distribution theory in the analysis of covariance structures. South African Statistical Journal, 17(1), 33–81.

Sklar, M. (1959). Fonctions de répartition à n dimensions et leurs marges. Publ Inst Statist Univ Paris, 8, 229–231.

Takane, Y., & de Leeuw, J. (1987). On the relationship between item response theory and factor analysis of discretized variables. Psychometrika, 52(3), 393–408. https://doi.org/10.1007/BF02294363.

Tamer, E. (2010). Partial identification in econometrics. Annual Review of Economics, 2(1), 167–195. https://doi.org/10.1146/annurev.economics.050708.143401.

Tankov, P. (2011). Improved Fréchet bounds and model-free pricing of multi-asset options. Journal of Applied Probability, 48(2), 389–403. https://doi.org/10.1239/jap/1308662634.

Tate, R. F. (1955a). Applications of correlation models for biserial data. Journal of the American Statistical Association, 50(272), 1078–1095. https://doi.org/10.1080/01621459.1955.10501293.

Tate, R. F. (1955b). The theory of correlation between two continuous variables when one is dichotomized. Biometrika, 42(1/2), 205–216. https://doi.org/10.21236/ad0029741.

Vaswani, S. (1950). Assumptions underlying the use of the tetrachoric correlation coefficient. Sankhyā: The Indian Journal of Statistics, 10(3), 269–276.

Whitt, W. (1976). Bivariate distributions with given marginals. The Annals of Statistics, 4(6), 1280–1289. https://doi.org/10.1214/aos/1176343660.

Yan, J. (2007). Enjoy the joy of copulas: With a package copula. Journal of Statistical Software, 21(4), 1–21. https://doi.org/10.18637/jss.v021.i04.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Appendix A. Technical Proofs and Further Numerical Illustrations

Appendix A. Technical Proofs and Further Numerical Illustrations

1.1 A.1. Further Numerical Illustrations for Given Marginals

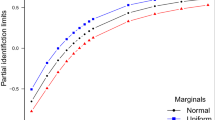

We here give further numerical illustrations of the bounds. Since there is a bijection between \(2 \times 2\) tables and the dichotomization of standard normal distributions with free correlations and free thresholds \(\tau _0, \tau _1\), we generate \(2 \times 2\) tables from proportions of a normal latent variable with varying correlations and chosen threshold parameters. For each table, we compute the bounds from Proposition 1 with standard normal marginals, as well as the bound from Proposition 2. Recall that the bound from Proposition 2 is actually the bound from Proposition 1 with uniform marginals. The lengths of the resulting intervals are shown in Figs. 4 and 5. Full computational details are given in the accompanying R scripts. In Fig. 4, we have \(\tau _1 = \tau _2 = 0\), which is a best case scenario. Figure 5 shows a more typical situation, where the length of all bounds are close to the maximal length of such an interval, namely 2. Figure 5 incidentally also illustrates that the bound for the Pearson correlation with standard normal marginals does not always contain the bound for Spearman’s rho. In both Fig. 4 and Fig. 5, points close or at the endpoints \(\rho = \pm 1\) are not included, as different numerical techniques are needed in this region, as done in an attached R file found in the online supplementary material. It is here found that minimum lengths are attained at \(\rho = \pm 1\) and \(\tau _1 = \tau _2 = 0\), with a length of 0.67 for normal marginals and 0.5 for uniform marginals. In our analysis, we use the R (2020) packages copula (Yan and Others 2007), cubature (Narasimhan, Johnson, Hahn, Bouvier, and Kiêu 2020), and copBasic (Asquith 2020).

1.2 A.2. Proofs for Section 2

We will sometimes use the following principle of duality, as observed by Tankov (2011, Appendix). The usual matrix of probabilities is

The swapped matrix is

This matrix has will have the same upper bound as the negative lower bound of P; this is because it corresponds to the discretized distribution of \(\left( -X,Y\right) \). Hence we may compute, say, a lower bound via an upper bound by using this duality. Some of the upcoming arguments apply this technique when convenient.

Proof of Theorem 1

We show that \(|\rho | \ne 1\) by contradiction. Suppose \(|\rho | = 1\). By the Cauchy-Schwarz inequality, \(Z_1 = a + b Z_2\) for some numbers a, b. For any thresholds \(\tau _1, \tau _2\), the probabilities of X equals the probability of observing \(Z\) in one of the quadrants \(x> \tau _1, y > \tau _2\) or \(x< \tau _1, y < \tau _2\) or \(x > \tau _1, y < \tau _2\) or \(x < \tau _1, y > \tau _2\). Since any two straight lines intersect at either one or zero points, one quadrant will have zero probability, therefore contradicting our assumption that none of the cell probabilities are zero. Therefore, \(|\rho | = 1\) is incompatible with the distribution of X.

Now we show that any \(\rho \in (0,1)\) is compatible with X. To do this, let \(a,b>0\) be two positive real numbers and define the random variable

Then \(\text {pr}[Z(a,b)\in A_{ij}]=\text {pr}[X=(i,j)]=p_{ij}\) when \(A_{ij}\) are the quadrants \(A_{00}=[-\infty ,0]\times [-\infty ,0]\), \(A_{01}=[0,\infty ]\times [-\infty ,0]\), \(A_{10}=[-\infty ,0]\times [0,\infty ]\), and \(A_{11}=[0,\infty ]\times [0,\infty ]\). Thus Z(a, b) induces X through discretization when \(\tau _{1}=\tau _{2}=0\). We now let \(a = 1/b\). When \(b \rightarrow 0^+\), we get a correlation converging to 1. When \(b \rightarrow \infty \), we get a correlation converging to \(-1\). This is visually obvious, as the points get closer and closer to a straight line, and is confirmed algebraically in the online appendix accompanying this paper. At the end of the online appendix, we also show that any intermediate value is possible, which is a consequence of the continuity of the correlation of Z as a function of b.

Proof of Proposition 1

Theorem 3.2.3 of Nelsen (2007, p. 70) shows that all copulas C that fulfil eq. (5) fulfil \(W_p(u,v) \le C(u,v) \le M_p(u,v)\) and that \(W_p,M_p\) are copulas fulfilling the constraint in eq. (5). The Höffding representation in eq. (4) therefore implies \(\rho (W_p[F_1,F_2]) \le \rho (F) \le \rho (M_p[F_1,F_2])\). Since \(W_p,M_p\) are copulas, this bound cannot be improved. We now show that the interval with limits as in the bound for \(\rho (F)\) equals \(\rho (\mathcal {P}, p)\). We use an argument that goes back to Fréchet (1958), (see Nelsen 2007, p. 15, exercise 2.4).

Let \(\rho _L= \rho (W_p[F_1,F_2])\) and \(\rho _U = \rho (M_p[F_1,F_2])\). Suppose \(\rho \in [\rho _L, \rho _U]\). Then there is an \(0 \le \alpha \le 1\) such that

Let \(C_\alpha (u,v) = \alpha W_p(u,v) + (1 - \alpha ) M_P(u,v)\) which is a convex combination of copulas, and hence a copula (Nelsen 2007, Exercise 2.3 and 2.4). Let \(H_\alpha (x_1,x_2) = C_\alpha (F_1(x_1), F_2(x_2))\). By the second half of Sklar’s theorem, \(H_\alpha \) is a distribution function with marginals \(F_1,F_2\). Since \(F_1(\tau _1) = p_{01} + p_{00}\) and \(F_2(\tau _2) = p_{10} + p_{00}\), and \(p_{00} = H_\alpha (\tau _1,\tau _2) = C_\alpha (F_1(\tau _1), F_2(\tau _2)) = C_\alpha (p_{01} + p_{00})\) the copula \(C_\alpha \) fulfils eq. (5). Therefore, \(H_\alpha \in \mathcal {P}\). We now show that \(\rho (H_\alpha ) = \rho \) using the Höffding representation from eq. (4) in Sect. 2.

Firstly, we have \(F_1(x_1) F_2(x_2) = \alpha F_1(x_1) F_2(x_2) + (1 - \alpha ) F_1(x_1) F_2(x_2)\), and so by the Höffding representation eq. (4), the covariance of \(H_\alpha \) equals

using eq. (8). \(\square \)

Proof of Proposition 2

Define \(a=p_{00}\), \(b=p_{00}+p_{01},\) \(c=p_{00}+p_{10}\) and \(d=c+b-a\). We will calculate the integral \(\int _{[0,1]^2} C_{U}\left( u,v\right) \mathrm{d}u \mathrm{d}v.\) Define the set \(A_{F}=\left[ a,d\right] \times \left[ a,d\right] \). Then

On \(A_{F}^{C}\) it holds that \(C_{U}\left( u,v\right) =\min \left( u,v\right) \). Since \(\int _{[0,1]^2}\min \left( u,v\right) \mathrm{d}u \mathrm{d}v=1/3\) and

the second integral in (9) equals

The next part is \(\int _{A_{F}}C_{U}\left( u,v\right) \mathrm{d}u \mathrm{d}v\). It is handy to divide \(A_{F}\) into four rectangles

At \(A_{BL}\) we have \(C_{U}\left( u,v\right) =a\) and

At \(A_{TR}\), \(C_{U}\left( u,v\right) =-d+u+v\) and its integral is

At \(A_{TL}\), \(C_{U}\left( u,v\right) =\min \left( u,a-c+v\right) \) and the integral equals

and at \(A_{BR}\), \(C_{U}\left( u,v\right) =\min \left( v,a-b+u\right) \) the integral is

Add all the expressions together, make the substitutions \(b=p_{01}+p_{00}\), \(a=p_{10}+p_{00}\) and simplify to get

hence

as claimed. The lower bound follows by duality.

The reasoning behind the decomposition can be seen in Fig. 6, where each colour correspond to a continuous part of the piece-wise continuous function \(C_U(u,v)\).

1.3 A.3. Proofs for Section 2.5

Proof of Proposition 3

We follow the structure of the argument of Proposition 1. To help simplify the argument, we structure the argument in a series of lemmas. For easy reference, these lemmas are stated inside the present proof. The proofs of these supporting lemmas follow after the present proof is complete.

Firstly, let us identify what can be said of C when knowing the distribution of X, which is given by the function \(p(x_1, y) = {\text {P}}( X_1 = x_1, Z_2 \le y)\), for \(x_1 = 0,1\) and y a real number. We have that \( p(0, y) = {\text {P}}(X_1 = 0 , Z_2 \le y) = {\text {P}}(Z_1 \le \tau _1, Z_2 \le y) = C(F_1(\tau _1), F_2(y)). \) Since \(p(0,y) + p(1,y) = F_2(y)\), and therefore \(p(1,y) = F_2(y) - p(0,y)\), we do not get new knowledge from similarly expressing p(1, y) in terms of the copula C. Our knowledge of C is therefore that

We now use a constrained Fréchet–Höffding bound found in Tankov (2011) to take into account this knowledge.

Lemma 1

Any copula C that satisfies eq. (10) also satisfies

where \(C_{L,\mathcal {L}}\) and \(C_{U,\mathcal {U}}\) are

Moreover, both \(C_{L,\mathcal {U}}\) and \(C_{U,\mathcal {U}}\) are copulas that satisfy eq. (10).

Let us now simplify the expressions for \(C_L, C_U\) through identifying the inner minimum or maximum in \(C_L, C_U\) respectively. This will show that they are equal to the expressions in the statement of the result. This is achieved in the following lemma.

Lemma 2

The copulas \(C_{L,\mathcal {U}}\) and \(C_{U,\mathcal {U}}\) are equal respectively to \(W_p, M_p\) from the statement of Proposition 3. That is,

and

From this, the Höffding representation from eq. (3) in Sect. 2 gives for any \(F \in \mathcal {P}\) which is compatible with p that \(\rho (W[F_1,F_2; p]) \le \rho (F) \le \rho (M[F_1,F_2; p])]\). We now show that any values within this interval can be attained as correlations in \(\rho (\mathcal {P}, p)\).

As in the proof of Proposition 1, we study convex combinations of \(W_p\) and \(M_p\). For \(0 \le \alpha \le 1\), we study \(C_\rho (u,v) =\alpha W_p + (1 - \alpha ) M_p\). That this class induces all correlation values in the stated interval follows exactly as in the proof of Proposition 1. What is left to show is that the convex combination also fulfil the restriction in eq. (10). Now from Lemma 1, we have that both \(W_p\) and \(M_p\) fulfil eq. (10), i.e., that \(W_p(F_1(\tau _1),v) = M_p(F_1(\tau _1),v) = p(0, F_2^ {-1} (v))\). Therefore, we also have \(C_\rho (F_1(\tau _1),v) =\alpha C_{L,\mathcal {U}}(F_1(\tau _1),v) + (1 - \alpha ) C_{U,\mathcal {U}}(F_1(\tau _1),v) = \alpha p(0, F_2^ {-1} (v)) + (1-\alpha ) p(0, F_2^ {-1} (v)) = p(0, F_2^ {-1} (v))\). \(\square \)

We now prove the two lemmas stated within the proof of Proposition 3.

Proof of Lemma 1

Since \(\mathcal {U}\) is compact, Theorem 1 (i) of Tankov (2011) shows the claimed bound, and that \(C_{L,\mathcal {U}}\) and \(C_{U,\mathcal {U}}\) fulfil eq. (10).

We now check the conditions of Theorem 1 (ii) of Tankov (2011) which shows that \(C_{L,\mathcal {U}}\) and \(C_{U,\mathcal {U}}\) are actually copulas. What is required is that \(\mathcal {U}\) is both a increasing and a so-called decreasing set, as defined in Tankov (2011, Sect. 2, bottom of p. 390) : A set \(S \subset [0,1]^2\) is increasing if for all \((a_1, b_1), (a_2, b_2) \in S\) we have either (i) \(a_1 \le a_2\) and \(b_1 \le b_2\) or (ii) \(a_1\ge a_2\) and \(b_1 \ge b_2\). For \(S = \mathcal {U}\) this is trivially fulfilled, since if \((a_1, b_1), (a_2, b_2) \in \mathcal {U}\) we have \(a_1 = a_2 = F_1(\tau _1)\) as we only have one possible element in the first coordinate, and therefore we trivially also have that either \(b_1 \le b_2\) or \(b_1 \ge b_2\) by tautology.

Similarly, recall that a set \(S \subseteq [0,1]^2\) is decreasing if for all \((a_1, b_1), (a_2, b_2) \in S\) we have either (i) \(a_1 \le a_2\) and \(b_1 \ge b_2\) or (ii) \(a_1\ge a_2\) and \(b_1 \le b_2\). This is again trivially fulfilled.

For the proof of Lemma 2, we need the following technical result.

Lemma 3

Let C be a bivariate copula distribution function and \(0\le a\le 1\). Then \(C(a, v) - v\) is decreasing in v when \(0\le v \le 1\).

Proof

By definition (Nelsen 2007, p. 8), a bivariate copula satifies \(C(1,v)=v\) when \(0\le v\le 1\) and

when \(0\le u_{1}\le u_{2}\le 1\) and \(0\le v_{1}\le v_{2}\le 1\). Now choose \(u_{1}=a\) and \(u_{2}=1\), and \(C(a,v_{1})-v_{1}\ge C(a,v_{2})-v_{2}\) when \(0\le v_{1}\le v_{2}\le 1\), as claimed. \(\square \)

Proof of Lemma 2

We start with \(C_{U,\mathcal {U}}.\) We must show that

where the first equality is from Lemma 1 while the second line is the definition of \(M_{p}(u,v)\) from Proposition 3. The second equality holds if, and only if,

which is true if and only if \(C(F_{1}(\tau _{1}),b)+(u-F_{1}(\tau _{1}))^{+}+(v-b)^{+}\) is minimized when \(b=v\). Now we show this is indeed the case. For \(b \le v\), we have \(0 \le v-b\), and so \(h(b) = C(F_1(\tau _1),b) + v-b\), which is decreasing by Lemma 3 (p. 20). For \(b > v\), we have \(v-b < 0\), and so \(h(b) = C(F_1(\tau _1),b)\), which is increasing. The minimum is therefore attained at \(b=v\) and

as claimed.

The case of \(C_{L,\mathcal {U}}\) is similar, as we have to show that

Again, the first line is from Lemma 1 and second line is the definition of \(W_{p}\) from Proposition 3. The second equality holds if, and only if,

This equality is true by the same reasoning as above. For \(b \le v\), we have \(b-v \le 0\) and so \(g(b) = C(F_1(\tau _1),b)\), which is increasing. For \(b > v\), we have \(b-v > 0\) and so \(g(b) = C(F_1(\tau _1),b)-b+v\), which is decreasing by Lemma 3 (p. 20). Therefore, the maximum is attained at \(b=v\), and

as claimed.

1.4 A.4. Proof for Section 3.2

Let \(S=\left( \Omega ,\Sigma \right) \) be a measure space. We assume S is an uncountable standard Borel space, i.e., it can be identified with the Borel space over the real numbers. We also assume that S is a rich Borel space, meaning it supports an independent uniform random variable that can be used as a randomization device (Kallenberg 2006, p.112). This assumption can be made with practically no loss of generality.

Proof of Theorem 2

The inclusion \(\gamma \left( P_{X,Y}\right) \subseteq \gamma \left( P_{X}\right) \) is true for any Z and S. Choose a \(P_{X}\), a \(P_{X,Y}\) compatible with \(P_X\), and a \(P_{Z}\in \gamma \left( P_{X}\right) \). We must show \(P_{Z}\in \gamma \left( P_{X,Y}\right) \), or \(P_{f_{\theta }\left( Z\right) ,Y}=P_{X,Y}\) for some \(\theta \in \Theta \). As a candidate \(\theta \) choose one of the witnesses of \(P_{f_{\theta }\left( Z\right) }=P_{X}\). By assumption there are two variables X, Y in S with distribution \(P_{X,Y}\) such that X is distributed as \(f_{\theta }\left( Z\right) \) when Z is distributed according to \(P_{Z}\). By Corollary 6.11 of Kallenberg (2006), there is a variable \(Z'\) in S such that \(X=f_{\theta }\left( Z'\right) \) and \(P_Z = P_Z'\). But then \(P_{f_{\theta }\left( Z'\right) ,Y}=P_{X,Y}\) and we are done.

1.5 A.5. Computational Simplifications when Applying Proposition 1

The integrals defining the end points of \(\rho (\mathcal {P}, p)\) in Proposition 1 can be calculated directly via numerical integration. However, this approach is computationally intensive, as we integrate functions with jumps. We here simplify the integrals in Proposition 1 by splitting the integrals into regions without jumps. This considerably reduces the computational burden of numerical integration. The analysis is analogous to the proof in Proposition 2, except that the integrals at \(A_{BR}\) and \(A_{TR}\) must be divided in two.

We only treat the upper bound. The lower bound can be found by duality. In the following argument, we assume that \(F_1, F_2\) have variance one, an assumption made without loss of generality, as it can be achieved by re-scaling.

Define

By the Höffding formula for covariance, we have

where

Here, \(J_1\) is the covariance of the distribution with the Fréchet–Höffding upper bound copula and marginals \(F_1,F_2\). The integral \(J_1\) is seen to be finite by the Cauchy-Schwarz inequality, since it is a covariance where the marginals are assumed to have finite variance. The integral \(\int _{\mathbb {R}^2} g\left( u,v\right) \mathrm{d}u \mathrm{d}v\) can be calculated using a similar decomposition as the one used in Proposition 2. We see that \(\rho (M[F_1,F_2;p]) = \sum _{i=1}^8 J_i\) where

The domains of integration can be seen in Fig. 6. Here \(R_{BL}\) is the bottom-left rectangle, \(T_{TL1}\) the first top-left triangle, et cetera.

When the marginals are normal, concrete formulas for the integrals over \(J_{3}\) and \(J_{4}\) are possible to derive by using well-known results for normal integrals (Owen 1980). A simple algebraic formula such as that given in Proposition 2 seems out of reach in this case, as the integrals \(J_{5},J_{6},J_{7},J_{8}\) are too complicated.

In our numerical implementation, we assume that \(F_1, F_2\) are equal, and are capable of supporting perfect correlations of \(\pm 1\), as is well known to hold for normal marginals. As shown in Sect. 2, the maximum possible correlation with marginals \(F_1,F_2\) equals \(J_1\), and so this assumption amounts to \(J_1 = 1\).

Rights and permissions

About this article

Cite this article

Grønneberg, S., Moss, J. & Foldnes, N. Partial Identification of Latent Correlations with Binary Data. Psychometrika 85, 1028–1051 (2020). https://doi.org/10.1007/s11336-020-09737-y

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11336-020-09737-y