Abstract

Model selection is a popular strategy in structural equation modeling (SEM). To select an “optimal” model, many selection criteria have been proposed. In this study, we derive the asymptotics of several popular selection procedures in SEM, including AIC, BIC, the RMSEA, and a two-stage rule for the RMSEA (RMSEA-2S). All of the results are derived under weak distributional assumptions and can be applied to a wide class of discrepancy functions. The results show that both AIC and BIC asymptotically select a model with the smallest population minimum discrepancy function (MDF) value regardless of nested or non-nested selection, but only BIC consistently chooses the most parsimonious one under nested model selection. When there are many non-nested models attaining the smallest MDF value, the consistency of BIC for the most parsimonious one fails. On the other hand, the RMSEA asymptotically selects a model that attains the smallest population RMSEA value, and the RMSEA-2S chooses the most parsimonious model from all models with the population RMSEA smaller than the pre-specified cutoff. The empirical behavior of the considered criteria is also illustrated via four numerical examples.

Notes

Both model goodness of fit and model complexity (or parsimony) are broad concepts, and researchers may interpret them in different ways. In the current study, model goodness of fit is measured by a minimum discrepancy function as introduced in Section 2, and model complexity is represented simply by the number of parameters. Readers who are interested in further discussion of model goodness of fit and model complexity are referred to Preacher (2006).

References

Akaike, H. (1974). A new look at the statistical model identification. IEEE Transactions on Automatic Control, 19, 716–723.

Bentler, P. M., & Weeks, D. G. (1980). Linear structural equations with latent variables. Psychometrika, 45, 289–308.

Bollen, K. A., Harden, J. J., Ray, S., & Zavisca, J. (2014). BIC and alternative Bayesian information criteria in the selection of structural equation models. Structural Equation Modeling, 21, 1–19.

Bozdogan, H. (1987). Model selection and Akaike’s information criterion (AIC): The general theory and its analytical extensions. Psychometrika, 52, 345–370.

Browne, M. W. (1974). Generalized least squares estimators in the analysis of covariance structures. South African Statistical Journal, 8, 1–24.

Browne, M. W. (1984). Asymptotic distribution-free methods for the analysis of covariance structures. British Journal of Mathematical and Statistical Psychology, 37, 62–83.

Browne, M. W., & Cudeck, R. (1993). Alternative ways of assessing model fit. In K. A. Bollen & J. S. Long (Eds.), Testing structural equation models (pp. 136–162). Newbury Park, CA: Sage.

Burnham, K. P., & Anderson, D. R. (2002). Model selection and multimodel inference: A practical information-theoretic approach (2nd ed.). New York, NY: Springer.

Cudeck, R., & Henly, S. J. (1991). Model selection in covariance structures analysis and the problem of sample size: A clarification. Psychological Bulletin, 109, 512–519.

Dziak, J. J., Coffman, D. L., Lanza, S. T., & Li, R. (2012). Sensitivity and specificity of information criteria (Tech. Rep. No. 12–119). University Park, PA: The Pennsylvania State University, The Methodology Center.

Feist, G. J., Bodner, T. E., Jacobs, J. F., Miles, M., & Tan, V. (1995). Integrating top-down and bottom-up structural models of subjective well-being: A longitudinal investigation. Journal of Personality and Social Psychology, 68, 138–150.

Fleishman, A. I. (1978). A method for simulating non-normal distributions. Psychometrika, 43, 521–532.

Hannan, E. J., & Quinn, B. G. (1979). The determination of the order of an autoregression. Journal of the Royal Statistical Society, Series B, 41, 190–195.

Haughton, D. M. A. (1988). On the choice of a model to fit data from an exponential family. Annals of Statistics, 16, 342–355.

Haughton, D. M. A., Oud, J. H. L., & Jansen, R. A. R. G. (1997). Information and other criteria in structural equation model selection. Communications in Statistics. Part B: Simulation and Computation, 26, 1477–1516.

Homburg, C. (1991). Cross-validation and information criteria in causal modeling. Journal of Marketing Research, 28, 137–144.

Ibrahim, J. G., Zhu, H.-T., & Tang, N.-S. (2008). Model selection criteria for missing-data problems using the EM algorithm. Journal of the American Statistical Association, 103, 1648–1658.

Jackson, D. L., Gillaspy, J. A., Jr., & Purc-Stephenson, R. (2009). Reporting practices in confirmatory factor analysis: An overview and some recommendations. Psychological Methods, 14, 6–23.

Jöreskog, K. G. (1993). Testing structural equation models. In K. A. Bollen & J. S. Long (Eds.), Testing structural equation models (pp. 294–316). Newbury Park, CA: Sage.

Kaplan, D. (2009). Structural equation modeling: Foundations and extensions (2nd ed.). Newbury Park, CA: Sage.

Keyes, C. L. M., Shmotkin, D., & Ryff, C. D. (2002). Optimizing well-being: The empirical encounter of two traditions. Journal of Personality and Social Psychology, 82, 1007–1022.

Kullback, S., & Leibler, R. A. (1951). On information and sufficiency. Annals of Mathematical Statistics, 22, 79–86.

Li, L. & Bentler, P. M. (2006). Robust statistical tests for evaluating the hypothesis of close fit of misspecified mean and covariance structural models. UCLA Statistics Preprint #494.

MacCallum, R. C. (2003). Working with imperfect models. Multivariate Behavioral Research, 38, 113–139.

MacCallum, R. C., & Austin, J. T. (2000). Applications of structural equation modeling in psychological research. Annual Review of Psychology, 51, 201–224.

Mallows, C. L. (1973). Some comments on \(C_p \). Technometrics, 15, 661–675.

McDonald, R. P. (2010). Structural models and the art of approximation. Perspectives on Psychological Science, 5, 675–686.

Micceri, T. (1989). The unicorn, the normal curve, and other improbable creatures. Psychological Bulletin, 105, 156–166.

Pitt, M. A., Myung, I., & Zhang, S. (2002). Toward a method of selecting among computational models of cognition. Psychological Review, 109, 472–491.

Preacher, K. J. (2006). Quantifying parsimony in structural equation modeling. Multivariate Behavioral Research, 41, 227–259.

Preacher, K. J., & Merkle, E. C. (2012). The problem of model selection uncertainty in structural equation modeling. Psychological Methods, 17, 1–14.

Preacher, K. J., Zhang, G., Kim, C., & Mels, G. (2013). Choosing the optimal number of factors in exploratory factor analysis: A model selection perspective. Multivariate Behavioral Research, 48, 28–56.

Satorra, A. (1989). Alternative test criteria in covariance structure analysis—A unified approach. Psychometrika, 54, 131–151.

Satorra, A., & Bentler, P. M. (2001). A scaled difference chi-square test statistic for moment structure analysis. Psychometrika, 66, 507–514.

Schwarz, G. (1978). Estimating the dimension of a model. Annals of Statistics, 6, 461–464.

Sclove, S. L. (1987). Application of model-selection criteria to some problems in multivariate analysis. Psychometrika, 52, 333–343.

Shah, R., & Goldstein, S. M. (2006). Use of structural equation modeling in operations management research: Looking back and forward. Journal of Operations Management, 24, 148–169.

Shao, J. (1997). An asymptotic theory for model selection. Statistica Sinica, 7, 221–264.

Shapiro, A. (1983). Asymptotic distribution theory in the analysis of covariance structures (a unified approach). South African Statistical Journal, 17, 33–81.

Shapiro, A. (1984). A note on the consistency of estimators in the analysis of moment structures. British Journal of Mathematical and Statistical Psychology, 37, 84–88.

Shapiro, A. (2007). Statistical inference of moment structures. In S.-Y. Lee (Ed.), Handbook of latent variable and related models (pp. 229–260). Amsterdam: Elsevier.

Shapiro, A. (2009). Asymptotic normality of test statistics under alternative hypotheses. Journal of Multivariate Analysis, 100, 936–945.

Steiger, J. H., & Lind, J. C. (1980). Statistically-based tests for the number of common factors. Paper presented at the annual Spring Meeting of the Psychometric Society, Iowa City, IA.

Stone, M. (1974). Cross-validatory choice and assessment of statistical predictions. Journal of the Royal Statistical Society, Series B, 36, 111–147.

Vrieze, S. I. (2012). Model selection and psychological theory: A discussion of the differences between Akaike information criterion (AIC) and the Bayesian information criterion (BIC). Psychological Methods, 17, 228–243.

Vuong, Q. H. (1989). Likelihood ratio tests for model selection and non-nested hypotheses. Econometrica, 57, 307–333.

Wahba, G. (1990). Spline models for observational data. Philadelphia: SIAM.

West, S. G., Taylor, A. B., & Wu, W. (2012). Model fit and model selection in structural equation modeling. In R. H. Hoyle (Ed.), Handbook of structural equation modeling. New York: Guilford Press.

White, H. (1982). Maximum likelihood estimation of misspecified models. Econometrica, 50, 1–25.

Additional information

The research was supported in part by Grant MOST 104-2410-H-006-119-MY2 from the Ministry of Science and Technology in Taiwan. The author would like to thank Wen-Hsin Hu and Tzu-Yao Lin for their help in simulating data.

Appendix

The following two lemmas are helpful for proving the four main theorems.

Lemma 1

Let \(\mathcal{G}_N \) denote a random function of \(\alpha \) and \(\mathcal{B}=\big \{ \mathop {\max }\nolimits _{\alpha _1 \in \mathcal{A}_1 } \mathcal{G}_N \left( {\alpha _1 } \right) <\mathop {\min }\nolimits _{\alpha _2 \in \mathcal{A}_2 } \mathcal{G}_N \left( {\alpha _2 } \right) \big \}\). If the cardinalities of \(\mathcal{A}_1 \) and \(\mathcal{A}_2 \) are both finite, and \({\mathbb {P}}\left( {\mathcal{G}_N \left( {\alpha _1 } \right) >\mathcal{G}_N \left( {\alpha _2 } \right) } \right) \rightarrow 0\) for each \(\alpha _1 \in \mathcal{A}_1 \) and \(\alpha _2 \in \mathcal{A}_2 \), then \({\mathbb {P}}\left( \mathcal{B} \right) \rightarrow 1\) as \(N\rightarrow +\infty \).

Proof of Lemma 1

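It suffices to show that the probability of \(\mathcal{B}^{c}\), the complement of \(\mathcal{B}\), converges to zero. By the fact \(\mathcal{B}^{c}\subset \, \mathop \bigcup \nolimits _{\alpha _1 \in \mathcal{A}_1 ,\alpha _2 \in \mathcal{A}_2 } \left\{ {\mathcal{G}_N \left( {\alpha _1 } \right) >\mathcal{G}_N \left( {\alpha _2 } \right) } \right\} \), Boole’s inequality implies that

$$\begin{aligned} {\mathbb {P}}\left( \mathcal{B}^{c} \right) \le \mathop \sum \nolimits _{\alpha _1 \in \mathcal{A}_1 } \mathop \sum \nolimits _{\alpha _2 \in \mathcal{A}_2 } {\mathbb {P}}\left( {\mathcal{G}_N \left( {\alpha _1 } \right) >\mathcal{G}_N \left( {\alpha _2 } \right) } \right) . \end{aligned}$$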

Since both \(\mathcal{A}_1 \) and \(\mathcal{A}_2 \) are finite, and each \({\mathbb {P}}\left( {\mathcal{G}_N \left( {\alpha _1 } \right) >\mathcal{G}_N \left( {\alpha _2 } \right) } \right) \rightarrow 0\), the right-hand side converges to zero as \(N\rightarrow +\infty \).

Lemma 1 implies that under finite \(\mathcal{A}\), if we can show that \({\mathbb {P}}\left( {\mathcal{C}\left( {\alpha _1 ,\mathcal{D}, s} \right) >\mathcal{C}\left( {\alpha _2 ,\mathcal{D}, s} \right) } \right) \rightarrow 0\) for each \(\alpha _1 \in \mathcal{A}_1 \) and \(\alpha _2 \in \mathcal{A}_2 \), then \({\hat{\alpha }}_N \in \mathcal{A}_1 \) with probability tending to one. \(\square \)
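As a worked instance of the union bound behind Lemma 1, the sum over pairs can be bounded further by the number of pairs times the largest pairwise probability. The numbers in the last line (two models in \(\mathcal{A}_1 \), three in \(\mathcal{A}_2 \), every pairwise probability at most 0.01) are illustrative assumptions only and do not come from the paper; \(|\mathcal{A}_j |\) here denotes the cardinality of the set.

```latex
\begin{aligned}
\mathbb{P}\left(\mathcal{B}^{c}\right)
  &\le \sum_{\alpha_1 \in \mathcal{A}_1} \sum_{\alpha_2 \in \mathcal{A}_2}
       \mathbb{P}\left( \mathcal{G}_N(\alpha_1) > \mathcal{G}_N(\alpha_2) \right) \\
  &\le |\mathcal{A}_1|\,|\mathcal{A}_2|
       \max_{\alpha_1 \in \mathcal{A}_1,\ \alpha_2 \in \mathcal{A}_2}
       \mathbb{P}\left( \mathcal{G}_N(\alpha_1) > \mathcal{G}_N(\alpha_2) \right) \\
  &\le 2 \cdot 3 \cdot 0.01 = 0.06 .
\end{aligned}
```

Because the number of pairs is fixed while each pairwise probability vanishes, the bound goes to zero; this is where finiteness of both sets is used.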

Lemma 2

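We define \(\mathcal{F}^{*}\left( \alpha \right) =\frac{\partial ^{2}\mathcal{D}\left( {\sigma ^{*}\left( \alpha \right) ,\sigma ^{0}} \right) }{\partial \theta _\alpha \partial \theta _\alpha ^T }\) and \(\mathcal{J}^{*}\left( \alpha \right) =\frac{\partial ^{2}\mathcal{D}\left( {\sigma ^{*}\left( \alpha \right) ,\sigma ^{0}} \right) }{\partial \theta _\alpha \partial \sigma ^{T}}\). Let \(\alpha _1 \) and \(\alpha _2 \) denote the indexes of two models. Consider the two test statistics

$$\begin{aligned} T_N \left( {\alpha _1 ,\alpha _2 ,w_1 ,w_2 } \right) =N\left( {w_1 {\hat{\mathcal{D}}} \left( {\alpha _1 } \right) -w_2 {\hat{\mathcal{D}}} \left( {\alpha _2 } \right) } \right) \end{aligned}$$

and

$$\begin{aligned} Z_N \left( {\alpha _1 ,\alpha _2 ,w_1 ,w_2 } \right) =\sqrt{N}\left( {w_1 {\hat{\mathcal{D}}} \left( {\alpha _1 } \right) -w_2 {\hat{\mathcal{D}}} \left( {\alpha _2 } \right) } \right) , \end{aligned}$$

where \(w_1 \) and \(w_2 \) are two nonnegative weights.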

-

(1)

If \(w_1 \mathcal{D}\left( {\sigma ^{*}\left( {\alpha _1 } \right) ,\sigma ^{0}} \right) =w_2 \mathcal{D}\left( {\sigma ^{*}\left( {\alpha _2 } \right) ,\sigma ^{0}} \right) ,\) and \(\sigma ^{*}\left( {\alpha _1 } \right) =\sigma ^{*}\left( {\alpha _2 } \right) \), but \(\left| {\alpha _1 } \right| <\left| {\alpha _2 } \right| \),

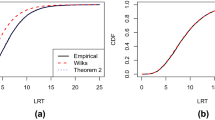

$$\begin{aligned} T_N \left( {\alpha _1 ,\alpha _2 ,w_1 ,w_2 } \right) \longrightarrow _L T\left( {\alpha _1 ,\alpha _2 ,w_1 ,w_2 } \right) =\mathop \sum \nolimits _k \lambda _k \chi _k^2 , \end{aligned}$$where \(\chi _k^2 \)’s are independent chi-square random variables, and \(\lambda _k \) is the \(k^{th}\) eigenvalue of \(\mathcal{W}^{*}\left( {\alpha _1 ,\alpha _2 ,w_1 ,w_2 } \right) \mathcal{V}^{*}\left( {\alpha _1 ,\alpha _2 } \right) \) with

$$\begin{aligned} \mathcal{W}^{*}\left( {\alpha _1 ,\alpha _2 ,w_1 ,w_2 } \right) =\frac{1}{2}\left( {{\begin{array}{cc} {w_1 \mathcal{F}^{*}\left( {\alpha _1 } \right) }&{} 0 \\ 0&{} {-w_2 \mathcal{F}^{*}\left( {\alpha _2 } \right) } \\ \end{array} }} \right) \end{aligned}$$and

$$\begin{aligned}&\mathcal{V}^{*}\left( {\alpha _1 ,\alpha _2 } \right) \\&\quad =\left( {{\begin{array}{cc} {\mathcal{F}^{*}\left( {\alpha _1 } \right) ^{-1}\mathcal{J}^{*}\left( {\alpha _1 } \right) {\Gamma }\mathcal{J}^{*}\left( {\alpha _1 } \right) ^{T}\mathcal{F}^{*}\left( {\alpha _1 } \right) ^{-1}}&{} \\ {\mathcal{F}^{*}\left( {\alpha _2 } \right) ^{-1}\mathcal{J}^{*}\left( {\alpha _2 } \right) {\Gamma }\mathcal{J}^{*}\left( {\alpha _1 } \right) ^{T}\mathcal{F}^{*}\left( {\alpha _1 } \right) ^{-1}}&{} {\mathcal{F}^{*}\left( {\alpha _2 } \right) ^{-1}\mathcal{J}^{*}\left( {\alpha _2 } \right) {\Gamma }\mathcal{J}^{*}\left( {\alpha _2 } \right) ^{T}\mathcal{F}^{*}\left( {\alpha _2 } \right) ^{-1}} \\ \end{array} }} \right) . \end{aligned}$$In particular, if \(w_1 =w_2 =1\), then \(T_N \left( {\alpha _1 ,\alpha _2 } \right) \equiv T_N \left( {\alpha _1 ,\alpha _2 ,1,1} \right) \longrightarrow _L T\left( {\alpha _1 ,\alpha _2 } \right) \).

(2) If \(w_1 \mathcal{D}\left( {\sigma ^{*}\left( {\alpha _1 } \right) ,\sigma ^{0}} \right) =w_2 \mathcal{D}\left( {\sigma ^{*}\left( {\alpha _2 } \right) ,\sigma ^{0}} \right) \), but \(\sigma ^{*}\left( {\alpha _1 } \right) \ne \sigma ^{*}\left( {\alpha _2 } \right) \), then

$$\begin{aligned} Z_N \left( {\alpha _1 ,\alpha _2 ,w_1 ,w_2 } \right) \longrightarrow _L Z\left( {\alpha _1 ,\alpha _2 ,w_1 ,w_2 } \right) , \end{aligned}$$

where \(Z\left( {\alpha _1 ,\alpha _2 ,w_1 ,w_2 } \right) \sim N\left( {0,\nu \left( {\alpha _1 ,\alpha _2 ,w_1 ,w_2 } \right) ^{T}{\Gamma }\nu \left( {\alpha _1 ,\alpha _2 ,w_1 ,w_2 } \right) } \right) \), with \({\Gamma }\) being the limiting covariance of \(\sqrt{N}\left( {s-\sigma ^{0}} \right) \), and

$$\begin{aligned} \nu \left( {\alpha _1 ,\alpha _2 ,w_1 ,w_2 } \right) =w_1 \frac{\partial \mathcal{D}\left( {\sigma ^{*}\left( {\alpha _1 } \right) ,\sigma ^{0}} \right) }{\partial \sigma }-w_2 \frac{\partial \mathcal{D}\left( {\sigma ^{*}\left( {\alpha _2 } \right) ,\sigma ^{0}} \right) }{\partial \sigma }. \end{aligned}$$

In particular, if \(w_1 =w_2 =1\), then \(Z_N \left( {\alpha _1 ,\alpha _2 } \right) \equiv Z_N \left( {\alpha _1 ,\alpha _2 ,1,1} \right) \,\longrightarrow _{L} Z\left( {\alpha _1 ,\alpha _2 } \right) \).

Lemma 2 can be seen as a variant of Theorem 3.3 from Vuong (1989) in the SEM setting with a general discrepancy function \(\mathcal{D}\). The proof of part (1) relies on the consistency and the asymptotic distribution of an MDF estimator under misspecified SEM models (see Satorra, 1989; Shapiro, 1983, 1984, 2007). Similar results can also be found in Satorra and Bentler (2001). Part (2) can be justified by the Delta method if we treat the discrepancy function as a function of a sample covariance vector (see Shapiro, 2009 for more general results). The complete proof of Lemma 2 can be found in the online supplemental material.
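The limiting variable in part (1) of Lemma 2 is a weighted sum of chi-square variables, whose tail probabilities generally have no closed form. The following is a minimal Monte Carlo sketch for approximating them. It assumes that each chi-square term has one degree of freedom (as in Vuong, 1989) and that the eigenvalues \(\lambda _k \) have already been computed; the function name `weighted_chi2_tail` and the eigenvalues used in the usage note are hypothetical.

```python
import random


def weighted_chi2_tail(lams, t, n_draws=100_000, seed=0):
    """Monte Carlo estimate of P(sum_k lam_k * chi2_k > t).

    Assumption: the chi2_k are independent chi-square variables with
    one degree of freedom, simulated as squared standard normals.
    `lams` holds the eigenvalues lambda_k; negative weights are allowed.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_draws):
        total = sum(lam * rng.gauss(0.0, 1.0) ** 2 for lam in lams)
        if total > t:
            hits += 1
    return hits / n_draws
```

For example, `weighted_chi2_tail([0.5, -0.5], 0.0)` approximates the probability that a difference of two equally weighted chi-square terms is positive, which is one half by symmetry. Negative eigenvalues arise naturally because \(\mathcal{W}^{*}\) has blocks of opposite sign.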

Because the consistency of the MDF estimator is crucial for deriving our results, the technical details of Theorem 1 in Shapiro (1984) are briefly discussed here. The consistency of an MDF estimator depends on the following: (a) \(\mathcal{D}\left( {\sigma _\alpha \left( {\theta _\alpha } \right) ,\sigma } \right) \) is a continuous function in both \(\theta _\alpha \) and \(\sigma \); (b) \(\Theta _\alpha \) is compact; (c) \(\theta _\alpha \) is conditionally identified at \(\theta _\alpha ^{*} \in \Theta _\alpha \), given \(\sigma =\sigma ^{0}\); (d) \(s\) is a consistent estimator for \(\sigma \). Condition (a) is implied by our Conditions C and D, (b) is satisfied by part (2) of Condition E, and (c) follows from part (1) of Condition E. Finally, (d) can be obtained by using Condition A. Shapiro (1984) also observed that in practice the compactness of \(\Theta _\alpha \) does not hold. Hence, Shapiro proposed the condition of inf-boundedness: There exists a \(\delta >\mathcal{D}\left( {\sigma _\alpha \left( {\theta _\alpha ^{*} } \right) ,\sigma ^{0}} \right) \) and a compact subset \(\Theta _\alpha ^{*} \subset \Theta _\alpha \) such that \(\left\{ {\theta _\alpha |\mathcal{D}\left( {\sigma _\alpha \left( {\theta _\alpha } \right) ,\sigma } \right) <\delta } \right\} \subset \Theta _\alpha ^{*} \) whenever \(\sigma \) is in a neighborhood of \(\sigma ^{0}\). Under this condition, the minimization actually takes place on \(\Theta _\alpha ^{*} \) for all \(\sigma \) near \(\sigma ^{0}\). Although it may not be easy to justify the inf-boundedness condition for all types of SEM models, finding a counterexample of practical interest is also difficult.

Proof of Theorem 1

(1) If \(\mathcal{A}_d =\mathcal{A}\), part (1) holds trivially. For \(\mathcal{A}\backslash \mathcal{A}_d \ne \emptyset \), by Lemma 1, we only need to show

$$\begin{aligned} {\mathbb {P}}\left( {IC_{k_N } \left( {\alpha _d } \right) >IC_{k_N } \left( \alpha \right) } \right) \rightarrow 0, \end{aligned}$$

for each \(\alpha _d \in \mathcal{A}_d \) and \(\alpha \in \mathcal{A}\backslash \mathcal{A}_d \). Since \(IC_{k_N } \left( {\alpha _d } \right) \longrightarrow _P \mathcal{D}^{*}\left( {\alpha _d } \right) \) and \(IC_{k_N } \left( \alpha \right) \longrightarrow _P \mathcal{D}^{*}\left( \alpha \right) >\mathcal{D}^{*}\left( {\alpha _d } \right) \) under \(k_N =O_{\mathbb {P}} \left( {N^{-1}} \right) \), given \(\epsilon >0\) we can find \(N\left( \epsilon \right) \) such that \({\mathbb {P}}\left( {IC_{k_N } \left( {\alpha _d } \right) >\frac{\mathcal{D}^{*}\left( \alpha \right) +\mathcal{D}^{*}\left( {\alpha _d } \right) }{2}} \right) <\frac{\epsilon }{2}\) and \({\mathbb {P}}\left( {IC_{k_N } \left( \alpha \right)<\frac{\mathcal{D}^{*}\left( \alpha \right) +\mathcal{D}^{*}\left( {\alpha _d } \right) }{2}} \right) <\frac{\epsilon }{2}\) whenever \(N>N\left( \epsilon \right) \). Hence, we have \({\mathbb {P}}\left( {IC_{k_N } \left( {\alpha _d } \right) >IC_{k_N } \left( \alpha \right) } \right) <\epsilon \) if \(N>N\left( \epsilon \right) \).

(2) Let \(\alpha \) denote any element in \(\mathcal{A}_d \backslash \alpha _d^{*} \). Since the event \(\left\{ {IC_{k_N } \left( {\alpha _d^{*} } \right) -IC_{k_N } \left( \alpha \right) >0} \right\} \) is contained in \(\left\{ {{\hat{\alpha }}_N \in \mathcal{A}_d \backslash \alpha _d^{*} } \right\} \), we have \({\mathbb {P}}\left( {{\hat{\alpha }}_N \in \mathcal{A}_d \backslash \alpha _d^{*} } \right) \ge {\mathbb {P}}\left( {IC_{k_N } \left( {\alpha _d^{*} } \right) -IC_{k_N } \left( \alpha \right) >0} \right) \).

Case A: \(\sigma ^{*}\left( {\alpha _d^{*} } \right) =\sigma ^{*}\left( \alpha \right) \). The assumption implies that \(N\left( {IC_{k_N } \left( {\alpha _d^{*} } \right) -IC_{k_N } \left( \alpha \right) } \right) =T_N \left( {\alpha _d^{*} ,\alpha } \right) +Nk_N \left( {\left| {\alpha _d^{*} } \right| -\left| \alpha \right| } \right) \). Since \(\mathop {\lim }\nolimits _{N\rightarrow \infty } {\mathbb {P}}\left( {Nk_N \le M} \right) =1\) for some \(M<+\infty \) by the fact \(k_N =O_{\mathbb {P}} \left( {N^{-1}} \right) \), we have

$$\begin{aligned} {\mathbb {P}}\left( {N\left( {IC_{k_N } \left( {\alpha _d^{*} } \right) -IC_{k_N } \left( \alpha \right) } \right)>0} \right) \rightarrow {\mathbb {P}}\left( {T\left( {\alpha _d^{*} ,\alpha } \right)>M\left( {\left| {\alpha _d^{*} } \right| -\left| \alpha \right| } \right) } \right) >0, \end{aligned}$$

and conclude \(\mathop {\lim }\nolimits _{N\rightarrow \infty } {\mathbb {P}}\left( {{\hat{\alpha }}_N \in \mathcal{A}_d \backslash \alpha _d^{*} } \right) \ge \mathop {\max }\nolimits _{\alpha \in \mathcal{A}_d \backslash \alpha _d^{*} } {\mathbb {P}}\left( {T\left( {\alpha _d^{*} ,\alpha } \right)>M\left( {\left| {\alpha _d^{*} } \right| -\left| \alpha \right| } \right) } \right) >0\).

Case B: \(\sigma ^{*}\left( {\alpha _d^{*} } \right) \ne \sigma ^{*}\left( \alpha \right) \). Since \(\sqrt{N}\left( {IC_{k_N } \left( {\alpha _d^{*} } \right) -IC_{k_N } \left( \alpha \right) } \right) =Z_N \left( {\alpha _d^{*} ,\alpha } \right) +\sqrt{N}k_N \left( {\left| {\alpha _d^{*} } \right| -\left| \alpha \right| } \right) \), we have

$$\begin{aligned} {\mathbb {P}}\left( {\sqrt{N}\left( {IC_{k_N } \left( {\alpha _d^{*} } \right) -IC_{k_N } \left( \alpha \right) } \right)>0} \right) \rightarrow {\mathbb {P}}\left( {Z\left( {\alpha _d^{*} ,\alpha } \right)>0} \right) >0. \end{aligned}$$

Therefore, \(\mathop {\lim }\nolimits _{N\rightarrow \infty } {\mathbb {P}}\left( {{\hat{\alpha }}_N \in \mathcal{A}_d \backslash \alpha _d^{*} } \right) \ge \mathop {\max }\nolimits _{\alpha \in \mathcal{A}_d \backslash \alpha _d^{*} } {\mathbb {P}}\left( {Z\left( {\alpha _d^{*} ,\alpha } \right)>0} \right) >0\). \(\square \)
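The criterion compared throughout these proofs has the form \(IC_{k_N } \left( \alpha \right) ={\hat{\mathcal{D}}} \left( \alpha \right) +k_N \left| \alpha \right| \). A minimal sketch of selection by this criterion follows. The penalty weights \(k_N =2/N\) for AIC and \(k_N =\log N/N\) for BIC are assumptions about the scaling (some software uses \(N-1\) or a different constant), and the model names and fitted discrepancy values are hypothetical.

```python
import math


def information_criterion(d_hat, n_params, k_n):
    """IC_{k_N}(alpha) = D_hat(alpha) + k_N * |alpha|."""
    return d_hat + k_n * n_params


def select_model(models, n, rule="aic"):
    """Return the name of the model with the smallest criterion value.

    models: dict mapping a model name to (d_hat, n_params).
    Assumed penalty weights: k_N = 2/N (AIC) and k_N = log(N)/N (BIC).
    """
    if rule == "aic":
        k_n = 2.0 / n
    elif rule == "bic":
        k_n = math.log(n) / n
    else:
        raise ValueError("rule must be 'aic' or 'bic'")
    return min(
        models,
        key=lambda name: information_criterion(*models[name], k_n),
    )


# Hypothetical sample discrepancies and parameter counts.
models = {"M1": (0.050, 10), "M2": (0.044, 12)}
```

With these made-up values and `n=1000`, the AIC-type penalty favors the larger model `M2` while the BIC-type penalty favors `M1`, which mirrors the distinction between \(k_N =O_{\mathbb {P}} \left( {N^{-1}} \right) \) and heavier penalties in the theorems.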

Proof of Theorem 2

(1) Let \(\alpha _d \in \mathcal{A}_d \) and \(\alpha \in \mathcal{A}\backslash \mathcal{A}_d \).

$$\begin{aligned} {\mathbb {P}}\left( {IC_{k_N } \left( {\alpha _d } \right) -IC_{k_N } \left( \alpha \right)>0} \right)= & {} {\mathbb {P}}\left( {{\hat{\mathcal{D}}} \left( {\alpha _d } \right) -{\hat{\mathcal{D}}} \left( \alpha \right) +k_N \left( {\left| {\alpha _d } \right| -\left| \alpha \right| } \right)>0} \right) \\&\rightarrow {\mathbb {P}}\left( {\mathcal{D}^{*}\left( {\alpha _d } \right) -\mathcal{D}^{*}\left( \alpha \right) >0} \right) =0. \end{aligned}$$

(2) For each \(\alpha \in \mathcal{A}_d \backslash \mathcal{A}_d^{*} \), we have

$$\begin{aligned} {\mathbb {P}}\left( {IC_{k_N } \left( {\alpha _d^{*} } \right) -IC_{k_N } \left( \alpha \right)>0} \right)= & {} {\mathbb {P}}\left( {N\left( {IC_{k_N } \left( {\alpha _d^{*} } \right) -IC_{k_N } \left( \alpha \right) } \right)>0} \right) \\= & {} {\mathbb {P}}\left( {T_N \left( {\alpha _d^{*} ,\alpha } \right)>Nk_N \left( {\left| \alpha \right| -\left| {\alpha _d^{*} } \right| } \right) } \right) \\&\longrightarrow {\mathbb {P}}\left( T\left( {\alpha _d^{*} ,\alpha } \right) >+\infty \right) =0. \end{aligned}$$

By Lemma 1, we conclude \({\mathbb {P}}\left( {IC_{k_N } \left( {\alpha _d^{*} } \right) >\mathop {\min }\nolimits _{\alpha \in \mathcal{A}_d \backslash \alpha _d^{*} } IC_{k_N } \left( \alpha \right) } \right) \longrightarrow 0\) and \(\mathop {\lim }\nolimits _{N\rightarrow \infty } {\mathbb {P}}\left( {{\hat{\alpha }}_N =\alpha _d^{*} } \right) =1\).

(3) Choose \(\alpha \in \mathcal{A}_d \backslash \alpha _d^{*} \), then

$$\begin{aligned} {\mathbb {P}}\left( {{\hat{\alpha }}_N \in \mathcal{A}_d \backslash \alpha _d^{*} } \right)\ge & {} {\mathbb {P}}\left( {\sqrt{N}\left( {IC_{k_N } \left( {\alpha _d^{*} } \right) -IC_{k_N } \left( \alpha \right) } \right)>0} \right) \\= & {} {\mathbb {P}}\left( {Z\left( {\alpha _d^{*} ,\alpha } \right)>\sqrt{N}k_N \left( {\left| \alpha \right| -\left| {\alpha _d^{*} } \right| } \right) +o_{\mathbb {P}} \left( 1 \right) } \right) \\&\longrightarrow {\mathbb {P}}\left( {Z\left( {\alpha _d^{*} ,\alpha } \right) >M\left( {\left| \alpha \right| -\left| {\alpha _d^{*} } \right| } \right) } \right) . \end{aligned}$$

Therefore, \(\mathop {\lim }\nolimits _{N\rightarrow \infty } {\mathbb {P}}\left( {{\hat{\alpha }}_N \in \mathcal{A}_d \backslash \alpha _d^{*} } \right) \ge \mathop {\max }\nolimits _{\alpha \in \mathcal{A}_d \backslash \alpha _d^{*} } {\mathbb {P}}\left( {Z\left( {\alpha _d^{*} ,\alpha } \right)>M\left( {\left| \alpha \right| -\left| {\alpha _d^{*} } \right| } \right) } \right) >0\).

\(\square \)
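To make the contrast between Theorems 1 and 2 concrete, consider the simplest nested case under strong simplifying assumptions that are not part of the theorems: the larger model has exactly one extra parameter, and the limit \(T\left( {\alpha _d^{*} ,\alpha } \right) \) is a single chi-square variable with one degree of freedom (as for correctly specified models under normal-theory maximum likelihood). Assuming further that \(Nk_N =2\) for AIC and \(Nk_N =\log N\) for BIC, the probability of preferring the larger model is the chi-square tail beyond the penalty. The function names below are hypothetical.

```python
import math


def chi2_1_sf(x):
    """P(chi-square with 1 df > x) for x >= 0.

    Uses P(Z^2 > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)) for
    a standard normal Z.
    """
    return math.erfc(math.sqrt(x / 2.0))


def overselect_prob(n, rule):
    """Approximate probability of preferring a model with one
    redundant parameter, under the assumptions stated above."""
    if rule == "aic":
        threshold = 2.0          # assumed N * k_N for AIC
    elif rule == "bic":
        threshold = math.log(n)  # assumed N * k_N for BIC
    else:
        raise ValueError("rule must be 'aic' or 'bic'")
    return chi2_1_sf(threshold)
```

Under these assumptions the AIC value is about 0.157 for every sample size, whereas the BIC value keeps shrinking as `n` grows because the threshold \(\log N\) diverges. This is the mechanism behind the non-vanishing lower bound in Theorem 1 and the limit of zero in part (2) of Theorem 2.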

Proof of Theorem 3

(1) Let \(\alpha _e \in \mathcal{A}_e \) and \(\alpha \in \mathcal{A}\backslash \mathcal{A}_e \). Because \(\frac{{\hat{\mathcal{D}}} \left( {\alpha _e } \right) }{df\left( {\alpha _e } \right) }-\frac{1}{N}\longrightarrow _P \frac{\mathcal{D}^{*}\left( {\alpha _e } \right) }{df\left( {\alpha _e } \right) }\) and \(\frac{{\hat{\mathcal{D}}} \left( \alpha \right) }{df\left( \alpha \right) }-\frac{1}{N}\longrightarrow _P \frac{\mathcal{D}^{*}\left( \alpha \right) }{df\left( \alpha \right) }>\frac{\mathcal{D}^{*}\left( {\alpha _e } \right) }{df\left( {\alpha _e } \right) }\), we have

$$\begin{aligned} {\mathbb {P}}\left( {RMSEA_N \left( {\alpha _e } \right) -RMSEA_N \left( \alpha \right)>0} \right) ={\mathbb {P}}\left( {\frac{\mathcal{D}^{*}\left( {\alpha _e } \right) }{df\left( {\alpha _e } \right) }>\frac{\mathcal{D}^{*}\left( \alpha \right) }{df\left( \alpha \right) }+o_{\mathbb {P}} \left( 1 \right) } \right) \longrightarrow 0. \end{aligned}$$

(2) Let \(\alpha \in \mathcal{A}_e \backslash \mathcal{A}_e^{*} \). By the definition of \(\alpha _e^{*} \) and \(\mathcal{A}_e \backslash \alpha _e^{*} \), we know that \(\frac{\mathcal{D}^{*}\left( {\alpha _e^{*} } \right) }{df\left( {\alpha _e^{*} } \right) }=\frac{\mathcal{D}^{*}\left( \alpha \right) }{df\left( \alpha \right) }\) and hence \(df\left( \alpha \right) \mathcal{D}^{*}\left( {\alpha _e^{*} } \right) =df\left( {\alpha _e^{*} } \right) \mathcal{D}^{*}\left( \alpha \right) \). Since the event \(\big \{ RMSEA_N \left( {\alpha _e^{*} } \right) -RMSEA_N \left( \alpha \right) >0 \big \}\) is contained in \(\left\{ {{\hat{\alpha }}_N \in \mathcal{A}_e \backslash \alpha _e^{*} } \right\} \), we have \({\mathbb {P}}\left( {{\hat{\alpha }}_N \in \mathcal{A}_e \backslash \alpha _e^{*} } \right) \ge {\mathbb {P}}\left( {RMSEA_N \left( {\alpha _e^{*} } \right) -RMSEA_N \left( \alpha \right) >0} \right) \).

Case A. \(df\left( \alpha \right) \mathcal{D}^{*}\left( {\alpha _e^{*} } \right) =df\left( {\alpha _e^{*} } \right) \mathcal{D}^{*}\left( \alpha \right) =0\). Since the event \(\left\{ {\left( {\frac{{\hat{\mathcal{D}}} \left( {\alpha _e^{*} } \right) }{df\left( {\alpha _e^{*} } \right) }-\frac{1}{N}} \right) -\frac{{\hat{\mathcal{D}}} \left( \alpha \right) }{df\left( \alpha \right) }>0} \right\} \) is contained in \(\left\{ {\hbox {max}\left\{ {\frac{{\hat{\mathcal{D}}} \left( {\alpha _e^{*} } \right) }{df\left( {\alpha _e^{*} } \right) }-\frac{1}{N},0} \right\} -\hbox {max}\left\{ {\frac{{\hat{\mathcal{D}}} \left( \alpha \right) }{df\left( \alpha \right) }-\frac{1}{N},0} \right\}>0} \right\} =\big \{ RMSEA_N \left( {\alpha _e^{*} } \right) -RMSEA_N \left( \alpha \right) >0 \big \}\), we have

$$\begin{aligned} {\mathbb {P}}\left( {RMSEA_N \left( {\alpha _e^{*} } \right) -RMSEA_N \left( \alpha \right)>0} \right)\ge & {} {\mathbb {P}}\left( {\frac{{\hat{\mathcal{D}}} \left( {\alpha _e^{*} } \right) }{df\left( {\alpha _e^{*} } \right) }-\frac{{\hat{\mathcal{D}}} \left( \alpha \right) }{df\left( \alpha \right) }>\frac{1}{N}} \right) \\= & {} {\mathbb {P}}\left( {T_N \left( {\alpha _e^{*} ,\alpha ,df\left( \alpha \right) ,df\left( {\alpha _e^{*} } \right) } \right) >df\left( \alpha \right) df\left( {\alpha _e^{*} } \right) } \right) \\&\rightarrow {\mathbb {P}}\left( {T\left( {\alpha _e^{*} ,\alpha ,df\left( \alpha \right) ,df\left( {\alpha _e^{*} } \right) } \right) >df\left( \alpha \right) df\left( {\alpha _e^{*} } \right) } \right) >0. \end{aligned}$$

Hence, \(\mathop {\lim }\nolimits _{N\rightarrow \infty } {\mathbb {P}}\left( {{\hat{\alpha }}_N \in \mathcal{A}_e \backslash \alpha _e^{*} } \right) \ge \mathop {\max }\nolimits _{\alpha \in \mathcal{A}_e \backslash \alpha _e^{*} } {\mathbb {P}}\left( {T\left( {\alpha _e^{*} ,\alpha ,df\left( \alpha \right) ,df\left( {\alpha _e^{*} } \right) } \right)>df\left( \alpha \right) df\left( {\alpha _e^{*} } \right) } \right) >0\).

Case B. \(df\left( \alpha \right) \mathcal{D}^{*}\left( {\alpha _e^{*} } \right) =df\left( {\alpha _e^{*} } \right) \mathcal{D}^{*}\left( \alpha \right) \) but \(\sigma ^{*}\left( {\alpha _e^{*} } \right) \ne \sigma ^{*}\left( \alpha \right) \). Using a technique similar to that in Case A, we have

$$\begin{aligned}&{\mathbb {P}}\left( {RMSEA_N \left( {\alpha _e^{*} } \right) -RMSEA_N \left( \alpha \right)>0} \right) \ge {\mathbb {P}}\left( {\frac{{\hat{\mathcal{D}}} \left( {\alpha _e^{*} } \right) }{df\left( {\alpha _e^{*} } \right) }-\frac{{\hat{\mathcal{D}}} \left( \alpha \right) }{df\left( \alpha \right) }>\frac{1}{N}} \right) \\&\qquad ={\mathbb {P}}\left( Z_N \left( {\alpha _e^{*} ,\alpha ,df\left( \alpha \right) ,df\left( {\alpha _e^{*} } \right) } \right)>\frac{df\left( \alpha \right) df\left( {\alpha _e^{*} } \right) }{\sqrt{N}} \right) \\&\qquad \rightarrow {\mathbb {P}}\left( {Z\left( {\alpha _e^{*} ,\alpha ,df\left( \alpha \right) ,df\left( {\alpha _e^{*} } \right) } \right)>0} \right) >0. \end{aligned}$$

We conclude that \(\mathop {\lim }\nolimits _{N\rightarrow \infty } {\mathbb {P}}\left( {{\hat{\alpha }}_N \in \mathcal{A}_e \backslash \alpha _e^{*} } \right) \ge \mathop {\max }\nolimits _{\alpha \in \mathcal{A}_e \backslash \alpha _e^{*} } {\mathbb {P}}\left( {Z\left( {\alpha _e^{*} ,\alpha ,df\left( \alpha \right) ,df\left( {\alpha _e^{*} } \right) } \right)>0} \right) >0\).

\(\square \)
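The sample quantity handled in the proof of Theorem 3 is the truncated ratio \(\hbox {max}\left\{ {{\hat{\mathcal{D}}} \left( \alpha \right) /df\left( \alpha \right) -1/N,0} \right\} \). A minimal sketch of RMSEA-based selection follows. Taking the square root of the truncated ratio is an assumption based on the conventional RMSEA definition (it is monotone, so it does not change which model attains the minimum), and the model names and fitted values are hypothetical.

```python
import math


def rmsea(d_hat, df, n):
    """Sample RMSEA: sqrt(max(D_hat / df - 1 / N, 0)).

    The square root follows the conventional definition and is an
    assumption here; the proof only uses the truncated ratio.
    """
    return math.sqrt(max(d_hat / df - 1.0 / n, 0.0))


def select_by_rmsea(models, n):
    """Return the name of the model with the smallest sample RMSEA.

    models: dict mapping a model name to (d_hat, df).
    Ties are broken by insertion order of the dict.
    """
    return min(models, key=lambda name: rmsea(*models[name], n))


# Hypothetical sample discrepancies and degrees of freedom.
models = {"A": (0.12, 24), "B": (0.10, 22)}
```

Note that when several models have a truncated ratio of exactly zero, the minimum is not unique; the tie-breaking rule above is an arbitrary choice for the sketch, and this is the situation in which Case A of part (2) shows that the most parsimonious model is not selected with probability tending to one.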

Proof of Theorem 4

By the fact \(\frac{{\hat{\mathcal{D}}} \left( \alpha \right) }{df\left( \alpha \right) }-\frac{1}{N}\longrightarrow _P \frac{\mathcal{D}^{*}\left( \alpha \right) }{df\left( \alpha \right) }\) for each \(\alpha \in \mathcal{A}\) and \(\frac{\mathcal{D}^{*}\left( {\alpha _c } \right) }{df\left( {\alpha _c } \right) }<c\) for all \(\alpha _c \in \mathcal{A}_c \), we have

Hence, in the first stage, we can correctly identify all the models in \(\mathcal{A}_c \) under large N. Since the second stage is just to compare \(\left| {\alpha _c } \right| \) of each model in \(\mathcal{A}_c \), a non-random quantity, we conclude that \(\mathop {\lim }\nolimits _{N\rightarrow \infty } {\mathbb {P}}\left( {{\hat{\alpha }}_N \in \mathcal{A}_c^{*} } \right) =1\). \(\square \)
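The two stages described in this proof can be sketched as follows. The sketch assumes that the cutoff \(c\) is applied on the conventional square-root RMSEA scale (if the cutoff is meant for the ratio \({\hat{\mathcal{D}}} /df-1/N\) itself, compare that ratio with \(c\) instead). The function name `select_rmsea_2s`, the behavior of returning `None` when no model passes the first stage, and all numeric values are hypothetical.

```python
import math


def rmsea(d_hat, df, n):
    """Sample RMSEA: sqrt(max(D_hat / df - 1 / N, 0)) (assumed form)."""
    return math.sqrt(max(d_hat / df - 1.0 / n, 0.0))


def select_rmsea_2s(models, n, cutoff):
    """Two-stage rule sketch.

    Stage 1: keep the models whose sample RMSEA is below `cutoff`.
    Stage 2: among those, return the one with the fewest parameters.
    models: dict mapping a model name to (d_hat, df, n_params).
    Returns None if no model passes stage 1 (a choice made for this
    sketch, not taken from the paper).
    """
    admissible = {
        name: spec
        for name, spec in models.items()
        if rmsea(spec[0], spec[1], n) < cutoff
    }
    if not admissible:
        return None
    return min(admissible, key=lambda name: admissible[name][2])


# Hypothetical fitted values: (d_hat, df, number of parameters).
models = {"A": (0.12, 24, 12), "B": (0.10, 22, 14), "C": (0.30, 26, 10)}
```

With `n=500` and `cutoff=0.06`, models `A` and `B` pass the first stage and `A` is returned because it has fewer parameters, even though `B` has the smaller sample RMSEA. The second stage compares only parameter counts, which are non-random, and that is why the argument above needs consistency of the first stage alone.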

Cite this article

Huang, PH. Asymptotics of AIC, BIC, and RMSEA for Model Selection in Structural Equation Modeling. Psychometrika 82, 407–426 (2017). https://doi.org/10.1007/s11336-017-9572-y

DOI: https://doi.org/10.1007/s11336-017-9572-y