Abstract

Digital communication has become an essential part of both personal and professional contexts. However, unique characteristics of digital communication, such as the lack of non-verbal cues or time asynchrony, clearly distinguish this new form of communication from traditional face-to-face communication. These differences raise questions about whether previous findings on traditional communication transfer to the digital communication context, and they emphasize the need for specialized research. To support and guide research on the analysis of digital communication, we conducted a systematic literature review encompassing 84 publications on digital communication in leading journals. In doing so, we provide an overview of the current body of research, focusing on distinct fields of communication, the methods used to collect and analyze digital communication data, and common methodological limitations. Building on these insights, we derive a series of comprehensive guidelines from five distinct areas for the collection and analysis of digital communication that can guide future research and organizational practice.



1 Introduction

Digital technologies have introduced a transformative era (Nambisan et al. 2019; Verhoef et al. 2021), not only disrupting traditional business models but also revolutionizing human communication (Flanagin 2020; Kovaitė et al. 2020). Within this context, digital media has created a parallel communication space that has eliminated physical communication costs and radically changed the transfer of information as well as, even more profoundly, the attribution of meaning between communicators (Drucker 2002). This new form of communication and interaction, in turn, brought about a paradigm shift that fundamentally altered the structures of today’s society (Baecker 2007; Drucker 2002). The scope of these complex structural changes has extended beyond previous predictions (Drucker 2002), leading to novel societal communication spaces characterized by high connectivity with numerous potential linkages, ultimately culminating in network-based societal structures (e.g., Castells 2004). As individuals and organizations increasingly shift to digital channels (Kovaitė et al. 2020; Nguyen et al. 2020, 2022), digital communication has become prevalent in both personal and professional domains (e.g., Bradlow et al. 2017; Parks 2014; Wiencierz and Röttger 2017). However, despite its widespread prevalence, a common definition of (digital) ‘communication’ remains elusive, as the associated terms and understandings vary considerably. Several researchers adopt one or more inherent characteristics (e.g., interactivity, networking, digitalization, collaboration) to describe communication, resulting in “semantic confusion” (Scolari 2009, p. 946). Understandings range from the mere exchange of information (Grewal et al. 2022; Merriam-Webster 2024) to “interpreting one’s own behavior and the behavior of other people as a message (of something = information) […] and attributing a supposedly intended meaning to it” (Simon 2018, p. 96) via digital technologies.
In many cases, the term is not even defined at all. Within the context of our study, we adopt Scolari’s (2009) argument that digitalization is an essential prerequisite for enabling associated characteristics of communication such as networking, collaboration, and interactivity. Building on this premise and Simon’s (2018) work, we define digital communication as the joint creation of meaning (Footnote 1) through the utilization of digital technologies.

The growing significance of digital communication creates new opportunities for both practice and research (e.g., Capriotti et al. 2023; Confetto et al. 2023; Doedt and Maruyama 2023; Ganesh and Iyer 2023; Golmohammadi et al. 2023; Kaiser and Kuckertz 2023b; Mousavi and Gu 2023; Srinivasan et al. 2023). For businesses, analyzing digital communication represents an “enormously beneficial” (Grewal et al. 2022, p. 224) opportunity for measuring the effects of brand image and employer branding (Confetto et al. 2023; Garner 2022), understanding corporate social media communication (Jha and Verma 2023; Srinivasan et al. 2023), or assessing internal employee communication and sentiments (Colladon et al. 2021). For researchers, novel and distinctive data sources (e.g., emails, text messages, or social media data) as well as increasing amounts of data make it possible to analyze digital communication patterns (e.g., Huang and Yeo 2018; Riordan and Kreuz 2010), uncover social connections hidden in relationship patterns (e.g., Lu and Miller 2019; Zack and McKenney 1995), or visualize communication themes (e.g., Doedt and Maruyama 2023; Trier 2008).

Compared to traditional communication, however, digital communication exhibits distinct characteristics (Kaye et al. 2022), such as changes in information flows (e.g., Georgakopoulou 2015; Sievert and Scholz 2017), the density of information (e.g., Arnold et al. 2023; Fakhfakh and Bouaziz 2023), or the timing of communication (e.g., Garett and Young 2023; Pluwak 2023), which in turn affect the resulting communication behavior (e.g., Kaye et al. 2022; Maris et al. 2023; Nixon and Guajardo 2023). Therefore, theoretical or methodological insights from traditional communication cannot simply be transferred to digital communication. At the same time, examining the nuances of digital communication enables researchers to gain insights that challenge and refine existing theories (Scolari 2009), ultimately leading to epistemological insights about “novel processes of social and communicative change to which technologies are often closely linked” (Flanagin 2020, p. 23).

Despite its relevance, research on the distinct phenomenon of analyzing digital communication remains scarce. Although several literature reviews address (digital) communication (see for example Kaiser and Kuckertz 2023a; Meier and Reinecke 2021), these reviews do not specifically focus on the analysis of digital communication. Instead, they cover other valuable aspects, for instance by providing an overview of the research field of entrepreneurial communication (Kaiser and Kuckertz 2023a) or by outlining the effects of digital communication on mental health (Meier and Reinecke 2021). Furthermore, while isolated research methods are supported by existing guidelines and step-by-step instructions (e.g., topic modeling (Palese and Piccoli 2020) or social network analysis (Jan 2019)), there is a notable lack of comprehensive and understandable guidelines in the literature for the general analysis of digital communication. However, a thorough understanding of the analysis of digital communication is crucial for methodically capturing the phenomenon of interest in its entirety. In contrast to traditional communication, which benefits from established research methodologies, digital communication lacks standardized methods. This absence often prompts researchers to invent their own methodologies (e.g., Humphreys and Wang 2018) or to rely on ‘single sources of truth.’ This in turn leads to a high risk of incomplete or even incorrect analyses of digital communication. Therefore, the following research questions (RQs) emerge:

RQ1

What are the current methods for analyzing digital communication data in existing research?

RQ2

What can future scholars learn from existing research given the unique nature of this form of communication?

To address these RQs and the lack of comprehensive guidelines for the systematic and rigorous analysis of digital communication data, we conducted a systematic literature review (SLR) to support transparency and reproducibility (Fisch and Block 2018). An SLR aims to identify all relevant empirical evidence that meets pre-defined inclusion criteria with the objective of retrieving, evaluating, and synthesizing reliable information on a topic of interest (Snyder 2019; vom Brocke et al. 2015). We identified 84 publications across three academic databases. Following Schryen et al. (2020), our approach included criticizing (i.e., problematizing previously published methods and literature to reveal weaknesses), aggregating evidence (i.e., compiling previously published evidence), and developing a research agenda (i.e., emphasizing guidelines and emerging challenges for analyzing digital communication data in future research endeavors). This process is aimed at deriving robust guidelines for the analysis of digital communication data within the fields of digital communication, business, and information systems research.

The contributions of this study are threefold: First, we offer a systematic and comprehensive overview of the collective body of knowledge. Our insights relate to interdisciplinary research that draws on digital communication data, providing an overview for researchers in areas such as social sciences and communication (e.g., Paxton et al. 2022; Srinivasan et al. 2023), information systems (e.g., Jha and Verma 2024; Mousavi and Gu 2023), business (e.g., Paul et al. 2021), marketing (e.g., Labrecque et al. 2020; Sonnier et al. 2011), or entrepreneurship (e.g., Kaiser and Kuckertz 2023a) as well as studies focusing on research methods (e.g., Clark et al. 2021; Fisch and Block 2018; Palese and Piccoli 2020; Stieglitz et al. 2018). Specifically, we contribute to the methodological discourse on the analysis of digital communication (e.g., Humphreys and Wang 2018), big data methodologies, and social media analytics (e.g., Stieglitz et al. 2018). Second, we propose a set of guidelines for analyzing digital communication data. In doing so, we provide practitioners and researchers with a systematic, methodologically grounded guide that can be applied in a wide variety of digital contexts. Third, we bridge the gap between research and practice in digital communication. Our overview, as well as the best practices and guidelines derived from it, enables practitioners to capture and use theoretical insights, while at the same time informing researchers about current challenges that arise in practice.

2 Method

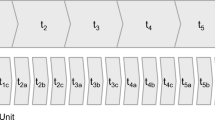

To map research on digital communication and corresponding analysis techniques, we conducted an SLR following Snyder (2019) and Tranfield et al. (2003). SLRs minimize biases by following well-defined processes (Kitchenham and Brereton 2013), enhance credibility (Paré et al. 2016), and seek to ensure rigor through quality criteria such as transparency, traceability, and reproducibility (Cram et al. 2020; Fisch and Block 2018; Templier and Paré 2018). Following Keding (2021), our systematic approach comprises four steps. Initially, we conducted several pilot searches in academic research databases (e.g., Web of Science Core Collection, Google Scholar) and leading journals to acquire “a broad conception […] about the topic” (Torraco 2005, p. 359), that is, the analysis of digital communication, as well as to identify relevant sources and keywords, as recommended by Clark et al. (2021) and Fisch and Block (2018). To address digital communication, we considered general terms, such as “online communication,” “email communication,” and “social media communication,” as well as related synonyms and homonyms. Using asterisks as truncation wildcards, we captured not only the term “communication” but also “communicator” and “communications,” as these words also aligned with our RQs. Several pilot searches and exploratory readings revealed that numerous articles referencing digital communication (or its equivalents) predominantly address the technical side of machine-to-machine communication, devoid of human involvement (e.g., Kennedy and Kolumbán 2000; Lee and Messerschmitt 2012). Consequently, to omit these articles and to specifically capture instances of digital communication involving human interaction, we introduced a second search string. Given our emphasis on textual communication, this string added concrete means of digital communication, focusing on the most widely used text-based platforms.
In this vein, we included prominent communication platforms and channels, such as “Facebook,” “WhatsApp,” “email,” and “Twitter” (now renamed “X”), to capture concrete means of digital communication. Figure 1 depicts our methodological approach step by step.
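A minimal Python sketch of how the two search strings described above can be combined into a single Boolean query, and of how an asterisk truncates a term. The term lists are abbreviated, hypothetical stand-ins rather than the review's actual search strings, and the generic syntax ignores the database-specific field codes and phrase rules of Scopus, Web of Science, and EBSCOhost.

```python
from fnmatch import fnmatchcase

# Hypothetical, abbreviated term lists (not the review's full search strings).
COMMUNICATION_TERMS = [
    "digital communicat*",
    "online communicat*",
    "email communicat*",
    "social media communicat*",
]
PLATFORM_TERMS = ["Facebook", "WhatsApp", "email", "Twitter"]


def or_group(terms):
    """Quote each term and join the terms into one parenthesized OR group."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(communication_terms, platform_terms):
    """AND the two groups so that a hit must match both search strings."""
    return f"{or_group(communication_terms)} AND {or_group(platform_terms)}"


def truncation_matches(pattern, word):
    """Emulate truncation: 'communicat*' matches any word starting with 'communicat'."""
    return fnmatchcase(word.lower(), pattern.lower())


if __name__ == "__main__":
    print(build_query(COMMUNICATION_TERMS, PLATFORM_TERMS))
    for word in ["communication", "communications", "communicator", "commute"]:
        print(word, truncation_matches("communicat*", word))
```

Running the script prints the combined query and shows that the truncated stem matches "communication," "communications," and "communicator," but not "commute."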

In Step 1, we searched for “digital communication” as well as its synonyms and homonyms in titles and keywords, combined with text-based platforms and communication media related to digital communication in titles, abstracts, keywords, and/or subjects (EBSCOhost). Following Gusenbauer and Haddaway’s (2020) assessment, we selected the following well-suited academic research databases: Business Source Premier (EBSCOhost), Scopus, and Web of Science Core Collection, with no lower time limit and up to November 2023. These databases were selected because they provide access to leading social science, business, and information systems journals, thereby “ensuring that all the top-tier sources are included in the review” (vom Brocke et al. 2009, p. 9). This procedure yielded 4937 publications. In Step 2, we aimed to identify articles relevant to our review and therefore limited it to English-language articles published in peer-reviewed academic journals or conference proceedings (Rowley and Slack 2004; Tranfield et al. 2003). Other source types (e.g., books, commentaries) were excluded due to their nonexistent, inconsistent, or nontransparent peer-review process (e.g., Moritz et al. 2023), leaving 3715 publications. In Step 3, we conducted a first quality assurance by excluding 622 duplicates. After that, and in line with previous SLRs (e.g., Gernsheimer et al. 2021; Keding 2021; Klammer and Gueldenberg 2019), we used the survey-based VHB-Jourqual3 (Footnote 2), the Academic Journal Guide (CABS) (Footnote 3), and Clarivate’s Journal Citation Report Impact Factors (JCR IF) 2022 (Footnote 4) as a further quality threshold to ensure a high quality of the articles in the review (Footnote 5). Drawing on the journal ranking conversion list by Kraus et al. (2020, p. 1032), we included articles from peer-reviewed academic journals and conferences at a VHB-Jourqual3 “C” level equivalent or higher in at least one of the three leading academic journal rankings.
This led to 285 publications. Next, two researchers assessed the suitability of the remaining publications in a two-stage content-screening process (Kitchenham and Brereton 2013), which resulted in 153 publications. In Step 4, screening for inclusion (Templier and Paré 2018), two independent researchers analyzed these publications in a thorough full-text analysis to pinpoint relevant publications that analyzed digital communication. We excluded the following: publications focusing on trading and special needs, due to their lack of focus on digital communication; publications relying solely on nominal variables, such as whether digital communication occurred or not, because digital communication itself was not investigated in these cases; and self-report studies, which tended to focus on individual perceptions and experiences and, while valuable, likewise did not investigate digital communication itself and thus did not meet the specific analytical requirements of our research. In cases of disagreement, the researchers discussed the respective publications until a consensus was reached. We selectively included articles that offered a nuanced examination of digital communication. These included qualitative, quantitative, and mixed-method publications, providing a comprehensive understanding of the digital communication landscape. Furthermore, we focused on articles that investigated specific determinants of digital communication, as these contributed significantly to advancing and refining the existing analytical framework in the field. This approach ensured a focused yet thorough exploration of the intricacies of digital communication and yielded a final sample of 84 publications (for a detailed overview, please see the appendix).
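The screening funnel of Steps 1 to 4 can be checked with simple arithmetic. The Python sketch below records the reported counts, derives the number of records removed at each step (the 3093 records remaining after de-duplication follow from 3715 minus 622), and encodes the ranking rule "C-equivalent or higher in at least one ranking." It assumes that CABS and JCR IF values have already been converted into VHB-Jourqual3-equivalent grades via the Kraus et al. (2020) list, which is not reproduced here; the grades passed to `meets_ranking_threshold` are hypothetical.

```python
# Records remaining after each step of the search and screening funnel.
FUNNEL = [
    ("Step 1: database search", 4937),
    ("Step 2: English-language, peer-reviewed sources", 3715),
    ("Step 3: duplicates removed", 3715 - 622),
    ("Step 3: journal-ranking threshold", 285),
    ("Step 3: two-stage content screening", 153),
    ("Step 4: full-text analysis", 84),
]

# VHB-Jourqual3 grades ordered from lowest to highest.
VHB_RANK = {"D": 1, "C": 2, "B": 3, "A": 4, "A+": 5}


def removed_per_step(funnel):
    """Pair each step with the number of records it removed."""
    return [
        (label, previous - remaining)
        for (_, previous), (label, remaining) in zip(funnel, funnel[1:])
    ]


def meets_ranking_threshold(vhb_equivalent_grades, minimum="C"):
    """True if at least one ranking yields a VHB-equivalent grade >= minimum.

    Each entry is a VHB-equivalent grade or None (journal not listed in that ranking).
    """
    return any(
        grade is not None and VHB_RANK[grade] >= VHB_RANK[minimum]
        for grade in vhb_equivalent_grades
    )


if __name__ == "__main__":
    for label, removed in removed_per_step(FUNNEL):
        print(f"{label}: -{removed}")
    # Hypothetical journal: unlisted in VHB-Jourqual3, C-equivalent in CABS, unlisted in JCR.
    print(meets_ranking_threshold([None, "C", None]))  # True
```

The removed records (1222, 622, 2808, 132, and 69) plus the final 84 publications add up to the 4937 initial hits.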

3 Descriptive analysis of the identified literature

Digital communication has been recognized as a means of bringing about groundbreaking changes in management communication requirements (Farmanfarmaian 1987) and management decisions (e.g., Bogorya 1985; Huber 1984). Its practical relevance and applicability increased abruptly with the general availability of the Internet in 1993 (Schatz and Hardin 1994), leading to the first scientific publication on digital communication just one year later (Griffith and Northcraft 1994). From then on, only a few other publications were released until the early 2000s. In 2004, the number of publications rose markedly, with four released that year. Subsequently, the annual publication rate remained consistently high until 2018. In 2019, however, it rose abruptly to 10 publications per year, a level that was maintained almost continuously thereafter. As a result, the years 2019 to 2023 saw the highest number of publications, with a new record of 11 publications in 2023. Overall, the publications were disseminated across 55 unique journals.

In total, publications indexed in VHB-Jourqual3 (63) came from 17 research fields (see Table 1). A particularly large number of publications were published in the fields of Business Informatics (20), Marketing (16), Organization/Human Resources (7), Sustainability Management (7), and Public Business Administration (7), covering close to two-thirds (64.77%) of publications indexed in VHB-Jourqual3.

An analysis of the VHB-Jourqual3 rankings of the journals in which the articles were published over time shows that the initial articles were exclusively published in the highest-ranking journals (A+). Subsequently, articles appeared primarily in B- to C-ranked journals. Over time, more articles were published in higher-ranked journals. The peak of top-ranked publications (A and A+) was observed in the period from 2020 to 2023 (5), closely followed by the pioneering publications from the 1990s (4). Twenty-one publications appeared in journals not ranked in VHB-Jourqual3, but the respective journals had rankings or impact factors in the other lists that justified their inclusion in the analysis. For an overview, please see Fig. 2.

One reason for this ranking distribution over time could be that accessing and analyzing digital data was initially challenging and required significant effort to meet the given quality standards. This effort might have been rewarded with publications in highly ranked journals. However, technological advancements have made it easier to access and process digital data, allowing a broader community to analyze it for scientific purposes. Ultimately, this also raises the bar for high-quality publications in this field. Additionally, one important factor that might explain the large share of publications in B- and C-ranked journals is that newer publications mostly appeared in rather technically oriented, newer journals, which (to date) tend to be ranked B or C in VHB-Jourqual3.

4 Content analysis

The results of our content analysis indicate that current analyses of digital communication take a retrospective perspective and predominantly rely on data mining (e.g., web scraping or data extraction via Application Programming Interfaces (APIs) (Footnote 6)) and basic AI, such as natural language processing (e.g., topic modeling) or rather simple machine learning (ML) (Footnote 7) algorithms (e.g., sentiment analysis through basic Python libraries), to decipher patterns, emotions, and trends in digital communication data. A deeper analysis of all publications on digital communication shows that they diverge in several areas. First, the literature corpus encompasses a range of communication fields. Second, the literature deals with different types of communication. Third, the publications employ a variety of methods for collecting and analyzing digital communication data.
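To make "rather simple" sentiment analysis concrete, the following Python sketch scores short posts with a tiny hand-made polarity lexicon and a one-word negation rule. It is an illustrative stand-in that uses only the standard library, not the procedure of any reviewed study. The lexicon entries and negators are hypothetical; real analyses would rely on validated lexicons or trained classifiers.

```python
import re

# Hypothetical toy lexicon: +1 for positive words, -1 for negative words.
LEXICON = {
    "great": 1, "love": 1, "happy": 1, "thanks": 1,
    "bad": -1, "delay": -1, "angry": -1, "broken": -1,
}
NEGATORS = {"not", "never", "no"}


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment_score(text):
    """Sum word polarities, flipping a word directly preceded by a negator."""
    tokens = tokenize(text)
    score = 0
    for i, token in enumerate(tokens):
        polarity = LEXICON.get(token, 0)
        if polarity and i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    return score


def label(text):
    """Map the numeric score to a coarse sentiment category."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    for post in ["Love the new app, thanks!", "Delivery delay again, not happy.", "Our store opens at 9."]:
        print(label(post), sentiment_score(post), post)
```

The negation rule is deliberately crude; it shows why such simple approaches can misread irony or longer-range negation in short social media fragments.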

4.1 Fields of communication

The first distinguishing feature of digital communication publications encompasses the field of communication (see Table 2). A distinction can be made between personal, business, and governmental communication, which, however, overlap in some publications.

In personal communication, the focus lies on the private lives of one or several people. Between 2002 and 2023, 23 publications addressed this topic, with a slight increase in recent years, consistent with the overall number of published works. The primary data sources used were social media data, including posts, comments, and profile pages (16), as well as text messages (4) and emails (4). The areas of personal communication primarily included personal relationships and dynamics (e.g., community building and communication (Kaufmann and Buckner 2014), norm building in social groups (Moor and Kanji 2019), or sexting (Brinkley et al. 2017)), emotional and physical health (e.g., teen health issues (Harvey et al. 2007) or emotional support for breast cancer (Yoo et al. 2014)), and social identity (e.g., attention seeking (DeWall et al. 2011) or language choice and identity (Warschauer et al. 2002)).

In business communication, the analysis focuses on work-related communication in the digital sphere. In our sample, 60 publications dealt with this field of communication. They spanned from 1994 to 2023, with a recent increase in number reflecting the general growth in the field. The data analyzed were primarily social media data (32), but emails and newsletters (19), websites (5), and text messages (3) were used as well. Several topics dominated: marketing and customer engagement (e.g., social media brand communication (Wagner et al. 2017), the effectiveness of email marketing (Chaparro-Peláez et al. 2022), or brand positioning (Vural et al. 2021)), corporate communications and strategy (e.g., leadership communication (Capriotti and Ruesja 2018), Corporate Social Responsibility (CSR) communication (Palazzo et al. 2019), and social media strategies (Yue et al. 2023)), management and organizational communication (e.g., managing by email (Wasiak et al. 2011), bad news communication (Sussman and Sproull 1999), and intra-group interactions (Zack and McKenney 1995)), and crisis management and adaptation (e.g., corporate communication in times of crisis in general (Ayman et al. 2020) or during COVID-19 in particular (Schoch et al. 2022)).

Governmental communication encompasses all discourse that is either political in nature or transmitted to or received by government entities. In our sample, a mere 7 publications from the period spanning 2004 to 2023 dealt with governmental communication. All of them used either social media data (5; Twitter: 3, Facebook: 2) or websites (2) and mainly targeted governmental communication strategies (e.g., Torpe and Nielsen 2004) or crisis and disaster management (e.g., Platania et al. 2022). Articles focusing on governmental communication did not include other fields of communication in their analysis, underlining the distinctive nature of governmental communication (DePaula et al. 2018), which is typically focused on democratic goals (i.e., providing information, seeking input, and facilitating interactions) rather than on self-promotion and marketing (e.g., Bellström et al. 2016; Bonsón et al. 2012).

Overall, there is only a small overlap between the fields of communication. Most researchers focus on a single communication field, such as business, personal, or governmental communication, with only six publications blending personal and business communication. This overlap mostly arose because the respective publications did not explicitly state which field of communication was analyzed, and both were equally possible (Al-Garadi et al. 2016; Aleti et al. 2019; Colladon and Gloor 2019; Ko et al. 2022; Li et al. 2019b; Warschauer et al. 2002). For instance, Ko et al. (2022) examine how the presence of emojis in brand-related user-generated content on social media platforms like Instagram influences consumer engagement, thereby blurring the boundaries between personal and business communication, as emojis are used to convey both personal emotions and commercial messages. Nevertheless, a trend toward specialization can be observed that is likely driven by differences in communication styles across these fields, particularly in aspects such as information disclosure or communication tone. Consequently, a focused research approach is needed in which each field is examined separately to address its unique communicative characteristics.
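The field counts reported in this subsection are consistent with the total sample, which can be verified with the inclusion-exclusion principle. Let P, B, and G denote the sets of publications on personal, business, and governmental communication. Because the governmental publications did not include other fields, every intersection involving G is empty, and only the six personal-business overlaps are subtracted:

```latex
\begin{aligned}
|P \cup B \cup G| &= |P| + |B| + |G| - |P \cap B| - |P \cap G| - |B \cap G| + |P \cap B \cap G| \\
                  &= 23 + 60 + 7 - 6 - 0 - 0 + 0 = 84
\end{aligned}
```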

4.2 Types of communication

As part of our analysis, we also examined the types of communication that were studied. Based on previous research (Balbi and Kittler 2016), we distinguished between one-to-one and one-to-many communication. One-to-one communication involves direct communication between two individuals. In the digital realm, this type of communication is commonly facilitated through mediums such as email (e.g., Sussman and Sproull 1999; Warschauer et al. 2002) or chat messages addressed to a single recipient, as exemplified in interactions between an applicant and a recruiter (e.g., Griffith and Northcraft 1994). In one-to-many communication, a single sender addresses multiple recipients simultaneously. This can be observed in the digital context through social media posts (e.g., Chiou et al. 2014) or emails sent to multiple recipients, such as promotional emails (Raman et al. 2019). Surprisingly, no major differences could be found between these types of communication with regard to topics targeted.
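The distinction can be operationalized by the number of addressees of a message. The following Python sketch is a hypothetical coding rule, not one taken from the reviewed studies: a message with exactly one recipient counts as one-to-one, while a message with several recipients or a public broadcast (e.g., a social media post without an explicit recipient list) counts as one-to-many. The `Message` record and its fields are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    sender: str
    recipients: list[str] = field(default_factory=list)
    broadcast: bool = False  # e.g., a public post addressed to no one in particular


def communication_type(message: Message) -> str:
    """Classify a message as one-to-one or one-to-many by its addressees."""
    if message.broadcast or len(message.recipients) > 1:
        return "one-to-many"
    if len(message.recipients) == 1:
        return "one-to-one"
    raise ValueError("message has neither recipients nor a broadcast audience")


if __name__ == "__main__":
    print(communication_type(Message("applicant", ["recruiter"])))
    print(communication_type(Message("brand", ["a", "b", "c"])))
    print(communication_type(Message("brand", broadcast=True)))
```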

4.3 Research methods

Within the framework of our analysis, we identified multiple methods for collecting and analyzing digital communication data (for an overview, please see Table 3). These will be explained in the following.

4.3.1 Data collection methods

A broad distinction can be made between monitoring and communication studies (Cooper and Schindler 2014). Monitoring “includes studies in which the researcher inspects the activities of a subject or the nature of some material without attempting to elicit responses from anyone” (Cooper and Schindler 2014, p. 127), whereas in communication studies, the researcher interacts directly with the subjects (e.g., through questions). The literature reviewed in our study consisted mainly of monitoring studies (75). However, although researchers did not intervene in the communication process, they still observed one-way as well as interactive communication, illustrating how responses and interactions (e.g., Twitter posts and comments) contribute to the collective construction of meaning within digital communication scenarios. Monitoring studies primarily used secondary data analysis of larger samples or case studies. Samples were drawn by selecting a specific number of participants from all available individuals or groups, or from a subgroup to be studied, which gave access to the available data of these participants. An example would be the Twitter accounts of famous Chief Executive Officers (CEOs) (Huang and Yeo 2018). Case studies mostly accessed internal data from one or multiple selected companies or governments (e.g., El Baradei et al. 2021; Floreddu and Cabiddu 2016). Lastly, there were also a few communication studies (9) that generated their data through experiments, for example, a simulation of digital versus non-digital negotiations (Griffith and Northcraft 1994).

Interestingly, most studies provided only a superficial description of their samples, if any (e.g., Harvey et al. 2007; Sonnier et al. 2011; Zhou and Zhang 2008); often, they merely stated that a randomized sample was drawn (e.g., Huang and Yeo 2018; Yoo et al. 2014). For instance, Wasiak et al. (2011) conducted their study using emails from 650 senders to 1080 recipients, selecting only every 20th email from each sender in chronological order. However, they did not explain the sampling method or the criteria for this selection in detail. The scarce sample descriptions in our review can be partly explained by the fact that the selection procedure was regularly based either on proximity to the researchers’ own context or on criteria other than the person speaking. In the former case, researchers drew, for example, on students (e.g., Alonzo and Aiken 2004; Barron and Yechiam 2002; Griffith and Northcraft 1994), which potentially renders the sample highly homogeneous even if it is drawn randomly within this group. In the latter case, communication data such as Tweets or Facebook posts were, for example, selected based on geo-locations or regions (e.g., Al-Garadi et al. 2016; Fissi et al. 2022) or on the hashtags used (e.g., Pantano et al. 2021; Platania et al. 2022), or drawn as a completely random sample (e.g., Aleti et al. 2019; Huang and Yeo 2018; Paul et al. 2021; Yoo et al. 2014). In many cases, company or brand websites (e.g., Chamberlin and Boks 2018; Palazzo et al. 2019; Torpe and Nielsen 2004; Vollero et al. 2020) as well as social media accounts of companies or individuals were examined (e.g., DeWall et al. 2011; Kaufmann and Buckner 2014; Labrecque et al. 2020; Lu and Miller 2019; Wagner et al. 2017).
This highlights another special feature of digital communication, namely that organizations, as entities, can now communicate on their own behalf without being represented by a person (of course, posts are still written by a person, but in contrast to traditional communication, this person is no longer visible).
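The selection rule reported for Wasiak et al. (2011), every 20th email per sender in chronological order, is a form of systematic sampling. The Python sketch below shows one way to implement it. Because the starting point of the original procedure is not reported, the sketch assumes that the 20th, 40th, and so on email of each sender is kept; the `(sender, timestamp, message_id)` record layout and the example mailbox are hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def every_kth_per_sender(emails, k=20):
    """Systematic sample: keep the k-th, 2k-th, ... email of each sender.

    emails: iterable of (sender, timestamp, message_id) tuples in any order.
    Assumption: counting starts at the first email, so position k is the first one kept.
    """
    by_sender = defaultdict(list)
    for sender, timestamp, message_id in emails:
        by_sender[sender].append((timestamp, message_id))

    sample = []
    for sender in sorted(by_sender):
        chronological = sorted(by_sender[sender])  # oldest email first
        sample.extend(
            (sender, timestamp, message_id)
            for timestamp, message_id in chronological[k - 1 :: k]
        )
    return sample


if __name__ == "__main__":
    start = datetime(2011, 1, 1)
    # Hypothetical mailbox: sender "a" wrote 45 emails, sender "b" wrote 19.
    mailbox = [("a", start + timedelta(hours=i), f"a{i}") for i in range(45)]
    mailbox += [("b", start + timedelta(hours=i), f"b{i}") for i in range(19)]
    print([message_id for _, _, message_id in every_kth_per_sender(mailbox)])
```

In this example, sender "a" contributes two emails (positions 20 and 40) and sender "b" none, which illustrates how such a rule underrepresents low-volume senders unless this effect is reported and addressed.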

In many cases, the drawn sample was further reduced strategically based on predefined criteria to sharpen its focus on the phenomenon of interest. For instance, Confetto et al. (2023) excluded promotional content, focusing solely on text-based employer branding materials, while Ganesh and Iyer (2023, p. 174) restricted their analysis to company-posted Tweets, omitting user comments “for the avoidance of doubt.” Moreover, several authors applied geographic and linguistic constraints, systematically excluding users who did not align with the regional focus of their studies (e.g., Capriotti et al. 2023; Doedt and Maruyama 2023). Users’ activity levels also turned out to be a pivotal exclusion criterion in our sample (e.g., Colladon and Gloor 2019; Golmohammadi et al. 2023; Kaiser and Kuckertz 2023b; Srinivasan et al. 2023); for example, Srinivasan et al. (2023) excluded firms that had been inactive on Twitter for the preceding six months.
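Activity-based exclusion criteria such as the one used by Srinivasan et al. (2023) reduce to a date comparison. The Python sketch below keeps only accounts whose most recent post falls within a given window before a reference date. Approximating "six months" as 182 days, the account names, and the `author` field are assumptions for illustration. A second helper mirrors the restriction to company-posted content by dropping posts not authored by the focal account.

```python
from datetime import date, timedelta

# Assumption: "six months" approximated as 182 days.
INACTIVITY_WINDOW = timedelta(days=182)


def active_accounts(last_post_dates, reference_date, window=INACTIVITY_WINDOW):
    """Return accounts whose latest post lies within `window` before reference_date.

    last_post_dates maps account -> date of latest post (None if it never posted).
    """
    return sorted(
        account
        for account, last_post in last_post_dates.items()
        if last_post is not None and reference_date - last_post <= window
    )


def company_posts_only(posts, company_handle):
    """Keep posts written by the company account; drop user comments and replies."""
    return [post for post in posts if post["author"] == company_handle]


if __name__ == "__main__":
    last_posts = {  # hypothetical firms
        "firm_a": date(2023, 10, 1),
        "firm_b": date(2023, 1, 15),
        "firm_c": None,
    }
    print(active_accounts(last_posts, reference_date=date(2023, 11, 1)))  # ['firm_a']
```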

The analyzed units included mostly unstructured web data, such as social media data (48), emails (21), data from news or information websites (9), forum data (5), business network data (3), data from crowdfunding and review platforms (2), and other system data (e.g., an electronic gallery writing program; 8). Among these, social media data has become the most prevalent, especially in recent years, with Facebook and Twitter data used most often (31). The data analyzed consisted of original posts, comments, and profile pages. Posts and comments in particular were characterized by their brevity (e.g., Twitter allows a maximum of 280 characters (Tur et al. 2021)), meaning that only small communication fragments were examined. In some instances, photos were also included in the analysis, such as the attractiveness of a Facebook profile photo (DeWall et al. 2011) or photos and their captions on the photo-sharing platform Instagram (Ko et al. 2022).

Data extraction differed depending on the data type. Social media and website data were primarily extracted automatically through APIs or web scraping (31). APIs allow data extraction from large, structured platforms such as Twitter or GitHub. However, not every data source offers an API. Web scraping therefore served as an alternative, allowing automated information extraction from websites (Luscombe et al. 2022; Thomas and Mathur 2019). The studies analyzed used various web scraping approaches, as also described in Luscombe et al. (2022): proprietary software (e.g., NCapture from NVivo), paid custom web scraping services (e.g., ScrapeSimple), or self-developed solutions using open-source software (e.g., Python libraries). Email data was mostly provided by companies or institutions. Because these data cannot be accessed via APIs or web scraping, they had to be retrieved by the administrators.
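As an illustration of a self-developed solution built on open-source Python components, the sketch below uses the standard library's `html.parser` to extract the text of every post from a page. The markup (`<div class="post">`) is hypothetical, since real platforms structure their pages differently, and the page is passed in as a string so that the example runs offline; in practice, it would first be downloaded, for example with `urllib.request`.

```python
from html.parser import HTMLParser

BLOCK_TAGS = {"p", "br", "li"}  # tags that separate words inside a post


class PostExtractor(HTMLParser):
    """Collect the visible text of every <div class="post"> element (hypothetical markup)."""

    def __init__(self):
        super().__init__()
        self.posts = []
        self._depth = 0  # number of open <div> tags inside the current post
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if self._depth and tag in BLOCK_TAGS:
            self._buffer.append(" ")  # keep words from adjacent paragraphs apart
        if tag != "div":
            return
        if self._depth:
            self._depth += 1  # nested div inside a post
        elif "post" in (dict(attrs).get("class") or "").split():
            self._depth = 1
            self._buffer = []

    def handle_endtag(self, tag):
        if tag == "div" and self._depth:
            self._depth -= 1
            if self._depth == 0:
                # Collapse runs of whitespace into single spaces.
                self.posts.append(" ".join("".join(self._buffer).split()))

    def handle_data(self, data):
        if self._depth:
            self._buffer.append(data)


def extract_posts(html):
    """Parse an HTML string and return the text of each post."""
    parser = PostExtractor()
    parser.feed(html)
    parser.close()
    return parser.posts


if __name__ == "__main__":
    page = (
        '<div class="post">Great <b>launch</b>!</div>'
        '<div class="ad">Buy now</div>'
        '<div class="post featured"><p>Second</p><p>post</p></div>'
    )
    print(extract_posts(page))  # ['Great launch!', 'Second post']
```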

4.3.2 Data analysis methods

Regarding data analysis, many studies combined multiple methods (46). The types of analysis consisted mainly of descriptive analyses (31), content analyses (71), and network analyses (10). Descriptive analyses describe and summarize (large) amounts of data. They were therefore frequently used to contextualize data, for example, by reporting the number of followers on social networks, posting frequencies, or the total number of units analyzed. In some cases, they also served as the main method for analyzing digital communication data. Exemplary research topics include the influence of firm Tweets on stock performance (Ganesh and Iyer 2023), feature use in work communication (Schoch et al. 2022), and a comparison of CEOs’ Twitter use (Capriotti and Ruesja 2018). However, descriptive analyses were frequently complemented by content analyses. Content analysis can be defined as “a generic name for a variety of textual analyses that typically involves comparing, contrasting and categorizing a set of data” (Duncan-Howell 2009, p. 1015, following Hara et al. 2000). Although the usefulness of subdividing this method into qualitative and quantitative analysis has been debated (see Krippendorff 2019), we briefly outline this distinction to explain the associated possibilities. A qualitative content analysis (56) delves deeply into the phenomena being studied (Harwood and Garry 2003), while a quantitative content analysis (42) focuses on how frequently phenomena occur (see Weber 1990). However, the distinction between the two is not always clear-cut, as qualities need to be captured before frequencies can be determined (Krippendorff 2019). 
This might also explain why most studies relied on a quali-quantitative content analysis design “to identify the presence of certain words, themes, or concepts in qualitative data (such as text) with quantitative analysis to quantify and analyze their meanings and relationships” (Confetto et al. 2023, p. 126). Exemplary research topics of content analysis covered exploratory analyses of firm, entrepreneur, or CEO communication (e.g., Confetto et al. 2023; Huang and Yeo 2018; Kaiser and Kuckertz 2023b), effects of active online communication (Sheng 2019), and the formulation of email requests and its effects on the diffusion of responsibility (Barron and Yechiam 2002). Network analysis was another commonly employed data analysis technique. In network analysis, the main “focus [lies on] the structure of relationships, ranging from casual acquaintance to close bonds” (Serrat 2017, p. 40). It assumes that the relationships between individuals or entities matter, not (solely) the individuals or entities themselves. In our dataset, network analysis was frequently applied to social networks, but also to email and other text message communication. Exemplary topics included evolving online debates (Prabowo et al. 2008), the dynamic evolution of digital communication networks (Trier 2008), the identification of top performers (Wen et al. 2020), and the influence of spammers on network structure (Colladon and Gloor 2019).
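To illustrate the relational focus of network analysis, the following Python sketch builds a directed network from hypothetical email sender-recipient pairs and computes normalized in- and out-degree centrality, one of the most elementary network measures. It is not how Condor or the cited studies computed their measures; dedicated libraries (e.g., NetworkX in Python) offer many more metrics.

```python
from collections import defaultdict

def degree_centrality(edges):
    """Normalized in- and out-degree centrality of a directed communication network.

    edges: iterable of (sender, recipient) pairs, e.g., one pair per email.
    Repeated messages between the same pair count once (unweighted network);
    self-addressed messages are ignored.
    """
    unique_edges = {(s, r) for s, r in edges if s != r}
    nodes = {node for edge in unique_edges for node in edge}
    out_deg, in_deg = defaultdict(int), defaultdict(int)
    for sender, recipient in unique_edges:
        out_deg[sender] += 1
        in_deg[recipient] += 1
    denom = max(len(nodes) - 1, 1)  # maximum possible number of partners per node
    return {n: {"in": in_deg[n] / denom, "out": out_deg[n] / denom} for n in sorted(nodes)}

# Hypothetical email log: (sender, recipient)
emails = [("anna", "ben"), ("anna", "carl"), ("ben", "anna"),
          ("carl", "anna"), ("anna", "ben"), ("dora", "anna")]

centrality = degree_centrality(emails)
for person, scores in centrality.items():
    print(f"{person}: in={scores['in']:.2f} out={scores['out']:.2f}")
```

In this toy network, "anna" receives messages from every other participant (in-degree 1.0), a structural position that a purely content-based analysis of the same emails would not reveal.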

Regarding the automation of analysis, a broad distinction can be made between manual and automated data analysis. Descriptive analyses are typically automated, but this is not always the case for other types of analyses. In our dataset, most publications used manual content analysis (34), in some cases with their own, manually developed coding schemes. However, since 2007 (e.g., Harvey et al. 2007), manual analyses have been supplemented (14) or replaced (29) by automated data analysis using specialized tools (see Table 4; for a detailed overview see Batrinca and Treleaven 2015 as well as Camacho et al. 2020). The most frequently used tool for content analysis was Linguistic Inquiry and Word Count (LIWC), a text analysis program that analyzes linguistic patterns and word frequencies in a text based on predefined or custom dictionaries (Boyd et al. 2022; Tausczik and Pennebaker 2010). It was followed by programming languages such as R or Python, which provide packages for sentiment analysis and various other aspects of textual data analysis. Among network analysis and visualization tools, Condor was used most often. It enables dynamic analyses of networks and visualization of relationships between actors or phenomena under observation (Gloor et al. 2009). Programming languages also allow the integration of external packages, for example, to build word clouds (e.g., the Python package ‘WordCloud’) or to collect Twitter data (e.g., the R package ‘rtweet’). In addition to the tools described here, some researchers, particularly in recent publications, have developed their own AI and ML algorithms or fine-tuned existing models. These were often trained with a dictionary and tested manually (e.g., Golmohammadi et al. 2023; Mousavi and Gu 2023), which appears to be an increasingly accepted approach.
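The principle behind dictionary-based tools such as LIWC, namely the share of words in a text that belong to predefined categories, can be sketched in a few lines of Python. The three categories and their entries below are hypothetical stand-ins, not the proprietary LIWC dictionaries; entries ending in `*` are treated as prefix (stem) matches, similar in spirit to wildcard entries in LIWC-style dictionaries.

```python
import re

# Hypothetical mini-dictionary for illustration; real dictionaries are far larger
DICTIONARY = {
    "posemo": ["good", "great", "happ*", "thank*"],
    "negemo": ["bad", "sad", "worr*"],
    "we": ["we", "us", "our"],
}

def tokenize(text):
    """Lowercase the text and keep runs of letters and apostrophes as words."""
    return re.findall(r"[a-z']+", text.lower())

def matches(token, entry):
    """Entries ending in '*' match any word with that prefix; others must match exactly."""
    return token.startswith(entry[:-1]) if entry.endswith("*") else token == entry

def category_shares(text, dictionary=DICTIONARY):
    """Percentage of tokens that fall into each dictionary category."""
    tokens = tokenize(text)
    if not tokens:
        return {cat: 0.0 for cat in dictionary}
    return {
        cat: 100 * sum(any(matches(t, e) for e in entries) for t in tokens) / len(tokens)
        for cat, entries in dictionary.items()
    }

shares = category_shares("We are happy and thankful, but our team is worried.")
print(shares)
```

The output depends entirely on which words the dictionary contains, which is why the choice, adaptation, and reporting of dictionaries matter so much for the validity of such analyses.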

4.4 Common limitations

In addition to the general limitations mentioned in nearly every study, our review revealed several prevalent limitations that arise specifically from analyzing digital communication. These concerned generalizability, the data analyzed, and the analytical techniques used.

Regarding generalizability, studies noted that findings may not transfer to other communication channels (e.g., emails) or to offline contexts. The data themselves raised further issues. First, studies pointed to a generally high potential for bias due to unstructured data and due to data privacy restrictions, which can prevent data access. Second, additional data were often insufficiently included; the inclusion of offline data or additional platforms was mentioned particularly often. Notably, while many publications did include supplementary offline data (e.g., interviews or questionnaires), only a few analyzed more than one online platform (e.g., Capriotti et al. 2023; Fernandez-Lores et al. 2022; Kaiser and Kuckertz 2023b), all of which were published within the last two years. The integration of reactions to communication (e.g., comments on social media posts) was also frequently mentioned, since this would allow researchers to uncover communication dynamics and potential differences from non-digital, everyday interactions (see also Drucker 2002). Other aspects of the data, such as punctuation marks, emoticons, and even photos, were mentioned as potentially enriching the analysis further. Finally, studies noted that translating original data for analysis purposes might distort the actual meaning, especially in automated analyses, where meaning is not double-checked by humans.

Regarding the analysis methods and techniques used, two perspectives emerged. Studies that employed manual data analysis criticized the limited amount of data that could be analyzed and suggested automation as a way to cover more data. Conversely, automated studies often pointed to a lack of depth and to potentially misinterpreted correlations. 
Specifically, dictionary-based programs and algorithms were seen as a major source of potential bias: if only certain concepts appear in a dictionary and custom dictionaries cannot be created, the analysis is bound to these concepts and the words they contain. Unsupervised automated methods in particular, frequently used for their efficiency in processing large datasets that are inscrutable to humans (Eickhoff and Neuss 2017), often require validation against “human-labeled gold-standard sets” (Palese and Piccoli 2020, p. 434) to ensure the interpretability and relevance of the extracted results (e.g., Corradini et al. 2023). For LIWC, it has already been acknowledged that its sentiment analyses may not capture the full range of nuances in meaning (Chung and Pennebaker 2008; Huang and Yeo 2018; Kim et al. 2016).
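The dictionary-related bias described above becomes visible in a deliberately naive three-category sentiment classifier that simply counts positive and negative words. In the Python sketch below, the word lists are hypothetical and invented for illustration. Negation and sarcasm both go unnoticed, which is exactly where validation against human-labeled gold standards becomes necessary.

```python
import re

# Hypothetical word lists for illustration only
POSITIVE = {"good", "great", "love"}
NEGATIVE = {"bad", "poor", "hate"}

def word_level_sentiment(text):
    """Three-category label from raw word counts; word order and context are ignored."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

examples = [
    "The service was good.",                # straightforward case
    "The service was not good.",            # negation is not recognized
    "Great, another delay. Love waiting.",  # sarcasm is not recognized
]
for text in examples:
    print(word_level_sentiment(text), "|", text)
```

All three examples are labeled positive, although a human reader would label only the first one that way.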

5 Guidelines

When analyzing digital communication, researchers face a unique environment for which no common scientific rules have yet been established. To raise awareness of the distinctiveness of analyzing digital communication and to provide initial points of reference, we derived preliminary guidelines from our analyses based on the specific characteristics of digital communication (for an overview, see Fig. 3). These guidelines revolve around five key areas of digital communication: the medium, the sample, the data, the method of analysis, and the general nature of digital communication. Both individually and in combination, these areas shape data collection and data analysis.

In turn, all areas are influenced by the chosen research objective and thus by the RQ posed. An RQ “determines the realm of constructs to be considered or the type of interventions whose effects shall be analyzed” (Hansen et al. 2022, p. 2). Accordingly, the choice of the RQ and the research objective is the starting point for all further considerations of theory and method (Gregor 2006). Specifically, an RQ narrows the scope of investigation (Creswell 2005; Johnson and Christensen 2004) and determines the “type of research design used, the sample size and sampling scheme employed, and the type of instruments administered as well as the data analysis techniques […] used” (Onwuegbuzie and Leech 2006, p. 475). This applies equally to digital communication and therefore to future research endeavors. The applicability and scope of all the following guidelines are therefore largely contingent on these preliminary considerations.

5.1 The medium

Firstly, it is important to take a closer look at the different media of digital communication. Previous research indicates a strong temptation to choose media based on their (perceived) data availability and accessibility. In recent years, Facebook and Twitter have been the focus of research (e.g., Capriotti et al. 2023; Doedt and Maruyama 2023; Fissi et al. 2022; Ganesh and Iyer 2023; Srinivasan et al. 2023). On the one hand, this may be because, as pointed out in the results section, research has often focused on similar fields and topics (i.e., mostly business communication, such as marketing; see, for example, Chamberlin and Boks (2018)) where the use of these platforms has proven useful (Bang et al. 2021; Rathore et al. 2017). On the other hand, it may also be because both platforms offer an API that allows for easy data extraction, while other types of data (e.g., emails) are more difficult to obtain (Barron and Yechiam 2002; Zack and McKenney 1995). It is crucial, however, to recognize that digital media, due to their inherent characteristics, differ significantly in their capacity to document and capture various phenomena of interest. Each form of digital media, whether video, audio, or text, possesses unique attributes that influence how effectively it can capture and convey specific types of information (Daft and Lengel 1984). For instance, visual media such as videos are exceptionally adept at demonstrating visual and spatial relationships, making them ideal for capturing events that require visual context to be understood. Textual media, in contrast, excel at providing detailed explanations and can incorporate a broader historical or theoretical context, which is essential for understanding more complex concepts. It is therefore important to consider certain hygiene factors when selecting the appropriate medium. First, it should be ensured that the chosen medium can (largely) capture the communication phenomenon under examination. 
For example, if an interaction phenomenon is analyzed, does the medium provide reactions (e.g., as in Capriotti et al. 2023; Srinivasan et al. 2023)? Or, if the timing of the communication is important, can it be retrieved from the medium (e.g., as in Doedt and Maruyama 2023; Fissi et al. 2022)? Furthermore, it should be acknowledged that digital communication, especially social media communication, which was used most frequently in our dataset (e.g., Corradini et al. 2023; Fissi et al. 2022; Mousavi and Gu 2023), is often created for a broad, public audience. If chosen deliberately (e.g., when social media strategies are analyzed, as done by Floreddu and Cabiddu 2016), such a medium can reveal distinct features of public digital communication, but it does not necessarily capture communication intended for private exchanges. Consequently, when choosing a digital medium for capturing a phenomenon, it is vital to consider which form will most accurately and effectively convey the desired content and details.

Secondly, the target group to be analyzed must be represented in the medium. In our review, we found that the presence of the group to be analyzed was one of the most important factors determining the choice of a medium (Capriotti and Ruesja 2018; Chamberlin and Boks 2018). As social networks are particularly dynamic, this factor is highly relevant. Facebook, for example, has evolved significantly over the past ten years, and its user base has also changed. To determine the suitability of a medium for specific target groups, researchers can consult various publications (e.g., DeFilippis et al. 2022; Fisch and Block 2021; Jha and Verma 2024) along with official user statistics. In some cases, however, further research may be necessary to determine the fit between platforms and specific phenomena.

Thirdly, most studies in our review acknowledged the limitation of examining only one medium and suggested exploring other media to further investigate the phenomenon (Möller et al. 2017; Pearce et al. 2020). However, the implications of this limitation are seldom discussed in sufficient depth. Individuals may behave differently across communication platforms, so a single medium may not be sufficient to shed light on a phenomenon (Alonzo and Aiken 2004; Cheshin et al. 2013). Since most of the studies we analyzed were monitoring studies, it was not possible to determine whether (and to what degree) subjects purposefully adapted their behavior to the digital context or to a specific digital medium. Thus, multiple media should always be examined if the research phenomenon itself is not media-specific. Furthermore, the integration of additional communication studies or (non-digital) interviews should be considered to dig deeper into the emergence of digital communication and the intent behind communicating in a certain way (e.g., Singh et al. 2020, who analyzed interviews in addition to emails).

5.2 The sample

In digital communication media, samples are rarely selected randomly, whether they are large samples or case studies. While most studies within our review claimed to draw a random sample from available data (e.g., Huang and Yeo 2018; Yoo et al. 2014), the selection is already shaped by one factor: who is present in a medium in the first place. This may not be too problematic for email, as it can be assumed that, at least in corporate contexts, every employee has an email account. On social media, however, registration is usually voluntary. This can significantly affect the sample, not only in terms of the number of people but also in terms of their characteristics and media usage. For instance, social media tends to attract a younger demographic (Zhou 2023) and tends to be less common among those in higher hierarchical positions (e.g., Capriotti and Ruesja 2018; Huang and Yeo 2018). The usage of specific platforms also varies significantly by geographic location (Zhou 2023). Furthermore, research has demonstrated that individuals’ social media use varies with their personality traits (e.g., Azucar et al. 2018; Özgüven and Mucan 2013). This in turn leads to a bias toward certain users and communication patterns. If samples are not selected randomly, filter bubbles and echo chambers might intensify this problem further (e.g., if only participants from the same social circles are chosen). The situation is further complicated by the fact that numerous studies examined corporate social media platforms or websites in their investigations of digital communication (e.g., Chamberlin and Boks 2018; Palazzo et al. 2019). While some research questions may be suitable for observing corporate communication without a specific individual in focus, in this context it often remains unclear who is actually communicating. 
Given that we have defined digital communication, following Simon (2018), as the joint generation of meaning through digital media, it depends heavily on the persons involved in generating that meaning. Consequently, it would be prudent to examine the specifics of the sample used thoroughly.

Additionally, it is important to consider the circumstances surrounding user exclusion and sample reduction. Within our review, we found that researchers have strategically limited their samples to keep the analysis comprehensible and manageable (e.g., due to resource constraints imposed by manual coding and analysis; Wasiak et al. 2011) and/or to ensure reliability and validity. In this context, it is common practice to exclude users who exhibit low levels of activity, particularly because users who generate few or no posts or emails can skew the results. However, it is precisely in such contexts that researchers should carefully consider, and argue, whether the absence of information really means that nothing can be learned about a particular communication phenomenon. An exclusion might be justified for some phenomena, such as the development of an individual’s language over time, but excluding people from a dataset requires careful consideration. Since many social media users do not actively post (i.e., passive users or so-called lurkers) but are still present and responsive (Brewer et al. 2021; Dolan et al. 2019), it may be useful in some cases to include dummy variables for non-posting behavior. For instance, a manager’s decision not to post could itself be a form of communication. Although passive users are frequently overlooked or excluded in digital communication analyses because they do not actively engage and participate (Gong et al. 2021), they often represent a silent but significant majority of digital media users (Ortiz et al. 2018). Hence, including passive users could provide a more comprehensive view of the inherent dynamics and underlying patterns that are not directly visible through active participation alone (e.g., passive users may still follow or be followed by others).
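Instead of dropping low-activity users, a dataset can retain them and encode non-posting as a dummy variable. The Python sketch below assumes, hypothetically, that a roster of all users present in the medium (e.g., a follower list or an employee directory) is available; in practice, such a roster may itself be difficult to obtain. User ids and counts are invented for illustration.

```python
def add_activity_dummies(users, post_counts):
    """Keep every user present in the medium and flag non-posting (passive) users.

    users: ids of all users known to be present (e.g., followers or employees).
    post_counts: dict mapping user id -> number of posts or emails sent;
                 users missing from the dict are treated as having sent none.
    """
    rows = []
    for user in users:
        n_posts = post_counts.get(user, 0)
        rows.append({"user": user, "posts": n_posts, "passive": int(n_posts == 0)})
    return rows

# Hypothetical roster and activity counts
rows = add_activity_dummies(["u1", "u2", "u3"], {"u1": 12, "u3": 0})
for row in rows:
    print(row)
```

The `passive` column can then enter a statistical model alongside activity measures, so that non-posting is analyzed rather than silently removed.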

5.3 The data

Due to the potentially biased sample and the specific characteristics of digital media, the data to be analyzed also have peculiarities. Firstly, a highly homogeneous sample may also generate highly similar content (i.e., echo chambers). In our review, we found that many studies used highly similar samples (e.g., posts by universities on Facebook and Twitter; Bularca et al. 2022; Capriotti et al. 2023). It is therefore possible that the communication of such actors adapts not only to the medium but also to the other individuals represented within that medium (French and Bazarova 2017; Jha and Verma 2024). This may be part of the phenomenon being studied, but it should be considered, as it may bias the data.

Secondly, the amount of data generated through digital communication media has implications. Since the digital communication examined by the studies in our review was often shorter than artifacts of traditional communication (Tur et al. 2021), an increasing number of entities must be included, which complicates the analysis of cohesive communication phenomena. As Krippendorff (2019, p. 5) put it, since research faces ever more extensive contexts, “the large volumes of electronically available data call for computer aids.” The amount of data available today offers enormous potential, but it often can no longer be handled through manual analysis alone. This is consistent with our finding that most studies used automated support for data collection and/or analysis (see Sect. 4.3.2). The tools developed offer ways to address this problem, and studies report satisfactory to high correlations between automated and manual analysis (Golmohammadi et al. 2023; Krippendorff 2019; Singh et al. 2020). However, due to a possible lack of context, depth, and sometimes even validity (e.g., Chung and Pennebaker 2008; Huang and Yeo 2018; Kim et al. 2016; Palese and Piccoli 2020), the literature has pointed out that researchers should remain responsible “humans-in-the-loop” (for further information, see Fügener et al. 2021; Wu et al. 2022; Zanzotto 2019), especially when employing unsupervised AI-based methods. This responsibility must now be interpreted more broadly due to a paradoxical problem of automation. On the one hand, automation is often essential for collecting and analyzing vast amounts of data where manual processing is impractical (Debortoli et al. 2016; Palese and Piccoli 2020). On the other hand, counterintuitively, it also increases the workload for humans (see Sect. 4.4). Automated data extraction, for instance, requires additional preliminary considerations. 
For example, it is important to determine whether all data are truly captured automatically and to identify any biases, such as survivorship bias. Furthermore, human support remains necessary for data analysis. Currently, most automated programs rely solely on word analysis with limited contextual reference (see also Chung and Pennebaker 2008; Huang and Yeo 2018; Kim et al. 2016). Researchers must supply this context, which is why subsamples should always be analyzed manually and compared with the output of different automated model parameterizations (e.g., Palese and Piccoli 2020). It is also recommended to enrich the data with interviews or other communicative data to provide further meaning (for good examples from our review, see Hatzithomas et al. 2016; Singh et al. 2020).
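Comparing a manually coded subsample with the output of several automated settings can be quantified with an agreement measure. The Python sketch below uses Cohen's kappa, one common chance-corrected agreement statistic; this is our illustrative choice, and the cited studies may rely on other measures (e.g., correlations or F1 scores). The labels and the two settings are hypothetical.

```python
from collections import Counter

def cohens_kappa(coding_a, coding_b):
    """Cohen's kappa: chance-corrected agreement between two codings of the same units."""
    if len(coding_a) != len(coding_b) or not coding_a:
        raise ValueError("codings must be non-empty and of equal length")
    n = len(coding_a)
    observed = sum(a == b for a, b in zip(coding_a, coding_b)) / n
    freq_a, freq_b = Counter(coding_a), Counter(coding_b)
    # Agreement expected by chance, given each coding's category frequencies
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n ** 2
    if expected == 1:  # both codings use one identical category throughout
        return 1.0
    return (observed - expected) / (1 - expected)

# Hypothetical human gold standard for a manually coded subsample
manual = ["pos", "pos", "neg", "neg", "neu", "neu"]
# Hypothetical outputs of two automated model settings on the same subsample
automated = {
    "setting_a": ["pos", "neu", "neg", "neg", "neu", "neu"],
    "setting_b": ["pos", "pos", "pos", "neg", "pos", "neu"],
}
kappas = {name: cohens_kappa(manual, labels) for name, labels in automated.items()}
for name, kappa in kappas.items():
    print(f"{name}: kappa = {kappa:.2f}")
```

Here, setting_a agrees more closely with the human coding than setting_b (kappa of 0.75 versus 0.50), which would favor setting_a for the full dataset, provided the subsample is representative.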

Finally, it is important to consider the structure of digital communication data. Web data typically exhibit a non-uniform structure, characterized by their dynamic nature and “irregularity in information organizations and structure” (Thomas and Mathur 2019, p. 454). Furthermore, digital communication data are seldom created for research purposes. While this guarantees practical relevance, it also means that no scientific categories were used during their creation, so such categories must now be applied to the data retroactively. In this context, data cleansing also plays an important role. Digital media exhibit distinct communication patterns that differ from scientific categories or dictionaries, since they encompass slang, abbreviations, emojis, and other forms of expression (e.g., Riordan and Kreuz 2010; Samoggia et al. 2019; Skovholt et al. 2014; this has also been addressed in the limitation sections of many studies in our review, as summarized in Sect. 4.4). These particularities must be considered, since some parts of the data will require either translation or different research approaches. It is essential to document clearly how this non-scientific data is transformed into scientifically usable data. Unfortunately, studies in our review only seldom provided details on this (for rare examples, see Capriotti et al. (2023) or Kaiser and Kuckertz (2023b)), which is why we encourage future research to share more details on data transformation. In this translation process, relevant elements should not be excluded simply because they are difficult to transform. However, exploring new avenues is necessary here, as predefined approaches have so far limited the possibilities (e.g., automated sentiment analysis often limits differentiation to just three categories (e.g., Bellström et al. 2016), and predefined dictionaries are frequently employed to reduce samples by keywords (e.g., Srinivasan et al. 2023)). 
The trend toward custom-built analysis models and algorithms (see Sect. 4.3.2; e.g., Golmohammadi et al. 2023; Mousavi and Gu 2023) shows the potential that exists here. We encourage researchers to continue exploring new avenues to fully exploit this potential and advance the field.
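The recommendation to document how informal data are transformed can be made concrete with a small normalization step that maps informal tokens to analyzable ones and logs every substitution, so that the log can be reported alongside the results. The Python sketch below rests on explicit assumptions: the emoticon and slang mappings are hypothetical and deliberately tiny, and mapping emojis to placeholder tokens (rather than deleting them) is one possible design choice, not a procedure taken from the reviewed studies.

```python
import re

# Hypothetical mappings; a real study would derive, justify, and report its own
EMOTICONS = {":)": "EMO_SMILE", ":(": "EMO_FROWN",
             "\U0001F602": "EMO_TEARS_OF_JOY"}  # face with tears of joy emoji
SLANG = {"idk": "I do not know", "thx": "thanks"}

def normalize(text, log):
    """Replace emoticons and slang with analyzable tokens and record each substitution."""
    for raw, token in EMOTICONS.items():
        n = text.count(raw)
        if n:
            text = text.replace(raw, f" {token} ")  # keep emoticons as separate tokens
            log.append((raw, token, n))
    for raw, expansion in SLANG.items():
        # Word boundaries prevent replacing slang inside longer words
        pattern = r"\b" + re.escape(raw) + r"\b"
        text, n = re.subn(pattern, expansion, text, flags=re.IGNORECASE)
        if n:
            log.append((raw, expansion, n))
    return re.sub(r"\s+", " ", text).strip()

log = []
clean = normalize("thx for the update :) idk when it ships \U0001F602", log)
print(clean)
for raw, replacement, count in log:
    print(f"{raw!r} -> {replacement!r} ({count}x)")
```

Because emojis become explicit tokens instead of disappearing, they remain available for later coding, and the log documents exactly what was changed.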

5.4 The method of analysis

The method of data analysis also largely determines how digital communication can be examined. Our results showed that most studies carried out (often automated) content analyses (e.g., Capriotti et al. 2023; Ko et al. 2022; Paul et al. 2021; Paxton et al. 2022). While this method offers advantages, such as reducing the extent of human involvement (Lewis et al. 2013), uncovering “systematic relationships in text and hence amongst constructs that may be overlooked by researchers” (Humphreys and Wang 2018, p. 1277), and allowing easy implementation without programming skills (Stieglitz et al. 2018), it is also important to consider whether it is always the best (or only) option for the specific research endeavor at hand. To answer this question, two aspects should be examined in more detail. First, researchers must clarify which type of content analysis should be chosen. Most of the content analyses we examined focused on actual content (qualitative) and did not merely count the occurrence of certain phenomena (quantitative). Usually, however, only certain topics were analyzed, without examining their complexity or the interaction between the respective communication partners (e.g., Capriotti and Ruesja 2018; Samoggia et al. 2019). This approach is well suited for initially narrowing down a communication phenomenon (e.g., Harwood and Garry 2003; Slater 2013) but sacrifices potential depth, as neither the generation of meaning between individuals nor the relationships and backgrounds between different topics can be established in this way (Lewis et al. 2013). Depending on the tool or algorithm used, automating the analysis can exacerbate this problem (e.g., Grewal et al. 2022; Humphreys and Wang 2018). Such a rather superficial type of communication analysis is often geared more toward information transfer than toward communication in Simon’s (2018) sense. 
Secondly, researchers should consider whether content analysis should be augmented with complementary or supplementary research methods. Regarding complementary methods, Lewis et al. (2013) advocate the increased use of hybrid methodologies that combine automated and manual techniques to leverage the advantages of both. Only some publications in our dataset adopted such hybrid approaches, integrating automated content analysis with manual coding and sub-sample analysis (e.g., Bellström et al. 2016; DeWall et al. 2011). Regarding supplementary methods, networks and relationships between people are particularly important in Simon’s (2018) understanding of communication as the joint creation of meaning through networks and relationships, that is, in how individuals’ understandings of each other influence their relationships and vice versa (Han et al. 2020; Serrat 2017; Trier 2008). In this vein, network analysis, which emphasizes the structure of relationships among individuals, is particularly relevant, as it aligns with the generation of shared meaning through communicative processes. This approach underscores that not merely the individual entities but the interactions within their social networks are crucial, thereby highlighting how behaviors and exchanges within these networks contribute to the collective construction of meaning. It matters who says what to whom, and with what intention. However, supplementary network analyses were rarely used in the identified studies (for positive counterexamples, see Kim et al. (2016) and Doedt and Maruyama (2023)). Instead, we observed supplementary descriptive analyses (e.g., Confetto et al. 2023; Jha and Verma 2023; Labrecque et al. 2020). 
Although these have the advantage of showing the prevalence of a phenomenon, it is questionable to what extent they can capture communication in a deeper sense. We therefore clearly recommend preferring methods that capture meaning and relationships (e.g., network analyses and qualitative content analyses) over more descriptive ones, unless the aim is merely to establish a phenomenon’s prevalence rather than its form. In addition, research should move beyond dyadic relationships to a broader network perspective to capture interactions leading to novel network structures, communication patterns, and meaning-making processes alike (Yang and Taylor 2015).

5.5 The nature of digital communication

Finally, it is important to consider the nature of digital communication as an overarching element. Special attention should be paid to the scope and intention of communication. In terms of scope, digital communication is likely to transcend individual media. Observations of face-to-face communication typically cover the entire communication event, whereas digital communication often occurs on multiple levels (sometimes even simultaneously) and may extend beyond digital contexts (Castells 2004; Grewal et al. 2022). For example, individuals may exchange work emails while also speaking in person during breaks, or Twitter discussions may be supplemented by private messages on another platform. Within these other contexts, communication may take completely different forms. Separate platforms may also be chosen deliberately for distinct aspects of a communication phenomenon (Yang and Liu 2017). While this does not affect the observation of every phenomenon, it should still be considered with regard to the generalizability of findings. Following Flanagin (2020, p. 24), we argue that, while the temptation to focus on technological tools may be strong, the focus should be on “aspects of technology that are likely to endure in their importance over time and across tools.” In this context, it is therefore essential to examine multiple platforms to ensure comprehensive coverage of the entire phenomenon of interest.
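Examining multiple platforms in practice requires bringing heterogeneous exports into one schema so that an actor's communication can be followed across channels. The following Python sketch assumes hypothetical field names for an email export and a social media export, as well as ISO 8601 timestamps without a time-zone suffix; real exports differ and would each need their own mapping function.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass(frozen=True)
class Message:
    """Platform-independent representation of one communicative act."""
    actor: str
    platform: str
    timestamp: datetime
    text: str

def from_email(record):
    # Hypothetical field names of an email export
    return Message(record["from"], "email", datetime.fromisoformat(record["date"]), record["body"])

def from_post(record):
    # Hypothetical field names of a social media export
    return Message(record["author"], "twitter",
                   datetime.fromisoformat(record["created_at"]), record["text"])

def actor_timeline(emails, posts, actor):
    """All messages by one actor across both sources, in chronological order."""
    messages = [from_email(r) for r in emails] + [from_post(r) for r in posts]
    return sorted((m for m in messages if m.actor == actor), key=lambda m: m.timestamp)

# Hypothetical records
emails = [{"from": "anna", "date": "2023-03-01T09:00:00", "body": "Draft attached."}]
posts = [
    {"author": "anna", "created_at": "2023-03-01T08:30:00", "text": "Big launch today!"},
    {"author": "ben", "created_at": "2023-03-01T10:00:00", "text": "Congrats!"},
]
for m in actor_timeline(emails, posts, "anna"):
    print(m.timestamp.isoformat(), m.platform, m.text)
```

Matching actors across platforms is assumed to be trivial here (identical ids); in real data, linking accounts to persons is itself a methodological step that needs to be documented.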

Regarding the intention of digital communication, it is evident that, in many cases, it is harder to determine what a person wants to express with their words. Unlike in face-to-face interactions, where emotions can often be identified easily, the absence of non-verbal cues in digital contexts makes it challenging to determine a person’s emotional state (Daft and Lengel 1984; Fimpel et al. 2023). Interpreting written texts can be complicated by individuals’ differing norms of digital communication. For instance, an older person may capitalize a word to add emphasis, while a younger person may read it as shouting. Additionally, it can be difficult to discern the intention behind a lack of response, for example, to an email: was the person busy, or were they intentionally ignoring the other party? Thus, it is important to include as much contextual information as possible in the analysis, since these issues cannot be reliably detected by automated methods. In many cases, manually analyzing subsamples will facilitate the identification of intent.

Overall, previous research has produced many relevant and factually accurate findings. However, there are issues with generalizability, and in numerous cases, a more holistic view of a communication phenomenon would be advisable. These guidelines were created to provide a clear and objective framework for future research. It is not our intention to question the validity of prior research. Rather, we aim to provide ideas for conceptualizing and analyzing digital communication data so that phenomena can be fully captured in this specific context. In this way, research can remain relevant and sustainable in the rapidly evolving digital landscape.

6 Conclusion

The purpose of this study was to identify current methods for analyzing digital communication and to establish guidelines for future research. To achieve this, we conducted an SLR and identified 84 publications that analyze digital communication. From these, we traced how the research has developed and identified two distinguishing content features: the fields of communication that can be analyzed and the related methodological approaches. We also examined common methodological limitations in order to learn from them. Based on the insights gained, we developed guidelines to assist future research in analyzing digital communication. Our contribution to current scholarly discourse is threefold. First, we provide a comprehensive overview of existing research on digital communication, enabling theorists and practitioners to identify the topics and methods that have been used to study digital communication in the past. Based on these findings as well as practical developments, we distill areas and methods for future research on digital communication. Second, we derive guidelines and best practices to assist future researchers in analyzing digital communication. These guidelines help establish a clear methodological direction and account for the specific context of digital communication. Third, we aim to bridge the gap between science and practice. Digital communication is a highly practical phenomenon that is also closely studied in science, which offers a unique opportunity for a symbiotic relationship between the two. On the one hand, our work helps practitioners use theoretical findings to analyze digital communication. On the other hand, it helps scholars incorporate more practical developments into their research by introducing best practices for methods and possible data access.

6.1 Outlook

The analysis of digital communication is poised for a significant paradigm shift, one that will further transform societal structures and communication processes (extending the ideas outlined by Drucker 2002). As we move toward a knowledge-based society, the ways in which information is created, shared, and communicated are changing drastically, necessitating a fundamental rethinking of digital communication paradigms (Drucker 2002; Simon 2018). While some of Drucker’s (2002) predictions have come true and some have even been surpassed, society is still deeply entangled in transformative processes. These processes are fueled by advances in technology and AI (including ML techniques) on the one hand, and by increasingly available and dynamic opportunities to create and analyze data in real time on the other. Our results indicate that current analyses of digital communication take a retrospective perspective and predominantly rely on data mining (e.g., web scraping or API calls) or basic AI, such as natural language processing (e.g., topic modeling), and simple ML algorithms (e.g., sentiment analysis with basic Python libraries) to decipher patterns, emotions, and trends in digital communication. However, with increasing computational capacities (such as Apple’s neural engines in personal computers; Apple 2023) and growing knowledge of AI-enabled methods in society and academia, we expect a surge in the use of advanced ML techniques to analyze digital (communication) data. We anticipate the development of more sophisticated ML techniques, including deep learning algorithms, that can analyze data in real time and in a context-aware manner. For example, relatively basic sentiment analysis, which currently categorizes emotions based on single-word occurrences, is likely to evolve through advanced AI methods to identify a broader and more complex range of emotions.
These developments will also allow a much more comprehensive analysis of larger amounts of communication data, including reactions. Analyzing digital communication in this way will make it easier to capture the complex dynamics of communication and to go beyond the analysis of pure one-way communication. The ability to grasp this complexity would, in turn, represent a major step toward understanding communication as Baecker (2007), Drucker (2002), and Simon (2018) conceive it, namely as a highly complex, dynamic, and meaning-generating phenomenon.
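To illustrate what sentiment analysis based on single-word occurrences involves, and why it falls short of context awareness, consider the following minimal sketch. It sums word-level polarity scores from a small lexicon; the lexicon, function names, and example messages are hypothetical and purely illustrative, whereas real studies would rely on established sentiment dictionaries or libraries.

```python
import re

# Toy polarity lexicon (hypothetical, for illustration only).
LEXICON = {"great": 1, "happy": 1, "thanks": 1, "bad": -1, "angry": -1, "late": -1}


def lexicon_sentiment(text):
    """Sum the polarity of each word; every token is scored in isolation."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return sum(LEXICON.get(token, 0) for token in tokens)


def label(score):
    """Map a summed score to a coarse sentiment category."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


messages = [
    "Thanks, great work!",
    "The report is late again.",
    "I am not happy with this.",
]
for msg in messages:
    print(msg, "->", label(lexicon_sentiment(msg)))
```

Because each word is scored without regard to its neighbors, the third message is labeled positive even though "not" reverses its meaning. Negation, irony, and shifting communication norms are exactly the kinds of context that more advanced, context-aware methods would need to capture.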

Furthermore, predictive analytics will increasingly be employed to analyze digital communication. This method extrapolates future communication behaviors and trends from present or historical communication patterns. It can be particularly beneficial in addressing dysfunctional issues within organizations (e.g., resignations), thereby supporting decision-making as well as conflict avoidance and resolution.

Following Susarla et al. (2023), another disruptive innovation that will impact digital communication research is the rapid expansion and further development of generative AI, such as “ChatGPT” (OpenAI 2022). These advances are set to create new opportunities for personalized content creation and for deploying individually trained (i.e., fine-tuned) language models to generate and analyze digital communication patterns. (Large) language models and other forms of AI will also enable researchers to include more and different types of data in an analysis. While textual data has been the primary focus of analysis, modern AI systems can now automatically and accurately recognize the content of texts, images, and other file formats (see Banh and Strobel 2023; Dwivedi et al. 2023). This implies that larger volumes of image, audio, and video data can be evaluated quickly in the future, and corresponding communication patterns can be assigned more comprehensively.

Despite the positive developments that can enable major advances in science and practice, potential dangers must also be acknowledged. One such danger is the assumption that AI is infallible because its outputs keep improving. However, AI is prone to mistakes, and errors in recent AI systems are becoming increasingly difficult to detect. Caution is therefore necessary to avoid drawing false conclusions. Moreover, AI systems can hallucinate, meaning they may produce incorrect results based solely on probabilities (e.g., Hagendorff 2023; Ji et al. 2023; Raji et al. 2022). This is a new type of output error that must be considered. There is also a general risk of over-reliance on technical aids. In this vein, Flanagin’s (2020) call to prioritize the phenomenon over the technology bears repeating, as it applies to both scientific inquiry and analysis. Many modern analysis methods output results without providing background information or sufficiently explaining how the results were derived. Relying exclusively on technology can therefore prevent a scientist from developing a sufficient understanding of the matter.

Overall, we anticipate that technological advances will fundamentally transform digital communication and its analysis. However, researchers and practitioners should remain cautious and not ignore potential dangers. With this in mind, we argue that the future analysis of digital communication data represents a double-edged sword: while technological progress can yield more accurate, dynamic, and contextualized insights into observed phenomena, excessive dependence on unsupervised or opaque algorithms may produce “confidently generated results that seem plausible but are unreasonable with respect to the source of information” (Banh and Strobel 2023, p. 9) and therefore introduce new challenges for scientists in the field.

6.2 Limitations and avenues for future research

Despite these contributions, this study has some limitations. First, these include the common constraints of an SLR already noted by Moritz et al. (2023): dependence on the keywords and search strings used, the inclusion and exclusion criteria (e.g., type of publication or language), and the restriction to findings reported by previous studies. To address the latter, we developed comprehensive guidelines that extend beyond the findings of previous research. However, certain topics have not yet been thoroughly researched. For instance, previous literature has scarcely analyzed dysfunctional communication, such as conflicts, with few exceptions (e.g., Duvanova et al. 2016). We therefore cannot provide additional information or guidelines on this matter, but we strongly encourage future research in this area.

Second, one factor deserves more detailed discussion: We deliberately did not analyze self-reports of digital communication, as these capture perceptions rather than communication itself. However, this decision greatly reduced our data set, indicating that self-reports have been widely used to address the broad topic of digital communication. At the same time, it means that one part of the experience of digital communication, namely the user’s perspective, is not covered by this SLR.

The limitations mentioned and the guidelines provided offer several avenues for future research. To begin, we encourage future research to take a more holistic approach to digital communication and its dynamics. In this sense, it is essential to include analyses of reactions to digital communication in order to examine prevailing communication dynamics more thoroughly. More specifically, future research should delve deeper into the nuances of communication dynamics, particularly the distinction between one-way and interactive communication. Traditional one-way communication, common in mass communication contexts (Lee et al. 2008; Subekti et al. 2019), limits interactive potential and reduces opportunities for the (joint) creation of meaning between communicators (e.g., Simon 2018). In contrast, interactive digital communication allows for a more dynamic exchange in which meanings can be negotiated and (re-)formed in real time. Incorporating analyses of reactions (e.g., emojis, likes, human responses) to digital communication thus offers a valuable approach for future research. Ultimately, this will help clarify how digital settings alter traditional communication paradigms and the extent to which digital media affect the richness of communicative exchanges (e.g., DeFilippis et al. 2022). This objective could be further enriched by incorporating communicators’ perceptions and self-reports. In this way, future research could provide a more comprehensive understanding of digital communication, capturing not just the transmission of information but the creation of meaning that defines communication in the digital realm.

Another potential avenue for future research arises from our definition of digital communication, following Simon (2018), as the joint creation of meaning through digital technologies, which emphasizes the interaction between sensing and feeling humans as communicators. This definition excludes rather technical machine-to-machine communication and focuses instead on the human elements of communication dynamics (as does most prior research, e.g., Capriotti and Ruesja 2018; Huang and Yeo 2018; Kaiser and Kuckertz 2023b). However, with the rapid development and integration of increasingly anthropomorphic AI, the traditional ontological boundaries between human and machine communicators are becoming blurred (Guzman 2020; Turkle 1984). Several researchers have already highlighted how AI can mimic human-like behavior (e.g., Banh and Strobel 2023; Dwivedi et al. 2023; Obrenovic et al. 2024) and provide responses indistinguishable from those of human communicators (e.g., Martins et al. 2024; Strauss et al. 2024; Strobel and Banh 2024). Given these evolving dynamics, future research should critically examine how digital communication differs between humans and machines and how their interactions create meaning. Exploring how these interactions challenge our human-based understanding of digital communication and our assumptions about communicators could refine existing theories and provide epistemological insights into “novel processes of social and communicative change” (Flanagin 2020, p. 23).

In addition, our analysis revealed a broad use of automated tools for data extraction and a combination of manual and automated analysis methods. Since both approaches have inherent limitations (see Sects. 4.3 and 4.4; Palese and Piccoli 2020), future research should strive for a balance that leverages the depth of manual analysis and the breadth of automated tools for analyzing digital communication data. Specifically, researchers have opportunities to enhance existing automated methods of data analysis by making them more transparent and interpretable for humans (e.g., as Palese and Piccoli 2020 have already done for topic modeling). This could be achieved by integrating explainable AI, that is, technologies that clarify how decisions and analyses are derived in order to “evaluate, improve, justify, and learn from AI [methods] by building explanations for a [methods’] functioning or its predictions” (Brasse et al. 2023, p. 3). Such advancements would not only increase the reliability of automated data analysis but also improve its utility by aligning its processes with the need for greater transparency, reliability, reproducibility, and understanding in research methodologies (e.g., Cram et al. 2020; Fisch and Block 2018; Templier and Paré 2018).

Data availability

All data will be sent out upon request to christina.strauss@uni-wh.de.

Notes