Abstract

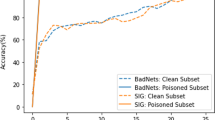

Deep learning models are vulnerable to backdoor attacks, in which an adversary poisons the training data so that the victim model performs well on clean samples but misbehaves on poisoned samples. Although backdoor attacks have been studied in depth, prior work has focused on specific attack and defense methods and has neglected the impact of basic training tricks on attack effectiveness. Analyzing these factors helps build secure deep learning systems and opens up new perspectives on defense. To this end, we provide a comprehensive evaluation using a weak clean-label backdoor attack on CIFAR10, focusing on the impact of a wide range of neglected training tricks. Specifically, we examine ten perspectives, including batch size, data augmentation, warmup, and mixup. The results demonstrate that backdoor attacks are sensitive to some training tricks, and that optimizing basic training tricks can significantly improve attack effectiveness. For example, appropriate warmup settings enhance the effect of the backdoor attack by 22% and 6% for the two trigger patterns, respectively. These findings further reveal the vulnerability of deep learning models to backdoor attacks.
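To make the terms in the abstract concrete, the following is a minimal NumPy sketch of a clean-label poisoning step together with two of the training tricks named above (warmup and mixup). It is not the authors' implementation: the corner-patch trigger, the poisoning rate, the linear warmup shape, and every function name (`stamp_trigger`, `clean_label_poison`, `warmup_lr`, `mixup`) are assumptions chosen purely for illustration.

```python
import numpy as np

def stamp_trigger(images, patch_size=3, value=1.0):
    """Copy `images` (N, H, W, C) and paint a square patch in the bottom-right corner.
    The patch trigger is an assumed example, not a trigger taken from the paper."""
    out = images.copy()
    out[:, -patch_size:, -patch_size:, :] = value
    return out

def clean_label_poison(images, labels, target_class, rate, rng):
    """Clean-label poisoning: stamp the trigger on a fraction `rate` of the
    target-class images only. Labels are never modified."""
    idx = np.flatnonzero(labels == target_class)
    n_poison = int(round(rate * len(idx)))
    chosen = rng.choice(idx, size=n_poison, replace=False)
    poisoned = images.copy()
    poisoned[chosen] = stamp_trigger(images[chosen])
    return poisoned, chosen

def warmup_lr(step, base_lr, warmup_steps):
    """Linear warmup to `base_lr` over `warmup_steps` steps, then constant (assumed shape)."""
    if warmup_steps <= 0 or step >= warmup_steps:
        return base_lr
    return base_lr * (step + 1) / warmup_steps

def mixup(x, y_onehot, alpha, rng):
    """Mixup: convex combination of a batch with a shuffled copy of itself,
    using one mixing weight drawn from Beta(alpha, alpha)."""
    lam = rng.beta(alpha, alpha)
    perm = rng.permutation(len(x))
    return lam * x + (1 - lam) * x[perm], lam * y_onehot + (1 - lam) * y_onehot[perm]

# Toy usage on random CIFAR10-shaped data (32x32 RGB, 10 classes).
rng = np.random.default_rng(0)
images = rng.random((100, 32, 32, 3))
labels = np.arange(100) % 10
poisoned, chosen = clean_label_poison(images, labels, target_class=3, rate=0.5, rng=rng)
```

In an evaluation of this kind, the poisoned set would be used for training while settings such as `warmup_steps` or `alpha` are varied, and attack effectiveness would be measured on triggered test images.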

Data availability

The datasets used or analysed during the current study are available from the corresponding author on reasonable request.

Acknowledgements

Not applicable

Funding

This work was supported by the National Natural Science Foundation of China for Joint Fund Project (No. U1936218) and the National Natural Science Foundation of China (No. 62302111).

Author information

Contributions

RH provided the main idea, designed the methodology and experiments, and prepared the manuscript. AY conducted the experimental analysis and manuscript preparation. HY and TH performed the data analysis, edited and reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

Not applicable for both human and/or animal studies.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Hou, R., Yan, A., Yan, H. et al. Bag of tricks for backdoor learning. Wireless Netw (2024). https://doi.org/10.1007/s11276-024-03724-2

DOI: https://doi.org/10.1007/s11276-024-03724-2