Abstract

In recent years, research on few-shot learning (FSL) has grown rapidly in the 2D image domain, because FSL requires less labeled training data and generalizes better to novel classes. However, its application to 3D point cloud data remains relatively under-explored. Besides having to distinguish unseen classes, as in the 2D domain, 3D FSL is more challenging because of irregular structures, subtle inter-class differences, and high intra-class variance when training on a small amount of data. Moreover, differences in architectures and learning algorithms make it difficult to assess how effective existing 2D FSL algorithms are when migrated to the 3D domain. In this work, for the first time, we systematically and extensively investigate the direct application of recent 2D FSL methods to 3D point cloud backbone networks, and on that basis suggest a strong learning baseline for few-shot 3D point cloud classification. Furthermore, we propose a new network, Point-cloud Correlation Interaction (PCIA), with three novel plug-and-play components, the Salient-Part Fusion (SPF) module, the Self-Channel Interaction Plus (SCI+) module, and the Cross-Instance Fusion Plus (CIF+) module, to obtain more representative embeddings and improve feature distinction. These modules can be inserted into most FSL algorithms with minor changes and significantly improve performance. Experimental results on three benchmark datasets, ModelNet40-FS, ShapeNet70-FS, and ScanObjectNN-FS, demonstrate that our method achieves state-of-the-art performance on the 3D FSL task.
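To make the few-shot setting concrete, the following is a minimal sketch of one N-way K-shot episode on point clouds. It uses a generic prototype-based (nearest class mean) classifier and is not the PCIA network or any of its SPF, SCI+, or CIF+ modules. The `embed` function is a hand-written, permutation-invariant stand-in for a learned point cloud backbone, and `make_cloud` with its "flat" and "tall" classes is hypothetical synthetic data introduced only for illustration.

```python
import random


def embed(cloud):
    """Toy permutation-invariant encoder: per-axis max and mean over all points.

    Assumption: this replaces a learned 3D backbone purely for illustration.
    """
    n = len(cloud)
    maxes = [max(p[axis] for p in cloud) for axis in range(3)]
    means = [sum(p[axis] for p in cloud) / n for axis in range(3)]
    return maxes + means


def prototypes(support):
    """Average the K support embeddings of each class into one prototype.

    support: dict mapping class label -> list of point clouds (the K shots).
    """
    protos = {}
    for label, clouds in support.items():
        embs = [embed(c) for c in clouds]
        dim = len(embs[0])
        protos[label] = [sum(e[d] for e in embs) / len(embs) for d in range(dim)]
    return protos


def classify(cloud, protos):
    """Assign a query cloud to the class with the nearest prototype."""
    q = embed(cloud)

    def sqdist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(protos, key=lambda label: sqdist(q, protos[label]))


def make_cloud(half_extents, rng, n_points=64):
    """Hypothetical synthetic data: points drawn uniformly inside a box."""
    return [tuple(rng.uniform(-h, h) for h in half_extents) for _ in range(n_points)]


rng = random.Random(0)

# One 2-way 5-shot episode with two made-up classes of box-shaped clouds.
shapes = {"flat": (1.0, 1.0, 0.1), "tall": (0.2, 0.2, 2.0)}
support = {lbl: [make_cloud(h, rng) for _ in range(5)] for lbl, h in shapes.items()}
protos = prototypes(support)

# Ten unseen query clouds per class, scored against the prototypes.
queries = [(lbl, make_cloud(h, rng)) for lbl, h in shapes.items() for _ in range(10)]
accuracy = sum(classify(c, protos) == lbl for lbl, c in queries) / len(queries)
print(f"episode accuracy: {accuracy:.2f}")
```

In an actual 3D FSL pipeline the encoder would be a trained network and the classes would come from a benchmark such as ModelNet40-FS; only the episode structure (support set, class prototypes, query scoring) carries over from this sketch.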

References

Antoniou, A., Edwards, H., & Storkey, A. (2018). How to train your maml. arXiv preprint arXiv:1810.09502.

Chang, A. X., Funkhouser, T., Guibas, L., Hanrahan, P., Huang, Q., Li, Z., Savarese, S., Savva, M., Song, S., Su, H., & Xiao, J. (2015). Shapenet: An information-rich 3d model repository. arXiv preprint arXiv:1512.03012.

Chapelle, O., Scholkopf, B., & Zien, A. (2009). Semi-supervised learning (chapelle, o. et al., eds.; 2006)[book reviews]. IEEE Transactions on Neural Networks, 20(3), 542.

Chen, W. Y., Liu, Y. C., Kira, Z., Wang, Y. C. F., & Huang, J. B. (2019). A closer look at few-shot classification. In International conference on learning representations.

Chen, Y., Hu, V. T., Gavves, E., Mensink, T., Mettes, P., Yang, P., & Snoek, C. G. (2020). Pointmixup: Augmentation for point clouds. In European conference on computer vision (pp. 330–345). Springer.

Chen, Y., Liu, Z., Xu, H., Darrell, T., & Wang, X. (2021b). Meta-baseline: Exploring simple meta-learning for few-shot learning. In International conference on computer vision (pp. 9062–9071).

Chen, C., Li, K., Wei, W., Zhou, J. T., & Zeng, Z. (2021). Hierarchical graph neural networks for few-shot learning. IEEE Transactions on Circuits and Systems for Video Technology, 32(1), 240–252.

Chen, S., Zheng, L., Zhang, Y., Sun, Z., & Xu, K. (2018). Veram: View-enhanced recurrent attention model for 3d shape classification. IEEE Transactions on Visualization and Computer Graphics, 25(12), 3244–3257.

Cheraghian, A., Rahman, S., Campbell, D., & Petersson, L. (2020). Transductive zero-shot learning for 3d point cloud classification. In Proceedings of the IEEE/CVF winter conference on applications of computer vision (pp 923–933).

Cosmo, L., Minello, G., Bronstein, M., Rodolà, E., Rossi, L., & Torsello, A. (2022). 3d shape analysis through a quantum lens: The average mixing kernel signature. International Journal of Computer Vision, 130(6), 1474–1493.

Crammer, K., & Singer, Y. (2001). On the algorithmic implementation of multiclass kernel-based vector machines. Journal of Machine Learning Research, 2, 265–292.

Dai, A., Chang, A. X., Savva, M., Halber, M., Funkhouser, T., & Nießner, M. (2017). Scannet: Richly-annotated 3d reconstructions of indoor scenes. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 5828–5839).

Devlin, J., Chang, M. W., Lee, K., & Toutanova, K. (2018). Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805.

Doersch, C., Gupta, A., & Zisserman, A. (2020). Crosstransformers: Spatially-aware few-shot transfer. Advances in Neural Information Processing Systems, 33, 21981–21993.

Feng, H., Liu, W., Wang, Y., & Liu, B. (2022). Enrich features for few-shot point cloud classification. In International Conference on Acoustics, Speech, and Signal Processing.

Feng, Y., Zhang, Z., Zhao, X., Ji, R., & Gao, Y. (2018). Gvcnn: Group-view convolutional neural networks for 3d shape recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 264–272).

Finn, C., Abbeel, P., & Levine, S. (2017). Model-agnostic meta-learning for fast adaptation of deep networks. In International conference on machine learning (pp. 1126–1135). PMLR.

Garcia, V., & Bruna, J. (2017). Few-shot learning with graph neural networks. arXiv preprint arXiv:1711.04043.

Guo, Y., Wang, H., Hu, Q., Liu, H., Liu, L., & Bennamoun, M. (2020). Deep learning for 3d point clouds: A survey. IEEE Transactions on Pattern Analysis and Machine Intelligence, 43(12), 4338–4364.

He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 770–778).

Johnson, A. E., & Hebert, M. (1999). Using spin images for efficient object recognition in cluttered 3d scenes. IEEE Transactions on Pattern Analysis and Machine Intelligence, 21(5), 433–449.

Lee, K., Maji, S., Ravichandran, A., & Soatto, S. (2019). Meta-learning with differentiable convex optimization. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 10657–10665).

Liao, Y., Zhu, H., Zhang, Y., Ye, C., Chen, T., & Fan, J. (2021). Point cloud instance segmentation with semi-supervised bounding-box mining. IEEE Transactions on Pattern Analysis and Machine Intelligence, 44(12), 10159–10170.

Li, Y., Bu, R., Sun, M., Wu, W., Di, X., & Chen, B. (2018). Pointcnn: Convolution on x-transformed points. Advances in Neural Information Processing Systems, 31, 828–838.

Li, R., Li, X., Heng, P. A., & Fu, C. W. (2020b). Pointaugment: An auto-augmentation framework for point cloud classification. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 6378–6387).

Li, A., Lu, Z., Guan, J., Xiang, T., Wang, L., & Wen, J. R. (2020). Transferrable feature and projection learning with class hierarchy for zero-shot learning. International Journal of Computer Vision, 128(12), 2810–2827.

Liu, Y., Fan, B., Meng, G., Lu, J., Xiang, S., & Pan, C. (2019a). Densepoint: Learning densely contextual representation for efficient point cloud processing. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 5239–5248).

Liu, Y., Fan, B., Xiang, S., & Pan, C. (2019b). Relation-shape convolutional neural network for point cloud analysis. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 8895–8904).

Luo, X., Xu, J., & Xu, Z. (2022). Channel importance matters in few-shot image classification. In International conference on machine learning (pp. 14542–14559).

Mangla, P., Singh, M., Sinha, A., Kumari, N., Balasubramanian, V. N., & Krishnamurthy, B. (2019). Charting the right manifold: Manifold mixup for few-shot learning. In: Workshop on Applications of Computer Vision (pp. 2218–2227).

Maturana, D., & Scherer, S. (2015). Voxnet: A 3d convolutional neural network for real-time object recognition. In 2015 IEEE/RSJ international conference on intelligent robots and systems (IROS) (pp. 922–928). IEEE.

Qi, C. R., Su, H., Mo, K., & Guibas, L. J. (2017a). Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 652–660).

Qi, C. R., Su, H., Nießner, M., Dai, A., Yan, M., & Guibas, L. J. (2016). Volumetric and multi-view cnns for object classification on 3d data. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 5648–5656).

Qi, C. R., Yi, L., Su, H., & Guibas, L. J. (2017). Pointnet++: Deep hierarchical feature learning on point sets in a metric space. Advances in Neural Information Processing Systems, 30, 5105–5114.

Rao, Y., Lu, J., Zhou, J. (2019). Spherical fractal convolutional neural networks for point cloud recognition. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 452–460).

Ravi, S., & Larochelle, H. (2017). Optimization as a model for few-shot learning. In International conference on learning representations.

Ren, M., Triantafillou, E., Ravi, S., Snell, J., Swersky, K., Tenenbaum, J. B., Larochelle, H., & Zemel, R. S. (2018). Meta-learning for semi-supervised few-shot classification. In International conference on learning representations.

Riegler, G., Osman Ulusoy, A., & Geiger, A. (2017). Octnet: Learning deep 3d representations at high resolutions. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 3577–3586).

Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., Huang, Z., Karpathy, A., Khosla, A., Bernstein, M., & Berg, A. C. (2015). Imagenet large scale visual recognition challenge. International journal of computer vision, 115(3), 211–252.

Rusu, R. B., Blodow, N., & Beetz, M. (2009). Fast point feature histograms (FPFH) for 3d registration. In 2009 IEEE international conference on robotics and automation (pp. 3212–3217). IEEE.

Shao, T., Yang, Y., Weng, Y., Hou, Q., & Zhou, K. (2018). H-CNN: Spatial hashing based CNN for 3d shape analysis. IEEE Transactions on Visualization and Computer Graphics, 26(7), 2403–2416.

Sharma, C., & Kaul, M. (2020). Self-supervised few-shot learning on point clouds. Advances in Neural Information Processing Systems, 33, 7212–7221.

Snell, J., Swersky, K., & Zemel, R. (2017). Prototypical networks for few-shot learning. Advances in Neural Information Processing Systems, 30, 4080–4090.

Stojanov, S., Thai, A., & Rehg, J. M. (2021). Using shape to categorize: Low-shot learning with an explicit shape bias. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 1798–1808).

Su, H., Jampani, V., Sun, D., Maji, S., Kalogerakis, E., Yang, M. H., & Kautz, J. (2018). Splatnet: Sparse lattice networks for point cloud processing. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 2530–2539).

Su, H., Maji, S., Kalogerakis, E., & Learned-Miller, E. (2015). Multi-view convolutional neural networks for 3d shape recognition. In Proceedings of the IEEE international conference on computer vision (pp. 945–953).

Sung, F., Yang, Y., Zhang, L., Xiang, T., Torr, P. H., & Hospedales, T. M. (2018). Learning to compare: Relation network for few-shot learning. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 1199–1208).

Tanner, M. A., & Wong, W. H. (1987). The calculation of posterior distributions by data augmentation. Journal of the American statistical Association, 82(398), 528–540.

Uy, M. A., Pham, Q. H., Hua, B. S., Nguyen, T., & Yeung, S. K. (2019). Revisiting point cloud classification: A new benchmark dataset and classification model on real-world data. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 1588–1597).

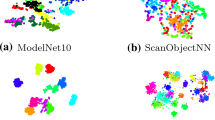

Van der Maaten, L., & Hinton, G. (2008). Visualizing data using t-SNE. Journal of Machine Learning Research, 9(86), 2579–2605.

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, L., & Polosukhin, I. (2017). Attention is all you need. Advances in Neural Information Processing Systems, 30, 6000–6010.

Vinyals, O., Blundell, C., Lillicrap, T., & Wierstra, D. (2016). Matching networks for one shot learning. Advances in Neural Information Processing Systems, 29, 3637–3645.

Wang, Y., Chao, W. L., Weinberger, K. Q., & van der Maaten, L. (2019a). Simpleshot: Revisiting nearest-neighbor classification for few-shot learning. arXiv preprint arXiv:1911.04623.

Wang, Y., Sun, Y., Liu, Z., Sarma, S. E., Bronstein, M. M., & Solomon, J. M. (2019). Dynamic graph CNN for learning on point clouds. ACM Transactions on Graphics (ToG), 38(5), 1–12.

Wu, Z., Song, S., Khosla, A., Yu, F., Zhang, L., Tang, X., & Xiao, J. (2015). 3d shapenets: A deep representation for volumetric shapes. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 1912–1920).

Xu, W., Xian, Y., Wang, J., Schiele, B., & Akata, Z. (2022). Attribute prototype network for any-shot learning. International Journal of Computer Vision, 130(7), 1735–1753.

Ye, H. J., Hu, H., & Zhan, D. C. (2021). Learning adaptive classifiers synthesis for generalized few-shot learning. International Journal of Computer Vision, 129(6), 1930–1953.

Ye, C., Zhu, H., Liao, Y., Zhang, Y., Chen, T., & Fan, J. (2022). What makes for effective few-shot point cloud classification? In Proceedings of the IEEE/CVF winter conference on applications of computer vision (pp 1829–1838).

Yu, T., Meng, J., & Yuan, J. (2018). Multi-view harmonized bilinear network for 3d object recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp 186–194).

Yu, X., Tang, L., Rao, Y., Huang, T., Zhou, J., & Lu, J. (2021). Point-bert: Pre-training 3d point cloud transformers with masked point modeling. arXiv preprint arXiv:2111.14819.

Zhang, Z., Hua, B. S., & Yeung, S. K. (2022). Riconv++: Effective rotation invariant convolutions for 3d point clouds deep learning. International Journal of Computer Vision, 130(5), 1228–1243.

Zhong, Y. (2009). Intrinsic shape signatures: A shape descriptor for 3d object recognition. 2009 IEEE 12th international conference on computer vision workshops (pp. 689–696). ICCV Workshops, IEEE.

Acknowledgements

This work is supported by the National Natural Science Foundation of China (Nos. 62071127, U1909207 and 62101137), the Zhejiang Lab Project (No. 2021KH0AB05), the Agency for Science, Technology and Research (A*STAR) under its AME Programmatic Funding Scheme (Project A18A2b0046), the A*STAR Robot HTPO Seed Fund (Project C211518008), and the Economic Development Board (EDB) OSTIN STDP Grant (Project S22-19016-STDP).

Additional information

Communicated by Ming-Hsuan Yang.

About this article

Cite this article

Ye, C., Zhu, H., Zhang, B. et al. A Closer Look at Few-Shot 3D Point Cloud Classification. Int J Comput Vis 131, 772–795 (2023). https://doi.org/10.1007/s11263-022-01731-4

DOI: https://doi.org/10.1007/s11263-022-01731-4