Abstract

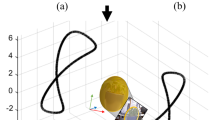

In this paper, we propose a method for initial camera pose estimation from a single image that is robust to viewing conditions and does not require a detailed model of the scene. This method meets the growing need for easy deployment of robotics and augmented reality applications in any environment, especially those for which neither an accurate 3D model nor a large amount of ground-truth data is available. It exploits the ability of deep learning techniques to reliably detect objects regardless of viewing conditions. Previous works have also shown that abstracting the geometry of a scene of objects by an ellipsoid cloud allows the camera pose to be computed accurately enough for various application needs. Though promising, these approaches use the ellipses fitted to the detection bounding boxes as an approximation of the imaged objects. In this paper, we go one step further and propose a learning-based method that detects improved elliptic approximations of objects, which are coherent with the 3D ellipsoids in terms of perspective projection. Experiments show that the accuracy of the computed pose increases significantly thanks to our method. This is achieved with very little effort in terms of training data acquisition: a few hundred calibrated images, of which only three need manual object annotation. Code and models are released at https://gitlab.inria.fr/tangram/3d-aware-ellipses-for-visual-localization.
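To make the distinction between a box-fitted ellipse and a projection-coherent ellipse concrete, the following is a minimal NumPy sketch of the standard projective-geometry relation between an ellipsoid (as a dual quadric) and its image ellipse (as a dual conic). It is an illustration only, not the authors' released code, and it does not reproduce the learned ellipse predictor. All function names, the intrinsics `K`, and the example ellipsoid are hypothetical values chosen for the example.

```python
import numpy as np


def ellipsoid_dual_quadric(center, semi_axes, rotation=None):
    """Return the 4x4 dual quadric Q* of an ellipsoid given in world coordinates."""
    rotation = np.eye(3) if rotation is None else np.asarray(rotation, dtype=float)
    a, b, c = semi_axes
    canonical = np.diag([a**2, b**2, c**2, -1.0])  # axis-aligned, centred at origin
    pose = np.eye(4)
    pose[:3, :3] = rotation
    pose[:3, 3] = center
    return pose @ canonical @ pose.T


def project_ellipsoid(Q_dual, K, R, t):
    """Project Q* with P = K [R | t]; returns a dual conic scaled so C[2, 2] = -1."""
    P = K @ np.hstack([R, np.reshape(t, (3, 1))])
    C = P @ Q_dual @ P.T
    # Assumes the ellipsoid lies entirely in front of the camera, so C[2, 2] < 0.
    return C / -C[2, 2]


def ellipse_parameters(C_dual):
    """Centre, (major, minor) semi-axes and major-axis angle of a normalised dual conic."""
    center = -C_dual[:2, 2]
    shape = C_dual[:2, :2] + np.outer(center, center)
    eigvals, eigvecs = np.linalg.eigh(shape)  # eigenvalues in ascending order
    major, minor = np.sqrt(eigvals[1]), np.sqrt(eigvals[0])
    angle = np.arctan2(eigvecs[1, 1], eigvecs[0, 1])  # direction of the major axis
    return center, (major, minor), angle


def box_inscribed_ellipse(C_dual):
    """Axis-aligned ellipse inscribed in the bounding box of the projected ellipse."""
    center = -C_dual[:2, 2]
    shape = C_dual[:2, :2] + np.outer(center, center)
    half_w, half_h = np.sqrt(shape[0, 0]), np.sqrt(shape[1, 1])
    return center, (half_w, half_h)


# Hypothetical camera: identity pose, 500 px focal length, 640x480 principal point.
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
R_cam, t_cam = np.eye(3), np.zeros(3)

# Hypothetical elongated object, rotated 40 degrees about the optical axis.
theta = np.deg2rad(40.0)
Rz = np.array([[np.cos(theta), -np.sin(theta), 0.0],
               [np.sin(theta), np.cos(theta), 0.0],
               [0.0, 0.0, 1.0]])
Q = ellipsoid_dual_quadric([0.4, -0.2, 3.0], [0.5, 0.2, 0.1], Rz)
C = project_ellipsoid(Q, K, R_cam, t_cam)

true_center, (major, minor), angle = ellipse_parameters(C)
box_center, (half_w, half_h) = box_inscribed_ellipse(C)
print("projected ellipse axes:", major, minor, "angle (rad):", angle)
print("box-inscribed ellipse axes:", half_w, half_h)
```

For a tilted object the two ellipses share a centre but differ in axes and orientation, since the box-inscribed ellipse is forced to be axis-aligned. This is the kind of discrepancy that a projection-coherent ellipse prediction is meant to reduce.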

Additional information

Communicated by A. Hilton.

About this article

Cite this article

Zins, M., Simon, G. & Berger, MO. Object-Based Visual Camera Pose Estimation From Ellipsoidal Model and 3D-Aware Ellipse Prediction. Int J Comput Vis 130, 1107–1126 (2022). https://doi.org/10.1007/s11263-022-01585-w

DOI: https://doi.org/10.1007/s11263-022-01585-w