Abstract

Although various forms of explicit feedback such as ratings and reviews are important for recommenders, they are notoriously difficult to collect. However, beyond attributing these difficulties to user effort, we know surprisingly little about user motivations. Here, we provide a behavioral account of explicit feedback’s sparsity problem by modeling a range of constructs on the rating and review intentions of US food delivery platform users, using data collected from a structured survey (n = 796). Our model, combining the Technology Acceptance Model and Theory of Planned Behavior, revealed that standard industry practices for feedback collection appear misaligned with key psychological influences of behavioral intentions. Most notably, rating and review intentions were most influenced by subjective norms. This means that while most systems directly request feedback in user-to-provider relationships, eliciting them through social ties that manifest in user-to-user relationships is likely more effective. Secondly, our hypothesized dimensions of feedback’s perceived usefulness recorded insubstantial effect sizes on feedback intentions. These findings offered clues for practitioners to improve the connection between providing behaviors and recommendation benefits through contextualized messaging. In addition, perceived pressure and users’ high stated ability to provide feedback recorded insignificant effects, suggesting that frequent feedback requests may be ineffective. Lastly, privacy concerns recorded insignificant effects, hinting that the personalization-privacy paradox might not apply to preference information such as ratings and reviews. Our results provide a novel understanding of explicit feedback intentions to improve feedback collection in food delivery and beyond.

1 Introduction

Recommendation systems are one of the most significant applications of artificial intelligence in consumer search and decision making. They have transformed how users search and interact online by computing vast amounts of data to learn individual preferences and present personalized content (Konstan and Riedl 2012). Many recommendation systems predict and rank items based on feedback data collected through user–recommender interactions (Zhang et al. 2019; Aggarwal 2014; Jugovac et al. 2017). Although user feedback behaviors can be thought to exist on a continuum rather than in distinct categories (Jannach et al. 2018), those collected in the back end of information systems such as clicks and views are typically called ‘implicit feedback’ (Jawaheer et al. 2014). In contrast, more direct preference statements actively elicited from users such as ratings and reviews are known as ‘explicit feedback’ (Jawaheer et al. 2014) (see Fig. 1 for an illustration of different types of feedback). It is commonly acknowledged that explicit forms of feedback are far more difficult to collect because they impose greater cognitive effort on the user (Jawaheer et al. 2014; Oard and Marchionini 1996). However, beyond these generic explanations, we currently lack an empirical understanding of the psychological influences that motivate explicit feedback behaviors.

What results from the lack of user feedback is the sparsity problem, in which recommenders predict and rank items based on extremely sparse user–item matrices (Aggarwal 2014; Isinkaye et al. 2015). To overcome this sparsity problem, a range of factorization and clustering techniques were initially developed (Idrissi and Zellou 2020; Sarwar et al. 2002). However, due to the relative abundance of implicit behavioral data such as clicks, views, and purchases, industry and academic attention gradually and overwhelmingly shifted to implicit feedback-based models (Jeunen 2019; Amatriain and Basilico 2016; Wang et al. 2021). One paper empirically justified this shift by finding that recommendations based on implicit feedback could predict items just as well as those based on explicit feedback (Zhao et al. 2018a). Moreover, several other studies over the years have found strong correlations and comparable accuracy metrics to support the use of implicit feedback (Akuma et al. 2016; Parra and Amatriain 2011; Jawaheer et al. 2010).

Despite this shift to implicit feedback-based models, explicit feedback should remain an important source of information because of its unique data characteristics for modeling user preferences. Firstly, explicit feedback is more expressive because, unlike implicit feedback, it measures both positive and negative user evaluations (Jawaheer et al. 2014). Many recommenders in academia and industry utilize explicit feedback’s valence to explore and exploit or ‘fine-tune’ user preferences (Zhao et al. 2018b; Steck et al. 2015; Fu et al. 2021; Wu et al. 2022). These features are particularly effective in recommendation systems with short-to-immediate feedback cycles where new items can be continuously updated and served from a large candidate pool—e.g., in real-time recommendations (Huyen 2022). In addition, several studies have illustrated that incorporating both forms of feedback into models can improve recommendation performance (Li and Chen 2016; Liu et al. 2017; Zhao et al. 2018a). Evidence also suggests that while implicit feedback-based systems are more engaging, they can increase negative actions, including user browsing effort, compared to rating-based recommenders (Zhao et al. 2018a). Moreover, beyond its value in recommenders, explicit feedback is also invaluable in consumer decision-making and marketing, influencing perceptions such as attitudes, credibility, and trust in online products and services (Flanagin et al. 2014; Ismagilova et al. 2020; Kim and Gambino 2016). Therefore, although explicit feedback has its own set of challenges, such as recommendation biases and rating consistency issues that are beyond the scope of this paper to examine (Amatriain et al. 2009; Chen et al. 2022), evidence supports the value of collecting explicit feedback for recommenders and consumers alike.

As explicit feedback helps personalize content, one may expect the emergence of positive feedback loops that incentivize users to provide more feedback as recommenders learn their preferences (Adomavicius et al. 2013; Shin 2020). However, reports from the literature indicate that explicit feedback provision rates in commercial settings have remained extremely low, such that a user–item matrix that is only 1% full is considered ‘dense’ (Steck 2013; Xu et al. 2020; Dooms et al. 2011). This raises doubts over whether users are aware of, and motivated by, the incremental benefits of providing explicit feedback to recommenders. Despite the proliferation of commercial recommenders, we currently lack an adequate behavioral understanding of the influences behind the data sparsity problem. Instead, most existing approaches in the literature frame sparsity as an algorithmic problem rather than one that also concerns user–recommender interactions (Singh 2020; Idrissi and Zellou 2020). This is despite some evidence suggesting that even small improvements to the degree of sparsity can positively affect recommendation accuracy (Zhang et al. 2020).
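To make the notion of a ‘dense’ matrix concrete, the following minimal sketch computes the share of user–item cells that contain an explicit rating. The function name `matrix_density`, the use of `None` for a missing rating, and the toy data are illustrative assumptions, not part of any platform's actual pipeline.

```python
from typing import Optional, Sequence


def matrix_density(ratings: Sequence[Sequence[Optional[float]]]) -> float:
    """Return the fraction of user-item cells that hold an explicit rating.

    Each inner sequence is one (hypothetical) user's row; None marks an item
    the user has not rated.
    """
    total_cells = sum(len(row) for row in ratings)
    if total_cells == 0:
        raise ValueError("the rating matrix has no cells")
    observed = sum(1 for row in ratings for cell in row if cell is not None)
    return observed / total_cells


# Toy data (assumed): 4 users x 5 restaurants, only 3 ratings observed.
toy_matrix = [
    [5.0, None, None, None, None],
    [None, None, 3.0, None, None],
    [None, None, None, None, None],
    [None, 4.0, None, None, None],
]

density = matrix_density(toy_matrix)
sparsity = 1.0 - density
print(f"density={density:.2%}, sparsity={sparsity:.2%}")
```

By this measure, even the very sparse toy matrix above is far denser than the 1% level that the literature describes as ‘dense’ for commercial recommenders.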

From the user–recommender interaction perspective, exploring antecedents to feedback-providing intentions should be an essential first step. Yet, to this day, it is surprising how few studies have examined what motivates users to provide explicit feedback despite the many papers calling for more research over the years (Knijnenburg et al. 2012; Jawaheer et al. 2014; Mican et al. 2020; Kim and Kim 2018; Jugovac et al. 2017). Although some papers have investigated the effects of system features like algorithms (Knijnenburg et al. 2012), user-interface designs (Pommeranz et al. 2012; Sparling and Sen 2011; McNee et al. 2003; Schnabel et al. 2018), and contextualized messaging (Kobsa and Teltzrow 2005; Falconnet et al. 2021; Liao and Sundar 2021) on feedback-providing behaviors, past studies considering user motivations have only explored a limited range of possible constructs (Harper et al. 2005; Knijnenburg et al. 2012; Ziesemer et al. 2014) or have failed to specify antecedents relevant to motivating specific feedback behaviors (Kim and Kim 2018; Mican et al. 2020).

While substantial research efforts in neighboring research perspectives such as personal information and electronic-Word-of-Mouth (eWOM) have attempted to uncover constructs influencing rating and review behaviors (Mican et al. 2020; Ismagilova et al. 2021; Kim and Kim 2018; Picazo-Vela et al. 2010; Cheung and Lee 2012; Fu et al. 2015), empirical findings have not translated to meaningful improvements in data sparsity for recommendation systems. We suspect that this is because: (1) studies have not contextualized feedback behaviors for recommenders; (2) empirical findings are difficult to apply in practice; or (3) developers have not considered or successfully implemented interaction-based solutions that incorporate the findings.

To the best of our knowledge, no studies have applied established behavioral intention models from social psychology to examine constructs influencing specific explicit feedback intentions to recommenders. To address this research gap, we selected ratings and reviews as two representative forms of explicit feedback (Jawaheer et al. 2014) and explored a range of constructs that may influence providing intentions. We narrowed our research focus to food delivery platforms (e.g., DoorDash, Uber Eats, and Grubhub) because all major platforms collect ratings and reviews and actively develop in-house recommendation systems (Egg 2021; see also footnotes 1 and 2).

Specifically, we aimed to answer the following research question:

- RQ1: What constructs influence user intentions to provide ratings and reviews on food delivery platforms?

In answering this research question, we contribute to the literature by furthering the empirical understanding of the psychological influences underlying feedback-providing behaviors. By gaining an understanding of whether user motivations are aligned with current feedback collection practices, we aim to provide behavioral reasons for the persistent lack of explicit feedback. Our key findings on food delivery platforms, such as the influence of subjective norms, fundamentally challenge conventional thinking and practices surrounding explicit feedback elicitation. With careful consideration of domain differences, we believe that many of our actionable insights could be generalized and tested on recommenders in other contexts beyond food delivery platforms.

The rest of the paper is structured as follows: in Sect. 2, we review background information and related literature; in Sect. 3, we develop hypotheses and specify construct definitions for our research model; in Sect. 4, we outline the research methodology; in Sect. 5, we present the results; in Sect. 6, we discuss the key implications; in Sect. 7, we outline the study’s limitations and consider future research directions; and finally, in Sect. 8, we summarize our key findings and implications in the conclusion.

2 Background and related work

2.1 Explicit feedback providing intentions

Intentions to provide explicit feedback have received virtually no academic attention apart from the exceptions below. Harper et al. (2005) found that user enjoyment of the feedback process and other behavioral metrics such as ‘uncommon tastes’ predicted ratings. Knijnenburg et al. (2012) performed experiments that found recommendation choice satisfaction, perceived system effectiveness (experiment 1), and trust (experiment 2) positively influenced providing intentions, while system-specific privacy concerns (experiment 1) and effort to use the system (experiment 2) recorded negative effects. Although explicit feedback has received very little attention as a standalone behavior, intentions to provide ratings and reviews have been studied from other research perspectives, such as personal information (Kim and Kim 2018; Mican et al. 2020) and eWOM (Ismagilova et al. 2021; Picazo-Vela et al. 2010; Cheung and Lee 2012). However, despite apparent similarities, there are important behavioral differences to consider.

Firstly, compared to personal information in which names, addresses, and other information are generally accepted or provided in ‘one-off’ actions (Jeckmans et al. 2013), explicit feedback requires direct user input on every elicitation instance. As these represent very different actions, providing intentions should be measured by constructs that are relevant and specific to the individual behaviors (Fishbein and Ajzen 2009). Yet, recent studies in personal information considering explicit feedback have made no such attempts to specify compatible antecedents (Kim and Kim 2018; Mican et al. 2020). In contrast, eWOM studies have not controlled the presence of recommenders; thus, it remains to be seen if antecedent constructs will have similar effects.

Explicit feedback is a unique behavior because while its provision can complement and enhance recommendations, these benefits are not immediately evident to users (Kobsa and Teltzrow 2005; Jugovac et al. 2017). This is because there are delays between providing feedback and receiving recommendations (Swearingen and Sinha 2002), and recommenders are often not visible during feedback collection (Kobsa and Teltzrow 2005; Jugovac et al. 2017). Indeed, in the case of US food delivery platforms, we found that none of the three major platforms displayed recommenders during feedback elicitation (as of December 2021). Despite the subtle physical presence of recommendation systems in many commercial applications, we found that past studies have prefaced their research by providing users with summarized explanations of recommendation systems before administering questionnaires (Mican et al. 2020; Kim and Kim 2018). We believe this practice may introduce response biases from goal-framing effects by indirectly highlighting the benefits of providing feedback (Levin et al. 1998). Indeed, in said studies we found that individual indicators of explicit feedback disclosure intentions (Kim and Kim 2018) and user acceptance and storage attitudes (Mican et al. 2020) were up to 2.79 times higher than other forms of personal information and implicit feedback. We question the validity of these results as they contradict the typical data mix provided to recommenders, in which personal and implicit data are far more available than their explicit counterparts (Jawaheer et al. 2014). Therefore, to minimize these biases, we used a novel approach to indirectly ensure the presence of recommenders instead of alerting participants. We did so by recruiting users in the USA through Prolific (footnote 3); in the USA, food delivery companies accounting for more than 90% of market share (footnote 4) use recommendation engines. In doing so, we aimed to ensure that the users’ response context was tied to recommenders without directly communicating the survey context. While noting these subtle but important differences between explicit feedback, personal information, and eWOM, antecedents that have been previously studied in these neighboring research perspectives are relevant to our understanding of feedback behaviors.

2.2 Personal information disclosure and the personalization–privacy paradox

Past studies on personal information disclosure to personalization services such as recommenders have mostly focused on the effects of privacy risk perceptions (Kim and Kim 2018; Knijnenburg et al. 2012; Kobsa et al. 2016; Li and Unger 2012; Liao et al. 2021). The central theory tested across many contexts is the notion of a privacy calculus, in which consumers weigh the perceived risks and benefits of information disclosure (Li 2012). It has also been well-cited as the personalization–privacy paradox to describe how personalization services come at the expense of user privacy (Awad and Krishnan 2006; Kobsa et al. 2016). To date, evidence from papers on recommendation systems suggests that privacy risks negatively impact disclosure intentions (Kobsa et al. 2016; Kim and Kim 2018; Knijnenburg et al. 2012), although earlier works indicated increased intentions in light of personalization benefits (Spiekermann et al. 2001; Kobsa and Teltzrow 2005).

At first glance, this body of research appears to have addressed our research question. However, closer inspection reveals that prior studies have one or more of the following characteristics: (1) preference information such as ratings and reviews was specifically not included in the types of personal information studied (Kobsa et al. 2016; Knijnenburg and Kobsa 2013; Zhang et al. 2014); (2) privacy concerns were modeled for personal information as a single variable without separating the types of information such as preferences and personally identifiable information (Chellappa and Sin 2005; Li and Unger 2012; Awad and Krishnan 2006); and (3) privacy concerns were modeled on recommendation system-use rather than on data providing behaviors (Chellappa and Sin 2005; Li and Unger 2012; Burbach et al. 2018). Specifically, we question whether the personalization–privacy paradox extends to providing preferences through ratings and reviews since, in most recommendation contexts, these appear to pose far fewer privacy risks than the personally identifiable information that users provide of their own volition as a precondition of service use. For instance, on food delivery platforms, we consider personal information required to use the service, such as phone numbers, email addresses, delivery addresses, and payment details, to pose greater risks. However, we note that past literature illustrates otherwise (Jeckmans et al. 2013).

The extant literature’s lack of focus on preference information is surprising since such information arguably provides far more important features for prediction and ranking tasks (Kobsa et al. 2016; Knijnenburg et al. 2013; Zhang et al. 2014). In one of the rare exceptions, Kim and Kim (2018) found that disclosure intentions of reviews, ratings, and preferences were positively influenced by self-efficacy and negatively influenced by the perceived severity of privacy risks associated with providing data to recommendation systems. In e-Commerce, Mican et al. (2020) found that the perceived usefulness of recommendations based on personal shopping behavior and general trends positively influenced user acceptance and storage of ratings, reviews, and interests. However, we question how practitioners could act on these antecedents to increase feedback beyond changing algorithms and associated messaging. Furthermore, as noted in Sect. 2.1, these two studies did not specify antecedents to measure beliefs about specific providing behaviors, and user responses indicated biases from being alerted to the presence of recommenders in the survey. Overall, we found that the scope of previously studied constructs has been highly limited. Therefore, our study aimed to explore a range of other constructs affecting behavioral intentions, with a specific focus on establishing whether there is a relationship between privacy risks and preference information disclosure.

2.3 eWOM providing intentions

On the surface, there are few differences between eWOM and explicit feedback. Ratings, reviews, likes, and dislikes can all be classified as different forms of eWOM and explicit feedback. From a user–recommender perspective, a key difference between these ontologies is the tacit messaging intentions of users; explicit feedback assumes communicating relevance information to systems such as recommenders and search engines, whereas eWOM does not. We say the messaging intentions are ‘tacit’ because systems will generally not know whether ratings and reviews were directed at recommenders, restaurant owners, or other platform users (Fischer 2001). Although studies of eWOM provide insights into constructs that influence feedback behaviors, to answer our research question, we need to measure intentions in the presence of recommenders, as perceptions change according to the specific providing contexts (Fishbein and Ajzen 2009). Specifically, the existence of recommendation systems changes incentives such that users providing explicit feedback can receive direct rather than indirect benefits (e.g., altruism or opinion leadership) in the form of incrementally better recommendations (Xiao and Benbasat 2007; Knijnenburg et al. 2012).

Nevertheless, eWOM intentions have been studied broadly in a variety of contexts, such as opinion platforms (Cheung and Lee 2012; Hennig-Thurau et al. 2004), e-Commerce (Fu et al. 2015; Picazo-Vela et al. 2010), and hospitality and tourism (Wu et al. 2016; Yen and Tang 2015). Ismagilova et al. (2021) conducted a meta-analysis of constructs affecting providing intentions, broadly classifying them into personal (e.g., self-enhancement, commitment), perceptual (e.g., perceived risks, personal benefits), social (e.g., the influence of others, reciprocity), and consumption-based factors (e.g., customer satisfaction, involvement). Unlike the focus on privacy concerns in personal information, the selection of constructs has depended more on the research context. And in contexts closer to our study, we noted that providing intentions have been studied in restaurant and café settings, with a predominant focus on customer experiences (Kim 2017; Jeong and Jang 2011; Yang 2017; Kim et al. 2015).

2.4 Model selection

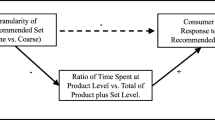

To test explicit feedback intentions, we initially selected the technology acceptance model (TAM) (Davis 1989; Davis et al. 1989). TAM is a robust model that has been empirically tested across various studies in different contexts (King and He 2006). Thus, we deemed it appropriate for the novel context of explicit feedback. However, as we were equally concerned about gaining useful and actionable insights, we extended our model with the theory of planned behavior (TPB) (Mathieson 1991; Li 2012). In similar contexts, TPB has explained intentions to provide online reviews (Picazo-Vela et al. 2010) and positive and negative eWOM (Fu et al. 2015). Ultimately, we selected the augmented approach that combines TAM and TPB (Taylor and Todd 1995). We further extended the model with constructs from the personal information and eWOM literature that were relevant to the context of our study. See Fig. 2 for an illustration of the TAM, TPB and augmented TAM-TPB models.

2.5 Food delivery platforms

Food delivery platforms experienced rapid growth during the coronavirus (COVID-19) pandemic (Kumar and Shah 2021; Yang et al. 2021). To stay ahead of the competition in this environment, leading food delivery platforms actively develop in-house recommendation systems. One engineer from Grubhub has even stated that “recommendations drive 80% of revenue” (Egg 2021), which highlights the vital role these systems play in delivering value to food delivery platforms. Previous research has also identified “the efficient searching and hunting of restaurants” as a key determinant in explaining food delivery app use (Ray et al. 2019). Ratings and reviews can fulfill this purpose by acting as effort-reducing heuristics to help customers identify target products (Hu et al. 2014). Thus, ratings and reviews act as valuable information sources for recommendation systems to deliver better recommendations, reduce browsing effort, and help customers determine which vendors to order from. Furthermore, unlike natively digital services such as music and video-streaming, meals delivered by food delivery applications are consumed entirely outside the application (i.e., in the physical world). This means that implicit feedback is far less effective in monitoring meal-level customer experiences. Consequently, how platforms collect and apply feedback should be seen as a key competitive advantage (i.e., differentiator).

This is why food delivery platforms appear to be constantly experimenting with different user-interface designs to obtain more explicit feedback. Even during our study period, we noticed platforms using a variety of interfaces and feedback options for different users across regions. For our study, we reviewed the top three platforms by market share in the USA and found that they collected a mix of binary responses (thumbs up/down; yes/no questions), star ratings, reviews, and other textual feedback in which users selected predefined tags such as “Tasty” (footnotes 5, 6, and 7). We noted that feedback was typically elicited for restaurants, menu items, and delivery drivers. To test our hypotheses, we focused on ratings and reviews of food-related experiences because all the largest platforms collected them and because they are widely adopted in other domains. We specifically excluded delivery feedback from our study because it is less relevant for product recommendations. One study also found that, in the context of food delivery platforms, reviews on outcome qualities (e.g., taste and portion) were given far more frequently than reviews on deliveries (Yang et al. 2021).

3 Research model, hypotheses, and construct definitions

To explore the influence of constructs on the behavioral intentions of ratings and reviews (the latter hereafter referred to as comments), we hypothesized two separate research models with the same structural relations, as shown in Fig. 3.

We describe the constructs and hypotheses below:

3.1 Behavior and behavioral intentions

We used self-reported past behavior as an indicator of behaviors (Fishbein and Ajzen 2009). Behavioral intentions are subjective probabilities assigned to perform a target behavior, where the stronger the intention, the more likely the person will perform the given behavior (Fishbein and Ajzen 2009). Intentions are generally higher than actual behaviors, and previous studies across many contexts have demonstrated significant positive correlations between these constructs (Fishbein and Ajzen 2009). We hypothesize that:

H1: Intentions to provide feedback will positively affect rating and comment behaviors.

3.2 Perceived usefulness (TAM)

After decades of research and development of recommendation systems across academia and industry, one may expect that there are positive feedback loops between feedback-providing actions and improved recommendation quality that incentivize users to consistently provide explicit feedback. However, data from the field show that explicit feedback provision has remained stubbornly low (Steck 2013; Xu et al. 2020; Dooms et al. 2011), suggesting that alternative mechanisms may explain this phenomenon. Are users not aware of the benefits of providing feedback? Or are these benefits insufficient to motivate user interactions? In our study, we specified perceived usefulness as a key measure to assess whether users were influenced by various benefits to provide ratings and comments to food delivery platforms.

Perceived usefulness was originally defined as “the degree to which a person believes that using a particular system would enhance his or her job performance” (Davis 1989). In our study, we conceptualized usefulness as multidimensional outcome expectations (i.e., the benefits or utility) of the feedback-providing behavior (Compeau and Higgins 1995; Xiao and Benbasat 2007). While our primary motivation is to investigate perceptions of recommendation benefits, feedback's perceived usefulness is not isolated to this dimension. Therefore, we considered feedback's utility more broadly from the literature to identify different facets. For example, the perceived usefulness of feedback can be seen as its ability to serve a positive or corrective message (Waldersee and Luthans 1994; Simonian and Brand 2022). And in eWOM, feedback’s purpose has been viewed as self-focused, other-focused, or company-focused (Kim 2017; Fu et al. 2015). Using these lenses, we identified four possible benefits from the related literature (Table 1).

Firstly, the perceived usefulness for preference learning is designed to measure user perceptions of how explicit feedback can help recommendation systems learn their preferences. If users perceive that systems can effectively learn their taste profiles through feedback behaviors, then we should expect correlations with feedback intentions. The related dimension of perceived usefulness for browsing optimization focuses on the benefits afforded by reduced search costs that are expected from providing explicit feedback to recommendation systems. For example, if a user gave a 5-star rating, the system could position that vendor (or similarly favorable vendors) in a more accessible location of the user interface to reduce browsing effort in future browsing instances. The perceived usefulness for information displayed to other users covers a more altruistic dimension that considers the benefits of providing feedback flowing to other users who may use the ratings and comments to inform purchase decisions. Finally, the perceived usefulness for order quality captures the idea that customer feedback can be used to correct or improve products and services. Taken together, we believe these four dimensions encompass the content domain of perceived usefulness for this study (MacKenzie et al. 2011). However, we do not make any claims about their broader content validity in other study contexts.

In previous studies, perceived usefulness has recorded significant positive effects on user acceptance attitudes and intentions (Mican et al. 2020; Yang 2017). However, in contrast to these findings, Kim and Kim (2018) reported insignificant effects of perceived benefits, a concept that is very similar to perceived usefulness. Overall, we hypothesize that perceived usefulness will affect attitudes and behavioral intentions as follows:

3.2.1 Direct effects of perceived usefulness dimensions on attitudes

- H2.1.PU-PL: Perceived usefulness for learning user preferences will positively affect attitudes toward ratings and comments.
- H2.1.PU-BO: Perceived usefulness for browsing optimization will positively affect attitudes toward ratings and comments.
- H2.1.PU-ID: Perceived usefulness for information displayed to other users will positively affect attitudes toward ratings and comments.
- H2.1.PU-OQ: Perceived usefulness for order quality will positively affect attitudes toward ratings and comments.

3.2.2 Direct effects of perceived usefulness dimensions on behavioral intentions

- H2.2.PU-PL: Perceived usefulness for learning user preferences will positively affect rating and comment intentions.
- H2.2.PU-BO: Perceived usefulness for browsing optimization will positively affect rating and comment intentions.
- H2.2.PU-ID: Perceived usefulness for information displayed to other users will positively affect rating and comment intentions.
- H2.2.PU-OQ: Perceived usefulness for order quality will positively affect rating and comment intentions.

3.2.3 Indirect effects of perceived usefulness dimensions through attitudes on behavioral intentions

- H2.3.PU-PL: The effect of perceived usefulness for learning user preferences on rating and comment intentions is mediated by attitudes.
- H2.3.PU-BO: The effect of perceived usefulness for browsing optimization on rating and comment intentions is mediated by attitudes.
- H2.3.PU-ID: The effect of perceived usefulness for information displayed to other users on rating and comment intentions is mediated by attitudes.
- H2.3.PU-OQ: The effect of perceived usefulness for order quality on rating and comment intentions is mediated by attitudes.
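The three hypothesis sets in Sects. 3.2.1 to 3.2.3 can be summarized in conventional mediation notation. The sketch below is illustrative only: the coefficient symbols (a_k, b, c'_k), intercepts, and error terms are assumed notation rather than the estimated model, and the other predictors in the research model are omitted for brevity. Here k indexes the four usefulness dimensions (PL, BO, ID, OQ), ATT denotes attitudes, and BI denotes behavioral intentions.

```latex
\begin{align}
\mathrm{ATT} &= \alpha_1 + \sum_{k} a_k\,\mathrm{PU}_k + \varepsilon_1, \\
\mathrm{BI}  &= \alpha_2 + b\,\mathrm{ATT} + \sum_{k} c'_k\,\mathrm{PU}_k + \varepsilon_2, \\
\text{indirect}_k &= a_k\,b, \qquad \text{total}_k = c'_k + a_k\,b,
\qquad k \in \{\mathrm{PL}, \mathrm{BO}, \mathrm{ID}, \mathrm{OQ}\}.
\end{align}
```

Under this notation, H2.1 corresponds to a_k > 0, H2.2 to c'_k > 0, and H2.3 to a nonzero indirect effect a_k b for each dimension k.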

3.3 Perceived ease of use (TAM)

Perceived ease of use is “the degree to which a person believes that using a particular system would be free from effort” (Davis 1989). It is modeled to affect perceived usefulness and attitudes in the augmented approach as a decomposition of attitudes toward technology use (Taylor and Todd 1995). However, as our conceptualization of perceived usefulness is specific to user expectations, it makes little sense to hypothesize relations between perceived usefulness and perceived ease of use (e.g., the ease of providing ratings should not affect its perceived usefulness for order quality). Therefore, our research model will only model the effects on attitudes. If explicit feedback features are easy to use, they are expected to lead to favorable user attitudes. Therefore, we hypothesize that:

H3: Perceived ease of use will positively affect attitudes toward ratings and comments.

3.4 Attitudes (TPB)

Attitude is defined in this study as a measure of one’s evaluative beliefs toward providing explicit feedback as favorable or unfavorable (Fishbein and Ajzen 2009). Attitudes were measured on a semantic differential scale with bipolar adjectives (e.g., good…bad) instead of using the expectancy-value model to avoid performing an elicitation study to identify specific attributes that shape attitudes toward providing feedback. Attitudes have recorded positive effects on behavioral intentions in many TPB studies (Armitage and Conner 2001), including for online reviews (Picazo-Vela et al. 2010). In the context of food delivery, users with positive attitudes toward ratings and comments are expected to have higher behavioral intentions. Therefore, we hypothesize that:

H4: Attitudes will positively affect rating and comment intentions.

3.5 Subjective Norms (TPB)

Subjective norms refer to “the perceived social pressure to perform or not perform a certain behavior” (Ajzen 1991). Subjective norms stem from two types of normative beliefs: injunctive and descriptive norms. Injunctive norms concern perceptions of what important people in one’s life think one should (or should not) do. Descriptive norms concern whether important people are perceived to perform the behavior themselves (Fishbein and Ajzen 2009). For eWOM, the construct has been a significant predictor of intentions in past studies (Fu et al. 2015; Wolny and Mueller 2013). The strength of perceived social norms to provide feedback should therefore correlate positively with user intentions to provide ratings and comments. That is, users are more likely to want to provide feedback if they perceive that important people in their lives, such as friends and family, think it is important and provide feedback themselves. Therefore, we hypothesize that:

H5: Subjective norms will positively affect rating and comment intentions.

3.6 Perceived behavioral control (TPB)

Perceived behavioral control encompasses “the availability of information, skills, opportunities and other resources required to perform the behavior” (Fishbein and Ajzen 2009). In the TPB, the construct is modeled to affect both intention and behavior. Although PBC did not affect behavioral intentions in Picazo-Vela et al. (2010), self-efficacy, which shares conceptual similarities, reported significant effects in Kim and Kim (2018). It is expected that if users perceive high control over their ability to give feedback, they should have stronger rating and comment intentions. We hypothesize that:

H6.1: Perceived behavioral control will positively affect rating and comment intentions.

H6.2: Perceived behavioral control will positively affect rating and comment behaviors.

3.7 Feedback intent on positive and negative experiences

Customer experiences, often characterized by valence as positive and negative experiences, are key influences of feedback intentions in prior studies of restaurants and food services (Jeong and Jang 2011; Kim 2017; Yang 2017). In this study, we focused on whether positive and negative experiences trigger the provision of explicit feedback rather than measuring general satisfaction levels. This is because the effects of satisfaction on feedback intentions are expected to stem from idiosyncratic experiences of individual meals that are virtually immeasurable in online surveys. We expect that intentions to provide feedback after positive and negative experiences (hereafter ‘feedback intent on positive experiences’ and ‘feedback intent on negative experiences’) will influence general user intentions to provide feedback as follows:

H7.1: Feedback intent on positive experiences will positively affect rating and comment intentions.

H7.2: Feedback intent on negative experiences will positively affect rating and comment intentions.

3.8 Privacy concerns

Privacy concerns are subjective views on the control and use of personal information (Malhotra et al. 2004). Privacy concerns and associated risk perceptions have recorded negative correlations with intentions to provide feedback (Kim and Kim 2018; Knijnenburg et al. 2012; Chellappa and Sin 2005). If users are concerned about how food delivery platforms handle their preference information, we expect these concerns to reduce their feedback intentions. Therefore, we hypothesize that:

H8: Privacy concerns will negatively affect rating and comment intentions.

3.9 Perceived pressure

Perceived pressure reflects perceptions toward the “degree of push” (e.g., follow-ups to provide feedback) users feel to provide feedback (Picazo-Vela et al. 2010). Because food delivery platforms actively request feedback through notifications and pop-ups after meals are delivered, we expect that users may feel pressure to provide ratings and comments. We noted that previous studies have found significant positive effects of perceived pressure on behavioral intentions (Picazo-Vela et al. 2010; Hardgrave et al. 2003). Specifically, in Picazo-Vela et al. (2010), perceived pressure was found to positively affect behavioral intentions to provide online reviews in an extended model of TPB. Therefore, we hypothesize that:

H9: Perceived pressure will positively affect rating and comment intentions.

4 Research methodology

4.1 Materials and methods

We recruited 800 food delivery platform users from the USA in two batches on December 17 and 27, 2021, through Prolific (www.prolific.com) and directed them to a structured survey on Qualtrics (www.qualtrics.com). Participants received an overview of food delivery platforms, plus definitions and illustrations of ratings and comments. Although the term reviews is more common across the literature, both ratings and reviews start with the letter ‘r’, so we used the alternative word comments to make the difference between the target behaviors more salient. We asked two pre-survey questions to confirm users’ understanding of the instructions, including the types and names of target feedback behaviors and the fact that feedback provision is not compulsory on food delivery platforms. The answers to these questions were clear to participants who read the materials carefully; thus, we excluded participants who could not answer the pre-survey questions correctly. In the pre-survey materials, we intentionally avoided mentioning recommendation systems or personalization benefits, as doing so might have biased user responses.

We used a 7-point semantic differential scale with bipolar adjectives for attitudes and one of the past feedback behaviors. The remaining indicators for constructs were measured using a 7-point Likert scale ranging from “1 = strongly disagree” to “7 = strongly agree”. Survey questions were randomized within thematically related blocks on Qualtrics (consisting of approximately 10–15 statements per feedback type), and we also used two negatively worded questions to reduce response biases. The survey was designed so participants would take no longer than 15 min to complete, lessening any potential impacts of response fatigue (Revilla and Ochoa 2017).

4.2 Operationalization of constructs

To operationalize constructs for our study, we adapted instruments from prior studies (Table 5). For the dimensions of perceived usefulness, unique to this study, we adapted items by inserting our hypothesized outcome expectations into the grammatical frame used in Davis (1989). Since these dimensions were newly developed, we performed an exploratory factor analysis (EFA) over the observed responses to ensure that the factor structure existed as hypothesized. Indicators for positive and negative experiences, privacy concerns, and perceived pressure were operationalized as global constructs rather than for individual feedback behaviors because they relate more to perceptions of individual orders or the system. We altered these by replacing verb phrases such as “provide ratings” and “leave comments” with “provide feedback.” Participants were alerted to this change in the pre-survey explanations and again at the point where the behavioral targets changed, to ensure they understood the target behaviors.

4.2.1 Statistical analyses

EFA was performed on the perceived usefulness dimensions using the ‘psych’ package (Revelle 2022) and RStudio (R Core Team 2020). We used polychoric correlations and selected oblique rotation (oblimin) with principal axis as the estimation method (Watkins 2018; Baglin 2014). After identification of the factor structure, we analyzed the data using partial least squares structural equation modeling (PLS-SEM) in SmartPLS 3.0 (Ringle et al. 2015) and SmartPLS 4.0 (Ringle et al. 2022).

Although TAM and TPB are well-developed theories, explicit feedback intentions are not well-established. We selected PLS-SEM over covariance-based structural equation modeling because of its exploratory nature, which allows us to test a large number of potential constructs affecting feedback-providing intentions in light of limited prior empirical work (Russo and Stol 2021; Henseler et al. 2009). PLS-SEM focuses on maximizing the explanation of the dependent variable (R²) rather than aiming to reduce the discrepancy between the empirical and model-implied covariance matrices (Russo and Stol 2021; Henseler et al. 2009). We tested the hypotheses by bootstrapping the results with 10,000 subsamples (Hair Jr et al. 2021). The SmartPLS software automatically standardizes indicator scores, latent variable scores, and path coefficients.
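The bootstrapping step can be illustrated with a minimal sketch. It is not the SmartPLS procedure: it assumes a single standardized path between two already-computed score vectors (where the coefficient equals the Pearson correlation) instead of re-running the full PLS algorithm on each subsample. The function names `pearson` and `bootstrap_path` are hypothetical.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation; equals the standardized slope for a single predictor."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def bootstrap_path(x, y, n_subsamples=10_000, seed=0):
    """Resample cases with replacement and re-estimate the coefficient each time.

    Returns the original estimate, the bootstrap standard error, and a
    95% percentile confidence interval.
    """
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_subsamples):
        idx = [rng.randrange(n) for _ in range(n)]  # one bootstrap subsample
        estimates.append(pearson([x[i] for i in idx], [y[i] for i in idx]))
    estimates.sort()
    mean = sum(estimates) / n_subsamples
    se = math.sqrt(sum((e - mean) ** 2 for e in estimates) / (n_subsamples - 1))
    lower = estimates[int(0.025 * n_subsamples)]
    upper = estimates[int(0.975 * n_subsamples) - 1]
    return pearson(x, y), se, (lower, upper)
```

Dividing the original estimate by the bootstrap standard error gives the t-value commonly used for significance testing of a path. A percentile interval that excludes zero points to the same conclusion.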

5 Results

5.1 Results of the survey

We collected 796 responses and excluded 18 from further analysis based on evidence of straight-lining and inconsistent responses to negatively worded questions (n = 778). The mean response time was approximately 12.54 min, and the median was 10.48 min, which indicated that the survey length was appropriate (Revilla and Ochoa 2017; Galesic and Bosnjak 2009). Table 2 contains the descriptive statistics. Table 3 shows the unstandardized means and standard deviations of responses.

5.2 Results of the exploratory factor analysis on perceived usefulness dimensions

Contrary to our hypothesized four-factor model, the factor loadings and explained variance suggested that a three-factor model was more appropriate (see Table 4). The three-factor solution recorded an RMSEA of 0.06 (90% CI 0.049–0.071) for the ratings model and 0.049 (90% CI 0.036–0.063) for comments. These are, respectively, considered acceptable (RMSEA: 0.05–0.08) and close fits (RMSEA: < 0.05) (Fabrigar and Wegener 2012). In addition, all of the interfactor correlations were below 0.80, indicating sufficient discriminant validity (Watkins 2018). As two of our hypothesized dimensions of user preference learning and browsing optimization were not identified, we combined these into a single dimension called ‘recommendation quality’ for further analysis (i.e., PU-PL and PU-BO changed to PU-RQ).

5.3 Results of partial least squares structural equation model (PLS-SEM)

5.3.1 Results of the measurement model

The measurement model was assessed for indicator reliability, internal consistency, convergent validity (Table 5), and discriminant validity (Table 6). For indicator reliability, items that recorded outer loadings (OL) below 0.7 were considered for deletion by iteratively assessing their effects on other internal consistency and convergent validity measures (Hair Jr et al. 2021). During this process, we deleted an indicator for attitudes and privacy concerns in both models as these loaded poorly and adversely impacted other measures (see notes to Table 5). In contrast, two indicators for perceived pressure were kept, despite OL being below 0.7, as their deletion adversely affected other construct measures. We reviewed Cronbach’s alpha coefficient (CA) and composite reliability (CR) for internal consistency. Across both models, all constructs except privacy concerns (Ratings and Comments: CA = 0.60) and perceived pressure (Ratings and Comments: CA = 0.66) were above the acceptable threshold of 0.7 (Hair Jr et al. 2021). We nonetheless accepted these scores below the threshold because CA represents a conservative estimate, and both constructs recorded CR (upper range of internal consistency) above 0.7 and average variance extracted (AVE) above 0.5 (Hair Jr et al. 2021). The remaining constructs also recorded AVE scores greater than 0.5, which supported their convergent validity (Hair Jr et al. 2021). To ensure discriminant validity, we used the Fornell–Larcker criterion, which requires the square root of the AVE to be greater than latent variable intercorrelations and all intercorrelations to be less than 0.85 (Fornell and Larcker 1981). The results in Table 6 show that all constructs met the criterion. We also examined the heterotrait–monotrait ratio of correlations (HTMT) to cross-check the results. 
We found that all confidence intervals constructed at the 95% quantile were below the recommended HTMT threshold of 0.85 to support the discriminant validity of constructs in this study (Benitez et al. 2020).
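As a rough illustration of the reliability and validity checks above, the following sketch computes composite reliability and AVE from standardized outer loadings and applies the Fornell–Larcker comparison. The function names and any loadings passed to them are hypothetical, not values from Table 5 or Table 6. The 0.85 ceiling on intercorrelations follows the description in the text.

```python
import math


def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    s = sum(loadings)
    error = sum(1 - l ** 2 for l in loadings)  # error variance of each standardized indicator
    return s ** 2 / (s ** 2 + error)


def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def fornell_larcker_ok(ave_by_construct, correlations, max_corr=0.85):
    """Check that sqrt(AVE) of each construct exceeds its correlations with the others.

    `correlations` maps a pair of construct names to their latent correlation.
    """
    for (a, b), r in correlations.items():
        if abs(r) >= max_corr:
            return False
        if abs(r) >= math.sqrt(ave_by_construct[a]):
            return False
        if abs(r) >= math.sqrt(ave_by_construct[b]):
            return False
    return True


# Hypothetical loadings for one three-indicator construct
example_loadings = [0.8, 0.7, 0.9]
cr = composite_reliability(example_loadings)
ave = average_variance_extracted(example_loadings)
```

With these made-up loadings, both CR and AVE fall above the 0.7 and 0.5 thresholds cited in the text.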

5.3.2 Results of the structural model

To assess the structural model, we first checked both models for signs of collinearity. The variance inflation factors of all constructs in both models were below the ideal threshold of 3 (Hair Jr et al. 2021).

The hypothesis testing results are summarized in Table 7. We also included a graphical illustration of the model in Fig. 4.

Behavioral intentions explained a large portion of the variance in rating behaviors (R² = 0.62, p < 0.001) and comment behaviors (R² = 0.59, p < 0.001) (H1: supported). For constructs with direct effects on intentions, we found that attitudes (H4: supported), subjective norms (H5: supported), and feedback intent on positive experiences (H7.1: supported) recorded statistically significant positive effects on rating and comment intentions at p < 0.001. In addition, feedback intent on negative experiences recorded positive effects on rating and comment intentions at p < 0.05 (H7.2: supported). Perceived behavioral control had insignificant effects on intentions and behaviors (H6.1 and H6.2: not supported). Likewise, no significant effects were found for privacy concerns (H8: not supported) and perceived pressure (H9: not supported). Finally, perceived ease of use positively affected attitudes at p < 0.001 (H3: supported). However, the perceived usefulness dimensions, which include mediation effects through attitudes, recorded mixed results.

For perceived usefulness toward recommendation quality, the direct effects on attitudes of ratings (β = 0.14, p < 0.01) and comments (β = 0.23, p < 0.001) (H2.1.PU-RQ: supported), the direct effects on intentions of comments (β = 0.08, p < 0.05) but not ratings (H2.2.PU-RQ: partially supported), and the indirect effects on intentions mediated through attitudes of ratings (β = 0.03, p < 0.01) and comments (β = 0.06, p < 0.001) (H2.3.PU-RQ: supported) recorded statistically significant effects. Following the methodology of Zhao et al. (2010) to categorize the type of mediation effects, we found that this dimension recorded indirect-only effects on rating intentions and complementary effects on comment intentions. For perceived usefulness of information displayed to other users, the direct effects on attitudes of ratings (β = 0.22, p < 0.001) and comments (β = 0.17, p < 0.001) (H2.1.PU-ID: supported), the direct effects on intentions of ratings (β = 0.06, p < 0.05) but not comments (H2.2.PU-ID: partially supported), and the indirect effects on intentions mediated through attitudes of ratings (β = 0.05, p < 0.001) and comments (β = 0.04, p < 0.001) (H2.3.PU-ID: supported) recorded statistically significant effects. This means that the perceived usefulness of information displayed to other users recorded complementary effects on the ratings model and indirect-only effects for comments (Zhao et al. 2010). For perceived usefulness toward order quality, the direct effects on attitudes of ratings (β = 0.20, p < 0.001) and comments (β = 0.19, p < 0.001) (H2.1.PU-OQ: supported) and the indirect effects on intentions mediated through attitudes of ratings (β = 0.04, p < 0.001) and comments (β = 0.05, p < 0.001) (H2.3.PU-OQ: supported) recorded statistically significant effects. However, the direct effects on intentions of both ratings and comments failed to record significant effects (H2.2.PU-OQ: not supported). 
Therefore, both models recorded indirect-only effects of perceived usefulness toward order quality on providing intentions (Zhao et al. 2010).
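The mediation categories used above can be expressed as a small decision rule. The sketch below follows the typology of Zhao et al. (2010) as we understand it; the function name is hypothetical. Where the text does not report a non-significant coefficient, the example passes a placeholder value of 0.0.

```python
def classify_mediation(direct, indirect, direct_sig, indirect_sig):
    """Classify a mediation pattern from the direct and indirect effects.

    direct / indirect: path coefficients; *_sig: whether each is significant.
    """
    if indirect_sig and direct_sig:
        # Both effects exist: same sign is complementary, opposite sign is competitive
        return "complementary" if direct * indirect > 0 else "competitive"
    if indirect_sig:
        return "indirect-only"
    if direct_sig:
        return "direct-only"
    return "no-effect"


# PU-RQ on comment intentions: direct 0.08 (p < 0.05), indirect 0.06 (p < 0.001)
pu_rq_comments = classify_mediation(0.08, 0.06, True, True)

# PU-RQ on rating intentions: direct effect not significant
# (0.0 is a placeholder; the coefficient is not reported in the text)
pu_rq_ratings = classify_mediation(0.0, 0.03, False, True)
```

Applied to the coefficients reported for recommendation quality, the rule reproduces the complementary (comments) and indirect-only (ratings) labels given in the text.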

We used Cohen’s effect size (f²), which represents the change in the explained variance (R²) of an endogenous construct when a predictor construct is removed from the model, with thresholds of 0.02, 0.15, and 0.35 for small, medium, and large effect sizes (Chin 1998). We found that subjective norms recorded medium effect sizes on rating intentions (f² = 0.27) and comment intentions (f² = 0.28). Feedback intent on positive experiences (f² = 0.09 for ratings; f² = 0.08 for comments) and attitudes (f² = 0.07 for ratings; f² = 0.11 for comments) recorded small effect sizes on both rating and comment intentions. All other constructs recorded insubstantial effect sizes on behavioral intentions (see Sect. 6 for discussion of results). In particular, none of the perceived usefulness dimensions recorded substantial effect sizes to explain rating and comment intentions.
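For reference, the effect size calculation can be sketched as follows, using the standard definition f² = (R² included − R² excluded) / (1 − R² included) and the thresholds cited above. The function names and the R² values in the example are hypothetical and are not taken from the study's models.

```python
def cohens_f2(r2_included, r2_excluded):
    """Effect size of one predictor on an endogenous construct.

    r2_included: R² with the predictor in the model.
    r2_excluded: R² after the predictor is removed.
    """
    return (r2_included - r2_excluded) / (1.0 - r2_included)


def label_effect_size(f2):
    """Map f² onto the 0.02 / 0.15 / 0.35 thresholds."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "insubstantial"


# Hypothetical example: R² drops from 0.50 to 0.45 when a predictor is removed
example_f2 = cohens_f2(0.50, 0.45)
example_label = label_effect_size(example_f2)
```

Under these thresholds, the reported f² of 0.27 for subjective norms is labeled medium and the reported 0.09 for feedback intent on positive experiences is labeled small.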

For effect sizes on attitudes, all perceived usefulness dimensions except the perceived usefulness of recommendation quality on attitudes recorded small effect sizes. Perceived ease of use also recorded small effect sizes on attitudes (f² = 0.09 for ratings; f² = 0.07 for comments).

The model recorded substantial explanatory power (Chin 1998) in terms of explained variance for rating intentions (R² = 0.67) and comment intentions (R² = 0.69). While reporting model fit statistics in the context of PLS-SEM is debated, we recorded a standardized root-mean-square residual (SRMR) of 0.07 for both ratings and comments, which is below the acceptable heuristic fit threshold of 0.08 (Benitez et al. 2020).

5.3.3 Consideration of alternative structural paths

Following our hypothesis testing, we also explored several alternative structural paths to assess whether the explanatory power on behavioral intentions could be improved (Sharma et al. 2019). Specifically, we considered the following plausible structural relations:

1. Perceived ease of use may directly and indirectly correlate with behavioral intentions through attitudes to increase the explained variance of intentions.

2. Subjective norms correlated highly with attitudes; thus, specifying a direct relation may increase the explained variance of intentions.

3. Subjective norms correlated highly with perceived usefulness for recommendation quality; thus, specifying a direct relation may increase the explained variance of intentions.

4. Subjective norms correlated highly with perceived usefulness for order quality; thus, specifying a direct relation may increase the explained variance of intentions.

5. The effects of perceived pressure on behavioral intentions could be moderated by privacy concerns and may increase the explained variance of behavioral intentions.

See Table 8 for the results. The only new structural relation that contributed to an increase in the explained variance of behavioral intentions was from perceived ease of use in the ratings model. This new path resulted in a small increase in the explained variance (R²) of 0.01 (#1: EoU → BI and EoU → ATT → BI) and recorded a small effect size on behavioral intentions (f² = 0.03). However, the new structural path resulted in a corresponding decrease in the effect size of attitudes on behavioral intentions (Δf² = 0.05 [new path, Table 8] − 0.07 [original model, Table 7] = −0.02). Although the effects of perceived ease of use on comment intentions were insubstantial, consistent with the ratings model, the increase in effect size was accompanied by a decrease in the effect size of attitudes on intentions (Δf² = 0.09 [new path, Table 8] − 0.11 [original model, Table 7] = −0.02).

Of the alternative paths with no increases to the explained variance of behavioral intentions, we noted that subjective norms on attitudes (#2: SN → ATT) recorded small effect sizes in both the rating and comment models (f² = 0.09 for ratings; f² = 0.13 for comments). The paths from subjective norms to the perceived usefulness dimensions (#3: SN → RQ and #4: SN → OQ) recorded medium-to-large effect sizes in the rating and comment models but also resulted in SRMR scores above the 0.08 heuristic threshold (Benitez et al. 2020). The moderating effects of privacy concerns on perceived pressure (#5: PC × PP → BI) resulted in a statistically significant effect on rating intentions (β = −0.05; p < 0.05), but the relations to comment intentions remained insignificant. This also changed the direct effects of privacy concerns on rating intentions to become significant (β = −0.05; p < 0.05). However, regardless of the inclusion of moderating effects, the effect sizes remained insubstantial. Given the minimal changes to the explained variance of behavioral intentions from these new structural relations, we did not proceed to calculate other model comparison metrics such as the Bayesian information criterion (Sharma et al. 2019). Overall, as these specifications revealed minimal-to-no improvements in the explained variance (R²) of behavioral intentions, we decided to interpret and discuss the results based on our original model in Sect. 6.

In addition, for the ratings model, we also considered the original TPB, TAM and augmented TAM-TPB (see the respective path structures in Fig. 2). Because of the more parsimonious structure, SRMR values improved in the TAM (SRMR = 0.06; Δ −0.01) and TPB (SRMR = 0.06; Δ −0.01) models. However, even using the adjusted R² values to compare models with different numbers of predictors, we found decreases in the explained variances of rating intentions in the TAM (adjusted R² = 0.42; Δ −0.25) and TPB (adjusted R² = 0.60; Δ −0.07) models. The scores for the augmented TAM-TPB model deteriorated for both model fit (SRMR = 0.12; Δ +0.05) and explained variance (adjusted R² = 0.61; Δ −0.05). As we are concerned with maximizing the explained variance of behavioral intentions, and ratings and comments exhibited very similar correlations, we did not extend the analysis to the comments model.
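The adjustment that makes R² comparable across models with different numbers of predictors can be sketched with the standard formula, adjusted R² = 1 − (1 − R²)(n − 1)/(n − k − 1). The function name is hypothetical. In the example, the sample size (n = 778) and R² for rating intentions (0.67) come from the text, while k = 10 is an assumption based on the ten predictors of intentions mentioned in the discussion.

```python
def adjusted_r2(r2, n_observations, n_predictors):
    """Penalize R² for the number of predictors pointing at the construct."""
    if n_observations - n_predictors - 1 <= 0:
        raise ValueError("need more observations than predictors + 1")
    penalty = (n_observations - 1) / (n_observations - n_predictors - 1)
    return 1.0 - (1.0 - r2) * penalty


# n = 778 and R² = 0.67 are reported in the text; k = 10 is an assumption
example_adjusted = adjusted_r2(0.67, 778, 10)
```

With a sample of this size, the penalty is small, so the adjusted value sits only slightly below the unadjusted R².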

6 Discussion

6.1 The power and potential of explicit feedback elicited through user-to-user relationships

We have long known that explicit feedback is difficult to collect. Yet, it seems that academia and industry shifted their focus to implicit feedback-based recommendation systems before understanding the factors that motivate feedback behaviors. Our results showed that the design and collection of feedback may have been misaligned with users’ primary motivational driver all along: our willingness to provide feedback because of important others in life. Out of the ten predictors of intentions, subjective norms recorded the highest structural relations with intentions to provide ratings (β = 0.41, p < 0.001) and comments (β = 0.41, p < 0.001), and it was the only construct to record a medium effect size on rating (f² = 0.27) and comment (f² = 0.28) intentions. Furthermore, the mean scores in Table 3 show that subjective norms for ratings (x̄ = 3.69) and comments (x̄ = 3.46) were among the lowest of all predictors. These results have important implications for feedback elicitation. First, as the strongest predictor in both models, when individuals perceive strong subjective norms, they are more inclined to leave ratings and comments. Second, the low average scores for subjective norms indicate that most individuals do not currently feel these social norms (e.g., pressures or expectations) to provide feedback. These findings collectively suggest that the data sparsity problem facing recommendation systems may be caused by the formation of implicit social norms in which there is a common understanding among users that very few individuals provide feedback. Intuitively, this simple yet novel result explains why we tend to ignore feedback requests from systems that can learn and discover our preferences but will happily offer it to friends and family when asked.

We believe these results call for a major change in the way explicit feedback is elicited. Specifically, while most recommendation systems currently elicit feedback based on user-to-provider relationships, our results suggested that preference elicitation may be better motivated by social ties that manifest in user-to-user relationships. That is, instead of providers directly requesting or creating perceived pressures to provide feedback (PP recorded no significant effects on intentions), users could be encouraged to share feedback on items and stores with friends, family and other users with similar preference profiles to highlight the role of social dependencies.

There are many approaches food delivery platforms could take to drive these initiatives. For example, by integrating social media accounts to connect with friends and family, platforms could help compile categories such as “inspiration from friends and family” that are based on past interactions including ratings and comments. Users could be prompted to rate several past orders at once to “let your friends know your favorite dishes”. Platforms could also enhance social ties by calculating percentages representing the degree to which your taste profile matches with friends, family and other users willing to share their preferences. Alternatively, for established categories of food such as burgers, users could be asked to vote on the “best burgers in your area” to highlight the value of social voting mechanisms. Social ties could be further enhanced through the promotion of reciprocity by requesting users to answer questions before they can benefit from these new recommendation features. Spotify Wrapped, a viral annual campaign in which users share their top songs, albums, and genres, is one example of how platforms can encourage preference sharing across social networks in a user-to-user manner. While we concede that integrating said data into recommenders is not straightforward and the user acceptability of such initiatives is difficult to know without costly testing, empirical evidence does support the idea of improving recommendation accuracy and diversity by bringing your ‘friends-in-the-loop’ of recommendations (Piao et al. 2021).

Interestingly, the effects of social influences were only salient for those considered ‘important,’ as the more altruistic motivator to improve the information displayed for other platform users (PU-ID) recorded insubstantial effect sizes across ratings and comments. Although some may argue that ratings and comments do not involve any social pressure or conformity, we take the view that subjective norms are “unwritten and often unspoken rules for how we should behave” (Smith and Louis 2009). Over the years of poorly motivated elicitation practices, we believe an unspoken understanding has likely formed between users that no one provides feedback. Thus, reimagining elicitation through user-to-user relationships may be the long-awaited solution to increase explicit feedback.

However, while the promise of social matching has long been touted (Terveen and McDonald 2005; Terveen and Hill 2001), its prevalence among commercial recommenders appears limited. This hints at the potential difficulties of designing and developing positive user–recommender interactions through user-to-user relationships. These difficulties are magnified in food delivery services as they are inherently less social than traditional dining experiences, and delivery areas constrain product diversity. Yet, the power of explicit feedback extends beyond its mere potential to personalize the display of menu items. In the food sector, because inputs such as salt and sugar are easily interchangeable while outputs are highly diverse, explicit feedback could be utilized to personalize the production of menu items by identifying features for improvement (Mourtzis et al. 2018; Wang et al. 2017). Although it is unclear how much customer-level feedback is currently used by food delivery platforms and participating restaurants to improve orders according to individual preferences, at least the potential exists. For now, however, our results showed that feedback’s perceived usefulness in improving the order quality had insubstantial effect sizes to explain feedback-providing intentions. If food delivery platforms are able to incorporate fine-grained food preferences from customer feedback to improve individual meals and effectively communicate the benefits, the effects of this dimension on behavioral intentions should increase.

6.2 Effects of perceived usefulness dimensions and attitudes on feedback intentions

Beyond the effects of subjective norms, a key expectation from our literature review was that explicit feedback may be stubbornly low because users are not aware of or are poorly influenced by the perceived benefits of providing explicit feedback to recommendation systems. Although we identified through the mean scores of responses that users were somewhat aware of the perceived usefulness of providing ratings and comments, users perceived that these benefits flowed the most to other users. Specifically, the perceived usefulness of providing feedback to improve the information displayed for other users (PU-ID: x̄ratings = 5.70; x̄comments = 5.71) was notably higher than the perceived usefulness scores for improving recommendation (PU-RQ: x̄ratings = 4.84; x̄comments = 4.34) and order quality (PU-OQ: x̄ratings = 4.14; x̄comments = 3.92).

When considering the total effects (direct + indirect effects) of perceived usefulness on behavioral intentions, all dimensions recorded statistically significant relations for comments. However, only the perceived usefulness for information displayed to others was significant for ratings. The greater influence of perceived usefulness dimensions on comment intentions may be reflective of the increased information content and cognitive effort typically attributed to reviews.

Overall, however, while we found several statistically significant structural relations for the perceived usefulness dimensions across both models, the effects were largely mediated through attitudes. Importantly, when looking at the total effects, none of the perceived usefulness dimensions individually recorded substantive effect sizes to help explain the behavioral intentions of ratings and comments. It was only when we removed all perceived usefulness dimensions (recommendation and non-recommendation related) at once to calculate the effect size for the comments model that any substantive effect size (f² = 0.02) was recorded. For the ratings model, the effect sizes were insubstantial regardless of whether we calculated f² values on individual dimensions or on all dimensions taken together. These results imply that usefulness perceptions are currently poor predictors of feedback-providing intentions. However, said relations are atypical of behavioral intention models in most contexts, in which perceived usefulness and attitudes are typically the strongest predictors of behavioral intentions (King and He 2006; Fishbein and Ajzen 2009). Therefore, we believe that management and development efforts are needed to strengthen the relationship between feedback behaviors and associated benefits.

To strengthen the associations between feedback behaviors and user perceptions, food delivery platforms should test various messaging strategies (Kobsa and Teltzrow 2005). For example, recommenders could signal how user preference profiles have been updated after providing explicit feedback, as even simple interface cues have been shown to improve perceptions of system effort and recommendation quality (Tsekouras et al. 2022). Alternatively, platforms could display recommendation lists with labeled explanations that highlight the direct connection with past explicit feedback behaviors, such as “Because you rated this product” or “Rated highly by similar users” (Jugovac et al. 2017). Recommenders could also elicit ratings and reviews of several past purchases at once on the premise of helping users discover new items. However, such initiatives may ‘backfire’ if the subsequent recommendations are perceived to be low quality. Furthermore, user attitudes were one of the few constructs that recorded small but substantive effect sizes on behavioral intentions (f² = 0.07 for ratings; f² = 0.11 for comments), and attitudes were explained moderately by usefulness perceptions and ease of use (R² = 0.40 for ratings; R² = 0.41 for comments). When designing elicitation techniques, it is therefore important that food delivery platforms balance the effectiveness of features with their ease of use. Merely stating that feedback will be used for personalization will not be enough for users to confirm its usefulness and influence providing intentions over time (Oliver 1980).

6.3 Effects of privacy concerns on feedback intentions

Significantly, our results provide additional empirical support to reject the much-cited personalization–privacy trade-off in the context of rating and review-based preference information provided to recommendation systems (Schnabel et al. 2020). We contend that once users have given up personally sensitive information to use platform services, privacy concerns are no longer a predictor of providing intentions. This relationship is important, as preference information is arguably far more important for recommenders than personally sensitive information. However, further research is required to validate these findings as the sample characteristics of participants recruited online may have affected the results. Furthermore, these results may stem from the fact that some platforms did not display user profile details associated with ratings and reviews, which may have provided psychological safety for users to freely express their preferences.

6.4 Effects of perceived behavioral control and perceived pressure on feedback intentions

We also found that perceived behavioral control had insignificant effects on user intentions despite having the highest mean response of 6.02 for ratings and comments (Table 3). In addition, unlike the results in Picazo-Vela et al. (2010), perceived pressure also recorded insignificant effects. These results are intriguing because although food delivery platforms appear to make feedback requests after every purchase, neither users’ high perceived abilities to provide feedback nor perceived pressures influenced providing intentions. While overall user engagement may be higher when feedback is constantly elicited from users, such gains may be accompanied by potential declines in user experiences (Footnote 8). Done poorly, these strategies may instill habits of dismissing feedback requests altogether, which is particularly troubling if said feedback is seen to provide little tangible benefit (e.g., does a restaurant with thousands of 5-star ratings need more?). Although this study did not establish that persistent feedback requests negatively affect attitudes or customer experiences, attitudes and feedback intent on positive experiences were two of the three constructs to record statistically significant effect sizes, so how to preserve them (e.g., by respecting users’ attention) should be an important consideration.

6.5 Effects of customer experiences on feedback intentions

For experience-based constructs, positive (βratings = 0.25, p < 0.001; βcomments = 0.21, p < 0.001) and negative experiences (βratings = 0.08, p < 0.05; βcomments = 0.07, p < 0.05) recorded significant positive effects on rating and comment intentions, confirming the importance of customer satisfaction on feedback-providing intentions. While our results showed that positive and negative experiences were both statistically significant predictors of overall feedback intentions, only positive experiences had statistically significant effect sizes (f2ratings = 0.09; f2comments = 0.08). This tells us that satisfied users are more likely to share feedback, which is generally consistent with results from prior studies across different contexts (Wu et al. 2016; Sparling et al. 2011; Büttner and Göritz 2008). However, the weaker relations for negative experiences may also arise because such experiences are less frequent and idiosyncratic to individual meals. For food delivery applications, because the consumption experience occurs entirely outside the application, explicit feedback will continue to be an important tool for monitoring customer satisfaction.

6.6 Other discussions on the rating and comment models

To the best of our knowledge, this paper is the first to model constructs influencing two forms of explicit feedback. Consistent with our understanding, we found that the mean scores for rating and comment intentions were lower than those for actual providing behaviors. Furthermore, both feedback intentions and providing behaviors recorded mid-to-lower ranges of the average scores, which indicated improved validity of responses compared to similar prior studies in which feedback information recorded high intentions that failed to reflect the underlying sparsity problem (Kim and Kim 2018; Mican et al. 2020). Although we expected rating and comment intentions to manifest some heterogeneity because of differences in feedback characteristics like cognitive effort (Hu et al. 2014; Sparling et al. 2011), our study showed that the relevance and significance of structural relations were mostly similar across the two behaviors. We could have also performed statistical analyses to test whether the ratings and comments models recorded statistically significant differences in path coefficients. However, considering the limited observable differences between the two models, we focused our analysis on exploring the implications of constructs by comparing differences in hypothesized relations.

7 Limitations and future research directions

This study should be interpreted in the context of its limitations. Firstly, users’ stated intentions might not correlate with actual feedback behavior (Barth and de Jong 2017; Spiekermann et al. 2001; Knijnenburg et al. 2012). Secondly, explicit feedback perceptions may vary significantly across domains. Therefore, practitioners must be careful in generalizing the results to other domains. For example, in e-commerce, product variety makes identifying relevant evaluative dimensions significantly harder because user interests are heterogeneous across sessions but homogeneous within sessions (Feng et al. 2019). Thus, it is difficult to foresee how users could directly and incrementally benefit from providing consistent feedback. In this sense, feedback is more beneficial in domains with shorter feedback cycles within defined categories of products, such as cuisines of food and genres of music, online news, and short videos, so that systems can explore and exploit evolving user tastes. Thirdly, even if platforms collected feedback in line with the constructs identified in this study, user behaviors might not change because of ingrained habits and mindsets. In other words, user motivations may not equal or explain amotivations, i.e., a lack of intention or motivation to engage in the behavior. Moreover, our measures do not capture idiosyncrasies of user perceptions at the individual meal level that are expected to play an important role in influencing user intentions. Finally, as our model was designed to explore the relevance of a range of constructs, future work should derive more parsimonious models in confirmatory settings.

Our work raises some interesting research directions for user–recommender interactions. For example, the key psychological influences we identified in this study could motivate future user studies using different elicitation mechanisms. Specifically, future works should consider evaluating the effects of feedback elicitation techniques utilizing user-to-user relationships. In addition, while our results showed that correlations between perceived usefulness and explicit feedback intentions were mostly insubstantial, these are general user perceptions that do not necessarily establish a clear causal connection between incremental user feedback, recommendation adjustments, and user perceptions of usefulness. Indeed, experimental settings manipulating objective system aspects may reveal stronger correlations between user effort and perceived benefits. For instance, studies could manipulate the type of algorithm—whether explicit or implicit—or the relative weights associated with each feedback type in mixed models to assess the effects on user perceptions of usefulness toward feedback actions. Studies may find that perceived benefits of providing feedback are only felt by knowledgeable users or that benefits diminish over time depending on the size and variety of candidate items in the search space. Recent work in user control over algorithms to influence recommendations (i.e., not feedback on items recommended or consumed) shows mixed empirical results over whether the perceived effectiveness of controls encourages more interactions (Rani et al. 2022; Jin et al. 2017; Vaccaro et al. 2018). However, for other forms of user–recommender interactions, the causal mechanisms behind recommendations, interactions and user perceptions appear to be present (Kamehkhosh et al. 2020; Liang and Willemsen 2022). Thus, we encourage similar work to be carried out for explicit feedback behaviors to better understand the causal mechanisms driving feedback-providing behaviors.

8 Conclusion

Based on our results and discussion, we offer the following practical measures to motivate explicit feedback provision. Firstly, and most importantly, platforms should motivate feedback provision through social ties that manifest in user-to-user relationships rather than relying on existing motivations manifesting in user-to-provider relationships. In food delivery systems and beyond, the focus for explicit feedback is often placed on providing ratings and reviews to platforms, products, and providers, which do not evoke the power of social ties. By evoking the presence of friends, family and other local or similar users, platforms can establish a connection with subjective norms, making users feel that their feedback can positively influence the behaviors of important others in life. We believe that shifting user motivations toward user-to-user relationships in this manner may be the missing key to counter what appears to be existing social norms surrounding users’ unwillingness to provide feedback. Secondly, we found hypothesized facets of feedback’s perceived usefulness recorded individually insubstantial effect sizes on providing intentions. As user attitudes and perceived usefulness are typically the strongest predictors of behavioral intentions, we believe that platforms need to strengthen the association between feedback behaviors and associated benefits. Specifically, we suggest that platforms should highlight how recommenders can help users discover new and better items through the provision of feedback by considering various messaging strategies. In addition, because perceived behavioral control recorded high mean scores, but the effects of this construct and perceived pressure were insignificant on behavioral intentions, platforms should reexamine the effectiveness of frequently prompting users for feedback as a possible means to preserve user attitudes and satisfaction. Finally, we delineated ratings and reviews from other forms of personal information and found that privacy concerns were insignificant influences of providing intentions, going against the much-cited personalization-privacy paradox.

Collecting more explicit feedback alone is unlikely to be the panacea for recommenders. Indeed, we expect that implicit feedback and other algorithmic solutions will continue to play a larger role in the development of recommendation systems. Yet, explicit feedback should not be forgotten as a valuable information source for user modeling. Specifically, even if models are trained on implicit feedback, explicit feedback’s ability to reduce or expand the search space and communicate positive and negative evaluations is an important feature to improve recommendations. Explicit feedback has been shown to improve recommendation metrics in mixed models that blend both feedback types and shows promise in real-time recommendations to quickly update candidate items. For recommendation scenarios such as food delivery where the consumption experience is entirely outside of digital environments, explicit feedback may be the only means to effectively measure customer satisfaction at the product level, especially when experiences are negative. Given the growing importance of recommenders, we expect our novel findings to lay the foundation for future work in user–recommender interactions and help improve preference elicitation practices in food delivery and beyond.

Data availability

The dataset for the study is not publicly available as our ethics approval restricts distribution for purposes outside academic publications. As we require additional approval from the Human Subjects Research Ethics Review Committee of Tokyo Institute of Technology to distribute the data to outside parties, we will only consider inquiries establishing a valid scientific basis. Any documents that are required to support the ethics committee review will need to be compiled by the requestor (contact the corresponding author for details).

Notes

Food Discovery with Uber Eats: Using Graph Learning to Power Recommendations. https://eng.uber.com/uber-eats-graph-learning/

Homepage Recommendation with Exploitation and Exploration. DoorDash Engineering Blog. https://doordash.engineering/2022/10/05/homepage-recommendation-with-exploitation-and-exploration/

Prolific performs identity checks on participants to provide evidence that users are based in their registered locations. See page for details: https://www.prolific.co/blog/why-participants-get-banned