Abstract

This article contributes to the discussion regarding the relationship between idealisation, de-idealisation and cognitive scientific progress. In it, I raise the question of the significance of the gradual de-idealisation procedure for constructing political science theories. I show that conceptions that assume the reversibility of the idealisation process can be an extremely useful theoretical perspective in reconstructions of political science modelling and analyses of scientific progress in political science. I base my position on the results of the methodological reconstruction of Richard Jankowski’s theory of voting. My reconstruction and the results of empirical studies show that by gradually removing simplifying assumptions, models can emerge that more accurately identify the determinants of the voting decision and the corresponding relationships. In the case I analysed, the transition from coarse-grained to fine-grained models likely demarcates the line of scientific progress.

1 Introduction

Through modelling, theorists can obtain indirect access to real-world phenomena. Representing and analysing empirical reality through models is one of the most significant kinds of theorising, as evidenced by the continuing interest of philosophers of science in the process of construction, structure, and functions of physical and abstract constructs representing selected parts of the factual world. Depending on their research goals and assumptions, theorists use different techniques and tools to build models. The multiplicity of modelling strategies and model types has significant theoretical implications. It makes it much more difficult for philosophers to formulate sufficiently broad and cognitively valuable generalisations. Discussions on idealisation and de-idealisation reflect difficulties of this kind.

When the idealisation method is used, the model becomes a simplification of the represented phenomenon. Earlier work on model-based theorising emphasised the importance of the reversibility of the idealisation and de-idealisation processes (McMullin, 1985; Nowak, 1977, 1980). According to this view, de-idealisationFootnote 1 is a procedure that serves as a condition for cognitive scientific progress. As a consequence of the gradual removal of successive idealising assumptions, the theory becomes a sequence of increasingly detailed and complex models. The process of gradual approximation of a theory to empirical reality ends when the most realistic model becomes a sufficient approximation of the target phenomenon.

More recent analyses either criticise the picture of modelling described above (Knuuttila & Morgan, 2019; Reiss, 2012; Rice, 2019) or point out the complex nature of the relationship occurring between idealisation and de-idealisation while avoiding overly categorical statements (Cassini, 2021; Peruzzi & Cevolani, 2022). Gradual de-idealisation is not always feasible, as some idealising assumptions are permanently ineliminable. For example, human cognitive limits or absolute computational limits may stand in the way of advancing to a more detailed model. Gradual de-idealisation may also not be convenient from a practical standpoint. Some idealising assumptions are ineliminable at a given stage of the research, e.g., due to methodological limitations, while others, although they can be removed, are retained, as they provide the model with sufficient flexibility and universality (see, e.g., Batterman, 2002, 2009; Potochnik, 2017; Shech, 2023). Therefore, capturing progress in science in terms of transitioning from more idealised models to less idealised models has some limitations. It leads to conclusions with significant cognitive value only in specific fields and cases.

This article supports the conceptions emphasising the significance of gradual de-idealisation in the process of constructing scientific theories. Based on an analysis of the methodological aspects of the construction of Richard Jankowski’s (2015) theory of voting, I show that conceptions assuming the reversibility of the idealisation process can provide an extremely fruitful theoretical perspective in reconstructions of political science modelling and analyses of cognitive progress in political science.Footnote 2 My reconstruction and the results of empirical studies show that by gradually removing simplifying assumptions, models can emerge that more accurately identify the determinants of the voting decision and the corresponding relationships. In the case I am discussing, the transition from more idealised models to less idealised models likely demarcates the line of scientific progress. My findings complement the knowledge produced within the mainstream research on idealisation, de-idealisation and related cognitive procedures, which is dominated by reconstructions of theories derived from the natural sciences and economics.Footnote 3

The remainder of this paper is structured as follows. In Sect. 2, I describe the procedures of idealisation and de-idealisation and discuss the relationship between them and the importance philosophers ascribe to them in the process of building scientific theories. An important part of this discussion is the characterisation of the scheme of idealisation and gradual de-idealisation in terms of the idealisational theory of science (hereinafter: ITS), a concept created in the second half of the twentieth century by Leszek Nowak and his colleagues as part of the Poznań School of Methodology. In Sect. 3, I briefly discuss the main theories of scientific progress, focusing primarily on the approach elaborated within the scope of ITS. In Sect. 4, I apply the methodological tools of ITS to reconstruct Jankowski’s theory of voting. The analysis of the models that make up Jankowski’s theory is the starting point for the discussion, in Sect. 5, regarding the role of the gradual de-idealisation procedure in the process of cognitive progress in political science. In the final section of the text, I summarise the main findings and conclusions of the study.

2 De-idealisation

One of the key common features of the various fields of human creative activity is the use of various types of deformation procedures (Nowak, 2012; Van Fraassen, 2013). They play a significant role not only in literature, painting or sculpture, where they are rarely questioned and are considered typical means of artistic expression, but also in science. There, their use raises questions and disputes, as it challenges the common image of science as an activity whose goal should be an adequate description of reality.Footnote 4 The nature of complex knowledge generation processes, particularly model-based theorising, challenges the factualist image of science. Reconstructions from different, often radically different, scientific disciplines show that theory building is not based on a faithful representation of the fragment of the analysed reality but on its skilful deformation (Godfrey-Smith, 2009; Jebeile & Kennedy, 2015; Morgan, 2006; Nowak, 2000a; Peruzzi & Cevolani, 2022; Seifert, 2020; Svetlova, 2013). One method that enables the theorist to deform the subject of consideration in a cognitively valuable manner is idealisation. Roughly speaking, it involves the deliberate, whether permanent or merely temporary, counterfactual assignment of extreme values (i.e., zero or infinity) to some parameters of the modelled system. Consequently, the system is represented as if it did not have the features it actually possesses, which is reflected in a counterfactually deformed model.Footnote 5

The use of the idealisation method is mainly dictated by human cognitive limitations and methodological constraints that researchers face in a given discipline at a given time. The aspect-based nature of human cognition and the limited power of non-biological computing systems mean that the researcher frequently finds the analysis of all the determinants of the analysed phenomenon to be beyond their reach. For this reason, they simplify the modelled fragment of reality by focusing on certain features and relationships while ignoring others. This strategy provides the basis for achieving set cognitive goals, such as exposing the relationship between the analysed magnitude and its principal factor (Nowak, 2000b), representing key causal patterns (Potochnik, 2017), isolating core mechanisms and dependencies (Mäki, 1994), facilitating scientific exploration (Shech & Gelfert, 2019), facilitating explanation and understanding (Batterman, 2002; Bokulich, 2008; Shech, 2018, 2022), improving the mathematical tractability and efficacy of the model (McMullin, 1985), and building and developing theory (Rohwer & Rice, 2013; Ruetsche, 2011).

In earlier discussions of scientific modelling, the prevailing view was that idealisations only indicate elements of secondary explanatory (causal) significance (Elgin & Sober, 2002; McMullin, 1985; Nowak, 2000b; Strevens, 2008; Woodward, 2004). One of the non-trivial theoretical consequences of viewing idealisation in terms of assumptions with an immeasurably less significant role in knowledge generation than realistic assumptions is the reversibility of the idealisation process. Therefore, the simplifications introduced in the model are justified by future elimination. With the emergence of new cognitive needs and methodological development, the researcher can gradually remove successive idealising assumptions, moving from a simple initial model, taking into account only the influence of factors most relevant to the theoretical perspective adopted, to more detailed models that also take into account the influence of secondary factors. According to this view, de-idealisation is a cognitive procedure through which scientific progress occurs.

The belief in the secondary nature of the de-idealisation procedure is perfectly illustrated by ITS, a concept founded on essentialist ontology (Nowak, 1977, 1980, 2000b). According to ITS, the construction of a theory consists of several stages. First, on the basis of the ontological perspective adopted, the theorist divides factors into those that influence the analysed magnitude F and those that do not. The former form the image of the space of essential factors for F,Footnote 6 and the latter are reduced. The theorist then distinguishes two subsets within the set of essential factors: principal factors and secondary factors. They make this division based on the theoretical perspective adopted. By prioritising the power of influence of individual principal and secondary factors, an image of the essential structure of F is formed.Footnote 7 In the next step, the theorist adopts idealising assumptions that omit secondary influences. With the emergence of new cognitive needs and methodological developments, they gradually concretise the basic model, removing successive idealising assumptions. Concretisation follows a strict order. The order in which the idealising assumptions are removed is determined by the magnitude of the influence of each particular secondary factor. The secondary factors that have the greatest influence on the analysed magnitude are considered first, followed by factors with a relatively less significant influence. Usually, the theorist does not carry out final concretisation because they lack sufficient information about the influence of some secondary factors. Their influence is then determined by approximation.
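
The ordering rule described above can be illustrated with a minimal Python sketch. It is not part of ITS itself: the numeric influence scores, the factor names `p`, `q`, `r`, the principal factor `H`, and the function names are all hypothetical, introduced only to show how a basic model grows into a sequence of concretisations when secondary factors are reintroduced from the most to the least influential.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SecondaryFactor:
    name: str
    influence: float  # hypothetical score for the factor's power of influence


def concretisation_order(factors):
    """Order secondary factors from the strongest to the weakest influence."""
    return sorted(factors, key=lambda f: f.influence, reverse=True)


def model_sequence(principal, factors):
    """Return the basic model followed by its successive concretisations.

    Each model is a list of the factors it takes into account; every step
    removes one idealising assumption, i.e. adds one secondary factor.
    """
    models = [[principal]]  # basic model: principal factor only
    for factor in concretisation_order(factors):
        models.append(models[-1] + [factor.name])
    return models


# Hypothetical example: principal factor H and three secondary factors.
factors = [
    SecondaryFactor("q", 0.1),
    SecondaryFactor("p", 0.5),
    SecondaryFactor("r", 0.3),
]
sequence = model_sequence("H", factors)
for k, model in enumerate(sequence):
    print(f"model {k}: considers {model}")
```

In practice, as noted above, the final concretisation is usually not reached; in this sketch that would correspond to stopping the loop early and treating the remaining factors by approximation.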

Examples of idealisational statements often analysed in ITS include Galileo’s law of free fall (Nowak, 1977, 2000a) and Marx’s law of value (Nowak, 1977, 1980). Both laws propose a highly simplified view of reality. They emphasise the influence of principal factors while ignoring the influence of secondary factors. Galileo formulated the law of free fall for frictionless motion, which does not occur in the factual world. The transition from the idealised conditions proposed by Galileo to concrete empirical situations thus requires consideration of the influence of secondary factors, such as the resistance of the medium. The same is true of the law of value, which Marx formulated for an idealised economy with a permanent equilibrium between demand and supply. By ignoring the effects of fluctuations in demand and supply on the price of goods, Marx obtained a picture of an economy in which the price of a commodity corresponds to its value. Taking the secondary factor into account results in a more realistic version of the simple initial model. The concretised model stipulates that when the absolute value of the difference between the demand for and supply of a commodity is not zero, the price of that commodity corresponds to its market value.

According to ITS, the course of the de-idealisation process determines the structure of the idealisational theory. Some idealisational theories have a linear structure, whereas others have a star-shaped structure. In addition, numerous intermediate forms are located between the linear structure and the star-shaped structure (Nowak, 2000b). In theories with a linear structure, secondary factors are introduced by the researcher into the last model of a given theory, i.e., the one with the fewest idealising assumptions. In effect, such a theory becomes a linear sequence of models that differ in the number of simplifications. Star-shaped idealisational theories, on the other hand, distinguish between a basic model with a certain number of idealising assumptions and a set of derivative models that have one idealising assumption fewer. In theories of this type, the researcher, wishing to learn about the effect of another secondary factor, does not concretise the derivative model but returns to the basic model and removes the idealising assumption necessary to study the secondary influences of interest.
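
The difference between the two structures can be made concrete with a small Python sketch. It assumes, purely for illustration, that a model is identified by the set of idealising assumptions it still contains; the labels `a1` to `a3` and both function names are hypothetical.

```python
def linear_theory(assumptions):
    """Linear structure: each model concretises the previous, most realistic one.

    `assumptions` lists the idealising assumptions in their order of removal.
    """
    models = [frozenset(assumptions)]  # basic model keeps every assumption
    for assumption in assumptions:
        models.append(models[-1] - {assumption})
    return models


def star_theory(assumptions):
    """Star-shaped structure: every derivative model returns to the basic model
    and removes exactly one idealising assumption from it."""
    basic = frozenset(assumptions)
    derivatives = [basic - {assumption} for assumption in assumptions]
    return basic, derivatives


assumptions = ["a1", "a2", "a3"]  # hypothetical labels

linear = linear_theory(assumptions)
basic, derivatives = star_theory(assumptions)

print([sorted(m) for m in linear])       # models with 3, 2, 1 and 0 assumptions
print([sorted(m) for m in derivatives])  # three models with 2 assumptions each
```

The intermediate forms mentioned above would mix the two patterns, for example by branching from a model that has already been concretised once.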

The essentialism of ITS is also reflected in the idealisational conceptualisation of the explanation. Accordingly, to explain a phenomenon’s behaviour is to show how the phenomenon depends on its most significant determinant and how less significant determinants modify this dependence. The explanation, therefore, requires that a de-idealisation procedure complements the idealisation procedure. However, the influence of secondary factors is not always shown by removing idealising assumptions. In addition to strictly counterfactual statements, i.e., statements assuming the exceptionless non-importance of certain factors, it is also possible to introduce into the model and remove assumptions that may be fulfilled under certain conditions and not under others. On ITS grounds, these are called quasi-idealising assumptions (Nowak, 1974). Marx’s equilibrium between demand and supply is a quasi-idealising assumption. It will take the form of an idealising assumption when the difference between the magnitude of demand and supply in a given economy is not equal to zero; otherwise, i.e., when fulfilled in the empirical world, it will be a realistic assumption. Examples of assumptions of this type are provided by the reconstruction of Jankowski’s theory of voting, found in Sect. 4.2.

Conceptions that emphasise the secondary nature of the de-idealisation method can provide an extremely interesting and cognitively fruitful theoretical perspective in reconstructions of scientific modelling and analyses of the development of science. However, they have their limitations. The belief that idealisations only point to elements secondary in explanatory power and the resulting assumption of the reversibility of the idealisation process are, in themselves, far-reaching simplifications. This fact has been pointed out by philosophers showing the limited application of conceptions such as ITS in the analyses of selected physical, cosmological, biological, and economic theories (Alexandrova, 2008; Carrillo & Knuuttila, 2022; Kennedy, 2012; Knuuttila & Morgan, 2019; Reiss, 2012; Rice, 2018, 2019). These analyses highlight the cognitive benefits of a non-representationalist approach to model explanation (Kennedy, 2012), of seeing and analysing idealised models as distorted representations holistically rather than in a piecemeal manner (Carrillo & Knuuttila, 2022; Rice, 2018, 2019), and of treating de-idealisation as an independent cognitive procedure rather than as a procedure implied by idealisation (Knuuttila & Morgan, 2019). However, it seems that both the approaches that emphasise the secondary nature of the de-idealisation method and the approaches contrary to them are based on overarching generalisations. This is because, in practice, the cognitive value of conceptions such as ITS depends on many factors and varies from field to field (Cassini, 2021; Peruzzi & Cevolani, 2022). In some fields, the de-idealisation method plays an important role, being one of the mechanisms of theoretical development, as I demonstrate in this article. In other fields, it is of marginal importance; knowledge accumulation in them does not occur “vertically” but “horizontally”, which manifests itself in the construction of multiple, not always mutually consistent, models of the same phenomenon.

3 Scientific progress

Like other fields of human cultural activity, science is not a static creation with once-defined boundaries and content, but a process that takes place gradually, step by step, or by periodic qualitative leaps. Historians and philosophers who view science through the lens of directional transformation analyse scientific changes using the axiologically entangled notion of progress. Analyses of scientific progress mainly focus on improvements over time in representations of the empirical world (laws, theories, models, etc.) built by scientists. In addition to cognitive scientific progress, other forms of scientific improvement are also possible. These include, for example, an increase in funding for research, improvement of material and technical conditions for the practice of science, methodological development, or intensification of activities that foster the construction of an inclusive academic culture (Dellsén et al., 2022; Niiniluoto, 2019). The object of my interest in this article is exclusively cognitive scientific progress. Philosophers usually distinguish four accounts of it, all of which can be found in different variants. These include functionalist, noetic, semantic, and epistemic accounts (e.g., Dellsén, 2018; Lawler, 2023; Niiniluoto, 2023; Rowbottom, 2023).

The foundations for the functionalist account were laid by Kuhn (1962), and it was developed and detailed by Laudan (1977). This account defines progress in terms of effectiveness in solving scientific problems. Progress occurs when the number of important unsolved problems decreases. Some problems are solved, while others are discarded or decline in significance. The degree of significance of the problems is determined by the research tradition within which the problems were formulated. The relativisation of problems to the framework of traditions means that a problem in one tradition may not be a problem in another one.Footnote 8 Developed by Dellsén (2016, 2021, 2023), the noetic account links scientific progress with increased scientific understanding. Understanding forms a spectrum, meaning that researchers can understand the target phenomenon to a higher or lower degree. A prerequisite for a better understanding of the analysed phenomenon is to have a model that more precisely defines the relationships in which the phenomenon is entangled. The semantic account is based on Popper’s (1963) initial assumptions, which were later developed mainly by Niiniluoto (1984, 1987, 2014). This account ties scientific progress to an increase in the truthlikeness of a theory. The concept of truthlikeness indicates that certain theories may be closer to the truth (more truthlike) than others. Scientific progress occurs between two theories when the later theory is more truthlike than the earlier one.Footnote 9 According to the epistemic account developed by Bird (2007, 2008, 2016), scientific progress occurs as a result of knowledge accumulation, i.e., through a process by which new elements are added to the existing body of knowledge. Bird agrees with the view that knowledge requires truth, belief, and some form of justification. In his view, scientific progress between two theories occurs only when “the later theory contains more or stronger propositions that are true, believed, and justified by the scientific evidence than does the earlier theory” (Dellsén, 2018, p. 6).Footnote 10

One highly interesting and theoretically relevant account of scientific progress is also proposed by ITS (Nowak, 1977, 2000b), which defines not only what constitutes progress but also what promotes and realises said progress. ITS assumes that some theories are essentially more truthful than others. It is based on the essentialist concept of truth, according to which

the truth of a phenomenon is to be contained in its essence; that is why, in order to describe the phenomenon truly, one has to leave out its ‘appearance’. In this sense one can talk about the truthfulness of a caricature which is based on the omission of some less significant features of a given person or situation, and on the exaggeration of its more significant features. In such a sense one can also talk about the truthfulness of idealizational statements which aim to omit secondary factors and take primary ones into account (Nowak, 2000b, p. 71).

In this approach, cognitive scientific progress occurs when statements are formulated that more accurately (more adequately) reproduce the essential and nomological structuresFootnote 11 of the analysed phenomenon. The line of progress in science is thus marked by a series of statements, each of which is characterised by a higher level of essential truthfulness, i.e., it more accurately identifies the principal and secondary factors for the target phenomenon and more accurately reflects the hierarchisation of the influence power of the distinguished factors and the corresponding relationships (Nowak, 2000b; Nowakowa, 1992a). Depending on the type of factors recognised by the researcher, a given statement may be partially, relatively, or absolutely true:

[…] a (simple, linear) idealizational theory is partly true if in the idealizational law it takes into account some, but not all, main factors for the magnitude which is being explained. It is relatively true if in the idealizational law it takes into account all and only main factors for the explained magnitude. The more secondary factors are taken into account in the derived models (i.e. concretizations of the law), the higher is the level of its (relative) essential truthfulness. In the border case, if the idealizational theory considers all factors relevant for the explained magnitude, and if it does it in the sequence which corresponds to their significance for this magnitude, we say that the theory is absolutely true (Nowakowa, 1992b, p. 238).Footnote 12

The explanatory power and accuracyFootnote 13 of idealisational theory depend on the degree of “proximity” of the statement to the actual essential and nomological structures. The starting point of the explanation process is an idealisational statement showing how the states of the analysed phenomenon depend on the principal factor related to it. More realistic concretisations that take into account the influence of secondary factors are then derived from this statement. In a major simplification, “to explain a phenomenon is to reveal its essence and to show how, thanks to accidental circumstances, this essence leads to the occurrence of this phenomenon” (Nowak, 1977, pp. 93, 94).

In the following two sections, I show that ITS can be an important and fruitful tool in the reconstruction of political science modelling and analyses of cognitive progress in political science. Unlike other theories of science, ITS explicitly links idealisation and de-idealisation procedures to cognitive scientific progress. Furthermore, ITS provides methodological tools applicable to the reconstruction of theories built using idealisation and gradual de-idealisation procedures. In Sect. 4, I present a reconstruction of Jankowski’s theory of voting. The idealisational reconstruction makes it possible, first of all, to clarify the methodological structure of the analysed theory. Thanks to it, one can distinguish: the simplifying assumptions made by the researcher, the basic model, and the sequence of derivative models resulting from subsequent concretisations. The idealisational reconstruction, moreover, enables linking subsequent models to key theoretical concepts, which is of colossal importance as it sheds light on the evolution of the theoretical perspective adopted in the study (Nowak, 2000b). The results of the reconstruction and the findings I made based on them serve me in Sect. 5 as a starting point for discussing the role of gradual de-idealisation in the occurrence of cognitive progress in political science.

4 Case study: methodological reconstruction of Richard Jankowski’s theory of voting

4.1 Towards the single best explanation of voting

Voting is one of the most common, and perhaps most essential, democratic procedures. It enjoys the continued interest of political scientists, who eagerly seek answers to questions about its principles, mechanisms, functions, and consequences. After World War II, the study of electoral participation was dominated by behaviouralists, who placed particular emphasis on the use of empirical quantitative techniques. However, attempts to quantify the observable behaviour of individuals, despite a number of advantages and considerable relevance, have all too rarely led to the theoretical integration and interpretation of the results obtained, or to the construction of coherent and comprehensive voting theories with high explanatory power and accuracy. The disadvantages of the apparent dominance of empiricism over theory were pointed out by advocates of formalist approaches, among whom rational choice theorists led the way. In the hyperfactualism of voter turnout research, they saw a departure from the standard paths of scientific knowledge development, familiar from more methodologically advanced sciences such as physics, biology or economics. As a result, they attempted to construct a “single, systematic, rigorous, and parsimonious explanation” (Blais et al., 2000, p. 181) that would integrate existing partial explanations in the literature, showing how a range of different types of factors affect voter participation and abstention.

A pioneering example of the use of rational choice theory in the study of voter turnout is the standard calculus of voting model, the foundation for which was laid by Downs (1957). It assumes the investment nature of elections, meaning that individuals vote when the expected utility of participating in elections is positive, i.e., when the personal benefits exceed the costs. Such an account, however, corresponded very poorly with empirical reality, so it required modifications and additions. Riker and Ordeshook (1968), for example, added to the standard calculus of voting a factor denoting the following consumption benefits: the satisfaction of confirming one’s political preferences, the satisfaction of acting in accordance with the ethics of voting, the satisfaction of confirming one’s affiliation and loyalty to the political system, the satisfaction of deciding to participate in voting, and the satisfaction of confirming one’s self-efficacy in the democratic system. Fiorina (1976) and Aldrich (1997) did the same, but with the difference that they clearly separated the benefits obtained from voting determined by a sense of civic duty from the benefits obtained from voting determined by expressive motivation. Tullock (1967), Edlin et al. (2007), and Jankowski (2015) included social benefits in the standard calculus of voting. A slightly different path was taken by Cebula et al. (2003), who captured the benefits in the form of a function indicating the relevance of such determinants as the importance of the office involved in a given election, the desire for change and the assessment of the functional effectiveness of a given political system.

Some of the modifications mentioned above were made to the standard calculus of voting by using the de-idealisation method. A particularly interesting case is Jankowski’s theory of voting, built on the gradual removal of quasi-idealising assumptions.

4.2 Jankowski’s models

Jankowski (2015) has set himself a very ambitious task. He set out to create a voting calculus that would explain the following empirical facts about voting behaviour in the United States:

- reductions in voting costs tend to lead to a higher level of voter turnout (Li et al., 2018);
- voter turnout varies according to the election level. Data from recent decades show that some 50–60% of eligible voters vote during presidential election years, approx. 40% in midterm elections and approx. 25–30% in off-year elections for state legislators and local elections (Shaw & Petrocik, 2020);
- better educated and more informed voters manifest a higher propensity to vote (Gallego, 2010);
- voters tend to abstain from voting in some elections on the ballot selectively (roll-off phenomenon) (Frisina et al., 2008; Wattenberg et al., 2000). Typically, the roll-off includes the elections from the bottom part of the ballot, although the reverse is sometimes the case (Knack & Kropf, 2003);
- voters often vote strategically, i.e., they shift their support to the second-most-preferred candidate if their most-preferred candidate has little chance of winning (Burden & Jones, 2009).

4.2.1 Basic model

Jankowski began modelling by including in the standard calculus of voting the probability that a voter’s vote will either lead to a tie between two candidates (in case one of them has a plurality of one vote) or break the tie between them. After adding a fifty per cent chance that the candidate preferred by the voter will win, Jankowski’s basic model took the following form:

\[{EU}_{i}=\frac{{p}_{1}{B}_{1}}{2}-C \tag{1}\]

where \({EU}_{i}\) stands for the expected utility of voting for the voter \(i\), \({p}_{1}\) is the probability of the voter \(i\) casting the deciding vote for the preferred candidate’s victory, \({B}_{1}\) stands for the personal benefits of the preferred election outcome, and \(C\) represents the costs of voting.

Equation (1) implies the instrumental nature of elections. A rational individual votes only when the expected utility of participating in elections is positive, that is, when the personal and self-interested benefits of making a particular choice outweigh the costs of acquiring, processing and assimilating information about candidates and political parties and the costs of having to go to the polling station and cast a ballot,Footnote 14 which can be formally expressed as follows: \(\frac{{p}_{1}{B}_{1}}{2}>C\). However, voting utility estimated in this way is only relevant if there is a high probability that a particular voter’s vote will affect the final outcome of the election. In fact, the probability of a decisive vote is infinitesimally small,Footnote 15 so that, in effect, \(\frac{{p}_{1}{B}_{1}}{2}<C\). Thus, Eq. (1) predicts voter abstention, which starkly contrasts with empirical reality. The inconsistency between theory and the level of real turnout rates is known as the “paradox of (not) voting” (Mueller, 2003).
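
The arithmetic behind the paradox can be checked with a short Python sketch of the voting condition \(\frac{{p}_{1}{B}_{1}}{2}>C\). The numerical values below are hypothetical and chosen only for illustration; they are not taken from Jankowski (2015) or from any empirical study, and the function names are likewise assumptions.

```python
def expected_utility(p1, b1, cost):
    """Expected utility of voting in the basic model: p1 * B1 / 2 - C."""
    return p1 * b1 / 2 - cost


def votes(p1, b1, cost):
    """A rational voter in the basic model votes only if the expected utility is positive."""
    return expected_utility(p1, b1, cost) > 0


# Hypothetical large electorate: a tiny chance of casting the decisive vote,
# a large personal benefit, and a small cost of voting.
p1, b1, cost = 1e-7, 10_000.0, 1.0
print(expected_utility(p1, b1, cost))  # negative: the cost dominates
print(votes(p1, b1, cost))             # False: the model predicts abstention

# Hypothetical two-person committee: the vote is very likely to be decisive.
print(votes(0.5, 10.0, 1.0))           # True: the benefit term dominates
```

With any realistic value of \({p}_{1}\) in a mass election, even a very large \({B}_{1}\) fails to offset a modest \(C\), which is the pattern the basic model cannot reconcile with observed turnout.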

Jankowski began building the theory with a decisive deformation of reality.Footnote 16 His first, most idealised model is based on many quasi-idealising assumptions. Here are the most important ones:

(a1) an individual makes a choice between only two candidates;

(a2) the sense of civic duty does not affect the voting decision;

(a3) altruistic motivation does not influence the decision to vote;

(a4) the probability of the influence of elected officials on legislation is irrelevant to the decision to vote;

(a5) the degree of certainty as to the knowledge regarding the candidates’ policy positions is not relevant to the voting decision.Footnote 17

In an idealisational reconstruction, the basic model can be represented using the following statement:

Statement (2) contains a series of quasi-idealising assumptions that determine the conditions for the applicability of the model theory. This statement will only be non-emptily fulfilled if we assume the non-influence of secondary factors.

As a result of the idealisation method, Jankowski obtained a highly simplified picture of the analysed phenomenon. According to the basic model, only the calculation of personal benefits and costs, related to the likelihood of a decisive vote, lies at the heart of voter participation and abstention. Such a reduced view of voting has its strengths and weaknesses. On the one hand, it allows researchers to focus on the aspect of the analysed phenomenon that interests them, leaving out, permanently or only temporarily, factors considered secondary. Isolating the object of study from the thicket of extremely complex networks of relationships and dependencies can provide considerable cognitive benefits. One of the key ones seems to be a better understanding of the nature of the modelled system. On the other hand, the omission of multiple factors and of the relationships between them usually results in low explanatory power of models in the social sciences. As a result, the inconsistency between theory and reality is large enough to generate numerous tensions and problems, ultimately prompting researchers to modify or abandon the adopted theoretical perspective. The de-idealisation method used by Jankowski provides one possibility for modifying the theoretical assumptions made.

4.2.2 Civic duty model

The narrow (economic) view of rationality implied by the basic model is of limited use in the study of voting behaviour. It usually provides a starting point for building more detailed theories that better describe how humans function within complex networks of political dependencies (Geys, 2006). The inconsistency between theory and reality motivates researchers to move away from conceptions that describe individuals as if they were omniscient calculators and to construct more adequate models. Jankowski also followed this path, de-idealising the basic model. He assumed, following the example of Riker and Ordeshook (1968), that a sense of civic duty (\(D\)) can influence the decision to vote. He thereby transformed Eq. (1) into the form:

The reconstructed model of civic duty takes the form of the following statement:

By including the \(D\) factor in the basic model, which is independent of the probability of casting a decisive vote, a consumption component was added to the investment component of voting. Jankowski noted that two categories of civic duty could determine the decision to vote. He called one a strong duty and the other a weak duty. Strong duty is a kind of moral obligation that is independent of the cost of voting. Weak duty, by contrast, is the satisfaction an individual derives from fulfilling their moral obligations, and it depends on the cost of voting. Jankowski said that the \(D\) factor solves the main problem of the basic model, i.e., its failure to account for the prevalence of positive turnout rates, but does so at the expense of the predictive power of the rational choice hypothesis. Another feature of the model expressed by Eq. (3), which is of considerable importance for Jankowski’s analysis, is its inability to explain either strategic voting or the variation in the level of voter turnout between different types of elections.
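The role of \(D\) can be illustrated numerically. The sketch below assumes the additive form familiar from Riker and Ordeshook (1968), that is, \(\frac{{p}_{1}{B}_{1}}{2}-C+D\). The additive form, the function name and all values are assumptions made for illustration only.

```python
def expected_utility_civic_duty(p1: float, b1: float, cost: float, duty: float) -> float:
    """Assumed additive form of the civic duty model: p1 * B1 / 2 - C + D."""
    return p1 * b1 / 2 - cost + duty


# Hypothetical large electorate, first without and then with a sense of duty.
without_duty = expected_utility_civic_duty(p1=1e-7, b1=10_000.0, cost=1.0, duty=0.0)
with_duty = expected_utility_civic_duty(p1=1e-7, b1=10_000.0, cost=1.0, duty=2.0)

# D enters independently of p1, so it shifts the result by the same amount
# whether a decisive vote is very unlikely or very likely.
shift_low_p1 = with_duty - without_duty
shift_high_p1 = (
    expected_utility_civic_duty(p1=0.5, b1=10_000.0, cost=1.0, duty=2.0)
    - expected_utility_civic_duty(p1=0.5, b1=10_000.0, cost=1.0, duty=0.0)
)

print(without_duty < 0, with_duty > 0)
print(round(shift_low_p1, 6), round(shift_high_p1, 6))
```

Under this assumed form, a sufficiently strong sense of duty makes the result positive however small \({p}_{1}\) is, which is consistent with the point that the model cannot account for behaviour that responds to the chance of being decisive.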

4.2.3 Altruistic model

The \(D\) factor clearly indicates the motivation for voting. A voter driven by a strong sense of civic duty will vote, even if both the private and social benefits of a particular choice are small. Thus, the fulfilment of civic duty is not dependent on either concern for personal welfare or concern for the welfare of others. However, the situation is different if we assume that the voter can be influenced by altruistic motivation. Individuals for whom the well-being of other members of the political community is important will choose to vote only if they believe that the political platform of one of the candidates is socially preferable and the altruistic benefit is sufficiently large (Fowler, 2006).

The limitations of the civic duty model, mainly its difficulty in explaining the variation in turnout between different types of elections, led Jankowski to assume that the benefits a voter receives “from seeing others’ increased happiness through the adoption of candidate A’s platform relative to that of candidate B” (Jankowski, 2015, p. 18) may influence voting decisions. Jankowski again de-idealised the basic model, introducing the \({B}_{2}\) factor denoting pure altruistic benefits.

As a result of de-idealisation, Eq. (1) was transformed into the following form:

The idealisational reconstruction of the altruistic model takes the form of the following statement:

According to the altruistic model, individuals can vote based on their concerns for the happiness of others. The choice of a particular candidate is then dictated by their political platform—an individual chooses a candidate whose platform provides more assistance to those in need than that of an alternative candidate. Including the \({B}_{2}\) factor in the calculus of voting, as Jankowski explicitly emphasised, offsets the low probability of casting a decisive vote, thus providing the basis for obtaining a calculation in which the expected utility of voting will be positive.

The inclusion of altruistic motivation in the calculus of voting, in particular, gave Jankowski the basis for explaining the differences in turnout between different levels of elections, specifically, the higher turnout in federal elections compared to state and local elections. Jankowski noted that the social demands contained in the political platforms of candidates running in federal elections can influence the lives of many more people than the social demands proclaimed by candidates running for office at lower levels of the political system. A voter motivated by altruism will thus benefit more by electing a president or congressman than by participating in the election of a governor or state senator. However, this reasoning has a fundamental flaw, as it does not explain the differences in turnout rates between presidential and midterm election years.

The altruistic motivation embodied in Eq. (5) further allowed Jankowski to explain strategic voting and the relationship between voting costs and voter turnout. People shift their support to their second-most preferred candidate if their most preferred candidate has little chance of winning. The change in voting preferences is, therefore, significantly related to the probability of being decisive, and for this reason, as Jankowski pointed out, it cannot be explained by the civic duty model, which predicts that, given a strong enough influence of \(D\), an individual will vote regardless of the possible outcome of the election, self-interest and costs incurred. The independence of \(D\) from the cost of information means that the civic duty model also fails to explain why reducing the cost of information increases voter turnout. People may be more likely to vote in federal elections than in state and local elections because, in the former, the cost of being informed is relatively lower.Footnote 18 They vote despite the fact that \(\frac{{p}_{1}{B}_{1}}{2}<C\). However, according to Jankowski, this fact becomes understandable only after pure altruism is included in the analysis.

4.2.4 Uncertainty model

Finally, Jankowski noted that voters are also affected by types of uncertainty other than the uncertainty generated by the probability of casting a decisive vote. These further types of uncertainty can affect voting behaviour and are due to the nature of the process of enacting and implementing policy decisions. First, the voter has no guarantee that the candidate for whom they cast their vote will be able (and willing) to implement their election program. Second, this state of uncertainty is exacerbated by the scarcity of information on candidates’ policy positions. To account for these types of uncertainty in the analysis, Jankowski simultaneously introduced two factors into Eq. (5): \({p}_{2}\), which denotes the probability that the elected politician will have a decisive influence on the legislative process, and \(\varphi\), which refers to the degree of certainty of an individual’s knowledge of the candidates’ policy positions. With these changes, the final voting equation took the form:

In an idealisational reconstruction, the uncertainty model can be expressed by the following statement:

Linking \({p}_{2}\) and \(\varphi\) to \({B}_{2}\) provided a basis for explaining the differences in voter turnout rates between presidential and midterm election years. According to Jankowski, the higher turnout in the former may be due to two reasons. First, the president has much more power to shape policies than individual senators and House members who make decisions collegially. By casting a vote in presidential elections, voters are more confident that their candidate will be able to deliver on their election promises once in office. Second, the cost of acquiring information is much lower for presidential elections, allowing voters to reduce uncertainty due to insufficient knowledge of candidates’ policy positions. There is also another explanation for the discussed differences in turnout, which does not refer to the strength of the office’s influence on policy:

In presidential election years voters elect a president, a House representative, and periodically a Senate member. In off-year elections [midterm elections—M.W.], they elect a House member and, with the same frequency, a Senate member. Hence, their probability of affecting legislation is highest in presidential election years because of the extra office (the presidency) that individuals vote for (Jankowski, 2015, p. 21).

Linking \(\varphi\) to \({B}_{2}\) further created an opportunity for Jankowski to explain two other empirical facts: the greater propensity of better-educated and informed people to vote and the roll-off phenomenon. According to the assumptions of the model expressed in Eq. (7), the reduction in uncertainty generated by insufficient knowledge of candidates’ policy positions increases the level of voter turnout. Increased knowledge, however, results in a greater propensity to vote only if, as Jankowski strongly emphasised, individuals go to the polling stations motivated not by their own self-interest but by the welfare of others. The chance that well-informed but self-interested voters will vote is extremely low because, in their calculations, the costs of voting still exceed the expected benefits. A similar situation occurs in the case of roll-off voting. Individuals vote more often in elections located at the top of the ballot. To explain this fact, according to Jankowski, it is not enough simply to observe that the elections at the top of the ballot tend to have lower information acquisition costs than those at the bottom of the ballot. Information costs become a significant explanatory factor only with the assumption that the welfare of other people is significant to voters.

4.2.5 Relationships between the models

The reconstructed theory of voting shows that one of the most cognitively fruitful methods of theorising is the idealisation of the analysed reality. The application of the idealisation method may result from numerous factors, such as the limitations of human brains and minds, concerns about the mathematical feasibility of the model, or the desire to focus on a specific causal pattern. A modeller wishing to better know and understand a selected fragment of reality does not consider it in all possible aspects but concentrates on those features and relationships that they consider most significant from the point of view of the adopted theoretical perspective, while permanently or only temporarily overlooking features and relationships to which they ascribe less importance. My findings, therefore, support the notion that the objective of model-based theorising is not the most accurate representation of the analysed phenomenon but its appropriate deformation. For this reason, the modeller’s work resembles the creative activity of a caricaturist, who greatly simplifies and distorts the image of reality, more than the efforts of a photographer to depict the chosen object in all its details.

According to ITS, the idealisation method marks the first stage of scientific inquiry. In stage two, the de-idealisation method is used. The acceptable level of idealisation of a theory depends on many factors. It is relativised to a particular field; in addition, it can be influenced by cognitive needs emerging in a particular scientific community at a particular time, the level of methodological development of a particular field, or the degree of competition in the market for the exchange of ideas (pressure exerted by alternative theories). Therefore, if, for some reason, a theory no longer meets the expectations formulated for it, e.g., it does not explain the analysed phenomenon as well as the competing theory, the models of that theory can be made more specific. The process of making a deformed reality more realistic occurs by introducing previously omitted features and relationships into the model. When it proceeds gradually, the theory becomes a sequence of models that differ in the number of idealising assumptions made. This is how Jankowski’s theory of voting is structured.

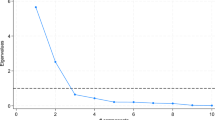

Jankowski’s theory is a combination of a star-shaped system and a linear system. The basic model assumes the greatest number of simplifications and is, therefore, the starting point in theory building. According to it, the main determinant of voting is the desire to maximise narrowly (economically) understood utility. Voters appear in this view as omniscient calculators. Jankowski omits factors in the basic model whose impact on the analysed magnitude he considers secondary. These include social and psychological factors. Simplifying assumptions are emptily fulfilled in the empirical world, in which the analysed phenomenon is affected by both principal and secondary determinants. However, this does not mean that the use of the idealisation method is unwarranted or cognitively impoverishing. Its main advantage is that it makes it possible to isolate the analysed magnitude from the multitude of complex conditions and relationships and show how this magnitude depends on its main factor. The inconsistency between theory and reality prompted Jankowski to begin the second stage of modelling, which involved gradually removing counterfactual assumptions and taking into account secondary factors. Figure 1 reflects the process of gradual de-idealisation, which determines the relationship between the models of Jankowski’s theory.

First, Jankowski removed the quasi-idealising assumption that a sense of civic duty does not influence the decision to vote. The introduction of factor \(D\) into the basic model allowed him to address the main problem of standard rational-actor voting models, namely their failure to account for the prevalence of positive turnout. The civic duty model provides a basis for explaining the electoral participation of a sizable portion of the American public, but it does not explain other empirical facts regarding the behaviour of American voters. For this reason, Jankowski returned to the basic model and removed another quasi-idealising assumption. He made the basic model more realistic by introducing altruistic motivations. As a result, he obtained an altruistic model in the form of Eq. (5). Letting pure altruism influence the decision to vote allowed Jankowski to explain the variation in turnout between different levels of elections, strategic voting, and the effect of reducing voting costs on voter turnout. In the final modelling phase, Jankowski removed two further quasi-idealising assumptions simultaneously. He introduced into Eq. (5) the probability that an elected politician will have a decisive influence on the legislative process and the degree of certainty of an individual’s knowledge of candidates’ policy positions. Linking \({p}_{2}\) and \(\varphi\) to \({B}_{2}\) provided a basis for explaining differences in turnout rates between presidential and midterm election years, the greater propensity of better-educated and informed people to vote, and the roll-off phenomenon.
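The relationships just described can be sketched as a small data structure. The Python example below records, for each model, the model it was derived from and the assumptions removed at that step, and then computes which of the assumptions (a1) to (a5) remain in force. The class, the identifiers and the mapping of steps to assumption labels are an illustrative reading of the description above and are not notation used by Jankowski or by ITS.

```python
from dataclasses import dataclass
from typing import Optional

# Labels for the quasi-idealising assumptions (a1)-(a5) of the basic model.
BASIC_ASSUMPTIONS = frozenset({"a1", "a2", "a3", "a4", "a5"})


@dataclass(frozen=True)
class Model:
    name: str
    parent: Optional[str]  # model that was de-idealised; None for the basic model
    removed: frozenset     # assumptions removed at this step


# Star-shaped part: two models derived directly from the basic model.
# Linear part: the uncertainty model derived from the altruistic model.
MODELS = {
    "basic": Model("basic", None, frozenset()),
    "civic_duty": Model("civic_duty", "basic", frozenset({"a2"})),
    "altruistic": Model("altruistic", "basic", frozenset({"a3"})),
    "uncertainty": Model("uncertainty", "altruistic", frozenset({"a4", "a5"})),
}


def remaining_assumptions(name: str) -> frozenset:
    """Walk back to the basic model, collecting every removed assumption."""
    removed = set()
    current: Optional[str] = name
    while current is not None:
        model = MODELS[current]
        removed |= model.removed
        current = model.parent
    return BASIC_ASSUMPTIONS - removed


for model_name in MODELS:
    print(model_name, sorted(remaining_assumptions(model_name)))
```

On this reading, the uncertainty model retains only (a1) and (a2), because it descends from the altruistic model and not from the civic duty model.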

5 Gradual de-idealisation and scientific progress

The theoretical argumentation adopted by Jankowski gives rise to the hypothesis that each successive model of the theory more accurately represents the analysed phenomenon so that the theory achieves a higher and higher level of (relative) essential truthfulness. This hypothesis is confirmed, to varying degrees and extents, by the results of empirical studies.

The basic model, despite not explaining the key issue (i.e., the prevalence of positive turnout), is useful, to some extent, in explaining turnout at the margin, as shown, among other things, by a meta-analysis conducted by Cancela and Geys (2016), covering 185 studies published between 1968 and 2015. A meta-analytic assessment of the conditions of voter turnout indicated the relevance of factors such as campaign expenditures, election closeness, registration requirements, population size and composition, concurrent elections, and the electoral system. Thus, it indirectly confirms that the probability of casting a decisive vote and the instrumental benefits and costs can affect differences in voter turnout. However, this is not a confirmation of the explanatory utility of the entire model but only of its components, which act independently of each other. The explanatory difficulties of pure rational choice theory were pointed out by Dowding (2005, p. 446):

There is no doubt that the marginal defence of rational choice has some merit. The simple equation does point to influences on marginal changes in turnout. But, at the end of the day, it only shows that the variables in the simple equation matter, not that when people vote they do so rationally.

Empirical studies show that a sense of civic duty (e.g., Ashenfelter & Kelley, 1975; Blais & Achen, 2019; Blais et al., 2000; François & Gergaud, 2019; Knack, 1994; Riker & Ordeshook, 1968) and uncertainty (e.g., Bartels, 1986; Gant, 1983; Sanders, 2001) can be important predictors of individual voter turnout. People often feel obliged to vote. In doing so, they believe they are fulfilling their duty to the political system. By participating in the democratic procedure for selecting political representation, they affirm their membership in the political community and express their commitment to democratic values, which underlie the legal norms governing the functioning of society and the state. Reduced uncertainty about candidates also often influences their decision to vote.

Empirical findings also support the introduction of altruistic motivation into the analysis. They suggest that for some voters, social benefits may be significant. Knack (1992), for example, using 1991 National Election Study (NES) data, identified a positive relationship between social altruism (charity, volunteerism, etc.) and voter turnout. Fowler (2006) conducted a laboratory experiment in which he used the dictator game to measure the altruism of individuals. The results of the experiment showed that people often choose to incur costs to improve the situation of others. Altruistic behaviour was correlated with reported voting. A positive relationship between altruism and turnout was also found by Jankowski (2007, 2015), analysing data from the 1995 NES Pilot Study. Questions measuring the humanitarian values of the respondents formed the basis of his analysis.

The empirical results thus warrant a cautious conclusion that Jankowski’s gradual removal of simplifying assumptions is probably related to the occurrence of cognitive scientific progress. Building more adequate models allows Jankowski to formulate better and better explanations. Each successive (less idealised) model of the theory explains the same facts as the preceding (more idealised) model as well as new facts. Thus, in the course of the theory’s development, there is no loss of explanatory power but rather a strengthening of it.

The reconstruction of Jankowski’s theory points to some limitations of using ITS to study scientific progress. ITS assumes that idealisations apply only to secondary factors. According to this assumption, only the most important factors from the theoretical perspective adopted should be adequately represented in the basic model. However, it turns out that in many physical and social systems, principal factor analysis is not a sufficient condition for satisfactory prediction of the phenomenon’s future states, even when the phenomenon occurs with such regularity as elections. This is because the influence of the principal factor can be noticeably smaller than the summary effect of secondary factors. It would therefore be a mistake to underestimate the influence of secondary factors, especially since many complex systems are characterised by great sensitivity to small perturbations, with the result that small causes generate in them, from time to time, unimaginably large effects (Brzechczyn, 2020; Ladyman & Wiesner, 2020).

Cognitive scientific progress, realised through the application of the de-idealisation method, can occur within a single field or at the intersection of two or more fields. In scenario one, the process of removing successive simplifying assumptions leads to the development of intra-field theories. This situation is reflected, among other things, in the often described and analysed transition from the simple pendulum model to more detailed models (Morrison, 2015). Scenario two is more complicated, as it involves changing the nature of the theory. According to it, the use of the de-idealisation method results in inter-field theories. Jankowski’s theory of voting illustrates this case perfectly. It points to the epistemic advantages of creating dense theoretical networks within the social sciences. Crossing the historical boundaries of disciplines and building bridges between them is particularly important in an era of rapidly increasing specialisation and the dynamic diversification of science.

The basic model of Jankowski’s theory assumes an economic view of rationality, according to which individuals are guided by narrow self-interest. They do the best they can for themselves, that is, maximise their utility. In this view, a rational voter is an egoist who: (1) is able to make a decision when faced with multiple alternatives; (2) ranks the alternatives according to which one is preferred to the other; (3) has a transitive preference ranking (if A is better than B and B is better than C, then A must be better than C); (4) chooses among the alternatives that are in the top spots of the preference ranking; and (5) will always make the same decision under unchanged conditions (Downs, 1957). While the inclusion of motivations other than purely self-interested ones in the basic model increases the potential explanatory and predictive power of the model, it also significantly reduces the cognitive value of the rational voter hypothesis, as Mueller (2003, p. 306) aptly pointed out, using the following market metaphor:

Any hypothesis can be reconciled with any conflicting piece of evidence with the addition of the appropriate auxiliary hypothesis. If I find that the quantity of Mercedes autos demanded increases following an increase in their price, I need not reject the law of demand, I need only set it aside, in this case by assuming a taste for “snob appeal.” But in so doing I weaken the law of demand, as a hypothesis let alone as a law, unless I have a tight logical argument for predicting this taste for snob appeal.

The process of expanding the scope of the concept of rationality brings rational choice explanation dangerously close to tautology. The theorist’s recognition that any action can bring an individual utility makes it possible to build an account according to which voting will be “rational” (the benefits of voting will outweigh the costs) regardless of the type of motivation. The most effective yet simple and theoretically parsimonious solution to the inconsistency between the rational choice hypothesis and the nature of de-idealised models seems to be to stop clinging to an economic view of rationality and to assume that one of the key consequences of Jankowski’s use of the de-idealisation method is a change in the theoretical perspective of model interpretation. In its first and most basic model, Jankowski’s theory exemplifies the application of the rational choice paradigm developed by neoclassical economists to political science research. However, the introduction of even the first secondary factor into the basic model significantly modifies the rational choice framework. It transforms Jankowski’s theory from a conception on the borderline between economics and political science into one with a significant psychological component. De-idealisation, by virtue of which a sense of civic duty was included among the determinants of voting, therefore sets the borders of applicability of the rational choice paradigm.Footnote 19

My investigation shows that conceptions that assume the reversibility of the idealisation process can be an important and fruitful theoretical perspective in reconstructions of political science modelling and analyses of cognitive progress in political science. The accumulation of theoretical knowledge occurs in political science not only “horizontally”, i.e., by building many different and not always consistent models of the same phenomenon, but also “vertically”, i.e., through linear development, when statements with fewer simplifications replace statements containing more simplifications. In the second case, a key role is played by gradual de-idealisation, i.e., a procedure that enables the transition from coarse-grained models to fine-grained models. “Vertical” cognitive development can be interpreted using a variety of theoretical categories, depending on the view of scientific progress adopted by the methodologist in the reconstruction being carried out. According to the ITS-implied view used in my study, improvements toward less idealised models entail an increase in the degree of essential truthfulness of the theory.

6 Summary

In this article, I presented and discussed the results of the methodological reconstruction of Jankowski’s theory of voting. This reconstruction allows us to draw several cautious conclusions about the role of the gradual de-idealisation procedure in the processes of political science theory building and the occurrence of cognitive progress in political science. However, it should be noted that my findings are based on a single case study; therefore, they are insufficient to justify a full-fledged methodological claim. They require many additions and confirmation by reconstructions of theories from areas of political science research other than voting behaviour. The main findings and conclusions of the investigation are summarised as follows:

- the methodological reconstruction of Jankowski’s theory showed that conceptions assuming the reversibility of the idealisation process can be a very fruitful theoretical perspective in the analysis of political science modelling and cognitive progress in political science;

- in reconstructing Jankowski’s theory, I applied the ITS, a conception that appeals to essentialism. ITS assumes that more complex models, i.e., those including fewer idealisations, better represent the target phenomena than simpler and more idealised models. The epistemic preference of the former over the latter is determined by a higher level of adequacy, giving rise to the construction of better explanations;

- the choice of the theoretical perspective of the reconstruction carried out was dictated by the nature and structure of Jankowski’s theory. ITS is only fully applicable in the analysis of theories formed by the gradual removal of simplifying assumptions;

- in the case of Jankowski’s theory, the transition from more idealised models to less idealised models probably marks the line of scientific progress. Statements that better identify the secondary factors of the analysed phenomenon are characterised by a higher degree of (relative) essential truthfulness;

- the shift from the coarse-grained level of modelling to the fine-grained level has resulted in a change in the study’s theoretical perspective. Jankowski began building the theory with a model embedded in the classical rational choice paradigm. Successive de-idealisations have led to a theory that combines economic and political science approaches with sociological and psychological approaches;

- Jankowski’s basic model has different functions in the theory than derived models. It focuses on a single causal pattern, effectively deforming the analysed phenomenon to the highest degree. However, the simplicity of the basic model is not due to chance but is dictated by specific theoretical needs. This circumstance calls into question the strong criticism faced by the classical rational choice theory;

- the results of my study support the notion that the finding of the usefulness or lack of usefulness of conceptions that assume the reversibility of the idealisation process in the reconstructions of theories should not be the basis for overarching generalisations. The sheer number of modelling strategies and techniques means that each case requires a separate analysis;

- the reconstruction of the theory is a procedure that requires the adoption of multiple simplifications. In analysing Jankowski’s theory, I focused solely on the methodological aspect while ignoring other aspects and conditions, such as institutional or social ones. Adequate recognition of the influence of the institutional or social environment on the modelling of political phenomena will require detailed research in the sociology of knowledge. Perhaps not only cognitive and methodological factors limit the use of the gradual de-idealisation method, but also the institutional organisation of science and social expectations towards researchers. The tendency of part of today’s scientific community to follow periodic research trends (taking up issues that increase the chance of publication success and, in prospect, career advancement), the strong emphasis on the originality of the research conducted, and ever-increasing non-scientific responsibilities make it much more difficult to gain an in-depth understanding of the analysed fragments of reality.Footnote 20

Notes

In this article, I focus only on internal progress, i.e., the changes taking place inside certain research traditions (research programs or paradigms). However, I leave the global progress of a discipline outside the scope of my interest (see Dryzek, 1986).

The scant interest of philosophers of the social sciences in issues concerning the methodological aspects of building theoretical models of political phenomena seems to be a consequence of the peculiar approach of quite a few political scientists to the relationship between empirical research and the theoretical development of their own discipline. The essence of this approach is hyper-empiricism, as aptly pointed out by Goldfarb and Sigelman (2010, p. 295): “The discipline of political science is far less paradigm-driven than economics, and political scientists tend to be motivated, first and foremost, by the desire to provide convincing accounts of specific behaviors and outcomes. For the most part, then, political scientists start with a puzzle and look around for plausible solutions. Their explanations are likely to be amalgams of intuitions, interpretations of previously reported empirical results, and scraps of theory borrowed from various neighboring disciplines (especially psychology and economics).”

In the literature, we can find different approaches to the method of idealisation. See Shech (2023) for a summary and overview of major approaches.

The image of the space of essential factors for F is different from the space of factors essential for F. The first concept means a construct created by the researcher extracting, more or less accurately, the determinants of the analysed magnitude. The second concept, on the other hand, involves the actual set of determinants of the analysed magnitude.

The image of the essential structure of F is a construct created by the researcher, while the essential structure of F is the actual hierarchisation of the power of the influence of individual factors on the analysed magnitude F.

However, this claim is correct provided one adopts a definition of the concept of theory broad enough to include “anything that could be truthlike according to one’s definition of truthlikeness” (Dellsén et al., 2022, p. 22). For details and discussion see, e.g., Cevolani and Tambolo (2013) and Rowbottom (2023).

A problem for the epistemic approach is that progress can occur in the transition from one false theory to another that is also false. Bird (2007, 2016) tried to solve it by proposing a strategy for transforming false theories into true theories and pointing out the importance of accumulating the true consequences of accepted false theories. See Rowbottom (2008), Cevolani and Tambolo (2013), Niiniluoto (2014), Dellsén (2018) and Saatsi (2019) for more detailed discussions of this and related issues.

According to ITS, the nomological structure of the analysed magnitude F in scope Z is a hierarchy of the dependencies of F on essential factors (Nowak, 1977).

The process by which an idealisational theory acquires a progressively higher degree of essential truthfulness is explained in ITS through the lens of a three-stage model of cognitive development. The period of prescientific cognition ends with the discovery of partial truth regarding the studied phenomenon, while the discovery of relative truth ends the period of pretheoretical science and is followed by the period of mature science (Nowakowa, 1992a).

Explanatory power is defined by “the proportion of facts explained by the theory to the facts in a given domain”, while explanatory accuracy is defined by “the difference between the theoretical and the empirical value of a given magnitude under explanation” (Nowak, 2012, pp. 38, 39).

Information costs tend to be higher than the direct costs of participating in elections.

This is especially true for large populations.

Jankowski shows no methodological awareness of the use of idealisation and de-idealisation methods.

The quasi-idealising assumptions of the basic model ignore influences that have been repeatedly discussed in the literature. Downs himself already pointed out the relevance of benefits other than strictly self-interested ones. He noted, for example, that a rational voter is willing to participate in elections even when, in the short term, the costs of that participation are higher than the benefits. The reason for this is the long-run participation value that is associated with the existence of democracy: “The advantage of voting per se is that it makes democracy possible. If no one votes, then the system collapses because no government is chosen. We assume that the citizens of a democracy subscribe to its principles and therefore derive benefits from its continuance; hence they do not want it to collapse. For this reason they attach value to the act of voting per se and receive a return from it” (Downs, 1957, pp. 261–262). Downs also pointed to the potential importance of altruistic motivation: “It is possible for a citizen to receive utility from events that are only remotely connected to his own material income. For example, some citizens would regard their utility incomes as raised if the government increased taxes upon them in order to distribute free food to starving Chinese. There can be no simple identification of “acting for one’s own greatest benefit” with selfishness in the narrow sense because self-denying charity is often a great source of benefits to oneself. Thus, our model leaves room for altruism in spite of its basic reliance upon the self-interest axiom” (Downs, 1957, p. 37). He likewise pointed to uncertainty resulting from insufficient information: “Many citizens […] are uncertain about how to vote. Either they have not made up their minds at all, or they have reached some decision but feel that further information might alter it” (Downs, 1957, p. 85).

Greater availability of information reduces the cost of acquiring it.

See Goldfarb and Sigelman (2010) for much more detailed discussions on this and related issues.

The social and institutional limitations of using the gradual de-idealisation method were brought to my attention by Krzysztof Brzechczyn, for which I am grateful to him.

References

Aldrich, J. H. (1997). When is it rational to vote? In D. C. Mueller (Ed.), Perspectives on public choice: A handbook. Cambridge University Press.

Alexandrova, A. (2008). Making models count. Philosophy of Science, 75(3), 383–404. https://doi.org/10.1086/592952

Ashenfelter, O., & Kelley, S. (1975). Determinants of participation in presidential elections. The Journal of Law & Economics, 18(3), 695–733. https://doi.org/10.1086/466834

Bartels, L. M. (1986). Issue voting under uncertainty: An empirical test. American Journal of Political Science, 30(4), 709–728. https://doi.org/10.2307/2111269

Batterman, R. W. (2002). The devil in the details: Asymptotic reasoning in explanation, reduction, and emergence. Oxford University Press.

Batterman, R. W. (2009). Idealization and modeling. Synthese, 169(3), 427–446. https://doi.org/10.1007/s11229-008-9436-1

Bird, A. (2007). What is scientific progress? Noûs, 41(1), 64–86. https://doi.org/10.1111/j.1468-0068.2007.00638.x

Bird, A. (2008). Scientific progress as accumulation of knowledge: A reply to Rowbottom. Studies in History and Philosophy of Science Part A, 39(2), 279–281. https://doi.org/10.1016/j.shpsa.2008.03.019

Bird, A. (2016). Scientific progress. In P. Humphreys (Ed.), The Oxford Handbook of Philosophy of Science (pp. 544–563). Oxford University Press.

Blais, A., & Achen, C. H. (2019). Civic duty and voter turnout. Political Behavior, 41(2), 473–497. https://doi.org/10.1007/s11109-018-9459-3

Blais, A., Young, R., & Lapp, M. (2000). The calculus of voting: An empirical test. European Journal of Political Research, 37(2), 181–201. https://doi.org/10.1023/A:1007061304922

Bokulich, A. (2008). Reexamining the quantum-classical relation: Beyond reductionism and pluralism. Cambridge University Press.

Brzechczyn, K. (2020). The historical distinctiveness of Central Europe: A study in the philosophy of history. Peter Lang.

Burden, B. C., & Jones, P. E. (2009). Strategic voting in the US. In S. Bowler, A. Blais, & B. Grofman (Eds.), Duverger’s law of plurality voting: The logic of party competition in Canada, India, the United Kingdom and the United States (pp. 47–64). Springer.

Cancela, J., & Geys, B. (2016). Explaining voter turnout: A meta-analysis of national and subnational elections. Electoral Studies, 42, 264–275. https://doi.org/10.1016/j.electstud.2016.03.005

Carrillo, N., & Knuuttila, T. (2022). Holistic idealization: An artifactual standpoint. Studies in History and Philosophy of Science, 91, 49–59. https://doi.org/10.1016/j.shpsa.2021.10.009

Cassini, A. (2021). Deidealized models. In A. Cassini & J. Redmond (Eds.), Models and idealizations in science: Artifactual and fictional approaches (pp. 87–113). Springer International Publishing.

Cebula, R., McGrath, R., & Paul, C. (2003). A cost benefit analysis of voting. Academy of Economics and Finance Papers and Proceedings, 30(1), 65–68.

Cevolani, G., & Tambolo, L. (2013). Progress as approximation to the truth: A defence of the verisimilitudinarian approach. Erkenntnis, 78(4), 921–935. https://doi.org/10.1007/s10670-012-9362-y

Dellsén, F. (2016). Scientific progress: Knowledge versus understanding. Studies in History and Philosophy of Science Part A, 56, 72–83. https://doi.org/10.1016/j.shpsa.2016.01.003

Dellsén, F. (2018). Scientific progress: Four accounts. Philosophy Compass, 13(11), e12525. https://doi.org/10.1111/phc3.12525

Dellsén, F. (2021). Understanding scientific progress: The noetic account. Synthese, 199(3), 11249–11278. https://doi.org/10.1007/s11229-021-03289-z

Dellsén, F. (2023). The noetic approach: Scientific progress as enabling understanding. In Y. Shan (Ed.), New philosophical perspectives on scientific progress (pp. 62–81). Routledge.

Dellsén, F., Lawler, I., & Norton, J. (2022). Thinking about progress: From science to philosophy. Noûs, 56(4), 814–840. https://doi.org/10.1111/nous.12383

Dowding, K. (2005). Is it rational to vote? Five types of answer and a suggestion. The British Journal of Politics and International Relations, 7(3), 442–459. https://doi.org/10.1111/j.1467-856X.2005.00188.x

Downs, A. (1957). An economic theory of democracy. Harper & Row.

Dryzek, J. S. (1986). The progress of political science. The Journal of Politics, 48(2), 301–320. https://doi.org/10.2307/2131095

Edlin, A., Gelman, A., & Kaplan, N. (2007). Voting as a rational choice: Why and how people vote to improve the well-being of others. Rationality and Society, 19(3), 293–314. https://doi.org/10.1177/1043463107077384

Elgin, M., & Sober, E. (2002). Cartwright on explanation and idealization. Erkenntnis, 57(3), 441–450. https://doi.org/10.1023/A:1021502932490

Fiorina, M. P. (1976). The voting decision: Instrumental and expressive aspects. The Journal of Politics, 38(2), 390–413. https://doi.org/10.2307/2129541

Fowler, J. H. (2006). Altruism and turnout. The Journal of Politics, 68(3), 674–683. https://doi.org/10.1111/j.1468-2508.2006.00453.x

François, A., & Gergaud, O. (2019). Is civic duty the solution to the paradox of voting? Public Choice, 180(3), 257–283. https://doi.org/10.1007/s11127-018-00635-7

Frisina, L., Herron, M. C., Honaker, J., & Lewis, J. B. (2008). Ballot formats, touchscreens, and undervotes: A study of the 2006 midterm elections in Florida. Election Law Journal: Rules, Politics, and Policy, 7(1), 25–47. https://doi.org/10.1089/elj.2008.7103

Gallego, A. (2010). Understanding unequal turnout: Education and voting in comparative perspective. Electoral Studies, 29(2), 239–248. https://doi.org/10.1016/j.electstud.2009.11.002

Gant, M. M. (1983). Citizen uncertainty and turnout in the 1980 presidential campaign. Political Behavior, 5(3), 257–275. https://doi.org/10.1007/BF00988577

Geys, B. (2006). ‘Rational’ theories of voter turnout: A review. Political Studies Review, 4(1), 16–35. https://doi.org/10.1111/j.1478-9299.2006.00034.x

Godfrey-Smith, P. (2009). Abstractions, idealizations, and evolutionary biology. In A. Barberousse, M. Morange, & T. Pradeu (Eds.), Mapping the future of biology (pp. 47–56). Springer.

Goldfarb, R. S., & Sigelman, L. (2010). Does ‘civic duty’ ‘solve’ the rational choice voter turnout puzzle? Journal of Theoretical Politics, 22(3), 275–300. https://doi.org/10.1177/0951629810365798

Jankowski, R. (2007). Altruism and the decision to vote: Explaining and testing high voter turnout. Rationality and Society, 19(1), 5–34. https://doi.org/10.1177/1043463107075107

Jankowski, R. (2015). Altruism and self-interest in democracies: Individual participation in government. Palgrave Macmillan.

Jebeile, J., & Kennedy, A. G. (2015). Explaining with models: The role of idealizations. International Studies in the Philosophy of Science, 29(4), 383–392. https://doi.org/10.1080/02698595.2015.1195143

Kennedy, A. G. (2012). A non representationalist view of model explanation. Studies in History and Philosophy of Science Part A, 43(2), 326–332. https://doi.org/10.1016/j.shpsa.2011.12.029

Knack, S. (1992). Social altruism and voter turnout: Evidence from the 1991 NES Pilot Study (nes002294; NES Pilot Study Report).

Knack, S. (1994). Does rain help the Republicans? Theory and evidence on turnout and the vote. Public Choice, 79(1), 187–209. https://doi.org/10.1007/BF01047926

Knack, S., & Kropf, M. (2003). Roll-off at the top of the ballot: Intentional undervoting in American presidential elections. Politics & Policy, 31(4), 575–594. https://doi.org/10.1111/j.1747-1346.2003.tb00163.x

Knuuttila, T., & Morgan, M. S. (2019). Deidealization: No easy reversals. Philosophy of Science, 86(4), 641–661. https://doi.org/10.1086/704975

Krajewski, W. (1977). Idealization and factualization in science. Erkenntnis, 11(1), 323–339. https://doi.org/10.1007/BF00169860

Kuhn, T. S. (1962). The structure of scientific revolutions. University of Chicago Press.

Ladyman, J., & Wiesner, K. (2020). What is a complex system? Yale University Press.

Laudan, L. (1977). Progress and its problems: Toward a theory of scientific growth. University of California Press.

Lawler, I. (2023). Scientific progress and idealisation. In Y. Shan (Ed.), New philosophical perspectives on scientific progress (pp. 332–354). Routledge.

Li, Q., Pomante, M. J., & Schraufnagel, S. (2018). Cost of voting in the American states. Election Law Journal: Rules, Politics, and Policy, 17(3), 234–247. https://doi.org/10.1089/elj.2017.0478

Mäki, U. (1994). Isolation, idealization and truth in economics. In B. Hamminga & N. B. De Marchi (Eds.), Idealization VI: Idealization in economics (pp. 147–168). Rodopi.

McMullin, E. (1985). Galilean idealization. Studies in History and Philosophy of Science, 16(3), 247–273. https://doi.org/10.1016/0039-3681(85)90003-2

Morgan, M. S. (2006). Economic man as model man: Ideal types, idealization and caricatures. Journal of the History of Economic Thought, 28(1), 1–27. https://doi.org/10.1080/10427710500509763

Morrison, M. (2015). Reconstructing reality: Models, mathematics, and simulations. Oxford University Press.

Mueller, D. C. (2003). Public choice III. Cambridge University Press.

Niiniluoto, I. (1984). Is science progressive? Reidel.

Niiniluoto, I. (1987). Truthlikeness. Reidel.