Abstract

We define two maps, one map from the set of conditional probability systems (CPS’s) onto the set of lexicographic probability systems (LPS’s), and another map from the set of LPS’s with full support onto the set of CPS’s. We use these maps to establish a relationship between strong belief (defined on CPS’s) and assumption (defined on LPS’s). This establishes a relationship at the abstract level between these two widely used notions of belief in an extended probability-theoretic setting.


1 Introduction

In the analysis of games, rationality and common belief of rationality is a benchmark model of strategic reasoning. Under that analysis, a player, say Ann, holds a probabilistic belief about the strategies and beliefs of the other player, say Bob.Footnote 1 Rationality requires that Ann maximizes her subjective expected utility, given her belief about how Bob plays the game. Belief of rationality requires that Ann assign probability one to Bob’s rationality. And so on.

It is well established that, for certain game-theoretic analyses, this benchmark model does not suffice. Two prominent examples involve sequential games and admissibility. We begin by reviewing why those analyses require going beyond ordinary probabilities and the solutions offered by the literature.Footnote 2

Sequential Games. In simultaneous-move games, Ann makes a choice without observing any action chosen by Bob. By contrast, in sequential games, Ann may gain information (specifically, she may observe actions taken by Bob) before making (some of) her own choices. (That is, she does not commit to a strategy upfront.) If Ann observes Bob choose an action to which she assigned ex ante positive probability, she can simply update her belief about Bob before making her own choice. However, if she is surprised by Bob’s action, that is, if she observes Bob choose an action to which she assigned ex ante zero probability, she will need to form a new belief. These surprise events may arise naturally: If Ann believes ex ante that Bob is “strategic,” she may be forced to assign zero probability to his choosing certain actions. At the same time, Bob’s own strategic considerations may depend on what he believes Ann will believe conditional on observing a surprise event.

Battigalli and Siniscalchi (2002) provide a model of strategic reasoning that is well suited to capturing these endogenous surprise events. They endow players with conditional probability systems (Myerson, 1986a, b; Rényi, 1955), instead of ordinary probabilistic beliefs. A conditional probability system (CPS) is a sequence of hypotheses—one for each information set—that satisfies the rules of conditional probability when possible. Instead of requiring that Ann simply believes that Bob is rational at the start of the game, Battigalli and Siniscalchi ask Ann to believe Bob is rational, so long as this is consistent with the information she has learned. In this case, Ann is said to strongly believe that Bob is rational. Strong belief of rationality captures a form of forward-induction reasoning.

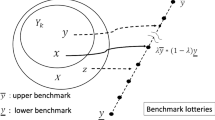

Admissibility. The admissibility criterion says that a rational player should not choose a weakly dominated strategy. Formally, this is equivalent to the requirement that a rational Ann maximizes her subjective expected utility, given a full-support belief about the strategy Bob plays. From this, rationality appears to require that Ann considers all possibilities. At the same time, belief of rationality requires that Ann rules out the possibility that Bob chooses a weakly dominated strategy. So, incorporating an admissibility criterion into the analysis of games creates a tension: On the one hand, Ann’s belief should allow all possibilities; on the other hand, it should rule out certain possibilities (Samuelson, 1992).

Brandenburger et al. (2008) provide a model of strategic reasoning that resolves this tension.Footnote 3 They endow players with lexicographic probability systems (Blume et al., 1991), instead of ordinary probabilistic beliefs. A lexicographic probability system (LPS) is a sequence of hypotheses, i.e., some \((\mu _{0},\ldots ,\mu _{n-1})\): Ann begins with a primary hypothesis \(\mu _0\) and only abandons the hypothesis if it does not determine strict preference; in that case, she turns to her secondary hypothesis \(\mu _{1}\), etc. Informally, she deems her primary hypothesis \(\mu _0\) infinitely more likely than her secondary hypothesis \(\mu _1\), which is, in turn, deemed infinitely more likely than \(\mu _2\), etc. Ann considers all possibilities if the LPS has full support. But, she can also rule out the possibility that Bob is irrational, if she views his rationality as infinitely more likely than his irrationality. In this latter case, Ann is said to assume that Bob is rational.

CPS’s and LPS’s both involve a sequence of hypotheses. Likewise, strong belief and assumption both capture the idea that Ann holds on to a hypothesis so long as she can. This raises the question: What is the relationship between strong belief and assumption? This paper aims to address just that question.

We address the question at the level of abstract probability theory. In particular, we define two maps—one from CPS’s to LPS’s and the other from LPS’s to CPS’s—that enable us to go from strong belief to assumption, and vice versa. Section 3 shows that (under appropriate hypotheses) an event will be assumed under an LPS \(\sigma \) if and only if it is strongly believed under some pre-image of \(\sigma \). Section 4 shows that (under appropriate hypotheses) an event will be strongly believed under a CPS p if and only if it is assumed under some pre-image of p.

To the best of our knowledge, this is the first paper to explore the relationship between strong belief and assumption. However, other papers explore the relationship between different notions of extended probability, notably Hammond (1994) and Halpern (2010). Hammond focuses on finite spaces and considers three notions of extended probability: complete CPS’s (where there is conditioning on all events); full-support LPS’s (where every state gets positive probability under some measure); and logarithmic likelihood ratio functions (due to McLennan (1989a, b)). Halpern considers Popper spaces (a particular type of CPS) and structured LPS’s (a generalization of LPS’s). Section 5 elaborates on the connection to these papers.

2 Main definitions

This section gives the main definitions used throughout the paper. Let \(\Omega \) be a Polish space, and let \(\mathcal {A}\) be the Borel \(\sigma \)-algebra on \(\Omega \). Elements of \(\mathcal {A}\) are called events. A set of conditioning events is a nonempty collection of sets in \(\mathcal {A}\) that does not include the empty set. Write \(\mathcal {B}\) for a set of conditioning events. Throughout, we keep the measurable space \((\Omega ,\mathcal {A})\) fixed; we will vary the set of conditioning events.

In the game-theoretic application, the space \(\Omega \) typically incorporates the set of strategies and the (relevant) beliefs of the other players. In a finite game, this can be an uncountable set, since the set of all beliefs of the other players is uncountable. The set of conditioning events \(\mathcal {B}\) is determined by the information sets. In a finite game, the set \(\mathcal {B}\) is finite and each conditioning event is clopen.

Definition 2.1

A conditional probability system (CPS) on \((\Omega ,\mathcal {A},\mathcal {B})\) is a map \(p:\mathcal {A}\times \mathcal {B} \rightarrow [0,1]\) such that:

(i) for all \(B\in \mathcal {B}\), \(p(B\mid B)=1\);

(ii) for all \(B\in \mathcal {B}\), \(p(\cdot \mid B)\) is a probability measure on \((\Omega ,\mathcal {A})\);

(iii) for all \(A\in \mathcal {A}\) and \(B,C\in \mathcal {B}\), if \(A\subseteq B \subseteq C\) then \(p(A\mid C)=p(A\mid B)p(B\mid C)\).

Let \(\mathcal {C}_{\mathcal {B}}\) be the set of all CPS’s on \((\Omega , \mathcal {A}, \mathcal {B})\).

Conditions (i)–(ii) require that a CPS maps each conditioning event \(B \in \mathcal {B}\) to a probability measure on B. Condition (iii) requires that the CPS satisfies the rules of conditional probability, when possible. Because the underlying measurable space \((\Omega ,\mathcal {A})\) is fixed throughout, we refer at times to a CPS on \((\Omega ,\mathcal {A},\mathcal {B})\) as simply a CPS on \(\mathcal {B}\).

Now we can say what it means for a CPS to “reason” that an event is true. We take this to mean strong belief:

Definition 2.2

(Battigalli & Siniscalchi, 2002) Fix a CPS \(p \in \mathcal {C}_{\mathcal {B}}\). An event \(E \in \mathcal {A}\) is strongly believed under p if, for all \(B\in \mathcal {B}\), \(E\cap B\ne \emptyset \) implies \(p(E\mid B)=1\).

So, a CPS strongly believes an event E if it assigns probability 1 to E conditional on every conditioning event B that is consistent with E, i.e., whenever \(E\cap B\ne \emptyset \).
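On a finite state space, Definition 2.2 can be checked mechanically. The Python sketch below is only an illustration and rests on assumptions that are ours, not the paper's: \(\Omega \) is a finite set of labels, each measure is a dictionary from states to exact probabilities, and a CPS is a dictionary sending each conditioning event (a `frozenset`) to the measure \(p(\cdot \mid B)\). The helper names `prob` and `strongly_believed` are hypothetical. The example CPS is the one used in Example 3.3.

```python
from fractions import Fraction

def prob(mu, event):
    """Probability of `event` (a set of states) under a finite measure `mu`."""
    return sum((mu.get(w, 0) for w in event), Fraction(0))

def strongly_believed(cps, event):
    """Definition 2.2 on a finite state space.

    `cps` maps each conditioning event B (a frozenset) to the measure
    p(. | B), stored as a dict from states to probabilities.
    """
    E = frozenset(event)
    # Only conditioning events consistent with E (E & B nonempty) matter.
    return all(prob(mu, E) == 1 for B, mu in cps.items() if E & B)

# The CPS of Example 3.3: Omega = {a, b, c}, conditioning events {Omega, {a, b}, {c}}.
OMEGA = frozenset("abc")
p = {
    OMEGA: {"a": 1},
    frozenset("ab"): {"a": 1},
    frozenset("c"): {"c": 1},
}
print(strongly_believed(p, {"a", "b"}))  # True
print(strongly_believed(p, {"b"}))       # False: p({b} | {a, b}) = 0
```

Exact `Fraction` arithmetic is used so that the equality test "probability equals 1" is not disturbed by floating-point rounding.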

Next is the definition of an LPS. This will be defined on the measure space \((\Omega ,\mathcal {A})\) without making reference to any conditioning events.

Definition 2.3

A lexicographic probability system (LPS) on \((\Omega ,\mathcal {A})\) is a finite sequence \(\sigma =(\nu _0,\ldots ,\nu _{n-1})\) of probability measures on \((\Omega , \mathcal {A})\) such that:

(i) for \(i=0,\ldots ,n-1\), there are Borel sets \(U_i\) in \(\Omega \) with \(\nu _i(U_i)=1\) and \(\nu _i(U_j)=0\) for \(j\ne i\) (that is: \(\sigma \) is mutually singular).

Let \(\mathcal {L}\) be the set of all LPS’s on \((\Omega , \mathcal {A})\).

An LPS is a sequence of measures \((\nu _0,\ldots ,\nu _{n-1})\) for some n. (We refer to n as the length of the LPS.) Condition (i) requires that the measures are mutually singular, in the sense that each measure \(\nu _{i}\) is associated with a hypothesis \(U_{i}\) that has probability 1 under \(\nu _i\) and probability 0 under each \(\nu _{j}\) for \(j \ne i\).Footnote 4 With this in mind, we think informally of the measure \(\nu _{i}\) as the hypothesis itself. An LPS is often interpreted as a sequence of hypotheses, where the \(i{\text {th}}\) hypothesis \(\nu _{i}\) is infinitely more likely than the \((i+1){\text {th}}\) hypothesis \(\nu _{i+1}\).
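When \(\Omega \) is finite (with the discrete topology), mutual singularity reduces to a simple check: one can take \(U_i=\mathrm {Supp\,}\nu _i\), the set of states with positive \(\nu _i\)-probability, so an LPS is just a list of probability measures with pairwise disjoint supports. A minimal Python sketch under that assumption, with measures stored as dictionaries; `support` and `is_lps` are hypothetical names, and requiring length \(n\ge 1\) is our own convention:

```python
from fractions import Fraction
from itertools import combinations

def support(mu):
    """On a finite (discrete) space, Supp mu is the set of states with positive mass."""
    return frozenset(w for w, m in mu.items() if m > 0)

def is_lps(measures):
    """Check Definition 2.3 on a finite space (we also require length n >= 1).

    With finitely many states one may take U_i = Supp nu_i, so the measures
    are mutually singular exactly when their supports are pairwise disjoint.
    """
    if not measures:
        return False
    for nu in measures:
        # Each entry must be a probability measure.
        if any(m < 0 for m in nu.values()) or sum(nu.values()) != 1:
            return False
    return all(not (support(a) & support(b)) for a, b in combinations(measures, 2))

half = Fraction(1, 2)
print(is_lps([{"a": half, "b": half}, {"c": 1}]))  # True
print(is_lps([{"a": half, "b": half}, {"b": 1}]))  # False: both charge b
```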

Now we can say what it means for an LPS to “reason” that an event is true. We take this to mean assumption.

Definition 2.4

(Brandenburger et al., 2008) Fix an LPS \(\sigma =(\nu _0,\ldots ,\nu _{n-1})\). An event \(E \in \mathcal {A}\) is assumed under \(\sigma \) if there exists some j with \(n > j \ge 0\) so that:

(i) \(\nu _i(E)=1\) for all \(i\le j\);

(ii) \(\nu _i(E)=0\) for all \(i>j\);

(iii) for each open set U with \(E\cap U\ne \emptyset \), there is some i with \(\nu _i(E\cap U)>0\).

In this case, say that E is assumed at level j under \(\sigma \).

Conditions (i)–(ii) require that there is some hypothesis \(\nu _{j}\) so that \(\nu _{i}\) assigns probability 1 to the event if \(i \le j\) and \(\nu _{i}\) assigns probability 0 to the event otherwise. Condition (iii) requires that all of E is considered possible under some hypothesis. Brandenburger et al. (2008) show that Condition (iii) can be replaced by the requirement that E is contained in the union of the supports of \(\nu _{0},\ldots ,\nu _{j}\).Footnote 5

Lemma 2.1

Fix an LPS \(\sigma =(\nu _0,\ldots ,\nu _{n-1})\). An event E is assumed at level j under \(\sigma \) if and only if \(\sigma \) satisfies conditions (i)–(ii) of Definition 2.4 and: \((iii^\prime )\) \(E\subseteq \bigcup _{i=0}^j\mathrm {Supp\,}\nu _i\).
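With finitely many states and the discrete topology, every set is open and \(\mathrm {Supp\,}\nu _i\) is the set of states with positive \(\nu _i\)-probability, so condition \((iii^\prime )\) of Lemma 2.1 becomes a subset test. The sketch below is a hypothetical illustration, not code from the paper: it returns the level at which an event is assumed (or `None`), using the LPS \((\mu _0,\mu _1)\) with \(\mu _0(a)=1\) and \(\mu _1(c)=1\) that appears in Example 3.3.

```python
from fractions import Fraction

def prob(mu, event):
    return sum((mu.get(w, 0) for w in event), Fraction(0))

def support(mu):
    return frozenset(w for w, m in mu.items() if m > 0)

def assumed_level(lps, event):
    """Level j at which `event` is assumed under `lps`, or None.

    Uses conditions (i)-(ii) of Definition 2.4 together with the support
    condition (iii') of Lemma 2.1, on a finite (discrete) state space.
    """
    E = frozenset(event)
    values = [prob(nu, E) for nu in lps]
    for j in range(len(lps)):
        # (i)-(ii): probability 1 up to level j, probability 0 afterwards.
        if all(v == 1 for v in values[: j + 1]) and all(v == 0 for v in values[j + 1 :]):
            covered = frozenset().union(*(support(nu) for nu in lps[: j + 1]))
            # (iii'): E lies inside Supp nu_0 u ... u Supp nu_j.
            return j if E <= covered else None
    return None

sigma = [{"a": 1}, {"c": 1}]             # the LPS (mu_0, mu_1) of Example 3.3
print(assumed_level(sigma, {"a"}))       # 0
print(assumed_level(sigma, {"a", "c"}))  # 1
print(assumed_level(sigma, {"a", "b"}))  # None: b is in no support
```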

3 From CPS’s to LPS’s

In this section, we introduce a natural function f that maps a class of CPS’s surjectively onto the LPS’s. For full-support CPS’s, the mapping will translate strong belief to assumption. That is, we will show that, if a CPS p has full support and strongly believes E, then f(p) has full support and assumes E. We will use the mapping to provide a characterization of assumption.

Importantly, the mapping will only be defined when the set of conditioning events \(\mathcal {B}\) is a finite algebra, i.e., when \(\mathcal {B}\cup \{\emptyset \}\) is a finite Boolean subalgebra of \(\mathcal {A}\). Thus, the characterization will link assumption to the requirement that there are enough conditioning events. Specifically, fix a full-support LPS \(\sigma \). The characterization will say: An event E is assumed under \(\sigma \) if and only if there is a finite algebra \(\mathcal {B}\) and a full-support CPS \(p\in f^{-1}(\sigma )\) on \(\mathcal {B}\) such that E is strongly believed under p.

With this in mind, call a CPS p on \(\mathcal {B}\) finitary if \(\mathcal {B}\) is a finite algebra. Observe that, for a given finite algebra \(\mathcal {B}\), all CPS’s on \(\mathcal {B}\) are finitary. But, when \(\Omega \) has at least two elements, there are finitary CPS’s that do not belong to \(\mathcal {C_B}\). To see this, fix finite algebras \(\mathcal {B}\) and \(\mathcal {D}\) with \(\mathcal {B} \ne \mathcal {D}\). Then, \({{\mathcal {C}}}_{{\mathcal {B}}}\) and \({{\mathcal {C}}}_{{\mathcal {D}}}\) are disjoint, because their members have different domains. Members of \(\mathcal {C_D}\) are finitary CPS’s that are not contained in \(\mathcal {C_B}\).

The requirement that the conditioning events \(\mathcal {B}\) form a finite algebra is natural from the perspective of establishing a map from CPS’s to LPS’s. This said, the conditioning events in a game tree do not, in general, constitute a finite algebra. Accordingly, in that case, there is no immediate natural way to go from CPS’s to LPS’s.

3.1 The natural map

Fix a finite algebra of conditioning events \(\mathcal {B}\). In this case, \(\mathcal {B}\) is generated by a finite partition. We refer to the cells of the partition as atoms of \(\mathcal {B}\).

Fix a CPS p on a finite algebra \(\mathcal {B}\). We will construct an LPS \(f_{\mathcal {B}}(p)\). To do so, set \(V_{-1}=\emptyset \). Inductively define sets \(W_i,V_i \in \mathcal {B}\) and a probability measure \(\mu _i \) as follows: Let \(W_0=\Omega \backslash V_{-1}\) and \(\mu _0=p(\cdot \mid W_0)\). Take \(V_0\) to be the union of all atoms \(B\in \mathcal {B}\) with \(\mu _0(B)>0\). Since \(\mathcal {B}\) is an algebra, \(W_{0},V_{0} \in \mathcal {B}\). Assume we have defined sets \(W_i,V_i \in \mathcal {B}\) and a probability measure \(\mu _i\). If \(\Omega =\bigcup _{k\le i}V_k\), set \(f_{\mathcal {B}}(p)=(\mu _0,\ldots ,\mu _i)\) and put \(n=i+1\). Otherwise, set

\[W_{i+1}=\Omega \backslash \bigcup _{k\le i}V_k\]

and note that, since \(\mathcal {B}\) is an algebra, \(W_{i+1} \in \mathcal {B}\). Set \(\mu _{i+1}=p(\cdot \mid W_{i+1})\). Let \(V_{i+1}\) be the union of the atoms \(B\in \mathcal {B}\) with \(\mu _{i+1}(B)>0\), and note that \(V_{i+1} \in \mathcal {B}\) since \(\mathcal {B}\) is an algebra. Each \(V_i\) contains at least one atom (since \(\mu _i(W_i)=1\) and \(W_i\) is a finite union of atoms), and the sets \(V_0,V_1,\ldots \) are pairwise disjoint. So the construction terminates at some finite n, since \(\mathcal {B}\) is finite. In particular, the length n of \(f_{\mathcal {B}}(p)\) is at most the number of atoms of \(\mathcal {B}\).
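The inductive construction of \(f_{\mathcal {B}}\) can be sketched in Python for a finite state space. This is an illustration under our own assumptions: the algebra \(\mathcal {B}\) is described by its list of atoms, and the CPS is a dictionary from each event of \(\mathcal {B}\) (a union of atoms, as a `frozenset`) to the measure \(p(\cdot \mid B)\). The name `f_B` mirrors the notation above but is otherwise hypothetical. Each pass computes \(W_i\), sets \(\mu _i=p(\cdot \mid W_i)\), and collects the atoms of positive \(\mu _i\)-probability into \(V_i\).

```python
from fractions import Fraction

def prob(mu, event):
    """Probability of `event` (a set of states) under the finite measure `mu`."""
    return sum((mu.get(w, 0) for w in event), Fraction(0))

def f_B(atoms, cps):
    """Section 3.1 construction on a finite state space (hypothetical encoding).

    atoms: the atoms of the finite algebra B (disjoint frozensets covering Omega).
    cps:   dict sending each event of B (a union of atoms) to p(. | B).
    Returns the LPS (mu_0, ..., mu_{n-1}) as a list of measures.
    """
    omega = frozenset().union(*atoms)
    covered = frozenset()          # V_0 u ... u V_{i-1} built so far
    lps = []
    while covered != omega:
        W = omega - covered        # W_i (W_0 = Omega)
        mu = cps[W]                # mu_i = p(. | W_i)
        # V_i: union of the atoms with positive mu_i-probability.
        V = frozenset().union(*(A for A in atoms if prob(mu, A) > 0))
        if not V or not V <= W:
            raise ValueError("input is not a CPS on this algebra")
        lps.append(mu)
        covered |= V
    return lps

# Example 3.3: atoms {a, b} and {c}; p(a | Omega) = p(a | {a, b}) = p(c | {c}) = 1.
atoms = [frozenset("ab"), frozenset("c")]
p = {frozenset("abc"): {"a": 1}, frozenset("ab"): {"a": 1}, frozenset("c"): {"c": 1}}
print(f_B(atoms, p))  # [{'a': 1}, {'c': 1}]
```

The loop runs at most once per atom, matching the bound on the length n noted above.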

We will first show that \(f_{\mathcal {B}}\) defines a mapping from CPS’s on a finite algebra \(\mathcal {B}\) to LPS’s.

Lemma 3.1

Fix a CPS \(p \in \mathcal {C_B}\), where \(\mathcal {B}\) is a finite algebra. Then \(f_{\mathcal {B}}(p)\) is an LPS on \((\Omega ,\mathcal {A})\).

To prove Lemma 3.1, it will be useful to have two lemmas.

Lemma 3.2

For each \(i<n\), \(p(\cdot \mid V_i)=p(\cdot \mid W_i)=\mu _i\).

Proof

Fix an event \(A\in \mathcal {A}\). It suffices to show that \(p(A\mid V_i)=p(A\mid W_i)\). Note, \(V_{i} \subseteq W_{i}\) with \(p(V_{i} \mid W_{i})=1\). Thus,

\[p(A\mid W_i)=p(A\cap V_i\mid W_i)=p(A\cap V_i\mid V_i)\,p(V_i\mid W_i)=p(A\mid V_i),\]

where the second equality comes from condition (iii) of a CPS. \(\square \)

Lemma 3.3

The set \(\{ V_0,\ldots ,V_{n-1} \}\) is a partition of \(\Omega \) with

(i) \(\mu _i(V_i)=1\) for all \(i=0,\ldots , n-1\); and

(ii) \(\mu _i(V_j)=0\) for all \(j\ne i\).

Proof

By construction \(\Omega =\bigcup _{i<n}V_i\). Moreover, for each i, \(V_i\subseteq W_i=\Omega \backslash \bigcup _{k<i}V_k\). Thus, \(V_i\) and \(V_j\) are disjoint whenever \(i\ne j\).

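By Lemma 3.2, \(\mu _i(V_i)=1\) for each i. For \(j<i\),

\[V_j\subseteq \bigcup _{k<i}V_k=\Omega \backslash W_i,\]

so that \(\mu _i(V_j)=p(V_j\mid W_i)=0\). For \(j>i\),

\[V_j\subseteq \Omega \backslash V_i,\]

because the sets \(V_0,\ldots ,V_{n-1}\) are pairwise disjoint. Since \(\mu _i(V_i)=1\), it follows that \(\mu _i(V_j)\le \mu _i(\Omega \backslash V_i)=0\). \(\square \)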

Now notice that Lemma 3.1 is a corollary of Lemma 3.3. We next show that this mapping is injective, but need not be surjective.

Lemma 3.4

Let \(\mathcal {B}\) be a finite algebra.

(i) The mapping \(f_{\mathcal {B}}: \mathcal {C}_{\mathcal {B}} \rightarrow \mathcal {L}\) is injective.

(ii) If \(\Omega \) is infinite, then \(f_{\mathcal {B}}: \mathcal {C}_{\mathcal {B}} \rightarrow \mathcal {L}\) is not surjective.

Proof

To show \(f_{\mathcal {B}}: \mathcal {C}_{\mathcal {B}} \rightarrow \mathcal {L}\) is injective, fix two distinct CPS’s \(p,p'\) on \(\mathcal {B}\). Then there exists some \(B \in \mathcal {B}\) and \( A \in \mathcal {A}\) with \( A \subseteq B\) so that \(p(A \mid B) \ne p'(A \mid B)\). Write \(f_{\mathcal {B}}(p)=(\mu _{0},\ldots ,\mu _{n-1})\) and \(f_{\mathcal {B}}(p')=(\mu _{0}',\ldots ,\mu _{m-1}')\). We will show that there exists some i, so that \(\mu _{i} \ne \mu _{i}'\).

Observe that \(W_0=W_0'=\Omega \). Thus, \(A \subseteq B \subseteq W_0=W_0'\). Suppose it is not the case that \(p(B \mid W_{0}) = p'(B \mid W_{0}') = 0\). Then either \(p(A \mid W_{0}) \ne p'(A \mid W_{0}')\) or \(p(B \mid W_{0}) \ne p'(B \mid W_{0}')\). In either case, \(\mu _{0}\ne \mu _{0}'\) and we are done. So suppose that \(p(B \mid W_{0}) = p'(B \mid W_{0}') = 0\). Note, for each atom \(D \subseteq B\), \(p(D \mid W_{0}) = p'(D \mid W_{0}') = 0\). So, \(A \subseteq B \subseteq W_1=W_1'\). Repeating the preceding argument, either \(p(B \mid W_{1}) = p'(B \mid W_{1}') = 0\) or \(p(\cdot \mid W_{1}) \ne p'(\cdot \mid W_{1}')\). In the latter case, \(\mu _{1}\ne \mu _{1}'\) and we are done. In the former case, we can repeat the argument above. Since the algebra \(\mathcal {B}\) is finite, eventually we will hit some i with \(p(\cdot \mid W_{i}) \ne p'(\cdot \mid W_{i}')\).

Next, note, when \(\Omega \) is infinite, for each \(k \ge 1\), there is an LPS of length strictly greater than k. However, \(f_{\mathcal {B}}(\mathcal {C}_{\mathcal {B}})\) only includes LPS’s of length less than or equal to the number of atoms in \(\mathcal {B}\). \(\square \)

One takeaway of Lemma 3.4 is that, when the state space is infinite, looking at the CPS’s defined on a given finite algebra does not suffice to capture all of the LPS’s. With this in mind, write \(\mathcal {C}_{\textrm{FIN}}\) for the set of all finitary CPS’s, i.e., the union of the sets \(\mathcal {C}_{\mathcal {B}}\) over all finite algebras \(\mathcal {B}\), and introduce a mapping \(f: \mathcal {C}_{\textrm{FIN}} \rightarrow \mathcal {L}\) so that, for each finitary CPS p on \(\mathcal {B}\), \(f(p)=f_{\mathcal {B}}(p)\).

Proposition 3.1

The mapping \(f: \mathcal {C}_{\textrm{FIN}} \rightarrow \mathcal {L}\) is surjective.

Proof

Fix \(\sigma =(\nu _0,\ldots ,\nu _{n-1})\in \mathcal {L}\). We begin by constructing a finite algebra \(\mathcal {B}\). To do so, note that there are Borel sets \(U_i\) such that \(\nu _i(U_i)=1\) and \(\nu _j(U_i)=0\) for \(i\ne j\). Let \(X_0=U_0\); for \(0<i<n-1\), let \(X_i=U_i\backslash \bigcup _{k<i}X_k\); and let \(X_{n-1}=\Omega \backslash \bigcup _{k<n-1}X_k\). The set \(\{ X_0,\ldots ,X_{n-1} \}\) is a partition of \(\Omega \). Moreover, for each i, \(X_{i}\) is Borel, \(\nu _i(X_i)=1\), and, for each \(j\ne i\), \(\nu _j(X_i)=0\). Let \(\mathcal {B}\) be the unique (finite) algebra with the atoms \(X_0,\ldots ,X_{n-1}\).

Define a mapping \(p:\mathcal {A}\times \mathcal {B}\rightarrow [0,1]\) as follows: For each \(B\in \mathcal {B}\), there is a least \(i<n\) such that \(X_i\subseteq B\). Fix that minimal i and let \(p(\cdot \mid B)=\nu _i(\cdot )\). It suffices to show that \(p\in \mathcal {C}_{\textrm{FIN}}\) and \(f(p)=f_{\mathcal {B}}(p)=\sigma \).

First, we show that p is a CPS on \(\mathcal {B}\). Note, conditions (i)–(ii) are immediate. To prove (iii), fix \(A\in \mathcal {A}\) and \(B,C\in \mathcal {B}\) with \(A\subseteq B\subseteq C\). Take the least i and j such that \(X_i\subseteq B\) and \(X_j\subseteq C\). Since \(B\subseteq C\), \(i\ge j\). If \(i=j\), then \(p(B\mid C)=\nu _j(B)=\nu _i(B)=1\), so

\[p(A\mid C)=\nu _j(A)=\nu _i(A)=p(A\mid B)=p(A\mid B)\,p(B\mid C),\]

and (iii) holds. If \(i>j\), then B is disjoint from \(X_j\) (B is a union of atoms and contains no \(X_k\) with \(k<i\)), so \(p(B\mid C)=\nu _j(B)=0\) and

\[p(A\mid C)=\nu _j(A)\le \nu _j(B)=0=p(A\mid B)\,p(B\mid C),\]

and (iii) holds in this case as well. Thus \(p\in \mathcal {C}_{\mathcal {B}}\) and, since \(\mathcal {B}\) is a finite algebra, \(p\in \mathcal {C}_{\textrm{FIN}}\).

Finally, starting with p, we have by induction that \(V_i=X_i\) for each \(i<n\). By Lemma 3.2, \(\mu _i=p(\cdot \mid W_i)=p(\cdot \mid V_i)=p(\cdot \mid X_i)=\nu _i\), and thus \(f_{\mathcal {B}}(p)=\sigma \). \(\square \)
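On a finite state space, the construction in the proof of Proposition 3.1 can be carried out directly. The sketch below assumes \(U_i=\mathrm {Supp\,}\nu _i\) (valid on a finite space with the discrete topology), builds the atoms \(X_i\) and the CPS with \(p(\cdot \mid B)=\nu _i\) for the least i with \(X_i\subseteq B\), and then checks the round trip \(f_{\mathcal {B}}(p)=\sigma \) with a compact version of the Section 3.1 construction. All function names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations

def prob(mu, event):
    return sum((mu.get(w, 0) for w in event), Fraction(0))

def support(mu):
    return frozenset(w for w, m in mu.items() if m > 0)

def cps_from_lps(omega, lps):
    """Proof of Proposition 3.1 on a finite space (hypothetical helper).

    Builds atoms X_0, ..., X_{n-1} and the CPS with p(. | B) = nu_i,
    where i is the least index with X_i a subset of B.
    """
    n, used, atoms = len(lps), frozenset(), []
    for i, nu in enumerate(lps):
        # Finite space: take U_i = Supp nu_i; the last atom absorbs the rest.
        X = frozenset(omega) - used if i == n - 1 else support(nu) - used
        atoms.append(X)
        used |= X
    cps = {}
    for r in range(1, n + 1):
        for idx in combinations(range(n), r):
            B = frozenset().union(*(atoms[k] for k in idx))
            cps[B] = lps[min(idx)]     # least i with X_i inside B
    return atoms, cps

def f_B(atoms, cps):
    """Compact Section 3.1 construction; assumes `cps` is a genuine CPS."""
    omega, covered, out = frozenset().union(*atoms), frozenset(), []
    while covered != omega:
        mu = cps[omega - covered]      # mu_i = p(. | W_i)
        out.append(mu)
        covered |= frozenset().union(*(A for A in atoms if prob(mu, A) > 0))
    return out

half = Fraction(1, 2)
omega = frozenset("abcd")
sigma = [{"a": half, "b": half}, {"c": 1}]   # state d is charged by no measure
atoms, p = cps_from_lps(omega, sigma)
print(atoms == [frozenset("ab"), frozenset("cd")])  # True
print(f_B(atoms, p) == sigma)                       # True
```

Here the last atom \(X_1=\{c,d\}\) absorbs the uncharged state d, exactly as \(X_{n-1}=\Omega \backslash \bigcup _{k<n-1}X_k\) does in the proof.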

While the mapping \(f: \mathcal {C}_{\textrm{FIN}} \rightarrow \mathcal {L}\) is surjective, it is not injective. The following example illustrates this point.

Example 3.1

To show that \(f: \mathcal {C}_{\textrm{FIN}} \rightarrow \mathcal {L}\) is not injective, let \(\Omega =\{ a,b \}\), \(\mathcal {A}=2^{\Omega } \), and let \(\mu \) be the probability measure with \( \mu (\{a\})=\mu (\{b\})={1}/{2}\). Consider the two sets of conditioning events \(\mathcal {B}=\{\Omega ,\{a\},\{b\}\}\) and \(\mathcal {D} = \{\Omega \}\). Let p be the CPS on \((\Omega , \mathcal {A},\mathcal {B})\) obtained by conditioning \(\mu \) on each member of \(\mathcal {B}\) and let q be the CPS on \((\Omega , \mathcal {A},\mathcal {D})\) obtained by conditioning \(\mu \) on each member of \(\mathcal {D}\). Then \(f(p)=f(q)=(\mu )\), but \(p \ne q\).
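Example 3.1 can be replayed in a few lines of Python, assuming measures are dictionaries of exact probabilities and a CPS is a dictionary keyed by its conditioning events; `f_B` is a hypothetical compact implementation of the Section 3.1 construction. Both CPS’s produce the one-component LPS \((\mu )\).

```python
from fractions import Fraction

def prob(mu, event):
    return sum((mu.get(w, 0) for w in event), Fraction(0))

def f_B(atoms, cps):
    """Section 3.1 construction; assumes `cps` is a genuine CPS on the algebra."""
    omega, covered, out = frozenset().union(*atoms), frozenset(), []
    while covered != omega:
        mu = cps[omega - covered]          # mu_i = p(. | W_i)
        out.append(mu)
        covered |= frozenset().union(*(A for A in atoms if prob(mu, A) > 0))
    return out

half = Fraction(1, 2)
mu = {"a": half, "b": half}
a, b, omega = frozenset("a"), frozenset("b"), frozenset("ab")

# p: condition mu on each member of B = {Omega, {a}, {b}} (atoms {a}, {b}).
p = {omega: mu, a: {"a": 1}, b: {"b": 1}}
# q: condition mu on the single member of D = {Omega} (one atom, Omega).
q = {omega: mu}

print(f_B([a, b], p) == f_B([omega], q) == [mu])  # True, although p != q
```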

3.2 Full support

Say a CPS p has full support if, for each atom B of \(\mathcal {B}\), we have \(B\subseteq \mathrm {Supp\,}p(\cdot \mid B)\). An LPS \(\sigma =(\nu _0,\ldots ,\nu _{n-1})\) has full support if \(\Omega =\bigcup _{i<n}\mathrm {Supp\,}\nu _i\). This section establishes a relationship between full-support CPS’s and full-support LPS’s.
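On a finite state space with the discrete topology, both full-support notions reduce to positivity conditions: a finitary CPS has full support exactly when \(p(\{\omega \}\mid B)>0\) for every atom B and every \(\omega \in B\), and an LPS has full support exactly when every state gets positive probability under some \(\nu _i\). A hedged sketch of these two checks (hypothetical helper names; `p_thin` is the CPS of Example 3.3):

```python
from fractions import Fraction

def cps_has_full_support(atoms, cps):
    """Finitary CPS: each atom B lies inside Supp p(. | B).
    On a finite (discrete) space: every state of B has positive mass under p(. | B)."""
    return all(cps[B].get(w, 0) > 0 for B in atoms for w in B)

def lps_has_full_support(omega, lps):
    """LPS: Omega equals the union of the supports Supp nu_i."""
    charged = {w for nu in lps for w, m in nu.items() if m > 0}
    return set(omega) <= charged

half = Fraction(1, 2)
omega = frozenset("abc")
atoms = [frozenset("ab"), frozenset("c")]

# A full-support CPS on the algebra with atoms {a, b} and {c}.
p_full = {omega: {"a": half, "b": half}, frozenset("ab"): {"a": half, "b": half},
          frozenset("c"): {"c": 1}}
# The CPS of Example 3.3, which puts no mass on b given {a, b}.
p_thin = {omega: {"a": 1}, frozenset("ab"): {"a": 1}, frozenset("c"): {"c": 1}}

print(cps_has_full_support(atoms, p_full), cps_has_full_support(atoms, p_thin))  # True False
print(lps_has_full_support(omega, [{"a": half, "b": half}, {"c": 1}]))          # True
print(lps_has_full_support(omega, [{"a": 1}, {"c": 1}]))                        # False
```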

Proposition 3.2

The map \(f: \mathcal {C}_{\textrm{FIN}} \rightarrow \mathcal {L}\) takes the set of finitary full-support CPS’s surjectively onto the set of full-support LPS’s. That is, an LPS \(\sigma \) has full support if and only if there exists a finite algebra \(\mathcal {B}\) and a full-support CPS p on \(\mathcal {B}\) with \(\sigma =f(p)\).

Proposition 3.2 has two parts. First, if p is a finitary full-support CPS, then f(p) is a full-support LPS. Second, if \(\sigma \) is a full-support LPS, there exists a finitary full-support CPS p so that \(f(p)=\sigma \). Note, the proposition allows that a full-support CPS and a non-full-support CPS may be mapped to the same full-support LPS. This is illustrated by the following example.

Example 3.2

Let \(\Omega \) be the real interval [0, 2], and let \(\mathcal {A}\) be the \(\sigma \)-algebra of Borel subsets of \(\Omega \). Pick a point \(a\in (1,2)\) and let \(Y=[0,1] \cup \{a\}\) and \(\mathcal {B}=\{[0,2], Y,[0,2]\backslash Y\}\). Let p be a CPS on \(\mathcal {B}\), where \(p(\cdot \mid \Omega )\) is the uniform Borel measure conditioned on [0, 1] and \(p(\cdot \mid [0,2] \backslash Y)\) is the uniform Borel measure conditioned on [1, 2]. (Because \(p(Y\mid \Omega ) > 0\) and \(Y \subseteq \Omega \), \(p(\cdot \mid Y)\) is the uniform Borel measure conditioned on [0, 1].) Then \(f(p)=(\mu _0,\mu _1)\) is such that \(\mu _0=p(\cdot \mid \Omega )\) and \(\mu _1=p(\cdot \mid [0,2]\backslash Y)\). The LPS f(p) has full support, but p does not have full support because \(a\in Y\backslash \mathrm {Supp\,}p(\cdot \mid Y)\).

Note, there exists another finite algebra \(\mathcal {B}'=\{[0,2], [0,1],(1,2] \}\) and a full-support CPS \(p'\) on \((\Omega ,\mathcal {A},\mathcal {B}')\) so that \(f(p')=(\mu _0,\mu _1)\). Let \(p'(\cdot \mid \Omega )\) be the uniform Borel measure conditioned on [0, 1] and let \(p'(\cdot \mid (1,2])\) be the uniform Borel measure conditioned on (1, 2]. Then, \(p'\) has full support and \(f(p')=f(p)=(\mu _0,\mu _1)\).

To prove Proposition 3.2, we will make use of the following lemmas.

Lemma 3.5

Fix a CPS \(p \in \mathcal {C}_{\mathcal {B}}\) and events \(B,C\in \mathcal {B}\) with \(B\subseteq C\) and \(p(B\mid C)>0\). Then \(\mathrm {Supp\,}p(\cdot \mid B)\subseteq \mathrm {Supp\,}p(\cdot \mid C)\).

Proof

Let \(A=\mathrm {Supp\,}p(\cdot \mid C)\). We have \(p(A\mid C)=1\) and \(p(B\mid C)>0\), so \(p(A\cap B\mid C)=p(B\mid C)\). Since p is a CPS,

\[p(B\mid C)=p(A\cap B\mid C)=p(A\cap B\mid B)\,p(B\mid C),\]

and, since \(p(B\mid C)>0\), \(p(A\cap B\mid B)=1\). Therefore \(p(A\mid B)\ge p(A\cap B\mid B)=1\). Since A is also closed, it must contain \(\mathrm {Supp\,}p(\cdot \mid B)\). \(\square \)

Lemma 3.6

Fix a finitary full-support CPS \(p \in \mathcal {C}_{\mathcal {B}}\) and the associated LPS \(f(p)=(\mu _0,\ldots ,\mu _{n-1})\). Then \(V_i\subseteq \mathrm {Supp\,}\mu _i\) for each \(i<n\).

Proof

Each of the sets \(V_i\) can be written as the union of the atoms \(B\in \mathcal {B}\) with \(p(B\mid W_i)>0\), or, equivalently, using Lemma 3.2, with \(p(B\mid V_i)>0\). For each such atom B, Lemma 3.5 implies \(\mathrm {Supp\,}p(\cdot \mid B)\subseteq \mathrm {Supp\,}p(\cdot \mid V_i)\). Since p has full support, \(B\subseteq \mathrm {Supp\,}p(\cdot \mid B) \subseteq \mathrm {Supp\,}p(\cdot \mid V_i)\). Taking the union over these atoms gives \(V_i\subseteq \mathrm {Supp\,}p(\cdot \mid V_i)\). Finally, by Lemma 3.2, \(\mathrm {Supp\,}p(\cdot \mid V_i)= \mathrm {Supp\,}\mu _i\). \(\square \)

Proof of Proposition 3.2

Fix a finitary full-support CPS p and let the associated LPS be \(f(p)=(\mu _0,\ldots ,\mu _{n-1})\). By Lemmas 3.3 and 3.6,

\[\Omega =\bigcup _{i<n}V_i\subseteq \bigcup _{i<n}\mathrm {Supp\,}\mu _i,\]

as required.

Now let \(\sigma =(\nu _0,\ldots ,\nu _{n-1})\) be a full-support LPS. In the proof of Proposition 3.1, the sets \(X_i\) may be taken so that \(X_i\subseteq \mathrm {Supp\,}\nu _i\) for each \(i<n\). This can be done by first replacing each of the original sets \(X_i\) by its intersection with \(\mathrm {Supp\,}\nu _i\). Then put each point \(\omega \) of \(\Omega \backslash \bigcup _{i<n}X_i\) into \(X_j\) where j is the first integer such that \(\omega \in \mathrm {Supp\,}\nu _j\). The proof of Proposition 3.1 will now give us a full-support CPS p with \(f(p)=\sigma \). \(\square \)

3.3 From strong belief to assumption

For a finitary full-support CPS, strong belief goes over to assumption, under the mapping f.

Proposition 3.3

Fix a finitary full-support CPS p. If E is strongly believed under p then E is assumed under f(p).

Proof

Suppose E is strongly believed under p. Then \(\mu _0(E)=p(E\mid \Omega )=1\). More generally, for each i, if \(E\cap W_i\ne \emptyset \), then strong belief gives \(\mu _i(E)=p(E\mid W_i)=1\); if instead \(E\cap W_i=\emptyset \), then \(\mu _i(E)=0\) and, since \(W_{i+1}\subseteq W_i\), also \(E\cap W_{i+1}=\emptyset \). So, there is \(j\ge 0\) with: (i) \(\mu _i(E)=1\) for all \(i\le j\), and (ii) \(E\cap W_i=\emptyset \) and \(\mu _i(E)=0\) for all \(i>j\). Now note that \(E\cap W_{j+1}=\emptyset \) implies \(E\subseteq \bigcup _{i\le j} V_i\). By Lemma 3.6, \(E\subseteq \bigcup _{i\le j}\mathrm {Supp\,}\mu _i\) as required. \(\square \)

The assumption that p has full support is important for Proposition 3.3. This is illustrated in the following example.

Example 3.3

If a finitary CPS p does not have full support, then an event E can be strongly believed under p even though it is not assumed under f(p). To see this, let \(\Omega =\{ a,b,c \}\) and \(\mathcal {B}=\{\Omega , \{a,b\},\{c\}\}\). Let \(p(a\mid \Omega )=p(a\mid \{a,b\})=1\) and \(p(c\mid \{c\})=1\). Then \(f(p)=(\mu _0,\mu _1)\) where \(\mu _0(a)=1\) and \(\mu _1(c)=1\). Take \(E=\{a,b\}\) and note that p strongly believes E but f(p) does not assume E. (Condition (iii) is violated.)
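Example 3.3 can be verified mechanically. The self-contained sketch below (finite state space, discrete topology, exact arithmetic; all helper names hypothetical) computes f(p) by the Section 3.1 construction, confirms that p strongly believes \(E=\{a,b\}\), and confirms that f(p) does not assume E because b lies in no support.

```python
from fractions import Fraction

def prob(mu, event):
    return sum((mu.get(w, 0) for w in event), Fraction(0))

def support(mu):
    return frozenset(w for w, m in mu.items() if m > 0)

def strongly_believed(cps, E):
    # Definition 2.2: p(E | B) = 1 whenever E meets B.
    return all(prob(mu, E) == 1 for B, mu in cps.items() if E & B)

def f_B(atoms, cps):
    # Section 3.1 construction; assumes `cps` is a genuine CPS on the algebra.
    omega, covered, out = frozenset().union(*atoms), frozenset(), []
    while covered != omega:
        mu = cps[omega - covered]
        out.append(mu)
        covered |= frozenset().union(*(A for A in atoms if prob(mu, A) > 0))
    return out

def is_assumed(lps, E):
    # Conditions (i)-(ii) of Definition 2.4 plus (iii') of Lemma 2.1.
    vals = [prob(nu, E) for nu in lps]
    for j in range(len(lps)):
        if all(v == 1 for v in vals[: j + 1]) and all(v == 0 for v in vals[j + 1 :]):
            return E <= frozenset().union(*(support(nu) for nu in lps[: j + 1]))
    return False

omega = frozenset("abc")
atoms = [frozenset("ab"), frozenset("c")]
p = {omega: {"a": 1}, frozenset("ab"): {"a": 1}, frozenset("c"): {"c": 1}}
E = frozenset("ab")

sigma = f_B(atoms, p)
print(sigma)                      # [{'a': 1}, {'c': 1}]
print(strongly_believed(p, E))    # True
print(is_assumed(sigma, E))       # False: b lies in no Supp mu_i
```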

Even if p has full support, f(p) may assume an event even though p does not strongly believe the event. The following example illustrates this point.

Example 3.4

Take \(\Omega =[0,2]\) and let \(\mathcal {B}\) be generated by the partition \(\{[0,1],(1,2]\}\). Also, choose a CPS p so that \(p(\cdot \mid \Omega )=p(\cdot \mid (1,2])\) is uniform on [1, 2) and \(p(\cdot \mid [0,1])\) is uniform on (0, 1). Note, p has full support, since \([0,1]\subseteq \mathrm {Supp\,}p(\cdot \mid [0,1])=[0,1]\) and \((1,2]\subseteq \mathrm {Supp\,}p(\cdot \mid (1,2])=[1,2]\). Moreover, \(f(p)=(\mu _0,\mu _1)\) is such that \(\mu _0\) is uniform on [1, 2) and \(\mu _1\) is uniform on (0, 1). Note, \(\mathrm {Supp\,}\mu _0=[1,2]\) and \(\mathrm {Supp\,}\mu _1=[0,1]\), so that \(E=[1,2]\) is assumed (at level 0) under f(p). But \(E\cap [0,1]=\{1\}\ne \emptyset \) and \(p(E\mid [0,1])=0\). So, E is not strongly believed under p.

That said, we do have the following converse:

Proposition 3.4

Let \(\sigma =(\nu _0,\ldots ,\nu _{n-1})\) be an LPS with full support. A set E is assumed under \(\sigma \) if and only if there exists a finitary full-support CPS \(p \in f^{-1}(\sigma )\) so that E is strongly believed under p.

Proposition 3.4 says that, for each full-support LPS \(\sigma \) that assumes E, we can find a finite algebra \(\mathcal {B}\) and a full-support CPS p on \(\mathcal {B}\) with \(f_{\mathcal {B}}(p)=\sigma \), so that p strongly believes E. Note, this does not contradict Example 3.4: In that example, a given full-support CPS q on a finite algebra \(\mathcal {D}\) generated an LPS \(f_{\mathcal {D}}(q)=\sigma \) that assumed E, even though q did not strongly believe E. Proposition 3.4 says that, in that case, we can always find some distinct finite algebra \(\mathcal {B}\) and a full-support CPS p on \(\mathcal {B}\), so that \(f_{\mathcal {B}}(p)=\sigma \) and p strongly believes E. To prove Proposition 3.4, it will be useful to have the following lemma.

Lemma 3.7

Suppose \(\sigma =(\nu _0,\ldots ,\nu _{n-1})\) is an LPS with full support and the set E is assumed by \(\sigma \) at level j. Then there is a partition of \(\Omega \) into Borel sets \(U_0,\ldots ,U_{n-1}\) such that \(E\subseteq U_0 \cup \cdots \cup U_j\) and, for each \(i<n\), \(\nu _i(U_i)=1\) and \(U_i\subseteq \mathrm {Supp\,}\nu _i\).

Proof

We argue by induction on j. Suppose E is assumed by \(\sigma \) at level 0. By the proof of Proposition 3.2, there is a partition \(X_0,\ldots , X_{n-1}\) of \(\Omega \) such that for each \(i<n\), \(\nu _i(X_i)=1\) and \(X_i\subseteq \mathrm {Supp\,}\nu _i\). Take \(U_0=E\cup X_0\), and \(U_i=X_i\backslash E\) for \(0<i<n\). Since E is assumed at level 0, certainly \(\nu _0(U_0)=1\) and \(U_0\subseteq \mathrm {Supp\,}\nu _0\). For \(i\ge 1\), note that, using the fact that E is assumed, \(\nu _i(X_i\cap E)=0\), so that \(\nu _i(U_i)=1\). Moreover, \(X_i\backslash E \subseteq X_i \subseteq \mathrm {Supp\,}\nu _i\), as required.

Now suppose E is assumed by \(\sigma \) at level \(j\ge 1\). Choose a set F which is assumed by \(\sigma \) at level \(j-1\) (for instance, \(F=X_0\cup \cdots \cup X_{j-1}\), with \(X_0,\ldots ,X_{n-1}\) the partition from the proof of Proposition 3.2). Let \(G=(E\backslash F)\cap \mathrm {Supp\,}\nu _j\). Then \(\nu _j(F)=0\), so that \(\nu _j(G)=1\). Certainly, \(G\subseteq \mathrm {Supp\,}\nu _j\). Also note that, for \(k\ne j\), \(\nu _k(G)=0\). For \(k<j\), this follows from the fact that \(\nu _k(F)=1\), so that \(\nu _k(E\backslash F)=0\). When \(k>j\), this follows from the fact that E is assumed at level j.

Using these facts, the set \(E\backslash G\) is assumed by \(\sigma \) at level \(j-1\). To see this, note that \(j-1\ge 0\). For all \(k\le j-1\), \(\nu _k(E)=1\) and \(\nu _k(G)=0\), since \(k\ne j\), so that \(\nu _k(E\backslash G)=1\). Moreover, \(\nu _j(G)=1\), so that \(\nu _j(E\backslash G)=0\). For all \(k>j\), \(\nu _k(E)=0\), so that \(\nu _k(E\backslash G)=0\). Finally, by construction, \(E\backslash G\subseteq F\cup (E\backslash \mathrm {Supp\,}\nu _j)\). Since F is assumed at level \(j-1\), Lemma 2.1 gives \(F\subseteq \bigcup _{k\le j-1} \mathrm {Supp\,}\nu _k\); and since E is assumed at level j, \(E\backslash \mathrm {Supp\,}\nu _j\subseteq \bigcup _{k\le j-1} \mathrm {Supp\,}\nu _k\). So, \(E\backslash G \subseteq \bigcup _{k\le j-1} \mathrm {Supp\,}\nu _k\).

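The inductive hypothesis for \(E\backslash G\) gives us a partition of \(\Omega \) into Borel sets \(Y_0,\ldots , Y_{n-1}\) such that \(E\backslash G \subseteq Y_0 \cup \cdots \cup Y_{j-1}\), and \(\nu _i(Y_i)=1\) and \(Y_i\subseteq \mathrm {Supp\,}\nu _i\) for each \(i<n\). The required sets are \(U_j=Y_j\cup G\) and \(U_i=Y_i\backslash G\) for \(i\ne j\). (Removing G from every \(Y_i\) with \(i\ne j\) keeps the sets pairwise disjoint; \(\nu _i(U_i)=1\) for \(i\ne j\) because \(\nu _i(G)=0\); and \(E\subseteq U_0\cup \cdots \cup U_j\) because \(E\backslash G\) is disjoint from G and \(G\subseteq U_j\).) \(\square \)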

Proof of Proposition 3.4

The “if” direction follows from Proposition 3.3. We focus on the “only if” direction.

Suppose that E is assumed under \(\sigma \) at level j. Let \(U_0,\ldots , U_{n-1}\) be the partition given by Lemma 3.7. Let \(\mathcal {B}\) be the finite algebra of sets with atoms \(U_0,\ldots , U_{n-1}\). The proof of Proposition 3.2 gives us a CPS \(p\in \mathcal {C}_{\mathcal {B}}\) with full support such that \(f(p)=\sigma \) and, for each i, \(\nu _i=p(\cdot \mid W_i)=p(\cdot \mid U_i)\). (The last equality follows from Lemma 3.2.) The CPS p satisfies the following properties: For each \(i\le j\), \(p(E \mid U_i) = p(E\mid W_i) = \nu _i(E) = 1\). For each \(i>j\), \(E \cap U_i=\emptyset \).

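Fix a conditioning event \(B\in \mathcal {B}\). Since \(\mathcal {B}\) is a finite algebra, B is a union of atoms. Suppose \(E\cap B \ne \emptyset \). Then there exists some \(U_i \subseteq B\) with \(E\cap U_i \ne \emptyset \). Since \(E\cap U_i=\emptyset \) for \(i>j\), any such i satisfies \(i\le j\), and hence \(p(E \mid U_i)=1\). Let i be the least index with \(U_i\subseteq B\) and \(E\cap U_i \ne \emptyset \). No \(U_k\) with \(k<i\) is contained in B. For \(k<i\le j\) we have \(\nu _k(E\cap U_k)=1\), so \(E\cap U_k\ne \emptyset \), and a \(U_k\subseteq B\) would contradict the minimality of i. Hence \(B\subseteq W_i=\Omega \backslash \bigcup _{k<i}U_k\). Using condition (iii) of a CPS,

\[ p(E\cap B\mid W_i)=p(E\cap B\mid B)\,p(B\mid W_i)=p(E\mid B)\,p(B\mid W_i). \]

We have that \(p(U_i\mid W_i)=\nu _i(U_i)=1\). Since \(U_i\subseteq B\), it follows that \(p(B\mid W_i)=1\). Also,

\[ p(E\cap B\mid W_i)=p(E\cap B\mid U_i)\ge p(E\cap U_i\mid U_i)=p(E\mid U_i)=1, \]

where the equality follows from Lemma 3.2 and the inequality follows from the fact that \(U_i\subseteq B\). But then,

\[ 1=p(E\cap B\mid W_i)=p(E\mid B)\,p(B\mid W_i)=p(E\mid B), \]

or \(p(E\mid B)=1\). \(\square \)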

4 From LPS’s to CPS’s

Section 3 showed how we can map a given finitary CPS to an LPS, via a mapping f. The inverse image of f gives a correspondence from LPS’s to finitary CPS’s. Importantly, for a given LPS \(\sigma \), there may be multiple finitary CPS’s that map to \(\sigma \). However, if two different finitary CPS’s map to the same LPS, they must be associated with different conditioning events. This reflects the fact that LPS’s do not come with a fixed set of conditioning events.

This section is concerned with a converse. For a given LPS \(\sigma \), we look for conditioning events \(\mathcal {B}\) so that there exists a CPS \(p \in \mathcal {C_B}\) associated with \(\sigma \). The key will turn out to be whether or not the conditioning events are covered by the given LPS.

Definition 4.1

An LPS \(\sigma =(\nu _0,\ldots ,\nu _{n-1})\) covers \(\mathcal {B}\) if, for each \(B\in \mathcal {B}\), there is an \(i<n\) with \(\nu _i(B)>0\).

Write \(\mathcal {L_B}\) for the set of all LPS’s that cover \(\mathcal {B}\).
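For a finite state space, Definition 4.1 can be checked by enumeration. The Python sketch below checks whether an LPS covers every nonempty subset of a four-point space. The dictionary representation and the helper names are our own and are for illustration only. An LPS whose measures miss a point fails to cover the singleton at that point.

```python
from fractions import Fraction
from itertools import combinations


def prob(mu, event):
    """Mass that a finitely supported measure (dict: point -> Fraction) gives to an event."""
    return sum((m for pt, m in mu.items() if pt in event), Fraction(0))


def covers(sigma, conditioning_events):
    """Definition 4.1: each conditioning event gets positive mass under some measure of sigma."""
    return all(any(prob(mu, B) > 0 for mu in sigma) for B in conditioning_events)


def nonempty_subsets(omega):
    """All nonempty subsets of a finite set, as frozensets."""
    items = sorted(omega)
    return [frozenset(c) for r in range(1, len(items) + 1) for c in combinations(items, r)]


omega = {"a", "b", "c", "d"}
full_support = ({"a": Fraction(1, 2), "c": Fraction(1, 2)},
                {"b": Fraction(1, 2), "d": Fraction(1, 2)})
not_full = ({"a": Fraction(1, 2), "c": Fraction(1, 2)},)
print(covers(full_support, nonempty_subsets(omega)))  # True
print(covers(not_full, nonempty_subsets(omega)))      # False: {b} gets probability 0
```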

To better understand this definition, notice that, if \(\Omega \) is finite (or, more generally, countable with the discrete topology), then every full-support LPS covers any set of conditioning events \(\mathcal {B}\). However, if \(\Omega \) is uncountable, this need not hold. The following example illustrates this point.

Example 4.1

Let \(\Omega =[0,1]\) and let \(\mathcal {A}\) be the Borel \(\sigma \)-algebra. Let \(\mathcal {B}=\{\Omega , [0,1), \{ 1 \} \}\). Consider the LPS \(\sigma =(\mu _{0})\), where \(\mu _0\) is the uniform distribution on \(\Omega \). Note, \(\sigma \) has full support, but \(\sigma \) does not cover \(\mathcal {B}\), since \(\mu _0(\{1\})=0\).

What drives Example 4.1 is that the conditioning event \(\{1\}\) is not open. Call \(\mathcal {B}\) open if each \(B \in \mathcal {B}\) is open.

Lemma 4.1

Let \(\mathcal {B}\) be open. Then every full-support LPS covers \(\mathcal {B}\).

Proof

Suppose not, i.e., there exists some full-support LPS \(\sigma =(\mu _{0},\ldots ,\mu _{n-1})\) and some open set \(B \in \mathcal {B}\) so that, for each i, \(\mu _{i}(B)=0\). Since \(\sigma \) has full support and B is nonempty, there exists i so that \(B \cap \mathrm {Supp\,}\mu _{i} \ne \emptyset \). Using the fact that B is open, \(X = \mathrm {Supp\,}\mu _{i} \backslash B \) is a closed set with \(X \subsetneq \mathrm {Supp\,}\mu _{i}\) and \(\mu _{i}(X)=1\). This contradicts \(\mathrm {Supp\,}\mu _{i}\) being the smallest closed set with \(\mu _i\)-probability 1. \(\square \)

4.1 The natural map

We begin by defining a function \(g_\mathcal {B}\) from the LPS’s that cover \(\mathcal {B}\) to the set of CPS’s on \(\mathcal {B}\). To do so, fix an LPS \(\sigma =(\nu _0,\ldots ,\nu _{n-1})\in \mathcal {L_B}\). Define \(g_{\mathcal {B}}(\sigma )\) to be the mapping \(p:\mathcal {A}\times \mathcal {B}\rightarrow [0,1]\) that satisfies the following: For each \(B\in \mathcal {B}\), \(p(\cdot \mid B)=\nu _k(\cdot \mid B)\), where k is the least integer such that \(\nu _k(B)>0\). To show that \(g_{\mathcal {B}}\) maps \(\mathcal {L}_{\mathcal {B}}\) to \(\mathcal {C}_{\mathcal {B}}\), it suffices to show that \(g_{\mathcal {B}}(\sigma )\) is a CPS.
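For finitely supported measures, the definition of \(g_{\mathcal {B}}\) translates directly into code. The Python sketch below makes three representation choices of our own. A measure is a dictionary from points to exact fractions. Conditioning events are frozensets. A CPS is stored as a dictionary that sends each conditioning event B to the measure \(p(\cdot \mid B)\).

```python
from fractions import Fraction


def prob(mu, event):
    """Mass that a finitely supported measure (dict: point -> Fraction) gives to an event."""
    return sum((m for pt, m in mu.items() if pt in event), Fraction(0))


def conditional(mu, B):
    """The conditional measure mu(. | B), assuming mu(B) > 0."""
    mass = prob(mu, B)
    return {pt: m / mass for pt, m in mu.items() if pt in B and m > 0}


def g(sigma, conditioning_events):
    """Sketch of g_B: for each B, condition the first measure of sigma that gives B positive mass."""
    p = {}
    for B in conditioning_events:
        k = next((i for i, mu in enumerate(sigma) if prob(mu, B) > 0), None)
        if k is None:
            raise ValueError("sigma does not cover the conditioning events")
        p[frozenset(B)] = conditional(sigma[k], B)
    return p


# A four-point example. The conditioning events are the nonempty sets of the
# algebra with atoms {a, b} and {c, d}.
sigma = ({"a": Fraction(1, 2), "c": Fraction(1, 2)},
         {"b": Fraction(1, 2), "d": Fraction(1, 2)})
events = [frozenset("abcd"), frozenset("ab"), frozenset("cd")]
p = g(sigma, events)
print(p[frozenset("ab")])    # {'a': Fraction(1, 1)}
print(p[frozenset("abcd")])  # {'a': Fraction(1, 2), 'c': Fraction(1, 2)}
```

Here the second measure is never used, because the first measure already gives positive probability to every conditioning event.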

Lemma 4.2

For each LPS \(\sigma =(\nu _0,\ldots ,\nu _{n-1})\in \mathcal {L_B}\), \(g_{\mathcal {B}}(\sigma )\) is a CPS on \(\mathcal {B}\), i.e., \(g_{\mathcal {B}}(\sigma )\in \mathcal {C}_{\mathcal {B}}\).

Proof

Write \(g_{\mathcal {B}}(\sigma ) = p\). It is immediate that p satisfies conditions (i)–(ii) of a CPS. To show condition (iii), fix \(A\in \mathcal {A}\) and \(B,C\in \mathcal {B}\) with \(A\subseteq B\subseteq C\). Let j be the minimum i with \(\nu _i(B)>0\) and let k be the minimum i with \(\nu _i(C)>0\). (These are well defined since \(\sigma \) covers \(\mathcal {B}\).) Note \(k\le j\) since \(B\subseteq C\). First suppose that \(\nu _k(B)>0\). Then \(j=k\), and using the fact that \(A\subseteq B \subseteq C\),

\[ p(A\mid C)=\frac{\nu _k(A)}{\nu _k(C)}=\frac{\nu _k(A)}{\nu _k(B)}\cdot \frac{\nu _k(B)}{\nu _k(C)}=p(A\mid B)\,p(B\mid C). \]

Next suppose that \(\nu _k(B)=0\). Then \(\nu _k(A)=0\) and \(p(B\mid C)=\nu _k(B)/\nu _k(C)=0\), so that \(p(A\mid C)=0=p(A\mid B)p(B\mid C)\). \(\square \)
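Lemma 4.2 can be sanity-checked by brute force on a small finite example. The Python sketch below uses a dictionary representation of our own choosing. It builds \(g_{\mathcal {B}}(\sigma )\) for an LPS on a four-point space, taking every nonempty subset as a conditioning event. It then verifies \(p(B\mid B)=1\) and \(p(A\mid C)=p(A\mid B)\,p(B\mid C)\) for all \(A\subseteq B\subseteq C\). The check also rejects a hand-made assignment of conditional probabilities that violates condition (iii).

```python
from fractions import Fraction
from itertools import combinations


def prob(mu, event):
    """Mass that a finitely supported measure (dict: point -> Fraction) gives to an event."""
    return sum((m for pt, m in mu.items() if pt in event), Fraction(0))


def conditional(mu, B):
    """The conditional measure mu(. | B), assuming mu(B) > 0."""
    mass = prob(mu, B)
    return {pt: m / mass for pt, m in mu.items() if pt in B and m > 0}


def g(sigma, conditioning_events):
    """For each B, condition the first measure of sigma that gives B positive mass."""
    p = {}
    for B in conditioning_events:
        k = next((i for i, mu in enumerate(sigma) if prob(mu, B) > 0), None)
        if k is None:
            raise ValueError("sigma does not cover the conditioning events")
        p[frozenset(B)] = conditional(sigma[k], B)
    return p


def subsets(s, min_size=0):
    """All subsets of a finite set with at least min_size elements, as frozensets."""
    items = sorted(s)
    return [frozenset(c) for r in range(min_size, len(items) + 1)
            for c in combinations(items, r)]


def is_cps(p):
    """Brute-force check of p(B|B) = 1 and p(A|C) = p(A|B) p(B|C) whenever A ⊆ B ⊆ C."""
    for B, pB in p.items():
        if prob(pB, B) != 1:
            return False
        for C, pC in p.items():
            if B <= C and any(prob(pC, A) != prob(pB, A) * prob(pC, B) for A in subsets(B)):
                return False
    return True


omega = {1, 2, 3, 4}
sigma = ({1: Fraction(1, 3), 2: Fraction(2, 3)}, {3: Fraction(1)}, {4: Fraction(1)})
p = g(sigma, subsets(omega, min_size=1))
print(is_cps(p))  # True

# Hand-made conditional probabilities that violate condition (iii):
bad = {frozenset({1, 2}): {1: Fraction(1, 2), 2: Fraction(1, 2)},
       frozenset({1, 2, 3}): {1: Fraction(1, 2), 3: Fraction(1, 2)}}
print(is_cps(bad))  # False
```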

The mapping \(g_{\mathcal {B}}\) goes from the set of LPS’s that cover \(\mathcal {B}\) to the set of CPS’s on \(\mathcal {B}\). It is defined even if \(\mathcal {B}\) is not a finite algebra. However, as we will now see, when \(\mathcal {B}\) is a finite algebra, the mapping \(g_{\mathcal {B}}\) can be seen as an “extended inverse” of the mapping \(f_{\mathcal {B}}\).

To see this, we begin with the observation that \(f_{\mathcal {B}}\) maps CPS’s on a finite algebra \(\mathcal {B}\) to LPS’s that cover \(\mathcal {B}\).

Lemma 4.3

Fix a finite algebra of conditioning events \(\mathcal {B}\). The function \(f_{\mathcal {B}}\) maps \(\mathcal {C}_{\mathcal {B}}\) into \(\mathcal {L_B}\).

Proof

Fix \(p\in \mathcal {C_B}\) and let \(f_{\mathcal {B}}(p)=(\mu _0,\ldots ,\mu _{n-1})\). By Lemma 3.4, it suffices to show that \(f_{\mathcal {B}}(p)=(\mu _0,\ldots ,\mu _{n-1})\) covers \(\mathcal {B}\).

Since the sets \(V_0,\ldots ,V_{n-1}\) belong to \(\mathcal {B}\) and form a partition of \(\Omega \) (Lemma 3.3), each atom B of \(\mathcal {B}\) is contained in exactly one of the sets \(V_i\). Fix one atom B and assume \(B \subseteq V_{i}\). By the definition of \(f_{\mathcal {B}}(p)\), \(\mu _j(B)>0\) if and only if \(j=i\). Every nonempty set in \(\mathcal {B}\) contains an atom, so each conditioning event receives positive probability under some \(\mu _i\). Thus \(f_{\mathcal {B}}(p)\in \mathcal {L_B}\). \(\square \)

While \(f_{\mathcal {B}}\) maps CPS’s on \(\mathcal {B}\) into LPS’s that cover \(\mathcal {B}\), it does not map onto all LPS’s that cover \(\mathcal {B}\). The following example illustrates this point.

Example 4.2

Let \(\Omega =\{a,b,c,d\}\) and \(\mathcal {B}\) be the subalgebra with atoms \(\{a,b\}\) and \(\{c,d\}\). Let \(\sigma =(\mu _0,\mu _1)\) be the full-support LPS with \(\mu _0(a)=\mu _0(c)=1/2\) and \(\mu _1(b)=\mu _1(d)=1/2\). Note, \(\sigma \) covers \(\mathcal {B}\). Yet, there is no \(p\in \mathcal {C}_{\mathcal {B}}\) such that \(f_{\mathcal {B}}(p)=\sigma \). If there were such a p, then \(p(\cdot \mid \Omega ) = \mu _{0}\). But, because \(\mu _0\) assigns positive probability to both atoms, \(f_{\mathcal {B}}(p) = (\mu _{0})\). This is a strict subsequence of \(\sigma \), so \(f_{\mathcal {B}}(p)\ne \sigma \).
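The computation in Example 4.2 can be replayed in code. The definition of \(f_{\mathcal {B}}\) from Section 3 is not reproduced in this excerpt, so the function f below is a hypothetical reconstruction. It is consistent with the example and with the proof of Lemma 4.3: it starts from \(p(\cdot \mid \Omega )\), then repeatedly conditions on the union of the atoms that the measures found so far give probability zero. The sketch applies \(g_{\mathcal {B}}\) to the LPS of the example and then applies this f, recovering only \((\mu _0)\).

```python
from fractions import Fraction
from itertools import combinations


def prob(mu, event):
    """Mass that a finitely supported measure (dict: point -> Fraction) gives to an event."""
    return sum((m for pt, m in mu.items() if pt in event), Fraction(0))


def conditional(mu, B):
    """The conditional measure mu(. | B), assuming mu(B) > 0."""
    mass = prob(mu, B)
    return {pt: m / mass for pt, m in mu.items() if pt in B and m > 0}


def g(sigma, conditioning_events):
    """For each B, condition the first measure of sigma that gives B positive mass."""
    p = {}
    for B in conditioning_events:
        k = next((i for i, mu in enumerate(sigma) if prob(mu, B) > 0), None)
        if k is None:
            raise ValueError("sigma does not cover the conditioning events")
        p[frozenset(B)] = conditional(sigma[k], B)
    return p


def algebra_events(atoms):
    """Nonempty sets of the finite algebra generated by a partition into atoms."""
    atoms = [frozenset(A) for A in atoms]
    return [frozenset().union(*c) for r in range(1, len(atoms) + 1)
            for c in combinations(atoms, r)]


def f(p, atoms):
    """HYPOTHETICAL reconstruction of f_B: record p(. | W) for W the union of the atoms
    not yet charged, drop the atoms this measure charges, and repeat."""
    remaining = [frozenset(A) for A in atoms]
    lps = []
    while remaining:
        mu = p[frozenset().union(*remaining)]
        lps.append(mu)
        remaining = [A for A in remaining if prob(mu, A) == 0]
    return lps


atoms = [frozenset("ab"), frozenset("cd")]
sigma = ({"a": Fraction(1, 2), "c": Fraction(1, 2)},
         {"b": Fraction(1, 2), "d": Fraction(1, 2)})
p = g(sigma, algebra_events(atoms))
print(f(p, atoms))  # [{'a': Fraction(1, 2), 'c': Fraction(1, 2)}]: only mu_0 survives
```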

Proposition 4.1 makes precise the sense in which \(g_{\mathcal {B}}\) can nonetheless be seen as an inverse of \(f_{\mathcal {B}}\).

Proposition 4.1

Fix a finite algebra of conditioning events \(\mathcal {B}\).

(i) For each \(p\in \mathcal {C_B}\), \(g_\mathcal {B}(f_{\mathcal {B}}(p))=p\).

(ii) For each \(\sigma \in \mathcal {L_B}\), \(f_{\mathcal {B}}(g_\mathcal {B}(\sigma ))\) is a subsequence of \(\sigma \).

(iii) For each \(\sigma \in f_\mathcal {B}(\mathcal {C_B})\), \(f_{\mathcal {B}}(g_\mathcal {B}(\sigma ))=\sigma \).

For a given CPS p on a finite algebra \(\mathcal {B}\), \(f_{\mathcal {B}}\) maps p to an LPS \(\sigma \) that covers \(\mathcal {B}\). Part (i) says that \(g_{\mathcal {B}}\) maps \(\sigma \) back to p. Conversely, for a given LPS \(\sigma \) that covers \(\mathcal {B}\), \(g_{\mathcal {B}}\) maps \(\sigma \) to a CPS p on \(\mathcal {B}\). Part (ii) says that \(f_{\mathcal {B}}\) maps p back to a subsequence of \(\sigma \). Part (iii) adds that this subsequence can be a strict subsequence of \(\sigma \) only if \(\sigma \) is not in the image of \(f_\mathcal {B}\).

Proof of Proposition 4.1

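Begin with part (i). Fix some CPS p on \(\mathcal {B}\) and write \(f_{\mathcal {B}}(p)=(\mu _0,\ldots ,\mu _{n-1})\). Note that, for each atom A of \(\mathcal {B}\), there is an i with \(\mu _i(A)>0\). So, for any event B in \(\mathcal {B}\), there is a minimum i with \(\mu _i(B)>0\). For this i, we have \(\mu _i(B)=p(B\mid W_i)\), and, by the minimality of i, \(B\subseteq W_i\). Then, for any event E,

\[ g_{\mathcal {B}}(f_{\mathcal {B}}(p))(E\mid B)=\mu _i(E\mid B)=\frac{p(E\cap B\mid W_i)}{p(B\mid W_i)}, \]

or

\[ g_{\mathcal {B}}(f_{\mathcal {B}}(p))(E\mid B)\,p(B\mid W_i)=p(E\cap B\mid W_i). \]

But note that, by condition (iii) of a CPS,

\[ p(E\cap B\mid W_i)=p(E\mid B)\,p(B\mid W_i). \]

Since \(p(B\mid W_i)=\mu _i(B)>0\), it follows that \(g_{\mathcal {B}}(f_{\mathcal {B}}(p))(E\mid B)=p(E\mid B)\).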

Now we turn to part (ii). Let \(\sigma =(\nu _0,\ldots ,\nu _{n-1})\). From the definitions of \(f_{\mathcal {B}}\) and \(g_\mathcal {B}\), \(f_{\mathcal {B}}(g_\mathcal {B}(\sigma ))\) is the subsequence of \(\sigma \) consisting of those \(\nu _i\) such that, for some atom B of \(\mathcal {B}\), i is the least integer with \(\nu _i(B)>0\).

Finally, we turn to part (iii). Fix some \(\sigma \in f_\mathcal {B}(\mathcal {C_B})\). Then there exists some CPS \(p \in \mathcal {C_B}\) so that \( f_\mathcal {B}(p)=\sigma \). By part (i), \(g_\mathcal {B}(\sigma ) = g_\mathcal {B}(f_\mathcal {B}(p)) = p\). So, \(f_\mathcal {B}(g_\mathcal {B}(\sigma )) = f_\mathcal {B}(p) = \sigma \). \(\square \)
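Proposition 4.1 can be checked on a small example. The Python sketch below again uses a hypothetical reconstruction f of \(f_{\mathcal {B}}\), because Section 3 is not reproduced in this excerpt. It pairs f with a direct implementation of \(g_{\mathcal {B}}\). The atoms, the measures, and the helper names are illustrative choices. The LPS has three measures, but its third measure is never the first to charge a conditioning event, so \(f_{\mathcal {B}}(g_{\mathcal {B}}(\sigma ))\) drops it.

```python
from fractions import Fraction
from itertools import combinations


def prob(mu, event):
    """Mass that a finitely supported measure (dict: point -> Fraction) gives to an event."""
    return sum((m for pt, m in mu.items() if pt in event), Fraction(0))


def conditional(mu, B):
    """The conditional measure mu(. | B), assuming mu(B) > 0."""
    mass = prob(mu, B)
    return {pt: m / mass for pt, m in mu.items() if pt in B and m > 0}


def g(sigma, conditioning_events):
    """For each B, condition the first measure of sigma that gives B positive mass."""
    p = {}
    for B in conditioning_events:
        k = next((i for i, mu in enumerate(sigma) if prob(mu, B) > 0), None)
        if k is None:
            raise ValueError("sigma does not cover the conditioning events")
        p[frozenset(B)] = conditional(sigma[k], B)
    return p


def algebra_events(atoms):
    """Nonempty sets of the finite algebra generated by a partition into atoms."""
    atoms = [frozenset(A) for A in atoms]
    return [frozenset().union(*c) for r in range(1, len(atoms) + 1)
            for c in combinations(atoms, r)]


def f(p, atoms):
    """HYPOTHETICAL reconstruction of f_B: condition on the atoms not yet charged, repeat."""
    remaining = [frozenset(A) for A in atoms]
    lps = []
    while remaining:
        mu = p[frozenset().union(*remaining)]
        lps.append(mu)
        remaining = [A for A in remaining if prob(mu, A) == 0]
    return lps


def is_subsequence(short, long):
    """True if the items of short appear in long in the same order."""
    it = iter(long)
    return all(any(x == y for y in it) for x in short)


atoms = [frozenset("a"), frozenset("b"), frozenset("cd")]
events = algebra_events(atoms)
sigma = ({"a": Fraction(1)}, {"b": Fraction(1, 2), "c": Fraction(1, 2)}, {"d": Fraction(1)})

p = g(sigma, events)   # a CPS on the finite algebra
tau = f(p, atoms)      # its image under the reconstruction of f_B

print(g(tau, events) == p)                               # part (i): True
print(is_subsequence(tau, sigma), len(tau), len(sigma))  # part (ii): True 2 3
print(f(g(tau, events), atoms) == tau)                   # part (iii): True
```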

4.2 Full support

This section revisits the relationship between full-support CPS’s and full-support LPS’s when the set of conditioning events is both a finite algebra and consists of open sets. In that case, each conditioning event is clopen, since its complement also belongs to the algebra and is therefore open.

Recall, for a given full-support LPS \(\sigma \), there exists a finite algebra \(\mathcal {B}\) and some full-support CPS \(p \in \mathcal {C_B}\) so that \(f(p)=f_{\mathcal {B}}(p)=\sigma \). (See Proposition 3.2.) However, it is not the case that for each finite algebra \(\mathcal {B}\) and each \(p \in \mathcal {C_{B}}\) with \(p \in f^{-1}(\sigma )\), p is a full-support CPS on \(\mathcal {B}\). (See Example 3.2.) The next lemma establishes that the conclusion does hold when the sets in the finite algebra \(\mathcal {B}\) are open.

Lemma 4.4

Let \(\mathcal {B}\) be an open finite algebra. If \(p\in \mathcal {C_B}\) and \(f_{\mathcal {B}}(p)\) has full support, then p has full support.

Proof

Fix \(f_{\mathcal {B}}(p)=(\mu _0,\ldots ,\mu _{n-1})\), where \((\mu _0,\ldots ,\mu _{n-1})\) has full support. Let B be an atom of \(\mathcal {B}\), and let U be a nonempty open subset of B. We must show that \(p(U\mid B)>0\).

By Lemma 3.3, there is a unique \(i<n\) such that \(B\subseteq V_i\). Since \(V_i\) is clopen and \(\mu _j(V_i)=0\) when \(j\ne i\), \(\mathrm {Supp\,}\mu _j\cap V_i=\emptyset \) when \(j\ne i\). Therefore \(V_i\subseteq \mathrm {Supp\,}\mu _i\). By definition, \(\mu _i=p(\cdot \mid V_i)\), so \(V_i\subseteq \mathrm {Supp\,}p(\cdot \mid V_i)\). It follows that \(p(U\mid V_i)>0\). Since \(U\subseteq B\subseteq V_i\), we have \(p(U\mid V_i)=p(U\mid B)p(B\mid V_i)\), and hence \(p(U\mid B)>0\) as required. \(\square \)

We now consider the reverse direction. Given a CPS on \(\mathcal {B}\) (whether or not it has full support), is there a full-support LPS that \(g_{\mathcal {B}}\) maps to that CPS? The answer is yes if \(\mathcal {B}\) is an open finite algebra.

Lemma 4.5

Let \(\mathcal {B}\) be an open finite algebra. Then \(g_\mathcal {B}\) maps the set of full-support LPS’s that cover \(\mathcal {B}\) surjectively onto \(\mathcal {C}_{\mathcal {B}}\).

Proof

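Take \(p\in \mathcal {C_B}\) and let \(\sigma =f_{\mathcal {B}}(p)\). By Lemma 4.3, \(\sigma \in \mathcal {L_B}\). If \(\sigma \) has full support, then we are done since \(g_\mathcal {B}(\sigma )=p\). (See Proposition 4.1(i).) So, suppose that \(\sigma =(\mu _0,\ldots ,\mu _{n-1})\) with \(S = \Omega \backslash (\bigcup _{i<n}\mathrm {Supp\,}\mu _i) \ne \emptyset \). Note, S is an open set. Since \(\Omega \) is Polish, S has a countable dense subset U. We may choose a probability measure \(\mu _n\) such that \(\mu _n(U)=1\) and \(\mu _n(\{a\})>0\) for each \(a\in U\). Then \(S\subseteq \mathrm {Supp\,}\mu _n\). Since U is disjoint from the supports of \(\mu _0,\ldots ,\mu _{n-1}\), the measures \(\mu _0,\ldots ,\mu _n\) are mutually singular. So \(\tau =(\mu _0, \ldots ,\mu _n)\) is an LPS with full support. Moreover, since \(\sigma \) covers \(\mathcal {B}\), each \(B\in \mathcal {B}\) receives positive probability from some \(\mu _i\) with \(i<n\). The first measure in \(\tau \) that gives B positive probability is therefore the same as in \(\sigma \). Hence \(g_\mathcal {B}(\tau )=g_\mathcal {B}(\sigma )=g_\mathcal {B}(f_\mathcal {B}(p))=p\). \(\square \)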
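In a finite space with the discrete topology, the extra measure \(\mu _n\) in the proof of Lemma 4.5 can be any measure that gives positive probability to every point of \(\Omega \backslash \bigcup _{i<n}\mathrm {Supp\,}\mu _i\). The Python sketch below uses the uniform measure on those points, an arbitrary choice. It starts from a CPS that does not have full support and checks that appending the new measure leaves \(g_{\mathcal {B}}\) unchanged. The function f is a hypothetical reconstruction of \(f_{\mathcal {B}}\) from Section 3, which is not reproduced here. All other names are illustrative.

```python
from fractions import Fraction
from itertools import combinations


def prob(mu, event):
    """Mass that a finitely supported measure (dict: point -> Fraction) gives to an event."""
    return sum((m for pt, m in mu.items() if pt in event), Fraction(0))


def support(mu):
    """Finite space, discrete topology: the support is the set of points with positive mass."""
    return {pt for pt, m in mu.items() if m > 0}


def conditional(mu, B):
    """The conditional measure mu(. | B), assuming mu(B) > 0."""
    mass = prob(mu, B)
    return {pt: m / mass for pt, m in mu.items() if pt in B and m > 0}


def g(sigma, conditioning_events):
    """For each B, condition the first measure of sigma that gives B positive mass."""
    p = {}
    for B in conditioning_events:
        k = next((i for i, mu in enumerate(sigma) if prob(mu, B) > 0), None)
        if k is None:
            raise ValueError("sigma does not cover the conditioning events")
        p[frozenset(B)] = conditional(sigma[k], B)
    return p


def algebra_events(atoms):
    """Nonempty sets of the finite algebra generated by a partition into atoms."""
    atoms = [frozenset(A) for A in atoms]
    return [frozenset().union(*c) for r in range(1, len(atoms) + 1)
            for c in combinations(atoms, r)]


def f(p, atoms):
    """HYPOTHETICAL reconstruction of f_B: condition on the atoms not yet charged, repeat."""
    remaining = [frozenset(A) for A in atoms]
    lps = []
    while remaining:
        mu = p[frozenset().union(*remaining)]
        lps.append(mu)
        remaining = [A for A in remaining if prob(mu, A) == 0]
    return lps


def extend_to_full_support(sigma, omega):
    """Append one measure (uniform, for definiteness) on the points that no support reaches."""
    covered = set().union(*(support(mu) for mu in sigma))
    uncovered = sorted(set(omega) - covered)
    if not uncovered:
        return list(sigma)
    return list(sigma) + [{pt: Fraction(1, len(uncovered)) for pt in uncovered}]


omega = set("abcd")
atoms = [frozenset("ab"), frozenset("cd")]
events = algebra_events(atoms)
p = g(({"a": Fraction(1)}, {"c": Fraction(1)}), events)  # p(. | {a, b}) gives {b} probability 0
sigma = f(p, atoms)                                      # point masses at a and at c
tau = extend_to_full_support(sigma, omega)
print(tau[-1])               # {'b': Fraction(1, 2), 'd': Fraction(1, 2)}
print(g(tau, events) == p)   # True
```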

4.3 From assumption to strong belief

We first show that assumption goes over to strong belief under the mapping \(g_\mathcal {B}\), provided the conditioning events are open.

Proposition 4.2

Fix some open \(\mathcal {B}\). If \(\sigma \in \mathcal {L_B}\) and E is assumed under \(\sigma \), then E is strongly believed under \(g_\mathcal {B}(\sigma )\).

Proof

Suppose E is assumed under \(\sigma =(\nu _0,\ldots ,\nu _{n-1})\) at level j. Let \(B\in \mathcal {B}\) with \(E\cap B\ne \emptyset \). By condition (i) of assumption, for each \(i\le j\), \(\nu _i(B)=\nu _i(B\cap E)\). So, using conditions (ii)–(iii) of assumption and the fact that B is open, there is some \(i\le j\) with \(\nu _i(B)>0\). Indeed, some point of \(E\cap B\) lies in \(\mathrm {Supp\,}\nu _i\) for some \(i\le j\), and an open set that meets the support of \(\nu _i\) has positive \(\nu _i\)-probability. Let k be the minimum i with \(\nu _i(B)>0\). Then \(k\le j\) and \(g_\mathcal {B}(\sigma )(\cdot \mid B)=\nu _k(\cdot \mid B)\). We also have

\[ \nu _k(E\cap B)=\nu _k(B), \]

so that \(g_\mathcal {B}(\sigma )(E\mid B)=\nu _k(E\mid B)=1\) as required. \(\square \)
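With a finite state space and the discrete topology, every subset is open, so Proposition 4.2 applies with \(\mathcal {B}\) equal to the set of all nonempty subsets. The Python sketch below enumerates all events assumed under a three-level LPS and checks that each one is strongly believed under \(g_{\mathcal {B}}(\sigma )\). It also exhibits an event that is neither assumed nor strongly believed. The representation and the helper names are our own choices.

```python
from fractions import Fraction
from itertools import combinations


def prob(mu, event):
    """Mass that a finitely supported measure (dict: point -> Fraction) gives to an event."""
    return sum((m for pt, m in mu.items() if pt in event), Fraction(0))


def support(mu):
    """Finite space, discrete topology: the support is the set of points with positive mass."""
    return {pt for pt, m in mu.items() if m > 0}


def conditional(mu, B):
    """The conditional measure mu(. | B), assuming mu(B) > 0."""
    mass = prob(mu, B)
    return {pt: m / mass for pt, m in mu.items() if pt in B and m > 0}


def g(sigma, conditioning_events):
    """For each B, condition the first measure of sigma that gives B positive mass."""
    p = {}
    for B in conditioning_events:
        k = next((i for i, mu in enumerate(sigma) if prob(mu, B) > 0), None)
        if k is None:
            raise ValueError("sigma does not cover the conditioning events")
        p[frozenset(B)] = conditional(sigma[k], B)
    return p


def assumption_level(sigma, event):
    """Level j at which event is assumed under sigma, or None if it is not assumed."""
    n = len(sigma)
    for j in range(n):
        ones = all(prob(sigma[k], event) == 1 for k in range(j + 1))
        zeros = all(prob(sigma[k], event) == 0 for k in range(j + 1, n))
        covered = set(event) <= set().union(*(support(sigma[k]) for k in range(j + 1)))
        if ones and zeros and covered:
            return j
    return None


def strongly_believes(p, event):
    """p(E | B) = 1 for every conditioning event B that meets E."""
    return all(prob(pB, event) == 1 for B, pB in p.items() if B & set(event))


def nonempty_subsets(omega):
    """All nonempty subsets of a finite set, as frozensets."""
    items = sorted(omega)
    return [frozenset(c) for r in range(1, len(items) + 1) for c in combinations(items, r)]


omega = set("abcd")
sigma = ({"a": Fraction(1)}, {"b": Fraction(1, 2), "c": Fraction(1, 2)}, {"d": Fraction(1)})
events = nonempty_subsets(omega)   # with the discrete topology every set is open
p = g(sigma, events)

assumed = [E for E in events if assumption_level(sigma, E) is not None]
print([sorted(E) for E in assumed])                   # [['a'], ['a', 'b', 'c'], ['a', 'b', 'c', 'd']]
print(all(strongly_believes(p, E) for E in assumed))  # True
print(strongly_believes(p, {"a", "b"}))               # False: p({a, b} | {b, c}) = 1/2
```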

As a corollary of Proposition 4.2 we get a partial converse to Proposition 3.3.

Corollary 4.1

Fix some \(\mathcal {B}\) that is an open finite algebra. If \(p \in \mathcal {C_{B}}\) and \(f_{\mathcal {B}}(p)\) assumes E, then p strongly believes E.

Corollary 4.2

Fix an open finite algebra \(\mathcal {B}\) and some \(p\in \mathcal {C_B}\) that has full support. Then E is assumed under f(p) if and only if E is strongly believed under p.

Corollary 4.1 is an immediate implication of Lemma 4.3, Proposition 4.1(i) and Proposition 4.2. Corollary 4.2 is an immediate implication of Proposition 3.3 and Corollary 4.1. To better understand Corollary 4.1, it will be useful to compare it with Example 3.4. There, we began with a finite algebra \(\mathcal {B}\) and a CPS p on \(\mathcal {B}\). We saw that \(f_{\mathcal {B}}(p)\) assumed an event even though p did not strongly believe the same event. Importantly, the conditioning events there were not open.

Corollary 4.2 might suggest a converse to Proposition 4.2. However, this proposition cannot be improved to say: An event E is assumed under \(\sigma \) if and only if it is strongly believed under \(g_\mathcal {B}(\sigma )\). The next example illustrates this point.

Example 4.3

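Take \(\Omega =(0,2)\) and \(\sigma =(\nu _0,\nu _1,\nu _2)\) where \(\nu _0\) is uniform on (0, 1), \(\nu _1(\{1\})=1\), and \(\nu _2\) is uniform on (1, 2). Let \(E=(0,1)\cup (1,2)\). Then E is not assumed under \(\sigma \), since \(\nu _0(E)=\nu _2(E)=1\) but \(\nu _1(E)=0\). But, for any open \(\mathcal {B}\), \(g_\mathcal {B}(\sigma )\) strongly believes E. To see this, fix an open \(\mathcal {B}\) and some \(B\in \mathcal {B}\) with \(B\cap [(0,1)\cup (1,2)]\ne \emptyset \). Write \(U_1=B\cap (0,1)\) and \(U_2=B\cap (1,2)\). Suppose \(U_1\ne \emptyset \). Then \(\nu _0(B)\ge \nu _0(U_1)>0\), so

\[ g_\mathcal {B}(\sigma )(E\mid B)=\nu _0(E\mid B)=\frac{\nu _0(E\cap B)}{\nu _0(B)}=1, \]

as required, since \(\nu _0(E)=1\). If \(U_1=\emptyset \), then \(U_2\ne \emptyset \). Moreover, \(1\notin B\), since an open set containing 1 meets (0, 1). So \(\nu _0(B)=\nu _1(B)=0\), while again \(\nu _2(B)\ge \nu _2(U_2)>0\), so

\[ g_\mathcal {B}(\sigma )(E\mid B)=\nu _2(E\mid B)=\frac{\nu _2(E\cap B)}{\nu _2(B)}=1, \]

as required, since \(\nu _2(E)=1\).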

The example shows that we may have a full-support LPS so that, for each open \(\mathcal {B}\), \(g_{\mathcal {B}}(\sigma )\) strongly believes E even though \(\sigma \) does not assume E. That said, if \(\mathcal {B}\) is also a finite algebra, we will be able to find some LPS \(\tau \in g_{\mathcal {B}}^{-1}(\{ g_{\mathcal {B}}(\sigma ) \})\), so that \(\tau \) does assume E. We next show this result.

Proposition 4.3

Suppose \(\mathcal {B}\) is an open finite algebra. Fix \(p\in \mathcal {C_B}\). Then E is strongly believed under p if and only if E is assumed under some full-support LPS \(\sigma \) such that \(g_\mathcal {B}(\sigma )=p\).

Proof

Fix \(\mathcal {B}\) that is an open finite algebra. First suppose that E is assumed under a full-support LPS \(\sigma \) with \(g_\mathcal {B}(\sigma )=p\). That LPS covers \(\mathcal {B}\). (See Lemma 4.1.) By Proposition 4.2, E is strongly believed under \(g_\mathcal {B}(\sigma )=p\).

Now suppose that E is strongly believed under p. Let

\[ f_\mathcal {B}(p)=(\mu _0,\ldots ,\mu _{n-1}), \]

and let \(V_0,\ldots ,V_{n-1}\) be the associated partition of \(\Omega \) (Lemma 3.3). Then, there exists some \(j<n\) so that (i) \( E\subseteq \bigcup _{i=0}^j V_i\), and (ii) \(\mu _i(E\cap V_i)=1\) for each \(i\le j\). We will construct an LPS \(\tau \) which is a sequence of the form

\[ \tau =(\mu _0,\nu _0,\mu _1,\nu _1,\ldots ,\mu _j,\nu _j,\mu _{j+1},\ldots ,\mu _{n-1},\kappa ), \]

where some of the \(\nu _i\) and \(\kappa \) may be missing. The idea is that \(\nu _i\) will capture the part of \(E\cap V_i\) which is outside the support of \(\mu _i\), and \(\kappa \) will capture the part of \(\Omega \) outside the supports of the remainder of \(\tau \). For \(i\le j\), if \(E\cap V_i\subseteq \mathrm {Supp\,}\mu _i\), then \(\nu _i\) is missing. Otherwise, take a countable dense subset \(U_i\) of \((E\cap V_i)\backslash \mathrm {Supp\,}\mu _i\), and let \(\nu _i\) be a measure such that \(\nu _i(U_i)=1\) and \(\nu _i\) gives positive measure to each point of \(U_i\). Now let

\[ Y=\bigcup _{i<n}\mathrm {Supp\,}\mu _i\;\cup \;\bigcup _{i\le j}\mathrm {Supp\,}\nu _i, \]

where the second union ranges over the \(\nu _i\) that are present. If \(Y=\Omega \), \(\kappa \) is missing. Otherwise take a countable dense subset Z of \(\Omega \backslash Y\), and let \(\kappa \) be a measure such that \(\kappa (Z)=1\) and \(\kappa \) gives positive measure to each point of Z. Each \(U_i\) lies in \(V_i\) outside the support of \(\mu _i\), and Z lies outside Y. So \(\tau \) is a sequence of mutually singular measures, and hence is an LPS. Since Z is dense in \(\Omega \backslash Y\), we have \(\Omega \backslash Y\subseteq \mathrm {Supp\,}\kappa \), so \(\tau \) has full support. Also, \(E\subseteq \bigcup _{i=0}^j(\mathrm {Supp\,}\mu _i \cup \mathrm {Supp\,}\nu _i)\). Therefore E is assumed under \(\tau \). Finally, \(g_\mathcal {B}(\tau )=p\), because for each i and each atom \(B\subseteq V_i\), \(\mu _i\) is the first measure in the sequence \(\tau \) which gives positive measure to B. \(\square \)
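In the finite, discrete setting, the countable dense sets used in the proof of Proposition 4.3 can be taken to be the relevant finite sets themselves. The construction then becomes a short loop. The Python sketch below is hypothetical in two respects. First, f_with_partition is our reconstruction of \(f_{\mathcal {B}}\) and of the sets \(V_i\) from Section 3, which is not reproduced here. Second, we place each new measure \(\nu _i\) immediately after \(\mu _i\) and put \(\kappa \) at the end. The uniform weights are an arbitrary choice.

```python
from fractions import Fraction
from itertools import combinations


def prob(mu, event):
    return sum((m for pt, m in mu.items() if pt in event), Fraction(0))


def support(mu):
    return {pt for pt, m in mu.items() if m > 0}


def conditional(mu, B):
    mass = prob(mu, B)
    return {pt: m / mass for pt, m in mu.items() if pt in B and m > 0}


def g(sigma, events):
    """For each B, condition the first measure of sigma that gives B positive mass."""
    p = {}
    for B in events:
        k = next(i for i, mu in enumerate(sigma) if prob(mu, B) > 0)  # assumes sigma covers
        p[frozenset(B)] = conditional(sigma[k], B)
    return p


def algebra_events(atoms):
    return [frozenset().union(*c) for r in range(1, len(atoms) + 1)
            for c in combinations(atoms, r)]


def assumption_level(sigma, event):
    n = len(sigma)
    for j in range(n):
        if (all(prob(sigma[k], event) == 1 for k in range(j + 1))
                and all(prob(sigma[k], event) == 0 for k in range(j + 1, n))
                and set(event) <= set().union(*(support(sigma[k]) for k in range(j + 1)))):
            return j
    return None


def strongly_believes(p, event):
    return all(prob(pB, event) == 1 for B, pB in p.items() if B & set(event))


def f_with_partition(p, atoms):
    """HYPOTHETICAL reconstruction of f_B, also returning V_i = atoms first charged by mu_i."""
    remaining, mus, V = list(atoms), [], []
    while remaining:
        mu = p[frozenset().union(*remaining)]
        mus.append(mu)
        V.append(frozenset().union(*(A for A in remaining if prob(mu, A) > 0)))
        remaining = [A for A in remaining if prob(mu, A) == 0]
    return mus, V


def uniform(points):
    points = sorted(points)
    return {pt: Fraction(1, len(points)) for pt in points}


def assuming_lps(p, atoms, omega, event):
    """Finite analogue of the construction of tau in the proof of Proposition 4.3."""
    if not strongly_believes(p, event):
        raise ValueError("event is not strongly believed under p")
    E = frozenset(event)
    mus, V = f_with_partition(p, atoms)
    j = max(i for i, Vi in enumerate(V) if E & Vi)  # E is contained in V_0 ∪ ... ∪ V_j
    tau = []
    for i, mu in enumerate(mus):
        tau.append(mu)                                              # mu_i
        gap = (E & V[i]) - support(mu) if i <= j else frozenset()
        if gap:
            tau.append(uniform(gap))                                # nu_i
    rest = set(omega) - set().union(*(support(m) for m in tau))
    if rest:
        tau.append(uniform(rest))                                   # kappa
    return tau


omega = set("abcd")
atoms = [frozenset("ab"), frozenset("cd")]
events = algebra_events(atoms)
p = g(({"a": Fraction(1)}, {"c": Fraction(1)}), events)
tau = assuming_lps(p, atoms, omega, {"a", "b"})
print(assumption_level(tau, {"a", "b"}), g(tau, events) == p)  # 1 True
```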

5 Literature

To establish a relationship between strong belief and assumption, the paper began by establishing relationships between CPS’s and LPS’s. This is not the first paper to draw connections between forms of extended probabilities. We now review two prominent approaches. (Neither paper addresses the relationship between strong belief and assumption.)

1. Hammond (1994). This work focuses on a state space \(\Omega \) that is finite and a set of conditioning events \(\mathcal {B}\) that is complete, in the sense of including every nonempty subset. The choice of a complete set of conditioning events follows Myerson (1986a, b). In the finite setting, a complete \(\mathcal {B}\) is an example of an open finite algebra. Hammond exhibits an equivalence between three spaces: the space of full-support LPS’s, the space of complete CPS’s, and the space of logarithmic likelihood ratio functions (McLennan, 1989a, b).Footnote 6 Indeed, he shows that the composition of these three maps is the identity, so that, given any member of one of these three spaces, there is a unique corresponding member of each of the other two spaces.

2. Halpern (2010). This work focuses on a measurable state space, without imposing Polishness. Halpern considers a particular type of CPS, which he calls a Popper space, and a generalization of an LPS, that he calls a structured LPS (SLPS). A Popper space is a CPS that adds two requirements: (i) if \(B\in \mathcal {B}\), \(B^\prime \in \mathcal {A}\), and \(B \subseteq B^\prime \), then \(B^\prime \in \mathcal {B}\); and (ii) if \(B \in \mathcal {B}\) and \(p(C | B) >0\) then \(B \cap C \in \mathcal {B}\).Footnote 7 A structured LPS is a (possibly infinite) sequence of probability measures \((\nu _0, \nu _1, \ldots )\) so that there are sets \(U_i\) in \(\mathcal {A}\) with \(\nu _i(U_i)=1\) and \(\nu _i(U_j)=0\) for \(j > i\). (So a structured LPS need not be an LPS.)

Halpern establishes a bijection between SLPS’s and Popper spaces. This bijection is natural in preserving conditional probabilities: Given a conditioning event \(B\in \mathcal {B}\), the associated Popper measure \(p(\cdot | B)\) is equal to the conditional probability \(\nu _{i_0}(\cdot | B)\), where \(\nu _{i_0}\) is the first measure in the SLPS \((\nu _0, \nu _1, \ldots )\) that gives B strictly positive probability. Unlike us, Halpern treats finitely as well as countably additive probability measures.

Notes

We frame the discussion as a two-player game, but this is immaterial.

The literature often refers to mutually singular LPS’s as lexicographic conditional probability systems. To emphasize that there is no conditioning, we simply use the term LPS.

Recall, the support of \(\nu _{i}\) is the smallest closed set that gets probability 1.

For a finite set \(\Omega \), the logarithmic likelihood ratio function is defined by \(\psi (\omega , \omega ^\prime ) = \textrm{ln}[p(\omega )/p(\omega ^\prime )]\), where \(\psi \) is allowed to take values in the extended reals (\(+\infty \) or \(-\infty \)).

Note, despite the terminology, a Popper space is not a space but a function.

References

Battigalli, P., & Siniscalchi, M. (2002). Strong belief and forward induction reasoning. Journal of Economic Theory, 106(2), 356–391.

Blume, L., Brandenburger, A., & Dekel, E. (1991). Lexicographic probabilities and choice under uncertainty. Econometrica, 59(1), 61–79.

Brandenburger, A. (2007). The power of paradox: Some recent developments in interactive epistemology. International Journal of Game Theory, 35(4), 465–492.

Brandenburger, A., Friedenberg, A., & Keisler, H. J. (2008). Admissibility in games. Econometrica, 76(2), 307.

Catonini, E., & De Vito, N. (2014). Common assumption of cautious rationality and iterated admissibility.

Dekel, E., Friedenberg, A., & Siniscalchi, M. (2016). Lexicographic beliefs and assumption. Journal of Economic Theory, 163, 955–985.

Dekel, E., & Siniscalchi, M. (2015). Epistemic game theory. In Handbook of game theory with economic applications (Vol. 4, pp. 619–702).

Halpern, J. Y. (2010). Lexicographic probability, conditional probability, and nonstandard probability. Games and Economic Behavior, 68(1), 155–179.

Hammond, P. (1994). Elementary non-archimedean representations of probability for decision theory and games. In P. Humphreys (Ed.), Patrick Suppes: Scientific philosopher, Vol. I: Probability and probabilistic causality (pp. 25–61). Kluwer.

Lee, B. S. (2016). Admissibility and assumption. Journal of Economic Theory, 163, 42–72.

McLennan, A. (1989a). Consistent conditional systems in noncooperative game theory. International Journal of Game Theory, 18(2), 141–174.

McLennan, A. (1989b). The space of conditional systems is a ball. International Journal of Game Theory, 18(2), 125–139.

Myerson, R. B. (1986a). Axiomatic foundations of Bayesian Decision Theory. Technical report Discussion paper.

Myerson, R. B. (1986b). Multistage games with communication. Econometrica, 54(2), 323–358.

Rényi, A. (1955). On a new axiomatic theory of probability. Acta Mathematica Hungarica, 6(3), 285–335.

Samuelson, L. (1992). Dominated strategies and common knowledge. Games and Economic Behavior, 4(2), 284–313.

Additional information

We thank Pierpaolo Battigalli for helpful conversations, Nicolas Rodriguez Gonzalez for excellent research assistance, and two referees and the editor for valuable feedback. Financial support from the Stern School of Business, NYU Shanghai, J.P. Valles, and the Olin School of Business is gratefully acknowledged.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Brandenburger, A., Friedenberg, A. & Keisler, H.J. The relationship between strong belief and assumption. Synthese 201, 175 (2023). https://doi.org/10.1007/s11229-023-04167-6

DOI: https://doi.org/10.1007/s11229-023-04167-6