Two roads diverged in a yellow wood,
And sorry I could not travel both
And be one traveler, long I stood
And looked down one as far as I could
To where it bent in the undergrowth

Robert Frost, The Road Not Taken.

Abstract

This paper articulates in formal terms a crucial distinction concerning future contingents, the distinction between what is true about the future and what is reasonable to believe about the future. Its key idea is that the branching structures that have been used so far to model truth can be employed to define an epistemic property, credibility, which we take to be closely related to knowledge and assertibility, and which is ultimately reducible to probability. As a result, two kinds of claims about future contingents—one concerning truth, the other concerning credibility—can be smoothly handled within a single semantic framework.


1 Introduction

The philosophical disputes about future contingents—sentences that concern future events that are neither determined to occur nor determined not to occur—hinge on the metaphysical implications of bivalence. The main views that have been advanced differ precisely as to the question whether future contingents are either true or false. Aristotelians claim that future contingents are neither true nor false because they are true in some possible futures and false in other possible futures. Peirceans claim that future contingents are false because they are not true in all possible futures. Ockhamists claim that future contingents are either true or false because they are either true or false in the actual future. In each of the three cases, the analysis provided applies uniformly to all future contingents, so it turns out that all future contingents are alike in some sense.Footnote 1

Yet there is an important sense in which future contingents are not all alike: some of them express contents that it is reasonable to believe, others do not. If you normally eat breakfast every morning, your breakfast supplies are safely stored in the kitchen, and you have no intention to change your usual routine, then you may reasonably believe what follows:

(1) I will have breakfast tomorrow

By contrast, the negation of (1) does not have the same property:

(2) I will not have breakfast tomorrow

Today your confidence in (1) is definitely high. Of course, there is a remote possibility that an earthquake destroys your house just before you wake up. But the mere existence of that possibility does not prevent your belief in (1), and your disbelief in (2), from being reasonable.Footnote 2

The apparent difference between (1) and (2) is clearly not explainable in terms of truth values. Consider the following sentences, which do not exhibit such a difference:

(3) The coin will land heads

(4) The coin will land tails

As far as truth values are concerned, (1) and (2) are exactly like (3) and (4), on any view of future contingents. According to Aristotelianism, both (1) and (2) lack truth value. According to Peirceanism, they are both false. According to Ockhamism, one of them is true and the other is false. In each of the three cases, what holds for (1) and (2) also holds for (3) and (4).

The difference between (1) and (2) is rather a difference of epistemic value. That is, it seems that (1) is better than (2) in some epistemic sense in which (3) is not better than (4). We will call this epistemic value credibility. To say that (1) is highly credible is to say that it is highly reasonable to believe (1). Similarly, to say that the credibility of (1) is higher than the credibility of (2) is to say that believing (1) is more reasonable than believing (2).Footnote 3

The distinction between truth and credibility emerges clearly when one considers retrospective assessments of past predictions. Imagine that you utter (1) today, and that tomorrow an earthquake destroys your house before breakfast time. Tomorrow, amid the ruins of your house, it seems correct to say that your prediction was false. But this does not prevent it from being reasonable. So it seems consistent to say both that yesterday it was reasonable for you to believe that you would have breakfast today and that as a matter of fact you did not have breakfast today. A prediction can be highly credible but false.

The opposite can also happen, of course. Imagine that you utter (2) today just because some magician hypnotized you to say that sequence of words, and that the earthquake destroys your house as before. In this case, from the vantage point of tomorrow, it seems correct to say that your prediction was true. But this does not prevent it from being ungrounded. So it seems consistent to say both that yesterday it was not reasonable for you to believe that you would not have breakfast today and that as a matter of fact you did not have breakfast today. A prediction can be true in spite of being hardly credible.

A slightly more nuanced example is the following. Suppose that, on Monday, the estimated probability that it rains on Wednesday is \(50\%\), but that, as the meteorological situation evolves, on Tuesday the estimated probability that it rains on Wednesday is \(90\%\). Suppose also that the following predictions are made on Monday and Tuesday respectively:

(5) It will rain in two days

(6) It will rain tomorrow

It seems correct to say that if the second prediction is true, the first was true as well. But this does not mean that if the second prediction is reasonable, the first was equally reasonable. Even though (6) as uttered on Tuesday is highly credible, the credibility of (5) as uttered on Monday was definitely lower.

This paper outlines a formal account of the distinction between truth and credibility. Section 2 provides a first informal characterization of credibility. Section 3 defines a standard branching time semantics. Sections 4 and 5 set out the definitions of truth and credibility by relying on that semantics. Section 6 draws attention to some main logical implications. Finally, Sect. 7 explains how the account outlined may shed light on some important issues concerning knowledge and assertibility.

2 Some basic assumptions

Let us start with four basic assumptions that we regard as minimal constraints on an adequate account of credibility. The first assumption—the gradability constraint—is that credibility is a gradable property. In ordinary talk, it is quite common to express comparative epistemic judgments about claims or conjectures that concern future events. For example, one can say that a certain future event is more likely than another, or that the evidence for believing that it will occur is stronger than the evidence for believing that it will not occur. This suggests that future contingents can have different degrees of credibility.

In our account, credibility values will be assigned to formulas in such a way that, for any formula \(\alpha \), the credibility of \(\alpha \) is indicated by a real number n such that \(0\le n\le 1\), where 0 is the lowest point in the scale, representing no credibility at all, and 1 is the highest point, representing full credibility. The sum of the value of \(\alpha \) and the value of \(\lnot \alpha \) will be 1 for any assignment, as it is plausible to expect. So, the negation of a formula with credibility 1 will have credibility 0, and the negation of a formula with credibility 0 will have credibility 1.

The second assumption—the finiteness constraint—concerns the kind of subjects that we expect to make credibility judgments. We will restrict consideration to finite subjects, that is, subjects with finite memory, information, representational capacities, and so on. Accordingly, we will assume that a credibility judgment can be made by examining a finite number of options. It would be highly implausible to require that, in order to assess a prediction about the future, an infinite number of possibilities is taken into account, for no mundane agent has the cognitive resources to do so. Moreover, there are practical reasons to prefer finite sets of options over infinite sets of options. The former prove more apt to implementation in engineered computational systems, where having a finite number of items to manipulate is usually a crucial element.

Historically, the question whether future contingents are either true or false has been extensively discussed in connection with the issue of divine foreknowledge. Although we recognize the intrinsic theoretical interest of this issue, we think that modelling the cognitive behaviour of agents with limited epistemic resources is no less important than investigating the idea of an omniscient being. So, here we will stick with the finiteness constraint, and reason as if there is no God.Footnote 4

In the structures that we will adopt as models, the options to be examined are represented as branches of a tree, each of which stands for a possible continuation of the present state of affairs. So, in accordance with the finiteness constraint, we will restrict consideration to trees with a finite number of branches. This restriction ensures that the level of idealization of the formal account is not too high, which we take to be a virtue.

The third assumption—the deduction constraint—is that credibility is deductively closed in the following sense: whenever a sentence logically follows from another sentence, its credibility must be at least as high as the credibility of that sentence. For example, the inference from (6) to the following sentence must preserve its degree of credibility:

(7) Either it will rain tomorrow or it will snow tomorrow

The obvious plausibility of this constraint is due to its weakness. Deductive closure so understood can be distinguished from a stronger principle according to which any logical consequence of a credible set of sentences must be credible. The latter principle, which would be more contentious, has been widely discussed under the label of ‘deductive closure’ in connection with the notion of rational belief.Footnote 5

Note that the deduction constraint entails that logical truths are fully credible, for a logical truth logically follows from any sentence. Thus, the formula that represents the following sentence must have value 1:

(8) Either it will rain tomorrow or it will not rain tomorrow

Since a contradiction amounts to the negation of a logical truth, we also get that contradictions are not credible at all. Thus, the formula that represents the following sentence must have value 0:

(9) It will rain tomorrow and it will not rain tomorrow

The fourth assumption—the probability constraint—concerns the relation between credibility and probability, where the latter is understood as objective chance. It seems reasonable to conjecture that the credibility of a claim about the future is directly proportional to its objective chance. For example, (1) is highly probable, and it is natural to expect that its high credibility is somehow related to this fact. Similarly the probability of (2) is very low, and it is natural to expect that its low credibility is somehow related to this fact. So, we will assume that an adequate account of credibility must be consistent with this conjecture, independently of any further question concerning the nature of objective chance.

As will turn out, our account of credibility satisfies the probability constraint insofar as it entails that credibility is itself a kind of probability. We take this to be a remarkable result, because it shows a convergence between a coherent formal treatment of the epistemology of future contingents and an independently grounded mathematical theory.

3 Branching time semantics

Our formal semantics relies on two main ideas that are widely shared in the debate on future contingents, and that we take to be theoretically neutral. The first is that the logical form of sentences that talk about future times is adequately formalized by using the metric tense operator \(F_{n}\). For our purposes it will suffice to take days as time units, so that \(F_{n}\) is read as ‘It will be the case n days from now that’. So we will adopt a language \(\mathsf {L}\) defined as follows:

Definition 1

1. Every sentence letter is a formula;
2. If \(\alpha \) is a formula, \(\lnot \alpha \) is a formula;
3. If \(\alpha \) and \(\beta \) are formulas, \((\alpha \wedge \beta )\) is a formula;
4. If \(\alpha \) and \(\beta \) are formulas, \((\alpha \vee \beta )\) is a formula;
5. If \(\alpha \) and \(\beta \) are formulas, \((\alpha \supset \beta )\) is a formula;
6. If \(\alpha \) is a formula, for any n, \(F_{n}\alpha \) is a formula.

It is easy to see how \(\mathsf {L}\) can be enriched by adding further tense operators, such as \(P_{n}\), or the usual modal operators \(\Box \) and \(\Diamond \). However, Definition 1 will suffice for the purposes at hand.

The second idea is that a branching time model provides an adequate representation of the spectrum of future possibilities that are open at a given moment. A branching time model is usually defined as a triple \(\langle M,<,V\rangle \), where \(\langle M,<\rangle \) is a frame formed by a non-empty set of moments and a strict partial order on M, and V is a valuation function that assigns truth values to atomic formulas relative to moment–history pairs. A moment–history pair m/h is a point of evaluation constituted by a moment m and a history h (a maximal linearly ordered subset of M) such that \(m\in h\). The models that we will adopt are very similar, in that we only add a further item in order to account for the fact that different possible futures may have different degrees of proximity to the present state of affairs insofar as some of them may be more likely than others. This additional item, which we call a proximity assignment over \(\langle M,<\rangle \), is a function that assigns proximity values to histories relative to moments. More precisely, if \(H_{m}\) is the set of histories that go through m (assuming that \(H_{m}\) is finite for the reasons explained in Sect. 2), each history in \(H_{m}\) is assigned a real number greater than 0 in such a way that the total sum of the values assigned is 1. Intuitively, the value that the function takes for each history in \(H_{m}\) indicates how likely that history is at m.Footnote 6

The models that we will adopt are thus defined as follows:

Definition 2

A model for \(\mathsf {L}\) is a quadruple \(\langle M,<,V,P\rangle \), where M is a non-empty set, < is a strict partial order on M, V is a function that assigns 1 or 0 to each sentence letter of \(\mathsf {L}\) relative to each m/h, and P is a proximity assignment over \(\langle M,<\rangle \).

Let \(P(H_{m})\) be the sum of the values that P assigns to the histories in \(H_{m}\), that is, \(P(H_{m})=\sum _{h\in H_{m}}P(h)=1\). When P assigns the same value to every h in \(H_{m}\), so that \(P(h)=P(H_{m})/|H_{m}|\), we say that P is equitable relative to m. Similarly, when P is equitable relative to every m in a model, we call the model itself equitable. Equitable models involve a simplification that may profitably be adopted whenever no difference of likelihood is expected between the possible futures considered. For example, if the alternative to be represented is between two possible outcomes of the flip of a coin, as in the case of (3) and (4), it is plausible to imagine two histories that have the same proximity value relative to the moment of evaluation.Footnote 7
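The two conditions on a proximity assignment, and the notion of equitability, can be illustrated with a minimal Python sketch. The function names and the history labels are our own illustrative choices, and the values in `skewed` are hypothetical; the sketch only considers the histories through one fixed moment m.

```python
import math

def is_proximity_assignment(values):
    """Check the two conditions on P relative to a fixed moment m:
    every history gets a value greater than 0, and the values sum to 1."""
    return (
        len(values) > 0
        and all(v > 0 for v in values.values())
        and math.isclose(sum(values.values()), 1.0)
    )

def is_equitable(values):
    """P is equitable relative to m when every history gets 1 / |H_m|."""
    share = 1.0 / len(values)
    return all(math.isclose(v, share) for v in values.values())

# Coin flip, as in (3) and (4): two equally likely histories.
coin = {"h_heads": 0.5, "h_tails": 0.5}
# Hypothetical non-equitable assignment over two histories.
skewed = {"h_a": 0.9, "h_b": 0.1}

print(is_proximity_assignment(coin), is_equitable(coin))
print(is_proximity_assignment(skewed), is_equitable(skewed))
```

Floating-point comparison is done with `math.isclose` so that rounding does not produce spurious failures.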

The notion of truth at a moment–history pair that we will adopt is defined exactly as in traditional branching time models, for the additional item that characterizes our models makes no difference with respect to this notion.

Definition 3

1. If \(\alpha \) is atomic, \([\alpha ]_{m/h}=1\) iff \(V(\alpha )_{m/h}=1\);
2. \([\lnot \alpha ]_{m/h}=1\) iff \([\alpha ]_{m/h}=0\);
3. \([\alpha \wedge \beta ]_{m/h}=1\) iff \([\alpha ]_{m/h}=1\) and \([\beta ]_{m/h}=1\);
4. \([\alpha \vee \beta ]_{m/h}=1\) iff \([\alpha ]_{m/h}=1\) or \([\beta ]_{m/h}=1\);
5. \([\alpha \supset \beta ]_{m/h}=1\) iff \([\alpha ]_{m/h}=0\) or \([\beta ]_{m/h}=1\);
6. \([F_{n}\alpha ]_{m/h}=1\) iff \([\alpha ]_{m'/h}=1\), where \(m'\) is the moment n units after m along h.

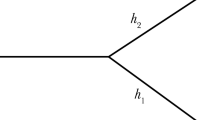

Figure 1 provides an illustration. Here we suppose that each of the ten moments \(m_{1}-m_{10}\) is one unit after \(m_{0}\), and that these moments are located in ten distinct histories that overlap until \(m_{0}\), namely, \(h_{1}-h_{10}\). In this case \([F_{1}p]_{m_{0}/h_{1}}=1\), because \([p]_{m_{1}/h_{1}}=1\), and the same goes for any other history in which p becomes true. Instead, \([F_{1}p]_{m_{0}/h_{7}}=0\), because \([p]_{m_{7}/h_{7}}=0\).Footnote 8
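The clauses of Definition 3 can be read as a recursive evaluation procedure. The following Python sketch is one way to make this concrete under simplifying assumptions of our own: a history is encoded as a tuple of moments, each moment is the set of sentence letters true there, and a moment is picked out by its index along the history. The class and function names are illustrative and not part of the formal account.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Atom:
    name: str

@dataclass(frozen=True)
class Not:
    sub: object

@dataclass(frozen=True)
class And:
    left: object
    right: object

@dataclass(frozen=True)
class Or:
    left: object
    right: object

@dataclass(frozen=True)
class Implies:
    left: object
    right: object

@dataclass(frozen=True)
class F:
    n: int
    sub: object

def holds(formula, history, i):
    """Truth at the pair (moment at index i, history), clause by clause."""
    if isinstance(formula, Atom):
        return formula.name in history[i]
    if isinstance(formula, Not):
        return not holds(formula.sub, history, i)
    if isinstance(formula, And):
        return holds(formula.left, history, i) and holds(formula.right, history, i)
    if isinstance(formula, Or):
        return holds(formula.left, history, i) or holds(formula.right, history, i)
    if isinstance(formula, Implies):
        return (not holds(formula.left, history, i)) or holds(formula.right, history, i)
    if isinstance(formula, F):
        j = i + formula.n
        # Assumption of this sketch: F_n fails if the encoded history is too short.
        return j < len(history) and holds(formula.sub, history, j)
    raise TypeError(f"not a formula: {formula!r}")

# Figure 1: ten histories sharing m0; one unit later p holds everywhere except in h7.
histories = {
    f"h{k}": (frozenset(), frozenset() if k == 7 else frozenset({"p"}))
    for k in range(1, 11)
}
F1p = F(1, Atom("p"))
print(holds(F1p, histories["h1"], 0))
print(holds(F1p, histories["h7"], 0))
```

Encoding histories as separate tuples duplicates the shared moment \(m_{0}\); this keeps the sketch short, at the cost of not representing the tree structure explicitly.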

Validity and logical consequence are defined accordingly.

Definition 4

\(\vDash \alpha \) iff \([\alpha ]_{m/h}=1\) for every m/h in every model.

Definition 5

\(\beta _{1},\dots ,\beta _{n}\vDash \alpha \) iff \(\vDash (\beta _{1}\wedge \dots \wedge \beta _{n})\supset \alpha \).

For example, from Definition 4 we get that \(\vDash F_{1}p\vee \lnot F_{1}p\), because \([F_{1}p\vee \lnot F_{1}p]_{m/h}=1\) for every m/h in every model. From Definition 5 we get that \(F_{1}p\vDash F_{1}p\vee F_{1}q\), because \(\vDash F_{1}p\supset (F_{1}p\vee F_{1}q)\).

The semantics just outlined has been adopted by several authors as a basic building block of more sophisticated formal accounts of tensed sentences. Although most of these accounts take for granted that the histories in the model represent metaphysically possible courses of events, the semantics itself is neutral with respect to the distinction between metaphysical and epistemic possibility, and we will use it to model the latter, assuming that histories represent epistemically possible courses of events. Of course, what is epistemically possible can also be metaphysically possible. So, our use of branching time models is consistent with the hypothesis that there is a corresponding plurality of metaphysically possible courses of events. But it does not depend on that hypothesis, and does not imply any specific view about the nature or the ontology of time.

4 Definition of truth

The semantics outlined in the previous section provides a technical notion, truth at a moment–history pair, which has no counterpart at the intuitive level. Now we will explain how this technical notion can be used to define truth and credibility, two properties that we take to apply to sentences relative to moments. In terms of a distinction introduced by MacFarlane and adopted by other authors in the debate on future contingents, Definition 3 provides the semantics proper, while the definitions of truth and credibility set out in this section and in the next belong to the postsemantics.Footnote 9

In order to define truth, we will rely on a specific view of future contingents, Ockhamism. According to Ockhamism, future contingents are either true or false, although they are neither determinately true nor determinately false. Their truth or falsity depends on what happens in the actual history. That is,

(T) The truth value of \(\alpha \) at m is the value that \(\alpha \) has at m relative to the actual history.

The thought that underlies (T) is that, when a future contingent is uttered at m, the utterance involves reference to one in particular among the many courses of events that are possible at m, the actual course of events. Therefore, a formal semantics in which truth is defined for a set of histories can provide a characterization of plain truth to the extent that one of the histories in the set represents that course of events. The truth value of \(\alpha \) at m, indicated as \(T(\alpha )_{m}\), can be defined as follows, assuming that one of the histories in \(H_{m}\) is the actual history:

Definition 6

\(T(\alpha )_{m}=[\alpha ]_{m/h}\), where h is the actual history.

Since \(\alpha \) can take either 1 or 0 at m/h, it follows that either \(T(\alpha )_{m}=1\) or \(T(\alpha )_{m}=0\). Thus, every sentence of the form \(F_{n}p\), such as (1), is either true or false.Footnote 10

It is important to note that, in the context of the present discussion, the choice of Definition 6 is purely instrumental. Our distinction between truth and credibility does not essentially depend on this definition, and could equally be phrased by adopting a non-Ockhamist postsemantics. At least two conceivable alternatives deserve mention. One is the supervaluationist definition of truth, which expresses a widely accepted construal of Aristotelianism: \(\alpha \) is true at m, or supertrue, just in case \([\alpha ]_{m/h}=1\) for every h, \(\alpha \) is false at m, or superfalse, just in case \([\alpha ]_{m/h}=0\) for every h, and \(\alpha \) is neither true nor false at m otherwise.Footnote 11 The other conveys the core idea of Peirceanism: \(\alpha \) is true at m just in case \([\alpha ]_{m/h}=1\) for every h, and false otherwise.Footnote 12 In the first case future contingents turn out to be neither true nor false, in the second they turn out to be false. But at any rate, what matters here is not the truth values themselves. In order to draw the distinction between truth and credibility, all that is needed is that epistemic values vary independently of truth values, however the latter are defined.

5 Definition of credibility

Our definition of credibility is somewhat analogous to the supervaluationist definition of truth. The analogy lies in the fact that we take the credibility of \(\alpha \) at m to be determined by the values of \(\alpha \) at m relative to the histories that pass through m. The crucial difference is that credibility is quantitative rather than qualitative. Let \(A_{m}\) be the subset of \(H_{m}\) that contains exactly the histories in which \(\alpha \) holds, that is, \(\{h:[\alpha ]_{m/h}=1\}\). The key idea of our definition is the following:

(C) The credibility value of \(\alpha \) at m is determined by the proportion between \(A_{m}\) and \(H_{m}\).

In order to make this idea precise, it must be taken into account that the histories in \(H_{m}\) can have different degrees of proximity, that is, the function P in the model can assign different numbers to them. The credibility value of \(\alpha \) at m, indicated as \(C(\alpha )_{m}\), is thus defined as the sum of the proximity values of the members of \(A_{m}\):

Definition 7

\(C(\alpha )_{m}=P(A_{m})\)

Here \(P(A_{m})\) is the sum of the values that P assigns to the histories in \(A_{m}\), that is, \(\sum _{h\in A_{m}}P(h)\). Since \(P(H_{m})=1\) and \(A_{m}\subseteq H_{m}\), we have that \(P(A_{m})\le 1\). This allows for two limiting cases. One is that in which \(P(A_{m})=0\) because \(A_{m}=\emptyset \). The other is that in which \(P(A_{m})=1\) because \(A_{m}=H_{m}\). As a result, \(0\le C(\alpha )_{m}\le 1\).Footnote 13

Note that, when P is equitable relative to m, Definition 7 boils down to the following equation: \(C(\alpha )_{m}\) is the ratio of \(|A_{m}|\) to \(|H_{m}|\). Suppose for example that \(H_{m}\) contains ten histories, and that each of them has proximity 0.1. If \(\alpha \) is true in nine of these histories, its credibility value according to Definition 7 is 0.9. This is nothing but the ratio 9/10, that is, the number of histories in which \(\alpha \) holds divided by the total number of histories. In the examples below, we will take equitability for granted in order to keep things as simple as possible.
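The reduction to a ratio in the equitable case can be spelled out in one line, using only Definition 7 and the fact that an equitable P assigns \(1/|H_{m}|\) to each history in \(H_{m}\):

\[
C(\alpha )_{m}=P(A_{m})=\sum _{h\in A_{m}}P(h)=\sum _{h\in A_{m}}\frac{1}{|H_{m}|}=\frac{|A_{m}|}{|H_{m}|}
\]

With \(|A_{m}|=9\) and \(|H_{m}|=10\), this gives \(C(\alpha )_{m}=9/10=0.9\), as in the example above.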

Although credibility is a gradable property, unlike supertruth, when it comes to assigning the maximum or the minimum value, Definition 7 behaves exactly like the supervaluationist definition: \(\alpha \) has value 1 at m if and only if it holds in all the histories passing through m, and it has value 0 at m if and only if it holds in no history passing through m. Another important analogy is that the truth-functional connectives behave classically at the level of semantics proper but non-classically at the level of postsemantics. In particular, just as a disjunction can be supertrue even though neither of its disjuncts is supertrue, a disjunction can be credible even though neither of its disjuncts is credible. For example, the credibility of (7) and (8) does not distribute over their disjuncts. The case of conjunction is different, though, for while \(\alpha \wedge \beta \) is supertrue whenever \(\alpha \) and \(\beta \) are supertrue, the credibility of \(\alpha \wedge \beta \) can be lower than that of \(\alpha \) and \(\beta \) taken separately. This failure of aggregation is very plausible, we submit. For example, the credibility of (1) and (5) may easily be higher than the credibility of their conjunction.

The distinction between T and C can be illustrated by recalling Fig. 1. Let \(F_{1}p\) stand for (1) and \(m_{0}\) be today. Since \([F_{1}p]_{m_{0}/h_{1}}=1\), and the same goes for the other eight histories in which p becomes true, we get that \(C(F_{1}p)_{m_{0}}=0.9\). Suppose that \(h_{7}\) is the actual history, as in the unlikely scenario in which an earthquake destroys your house. Then, \(T(F_{1}p)_{m_{0}}=0\). So, tomorrow it is correct to say that (1) was false as uttered today, in spite of the fact that (1) was highly credible.

Now let \(\lnot F_{1}p\) stand for (2). Since \([\lnot F_{1}p]_{m_{0}/h_{1}}=0\), and the same goes for the other eight histories in which p becomes true, we get that \(C(\lnot F_{1}p)_{m_{0}}=0.1\). Suppose again that \(h_{7}\) is the actual history. Then, \(T(\lnot F_{1}p)_{m_{0}}=1\). So, tomorrow it is correct to say that (2) was true as uttered today, in spite of the fact that its credibility was very low.
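The contrast between T and C in the two cases just described can be reproduced with a short Python sketch. It assumes the setting of Fig. 1 as described in the text (ten equitable histories, with p failing only in \(h_{7}\)); the encoding of formulas as Python predicates over history labels is our own simplification.

```python
from fractions import Fraction

# Ten histories through m0, each with proximity 1/10 (equitable assignment).
histories = [f"h{k}" for k in range(1, 11)]
P = {h: Fraction(1, 10) for h in histories}

def F1p(h):
    """Value of F_1 p at m0/h: p becomes true in every history except h7."""
    return h != "h7"

def not_F1p(h):
    """Value of the negation of F_1 p at m0/h."""
    return not F1p(h)

def C(formula):
    """Definition 7: sum of the proximity values of the histories where the formula holds."""
    return sum((P[h] for h in histories if formula(h)), Fraction(0))

def T(formula, actual):
    """Definition 6: the value of the formula relative to the actual history."""
    return 1 if formula(actual) else 0

actual = "h7"  # the unlikely earthquake scenario
print(C(F1p), T(F1p, actual))          # (1): credible but false
print(C(not_F1p), T(not_F1p, actual))  # (2): hardly credible but true
```

Exact fractions are used instead of floats so that the values 9/10 and 1/10 come out without rounding.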

In order to deal with the other example considered in Sect. 1, we need a more complex diagram. Figure 2 represents three temporal units rather than two. Suppose that \(m_{0}\) is Monday, and that \(m_{1}\) and \(m_{2}\) are two alternative Tuesdays, each of which leads to ten possible Wednesdays. Let \(F_{2}p\) stand for (5) and \(F_{1}p\) stand for (6). In this case we get that, if the actual history is one of those passing through \(m_{1}\) where p becomes true, then \(T(F_{1}p)_{m_{1}}=T(F_{2}p)_{m_{0}}=1\). This means that the two predictions made by uttering (5) and (6) are equivalent as far as truth is concerned. By contrast, they do not have the same credibility, because \(C(F_{1}p)_{m_{1}}=0.9\), whereas \(C(F_{2}p)_{m_{0}}=0.5\). The credibility of rain on Wednesday increases as we move from Monday to Tuesday.
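The Monday and Tuesday values can be checked with a small Python sketch. Since the diagram is not reproduced here, the distribution of rainy Wednesdays is an assumption of ours, chosen to match the values stated in the text: nine of the ten Wednesdays after \(m_{1}\) are rainy, and one of the ten after \(m_{2}\). Equitability is taken for granted at every moment.

```python
from fractions import Fraction

# Each history is (tuesday, wednesday_index, rains_on_wednesday).
# Assumed distribution: 9 rainy Wednesdays after m1, 1 rainy Wednesday after m2.
histories = (
    [("m1", k, k <= 9) for k in range(1, 11)]
    + [("m2", k, k == 1) for k in range(1, 11)]
)

def through(moment):
    """The set H_m of histories passing through the given moment."""
    if moment == "m0":
        return histories
    return [h for h in histories if h[0] == moment]

def credibility_of_rain(moment):
    """Credibility at the given moment that it rains on Wednesday (equitable P)."""
    H = through(moment)
    weight = Fraction(1, len(H))
    return sum((weight for h in H if h[2]), Fraction(0))

print(credibility_of_rain("m0"))  # credibility of (5) on Monday
print(credibility_of_rain("m1"))  # credibility of (6) on Tuesday at m1
```

Along any rainy history through \(m_{1}\), both predictions receive truth value 1, while their credibility values differ because \(H_{m_{0}}\) contains twenty histories and \(H_{m_{1}}\) only ten.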

6 Logical consequence and logical truth

From what has been said so far it turns out that the definition of credibility satisfies the first two constraints stated in Sect. 2, the gradability constraint and the finiteness constraint. So it remains to be shown that it satisfies the other two constraints, the deduction constraint and the probability constraint. The following fact expresses the closure principle required by the deduction constraint:

Fact 1

If \(\alpha \vDash \beta \), then, for every m, \(C(\alpha )_{m}\le C(\beta )_{m}\).

Proof

Assume that \(\alpha \vDash \beta \). If \(A_{m}\) is the subset of \(H_{m}\) in which \(\alpha \) holds, and \(B_{m}\) is the subset of \(H_{m}\) in which \(\beta \) holds, then \(A_{m}\subseteq B_{m}\). It follows that \(P(A_{m})\le P(B_{m})\), hence that \(C(\alpha )_{m}\le C(\beta )_{m}\). \(\square \)

From Fact 1 we get that \(C(F_{1}p)_{m}\le C(F_{1}p\vee F_{1}q)_{m}\) for every m, since \(F_{1}p\vDash F_{1}p\vee F_{1}q\), as noted in Sect. 3. This is a principled reason for saying that the inference from (6) to (7) preserves credibility.

Note that Fact 1 does not entail that, for any finite set of formulas \(\Gamma \) and any formula \(\alpha \), if \(\Gamma \vDash \alpha \), then \(\alpha \) is at least as credible as each member of \(\Gamma \). To see that this stronger closure principle does not hold it suffices to think about what has been said about the failure of aggregation. If \(\Gamma =\{\beta ,\gamma \}\) and \(\alpha =\beta \wedge \gamma \), there is no guarantee that the credibility of \(\alpha \) is at least as high as that of the members of \(\Gamma \). What does hold instead is that, if \(\Gamma \vDash \alpha \), then \(\alpha \) is at least as credible as the conjunction of the members of \(\Gamma \). This is a direct corollary of Fact 1, given that if \(\Gamma \vDash \alpha \), then \(\alpha \) logically follows from the conjunction of the members of \(\Gamma \).Footnote 14
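A concrete countermodel to the stronger closure principle may help. The Python sketch below uses hypothetical data of our own: ten equitable histories, with \(\beta \) holding in the first nine and \(\gamma \) in the last nine. Formulas are again encoded as predicates over histories.

```python
from fractions import Fraction

H = list(range(1, 11))                 # ten histories through m
P = {h: Fraction(1, 10) for h in H}    # equitable proximity assignment

def beta(h):
    return h <= 9                      # holds in histories 1..9

def gamma(h):
    return h >= 2                      # holds in histories 2..10

def conjunction(h):
    return beta(h) and gamma(h)        # holds in histories 2..9

def C(formula):
    """Definition 7 over the histories in H."""
    return sum((P[h] for h in H if formula(h)), Fraction(0))

print(C(beta), C(gamma), C(conjunction))
```

Here the conjunction is strictly less credible than each conjunct, so it is not at least as credible as each member of \(\Gamma \). The single-premise principle is still respected, since the conjunction entails \(\beta \) and its credibility does not exceed that of \(\beta \).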

The following fact shows that logical truths are fully credible:

Fact 2

If \(\vDash \alpha \), then, for every m, \(C(\alpha )_{m}=1\).

Proof

Assume that \(\vDash \alpha \). Then, by Definition 4, \(A_{m}=H_{m}\), so \(P(A_{m})=P(H_{m})\). Therefore, \(C(\alpha )_{m}=1\). \(\square \)

From Fact 2 we get that \(C(F_{1}p\vee \lnot F_{1}p)_{m}=1\), since \(\vDash F_{1}p\vee \lnot F_{1}p\), as noted in Sect. 3. So, (8) is fully credible. Moreover, we get that \(C(F_{1}p\wedge \lnot F_{1}p)_{m}=0\), for \(\vDash \lnot (F_{1}p\wedge \lnot F_{1}p)\), which entails that \(C(\lnot (F_{1}p\wedge \lnot F_{1}p))_{m}=1\), and the credibility values of a formula and of its negation sum to 1. So, (9) is not credible at all.

In order to show that our definition of credibility satisfies the probability constraint, we will rely on the standard definition of probability function.

Definition 8

A function F from a language to the set of real numbers is a probability function if and only if the following holds for any two formulas \(\alpha \) and \(\beta \) in the language:

1. \(F(\alpha )\ge 0\); (Non-negativity)
2. \(F(\alpha )=1\) if \(\alpha \) is logically true; (Normalization)
3. \(F(\alpha \vee \beta )=F(\alpha )+F(\beta )\) if \(\alpha \wedge \beta \) is logically false. (Additivity)

From Definition 7 it turns out that C is a probability function, as it assigns values to formulas relative to moments in accordance with conditions 1-3:

Fact 3

C is a probability function.

Proof

Let \(\alpha \) and \(\beta \) be formulas of \(\mathsf {L}\), and consider any m. Non-negativity holds because \(C(\alpha )_{m}\ge 0\). Normalization holds by Fact 2. Finally, Additivity holds for the following reason. Let \(A_{m}\) and \(B_{m}\) be the subsets of \(H_{m}\) in which \(\alpha \) and \(\beta \) hold respectively, so that \(A_{m}\cup B_{m}\) is the subset of \(H_{m}\) in which \(\alpha \vee \beta \) holds. If \(\alpha \wedge \beta \) is logically false, \(A_{m}\cap B_{m}=\emptyset \), hence \(P(A_{m}\cup B_{m})=P(A_{m})+P(B_{m})\). Therefore, \(C(\alpha \vee \beta )_{m}=C(\alpha )_{m}+C(\beta )_{m}\). \(\square \)
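The three conditions can also be checked by brute force on a small model. The following Python sketch is only an illustration under assumptions of our own: it uses a hypothetical non-equitable assignment over four histories and identifies each formula with the set of histories in which it holds. Additivity is tested for every pair of disjoint sets, which is a stronger requirement than the one stated in Definition 8, where \(\alpha \wedge \beta \) must be logically false.

```python
from fractions import Fraction
from itertools import combinations

H = ("h1", "h2", "h3", "h4")
# Hypothetical proximity values: positive and summing to 1.
P = {"h1": Fraction(1, 2), "h2": Fraction(1, 4), "h3": Fraction(1, 8), "h4": Fraction(1, 8)}

def C(A):
    """Credibility of a formula that holds exactly in the histories in A."""
    return sum((P[h] for h in A), Fraction(0))

# Every subset of H is a candidate set A_m for some formula.
propositions = [frozenset(c) for r in range(len(H) + 1) for c in combinations(H, r)]

non_negative = all(C(A) >= 0 for A in propositions)
normalized = C(frozenset(H)) == 1
additive = all(
    C(A | B) == C(A) + C(B)
    for A in propositions
    for B in propositions
    if not (A & B)
)
complementary = all(C(frozenset(H) - A) == 1 - C(A) for A in propositions)

print(non_negative, normalized, additive, complementary)
```

The last check corresponds to the requirement stated in Sect. 2 that the values of \(\alpha \) and \(\lnot \alpha \) sum to 1.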

This fact shows that credibility is a kind of probability, in that it obeys the laws of probability theory. So, the relation between credibility and objective chance may be understood as a relation between two kinds of probability. Just as credibility values can be assigned to sentences relative to moments, the same goes for chance values, no matter how the latter are understood. For example, it is reasonable to expect that the pretheoretical judgements about the credibility of (1)–(4) invoked in Sect. 1 are based on our beliefs about the objective chance of (1)–(4). To the extent that the representation of possible futures provided by a branching time model (and more specifically its proximity assignment) is assumed to be accurate in terms of objective chance, it turns out that credibility is directly proportional to objective chance, as it is plausible to expect.

One way to spell out this accuracy assumption is to define the relation between credibility and objective chance in terms of the Principal Principle suggested by Lewis. For any \(\alpha \) and m, let X be the proposition that the chance of \(\alpha \) at m is x, let E be a proposition compatible with X that expresses the present total evidence, and let \(C(\alpha |XE)_{m}\) be the credibility at m of \(\alpha \) conditional on X and E. The Principal Principle says that \(C(\alpha |XE)_{m}=x\). So, for example, if \(\alpha \) is (3), m is the present moment, and X is the proposition that the chance of heads is 0.5, then the credibility of \(\alpha \) at m conditional on that proposition and on the present total evidence is 0.5. In the limiting case in which it is certain that the chance of heads is 0.5, that is, the credibility of X given E is 1, the unconditional credibility of \(\alpha \) at m is 0.5. This is one way of explaining how our judgements about credibility are guided by our beliefs about objective chance. In Lewis’ words,
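The limiting case can be spelled out as a short derivation. This is only a sketch: it assumes that conditional credibility obeys the usual ratio definition and the law of total probability, which are not stated explicitly above, and it reads the credibility of \(\alpha \) at m as already conditional on the total evidence E. If the credibility of X given E is 1, then \(C(\lnot X|E)_{m}=0\), so \(C(\alpha \wedge \lnot X|E)_{m}=0\) as well, and we get:

\[
C(\alpha |E)_{m}=C(\alpha \wedge X|E)_{m}+C(\alpha \wedge \lnot X|E)_{m}=C(\alpha |XE)_{m}\cdot C(X|E)_{m}+0=x\cdot 1=x
\]

With \(x=0.5\), the credibility of (3) given the present total evidence is 0.5.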

If your present degrees of belief are reasonable—or at least if they come from some reasonable initial credence function by conditionalizing on your total evidence—then the Principal Principle applies. Your credences about outcomes conform to your firm beliefs and your partial beliefs about chances. Then the latter guide your life because the former do. The greater chance you think the ticket has of winning, the greater should be your degree of belief that it will win.Footnote 15
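The limiting case of the coin example can be spelled out as a short derivation. This is a sketch under two assumptions that are not stated in the text: that the candidate chance hypotheses \(X_{1},\dots ,X_{k}\), with chance values \(x_{1},\dots ,x_{k}\), are finitely many, mutually exclusive, and jointly exhaustive given E, and that conditional credibility obeys the law of total probability. Under these assumptions, the Principal Principle yields

\[ C(\alpha |E)_{m}=\sum _{i=1}^{k}C(\alpha |X_{i}E)_{m}\,C(X_{i}|E)_{m}=\sum _{i=1}^{k}x_{i}\,C(X_{i}|E)_{m}. \]

If \(C(X_{j}|E)_{m}=1\) for the hypothesis \(X_{j}\) with \(x_{j}=0.5\), every other term vanishes, so that

\[ C(\alpha |E)_{m}=0.5\cdot 1=0.5. \]

When the credibility of the chance hypotheses is spread over several values, the same formula gives the credibility of \(\alpha \) as the expected chance of \(\alpha \).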

7 Knowledge and assertibility

This last section is intended to show how the formal treatment of credibility provided in the foregoing sections may shed light on some important issues concerning knowledge and assertibility. As is well known, knowledge and assertibility are hard to define. A detailed discussion of their nature would go far beyond the scope of the present work. Here we will just draw attention to some straightforward connections between credibility, knowledge, and assertibility, while remaining neutral on the definitions of knowledge and assertibility.Footnote 16

First of all, it seems indisputable that knowledge entails credibility. Whenever it is reasonable to ascribe knowledge of a proposition to someone, it is reasonable to say that the proposition known is highly credible, where the interpretation of ‘highly’ may vary from context to context. For example, if one knows that 2+2=4, then it is highly credible that 2+2=4; if one knows that dinosaurs are extinct, then it is highly credible that dinosaurs are extinct, and so on. More generally,

(KC) If one knows \(\alpha \), then \(\alpha \) is highly credible.

Future contingents are no exception in this respect: if one knows that things will go a certain way, then it is highly credible that things will go that way. Thus, for example, you know that you will have breakfast tomorrow only if (1) is highly credible. Certainly, in the context of a discussion on future contingents it cannot be taken for granted that ‘one knows \(\alpha \)’ is true for some \(\alpha \), provided that knowledge is assumed to be factive, for we have seen that some views deny that future contingents can be true. However, even if one endorses such a view, one can still recognize the connection between knowledge and credibility expressed by (KC).Footnote 17

A principle similar to (KC) seems to hold for assertibility. Arguably, a proposition is assertible only if it is highly credible. If it is assertible that 2+2=4, then it is highly credible that 2+2=4; if it is assertible that dinosaurs are extinct, then it is highly credible that dinosaurs are extinct, and so on. More generally,

(AC) If \(\alpha \) is assertible, then \(\alpha \) is highly credible.

Again, future contingents are no exception in this respect: if it is assertible that things will go a certain way, then it is highly credible that things will go that way. Thus, for example, (1) is assertible only if it is highly credible. As in the case of knowledge, in the context of a discussion on future contingents it cannot be taken for granted that ‘\(\alpha \) is assertible’ is true for some \(\alpha \), at least as long as assertibility is assumed to be factive. However, this does not prevent (AC) from being compelling.Footnote 18

The connection between assertibility and credibility expressed by (AC) is compatible with at least two well-known accounts of the constitutive norm of assertion. One defines assertibility in terms of justification, by saying that one must assert \(\alpha \) only if one is justified in believing \(\alpha \). The other defines assertibility in terms of knowledge, by saying that one must assert \(\alpha \) only if one knows \(\alpha \). In both cases (AC) holds because justification and knowledge entail credibility, although the two accounts differ as to whether assertibility is factive.Footnote 19

Note that the converses of (KC) and (AC) are not warranted. The converse of (KC) is false on the assumption that knowledge is factive, for credibility is not factive. Our framework can easily account for cases in which \(\alpha \) is highly credible without being known, as in the earthquake scenario discussed above. In that case, the credibility of (1) does not count per se as knowledge. The converse of (AC) is also problematic. If assertibility is assumed to be factive, as in the knowledge account, then we get exactly the same kind of counterexamples. But even if it is not assumed to be factive, as in the justification account, it is still not obvious that high credibility entails assertibility, because it is not obvious that high credibility suffices for justification.

As a concluding remark, it may be observed that (KC) and (AC) do not settle the question whether aggregation principles similar to the one discussed in Sects. 5 and 6 hold for knowledge and assertibility. One might be apt to think that the following principles hold:

(AK) If one knows \(\alpha \) and one knows \(\beta \), then one knows \(\alpha \wedge \beta \).

(AA) If \(\alpha \) is assertible and \(\beta \) is assertible, then \(\alpha \wedge \beta \) is assertible.

From (KC) and (AK) one would get that credibility is closed under conjunction insofar as the conjuncts are known: if \(\alpha \) and \(\beta \) are highly credible in virtue of being known, then \(\alpha \wedge \beta \) must be highly credible as well. Similarly, from (AA) and (AC) one would get that credibility is closed under conjunction insofar as the conjuncts are assertible: if \(\alpha \) and \(\beta \) are highly credible in virtue of being assertible, then \(\alpha \wedge \beta \) must be highly credible as well. But such conclusions are not inconsistent with the account of credibility suggested here, for they leave room for the possibility that the cases in which credibility is not preserved are cases in which the conjuncts are not known or assertible.

The lottery paradox might be used as an illustration of this possibility. Suppose that 1000 lottery tickets are sold to 1000 persons \(P_{1},\dots ,P_{1000}\). For each \(P_{n}\) such that \(1\le n\le 1000\), it is highly probable that \(P_{n}\) will not win, so it seems rational to accept ‘\(P_{n}\) will not win’. But it does not seem rational to accept the conjunction of the 1000 sentences so constructed, for that would amount to holding that nobody will win. Our account of credibility vindicates these two intuitions. Imagine a tree where 1000 branches depart from a single point, one branch for each possible winner. Each of the 1000 sentences of the form ‘\(P_{n}\) will not win’ is highly credible, for its credibility value is 0.999. But the conjunction of these sentences is not credible at all, for its credibility value is 0: in each history, it is false now that nobody will win. Note, however, that in this case it is far from obvious that each of the 1000 sentences is known or assertible. If it is not, then aggregation fails without contradicting (AK) or (AA).Footnote 20
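The two credibility values in the lottery case can be reproduced with a small computation. The sketch below is illustrative only: it assumes that the 1000 histories (one per possible winner) receive equal proximity values, which is what the value 0.999 in the text presupposes, and the names `proximity`, `credibility`, and `loses` are hypothetical.

```python
from fractions import Fraction

N = 1000
# One history per possible winner; equal proximity values are an assumption.
proximity = {n: Fraction(1, N) for n in range(1, N + 1)}

def credibility(holds):
    """Sum of the proximity values of the histories in which the sentence holds."""
    return sum((p for h, p in proximity.items() if holds(h)), Fraction(0))

def loses(n):
    """'P_n will not win' holds in every history except the one where P_n wins."""
    return lambda winner: winner != n

# Credibility of each single sentence 'P_n will not win'.
each = [credibility(loses(n)) for n in range(1, N + 1)]

# Credibility of the conjunction of all 1000 sentences ('nobody will win').
conjunction = credibility(lambda winner: all(winner != n for n in range(1, N + 1)))

print(float(min(each)))   # 0.999
print(conjunction)        # 0
```

Each conjunct fails in exactly one history, while the conjunction fails in all of them, which is why high credibility is not preserved under aggregation here.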

Notes

Aristotelianism so understood is advocated in Thomason (1970), Perloff et al. (2001), and MacFarlane (2003), among other works. Peirceanism goes back to Prior (1967), and is defended in Todd (2016). Ockhamism is advocated in Øhrstrøm (2009), Rosenkranz (2012), Iacona (2013), and Malpass and Wawer (2020), among other works.

The term ‘credibility’ so understood clearly differs from the term ‘credence’, which is mostly used descriptively to indicate degree of belief. While it makes perfect sense to apply adjectives such as ‘rational’ or ‘justified’ to ‘credence’, it would be redundant to apply them to ‘credibility’.

Note that the notion of proximity assignment does not require the assumption that \(H_{m}\) is finite. This notion can easily be adapted to a countably infinite set of histories by employing sigma-additivity and defining their total likelihood as the (countably infinite) sum of the likelihoods of the histories in the set. Note also that this is not the only way to provide a measure of likelihood of the kind required. An alternative way is to assign absolute proximity values to histories, obtain the proximity value of each moment m as the sum of the proximity values of the histories that go through m, and then define the proximity value of a history h relative to a moment m by conditionalizing on m.

The idea of a measure of likelihood is not new; see for example Konur et al. (2013, pp. 65–68).

The definition of truth at a moment–history pair goes back to Prior (1967, pp. 126–127).

MacFarlane (2003, pp. 329–330).

It is worth mentioning that, if one were to adjust clause 6 of Definition 3 in the way explained in footnote 12, one would end up with the unpalatable result that every future-tense sentence has credibility 0 or 1, without intermediate values. Todd (forthcoming) discusses the implications of this result.

As Kyburg (1970, p. 59) shows, the stronger principle considered is obtained precisely by adding aggregation to our weak principle.

Lewis (1986, p. 109). Note that we are using the Principal Principle as a bridge principle in order to spell out the relation between two kinds of probability, credibility and objective chance. This use is not meant to suggest that credibility is to be identified with subjective probability as understood by Lewis.

Of course, given our focus on limited cognitive agents (Sect. 2), what we say does not directly apply to the case of an omniscient being.

Iacona (2021) advocates the view that future contingents are knowable and shows how we can make sense of their knowability within an Ockhamist framework. Cariani (forthcoming) argues in a similar spirit that the openness of the future does not prevent future contingents from being knowable.

References

Armstrong, D. M. (1973). Belief, truth and knowledge. Cambridge: Cambridge University Press.

Barnes, E., & Cameron, R. (2011). Back to the open future. Philosophical Perspectives, 25, 1–26.

Cariani, F. (forthcoming). Human Foreknowledge. Philosophical Perspectives.

Cariani, F., & Santorio, P. (2018). Will done better: Selection semantics, future credence, and indeterminacy. Mind, 127, 129–165.

DeRose, K. (1996). Knowledge, assertion and lotteries. Australasian Journal of Philosophy, 74, 568–580.

Hattiangadi, A., & Besson, C. (2014). The open future, bivalence and assertion. Philosophical Studies, 162, 251–271.

Hempel, C. (1962). Deductive-nomological vs statistical explanation. In H. Feigl & G. Maxwell (Eds.), Minnesota studies in the philosophy of science, III (pp. 98–169). Minneapolis: University of Minnesota Press.

Iacona, A. (2013). Timeless truth. In F. Correia & A. Iacona (Eds.), Around the tree: Semantic and metaphysical issues concerning branching and the open future (pp. 29–45). Cham: Springer.

Iacona, A. (2014). Ockhamism without thin red lines. Synthese, 191, 2633–2652.

Iacona, A. (2021). Knowledge of future contingents. Philosophical Studies, online.

Kvanvig, J. L. (2009). Assertions, knowledge, and lotteries. In P. Greenough & D. Pritchard (Eds.), Williamson on knowledge. Oxford: Oxford University Press.

Kyburg, H. E. (1970). Conjunctivitis. In M. Swain (Ed.), Induction, acceptance, and rational belief (pp. 55–82). Berlin: Springer.

Lackey, J. (2007). Norms of assertion. Noûs, 41, 594–626.

Lewis, D. (1986). A subjectivist’s guide to objective chance. In Philosophical papers II (pp. 83–113). Oxford: Oxford University Press.

Lewis, D. (1996). Elusive knowledge. Australasian Journal of Philosophy, 74, 549–567.

MacFarlane, J. (2003). Future contingents and relative truth. Philosophical Quarterly, 53, 321–336.

MacFarlane, J. (2014). Assessment sensitivity. Oxford: Oxford University Press.

Malpass, A., & Wawer, J. (2020). Back to the actual future. Synthese, 197, 2193–2213.

Perloff, M., Belnap, N., & Xu, M. (2001). Facing the future. Oxford: Oxford University Press.

Neta, R. (2009). Treating something as a reason for action. Noûs, 43, 684–699.

Øhrstrøm, P. (2009). In defence of the thin red line: A case for Ockhamism. Humana Mente, 8, 17–32.

Øhrstrøm, P., & Hasle, P. (2020). Future contingents. In E. Zalta (Ed.), Stanford encyclopedia of philosophy. http://plato.stanford.edu/archives/sum2011/entries/future-contingents/

Prior, A. N. (1967). Past, present and future. Oxford: Clarendon Press.

Rosenkranz, S. (2012). In defence of Ockhamism. Philosophia, 40, 617–631.

Konur, S., Fisher, M., & Schewe, S. (2013). Combined model checking for temporal, probabilistic, and real-time logics. Theoretical Computer Science, 503, 61–88.

Santelli, A. (2020). Future contingents, branching time and assertion. Philosophia.

Stojanovic, I. (2014). Talking about the future: Unsettled truth and assertion. In P. De Brabanter, M. Kissine, & S. Sharifzadeh (Eds.), Future times, future tenses. Oxford: Oxford University Press.

Thomason, R. H. (1970). Indeterminist time and truth-value gaps. Theoria, 36, 264–281.

Todd, P. (2016). Future contingents are all false! On behalf of a Russellian open future. Mind, 125, 775–798.

Todd, P. (forthcoming). The Open Future: Why Future Contingents are All False. Oxford University Press.

Todd, P., & Rabern, B. (2021). Future contingents and the logic of temporal omniscience. Noûs, 55, 102–127.

van Fraassen, B. (1966). Singular terms, truth-value gaps, and free logic. Journal of Philosophy, 63, 481–495.

Williamson, T. (1996). Knowing and asserting. Philosophical Review, 105, 489–523.

Williamson, T. (2000). Knowledge and its limits. Oxford: Oxford University Press.

Acknowledgements

We presented this paper at the University of Padua in November 2020 and at the Autonomous University of Barcelona in December 2020. On both occasions we received many helpful questions and suggestions, especially from Massimiliano Carrara, Roberto Ciuni, Giuseppe Spolaore, and Giuliano Torrengo. We would also like to thank Enzo Crupi and Jan Sprenger for discussing this paper with us at its initial stage, and two anonymous referees for their accurate comments on the submitted version.

Funding

Open access funding provided by Università degli Studi di Torino within the CRUI-CARE Agreement.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Iacona, A., Iaquinto, S. Credible Futures. Synthese 199, 10953–10968 (2021). https://doi.org/10.1007/s11229-021-03275-5

DOI: https://doi.org/10.1007/s11229-021-03275-5