Abstract

This paper presents a new mesh data layout for parallel I/O of linear unstructured polyhedral meshes. The new mesh representation establishes coherence across entities of different topological dimensions, i.e., grid cells, faces, and points. The coherence due to the cell-to-face and face-to-point connectivities of the mesh is formulated as a tree data structure distributed across processors. The mesh distribution across processors creates consecutive and contiguous slices that render an optimized data access pattern for parallel I/O. A file format using the coherent mesh representation, developed and tested with OpenFOAM, makes the software usable at unprecedented scales. Further benefits of the coherent and sliceable mesh representation are simplified partitioning and reduced pre- and post-processing overheads.



1 Introduction

The finite-volume method (FVM) is widely used in science and engineering to simulate systems of meso- and macroscopic physical phenomena [1,2,3]. Fundamental to the FVM is the discretization of a physical domain into a set of small control volumes by structured or unstructured grids/meshes. The advantage of unstructured grids is their generality, as they conform to nearly arbitrary geometries [4], which is crucial for real-world industrial applications.

The mesh layout dictates the access pattern for storage and data management in CFD codes because the management of physical variables/fields follows the mesh representation. Mesh storage includes the mesh geometry information corresponding to graph nodal coordinates, i.e., points, the mesh topology information in terms of graph connectivity, i.e., the cell graph, and adjacencies between entities of different topological dimensions, i.e., cells, faces, and points. The adjacencies between mesh entities can be of a downward or upward nature. A downward topological connection encodes the connection from higher-dimensional mesh entities to lower-dimensional entities, such as cell-to-face connectivity. An upward topological connection encodes a connection from lower- to higher-dimensional entities, such as face-to-cell connectivity.

This paper focuses on (linear) unstructured polyhedral meshes used for the FVM with co-located variables/fields like in the open-source CFD software package OpenFOAM [5]. A polyhedral mesh representation that effectively utilizes modern file systems and simplifies data handling, in general and for OpenFOAM, requires:

- Read and write global mesh and field data directly to and from target processors in parallel, without storing data for each processor separately. Loading distributed subsets should map directly to consecutive mesh slices for a contiguous data access pattern in storage. In that regard, mesh input should establish a distributed mesh representation right from the beginning.

- The mesh representation must connect mesh entities of successive topological dimensions, i.e., grid cells, faces, and points. Downward adjacency queries to the lowest-dimensional entities, i.e., points, should be efficient when selecting subsets of grid cells. Namely, the mesh representation should connect mesh entities such that a slice of cells determines the associated faces, which, in turn, determine the associated points.

- Collect the data of several processes into a fixed number of files. In particular, change the file structure of decomposed OpenFOAM cases by using parallel I/O on a flexible number of files. The number of files must not scale with the degree of parallelism, namely the number of processors.

This paper presents a data structure for polyhedral meshes that establishes coherence between all topological dimensions through cell-to-face and face-to-point connectivities. A newly imposed sorting of faces by cell ownership establishes a cell-to-face connectivity. The sorted enumeration of faces is based on a walking order through connected cells. The face-to-point adjacency is based on sorting points by face ownership. Consequently, contiguous slices read from or written to the different mesh entities cohere from top to bottom, i.e., from grid cells to points. This sliceability enables mesh division during parallel access of contiguous chunks from all topological dimensions that cohere through mesh adjacencies. The coherent mesh has two major advantages. On the one hand, the mesh slices are loaded as contiguous subarrays onto an arbitrary number of processors, which optimizes the access pattern for parallel I/O. On the other hand, the coherent mesh makes post-processing for mesh and field reconstruction redundant. In general, the new coherent data format of unstructured polyhedral meshes and their co-located physical properties is optimized for parallel I/O at all scales.

The ADIOS2 library [6, 7] is used as the parallel I/O backend; it implements parallel I/O schemes adhering to a contiguous data access pattern. In particular, the number of files shared among a subset of data-loading processors can be chosen at run time. Contiguous data blocks can be aggregated onto a subset of processors that write either individual files or files shared among several aggregating processors. Flexibility in the number of shared files is advantageous and recommended over a single shared file, for which file system contention arises at large scales. Moreover, the contiguous data arrays can be labeled, stored, and retrieved by string identifiers using the self-describing file format of ADIOS2. Other parallel I/O libraries such as MPI-IO [8, 9], NetCDF [10, 11], or HDF5 [12] would be possible alternatives; however, the features of ADIOS2 mentioned above meet the requirements of the presented work. Also, ADIOS2 has been reported to provide scalable parallel I/O on supercomputers [13,14,15].

In the following section, related mesh data formats are briefly introduced. Due to the focus on OpenFOAM, the software’s existing mesh data management is described in detail. Then, the theory of the coherent mesh representation is explained using a minimal example of a 2D mesh. This includes the description of the required sorting algorithms and the strategies for parallel decomposition and reconstruction on distributed computing architectures. The paper continues by describing a tree-based data structure to implement the coherent data format in OpenFOAM. Next, the methods section describes further usage details in OpenFOAM and reports the settings for benchmark runs. Finally, the results section presents the performance gains, such as a significant reduction in the time spent on case preparation. The capability of OpenFOAM to scale up to 4096 nodes (equivalent to 524’288 CPU cores) of the Hawk supercomputer is demonstrated based on, and facilitated by, the coherent file format.

2 State-of-the-art

2.1 Related work

Similar approaches to distributed mesh data management of unstructured grids have been reported in previous work.

The mesh representation in PETSc DMPlex [16, 17] is based on a directed acyclic graph in which mesh entities are represented as vertices and graph edges encode their connectivities. It establishes a fully connected mesh for arbitrary mesh dimensionality, shape, and entity types. Downward and upward connectivities are used for direct partitioning and load balancing during the initialization phase. The parallel I/O capabilities of PETSc DMPlex have been concisely reported and demonstrated, for instance, in application to waveform modeling [17].

A related mesh representation is DOLFIN [18, 19], which is part of the FEniCS project [20]. It uses concepts similar to those of DMPlex and supports parallel I/O.

MOAB [21] uses a representation for structured and unstructured meshes that is optimized for runtime and storage efficiency by accessing mesh chunks. Storage includes grid cells and points, while adjacencies of other mesh entities such as faces have to be computed. For a distributed mesh representation, mesh entities must be assigned to processors during initial partitioning before the mesh can be loaded in parallel.

The High Order Preprocessor (HOPR) [22] introduces a mesh format for high-order approximation of curved geometries. The grid cell data is stored element-wise such that the connected mesh entities, i.e., faces and points, are stored multiple times, once for each connected grid cell. This way, HOPR ensures non-overlapping contiguous data access, retrieving all required mesh information with minimal communication between processors. The cell graph is established by storing neighbor element indices and the associated local face index on the neighboring element. Furthermore, the HOPR file format explicitly encodes and stores face orientation, cell types, and face types.

The Parallel Unstructured Mesh Infrastructure (PUMI) [23,24,25] uses an adaptive mesh representation with distributed mesh control routines for massively parallel computers. Adjacencies between topological dimensions can be flexibly generated. Serial I/O is routed through the master rank, which distributes/collects mesh partitions to/from the other processes. Pre-partitioning is possible where each process loads an individual file. In that case, the number of files scales with the number of processes. Similarly, distributed mesh management systems like STK [26, 27] and MSTK [28] using the mesh file formats GMV [29] and Exodus II [30] currently omit parallel I/O functionality.

The Algorithm Oriented Mesh Database (AOMD) [31] adeptly manages diverse sets of adjacencies, offering flexibility in handling topological relationships without the need to store all adjacencies. Consequently, it proves versatile for accommodating the varied requirements of multiple applications, such as partial differential equation solvers, mesh generators, post-processing tools, and visualization. AOMD’s mesh representations encompass comprehensive cell annotation using tetrahedron and hexahedron elements. The extension of AOMD to distributed computing incorporates features for parallel mesh adaptation and dynamic load balancing [32], without including parallel I/O functionality.

The mentioned mesh formats stand out in power and flexibility for various CFD schemes and use cases. However, these frameworks store more data, as needed by high-order schemes, than the FVM on unstructured polyhedral meshes requires. For instance, explicit storage of cell types is redundant information for OpenFOAM. Representations that cover both downward and upward adjacencies are powerful for finite element codes. This work presents plain downward adjacencies as the minimum requirement to create a contiguous data access pattern that is optimal for parallel I/O of the linear unstructured polyhedral meshes used in FVM codes such as OpenFOAM.

2.2 Polyhedral mesh in OpenFOAM

Fig. 1 The polyhedral mesh representation in OpenFOAM uses downward and upward connectivities starting from faces. (l.h.s.) An example 2D mesh illustrates the enumeration of cells, faces, and points. (r.h.s.) Stored adjacencies include an upward face-to-cell connectivity and a downward face-to-point connectivity.

In OpenFOAM, the description of the mesh is built around faces. A face \(f_i\) is defined and stored by an ordered list of points \(f_i = \{p_u, p_v, \dots , p_w\}\), hence storing a downward face-to-point connectivity; compare the face list illustrated on the r.h.s. of Fig. 1.

The composition of cells by faces is stored by the upward face-to-cell connectivity, namely the owner list illustrated in Fig. 1. Faces are the edges of the cell graph. The connectivity of the graph is stored as a face-to-cell neighborhood relation, namely a neighbor list. Hence, owner and neighbor lists are face-indexed and contain cell indices.

The legacy mesh format of OpenFOAM stores the files points, faces, owner, neighbour, and boundary. Points are stored as a list of coordinates. As mentioned above, faces are stored as lists of point indices representing the face-to-point connectivity. Faces are organized in a particular order: the first sublist refers to the internal faces, and subsequent sublists refer to the faces that belong to boundary patches. The start/offset and size of the boundary sublists are stored in the boundary file next to other boundary configurations such as the boundary type. Processor boundaries are included in the boundary file if the mesh is decomposed. The sublisting is illustrated by the face numbering of the example grid on the l.h.s. of Fig. 1.
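To make the legacy sublisting concrete, the following C++ sketch maps a face index to its boundary patch using only the start/size pairs kept in the boundary file. The struct PatchRange and the function patchOfFace are hypothetical illustrations, not part of the OpenFOAM API.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for one entry of the legacy boundary file:
// the patch covers the faces [start, start + nFaces).
struct PatchRange {
    long start;
    long nFaces;
};

// Returns the patch index of `face`, -1 for an internal face, and -2 if the
// face lies beyond the last patch (invalid input). Assumes the legacy layout:
// internal faces first, then one contiguous sublist per boundary patch.
long patchOfFace(long face, long nInternalFaces,
                 const std::vector<PatchRange>& patches) {
    assert(face >= 0);
    if (face < nInternalFaces) {
        return -1;
    }
    for (std::size_t p = 0; p < patches.size(); ++p) {
        if (face >= patches[p].start &&
            face < patches[p].start + patches[p].nFaces) {
            return static_cast<long>(p);
        }
    }
    return -2;
}
```

A linear scan over the patches is sufficient here because a mesh typically has only a handful of boundary patches.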

This format stores linear polyhedral meshes explicitly and imposes few constraints on the enumeration of the mesh entities. The point enumeration is unconstrained; however, the point order within a face obeys the right-hand rule such that its normal vector points out of the owner cell. The internal faces are subject to a more intricate constraint: they must be sorted in upper triangular order. This means that internal faces are ordered such that, for each cell, stepping through its higher-numbered neighboring cells in incremental order also steps through the corresponding faces in incremental order. Consequently, the owner cell of an internal face always has a smaller cell index than the neighboring cell.
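The upper triangular constraint can be verified in a single pass over the internal faces. This sketch assumes plain owner and neighbour lists restricted to internal faces; isUpperTriangular is a hypothetical helper, not the check implemented in OpenFOAM. Equal neighbours for the same owner are tolerated so that two cells sharing several faces remain valid.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Checks the upper triangular order of internal faces:
//  - every face satisfies owner < neighbour, and
//  - faces are sorted by owner, and faces of the same owner by neighbour.
bool isUpperTriangular(const std::vector<long>& owner,
                       const std::vector<long>& neighbour) {
    assert(owner.size() == neighbour.size());
    for (std::size_t f = 0; f < owner.size(); ++f) {
        if (owner[f] >= neighbour[f]) {
            return false;  // the owner must have the smaller cell index
        }
        if (f > 0) {
            const bool ownerDecreases = owner[f] < owner[f - 1];
            const bool neighbourDecreases =
                owner[f] == owner[f - 1] && neighbour[f] < neighbour[f - 1];
            if (ownerDecreases || neighbourDecreases) {
                return false;
            }
        }
    }
    return true;
}
```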

While the point enumeration remains unconstrained, the sublisting and the upper triangular order of the face enumeration are also maintained for local partitions if a mesh is decomposed for parallel execution. Namely, the internal faces of the global mesh that constitute processor boundary patches are shifted into the boundary sublists. Hence, the processor-local sublists fragment the local-to-global correspondence of faces. The mapping/addressing between processor-local and global enumerations of cells, faces, and points must be stored explicitly for a given decomposition. The face-to-cell connectivity and the fragmentation of the face enumeration complicate the parallel execution of OpenFOAM cases in the following respects:

- The local mesh enumeration is unique to a given partitioning, i.e., the number of processors. Changing the number of processors requires repeating the preprocessing steps for decomposition.

- Post-processing often requires reconstructing all data after the simulation based on the stored local-to-global addressing. The associated steps contribute significantly to the overall time-to-solution, are hardly parallelisable, and their time complexity scales with the number of partitions.

- Storing processor boundary patches duplicates the storage of their constituting faces and points. Faces on processor boundaries are typically stored twice, once for each adjacent partition. Points at corners shared by more than two partitions, so-called globally shared points, are stored multiple times.

3 Coherent mesh representation

We propose a new mesh format that facilitates a global mesh layout with deducible slicing of the mesh through coherence in new downward cell-to-face and face-to-point connectivities. The proposed coherent and sliceable mesh format avoids fragmentation and renders a contiguous access pattern for parallel I/O.

The upper triangular order of the face enumeration is extended to boundary patch faces, which interleave with internal faces. Further, a new constraint sorts the point enumeration based on a ‘walking’ order corresponding to the occurrence of points in faces. The coherent mesh layout can be established by small but fundamental changes to the mesh connectivity, which are referred to as coherence rules:

1) Upper triangular and walking order for faces: Incrementally walking through cells enumerates the faces by ownership and in upper triangular order with respect to neighbors.

2) Walking order for points: Enumerate points in walking order, i.e., in the order of their first occurrence in the faces, which are already ordered by rule 1.

3) Downward cell-to-face connectivity: Represent the ownership of faces by cells in a list of face index offsets marking the start of cell-owned face slices.
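Reading ‘walking order’ as the order of first occurrence, rule 2 becomes a simple renumbering pass. The sketch below assumes that faces are given as lists of point indices already in the face order of rule 1; applyPointWalkingOrder is a hypothetical helper.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Renumbers points in walking order: a point receives the next free index
// when it is first encountered while walking through the (rule-1 ordered)
// faces. `faces` is renumbered in place; the old-to-new map is returned.
std::vector<long> applyPointWalkingOrder(std::vector<std::vector<long>>& faces,
                                         std::size_t nPoints) {
    std::vector<long> oldToNew(nPoints, -1);  // -1 marks "not visited yet"
    long next = 0;
    for (auto& face : faces) {
        for (auto& p : face) {
            assert(p >= 0 && static_cast<std::size_t>(p) < nPoints);
            if (oldToNew[p] < 0) {
                oldToNew[p] = next++;  // first occurrence defines the index
            }
            p = oldToNew[p];
        }
    }
    return oldToNew;
}
```

The returned map can then permute the coordinate list, e.g. `newPoints[oldToNew[i]] = points[i]`.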

Coherence rule 3 can be considered a mere consequence of coherence rule 1. After applying coherence rule 1, the corresponding upward face-to-cell connectivity, represented by an owner list as in Fig. 1, consists of contiguous chunks of equal cell IDs. Hence, the ownership of faces by cells can be stored as suggested by coherence rule 3. The new list is indexed by cell IDs and represents the downward cell-to-face connectivity.
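Because rule 1 sorts faces by owner, the owner list compresses into offsets in the same way as a compressed sparse row (CSR) index. This sketch assumes that ownerStarts carries nCells + 1 entries, the last one being the number of faces; whether the stored list includes that trailing entry is an assumption here, and ownerStartsFromOwner is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Compresses a face-ordered owner list into cell-ordered face offsets:
// the faces owned by cell c are [starts[c], starts[c + 1]).
// Requires the owner list to be sorted by ownership (coherence rule 1).
std::vector<long> ownerStartsFromOwner(const std::vector<long>& owner,
                                       std::size_t nCells) {
    std::vector<long> starts(nCells + 1, 0);
    // Count the faces of each cell, shifted by one slot.
    for (std::size_t f = 0; f < owner.size(); ++f) {
        assert(owner[f] >= 0 && static_cast<std::size_t>(owner[f]) < nCells);
        assert(f == 0 || owner[f - 1] <= owner[f]);
        ++starts[owner[f] + 1];
    }
    // A running sum turns the counts into starting offsets.
    for (std::size_t c = 0; c < nCells; ++c) {
        starts[c + 1] += starts[c];
    }
    return starts;
}
```

Cells that own no faces simply produce two equal consecutive offsets.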

Figure 2 exemplifies the new enumeration, which interleaves boundary and internal faces in an upper triangular and walking order through the cells. The data of the example mesh are listed in Table 1. The ownerStarts list establishes the downward cell-to-face connectivity imposed by coherence rule 3. Hence, the coherent mesh representation explicitly stores only the downward cell-to-face adjacency. The upward face-to-cell adjacency can be generated by plain expansion of the cell-ordered ownerStarts into the face-ordered owner list. The neighbour list interleaves boundary patch faces, which are encoded by \(-(\mathcal {B}_i+1)\), where \(\mathcal {B}_i\) is the global boundary index.
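The expansion of ownerStarts and the decoding of interleaved boundary entries might look as follows. As a sketch, it assumes that ownerStarts has nCells + 1 entries; all function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Expands cell-ordered face offsets into the face-ordered owner list,
// i.e., recovers the upward face-to-cell adjacency.
std::vector<long> ownerFromOwnerStarts(const std::vector<long>& ownerStarts) {
    assert(!ownerStarts.empty());
    std::vector<long> owner;
    owner.reserve(static_cast<std::size_t>(ownerStarts.back()));
    for (std::size_t c = 0; c + 1 < ownerStarts.size(); ++c) {
        for (long f = ownerStarts[c]; f < ownerStarts[c + 1]; ++f) {
            owner.push_back(static_cast<long>(c));
        }
    }
    return owner;
}

// Internal faces store a cell index >= 0 in the neighbour list;
// boundary faces store -(B + 1) with B the global boundary index.
bool isBoundaryFace(long neighbourEntry) { return neighbourEntry < 0; }

long boundaryIndex(long neighbourEntry) {
    assert(isBoundaryFace(neighbourEntry));
    return -neighbourEntry - 1;
}
```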

3.1 Coherence of sliceable mesh entities

The enumeration of mesh entities by walking order/ownership, combined with the cell-to-face and face-to-point connectivities, establishes coherence between mesh entities. The coherence yields a well-defined, downward connection between cell-indexed, face-indexed, and point-indexed lists.

Slices of a given topological dimension are defined by the starting offset indices \(\mathcal {I}^\textrm{dim}_l\) and \(\mathcal {I}^\textrm{dim}_m\), where \(\mathcal {I}^\textrm{dim}_l < \mathcal {I}^\textrm{dim}_m\) for consecutive slices l and m (\(l<m\)). The size of slice l is \(\Delta ^\textrm{dim}_l=\mathcal {I}^\textrm{dim}_m - \mathcal {I}^\textrm{dim}_l\). Alternatively, the terminating offset indices \(\mathcal {S}^\textrm{dim}_k\) and \(\mathcal {S}^\textrm{dim}_l\) determine the end and size of consecutive slices k and l (\(k<l<m\)). Starting and terminating offsets of consecutive slices are related by \(\mathcal {I}^\textrm{dim}_l = \mathcal {S}^\textrm{dim}_k + 1\) and, likewise, \(\mathcal {I}^\textrm{dim}_m = \mathcal {S}^\textrm{dim}_l + 1\), so that \(\Delta ^\textrm{dim}_l = \mathcal {S}^\textrm{dim}_l - \mathcal {S}^\textrm{dim}_k\). The downward topological adjacencies of the coherent mesh representation determine the starting (or terminating) offsets of slices between topological dimensions.
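These offset relations amount to simple list arithmetic. In this sketch (function names hypothetical), an offset list of n slices has n + 1 entries, the last one being the total count of entities of that dimension.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Slice sizes Delta_l = I_{l+1} - I_l from starting offsets I_0 ... I_n.
std::vector<long> sliceSizes(const std::vector<long>& starts) {
    std::vector<long> sizes;
    for (std::size_t l = 0; l + 1 < starts.size(); ++l) {
        assert(starts[l] < starts[l + 1]);  // I_l < I_m for consecutive slices
        sizes.push_back(starts[l + 1] - starts[l]);
    }
    return sizes;
}

// Terminating offsets S_l = I_{l+1} - 1, i.e., I_{l+1} = S_l + 1.
std::vector<long> terminatingOffsets(const std::vector<long>& starts) {
    std::vector<long> ends;
    for (std::size_t l = 0; l + 1 < starts.size(); ++l) {
        ends.push_back(starts[l + 1] - 1);
    }
    return ends;
}
```

For example, starting offsets {0, 3, 7, 12} give the sizes {3, 4, 5} and the terminating offsets {2, 6, 11}.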

The coherence can be retraced in Table 1 starting from the ownerStarts list. For consecutive cell slices \(k<l<m\) starting at \(\mathcal {I}^\textrm{cell}_k\), \(\mathcal {I}^\textrm{cell}_l\), and \(\mathcal {I}^\textrm{cell}_m\), the size of cell slice l is \(\Delta ^\textrm{cell}_l=\mathcal {I}^\textrm{cell}_m - \mathcal {I}^\textrm{cell}_l\).

- Cell-to-face adjacency: The face index at position \(\mathcal {I}^\textrm{cell}_l\) of the ownerStarts list refers to the first face \(\mathcal {I}^\textrm{face}_l\) owned by cell slice l. The face index at position \(\mathcal {I}^\textrm{cell}_m\) in ownerStarts is the starting face offset \(\mathcal {I}^\textrm{face}_m\). Hence, reading the starting face offsets for slices k, l, and m from the ownerStarts list directly determines the sizes of slices k and l.

- Face-to-point adjacency: The coherent slice in point-indexed lists must be determined by the terminating point offsets. The maximum point index in slice k of the faces is the terminating index \(\mathcal {S}^\textrm{point}_k\) of the point slice. Hence, the starting point offset for slice l is \(\mathcal {I}^\textrm{point}_l = \mathcal {S}^\textrm{point}_k + 1\). In other words, point slice offsets are deduced from the maximum point index within each slice of faces.
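The two adjacency rules can be combined into one pass that derives \(\mathcal {I}^\textrm{face}\) and \(\mathcal {I}^\textrm{point}\) from a given list of cell offsets. This sketch assumes that ownerStarts has nCells + 1 entries and that faces are flattened into CSR-style arrays; FlatFaces, SliceOffsets, and coherentOffsets are hypothetical names. It uses a running maximum over all faces read so far, which coincides with the per-slice maximum described above whenever each slice introduces at least one new point.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Flattened faces in an assumed CSR layout: the points of face f are
// facePoints[faceStarts[f]] ... facePoints[faceStarts[f + 1] - 1].
struct FlatFaces {
    std::vector<long> faceStarts;  // nFaces + 1 entries
    std::vector<long> facePoints;  // point indices, already in walking order
};

struct SliceOffsets {
    std::vector<long> face;   // I^face_0 ... I^face_n
    std::vector<long> point;  // I^point_0 ... I^point_n
};

// Derives face and point offsets from cell offsets I^cell (n + 1 entries,
// last entry = nCells) through the downward adjacencies.
SliceOffsets coherentOffsets(const std::vector<long>& cellOffsets,
                             const std::vector<long>& ownerStarts,
                             const FlatFaces& faces) {
    SliceOffsets out;
    // Cell-to-face: read ownerStarts at each cell offset.
    for (long c : cellOffsets) {
        assert(c >= 0 && static_cast<std::size_t>(c) < ownerStarts.size());
        out.face.push_back(ownerStarts[c]);
    }
    // Face-to-point: S^point is the largest point index seen so far,
    // and the next point slice starts at S^point + 1.
    long maxPoint = -1;
    out.point.push_back(0);
    for (std::size_t l = 0; l + 1 < out.face.size(); ++l) {
        const long begin = faces.faceStarts[out.face[l]];
        const long end = faces.faceStarts[out.face[l + 1]];
        for (long i = begin; i < end; ++i) {
            maxPoint = std::max(maxPoint, faces.facePoints[i]);
        }
        out.point.push_back(maxPoint + 1);
    }
    return out;
}
```

Points referenced by the faces of slice l but lying below \(\mathcal {I}^\textrm{point}_l\) belong to lower slices, which is why processor boundaries require the communication described in Sect. 3.1.1.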

The coherence of a mesh cut into n slices defines n mesh partitions bounded by the slice offsets in each topological dimension. Hence, generating the offset lists \(\mathcal {I}^\textrm{dim} = [\mathcal {I}^\textrm{dim}_0, \mathcal {I}^\textrm{dim}_1, \dots , \mathcal {I}^\textrm{dim}_n]\) for each topological dimension \(\textrm{dim} \in \{\textrm{cell},\textrm{face},\textrm{point}\}\) partitions the mesh into contiguous slices of coherent topological entities.
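Any increasing list of cell offsets defines such a partition; the face and point offsets then follow from the downward adjacencies. As an illustrative assumption only, the sketch below splits the cells evenly into n contiguous slices; evenCellOffsets is a hypothetical helper, not a load-balancing method taken from the text.

```cpp
#include <cassert>
#include <vector>

// Splits nCells cells into n contiguous slices of (almost) equal size and
// returns the cell offsets I^cell_0 ... I^cell_n.
std::vector<long> evenCellOffsets(long nCells, long n) {
    assert(n > 0 && nCells >= n);  // guarantees strictly increasing offsets
    std::vector<long> offsets(n + 1);
    for (long l = 0; l <= n; ++l) {
        offsets[l] = (l * nCells) / n;  // floor division spreads the remainder
    }
    return offsets;
}
```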

FVM software packages like OpenFOAM often require partitioned meshes with complete cells on each processor rank to make the constituting faces and points accessible. This is typically handled during the costly preprocessing steps for decomposition in OpenFOAM. Further, if the mesh changes topologically on some or all partitions, a recombination/reconstruction is required to establish the globally modified coherence of the mesh. Note that reconstruction of the coherent mesh representation is only needed if topological changes occur. Decomposition and reconstruction of a coherent mesh can be performed on the fly during mesh input and output. These advantages of the coherent mesh representation are described in the following.

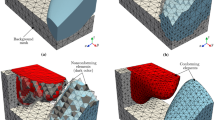

3.1.1 On the fly decomposition of the coherent mesh

Fig. 3 Decomposition of the coherent mesh when loading it from storage. (l.h.s.) Partitioning onto two processors separates the mesh into contiguous cell slices. Processor patch faces are constructed after sliced reading, including communication upward in processor rank. (r.h.s.) Partitioning onto six processors separates the cells and loads the adjacent mesh entities. A rank missing globally shared points must query them from lower processor ranks. Such point-to-point queries can be established because every process has a copy of the list of global point offsets.

Slicing of the coherent mesh can be used directly for partitioning, i.e., case parallelization. Figure 3 shows the decompositions of the example mesh into two and six partitions. The mesh slices render an optimized access pattern for parallel I/O of contiguous data chunks. Hence, each processor loads a dedicated, contiguous slice of cells together with the coherently connected slices of faces and points.

Clearly, only the lowest process, owning cells 0–2 (or cell 0 on six partitions) in Fig. 3, has all mesh entities needed to create a complete local mesh. The remaining partitions lack the mesh data associated with their downward processor neighborhood. Hence, processor boundaries must be constructed through processor-processor communication. Note that the communication pattern is directed upward in processor ranks because of coherence rules 1 and 2 of the coherent mesh format. Generally, the processor patch construction requires two steps:

a) Processor patch faces and points: The lower participating processor of a processor boundary loads the mesh slice including the neighbor data. Due to coherence rule 1, the neighbor data include the cell graph connections across processor boundaries upward in processor rank. The combination of the face offset list \(\mathcal {I}^\textrm{face}\) and the cell graph connections sets up the point-to-point communication of processor patch faces and points to the upper participating processor. The black and gray arrows in Fig. 3 represent the communication of processor patches.

b) Globally shared points: After receiving the processor patch faces, each processor checks for missing points that have been loaded by a processor other than the partner processor of the boundary. These points are referred to as globally shared points. The point index is then looked up in the point offset list \(\mathcal {I}^\textrm{point}\) to identify the slice/partition and, consequently, the processor that read the corresponding point coordinates. Subsequently, this processor is queried to send the coordinates. The red arrows on the r.h.s. of Fig. 3 show the two cases of globally shared points in the example mesh decomposed into six partitions.

3.1.2 On the fly reconstruction of the coherent mesh

Fig. 4 Reconstruction of the example 2D mesh partitioned onto two and six processors on the l.h.s. and r.h.s., respectively. Sorting of the faces and points by walking order must be applied locally. Faces and points of processor patches are only kept on the lower participating rank of a processor boundary. Globally shared point indices must be queried along processor boundaries, indicated as red arrows on the r.h.s.

The reconstruction of the coherent mesh must establish the global enumeration as shown in Fig. 3. As a first step, the processor-local mesh enumeration on each processor must be set to fulfill coherence rules 1 and 2.

Figure 4 illustrates the enumeration after application of the walking order for faces and points on two (l.h.s.) and six processors (r.h.s.), including processor labeling. In a second step, the local counts of cells, faces, and points can be used to establish the global offset lists \(\mathcal {I}^\textrm{cell}\), \(\mathcal {I}^\textrm{face}\), and \(\mathcal {I}^\textrm{point}\). Note that faces and points from processor patches belong to the lower processor rank and must be neglected on the upper processor rank when the offset lists are generated.

a) Processor patch faces and points: The face list must be updated with respect to the local point offset. Additionally, the global indices of points in processor patches must be communicated upward in processor rank. Note that global point indices are calculated by adding the processor-local starting point offset to the local point index.

b) Globally shared points: The remaining global point indices that could not be provided by the lower patch processor have to be queried downward along processor patches. These queries can be routed along processor patches, as illustrated by the red arrows on the r.h.s. of Fig. 4.

3.2 Tree-based data structure for coherent mesh representation

Fig. 5 The coherent mesh can be implemented as a tree-based composite pattern. The l.h.s. shows the three classes of the composite for coherence between mesh components. Branching and leaf components derive from a common base class, which provides the pointer linkage between tree nodes. The r.h.s. illustrates the connection of mesh components in an instantiated coherent mesh tree.

The coherence, namely the downward adjacencies between the mesh entities, can be implemented by a tree-based data structure such as the composite design pattern. The composite pattern comprises three classes. A ‘component’ class is the base class of a ‘composite’ and a ‘leaf’ class. The ‘composite’ class serves as the branching component of the tree data structure and contains pointers to child nodes to create tree connections. The ‘leaf’ class terminates a branch and cannot link to further tree nodes.

The data structure implemented for the coherent mesh uses the composite design pattern as drawn in Fig. 5. The DataComponent is the base class of the tree data structure, namely the ‘component’. It has a pointer to its parent node such that the coherent mesh tree can be doubly linked. Calculation rules for slice offset and size can be injected into the DataComponent by the strategy pattern. The IndexComponent represents the branching ‘composite’ nodes. Hence, the IndexComponent class contains a list of pointers to DataComponent to connect to its child nodes. It loads integer-valued data such as owners, neighbors, and faces of the coherent mesh. The class template FieldComponent serves as the ‘leaf’ class. It stores data of the templated type and, thus, can be used to attach fields. For the coherent mesh, the FieldComponent is used to load point coordinates as a vector field.

Figure 5 illustrates the instantiation of a coherent mesh tree using the composite implementation. The IndexComponent for owner offsets represents the cell-ordered data. The subsequent nodes of type IndexComponent connect the face-ordered data, i.e., face offsets and neighbors. Note that the faces have been flattened into face offsets and flattened faces for array-based storage management. The flattened faces are represented in the child node of the face offsets. Finally, a FieldComponent node containing point coordinates connects to the node of the flattened faces.

Coherence of the data is created by the rules for offsets \(\mathcal {I}\) and sizes \(\Delta\) described in the previous subsection. For instance, the offset and size of the face-ordered neighbours node are determined by the owner offset data of the parent node. The functionals for \(\mathcal {I}\) and \(\Delta\) are injected during node construction using std::function. In general, the functionals could also be passed via other dependency injection mechanisms.
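A compact C++ sketch of such a composite shows how injected std::function rules propagate offsets and sizes from parent to child. Only the class names DataComponent, IndexComponent, and FieldComponent come from the text; all members, signatures, and the example rule faceSliceFromOwners are assumptions, and the sketch does not reproduce the foam-extend implementation.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <utility>
#include <vector>

// 'Component' base: every node knows its parent and evaluates an injected
// rule returning (offset, size) of its slice from the parent node.
class DataComponent {
public:
    using SliceRule =
        std::function<std::pair<long, long>(const DataComponent* parent)>;

    explicit DataComponent(SliceRule rule) : rule_(std::move(rule)) {}
    virtual ~DataComponent() = default;

    void setParent(const DataComponent* parent) { parent_ = parent; }
    std::pair<long, long> slice() const { return rule_(parent_); }

private:
    const DataComponent* parent_ = nullptr;  // upward link (doubly linked tree)
    SliceRule rule_;
};

// 'Composite' node: integer data (e.g. owner offsets, neighbours, faces)
// plus owning links to child nodes.
class IndexComponent : public DataComponent {
public:
    IndexComponent(SliceRule rule, std::vector<long> data)
        : DataComponent(std::move(rule)), data_(std::move(data)) {}

    DataComponent* add(std::unique_ptr<DataComponent> child) {
        child->setParent(this);
        children_.push_back(std::move(child));
        return children_.back().get();
    }
    const std::vector<long>& data() const { return data_; }

private:
    std::vector<long> data_;
    std::vector<std::unique_ptr<DataComponent>> children_;
};

// 'Leaf' node: data of a templated type, e.g. point coordinates.
template <typename T>
class FieldComponent : public DataComponent {
public:
    FieldComponent(SliceRule rule, std::vector<T> data)
        : DataComponent(std::move(rule)), data_(std::move(data)) {}
    const std::vector<T>& data() const { return data_; }

private:
    std::vector<T> data_;
};

// Example rule for a face-ordered child of the owner-offsets node: for the
// parent's cell slice (c0, nc), offset = ownerStarts[c0] and
// size = ownerStarts[c0 + nc] - ownerStarts[c0].
std::pair<long, long> faceSliceFromOwners(const DataComponent* parent) {
    const auto* owners = dynamic_cast<const IndexComponent*>(parent);
    assert(owners != nullptr);
    const std::pair<long, long> cells = owners->slice();
    const long first = owners->data()[cells.first];
    const long last = owners->data()[cells.first + cells.second];
    return {first, last - first};
}
```

With this design, a child node never stores its own offsets: it recomputes them on demand from its parent, so changing the cell slice of the root propagates through the whole tree.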

4 Methods

The coherent mesh is implemented and tested as a data access pattern for parallel I/O in OpenFOAM based on foam-extend 4.1 [33]. The ADIOS2 library has been used to implement the parallel I/O scheme in OpenFOAM. The implementation features parallel I/O of the polyMesh and GeometricField classes of OpenFOAM. The coherent file format is tested and benchmarked using the preprocessing tools blockMesh and decomposePar, and the solver icoFoam with the 3D cavity case [34].

The problem size of the cavity case is varied over grid sizes of 1, 2, 4, 8, 16, 32, 64, 128, 256, and 512 million cells. To measure the wall times of the preprocessing steps (meshing + decomposition), the decomposition ratio is fixed to 128 processors per million cells. For instance, the case with 16 million cells is decomposed onto 2048 processor cores. The wall times of blockMesh and decomposePar are measured for the coherent file format implemented in the ubuntu2004 branch of foam-extend 4.1 [33] and for the collated file format in OpenFOAMv2306 [35].
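As a quick check of this fixed ratio, assuming full Hawk nodes with 128 cores each (two 64-core CPUs), the processor and node counts follow as

\[
N_\textrm{proc} = 128 \cdot \frac{N_\textrm{cells}}{10^{6}}, \qquad N_\textrm{nodes} = \frac{N_\textrm{proc}}{128} = \frac{N_\textrm{cells}}{10^{6}},
\]

so that 16, 64, and 128 million cells correspond to 2048, 8192, and 16384 cores, and the 512 million cell case corresponds to 65,536 cores on 512 nodes.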

During the case simulation with icoFoam, the wall times of writing the velocity, pressure, and flux field data are measured using three modes of the coherent file format. The first mode stores the field data in ADIOS2 data.bp files located in the corresponding time folders and is referred to as ‘time-coherent’. A second mode, called ‘case-coherent’, writes the data of all time steps into a single data.bp file located in the case folder, which is shared among iterations. The third mode enables the asynchronous writing of ADIOS2 while the data.bp file is located in the case folder; it is referred to as ‘async-case-coherent’. The number of aggregators in ADIOS2 is fixed to one aggregator per node and, thus, one shared file per node.

The three modes of the coherent file format are compared to benchmarks using the collated format in OpenFOAMv2306. One collating rank per node is fixed for all benchmarks using the collated format such that the scheme is comparable to the aggregator scheme in ADIOS2. Note that the collated format stores several files within a collating folder, while ADIOS2 aggregates data into one shared file per aggregating process.

The wall time of field output is measured for writing 20 consecutive time steps. The measurements follow a strong scaling scheme for each of the 64, 128, 256, and 512 million cell cases while varying the number of compute nodes. Measurements of the 64 million cell case range from 1 to 64 nodes in steps of factor two. Likewise, the measurements of the 128 and 256 million cell cases start from 2 and 4 nodes, respectively, with the largest decompositions onto 128 and 256 nodes, respectively. The measurements of the 512 million cell case range from 8 up to 4096 nodes. Note that the collated format is only used for the cases with 64 and 128 million grid cells.

All measurements are conducted on the supercomputer Hawk. A compute node of Hawk comprises two AMD EPYC 7742 CPUs with 64 processor cores per CPU. The file storage uses a Lustre file system with 40 object storage targets (OSTs) and 20 object storage servers (OSS). The striping is fixed to 1 MiB stripe size and a stripe count of 40 during all measurements. Note that all measurements are conducted during the production phase of Hawk. Thus, the Lustre file system is shared among all I/O accesses from other jobs and users, which create noise.

5 Results

Fig. 6 The coherent file format outperforms OpenFOAM’s collated format by orders of magnitude. The plot shows the wall time for preprocessing (meshing and decomposition) using the collated and coherent formats in purple and green, respectively. The histograms are plotted on a logarithmic scale, with the contributions of meshing (solid) and decomposition (checkered) stacked on top of each other.

Figure 6 plots the wall times of the serial preprocessing tools blockMesh (solid bars) and decomposePar (dashed bars) as histograms on a logarithmic ordinate. The wall times are measured for the collated (purple bars) and the coherent format (green bars). As a guide, the plot draws horizontal lines at 24 h and at seven days, where the former is the job wall time limit on Hawk. Note that with the collated format, case preprocessing, in particular the decomposition, could not be completed within the wall time limit on Hawk for cases larger than 128 million grid cells.

The comparison of meshing times up to 128 million grid cells shows that blockMesh takes roughly twice as long with the coherent format as with the collated format. Mesh generation and the in-application format of the polyMesh class still use the legacy mesh representation, which imposes less strict requirements, such as the upper triangular order of internal faces, an arbitrary order of points, and boundary faces partitioned into patches. Hence, using blockMesh with the coherent mesh representation and file format requires additional sorting and conversion steps to impose the stricter coherence rules described in Sect. 3, and this additional computation adds to the wall time of mesh generation with blockMesh.

Decomposition is the dominant contribution to the wall time of preprocessing when using the collated file format. The decomposition of the case with 64 million grid cells onto 8192 processors takes approximately 2.5 h. Decomposing the 128 million cell case onto 16384 processors takes more than 11.8 h.

Decomposition using the coherent mesh format skips the computation of global-to-processor-local addressing of mesh entities because the coherent mesh retains its global representation after decomposition. Without this addressing computation, the wall time of decomposePar is reduced to less than 7 min and less than 11 min for the 64 million and 128 million grid cell cases, respectively. Furthermore, the decomposition of the 256 million and 512 million grid cell cases takes roughly 20 min and 45 min, respectively.

Fig. 7 The coherent file format scales well to the largest partition of the Hawk supercomputer. Box plots of the wall time of 20 output steps are drawn against the number of compute nodes for fixed case sizes of 64, 128, 256, and 512 million grid cells in panels A, B, C, and D, respectively. The time-coherent, case-coherent, and async-case-coherent modes are compared for all mesh sizes. The comparison with the collated format is only available for the mesh sizes of panels A and B.

The wall time of writing field data during production runs is measured and compared for the collated and coherent file formats. For the cavity case solved with icoFoam, the output of simulation results comprises the pressure, velocity, and flux fields.

Figure 7 shows four panels, A, B, C, and D, corresponding to writing the field data of simulations with 64, 128, 256, and 512 million grid cells, respectively. The box plots show the measurements for writing with the collated format and with the three modes of the coherent format, i.e., time-coherent, case-coherent, and async-case-coherent. Only the 64 million and 128 million cell cases could be preprocessed with the collated format, so only the corresponding panels contain wall times for the collated format, shown as gray box plots. Note that measurements (data not shown) using OpenFOAM’s legacy file format without file collation show quantitatively similar and qualitatively equal results to those using the collated file format.

The time-coherent and case-coherent output modes show wall times comparable to or slightly lower than those of the collated format. For all synchronous output formats, the wall times of the strong scaling benchmarks decrease with increasing node count for small to medium node counts up to 64 nodes. A turning point typically arises at 128 nodes, regardless of mesh size.

When asynchronous writing is enabled with the async-case-coherent mode, the time spent in the profiled writing functionality of OpenFOAM drops to sub-second levels. The asynchronous mode hides the latency and limited bandwidth of data caching and writing to the file system. Still, the wall times of the asynchronous mode increase with the number of nodes from 128 nodes onward.

Detailed profiling of the I/O backend (data not shown) identifies adios2::Engine::EndStep as the single most dominant function in the asynchronous case-coherent mode. This method prepares and synchronizes the global data view of ADIOS2 based on offsets and sizes.
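The global data view can be illustrated by how ADIOS2 describes a global array: a global shape plus, for each writer, a start offset and a count. The following plain-Python sketch (no MPI; the function name `global_array_layout` is hypothetical) derives these values for consecutive, contiguous slices with an exclusive prefix sum. In an MPI code, each rank would typically obtain its start with an exclusive scan such as MPI_Exscan.

```python
from itertools import accumulate


def global_array_layout(local_counts: list[int]) -> tuple[int, list[tuple[int, int]]]:
    """Global shape and per-rank (start, count) of a 1-D array written as
    consecutive, contiguous slices in rank order."""
    # Exclusive prefix sum: rank r starts where rank r-1 ends.
    starts = [0, *accumulate(local_counts)][:-1]
    shape = sum(local_counts)
    return shape, list(zip(starts, local_counts))


# Three ranks holding 3, 5, and 2 entries of a distributed field.
shape, layout = global_array_layout([3, 5, 2])
print(shape, layout)  # 10 [(0, 3), (3, 5), (8, 2)]
```

For a static mesh, these offsets and sizes are identical in every output step.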

Moreover, panel D in Fig. 7 documents a breakthrough. Using the async-case-coherent mode of the coherent file format, the wall time of writing the field data of the 512 million cell case for 20 time steps was measured on up to 4096 nodes of Hawk, which amounts to 524,288 CPU cores. To the authors’ knowledge, this is the largest OpenFOAM run reported to date. The legacy format without file collation cannot be used at this scale because it would exceed the quota limits of the Lustre file system, and the collated format is infeasible because of the wall time limit on the preprocessing steps.

6 Discussion

The coherent mesh representation is deliberately minimal compared to the representations in DMPlex, HOPR, MOAB, DOLFIN, PUMI, and AOMD, which have been designed for the finite element method (FEM), high-order discontinuous Galerkin (DG) methods, or the general numerical solution of partial differential equations. The FVM with co-located variables used in OpenFOAM only requires a representation that neglects element and face types. Still, formats that fully represent and duplicate face and point data for each cell, such as the HOPR mesh format, could be optimized with approaches like the presented coherent mesh layout.

The upper triangular order required by the coherence rule for faces is already applied inherently in OpenFOAM. More generally, the newly presented coherent mesh representation can be utilized by other engineering software that uses linear polyhedral grids.

Software that can accommodate the presented tree data structure could profit from the design suggestion in Sect. 3.2. The coherent mesh tree inverts the data management compared to the legacy formats in OpenFOAM. The mesh class in OpenFOAM contains various data arrays for the different mesh entities. Field classes contain a reference to the ‘global’ mesh instance of the simulation, which exposes the entire interface of the mesh class to field instances. In contrast, the coherent mesh tree stores the data of mesh entities in individual tree nodes and links them by their relational connections. Hence, the tree structure abstracts the rationale of individual connections and directly couples only related mesh entities. Fields can be inserted into the tree by attaching them to the node of the relevant topological dimension. The coupling between mesh and fields can thus be loosened compared to OpenFOAM, where every field contains the full mesh interface. The coupling of fields to mesh components was not implemented in this study because the remaining design of the test application in OpenFOAM would require even deeper refactoring. Future work could investigate how the coherent mesh tree structure can coherently bind co-located fields into the tree data management.
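The following minimal Python sketch shows the tree idea under the assumption of one node per topological dimension (cells, faces, points), with fields attached to the node of their dimension. The class `MeshNode` and its methods are hypothetical and are not the data structure of the exaFOAM implementation. A field is validated only against the node it is attached to, so it never sees the whole mesh interface.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MeshNode:
    """Hypothetical tree node holding the entities of one topological dimension."""
    name: str                                               # e.g. "cells", "faces", "points"
    size: int                                               # number of entities in this node
    connectivity: list = field(default_factory=list)        # e.g. face-to-point lists
    children: list[MeshNode] = field(default_factory=list)  # lower-dimensional nodes
    fields: dict[str, list] = field(default_factory=dict)   # fields living on this node

    def add_child(self, child: MeshNode) -> MeshNode:
        """Link a lower-dimensional entity node below this one."""
        self.children.append(child)
        return child

    def attach_field(self, name: str, values: list) -> None:
        """Attach a field that provides one value per entity of this node."""
        if len(values) != self.size:
            raise ValueError(f"{name}: expected {self.size} values, got {len(values)}")
        self.fields[name] = values

    def find(self, name: str) -> Optional[MeshNode]:
        """Depth-first search for the node with the given name."""
        if self.name == name:
            return self
        for child in self.children:
            hit = child.find(name)
            if hit is not None:
                return hit
        return None


# Two hexahedra sharing one face: 2 cells, 11 faces, 12 points.
cells = MeshNode("cells", size=2)
faces = cells.add_child(MeshNode("faces", size=11))
faces.add_child(MeshNode("points", size=12))
cells.attach_field("p", [101325.0, 101300.0])  # cell-centred pressure
faces.attach_field("phi", [0.0] * 11)          # face flux
```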

Further, note that the workflow used for the test case only included the serial preprocessing tools for meshing and decomposition, namely the OpenFOAM tools blockMesh and decomposePar. The decomposition step includes a partitioning logic that optimizes processor boundaries to reduce communication during parallel solver execution. In practice, the cells of the coherent mesh are rearranged accordingly, and the offsets of the partitioned slices are saved as an additional variable in the ADIOS2 mesh data file. While the current approach requires pre-partitioning, future efforts will integrate the partitioning logic on the fly. It is emphasized that partitioning the coherent mesh by straightforward division into slices, relying only on its sliceability, is not suitable for optimized parallel execution at scale.
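The rearrangement of cells according to a precomputed partition can be sketched as a stable counting sort that also yields the slice offsets. This Python sketch assumes that a partitioner has already assigned a processor index to every cell. The function name `partition_slices` is hypothetical, and the subsequent renumbering of faces and points according to the coherence rules is omitted.

```python
def partition_slices(cell_partition: list[int], n_parts: int) -> tuple[list[int], list[int]]:
    """Stable reordering of cells so that each partition forms one contiguous slice.

    Returns (order, offsets): order[new] is the old cell index, and partition p
    owns the new cell indices offsets[p] .. offsets[p + 1] - 1.
    """
    counts = [0] * n_parts
    for p in cell_partition:
        counts[p] += 1
    offsets = [0] * (n_parts + 1)
    for p in range(n_parts):
        offsets[p + 1] = offsets[p] + counts[p]
    # Counting sort: cells keep their relative order within each partition.
    cursor = offsets[:-1]  # slicing creates a copy of the slice starts
    order = [0] * len(cell_partition)
    for old, p in enumerate(cell_partition):
        order[cursor[p]] = old
        cursor[p] += 1
    return order, offsets


order, offsets = partition_slices([1, 0, 1, 0, 2], n_parts=3)
print(order, offsets)  # [1, 3, 0, 2, 4] [0, 2, 4, 5]
```

The offsets list is the kind of small per-partition variable that would be stored alongside the mesh data.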

Further, the legacy file formats of OpenFOAM require reconstruction of a decomposed case after a parallel simulation using the reconstructPar tool. For the coherent file format, the reconstruction tool can be omitted if the mesh enumeration remains unchanged. On-the-fly reconstruction is required only if the mesh topology changes during the simulation. The reconstruction algorithm for the coherent mesh representation is described in Sect. 3.1.2 but was not benchmarked in this study. It will be explored in future work with a focus on test cases that include parallel meshing workflows or dynamic mesh simulations.

The results of the preprocessing benchmarks in Sect. 5 show major improvements in the decomposition. Implementing the coherent mesh in decomposePar avoids generating local-to-global mesh addressing. In practice, the corresponding algorithms contain nested loops with an asymptotic time complexity of \(O(N^2)\). This computational work can be omitted owing to the inherently global data management of the coherent mesh representation. Hence, preprocessing with the coherent file format outperforms the collated format by orders of magnitude at scale, where the improved algorithmic time complexity becomes decisive.
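Why the addressing work disappears can be sketched as follows. If each processor owns one contiguous slice of a globally numbered entity list, the local-to-global mapping reduces to adding the slice offset. Only the \(P+1\) offsets must then be stored, instead of one addressing entry per entity. The function names below are hypothetical. The code illustrates the principle and is not the decomposePar implementation.

```python
from bisect import bisect_right


def local_to_global(rank: int, local_index: int, offsets: list[int]) -> int:
    """Global index of a rank-local entity in a sliced, globally numbered list."""
    return offsets[rank] + local_index


def global_to_local(global_index: int, offsets: list[int]) -> tuple[int, int]:
    """(rank, local index) of a global entity, found by binary search, O(log P)."""
    # bisect_right skips empty slices that share the same offset.
    rank = bisect_right(offsets, global_index) - 1
    return rank, global_index - offsets[rank]


offsets = [0, 4, 9, 12]                 # three ranks owning 4, 5, and 3 entities
print(local_to_global(2, 1, offsets))   # 10
print(global_to_local(4, offsets))      # (1, 0)
```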

Specifically, coherence rule 1 entails an initial step of sorting faces in increasing order of their higher-numbered neighbor cell (upper triangular order). Subsequently, the faces are stably sorted by their owner cells, following the walking order. Sorting by owner cells therefore requires a stable sort algorithm, such as merge sort. Performed in place, this algorithm has a time complexity of \(O(N_f \log^2 N_f)\), which can be reduced to log-linear time complexity, \(O(N_f \log N_f)\), with sufficient additional memory, where \(N_f\) denotes the number of faces.
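The two-step sort of coherence rule 1 can be sketched in Python for internal faces given as owner and neighbour cell indices. The sketch assumes that owner[f] < neighbour[f] for every internal face and that cell indices already follow the walking order. Boundary faces, which the full rule in Sect. 3 may also treat, are not covered. Python's built-in `sorted` is a stable Timsort that uses additional memory, which corresponds to the log-linear case above. The function name is hypothetical.

```python
def upper_triangular_order(owner: list[int], neighbour: list[int]) -> list[int]:
    """Permutation (new position -> old face index) putting internal faces in
    upper triangular order."""
    faces = range(len(owner))
    # Step 1: order faces by their higher-numbered neighbour cell.
    by_neighbour = sorted(faces, key=lambda f: neighbour[f])
    # Step 2: stable sort by owner cell, so faces of the same owner keep
    # their increasing-neighbour order from step 1.
    return sorted(by_neighbour, key=lambda f: owner[f])


owner = [1, 0, 0, 1]
neighbour = [2, 2, 1, 3]
print(upper_triangular_order(owner, neighbour))  # [2, 1, 0, 3]
```

The result equals a single sort with the composite key (owner, neighbour). The two-step form mirrors the description of rule 1.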

The walking order for points in coherence rule 2 requires a single loop over all points in the face list, which enumerates unvisited points and stores the reverse mapping to the old point indices. This operation has linear time complexity, with approximately \(\langle N_{ppf}\rangle \times N_f\) iterations, where \(\langle N_{ppf}\rangle\) denotes the average number of points per face. In a hexahedral mesh, \(N_{ppf}=4\) for all faces in the face list.
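A Python sketch of the point walking order follows. A single pass over the face-point lists assigns new indices to points in order of first appearance and records the reverse mapping. The function name is hypothetical. In this sketch, points not referenced by any face keep the marker -1.

```python
def point_walking_order(face_points: list[list[int]], n_points: int) -> tuple[list[int], list[int]]:
    """Renumber points by first appearance while walking the face list.

    Returns (old_to_new, new_to_old); the loop body runs once per face-point
    label, i.e. about <N_ppf> * N_f times.
    """
    old_to_new = [-1] * n_points  # -1 marks a point not yet visited
    new_to_old = []               # reverse mapping to the old point indices
    for face in face_points:
        for p in face:
            if old_to_new[p] < 0:  # first visit of this point
                old_to_new[p] = len(new_to_old)
                new_to_old.append(p)
    return old_to_new, new_to_old


faces = [[5, 2, 7, 0], [2, 5, 3, 1], [0, 7, 6, 4]]
old_to_new, new_to_old = point_walking_order(faces, n_points=8)
print(new_to_old)  # [5, 2, 7, 0, 3, 1, 6, 4]
```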

Similarly, coherence rule 3 can be implemented with linear time complexity concerning the number of faces. The application of permutations on face-ordered and point-ordered lists also exhibits linear time complexity relative to the number of faces and points, respectively.
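Applying the resulting permutations is a gather operation over each list. The following hypothetical helper assumes that `face_order[new]` gives the old face index and that the point maps come from a walking-order step such as the one for rule 2. Each list is traversed once, so the cost is linear in the number of faces (for a bounded number of points per face) and in the number of points. The sketch does not implement rule 3 itself.

```python
def apply_permutations(face_points: list[list[int]], points: list,
                       face_order: list[int], old_to_new: list[int],
                       new_to_old: list[int]) -> tuple[list[list[int]], list]:
    """Reorder face-ordered and point-ordered lists in linear time.

    face_order[new] = old face index; new_to_old[new] = old point index;
    old_to_new[old] = new point index.
    """
    # Face-ordered data: gather faces in the new order, relabelling their points.
    new_faces = [[old_to_new[p] for p in face_points[f]] for f in face_order]
    # Point-ordered data (e.g. coordinates): gather in the new point order.
    new_points = [points[p] for p in new_to_old]
    return new_faces, new_points


new_faces, new_points = apply_permutations(
    [[0, 1], [1, 2]], ["a", "b", "c"],
    face_order=[1, 0], old_to_new=[2, 0, 1], new_to_old=[1, 2, 0])
print(new_faces, new_points)  # [[0, 1], [2, 0]] ['b', 'c', 'a']
```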

Furthermore, mesh and field reconstruction is required as a post-processing step after an OpenFOAM simulation using the collated format. Reconstruction is redundant if the coherent data format is used, which provides another source of time savings in the workflow toward case analysis. These potential time savings are not quantified in this paper.

The benchmarks using the coherent file format for field output during simulation were slightly faster than the output using the collated format, even though the coherent file format requires additional conversion. Note that the I/O of co-located fields naturally binds to the coherent mesh representation. OpenFOAM’s internal field (and mesh) data structure remains unchanged, for instance, in partitioning internal and boundary field data. Therefore, the output routine has to convert the in-application field representation to the coherent data structure to obtain optimized I/O data access patterns. Despite this additional conversion work involving global communication, the output using the parallel I/O of ADIOS2 improved over the direct I/O of OpenFOAM-native data structures in the collated file format.

Using ADIOS2 also facilitates asynchronous writing, which is activated by the runtime switch AsyncWrite in a designated runtime configuration file. The wall time of asynchronous output with ADIOS2 increased in all measurements with more than 64 nodes. The Engine::EndStep() function was identified as the sole cause of this scalability issue. This function prepares the metadata for data aggregation. In particular, metadata are communicated and synchronized in every call to Engine::EndStep(), which is invoked after writing each simulation time step. ADIOS2 provides the function Engine::LockWriterDefinitions() to lock unchanged metadata so that repeated communication can be omitted. However, this function is not implemented for the BP5 engine as of version 2.9.1, which was used in this study.

Further, a best-practice implementation of asynchronous I/O with ADIOS2 requires marking communication-free computation blocks inside the application with the functions EnterComputationBlock() and ExitComputationBlock(). ADIOS2 defers data aggregation to these blocks to avoid interfering with the application’s communication. This was omitted in this study and will be investigated in future work to separate the communication of the asynchronous I/O from that of the solver.

7 Conclusion

This paper presented a representation and algorithmic strategies for managing linear unstructured polyhedral meshes. The coherent mesh representation was introduced with a particular focus on optimized data access patterns for parallel I/O on distributed file systems. The coherence provides an inherently global view across processors, namely a contiguous data access pattern that naturally partitions the data. The parallel decomposition and reconstruction capabilities, which rely on this inherently global view, were explained. Topological adjacencies and cell graph connectivities are resolved during parallel I/O with the contiguous data access pattern.

The algorithmic improvements were demonstrated in an implementation and application using OpenFOAM’s core libraries. The parallelization and global layout of the coherent mesh improved the time-to-solution of the OpenFOAM workflow by orders of magnitude. Moreover, adding the parallel I/O capability to OpenFOAM enabled runs with larger core counts and grid sizes, which could not be realized with either of the legacy formats.

Future work will investigate the usability of the coherent mesh representation with extended features of the FVM. While dynamically moving/morphing meshes can use the presented reconstruction scheme, topologically dynamic/adaptive meshes require further algorithmic investigation. Further, partitioning after mesh loading as well as dynamic load balancing will be investigated. Implications for the post-processing of selected regions arise because an arbitrary cell selection can easily load the corresponding coherent faces, points, and fields.

Code availability

The source code implementing the coherent file format into foam-extend 4.1 can be found at https://code.hlrs.de/exaFOAM.

References

Peric M (1985) A finite volume method for the prediction of three-dimensional fluid flow in complex ducts [Ph.D. thesis]. Imperial College London (University of London); Available from: http://hdl.handle.net/10044/1/7601

Versteeg HK, Malalasekera W (1995) An introduction to computational fluid dynamics—the finite volume method. Addison-Wesley-Longman

Moukalled F, Mangani L, Darwish M (2016) The finite volume method. Springer

Mavriplis DJ (1997) Unstructured grid techniques. Annu Rev Fluid Mech 29(1):473–514. https://doi.org/10.1146/annurev.fluid.29.1.473

Weller HG, Tabor G, Jasak H, Fureby C (1998) A tensorial approach to computational continuum mechanics using object-oriented techniques. Comput Phys 12(6):620–631. https://doi.org/10.1063/1.168744

Godoy WF, Podhorszki N, Wang R, Atkins C, Eisenhauer G, Gu J, et al (2020) ADIOS 2: the adaptable input output system. A framework for high-performance data management. SoftwareX. 12:100561. https://doi.org/10.1016/j.softx.2020.100561

Atkins C, Eisenhauer GS, Godoy WF, Gu J, Podhorszki N, Wang RJ. ADIOS2: the adaptable input output system version 2. Available from: https://github.com/ornladios/ADIOS2

Thakur R, Gropp W, Lusk E (2002) Optimizing noncontiguous accesses in MPI-IO. Parallel Comput 28(1):83–105. https://doi.org/10.1016/S0167-8191(01)00129-6

Gropp W, Hoefler T, Thakur R, Lusk E (2014) Using advanced MPI: modern features of the message-passing interface. The MIT Press

Rew R, Davis G (1990) NetCDF: an interface for scientific data access. IEEE Comput Graphics Appl 10(4):76–82. https://doi.org/10.1109/38.56302

Brown SA, Folk M, Goucher G, Rew R, Dubois PF (1993) Software for portable scientific data management. Comput Phys 7(3):304–308. https://doi.org/10.1063/1.4823180

The HDF Group, Koziol Q.: HDF5-Version 1.12.0. https://doi.org/10.11578/dc.20180330.1

Poeschel F, Juncheng E, Godoy WF, Podhorszki N, Klasky S, Eisenhauer G, et al (2021) Transitioning from file-based HPC workflows to streaming data pipelines with openPMD and ADIOS2. In: Smoky Mountains Computational Sciences and Engineering Conference. Springer; p. 99–118. https://doi.org/10.1007/978-3-030-96498-6_6

Laufer M, Fredj E. High performance parallel I/O and in-situ analysis in the WRF model with ADIOS2. https://doi.org/10.48550/arXiv.2201.08228

Fredj E, Delorme Y, Jubran S, Wasserman M, Ding Z, Laufer M. Accelerating WRF I/O performance with ADIOS2 and network-based streaming. https://doi.org/10.48550/arXiv.2304.06603

Isaac T, Knepley MG. Support for non-conformal meshes in PETSc’s DMPlex interface. https://doi.org/10.48550/arXiv.1508.02470

Hapla V, Knepley MG, Afanasiev M, Boehm C, van Driel M, Krischer L et al (2021) Fully parallel mesh I/O using PETSc DMPlex with an application to waveform modeling. SIAM J Sci Comput 43(2):C127–C153. https://doi.org/10.1137/20M1332748

Logg A (2009) Efficient representation of computational meshes. Int J Comput Sci Eng 4(4):283–295. https://doi.org/10.1504/IJCSE.2009.029164

Logg A, Wells GN (2010) DOLFIN: automated finite element computing. ACM Trans Math Softw. 37(2). https://doi.org/10.1145/1731022.1731030

Alnæs MS, Blechta J, Hake J, Johansson A, Kehlet B, Logg A, et al (2015) The FEniCS project version 1.5. Arch Numer Softw. 3(100). https://doi.org/10.11588/ans.2015.100.20553

Tautges TJ, Ernst C, Stimpson C, Meyers RJ, Merkley K (2004) MOAB: a mesh-oriented database. Tech Report SAND2004-1592, Sandia National Laboratories. https://doi.org/10.1016/C2009-0-24909-9

Hindenlang F, Bolemann T, Munz CD (2015) Mesh curving techniques for high order discontinuous Galerkin simulations. In: IDIHOM: Industrialization of High-order Methods - A Top-down Approach: Results of a Collaborative Research Project Funded by the European Union, 2010–2014. Cham: Springer; p. 133–152. https://doi.org/10.1007/978-3-319-12886-3_8

Ibanez DA, Seol ES, Smith CW, Shephard MS (2016) PUMI: parallel unstructured mesh infrastructure. ACM Trans Math Softw. 42(3). https://doi.org/10.1145/2814935

Shephard MS, Seol S (2009) Flexible distributed mesh data structure for parallel adaptive analysis, chap 19. Wiley; pp 407–435. https://doi.org/10.1002/9780470558027.ch19

Seol S, Smith CW, Ibanez DA, Shephard MS (2012) A parallel unstructured mesh infrastructure. In: 2012 SC Companion: High Performance Computing, Networking Storage and Analysis; pp 1124–1132. https://doi.org/10.1109/SC.Companion.2012.135

Baur DG, Edwards HC, Cochran WK, Williams AB, Sjaardema GD. SIERRA toolkit computational mesh conceptual model. https://doi.org/10.2172/976950

Sierra Toolkit Manual Version 4.48. 2018; https://doi.org/10.2172/1429968

Garimella RV (2002) Mesh data structure selection for mesh generation and FEA applications. Int J Numer Meth Eng 55(4):451–478. https://doi.org/10.1002/nme.509

Jones T. CPFDSoftware/GMV: GMV (general mesh viewer) repository for GPLv3 source code, managed by CPFD software. Available from: https://github.com/CPFDSoftware/gmv

Schoof LA, Yarberry VR (1994) EXODUS II: a finite element data model. https://doi.org/10.2172/10102115

Remacle JF, Shephard MS (2003) An algorithm oriented mesh database. Int J Numer Meth Eng 58(2):349–374. https://doi.org/10.1002/nme.774

Remacle JF, Klaas O, Flaherty JE, Shephard MS (2002) Parallel algorithm oriented mesh database. Eng Comput 18:274–284. https://doi.org/10.1007/s003660200024

Gschaider B, Nilsson H, Rusche H, Jasak H, Beaudoin M, Skuric V. foam-extend-4.1 ubuntu2004 branch. https://sourceforge.net/p/foam-extend/foam-extend-4.1/ci/ubuntu2004/tree/

High Performance Computing Technical Committee. OpenFOAM HPC benchmark suite. https://develop.openfoam.com/committees/hpc/-/tree/develop/

OpenCFD Ltd. OpenFOAM. https://develop.openfoam.com/Development/openfoam

Acknowledgements

The authors thank Mark Oleson and Ruonan (Jason) Wang for their helpful discussions.

Funding

Open Access funding enabled and organized by Projekt DEAL. The simulations were performed on the national supercomputer HPE Apollo Hawk at the High-Performance Computing Center Stuttgart (HLRS) under the grant number HLRSexaFOAM/44230. The exaFOAM project on which this work is based has received funding from the European High-Performance Computing Joint Undertaking (JU) under grant agreement No 956416. The JU receives support from the European Union’s Horizon 2020 research and innovation program and France, Germany, Spain, Italy, Croatia, Greece, and Portugal. The project on which this report is based was funded by the German Federal Ministry of Education and Research under the funding codes 16HPC022K and 16HPC024. The responsibility for the content of this publication lies with the authors.

Author information

Contributions

R.G.W., H.R., and S.L. contributed to the study conception and design. Software development was performed by R.G.W. and S.L. Data collection was performed by R.G.W. and A.R. Analysis was performed by all authors. Funding was acquired by H.R. and A.R. Compute resources were acquired by F.C.C.G. The first draft of the manuscript was written by R.G.W. and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Ethics approval

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Weiß, R.G., Lesnik, S., Galeazzo, F.C.C. et al. Coherent mesh representation for parallel I/O of unstructured polyhedral meshes. J Supercomput 80, 16112–16132 (2024). https://doi.org/10.1007/s11227-024-06051-7

DOI: https://doi.org/10.1007/s11227-024-06051-7