Abstract

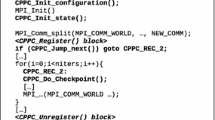

Future exascale systems, comprising millions of cores, will exhibit high failure rates, so long-running applications will need new fault tolerance techniques to run to completion. The Fault Tolerance Working Group within the MPI Forum has presented the User Level Failure Mitigation (ULFM) proposal, which provides new functionality for implementing resilient MPI applications. In this work, the CPPC checkpointing framework is extended to exploit the new ULFM functionality. The proposed solution obtains resilient MPI applications transparently by instrumenting the original application code. In addition, a multithreaded multilevel checkpointing scheme, in which checkpoint files are stored at different memory levels, improves the scalability of the solution. The experimental evaluation shows low overhead when tolerating failures in one or several MPI processes.
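
The multithreaded multilevel checkpointing idea can be illustrated with a minimal sketch. This is not CPPC code, and it does not use MPI or ULFM. It assumes two hypothetical storage levels represented by plain directories (`local_dir` as the fast level, `remote_dir` as the slower, more reliable one), and the function names `write_checkpoint` and `load_checkpoint` are invented for illustration. The checkpoint is written synchronously to the fast level, and a helper thread copies it to the slow level while the application keeps running.

```python
import os
import pickle
import shutil
import threading


def write_checkpoint(state, step, local_dir, remote_dir):
    """Save `state` at the fast level, then copy it to the slow level in the background.

    Both directories are hypothetical stand-ins for two storage levels.
    Returns the helper thread so the caller can wait for the copy if needed.
    """
    name = f"ckpt_{step}.pkl"
    local_path = os.path.join(local_dir, name)

    # Level 1: blocking write to fast storage.
    with open(local_path, "wb") as f:
        pickle.dump(state, f)

    # Level 2: a helper thread copies the file to slower storage,
    # so the caller can resume computing right away.
    copier = threading.Thread(
        target=shutil.copy,
        args=(local_path, os.path.join(remote_dir, name)),
    )
    copier.start()
    return copier


def load_checkpoint(step, local_dir, remote_dir):
    """Restore from the first level (fastest first) that holds the checkpoint."""
    name = f"ckpt_{step}.pkl"
    for level in (local_dir, remote_dir):
        path = os.path.join(level, name)
        if os.path.exists(path):
            with open(path, "rb") as f:
                return pickle.load(f)
    raise FileNotFoundError(name)
```

In this sketch the caller should `join()` the returned thread before relying on the slow-level copy, for example before deleting the fast-level file. A real implementation would also need to handle partially written files and coordinate checkpoints across processes.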

Acknowledgments

This research was supported by the Ministry of Economy and Competitiveness of Spain and FEDER funds of the EU (Project TIN2013-42148-P, CAPAP-H5 network TIN2014-53522-REDT, and the predoctoral Grant of Nuria Losada ref. BES-2014-068066) and by the Galician Government (Xunta de Galicia) and FEDER funds of the EU under the Consolidation Program of Competitive Research (ref. GRC2013/055).

Cite this article

Losada, N., Cores, I., Martín, M.J. et al. Resilient MPI applications using an application-level checkpointing framework and ULFM. J Supercomput 73, 100–113 (2017). https://doi.org/10.1007/s11227-016-1629-7

DOI: https://doi.org/10.1007/s11227-016-1629-7