Abstract

Generalized fused Lasso (GFL) is a powerful method based on adjacent relationships or the network structure of data. It is used in a number of research areas, including clustering, discrete smoothing, and spatio-temporal analysis. When applying GFL, the specific optimization method used is an important issue. In generalized linear models, efficient algorithms based on the coordinate descent method have been developed for trend filtering under the binomial and Poisson distributions. However, to apply GFL to other distributions, such as the negative binomial distribution, which is used to deal with overdispersion in the Poisson distribution, or the gamma and inverse Gaussian distributions, which are used for positive continuous data, an algorithm for each individual distribution must be developed. To unify GFL for distributions in the exponential family, this paper proposes a coordinate descent algorithm for generalized linear models. To illustrate the method, a real data example of spatio-temporal analysis is provided.


1 Introduction

Assume we have grouped data such that \(y_{j 1}, \ldots , y_{j n_j}\) are observations of the jth group (\(j \in \{ 1, \ldots , m \}\)) for m groups. Further, assume the following generalized linear models (GLMs; Nelder and Wedderburn 1972) with canonical parameter \(\theta _{ji}\ (i \in \{ 1, \ldots , n_j \})\) and dispersion parameter \(\phi > 0\):

where \(y_{j i}\) is independent with respect to j and i, \(a_{ji}\) is a constant defined by

\(a (\cdot ) > 0\), \(b (\cdot )\), and \(c (\cdot )\) are known functions, and \(b (\cdot )\) is differentiable.

The \(\theta _{ji}\) has the following structure:

where \(h (\cdot )\) is a known differentiable function, \(\beta _j\) is an unknown parameter, and \(q_{ji}\) is a known term called the offset, which is zero in many cases. Although \(\theta _{ji}\) depends not only on the group but also on the individual, the jth group is characterized by a common parameter \(\beta _j\). We are thus interested in describing the relationship among the m groups. Here, the expectation of \(y_{ji}\) is given by

where \(\mu (\cdot )\) is a known function and \(\dot{b} (\cdot )\) is the derivative of \(b (\cdot )\), i.e., \(\dot{b} (\theta ) = d b (\theta ) / d \theta \). Furthermore, \(\mu ^{-1} (\cdot )\) is a link function, and \(h (\cdot )\) is the identity function, i.e., \(h (\eta ) = \eta \), when \(\mu ^{-1} (\cdot )\) is a canonical link. Tables 1, 2, and 3 summarize the relationships between model (1) and each individual distribution. In this paper, we consider clustering for m groups or discrete smoothing via generalized fused Lasso (GFL; e.g., Höfling et al. 2010; Ohishi et al. 2021).

GFL is an extension of fused Lasso (Tibshirani et al. 2005) which can incorporate relationships among multiple variables, such as adjacent relationships and network structure, into parameter estimation. For example, Xin et al. (2014) applied GFL to the diagnosis of Alzheimer’s disease by expressing the structure of structural magnetic resonance images of human brains as a 3D grid graph; Ohishi et al. (2021) applied GFL to model spatial data based on geographical adjacency. Although the GFL in these particular instances is based on one factor (brain structure or a geographical relationship), it can deal with relationships based on multiple factors. For example, we can define an adjacent relationship for spatio-temporal cases based on two factors by combining geographical adjacency and the order of time. Yamamura et al. (2021), Ohishi et al. (2022), and Yamamura et al. (2023) dealt with multivariate trend filtering (e.g., Tibshirani 2014) based on multiple factors via GFL and applied it to the estimation of spatio-temporal trends. Yamamura et al. (2021) and Ohishi et al. (2022) used a logistic regression model, which coincides with model (1) when \(n_j = 1, q_{j i} = 0\ (\forall j \in \{ 1, \ldots , m \}; \forall i \in \{ 1, \ldots , n_j \})\) under a binomial distribution. Since this relationship holds by the reproductive property of the binomial distribution, their methods can also be applied to grouped data. Yamamura et al. (2023) used a Poisson regression model, which coincides with model (1) when \(n_j = 1\ (\forall j \in \{ 1, \ldots , m \})\) under a Poisson distribution. As is the case for Yamamura et al. (2021) and Ohishi et al. (2022), the method of Yamamura et al. (2023) can also be applied to grouped data from the reproductive property of the Poisson distribution. Yamamura et al. (2021), Ohishi et al. (2022) and Yamamura et al. (2023) proposed coordinate descent algorithms to obtain the GFL estimator. 
Although optimization problems for GLMs, such as logistic and Poisson regression models, are generally solved by linear approximation, Ohishi et al. (2022) and Yamamura et al. (2023) directly minimize coordinate-wise objective functions and derive update equations of a solution in closed form. Although Yamamura et al. (2021) minimized the coordinate-wise objective functions using linear approximation, Ohishi et al. (2022) showed numerically that direct minimization can provide the solution faster and more accurately than minimization using a linear approximation. Ohishi et al. (2021) also derived an explicit update equation for the coordinate descent algorithm, which corresponds to model (1) under the Gaussian distribution. As described, coordinate descent algorithms have been developed to produce GFL estimators for three specific distributions; however, none have been proposed for other distributions. For example, we have an option of using the negative binomial distribution to deal with overdispersion in the Poisson distribution (e.g., Gardner et al. 1995; Ver Hoef and Boveng 2007), or the gamma or inverse Gaussian distribution for positive continuous data. To apply GFL to these distributions, it is necessary to derive update equations for each distribution individually.

In this paper, we propose a coordinate descent algorithm to obtain GFL estimators for model (1) in order to unify the GFL approach for distributions in the exponential family. The negative log-likelihood function for model (1) is given by

We estimate parameter vector \({\varvec{\beta }}= (\beta _1, \ldots , \beta _m)'\) by minimizing the following function defined by removing terms that do not depend on \({\varvec{\beta }}\) from the above equation and by adding a GFL penalty:

where \(\lambda \) is a non-negative tuning parameter, \(D_j \subseteq \{ 1, \ldots , m \} \backslash \{ j \}\) is an index set expressing the adjacent relationships among groups and satisfying \(\ell \in D_j \Leftrightarrow j \in D_\ell \), and \(w_{j \ell }\) is a positive weight satisfying \(w_{j \ell } = w_{\ell j}\). The GFL penalty shrinks the difference between two adjacent groups \(|\beta _j - \beta _\ell |\) and often gives a solution satisfying \(|\beta _j - \beta _\ell | = 0\ (\Leftrightarrow \beta _j = \beta _\ell )\). That is, GFL can estimate some parameters to be exactly equal, thus enabling the clustering of m groups or the accomplishment of discrete smoothing. To obtain the GFL estimator for \({\varvec{\beta }}\), we minimize the objective function (3) via a coordinate descent algorithm. As in Ohishi et al. (2022) and Yamamura et al. (2023), we directly minimize coordinate-wise objective functions without the use of approximations. For ordinary situations, where a canonical link (\(h (\eta ) = \eta \)) is used and there is no offset (\(q_{j i} = 0\)), and for several other situations, the update equation of a solution can be derived in closed form.

Table 4 summarizes the relationships between each individual distribution and its update equation. Here, \(\bigcirc \) indicates that the update equation can be obtained in closed form, and \(\times \) indicates that it cannot. Even when the update equation cannot be obtained in closed form, the proposed method can specify an interval that includes the solution, which means we can easily obtain the solution by a simple numerical search. Note that the proposed method is provided via the R package GFLglm (Ohishi 2024). The dataset used in the real data example is also available via GFLglm.

As related work, Tang and Song (2016) proposed a fused Lasso approach to regression coefficient clustering, namely FLARCC, in which regression coefficients are estimated by minimizing a negative log-likelihood function with a fused Lasso type penalty. Our GFL approach in (3) shrinks and estimates parameters based on adjacent relationships, whereas FLARCC restricts the pairs of parameters used in the penalty based on the order of initial parameter values. Hence, FLARCC cannot be applied to minimize (3), and its purpose differs from ours. However, when the complete graph structure is used as the adjacent relationship in (3), the two methods share the same purpose, namely clustering without any constraint, although their objective functions differ. Devriendt et al. (2021) proposed an algorithm based on a proximal gradient method for multi-type penalized sparse regression, namely the SMuRF algorithm, which can be applied to minimize (3). As demonstrated in Ohishi et al. (2022), since the proximal gradient method involves an approximation of the objective function, its minimization procedure may be inefficient. That is, we can expect that our algorithm, which minimizes the objective function directly, provides the solution faster and more accurately than the SMuRF algorithm. Furthermore, Choi and Lee (2019) pointed out a serious phenomenon in the fused Lasso approach for the binomial distribution: fusion among parameters does not occur. Such a phenomenon can occur when modeling a discrete response, as in logistic regression and Poisson regression. Although our framework includes, as a special case, the situation in which this phenomenon occurs, it poses no practical concern.

The remainder of the paper is organized as follows: In Sect. 2, we give an overview of the coordinate descent algorithm and derive the objective functions for each step. In Sect. 3, we discuss coordinate-wise minimization in the coordinate descent algorithm and derive update equations in closed form in many cases. In Sect. 4, we evaluate the performance of the proposed method via numerical simulation. In Sect. 5, we provide a real data example. Section 6 concludes the paper. Technical details are given in the Appendix.

2 Preliminaries

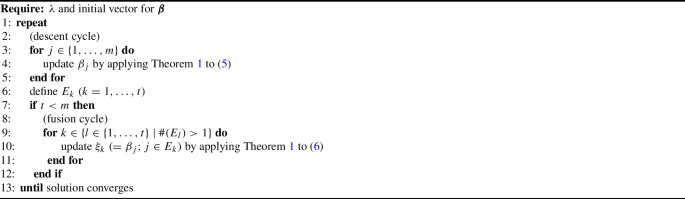

As in Ohishi et al. (2022) and Yamamura et al. (2023), we minimize the objective function (3) using a coordinate descent algorithm. Algorithm 1 gives an overview of the algorithm.

The descent cycle updates the parameters separately, and several parameters are often updated to be exactly equal. If several parameters are exactly equal, their updates can become stuck. To avoid this, the fusion cycle simultaneously updates equal parameters (see Friedman et al. 2007). In each cycle of the coordinate descent, the following function is essentially minimized:

where \(a_i\) and \(w_\ell \) are positive constants and \(z_\ell \ (\ell = 1, \ldots , r)\) are constants satisfying \(z_1< \cdots < z_{r}\). The minimization of f(x) is described in Sect. 3, and the following subsections show that an objective function in each cycle is essentially equal to f(x).

2.1 Descent cycle

The descent cycle repeats coordinate-wise minimizations of the objective function \(L ({\varvec{\beta }})\) in (3). To obtain a coordinate-wise objective function, we extract terms that depend on \(\beta _j\ (j \in \{ 1, \ldots , m \})\) from \(L ({\varvec{\beta }})\). As described in Ohishi et al. (2021), the penalty term can be decomposed as

Then, only the first term depends on \(\beta _j\). By regarding terms that do not depend on \(\beta _j\) as constants and removing them from \(L ({\varvec{\beta }})\), the coordinate-wise objective function is obtained as

where \(\hat{\beta }_\ell \) indicates \(\beta _\ell \) is given. By sorting elements of \(D_j\) in increasing order of \(\hat{\beta }_\ell \ (\forall \ell \in D_j)\), we can see that \(L_j (\beta )\) essentially equals f(x) in (4). If there exist \(\ell _1, \ell _2 \in D_j\ (\ell _1 \ne \ell _2)\) such that \(\hat{\beta }_{\ell _1} = \hat{\beta }_{\ell _2}\), we can temporarily redefine \(D_j\) and \(w_{j \ell }\) as

Since GFL estimates several parameters as being equal, this redefinition is required in most updates.
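The redefinition of \(D_j\) and \(w_{j \ell }\) for tied neighbors can be sketched in Python as follows. This is a minimal illustration, not the GFLglm implementation: the function name and the dictionary-based data layout are hypothetical, and the rule of summing the weights of neighbors with equal current values is assumed here because it leaves the penalty \(\sum _{\ell \in D_j} w_{j \ell } |\beta - \hat{\beta }_\ell |\) unchanged.

```python
from collections import defaultdict


def merge_tied_neighbors(beta_hat, neighbors, weights):
    """Collapse neighbors that share the same current value into one knot.

    beta_hat  : dict {group index: current estimate}
    neighbors : iterable of neighbor indices (the set D_j)
    weights   : dict {neighbor index: positive weight w_{j,l}}
    Returns the sorted distinct knots z_1 < ... < z_r and their summed weights.
    """
    summed = defaultdict(float)
    for l in neighbors:
        # Exact equality is intended: fused parameters are set to identical values.
        summed[beta_hat[l]] += weights[l]
    knots = sorted(summed)
    return knots, [summed[z] for z in knots]


# Hypothetical example: neighbors 1 and 3 are currently fused at 0.5.
beta_hat = {1: 0.5, 2: 0.2, 3: 0.5, 4: 0.9}
knots, merged_w = merge_tied_neighbors(
    beta_hat, [1, 2, 3, 4], {1: 1.0, 2: 2.0, 3: 0.5, 4: 1.0}
)
```

The returned knots and weights play the roles of \(z_1< \cdots < z_r\) and \(w_1, \ldots , w_r\) in f(x).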

2.2 Fusion cycle

In the fusion cycle, equal parameters are replaced by a common parameter and \(L ({\varvec{\beta }})\) is minimized with respect to the common parameter. Let \(\hat{\beta }_1, \ldots , \hat{\beta }_m\) be current solutions for \(\beta _1, \ldots , \beta _m\), and \(\hat{\xi }_1, \ldots , \hat{\xi }_t\ (t < m)\) be their distinct values. The relationship among the current solutions and their distinct values is specified as

That is, the following statements are true:

Then, the \(\beta _j\ (\forall j \in E_k)\) are replaced by a common parameter \(\xi _k\) and \(L ({\varvec{\beta }})\) is minimized with respect to \(\xi _k\). Hence, to obtain a coordinate-wise objective function, we extract terms that depend on \(\xi _k\ (k = 1, \ldots , t)\) from \(L ({\varvec{\beta }})\).
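As a small illustration of how the distinct values and the index sets \(E_k\) might be extracted from the current solution, consider the Python sketch below. The function name is hypothetical, groups are numbered from 1 as in the text, and sorting the distinct values is only a convenience assumed here.

```python
def distinct_value_groups(beta_hat):
    """Return the distinct values xi_1, ..., xi_t of the current solution
    and the index sets E_k = {j : beta_hat_j = xi_k} (1-based indices)."""
    groups = {}
    for j, b in enumerate(beta_hat, start=1):
        groups.setdefault(b, []).append(j)
    xi = sorted(groups)
    return xi, [groups[v] for v in xi]


# Hypothetical current solution with m = 5 groups and t = 3 distinct values.
xi, E = distinct_value_groups([0.3, 0.1, 0.3, 0.7, 0.1])
```

Each \(E_k\) with more than one element corresponds to a set of groups that the fusion cycle would move together through the common parameter \(\xi _k\).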

We can decompose the first term of \(L ({\varvec{\beta }})\) as

Furthermore, as in Ohishi et al. (2021), the penalty term of \(L ({\varvec{\beta }})\) can be decomposed as

By regarding terms that do not depend on \(\xi _k\) as constants and removing them from \(L ({\varvec{\beta }})\), the coordinate-wise objective function is obtained as

As in the descent cycle, we can see that \(L_k^*(\xi )\) essentially equals f(x) in (4).

3 Main results

In this section, to obtain update equations for the descent and fusion cycles of the coordinate descent algorithm, we describe the minimization of f(x) in (4). Following Ohishi et al. (2022) and Yamamura et al. (2023), we directly minimize f(x). One of the difficulties of the minimization of f(x) is that f(x) has multiple non-differentiable points \(z_1, \ldots , z_r\). We cope with this difficulty by using a subdifferential. The subdifferential of f(x) at \(\tilde{x} \in \mathbb {R}\) is given by

where \(g_- (x)\) and \(g_+ (x)\) are left and right derivatives defined by

Then, \(\tilde{x}\) is a stationary point of f(x) if \(0 \in \partial f (\tilde{x})\). For details of a subdifferential, see, e.g., Rockafellar (1970), Parts V and VI. In the following subsections, we separately describe the minimization of f(x) in cases where a canonical link and a general link are used.
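For orientation, the textbook one-dimensional forms of these quantities can be written as follows. They are standard definitions for a function with one-sided derivatives and are assumed, not confirmed, to match the notation used in this section:

$$\begin{aligned} \partial f (\tilde{x})&= \left[ g_- (\tilde{x}), g_+ (\tilde{x}) \right] , \\ g_- (x)&= \lim _{\delta \rightarrow -0} \dfrac{f (x + \delta ) - f (x)}{\delta }, \qquad g_+ (x) = \lim _{\delta \rightarrow +0} \dfrac{f (x + \delta ) - f (x)}{\delta }. \end{aligned}$$

For instance, a single penalty term \(w |x - z|\) with \(w > 0\) has \(g_- (z) = -w\) and \(g_+ (z) = w\), so its subdifferential at \(x = z\) is the interval \([-w, w]\), while at any \(x \ne z\) the two one-sided derivatives coincide.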

3.1 Canonical link

We first describe the minimization of f(x) in (4) with a canonical link, i.e., \(h (\eta ) = \eta \). That is, the update equation of the coordinate descent algorithm is given by minimizing the following function:

Notice that f(x) in (7) is strictly convex. Hence, \(\tilde{x}\) is the minimizer of f(x) if and only if \(0 \in \partial f (\tilde{x})\). First, based on this relationship, we derive the condition that f(x) attains the minimum at a non-differentiable point \(z_\ell \).

The subdifferential of f(x) at \(z_\ell \) is given by

Hence, if there exists \(\ell _\star \in \{ 1, \ldots , r \}\) such that \(0 \in \partial f (z_{\ell _\star })\), f(x) attains the minimum at \(x = z_{\ell _\star }\) and \(\ell _\star \) uniquely exists because of the strict convexity of f(x).

On the other hand, when \(\ell _\star \) does not exist, we can specify an interval that includes the minimizer by checking the signs of the left and right derivatives at each non-differentiable point. Let \(s (x) = (\textrm{sign}(g_- (x)), \textrm{sign}(g_+ (x)))\). From \(z_1< \cdots < z_r\) and the strict convexity of f(x), we have

Then, the minimizer of f(x) exists in the following interval:

Hence, it is sufficient to search for the minimizer in \(R_*\). For all \(x \in R_*\), the following equation holds:

This result allows us to rewrite the penalty term in f(x) as

Hence, f(x) is rewritten in non-absolute form as

The f(x) is differentiable when \(x \in R_*\) and its derivative is given by

Then, the solution \(x_*\) of \(d f (x) / d x = 0\) is the minimizer of f(x). Hence, we have the following theorem.

Theorem 1

Let \(\hat{x}\) be the minimizer of f(x) in (7). Then, \(\hat{x}\) is given by

where \(\ell _*\) exists if and only if \(\ell _\star \) does not exist.

We can execute Algorithm 1 by applying Theorem 1 to (5) and (6) in the descent and fusion cycles, respectively. Thus, a detailed implementation of Algorithm 1 when using a canonical link is provided in Algorithm 2.
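To make the procedure behind Theorem 1 concrete, here is a minimal Python sketch for the Poisson distribution with the canonical (log) link. It is not the GFLglm implementation. It assumes that the coordinate-wise objective has the form \(\sum _i \{ \exp (x + q_i) - y_i (x + q_i) \} + 2 \lambda \sum _\ell w_\ell |x - z_\ell |\), that the knots are strictly increasing, and that \(\sum _i y_i > 0\); the function name and argument layout are hypothetical.

```python
import math


def poisson_coordinate_update(y, q, lam, z, w):
    """Minimize sum_i {exp(x+q_i) - y_i*(x+q_i)} + 2*lam*sum_l w_l*|x - z_l|.

    Assumes z is strictly increasing, all w_l > 0, and sum(y) > 0.
    """
    sum_y = sum(y)
    sum_eq = sum(math.exp(qi) for qi in q)
    total_w = sum(w)
    below = 0.0  # total weight of knots strictly left of the current knot
    for zl, wl in zip(z, w):
        smooth = math.exp(zl) * sum_eq - sum_y          # slope of the likelihood part
        others = 2.0 * lam * (below - (total_w - below - wl))  # penalty slope from other knots
        g_minus = smooth + others - 2.0 * lam * wl      # left derivative at the knot
        g_plus = smooth + others + 2.0 * lam * wl       # right derivative at the knot
        if g_minus <= 0.0 <= g_plus:
            return zl   # zero lies in the subdifferential: the minimizer is this knot
        if g_minus > 0.0:
            break       # the minimizer lies in the open interval just left of this knot
        below += wl
    # Closed-form stationary point inside the interval that contains the minimizer.
    u = -sum_y + 2.0 * lam * (below - (total_w - below))
    return math.log(-u) - math.log(sum_eq)


# Hypothetical inputs: two observations, no offset, one neighbor located at 1.0.
x_unpenalized = poisson_coordinate_update([2, 4], [0.0, 0.0], 0.0, [1.0], [1.0])
x_fused = poisson_coordinate_update([2, 4], [0.0, 0.0], 1.0, [1.0], [1.0])
```

With \(\lambda = 0\) the update reduces to the unpenalized solution \(\log 3\), and with \(\lambda = 1\) the update is fused to the neighbor at 1.0.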

To apply Theorem 1, we need to obtain \(x_*\). In many cases, \(x_*\) can be obtained in closed form according to the following proposition.

Proposition 2

Let \(x_*\) be the solution of \(d f (x) / d x = 0\) and \(q_0\) be a value such that \(q_1 = \cdots = q_d = q_0\). Then, \(x_*\) is given as follows:

1. When \(q_0\) exists, \(x_*\) is given in a general form as

   $$\begin{aligned} x_*= \mu ^{-1} \left( -u / \sum _{i=1}^d a_i \right) - q_0. \end{aligned}$$

2. Even when \(q_0\) does not exist, \(x_*\) for the Gaussian distribution is given by

   $$\begin{aligned} x_*= \dfrac{1}{d} \left( - \sum _{i=1}^d q_i - u \right) , \end{aligned}$$

   and \(x_*\) for the Poisson distribution is given by

   $$\begin{aligned} x_*= \log (-u) - \log \left( \sum _{i=1}^d \exp (q_i) \right) . \end{aligned}$$

For example, \(q_0\) exists and \(q_0 = 0\) holds for GLMs without an offset. When \(q_0\) does not exist, \(x_*\) has to be derived for each distribution individually. For the Gaussian and Poisson distributions, since \(\mu (x + q)\) can be separated with respect to x and q, \(x_*\) can be obtained in closed form. Note that \(x_*\) for a Gaussian distribution when \(q_0\) exists and equals 0 coincides with the result in Ohishi et al. (2021). For distributions for which such a separation is impossible, such as the binomial distribution, a numerical search is required to obtain \(x_*\). However, we can easily obtain \(x_*\) by a simple algorithm, such as a line search, because f(x) is strictly convex and has its minimizer in the interval \(R_*\). Moreover, when \(x_*\) can be obtained in closed form as in Proposition 2, the minimization of f(x) has a computational complexity of \(O (r (d + r))\).
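When no closed form is available, the numerical search can be as simple as bisection. The Python sketch below assumes that the derivative on the interval has the form \(\sum _i a_i \mu (x + q_i) + u\), in line with Proposition 2, with an increasing \(\mu \) (the logistic function for the binomial case), and that a root exists in the interval. The function names, the bracket-expansion rule for unbounded intervals, and the tolerance are illustrative choices rather than part of the proposed method.

```python
import math


def logistic(t):
    """Numerically stable logistic function."""
    if t >= 0.0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def solve_stationary(a, q, u, lo, hi, mu=logistic, tol=1e-10):
    """Root of sum_i a_i*mu(x+q_i) + u on (lo, hi) by bisection.

    mu must be increasing and a root must exist in (lo, hi);
    lo may be -inf and hi may be +inf.
    """
    def deriv(x):
        return sum(ai * mu(x + qi) for ai, qi in zip(a, q)) + u

    # Replace infinite endpoints by finite ones with the correct derivative sign.
    if math.isinf(lo):
        step, lo = 1.0, (hi if math.isfinite(hi) else 0.0) - 1.0
        while deriv(lo) > 0.0:
            step *= 2.0
            lo -= step
    if math.isinf(hi):
        step, hi = 1.0, lo + 1.0
        while deriv(hi) < 0.0:
            step *= 2.0
            hi += step
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if deriv(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Hypothetical check without offsets: 20*logistic(x) - 5 = 0 gives x = log(1/3).
x_star = solve_stationary([10, 10], [0.0, 0.0], -5.0, -math.inf, math.inf)
```

Because the derivative is monotone on the interval, bisection converges without any step-size tuning; a faster method such as Newton's method could be substituted.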

3.2 General link

Here, we consider the minimization of f(x) in (4) with a general link, i.e., \(h (\cdot )\) is a general differentiable function. In this case, although the strict convexity of f(x) is not guaranteed, its continuity is maintained. This means that the uniqueness of the minimizer of f(x) is not guaranteed, but we can obtain minimizer candidates by using the same procedure as in the previous subsection.

The subdifferential of f(x) at \(z_\ell \) is given by

where \(\dot{h} (x) = d h (x) / d x\). Since \(z_\ell \) satisfying \(0 \in \partial f (z_\ell )\) is a stationary point of f(x), such points are minimizer candidates of f(x). Next, we define intervals as \(R_\ell = (z_\ell , z_{\ell +1})\ (\ell = 0, 1, \ldots , r)\). For \(x \in R_\ell \), f(x) can be written in non-absolute form as

We can then search for minimizer candidates of f(x) by piecewise minimization. That is, \(x \in R_\ell \) minimizing \(f_\ell (x)\) is a minimizer candidate. Hence, we have the following theorem.

Theorem 3

Let \(\hat{x}\) be the minimizer of f(x) in (4) and define a set \(\mathcal {S}\) by

Now, suppose that

where \(\dot{f}_\ell (x) = d f_\ell (x) / dx\). Then, \(\mathcal {S}\) is the set of minimizer candidates of f(x) and \(\hat{x}\) is given by

The assumption (8) excludes the case in which f(x) attains the minimum at \(x = \pm \infty \). Moreover, we have the following corollary (the proof is given in Appendix A).

Corollary 4

Suppose that for all \(\ell \in \{ 0, 1, \ldots , r \}\),

is true, and that (8) holds. Then, f(x) is strictly convex and \(\# (\mathcal {S}) = 1\), where \(\mathcal {S}\) is given in Theorem 3. Moreover, the unique element of \(\mathcal {S}\) is the minimizer of f(x) and is given as in Theorem 1.

To execute Algorithm 1 for GLMs with a general link, we can replace Theorem 1 with Theorem 3 or Corollary 4 in Algorithm 2. The next subsection gives specific examples of using a general link.

3.2.1 Examples

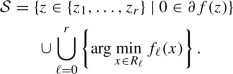

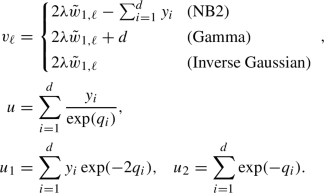

This subsection focuses on the negative binomial, gamma, and inverse Gaussian distributions with a log-link as examples of using a general link. In the framework of regression, the negative binomial distribution is often used to deal with overdispersion in Poisson regression, making it natural to use a log-link. Note that NB-C and NB2 indicate negative binomial regression with canonical and log-links, respectively (for details, see, e.g., Hilbe 2011). The gamma and inverse Gaussian distributions are used to model positive continuous data. Their expectations must be positive. However, their canonical links do not guarantee that their expectations will, in fact, be positive. Hence, a log-link rather than a canonical link is often used for these distributions (e.g., Algamal 2018; Dunn and Smyth 2018, Chap. 11). Here, we consider coordinate-wise minimizations for the three distributions with a log-link.

For \(x \in R_\ell \), f(x) in (4) and its first- and second-order derivatives are given by

- NB2:

  $$\begin{aligned} f (x)&= f_\ell (x) \\&= \sum _{i=1}^{d} (\phi ^{-1} + y_i) \log \left\{ \phi ^{-1} + \exp (x + q_i) \right\} \\&\qquad + v_\ell x - 2 \lambda \tilde{w}_{2, \ell } - \sum _{i=1}^d y_i q_i, \\ \dot{f}_\ell (x)&= \sum _{i=1}^d \dfrac{ ( \phi ^{-1} + y_i ) \exp (x + q_i) }{ \phi ^{-1} + \exp (x + q_i) } + v_\ell , \\ \ddot{f}_\ell (x)&= \phi ^{-1} \sum _{i=1}^d \dfrac{ ( \phi ^{-1} + y_i ) \exp (x + q_i) }{ \{ \phi ^{-1} + \exp (x + q_i) \}^2 }. \end{aligned}$$

- Gamma:

  $$\begin{aligned} f (x)&= f_\ell (x) \\&= u \exp (-x) + v_\ell x - 2 \lambda \tilde{w}_{2,\ell } + \sum _{i=1}^d q_i, \\ \dot{f}_\ell (x)&= - u \exp (-x) + v_\ell , \\ \ddot{f}_\ell (x)&= u \exp (-x). \end{aligned}$$

- Inverse Gaussian:

  $$\begin{aligned} f (x)&= f_\ell (x) \\&= \exp (-x) \left\{ u_1 \exp (- x) - 2 u_2 \right\} \\&\qquad + v_\ell x - 2 \lambda \tilde{w}_{2, \ell }, \\ \dot{f}_\ell (x)&= 2 \exp (-x) \{ u_2 - u_1 \exp (-x) \} + v_\ell , \\ \ddot{f}_\ell (x)&= 2 \exp (-x) \{ 2 u_1 \exp (-x) - u_2 \}. \end{aligned}$$

Here,

We can see that \(\ddot{f}_\ell (x) > 0\) holds for all \(\ell \in \{ 0, 1, \ldots , r \}\), for NB2 and the gamma distribution. Hence, the minimizers of f(x) can be uniquely obtained from Corollary 4. On the other hand, the uniqueness of the minimizer for the inverse Gaussian distribution is not guaranteed; however, we have \(v_0 < 0\), \(v_r > 0\), and

This implies \(x< \min \{\log (u_1 / u_2), z_1 \} \Rightarrow \dot{f}_0 (x) < 0\) and \(x> \max \{ \log (u_1 / u_2), z_r \} \Rightarrow \dot{f}_r (x) > 0\). Hence, the minimizer for the inverse Gaussian distribution can be obtained by Theorem 3.

We now give specific solutions. From above, we have the following proposition.

Proposition 5

Let \(\tilde{x}_\ell \) be a stationary point of \(f_\ell (x)\). If \(\tilde{x}_\ell \) exists, it is given by

- NB2 only when \(\exists q_0\ s.t.\ q_1 = \cdots = q_d = q_0\):

  $$\begin{aligned} \tilde{x}_\ell = \log (- v_\ell / \phi ) - \log \left( 2 \lambda \tilde{w}_{1, \ell } + d/\phi \right) - q_0. \end{aligned}$$

- Gamma:

  $$\begin{aligned} \tilde{x}_\ell = \log (u / v_\ell ). \end{aligned}$$

- Inverse Gaussian:

  $$\begin{aligned} \tilde{x}_\ell = \log \left( \left\{ -u_2 + \sqrt{u_2^2 + 2 u_1 v_\ell } \right\} / v_\ell \right) . \end{aligned}$$

Moreover, a relationship between \(\tilde{x}_\ell \) and the minimizer of f(x) is given by

- NB2 and Gamma:

  $$\begin{aligned}&\tilde{x}_\ell \text { exists in }R_\ell \\&\qquad \Longrightarrow \tilde{x}_\ell \text { is the unique minimizer of }f (x). \end{aligned}$$

- Inverse Gaussian:

  $$\begin{aligned}&\tilde{x}_\ell \text { exists in }R_\ell \\&\qquad \Longrightarrow \tilde{x}_\ell \text { is a minimizer candidate of }f (x). \end{aligned}$$
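The candidate-based search of Theorem 3 can be sketched for the inverse Gaussian distribution with a log-link as follows. This Python sketch rests on several assumptions that are not confirmed by the text: unit weights \(a_i = 1\), \(u_1 = \sum _i y_i \exp (-2 q_i) / 2\), \(u_2 = \sum _i \exp (-q_i) / 2\), and \(v_\ell \) equal to the slope of the penalty term on \(R_\ell \). The function name is hypothetical. The stationary point is computed as \(\log \{ 2 u_1 / (u_2 + \sqrt{u_2^2 + 2 u_1 v_\ell }) \}\), which is algebraically equal to the expression in Proposition 5 but remains well defined when \(v_\ell = 0\).

```python
import math


def inverse_gaussian_log_link_update(y, q, lam, z, w):
    """Return the best candidate minimizer of
        f(x) = u1*exp(-2x) - 2*u2*exp(-x) + 2*lam*sum_l w_l*|x - z_l|,
    assuming unit weights a_i = 1, strictly increasing knots z, and y_i > 0."""
    u1 = 0.5 * sum(yi * math.exp(-2.0 * qi) for yi, qi in zip(y, q))
    u2 = 0.5 * sum(math.exp(-qi) for qi in q)

    def f(x):
        pen = sum(wl * abs(x - zl) for wl, zl in zip(w, z))
        return u1 * math.exp(-2.0 * x) - 2.0 * u2 * math.exp(-x) + 2.0 * lam * pen

    def smooth_slope(x):
        return 2.0 * math.exp(-x) * (u2 - u1 * math.exp(-x))

    edges = [-math.inf] + list(z) + [math.inf]
    total_w, below = sum(w), 0.0
    candidates = []
    for l in range(len(z) + 1):
        v = 2.0 * lam * (2.0 * below - total_w)  # penalty slope on (edges[l], edges[l+1])
        disc = u2 * u2 + 2.0 * u1 * v
        if disc >= 0.0:
            # Stationary point of the smooth piece (the local-minimum root).
            x = math.log(2.0 * u1 / (u2 + math.sqrt(disc)))
            if edges[l] < x < edges[l + 1]:
                candidates.append(x)
        if l < len(z):
            g_minus = smooth_slope(z[l]) + v       # left derivative at the knot
            g_plus = g_minus + 4.0 * lam * w[l]    # right derivative at the knot
            if g_minus <= 0.0 <= g_plus:
                candidates.append(z[l])
            below += w[l]
    # Compare the objective over all candidates, since uniqueness is not guaranteed.
    return min(candidates, key=f)


# Hypothetical inputs: two observations equal to 2, no offset, one neighbor at 0.
x_free = inverse_gaussian_log_link_update([2.0, 2.0], [0.0, 0.0], 0.0, [0.0], [1.0])
x_knot = inverse_gaussian_log_link_update([2.0, 2.0], [0.0, 0.0], 5.0, [0.0], [1.0])
```

Without a penalty the update is \(\log 2\), the logarithm of the sample mean, and with a large \(\lambda \) the only candidate is the neighbor's value 0.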

3.3 Some comments regarding implementation

3.3.1 Dispersion parameter estimation

In the previous subsections, we discussed the estimation of \(\beta _j\), which corresponds to the estimation of the canonical parameter \(\theta _{ji}\). The GLMs in (1) also have a dispersion parameter \(\phi \). Although \(\phi \) is fixed at one for the binomial and Poisson distributions, it is unknown for other distributions, and, hence, we need to estimate the value of \(\phi \). The Pearson estimator is often used as a suitable estimator (e.g., Dunn and Smyth 2018, Chap. 6). Let \(\hat{\beta }_1, \ldots , \hat{\beta }_m\) be estimators of \(\beta _1, \ldots , \beta _m\), t be the number of their distinct values, and \(\hat{\zeta }_{ji} = \mu (\hat{\beta }_j + q_{ji})\). Then, the Pearson estimator of \(\phi \) is given by

where \(V (\cdot )\) is a variance function (see Table 2). For distributions other than the negative binomial distribution, the estimator of \(\phi \) can be obtained after \({\varvec{\beta }}\) is estimated since the estimation of \({\varvec{\beta }}\) does not depend on \(\phi \). For the negative binomial distribution, the estimation of \({\varvec{\beta }}\) depends on \(\phi \) because \(\mu (\cdot )\) and \(b (\cdot )\) depend on \(\phi \). Hence, we need to add a step updating \(\phi \) and repeat updates of \({\varvec{\beta }}\) and \(\phi \) alternately. Moreover, this Pearson estimator is used for the diagnosis of overdispersion in the binomial and Poisson distributions. If \(\hat{\phi } > 1\), it is doubtful that the model is appropriate.
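A Pearson-type estimator of this kind can be sketched in Python as below. The sketch assumes the usual form, the sum of squared Pearson residuals divided by \(n - t\), where n is the total sample size, and it assumes unit weights \(a_{ji} = 1\); the function name and the nested-list layout are hypothetical, and the default variance function \(V (\zeta ) = \zeta \) corresponds to the Poisson distribution.

```python
def pearson_dispersion(y_groups, mu_groups, n_distinct, variance=lambda m: m):
    """Pearson-type dispersion estimate: sum of (y - mu)^2 / V(mu) over n - t.

    y_groups   : list of lists of observations, one inner list per group
    mu_groups  : fitted means with the same layout as y_groups
    n_distinct : number t of distinct values among the estimated parameters
    variance   : variance function V (defaults to the Poisson case V(mu) = mu)
    """
    n = sum(len(ys) for ys in y_groups)
    chi2 = sum(
        (y - m) ** 2 / variance(m)
        for ys, ms in zip(y_groups, mu_groups)
        for y, m in zip(ys, ms)
    )
    return chi2 / (n - n_distinct)


# Hypothetical data: two groups of two observations with fitted means 2 and 4.
phi_hat = pearson_dispersion([[1, 3], [2, 6]], [[2, 2], [4, 4]], n_distinct=2)
```

In this toy example the estimate is 1.5, which under the diagnostic described above would hint at overdispersion relative to a Poisson model.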

3.3.2 Penalty weights

The objective function \(L ({\varvec{\beta }})\) in (3) includes penalty weights, and the GFL estimation proceeds with the given weights. Although setting \(w_{j \ell } = 1\) is usual, this may cause a problem of over-shrinkage because all pairs of parameters are shrunk uniformly by the common \(\lambda \). As one option to avoid this problem, we can use the following weight based on adaptive-Lasso (Zou 2006):

where \(\tilde{\beta }_j\) is an estimator of \(\beta _j\) and the maximum likelihood estimator (MLE) may be a reasonable choice for it. If there exists \(q_{j 0}\) such that \(q_{j1} = \cdots = q_{j n_j} = q_{j 0}\), the MLE is given in the following closed form:

For other cases, see Appendix B.
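The following Python sketch illustrates adaptive-Lasso-type weights for the Poisson distribution with a log-link. It assumes the common choice \(w_{j \ell } = 1 / |\tilde{\beta }_j - \tilde{\beta }_\ell |\) and the closed-form MLE \(\tilde{\beta }_j = \log \bar{y}_j - q_{j0}\) with \(\bar{y}_j\) the group mean; both are assumptions made for illustration, as are the function name and the 0-based group indices.

```python
import math


def adaptive_weights(y_groups, adjacency, offsets=None):
    """Weights 1/|b_j - b_l| from group-wise Poisson MLEs b_j = log(mean(y_j)) - q_j0.

    y_groups  : list of lists of counts, one inner list per group (means must be > 0)
    adjacency : dict {j: list of neighbors of j}, assumed symmetric
    offsets   : optional list of common offsets q_j0 (defaults to zero)
    Raises ZeroDivisionError if two adjacent MLEs coincide exactly.
    """
    if offsets is None:
        offsets = [0.0] * len(y_groups)
    beta = [math.log(sum(ys) / len(ys)) - q0 for ys, q0 in zip(y_groups, offsets)]
    return {
        (j, l): 1.0 / abs(beta[j] - beta[l])
        for j, nbrs in adjacency.items()
        for l in nbrs
    }


# Hypothetical example with two adjacent groups whose means are 2 and 4.
weights = adaptive_weights([[2, 2], [4, 4]], {0: [1], 1: [0]})
```

Pairs whose initial estimates are close receive large weights and are therefore fused more readily, while well-separated pairs are penalized less.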

3.3.3 Tuning parameter selection

It is important for a penalized estimation, such as GFL estimation, to select a tuning parameter, which, in this paper, is represented as \(\lambda \) in (3). Because \(\lambda \) adjusts the strength of the penalty relative to the model fit, we need to select a good value of \(\lambda \) in order to obtain a good estimator. The optimal value of \(\lambda \) is commonly selected from candidates by minimizing, e.g., a cross-validation error or a model selection criterion. For a given \(\lambda _{\max }\), candidates for \(\lambda \) are selected from the interval \([0, \lambda _{\max }]\). Following Ohishi et al. (2021), \(\lambda _{\max }\) is defined by a value such that all \(\beta _j\ (j \in \{ 1, \ldots , m \})\) are updated as \(\hat{\beta }_{\max }\) when a current solution of \({\varvec{\beta }}\) is \(\hat{{\varvec{\beta }}}_{\max } = \hat{\beta }_{\max } {\varvec{1}}_m\), where \(\hat{{\varvec{\beta }}}_{\max }\) is the MLE under \({\varvec{\beta }}= \beta {\varvec{1}}_m\) (see Appendix B) and \({\varvec{1}}_m\) is the m-dimensional vector of ones. When a current solution of \({\varvec{\beta }}\) is \(\hat{{\varvec{\beta }}}_{\max }\), the discussion in Sect. 3.2 gives the condition that \(\beta _j\) is updated as \(\hat{\beta }_{\max }\) as

Hence, \(\lambda _{\max }\) is given by

3.3.4 Extension

In this paper, we proposed the algorithm for the model (1) with the structure (2), which means the model does not have any explanatory variables. However, the proposed method can also be applied to the model with explanatory variables by simple modifications.

Let \({\varvec{x}}_{ji}\) and \({\varvec{\beta }}_j\) be p-dimensional vectors of explanatory variables and regression coefficients, respectively. We rewrite \(\eta _{ji}\) in (2) as

Focusing on the kth (\(k \in \{ 1, \ldots , p \}\)) explanatory variable, we have

where \(x_{jil}\) and \(\beta _{jl}\) are the lth elements of \({\varvec{x}}_{ji}\) and \({\varvec{\beta }}_j\), respectively. The coordinate descent method updates each \(\beta _{jk}\) by regarding \(\tilde{q}_{jik}\) as a constant. Thus, in each cycle of the coordinate descent for GFL problem with explanatory variables, the following function is essentially minimized:

where \(z_{0i}\) is a constant. Hence, we can search for \(z_\ell \ (\ell \in \{ 1, \ldots , r \})\) satisfying \(0 \in \partial f (z_\ell )\) by a similar procedure. On the other hand, we can obtain the explicit minimizer of \(f_\ell (x)\ (x \in R_\ell )\) only for the Gaussian distribution and cannot obtain it for other distributions because \(\mu (\cdot )\) is not separable with respect to the product. However, the minimizer can also be found easily by a numerical search in this case.

4 Simulation

In this section, we focus on modeling count data and examine through simulation whether the proposed method can select the true cluster when clustering groups. For count data, Poisson regression and NB2 are often used. Hence, we compare the performance of the two approaches for various settings of the dispersion parameter. Note that GFL for Poisson regression has already been proposed by Yamamura et al. (2023) and that our contribution is to apply GFL to NB2. Note, too, that simulation studies were not conducted in Yamamura et al. (2023). Moreover, the R packages metafuse and smurf (e.g., Tang et al. 2016; Reynkens et al. 2023), which implement FLARCC and the SMuRF algorithm, respectively, can both deal with Poisson regression, but neither can deal with NB2.

Let \(m^*\) be the number of true clusters and \(E_k^*\subset \{ 1, \ldots , m \}\ (k \in \{ 1, \ldots , m^*\})\) be an index set specifying groups in the kth true cluster. Then, we generate simulation data from

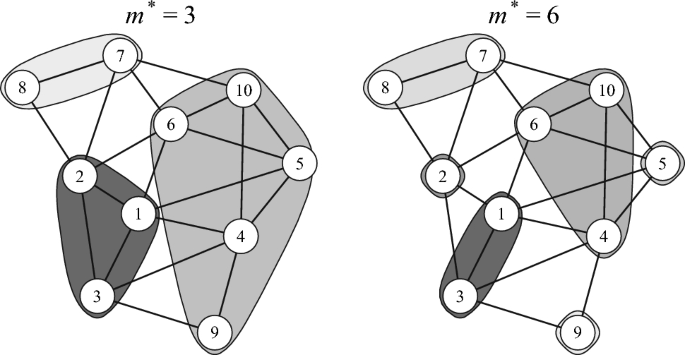

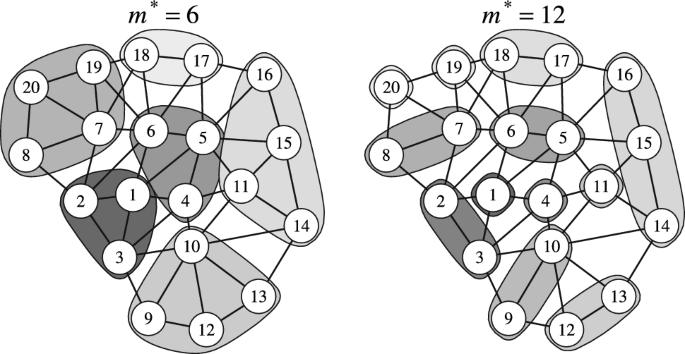

We consider four cases of m and \(m^*\) as \((m, m^*) = (10, 3), (10, 6), (20, 6), (20, 12)\), and use the same settings as Ohishi et al. (2021) for adjacent relationships of m groups and true clusters (see Figs. 1 and 2).

The sample sizes for each group are common, i.e., \(n_1 = \cdots = n_m = n_0\). Furthermore, the estimation of \(\phi \), the definition of the penalty weights, and the candidates for \(\lambda \) follow Sect. 3.3, and the optimal value of \(\lambda \) is selected based on the minimization of BIC (Schwarz 1978) from 100 candidates. Here, the simulation studies are conducted based on Monte Carlo simulation with 1,000 iterations.

4.1 Comparison with existing methods

Before comparing the performances of Poisson regression and NB2, we compare our proposed method with two existing methods, FLARCC and the SMuRF algorithm, for Poisson regression (i.e., \(\phi = 0\)). As described in Sect. 1, although the purpose of FLARCC differs from ours, the two purposes are equivalent when the complete graph structure is used as the adjacent relationship. Hence, we use the complete graph structure for the comparison with FLARCC. On the other hand, the SMuRF algorithm can be applied to minimize (3). Thus, for this comparison, we compare the minimum value of the objective function and the runtime under a given \(\lambda \).

Table 5 summarizes the results of the comparison with FLARCC, in which SP is the selection probability (%) of the true cluster, and time is runtime (in seconds). We can see that the SP values for both methods approach 100% as \(n_0\) increases. Moreover, FLARCC is always better than the proposed method in terms of SP. This result is reasonable. In the proposed method, there are many possible clustering patterns, and each group has \(m-1\) candidate groups to fuse with. On the other hand, each group has at most two such candidates in FLARCC because of its restriction. It is natural that a wrong fusion is less likely to occur when there are fewer candidates. The MLE plays an important role in this explanation. In FLARCC, the MLE is used to restrict fusion patterns. In the proposed method, the MLE is used for the penalty weights, which help to identify whether two parameters are equal. If the restriction in FLARCC is correct, the penalty weights in the proposed method would also perform well. In such a situation, FLARCC, which has fewer candidates, can be expected to perform better. If the restriction in FLARCC is wrong, the penalty weights in the proposed method would not perform well either. In such a situation, the proposed method, which has more candidates, is more prone to making a wrong fusion. Since the MLE becomes stable as the sample size increases, the difference between the two methods becomes small as \(n_0\) increases. Hence, if the purpose is clustering without any adjacency, using FLARCC is better. However, recall that the proposed method is designed to minimize the objective function (3), to which FLARCC cannot be applied. Moreover, FLARCC requires a reparameterization for its estimation process and, hence, must transform its estimates back to the original parameterization. This may be the reason why FLARCC is slower than the proposed method.

Table 6 summarizes the results of the comparison with the SMuRF algorithm, in which difR, win, and time are defined by

respectively, and \(\lambda _j = \tau _j \lambda _{\max }\), where \(\tau _1 = 1/100\), \(\tau _2 = 1/10\), \(\tau _3 = 1/2\), and \(L_1^\star \) and \(L_2^\star \) are the minimum values of the objective function (3) obtained by the proposed method and the SMuRF algorithm, respectively. The difR value is always positive, the win value is 100% in most cases, and even its smallest value is around 70%. This means that the proposed method attained a smaller value of the objective function than the SMuRF algorithm. Note that the displayed difR values are multiplied by 1,000, so the actual values are very small; that is, the difference between the two minimum values is not very large. The difR values also tell us that the difference becomes larger as \(\lambda \) increases and smaller as n increases. Moreover, the time values show that the proposed method was faster than the SMuRF algorithm in most cases. Hence, the proposed method minimizes the objective function faster and more accurately than the SMuRF algorithm.

4.2 Poisson vs. NB2

In this subsection, we compare Poisson regression and NB2. Tables 7 and 8 summarize the results for \(m = 10, 20\), respectively, in which SP is the selection probability (%) of the true cluster, \(\hat{\phi }\) is the Pearson estimator of \(\phi \), and time is the runtime (in seconds). Table 9 summarizes the standard errors of \(\hat{\phi }\). First, we focus on \(\phi =0\), i.e., the case where the true model follows the Poisson distribution. Here, the value of SP using Poisson regression approaches 100% as \(n_0\) increases. Furthermore, we can say that Poisson regression provides good estimation since \(\hat{\phi }\) is approximately 1. On the other hand, NB2 is unable to select the true cluster. The reason for this may be that the dispersion parameter in the negative binomial distribution is positive. Moreover, the standard errors tell us that the estimation by Poisson regression is more stable than that by NB2. Next, we focus on \(\phi > 0\). Here, Poisson regression exhibits overdispersion since \(\hat{\phi }\) is larger than 1, and hence it is unable to select the true cluster. On the other hand, the SP value for NB2 approaches 100% as \(n_0\) increases. Furthermore, \(\hat{\phi }\) is roughly equal to the true value, indicating that NB2 provides good estimation. The standard errors also support the good performance of NB2. Finally, Poisson regression is always faster than NB2. The reason for this may be that Poisson regression requires only the estimation of \({\varvec{\beta }}\), whereas NB2 requires estimating \({\varvec{\beta }}\) and \(\phi \) alternately and repeatedly. We can conclude from this simulation that Poisson regression is better when the true model follows a Poisson distribution and that NB2 can effectively deal with overdispersion in Poisson regression.
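As a rough illustration of the overdispersion diagnostic discussed above, the following Python sketch computes the Pearson statistic divided by the residual degrees of freedom under the Poisson variance function. The function name `pearson_dispersion`, the simulated data, and the single common mean are assumptions made only for this illustration; they are not the simulation design or the implementation used in this paper.

```python
import numpy as np

def pearson_dispersion(y, mu, n_params, variance=lambda m: m):
    """Pearson statistic sum((y - mu)^2 / V(mu)) divided by (n - n_params)."""
    y = np.asarray(y, dtype=float)
    mu = np.asarray(mu, dtype=float)
    return float(np.sum((y - mu) ** 2 / variance(mu)) / (y.size - n_params))

rng = np.random.default_rng(0)
n, mu_true, phi = 20000, 5.0, 0.5  # hypothetical settings

# Poisson data: the variance equals the mean.
y_pois = rng.poisson(mu_true, size=n)

# NB2 data generated as a gamma-Poisson mixture: variance = mu + phi * mu^2.
rate = rng.gamma(shape=1.0 / phi, scale=phi * mu_true, size=n)
y_nb2 = rng.poisson(rate)

# Fit one common mean (a single parameter) under the Poisson model.
disp_pois = pearson_dispersion(y_pois, np.full(n, y_pois.mean()), n_params=1)
disp_nb2 = pearson_dispersion(y_nb2, np.full(n, y_nb2.mean()), n_params=1)
print(disp_pois, disp_nb2)
```

Under these assumptions, the first value should be close to 1, whereas the second should be clearly larger than 1, which is the pattern interpreted as overdispersion in the text.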

5 Real data example

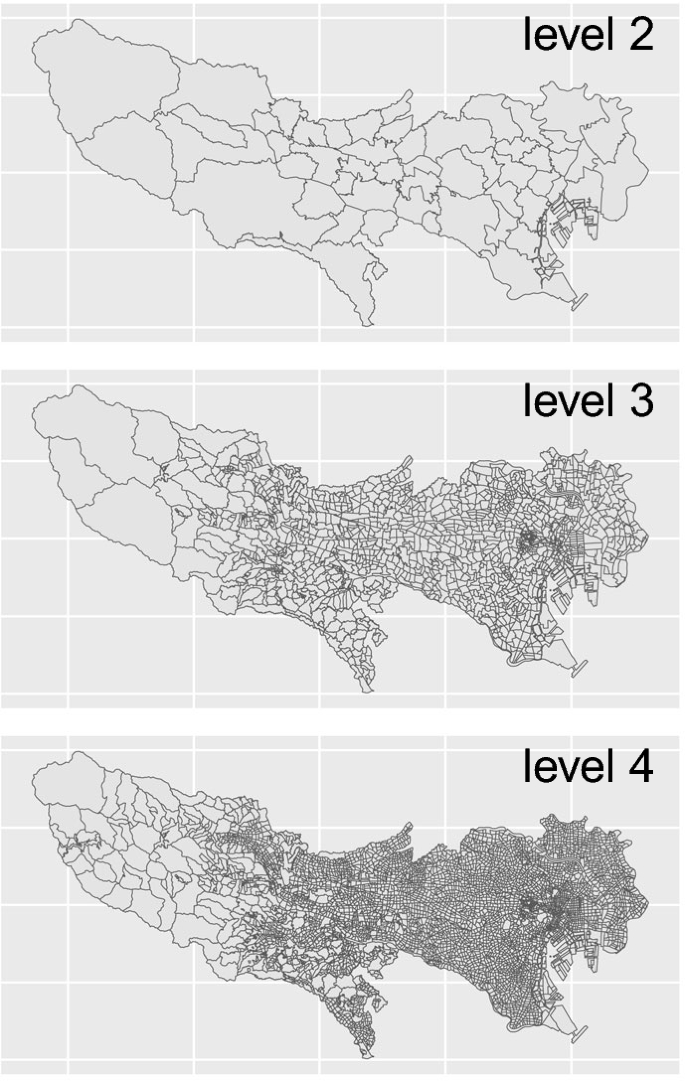

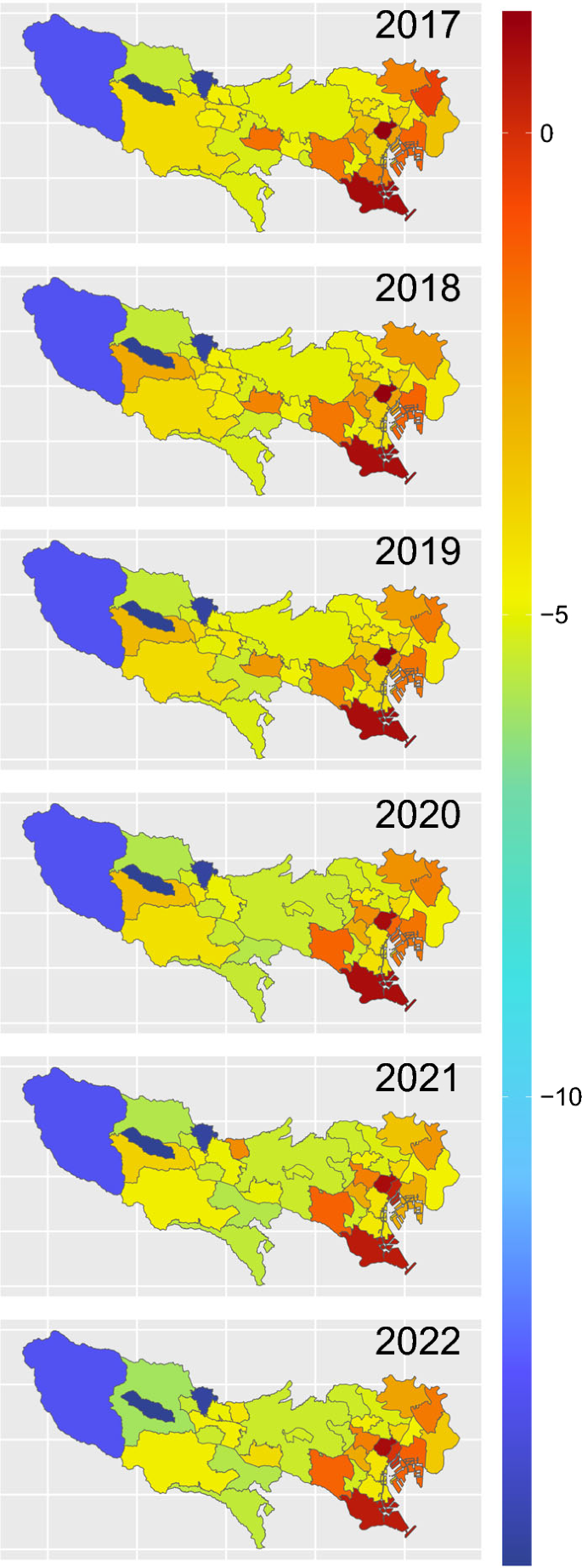

In this section, we apply our method to the estimation of spatio-temporal trend using real crime data. The data consist of the number of recognized crimes committed in the Tokyo area as collected by the Metropolitan Police Department, available at TOKYO OPEN DATA (https://portal.data.metro.tokyo.lg.jp/) (see the Notes section). Although these data were aggregated for each chou-chou (level 4), the finest regional division, we integrate the data for each chou-oaza (level 3) and apply our method by regarding level 3 as individuals and the city (level 2) as the group (see Fig. 3).

There are 53 groups as a division of space, and spatial adjacency is defined by the regional relationships of level 2. We use six years of data, from 2017 to 2022. The sample size is \(n = 9{,}570\). Temporal adjacency is defined using a chain graph for the six time points. According to Yamamura et al. (2021), we can define adjacent spatio-temporal relationships for \(m = 318\ (= 53 \times 6)\) groups by combining spatial and temporal adjacencies. Furthermore, following Yamamura et al. (2023), we use population as a variable for the offset. The population data were obtained from the results of the population census, as provided in e-Stat (https://www.e-stat.go.jp/en). Since the population census is conducted every five years, we use the population in 2015 for the crimes in 2017 to 2019 and the population in 2020 for the crimes in 2020 to 2022.
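The combination of spatial and temporal adjacencies can be sketched as follows. This is one natural construction, assumed here for illustration: spatial edges are repeated within each time point, and each region is linked to itself at the next time point (a chain graph in time). The function name `spatio_temporal_edges`, the indexing scheme, and the toy spatial graph are hypothetical; the precise definition is the one given in Yamamura et al. (2021).

```python
def spatio_temporal_edges(spatial_edges, n_regions, n_times):
    """Undirected edges over n_regions * n_times groups.

    The group for region s at time t receives the index t * n_regions + s.
    """
    edges = set()
    for t in range(n_times):
        base = t * n_regions
        # Spatial adjacency, repeated within each time point.
        for s1, s2 in spatial_edges:
            edges.add((base + min(s1, s2), base + max(s1, s2)))
        # Temporal chain: the same region at consecutive time points.
        if t + 1 < n_times:
            for s in range(n_regions):
                edges.add((base + s, base + n_regions + s))
    return sorted(edges)

# Toy example: 3 regions on a line (0-1-2) observed at 2 time points.
toy = spatio_temporal_edges([(0, 1), (1, 2)], n_regions=3, n_times=2)
print(toy)
```

With 53 regions and 6 time points, the same indexing yields \(53 \times 6 = 318\) groups, matching the value of m used in this section.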

In this analysis, we apply our method to the above crime data, with \(n = 9{,}570\) individuals aggregated into \(m = 318\) groups, and estimate the spatio-temporal trends in the data. Specifically, \(y_{ji}\), the number of crimes in the ith region of the jth group, is modeled based on the Poisson and negative binomial distributions, respectively, as

where \(q_{j i}\) is the logarithm of the population, and the canonical link and the log-link are used for the Poisson and negative binomial models, respectively. Estimation of the dispersion parameter, the setting of penalty weights, and the candidates for the tuning parameter follow Sect. 3.3. The optimal tuning parameter is selected from 100 candidates by minimizing the BIC. Table 10 summarizes the estimation results.

Here, \(\hat{\phi }\) denotes the Pearson estimator of the dispersion parameter. Since the value of \(\hat{\phi }\) in the Poisson regression is far larger than 1, there is overdispersion, and we can say that using Poisson regression is inappropriate. To cope with this overdispersion, we adopted NB2. The cluster value in the table indicates the number of clusters obtained by GFL. Poisson regression and NB2 clustered the \(m = 318\) groups into 160 and 109 clusters, respectively. Figure 4 is a yearly choropleth map of the GFL estimates of \({\varvec{\beta }}\) using NB2. In the map, a larger value indicates that crime occurs more easily, and a smaller value indicates that it occurs less easily. As this figure shows, we can visualize the variation of the trend with respect to time and space.

6 Conclusion

To unify models based on a variety of distributions, we proposed a coordinate descent algorithm to obtain GFL estimators for GLMs. Although Yamamura et al. (2021), Ohishi et al. (2022), and Yamamura et al. (2023) dealt with GFL for the binomial and Poisson distributions, our method is more general, covering both these distributions and others. The proposed method repeats the partial update of parameters and directly solves sub-problems without any approximation of the objective function. In many cases, the solution can be updated in closed form. Indeed, in the ordinary situation where a canonical link is used and there is no offset, we can always update the solution in closed form. Moreover, even when an explicit update is impossible, we can easily update the solution using a simple numerical search since the interval containing the solution can be specified. Hence, our algorithm can efficiently search for the solution. In the simulation studies, the computational times demonstrated that the proposed method is efficient.
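To make the idea of a closed-form partial update concrete, the following Python sketch solves a single one-dimensional sub-problem for the Poisson distribution with log-link. It assumes that the sub-problem has the form \(\sum _i \{ \exp (x + q_i) - y_i x \} + \lambda \sum _\ell w_\ell |x - t_\ell |\), where the \(t_\ell \) are the current values of the adjacent parameters; this form, the function names, and the toy numbers are assumptions for illustration and are not taken from the GFLglm package. The sketch also assumes \(\sum _i y_i + \lambda \sum _\ell w_\ell > 0\) so that a minimizer exists.

```python
import math

def objective(x, y, q, knots, weights, lam):
    """Assumed sub-problem: Poisson negative log-likelihood plus fused penalty."""
    nll = sum(math.exp(x + qi) - yi * x for yi, qi in zip(y, q))
    pen = sum(w * abs(x - t) for t, w in zip(knots, weights))
    return nll + lam * pen

def poisson_gfl_update(y, q, knots, weights, lam):
    """Exact minimizer of `objective` over x, using one-sided derivatives."""
    A = sum(math.exp(qi) for qi in q)  # coefficient of exp(x) in the smooth part
    Y = float(sum(y))
    pairs = sorted(zip(knots, weights))
    c = -sum(w for _, w in pairs)      # penalty slope / lam to the left of all knots
    for t, w in pairs:
        smooth = A * math.exp(t) - Y   # derivative of the smooth part at t
        if smooth + lam * c > 0:       # left derivative > 0: solution before t
            return math.log((Y - lam * c) / A)
        c += 2 * w                     # penalty slope / lam after crossing t
        if smooth + lam * c >= 0:      # zero lies between one-sided derivatives
            return t                   # the parameter is fused to this neighbor
    return math.log((Y - lam * c) / A) # solution to the right of all knots

# Hypothetical toy data: three observations and two adjacent parameters.
y, q = [3, 5, 2], [0.0, 0.1, -0.2]
knots, weights = [0.5, 1.5], [1.0, 2.0]
x_free = poisson_gfl_update(y, q, knots, weights, lam=2.0)
x_fused = poisson_gfl_update(y, q, knots, weights, lam=4.0)
print(x_free, x_fused)
```

In this toy example, the smaller penalty leaves the update strictly between the two adjacent values, whereas the larger penalty fuses it to one of them. Because the derivative is strictly increasing on each piece, the sign checks at the knots identify the piece containing the solution, which is the same reasoning that bounds the search interval when no closed form is available.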

Data availability

Data are available at https://portal.data.metro.tokyo.lg.jp/ and https://www.e-stat.go.jp/en.

Notes

We arranged and used the following work: Tokyo Metropolitan Government & Metropolitan Police Department, The number of recognized cases by region, crime type, and method (yearly total; in Japanese), licensed under https://creativecommons.org/licenses/by/4.0/deed.en.

References

Algamal, Z.Y.: Developing a ridge estimator for the gamma regression model. J. Chemom. 32, 3054 (2018). https://doi.org/10.1002/cem.3054

Choi, H., Lee, S.: Convex clustering for binary data. Adv. Data Anal. Classif. 13, 991–1018 (2019). https://doi.org/10.1007/s11634-018-0350-1

Devriendt, S., Antonio, K., Reynkens, T., Verbelen, R.: Sparse regression with multi-type regularized feature modeling. Insur. Math. Econ. 96, 248–261 (2021). https://doi.org/10.1016/j.insmatheco.2020.11.010

Dunn, P.K., Smyth, G.K.: Generalized Linear Models With Examples in R. Springer, New York (2018)

Friedman, J., Hastie, T., Höfling, H., Tibshirani, R.: Pathwise coordinate optimization. Ann. Appl. Stat. 1, 302–332 (2007). https://doi.org/10.1214/07-AOAS131

Gardner, W., Mulvey, E.P., Shaw, E.C.: Regression analyses of counts and rates: Poisson, overdispersed Poisson, and negative binomial models. Psychol. Bull. 118, 392–404 (1995). https://doi.org/10.1037/0033-2909.118.3.392

Hilbe, J.M.: Negative Binomial Regression, 2nd edn. Cambridge University Press, Cambridge (2011)

Höfling, H., Binder, H., Schumacher, M.: A coordinate-wise optimization algorithm for the fused Lasso. arXiv:1011.6409v1 (2010)

Nelder, J.A., Wedderburn, R.W.M.: Generalized linear models. J. R. Stat. Soc. Ser. A 135, 370–384 (1972). https://doi.org/10.2307/2344614

Ohishi, M.: GFLglm: Generalized Fused Lasso for Grouped Data in Generalized Linear Models (2024). R package version 0.1.0. https://github.com/ohishim/GFLglm

Ohishi, M., Fukui, K., Okamura, K., Itoh, Y., Yanagihara, H.: Coordinate optimization for generalized fused Lasso. Comm. Stat. Theory Methods 50, 5955–5973 (2021). https://doi.org/10.1080/03610926.2021.1931888

Ohishi, M., Yamamura, M., Yanagihara, H.: Coordinate descent algorithm of generalized fused Lasso logistic regression for multivariate trend filtering. Jpn. J. Stat. Data Sci. 5, 535–551 (2022). https://doi.org/10.1007/s42081-022-00162-2

Reynkens, T., Devriendt, S., Antonio, K.: Smurf: Sparse Multi-Type Regularized Feature Modeling (2023). R package version 1.1.5. https://CRAN.R-project.org/package=smurf

Rockafellar, R.T.: Convex Analysis. Princeton University Press, New Jersey (1970)

Schwarz, G.: Estimating the dimension of a model. Ann. Stat. 6, 461–464 (1978). https://doi.org/10.1214/aos/1176344136

Tang, L., Song, P.X.K.: Fused Lasso approach in regression coefficients clustering—learning parameter heterogeneity in data integration. J. Mach. Learn. Res. 17, 1–23 (2016)

Tang, L., Zhou, L., Song, P.X.K.: Metafuse: Fused Lasso Approach in Regression Coefficient Clustering (2016). R package version 2.0-1. https://CRAN.R-project.org/package=metafuse

Tibshirani, R.J.: Adaptive piecewise polynomial estimation via trend filtering. Ann. Stat. 42, 285–323 (2014). https://doi.org/10.1214/13-AOS1189

Tibshirani, R., Saunders, M., Rosset, S., Zhu, J., Knight, K.: Sparsity and smoothness via the fused Lasso. J. R. Stat. Soc. Ser. B. Stat. Methodol. 67, 91–108 (2005). https://doi.org/10.1111/j.1467-9868.2005.00490.x

Ver Hoef, J.M., Boveng, P.L.: Quasi-Poisson vs. negative binomial regression: How should we model overdispersed count data? Ecology 88, 2766–2772 (2007). https://doi.org/10.1890/07-0043.1

Xin, B., Kawahara, Y., Wang, Y., Gao, W.: Efficient generalized fused Lasso and its application to the diagnosis of Alzheimer’s disease. In: Proceedings of the Twenty-Eighth AAAI Conference on Artificial Intelligence, pp. 2163–2169. AAAI Press, California (2014)

Yamamura, M., Ohishi, M., Yanagihara, H.: Spatio-temporal adaptive fused Lasso for proportion data. In: Czarnowski, I., Howlett, R.J., Jain, L.C. (eds.) Intelligent Decision Technologies, pp. 479–489. Springer, Singapore (2021). https://doi.org/10.1007/978-981-16-2765-1_40

Yamamura, M., Ohishi, M., Yanagihara, H.: Spatio-temporal analysis of rates derived from count data using generalized fused Lasso. In: Czarnowski, I., Howlett, R.J., Jain, L.C. (eds.) Intelligent Decision Technologies, pp. 225–234. Springer, Singapore (2023). https://doi.org/10.1007/978-981-99-2969-6_20

Zou, H.: The adaptive Lasso and its oracle properties. J. Am. Stat. Assoc. 101, 1418–1429 (2006). https://doi.org/10.1198/016214506000000735

Acknowledgements

The author thanks Prof. Hirokazu Yanagihara of Hiroshima University for his many helpful comments and FORTE Science Communications (https://www.forte-science.co.jp/) for English language editing of the first draft. Moreover, the author also thanks the associate editor and the two reviewers for their valuable comments. Furthermore, this work was partially supported by JSPS KAKENHI Grant Number JP20H04151, JP21K13834, JSPS Bilateral Program Grant Number JPJSBP120219927, and ISM Cooperative Research Program (2023-ISMCRP-4105).

Author information

Contributions

M.O. contributed the whole paper.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Appendices

Appendix A: Proof of Corollary 4

Suppose that for all \(\ell \in \{ 0, 1, \ldots , r \}\), the statement

is true and that (8) holds. Then, \(\dot{f}_\ell (x)\) is strictly increasing on \(R_\ell \) and hence, \(f_\ell (x)\) is strictly convex. Moreover, for all \(\ell \in \{ 1, \ldots , r \}\), there is the following relationship among a derivative and one-sided derivatives:

This fact and (8) imply the strict convexity of f(x) on \(\mathbb {R}\) and hence, the minimizer uniquely exists.

Appendix B: Derivation of MLEs

We first describe the derivation of the MLE of \(\beta _j\). For distributions with a convex likelihood function, the MLE is obtained by solving

In Tables 2 and 3, all distributions, with the exception of the inverse Gaussian distribution with log-link, have convexity. The MLE of \(\beta _j\) is given in closed form in the following cases:

- \(q_{j1} = \cdots = q_{j n_j} = q_{j0}\):

$$\begin{aligned} \tilde{\beta }_j = \mu ^{-1} \left( \sum _{i=1}^{n_j} a_{ji} y_{ji} / \sum _{i=1}^{n_j} a_{ji} \right) - q_{j0}. \end{aligned}$$

- Gaussian:

$$\begin{aligned} \tilde{\beta }_j = n_j^{-1} \sum _{i=1}^{n_j} (y_{ji} - q_{ji}). \end{aligned}$$

- Poisson or Gamma with log-link:

$$\begin{aligned} \tilde{\beta }_j = \log \sum _{i=1}^{n_j} y_{ji} \dot{h} (q_{ji}) - \log \sum _{i=1}^{n_j} \dot{h} (q_{ji}) \exp (q_{ji}). \end{aligned}$$

Other distributions, including the inverse Gaussian distribution with log-link, require a numerical search. Furthermore, the negative binomial distribution requires the repeated updating of \({\varvec{\beta }}\) and \(\phi \) alternately.
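The closed-form expressions above can be checked numerically with a short Python sketch. The function names and the toy data are hypothetical. For the gamma distribution, the sketch assumes the usual parameterization \(\theta = -1/\mu \), so that \(h (x) = -\exp (-x)\) and \(\dot{h} (x) = \exp (-x)\) under the log-link; for the Poisson distribution, the log-link is canonical and \(\dot{h} (x) = 1\).

```python
import numpy as np

def mle_gaussian(y, q):
    """Gaussian case: the mean of y - q within the group."""
    return float(np.mean(np.asarray(y, float) - np.asarray(q, float)))

def mle_log_link(y, q, h_dot):
    """beta = log sum(y * h_dot(q)) - log sum(h_dot(q) * exp(q))."""
    y, q = np.asarray(y, float), np.asarray(q, float)
    hd = h_dot(q)
    return float(np.log(np.sum(y * hd)) - np.log(np.sum(hd * np.exp(q))))

def poisson_h_dot(x):
    return np.ones_like(x)  # canonical link: h(x) = x

def gamma_h_dot(x):
    return np.exp(-x)       # assumed h(x) = -exp(-x)

# Hypothetical observations and offsets for one group.
y = np.array([2.0, 4.0, 3.0])
q = np.array([0.0, 0.5, -0.3])

b_pois = mle_log_link(y, q, poisson_h_dot)
b_gamma = mle_log_link(y, q, gamma_h_dot)
b_gauss = mle_gaussian(y, q)

# Score equations that the estimates should satisfy.
score_pois = np.sum(y - np.exp(b_pois + q))
score_gamma = np.sum(y * np.exp(-(b_gamma + q)) - 1.0)
print(b_pois, b_gamma, b_gauss, score_pois, score_gamma)
```

Both score values should vanish up to rounding error, which is a quick way to confirm that the formula for the log-link covers the Poisson and gamma cases simultaneously through \(\dot{h}\).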

Next, we describe the derivation of \(\beta _{\max }\). The \(\beta _{\max }\) is the MLE of \(\beta \) under \({\varvec{\beta }}= \beta {\varvec{1}}_m\), and for distributions with a convex likelihood function, its value is obtained by solving

Notice that this is essentially equal to the derivation of the MLE of \(\beta _j\). Hence, \(\beta _{\max }\) is given in closed form in the following cases:

- \(q_{j i} = q_0\ (\forall j, i)\):

$$\begin{aligned} \beta _{\max } = \mu ^{-1} \left( \sum _{j=1}^m \sum _{i=1}^{n_j} a_{ji} y_{ji} / \sum _{j=1}^m \sum _{i=1}^{n_j} a_{ji} \right) - q_0. \end{aligned}$$

- Gaussian:

$$\begin{aligned} \beta _{\max } = n^{-1} \sum _{j=1}^m \sum _{i=1}^{n_j} (y_{ji} - q_{ji}). \end{aligned}$$

- Poisson or Gamma with log-link:

$$\begin{aligned} \beta _{\max }&= \log \sum _{j=1}^m \sum _{i=1}^{n_j} y_{ji} \dot{h} (q_{ji}) \\&\qquad - \log \sum _{j=1}^m \sum _{i=1}^{n_j} \dot{h} (q_{ji}) \exp (q_{ji}). \end{aligned}$$
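For the common-offset case, the pooled estimate can be sketched in Python as follows. The function name `beta_max_common_offset` and the toy data are hypothetical, and the sketch assumes that \(\mu ^{-1}\) plays the role of the link function (the logarithm for the log-link) and that the constants \(a_{ji}\) are supplied as known weights.

```python
import numpy as np

def beta_max_common_offset(y_groups, a_groups, q0, mu_inv=np.log):
    """Pooled estimate: mu_inv(sum(a * y) / sum(a)) - q0 over all groups."""
    y = np.concatenate([np.asarray(g, float) for g in y_groups])
    a = np.concatenate([np.asarray(g, float) for g in a_groups])
    return float(mu_inv(np.sum(a * y) / np.sum(a)) - q0)

# Hypothetical example: two groups, unit weights, no offset, log-link.
y_groups = [[1, 2, 3], [4, 5]]
a_groups = [[1, 1, 1], [1, 1]]
b_max = beta_max_common_offset(y_groups, a_groups, q0=0.0)
print(b_max)
```

With unit weights and no offset, the result reduces to the logarithm of the grand mean, which illustrates why this derivation is essentially the same as that of the MLE of \(\beta _j\) with all groups pooled.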

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ohishi, M. Generalized fused Lasso for grouped data in generalized linear models. Stat Comput 34, 124 (2024). https://doi.org/10.1007/s11222-024-10433-5

DOI: https://doi.org/10.1007/s11222-024-10433-5