Abstract

The restricted polynomially-tilted pairwise interaction (RPPI) distribution gives a flexible model for compositional data. It is particularly well-suited to situations where some of the marginal distributions of the components of a composition are concentrated near zero, possibly with right skewness. This article develops a method of tractable robust estimation for the model by combining two ideas. The first idea is to use score matching estimation after an additive log-ratio transformation. The resulting estimator is automatically insensitive to zeros in the data compositions. The second idea is to incorporate suitable weights in the estimating equations. The resulting estimator is additionally resistant to outliers. These properties are confirmed in simulation studies, where we also demonstrate that our new outlier-robust estimator is efficient in high concentration settings, even when there is no model contamination. An example is given using microbiome data. A user-friendly R package accompanies the article.

1 Introduction

The polynomially-tilted pairwise interaction (PPI) model for compositional data was introduced by Scealy and Wood (2023). It is a flexible model for compositional data because it can model high levels of right skewness in the marginal distributions of the components of a composition, and it can capture a wide range of correlation patterns. Empirical investigations in Scealy and Wood (2023) showed that this distribution can successfully describe the behaviour of real data in many settings. They illustrated its effectiveness on a set of microbiome data. Some other recent related articles are Yu et al. (2021) and Weistuch et al. (2022).

Many articles in the literature analysing microbiome data, including for example Cao et al. (2019), He et al. (2021), Mishra and Muller (2022) and Liang et al. (2022), assume that zeros are effectively outliers since they replace all zero counts by 0.5 (or they use some other arbitrary constant to impute the zeros), then take a log-ratio transformation of the proportions and apply Euclidean data analysis methods. This is a form of Winsorisation and depending on the target of inference, this zero replacement method can often lead to bias in parameter estimators. There are typically huge numbers of zeros in microbiome data and we argue that they should not be automatically treated as outliers, but rather they should be treated as legitimate datapoints that occur with relatively high probability.

The purpose of this article is to develop a novel method of estimation for the PPI model with several attractive features: (a) it is tractable, (b) it is insensitive to zero values in any of the components of a data composition, and (c) it is resistant to outliers. Outliers can occur when the majority of the dataset is highly concentrated in a relatively small region of the simplex. An observation not close to the majority would be deemed an outlier. The method is based on score matching estimation (SME) after an additive log-ratio transformation, plus the inclusion of additional weights in the estimating equations for resistance to outliers. Our approach uses the additive log-ratio transformation as a device to obtain parameter estimators for the PPI model and we are not transforming the data itself prior to the analysis as in the Aitchison (1986) approach. Although the method is mathematically well-defined for the full PPI model, it is helpful in practice to focus on a restricted version of the PPI model (the RPPI model) both for identifiability reasons and because the restricted model was shown by Scealy and Wood (2023) to provide a good fit to microbiome data.

The article is organized as follows. The PPI model is presented in Sect. 2. The important distinction between zero components and outliers is explored in Sect. 3. Section 4 gives the score matching algorithm and Sect. 5 gives the modifications needed for resistance to outliers. An application to microbiome data analysis is given in Sect. 6, with simulation studies in Sect. 7. Further technical details and simulation results are given in the Supplementary Material which also includes a document that reproduces the numerical results in this article.

A package designed for the Comprehensive R Archive Network (CRAN 2022) is under development and available at github.com/kasselhingee/scorecompdir. The package contains our new additive log-ratio score matching estimator and its robustified version, other score matching estimators, and a general capacity for implementing score matching estimators.

2 The PPI distribution

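The \((p-1)\)-dimensional simplex in \(\mathbb {R}^p\) is defined by

$$\begin{aligned} \Delta ^{p-1} = \left\{ \varvec{u}= (u_1, \ldots , u_p)^\top \in \mathbb {R}^p : u_j \ge 0, \ j=1, \ldots , p, \ \sum _{j=1}^p u_j = 1 \right\} , \end{aligned} \tag{1}$$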

where a composition \(\varvec{u}\) contains p nonnegative components adding to 1. The boundary of the simplex consists of compositions for which one or more components equal zero. The open simplex \(\Delta ^{p-1}_0\) excludes the boundary so that \(u_j>0, \ j=1, \ldots , p\).
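
As a small illustration of these definitions, the following sketch classifies a vector as lying in the open simplex, on its boundary, or outside the simplex. The function name `simplex_status` and the tolerance `tol` are hypothetical choices made for this example only.

```python
def simplex_status(u, tol=1e-12):
    """Classify u as 'interior', 'boundary' or 'outside' the simplex."""
    # A composition has nonnegative components that add to 1.
    if any(x < 0 for x in u) or abs(sum(u) - 1.0) > tol:
        return "outside"
    # Any exact zero places the composition on the boundary.
    return "boundary" if any(x == 0 for x in u) else "interior"


print(simplex_status([0.2, 0.3, 0.5]))
print(simplex_status([0.0, 0.4, 0.6]))
print(simplex_status([0.5, 0.6, -0.1]))
```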

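The polynomially-tilted pairwise interaction (PPI) model of Scealy and Wood (2023) on \(\Delta ^{p-1}_0\) is defined by the density

$$\begin{aligned} f(\varvec{u}) = \frac{1}{c_1} \left( \prod _{j=1}^p u_j^{\beta _j} \right) \exp \left( \varvec{u}^\top \varvec{D}\varvec{u}\right) , \quad \varvec{u}\in \Delta ^{p-1}_0, \end{aligned} \tag{2}$$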

with respect to \(d \varvec{u}\), where \(d \varvec{u}\) denotes Lebesgue measure in \(\mathbb {R}^p\) on the hyperplane \(\sum u_j =1\). The density is the product of a Dirichlet factor and an exp-quadratic (i.e. the exponential of a quadratic) factor. To ensure integrability, the Dirichlet parameters must satisfy \(\beta _j>-1, \ j=1, \ldots , p\). Note that if \(-1< \beta _j<0\), the Dirichlet factor blows up as \(u_j \rightarrow \) 0. The matrix \(\varvec{D}\) in the quadratic form is a symmetric matrix. Due to the constraint \(\sum u_j =1\) it may be assumed without loss of generality that \(\varvec{1}^\top \varvec{D}\varvec{1}= 0\).

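If the last component is written in terms of the earlier components, \(u_p = 1-\sum _1^{p-1} u_j\), then (2) can be written in the alternative form

$$\begin{aligned} f(\varvec{u}) = \frac{1}{c_2} \left( \prod _{j=1}^p u_j^{\beta _j} \right) \exp \left( \varvec{u}_L^\top \varvec{A}_L \varvec{u}_L + \varvec{b}_L^\top \varvec{u}_L \right) , \end{aligned} \tag{3}$$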

with respect to the same Lebesgue measure \(d\varvec{u}\), where \(\varvec{u}_L=(u_1,u_2,\ldots , u_{p-1})^{\top }\), \(\varvec{A}_L\) is a \((p-1) \times (p-1)\)-dimensional symmetric matrix and \(\varvec{b}_L\) is a \((p-1)\)-dimensional vector.
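
A minimal sketch of the unnormalised log-density may help fix ideas. It assumes that the alternative form is the product of the Dirichlet factor \(\prod u_j^{\beta _j}\) and the exp-quadratic factor \(\exp (\varvec{u}_L^\top \varvec{A}_L \varvec{u}_L + \varvec{b}_L^\top \varvec{u}_L)\); the function name and argument layout are hypothetical, and the normalising constant is deliberately omitted.

```python
import math


def ppi_log_density_unnorm(u, beta, A_L, b_L):
    """Unnormalised PPI log-density at an interior composition u (length p).

    Assumed form: sum_j beta_j * log(u_j) + u_L' A_L u_L + b_L' u_L,
    where u_L holds the first p - 1 components of u.
    """
    if any(x <= 0 for x in u):
        raise ValueError("the density is defined on the open simplex only")
    u_L = u[:-1]
    k = len(u_L)
    dirichlet = sum(b * math.log(x) for b, x in zip(beta, u))
    quad = sum(A_L[i][j] * u_L[i] * u_L[j] for i in range(k) for j in range(k))
    lin = sum(b * x for b, x in zip(b_L, u_L))
    return dirichlet + quad + lin


# Hypothetical parameter values for p = 3.
u = [0.1, 0.2, 0.7]
beta = [-0.5, -0.2, 0.0]
A_L = [[-10.0, 2.0], [2.0, -5.0]]
b_L = [0.0, 0.0]
print(ppi_log_density_unnorm(u, beta, A_L, b_L))
```

With negative \(\beta _j\) the Dirichlet term grows without bound as \(u_j \rightarrow 0\), which is the blow-up noted above.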

The full PPI model contains \((p^2+ 3p -2)/2\) parameters. Although the parameters are mathematically identifiable, in practice it can be difficult to estimate all of them accurately. Hence it is useful to consider a restricted PPI (RPPI) with a smaller number of free parameters. The RPPI model contains \(q = (p+2)(p-1)/2\) free parameters (the same as for the \((p-1)\)-dimensional multivariate normal distribution) and is defined as follows. First, order the components so that the most abundant component \(u_p\) is listed last. Then set

$$\begin{aligned} \varvec{b}_L = \varvec{0} \quad \text {and} \quad \beta _p = 0. \end{aligned} \tag{4}$$

The above restriction on the parameters leads to a model which is similar to a generalised gamma distribution in \(p-1\) dimensions. This model was shown by Scealy and Wood (2023) to provide a reasonably good fit to microbiome data.
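
The parameter counts quoted above can be checked by simple arithmetic. The sketch below assumes that the full model is parametrised by a symmetric \(\varvec{A}_L\), the vector \(\varvec{b}_L\) and \(\beta _1, \ldots , \beta _p\), and that the free parameters of the restricted model are \(\varvec{A}_L\) and \(\beta _1, \ldots , \beta _{p-1}\); the function names are hypothetical.

```python
def n_params_full(p):
    # Symmetric A_L: p(p-1)/2 entries; b_L: p-1 entries; beta: p entries.
    return p * (p - 1) // 2 + (p - 1) + p


def n_params_rppi(p):
    # Symmetric A_L plus beta_1, ..., beta_{p-1}.
    return p * (p - 1) // 2 + (p - 1)


for p in (3, 5, 10):
    assert n_params_full(p) == (p * p + 3 * p - 2) // 2
    assert n_params_rppi(p) == (p + 2) * (p - 1) // 2
    print(p, n_params_full(p), n_params_rppi(p))
```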

3 Zeros and outliers

Two types of extreme behaviour in compositional data are zeros and outliers, and it is helpful to distinguish between these two concepts.

An outlier is defined to be an observation which has low probability density under the PPI model fitted to the bulk of the data on the simplex. Outliers can occur when the majority of the dataset is highly concentrated in a relatively small region of the simplex. In particular, if most of the data are highly concentrated in the middle of the simplex with small variance, then an observation close to or on the boundary would be deemed to be an outlier.

On the other hand, if the marginal distribution for the jth component has a nonvanishing probability density as \(u_j\) tends to 0 (e.g. in the PPI model with \(\beta _j \le 0\)), then a composition \(\varvec{u}\) with \(u_j=0\) would not be considered to be an outlier.

Although the PPI model has no support on the boundary of the simplex, we may still want to fit the model to data sets for which some of the compositions have components which are exact zeros. There are two main ways to think about the presence of zeros in the data. First, they may be due to measurement error; a measurement of zero corresponds to a “true” composition lying in the interior of the simplex. Second, the data may arise as counts from a multinomial distribution where the probability vector is viewed as a latent composition coming from the PPI model. Then zero counts can occur even though the probability vector lies in \(\Delta ^{p-1}_0\). See Sects. 4.3, 6 and Scealy and Wood (2023) for further details on the multinomial latent variable model.

The presence of zero components in data poses a major problem for maximum likelihood estimation for the PPI model. In particular, the derivative of the log-likelihood function with respect to \(\beta _j\) for a single composition \(\varvec{u}\),

is unbounded as \(u_j \rightarrow 0\), which leads to singularities in the maximum likelihood estimates.

Hence we look for alternatives to maximum likelihood estimation. One promising general approach is score matching estimation (SME), due to Hyvarinen (2005). A version of SME was used by Scealy and Wood (2023) that involved downweighting observations near the boundary of the simplex. However, their method was somewhat cumbersome due to the requirement to specify a weight function and their estimator is inefficient when many of the parameters \(\beta _j\), \(j=1,2,\ldots , p-1\) are close to \(-1\). This parameter setting is relevant to microbiome data applications.

This article uses another version of SME that we call ALR-SME because it involves an additive log-ratio transformation when constructing the estimators. ALR-SME is tractable and is insensitive to zeros, in the sense that the influence function is bounded as \(u_j \rightarrow 0\) for any j.

Further, following the method of Windham (1995) it is possible to robustify ALR-SME to outliers by incorporating suitable weights in the estimating equations. Details are given in Sect. 5. In this article we make a distinction between robustness to zeros and robustness to outliers, and for clarity we often describe these forms of robustness as insensitive to zeros and resistant to outliers, respectively.

4 Additive log-ratio score matching estimation

In this section we recall the general construction of the score matching estimator due to Hyvarinen (2005), and then apply it to data from the RPPI distribution after first making an additive log-ratio transformation.

4.1 The score matching estimator

The construction of the score matching estimator starts with the Hyvarinen divergence, defined by

where g and \(g_0\) are probability densities on \(\mathbb {R}^{p-1}\) subject to mild regularity conditions (Hyvarinen 2005). Note that \( \Phi (g,g_0) = 0\) if and only if \(g=g_0\).

Let

define an exponential family model, where \(\varvec{\pi }\) is a q-dimensional parameter vector and \(\varvec{t}(\varvec{y})\) is a q-dimensional vector of sufficient statistics. Then for a given density \(g_0\), the “best-fitting” model \(g(\varvec{y}; \varvec{\pi })\) to \(g_0\) can be defined by minimizing (5) over \(\varvec{\pi }\). Since \(\nabla \log g(\varvec{y}) \) is linear in \(\varvec{\pi }\), \(\Phi \) is quadratic in \(\varvec{\pi }\). Differentiating \(\Phi \) with respect to \(\varvec{\pi }\), and setting the derivative to \(\varvec{0}\) yields the estimating equations

$$\begin{aligned} \varvec{W}\varvec{\pi }= \varvec{d}, \end{aligned} \tag{7}$$

where \(\varvec{W}\) and \(\varvec{d}\) have elements

The Laplacian in (8) arises after integration by parts in (5). Hence the best-fitting value of \(\varvec{\pi }\) is

$$\begin{aligned} \varvec{\pi }= \varvec{W}^{-1} \varvec{d}. \end{aligned}$$

Given data \(\varvec{y}_i, \ i=1, \ldots , n\), with elements \(y_{ij}, \ j=1, \ldots , p-1\), the integrals can be replaced by empirical averages to yield the estimating equations

$$\begin{aligned} {\hat{\varvec{W}}} \varvec{\pi }= {\hat{\varvec{d}}}, \end{aligned} \tag{9}$$

where \({\hat{\varvec{W}}}\) and \({\hat{\varvec{d}}}\) have elements

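Solving the estimating equations (9) yields the score matching estimator (SME)

$$\begin{aligned} {\hat{\varvec{\pi }}} = {\hat{\varvec{W}}}^{-1} {\hat{\varvec{d}}}. \end{aligned} \tag{11}$$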
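
To illustrate the linear-algebra structure of the estimator, the following sketch applies score matching to a toy exponential family on the real line, \(g(y) \propto \exp (\pi _1 y + \pi _2 y^2)\), which is a normal distribution and not the RPPI model. It assumes the common convention in which \({\hat{\varvec{W}}}\) is the sample average of \(\nabla \varvec{t}\, \nabla \varvec{t}^\top \) and \({\hat{\varvec{d}}}\) is minus the sample average of the Laplacian of \(\varvec{t}\); the function name is hypothetical.

```python
def score_matching_gaussian(ys):
    """Score matching for g(y) proportional to exp(pi1 * y + pi2 * y**2)."""
    n = len(ys)
    m1 = sum(ys) / n
    m2 = sum(y * y for y in ys) / n
    # W_hat averages grad t grad t' with t(y) = (y, y^2), so grad t = (1, 2y).
    w11, w12, w22 = 1.0, 2.0 * m1, 4.0 * m2
    # d_hat is minus the average Laplacian of t, and the Laplacian is (0, 2).
    d1, d2 = 0.0, -2.0
    # Solve the 2 x 2 linear system W_hat pi = d_hat by Cramer's rule.
    det = w11 * w22 - w12 * w12
    pi1 = (d1 * w22 - w12 * d2) / det
    pi2 = (w11 * d2 - w12 * d1) / det
    return pi1, pi2


# Sample mean 3 and (divisor n) variance 2.
print(score_matching_gaussian([1.0, 2.0, 3.0, 4.0, 5.0]))  # (1.5, -0.25)
```

The solution equals (mean/variance, \(-1/(2 \times \) variance)), the natural parameters of the fitted normal distribution, and no normalising constant is needed at any stage.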

4.2 Additive log-ratio transformed compositions

To make use of this result for distributions on the simplex, it is helpful to make an additive log-ratio (ALR) transformation from \(\varvec{u}\in \Delta ^{p-1}_0\) to \(\varvec{y}= (y_1,y_2,\ldots , y_{p-1})^{\top } \in \mathbb {R}^{p-1}\) where

$$\begin{aligned} y_j = \log \left( \frac{u_j}{u_p} \right) , \quad j=1, \ldots , p-1. \end{aligned} \tag{12}$$

This transformation was popularized by Aitchison (1986). The logistic-normal distribution for \(\varvec{u}\), or equivalently the normal distribution for \(\varvec{y}\) has often been suggested as a model for compositional data (e.g., Aitchison 1986). However, it should be noted that the RPPI distribution has very different properties. In particular, we do not advocate the use of logistic-normal models in situations where zero or very-near-zero compositional components occur frequently. See Scealy and Welsh (2014) for relevant discussion and see Appendix A.3 (Supplementary Material) for further details on the choice of metric and transformation in score matching.
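
For reference, a minimal sketch of the ALR map and its inverse is given below, assuming the usual definition \(y_j = \log (u_j/u_p)\) with the last component as the reference. The function names are hypothetical. The forward map is undefined whenever a component is exactly zero, which is why the transformation is used here only as a device in the derivations and is not applied to the data.

```python
import math


def alr(u):
    """Additive log-ratio transform; the last component is the reference."""
    if any(x <= 0 for x in u):
        raise ValueError("alr is only defined on the open simplex")
    return [math.log(x / u[-1]) for x in u[:-1]]


def alr_inverse(y):
    """Map y in R^(p-1) back to a composition with p positive components."""
    m = max(0.0, max(y))                     # shift for numerical stability
    expy = [math.exp(v - m) for v in y]
    ref = math.exp(-m)                       # term for the reference component
    denom = ref + sum(expy)
    return [e / denom for e in expy] + [ref / denom]


u = [0.1, 0.2, 0.7]
y = alr(u)
print(y)
print(alr_inverse(y))   # recovers u up to rounding error
```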

The transformed RPPI distribution has density proportional to

with respect to Lebesgue measure \(d\varvec{y}= dy_1 \cdots dy_{p-1}\) on \(\mathbb {R}^{p-1}\), where

and we have used the constraints (4). The density (13) forms a full exponential family with canonical parameter vector

with \(q={p(p-1)}/{2} + (p-1)\) parameters, where \(a_{ij}\) refers to the i, jth element of \(\varvec{A}_L\). The corresponding sufficient statistic, \(\varvec{t}(\varvec{y})=(\varvec{t}_1(\varvec{y})^\top , \varvec{t}_2(\varvec{y})^\top , \varvec{t}_3(\varvec{y})^\top )^\top \) will now be specified: \(\varvec{t}_1(\varvec{y})\) is a \((p-1)\)-vector with jth element

\(\varvec{t}_2(\varvec{y})\) is a \((p-1)(p-2)/2\)-vector with typical element

and \(\varvec{t}_3(\varvec{y})\) is a \((p-1)\)-vector with typical element

The elements of \({\hat{\varvec{W}}}\) and \({\hat{\varvec{d}}}\) in (9) can be expressed in terms of linear combinations of powers and products of the \(u_{ij}\) which are the elements of the data vectors \(\varvec{u}_i\), \(i=1,2,\ldots , n\); see the equations (20) and (21) in Appendix A.1 (Supplementary Material). We refer to the resulting score matching estimator as the ALR-SME. Note that there are no \(\log {(u_{ij})}\) terms or ratios involving the \(u_{ij}\) in (20) and (21). The ALR-SME is very different to the standard maximum likelihood estimator for Aitchison’s logistic normal distribution. We use log-ratios merely as a device in the derivations to obtain our new score matching estimators (we are not actually transforming the data in the analysis since the PPI distribution is defined directly on the simplex).
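
One way to see why logarithms and ratios drop out is that, under the ALR map, \(\partial \log u_j / \partial y_k = \delta _{jk} - u_k\) for \(j, k \le p-1\), so derivatives with respect to \(\varvec{y}\) are polynomial in \(\varvec{u}\). The sketch below checks this identity by finite differences. It is only an illustration with hypothetical function names and does not reproduce the expressions (20) and (21).

```python
import math


def alr_inverse(y):
    denom = 1.0 + sum(math.exp(v) for v in y)
    return [math.exp(v) / denom for v in y] + [1.0 / denom]


def grad_log_u(u, j):
    """Analytic gradient of log(u_j) with respect to y: delta_jk - u_k."""
    return [(1.0 if k == j else 0.0) - u[k] for k in range(len(u) - 1)]


def grad_log_u_numeric(y, j, h=1e-6):
    """Central finite-difference gradient of log(u_j) with respect to y."""
    grads = []
    for k in range(len(y)):
        y_plus, y_minus = list(y), list(y)
        y_plus[k] += h
        y_minus[k] -= h
        diff = math.log(alr_inverse(y_plus)[j]) - math.log(alr_inverse(y_minus)[j])
        grads.append(diff / (2 * h))
    return grads


y = [-3.0, 0.5]
u = alr_inverse(y)
print(grad_log_u(u, 0))
print(grad_log_u_numeric(y, 0))
```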

4.3 Consistency

Next we state a consistency result for the ALR-SME when applied to the multinomial latent variable model. Let \(\varvec{x}_i, \ i=1, \ldots , n\), be independent multinomial count vectors from different multinomial distributions, where the probability vectors \(\varvec{u}_i\) are taken independently from the RPPI model. Let \(m_i = x_{i1} + \cdots + x_{ip}\) denote the total count from the ith multinomial vector. That is, we assume the conditional probability mass function of \(\varvec{x}_i=(x_{i1},x_{i2},\ldots , x_{ip})^{\top } \) given \(\varvec{u}_i\) is \(f(\varvec{x}_i \vert \varvec{u}_i)=m_i! \prod _{j=1}^p \{ u_{ij}^{x_{ij}}/x_{ij}!\}\), where the \(\varvec{u}_i=(u_{i1},u_{i2},\ldots , u_{ip})^{\top }\) are unobserved latent variables. This model is relevant for analysing microbiome data; see Sect. 6. Consider estimating the parameters \(\varvec{A}_L\) and \(\beta _1, \beta _2, \ldots , \beta _{p-1}\) using the ALR-SME where the known proportions \({\hat{\varvec{u}}}_i=\varvec{x}_i/m_i\) are used as substitutes for the unknown true compositions \(\varvec{u}_i\) for \(i=1,2,\ldots , n\). Note that we do not need the extra restrictive conditions in part (III) of Theorem 3 of Scealy and Wood (2023) for estimating \(\varvec{\beta }\). The proof of Theorem 1 below is given in Appendix A.2 (Supplementary Material).
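
The substitution of proportions for latent compositions is straightforward to carry out. The sketch below normalises a count vector and shows that zero counts produce proportions on the boundary of the simplex; the counts and the function name are hypothetical.

```python
def proportions(counts):
    """Normalise a count vector x_i to proportions x_i / m_i."""
    m = sum(counts)
    if m <= 0:
        raise ValueError("the total count must be positive")
    return [x / m for x in counts]


x = [0, 3, 0, 47, 950]            # hypothetical counts for p = 5 components
u_hat = proportions(x)
print(u_hat)
print(sum(v == 0 for v in u_hat), "zero components")
```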

Theorem 1

Let \({\hat{\varvec{\pi }}}\) denote the ALR-SME of \(\varvec{\pi }\) (12) based on the (unobserved) compositional vectors \(\varvec{u}_1, \ldots , \varvec{u}_n\) and let \({\hat{\varvec{\pi }}}^{\dagger }\) denote the ALR-SME of \(\varvec{\pi }\) based on the observed vectors of proportions \({\hat{\varvec{u}}}_1, \ldots , {\hat{\varvec{u}}}_n\). Assume that for some constants \(C_1>0\) and \(\alpha >1\), \(\inf _{i=1, \ldots , n} m_i \ge C_1 n^\alpha \).

Then as \(n \rightarrow \infty \)

Theorem 1 above shows that \({\hat{\varvec{\pi }}}^{\dagger }\) is asymptotically equivalent to \({\hat{\varvec{\pi }}}\) to leading order. Note that Theorem 1 does not assume that the population latent variable distribution is a RPPI distribution, but if the RPPI model is correct then \({\hat{\varvec{\pi }}}^{\dagger }\) and \({\hat{\varvec{\pi }}}\) are both consistent estimators of \(\varvec{\pi }\) under the conditions of Theorem 1. Asymptotic normality of \({\hat{\varvec{\pi }}}\) also follows directly from similar arguments to Scealy and Wood (2023). Theorem 1 applies even when the observed data has a large proportion of zeros. This has important implications for analysing microbiome count data with many zeros. Scealy and Wood (2023) were unable to use score matching based on the square root transformation to estimate \(\varvec{\beta }\) when analysing real microbiome data because the extra conditions needed for consistency did not look credible for the real data (there was an extra assumption needed on the marginal distributions of the components of the \(\varvec{u}_i\)). Here, using the ALR-SME we are now able to estimate \(\varvec{\beta }\) directly using score matching. See Sects. 6 and 7 for further details.

It is also insightful to compare the ALR-SME to standard maximum likelihood estimation. The maximum likelihood estimator for \(\varvec{A}_L\) and \(\varvec{\beta }\) based on an iid sample from model (3) solves the estimating equation

where \(\varvec{t}^*(\varvec{u})\) is defined at (19) in Appendix A.1 (Supplementary Material). Denote the maximum likelihood estimator of \(\varvec{\pi }\) by \({\hat{\varvec{\pi }}}_{ML}\). The estimator \({\hat{\varvec{\pi }}}_{ML}\) is difficult to calculate due to the intractable normalising constant \(c_2\). Theorem 1 also does not hold for \({\hat{\varvec{\pi }}}_{ML}\) due to the presence of the \(\log {(u_j)}\) terms in \(\varvec{t}^* (\varvec{u})\) which are unbounded at zero. That is, we cannot simply replace \(\varvec{u}_i\) by \({\hat{\varvec{u}}}_i\) within (15) to obtain a consistent estimator for the multinomial latent variable model. This is a major advantage of the ALR-SME because it leads to computationally simple and consistent estimators, whereas \({\hat{\varvec{\pi }}}_{ML}\) with the latent variables \(\varvec{u}_i, \ i=1,2,\ldots , n\) each replaced with \({\hat{\varvec{u}}}_i\) is inconsistent and computationally intractable.
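
The contrast between logarithmic and polynomial statistics can be seen on a few hypothetical proportion vectors containing zeros. In the sketch below, the sample average of \(\log ({\hat{u}}_{ij})\) is \(-\infty \) as soon as one proportion is zero, whereas an average of squares stays finite. The helper names are hypothetical, and the statistics are chosen for illustration only.

```python
import math

# Hypothetical observed proportions; two of the rows contain an exact zero.
u_hat = [[0.0, 0.2, 0.8], [0.1, 0.0, 0.9], [0.05, 0.15, 0.8]]


def mean_log(data, j):
    # log(0) is undefined, so use its limiting value -inf.
    vals = [math.log(u[j]) if u[j] > 0 else -math.inf for u in data]
    return sum(vals) / len(vals)


def mean_square(data, j):
    return sum(u[j] ** 2 for u in data) / len(data)


print(mean_log(u_hat, 0))      # -inf: a single zero is enough
print(mean_square(u_hat, 0))   # finite
```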

4.4 Comments on SME

Score matching estimation has been defined here for probability densities whose support is all of \(\mathbb {R}^{p-1}\). This construction can be extended in various ways, and we mention two possibilities here that are relevant for compositional data.

First, the unbounded region \(\mathbb {R}^{p-1}\) in (5) can be replaced by a bounded region such as \(\Delta ^{p-1}_0\). However, there is a price to pay. The integration by parts which underlies the Laplacian term in (8) now includes boundary terms. Scealy and Wood (2023) introduced a weighting function which vanishes on the boundary of the simplex. The effect of this weighting function is to eliminate the boundary terms. However, the weighting function also lessens the contribution of data near the boundary to the estimating equations.

Second, the Hyvarinen divergence in (5) implicitly uses a Riemannian metric in \(\mathbb {R}^{p-1}\), namely Euclidean distance. Other choices of Riemannian metric lead to different estimators. Some comments on these choices in the context of compositional data are discussed in Appendix A.3 (Supplementary Material).

5 An ALR-SME that is resistant to outliers

Although the simplex is a bounded space, outliers/influential points can still occur when the majority of the data is highly concentrated, or equivalently has low dispersion, in certain regions of the simplex. In the case of microbiome data (see Sect. 6), there are a small number of abundant components which have low concentration (e.g. Actinobacteria and Proteobacteria) and these components should be fairly resistant to outliers. However, the components Spirochaetes, Verrucomicrobia, Cyanobacteria/Chloroplast and TM7 are highly concentrated at or near zero and any large values away from zero can be influential. For the highly concentrated microbiome components distributed close to zero, these marginally look to be approximately gamma or generalised gamma distributed; see Figs. 1 and 2 in Sect. 6. Hence there is a need for the Dirichlet component of the density in the RPPI model (3).

We now develop score matching estimators for the RPPI model (3) that are resistant to outliers. Assume that the first \(k^*\) components of \(\varvec{u}\) are highly concentrated near zero where it is expected that possibly \(\beta _1< 0, \beta _2< 0, \ldots , \beta _{k^{*}} < 0\). The remaining components \(u_{k^*+1}, u_{k^*+2},\ldots , u_p\) are assumed to have relatively low concentration. By low concentration we mean moderate to high variance and by high concentration we mean small variance. The robustification which follows is only relevant for highly concentrated components near zero which is why we are distinguishing between the different cases. See Scealy and Wood (2021) for further discussion on standardised bias robustness under high concentration which is relevant to all compact sample spaces including the simplex. When \(k^* < p-1\) partition

where \(\varvec{A}_{KK}\) is a \(k^*\times k^*\) matrix, \(\varvec{A}_{KR}\) is a \(k^* \times (p-1-k^*)\) matrix, \(\varvec{A}_{RK}\) is a \((p-1-k^*) \times k^*\) matrix and \(\varvec{A}_{RR}\) is a \((p-1-k^*) \times (p-1-k^*) \) matrix. When \(k^*=p-1\) then \(\varvec{A}_L=\varvec{A}_{KK}\). The (unweighted) estimating equations for the ALR-SME are given by (9) and can be written slightly more concisely as

where the elements of \(\varvec{W}_1(\varvec{u}_i)\) and \(\varvec{d}_1(\varvec{u}_i)\) are functions of \(\varvec{u}_i\) and are defined at equations (20) and (21) in Appendix A.1 (Supplementary Material) for the RPPI model and are given in a more general form through Eqs. (9)–(11).

Windham’s (1995) approach to creating robustified estimators is to use weights which are proportional to a positive power of the probability density function. The intuition behind this approach is that outliers under a given distribution will typically have small likelihood and hence a small weight, whereas observations in the central region of the distribution will tend to have larger weights. The Windham (1995) method is an example of a density-based minimum divergence estimator, but with the advantage that the normalising constant in the density does not need to be evaluated in order to apply it. See Windham (1995), Basu et al. (1998), Jones et al. (2001), Choi et al. (2000), Ribeiro and Ferrari (2020), Kato and Eguchi (2016) and Saraceno et al. (2020) for further discussion and insights. In the setting of the RPPI model for compositional data, there is a choice to be made between the probability densities to use in the weights, that is, whether to use (3) or (13) or, in other words, whether to choose the measure \(d\varvec{u}\) or \(d \varvec{y}\). We prefer \(d \varvec{u}\) because \(d \varvec{y}\) places zero probability density at the simplex boundary and thus always treats zeros as outliers, which is not a good property with data concentrated near the simplex boundary.

For the RPPI distribution, taking a power of the density (3) is a bad idea because for those \(\beta _j\) which are negative the weights will diverge to infinity as \(u_j\) tends to 0. To circumvent this issue we only use the exp-quadratic factor in (3) to define the weights. This choice of weighting function is a compromise between wanting the weight of an observation to be smaller if the probability density is smaller and needing to avoid infinite weights on the boundary of the simplex. In fact, typically \(\varvec{u}_{K}^\top \varvec{A}_{KK}\varvec{u}_{K}\), where \(\varvec{u}_{K}=(u_{1},u_{2},\ldots , u_{k^*})^{\top }\), is highly negative whenever \(\varvec{u}\) has a large value in any of the components that are highly concentrated near zero in distribution. It is thus sufficient to use just the \(\exp (\varvec{u}_{K}^\top \varvec{A}_{KK}\varvec{u}_{K})\) factor of (3) in the weights (the influence function in Theorem 2 below confirms this behaviour). Including all elements of \(\varvec{A}_L\) in the weights leads to a large loss in efficiency, so the weights in our robustified ALR-SME are \(\exp (c\varvec{u}_{i,K}^\top \varvec{A}_{KK}\varvec{u}_{i,K})\), \(i=1, \ldots , n\), where \(\varvec{u}_{i,K}=(u_{i1},u_{i2},\ldots , u_{ik^*})^{\top }\). The weighted form of the estimating equation (16) is then

where \(\varvec{H}\) is a \(q \times q\) diagonal matrix with diagonal elements either equal to \(c+1\) or 1 (the elements corresponding to the parameters \(\varvec{A}_{KK}\) are \(c+1\) and the rest are 1). The estimating equation (17) has a very simple form here (i.e. given the weights, the estimating equations are linear in \(\varvec{\pi }\)), whereas the version based on the maximum likelihood estimator does not have such a nice linear form, leading to a much more complicated influence function calculation and interpretation (e.g. Jones et al. 2001).
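
The behaviour of the weights \(\exp (c\varvec{u}_{i,K}^\top \varvec{A}_{KK}\varvec{u}_{i,K})\) can be illustrated numerically. In the sketch below the data, the matrix \(\varvec{A}_{KK}\) and the value of c are hypothetical, as is the function name. The observation with a large first component receives a much smaller weight than the others, while the observation containing an exact zero keeps a finite weight.

```python
import math


def windham_weights(data, A_KK, c):
    """Normalised weights exp(c * u_K' A_KK u_K) from the first k* components."""
    k = len(A_KK)
    raw = []
    for u in data:
        quad = sum(A_KK[a][b] * u[a] * u[b] for a in range(k) for b in range(k))
        raw.append(math.exp(c * quad))
    total = sum(raw)
    return [w / total for w in raw]


# Hypothetical values: p = 3, k* = 2; the third row is far from the bulk.
data = [[0.01, 0.02, 0.97], [0.00, 0.03, 0.97], [0.40, 0.01, 0.59]]
A_KK = [[-50.0, 0.0], [0.0, -50.0]]
print(windham_weights(data, A_KK, c=0.5))
```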

An algorithm similar to that in Windham (1995) can be used to solve (17) and involves iteratively solving weighted versions of the score matching estimators. In summary this algorithm is

1. Set \(r=1\) and initialise the parameters: \({\hat{\varvec{\beta }}}^{(0)}\) and \({\hat{\varvec{A}}}_L^{(0)}\) (i.e. choose starting values such as the unweighted ALR-SME). Then repeat steps 2–5 until convergence.

2. Calculate the weights \( \tilde{w}_i= \exp {\left( c\varvec{u}_{i,K}^\top {\hat{\varvec{A}}}_{KK}^{(r-1)} \varvec{u}_{i,K} \right) }\) for \(i=1,2,\ldots , n\) and normalise the weights so that the weights sum to 1 across the sample. Also calculate the additional tuning constants \(\varvec{d}_{\beta }=-c{\hat{\varvec{\beta }}}^{(r-1)}\), \(\varvec{d}_{A_{1}}=-c{\hat{\varvec{A}}}_{RR}^{(r-1)}\), \(\varvec{d}_{A_{2}}=-c{\hat{\varvec{A}}}_{RK}^{(r-1)}\) and \(\varvec{d}_{A_{3}}=-c{\hat{\varvec{A}}}_{KR}^{(r-1)}\).

3. Calculate weighted score matching estimates. That is, replace all sample averages with weighted averages using the normalised weights \(\tilde{w}_i\) calculated in step 2. Denote the resulting estimates as \({\tilde{\varvec{\beta }}}^{(r)}\) and \({\tilde{\varvec{A}}}_L^{(r)}\).

4. The estimates in step 3 are biased and we need to do the following bias correction:

$$\begin{aligned} {\hat{\varvec{\beta }}}^{(r)}= \frac{{\tilde{\varvec{\beta }}}^{(r)}-\varvec{d}_{\beta }}{c+1}, \quad \text {and} \quad {\hat{\varvec{A}}}_{KK}^{(r)}=\frac{{\tilde{\varvec{A}}}_{KK}^{(r)}}{c+1} \end{aligned}$$

and

$$\begin{aligned} & {\hat{\varvec{A}}}_{RR}^{(r)}=\frac{{\tilde{\varvec{A}}}_{RR}^{(r)}-\varvec{d}_{A_1}}{c+1}, \quad {\hat{\varvec{A}}}_{RK}^{(r)}=\frac{{\tilde{\varvec{A}}}_{RK}^{(r)}-\varvec{d}_{A_2}}{c+1},\\ & \text {and} \quad {\hat{\varvec{A}}}_{KR}^{(r)}=\frac{{\tilde{\varvec{A}}}_{KR}^{(r)}-\varvec{d}_{A_3}}{c+1}. \end{aligned}$$

This correction is simple because the model is an exponential family; see Windham (1995) for further details.

5. \(r \rightarrow r+1\).

Step 4 in this new robust score matching algorithm above is similar to applying the inverse of \(\tau _c\) in Windham (1995). The tuning constants \(\varvec{d}_{\beta }\), \(\varvec{d}_{A_1}\), \(\varvec{d}_{A_2}\) and \(\varvec{d}_{A_3}\) are required due to our use of a factor of the density in the weights.
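
The iteration in steps 1–5 can be sketched as follows for the special case \(k^*=p-1\), in which \(\varvec{A}_L=\varvec{A}_{KK}\) and only \(\varvec{d}_{\beta }\) is needed. This is a schematic outline under stated assumptions and not the implementation in the accompanying package: the weighted score matching step is delegated to a user-supplied function `weighted_sme`, which is hypothetical, and the names `robust_alr_sme`, `tol` and `max_iter` are also choices made for this example.

```python
import math


def robust_alr_sme(data, weighted_sme, c, beta0, A0, tol=1e-10, max_iter=500):
    """Iterate steps 1-5 when k* = p - 1, so that A_L = A_KK.

    weighted_sme(data, weights) is a hypothetical user-supplied function that
    returns weighted score matching estimates (beta_tilde, A_tilde).
    """
    beta, A = list(beta0), [row[:] for row in A0]        # step 1
    k = len(A)
    for _ in range(max_iter):
        # Step 2: weights from the current A_KK, normalised to sum to 1.
        raw = [math.exp(c * sum(A[a][b] * u[a] * u[b]
                                for a in range(k) for b in range(k)))
               for u in data]
        total = sum(raw)
        weights = [w / total for w in raw]
        d_beta = [-c * b for b in beta]
        # Step 3: weighted score matching estimates.
        beta_t, A_t = weighted_sme(data, weights)
        # Step 4: bias correction.
        beta_new = [(bt - d) / (c + 1) for bt, d in zip(beta_t, d_beta)]
        A_new = [[a / (c + 1) for a in row] for row in A_t]
        change = max([abs(x - y) for x, y in zip(beta_new, beta)] +
                     [abs(x - y) for rn, ro in zip(A_new, A)
                      for x, y in zip(rn, ro)])
        beta, A = beta_new, A_new                        # step 5
        if change < tol:
            break
    return beta, A


# Stand-in for the weighted estimator, returning fixed hypothetical values.
def stub_sme(data, weights):
    return [-0.5, -0.3], [[-30.0, 0.0], [0.0, -30.0]]


print(robust_alr_sme([[0.1, 0.2, 0.7]], stub_sme, c=0.5,
                     beta0=[0.0, 0.0], A0=[[-1.0, 0.0], [0.0, -1.0]]))
```

With the fixed stand-in, the iterates of \(\varvec{\beta }\) converge to the weighted estimate and the estimate of \(\varvec{A}_{KK}\) is the weighted estimate divided by \(c+1\), which shows the role of the bias correction in step 4.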

This modified version of the Windham (1995) method is particularly useful when any \(\beta _j\)’s are negative in order to avoid infinite weights at zero. When the data is concentrated in the simplex interior (i.e. we expect \(\beta _j > 0\), \(j=1,2,\ldots , p\)) then the model density is bounded and we can apply the Windham (1995) method without modification, although efficiency gains may be possible from using well-chosen factors of the model density.

In order to complete the description of the robustified ALR-SME, we need to choose the robustness tuning constant c. In related settings, Kato and Eguchi (2016) use cross validation and Saraceno et al. (2020) calculate theoretical optimal values for a Gaussian linear mixed model. Basak et al. (2021) report that choosing the optimal tuning constant is challenging in general for density power divergence tuning parameters. We agree with the view of Muller and Welsh (2005, page 1298) that the choice of model selection criterion or estimator selection criterion should be independent of the estimation method, otherwise we may excessively favour particular estimators. This is an issue with the Kato and Eguchi (2016) method, which is based on an arbitrary choice of divergence which could favour the optimal estimator under that divergence. Instead we use a simulation based method to choose c; see Sect. 6. We also need to decide on the value of \(k^*\); see Sect. 6 for a guide.

We next examine the theoretical properties of our new robustified estimator. The proof of Theorem 2 below is given in Appendix A.4 (Supplementary Material). Let \(\mathcal {F}\) denote the set of probability distributions on the unit simplex \(\Delta ^{p-1} \subset \mathbb {R}^p\), where \(p \ge 3\). Let \(F_0\) denote the population probability measure for a single observation from \(\Delta ^{p-1} \) and write \(\delta _{\varvec{z}}\) for the degenerate distribution on \(\Delta ^{p-1} \) which places unit probability on \(\varvec{z}\in \Delta ^{p-1} \). Consider the ALR-SME functional \(\varvec{\theta }: \mathcal {F} \rightarrow \Theta \subseteq \mathbb {R}^{q}\). It is assumed that \(\varvec{\theta }\) is well defined for all \(\varvec{z}\) at \((1-\lambda )F_0+ \lambda \delta _{\varvec{z}}\) provided \(\lambda \in (0,1)\) is sufficiently small. Then the influence function for \(\varvec{\theta }\) and \(F_0\in \mathcal {F}\) at \(\varvec{z}\) is defined by

$$\begin{aligned} \text {IF}(\varvec{z}; \varvec{\theta }, F_0) = \lim _{\lambda \rightarrow 0^+} \frac{\varvec{\theta }\left( (1-\lambda )F_0+ \lambda \delta _{\varvec{z}}\right) - \varvec{\theta }(F_0)}{\lambda }. \end{aligned}$$

Theorem 2

Suppose that the population distribution \(F_0\) on \(\Delta ^{p-1} \) is absolutely continuous with respect to Lebesgue measure on \(\Delta ^{p-1}\). Also assume that \(k^*=p-1\) for simplicity of exposition, which implies that all of the first \(p-1\) components are concentrated at/near zero. (The proof for the case of \(k^* < p-1\) is similar and is not presented here.) Then

where \(\varvec{\pi }_0\) is the solution to the population estimating equation corresponding to (17) (see equation (27) in Appendix A.4 (Supplementary Material)) and the functions \(\varvec{G}(\varvec{\pi }_0)\) and \(\varvec{t}^{(a)}(\varvec{z})\) are defined in Appendix A.4 (Supplementary Material).

The functions \(\varvec{t}^{(a)}(\varvec{z})\), \(\varvec{W}_1(\varvec{z})\) and \(\varvec{d}_1(\varvec{z})\) contain linear combinations of low order polynomial products, for example terms like \(z_{1}^{r_1}z_{2}^{r_2}z_{3}^{r_3}\), where \(r_1 \ge 0\), \(r_2 \ge 0\), \(r_3 \ge 0\) and \(r_1+r_2+r_3\) is small. Therefore the above influence function is always bounded for all \(\varvec{z}\in \Delta ^{p-1}\) including for any points on the simplex boundary, even when \(c = 0\). The term \(\varvec{t}^{(a)}(\varvec{z})^{\top } \varvec{\pi }_0\) in Theorem 2 is equal to \(\varvec{z}_K^{\top } \varvec{A}_{KK} \varvec{z}_K\), where \(\varvec{z}_K = (z_1, z_2,\ldots , z_{k^*})^\top \). For many PPI models, \(\varvec{z}_K^{\top } \varvec{A}_{KK} \varvec{z}_K=\) \(\varvec{t}^{(a)}(\varvec{z})^{\top } \varvec{\pi }_0 < 0\), which means that for the components of \(\varvec{u}\) that are highly concentrated near zero in distribution, any large value away from zero in these components will be down-weighted and have less influence on the estimator. This leads to large efficiency gains in both the contaminated and uncontaminated cases. See Sect. 7 for further details.
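
The definition of the influence function as a limit of contaminated functionals can be approximated numerically with a small fixed \(\lambda \). The sketch below does this for a deliberately simple functional, the mean of a scalar sample, for which the influence function at z is z minus the mean. It is an illustration of the definition only and not a computation for the ALR-SME; the function names and the data are hypothetical.

```python
def influence_numeric(functional, sample, z, lam=1e-6):
    """Finite-lambda approximation to the influence function at z.

    The contaminated distribution (1 - lam) * F_n + lam * delta_z is
    represented by weighted points; functional(points, weights) evaluates
    the estimator at a weighted empirical distribution.
    """
    n = len(sample)
    base_w = [1.0 / n] * n
    cont_w = [(1.0 - lam) / n] * n + [lam]
    base = functional(sample, base_w)
    cont = functional(sample + [z], cont_w)
    return (cont - base) / lam


def weighted_mean(points, weights):
    return sum(w * x for x, w in zip(points, weights))


sample = [0.01, 0.02, 0.03, 0.02]    # hypothetical values concentrated near zero
print(influence_numeric(weighted_mean, sample, z=0.9))   # close to 0.9 - 0.02
```

For the unweighted mean the approximation grows linearly in z, which is the kind of sensitivity to large values that the down-weighting in the robustified estimator is designed to reduce.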

It is useful to compare Theorem 2 with the influence function for \({\hat{\varvec{\pi }}}_{ML}\). The maximum likelihood estimator is a standard M-estimator with influence function of the form

where \(\varvec{B}\) is a matrix function of the model parameters (e.g. Maronna et al. 2006, page 71) and \(\varvec{t}^*(\varvec{z})\) is defined at (19) in Appendix A.1 (Supplementary Material). The vector \(\varvec{t}^*(\varvec{z})\) contains the functions \(\log (z_1), \ \log (z_2), \ \ldots , \ \log (z_{p-1})\) and the influence function (18) is unbounded if any \(z_j\) approaches 0, \(j=1,2,\ldots , p-1\). Therefore maximum likelihood estimation for the PPI model is highly sensitive to zeros. Maximum likelihood estimation is also highly sensitive to zeros for the gamma, Beta, Dirichlet and logistic normal distributions for similar reasons.
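The contrast between the two influence functions comes down to the behaviour of their building blocks near the simplex boundary. The short sketch below is illustrative only: it compares \(\log (z_1)\), which appears in \(\varvec{t}^*(\varvec{z})\), with a low-order polynomial product of the kind described after Theorem 2. The helper names `log_term` and `poly_term` are hypothetical.

```python
import math

def log_term(z1):
    """A component of t*(z): diverges to minus infinity as z1 -> 0."""
    return math.log(z1)

def poly_term(z, powers):
    """A polynomial product z_1^{r_1} z_2^{r_2} ...; bounded on the simplex."""
    return math.prod(zj ** r for zj, r in zip(z, powers))

for z1 in (1e-2, 1e-6, 1e-12):
    z = (z1, 0.5, 0.5 - z1)
    print(z1, log_term(z1), poly_term(z, (1, 1, 0)))

# The polynomial term is still finite exactly on the boundary z_1 = 0.
boundary_value = poly_term((0.0, 0.5, 0.5), (1, 1, 0))
```

The logarithm grows without bound in absolute value as \(z_1\) shrinks, while the polynomial term stays between 0 and 1 and is well defined at \(z_1=0\).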

6 Microbiome data analysis

Microbiome data is challenging to analyse due to the presence of high right skewness, outliers and zeros in the marginal distributions of the bacterial species (e.g. Li 2015; He et al. 2021). Typically microbiome count data is either modelled using a multinomial model with latent variables (e.g. Li 2015; Martin et al. 2018; Zhang and Lin 2019) or the sample counts are normalised and treated as approximately continuous data since the total counts are large (e.g. Cao et al. 2019; He et al. 2021). Here we analyse real microbiome count data by fitting a RPPI multinomial latent variable model using the normalised microbiome counts as estimates of the latent variables; see Sect. 4.3.

In this section we analyse a subset of the longitudinal microbiome dataset obtained from a study carried out in a helminth-endemic area in Indonesia (Martin et al. 2018). In summary, stool samples were collected from 150 subjects in 2008 (pre-treatment) and in 2010 (post-treatment). The 16S rRNA gene from the stool samples was processed and resulted in counts of 18 bacterial phyla. Whether or not an individual was infected by helminths was also determined at both time points. We restricted the analysis to the year 2008 for individuals infected by helminths, which resulted in a sample size of \(n=94\), and we treated these individuals as being independent.

Martin et al. (2018) analysed the five most prevalent phyla and pooled the remaining categories. Scealy and Wood (2023) analysed a different set of four phyla, including two with a high number of zeros, and pooled the remaining categories. Here, for demonstrative purposes, we first analyse the same data components as in Scealy and Wood (2023), with the \(p=5\) components representing TM7, Cyanobacteria/Chloroplast, Actinobacteria, Proteobacteria and pooled. The percentages of zeros in these categories are \(38\%\), \(41\%\), \(0\%\), \(0\%\) and \(0\%\) respectively. Call this Dataset1. We also analyse a second dataset with \(p=5\), denoted Dataset2, which contains the components Spirochaetes, Verrucomicrobia, Cyanobacteria/Chloroplast, TM7 and pooled. The percentages of zeros in the categories for Dataset2 are \(77\%\), \(75\%\), \(41\%\), \(38\%\) and \(0\%\) respectively. Let \(x_{ij}\), \(i=1,2,\ldots , 94\) and \(j=1,2,3,4,5\), represent the sample counts for a given dataset with total count \(m_i=2000\). The estimated sample proportions were calculated as \(\hat{u}_{ij}=x_{ij}/m_i\), where \(i=1,2,\ldots , 94\) and \(j=1,2,3,4,5\).
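The normalisation \(\hat{u}_{ij}=x_{ij}/m_i\) and the per-component percentages of zeros can be computed as in the following minimal sketch. The count matrix `toy_counts` is made up for illustration and is not the microbiome data; the function names are hypothetical.

```python
def proportions(counts):
    """Convert each row of counts x_i to proportions u_hat_i = x_i / m_i."""
    return [[x / sum(row) for x in row] for row in counts]

def percent_zeros(counts):
    """Percentage of zero counts in each column (component)."""
    n = len(counts)
    p = len(counts[0])
    return [100.0 * sum(row[j] == 0 for row in counts) / n for j in range(p)]

# Made-up counts for three samples, each with total m_i = 2000.
toy_counts = [
    [0, 3, 150, 400, 1447],
    [2, 0, 90, 610, 1298],
    [0, 0, 200, 300, 1500],
]

u_hat = proportions(toy_counts)
zeros = percent_zeros(toy_counts)
print(u_hat[0])
print(zeros)
```

A zero count maps to a proportion of exactly zero, so no imputation step is involved in forming \(\hat{u}_{ij}\).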

Figure 1 is similar to Figure 3 of Scealy and Wood (2023), the only difference being that we have now included the two large proportions in \(\hat{u}_{i1}\) and \(\hat{u}_{i2}\) which were deleted by Scealy and Wood (2023) prior to their analysis because they identified them as outliers. The estimates of \(\beta _1\) and \(\beta _2\) in Scealy and Wood (2023) were negative and close to \(-1\), and the components \(\hat{u}_{i1}\) and \(\hat{u}_{i2}\) are mostly highly concentrated near zero. The components \(\hat{u}_{i3}\) and \(\hat{u}_{i4}\) for this dataset have low concentration. Therefore it makes sense here to choose \(k^*=2\).

Figure 2 contains histograms of the sample proportions in Dataset2. The first four components are highly concentrated near zero and we would expect that \(\beta _1\), \(\beta _2\), \(\beta _3\) and \(\beta _4\) are negative. Therefore it makes sense to choose \(k^*=4\) for this dataset.

6.1 Choice of tuning constant c

For each dataset we let c range over a grid from 0 up to 1.5 and fitted the model for each value of c. For each value of c we simulated a single large sample of size \(R=10{,}000\) under the fitted model (3) and rounded the simulated data as follows: \(\hat{u}_{ij}^r=\text {round}(\hat{u}_{ij}m_i)/m_i\), where \(\hat{u}_{ij}\) denotes the simulated proportion under the fitted RPPI model for \(i=1,2,\ldots , R\). This mimics the discreteness in the data; see Section 7 of Scealy and Wood (2023). We then compared the simulated proportions with the true sample proportions. Similar to the view of Muller and Welsh (2005, page 1298), we are interested in fitting the core of the data and we are not specifically interested in fitting the upper tails, which is where outliers can occur in this setting. This means we need to choose a criterion that is not sensitive to the upper tail. When comparing the simulated proportions with the true sample proportions, we therefore deleted all observations above the \(95\%\) quantile cutoff in the marginal distributions of the proportions.
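The rounding and trimmed comparison described above can be sketched as follows. This is a minimal illustration under stated assumptions: the \(95\%\) cutoff is taken from the observed sample and applied to both samples (the text does not spell out this detail), the comparison uses a two-sample Kolmogorov–Smirnov distance, and all function names are hypothetical. It assumes both trimmed samples are non-empty.

```python
def round_to_grid(u, m):
    """Round a simulated proportion to the nearest multiple of 1/m."""
    return round(u * m) / m

def quantile(values, q):
    """Empirical quantile by linear interpolation between order statistics."""
    s = sorted(values)
    pos = q * (len(s) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (pos - lo) * (s[hi] - s[lo])

def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: max |F_a(x) - F_b(x)|."""
    a, b = sorted(a), sorted(b)
    i = j = 0
    d = 0.0
    for x in sorted(set(a) | set(b)):
        while i < len(a) and a[i] <= x:
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        d = max(d, abs(i / len(a) - j / len(b)))
    return d

def truncated_ks(observed, simulated, m, q=0.95):
    """KS distance after rounding the simulated values and trimming the upper tail."""
    sim = [round_to_grid(u, m) for u in simulated]
    cut = quantile(observed, q)          # assumed: cutoff from the observed sample
    obs_core = [u for u in observed if u <= cut]
    sim_core = [u for u in sim if u <= cut]
    return ks_statistic(obs_core, sim_core)

# Toy check: a sample compared with itself has distance zero.
toy = [i / 2000 for i in range(100)]
print(truncated_ks(toy, toy, m=2000))
```

In a grid search over c, a function like `truncated_ks` would be evaluated once per component and per value of c, and the results weighed against the variability of the weights.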

For each dataset, c was chosen to give a compromise between fitting all components to give a small value of the Kolmogorov–Smirnov test statistic and keeping variation in the weights as small as possible to preserve efficiency. See Table 1 for the chosen values of c for each dataset. Note that the p value for Proteobacteria is quite small. This is not surprising as this was also the worst fitting component in Table 5 in Scealy and Wood (2023) in their analysis.

Table 2 contains the parameter estimates for Dataset1. The standard errors (SE) were calculated using a parametric bootstrap by simulating under the fitted multinomial latent variable model. Use of robust non-parametric bootstrap methods such as those in Muller and Welsh (2005) and Salibian-Barrera et al. (2008) is challenging here due to the large numbers of zeros in the data, and for that reason we prefer the parametric bootstrap. The parametric bootstrap SE estimates are expected to be a little larger than the ones in Scealy and Wood (2023), since they used asymptotic standard errors, which tended to be slight underestimates as shown in their simulation study. The new robustified ALR-SME estimates of \(\varvec{\beta }\) are reasonably close to the simulation/grid search estimates in Scealy and Wood (2023). Interestingly, \(\beta _3\) is not significantly different from zero; Scealy and Wood (2023) set this parameter to zero based on visual inspection of plots. As expected, the estimates of \(\beta _1\) and \(\beta _2\) are negative and highly significant. We no longer need to treat \(\varvec{\beta }\) as a tuning constant and we can now estimate its standard errors, which is an advantage of the new robustified ALR-SME method.
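The structure of a parametric bootstrap standard error can be sketched generically. In the minimal example below, the model is a toy exponential distribution standing in for the fitted multinomial latent variable model, and the estimator is the exponential rate maximum likelihood estimator standing in for the robustified ALR-SME; both substitutions are assumptions made only so that the example is self-contained, and the function names are hypothetical.

```python
import random
import statistics

def parametric_bootstrap_se(estimate, simulate, fit, n, n_boot=200, seed=1):
    """Standard deviation of `fit` over samples simulated under the fitted model."""
    rng = random.Random(seed)
    replicates = [fit(simulate(estimate, n, rng)) for _ in range(n_boot)]
    return statistics.stdev(replicates)

# Toy stand-in model: exponential data with rate theta.
def simulate_exponential(theta, n, rng):
    return [rng.expovariate(theta) for _ in range(n)]

def fit_exponential(sample):
    return len(sample) / sum(sample)   # maximum likelihood estimate of the rate

se = parametric_bootstrap_se(2.0, simulate_exponential, fit_exponential, n=94)
print(se)
```

The same three ingredients apply in the setting of this section: a fitted parameter value, a simulator for the fitted model at the original sample size, and the estimator re-applied to each simulated sample.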

Table 3 contains the parameter estimates for Dataset2. Note that we cannot apply the score matching estimators of Scealy and Wood (2023) to this dataset as every datapoint has a component equal to zero, which means the manifold boundary weight functions in Scealy and Wood (2023) evaluate to zero. However, our new robustified ALR-SME method can handle this dataset with massive numbers of zeros. Again, we used the parametric bootstrap to calculate the standard error estimates. As expected all of \(\beta _1\), \(\beta _2\), \(\beta _3\) and \(\beta _4\) are negative and highly significantly different from zero. The \(\varvec{A}_L\) parameter estimates are all insignificant. So for this dataset perhaps a Dirichlet model might be appropriate. Note that this is not surprising due to the massive numbers of zeros and relatively small sample size; there is not much information available to estimate \(\varvec{A}_L\).

7 Simulation

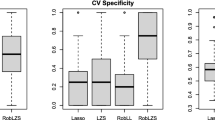

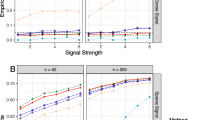

In this section we explore the properties of the new robustified ALR-SME and we compare them with the score matching estimator of Scealy and Wood (2023) based on the manifold boundary weight function \( \min (u_1, u_2, \ldots , u_p, a_c^2), \) with various choices of their tuning constant \(a_c \in [0,1]\). We consider eight different simulation settings. In each case we simulated \(R=1000\) samples, calculated several different score matching estimates for each sample, and then calculated estimated root mean squared errors (RMSE). The eight different simulation settings are now described. We focus on dimension \(p=5\) only.

Simulation 1: The model is the continuous RPPI model (3) with \(\varvec{b}_L=\varvec{0}\) and \(\beta _5=0\) fixed (not estimated). We set \(\beta _1=-0.80\), \(\beta _2=-0.85\), \(\beta _3=0\), \(\beta _4=-0.2\) and \(\varvec{A}_L\) is equal to the parameter estimates given in Table 3 of Scealy and Wood (2023). This model was the best fitting model for Dataset1 in Scealy and Wood (2023) after they deleted two outliers. We set the sample size to \(n=92\) which is consistent with Scealy and Wood (2023). For this model we calculated the new ALR-SME, which is denoted as \(c=0\) in Table 4. We also calculated the new robustified ALR-SME with tuning constants set to \(c=0.01\) and \(c=0.7\). Then we calculated the score matching estimators of Scealy and Wood (2023) with tuning constants set to \(a_c=0.01\), \(a_c=0.000796\) and \(a_c=1\); see columns 5, 6 and 8 in Table 4. Note that \(\varvec{\beta }\) is not estimated in columns 5, 6 and 8 and is treated as known and set equal to the true \(\varvec{\beta }\) (Scealy and Wood 2023 treated \(\varvec{\beta }\) as a tuning constant in their real data application). The 7th column in Table 4 denoted by \(a_c\) given \({\hat{\varvec{\beta }}}\) is a hybrid two step estimator. That is, first we calculated the estimate of \(\varvec{A}_L\) and \(\varvec{\beta }\) using the robustified ALR-SME with \(c=0.7\), then in the second step we updated the \(\varvec{A}_L\) estimate conditional on the robust \(\varvec{\beta }\) estimate using the Scealy and Wood (2023) estimator with \(a_c=0.000796\).

Simulation 2: The model is the multinomial latent variable model with \(m_i=2000\) for \(i=1,2,\ldots , n\) with \(n=92\). The latent variable distribution is set equal to the same RPPI model used in Simulation 1. We calculated the same score matching estimators as in Simulation 1 but instead of using \(\varvec{u}_i\) in estimation we plugged in the discrete simulated proportions \({\hat{\varvec{u}}}_i =\varvec{x}_i /m_i\).

Simulation 3: The same setting as Simulation 1 except we replace \(5.4\%\) of the observations with the outlier \(\varvec{u}_i=(0.4,0.4,0,0,0.2)^{\top }\).

Simulation 4: The same setting as Simulation 2 except we replace \(5.4\%\) of the observations with the outlier \({\hat{\varvec{u}}}_i=\varvec{x}_i/m_i=(0.4,0.4,0,0,0.2)^{\top }\).

Simulation 5: The model is the continuous RPPI model (3) with \(\varvec{b}_L=\varvec{0}\) and \(\beta _5=0\) fixed (not estimated), and remaining parameters set equal to the values given in Table 3. For this model we calculated the new ALR-SME which is denoted as \(c=0\) in Table 6. We also calculated the new robustified ALR-SME with tuning constants set to \(c=0.01\), \(c=0.25\), \(c=0.5\), \(c=0.75\), \(c=1\) and \(c=1.25\). The sample size is the same as Dataset2 which is \(n=94\).

Simulation 6: The model is the multinomial latent variable model with \(m_i=2000\) for \(i=1,2,\ldots , n\) with \(n=94\). The latent variable distribution is set equal to the same RPPI model used in Simulation 5. We calculated the same score matching estimators as in Simulation 5 but instead of using \(\varvec{u}_i\) in estimation we plugged in the discrete simulated proportions \({\hat{\varvec{u}}}_i =\varvec{x}_i /m_i\).

Simulation 7: The same setting as Simulation 5 except we replace \(5.3\%\) of the observations with the outlier \(\varvec{u}_i=(0.4,0.3,0.2,0.1,0)^{\top }\).

Simulation 8: The same setting as Simulation 6 except we replace \(5.3\%\) of the observations with the outlier \({\hat{\varvec{u}}}_i=\varvec{x}_i/m_i=(0.4,0.3,0.2,0.1,0)^{\top }\).
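Two mechanical steps recur in the settings above: replacing a fraction of a sample with a fixed outlier, and converting latent proportions to discrete plug-in proportions through a multinomial draw with \(m_i=2000\). The sketch below illustrates both under stated assumptions. The number of replaced observations is taken to be the contamination fraction times n, rounded to the nearest integer, which gives five observations for both \(n=92\) with \(5.4\%\) and \(n=94\) with \(5.3\%\); this count is inferred from the percentages rather than stated in the text. The clean sample used here is a placeholder, not a draw from the RPPI model.

```python
import random

def contaminate(sample, outlier, fraction):
    """Replace the first round(n * fraction) observations with a fixed outlier."""
    k = round(len(sample) * fraction)
    return [list(outlier) for _ in range(k)] + [list(u) for u in sample[k:]]

def multinomial_proportions(u, m, rng):
    """Draw x ~ Multinomial(m, u) and return the plug-in proportions x / m."""
    counts = [0] * len(u)
    for j in rng.choices(range(len(u)), weights=u, k=m):
        counts[j] += 1
    return [x / m for x in counts]

rng = random.Random(0)

# Placeholder "clean" sample of n = 92 compositions (not RPPI draws).
clean = [[0.01, 0.01, 0.30, 0.30, 0.38] for _ in range(92)]
outlier = [0.4, 0.4, 0.0, 0.0, 0.2]

dirty = contaminate(clean, outlier, 0.054)
discrete = [multinomial_proportions(u, 2000, rng) for u in dirty]
print(sum(u == outlier for u in dirty), discrete[0])
```

Because the observations are treated as independent and identically distributed, which positions in the sample are replaced does not matter for the estimators.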

We now discuss the simulation results in Tables 4 and 5. These models are motivated by Dataset1. Dataset1 has two components that are highly right skewed and concentrated near zero, and three components with low concentration; see Fig. 1. The RMSEs are of a similar order when comparing the first half of Table 4 with the corresponding cells in the second half of Table 4, and the same occurs within Table 5. This is not surprising because \(m_i\) is large compared with n and the approximation \({\hat{\varvec{u}}}_i\) for \(\varvec{u}_i\) is reasonable. Hence the estimates are insensitive to the large numbers of zeros in \(\hat{u}_{i1}\) and \(\hat{u}_{i2}\). When comparing Table 4 with Table 5, most of the corresponding cells are fairly similar apart from \(c=0\), which has huge RMSEs in Table 5. The robustified ALR-SMEs with \(c> 0\) are clearly resistant to the outliers, whereas the unweighted estimator with \(c=0\) does not exhibit good resistance to outliers. Interestingly, the most efficient estimate of \(\varvec{\beta }\) is given by \(c=0.7\) even when there are no outliers. The efficiency gains for \(\beta _1\) and \(\beta _2\) are substantial when comparing \(c=0\) (no weights) to \(c=0.7\). So the message here is that the weighted version of the ALR-SME is valuable for improving efficiency when estimating the components of \(\varvec{\beta }\) that are negative and close to \(-1\). In the continuous case the Scealy and Wood (2023) estimator with \(a_c=0.000796\) is arguably the most efficient for estimating \(\varvec{A}_L\), whereas in the discrete multinomial case the \(c=0.7\) estimator is arguably the most efficient for \(\varvec{A}_L\).

We now consider the simulation results in Tables 6 and 7. These models are motivated by Dataset2. Dataset2 has four components that are highly right skewed and concentrated near zero, and one component highly concentrated near one; see Fig. 2. The Scealy and Wood (2023) estimators are omitted because their manifold boundary weight functions evaluate to zero, or very close to zero, for most datapoints in most simulated samples. The RMSEs are roughly of a similar order when comparing the first half of Table 6 with the corresponding cells in the second half of Table 6, and the same occurs within Table 7. This is not surprising because \(m_i\) is large compared with n and the approximation \({\hat{\varvec{u}}}_i\) for \(\varvec{u}_i\) is reasonable. Hence the estimates are insensitive to the large numbers of zeros in \(\hat{u}_{i1}\), \(\hat{u}_{i2}\), \(\hat{u}_{i3}\) and \(\hat{u}_{i4}\). When comparing Table 6 with Table 7, most of the corresponding cells are fairly similar apart from \(c=0\), which has huge RMSEs in Table 7. The unweighted ALR-SME is not resistant to outliers, whereas the estimators with \(c> 0\) are clearly resistant to the outliers. Interestingly, the most efficient estimate of \(\varvec{\beta }\) is arguably given by \(c=1\) or \(c=1.25\) even when there are no outliers. Again the message here is that the weighted version of the ALR-SME is valuable for improving efficiency when estimating the components of \(\varvec{\beta }\) that are negative and close to \(-1\). The most efficient estimator for \(\varvec{A}_L\) is arguably \(c=0.5\) or \(c=0.75\).

Appendix A.5 (Supplementary Material) contains additional simulation results for dimension \(p=10\) and with a broader range of outlier contaminations (4%, 12% and 45%). These simulations also confirm that the robustified ALR-SMEs with \(c> 0\) are resistant to the outliers, whereas the unweighted estimator with \(c=0\) does not exhibit good resistance to outliers. When there are no outliers, the ALR-SME with \(c> 0\) is also often more efficient than the \(c=0\) estimator.
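The estimated RMSEs compared throughout this section can be computed per parameter from the R simulated estimates. The sketch below assumes the usual definition, the square root of the average squared deviation of the estimates from the true parameter value; the numbers are made up for illustration and are not taken from Tables 4 to 7.

```python
import math

def rmse(estimates, truth):
    """Root mean squared error of a list of estimates about the true value."""
    return math.sqrt(sum((e - truth) ** 2 for e in estimates) / len(estimates))

# Made-up estimates of a parameter whose true value is -0.8.
truth = -0.8
tight = [-0.82, -0.79, -0.80, -0.78]    # e.g. a stable estimator
loose = [-0.95, -0.60, -0.85, -0.40]    # e.g. an estimator upset by outliers

print(rmse(tight, truth), rmse(loose, truth))
```

Ratios of such RMSEs between two estimators give a simple summary of relative efficiency for a given parameter.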

8 Conclusion

We proposed a log-ratio score matching estimator that produces consistent estimates for \(\varvec{A}_L\) and the first \(p-1\) elements of \(\varvec{\beta }\) for the RPPI model and the multinomial model with RPPI latent probability vectors. This estimator was insensitive to the huge number of zeroes often encountered in microbiome data, and even performed well when every datapoint had a component that was zero. Our new estimator and modelling approach does not require treating zeros as outliers, which is an improvement on the treatment of zeros in the standard Aitchison log-ratio approach based on the logistic normal distribution. The robustified version of our estimator remained insensitive to zeros, improved resistance to outliers and also improved efficiency over unweighted ALR-SME for well-specified data. We recommend using our estimators when there are many components, many of which have concentrations at/near zero (i.e. many \(\beta _j\), \(j=1,2,\ldots , p-1\) are close to \(-1\)).

References

Aitchison, J.: The Statistical Analysis of Compositional Data, Monographs on Statistics and Applied Probability, vol. 25. Chapman & Hall, London (1986)

Basak, S., Basu, A., Jones, M.C.: On the ‘optimal’ density power divergence tuning parameter. J. Appl. Stat. 48, 536–556 (2021)

Basu, A., Harris, I.R., Hjort, N.L., Jones, M.C.: Robust and efficient estimation by minimising a density power divergence. Biometrika 85, 549–559 (1998)

Cao, Y., Lin, W., Li, H.: Large covariance estimation for compositional data via composition-adjusted thresholding. J. Am. Stat. Assoc. 114, 759–772 (2019)

Choi, E., Hall, P., Presnell, B.: Rendering parametric procedures more robust by empirically tilting the model. Biometrika 87, 453–465 (2000)

CRAN: The comprehensive R archive network. https://cran.r-project.org (2022). Accessed 7 Dec 2022

He, Y., Liu, P., Zhang, X., Zhou, W.: Robust covariance estimation for high-dimensional compositional data with application to microbial communities analysis. Stat. Med. 40(15), 3499–3515 (2021)

Hyvarinen, A.: Estimation of non-normalised statistical models by score matching. J. Mach. Learn. Res. 6, 695–709 (2005)

Jones, M.C., Hjort, N.L., Harris, I.R., Basu, A.: A comparison of related density-based minimum divergence estimators. Biometrika 88, 865–873 (2001)

Kato, S., Eguchi, S.: Robust estimation of location and concentration parameters for the von Mises-Fisher distribution. Stat. Pap. 57, 205–234 (2016)

Li, H.: Microbiome, metagenomics, and high-dimensional compositional data analysis. Annu. Rev. Stat. Its Appl. 2, 73–94 (2015)

Liang, W., Wu, Y., Xiaoyan, M.: Robust sparse precision matrix estimation for high-dimensional compositional data. Stat. Probab. Lett. 184, 109379 (2022)

Martin, I., Uh, H.-W., Supali, T., Mitreva, M., Houwing-Duistermaat, J.J.: The mixed model for the analysis of a repeated-measurement multivariate count data. Stat. Med. 38, 2248–2268 (2018)

Maronna, R., Martin, D., Yohai, V.: Robust Statistics: Theory and Methods. Wiley, Chichester (2006)

Mishra, A., Muller, C.L.: Robust regression with compositional covariates. Comput. Stat. Data Anal. 165, 107315 (2022)

Muller, S., Welsh, A.H.: Outlier robust model selection in linear regression. J. Am. Stat. Assoc. 100, 1297–1310 (2005)

Ribeiro, T.K.A., Ferrari, S.L.P.: Robust estimation in beta regression via maximum \(L_q\)-likelihood (2020). arXiv:2010.11368

Salibian-Barrera, M., Van Aelst, S., Willems, G.: Fast and robust bootstrap. Stat. Methods Appl. 17, 41–71 (2008)

Saraceno, G., Ghosh, A., Basu, A., Agostinelli, C.: Robust estimation under linear mixed models: the minimum density power divergence approach (2020). arXiv:2010.05593

Scealy, J.L., Welsh, A.H.: Colours and cocktails: compositional data analysis. 2013 Lancaster lecture. Aust. N. Z. J. Stat. 56, 145–169 (2014)

Scealy, J.L., Wood, A.T.A.: Analogues on the sphere of the affine-equivariant spatial median. J. Am. Stat. Assoc. 116, 1457–1471 (2021)

Scealy, J.L., Wood, A.T.A.: Score matching for compositional distributions. J. Am. Stat. Assoc. 118, 1811–1823 (2023)

Weistuch, C., Zhu, J., Deasy, J.O., Tannenbaum, A.R.: The maximum entropy principle for compositional data. BMC Bioinform. 23, 1–13 (2022)

Windham, M.P.: Robustifying model fitting. J. R. Stat. Soc. B 57, 599–609 (1995)

Yu, S., Drton, M., Shojaie, A.: Interaction models and generalized score matching for compositional data (2021). arXiv:2109.04671

Zhang, J., Lin, W.: Scalable estimation and regularization for the logistic normal multinomial model. Biometrics 75, 1098–1108 (2019)

Acknowledgements

This work was supported by Australian Research Council Grant DP220102232. We thank two referees for their constructive comments that helped to improve the final manuscript.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Scealy, J.L., Hingee, K.L., Kent, J.T. et al. Robust score matching for compositional data. Stat Comput 34, 93 (2024). https://doi.org/10.1007/s11222-024-10412-w

DOI: https://doi.org/10.1007/s11222-024-10412-w