Abstract

A new multivariate integer-valued Generalized AutoRegressive Conditional Heteroscedastic (GARCH) process based on a multivariate Poisson generalized inverse Gaussian distribution is proposed. The estimation of parameters of the proposed multivariate heavy-tailed count time series model via maximum likelihood method is challenging since the likelihood function involves a Bessel function that depends on the multivariate counts and its dimension. As a consequence, numerical instability is often experienced in optimization procedures. To overcome this computational problem, two feasible variants of the expectation-maximization (EM) algorithm are proposed for estimating the parameters of our model under low and high-dimensional settings. These EM algorithm variants provide computational benefits and help avoid the difficult direct optimization of the likelihood function from the proposed process. Our model and proposed estimation procedures can handle multiple features such as modeling of multivariate counts, heavy-tailedness, overdispersion, accommodation of outliers, allowances for both positive and negative autocorrelations, estimation of cross/contemporaneous-correlation, and the efficient estimation of parameters from both statistical and computational points of view. Extensive Monte Carlo simulation studies are presented to assess the performance of the proposed EM algorithms. Two empirical applications of our approach are provided. The first application concerns modeling bivariate count time series data on cannabis possession-related offenses in Australia, while the second one involves modeling intraday high-frequency financial transactions data from multiple holdings in the U.S. financial market.


1 Introduction

In recent years, modeling multivariate count time series data has been receiving increasingly more attention from social scientists as well as researchers from other disciplines. Multivariate count time series data can be commonly found in many fields such as economics, sociology, public health, finance and environmental science. Examples include weekly number of deaths from a disease observed across multiple geographical regions (Paul et al. 2008), high-frequency bid and ask volumes of a stock (Pedeli and Karlis 2018), and annual numbers of major hurricanes in the North Atlantic and North Pacific Basins (Livsey et al. 2018), to name a few. Many of these count time series examples exhibit heavy-tailed behavior and this poses a non-trivial modeling challenge.

Several methods exist in the literature for modeling univariate count time series data. Among them is the INteger-valued Generalized AutoRegressive Conditional Heteroscedastic (INGARCH) approach; see Ferland et al. (2006), Fokianos et al. (2009), Fokianos and Tjøstheim (2009) for some examples. Silva and Barreto-Souza (2019) is a recent work that considers a class of INGARCH models using mixed Poisson (MP) distributions. One of their proposed models, the Poisson inverse-Gaussian INGARCH process, viewed as an alternative to the negative binomial model, is capable of handling heavy-tailed behavior in count time series, and is also robust to the presence of outliers. Other existing models to handle univariate heavy-tailed time series of counts are due to Barreto-Souza (2019), Gorgi (2020), and Qian et al. (2020).

Following a number of studies on univariate models for time series of counts, some researchers have made efforts to generalize univariate models to bivariate and multivariate cases. As examples, Pedeli and Karlis (2011) proposed a bivariate INteger-valued AutoRegressive (INAR) model and Fokianos et al. (2020) introduced a multivariate count autoregression by combining copulas and INGARCH approaches. Readers are referred to Davis et al. (1995) and Fokianos et al. (2022) for a more detailed review. Bivariate INGARCH models have been proposed and explored by Cui and Zhu (2018), Lee et al. (2018), Cui et al. (2020), and Piancastelli et al. (2023).

Very limited research is available to address the problem of modeling multivariate count time series that exhibit heavy-tailed behavior. Such a feature is commonly seen in many real-data situations such as numbers of insurance claims for different types of properties (Chen et al. 2023), and high-frequency trading volume in the financial market (Qian et al. 2020), to name a few.

The primary aim of this paper is to introduce a novel multivariate time series model for handling heavy-tailed counts. We consider a Poisson generalized inverse-Gaussian distribution for the count vector given the past thereby allowing for the presence of heavy-tailed behavior and outliers. Moreover, the model construction follows a log-linear INGARCH structure, which enables us to account for both negative and positive autocorrelations. We refer to our model as multivariate Poisson generalized inverse-Gaussian INGARCH (MPGIG-INGARCH) process. A challenging point is that the resulting likelihood function of our multivariate count time series model depends on the Bessel function, posing difficulties and instability in estimating parameters via the conditional maximum likelihood method. Furthermore, as the dimension, denoted by p, increases, the number of parameters (equal to \(2p^2+p+2\)) grows fast. Hence, a direct optimization of the likelihood is computationally problematic, especially when the dimension grows. We properly address this issue by proposing effective expectation-maximization algorithms.

The main contributions of this paper are highlighted in what follows.

(i) The MPGIG-INGARCH process and the proposed estimation procedures can handle multiple features such as modeling of multivariate counts, heavy-tailedness, overdispersion, accommodation of outliers, allowances for both positive and negative autocorrelations, estimation of cross/contemporaneous-correlation, and the efficient estimation of parameters from both statistical and computational points of view. This is the first model capable of simultaneously fulfilling all these functionalities.

(ii) The stochastic representation of our model enables the use of an expectation-maximization (EM) algorithm for efficiently estimating the parameters. This helps avoid computational challenges that come with the direct optimization of the likelihood function of the MPGIG-INGARCH model.

(iii) We develop a new Generalized Monte Carlo expectation-maximization (GMCEM) algorithm that is computationally tractable and stable. For the larger dimensional cases, we also propose a new hybrid version of the GMCEM algorithm that combines the EM approach and quasi-maximum likelihood estimation (QMLE). In this hybrid approach, the QMLE technique is employed to estimate the parameters controlling temporal dependence, while the GMCEM algorithm is used to estimate the parameters that control overdispersion or degree of heavy-tailedness. The performance and computational burden of the GMCEM algorithm are examined in an extensive simulation study given in Sect. 4. To the best of our knowledge, this is the first time that such a hybrid EM algorithm variant has been suggested, in particular for dealing with count time series. We believe that this proposed algorithm can be used more generally for problems where a subset of parameters can be well-estimated via quasi-likelihood and the remaining ones can be estimated via an EM algorithm.

(iv) Our model is uniquely positioned to handle heavy-tailed behavior found in many real-data examples and we illustrate two relevant applications. The first application comes from modeling monthly counts of cannabis possession-related offenses recorded in two regions of New South Wales, Australia. The second application involves analyzing intraday high-frequency transactions data from multiple holdings in the U.S. financial market. Our analyses show that the proposed method effectively describes these datasets, which exhibit overdispersion and heavy-tailed behavior.

(v) Our modeling approach involves a latent random variable whose parameters relate to contemporaneous correlation. In our exposition, although we focus on the case where this latent random variable is generalized inverse-Gaussian (GIG) distributed, other cases can be easily introduced/implemented. For instance, by choosing a Gamma distribution for this latent variable, a novel multivariate negative binomial INGARCH model, denoted by MNB-INGARCH, is obtained. In the real-data applications, we also fit this MNB-INGARCH model and present the relevant results.

A related work to ours is Chen et al. (2023), where a first-order multivariate count model based on the INAR approach with innovations following a multivariate Poisson generalized inverse-Gaussian distribution is introduced. The estimation of parameters of their model is performed via the method of maximum likelihood. It is worth mentioning that likelihood functions of INAR models are generally quite cumbersome, even in the univariate case, and this issue is exacerbated in the multivariate context, which poses challenges relating to estimation and prediction (Fokianos et al. 2020). Besides this model not having a computationally feasible estimation technique, especially for higher dimensions, it does not handle all the features of our model mentioned in (i) above, for instance, allowing for negative autocorrelations. Moreover, the parameter related to the tail is fixed by Chen et al. (2023) in their model to some values that correspond to certain known/explicit distributions. In our case, we assume that such a parameter is unknown and show that it can be efficiently estimated. Also, a multivariate PGIG model under a regression framework has been proposed by Tzougas and Makariou (2022), but this model cannot handle time series data.

The remainder of this paper is organized as follows. Section 2 introduces the MPGIG-INGARCH model along with the necessary technical details. In Sect. 3, we describe the proposed GMCEM estimation algorithm, and also the hybrid version, the H-GMCEM algorithm, that will be used in the larger dimensional cases. The results of Monte Carlo simulations are discussed in Sect. 4. Two empirical applications of our proposed methodology on crime and financial data modeling are presented in Sect. 5. Concluding remarks are provided in Sect. 6.

2 MPGIG\(_p\)-INGARCH model

In this section we describe our multivariate INGARCH model that is designed to handle heavy-tailed behavior in count time series data. We begin by introducing some notations and definitions that are needed followed by a description of our proposed model.

We say that random variable Z follows a generalized inverse-Gaussian (GIG) distribution if its probability density function assumes the form

where \(a>0\), \(b>0\), and \(\alpha \in {\mathbb {R}}\) are parameters, and \({\mathcal {K}}_{\alpha }(z)=\dfrac{1}{2}\displaystyle \int _0^\infty u^{\alpha -1}\exp \{-z(u+u^{-1})/2\}du\) is the second-kind modified Bessel function. We denote this three-parameter distribution by \(Z\sim \text{ GIG }(a,b,\alpha )\). The bi-parameter case when \(a=b=\phi \), which will be considered in this paper, is denoted by \(Z\sim \text{ GIG }(\phi ,\alpha )\). The multivariate Poisson generalized inverse-Gaussian distribution (Stein et al. 1987), here denoted as MPGIG\(_p\), is defined by assuming that \(Y_1,\ldots ,Y_p\) are conditionally independent random variables given \(Z\sim \text{ GIG }(\phi ,\alpha )\), and that \(Y_i|Z\sim \text{ Poisson }(\lambda _iZ)\), for \(i=1,\ldots ,p\). The joint probability mass function of \((Y_1,\ldots ,Y_p)\) is given by

for \(y_1,\ldots ,y_p\in {\mathbb {N}}_0\equiv \{0,1,2,\ldots \}\). We denote \(\textbf{Y}=(Y_1,\ldots ,Y_p)^\top \sim \text{ MPGIG}_p(\varvec{\lambda },\phi ,\alpha )\), with \(\varvec{\lambda }=(\lambda _1,\ldots ,\lambda _p)^\top \). Now define \({\mathcal {R}}_{\alpha ,k}(\phi )=\dfrac{{\mathcal {K}}_{k+\alpha }(\phi )}{{\mathcal {K}}_\alpha (\phi )}\), for \(k\in {\mathbb {N}}\), with \({\mathcal {R}}_{\alpha }(\phi )\equiv {\mathcal {R}}_{\alpha ,1}(\phi )\). The first two cumulants and covariance of the MPGIG\(_p\) distribution can be expressed by \(E(Y_i)=\lambda _i{\mathcal {R}}_{\alpha }(\phi )\), \(\text{ Var }(Y_i)=\lambda _i{\mathcal {R}}_{\alpha }(\phi )+\lambda _i^2\{{\mathcal {R}}_{\alpha ,2}(\phi )-{\mathcal {R}}^2_{\alpha }(\phi )\}\), for \(i=1,\ldots ,p\), and \(\text{ cov }(Y_i,Y_j)=\lambda _i\lambda _j\{{\mathcal {R}}_{\alpha ,2}(\phi )-{\mathcal {R}}^2_{\alpha }(\phi )\}\), for \(i\ne j\).
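The moment formulas and the stochastic representation above can be checked numerically. The following is a minimal sketch, assuming that the GIG density in (1) has the standard form \(f(z)=\dfrac{(a/b)^{\alpha /2}}{2{\mathcal {K}}_{\alpha }(\sqrt{ab})}z^{\alpha -1}\exp \{-(az+b/z)/2\}\), \(z>0\), which agrees with the moment identity quoted in the proof of Proposition 3.1. Under that assumption, \(\text{ GIG }(\phi ,\alpha )\) coincides with SciPy's `geninvgauss` with shape arguments `p = alpha` and `b = phi`. The function names are illustrative and not part of the paper.

```python
import numpy as np
from scipy.special import kv
from scipy.stats import geninvgauss


def bessel_ratio(alpha, phi, k=1):
    """R_{alpha,k}(phi) = K_{alpha+k}(phi) / K_alpha(phi)."""
    return kv(alpha + k, phi) / kv(alpha, phi)


def mpgig_moments(lam, phi, alpha):
    """Mean vector and covariance matrix of MPGIG_p(lam, phi, alpha)."""
    lam = np.asarray(lam, dtype=float)
    r1 = bessel_ratio(alpha, phi, 1)
    r2 = bessel_ratio(alpha, phi, 2)
    mean = lam * r1
    # Off-diagonal entries: lam_i * lam_j * Var(Z), induced by the shared Z.
    cov = np.outer(lam, lam) * (r2 - r1 ** 2)
    # Diagonal entries additionally carry the Poisson term lam_i * R_alpha(phi).
    cov[np.diag_indices_from(cov)] += lam * r1
    return mean, cov


def sample_mpgig(lam, phi, alpha, size, rng):
    """Draw Y | Z ~ Poisson(lam * Z) componentwise, with Z ~ GIG(phi, alpha)."""
    lam = np.asarray(lam, dtype=float)
    # Assumption: geninvgauss(p=alpha, b=phi) matches GIG(phi, alpha) in (1).
    z = geninvgauss.rvs(alpha, phi, size=size, random_state=rng)
    return rng.poisson(z[:, None] * lam[None, :])
```

Because every component shares the same latent \(Z\), the off-diagonal covariance is always positive, which is the source of the contemporaneous correlation in the model.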

In what follows, we use the notation \(\{\textbf{Y}_t\}_{t\ge 1}=\{ ( Y_{1t},\ldots , Y_{pt})\}_{t\ge 1}\) to denote a p-variate time series of counts for defining our multivariate INGARCH model.

Definition 2.1

(\({MPGIG}_p{-INGARCH}\) process) We say that \(\{\textbf{Y}_t\}_{t\ge 1}\) follows an MPGIG\(_p\)-INGARCH process if \(\textbf{Y}_t|{\mathcal {F}}_{t-1}\sim \text{ MPGIG}_p(\varvec{\lambda }_t,\phi ,\alpha )\), with \({\mathcal {F}}_{t-1}=\sigma (\textbf{Y}_{t-1},\ldots , \textbf{Y}_1,\varvec{\lambda }_1)\), and \(\varvec{\nu }_t\equiv \log \varvec{\lambda }_t\) defined componentwise and satisfying the dynamics

where \(\textbf{d}\) is a p dimensional vector with real-valued components, \(\textbf{A}\) and \(\textbf{B}\) are \(p\times p\) real-valued matrices, and \(\textbf{1}_p\) is a p-dimensional vector of ones.

It is worth mentioning that the MPGIG\(_p\)-INGARCH process can accommodate both positive and negative autocorrelations due to the log-linear form assumed in (2). The log-linear link function was previously proposed by Fokianos and Tjøstheim (2009) and Fokianos et al. (2020) for univariate and multivariate count time series cases, respectively. In the latter work, the authors assume that the conditional distribution of each component of \({\textbf {Y}}_t\) follows a univariate Poisson distribution. In contrast, under our setup, the conditional distribution is MPGIG\(_p\), which can handle heavy-tailedness. The random variable Z with density function given in (1) above is latent/unobservable. Going forward, we will assume that the MPGIG\(_p\)-INGARCH model from Definition 2.1 is defined using the log-link function, unless mentioned otherwise.
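To make the data-generating mechanism concrete, the following minimal sketch simulates a path from the process. It assumes that the log-linear dynamics in (2) take the form \(\varvec{\nu }_t=\textbf{d}+\textbf{A}\varvec{\nu }_{t-1}+\textbf{B}\log (\textbf{Y}_{t-1}+\textbf{1}_p)\), as in the log-linear specification of Fokianos et al. (2020); this form is an assumption here. The initial value \(\varvec{\nu }_1=\textbf{0}\) and the function name are illustrative choices.

```python
import numpy as np
from scipy.stats import geninvgauss


def simulate_mpgig_ingarch(d, A, B, phi, alpha, T, seed=0):
    """Simulate T observations; returns counts Y (T x p) and log-intensities nu."""
    rng = np.random.default_rng(seed)
    d = np.asarray(d, dtype=float)
    A = np.asarray(A, dtype=float)
    B = np.asarray(B, dtype=float)
    p = d.size
    nu = np.zeros((T, p))  # nu[0] = 0 is an arbitrary starting value
    Y = np.zeros((T, p), dtype=np.int64)
    # i.i.d. latent GIG(phi, alpha) variables, one per time point.
    z = geninvgauss.rvs(alpha, phi, size=T, random_state=rng)
    Y[0] = rng.poisson(z[0] * np.exp(nu[0]))
    for t in range(1, T):
        # Assumed form of (2): nu_t = d + A nu_{t-1} + B log(Y_{t-1} + 1).
        nu[t] = d + A @ nu[t - 1] + B @ np.log(Y[t - 1] + 1.0)
        Y[t] = rng.poisson(z[t] * np.exp(nu[t]))
    return Y, nu
```

Since the recursion acts on the log scale, negative entries in \(\textbf{A}\) or \(\textbf{B}\) never produce a negative intensity, which is what allows negative autocorrelation.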

The model parameters can be estimated by conditional maximum likelihood method. Denote the parameter vector as \(\varvec{\Theta }=\left( \phi ,\alpha ,\varvec{\theta }\right) ^\top \), where \(\varvec{\theta }=\left( {\textbf {d}},\text{ vec }({\textbf {A}}),\text{ vec }({\textbf {B}})\right) ^\top \). The conditional log-likelihood function assumes the form

The conditional maximum likelihood estimator (CMLE) of \(\varvec{\Theta }\) is given by \(\widehat{\varvec{\Theta }}=\text{ argmax}_{\varvec{\Theta }}\ell (\varvec{\Theta })\). Direct optimization of the conditional likelihood function in (3) is not straightforward due to its complicated form, particularly due to the presence of the modified Bessel functions. Additionally, optimization algorithms are likely to become computationally heavier and more unstable as the number of parameters increases. To overcome these computational difficulties, EM algorithms will be proposed in the following section to perform inference for our multivariate count time series model.
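The role of the Bessel function in the likelihood can be illustrated with a short sketch. It assumes that the joint probability mass function is the one obtained by integrating the Poisson kernels against the \(\text{ GIG }(\phi ,\alpha )\) density, namely \(p(\textbf{y})=\prod _{i=1}^p\dfrac{\lambda _i^{y_i}}{y_i!}\left( \dfrac{\phi }{2\Lambda +\phi }\right) ^{(s+\alpha )/2}\dfrac{{\mathcal {K}}_{s+\alpha }\big (\sqrt{\phi (2\Lambda +\phi )}\big )}{{\mathcal {K}}_{\alpha }(\phi )}\), with \(s=\sum _i y_i\) and \(\Lambda =\sum _i\lambda _i\), and that \(\varvec{\nu }_t\) follows the log-linear recursion with \(\varvec{\nu }_1=\textbf{0}\). Both are assumptions made for illustration, and the function names are hypothetical.

```python
import numpy as np
from scipy.special import gammaln, kve


def log_bessel_k(order, x):
    """log K_order(x), using the scaled function kve = kv * exp(x)."""
    return np.log(kve(order, x)) - x


def mpgig_logpmf(y, lam, phi, alpha):
    """Log pmf of MPGIG_p(lam, phi, alpha) at the count vector y."""
    y = np.asarray(y, dtype=float)
    lam = np.asarray(lam, dtype=float)
    s = y.sum()
    a = 2.0 * lam.sum() + phi
    # The Bessel order s + alpha grows with the total count; for very large
    # counts kve can overflow, which is one source of numerical instability.
    return (np.sum(y * np.log(lam) - gammaln(y + 1.0))
            + 0.5 * (s + alpha) * (np.log(phi) - np.log(a))
            + log_bessel_k(s + alpha, np.sqrt(phi * a))
            - log_bessel_k(alpha, phi))


def conditional_loglik(Y, d, A, B, phi, alpha):
    """Observed conditional log-likelihood, summed over t = 2, ..., T."""
    Y = np.asarray(Y, dtype=float)
    d = np.asarray(d, dtype=float)
    A = np.asarray(A, dtype=float)
    B = np.asarray(B, dtype=float)
    nu = np.zeros(Y.shape[1])  # assumed starting value nu_1 = 0
    ll = 0.0
    for t in range(1, Y.shape[0]):
        nu = d + A @ nu + B @ np.log(Y[t - 1] + 1.0)
        ll += mpgig_logpmf(Y[t], np.exp(nu), phi, alpha)
    return ll
```

The order of the Bessel function in the numerator depends on the sum of all \(p\) counts at time \(t\), so it tends to grow with both the size of the counts and the dimension.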

3 EM algorithm inference

Here we describe estimation methods for the MPGIG\(_p\)-INGARCH model from Sect. 2. The direct optimization of the observed conditional log-likelihood from the MPGIG\(_p\)-INGARCH model tends to be unstable and computationally expensive due to the presence of a large number of parameters and its complicated form. To overcome this problem, two variants of the EM-algorithm are outlined, and these are computationally feasible techniques meant to handle parameter estimation in the low and high-dimensional settings.

Let \(\{ \textbf{Y}_t \}_{t=1}^{T} = \{ ( Y_{1t} , \ldots , Y_{pt} ) \}_{t=1}^{T} \) denote a sample of size T from the MPGIG\(_p\)-INGARCH model, and let \(\textbf{y}_t\) be a realization of \(\textbf{Y}_t\) at time t. As before, \(\varvec{\Theta }\) is the parameter vector. The complete data is composed of \(\{{(\textbf{Y}_t,Z_t})\}_{t=1}^T\), where \(Z_1,\ldots ,Z_T\) are i.i.d. latent \(\text{ GIG }(\phi ,\alpha )\) random variables obtained from the stochastic representation of a multivariate Poisson GIG distribution discussed at the beginning of Sect. 2. Then, the conditional complete data likelihood can be written as

where \(p({\varvec{y}}_t,z_t\mid {\mathcal {F}}_{t-1})\) denotes the joint density function of \((\textbf{Y}_t,Z_t)\) given \({\mathcal {F}}_{t-1}\), for \(t\ge 2\). Next, by taking the logarithm of \({\mathcal {L}}_C\) from (4), we have the complete data log-likelihood, denoted by \(\ell _{C}(\varvec{\Theta })\), written as

Observe that \(\ell _C({\varvec{\Theta }})\) is decomposed as a sum of a function of \((\phi ,\alpha )\), plus a function of \(\varvec{\theta }\), which makes the optimization procedure easier. We now discuss the E and M steps associated with the EM algorithm.

E-step

An EM-type algorithm can be derived by replacing the t-th term from (5), denoted as \(\ell _t(\varvec{\Theta })\), by a conditional expectation for all t. More specifically, the Q-function to be optimized is given by

where the argument \(\varvec{\Theta }^{(r)}\) means that the conditional expectation is evaluated at the EM estimate at the rth iteration of the algorithm, and the subscript \({\mathcal {F}}_{t-1}\) means that the conditional expectation is taken with respect to the conditional density function \(g(z_t|\textbf{y}_t)=g(\textbf{y}_t,z_t)/p(\textbf{y}_t)\), where \(g(\textbf{y}_t,z_t)\equiv p(\textbf{y}_t,z_t|{\mathcal {F}}_{t-1})\) and \(p(\textbf{y}_t)\equiv p(\textbf{y}_t|{\mathcal {F}}_{t-1})\) is the joint probability function of \(\textbf{Y}_t\) given \({\mathcal {F}}_{t-1}\). The conditional expectations involved in the computation of (7) are derived in the next proposition.

Proposition 3.1

Suppose that \(\left\{ \textbf{Y}_t\right\} _{t\ge 1}\) is a \(\text{ MPGIG}_p\)-INGARCH process with associated latent GIG random variables \(\{Z_t\}_{t\ge 1}\). Then, \(g(z_t|\textbf{y}_t)\) is a density function from a three-parameter \(\text {GIG}\left( 2\displaystyle \sum \nolimits _{i=1}^{p}\lambda _{it}+\phi ,\phi ,\displaystyle \sum \nolimits _{i=1}^{p}y_{it}+\alpha \right) \) distribution. Furthermore, the conditional expectations involved in (7) are given by

Proof of Proposition 3.1

In what follows, the dependence of quantities on \({\mathcal {F}}_{t-1}\) is omitted for simplicity of notation. We have that the conditional density function \(g(z_t|{\varvec{y}}_t)\) is given by

which is the kernel of a \(\text {GIG}\left( 2\displaystyle \sum _{i=1}^{p}\lambda _{it}+\phi ,\phi ,\displaystyle \sum _{i=1}^{p}y_{it}+\alpha \right) \) density function; see (1).

Now, the conditional expectations stated in the proposition are obtained by using the fact that if \(X\sim \text{ GIG }(a,b,\alpha )\), then \(E(X^u)=(b/a)^{u/2}\dfrac{{\mathcal {K}}_{\alpha +u}(\sqrt{ab})}{{\mathcal {K}}_{\alpha }(\sqrt{ab})}\), for \(u\in {\mathbb {R}}\), and \(E(\log X)=\dfrac{d}{d\alpha }\log {\mathcal {K}}_{\alpha }(\sqrt{ab})+\dfrac{1}{2}\log (b/a)\); for instance, see Jørgensen (1982). \(\square \)

Using Proposition 3.1, the Q-function in (7) can be expressed by

where

and \(\zeta _t^{(r)}\), \(\kappa _t^{(r)}\) and \(\xi _t^{(r)}\) being the conditional expectations from Proposition 3.1 evaluated at \(\varvec{\Theta }=\varvec{\Theta }^{(r)}\).

It must be noted that the conditional expectation term \(\xi _t\) is not in closed form due to the derivative of the Bessel function with respect to its order, which makes it hard to evaluate the Q-function. Even the other conditional expectations \(\zeta _t\) and \(\kappa _t\) might be difficult to compute numerically in some cases due to a possible instability of the Bessel function. To solve these issues, we will employ a Monte Carlo EM (MCEM) method where the conditional expectations in the E-step are calculated via a Monte Carlo approximation. More specifically, for each \(t=1,2,\cdots ,T\), we first generate m random draws \(\left( Z_{t}^{(1)},Z_{t}^{(2)},\cdots ,Z_{t}^{(m)} \right) \) from the conditional distribution of \(Z_t\) given \(\textbf{Y}_t\) (see Proposition 3.1), and then we approximate the conditional expectations \(\zeta _t\), \(\kappa _t\) and \(\xi _t\) respectively by \({\widehat{\zeta }}_t^{(r)}=\dfrac{1}{m}\sum \nolimits _{i=1}^{m}Z_{t}^{(i)}\), \({\widehat{\kappa }}_t^{(r)}=\dfrac{1}{m}\sum \nolimits _{i=1}^{m}\frac{1}{Z_{t}^{(i)}}\), and \({\widehat{\xi }}_t^{(r)}=\dfrac{1}{m}\sum \nolimits _{i=1}^{m}\log Z_{t}^{(i)} \). More details on MCEM can be found in Chan and Ledolter (1995).
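The Monte Carlo E-step can be sketched as follows for a single time point. The sketch uses the posterior \(\text {GIG}(a,b,\nu )\) from Proposition 3.1 with \(a=2\sum _i\lambda _{it}+\phi \), \(b=\phi \), \(\nu =\sum _i y_{it}+\alpha \), and relies on the scaling property that \(X=\sqrt{b/a}\,W\) follows \(\text {GIG}(a,b,\nu )\) when \(W\) follows SciPy's `geninvgauss` with shape arguments `p = nu` and `b = sqrt(a*b)`; this mapping assumes the standard three-parameter density. The closed-form values use the moment identities quoted in the proof, with the order derivative in \(\xi _t\) replaced by a central finite difference. Function names and the step size `h` are illustrative.

```python
import numpy as np
from scipy.special import kve
from scipy.stats import geninvgauss


def posterior_params(y_t, lam_t, phi, alpha):
    """Parameters (a, b, order) of the GIG law of Z_t given Y_t = y_t."""
    return 2.0 * np.sum(lam_t) + phi, phi, np.sum(y_t) + alpha


def estep_closed_form(a, b, order, h=1e-5):
    """E(Z), E(1/Z), E(log Z) for GIG(a, b, order) via Bessel functions."""
    omega = np.sqrt(a * b)

    def log_k(v):
        # The exp(omega) scaling in kve cancels in ratios and in d/dv.
        return np.log(kve(v, omega))

    zeta = np.sqrt(b / a) * np.exp(log_k(order + 1.0) - log_k(order))
    kappa = np.sqrt(a / b) * np.exp(log_k(order - 1.0) - log_k(order))
    # Derivative with respect to the order, approximated numerically.
    xi = (log_k(order + h) - log_k(order - h)) / (2.0 * h) + 0.5 * np.log(b / a)
    return zeta, kappa, xi


def estep_monte_carlo(a, b, order, m, rng):
    """Monte Carlo approximations of the same three expectations."""
    omega = np.sqrt(a * b)
    z = np.sqrt(b / a) * geninvgauss.rvs(order, omega, size=m, random_state=rng)
    return z.mean(), (1.0 / z).mean(), np.log(z).mean()
```

For moderate counts the two routes agree closely; the Monte Carlo route avoids evaluating Bessel functions of large order altogether.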

M-step

Since the objective function \(Q(\varvec{\Theta };\varvec{\Theta }^{(r)})\) is decomposed into \(Q_1(\phi ,\alpha ;\varvec{\Theta }^{(r)})\) and \(Q_2(\varvec{\theta };\varvec{\Theta }^{(r)})\), maximization of \(Q(\varvec{\Theta };\varvec{\Theta }^{(r)})\) can be conducted separately as follows:

where the superscript \((r+1)\) denotes the EM-estimate of parameters at the \(r+1\) iteration. Due to the lack of closed-form updating formula, a numerical optimization is needed for both maximizations. Maximizing \(Q_1(\phi ,\alpha )\) is implemented with typical numerical methods such as the BFGS algorithm using the optim function in R, but maximizing \(Q_2(\varvec{\theta })\) can seriously increase the computational burden since many parameters are to be updated. To alleviate this problem, we borrow the idea of the generalized EM (GEM) algorithm (Dempster et al. 1977), in which \(\varvec{\theta }^{(r+1)}\) is selected so that

The GEM algorithm is attractive when the full maximization of \(Q_2(\varvec{\theta };\varvec{\Theta }^{(r)})\) with respect to \(\varvec{\theta }\) is computationally expensive. As a way to achieve (9), Lange (1995) suggested the EM gradient algorithm where a nested loop in the M-step is simply replaced by one iteration of Newton’s method. Because \(Q_1 (\phi ,\alpha ;\varvec{\Theta }^{(r)})\) is just of two dimensions and its derivative with respect to \(\alpha \) is not in closed form, we only apply the one-step update to \(Q_2 (\varvec{\theta };\varvec{\Theta }^{(r)})\). In other words, we have that

where \({\textbf {S}}(\varvec{\theta })=\frac{\partial Q_2 (\varvec{\theta })}{\partial \varvec{\theta }}=\sum \nolimits _{t=1}^T \frac{\partial \varvec{\nu }_t^{\top }}{\partial \varvec{\theta }}\big ({\textbf {Y}}_t-\zeta _t^{(r)} \cdot \exp \big (\varvec{\nu }_t \left( \varvec{\theta }\right) \big ) \big )\) is the gradient of \(Q_2 (\varvec{\theta })\) and \({\textbf {H}}(\varvec{\theta })\) is its associated Hessian matrix. In practice, we use the negative outer product of \({\textbf {S}}(\varvec{\theta })\) as \({\textbf {H}}(\varvec{\theta })\) instead of the Hessian matrix.

The technical results connecting the EM gradient algorithm with the EM and the GEM algorithms, as discussed in Lange (1995), are not guaranteed to hold in our proposed algorithm. However, we empirically confirmed through extensive simulation studies that the monotone increase of the observed log-likelihood generally holds, and the computational feasibility is also improved significantly. For a finite sample illustration of this point, please see Fig. 2 in Sect. 4. We refer to our proposed algorithm as the generalized Monte Carlo EM (GMCEM) from now on. We believe that the technical results concerning the log-likelihood’s monotone increasing property deserve further research and could be addressed following ideas from Caffo et al. (2005). The implementation of the MCEM and GMCEM algorithms is summarized in Algorithm 1.
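The two-dimensional maximization over \((\phi ,\alpha )\) can be sketched as follows. The sketch assumes that, up to terms free of \((\phi ,\alpha )\), \(Q_1(\phi ,\alpha ;\varvec{\Theta }^{(r)})=\sum _t\big [(\alpha -1)\xi _t^{(r)}-\tfrac{\phi }{2}\big (\zeta _t^{(r)}+\kappa _t^{(r)}\big )-\log \{2{\mathcal {K}}_{\alpha }(\phi )\}\big ]\), which is what the expected \(\text{ GIG }(\phi ,\alpha )\) log-density gives under the standard form of the density. It uses SciPy's BFGS routine in place of R's optim, and optimizes over \(\log \phi \) to keep \(\phi \) positive; these are illustrative choices, not the authors' implementation.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import kve


def q1(phi, alpha, zeta, kappa, xi):
    """Assumed Q_1: expected GIG(phi, alpha) log-density summed over t."""
    log_k = np.log(kve(alpha, phi)) - phi  # log K_alpha(phi)
    return np.sum((alpha - 1.0) * xi - 0.5 * phi * (zeta + kappa)
                  - np.log(2.0) - log_k)


def maximize_q1(zeta, kappa, xi, phi0, alpha0):
    """Maximize Q_1 over (phi, alpha) with BFGS and numerical gradients."""
    def neg_q1(par):
        return -q1(np.exp(par[0]), par[1], zeta, kappa, xi)

    start = np.array([np.log(phi0), alpha0])
    res = minimize(neg_q1, x0=start, method="BFGS")
    return float(np.exp(res.x[0])), float(res.x[1]), res
```

In an actual MCEM iteration the inputs `zeta`, `kappa` and `xi` would be the length-\(T\) arrays of approximated conditional expectations from the E-step.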

It is well known that the EM algorithm does not automatically yield standard errors for estimates. Consequently, several methods have been proposed in the literature to obtain them, such as Louis’s method (Louis 1982) and the supplemented EM algorithm (Meng and Rubin 1991). Taking advantage of the fully parametric nature of our model, we employ a parametric bootstrapping technique to quantify uncertainty. Algorithm 2 outlines the implementation of a parametric bootstrap to obtain standard errors of EM estimates for our model. A simpler and faster alternative is to use a finite-difference method to evaluate the Hessian matrix of the observed log-likelihood function, enabling the construction of confidence intervals through a normal approximation. A simulation experiment to study the finite sample performance of these two methods using empirical coverage probabilities is presented in the Supplementary Material.
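The finite-difference alternative can be sketched generically. The code below approximates the Hessian of any log-likelihood function by central differences and converts it into standard errors and normal-approximation intervals. The step size `h`, the 1.96 quantile, and the function names are illustrative assumptions; the paper does not specify them.

```python
import numpy as np


def numerical_hessian(f, x, h=1e-4):
    """Central finite-difference Hessian of a scalar function f at x."""
    x = np.asarray(x, dtype=float)
    k = x.size
    H = np.zeros((k, k))
    for i in range(k):
        for j in range(i, k):
            ei = np.zeros(k)
            ej = np.zeros(k)
            ei[i] = h
            ej[j] = h
            val = (f(x + ei + ej) - f(x + ei - ej)
                   - f(x - ei + ej) + f(x - ei - ej)) / (4.0 * h * h)
            H[i, j] = H[j, i] = val
    return H


def wald_intervals(loglik, theta_hat, z=1.96):
    """Standard errors and normal-approximation confidence intervals."""
    theta_hat = np.asarray(theta_hat, dtype=float)
    # Observed information = minus the Hessian of the log-likelihood.
    cov = np.linalg.inv(-numerical_hessian(loglik, theta_hat))
    se = np.sqrt(np.diag(cov))
    return theta_hat - z * se, theta_hat + z * se, se
```

The approach is only meaningful when the observed information is positive definite at the estimate, which should be checked before inverting.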

3.1 Hybrid estimation method (H-GMCEM)

When the dimension p of the observed count time series increases, empirical evidence suggests that the MCEM/GMCEM algorithms described in Algorithm 1 have significant computational burden. To address this problem, we propose a hybrid version of the GMCEM algorithm referred to as H-GMCEM. The idea is to combine the quasi-maximum likelihood estimation technique and the GMCEM algorithm, and this is seen to reduce the computational burden.

For the quasi-maximum likelihood estimation part of this approach, we only assume Poisson marginals without specifying the contemporaneous correlation structure, which is the same approach taken in Fokianos et al. (2020). More precisely, we have that

for \(t=2,\cdots ,T\), and \(i=1,\cdots , p\). It should be noted that \({\textbf {d}}^* \) from (10) is not the same as \({\textbf {d}}\) from Definition 2.1. Since \(E(Y_{it}|{\mathcal {F}}_{t-1})=\lambda _{it}{\mathcal {R}}_\alpha (\phi )\) under our multivariate model, we obtain the following relationship \({\textbf {d}}^*={\textbf {d}}+\log {\mathcal {R}}_\alpha (\phi )(\textbf{I}-{\textbf {A}}){\varvec{1}}_p\). The quasi-likelihood and quasi-log-likelihood functions in terms of the parameter vector \(\varvec{\theta }^*\equiv ({\textbf {d}}^*,\text{ vec }({\textbf {A}}),\text{ vec }({\textbf {B}}))^\top \) are given by

where the \(\lambda _{it}\)’s assume the form given in (10). In the H-GMCEM algorithm, the parameter matrices \({\textbf {A}}\) and \({\textbf {B}}\) from Definition 2.1 are first estimated by maximizing the quasi log-likelihood in (11). We denote these quasi-maximum likelihood estimates of \(({\textbf {A}},{\textbf {B}})\) by \((\widetilde{{\textbf {A}}},\widetilde{{\textbf {B}}})\). The other parameters are then estimated by the GMCEM method from Algorithm 1 by plugging \((\widetilde{{\textbf {A}}},\widetilde{{\textbf {B}}})\) into \(({\textbf {A}},{\textbf {B}})\); see Algorithm 3. Finite sample comparisons based on the performances and computing times of the GMCEM and the H-GMCEM estimators are discussed in Sect. 4, especially with regard to increasing dimension p.
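The quasi-likelihood stage can be sketched as follows. The code assumes that (10) specifies Poisson marginals with log-mean recursion \(\varvec{\nu }^*_t={\textbf {d}}^*+{\textbf {A}}\varvec{\nu }^*_{t-1}+{\textbf {B}}\log (\textbf{Y}_{t-1}+\textbf{1}_p)\), and that (11) is the usual Poisson quasi-log-likelihood \(\sum _t\sum _i\{y_{it}\nu ^*_{it}-\exp (\nu ^*_{it})\}\) up to an additive constant; both forms, the zero starting value, and the function names are assumptions. The first function evaluates the stated link between \({\textbf {d}}^*\) and \({\textbf {d}}\).

```python
import numpy as np
from scipy.special import kv


def d_star(d, A, phi, alpha):
    """d* = d + log R_alpha(phi) * (I - A) 1_p."""
    d = np.asarray(d, dtype=float)
    A = np.asarray(A, dtype=float)
    log_r = np.log(kv(alpha + 1.0, phi) / kv(alpha, phi))
    p = d.size
    return d + log_r * (np.eye(p) - A) @ np.ones(p)


def poisson_quasi_loglik(Y, d_st, A, B):
    """Poisson quasi-log-likelihood (constant terms dropped), t = 2, ..., T."""
    Y = np.asarray(Y, dtype=float)
    d_st = np.asarray(d_st, dtype=float)
    A = np.asarray(A, dtype=float)
    B = np.asarray(B, dtype=float)
    nu = np.zeros(Y.shape[1])  # assumed starting value for the recursion
    ql = 0.0
    for t in range(1, Y.shape[0]):
        nu = d_st + A @ nu + B @ np.log(Y[t - 1] + 1.0)
        ql += np.sum(Y[t] * nu - np.exp(nu))
    return ql
```

This objective involves no Bessel functions, so it can be passed directly to a general-purpose optimizer to obtain the estimates of \({\textbf {A}}\) and \({\textbf {B}}\) used in the second stage.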

4 Simulation study

In this section, we conduct extensive Monte Carlo simulation studies to examine the finite-sample performance of the GMCEM and H-GMCEM algorithms proposed in Sect. 3. Attempts were made to compare the results from the GMCEM method and the direct optimization of the conditional log-likelihood, but the latter was computationally expensive, especially for dimensions higher than three.

For each simulation scheme described below, 500 replications were generated and the corresponding boxplots of the estimates are presented. Tables summarizing the empirical absolute bias (AB) and root mean squared error (RMSE) of the estimators are also presented for all simulation schemes at increasing sample sizes. For Schemes 1-3, sample sizes \(T=200,500,1000\) are considered, and for Schemes 4-6, sample sizes \(T=500,1000\) are taken up. The adopted stopping criterion for the EM-algorithms was \(\max \limits _{i}|\varvec{\Theta }_i^{(r+1)}-\varvec{\Theta }_i^{(r)}|<0.001\), with the maximum number of iterations set to be 500. We generate realizations from the MPGIG\(_p\)-INGARCH model under six schemes specified below.
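The stopping rule and the two summary measures can be written compactly. In the sketch below, the absolute bias is taken to be the absolute difference between the average estimate and the true value; this definition is an assumption, since the text does not spell it out. Function names are illustrative.

```python
import numpy as np


def em_converged(theta_new, theta_old, tol=1e-3):
    """Stopping rule: largest absolute parameter change below tol."""
    diff = np.abs(np.asarray(theta_new, dtype=float)
                  - np.asarray(theta_old, dtype=float))
    return float(np.max(diff)) < tol


def abs_bias_and_rmse(estimates, truth):
    """Absolute bias and RMSE per parameter over Monte Carlo replications.

    estimates has shape (replications, parameters).
    """
    est = np.asarray(estimates, dtype=float)
    truth = np.asarray(truth, dtype=float)
    ab = np.abs(est.mean(axis=0) - truth)  # assumed definition of AB
    rmse = np.sqrt(np.mean((est - truth) ** 2, axis=0))
    return ab, rmse
```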

- Scheme 1 (\(p=2\)): \(\phi =0.5\), \(\alpha =1.5\), \(\textbf{d} = \begin{bmatrix} 0 \\ 0 \end{bmatrix}\), \({\textbf{A}} = \begin{bmatrix} 0.3 & 0 \\ 0 & 0.25 \end{bmatrix}\), \({\textbf{B}}=\begin{bmatrix} 0.4 & 0\\ 0 & 0.3 \end{bmatrix}\).

- Scheme 2 (\(p=2\)): \(\phi =0.5\), \(\alpha =1.5\), \(\textbf{d} = \begin{bmatrix} 0 \\ 1 \end{bmatrix}\), \({\textbf{A}} = \begin{bmatrix} 0.3 & 0 \\ 0 & 0.25 \end{bmatrix}\), \({\textbf{B}}=\begin{bmatrix} 0.4 & 0\\ 0 & 0.3 \end{bmatrix}\).

- Scheme 3 (\(p=2\)): \(\phi =0.5\), \(\alpha =-1.5\), \(\textbf{d} = \begin{bmatrix} 1 \\ 1 \end{bmatrix}\), \({\textbf{A}} = \begin{bmatrix} 0.3 & 0 \\ 0 & 0.25 \end{bmatrix}\), \({\textbf{B}}=\begin{bmatrix} 0.4 & 0\\ 0 & 0.3 \end{bmatrix}\).

- Scheme 4 (\(p=4\)): \(\phi =0.5\), \(\alpha =1.5\), \(\textbf{d} = \begin{bmatrix} 0.5 \\ 0.5\\ 1\\ 0.5 \end{bmatrix}\), \({\textbf{A}} = \begin{bmatrix} 0.35 & 0 & 0 & 0\\ 0 & -0.3 & 0 & 0\\ 0 & 0 & 0.4 & 0\\ 0 & 0 & 0 & -0.3 \end{bmatrix}\), \({\textbf{B}}=\begin{bmatrix} -0.3 & 0 & 0 & 0\\ 0 & 0.3 & 0 & 0\\ 0 & 0 & -0.3 & 0\\ 0 & 0 & 0 & 0.4 \end{bmatrix}\).

- Scheme 5 (\(p=4\)): \(\phi =0.5\), \(\alpha =1.5\), \(\textbf{d} = \begin{bmatrix} 0.5 \\ 0.5\\ 1\\ 0.5 \end{bmatrix}\), \({\textbf{A}} = \begin{bmatrix} 0.35 & -0.2 & 0 & 0\\ 0 & -0.3 & 0 & 0\\ 0 & 0 & 0.4 & 0\\ 0 & 0 & 0.2 & -0.3 \end{bmatrix}\), \({\textbf{B}}=\begin{bmatrix} -0.3 & 0.2 & 0 & 0\\ 0 & 0.3 & 0 & 0\\ 0 & 0 & -0.3 & 0\\ 0 & 0 & -0.25 & 0.4 \end{bmatrix}\).

- Scheme 6 (\(p=10\)): \(\phi =0.5\), \(\alpha =1.5\), \({\textbf {d}}=(0,0,0,0,1,0,0.8,0.5, 1,0.8)^\top \), \({\textbf{A}}=\text{ diag }(0.30,0.20,0.35,0.40,-0.20,0.30,-0.15, 0.15,-0.25,-0.10)\), \({\textbf{B}}=\text{ diag }(0.30,0.35,0.30,0.20,-0.20,0.40,-0.20, 0.25,-0.15,-0.20)\).

In Tables 1, 2, 3, 4, 5 and 6, model performance results are summarized in terms of absolute bias (AB) and root mean squared error (RMSE) computed over 500 replications. In Schemes 4 and 5, we additionally consider a constrained version of the GMCEM algorithm by assuming that the matrices \({\textbf{A}}\) and \({\textbf{B}}\) are known to be diagonal (Scheme 4) and tri-diagonal (Scheme 5). In Scheme 6 with dimension \(p=10\), the H-GMCEM algorithm can be seen as a computationally faster alternative to the GMCEM approach.

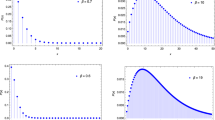

From the results in Tables 1, 2, 3, 4, 5 and 6, it is evident that the absolute bias and RMSE of the GMCEM-based estimators decrease as the sample size increases in all simulation schemes, which empirically indicates consistency. In Fig. 1, we present boxplots of the estimates obtained with the proposed GMCEM method for Scheme 1. Here again, one can witness the decrease in bias and variance with increasing sample size. Similar boxplots for all other simulation schemes are presented in the Supplementary Material.

Another critical issue is the running time for estimating the parameters, since the number of parameters grows at the order of \(p^2\), as mentioned earlier. To this end, in Table 7, we report a summary of computing times for each simulation scheme. For Schemes 1–3, it takes less than a minute in most cases to complete one repetition. When it comes to the larger dimensional cases, i.e., Schemes 4 to 6, it is seen that placing a constraint on the matrices \({\textbf{A}}\) and \({\textbf{B}}\) greatly reduces the computational burden, as expected. In Scheme 6, the improvement in the running time of the H-GMCEM method when compared to the GMCEM method is evident.

Finally, in all our simulation schemes, the observed log-likelihood generally tends to monotonically increase with progression of iterations in our GMCEM and H-GMCEM algorithms. This behavior gives us empirical evidence that the proposed GMCEM algorithm satisfies the GEM principle given in (9). For a finite-sample illustration of this property, a log-likelihood function trajectory based on one realization under Scheme 1 is provided in Fig. 2.

5 Real data applications

In this section we present two applications of the proposed method, one in modeling monthly counts of drug-related crimes in Australia, and the other in modeling high-frequency financial transactions data involving multiple companies from the U.S. financial market. In both applications, results concerning model order selection, parameter estimation and model adequacy checks are discussed and presented.

5.1 Application to modeling monthly crime data

We begin with an application of the proposed method in modeling monthly number of drug-related crimes in the state of New South Wales (NSW), Australia. Model adequacy checks are done using probability integral transformation (PIT) plots, and comparisons with the existing multivariate Poisson-INGARCH model by Fokianos et al. (2020) are addressed.

We consider monthly counts of cannabis possession-related offenses in the Greater Newcastle (GNC) and Mid North Coast (MNC) regions of NSW from January 1995 to December 2011 (footnote 3). This yields a bivariate count time series with a total of 204 observations, and a plot of this series is given in Fig. 3. Also, Fig. 5 provides a geographical plot indicating the two Australian regions MNC and GNC. For each of the two count time series, we inspect the presence of heavy-tailed behavior using the tail index (TI) (Qian et al. 2020) defined as \(\text{ TI }={\widehat{\gamma }}-{\widehat{\gamma }}_{nb}\), where \({\widehat{\gamma }}_{nb} = \dfrac{2{\widehat{\sigma }}^2-{\widehat{\mu }}}{{\widehat{\mu }}{\widehat{\sigma }}}\) is the index with respect to the negative binomial distribution, and \({\widehat{\gamma }}\), \({\widehat{\sigma }}^2\), and \({\widehat{\mu }}\) represent the sample skewness, variance, and mean, respectively. A positive value of \(\text{ TI }\) indicates a heavier tail compared to the negative binomial distribution. The estimated tail index values for the two count time series are 0.193 (MNC) and 0.090 (GNC), which points to some evidence of heavy-tailedness in this dataset. The sample autocorrelation function (ACF) plots, provided in Fig. 4, indicate that the observed series of counts in both regions involve significant temporal correlations along with a hint of seasonality with a period of roughly 12 months. Further, the cross-correlation function (CCF) plot at the bottom of Fig. 4 shows the presence of serial and contemporaneous cross-correlation between the two series. Thus, a multivariate analysis is required rather than two separate univariate analyses.
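The tail index defined above is straightforward to compute from a univariate count series. The following Python sketch is an illustration only and is not the code used for the analysis; it assumes moment estimators with divisor \(n\) for both the variance and the skewness, which may differ from the convention behind the reported values, and the function name is hypothetical.

```python
import math


def tail_index(counts):
    """TI = sample skewness minus the skewness implied by a negative binomial
    distribution with the same mean and variance."""
    n = len(counts)
    mu = sum(counts) / n
    # Assumption: central moments use divisor n (not n - 1).
    m2 = sum((y - mu) ** 2 for y in counts) / n
    m3 = sum((y - mu) ** 3 for y in counts) / n
    if mu <= 0 or m2 <= 0:
        raise ValueError("the series needs a positive mean and variance")
    skewness = m3 / m2 ** 1.5
    gamma_nb = (2 * m2 - mu) / (mu * math.sqrt(m2))
    return skewness - gamma_nb


# Tiny hypothetical series; a positive value would suggest a tail heavier
# than that of the negative binomial distribution.
ti = tail_index([0, 0, 0, 4])
```

In a multivariate setting the function would simply be applied to each component series in turn, as is done for the MNC and GNC counts.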

To adjust for the annual seasonal effect, the MPGIG\(_p\)-INGARCH model in Definition 2.1 is extended to include additional time lags into the mean equation from (2), which leads to the following formulation:

where \(I_1\) and \(I_2\) are index sets of time lags for past means and past observations, respectively. The ACF plots in Fig. 4 indicate seasonality with a period of approximately 12 months, and this prompts the inclusion of a time lag of 12 months in the mean equation in (12).

For the sake of comparison, we also consider the multivariate negative binomial INGARCH (denoted by MNB\(_p\)-INGARCH) process, which is derived by assuming that the latent mixing variable Z follows a Gamma distribution. This novel MNB\(_p\)-INGARCH model, capable of accommodating moderately overdispersed counts, fits within our proposed latent mixing variable framework by replacing the GIG distribution in Definition 2.1 with a Gamma distribution with mean 1 and variance \(\phi ^{-1}\).
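To illustrate the latent mixing idea, the Python sketch below draws count vectors whose components share one Gamma variable with mean 1 and variance \(\phi ^{-1}\). It assumes the usual mixed Poisson construction, in which the components are conditionally independent Poisson with means \(Z\lambda _i\), and it keeps the means fixed rather than following the INGARCH recursion, so it is not a simulator for the full process. All function names are hypothetical.

```python
import math
import random


def poisson_draw(rate, rng):
    """One Poisson draw by Knuth's multiplication method (fine for small rates)."""
    limit = math.exp(-rate)
    k, prod = 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def simulate_gamma_mixed_poisson(means, phi, n, seed=0):
    """Draw n count vectors sharing a latent Gamma mixing variable."""
    rng = random.Random(seed)
    draws = []
    for _ in range(n):
        # Shape phi and scale 1/phi give mean 1 and variance 1/phi.
        z = rng.gammavariate(phi, 1.0 / phi)
        draws.append([poisson_draw(z * m, rng) for m in means])
    return draws


draws = simulate_gamma_mixed_poisson([3.0, 5.0], phi=2.0, n=5000, seed=1)
first = [d[0] for d in draws]
mean_first = sum(first) / len(first)
var_first = sum((y - mean_first) ** 2 for y in first) / len(first)
```

Under these assumptions each margin is negative binomial with variance \(\lambda _i+\lambda _i^2/\phi \), so `var_first` should exceed `mean_first`, and the shared variable induces positive contemporaneous correlation between the components.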

As indicated in Table 8, under the MPGIG\(_2\)-INGARCH setup, \(I_1=\emptyset \) and \(I_2=\{1,12\}\) in the mean equation in (12) is selected as the final model based on both the Akaike information criterion (AIC) and the Bayesian information criterion (BIC). Similarly, under the MNB\(_2\)-INGARCH setup, \(I_1=\emptyset \) and \(I_2=\{1,12\}\) in the mean equation in (12) is selected as the final model based on both AIC and BIC. In Table 9, we present the results of the fitted MPGIG\(_2\)-INGARCH model; the parameter estimates were obtained using the MCEM technique described in Algorithm 1. The standard errors were computed using the parametric bootstrap approach described in Algorithm 2 with 500 bootstrap replications. The bootstrap distributions of the various parameters are presented using the histograms in Fig. 6. Similar estimation and standard error results for the MNB\(_2\)-INGARCH model are provided in the Supplementary Material.
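The general logic of a parametric bootstrap standard error can be sketched as follows. This is a generic illustration in Python and not a transcription of Algorithm 2: the count model is replaced by a toy i.i.d. Poisson model, and the names `parametric_bootstrap_se`, `simulate_poisson` and `fit_poisson` are hypothetical stand-ins for the model simulator and the MCEM fitting routine.

```python
import math
import random
import statistics


def parametric_bootstrap_se(theta_hat, simulate, fit, n_boot=500, seed=0):
    """Standard error of an estimator via the parametric bootstrap.

    simulate(theta, rng) draws one synthetic dataset from the model at theta;
    fit(data) returns the estimate computed from a dataset.
    """
    rng = random.Random(seed)
    boot_estimates = [fit(simulate(theta_hat, rng)) for _ in range(n_boot)]
    return statistics.stdev(boot_estimates), boot_estimates


# Toy stand-in for the count model: i.i.d. Poisson counts with unknown rate.
def simulate_poisson(rate, rng, n=200):
    sample = []
    for _ in range(n):
        # Knuth's multiplication method for one Poisson draw.
        limit, k, prod = math.exp(-rate), 0, rng.random()
        while prod > limit:
            k += 1
            prod *= rng.random()
        sample.append(k)
    return sample


def fit_poisson(sample):
    return sum(sample) / len(sample)  # maximum likelihood estimate of the rate


se, boot = parametric_bootstrap_se(4.0, simulate_poisson, fit_poisson)
```

The returned list of bootstrap estimates can also be used to draw histograms or to form percentile confidence intervals.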

ACF and PACF plots of Pearson residuals from the fitted model are presented in Fig. 7 to assess the goodness-of-fit. At each time lag greater than 0, both the auto- and cross-correlations fall within the confidence intervals, indicating that the fitted mean process adequately accounts for the temporal dependence structure. For checking the contemporaneous correlation structure, a \(95\%\) confidence interval for the contemporaneous correlation \(\rho \) was computed using the parametric bootstrap technique. The estimated \({\widehat{\rho }}\) (=0.577) falls well within the confidence interval of (0.533,0.738), which further supports the selected model.
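A residual autocorrelation check of this kind can be sketched in a few lines of Python. The snippet assumes the Pearson residuals have already been computed from the fitted conditional means and variances, and it uses the common white-noise band \(\pm 1.96/\sqrt{T}\), which may not coincide with the intervals drawn in the figures; the function names are hypothetical.

```python
import math


def sample_acf(x, max_lag):
    """Sample autocorrelations of x at lags 1, ..., max_lag."""
    n = len(x)
    mean = sum(x) / n
    c0 = sum((v - mean) ** 2 for v in x)
    return [
        sum((x[t] - mean) * (x[t + k] - mean) for t in range(n - k)) / c0
        for k in range(1, max_lag + 1)
    ]


def lags_outside_band(residuals, max_lag, z=1.96):
    """Lags whose autocorrelation exceeds the approximate white-noise band."""
    bound = z / math.sqrt(len(residuals))
    acf = sample_acf(residuals, max_lag)
    return [k for k, r in enumerate(acf, start=1) if abs(r) > bound]


# A strongly alternating hypothetical series is flagged at both lags.
flagged = lags_outside_band([1.0, -1.0] * 50, max_lag=2)
```

An empty list returned for the residuals of each component would be in line with an adequately specified mean process.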

As our final model adequacy check, the non-randomized probability integral transformation (PIT) plots (Czado et al. 2009) are obtained for MPGIG\(_2\)-INGARCH and MNB\(_2\)-INGARCH models, and the existing multivariate Poisson autoregression proposed by Fokianos et al. (2020); see Fig. 8. Compared to the multivariate Poisson-INGARCH model, the PIT plots for the models that fall under our proposed framework (MPGIG\(_2\)-INGARCH and MNB\(_2\)-INGARCH processes) are much closer to the uniform distribution, implying a more adequate goodness-of-fit. This establishes the advantage and ability of our proposed approach in being able to handle overdispersion and heavy-tailedness in multivariate count time series data.

Fig. 8 Non-randomized probability integral transformation (PIT) plots of the proposed MPGIG\(_2\)-INGARCH and MNB\(_2\)-INGARCH models, and the existing multivariate Poisson autoregression model (from the first to third column) with \(I_1=\emptyset \) and \(I_2=\{1,12\}\), fitted to the cannabis data example
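For readers unfamiliar with the non-randomized PIT of Czado et al. (2009), the Python sketch below computes the histogram bin probabilities for one count series from the one-step predictive distribution function evaluated at \(y_t\) and at \(y_t-1\). The Poisson predictive distribution, the counts and the fitted means in the example are hypothetical and serve only to make the snippet self-contained; for the models considered here the predictive distribution function of the fitted model would be used instead.

```python
import math


def poisson_cdf(y, rate):
    """P(Y <= y) for a Poisson variable; zero when y is negative."""
    if y < 0:
        return 0.0
    term = total = math.exp(-rate)
    for k in range(1, y + 1):
        term *= rate / k
        total += term
    return total


def pit_histogram(cdf_at_y, cdf_at_y_minus_1, bins=10):
    """Bin probabilities of the non-randomized PIT histogram.

    cdf_at_y[t] is P(Y_t <= y_t | past) and cdf_at_y_minus_1[t] is
    P(Y_t <= y_t - 1 | past) under the fitted predictive distribution.
    """
    def mean_pit(u):
        total = 0.0
        for upper, lower in zip(cdf_at_y, cdf_at_y_minus_1):
            if u <= lower:
                continue            # conditional PIT equals 0 here
            if u >= upper:
                total += 1.0        # conditional PIT equals 1 here
            else:
                total += (u - lower) / (upper - lower)
        return total / len(cdf_at_y)

    grid = [mean_pit(j / bins) for j in range(bins + 1)]
    return [grid[j + 1] - grid[j] for j in range(bins)]


# Hypothetical observed counts and fitted one-step-ahead Poisson means.
counts, fitted = [2, 0, 5, 3], [2.5, 1.0, 3.0, 3.5]
upper = [poisson_cdf(y, m) for y, m in zip(counts, fitted)]
lower = [poisson_cdf(y - 1, m) for y, m in zip(counts, fitted)]
probs = pit_histogram(upper, lower, bins=5)
```

Bin probabilities close to \(1/J\) for \(J\) bins correspond to a histogram close to uniform, which is the pattern sought in the PIT plots.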

5.2 Application to modeling high-frequency financial data

We present another real data application to validate the utility of our proposed model in handling higher-dimensional and heavy-tailed multivariate count time series. The dataset considered here involves high-frequency intraday transactions data from seven holdings in the U.S. financial market (footnote 4). More specifically, we restrict attention to seven energy sector holdings, namely ConocoPhillips (COP), Chevron (CVX), EOG Resources (EOG), Occidental Petroleum (OXY), Schlumberger (SLB), Valero (VLO) and Exxon Mobil (XOM). For each holding, the count time series includes the number of transactions per second recorded on February 13, 2009, from 11:00 am to 11:05 am, resulting in \(T=300\) observations. It is important to note that each transaction is counted as one, regardless of the number of shares sold in that transaction. A plot of this \(p=7\) dimensional count time series is given in Fig. 9, and one can witness some spikes which could imply the presence of heavy-tailedness in the data. This heavy-tailed behavior is further supported by positive estimates of tail-index (TI) values given in Table 10.

The ACF plot presented in Fig. 10 indicates that the observed multivariate count series exhibits both temporal and cross-correlations, thereby necessitating the use of a multivariate count time series modeling approach. We consider both multivariate PGIG and NB models for analyzing this data. As indicated in Table 11, under the MPGIG\(_7\)-INGARCH setup, \(I_1=\emptyset \) and \(I_2=\{1,2\}\) in the mean equation in (12) is selected as the final model based on both the Akaike information criterion (AIC) and the Bayesian information criterion (BIC). Similarly, under the MNB\(_7\)-INGARCH setup, \(I_1=\emptyset \) and \(I_2=\{1,2\}\) in the mean equation in (12) is selected as the final model based on both AIC and BIC. The parameter estimates of the MPGIG\(_7\)-INGARCH model, obtained using the MCEM method described in Algorithm 1, are presented in Tables 12 (for parameters \(\phi \), \(\alpha \) and \(\varvec{ d}\)) and 13 (for \(\varvec{ B}_{(1)}\) and \(\varvec{ B}_{(2)}\)). The standard errors were calculated via parametric bootstrap with 500 bootstrap replications. Similar estimation and standard error results from the MNB\(_7\)-INGARCH model with \(I_1=\emptyset \) and \(I_2=\{1,2\}\) are provided in the Supplementary Material.
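The order selection step compares candidate lag sets through AIC and BIC, which the Python sketch below computes from a maximized log-likelihood. The parameter count is an assumption made for illustration: it supposes \(\phi \), \(\alpha \), the \(p\)-vector \(\varvec{ d}\), and one unrestricted \(p\times p\) matrix per lag in \(I_1\) and \(I_2\); the function names and the log-likelihood value are hypothetical.

```python
import math


def n_params_mpgig(p, lags_means, lags_obs):
    """Assumed parameter count: phi, alpha, d, and one unrestricted
    p x p coefficient matrix for every lag in I_1 and I_2."""
    return 2 + p + p * p * (len(lags_means) + len(lags_obs))


def aic_bic(loglik, n_params, n_obs):
    """Akaike and Bayesian information criteria (smaller is better)."""
    aic = -2.0 * loglik + 2.0 * n_params
    bic = -2.0 * loglik + n_params * math.log(n_obs)
    return aic, bic


# Candidate with I_1 empty and I_2 = {1, 2} for p = 7 and T = 300;
# the log-likelihood value is made up for the example.
k = n_params_mpgig(7, [], [1, 2])
aic, bic = aic_bic(-2500.0, k, 300)
```

Under this counting rule the selected seven-dimensional model has 107 free parameters, which makes clear why BIC penalizes additional lags heavily when \(T=300\).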

To assess the goodness-of-fit, we examine the estimated autocorrelations of Pearson residuals from the fitted model. As shown in Fig. 11, the ACF plots generally exhibit the required white noise pattern. This implies that the temporal and cross-correlation structures present in the data are well accounted for by the proposed model. Similar plots from the MNB\(_7\)-INGARCH model fit are provided in the Supplementary Material.

The illustration presented in this section suggests that our methodology is promising for analyzing high-dimensional count time series. It is worth mentioning that many existing works on multivariate count time series analyze only bivariate counts, which is already challenging for this type of data. Our methods appear feasible for \(p=7\) dimensional counts, or even more, as shown in our simulation results with \(p=10\).

6 Conclusion

In this article, we proposed a new multivariate count time series model that is capable of handling heavy-tailed behavior in count processes. The new model, referred to as MPGIG\(_p\)-INGARCH, was defined using a multivariate version of the INteger-valued Generalized AutoRegressive Conditional Heteroscedastic (INGARCH) model. The setup involved a latent generalized inverse-Gaussian (GIG) random variable that controls contemporaneous dependence in the observed count process.

A computationally feasible variant of the expectation-maximization (EM) algorithm, referred to as GMCEM, was developed. Aiming at cases where the dimension of the observed count process is large, a variant of the GMCEM algorithm that combines the quasi-maximum likelihood estimation method and the GMCEM method was also outlined. This variant, called H-GMCEM algorithm, is seen to have improved computing time performance in finite sample cases. In the many finite-sample simulation schemes that are considered, the proposed GMCEM and H-GMCEM methods show increasing accuracy with increasing sample size.

Two relevant applications of the proposed method were presented. The first application concerns a bivariate count time series on cannabis possession-related offenses in Australia. In the second one, we modeled seven-dimensional intraday high-frequency transactions data from the U.S. financial market. Empirical evidence indicated that these two multivariate count time series examples exhibit moderate overdispersion and/or heavy-tailed behavior. We illustrated, through suitable model adequacy checks, that the proposed MPGIG\(_p\)-INGARCH model effectively handles such types of multivariate count time series data.

Some points deserving future research are the inclusion of covariates, model extension allowing for general orders, and the R implementation of our method with a general class of mixed Poisson distributions (which would include the multivariate PGIG and NB distribution choices as particular cases). Another important point is how to impose sparsity on the matrices of parameters involved in the INGARCH equations to make our methods scalable for dimensions larger than 10 (\(p>10\)). Regarding theoretical properties, a crucial step in studying the asymptotic properties of the estimators is to establish conditions for the multivariate process to be stationary and ergodic. We believe that this can be done by adapting the theoretical results discussed in Fokianos et al. (2020) (see section 3.2) for their multivariate count autoregression. A proof sketch for the consistency and asymptotic normality of maximum likelihood estimators for our process can also be adapted from the strategy provided by Fokianos et al. (2020) by using the log-likelihood function instead of a quasi-likelihood function. One issue we anticipate is that our likelihood depends on the Bessel function, which can pose some challenges in, for example, exploring the third-order derivatives with respect to parameters. We believe that these problems deserve further attention and research.

Data Availability

The dataset considered in this work and the computer code for implementing our method in R are made available on GitHub at https://github.com/STATJANG/MPGIG_INGARCH

Notes

Data source: https://wrds-www.wharton.upenn.edu/.

Data source: NSW Bureau of Crime Statistics and Research https://www.bocsar.nsw.gov.au/Pages/bocsar_datasets/Offence.aspx.

Data source: Wharton Data Research Services https://wrds-www.wharton.upenn.edu/.

References

Barreto-Souza, W.: Mixed Poisson INAR(1) processes. Stat. Pap. 60, 2119–2139 (2019)

Caffo, B.S., Jank, W., Jones, G.L.: Ascent-based Monte Carlo expectation-maximization. J. R. Stat. Soc. Ser. B 67, 235–251 (2005)

Chan, K.S., Ledolter, J.: Monte Carlo EM estimation for time series models involving counts. J. Am. Stat. Assoc. 90, 242–252 (1995)

Chen, Z., Dassios, A., Tzougas, G.: Multivariate mixed Poisson generalized inverse Gaussian INAR(1) regression. Comput. Stat. 38, 955–977 (2023)

Cui, Y., Zhu, F.: A new bivariate integer-valued GARCH model allowing for negative cross-correlation. TEST 27, 428–452 (2018)

Cui, Y., Li, Q., Zhu, F.: Flexible bivariate Poisson integer-valued GARCH model. Ann. Inst. Stat. Math. 72, 1449–1477 (2020)

Czado, C., Gneiting, T., Held, L.: Predictive model assessment for count data. Biometrics 65, 1254–1261 (2009)

Davis, R.A., Fokianos, K., Holan, S.H., Joe, H., Livsey, J., Lund, R., Pipiras, V., Ravishanker, N.: Count time series: a methodological review. J. Am. Stat. Assoc. 116, 1533–1547 (2021)

Dempster, A.P., Laird, N.M., Rubin, D.B.: Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. Ser. B 39, 1–38 (1977)

Ferland, R., Latour, A., Oraichi, D.: Integer-valued GARCH process. J. Time Ser. Anal. 27, 923–942 (2006)

Fokianos, K., Rahbek, A., Tjøstheim, D.: Poisson autoregression. J. Am. Stat. Assoc. 104, 1430–1439 (2009)

Fokianos, K., Tjøstheim, D.: Log-linear Poisson autoregression. J. Multivar. Anal. 102, 563–578 (2011)

Fokianos, K., Støve, B., Tjøstheim, D., Doukhan, P.: Multivariate count autoregression. Bernoulli 26, 471–499 (2020)

Fokianos, K., Fried, R., Kharin, Y., Voloshko, V.: Statistical analysis of multivariate discrete-valued time series. J. Multivar. Anal. 188, 104805 (2022)

Gorgi, P.: Beta-negative binomial auto-regressions for modelling integer-valued time series with extreme observations. J. R. Stat. Soc. Ser. B 82, 1325–1347 (2020)

Jørgensen, B.: Statistical Properties of the Generalized Inverse Gaussian Distribution. Lecture Notes in Statistics, vol. 9. Springer, New York (1982)

Meng, X.L., Rubin, D.B.: Using EM to obtain asymptotic variance-covariance matrices: the SEM algorithm. J. Am. Stat. Assoc. 86, 899–909 (1991)

Lange, K.: A gradient algorithm locally equivalent to the EM algorithm. J. R. Stat. Soc. Ser. B 57, 425–437 (1995)

Lee, Y., Lee, S., Tjøstheim, D.: Asymptotic normality and parameter change test for bivariate Poisson INGARCH models. TEST 27, 52–69 (2018)

Livsey, J., Lund, R., Kechagias, S., Pipiras, V.: Multivariate integer-valued time series with flexible autocovariances and their application to major hurricane counts. Ann. Appl. Stat. 12, 408–431 (2018)

Louis, T.A.: Finding the observed information matrix when using the EM algorithm. J. R. Stat. Soc. Ser. B 44(2), 226–233 (1982)

Paul, M., Held, L., Toschke, A.M.: Multivariate modelling of infectious disease surveillance data. Stat. Med. 27, 6250–6267 (2008)

Pedeli, X., Karlis, D.: A bivariate INAR(1) process with application. Stat. Model. 11, 325–349 (2011)

Pedeli, X., Karlis, D.: Some properties of multivariate INAR(1) processes. Comput. Stat. Data Anal. 67, 213–225 (2018)

Piancastelli, L.S.C., Barreto-Souza, W., Ombao, H.: Flexible bivariate INGARCH process with a broad range of contemporaneous correlation. J. Time Ser. Anal. 44, 206–222 (2023)

Qian, L., Li, Q., Zhu, F.: Modelling heavy-tailedness in count time series. Appl. Math. Model. 82, 766–784 (2020)

Sichel, H.S.: On a family of discrete distributions particularly suited to represent long-tailed frequency data. In: Laubscher, N.F. (ed.) Proceedings of the Third Symposium on Mathematical Statistics, pp. 51–97. S.A. C.S.I.R., Pretoria (1971)

Silva, R.B., Barreto-Souza, W.: Flexible and robust mixed Poisson INGARCH models. J. Time Ser. Anal. 40, 788–814 (2019)

Stein, G.Z., Zucchini, W., Juritz, J.M.: Parameter estimation for the Sichel distribution and its multivariate extension. J. Am. Stat. Assoc. 82, 938–944 (1987)

Tzougas, G., Makariou, D.: The multivariate Poisson-generalized inverse Gaussian claim count regression model with varying dispersion and shape parameters. Risk Manag. Insur. Rev. 25, 401–417 (2022)

Acknowledgements

We thank the two anonymous referees for their constructive comments that led to improvements in the paper.

Funding

Open access funding provided by SCELC, Statewide California Electronic Library Consortium.

Contributions

YJ wrote the original draft including preparing figures and tables, collecting the real data set and writing the R codes. All three authors conceptualized the original idea of this paper, reviewed and edited the manuscript.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jang, Y., Sundararajan, R.R. & Barreto-Souza, W. A multivariate heavy-tailed integer-valued GARCH process with EM algorithm-based inference. Stat Comput 34, 56 (2024). https://doi.org/10.1007/s11222-023-10372-7

DOI: https://doi.org/10.1007/s11222-023-10372-7

Keywords

- Cross-correlation

- EM algorithm

- Heavy tail

- Monte Carlo method

- Multivariate count time series

- Intraday

- High-frequency data