Abstract

Compositional data arise in many real-life applications, and versatile methods for properly analyzing this type of data in the regression context are needed. When parametric assumptions do not hold or are difficult to verify, non-parametric regression models can provide a convenient alternative method for prediction. To this end, we consider an extension of the classical k-nearest neighbours (k-NN) regression that yields a highly flexible non-parametric regression model for compositional data. A similar extension of kernel regression is proposed by adopting the Nadaraya–Watson estimator. Both extensions involve a power transformation termed the \(\alpha \)-transformation. Unlike many of the recommended regression models for compositional data, zero values (which commonly occur in practice) are not problematic and can be incorporated into the proposed models without modification. Extensive simulation studies and real-life data analyses highlight the advantage of using these non-parametric regressions for complex relationships between compositional response data and Euclidean predictor variables. Both the extended k-NN and kernel regressions can lead to more accurate predictions than current regression models, which assume a sometimes restrictive parametric relationship with the predictor variables. In addition, the extended k-NN regression, in contrast to current regression techniques, enjoys high computational efficiency, rendering it highly attractive for use with large data sets.

1 Introduction

Non-negative multivariate vectors whose variables (typically called components) convey only relative information are referred to as compositional data. When the vectors are normalized to sum to 1, their sample space is the standard simplex

\[ {\mathbb {S}}^{D-1}=\left\{ \left( u_1,\ldots ,u_D\right) ^\top \, \Big | \, u_j\ge 0,\ \sum _{j=1}^Du_j=1\right\} , \tag{1} \]

where D denotes the number of components.

Examples of compositional data may be found in many different fields of study, and the extensive scientific literature on the proper analysis of this type of data is indicative of its prevalence in real-life applications (Footnote 1). Given the widespread occurrence of this type of data, it is perhaps not surprising that many compositional data analysis applications involve covariates, and the literature cites numerous applications of compositional regression analysis. For example, oceanographic data on foraminiferal (marine plankton) compositions at different sea depths were analyzed in Aitchison (2003). In hydrochemistry, Otero et al. (2005) used regression analysis to draw conclusions about anthropogenic and geological pollution sources of rivers in Spain. In economics, Morais et al. (2018) linked market shares to some independent variables, while in political science the percentage of votes received by each candidate was linked to some relevant predictor variables (Katz and King 1999; Tsagris and Stewart 2018). In bioinformatics, compositional data techniques have been used for analysing microbiome data (Xia et al. 2013; Chen and Li 2016; Shi et al. 2016). In Sect. 5 our proposed methodology is applied to real-life compositional glacial data, data on the concentration of chemical elements in soil samples, and electoral data, all of which are associated with some covariates.

The need for valid regression models for compositional data in practice has led to several developments in this area, many of which have been proposed in recent years. The first regression model for compositional response data was developed by Aitchison (2003); commonly referred to as Aitchison’s model, it is based on the additive log-ratio transformation defined in Sect. 2. Dirichlet regression was applied to compositional data by Gueorguieva et al. (2008), Hijazi and Jernigan (2009) and Melo et al. (2009). Iyengar and Dey (2002) investigated the generalized Liouville family of distributions, which permits negative or mixed correlation and also contains non-Dirichlet distributions with non-positive correlation. The additive log-ratio transformation was again used by Tolosana-Delgado and von Eynatten (2009), while Egozcue et al. (2012) extended Aitchison’s regression model by using an isometric log-ratio transformation; instead of the usual Helmert sub-matrix, however, they chose a different, data-dependent orthogonal matrix.

A drawback of the aforementioned regression models is their inability to handle zero values directly and, consequently, a few models have recently been proposed to address the zero problem. In particular, Scealy and Welsh (2011) transformed the compositional data onto the unit hyper-sphere and introduced the Kent regression which treats zero values naturally. Spatial compositional data with zeros were modelled in Leininger et al. (2013) from the Bayesian stance. In Mullahy (2015), regression models for economic share data were estimated, with the shares taking zero values with nontrivial probability. Alternative regression models in the field of econometrics and applicable when zero values are present are discussed in Murteira and Ramalho (2016). In Tsagris (2015a), a regression model that minimizes the Jensen–Shannon divergence was proposed while in Tsagris (2015b), \(\alpha \)-regression (a generalization of Aitchison’s log-ratio regression) was introduced, and both of these approaches are compatible with zeros. An extension to Dirichlet regression allowing for zeros was developed by Tsagris and Stewart (2018) and referred to as zero adjusted Dirichlet regression.

Most of the preceding regression models are parametric in the sense that they are restricted to linear or generalized linear relationships between the dependent and independent variables, even though the relationships in many real-life applications are neither linear nor of a fixed parametric form. In the case of unconstrained data, when parametric assumptions are not satisfied or not easily verified, non-parametric regression models and algorithms, such as k-nearest neighbour (k-NN) regression, are often considered. As detailed in Sect. 3, kernel regression (Wand and Jones 1994) is a more sophisticated technique that generalizes k-NN regression by assigning to each observation a weight that decays with its distance from the point of prediction. A disadvantage of kernel regression is that it is more complex and computationally expensive than k-NN regression.

The contribution of this paper is an extension of these classical non-parametric approaches to compositional data through the \(\alpha \)-transformation (Tsagris et al. 2011), a power transformation that offers an extra degree of flexibility in compositional data analysis. Specifically, the proposed extensions of the k-NN and kernel regression models, termed \(\alpha \)-k-NN and \(\alpha \)-kernel regression respectively, link the compositional response to the predictor variables in a non-parametric, non-linear fashion, thus allowing for more flexibility. The models have the potential to fit the data better than conventional models and to yield improved predictions when the relationships between the compositional and the non-compositional variables are complex. Furthermore, in contrast to other non-parametric regressions applicable to log-ratio transformed compositional data, such as projection pursuit (Friedman and Stuetzle 1981), the two proposed methods allow for zero values in the data. While our objective is improved prediction for complex relationships involving compositional response data, a general disadvantage of non-parametric regression strategies is that quantifying the effects of the independent variables, and carrying out the usual statistical inference on them, is not straightforward. However, ICE plots (Goldstein et al. 2015) can overcome this obstacle by facilitating visualization of the effects of the independent variables, and we employ these plots in Sect. 5. Finally, a significant advantage of \(\alpha \)-k-NN regression in particular is its high computational efficiency compared to all current regression techniques, even when the sample size runs to hundreds of thousands or even millions of observations. This also holds for online or streaming data that require rapid predictions, and our work lays the foundation for novel methods in machine learning, since many such methods currently rely on k-NN methodology. Functions to carry out both \(\alpha \)-k-NN and \(\alpha \)-kernel regression are provided in the R package Compositional.

While in practice either the response or predictor data (or both) may be compositional, we consider only the case in which the response data are compositional. The paper is structured as follows: Sect. 2 describes relevant transformations and regression models for compositional data, while in Sect. 3, the proposed \(\alpha \)-k-NN and \(\alpha \)-kernel regression models are introduced. We examine the advantages and limitations of these models through simulation studies, implemented in Sect. 4, and an analysis of real-life data sets is presented in Sect. 5. Finally, concluding remarks are provided in Sect. 6.

2 Compositional data analysis: transformations and regression models

In this section, some preliminary definitions and methods in compositional data analysis relevant to this paper are introduced. Specifically, two commonly used log-ratio transformations, as well as the more general \(\alpha \)-transformation, are defined, followed by some existing regression models for compositional response data.

2.1 Transformations

2.1.1 Additive log-ratio transformation

Aitchison (1982) suggested applying the additive log-ratio (alr) transformation to compositional data prior to using standard multivariate data analysis techniques. Let \(\textbf{u}=\left( u_1,\ldots , u_D\right) ^\top \in {\mathbb {S}}^{D-1}\); then the alr transformation is given by

\[ v_j=\log \frac{u_{j+1}}{u_1},\quad j=1,\ldots ,D-1, \tag{2} \]

where \(\textbf{v}=\left( v_1,\ldots ,v_{D-1}\right) ^\top \in {\mathbb {R}}^{D-1}\). Note that the common divisor, \(u_1\), need not be the first component and was simply chosen for convenience. The inverse of Eq. (2) is given by

\[ u_1=\frac{1}{1+\sum _{l=1}^{D-1}e^{v_l}},\qquad u_j=\frac{e^{v_{j-1}}}{1+\sum _{l=1}^{D-1}e^{v_l}},\quad j=2,\ldots ,D. \tag{3} \]

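As an illustration of Eqs. (2) and (3), the following minimal Python sketch (using NumPy) computes the alr transformation of one composition or of the rows of an (n, D) array, and maps the result back to the simplex. The function names `alr` and `alr_inv` are hypothetical and are not the interface of the R package Compositional; strictly positive compositions are assumed, since the logarithm is undefined at zero.

```python
import numpy as np


def alr(u):
    """Additive log-ratio transformation, Eq. (2): v_j = log(u_{j+1} / u_1).

    Accepts one composition (shape (D,)) or an (n, D) array of compositions;
    all entries must be strictly positive.
    """
    u = np.asarray(u, dtype=float)
    return np.log(u[..., 1:] / u[..., :1])


def alr_inv(v):
    """Inverse alr transformation, Eq. (3).

    A zero is prepended for the reference component u_1, the coordinates are
    exponentiated and the result is closed to unit sum.
    """
    v = np.asarray(v, dtype=float)
    zeros = np.zeros(v.shape[:-1] + (1,))
    e = np.exp(np.concatenate([zeros, v], axis=-1))
    return e / e.sum(axis=-1, keepdims=True)


u = np.array([0.2, 0.3, 0.5])
v = alr(u)            # (log 1.5, log 2.5)
u_back = alr_inv(v)   # recovers u up to rounding error
print(v, u_back)
```
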
2.1.2 Isometric log-ratio transformation

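An alternative transformation proposed by Aitchison (1983) is the centred log-ratio (clr) transformation, defined as

\[ \textbf{y}=\left( \log \frac{u_1}{g\left( \textbf{u}\right) },\ldots ,\log \frac{u_D}{g\left( \textbf{u}\right) }\right) ^\top , \tag{4} \]

where \(g\left( \textbf{u}\right) =\prod _{j=1}^Du_j^{1/D}\) is the geometric mean. The inverse of Eq. (4) is given by

\[ \textbf{u}=\mathcal {C}\left\{ e^{y_1},\ldots ,e^{y_D}\right\} , \tag{5} \]

where \(\mathcal {C}\left\{ \cdot \right\} \) denotes the closure operation, that is, normalization to unit sum.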

The clr transformation in Eq. (4) was proposed in the context of principal component analysis, with the potential drawback that \(\sum _{j=1}^D y_j=0\), so that essentially the unity sum constraint is replaced by the zero sum constraint. Egozcue et al. (2003) proposed multiplying Eq. (4) by the \((D-1) \times D\) Helmert sub-matrix \(\textbf{H}\) (Lancaster 1965; Dryden and Mardia 1998; Le and Small 1999), that is, the orthogonal \(D \times D\) Helmert matrix with its first row omitted, which results in what is called the isometric log-ratio (ilr) transformation

\[ \textbf{z}_0=\textbf{H}\textbf{y}, \tag{6} \]

where \({\textbf{z}}_{0} = \left( z_{0,1},\ldots , z_{0,D-1}\right) ^\top \in {\mathbb {R}}^{D-1}\). Note that \(\textbf{H}\) may be replaced by any orthogonal matrix which preserves distances (Tsagris et al. 2011). The inverse of Eq. (6) is

\[ \textbf{u}=\mathcal {C}\left\{ \exp \left( \textbf{H}^\top \textbf{z}_0\right) \right\} , \tag{7} \]

with the exponential applied component-wise.

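The clr, Helmert sub-matrix and ilr definitions in Eqs. (4) to (7) can be checked numerically with a short NumPy sketch. It assumes the standard construction of the Helmert sub-matrix, whose i-th row has i entries equal to \(1/\sqrt{i(i+1)}\) followed by one entry equal to \(-i/\sqrt{i(i+1)}\); the helper names (`helmert_sub`, `clr`, `ilr`, `ilr_inv`) are illustrative only.

```python
import numpy as np


def helmert_sub(D):
    """(D-1) x D Helmert sub-matrix: the D x D Helmert matrix without its
    first row. Rows are orthonormal and orthogonal to the vector of ones."""
    H = np.zeros((D - 1, D))
    for i in range(1, D):
        c = np.sqrt(i * (i + 1))
        H[i - 1, :i] = 1.0 / c
        H[i - 1, i] = -i / c
    return H


def clr(u):
    """Centred log-ratio, Eq. (4): log(u_j) minus the log geometric mean."""
    logu = np.log(np.asarray(u, dtype=float))
    return logu - logu.mean(axis=-1, keepdims=True)


def ilr(u):
    """Isometric log-ratio, Eq. (6): z_0 = H y (applied row-wise)."""
    u = np.asarray(u, dtype=float)
    return clr(u) @ helmert_sub(u.shape[-1]).T


def ilr_inv(z):
    """Inverse ilr, Eq. (7): closure of exp(H^T z_0)."""
    z = np.asarray(z, dtype=float)
    e = np.exp(z @ helmert_sub(z.shape[-1] + 1))
    return e / e.sum(axis=-1, keepdims=True)


u = np.array([0.1, 0.2, 0.3, 0.4])
w = np.array([0.4, 0.3, 0.2, 0.1])
H = helmert_sub(4)
print(np.allclose(H @ H.T, np.eye(3)))     # orthonormal rows
print(np.allclose(H @ np.ones(4), 0.0))    # rows orthogonal to 1_D
print(np.allclose(ilr_inv(ilr(u)), u))     # Eq. (7) inverts Eq. (6)
# The ilr preserves clr (Aitchison) distances between compositions
print(np.isclose(np.linalg.norm(ilr(u) - ilr(w)),
                 np.linalg.norm(clr(u) - clr(w))))
```
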
2.1.3 \(\alpha \)-Transformation

The main disadvantage of the above transformations is that they do not allow zero values in any of the components, unless a zero value imputation technique (see, for example, Martín-Fernández et al. 2003) is first applied. This strategy, however, can produce regression models with worse predictive performance than regression models that handle zeros naturally (Tsagris 2015a). When zeros occur in the data or more flexibility is required, the Box-Cox type transformation proposed by Tsagris et al. (2011), and also discussed in Tsagris and Stewart (2020), may be employed. Specifically, Aitchison (2003) defined the power transformation as

\[ \textbf{w}_{\alpha }=\left( \frac{u_1^{\alpha }}{\sum _{j=1}^Du_j^{\alpha }},\ldots ,\frac{u_D^{\alpha }}{\sum _{j=1}^Du_j^{\alpha }}\right) ^\top , \tag{8} \]

and Tsagris et al. (2011) subsequently defined the \(\alpha \)-transformation, based on Eq. (8), as

\[ \textbf{z}_{\alpha }=\frac{1}{\alpha }\textbf{H}\left( D\textbf{w}_{\alpha }-\textbf{1}_D\right) , \tag{9} \]

where \(\textbf{H}\) is the Helmert sub-matrix and \(\textbf{1}_D\) is the D-dimensional vector of 1s.

While the power transformed vector \(\textbf{w}_{\alpha }\) in Eq. (8) remains in the simplex \({\mathbb {S}}^{D-1}\), \(\textbf{z}_{\alpha }\) in Eq. (9) is mapped onto a subset of \({\mathbb {R}}^{D-1}\). Furthermore, as \(\alpha \rightarrow 0\), Eq. (9) converges to the ilr transformation in Eq. (6) (Tsagris et al. 2016; see Footnote 2), provided no zero values exist in the data. For convenience, \(\alpha \) is generally taken to lie between \(-1\) and 1, but when zeros occur in the data, \(\alpha \) must be restricted to be strictly positive. The inverse of \(\textbf{z}_\alpha \) is

\[ \textbf{z}_{\alpha }^{-1}=\mathcal {C}\left\{ \left( \alpha \textbf{H}^\top \textbf{z}_{\alpha }+\textbf{1}_D\right) ^{1/\alpha }\right\} , \tag{10} \]

where the power \(1/\alpha \) is applied component-wise.

Tsagris et al. (2011) argued that while the \(\alpha \)-transformation does not satisfy some of the properties that Aitchison (2003) deemed important, namely perturbation and permutation invariance, and subcompositional dominance and coherence, this is not a downside of the transformation, as those properties were suggested mainly to fortify the concept of log-ratio methods. Scealy and Welsh (2014) also questioned the importance of these properties and, in fact, showed that some of them are not actually satisfied by the log-ratio methods they were intended to justify. The benefit of the \(\alpha \)-transformation over the alr and clr transformations is that it can be applied even when zero values are present in the data (using strictly positive values of \(\alpha \)), offers more flexibility and can yield better results (Tsagris 2015b; Tsagris et al. 2016; Tsagris and Stewart 2022).

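A minimal NumPy sketch of the power transformation (8), the \(\alpha \)-transformation (9) and its inverse (10) is given below, together with numerical checks that a small \(\alpha \) reproduces the ilr transformation and that zeros are handled when \(\alpha >0\). The function names are hypothetical, and the clipping of tiny negative round-off values in the inverse is an implementation choice of this sketch, not part of Eq. (10).

```python
import numpy as np


def helmert_sub(D):
    """(D-1) x D Helmert sub-matrix (orthonormal rows orthogonal to 1_D)."""
    H = np.zeros((D - 1, D))
    for i in range(1, D):
        c = np.sqrt(i * (i + 1))
        H[i - 1, :i] = 1.0 / c
        H[i - 1, i] = -i / c
    return H


def power_transform(u, a):
    """Power transformation, Eq. (8): closure of u_j^a."""
    w = np.asarray(u, dtype=float) ** a
    return w / w.sum(axis=-1, keepdims=True)


def alpha_transform(u, a):
    """alpha-transformation, Eq. (9): (1/a) H (D w_a - 1_D), for a != 0.

    Zero components are allowed only when a > 0."""
    if a == 0:
        raise ValueError("a = 0 is the ilr limit; use the ilr transformation")
    u = np.asarray(u, dtype=float)
    D = u.shape[-1]
    return (D * power_transform(u, a) - 1.0) @ helmert_sub(D).T / a


def alpha_inv(z, a):
    """Inverse alpha-transformation, Eq. (10): C{(a H^T z + 1_D)^(1/a)}."""
    z = np.asarray(z, dtype=float)
    D = z.shape[-1] + 1
    base = a * (z @ helmert_sub(D)) + 1.0   # equals D * w_a
    base = np.maximum(base, 0.0)            # remove round-off below 0 at zero parts
    x = base ** (1.0 / a)
    return x / x.sum(axis=-1, keepdims=True)


def ilr(u):
    """ilr transformation, Eq. (6), used for the a -> 0 check."""
    logu = np.log(np.asarray(u, dtype=float))
    y = logu - logu.mean(axis=-1, keepdims=True)
    return y @ helmert_sub(y.shape[-1]).T


u = np.array([0.1, 0.2, 0.3, 0.4])
print(np.allclose(alpha_inv(alpha_transform(u, 0.5), 0.5), u))
print(np.allclose(alpha_transform(u, 1e-6), ilr(u), atol=1e-4))   # a -> 0 limit
u0 = np.array([0.0, 0.3, 0.7])                                    # contains a zero
print(np.allclose(alpha_inv(alpha_transform(u0, 0.5), 0.5), u0))
```
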
2.2 Regression models for compositional data

2.2.1 Additive and isometric log-ratio regression models

Let \(\textbf{V}\) denote the response matrix with n rows containing alr transformed compositions. \(\textbf{V}\) can then be linked to some predictor variables \(\textbf{X}\) via

\[ \textbf{V}=\textbf{X}\textbf{B}+\textbf{E}, \tag{11} \]

where \(\textbf{B}=\left( \pmb {\beta }_2, \ldots , \pmb {\beta }_{D}\right) \) is the matrix of coefficients, \(\textbf{X}\) is the design matrix containing the p predictor variables (and the column of ones) and \(\textbf{E}\) is the residual matrix. Referring to Eq. (2), Eq. (11) can be re-written as

\[ \log \frac{u_{ij}}{u_{i1}}=\textbf{x}_i^\top \pmb {\beta }_j+e_{ij}\iff \log u_{ij}=\log u_{i1}+\textbf{x}_i^\top \pmb {\beta }_j+e_{ij},\quad j=2,\ldots ,D, \tag{12} \]

where \(\textbf{x}^\top _i\) denotes the i-th row of \(\textbf{X}\) and \(e_{ij}\) is the corresponding entry of \(\textbf{E}\). Equation (12) can be found in Tsagris (2015b), where it is shown that the alr regression (11) is in fact a multivariate linear regression in the logarithm of the compositional data, with the first component (or any other component) playing the role of an offset variable, that is, an independent variable whose coefficient is fixed at 1.

Regression based on the ilr transformation (ilr regression) is similar to alr regression and is carried out by substituting \(\textbf{V}\) in Eq. (11) with \(\textbf{Z}_0\), the matrix whose rows are the ilr transformed compositions of Eq. (6). Because the ilr coordinates are an invertible linear transformation of the alr coordinates, the two regressions yield the same fitted values once these are back-transformed onto the simplex using the appropriate inverse transformation, which is generally done for ease of interpretation.

The drawback of alr and ilr regression is their inability to handle zero values in the compositional response data. Zero substitution strategies (Aitchison 2003; Martín-Fernández et al. 2012) could be applied prior to fitting these regression models but, as Tsagris (2015a) showed, their predictive performance can be worse compared to a divergence-based regression model.

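The statement that alr and ilr regression give the same back-transformed fitted values can be verified with the following sketch, which fits Eq. (11) by ordinary least squares (via `numpy.linalg.lstsq`) on simulated data. The data-generating values, the sample size and the helper names are arbitrary choices for illustration.

```python
import numpy as np


def helmert_sub(D):
    H = np.zeros((D - 1, D))
    for i in range(1, D):
        c = np.sqrt(i * (i + 1))
        H[i - 1, :i], H[i - 1, i] = 1.0 / c, -i / c
    return H


def alr(U):
    return np.log(U[:, 1:] / U[:, :1])                      # Eq. (2), row-wise


def alr_inv(V):
    E = np.exp(np.column_stack([np.zeros(len(V)), V]))      # Eq. (3)
    return E / E.sum(axis=1, keepdims=True)


def ilr(U):
    L = np.log(U)
    return (L - L.mean(axis=1, keepdims=True)) @ helmert_sub(U.shape[1]).T  # Eq. (6)


def ilr_inv(Z):
    E = np.exp(Z @ helmert_sub(Z.shape[1] + 1))             # Eq. (7)
    return E / E.sum(axis=1, keepdims=True)


def ols(X, Y):
    """Least-squares estimate of B in Y = X B + E, as in Eq. (11)."""
    B, *_ = np.linalg.lstsq(X, Y, rcond=None)
    return B


rng = np.random.default_rng(1)
n, D = 200, 4
X = np.column_stack([np.ones(n), rng.normal(size=(n, 2))])   # intercept + 2 predictors
B_true = rng.normal(size=(3, D - 1))
U = alr_inv(X @ B_true + 0.1 * rng.normal(size=(n, D - 1)))  # simulated responses

B_alr = ols(X, alr(U))
fit_alr = alr_inv(X @ B_alr)             # alr regression, back-transformed
fit_ilr = ilr_inv(X @ ols(X, ilr(U)))    # ilr regression, back-transformed
print(np.abs(fit_alr - fit_ilr).max())   # of the order of rounding error
```
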
2.2.2 Kullback–Leibler divergence based regression

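Murteira and Ramalho (2016) estimated the \(\pmb {\beta }\) coefficients via minimization of the Kullback–Leibler divergence

\[ \sum _{i=1}^n\sum _{j=1}^Du_{ij}\log \frac{u_{ij}}{\hat{u}_{ij}}, \tag{13} \]

where, for \(i=1,\ldots ,n\), \(\textbf{u}_i\) are the observed compositional response data and \(\hat{\textbf{u}}_i=\left( \hat{u}_{i1}, \ldots , \hat{u}_{iD}\right) ^\top \) are the fitted response data, which have been transformed to the simplex through

\[ \hat{u}_{i1}=\frac{1}{1+\sum _{l=2}^De^{\textbf{x}_i^\top \pmb {\beta }_l}},\qquad \hat{u}_{ij}=\frac{e^{\textbf{x}_i^\top \pmb {\beta }_j}}{1+\sum _{l=2}^De^{\textbf{x}_i^\top \pmb {\beta }_l}},\quad j=2,\ldots ,D, \tag{14} \]

with \(\pmb {\beta }_j=\left( \beta _{0j},\beta _{1j},\ldots ,\beta _{pj} \right) ^\top \), \(j=2,\ldots ,D\) (see also Tsagris 2015a, b).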

The regression model in Eq. (13), also referred to as multinomial logit regression, will be denoted by Kullback–Leibler divergence (KLD) regression throughout the rest of the paper. KLD regression is a semi-parametric regression technique and, unlike alr and ilr regression, it can handle zeros naturally, simply because \(\lim _{x \rightarrow 0}x\log {x}=0\).

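To make Eqs. (13) and (14) concrete, the sketch below fits KLD (multinomial logit) regression by plain gradient descent on the average Kullback–Leibler divergence, with the convention \(0\log 0=0\) so that zero responses are allowed. Gradient descent is a swapped-in, deliberately simple optimizer chosen for readability; it is not the Newton–Raphson algorithm mentioned in Sect. 4, and the step size and number of iterations are arbitrary assumptions that may need adjusting for other data.

```python
import numpy as np


def kld_fitted(X, B):
    """Eq. (14): fitted compositions, with the first component as reference.

    X is the (n, p+1) design matrix, B the (p+1, D-1) coefficient matrix."""
    eta = np.column_stack([np.zeros(len(X)), X @ B])
    eta -= eta.max(axis=1, keepdims=True)      # guards against overflow in exp
    e = np.exp(eta)
    return e / e.sum(axis=1, keepdims=True)


def kld_objective(U, U_hat):
    """Eq. (13), using 0 * log(0) = 0 so that zero values are allowed."""
    with np.errstate(divide="ignore", invalid="ignore"):
        terms = np.where(U > 0, U * np.log(U / U_hat), 0.0)
    return terms.sum()


def fit_kld(X, U, step=1.0, iters=5000):
    """Minimise the average of Eq. (13) over B by gradient descent.

    The gradient with respect to beta_j (j = 2..D) is
    -(1/n) * sum_i (u_ij - uhat_ij) x_i, because each row of U sums to 1."""
    n = len(X)
    B = np.zeros((X.shape[1], U.shape[1] - 1))
    for _ in range(iters):
        U_hat = kld_fitted(X, B)
        grad = -X.T @ (U[:, 1:] - U_hat[:, 1:]) / n
        B -= step * grad
    return B


rng = np.random.default_rng(2)
n = 300
X = np.column_stack([np.ones(n), rng.normal(size=n)])
B_true = np.array([[0.5, -0.5], [1.0, -1.0]])
U = kld_fitted(X, B_true)                  # noise-free responses from Eq. (14)
B_hat = fit_kld(X, U)                      # should be close to B_true

# Zeros in the responses pose no problem for the objective or the fit
Uz = U.copy()
Uz[:30, 2] = 0.0
Uz /= Uz.sum(axis=1, keepdims=True)
B_z = fit_kld(X, Uz)
print(B_hat, kld_objective(Uz, kld_fitted(X, B_z)))
```
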
3 The \(\alpha \)-k-NN and \(\alpha \)-kernel regression models

Our proposed \(\alpha \)-k-NN and \(\alpha \)-kernel regression models extend the well-known k-NN regression and the Nadaraya-Watson smoother, respectively, to the compositional data setting. Unlike previous approaches, the models are more flexible (due to the \(\alpha \) parameter), entirely non-parametric and allow for zeros, thus filling an important gap in the compositional data analysis literature.

In general terms, to predict the response value corresponding to a new vector of predictor values (\(\textbf{x}_{new}\)), the k-NN algorithm first computes the Euclidean distances from \(\textbf{x}_{new}\) to the observed predictor values \(\textbf{x}\). It then selects the response values of the k observations whose predictor values are closest to \(\textbf{x}_{new}\) and averages those response values, using, for example, the sample mean or median. The proposed \(\alpha \)-k-NN regression algorithm relies on an extension of the sample mean to the sample Fréchet mean, described below, while the \(\alpha \)-kernel regression method further extends this to a weighted Fréchet mean.

3.1 The Fréchet mean

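The Fréchet mean (Pennec 1999) on a metric space \(\left( {\mathbb {M}}, \text {dist} \right) \), with the distance between \(p,q\in {\mathbb {M}}\) given by \(\text {dist}\left( p,q\right) \), is defined by \(\text {argmin}_{h \in {\mathbb {M}}}E_U\left[ \text {dist}\left( U, h\right) ^2 \right] \) and \(\text {argmin}_{h \in {\mathbb {M}}}\sum _{i=1}^n\text {dist}\left( u_i, h\right) ^2\) in the population and finite sample cases, respectively. The Fréchet mean in the compositional data setting for a sample of size n is the argument \(\pmb {\gamma }=\left( \gamma _1,\ldots ,\gamma _D\right) ^\top \) which minimizes the sum of squared Euclidean distances between the \(\alpha \)-transformed observations \(\textbf{z}_{\alpha ,i}\) and the \(\alpha \)-transformed mean \(\textbf{z}_{\alpha }\left( \pmb {\mu }\right) \) (Tsagris et al. 2011); by Eqs. (8) and (9), this expression equals

\[ \sum _{i=1}^n\left\| \textbf{z}_{\alpha ,i}-\textbf{z}_{\alpha }\left( \pmb {\mu }\right) \right\| ^2=\frac{D^2}{\alpha ^2}\sum _{i=1}^n\sum _{j=1}^D\left( \frac{u_{ij}^{\alpha }}{\sum _{l=1}^Du_{il}^{\alpha }}-\gamma _j\right) ^2, \]

where \(\gamma _j=\frac{\mu _j^{\alpha }}{\sum _{l=1}^D\mu _l^{\alpha }}\). Setting the partial derivative of this expression with respect to each \(\gamma _j\) equal to zero, the minimization occurs at

\[ \hat{\gamma }_j=\frac{1}{n}\sum _{i=1}^n\frac{u_{ij}^{\alpha }}{\sum _{l=1}^Du_{il}^{\alpha }},\quad j=1,\ldots ,D, \]

and thus, inverting the relation between \(\pmb {\gamma }\) and \(\pmb {\mu }\),

\[ \hat{\pmb {\mu }}_{\alpha }=\mathcal {C}\left\{ \left( \frac{1}{n}\sum _{i=1}^n\frac{u_{i1}^{\alpha }}{\sum _{l=1}^Du_{il}^{\alpha }}\right) ^{1/\alpha },\ldots ,\left( \frac{1}{n}\sum _{i=1}^n\frac{u_{iD}^{\alpha }}{\sum _{l=1}^Du_{il}^{\alpha }}\right) ^{1/\alpha }\right\} . \tag{15} \]

Kendall and Le (2011) showed that the central limit theorem applies to Fréchet means defined on manifold-valued data, and the simplex is an example of a manifold (Pantazis et al. 2019). Another appealing property of the Fréchet mean is its continuity in \(\alpha \) at zero: in the limiting case \(\alpha \rightarrow 0\) (assuming strictly positive compositional data), Eq. (15) converges to the closed geometric mean, \(\hat{\pmb {\mu }}_0\), defined below and in Aitchison (1989). That is,

\[ \lim _{\alpha \rightarrow 0}\hat{\pmb {\mu }}_{\alpha }=\hat{\pmb {\mu }}_0=\mathcal {C}\left\{ \prod _{i=1}^nu_{i1}^{1/n},\ldots ,\prod _{i=1}^nu_{iD}^{1/n}\right\} . \]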

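The closed-form Fréchet mean in Eq. (15) and its \(\alpha \rightarrow 0\) limit can be computed as follows. In this minimal sketch (function name hypothetical), the power \(1/\alpha \) is applied on the log scale and rescaled before exponentiating; this leaves the closed result unchanged but avoids underflow when \(\alpha \) is close to zero. Optional weights are included because the same computation, with kernel weights, underlies the \(\alpha \)-kernel regression of Sect. 3.3.

```python
import numpy as np


def frechet_mean(U, a, weights=None):
    """alpha-Fréchet mean of the rows of U, Eq. (15).

    a = 0 returns the closed geometric mean (strictly positive data only);
    a > 0 also accepts zeros. Non-negative weights give a weighted mean."""
    U = np.asarray(U, dtype=float)
    w = np.ones(len(U)) if weights is None else np.asarray(weights, dtype=float)
    w = w / w.sum()
    with np.errstate(divide="ignore"):
        if a == 0:
            log_m = w @ np.log(U)                              # log geometric mean
        else:
            P = U ** a
            gamma = w @ (P / P.sum(axis=1, keepdims=True))     # gamma_hat
            log_m = np.log(gamma) / a                          # log of gamma^(1/a)
    m = np.exp(log_m - log_m.max())                            # rescale, then close
    return m / m.sum()


U = np.array([[0.1, 0.3, 0.6],
              [0.2, 0.5, 0.3],
              [0.6, 0.2, 0.2]])
print(frechet_mean(U, 1.0))    # alpha = 1: the arithmetic mean of the rows
print(frechet_mean(U, 1e-6))   # close to the closed geometric mean below
print(frechet_mean(U, 0))
```
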
3.2 The \(\alpha \)-k-NN regression

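When the response variables, \(\textbf{u}_1,\textbf{u}_2,\ldots ,\textbf{u}_n\), are compositional, the k-NN algorithm can be applied in a straightforward manner to data transformed with any transformation appropriate for compositional data. For added flexibility, and to be able to handle compositional data with zeros directly, we propose extending k-NN regression using the power transformation in Eq. (8) combined with the Fréchet mean in Eq. (15). Specifically, in \(\alpha \)-k-NN regression, the predicted response value corresponding to \(\textbf{x}_{new}\) is

\[ \hat{\textbf{u}}\left( \textbf{x}_{new}\right) =\mathcal {C}\left\{ \left( \frac{1}{k}\sum _{i\in \mathcal {A}}\frac{u_{i1}^{\alpha }}{\sum _{l=1}^Du_{il}^{\alpha }}\right) ^{1/\alpha },\ldots ,\left( \frac{1}{k}\sum _{i\in \mathcal {A}}\frac{u_{iD}^{\alpha }}{\sum _{l=1}^Du_{il}^{\alpha }}\right) ^{1/\alpha }\right\} , \tag{16} \]

where \(\mathcal {A}\) denotes the index set of the k nearest neighbours of \(\textbf{x}_{new}\). In the limiting case of \(\alpha =0\), without zero values in the data, the predicted response value is

\[ \hat{\textbf{u}}\left( \textbf{x}_{new}\right) =\mathcal {C}\left\{ \prod _{i\in \mathcal {A}}u_{i1}^{1/k},\ldots ,\prod _{i\in \mathcal {A}}u_{iD}^{1/k}\right\} . \]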

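It is interesting to note that the limiting case also results from applying the clr transformation to the response data, taking the mean of the k relevant transformed observations and then back-transforming the mean using Eq. (5). To see this, let \(\textbf{y}_i\) denote the clr transformation (4) of \(\textbf{u}_i\), so that \(y_{ij}=\log u_{ij}-\log g\left( \textbf{u}_i\right) \). The mean of the k transformed neighbours has components

\[ \bar{y}_j=\frac{1}{k}\sum _{i\in \mathcal {A}}y_{ij}=\log \prod _{i\in \mathcal {A}}u_{ij}^{1/k}-\frac{1}{k}\sum _{i\in \mathcal {A}}\log g\left( \textbf{u}_i\right) ,\quad j=1,\ldots ,D, \]

and, since the second term does not depend on j, it cancels under the closure in Eq. (5):

\[ \mathcal {C}\left\{ e^{\bar{y}_1},\ldots ,e^{\bar{y}_D}\right\} =\mathcal {C}\left\{ \prod _{i\in \mathcal {A}}u_{i1}^{1/k},\ldots ,\prod _{i\in \mathcal {A}}u_{iD}^{1/k}\right\} . \]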

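A direct, unoptimized sketch of the \(\alpha \)-k-NN predictor in Eq. (16) follows: Euclidean distances in predictor space select the index set \(\mathcal {A}\), and the \(\alpha \)-Fréchet mean of the corresponding responses is returned. The function names and the simulated data are hypothetical; the final lines check numerically that, for \(\alpha =0\), the prediction coincides with the back-transformed mean of the clr coordinates of the neighbours.

```python
import numpy as np


def frechet_mean(U, a):
    """alpha-Fréchet mean of the rows of U, Eq. (15); a = 0 gives the
    closed geometric mean. Computed on the log scale to avoid underflow."""
    with np.errstate(divide="ignore"):
        if a == 0:
            log_m = np.log(U).mean(axis=0)
        else:
            P = U ** a
            log_m = np.log((P / P.sum(axis=1, keepdims=True)).mean(axis=0)) / a
    m = np.exp(log_m - log_m.max())
    return m / m.sum()


def alpha_knn_predict(X, U, X_new, k, a):
    """alpha-k-NN regression, Eq. (16), for every row of X_new."""
    X = np.asarray(X, dtype=float).reshape(len(X), -1)
    X_new = np.asarray(X_new, dtype=float).reshape(-1, X.shape[1])
    U = np.asarray(U, dtype=float)
    preds = []
    for x in X_new:
        d2 = ((X - x) ** 2).sum(axis=1)       # squared Euclidean distances
        A = np.argsort(d2)[:k]                # index set of the k nearest neighbours
        preds.append(frechet_mean(U[A], a))
    return np.array(preds)


rng = np.random.default_rng(3)
n = 100
X = rng.normal(size=(n, 1))
U = rng.dirichlet(np.full(3, 2.0), size=n)   # placeholder compositional responses
pred = alpha_knn_predict(X, U, [[0.0], [1.0]], k=10, a=0.5)

# alpha = 0: equals the closed mean of the clr coordinates of the neighbours
A = np.argsort((X[:, 0] - 0.0) ** 2)[:10]
Y = np.log(U[A])
Y -= Y.mean(axis=1, keepdims=True)           # clr, Eq. (4)
clr_back = np.exp(Y.mean(axis=0))
clr_back /= clr_back.sum()                   # inverse clr, Eq. (5)
print(np.allclose(clr_back, alpha_knn_predict(X, U, [[0.0]], k=10, a=0)[0]))
```
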
A visual representation of the added flexibility provided by \(\alpha \) on the Fréchet mean, in addition to the effect of k, is given in Fig. 1, which shows how the Fréchet mean varies as \(\alpha \) moves from \(-1\) to 1 and different nearest neighbours are used. In Fig. 1a, b two different sets of 15 neighbours are used, whereas in Fig. 1c all 25 nearest neighbours are used. The closed geometric mean (i.e. the Fréchet mean with \(\alpha =0\)) may lie outside the body of those observations. By contrast, allowing \(\alpha \) to vary offers greater flexibility which, in conjunction with the number of nearest neighbours k, yields estimates that can lie within the region of the selected set of compositional observations, as visualized in Fig. 1.

Fig. 1 Effect of \(\pmb {\alpha }\) and \(\textbf{k}\). The symbols are as follows: blue filled square = Fréchet mean with \(\alpha =-1\), red filled triangle = Fréchet mean with \(\alpha =0\) and black filled circle = Fréchet mean with \(\alpha =1\). The dashed green curve shows the path of all Fréchet means from \(\alpha =-1\) up to \(\alpha =1\). The golden circles indicate the set of observations used to compute the Fréchet mean. (Color figure online)

3.3 The \(\alpha \)-kernel regression

Another possible non-parametric regression model results from extending the \(\alpha \)-k-NN regression estimator in Eq. (16) by adopting the Nadaraya-Watson estimator (Nadaraya 1964; Watson 1964). This gives rise to the \(\alpha \)-kernel regression, based on the weighted Fréchet mean

\[ \hat{\textbf{u}}\left( \textbf{x}_{new}\right) =\mathcal {C}\left\{ \left( \frac{\sum _{i=1}^nK_h\left( \textbf{x}_i-\textbf{x}_{new}\right) \frac{u_{ij}^{\alpha }}{\sum _{l=1}^Du_{il}^{\alpha }}}{\sum _{i=1}^nK_h\left( \textbf{x}_i-\textbf{x}_{new}\right) }\right) ^{1/\alpha }\right\} _{j=1,\ldots ,D}, \tag{17} \]

where \(\textbf{x}_i\) and \(\textbf{x}_{new}\) denote the i-th row vector and the new row vector containing the p predictor variables, and \(K_h(\textbf{x}- \textbf{y})\) denotes the kernel function, with bandwidth \(h>0\). Typical examples include the Gaussian (or radial basis function) kernel \(K_h(\textbf{x}- \textbf{y})=e^{-\frac{\Vert \textbf{x}-\textbf{y}\Vert ^2}{2\,h^2}}\) and the Laplacian kernel \(K_h(\textbf{x}- \textbf{y})=e^{-\frac{\Vert \textbf{x}-\textbf{y}\Vert }{h}}\), where \(\Vert \textbf{x} - \textbf{y}\Vert \) denotes the Euclidean distance between row vectors \(\textbf{x}\) and \(\textbf{y}\).

The \(\alpha \)-k-NN regression is more flexible than simple k-NN regression, but it can be viewed as a special case of the weighted form of \(\alpha \)-kernel regression (unit weights for the k nearest neighbours and zero weights otherwise) and is thus less flexible. The difference lies in the fact that the \(\alpha \)-kernel regression produces a weighted Fréchet mean computed using all observations, whereas the \(\alpha \)-k-NN regression computes the Fréchet mean using a subset of the observations. However, the advantage of \(\alpha \)-k-NN regression over \(\alpha \)-kernel regression is the former’s high computational efficiency. Lastly, as \(\alpha \rightarrow 0\), the \(\alpha \)-kernel regression in Eq. (17) converges, again in the absence of zero values, to the Nadaraya-Watson estimator applied to the log-ratio transformed data (Di Marzio et al. 2015). This is straightforward to show by substituting the \(u_{ij}^{\alpha }\) with the \(\alpha \)-transformation in Eq. (9).

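The \(\alpha \)-kernel predictor in Eq. (17) can be sketched in the same way, here with the Gaussian and Laplacian kernels given above. As an implementation choice of this sketch (not part of Eq. (17)), the log-kernel values are shifted by their maximum before exponentiating, which cancels in the ratio but prevents all weights from underflowing to zero when h is small. The function name and data are hypothetical; \(\alpha \) must be non-zero, and strictly positive if the responses contain zeros.

```python
import numpy as np


def alpha_kernel_predict(X, U, X_new, h, a, kernel="gaussian"):
    """alpha-kernel regression, Eq. (17), for every row of X_new (a != 0)."""
    X = np.asarray(X, dtype=float).reshape(len(X), -1)
    X_new = np.asarray(X_new, dtype=float).reshape(-1, X.shape[1])
    P = np.asarray(U, dtype=float) ** a
    W = P / P.sum(axis=1, keepdims=True)           # u_ij^a / sum_l u_il^a
    preds = []
    for x in X_new:
        d = np.sqrt(((X - x) ** 2).sum(axis=1))    # Euclidean distances
        if kernel == "gaussian":
            log_k = -d ** 2 / (2 * h ** 2)
        elif kernel == "laplacian":
            log_k = -d / h
        else:
            raise ValueError("kernel must be 'gaussian' or 'laplacian'")
        kw = np.exp(log_k - log_k.max())           # kernel weights up to a constant
        gamma = kw @ W / kw.sum()                  # weighted mean in Eq. (17)
        with np.errstate(divide="ignore"):
            log_m = np.log(gamma) / a              # log of gamma^(1/a)
        m = np.exp(log_m - log_m.max())
        preds.append(m / m.sum())
    return np.array(preds)


rng = np.random.default_rng(4)
n = 80
X = rng.normal(size=(n, 2))
U = rng.dirichlet(np.full(4, 2.0), size=n)

# Very large h: (almost) equal weights, i.e. the Fréchet mean of all responses
far = alpha_kernel_predict(X, U, X[:1], h=1e6, a=0.5)[0]
# Very small h: all weight on the closest observation, here X[3] itself
near = alpha_kernel_predict(X, U, X[3:4], h=1e-6, a=0.5, kernel="laplacian")[0]
print(far, near, U[3])
```
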
3.4 Theoretical remarks

Under weak regularity conditions, the general family of nearest neighbour regression estimators was shown to be uniformly consistent with probability one, with a near-optimal rate of convergence (Cheng 1984). More recently, Jiang (2019) proved non-asymptotic uniform rates of consistency for k-NN regression (Footnote 3). The proof of consistency of the \(\alpha \)-k-NN regression estimator falls within the work of Lian (2011), who dealt with the case of a response variable belonging to a separable Hilbert space; Lian (2011) investigated the rates of strong (almost sure) convergence of the k-NN estimate under finite moment conditions and an exponential tail condition on the noise. Recall that the simplex in Eq. (1) is a \((D-1)\)-dimensional Hilbert space (Pawlowsky-Glahn and Egozcue 2001). However, further asymptotic properties of the \(\alpha \)-k-NN and \(\alpha \)-kernel regressions are much harder to derive due to the introduction of the power parameter \(\alpha \) and are not considered here.

3.5 Cross-validation protocol to select the values of \(\alpha \) and k or h

A tenfold cross-validation (CV) protocol is used to tune the pair (\(\alpha \), k) or (\(\alpha \), h). In tenfold CV, the data are randomly split into ten folds of nearly equal size. One fold plays the role of the test set, while the remaining folds form the training set. The regression models are fitted on the training set and their predictive performance is estimated on the test set. This procedure is repeated for all ten folds so that each fold plays the role of the test set once. Ultimately, the predictive performance of each regression model is computed by aggregating its predictive performance over the folds. Note that while for Euclidean data the criterion of predictive performance is typically the mean squared error, here we instead measure the Kullback–Leibler (KL) divergence from the observed to the predicted compositional vectors, as well as the Jensen–Shannon (JS) divergence, which, unlike KL, is symmetric and whose square root is a metric, to account for the compositional nature of the response data. The KL and JS measures of divergence are given below:

\[ \text{KL}=\frac{1}{n}\sum _{i=1}^n\sum _{j=1}^Du_{ij}\log \frac{u_{ij}}{\hat{u}_{ij}}, \tag{18a} \]

\[ \text{JS}=\frac{1}{n}\sum _{i=1}^n\sum _{j=1}^D\left( u_{ij}\log \frac{2u_{ij}}{u_{ij}+\hat{u}_{ij}}+\hat{u}_{ij}\log \frac{2\hat{u}_{ij}}{u_{ij}+\hat{u}_{ij}}\right) , \tag{18b} \]

where n here denotes the number of observations in the test fold, \(\hat{\textbf{u}}_i\) are their predicted compositions and \(0\log 0\) is taken to be 0.

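The tenfold CV protocol with the divergences (18a) and (18b) can be sketched as a grid search over \(\alpha \) and k for \(\alpha \)-k-NN regression. This is a minimal illustration under stated assumptions: the grid values, the simulated data, the per-fold averaging and all function names are hypothetical, \(\alpha =0\) is excluded from the grid for simplicity, and predictions are assumed to be strictly positive wherever the observed compositions are (otherwise KL is infinite).

```python
import numpy as np


def alpha_knn_predict(X, U, X_new, k, a):
    """Vectorised alpha-k-NN regression, Eq. (16), for a != 0."""
    d2 = ((X_new[:, None, :] - X[None, :, :]) ** 2).sum(axis=2)
    A = np.argsort(d2, axis=1)[:, :k]               # k nearest neighbours per row
    P = U ** a
    W = P / P.sum(axis=1, keepdims=True)
    with np.errstate(divide="ignore"):
        log_m = np.log(W[A].mean(axis=1)) / a       # log of gamma^(1/a)
    m = np.exp(log_m - log_m.max(axis=1, keepdims=True))
    return m / m.sum(axis=1, keepdims=True)


def kl_div(U, U_hat):
    """Eq. (18a), with 0 log 0 = 0."""
    with np.errstate(divide="ignore", invalid="ignore"):
        t = np.where(U > 0, U * np.log(U / U_hat), 0.0)
    return t.sum(axis=1).mean()


def js_div(U, U_hat):
    """Eq. (18b), with 0 log 0 = 0; M is the midpoint composition."""
    M = (U + U_hat) / 2
    with np.errstate(divide="ignore", invalid="ignore"):
        t = (np.where(U > 0, U * np.log(U / M), 0.0)
             + np.where(U_hat > 0, U_hat * np.log(U_hat / M), 0.0))
    return t.sum(axis=1).mean()


def tune_alpha_knn(X, U, alphas, ks, n_folds=10, seed=0):
    """Tenfold CV over the (alpha, k) grid; returns the best pair and the
    matrix of KL divergences averaged over the folds."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(X)), n_folds)
    kl = np.zeros((len(alphas), len(ks)))
    for test in folds:
        train = np.setdiff1d(np.arange(len(X)), test)
        for i, a in enumerate(alphas):
            for j, k in enumerate(ks):
                pred = alpha_knn_predict(X[train], U[train], X[test], k, a)
                kl[i, j] += kl_div(U[test], pred) / n_folds
    i, j = np.unravel_index(np.argmin(kl), kl.shape)
    return alphas[i], ks[j], kl


rng = np.random.default_rng(5)
n = 200
X = rng.normal(size=(n, 1))
eta = np.column_stack([np.zeros(n), np.sin(2 * X[:, 0]), X[:, 0] ** 2 - 1])
eta += 0.2 * rng.normal(size=(n, 3))
U = np.exp(eta) / np.exp(eta).sum(axis=1, keepdims=True)   # non-linear responses
best_a, best_k, kl = tune_alpha_knn(X, U, alphas=[-0.5, 0.25, 0.5, 1.0], ks=[2, 5, 10, 20])
print(best_a, best_k)
```
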
3.6 Visualisation of the predictor’s effect on the response compositional data

The alr, ilr and KLD regression models presented earlier allow for estimation of the effect of each predictor on the response compositional data. The \(\alpha \)-k-NN and \(\alpha \)-kernel regression models, on the contrary, do not return coefficients quantifying the effect of each predictor. Instead, individual conditional expectation (ICE) plots (Goldstein et al. 2015) are used to portray these, possibly non-linear, effects visually.

The idea is simple. Suppose interest lies in the effect of predictor \(X_s\) on the response compositional data. Let \(\textbf{X}_i=\left( X_{si}, \textbf{X}_{ci}\right) ^\top \) denote the i-th of the n observations of the full set of predictors, where \(\textbf{X}_c\) refers to the remaining predictors. Create a new dataset \(\widetilde{\textbf{X}}=\left( X_{si}\textbf{1}_n,\textbf{X}_c\right) \), in which every entry of the s-th predictor is set to the i-th value \(X_{si}\), while the remaining predictors are left unchanged. For some chosen values (Footnote 4) of \(\alpha \) and k or h, estimate the n compositional responses corresponding to \(\widetilde{\textbf{X}}\) and compute their simple arithmetic mean, \(\tilde{\textbf{u}}_i\). Repeating this process for \(i=1,\ldots ,n\) yields n average predictions, \(\tilde{\textbf{u}}_1,\ldots ,\tilde{\textbf{u}}_n\). The \(\tilde{\textbf{u}}\) values, one curve for each of the D components, are then plotted against \(X_{s}\), and local polynomial regression fitting (Cleveland 1979) is performed to produce a smooth curve.

To speed up the process, instead of using all observed values of \(X_s\), one can randomly select a subset of the values of that predictor. Finally, Goldstein et al. (2015) suggest centering the predictions, but in the compositional data case this is not necessary, as all predicted values lie within the [0, 1] interval.

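The averaging procedure described above can be sketched as follows for any fitted predictor that maps an (n, p) array of predictors to an (n, D) array of compositions. The toy predictor `toy_predict` is a hypothetical stand-in for a tuned \(\alpha \)-k-NN or \(\alpha \)-kernel model, a random subset of the observed values of \(X_s\) is used as suggested above, and the final local polynomial smoothing step is omitted.

```python
import numpy as np


def ice_average_curve(predict, X, s, values):
    """For each value v, set predictor s to v in every row of X, predict the
    n compositions and average them; returns an array of shape (len(values), D)."""
    X = np.asarray(X, dtype=float)
    curve = []
    for v in values:
        X_tilde = X.copy()
        X_tilde[:, s] = v                       # all other predictors unchanged
        curve.append(predict(X_tilde).mean(axis=0))
    return np.array(curve)


def toy_predict(Z):
    """Hypothetical fitted model: a multinomial-logit map of two predictors."""
    eta = np.column_stack([np.zeros(len(Z)), Z[:, 0], -Z[:, 1]])
    e = np.exp(eta)
    return e / e.sum(axis=1, keepdims=True)


rng = np.random.default_rng(6)
X = rng.normal(size=(150, 2))
values = np.sort(rng.choice(X[:, 0], size=20, replace=False))  # subset of observed X_s
curve = ice_average_curve(toy_predict, X, s=0, values=values)
print(curve.shape)   # one row per value, one column per component
```
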
4 Simulation studies

Monte Carlo simulation studies were carried out to assess the predictive performance of the proposed \(\alpha \)-k-NN and \(\alpha \)-kernel regressions compared to KLD regression, an alternative semi-parametric approach that also allows for zeros. While KLD regression is computationally expensive, employing the Newton–Raphson algorithm (Böhning 1992) allows for efficient and feasible computation.

Multiple types of relationships between the response and predictor variables were considered, and a tenfold CV protocol was applied to each regression model to evaluate its predictive performance. A second axis of comparison was the computational cost of the regressions: using the same scenario as above, the computational efficiency of the \(\alpha \)-k-NN regression was compared to that of the KLD regression.

All computations were carried out on a laptop with Intel Core i5-5300U CPU at 2.3 GHz with 16 GB RAM and SSD installed using the R package Compositional (Tsagris et al. 2022) for all regression models.

4.1 Predictive performance

In our simulation study, the values of the one or two predictor variables (denoted by \(\textbf{x}\)) were generated from a Gaussian distribution with mean zero and unit variance and were linked to the compositional responses via two functions: a polynomial and a more complex segmented function. In both cases, the outcome was mapped onto \({\mathbb {S}}^{D-1}\) using Eq. (19).

More specifically, for the simpler polynomial case, the values of the predictor variables were raised to a power (1, 2 or 3) and then multiplied by a vector of coefficients. White noise (\(\textbf{e}_i\)) was added as follows

where \(\nu =1,2,3\) indicates the degree of the polynomial. The constant terms in the regression coefficients \(\pmb {\beta }_j,\ j=2,\ldots ,D\) were randomly generated from \(N(-3,1)\) whereas the slope coefficients were generated from N(2, 0.5).

For the segmented linear model case, one predictor variable x was set to range from \(-1\) up to 1 and the f function was defined as

where \(i=1,\ldots ,n\) and \(j=2,\ldots ,D\). The regression coefficients \(\beta _{1j}\) were randomly generated from a \(N(-1, 0.3)\) while the regression coefficients \(\beta _{2j}\), \(j=2,\ldots ,D\), were randomly generated from N(1, 0.2).

The above two scenarios were repeated with zero values added to 20% of randomly selected compositional vectors. For each selected vector, a third of its component values were set to zero and the vector was re-normalized to sum to 1. Finally, for all cases, the sample size varied from 100 to 1000 in steps of 50, while the number of components was set to \(D\in \{3, 5, 7, 10\}\). The predictive performance of the regression models was estimated using the KL divergence (18a) and the JS divergence (18b). The results were similar, so only the KL divergence results are shown. For all scenarios, the results were averaged over 100 repetitions.

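The zero-injection step described above can be sketched as below. How "a third" of the components is rounded is not stated, so this sketch assumes \(\max (1,\lfloor D/3\rfloor )\) components per selected vector; the function name and the Dirichlet-distributed placeholder data are likewise hypothetical.

```python
import numpy as np


def add_zeros(U, prop=0.2, rng=None):
    """Zero out about a third of the components in a random `prop` fraction
    of the rows of U, then re-close those rows to unit sum.

    Assumption: 'a third' is taken to mean max(1, D // 3) components."""
    rng = np.random.default_rng() if rng is None else rng
    U = np.array(U, dtype=float)                 # work on a copy
    n, D = U.shape
    rows = rng.choice(n, size=int(round(prop * n)), replace=False)
    n_zero = max(1, D // 3)
    for i in rows:
        cols = rng.choice(D, size=n_zero, replace=False)
        U[i, cols] = 0.0
    U[rows] /= U[rows].sum(axis=1, keepdims=True)
    return U, rows


rng = np.random.default_rng(7)
U = rng.dirichlet(np.ones(7), size=100)
Uz, rows = add_zeros(U, rng=rng)
print(len(rows), (Uz == 0).sum(axis=1)[rows][:5])
```
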
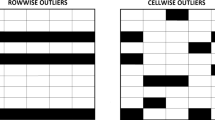

Figures 2 and 3 show the results of comparing the \(\alpha \)-k-NN and \(\alpha \)-kernel regressions with KLD regression in the absence and presence of zero values, respectively. Note that values below 1 indicate that the proposed regression models have smaller prediction errors than KLD regression. In the case of no zero values (Fig. 2), when the relationship between the predictor variable(s) and the compositional responses is linear (\(\nu =1\)), the error is slightly smaller for KLD regression than for \(\alpha \)-k-NN regression, and the difference between the \(\alpha \)-kernel and KLD regressions is smaller still. In all other cases (quadratic, cubic and segmented relationships), the \(\alpha \)-k-NN and \(\alpha \)-kernel regressions consistently produce more accurate predictions. Another characteristic observable in all plots of Fig. 2 is that the relative predictive performance of both non-parametric regressions compared to KLD regression reduces as the number of components in the compositional data increases.

The results for the case with zero values (Fig. 3) are essentially the same as in the previous case. When the relationship between the predictor variables and the compositional responses is linear, KLD regression again yields slightly more accurate predictions than the \(\alpha \)-k-NN and \(\alpha \)-kernel regressions, while the opposite is true for most other cases. Furthermore, the impact of the number of components on the relative predictive performance of both non-parametric regressions compared to KLD regression varies according to the relationship between the response and covariates, the number of predictor variables and the sample size.

Fig. 2 No zero values present case scenario. Ratio of the Kullback–Leibler divergences between the \(\alpha \)-k-NN and the KLD regression and between the \(\alpha \)-kernel and the KLD regression. Different colors correspond to different numbers of components (D). Values less than 1 indicate that the \(\alpha \)-k-NN or the \(\alpha \)-kernel regression has a smaller prediction error than the KLD regression. a and e: the degree of the polynomial in (20) is \(\nu =1\); b and f: the degree of the polynomial in (20) is \(\nu =2\); c and g: the degree of the polynomial in (20) is \(\nu =3\); d and h: the segmented linear relationship case (21)

Fig. 3 Zero values present case scenario. Ratio of the Kullback–Leibler divergences between the \(\alpha \)-k-NN and the KLD regression and between the \(\alpha \)-kernel and the KLD regression. Different colors correspond to different numbers of components (D). Values less than 1 indicate that the \(\alpha \)-k-NN or the \(\alpha \)-kernel regression has a smaller prediction error than the KLD regression. a and e: the degree of the polynomial in (20) is \(\nu =1\); b and f: the degree of the polynomial in (20) is \(\nu =2\); c and g: the degree of the polynomial in (20) is \(\nu =3\); d and h: the segmented linear relationship case (21)

4.2 Computational efficiency of the \(\alpha \)-k-NN regression

The linear relationship scenario (that is, when the degree of the polynomial in Eq. (20) is \(\nu =1\)) without zero values was used to illustrate the computational efficiency of the \(\alpha \)-k-NN regression only. Unlike the \(\alpha \)-kernel regression, which uses all training observations to compute each predicted value, the \(\alpha \)-k-NN regression uses only the k nearest neighbours. The \(\alpha \)-kernel regression is therefore computationally slower.
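The difference between the two predictors can be sketched in a few lines. This is a minimal Python illustration, not the authors' R implementation. It assumes that both predictions are weighted \(\alpha \)-Fréchet means of the training compositions, \(\mathcal {C}\{[\sum _i w_i\,\mathcal {C}(y_i^{\alpha })/\sum _i w_i]^{1/\alpha }\}\) with \(\mathcal {C}\) the closure, using equal weights on the k nearest neighbours for \(\alpha \)-k-NN and Gaussian kernel weights with bandwidth h on all points for \(\alpha \)-kernel. All function names are hypothetical.

```python
import math

def closure(x):
    s = sum(x)
    return [v / s for v in x]

def alpha_frechet_mean(Ys, weights, alpha):
    """Weighted alpha-Frechet mean (alpha > 0):
    C{ [ sum_i w_i C(y_i^alpha) / sum_i w_i ]^(1/alpha) }.
    Zeros are fine because 0**alpha == 0 for alpha > 0."""
    acc = [0.0] * len(Ys[0])
    for y, w in zip(Ys, weights):
        z = closure([v ** alpha for v in y])
        acc = [a + w * zj for a, zj in zip(acc, z)]
    wsum = sum(weights)
    return closure([(a / wsum) ** (1.0 / alpha) for a in acc])

def sq_dist(a, b):
    return sum((u - v) ** 2 for u, v in zip(a, b))

def alpha_knn_predict(X, Y, x_new, k, alpha):
    """Equal weights on the k nearest training points; all others ignored.
    (A full sort is used here for brevity.)"""
    nearest = sorted(range(len(X)), key=lambda i: sq_dist(X[i], x_new))[:k]
    return alpha_frechet_mean([Y[i] for i in nearest], [1.0] * k, alpha)

def alpha_kernel_predict(X, Y, x_new, h, alpha):
    """Gaussian weights on ALL training points (Nadaraya-Watson style)."""
    w = [math.exp(-sq_dist(xi, x_new) / (2.0 * h * h)) for xi in X]
    return alpha_frechet_mean(Y, w, alpha)

# Toy data: one predictor, 3-part compositional responses (one with a zero).
X = [[0.0], [1.0], [2.0], [3.0]]
Y = [[0.6, 0.4, 0.0], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5], [0.1, 0.2, 0.7]]
p_knn = alpha_knn_predict(X, Y, [0.4], k=2, alpha=1.0)   # mean of rows 0 and 1
p_ker = alpha_kernel_predict(X, Y, [0.4], h=1.0, alpha=0.5)
```

With \(\alpha =1\) the Fréchet mean reduces to the arithmetic mean of the neighbours, which makes the first call easy to check by hand. Because both predictions are closures of non-negative vectors, they stay inside the simplex.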

With massive data (tens or hundreds of thousands of observations), the search for the k nearest neighbours in the \(\alpha \)-k-NN regression uses kd-trees, as implemented in the R package RANN (Arya et al. 2019). An important advantage of a kd-tree is that it can be built in \(O(n\log {n})\) time, where n is the sample size of the training data set, after which each nearest-neighbour query typically requires far fewer than n distance computations. The RANN package uses the Approximate Near Neighbor (ANN) C++ library, which can return either exact near neighbours or (as the name suggests) approximate near neighbours within a specified error bound; when the error bound is 0 (as in this case), an exact nearest-neighbour search is performed. When the sample size is of the order of a few tens of thousands or less, kd-trees are slower, so the \(\alpha \)-k-NN regression algorithm instead uses a C++ function from the R package Rfast (Papadakis et al. 2022) to search for the k nearest neighbours. In either case, the implementation of the \(\alpha \)-k-NN regression algorithm has low memory requirements.
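To make the kd-tree idea concrete, here is a minimal exact k-nearest-neighbour search in pure Python. It is a teaching sketch, not the ANN library used by RANN: nodes split at the median along cycling axes, and a subtree is skipped whenever its splitting plane is farther away than the current k-th best distance. Function names are hypothetical.

```python
import heapq
import random

def build_kdtree(points, idx=None, depth=0):
    """Node = (index, split axis, left, right); split at the median along
    cycling axes. Sorting at every level costs O(n log^2 n) overall; a
    linear-time median selection would give the O(n log n) bound."""
    if idx is None:
        idx = list(range(len(points)))
    if not idx:
        return None
    axis = depth % len(points[0])
    idx = sorted(idx, key=lambda i: points[i][axis])
    mid = len(idx) // 2
    return (idx[mid], axis,
            build_kdtree(points, idx[:mid], depth + 1),
            build_kdtree(points, idx[mid + 1:], depth + 1))

def knn_query(tree, points, q, k):
    """Exact k nearest neighbours of q under squared Euclidean distance."""
    heap = []  # max-heap of the best k so far, stored as (-dist2, index)

    def visit(node):
        if node is None:
            return
        i, axis, left, right = node
        d2 = sum((a - b) ** 2 for a, b in zip(points[i], q))
        if len(heap) < k:
            heapq.heappush(heap, (-d2, i))
        elif d2 < -heap[0][0]:
            heapq.heapreplace(heap, (-d2, i))
        diff = q[axis] - points[i][axis]
        near, far = (left, right) if diff < 0 else (right, left)
        visit(near)
        # The far side can only help if the splitting plane is closer than
        # the current k-th best distance (or fewer than k points are held).
        if len(heap) < k or diff * diff < -heap[0][0]:
            visit(far)

    visit(tree)
    return [i for _, i in sorted((-nd, i) for nd, i in heap)]

# Usage: compare against a brute-force search on random 2-D predictors.
rng = random.Random(42)
pts = [(rng.random(), rng.random()) for _ in range(2000)]
tree = build_kdtree(pts)
q = (0.5, 0.5)
nn = knn_query(tree, pts, q, k=5)
brute = sorted(range(len(pts)),
               key=lambda i: sum((a - b) ** 2 for a, b in zip(pts[i], q)))[:5]
```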

The KLD regression has been implemented efficiently in R using the Newton–Raphson algorithm, yet, as shown below, it is not as computationally efficient as the \(\alpha \)-k-NN regression.

In this study, the number of components of the compositional data and the number of predictor variables were kept the same as before, but the sample sizes, the values of \(\alpha \) and the number of neighbours were modified. Specifically, large sample sizes ranging from 500,000 up to 10,000,000 in steps of 500,000 were generated. Eleven non-negative values of \(\alpha \) (\(0, 0.1,\ldots , 1\)) were used, and a long sequence of neighbours (from \(k=2\) up to \(k=100\)) was considered. The computational efficiency of each regression was measured as the time required to predict the compositional responses for 1000 new observations.

The average time (in seconds) over 10 repetitions is presented against the sample size for each regression in Tables 1 and 2. The \(\alpha \)-k-NN regression scales better than KLD regression, and the difference in computational cost increases as the sample size grows. Furthermore, the ratio of the computational cost of \(\alpha \)-k-NN regression to that of OLS decreases with the sample size and with the number of components, whereas the ratio of the computational cost of KLD regression to that of OLS appears to increase with the number of components. To appreciate the computational burden, note that the \(\alpha \)-k-NN regression produced \(11 \times 99 = 1089\) sets of predicted compositional data (one for each combination of \(\alpha \) and k), whereas KLD regression produced a single set of predicted compositional data. The same holds for OLS regression, whose computation time for the same task is also reported.
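One way to obtain all \(11 \times 99\) predictions cheaply is to search once for the \(k_{\max }=100\) nearest neighbours of each new point and then reuse running sums across k. This is offered as an assumption for illustration, not a description of how the Compositional package is implemented. The \(\alpha =0\) case uses the closed geometric mean, which is the \(\alpha \rightarrow 0\) limit of the \(\alpha \)-Fréchet mean and needs strictly positive data. Function names are hypothetical.

```python
import math
import random

def closure(x):
    s = sum(x)
    return [v / s for v in x]

def grid_predictions(X, Y, x_new, alphas, k_max):
    """Predictions for every (alpha, k), k = 2..k_max, from ONE neighbour search.
    alpha == 0 uses the closed geometric mean (the alpha -> 0 limit) and needs
    strictly positive compositions."""
    d2 = [sum((a - b) ** 2 for a, b in zip(xi, x_new)) for xi in X]
    nbrs = sorted(range(len(X)), key=d2.__getitem__)[:k_max]   # single search
    preds = {}
    for alpha in alphas:
        running = [0.0] * len(Y[0])          # running sum over the first k neighbours
        for k, i in enumerate(nbrs, start=1):
            if alpha == 0:
                z = [math.log(v) for v in Y[i]]
            else:
                z = closure([v ** alpha for v in Y[i]])
            running = [r + zj for r, zj in zip(running, z)]
            if k >= 2:
                mean = [r / k for r in running]
                if alpha == 0:
                    preds[(alpha, k)] = closure([math.exp(m) for m in mean])
                else:
                    preds[(alpha, k)] = closure([m ** (1 / alpha) for m in mean])
    return preds

# Usage: 500 training points without zeros, one new predictor value.
gen = random.Random(3)
X = [[gen.uniform(0, 1)] for _ in range(500)]
Y = [closure([gen.random() + 0.05 for _ in range(3)]) for _ in range(500)]
alphas = [j / 10 for j in range(11)]        # 0, 0.1, ..., 1
preds = grid_predictions(X, Y, [0.5], alphas, k_max=100)
```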

5 Examples with real data

5.1 Small sample data sets

To assess the predictive performance of \(\alpha \)-k-NN and \(\alpha \)-kernel regression in practice, 4 publicly available small sample data sets, each containing at least one zero value in the compositional responses, were used as examples. The predictive performance of the proposed non-parametric regression models was compared to that of the KLD regression model; the zeros in the data sets preclude the use of the alr or ilr regression models. The tenfold CV protocol of Sect. 3.5 was repeated using the 4 real data sets, which are described briefly below. The names of the data sets are consistent with those previously used in the literature. A summary of the characteristics of the data sets, including the dimension of the response matrix, the number of compositional response vectors containing at least one zero value and the number of predictor variables, is provided in Table 3.

- Glacial: In a pebble analysis of glacial tills, the percentages by weight of pebbles sorted into 4 categories (red sandstone, gray sandstone, crystalline and miscellaneous) were recorded for 92 observations. The glaciologist was interested in predicting the compositions based on the total pebble counts. The data set is available in the R package compositions (van den Boogaart et al. 2018), and almost half of the observations (42 out of 92) contain at least one zero value.

- Gemas: This data set contains 2083 compositional vectors giving the concentrations of 22 chemical elements (in mg/kg). The data set is available in the R package robCompositions (Templ et al. 2011) with 2108 vectors, but 25 vectors had missing values and were excluded from the current analysis. Only one vector contained a zero value (a single one). The predictor variables are the annual mean temperature and the annual mean precipitation.

- Data: In this data set, the compositional response is a matrix of 9 party vote-shares across 89 different democracies (countries), and the (only) predictor variable is the average number of electoral districts in each country. The data set is available in the R package compositions (Rozenas 2015), and 80 out of the 89 vectors contain at least one zero value.

- Elections: The Elections data set contains information on the 2000 U.S. presidential election in the 67 counties of Florida. The number of votes each of the 10 candidates received was transformed into proportions. For each county, 8 predictor variables were available, such as population, percentage of the population over 65 years old, mean personal income and percentage of people who graduated from college prior to 1990, among others. The data set is available in Smith (2002), and 23 out of the 67 vectors contain at least one zero value.

5.1.1 Selected hyper-parameters

Table 3 presents the most frequently selected values of \(\alpha \) and k. It is worth mentioning that the value \(\alpha =0\) was never selected for any data set, indicating that the ilr transformation in Eq. (6) was never considered to be the optimal transformation. When a value of \(\alpha \) is selected a large percentage of the time, the variance of the chosen value of the parameter is small. From Table 3, it appears that, roughly, the larger the optimal value of \(\alpha \), the smaller its variance. There does not appear to be an association between the variability in the chosen value of k and the variability in the optimal value of \(\alpha \) for the \(\alpha \)-k-NN regression. For the data set Gemas, the choice of \(\alpha \) was highly variable, whereas the choice of k was always the same. The opposite was true for the data set Data, for which the optimal value of \(\alpha \) was always the same but the value of k was highly variable.
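The relationship between \(\alpha \) and the ilr transformation can be checked numerically. The \(\alpha \)-transformation is not defined in this section, so this sketch assumes the form of Tsagris et al. (2011), \(z_{\alpha }(x)=\alpha ^{-1}H\{D\,\mathcal {C}(x^{\alpha })-\mathbf {1}_D\}\), where \(H\) is the \((D-1)\times D\) Helmert sub-matrix and \(\mathcal {C}\) is the closure. With this basis, the \(\alpha \rightarrow 0\) limit is the ilr transformation \(H\,\mathrm {clr}(x)\). Function names are hypothetical.

```python
import math

def helmert_sub(D):
    """(D-1) x D Helmert sub-matrix: orthonormal rows, each orthogonal to 1_D."""
    H = []
    for i in range(1, D):
        c = 1.0 / math.sqrt(i * (i + 1))
        H.append([c] * i + [-i * c] + [0.0] * (D - i - 1))
    return H

def matvec(H, v):
    return [sum(h * x for h, x in zip(row, v)) for row in H]

def alpha_transform(x, alpha):
    """alpha > 0: H (D * C(x^alpha) - 1) / alpha, defined even with zeros.
    alpha == 0: the ilr limit H * clr(x), which needs strictly positive x."""
    D = len(x)
    if alpha == 0:
        logs = [math.log(v) for v in x]
        m = sum(logs) / D
        return matvec(helmert_sub(D), [l - m for l in logs])
    p = [v ** alpha for v in x]
    s = sum(p)
    return matvec(helmert_sub(D), [(D * pj / s - 1.0) / alpha for pj in p])

x = [0.2, 0.3, 0.5]
ilr = alpha_transform(x, 0)
near_zero = alpha_transform(x, 1e-6)          # should be close to the ilr
with_zero = alpha_transform([0.5, 0.5, 0.0], 0.5)
```

The last call shows why strictly positive \(\alpha \) matters for these data sets: the log-ratio branch (\(\alpha =0\)) would fail on a zero component, whereas the power branch does not.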

5.1.2 Predictive performance

Figure 4 presents boxplots of the relative performance (computed via CV and the KL divergence) of \(\alpha \)-k-NN and \(\alpha \)-kernel regression compared to KLD regression for each data set. As before, values below 1 indicate that the proposed regression algorithm has a smaller prediction error than KLD regression. On average, \(\alpha \)-k-NN regression outperformed KLD regression for the data sets Gemas and Elections, whereas the opposite was true for the data sets Glacial and Data. The conclusions are similar for \(\alpha \)-kernel regression, which had a smaller prediction error (on average) for 2 of the 4 data sets but exhibited noticeably less variability.

For the Glacial data set, Fig. 5 provides a plot of the observed and predicted (fitted) values for the four components of the response data, allowing for a visual comparison of the three competing models.

5.1.3 Effect of predictors on response compositions

For the \(\alpha \)-k-NN and the \(\alpha \)-kernel regressions, the effect of the predictor variables on the response data can be explored through ICE plots, while for KLD regression, it is possible to compute estimates of coefficients and their standard errors.
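ICE curves are simple to compute for any fitted predictor: for each observation, one predictor is varied over a grid while that observation's other predictors are held fixed, and the prediction is recorded at every grid value. The sketch below follows that definition (Goldstein et al. 2015). The `toy_predict` function is a hypothetical stand-in for a fitted \(\alpha \)-k-NN or \(\alpha \)-kernel model, and plotting is omitted. Because each prediction is a composition, one ICE plot per component is obtained.

```python
def closure(x):
    s = sum(x)
    return [v / s for v in x]

def ice_curves(X, predict, j, grid):
    """One curve per observation: vary predictor j over `grid`, keep the
    observation's other predictors fixed, and record the predicted composition."""
    curves = []
    for x in X:
        curve = []
        for g in grid:
            x_mod = list(x)
            x_mod[j] = g
            curve.append(predict(x_mod))
        curves.append(curve)
    return curves

def toy_predict(x):
    # Hypothetical stand-in for a fitted model mapping predictors to a composition.
    return closure([1.0, 1.0 + x[0], 1.0 + x[1]])

X = [[0.0, 0.5], [1.0, 2.0], [2.0, 0.1]]
grid = [0.0, 1.0, 2.0, 3.0]
curves = ice_curves(X, toy_predict, 0, grid)
second = [[p[1] for p in c] for c in curves]   # component 2 along each curve
```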

Figure 6 shows the ICE plots of the \(\alpha \)-k-NN and \(\alpha \)-kernel regressions for the Glacial data set, depicting the effect of the predictor variable on the compositional responses. ICE plots for the other three data sets may be found in the supplemental material. The values of \(\alpha \), k and h were selected by the tenfold CV protocol. The two plots are similar and suggest that, for smaller values of the predictor variable, its impact on the components is small. For larger values, however, as Count increases, red sandstone decreases and grey sandstone increases, whereas crystalline and miscellaneous appear to be unaffected.

Table 4 contains the regression coefficients of the KLD regression model for the Glacial data set. The corresponding standard errors were computed using a non-parametric bootstrap with 1000 replicates. None of the t-ratios is statistically significant. Similar tables for the other data sets are provided in the supplemental material.
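The non-parametric bootstrap behind the standard errors in Table 4 can be sketched generically. Rows are resampled with replacement, the estimator is refitted, and the standard deviation of the replicate coefficients is taken, with t-ratios given by estimate divided by standard error. KLD regression itself is not reproduced here: a simple least-squares line (`ols_line`, hypothetical) on simulated data stands in for it.

```python
import random
import statistics

def bootstrap_se(data, estimator, B=1000, seed=0):
    """Non-parametric bootstrap: resample rows with replacement B times,
    refit, and return the SD of the replicate estimates (one per coefficient)."""
    rng = random.Random(seed)
    n = len(data)
    reps = [estimator([data[rng.randrange(n)] for _ in range(n)]) for _ in range(B)]
    return [statistics.stdev(col) for col in zip(*reps)]

def ols_line(sample):
    """Hypothetical stand-in estimator: intercept and slope of y on x."""
    xs = [x for x, _ in sample]
    ys = [y for _, y in sample]
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return [my - slope * mx, slope]

# Simulated data: y = 1 + 2x + noise.
gen = random.Random(7)
data = []
for _ in range(200):
    x = gen.uniform(0, 1)
    data.append((x, 1.0 + 2.0 * x + gen.gauss(0, 0.1)))

est = ols_line(data)
se = bootstrap_se(data, ols_line)               # B = 1000 replicates
t_ratios = [b / s for b, s in zip(est, se)]
```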

5.2 Large sample data sets

A benefit of \(\alpha \)-k-NN regression is its high computational efficiency, so we also illustrate its performance on two real large-scale data sets, containing hundreds of thousands and over a million observations, respectively. The \(\alpha \)-kernel regression was not examined due to its high memory requirements.

- Seoul pollution: Air pollution measurements in Seoul, South Korea, are provided by the Seoul Metropolitan Government ‘Open Data Plaza’. This particular data set was downloaded from Kaggle. The average values of 4 pollutants (SO\(_2\), NO\(_2\), CO, O\(_3\)) are available, along with the coordinates (latitude and longitude) of each site. The values were normalized to sum to 1 to obtain the relative composition of the pollutants. In total, there are 639,073 observations. Since the predictors, longitude and latitude, are spherical (angular) coordinates, they were first transformed to Euclidean coordinates using the R package Directional (Tsagris et al. 2022), so that Euclidean distances could be validly computed.

- Electric power consumption: This data set contains 1,454,154 measurements of electric power consumption in one household, with a one-minute sampling rate, over a period of almost 4 years. The measurements were gathered in a house located in Sceaux (7 km from Paris, France) between December 2006 and November 2010 (47 months). Different electrical quantities and some sub-metering values are available. The data set can be downloaded from the UCI Machine Learning Repository. The response data comprise 3 energy sub-meterings measured in watt-hours of active energy: (a) the kitchen, containing mainly a dishwasher, an oven and a microwave (hot plates are not electric but gas powered), (b) the laundry room, containing a washing machine, a tumble drier, a refrigerator and a light, and (c) the electric water heater and the air conditioner. These values were again normalized to sum to 1 to obtain compositional data. There are 4 predictor variables, namely the global active power (in kW), the global reactive power (in kW), the voltage (in volt) and the global intensity (in ampere).

5.2.1 Predictive performance

The same tenfold CV protocol was employed again. Since both compositional data sets contained zero values, only the KLD and \(\alpha \)-k-NN regression methods were suitable, and only strictly positive values of \(\alpha \) were used. For the number of nearest neighbours, 99 values were tested (\(k=2,\ldots ,100\)). The results of the \(\alpha \)-k-NN and KLD regressions are summarized in Table 5.
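The selection of \(\alpha \) and k by tenfold CV can be sketched as a grid search that minimizes the mean KL divergence of held-out predictions. This is a minimal illustration under stated assumptions, not the protocol of Sect. 3.5 reproduced exactly: the fold assignment, the \(\alpha \)-Fréchet-mean predictor, the KL form and the small grids are all assumptions. The simulated data with zeros are for illustration only, and function names are hypothetical.

```python
import math
import random

def closure(x):
    s = sum(x)
    return [v / s for v in x]

def knn_predict(Xtr, Ytr, x, k, alpha):
    """alpha-k-NN prediction (alpha > 0) via the alpha-Frechet mean."""
    nearest = sorted(range(len(Xtr)),
                     key=lambda i: sum((a - b) ** 2 for a, b in zip(Xtr[i], x)))[:k]
    acc = [0.0] * len(Ytr[0])
    for i in nearest:
        z = closure([v ** alpha for v in Ytr[i]])
        acc = [s + zj for s, zj in zip(acc, z)]
    return closure([(s / k) ** (1 / alpha) for s in acc])

def kl(y, yh):
    """sum_j y_j log(y_j / yhat_j), with 0 log 0 = 0; inf if yhat_j = 0 < y_j."""
    total = 0.0
    for a, b in zip(y, yh):
        if a > 0:
            total += math.inf if b == 0 else a * math.log(a / b)
    return total

def tenfold_cv(X, Y, alphas, ks, seed=0):
    """Return the (alpha, k) with the smallest total held-out KL, and its mean."""
    rng = random.Random(seed)
    order = list(range(len(X)))
    rng.shuffle(order)
    folds = [order[f::10] for f in range(10)]
    err = {(a, k): 0.0 for a in alphas for k in ks}
    for fold in folds:
        held_out = set(fold)
        train = [i for i in order if i not in held_out]
        Xtr, Ytr = [X[i] for i in train], [Y[i] for i in train]
        for a in alphas:
            for k in ks:
                err[(a, k)] += sum(kl(Y[i], knn_predict(Xtr, Ytr, X[i], k, a))
                                   for i in fold)
    best = min(err, key=err.get)
    return best, err[best] / len(X)

# Simulated non-linear data; 20 rows get a zero in the first component.
rng = random.Random(1)
X = [[rng.uniform(0, 3)] for _ in range(100)]
Y = [closure([math.exp(math.sin(2 * x[0])), math.exp(0.5 * x[0]), 1.0 + rng.random()])
     for x in X]
for i in rng.sample(range(100), 20):
    Y[i] = closure([0.0] + Y[i][1:])
alphas, ks = [0.25, 0.5, 1.0], [2, 5, 10]
best, score = tenfold_cv(X, Y, alphas, ks)
```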

These two large-scale data sets suggest that, as with the small-scale data sets, \(\alpha \)-k-NN is a viable alternative regression model for compositional data, with the advantage of being at least as computationally efficient as other regression models.

6 Conclusions

Two generic regressions able to capture complex relationships involving compositional data, termed \(\alpha \)-k-NN regression and \(\alpha \)-kernel regression, were proposed; both take into account the constraints on such data. Through simulation studies and the analysis of several real-life data sets, the \(\alpha \)-k-NN and \(\alpha \)-kernel regressions were evaluated alongside a comparable, but semi-parametric, regression model available for this setting. The classical k-NN regression provided the foundation for \(\alpha \)-k-NN regression, while the \(\alpha \)-transformation was used to transform the compositional data. Using this transformation added to the flexibility of the model and allowed the commonly occurring zero values in compositional data, unlike many other regression approaches for compositional data. The flexibility was further increased by introducing a weighting scheme computed via a Gaussian kernel. The Fréchet mean (defined for compositional data by Tsagris et al. (2011)) was used to prevent fitted values from falling outside the simplex.

Using a CV procedure and pertinent measures of predictive performance, we found that in the simulation scenarios where the relationship was non-linear (including when the data contained zeros), the \(\alpha \)-k-NN and \(\alpha \)-kernel regressions outperformed (sometimes substantially) their competitor, KLD regression. Similar conclusions were reached for the real-life data sets: our two non-parametric regressions tended to outperform KLD regression on data sets where non-linear relationships are thought to exist. Our conclusions were the same regardless of which divergence (KL or JS) was used. A second advantage, specific to \(\alpha \)-k-NN regression, is its high computational efficiency: it can handle millions of observations in just a few seconds. The new regression techniques are publicly available in the R package Compositional (Tsagris et al. 2022).

A disadvantage of \(\alpha \)-k-NN regression, and of k-NN regression in general, is that it lacks a framework for classical statistical inference (such as hypothesis testing). This is counterbalanced by (a) its higher predictive performance compared to parametric models and (b) its high computational efficiency, which makes it applicable even to millions of observations. Moreover, ICE plots (Goldstein et al. 2015), which allow a visual inspection of the effect of each independent variable, can partly overcome this issue. Note that, while not considered in detail here, \(\alpha \)-regression (Tsagris 2015b) can also handle zeros but is computationally expensive (see Note 5).

With respect to possible future directions related to this work, one area is non-linear PCA, such as kernel PCA (Mika et al. 1999). However, a disadvantage of kernel methods is their computational cost, which grows rapidly with sample size: the \(n\times n\) kernel matrix requires \(O(n^2)\) memory and its eigendecomposition \(O(n^3)\) operations. Additionally, variations and improvements of k-NN regression, such as those in Nguyen et al. (2016), could be explored and applied to \(\alpha \)-k-NN regression. Alternatively, the proposed regression models could be generalized towards random forests (Breiman 2001), given the relationship of the latter with k-NN (Lin and Jeon 2006), and potentially to other machine learning techniques.

Instead of the \(\alpha \)-transformation, one could use alternative transformations for compositional data and derive additional transformation-based k-NN regression estimators. The natural question is which one would perform best, and under what circumstances. Further, the rationale of \(\alpha \)-k-NN regression could be transferred to Euclidean data by employing the Box–Cox transformation instead.

Projection pursuit (Friedman and Stuetzle 1981) is another non-linear alternative to \(\alpha \)-k-NN regression, which also requires tuning a parameter, but it is currently applicable only to compositional data without zero values. While zero value imputation (Martín-Fernández et al. 2003, 2012) could resolve this problem, it could also lead to less accurate estimates, as was found by Tsagris (2015a).

Notes

1. For a substantial number of specific examples of applications involving compositional data, see Tsagris and Stewart (2020).

2. The scaling factor D exists to assist in the convergence.

3. For more asymptotic results, see the references cited within Jiang (2019).

4. These are selected via the tenfold CV protocol described earlier.

5. A numerical optimization using the Nelder–Mead algorithm (Nelder and Mead 1965) is applied using the command optim in R.

References

Aitchison, J.: The statistical analysis of compositional data. J. R. Stat. Soc. Ser. B 44(2), 139–177 (1982)

Aitchison, J.: Principal component analysis of compositional data. Biometrika 70(1), 57–65 (1983)

Aitchison, J.: Measures of location of compositional data sets. Math. Geol. 21(7), 787–790 (1989)

Aitchison, J.: The statistical analysis of compositional data. Blackburn Press, New Jersey (2003)

Arya, S., Mount, D., Kemp, S., Jefferis, G.: RANN: Fast Nearest Neighbour Search (Wraps ANN Library) Using L2 Metric. R package version 2.6.1 (2019)

Böhning, D.: Multinomial logistic regression algorithm. Ann. Inst. Stat. Math. 44(1), 197–200 (1992)

Breiman, L.: Random forests. Mach. Learn. 45(1), 5–32 (2001)

Chen, E.Z., Li, H.: A two-part mixed-effects model for analyzing longitudinal microbiome compositional data. Bioinformatics 32(17), 2611–2617 (2016)

Cheng, P.E.: Strong consistency of nearest neighbor regression function estimators. J. Multivar. Anal. 15(1), 63–72 (1984)

Cleveland, W.S.: Robust locally weighted regression and smoothing scatterplots. J. Am. Stat. Assoc. 74(368), 829–836 (1979)

Di Marzio, M., Panzera, A., Venieri, C.: Non-parametric regression for compositional data. Stat. Model. 15(2), 113–133 (2015)

Dryden, I., Mardia, K.: Statistical shape analysis. Wiley (1998)

Egozcue, J., Pawlowsky-Glahn, V., Mateu-Figueras, G., Barceló-Vidal, C.: Isometric logratio transformations for compositional data analysis. Math. Geol. 35(3), 279–300 (2003)

Egozcue, J.J., Daunis-I-Estadella, J., Pawlowsky-Glahn, V., Hron, K., Filzmoser, P.: Simplicial regression. The normal model. J. Appl. Probab. Stat. 6(182), 87–108 (2012)

Friedman, J.H., Stuetzle, W.: Projection pursuit regression. J. Am. Stat. Assoc. 76(376), 817–823 (1981)

Goldstein, A., Kapelner, A., Bleich, J., Pitkin, E.: Peeking inside the black box: visualizing statistical learning with plots of individual conditional expectation. J. Comput. Graph. Stat. 24(1), 44–65 (2015)

Gueorguieva, R., Rosenheck, R., Zelterman, D.: Dirichlet component regression and its applications to psychiatric data. Comput. Stat. Data Anal. 52(12), 5344–5355 (2008)

Hijazi, R., Jernigan, R.: Modelling compositional data using Dirichlet regression models. J. Appl. Probab. Stat. 4(1), 77–91 (2009)

Iyengar, M., Dey, D.K.: A semiparametric model for compositional data analysis in presence of covariates on the simplex. Test 11(2), 303–315 (2002)

Jiang, H.: Non-asymptotic uniform rates of consistency for k-NN regression. In: Proceedings of the AAAI Conference on Artificial Intelligence 33, 3999–4006 (2019)

Katz, J., King, G.: A statistical model for multiparty electoral data. Am. Polit. Sci. Rev. 93(1), 15–32 (1999)

Kendall, W.S., Le, H.: Limit theorems for empirical Fréchet means of independent and non-identically distributed manifold-valued random variables. Braz. J. Probab. Stat. 25(3), 323–352 (2011)

Lancaster, H.: The Helmert matrices. Am. Math. Mon. 72(1), 4–12 (1965)

Le, H., Small, C.: Multidimensional scaling of simplex shapes. Pattern Recognit. 32(9), 1601–1613 (1999)

Leininger, T.J., Gelfand, A.E., Allen, J.M., Silander, J.A., Jr.: Spatial regression modeling for compositional data with many zeros. J. Agric. Biol. Environ. Stat. 18(3), 314–334 (2013)

Lian, H., et al.: Convergence of functional k-nearest neighbor regression estimate with functional responses. Electron. J. Stat. 5, 31–40 (2011)

Lin, Y., Jeon, Y.: Random forests and adaptive nearest neighbors. J. Am. Stat. Assoc. 101(474), 578–590 (2006)

Martín-Fernández, J., Hron, K., Templ, M., Filzmoser, P., Palarea-Albaladejo, J.: Model-based replacement of rounded zeros in compositional data: Classical and robust approaches. Comput. Stat. Data Anal. 56(9), 2688–2704 (2012)

Martín-Fernández, J.A., Barceló-Vidal, C., Pawlowsky-Glahn, V.: Dealing with zeros and missing values in compositional data sets using nonparametric imputation. Math. Geol. 35(3), 253–278 (2003)

Melo, T.F., Vasconcellos, K.L., Lemonte, A.J.: Some restriction tests in a new class of regression models for proportions. Comput. Stat. Data Anal. 53(12), 3972–3979 (2009)

Mika, S., Schölkopf, B., Smola, A.J., Müller, K.-R., Scholz, M., Rätsch, G.: Kernel PCA and de-noising in feature spaces. In: Advances in Neural Information Processing Systems, pp. 536–542 (1999)

Morais, J., Thomas-Agnan, C., Simioni, M.: Using compositional and Dirichlet models for market share regression. J. Appl. Stat. 45(9), 1670–1689 (2018)

Mullahy, J.: Multivariate fractional regression estimation of econometric share models. J. Econ. Methods 4(1), 71–100 (2015)

Murteira, J.M.R., Ramalho, J.J.S.: Regression analysis of multivariate fractional data. Econ. Rev. 35(4), 515–552 (2016)

Nadaraya, E.A.: On estimating regression. Theory Probab. Appl. 9(1), 141–142 (1964)

Nelder, J., Mead, R.: A simplex algorithm for function minimization. Comput. J. 7(4), 308–313 (1965)

Nguyen, B., Morell, C., De Baets, B.: Large-scale distance metric learning for k-nearest neighbors regression. Neurocomputing 214, 805–814 (2016)

Otero, N., Tolosana-Delgado, R., Soler, A., Pawlowsky-Glahn, V., Canals, A.: Relative vs. absolute statistical analysis of compositions: a comparative study of surface waters of a mediterranean river. Water Res. 39(7), 1404–1414 (2005)

Pantazis, Y., Tsagris, M., Wood, A.T.: Gaussian asymptotic limits for the \(\alpha \)-transformation in the analysis of compositional data. Sankhya A 81(1), 63–82 (2019)

Papadakis, M., Tsagris, M., Dimitriadis, M., Fafalios, S., Tsamardinos, I., Fasiolo, M., Borboudakis, G., Burkardt, J., Zou, C., Lakiotaki, C., Chatzipantsiou, C.: Rfast: a collection of efficient and extremely fast R functions. R package version 2, 6 (2022)

Pawlowsky-Glahn, V., Egozcue, J.J.: Geometric approach to statistical analysis on the simplex. Stoch. Environ. Res. Risk Assess. 15(5), 384–398 (2001)

Pennec, X.: Probabilities and statistics on Riemannian manifolds: Basic tools for geometric measurements. In: IEEE Workshop on Nonlinear Signal and Image Processing, vol. 4. Citeseer (1999)

Rozenas, A.: Composition: regression for rank-indexed compositional data. R package version 1, 1 (2015)

Scealy, J., Welsh, A.: Regression for compositional data by using distributions defined on the hypersphere. J. R. Stat. Soc. Ser. B 73(3), 351–375 (2011)

Scealy, J., Welsh, A.: Colours and cocktails: compositional data analysis 2013 Lancaster lecture. Aust. N. Z. J. Stat. 56(2), 145–169 (2014)

Shi, P., Zhang, A., Li, H.: Regression analysis for microbiome compositional data. Ann. Appl. Stat. 10(2), 1019–1040 (2016)

Smith, R.L.: A statistical assessment of Buchanan’s vote in Palm Beach county. Stat. Sci. 17(4), 441–457 (2002)

Templ, M., Hron, K., Filzmoser, P.: robCompositions: an R-package for robust statistical analysis of compositional data. Wiley (2011)

Tolosana-Delgado, R., von Eynatten, H.: Grain-size control on petrographic composition of sediments: compositional regression and rounded zeros. Math. Geosci. 41(8), 869 (2009)

Tsagris, M.: A novel, divergence based, regression for compositional data. In: Proceedings of the 28th Panhellenic Statistics Conference, April 15–18, Athens, Greece (2015a)

Tsagris, M.: Regression analysis with compositional data containing zero values. Chilean J. Stat. 6(2), 47–57 (2015b)

Tsagris, M., Athineou, G., Alenazi, A., Adam, C.: Compositional: compositional data analysis. R package version 5, 8 (2022)

Tsagris, M., Athineou, G., Sajib, A., Amson, E., Waldstein, M., Adam, C.: Directional: directional statistics. R package version 5, 5 (2022)

Tsagris, M., Preston, S., Wood, A.: A data-based power transformation for compositional data. In: Proceedings of the 4th Compositional Data Analysis Workshop, Girona, Spain (2011)

Tsagris, M., Preston, S., Wood, A.T.: Improved classification for compositional data using the \(\alpha \)-transformation. J. Classif. 33(2), 243–261 (2016)

Tsagris, M., Stewart, C.: A Dirichlet regression model for compositional data with zeros. Lobachevskii J. Math. 39(3), 398–412 (2018)

Tsagris, M., Stewart, C.: A folded model for compositional data analysis. Aust. N. Z. J. Stat. 62(2), 249–277 (2020)

Tsagris, M., Stewart, C.: A review of flexible transformations for modeling compositional data, pp. 225–234. Springer, Cham (2022)

van den Boogaart, K., Tolosana-Delgado, R., Bren, M.: Compositions: compositional data analysis. R package version 1.40-2 (2018)

Wand, M.P., Jones, M.C.: Kernel smoothing. Chapman and Hall/CRC (1994)

Watson, G.S.: Smooth regression analysis. Sankhya Indian J. Stat. Ser. A 26(4), 359–372 (1964)

Xia, F., Chen, J., Fung, W.K., Li, H.: A logistic normal multinomial regression model for microbiome compositional data analysis. Biometrics 69(4), 1053–1063 (2013)

Funding

Open access funding provided by HEAL-Link Greece.

Author information


Contributions

MT and CS conceived the idea and discussed it with AA. All authors participated in the writing of the manuscript, and read and approved it.


Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information



Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tsagris, M., Alenazi, A. & Stewart, C. Flexible non-parametric regression models for compositional response data with zeros. Stat Comput 33, 106 (2023). https://doi.org/10.1007/s11222-023-10277-5


DOI: https://doi.org/10.1007/s11222-023-10277-5