Abstract

We propose a novel blocked version of the continuous-time bouncy particle sampler of Bouchard-Côté et al. (J Am Stat Assoc 113(522):855–867, 2018) which is applicable to any differentiable probability density. This alternative implementation is motivated by blocked Gibbs sampling for state-space models (Singh et al. in Biometrika 104(4):953–969, 2017) and leads to significant improvement in terms of effective sample size per second, and furthermore, allows for significant parallelization of the resulting algorithm. The new algorithms are particularly efficient for latent state inference in high-dimensional state-space models, where blocking in both space and time is necessary to avoid degeneracy of MCMC. The efficiency of our blocked bouncy particle sampler, in comparison with both the standard implementation of the bouncy particle sampler and the particle Gibbs algorithm of Andrieu et al. (J R Stat Soc Ser B Stat Methodol 72(3):269–342, 2010), is illustrated numerically for both simulated data and a challenging real-world financial dataset.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

1.1 Background

Markovian state-space models are a class of probabilistic graphical models applied in biology, signal processing, target tracking, finance and more, see Cappé et al. (2006) for a technical overview. In our setup, a latent process \((x_n, n \ge 1)\) on \({\mathbb {R}}^d\) evolves according to a state transition density \(p(x_n | x_{n-1})\), with \(p(\cdot )\) denoting a generic density. The dimension of the latent process is its spatial dimension, although often no physically relevant interpretation might be available. We indirectly observe the latent process through a noisy set of observations \((y_n, N \ge n \ge 1)\) defined on \({\mathbb {R}}^m\), where the realizations depend only on the current value of the latent state, \(y_n | x_n \sim p(y_n| x_n)\). For convenience, we introduce the sequence notation \(i:j=(i,i+1,\ldots ,j)\) when \(j>i\). Unless otherwise mentioned, the sequence is \(y_{1:N}\) fixed throughout. Given the observation sequence, we define smoothing as the off-line estimation of the conditional joint probability density \(p(x_{l:m} | y_{1:N})\), with \(1 \le l \le m \le N\). We will be interested in the case where the target is the full conditional \(p(x_{1:N} | y_{1:N})\). Smoothing is generally a hard problem due to the high dimensionality of the state space and spatiotemporal interdependence of the latent states; below we will give a brief historical overview, and subsequently detail our contributions.

Sequential Monte Carlo methods form the backbone of most smoothing algorithms. A popular early example is the sequential importance resampling smoother of Kitagawa (1996), which utilizes the entire trajectories and final particle weights of a particle filter to generate smoothed estimates. This method suffers from severe particle degeneracy as the resampling step non-strictly decreases the available paths used to estimate the joint posterior. A solution was the algorithm of Godsill et al. (2004), which introduces a sequence of backward passes incorporating the state transition. This algorithm has linear computation cost in time, particles and number of samples. Similar algorithms like the general two-filter smoother of Briers et al. (2010) have equivalent computational costs. In Finke and Singh (2017), an approximate localization scheme is proposed for the forward–backward algorithm, including theoretical results that guarantees bounds on the asymptotic variance and bias under models that are sufficiently local. In the landmark paper of Andrieu et al. (2010), the authors introduced particle Markov Chain Monte Carlo, which combines particle filters in conjunction with either Metropolis–Hastings or Gibbs algorithms. The latter algorithm, denoted particle Gibbs, generates a single trajectory chosen according to the final particle weights from a particle filter run conditionally on a fixed trajectory. Particle Gibbs is stable if the number of particles grow at least linearly with the time series length; further theoretical analysis of ergodicity and asymptotic variance is provided in Andrieu et al. (2018) and Chopin and Singh (2015). More recently, couplings of conditional particle filters have been introduced in Jacob et al. (2020) and Lee et al. (2020), and provide unbiased estimators with asymptotically exact confidence intervals.

Unfortunately, the performance of particle Gibbs depends entirely on the efficiency of the conditional particle filter which like the particle filter can suffer from weight degeneracy. If the spatial dimension is large, the curse of dimensionality described in Bengtsson et al. (2008) implies that infeasibly many particles are required to effectively approximate the posterior; localization of proposals by exploiting spatial conditional independence was subsequently introduced in Rebeschini et al. (2015) but this method is not generically applicable. As an alternative, the space–time particle filter (Beskos et al. 2017) is applicable if the likelihood can be written in a product form of terms that depend on an increasing number of latent dimensions. In the data assimilation field, a very popular method for high-dimensional filtering is the use of the ensemble Kalman filter algorithm, but the theoretical understanding of this algorithm is still quite limited, see however Del Moral and Tugaut (2018) and de Wiljes et al. (2018) for recent work in this regard. Overall, there is no generically applicable, asymptotically exact approach that makes the particle filter viable in high dimensional time-series models.

In comparison with filtering which is known to be uniformly stable in time under reasonable assumptions, see Van Leeuwen et al. (2019), the difficulty of smoothing increases as the length of the time series increases. In such scenarios, Whiteley (2010), in the Royal Statistical Society’s discussion of Andrieu et al. (2010), proposed to incorporate a backward pass similar to the algorithm of Godsill et al. (2004) to avoid particle paucity in the early trajectories; for low spatial dimensions, the resulting algorithm was shown in Lee et al. (2020) to be computationally efficient and stable as the time horizon grows. A conceptually similar method that updates the fixed reference trajectory has been developed in Lindsten et al. (2014). As an alternative to manipulation of particle lineages, applying the particle Gibbs algorithm inside a generic Gibbs sampler over temporal blocks is proposed in Singh et al. (2017), where the authors furthermore show a stable mixing rate as the length of the time series increases. Singh et al. (2017) also shows that the sharing of states via overlapping blocks increases the mixing rate as the overlap increase. While the issue of long time series has been addressed successfully by the algorithms detailed above, the curse of spatial dimensionality indicates that particle Gibbs and more sophisticated extensions are currently unworkable in practical smoothing applications featuring high spatial dimensions.

1.2 Contributions

As a solution to the issues in high dimension, we propose a novel blocked sampling scheme based on irreversible, continuous-time piecewise deterministic Markov processes. Methods based on this class of stochastic process were originally introduced as event-chain Monte Carlo in the statistical physics literature by Bernard et al. (2009), and subsequently further developed in the computational statistics literature recently; see, for example, Bouchard-Côté et al. (2018), Bierkens et al. (2019), Wu and Robert (2020) and Power and Goldman (2019). In practice, the algorithms iterate persistent dynamics of the state variable with jumps to its direction at random event times. They also only depend on evaluations of the gradient of the log-posterior. Local versions of these samplers, see Bouchard-Côté et al. (2018) and Bierkens et al. (2020), can exploit any additive structure of the log-posterior density to more efficiently update trajectories, however as discussed above, long range dependencies of states indicate that sharing of information is desirable to achieve efficient mixing. To allow for sharing of information, we introduce a blocked version of the bouncy particle sampler of Bouchard-Côté et al. (2018) that utilizes arbitrarily designed overlapping blocks. (Our resulting algorithm is different from the approach presented in Zhao and Bouchard-Côté (2019) where the BPS is run on conditional distributions in a Metropolis-within-Gibbs-type fashion.) The blocking scheme is implementable without any additional assumptions on the target distribution and is therefore useful for generic target densities, particularly in cases where the associated factor graph is highly dense.

As our second contribution, we introduce an alternative implementation of the blocked sampler that leverages partitions to simultaneously update entire sets of blocks. The number of competing exponential clocks in the resulting sampler is independent of dimension and thus feature O(1) clocks for any target, and allows, for the first time for a piecewise-deterministic Monte Carlo algorithm, to carry out parallel updates at event times. Our numerical examples indicate that the blocked samplers can achieve noteworthy improvements compared to the bouncy particle sampler, both in terms of mixing time and effective sample size per unit of time, even without the use of parallelization. In addition, the blocked sampler provides efficient sampling of state space models when particle Gibbs methods, which are widely considered state of the art for state space models, fail due to high spatial dimensions.

2 Setup

2.1 Notation

In what follows, subscript on a variable x will denote temporal indices, while superscript indicates spatial. By \(x \sim {\mathcal {N}}(0, 1)\), we mean that x is distributed as a standard normal variable, whereas we by \({\mathcal {N}}(x; 0, 1)\) mean the evaluation at x of the standard normal density; this notation is extended to other densities. We use the standard sequence notation \(i{:}j = (i, i+1, \ldots , j-1, j)\) and \([n] = (1,2,\ldots , n-1, n)\). A generic Poisson process is denoted by \(\Pi \) and the associated, possibly inhomogeneous, rate function is the function \(t \mapsto \lambda (t)\). Let \(M_{m, n}\) be the space of \(m \times n\) real-valued matrices, with m referring to row and n to columns, respectively. We denote by \(\star \) the Hadamard product operator. The standard Frobenius norm of a matrix \(X \in M_{m,n}\) is denoted \(\Vert X \Vert _F = \sqrt{\text {tr}(X^T X)} = \sqrt{\sum _i \sum _j x_{i,j}^2}\), and the Frobenius inner product with another matrix \(Y \in M_{m,n}\) is subsequently \(\langle X, Y \rangle _F = \text {tr}(X^T Y) = \sum _i \sum _j x_{i,j}y_{i,j}\).

2.2 State space models

The class of state-space models we consider have differentiable transition and observation densities

It is thus natural to work in log-space for the remainder of the paper, and we note in this regard that all probability density functions are assumed to be normalized. The exponential notation is therefore merely a notational convenience to avoid repeated mentions of log-densities. We also only require access to derivatives of f and g which may have more convenient expressions than the full probability distribution. The negative log of the joint state density of the entire latent state \(x \in M_{d,N}\) is denoted the potential energy \(U: M_{d,N} \rightarrow {\mathbb {R}}\) and is given as

To ease notation we have dropped the explicit dependence on \(y_{1:N}\) when writing the log conditional joint state density from now on. We will often need to refer to the derivative \(\partial U / \partial x\), which we denote as the matrix map \(\nabla U: M_{d,N} \rightarrow M_{d,N}\) where the entry in the k’th row and n’th column is given by the partial derivative \(\nabla U(x)_{k,n} = \partial U(x)/\partial x_n^k.\) Again, we remind the reader that subscript on a variable x will denote temporal indices, while superscript indicates spatial.

2.3 Blocking strategies

Recall that \([n] = (1,2,\ldots , n-1, n)\). A blocking strategy \({\overline{B}}\) is a cover of the index of set of the latent states \(I = [d] \times [N]\) and solely consists of rectangular subsets. A generic block B is always of the form \(i{:}j \times l{:}m\) with \(i<j, l<m\), with the coordinates referring to spatial and temporal dimensions, respectively. The size of a block is the ordered pair (|i : j|, |l : m|). Blocks are allowed to overlap; we denote by the interior of a block the indices that it does not share with any other block. The neighborhood set of a block is

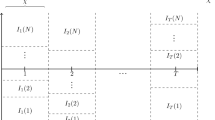

and always includes the block itself. A blocking strategy is temporal if each block in a strategy is of the form \(1{:}d \times l{:}m\), these are the most natural strategy types to consider for state-space models and will be the general focus in the rest of the paper, but the methods presented below work for arbitrary strategies. To improve mixing of blocked samplers in general, it is often necessary to design a blocking strategy such that within-block correlation between variables is large while the correlation with out-of-block variables is small; see, for example, Liu et al. (1994) or Turek et al. (2017). For state-space models, this naturally implies blocking across time, and in Fig. 1 a temporal strategy with overlap \(\xi \) and interior \(\delta \) is illustrated. We can in this case divide the blocks into even (\(B_k\) of Fig. 1 with even index k) and odd subsets such that each subset consists of non-overlapping blocks, see again Fig. 1. As analyzed in Singh et al. (2017) for blocked Gibbs samplers, temporal overlap leads to improved sharing of information across time and subsequent improved mixing. If the spatial dimension is very high, it can be necessary to block in the spatial domain as well; blocking strategies should in this case aim to exploit any spatial decorrelation if possible.

A few more remarks on notation: the restriction of \(x \in M_{d, N}\) to a block \(B = i{:}j \times l{:}m\) is the submatrix \(x_B \in M_{|i{:}j|, |l{:}m|}\) corresponding to deleting all but the rows i : j and columns l : m of x. Similarly, the block restriction of \(\nabla U\) is the map \(\nabla _B U: M_{d,N} \rightarrow M_{|i{:}j|, |l{:}m|}\); the entries of the submatrix \(\nabla _B U(x)\) are in correspondence with \(\nabla U(x)\) via \(\nabla _B U(x)_{a,b} = \nabla U(x)_{i+a-1, l+b-1}\). We extend this notation to also include the state and the velocity, with the submatrix under consideration being indicated by a subscript B.

3 Blocked bouncy particle sampler

In this section, we derive conditions under which the bouncy particle sampler of Peters et al. (2012) and Bouchard-Côté et al. (2018) can be run in blocked fashion; the resulting algorithm therefore applies to any target distribution \(\pi \). If we assume that \(\overline{B}\) consists of a single block of size \(1{:}d \times 1{:}N\), the description below reduces to the standard bouncy particle sampler, and it is therefore redundant to describe both.

The class of piecewise-deterministic Markov process we consider is a coupling of the solution x(t) of the ordinary differential equation \(\mathrm{d}x(t)/\mathrm{d}t = v(t)\), and a Markov jump process v(t) where both transition operator \(Q(v, \mathrm{d}v)\) and rate process \(\lambda (t)\) depends on x(t) as well; v(t) will henceforth be denoted the velocity. The joint process (x(t), v(t)) takes values in \(M_{d,N} \times M_{d,N}\). Given an initialization (x(0), v(0)), the state flows as \((x(t), v(t)) = (x(0) + t \cdot v(0), v(0)),\) until an event \(\tau \) is generated by an inhomogeneous Poisson process with rate \(\lambda (t)\). At this point the velocity changes to \(v(\tau ) \sim Q(v(0), \mathrm{d}v)\), and the process reinitializes at \((x(\tau ), v(\tau ))\). To use such a process for Markov chain Monte Carlo, the jump rate \(\lambda (t)\) and transition kernel Q of v(t) are chosen such that the marginal stationary distribution of \((x(t))_{t \in [0, \infty )}\) is the target distribution of interest. Exactly as in Metropolis–Hastings algorithms, we always want to move into regions of higher probability but desire to change direction, by a new choice of velocity vector, as we enter regions of declining probability. This in turn implies that the rate is determined by the directional derivative of the energy U in the direction of v, while the transition kernel Q is a deterministic involution or random velocity change, for general details; see Vanetti et al. (2017).

Blocking of this process corresponds to a localization of the rate function and transition kernel such that each block is equipped with its own random clock and corresponding local velocity updating mechanism. Subsequently, only velocities within a single block are changed at an event, while preserving the overall invariant distribution. In comparison with discrete time blocking that updates the variables one block at a time while keeping every other variable else fixed, in continuous time the block direction is changed while keeping every other direction fixed. For dimensions that are in multiple blocks, the additional clocks implies an excess amount of events compared to the base case of no overlap; the \(\phi \) variable introduced below adjusts for this discrepancy by speeding up the velocity of the shared dimensions. Intuitively, as a dimension shared by k blocks will have events k times as often, it should move at k times the speed to compensate. This also aligns exactly with discrete-time blocked sampling, where dimensions shared between blocks are updated twice as often.

We now present the blocked bouncy particle sampler in detail. We assume that the velocity is distributed such that each \(v_n^k \sim N(0,1)\) in stationarity, and the stationary joint distribution of all velocities has density \(p_v(v)\). For a blocking strategy \(\overline{B}\), we introduce the auxiliary variable \(\phi \in M_{d,N}\) with entries

\(\phi _n^k\) counts the number of blocks that include the k’th spatial dimension and n’th temporal dimension. Given \(\phi \), the resulting block-augmented flow of the ordinary differential equation driving x(t) is \(t \mapsto x + t \cdot (\phi \star v)\); as mentioned, individual dimensions of x are sped up in proportion to how many blocks includes them. With \(x \mapsto \{x\}^+ \equiv \max \{0, x\}\), the rate function for the Poisson process \(\Pi ^B\) associated with block B is

the associated superposition of all blocks is the Poisson process \(\Pi ^{{\overline{B}}} = \cup _{B \in {\overline{B}}} \Pi ^{B}\). Events generated by \(\Pi ^{\overline{B}}\) are denoted \(\tau _b\) with b referring to a bounce. Note that the inner product corresponds to the directional derivative \(\partial U(x+t\cdot v)/\partial t\) restricted to B. For the transition kernel, we define \(\textsc {reflect}^B_x(v)\) as the (deterministic) reflection of the velocity \(v_B\) in the hyperplane tangent to the block gradient at x:

while the remaining components of v are unchanged by \(\textsc {reflect}^B_x(v)\). (Note only the velocities that correspond to the block B are updated.) Variable v will also be updated by full velocity resampling via an independent homogeneous Poisson process with rate \(\gamma \) to alleviate issues with reducibility, see Bouchard-Côté et al. (2018, Section 2.2), and these event times are denoted \(\tau _r\) with r referring to refreshment. Without writing the refreshment operator, the infinitesimal generator of \((x(t), v(t))_{t \in [0,\infty )}\) is

the sum of the block-augmented linear flow \(\phi \star v\) driving x(t) and the sum of Markov jump processes updating the block-restricted velocities \(v_B\).

Proposition 1

Consider a blocking strategy \(\overline{B}\) and a target density \(\pi (x) \propto \exp \{-U(x)\}\). With the generator defined in Eq. (1), the blocked bouncy particle sampler has invariant distribution \(\pi \otimes p_v\).

Proof

See Sect. A.1. \(\square \)

The most closely corresponding method to the blocked bouncy particle sampler is the factor algorithm presented in Bouchard-Côté et al. (2018, Section 3.1). If the target distribution factorizes over a finite set of individual factors \(\overline{F}\) such that

where \(x_f\) corresponds to the restriction of the components in the factor, the local bouncy particle sampler of Bouchard-Côté et al. (2018) can be applied. Note that the derivation of the local bouncy particle sampler in Bouchard-Côté et al. (2018) is only considered under the above sum structure for the log density U(x) and where each block is the complete set of variables \(x_f\) for a factor. This contrasts with the blocked sampler, where blocks are allowed to share variables arbitrarily and without the need for the energy to satisfy a sum structure. The blocked sampler algorithm in practice functions as a hybrid between the Zig-Zag sampler of Bierkens et al. (2019) and the bouncy particle sampler: it incorporates the reflection operator when performing bounces, which allows for updating the velocity vector for multiple dimensions at event times, but combines a more local rate structure akin to that of the Zig-Zag sampler. In particular, if \(|B| = 1\) for all \(B \in \overline{B}\) and \(\phi _n^k = 1\) for all \(k, n \in [d] \times [N]\), the algorithm reduces to a process very close to the Zig-Zag sampler, with the velocity vector components “flipping” at their individual reflection event times (but an invariant normal distribution for the velocities compared to the binary uniform distribution of the standard Zig-Zag sampler.) In this sense, the Zig-Zag sampler is naturally blocked, but does not allow for sharing of information across dimensions. In Algorithm 1, the blocked bouncy particle sampler is presented in an implementable form.

3.1 Simulation

Due to the simplicity of the flow the computational challenge of the algorithm is to generate correctly distributed event times via Poisson thinning. The thinning procedures of Lewis and Shedler (1979) for simulating inhomogeneous Poisson processes is a two-step procedure that corresponds to finding a bounding process where direct simulation is available, and subsequently using rejection sampling to keep correctly distributed event times.

To employ thinning, local upper bounds \(t \mapsto {\bar{\lambda }}_{B}(t)\) for each block needs to be estimated. For some fixed lookahead time \(\theta > 0\) and current position (x, v), local bounds satisfy

and after \(\theta \) time has passed, the bounds are recomputed at the new location \((x + \theta (\phi \star v),v)\), if no reflection or refreshment has occurred in the interrim. The right-hand side is the worst-case bound and in all of our numerical examples we use this bound. In some particular cases, universal global bounds can be derived, but generally these bounds will have to be estimated by evaluating the rate function at some future time point. If the blocks are individually log-concave densities, evaluating the rate at the lookahead time, \(\lambda _B(\theta ),\) gives a valid bound until an event occurs, or alternatively, one can apply the convex optimization procedure described in Bouchard-Côté et al. (2018, Section 2.3.1). If blocks are overlapping, the local bounds of blocks in the neighborhood N(B) become invalid after a reflection and require updating. The generic process is given in Algorithm 2. Given the global bounding function

an event time \(\tau \) is simulated from \(\Pi _{{{\bar{\lambda }}}_{{\overline{B}}}}\), a block B is selected with probability proportional to its relative rate \({{\bar{\lambda }}}_B(\tau )/{{\bar{\lambda }}}_{{\overline{B}}}(\tau )\), and finally a reflection is carried out with probability corresponding to the true rate function relative to the local bound \(\lambda _B(\tau )/{{\bar{\lambda }}}_B(\tau )\). Given the local rate functions, the dominant cost is the unsorted proportional sampling of a block, which is done in \(O(|{\overline{B}}|)\). We propose to choose \(\theta \) such that the expected number of events generated by the bounding process on the interval \([0, \theta ]\) is equal to 1, as we can always decrease the computational cost of calculating bounds by changing \(\theta \) to another value that brings the expectation closer to 1. In our numerical examples, we have tuned \(\theta \) to approximately satisfy this requirement.

4 Parallel velocity updates via partitioned blocking strategies

As mentioned in the introduction, Singh et al. (2017) shows that the even–odd blocking strategy with overlaps is known to improve mixing, and furthermore allows for parallelization of updates in the case of Kalman smoothers or particle filter-based smoothing algorithms. Conversely, the current crop of piecewise-deterministic Markov process-based samplers are all purely sequential, in the sense that at each event time only the velocity of a single factor or dimension is updated, and these samplers therefore fail to exploit any conditional independence structure available. We will in this section provide an alternative implementation (see Algorithm 3) of the blocked bouncy particle sampler that mimics the even–odd strategy of discrete-time blocked samplers, extends to the fully spatially blocked setting, and allows for parallel implementation of updates at event times. To utilize this method, we need a partition of the blocking strategy into sub-blocking strategies such that no two blocks in any sub-blocking strategy share any variables. To this end, we capture the no-overlap condition precisely in the following assumption:

Assumption 1

Consider a blocking strategy \({\overline{B}}\). We will assume given a partition \(\cup _{k=1}^K {\overline{B}}_k = {\overline{B}}\) of the blocking strategy that satisfies, for each sub-blocking strategy \({\overline{B}}_k, k = 1,2,\ldots K\) and for all blocks \(B, B' \in {\overline{B}}_k\) such that \(B \ne B'\), that

This assumption also applies to fully spatiotemporal blocking schemes and not just temporal strategies. We will for illustrative purposes only describe in detail the simplest even–odd scheme of temporal blocking, which corresponds to \(K=2\) sub-blocking strategies such that no blocks that are temporally adjacent are in the same sub-blocking strategy. As shown in Fig. 1, each block is assigned a unique integer number k. We then partition the strategy into two sets of blocks based on whether k is an even or odd integer, and denote the sub-blocking strategies \(\{ {\overline{B}}_\mathrm{odd}, {\overline{B}}_\mathrm{even} \}\). In Fig. 1, we illustrate such a strategy, where the top row shows the even blocks, and the lower row the odd blocks. Note that individual even blocks have no state variables in common (similarly for individual odd blocks). Furthermore, for a Markovian state-space model, each block is chosen to be a consecutive time sequence of states.

For such a sub-blocking strategy, we then find the maximum rate among all blocks inside a sub-blocking strategy

and denote their associated Poisson processes \(\Pi _\mathrm{odd}^{{\overline{B}}}\) and \(\Pi _\mathrm{even}^{{\overline{B}}}\). By construction, we will have two exponential clocks, one for the set of blocks \({\overline{B}}_\mathrm{odd}\) and one for \({\overline{B}}_\mathrm{even}\). To detail what happens at an event time, consider an event generated by the superposition of \(\Pi _\mathrm{odd}^{{\overline{B}}}\) and \(\Pi _\mathrm{even}^{{\overline{B}}}\) and say \(\Pi _\mathrm{odd}^{{\overline{B}}}\) generated the event. Then for each block \(B \in {\overline{B}}_\mathrm{odd}\), the following kernel \(Q_x^B(v, \mathrm{d}v)\) is used to update the velocity of that block

This simultaneous velocity update of all the blocks in the particular set of blocks is permissible since the blocks of each set have no states in common, i.e., do not overlap. In Algorithm 3, we provide pseudocode for a fully implementable version of the blocked bouncy particle sampler under an even–odd partition of the blocking strategy.

We will show invariance for the particular case considered above; the result holds in general for any partition satisfying Assumption 1.

Proposition 2

Let \(\{ {\overline{B}}_\mathrm{odd}, {\overline{B}}_\mathrm{even} \}\) be a temporal strategy for \(\pi \) and \({\overline{B}}\) satisfying Assumption 1. Then the Markov process with associated generator

has invariant distribution \(\pi \otimes p_v\), where \({{\widehat{\Lambda }}}_\kappa (x,v)\) is defined in Eq. (4).

Proof

See Sect. A.2. \(\square \)

In contrast to the basic blocked BPS, the generator of Proposition 2 has a single overall event time generated from sum of odd and even strategies, but multiple overlapping event times for the blocks contained in the sub-blocking strategy that generated the event. The even–odd algorithm therefore corresponds to an implementation that “lines up” the event times in such a way that is beneficial for a parallel implementation. Relative to the blocked bouncy particle sampler, the even–odd implementation iterates over every block in the sub-blocking strategy that generated the event, updating velocities of the blocks with probability proportional to the ratio of the block’s rate \(\lambda _B\) evaluated at the current state (x, v) to the rate of the sub-blocking strategy given by the max-bound. It therefore becomes possible to parallelize the updating step, for example with multiple processors allocated to each sub-blocking strategy, say one processor per block of the sub-blocking strategy. In contrast to the generator in Proposition 1, the event rate of the sampler in Proposition 2 is now the maximum over the rates in a sub-blocking strategy which should grow slower than the sum rate in Proposition 1 as the global dimension (d) and thus number of blocks grow.

If the spatial dimension is significant, it will be necessary to also carry out spatial blocking. Under a full spatiotemporal strategy, the above implementation naturally extends to a four clock system, consisting of alternating even–odd temporal strategies over each ‘row’ of spatial blocks, such that that no blocks from the same sub-strategy overlap; this in turn guarantees that Assumption 1 is satisfied.

In practice, \({\widehat{\Lambda }}_\kappa \) is not available, as we can not evaluate the gradient in continuous time. Similarly to Algorithm 1, we employ a lookahead time \(\theta \) and a trivial global bound for the Poisson rates that is valid for the interval \((t,t+\theta ]\) where as before t is the instantaneous runtime. For any fixed \(\theta > 0\), assuming \((x(t),v(t))=(x,v)\), let the globally valid bound \({{\bar{\Lambda }}}_\kappa \), with \(\kappa \in \{\mathrm{odd}, \mathrm{even}\}\), be given as

As in Algorithm 1, we use this rate to define a bounding Poisson processes and apply thinning to find the appropriate events, see Line 4 in the algorithm. In practical implementations of piecewise-deterministic algorithms, tighter bounds for the event times are in general necessary to avoid wasteful computation from false events. Our max-type bound is tighter than the sum-type bound, and we can therefore have a larger lookahead time \(\theta \). (Again, \(\theta \) should be chosen such that the expected number of events generated by the bound in an interval of size \(\theta \) is 1.) With the max-type bound, the even–odd implementation will have larger event times compared to the blocked BPS.

The growth of the rate of the max-type bound, as a function of the number of blocks, is studied in the following result. In particular, under plausible assumptions on the tail-decay of the target distribution we can bound the expected rate.

Lemma 1

Assume that for all \(B \in \overline{B}\)

for some \(\alpha > 0\). Then both the odd and even sub-blocking strategies, indicated by subscript \(\kappa \), satisfies

Proof

See Sect. A.3. \(\square \)

In the Gaussian case, the rate function is a mixture of a point-mass at zero and a density proportional to the modified Bessel function of the second kind with order depending on the dimension, and this function is known to have sub-exponential decay for any d, see for example Yang and Chu (2017). We note that the key point of Lemma 1 is to verify that utilizing the maximum over blocks does not lead to pathological behavior.

To elaborate on the computational costs of the samplers, we compare the cost to run the samplers for one second. The exponential event times of Poisson processes indicates we can expect \(O(\log |\overline{B}_{\kappa }|)\) events per time unit (Line 3.4) via \({\widehat{\Lambda }}_\kappa \), each costing \(O(|\overline{B}_{\kappa }|)\) evaluations of blocks (Line 3.18) per kernel \(Q_x^B\). Thus the total cost of the even–odd sampler per second is \(O(|\overline{B}_{\kappa }|\log |\overline{B}_{\kappa }|)\). In comparison, the local bouncy particle sampler has a rate function defined as \(\Lambda _{\overline{F}} = \sum _{F \in \overline{F}} \lambda _F(x,v) = \sum _{F \in \overline{F}} \max \{ 0, \langle \nabla U_F(x), v \rangle \}\), with \(\overline{F}\) is the set of factors of U, \(\nabla U_F(x)\) is the gradient of the factor \(U_F(x)\). In this case, the event rate growth is of the order \(O(|\overline{F}|)\) by the inequality

combined with O(1) costs per event time, for a total cost of \(O(|\overline{F}|)\) per sampler second. However, we note again that each of the \(O(|\overline{B}_{\kappa }|)\) evaluations of the blocks can be carried out fully in parallel as no velocities are shared across ringing blocks. Furthermore, in the continuous-time Markov Chain Monte Carlo literature, the metric of effective sampler size per unit of trajectory length has been considered, and it is at this stage not known theoretically how the blocked BPS and the local BPS differ under this alternative measure of efficiency.

5 Numerical experiments

We will in the following two sections provide two numerical experiments comparing the blocked BPS, the local BPS, and particle Gibbs. As we are primarily interested in latent state estimation, we have not considered parameter inference in the examples below. A natural approach here would be to run a Metropolis-within-Gibbs sampler that proposes an update to the parameter vector after, for example, running the continuous-time sampler for a second. The proposal of the parameter vector could be done in discrete time, or alternatively using the BPS for parameter vector. This latter strategy was proposed for continuous-time Markov chains in Zhao and Bouchard-Côté (2019). Alternatively, the parameters could be inferred jointly in continuous-time together with the latent states; the parameter vector could be included in the blocking strategy, in particular if the parameter vector is also dynamic across time.

5.1 Linear Gaussian toy model

We consider an autoregressive model of order 1 given by

with A an autoregressive matrix with entries \(A_{ij} = \text {kern}(i,j) /\left( \psi + \sum _{l=1}^d \text {kern}(i,l)\right) \) with \(\text {kern}(i,j) = \exp \left\{ -\frac{1}{2\sigma ^2}\right. \left. |i-j|^2 \right\} \) and \(\psi > 0\) a constant, and finally, \(x_0 \sim {\mathcal {N}}(0, I_d)\). First, we want to compare the empirical mixing speed of the blocked and factor bouncy particle samplers. We consider a simulated model of \(d = 3\) and \(N=1000\), \(\sigma ^2 = 5\), and \(\psi = 0.1\). We initialize each sampler at the zero vector, run until \(T = 1000\), and thin at every 0.1 sampler second. In Table 1, we provide detailed specifications of the setups for the various algorithms and results from a representative run of the algorithms.

In Fig. 2a, we plot the log of the mean square error as a function of time for increasing block overlap; empirically the blocked sampler with block width 20 and overlap 10 reaches stationarity around three times faster than the factor version. In Fig. 2b, we compare the mean squared jumping distance of the first spatial dimension after discarding the first 25% of samples. For the overlapping sections, the exploration is, due to the shared overlap and \(\phi \), happening at twice the speed, and, accordingly, four times the mean-square jumping distance compared to the factor algorithm. In terms of effective sample size per second, the blocked and even–odd samplers are about 30–40% and 100% more efficient, respectively, than the factor sampler, without using any parallel implementation. It is observed in general for any choice of d and T that the benefits of speeding up the dimensions compensate for the increased computational cost due to the overlaps. We also note that for models like this where the spatial dimension is low, there is not a strong argument to use PDMP-based methods as particle Gibbs with a basic particle filter will be more than adequate.

a Mean square error estimate per unit of CPU time of the autoregressive Gaussian model as the overlap varies. b Mean square jump distance for the standard bouncy particle sampler and blocked counter-part with overlaps 9 and 10, showcasing the impact \(\phi \) has on exploration. In particular, the dips for the overlap 9 case corresponds to the variables that are part of a single block only, and subsequently are not sped up. We show a subset of 200 time points to enhance detail

Second, we consider the case where \(d=200\) and \(T=100\) to illustrate the benefits of spatial blocking in high-dimensional scenarios. In this case we also include a spatiotemporal blocking strategy, and the details of the example and a representative simulation are provided in Table 2. The model and example parameters are otherwise as described above.

The spatiotemporally blocked sampler significantly outperforms the other implementations, with effective sample size per second typically 2–4 times larger, evidenced over multiple runs with random trajectories generated under the model. The even–odd temporal implementation blocked strategy is often still efficient even when the number of dimensions per block is up to 400, but the relative ESS/s is on aggregate lower than the spatiotemporally blocked version. Furthermore, this discrepancy will only increase under models with even higher spatial dimension. As before, no concurrent implementation was used, indicating that additional improvements in performance are possible for the partitioned blocking schemes when parallelized over multiple processors.

5.2 Heavy-tailed stochastic volatility with leverage effects

We will in this section consider an example based a stochastic volatility model of the Dow Jones Industrial Average (DJIA) equity index to explore the efficiency of the even–odd implementation of the BPS in comparison with two benchmark implementations of particle Gibbs when the spatial dimension is moderate and the length of the time-series is long. Stochastic volatility models are widely used in finance and econometrics. They model the volatility of financial assets as a dynamic latent process to capture the time-varying and persistent nature of changes in asset returns. We will analyze a general model proposed by Ishihara and Omori (2012) that incorporates heavy-tailed observations and leverage effects, see Cont (2001) for empirical discussion of these effects. To test the blocked algorithms on a reasonably challenging dataset, we attempt to estimate the latent volatility of the 29 continuously available constituents of the DJIA between April 1, 2017, and April 6, 2020, for a total of \(29 \times 757 = 21{,}953\) latent states. This period is characterized both by relatively low volatility and historical high levels uncertainty due to the COVID-19 pandemic, see WHO (2020) for example.

Let \(x_n \in {\mathbb {R}}^d\) be an unobserved vector of volatilities, and \(y_n \in {\mathbb {R}}^d\) be observed asset log returns. The dynamics over a fixed time horizon \(n = 1,2,\ldots , N\) are

with \(A =\text {diag}(\alpha _1, \alpha _2, \ldots , \alpha _d)\), where \(\alpha _i \in [0, 1), \forall i \in \{1, 2, \ldots , d\}\). The noise is jointly modelled as

and \({\widehat{\Sigma }}\) a \(2d \times 2d\) matrix. The off-diagonal block matrices introduce leverage effects in the model if they are negative definite. Finally, for some \(\nu \in {\mathbb {N}},\) \(\gamma _n \sim \Gamma (\frac{\nu }{2}, \frac{\nu }{2})\) is a memory-less stochastic process independent of \((\eta _n, \epsilon _n)\). The resulting observation noise is multivariate t-distributed with \(\nu \) degrees of freedom, details are in Ishihara and Omori (2012). For the initialization, we assume that \(x_1 \sim {\mathcal {N}}(0, (I_d - AA)^{-1} \Sigma _{\eta })\). Define \(y_n^\gamma = \sqrt{\gamma _n} y_n\) as the observations whenever \(\gamma _n\) is known; it follows that \(y_n^\gamma = \Lambda _n \epsilon _n\) and inference can be carried out with this observation sequence instead. Conditional on \(\gamma _{1:N}\) and using basic properties of multivariate Gaussians, the transition distributions can be written as

implying that the distribution has a more complicated dependence structure, as the past observation feeds into the next realized state. Furthermore, the state transition is nonlinear in the previous state variable due to the leverage effect.

For the blocking strategy, use a spatiotemporal strategy with blocks 9 timepoints wide, 7 spatial dimensions high, and each block has temporal overlap 4 and spatial overlap 3, giving a total of \(151 \times 6 = 906\) blocks. Due to the better performance of partitioned blocked bouncy particle sampler in the previous example, we only compare this method with blocked particle Gibbs, see Singh et al. (2017), and the particle Gibbs with ancestor sampling algorithm of Lindsten et al. (2014), both using a bootstrap particle filter as proposal mechanism. For the blocked particle Gibbs sampler, we let the blocks be 25 observations wide and have overlap 5. For a fair comparison, we set the number of particles to 500 which leads to an average time per sample quite close to that of the spatiotemporal blocked bouncy particle sampler for both samplers. We generated 2000 samples via each algorithm, and initialized each at the \(d \times N\) zero vector, and for the velocity we used the \(d \times N\) vector of ones. Typically, estimation of latent states will be carried out inside a Gibbs sampling algorithm that also estimates parameters, indicating that prior knowledge of the states are retained, whereas this example tests the significantly more difficult case of no prior information on the latent states.

In Fig. 4a, we illustrate the posterior energy. The blocked particle Gibbs sampler moves in a wide band of posterior energy, but never reaches levels of higher posterior probability. This is in contrast to the results reported in Singh et al. (2017) where the dimension of the hidden state is much lower and thus the state transition density has better forgetting properties than our higher-dimensional example. Even if this issue could be remedied, see Bunch et al. (2015), implementing particle Gibbs with both temporal and spatial blocking appears non-trivial in contrast to the ease of which it can be achieved with the BPS. The ancestor sampling-based particle Gibbs sampler similarly does not generate proposals that have high posterior probability. Conversely, the bBPS reaches stationarity in less than 100 samples, and subsequently mixes across the posterior: the auto-correlation function, plotted in Fig. 4b, reaches zero around a lag of 20 samples, indicating adequate mixing for a posterior of this dimension. In Fig. 5, we plot the correlation matrix of the assets, and also the estimated latent volatility via the posterior mean. It is quite clear that the volatilities show weaker correlation across the assets, but appear to preserve some of the structure of seen in the correlation matrix of the log returns.

References

Andrieu, C., Doucet, A., Holenstein, R.: Particle Markov chain Monte Carlo methods. J. R. Stat. Soc. Ser. B Stat. Methodol. 72(3), 269–342 (2010)

Andrieu, C., Lee, A., Vihola, M., et al.: Uniform ergodicity of the iterated conditional SMC and geometric ergodicity of particle Gibbs samplers. Bernoulli 24(2), 842–872 (2018)

Bengtsson, T., Bickel, P., Li, B., et al.: Curse-of-dimensionality revisited: collapse of the particle filter in very large scale systems. Probability and Statistics: Essays in Honor of David A. Freedman, pp. 316–334. Institute of Mathematical Statistics, London (2008)

Bernard, E.P., Krauth, W., Wilson, D.B.: Event-chain Monte Carlo algorithms for hard-sphere systems. Phys. Rev. E 80(5), 056704 (2009)

Beskos, A., Crisan, D., Jasra, A., Kamatani, K., Zhou, Y.: A stable particle filter for a class of high-dimensional state-space models. Adv. Appl. Probab. 49(1), 24–48 (2017)

Bierkens, J., Fearnhead, P., Roberts, G.: The zig-zag process and super-efficient sampling for Bayesian analysis of big data. Ann. Stat. 47(3), 1288–1320 (2019)

Bierkens, J., Grazzi, S., van der Meulen, F., Schauer, M..: A piecewise deterministic Monte Carlo method for diffusion bridges. arXiv preprint arXiv:2001.05889 (2020)

Bouchard-Côté, A., Vollmer, S.J., Doucet, A.: The bouncy particle sampler: a nonreversible rejection-free Markov chain Monte Carlo method. J. Am. Stat. Assoc. 113(522), 855–867 (2018)

Briers, M., Doucet, A., Maskell, S.: Smoothing algorithms for state-space models. Ann. Inst. Stat. Math. 62(1), 61 (2010)

Bunch, P., Lindsten, F., Singh, S.: Particle Gibbs with refreshed backward simulation. In: 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 4115–4119. IEEE (2015)

Cappé, O., Moulines, E., Ryden, T.: Inference in Hidden Markov Models (Springer Series in Statistics). Springer, New York (2006)

Chopin, N., Singh, S.S.: On particle Gibbs sampling. Bernoulli 21(3), 1855–1883 (2015)

Cont, R.: Empirical properties of asset returns: stylized facts and statistical issues. Quant. Finance 1, 223–236 (2001)

de Wiljes, J., Reich, S., Stannat, W.: Long-time stability and accuracy of the ensemble Kalman–Bucy filter for fully observed processes and small measurement noise. SIAM J. Appl. Dyn. Syst. 17(2), 1152–1181 (2018)

Del Moral, P., Tugaut, J.: On the stability and the uniform propagation of chaos properties of ensemble Kalman–Bucy filters. Ann. Appl. Probab. 28(2), 790–850 (2018)

Durmus, A., Guillin, A., Monmarché, P.: Piecewise deterministic markov processes and their invariant measure. arXiv preprint arXiv:1807.05421 (2018)

Ethier, S.N., Kurtz, T.G.: Markov Processes: Characterization and Convergence, vol. 282. Wiley, New York (2009)

Finke, A., Singh, S.S.: Approximate smoothing and parameter estimation in high-dimensional state-space models. IEEE Trans. Signal Process. 65(22), 5982–5994 (2017)

Godsill, S.J., Doucet, A., West, M.: Monte Carlo smoothing for nonlinear time series. J. Am. Stat. Assoc. 99(465), 156–168 (2004)

Holderrieth, P.: Cores for piecewise-deterministic Markov processes used in Markov chain Monte Carlo. arXiv preprint arXiv:1910.11429 (2019)

Ishihara, T., Omori, Y.: Efficient Bayesian estimation of a multivariate stochastic volatility model with cross leverage and heavy-tailed errors. Comput. Stat. Data Anal. 56(11), 3674–3689 (2012)

Jacob, P.E., Lindsten, F., Schön, T.B.: Smoothing with couplings of conditional particle filters. J. Am. Stat. Assoc. 115(530), 721–729 (2020)

Kitagawa, G.: Monte Carlo filter and smoother for non-Gaussian nonlinear state space models. J. Comput. Graph. Stat. 5(1), 1–25 (1996)

Lee, A., Singh, S.S., Vihola, M., et al.: Coupled conditional backward sampling particle filter. Ann. Stat. 48(5), 3066–3089 (2020)

Lewis, P.A.W., Shedler, G.S.: Simulation of nonhomogeneous Poisson processes by thinning. Naval Res. Logist. Q. 26(3), 403–413 (1979)

Lindsten, F., Jordan, M.I., Schön, T.B.: Particle Gibbs with ancestor sampling. J. Mach. Learn. Res. 15(1), 2145–2184 (2014)

Liu, J.S., Wong, W.H., Kong, A.: Covariance structure of the Gibbs sampler with applications to the comparisons of estimators and augmentation schemes. Biometrika 81(1), 27–40 (1994)

Peters, E.A.J.F., et al.: Rejection-free Monte Carlo sampling for general potentials. Phys. Rev. E 85(2), 026703 (2012)

Power, S., Goldman, J.V.: Accelerated sampling on discrete spaces with non-reversible Markov processes. arXiv preprint arXiv:1912.04681 (2019)

Rebeschini, P., Van Handel, R., et al.: Can local particle filters beat the curse of dimensionality? Ann. Appl. Probab. 25(5), 2809–2866 (2015)

Rigollet, P., Hütter, J.-C.: High Dimensional Statistics (2015)

Singh, S.S., Lindsten, F., Moulines, E.: Blocking strategies and stability of particle Gibbs samplers. Biometrika 104(4), 953–969 (2017)

Turek, D., de Valpine, P., Paciorek, C.J., Anderson-Bergman, C., et al.: Automated parameter blocking for efficient Markov chain Monte Carlo sampling. Bayesian Anal. 12(2), 465–490 (2017)

Van Leeuwen, P.J., Künsch, H.R., Nerger, L., Potthast, R., Reich, S.: Particle filters for high-dimensional geoscience applications: a review. Q. J. R. Meteorol. Soc. 145(723), 2335–2365 (2019)

Vanetti, P., Bouchard-Côté, A., Deligiannidis, G., Doucet, A.: Piecewise deterministic Markov chain Monte Carlo. arXiv preprint arXiv:1707.05296 (2017)

Ward, J.H.: Hierarchical grouping to optimize an objective function. J. Am. Stat. Assoc. 58(301), 236–244 (1963)

Whiteley, N.: Discussion on particle Markov chain Monte Carlo methods. J. R. Stat. Soc. Ser. B Stat. Methodol. 72, 306–307, 01 (2010)

WHO (2020) Coronavirus disease 2019 (Covid-19): situation report, 67

Wu, C., Robert, C.P.: Coordinate sampler: a non-reversible Gibbs-like MCMC sampler. Stat. Comput. 30(3), 721–730 (2020)

Yang, Z.-H., Chu, Y.-M.: On approximating the modified bessel function of the second kind. J. Inequal. Appl. 2017(1), 1–8 (2017)

Zhao, T., Bouchard-Côté, A.: Analysis of high-dimensional continuous time Markov chains using the local bouncy particle sampler. arXiv preprint arXiv:1905.13120 (2019)

Acknowledgements

JVG acknowledges financial support from an EPSRC Doctoral Training Award (Grant No. 10429838).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Appendices

A Proofs

1.1 A.1 Proof of Proposition 1

Proof

Denoting the domain of \({\mathcal {L}}^{\textsc {bBPS}} \) by \(D({\mathcal {L}}^{\textsc {bBPS}})\), invariance follows if \(\forall f \in \overline{D} \subset D({\mathcal {L}}^{\textsc {bBPS}})\), with \(\overline{D}\) a core for \(({\mathcal {L}}^{\textsc {bBPS}}, D({\mathcal {L}}^{\textsc {bBPS}}))\), that

see Ethier and Kurtz (2009, Chapter 9). Identification of the core for the generators associated with piecewise deterministic Markov processes is quite technical, we refer to Durmus et al. (2018, Section 7) and Holderrieth (2019, Section 5) for details. We from now on assume for the test function that \(f \in C^{1,0}_0( ({\mathbb {R}}^d \times {\mathbb {R}}^N) \times ({\mathbb {R}}^d \times {\mathbb {R}}^N) \rightarrow {\mathbb {R}})\), for the density that \(U(x) \in C^1({\mathbb {R}}^d \times {\mathbb {R}}^N \rightarrow {\mathbb {R}})\). The proof in essence follows that of Proposition 1 in Bouchard-Côté et al. (2018). For the first part of the generator associated with the linear flow, consider first the integral with respect to \(\pi (x)\). We have

where Z is the normalizing constant \(Z = \int e^{-U(x)}\). Applying integration-by-parts we immediately get

by integrability of the functions in the domain of \({\mathcal {L}}^\mathrm{bBPS}\). For the second part, note that

where the last line follows by the definition of \(\phi \). Consider then the integral of the jump dynamics generator

Using that \((\textsc {reflect}^{B}_x)^{-1} = \textsc {reflect}^{B}_x\) by involution and the norm-preserving property of the restricted reflection operator we get

so we have from using the identity above that

which implies the result. \(\square \)

1.2 A.2 Proof of Proposition 2

Proof

We will show that the eoBPS is a special case of the blocked bouncy particle sampler, we again subdue dependence on refreshments. Writing out the integral with respect to \(Q^B_x,\) we have

which by Proposition 1 gives the result. \(\square \)

1.3 A.3 Proof of Lemma 1

Proof

We will just consider the odd strategy in the proof, everything translates seamlessly. By Hölders inequality, we have for any \(p \in {\mathbb {N}}\)

For any \(B \in \overline{B}_\mathrm{odd},\) by adapting the proof of Lemma 1.10 in Rigollet and Hütter (2015), we have by the sure positivity of the rate function that

such that in particular

Optimizing over p leads to \(p^* = \log |{\overline{B}}_\mathrm{odd}| \), which together with the bound gives

implying the result. \(\square \)

B Parameters of the stochastic volatility model

1.1 B.1 Choice of parameters

The daily asset prices \((p_n)_{n=1,2,\ldots , N}\) is collected from eSignal Data Access and transformed to log returns via the relation \(y_n = \log p_n / p_{n-1}\). As our paper is centered on latent state estimation, we have foregone a full Bayesian parameter estimation. Instead, for all unobserved quantities we have used the parameters proposed by Ishihara and Omori (2012) Section 3, these are based on previous empirical studies by the authors and quite closely correspond to what is inferred during their parameter estimation procedure on S&P 500 data. In particular, we set the persistence parameter to \(\alpha = 0.99\) and use \(\nu = 15\) degrees of freedom for the multivariate t-distribution.

For the unobserved volatility covariance matrix \(\Sigma _\eta \), the cross-asset correlation is set at 0.7, and the same standard deviation is assumed for each asset, 0.2. For the leverage matrix, the intra-asset parameter is set at \(\Sigma _{\rho , ii} = -0.4\) and cross-asset leverage \(\Sigma _{\rho ,ij} = -0.3\).

We estimate the return covariance matrix \(\Sigma _\epsilon \) directly from the observed log returns over the entire period. The values we arrive at from this procedure is again close to what is empirically observed, indicating it is a reasonable parameter value to use for a latent states estimate. If we were to run a spatially blocked scheme, we could for example apply a hierarchical clustering algorithm like the algorithm of Ward (1963) to learn the relationship between the assets, and then rearrange the order of the assets to match the order of the clustering procedure. As discussed this should have a beneficial effect on mixing, as the blocks become more localized.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Goldman, J.V., Singh, S.S. Spatiotemporal blocking of the bouncy particle sampler for efficient inference in state-space models. Stat Comput 31, 68 (2021). https://doi.org/10.1007/s11222-021-10034-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11222-021-10034-6