Abstract

Stochastic approximation methods play a central role in maximum likelihood estimation problems involving intractable likelihood functions, such as marginal likelihoods arising in problems with missing or incomplete data, and in parametric empirical Bayesian estimation. Combined with Markov chain Monte Carlo algorithms, these stochastic optimisation methods have been successfully applied to a wide range of problems in science and industry. However, this strategy scales poorly to large problems because of methodological and theoretical difficulties related to using high-dimensional Markov chain Monte Carlo algorithms within a stochastic approximation scheme. This paper proposes to address these difficulties by using unadjusted Langevin algorithms to construct the stochastic approximation. This leads to a highly efficient stochastic optimisation methodology with favourable convergence properties that can be quantified explicitly and easily checked. The proposed methodology is demonstrated with three experiments, including a challenging application to statistical audio analysis and a sparse Bayesian logistic regression with random effects problem.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Maximum likelihood estimation (MLE) is central to modern statistical science. It is a cornerstone of frequentist inference (Berger and Casella 2002), and also plays a fundamental role in parametric empirical Bayesian inference (Carlin and Louis 2000; Casella 1985). For simple statistical models, MLE can be performed analytically and exactly. However, for most models, it requires using numerical computation methods, particularly optimisation schemes that iteratively seek to maximise the likelihood function and deliver an approximate solution. Following decades of active research in computational statistics and optimisation, there are now several computationally efficient methods to perform MLE in a wide range of classes of models (Gentle et al. 2012; Boyd and Vandenberghe 2004).

In this paper, we consider MLE in models involving incomplete or “missing” data, such as hidden, latent or unobserved variables. Expectation maximisation (EM) optimisation methods (Dempster et al. 1977) are common strategies to obtain approximate solutions in this setting. However, they rely on a maximization step of a surrogate which is not possible in most models (Robert and Casella 2004). Several strategies can be considered to overcome this issue. In particular, we consider Robbins–Monro stochastic approximation (SA) schemes that use a Monte Carlo stochastic simulation algorithm to approximate the gradients that drive the optimisation procedure (Robbins and Monro 1951; Delyon et al. 1999; Kushner and Yin 2003; Fort et al. 2011). When combined with Markov chain Monte Carlo (MCMC) algorithm, SA schemes provide a powerful general methodology which is simple to implement, has a detailed convergence theory (Atchadé et al. 2017), and can be easily applied to a wide range of moderately low-dimensional models.

The expectations and demands on SA methods constantly rise as we seek to address larger problems and provide stronger theoretical guarantees on the solutions delivered. Unfortunately, existing SA methodology and theory do not scale well to large problems. The reasons are twofold. First, the family of MCMC kernels driving the SA scheme needs to satisfy uniform geometric ergodicity conditions that are usually difficult to verify for high-dimensional MCMC kernels. Second, the existing theory requires using asymptotically exact MCMC methods. For large models, these are usually high-dimensional Metropolis–Hastings methods such as the Metropolis-adjusted Langevin algorithm (Roberts and Tweedie 1996) or Hamiltonian Monte Carlo (Girolami and Calderhead 2011; Durmus et al. 2017), which are sometimes difficult to calibrate within the SA scheme to achieve a prescribed acceptance rate (both automatic and manual calibration can be difficult as the target density changes with each iteration of the SA scheme). For these reasons, practitioners rarely use SA schemes with Markovian disturbances in high-dimensional settings.

In this paper, we propose to address these limitations by exploiting recent developments in inexact MCMC methodology to drive the SA scheme, particularly unadjusted Langevin algorithms, which have easily verifiable geometric ergodicity conditions and are easy to calibrate (Durmus and Moulines 2017; Dalalyan 2017). This will allow us to design a high-dimensional stochastic optimisation scheme with favourable convergence properties that can be quantified explicitly and easily checked.

Our contributions are structured as follows: Sect. 2 formalises the class of MLE problems considered and presents the proposed stochastic optimisation method, which is based on a SA approach driven by an unadjusted Langevin algorithm. Detailed theoretical convergence results for the method are reported in Sect. 3, which also describes a generalisation of the proposed methodology and theory to other inexact Markov kernels. Section 4 presents three numerical experiments that demonstrate the proposed methodology in a variety of scenarios. The online supplementary material includes additional theoretical results, postponed proofs and some details on computational aspects.

2 The stochastic optimisation via unadjusted Langevin method

The proposed Stochastic Optimisation via Unadjusted Langevin (SOUL) method is useful for solving maximum likelihood estimation problems involving intractable likelihood functions. The method is a SA iterative scheme that is driven by an unadjusted Langevin MCMC algorithm. Langevin algorithms are very efficient in high dimensions and lead to an SA scheme that inherits their favourable convergence properties.

2.1 Maximum marginal likelihood estimation

Let \(\varTheta \) be a convex closed set in \(\mathbb {R}^{d_{\varTheta }}\). The proposed optimisation method is well-suited for solving maximum likelihood estimation problems of the form

where the parameter of interest \(\theta \) is related to the observed data \(y \in \textsf {Y}\) by a likelihood function \(p(y,x|\theta )\) involving an unknown quantity \(x \in \mathbb {R}^d\), which is removed from the model by marginalisation. More precisely, we consider problems where the resulting marginal likelihood \(\textstyle {p(y|\theta ) = \int _{\mathbb {R}^d} p(y,x|\theta )\text {d}x}\) is computationally intractable, and focus on models where the dimension of x is large, making the computation of (1) even more difficult. For completeness, we allow the use of a penalty function \(g : \varTheta \rightarrow \mathbb {R}\), or set \(g=0\) to recover the standard maximum likelihood estimator.

As mentioned previously, the maximum marginal likelihood estimation problem (1) arises in problems involving latent or hidden variables (Dempster et al. 1977). It is central to parametric empirical Bayes approaches that base their inferences on the pseudo-posterior distribution given by \(p(x|y,\theta ^{\star }) = {p(y,x|\theta ^{\star })}/{p(y|\theta ^{\star })}\) (Carlin and Louis 2000; Vidal et al. 2019). The same problem also arises in hierarchical Bayesian maximum-a-posteriori estimation of \(\theta \) given y, with marginal posterior \(p(\theta |y)\propto p(y|\theta )p(\theta )\) where \(p(\theta )\) denotes the prior for \(\theta \); in that case \(g(\theta ) = -\log p(\theta )\) (Berger and Casella 2002).

Finally, in this paper we assume that \(\log p(y,x|\theta )\) is continuously differentiable with respect to x and \(\theta \), and that g is also continuously differentiable with respect to \(\theta \). A generalisation of the proposed methodology to non-smooth models is presented in Vidal et al. (2019), De Bortoli et al. (2020b) which focus on non-smooth statistical imaging models.

2.2 Stochastic approximation methods

The scheme we propose to solve the optimisation problem (1) is derived in the SA framework (Delyon et al. 1999), which we recall below.

Starting from any \(\theta _0 \in \varTheta \), SA schemes seek to solve (1) iteratively by computing a sequence \((\theta _n)_{n \in \mathbb {N}}\) associated with the recursion

where \(\bar{H}_{\theta _n}\) is some estimator of the intractable gradient \(\theta \mapsto \nabla _{\theta }\log p(y|\theta )\) at \(\theta _n\), \(\varPi _{\varTheta }\) denotes the projection onto \(\varTheta \), and \((\delta _n)_{n \in \mathbb {N}^*} \in (\mathbb {R}_+^*)^{\mathbb {N}^*}\) is a sequence of stepsizes. From an optimisation viewpoint, iteration (2) is a stochastic generalisation of the projected gradient ascent iteration (Boyd and Vandenberghe 2004) for models with intractable gradients. For \(n \in \mathbb {N}\), Monte Carlo estimators \(\bar{H}_{\theta _n}\) for \(\nabla _{\theta }\log p(y|\theta )\) at \(\theta _n\) are derived from Fisher’s identity

which suggests to consider

where \((m_n)_{n \in \mathbb {N}}\in \left( \mathbb {N}^*\right) ^{\mathbb {N}}\) is a sequence of batch sizes and \(X_{1:m_n}^n = (X_k^n)_{k \in \lbrace 1, \dots , m_n \rbrace }\) is either a sequence of exact Monte Carlo samples from \(p(x|y,\theta _n) = p(x,y|\theta _n)/p(y|\theta _n)\), or a Markov chain targeting this distribution.

Given a sequence \((\theta _{k})_{k \in \{1,\ldots ,N\}}\) generated by using (2), an approximate solution of (1) can then be obtained by calculating, for example, the average of the iterates, i.e.

This estimate converges a.s to a solution of (1) as \(N \rightarrow \infty \) provided that some conditions on \(p(y|\theta )\), \(p(x|y,\theta )\), g, \((\delta _n)_{n \in \mathbb {N}}\), and \(\bar{H}_{\theta _n}\) are fulfilled. Indeed, following three decades of active research efforts in computational statistics and applied probability, we now have a good understanding of how to construct efficient SA schemes, and the conditions under which these schemes converge (see for example Benveniste et al. 1990; Fort and Moulines 2003; Duchi et al. 2011; Andrieu and Moulines 2006; Nemirovski et al. 2008; Atchadé et al. 2017).

SA schemes are successfully applied to maximum marginal likelihood estimation problems where the latent variable x has a low or moderately low dimension. However, they are seldomly used when x is high-dimensional because this usually requires using high-dimensional MCMC samplers that, unless carefully calibrated, exhibit poor convergence properties. Unfortunately, calibrating the samplers within a SA scheme is challenging because the target density \(p(x|y,\theta _n)\) changes at each iteration. As a result, it is, for example, difficult to use Metropolis–Hastings algorithms that need to achieve a prescribed acceptance probability range. Additionally, the conditions for convergence of MCMC SA schemes are often difficult to verify for high-dimensional samplers.

As mentioned previously, we propose to address these difficulties by using modern inexact Langevin MCMC samplers to drive (3). These samplers have received a lot of attention lately because they can exhibit excellent large-scale convergence properties and empirically outperform their Metropolised counterparts in many situations (see Durmus et al. 2018 for an extensive comparison in the context of Bayesian imaging models). Stimulated by developments in high-dimensional statistics and machine learning, we now have detailed theory for these algorithms, including explicit and easily verifiable geometric ergodicity conditions (Durmus and Moulines 2017; Dalalyan 2017; Eberle and Majka 2018; De Bortoli and Durmus 2019). This will allow us to design a stochastic optimisation scheme with favourable convergence properties that can be quantified explicitly and easily checked.

2.3 Langevin Markov chain Monte Carlo methods

Langevin MCMC schemes to sample from \(p(x|y,\theta )\) are based on stochastic continuous dynamics \((\varvec{X}_t^{\theta })_{t \ge 0}\) for which the target distribution \(p(x|y,\theta )\) is invariant. One fundamental example is the Langevin dynamics solution of the following stochastic differential equation (SDE)

where \((\varvec{B}_t)_{t \ge 0}\) is a standard d-dimensional Brownian motion. Under mild assumptions on \(p(x|y,\theta )\), this SDE admits a strong solution for which \(p(x|y,\theta )\) is the invariant probability measure. In addition, there are detailed explicit convergence results for \((\varvec{X}_t^{\theta })_{t \ge 0}\) to \(p(x|y,\theta )\) under different metrics (Eberle 2016; Eberle et al. 2017). Note that other SDEs satisfy these favorable properties such as the kinetic Langevin dynamics (Dalalyan and Riou-Durand 2018).

However, sampling solutions for these continuous-time dynamics is not feasible in general. Therefore, discretisations have to be used instead. In this paper, we mainly focus on the Euler-Maruyama discrete-time approximation of (5), known as the Unadjusted Langevin Algorithm (ULA) (Roberts and Tweedie 1996), given by

where \(\gamma > 0\) is the discretisation time step and \((Z_k)_{k \in \mathbb {N}^*}\) is a i.i.d. sequence of d-dimensional zero-mean Gaussian random variables with identity covariance matrix. We will use this Markov kernel to drive our SA schemes.

Observe that (6) does not exactly target \(p(x|y,\theta )\) because of the bias introduced by the discrete-time approximation. Computational statistical methods have traditionally addressed this issue by complementing (6) with a Metropolis–Hastings correction step to asymptotically remove the bias (Roberts and Tweedie 1996). This correction empirically deteriorates the convergence properties of the chain and may lead to poor non-asymptotic estimation results, particularly in very high-dimensional settings (see for example Durmus et al. 2018). However, until recently it was considered that using (6) without a correction step was too risky. Fortunately, recent works have established detailed theoretical guarantees for (6) that do not require using any correction (Dalalyan 2017; Durmus and Moulines 2017). In addition, new explicit convergence bounds have been derived under various assumptions on the target probability distribution (Dalalyan 2017; Cheng et al. 2018; Cheng and Bartlett 2017; Lee et al. 2018). In addition, accelerations and variations of ULA have been studied, both theoretically and experimentally, yielding better ergodic convergence rates (Maddison et al. 2018; Ma et al. 2019; Muehlebach and Jordan 2019; Dalalyan and Riou-Durand 2018). However, such extensions are out of the scope of the present work whose main contribution is not to provide new results to the existing Markov chain theory but to use the theoretical guarantees of ULA in order to study SA schemes driven by this efficient but inexact sampler.

Note also that the methodology we propose and analyse in this paper is fundamentally different from the Stochastic Gradient Langevin dynamics (Vollmer et al. 2016; Teh et al. 2016; Welling and Teh 2011a; Patterson and Teh 2013; Ahn et al. 2014, 2012) which is an MCMC algorithm to sample from \(p(x|y,\theta )\) using estimators of \(\nabla _{x} \log p(x|y,\theta )\). Finally, it should be highlighted that, in an independent line of work, a similar methodology is studied under a different set of assumptions in (Karimi et al. 2019).

2.4 The SOUL algorithm and main results

We are now ready to present the proposed Stochastic Optimization via Unadjusted Langevin (SOUL) methodology. Let \((\delta _n)_{n \in \mathbb {N}^*} \in (\mathbb {R}_+^*)^{\mathbb {N}^*}\) and \((m_n)_{n \in \mathbb {N}} \in \left( \mathbb {N}^*\right) ^{\mathbb {N}}\) be the sequences of stepsizes and batch sizes defining the SA scheme (2)–(3). For any \(\theta \in \varTheta \) and \(\gamma >0\), denote by \(\text {R}_{\gamma , \theta }\) the Langevin Markov kernel associated with (6) to approximately sample from \(p(x|y,\theta )\), and by \((\gamma _n)_{n \in \mathbb {N}} \in (\mathbb {R}_+^*)^{\mathbb {N}}\) the sequence of discrete time steps used.

Formally, starting from some \(X_0^0 \!\in \! \mathbb {R}^d\) and \(\theta _0 \!\in \! \!\varTheta \!\) we define recursively \((\lbrace X_k^n: k \!\in \! \lbrace 0, \!\dots \!, m_n \rbrace \rbrace , \theta _n)_{n \in \mathbb {N}}\) on a probability space \((\varOmega ,\mathcal {F},\mathbb {P})\): for any \(n \in \mathbb {N}\) \(X_{0:m_n}^n\) is a Markov chain with Markov kernel \(\text {R}_{\gamma _n, \theta _n}\), \(X_0^n \!=\! X_{m_{n-1}}^{n-1}\) given \(\mathcal {F}_{n-1}\), and

where for any \(n \in \mathbb {N}\) and \(k \in \{1, \dots , m_n\}\), \(\bar{H}_{\theta _n}(X_k^n) = \nabla _{\theta } \log p(X_k^n,y |\theta _n)\) and we recall that \(\varPi _{\varTheta }\) is the projection onto \(\varTheta \), and for all \(n \in \mathbb {N}\)

Note that such a construction is always possible by Kolmogorov extension theorem (Kallenberg 2006, Theorem 5.16), hence for any \(n \in \mathbb {N}\), \(\theta _{n+1}\) is \(\mathcal {F}_n\)-measurable. Then, as mentioned previously, we compute a sequence of approximate solutions of (1) by calculating the average of the iterates (4). The pseudocode associated with the proposed SOUL method is presented in Algorithm 1 below. Observe that, for additional efficiency, instead of generating independent Markov chains at each SA iteration, we warm-start the chains by setting \(X_0^n = X_{m_{n-1}}^{n-1}\), for any \(n \in \{1,\ldots , N\}\).

In Sect. 3 we prove the following results for SOUL, which we derive in more generality by analysing a broader class of methods where the Markov kernel associated with ULA is replaced by any regular and geometrically ergodic Markov kernel, see H1 and H2. For any \(a \in \mathbb {R}^d\) and \(R \ge 0\) denote by \({\text {B}}(a,R)\) the open ball centered at a and radius \(R \ge 0\) and \(\overline{\text {B}}(a,R)\) its closure.

Theorem 1

Assume that \(\varTheta \) is convex, compact and \(\varTheta \subset \overline{\text {B}}(0,R)\) with \(R \ge 0\). In addition, assume that \(\theta \mapsto -\log (p(y|\theta )) + g(\theta ) \in \text {C}^2(\varTheta , \mathbb {R})\) and is convex, that \((x, \theta ) \mapsto \log (p(x,y|\theta )) \in \text {C}^2(\mathbb {R}^d \times \varTheta , \mathbb {R})\) and that there exists \(\texttt {m}_1> 0\) such that for any \(\theta \in \varTheta \) and \(x \in \mathbb {R}^{d}\)

Assume that there exist \(\texttt {L}_1, \texttt {L}_2, R_1, \texttt {c}\ge 0\) and \(\texttt {m}_2> 0\) such that for any \(\theta \in \varTheta \) and \(x \in \mathbb {R}^d\) we have

Let \((\gamma _n,\delta _n)_{n \in \mathbb {N}} \in ((\mathbb {R}_+^*)^2)^{\mathbb {N}}\) non-increasing, \((m_n)_{n \in \mathbb {N}} \in (\mathbb {N}^*)^{\mathbb {N}}\) non-decreasing and \(\sum _{n \in \mathbb {N}} \delta _n = +\infty \). Assume

or alternatively

Then almost surely, \(\hat{\theta }_{\infty } = \lim _{N \rightarrow +\infty } \hat{\theta }_N\) exists and \(\hat{\theta }_{\infty } \in \hbox {arg min}_{\varTheta } f\) for \(\delta _0, \gamma _0\) sufficiently small.

Note that (8) only holds if \(\lim m_n = +\infty \) (increasing batch size setting) whereas (9) holds even if \(m_n=1\) under tighter conditions on \((\delta _n)_{n \in \mathbb {N}}\) and \((\gamma _n)_{n \in \mathbb {N}}\) (fixed batch size setting). For constant sequences \((\gamma _n)_{n \in \mathbb {N}}\) and \((\delta _n)_{n \in \mathbb {N}}\), Theorem 1 does not apply and \((f(\hat{\theta }_N))_{N \in \mathbb {N}}\) is biased. However, we can control the asymptotic bias using the following result.

Theorem 2

Under the same conditions on \(\varTheta \), \((x, \theta ) \mapsto \log (p(x,y|\theta ))\) and \(\theta \mapsto -\log (p(y|\theta )) + g(\theta )\) as in Theorem 1, there exist \(\bar{\delta }, \bar{\gamma }>0\) and \(C \ge 0\) such that if for any \(n \in \mathbb {N}\), \(\delta _n = \delta \in (0, \bar{\delta }]\), \(\gamma _n = \gamma \in (0, \bar{\gamma }]\) and \(m_n = 1\) then \(\limsup _{N \rightarrow +\infty } \lbrace \mathbb {E}[ f(\hat{\theta }_N) ] - \min _{\theta } f \rbrace \le C \gamma ^{1/2}\).

We believe Theorem 2 to be highly relevant to practitioners, as we often empirically observe that the best trade-off between accuracy and computing-time is obtained by setting \((\gamma _n)_{n \in \mathbb {N}}\) to a constant and relatively large value (determined, e.g. from a bound on the Lipschitz constant of the target density). In our experience, this leads to a fast converging sequence that stabilises quickly close to the MLE, albeit with some bias.

The detailed theoretical analysis of the proposed SOUL method and its generalization is reported to Sect. 3. To conclude, Sect. 4 demonstrates the proposed methodology with three numerical experiments related to high-dimensional logistic regression and statistical audio analysis with sparsity promoting priors. Finally, we also study the case where f is not convex. In that case, we use the results of Kushner and Yin (2003) to establish that \((\theta _n)_{n \in \mathbb {N}}\) converges a.s to a stationary point of the projected ordinary differential equation associated with \(\nabla f\) and \(\varTheta \). We postpone this result to Section S3 in De Bortoli et al. (2019).

3 Theoretical convergence analysis for SOUL, and generalisation to other inexact MCMC kernels (SOUK)

In this section, we state our main theoretical results in a broader framework than the one introduced in Sect. 2. After establishing notation and conventions in Sects. 3.1, 3.2 presents the general stochastic optimisation setting considered in this paper, which encompasses the MLE estimation problem (1). In order to address this class of optimisation problems, we develop a generalisation of SOUL: the Stochastic Optimisation via Unadjusted Kernel (SOUK) method. In this class of methods, ULA is replaced by a generic inexact Markov chain Monte Carlo method. We then establish our main results regarding the convergence properties of SOUK in Sect. 3.3. We conclude the section by showing that our general results apply to the specific MLE optimisation problem (1), and to the specific Langevin algorithm (6) used in SOUL in Sect. 3.4. All the proofs are postponed to the supplementary document.

3.1 Notation and convention

Denote by \(\mathcal {B}(\mathbb {R}^d)\) the Borel \(\sigma \)-field of \(\mathbb {R}^d\), and for \(f : \mathbb {R}^d \rightarrow \mathbb {R}\) measurable,  . For \(\mu \) a probability measure on \((\mathbb {R}^d, \mathcal {B}(\mathbb {R}^d))\) and f a \(\mu \)-integrable function, denote by \(\mu (f)\) the integral of f with respect to \(\mu \). For \(f: \ \mathbb {R}^d \rightarrow \mathbb {R}\) measurable, the V-norm of f is given by

. For \(\mu \) a probability measure on \((\mathbb {R}^d, \mathcal {B}(\mathbb {R}^d))\) and f a \(\mu \)-integrable function, denote by \(\mu (f)\) the integral of f with respect to \(\mu \). For \(f: \ \mathbb {R}^d \rightarrow \mathbb {R}\) measurable, the V-norm of f is given by  . Let \(\xi \) be a finite signed measure on \((\mathbb {R}^d,\mathcal {B}(\mathbb {R}^d))\). The V-total variation distance of \(\xi \) is defined as

. Let \(\xi \) be a finite signed measure on \((\mathbb {R}^d,\mathcal {B}(\mathbb {R}^d))\). The V-total variation distance of \(\xi \) is defined as

If \(V = 1\), then  is the total variation denoted by \(\Vert \cdot \Vert _{\mathrm{TV}}\). Let \(\textsf {U}\) be an open set of \(\mathbb {R}^d\). We denote by \(\text {C}^{k}(\textsf {U}, \mathbb {R}^p)\) the set of \(\mathbb {R}^p\)-valued k-differentiable functions, respectively the set of compactly supported \(\mathbb {R}^p\)-valued and k-differentiable functions. Let \(f : \textsf {U}\rightarrow \mathbb {R}\), we denote by \(\nabla f\), the gradient of f if it exists. f is said to me \(\texttt {m}\)-convex with \(m\ge 0\) if for all \(x,y \in \mathbb {R}^d\) and \(t \in \left[ 0,1\right] \),

is the total variation denoted by \(\Vert \cdot \Vert _{\mathrm{TV}}\). Let \(\textsf {U}\) be an open set of \(\mathbb {R}^d\). We denote by \(\text {C}^{k}(\textsf {U}, \mathbb {R}^p)\) the set of \(\mathbb {R}^p\)-valued k-differentiable functions, respectively the set of compactly supported \(\mathbb {R}^p\)-valued and k-differentiable functions. Let \(f : \textsf {U}\rightarrow \mathbb {R}\), we denote by \(\nabla f\), the gradient of f if it exists. f is said to me \(\texttt {m}\)-convex with \(m\ge 0\) if for all \(x,y \in \mathbb {R}^d\) and \(t \in \left[ 0,1\right] \),

For any \(a \in \mathbb {R}^d\) and \(R > 0\), denote \({\text {B}}(a,R)\) the open ball centered at a with radius R. Let \((\textsf {X}, \mathcal {X})\) and \((\textsf {Y}, \mathcal {Y})\) be two measurable spaces. A Markov kernel \(\text {P}\) is a mapping \(\text {K}: \ \textsf {X}\times \mathcal {Y}\rightarrow \left[ 0, 1\right] \) such that for any \(x \in \textsf {X}\), \(\text {P}(x, \cdot )\) is a probability measure and for any \(\textsf {A}\in \mathcal {Y}\), \(\text {P}(\cdot , \textsf {A})\) is measurable. For any probability measure \(\mu \) on \((\textsf {X}, \mathcal {X})\) and measurable function \(f : \textsf {Y}\rightarrow \mathbb {R}_+\) we denote \(\mu \text {P}= \int _{\textsf {X}} \text {P}(x, \cdot ) \text {d}\mu (x)\) and \(\text {P}f = \int _{\textsf {Y}} f(y) \text {P}(\cdot , \text {d}y)\). In what follows the Dirac mass at \(x \in \mathbb {R}^{d}\) is denoted by \(\updelta _x\) (which should not be confused with the stepsize sequence \((\delta _n)_{n \in \mathbb {N}}\)). The complement of a set \(\textsf {A}\subset \mathbb {R}^d\), is denoted by \(\textsf {A}^{\text {c}}\). All densities are w.r.t. the Lebesgue measure unless stated otherwise.

3.2 Stochastic Optimization with inexact MCMC methods

We consider the problem of minimizing a function \(f : \varTheta \rightarrow \mathbb {R}\) with \(\varTheta \subset \mathbb {R}^{d_{\varTheta }}\) under the following assumption.

A 1

-

(i)

\(\varTheta \) is convex, closed, \(\varTheta \subset {\text {B}}(0,M_{\varTheta })\) for \(M_{\varTheta }>0\).

-

(ii)

There exist an open set \(\textsf {U}\subset \mathbb {R}^{d_{\varTheta }}\) and \(L_f\ge 0\) such that \(\varTheta \subset \textsf {U}\), \(f \in \text {C}^1(\textsf {U},\mathbb {R})\) and for any \(\theta _1, \theta _2 \in \varTheta \)

$$\begin{aligned} \Vert \nabla f (\theta _1) - \nabla f (\theta _2) \Vert \le L_f\Vert \theta _1 - \theta _2 \Vert \;. \end{aligned}$$ -

(iii)

For any \(\theta \in \varTheta \), there exist \(H_{\theta }: \ \mathbb {R}^d \ \rightarrow \ \mathbb {R}^{d_{\varTheta }}\) and a probability distribution \(\pi _{\theta }\) on \((\mathbb {R}^d, \mathcal {B}(\mathbb {R}^d))\) satisfying that \(\pi _{\theta }(\Vert H_{\theta }\Vert )< +\infty \) and

$$\begin{aligned} \textstyle {\nabla f(\theta ) = \int _{\mathbb {R}^d} H_{\theta }(x) \text {d}\pi _{\theta }(x) \;.} \end{aligned}$$In addition, \((\theta , x) \mapsto H_{\theta }(x)\) is measurable.

Note that for the maximum marginal likelihood estimation problem (1), f corresponds to \(\theta \mapsto -\log (p(y|\theta )) + g(\theta )\), for any \(\theta \in \varTheta \), \(H_{\theta } : x \mapsto -\nabla _{\theta } \log (p(x,y | \theta )) + \nabla g(\theta )\) and \(\pi _{\theta }\) is the probability distribution with density \(x \mapsto p(x|y, \theta )\).

To minimise the objective function f we suggest the use of a SA strategy which extends the one presented in Sect. 2. More precisely, motivated by the methodology described in Sect. 2, we propose a SA scheme which relies on biased estimates of \(\nabla f(\theta )\) through a family of Markov kernels \(\lbrace \text {K}_{\gamma , \theta }, \gamma \in (0, \bar{\gamma }] , \ \theta \in \varTheta \rbrace \), for \(\bar{\gamma }>0\), such that for any \(\theta \in \varTheta \) and \(\gamma \in (0,\bar{\gamma }]\), \(\text {K}_{\gamma , \theta }\) admits an invariant probability distribution \(\pi _{\gamma ,\theta }\) on \((\mathbb {R}^d, \mathcal {B}(\mathbb {R}^d))\). \(\bar{\gamma }\) is an extra parameter which ensures the stability of the Markov kernel. In the SOUL method, the Markov kernel \(\text {K}_{\gamma , \theta }\) stands for \(\text {R}_{\gamma , \theta }\) for any \(\gamma \in (0, \bar{\gamma }]\) and \(\theta \in \varTheta \), where \(\text {R}_{\gamma , \theta }\) is the Markov kernel associated with (6). We assume in addition that the bias associated to the use of this family of Markov kernels can be controlled with respect to to \(\gamma \) uniformly in \(\theta \), i.e. for example there exists \(C>0\) such that for all \(\gamma \in (0, \bar{\gamma }]\) and \(\theta \in \varTheta \), \(\Vert \pi _{\gamma , \theta } - \pi _{\theta } \Vert _{\mathrm{TV}} \le C \gamma ^{\alpha }\) with \(\alpha > 0\).

Let now \((\delta _n)_{n \in \mathbb {N}} \in (\mathbb {R}_+^*)^{\mathbb {N}}\) and \((m_n)_{n \in \mathbb {N}} \in \left( \mathbb {N}^*\right) ^{\mathbb {N}}\) be sequences of stepsizes and batch sizes which will be used to define the sequence relatively to the variable \(\theta \) similarly to (2) and (3). Let \((\gamma _n)_{n \in \mathbb {N}} \in (\mathbb {R}_+^*)^{\mathbb {N}}\) be a sequence of stepsizes which will be used to get approximate samples from \(\pi _{\theta _n}\), similarly to (6). Starting from \(X_0^0 \in \mathbb {R}^d\) and \(\theta _0 \in \varTheta \), we define on a probability space \((\varOmega ,\mathcal {F},\mathbb {P})\), \((X_{1:m_n}^n, \theta _n)_{n \in \mathbb {N}}\) by the following recursion for \(n \in \mathbb {N}\) and \(k \in \lbrace 0, \dots , m_n -1 \rbrace \)

where \(\varPi _{\varTheta }\) is the projection onto \(\varTheta \) and \(\mathcal {F}_n\) is defined by (7). By (10), for any \(n \in \mathbb {N}\), \(\theta _{n+1}\) is \(\mathcal {F}_n\)-measurable. Then, the sequence of approximate minimisers of f is given by \((\hat{\theta }_N)_{N \in \mathbb {N}}\), see (4). The recursion (10) defines the SOUK methodology.

Under different sets of conditions on \(f, H, (\delta _n)_{n \in \mathbb {N}}\), \((\gamma _n)_{n \in \mathbb {N}}\) and \((m_n)_{n \in \mathbb {N}}\) we obtain that \((\theta _n)_{n \in \mathbb {N}}\) converges a.s to an element of \(\hbox {arg min}_{\varTheta } f\). In particular in this section we consider the case where f is assumed to be convex. We establish that if \((\gamma _n)_{n \in \mathbb {N}}\) and \((\delta _n)_{n \in \mathbb {N}}\) go to 0 sufficiently fast, \(\mathbb {E}[ f(\hat{\theta }_N) ] - \min _{\varTheta } f\) goes to 0 with a quantitative rate of convergence. In the case where \((\gamma _n)_{n \in \mathbb {N}}\) is held fixed, i.e. for all \(n \in \mathbb {N}\), \(\gamma _n = \gamma \), we show that while \(\mathbb {E}[ f(\hat{\theta }_N) ]\) does not converge to 0, there exist \(C, \alpha >0\) such that \(\limsup _{N \rightarrow +\infty } \mathbb {E}[ f(\hat{\theta }_N) ] - \min _{\varTheta } f \le C\gamma ^{\alpha }\). In the case where f is non-convex, we apply some results from stochastic approximation (Kushner and Yin 2003) which establish that the sequence \((\theta _n)_{n \in \mathbb {N}}\) converges a.s to a stationary point of the projected ordinary differential equation associated with \(\nabla f\) and \(\varTheta \). Note that we restrict ourselves to the study of the convergence of \((\theta _n)_{n \in \mathbb {N}}\) and do not derive non-asymptotic bounds. We postpone this result to Section S3 in De Bortoli et al. (2019), since it involves a theoretical background which we think is out of the scope of the main document.

The SOUK methodology allows for the use of Markov kernels beyond the one associated with the ULA algorithm considered in Sect. 2.4. Important examples include the Moreau Yosida ULA and Proximal ULA algorithms see De Bortoli et al. (2020a), De Bortoli et al. (2020b). We are currently investigating application of the SOUK framework using other samplers, in particular the Stochastic Gradient Langevin Dynamics (Welling and Teh 2011b), the underdamped Langevin algorithm (Ma et al. 2019) and the Hamiltonian Monte Carlo algorithm (Girolami and Calderhead 2011; Durmus et al. 2017; Maddison et al. 2018).

Finally note that this general optimisation setting encompasses many cases of interest which are generalizations of the SOUL algorithm. If \((\textsf {W}, \mathcal {W}, \mu _{\textsf {W}})\) is a probability space and \(f(\theta ) = \int _{\textsf {W}} \hat{f}(\theta , w) \text {d}\mu _{\textsf {W}}(w)\) for any \(\theta \in \varTheta \), with \(\hat{f}\) such that for any \(w \in \textsf {W}\), \(\hat{f}(\cdot , w)\) satisfies A1 with \(\pi _{\theta } \leftarrow \text {P}_{\theta }(w, \cdot )\), \(H_{\theta } \leftarrow H_{\theta }(\cdot , w)\), where for any \(\theta \in \varTheta \), \(\text {P}_{\theta }: \textsf {W}\times \mathcal {X}\rightarrow \left[ 0,1\right] \) is a Markov kernel. Assume that \(\int _{\textsf {W}} \int _{\mathbb {R}^{d}} \Vert H_{\theta }(x, w)\Vert \text {P}_{\theta }(w, \text {d}x) \text {d}\mu _{\textsf {W}}(w) < + \infty \) for any \(\theta \in \varTheta \). Then, we can consider the following algorithm

where \(\{\text {K}_{\gamma , \theta , w}\,:\;\theta \in \varTheta , \ \gamma \in \left( 0, \bar{\gamma }\right] , w \in \textsf {W}\}\) is a family of Markov kernels. Similar convergence guarantees and control of the bias as the ones established in Sect. 3.3 can be obtained for this algorithm which allows to tackle the case where f can be written as a sum of functions.

3.3 Main results

We impose a stability condition on the stochastic process \(X_{1:m_n}^n\) defined by (10) and that for any \(\gamma \in \left( 0, \bar{\gamma }\right] \) and \( \theta \in \varTheta \) the iterates of \(\text {K}_{\gamma , \theta }\) are close enough to \(\pi _{\theta }\) after a sufficiently large number of iterations.

H 1

There exists a measurable function \(V : \mathbb {R}^d \rightarrow \left[ 1,+\infty \right) \) satisfying the following conditions.

-

(i)

There exists \(A_1 \ge 1\) such that for any \(n,p \in \mathbb {N}\), \(k \in \{0, \dots , m_n \}\)

-

(ii)

For any \(\gamma \in \left( 0,\bar{\gamma }\right] \), \(\theta \in \varTheta \), \(\text {K}_{\gamma ,\theta }\) admits a stationary distribution \(\pi _{\gamma ,\theta }\) and there exist \(A_2, A_3\ge 1\), \(\rho \in \left[ 0,1\right) \) such that for any \(\gamma \in \left( 0,\bar{\gamma }\right] \), \(\theta \in \varTheta \), \(x \in \mathbb {R}^d\) and \(n \in \mathbb {N}\)

-

(iii)

There exists \(\varvec{\varPsi }: \ \mathbb {R}_+^{\star } \rightarrow \mathbb {R}_+\) such that for any \(\gamma \in \left( 0, \bar{\gamma }\right] \) and \(\theta \in \varTheta \),

.

.

H1-(ii) is an ergodicity condition in V-norm for the Markov kernel \(\text {K}_{\gamma , \theta }\) uniform in \(\theta \in \varTheta \). There exists an extensive literature on the conditions under which a Markov kernel is ergodic (Meyn and Tweedie 1992; Douc et al. 2018). Many MCMC algorithms enjoy geometric ergodicity such as the independence sampler (Tierney 1994), the Random Walk Metropolis–Hastings algorithm (Jarner and Hansen 2000), the Hamiltonian Monte-Carlo algorithm (Durmus et al. 2017) or the ULA algorithm (Dalalyan 2017; Durmus and Moulines 2017). However, obtaining bounds which are independent from \(\theta \in \varTheta \) can be arduous. In Sect. 3.4, we show that such bounds can be established for ULA under regularity and curvature conditions on the family of potentials \(\{U_{\theta }\,:\;\theta \in \varTheta \}\) if for any \(\theta \in \varTheta \), \(\pi _{\theta }\) admits a density proportional to \(x \mapsto \exp [-U_{\theta }(x)]\). A popular way to establish geometric ergodicity is to derive minorization and Foster-Lyapunov drift conditions (Hairer and Mattingly (2011); De Bortoli and Durmus (2019)) which can be verified on a case-by-case basis depending on the Markov kernel at hand, see Douc et al. (2018) for instance. H1-(iii) ensures that the distance between the invariant measure \(\pi _{\gamma , \theta }\) of the Markov kernel \(\text {K}_{\gamma , \theta }\) and \(\pi _{\theta }\) can be controlled uniformly in \(\theta \). We show that this condition holds in the case of the Langevin Monte Carlo algorithm in Proposition S15 in De Bortoli et al. (2019). We now state our mains results.

Theorem 3

(Increasing batch size 1) Assume A1 and that f is convex. Let \((\gamma _n)_{n \in \mathbb {N}}\), \((\delta _n)_{n \in \mathbb {N}}\) be sequences of non-increasing positive real numbers and \((m_n)_{n \in \mathbb {N}}\) be a sequence of positive integers satisfying \(\delta _0 < 1/L_f\), \(\gamma _0 < \bar{\gamma }\) and

Let \((\theta _n)_{n \in \mathbb {N}}\) and \((X_{1:m_n}^n)_{n \in \mathbb {N}}\) be given by (10). Assume in addition that H1 is satisfied and that for any \(\theta \in \varTheta \) and \(x \in \mathbb {R}^d\), \(\Vert H_{\theta }(x)\Vert \le V^{1/2}(x)\). Then, the following statements hold:

-

(a)

\((\theta _n)_{n \in \mathbb {N}}\) converges a.s to some \(\theta ^{\star } \in \hbox {arg min}_{\varTheta } f\) ;

-

(b)

furthermore, a.s there exists \(C\ge 0\) such that for any \(n \in \mathbb {N}^*\)

$$\begin{aligned} \textstyle {f(\hat{\theta }_n) - \min _{\varTheta } f \le \left. C \bigg /\left( \sum _{k=1}^n \delta _k \right) \right. \;.} \end{aligned}$$

Proof

The proof is postponed to Section S1.1 in De Bortoli et al. (2019). \(\square \)

Note that in (10), \(X_0^n = X_{m_{n-1}}^{n-1}\) for \(n \in \mathbb {N}^*\). This procedure is referred to as warm-start in the sequel. An inspection of the Proof of Theorem 3 shows that \(X_0^n\) could be any random variable independent from \(\mathcal {F}_{n-1}\) for any \(n \in \mathbb {N}\) with \(\sup _{n \in \mathbb {N}^*} \mathbb {E}\left[ V(X_0^n) \right] < +\infty \). It is not an option in the fixed batch size setting of Theorem 5, where the warm-start procedure is crucial for the convergence to occur.

We extend this theorem to non-convex objective function, see Section S3 in De Bortoli et al. (2019). Under the conditions of Theorem 3 with the additional assumption that \(\varTheta \) is a smooth manifold we obtain that \((\theta _n)_{n \in \mathbb {N}}\) converges a.s to some point \(\theta ^{\star }\) such that \(\nabla f(\theta ^{\star }) + \mathbf{n} =0\) with \(\mathbf{n} = 0\) if \(\theta ^{\star } \in \text {int}(\varTheta )\) and \(\mathbf{n} \in \text {N}(\theta ^{\star }, \varTheta )\) where \(\text {N}(\theta ^{\star }, \varTheta )\) is the convex cone generated by the outer normals at point \(\theta ^{\star }\), see (Aubin 2000, Chapter 2).

In the case where \(\text {K}_{\gamma , \theta } = \text {R}_{\gamma , \theta }\) is the Markov kernel associated with the Langevin update (6), under appropriate conditions Proposition S15 in De Bortoli et al. (2019) shows that H1-(iii) holds with for any \(\gamma \in \left( 0,\bar{\gamma }\right] \), \(\varvec{\varPsi }(\gamma ) = \mathcal {O}(\gamma ^{1/2})\). Assume that there exist \(a,b,c >0\) such that for any \(n \in \mathbb {N}^*\), \(\delta _n = n^{-a}\), \(\gamma _n = n^{-b}\) and \(m_n = \left\lceil n^c \right\rceil \) then (11) is equivalent to

Suppose \(a \in \left[ 0,1\right] \) is given, (12) reads

This illustrates the trade-off between the intrinsic inaccuracy of our algorithm through the family of Markov kernels (10) which do not exactly target \(\pi _{\theta }\) and the minimization aim of our scheme. Note also that \((\delta _n)_{n \in \mathbb {N}}\) is allowed to be constant. In this worst-case scenario, the convergence is guaranteed if \(\gamma _n = n^{-2-\varsigma _1}\) and \(m_n = \left\lceil n^{3+\varsigma _2} \right\rceil \) with \(\varsigma _2> \varsigma _1 >0\).

In our next result we derive an non-asymptotic upper-bound of \((\mathbb {E}[ f(\hat{\theta }_n) - \min _{\varTheta } f ])_{n \in \mathbb {N}}\).

Theorem 4

(Increasing batch size 2) Assume A1 and that f is convex. Let \((\gamma _n)_{n \in \mathbb {N}}\), \((\delta _n)_{n \in \mathbb {N}}\) be sequences of non-increasing positive real numbers and \((m_n)_{n \in \mathbb {N}}\) be a sequence of positive integers satisfying \(\delta _0 < 1/L_f\), \(\gamma _0 < \bar{\gamma }\). Let \((\theta _n)_{n \in \mathbb {N}}\) and \((X_{1:m_n}^n)_{n \in \mathbb {N}}\) be given by (10). Assume in addition that H1 is satisfied and that for any \(\theta \in \varTheta \) and \(x \in \mathbb {R}^d\), \(\Vert H_{\theta }(x)\Vert \le V^{1/2}(x)\). Then, there exists \((E_n)_{n \in \mathbb {N}}\) such that for any \(n \in \mathbb {N}^*\)

with for any \(n \in \mathbb {N}^*\),

with \(B_1\), \(B_2\) given in Lemmas S3 and S4 in De Bortoli et al. (2019).

Proof

The proof is postponed to Section S1.2 in De Bortoli et al. (2019). \(\square \)

We recall that in the case where \(\text {K}_{\gamma , \theta } = \text {R}_{\gamma , \theta }\) is the Markov kernel associated with the Langevin update (6). Under appropriate conditions, Proposition S15 in De Bortoli et al. (2019) shows that for any \(\gamma \in \left( 0,\bar{\gamma }\right] \), \(\varvec{\varPsi }(\gamma ) = \mathcal {O}(\gamma ^{1/2})\). In that case, if there exist \(a, b,c \ge 0\) such that for any \(n \in \mathbb {N}^*\), \(\delta _n = n^{-a}\), \(\gamma _n = n^{-b}\), \(m_n = n^c\) and (12) holds, the accuracy, respectively, the complexity, of the algorithm are of orders \(\left( \sum _{k=1}^n \delta _k\right) ^{-1} = \mathcal {O}(n^{a-1})\), respectively \(\sum _{k=0}^n m_k = \mathcal {O}(n^{3(1-a) + \varsigma _2 + 1})\) for \(\varsigma _2 >0\). Therefore, for a fixed target precision \(\varepsilon >0\), it requires that \(\varepsilon = \mathcal {O}(n^{a-1})\) and the complexity reads \( \mathcal {O}(\varepsilon ^{-3} \left( \log (1/\varepsilon )/(1-a) \right) ^{1+ \varsigma _2})\). On the other hand, if we fix the complexity budget to N the accuracy is of order \(\mathcal {O}(N^{-(3 + (1 + \varsigma _2)/(1-a))^{-1}})\). These two considerations suggest to set a close to 0. In the special case where \(a = 0\), we obtain that the accuracy is of order \(\mathcal {O}(n^{-1})\), which matches the order identified in the deterministic gradient descent for convex functionals, see (Bertsekas 1997, Proposition 1.3.3) for instance in the unconstrained case. This behavior is specific to the increasing batch size setting.

Another case of interest is the fixed stepsize setting, i.e. for all \(n \in \mathbb {N}, \ \gamma _n = \gamma >0\). Assume that \( (\delta _n)_{ n \in \mathbb {N}}\) is non-increasing, \(\lim _{n \rightarrow +\infty } \delta _n = 0\) and \(\lim _{n \rightarrow +\infty } m_n = +\infty \). In addition, assume that \(\sum _{n \in \mathbb {N}^*} \delta _n = +\infty \) then, by (Pólya and Szegő 1998, Problem 80, Part I), it holds that

Therefore, we obtain that

Similarly, if the stepsize is fixed and the number of Markov chain iterates is fixed, i.e. for all \(n \in \mathbb {N}\), \(\gamma _n=\gamma \) and \(m_n = m\) with \(\gamma >0\) and \(m \in \mathbb {N}^*\), we obtain that

with \(\varvec{\varXi }_1(\gamma ) = 2B_1M_{\varTheta }\mathbb {E}[ V^{1/2}(X_0^0) ] /\gamma + 2 M_{\varTheta }\varvec{\varPsi }(\gamma )\). However if \((m_n)_{n \in \mathbb {N}}\) is constant the convergence cannot be obtained using Theorem 3. Strengthening the conditions of Theorem 3 and making use of the warm-start property of the algorithm we can derive the convergence in that case.

We now are interested in the case where the batch size is fixed, i.e. \(m_n = m_0\) for all \(n \in \mathbb {N}\). For ease of exposition we only consider \(m_0 = 1\) and let \(\tilde{X}_{n+1} = X_1^n\) for any \(n \in \mathbb {N}\). However, the general case can be adapted from the proof of the result stated below. More precisely we consider the setting where the recursion (10) can be written for any \(n \in \mathbb {N}\) as

with \(\tilde{\theta }_0 \in \varTheta \), \(\tilde{X}_0 \in \mathbb {R}^d\) and where \(\tilde{\mathcal {F}}_n\) is given by

We consider the following assumption.

A 2

There exists \(L_H \ge 0\) such that for any \(x \in \mathbb {R}^d\) and \(\theta _1, \theta _2 \in \varTheta \), \(\Vert H_{\theta _1}(x) - H_{\theta _2}(x) \Vert \le L_H \Vert \theta _1 - \theta _2\Vert V^{1/2}(x)\), where V is given in H1.

We consider a similar property as A2 on the family of Markov kernels \(\left\{ \text {K}_{\gamma , \theta }, \gamma \in \left( 0,\bar{\gamma }\right] , \theta \in \varTheta \right\} \), which weakens the assumption (Atchadé et al. 2017, H6). Indeed, we do not assume that for any \(\gamma \in \left( 0, \bar{\gamma }\right] \), \(\theta \mapsto \text {K}_{\gamma , \theta }\) is Lipschitz.

H 2

There exist \(\varvec{\varLambda }_1: \left( \mathbb {R}_+^* \right) ^2 \rightarrow \mathbb {R}_+\) and \(\varvec{\varLambda }_2: \left( \mathbb {R}_+^* \right) ^2 \rightarrow \mathbb {R}_+\) such that for any \(\gamma _1,\gamma _2 \in \left( 0,\bar{\gamma }\right] \) with \(\gamma _2 < \gamma _1\), \(\theta _1,\theta _2 \in \varTheta \), \(x \in \mathbb {R}^d\) and \(a \in \left[ 1/4, 1/2\right] \)

where V is given in H1.

The following theorem ensures convergence properties for \((\theta _n)_{n \in \mathbb {N}}\) similar to the ones of Theorem 3. The proof of this result is based on a generalization of (Fort et al. 2011, Lemma 4.2) for inexact MCMC schemes.

Theorem 5

(Fixed batch size 1) Assume A1, A2 hold and f is convex. Let \(\bar{\gamma }>0\), \((\gamma _n)_{n \in \mathbb {N}}\) and \((\delta _n)_{n \in \mathbb {N}}\) be sequences of non-increasing positive real numbers satisfying \(\delta _0 < 1/L_f\), \(\gamma _0 < \bar{\gamma }\), \( \sup _{n \in \mathbb {N}} |\delta _{n+1} - \delta _n | \delta _n^{-2} < +\infty \), \(\sum _{n=0}^{+\infty } \delta _{n+1} = +\infty \) and

Let \((\tilde{\theta }_n)_{n \in \mathbb {N}}\) and \((\tilde{X}_n)_{n \in \mathbb {N}}\) be given by (15). Assume in addition that H1 and H2 are satisfied and that for any \(\theta \in \varTheta \) and \(x \in \mathbb {R}^d\), \(\left\| H_{\theta }(x) \right\| \le V^{1/4}(x)\). Then, the following statements hold:

-

(a)

\((\tilde{\theta }_n)_{n \in \mathbb {N}}\) converges a.s to some \(\theta ^{\star } \in \hbox {arg min}_{\varTheta } f\) ;

-

(b)

furthermore, a.s there exists \(C\ge 0\) such that for any \(n \in \mathbb {N}^*\)

$$\begin{aligned} \textstyle {f(\hat{\theta }_n) - \min _{\varTheta } f \le \left. C \bigg /\left( \sum _{k=1}^n \delta _k \right) \right. \;.} \end{aligned}$$

Proof

The proof is postponed to Section S1.3 in De Bortoli et al. (2019). \(\square \)

In the case where \(\text {K}_{\gamma , \theta } = \text {R}_{\gamma , \theta }\) is the Markov kernel associated with the Langevin update (6), under appropriate conditions, Propositions S15 and S16 in De Bortoli et al. (2019) show that for any \(\gamma _1, \gamma _2 \in \left( 0,\bar{\gamma }\right] \) with \(\bar{\gamma }>0\) and \(\gamma _1 > \gamma _2\), \(\varvec{\varPsi }(\gamma _1) = \text {C}_1 \gamma _1^{1/2}\), \(\varvec{\varLambda }_1(\gamma _1, \gamma _2) = \text {C}_2 (\gamma _1/\gamma _2 - 1)\), \(\varvec{\varLambda }_2(\gamma _1, \gamma _2) = \text {C}_3 \gamma _2^{1/2}\), and \(\text {C}_1,\text {C}_2,\text {C}_3 \ge 0\). Thus, we obtain that the following series should converge

In addition, assume that \(\delta _n = n^{-a}\) and that \(\gamma _n = n^{-b}\) with \(a, b >0\). In this case, the summability conditions of Theorem 5 read

i.e. \(b \in \textsf {I}= \left( 2(1-a), a-1/2\right) \) and \(a \in \left[ 0,1\right] \). Note that \(\textsf {I}\ne \emptyset \) as soon as \(a > 5/6\). In the special setting where \(a =1\) then the convergence in Theorem 5 occurs if \(b \in \left( 0, 1/2\right) \). Since \(a > b + 1/2\) we obtain that \(\lim _{n \rightarrow +\infty } (\delta _n / \gamma _n) = 0\). This means that the stochastics gradient descent dynamic associated with \((\tilde{\theta }_n)_{n \in \mathbb {N}}\) moves slower than the sequence \((\tilde{X}_n)_{n \in \mathbb {N}}\).

Theorem 6

(Fixed batch size 2) Assume A1, A2 hold and f is convex. Let \((\gamma _n)_{n \in \mathbb {N}}\), \((\delta _n)_{n \in \mathbb {N}}\) be sequences of non-increasing positive real numbers and \((m_n)_{n \in \mathbb {N}}\) be a sequence of positive integers satisfying \(\delta _0 < 1/L_f\) and \(\gamma _0 < \bar{\gamma }\). Let \((\theta _n)_{n \in \mathbb {N}}\) and \((\tilde{X}_n)_{n \in \mathbb {N}}\) be given by (15). Assume in addition that H1 and H2 are satisfied and that for any \(\theta \in \varTheta \) and \(x \in \mathbb {R}^d\), \(\left\| H_{\theta }(x) \right\| \le V^{1/4}(x)\). Then, there exists \((\tilde{E}_n)_{n \in \mathbb {N}}\) such that for any \(n \in \mathbb {N}^*\)

with for any \(n \in \mathbb {N}^*\),

where \(C_1\), \(C_2\) and \(C_3\) are given in Lemmas S5, S8 and S7 in De Bortoli et al. (2019), respectively.

Proof

The proof is postponed to Section S1.4 in De Bortoli et al. (2019). \(\square \)

Theorem 6 improves the conclusions of Theorem 4 in the case where \(\gamma _n = \gamma >0\) for any \(n \in \mathbb {N}\). Indeed, in that case, similarly to (14), assuming that \(\lim _{n \rightarrow +\infty } \delta _n =0\), \(\sup _{n \in \mathbb {N}} \left| \delta _{n+1} - \delta _n \right| \delta _n^{-2} < +\infty \) and that for any \(\gamma \in \left( 0, \bar{\gamma }\right] \), \(\varvec{\varLambda }_1(\gamma , \gamma ) = 0\) and we obtain that

with

In the case where \(\sup _{\gamma \in \left( 0, \bar{\gamma }\right] } \varvec{\varPsi }(\gamma ) < +\infty \), \(\varvec{\varXi }_2(\gamma )\) is of order \(\mathcal {O}(\varvec{\varPsi }(\gamma ))\) and \(\varvec{\varXi }_1(\gamma )\) is of order \(\mathcal {O}(\gamma ^{-1})\). Therefore if \(\lim _{\gamma \rightarrow 0} \varvec{\varPsi }(\gamma ) = 0\), even in the fixed batch size setting, the minimum of the objective function f can be approached with arbitrary precision \(\varepsilon >0\) by choosing \(\gamma \) small enough.

Note that the conclusions of Theorem 6 are similar to the ones of (Karimi et al. 2019, Theorem 2). In Karimi et al. (2019) the main result is a bound on \(\mathbb {E}[ \sum _{k=1}^n \delta _k \left\| \nabla _{\theta } f(\theta _k) \right\| ^2 / \sum _{k=1}^n \delta _k ]\) and \(\nabla f(\theta )\) is not assumed to be convex but only related to a Lyapunov functional (Karimi et al. 2019, A1-A3). However, it is assumed that for any \(\theta \in \varTheta \) and \(\gamma \in \left( 0, \bar{\gamma }\right] \) the invariant probability distribution of the Markov kernel \(\text {K}_{\gamma , \theta }\) is \(\pi _{\theta }\), i.e. \(\varvec{\varPsi }= 0\) in H1-(iii), which is not the case in our analysis. Following this line of work, one could establish similar non-asymptotic result in the non-convex setting using the SOUK methodology. However, this is highly technical study that is beyond the scope of the present paper, and which we defer to future work.

To conclude, we highlight the differences between our work and Atchadé et al. (2017). Our results are based on the deterministic estimates derived in (Atchadé et al. 2017, Theorem 1, Theorem 2). However (Atchadé et al. 2017, Theorem 4, Theorem 6) rely on (i) the fact that for any \(\theta \in \varTheta \), \(\pi _{\theta }\) is an invariant probability measure for \(\text {K}_{\gamma , \theta }\), see (Atchadé et al. 2017, H5) and (ii) a Lipschitz regularity property for \((\gamma , \theta ) \mapsto \text {K}_{\gamma , \theta }\), see (Atchadé et al. 2017, H6). Conditions (i) and (ii) do not hold if we consider unadjusted (inexact) Markov kernels. In this work we relax (Atchadé et al. 2017, H5) and (Atchadé et al. 2017, H6) by considering H1-(ii), respectively H2. As an important example, the results of Atchadé et al. (2017) do not apply if the Markov kernel is the one associated with ULA, whereas in Sect. 3.4 we show that our results do hold.

3.4 Application to SOUL

We now apply our results to the SOUL methodology introduced in Sect. 2 where the Markov kernel \(\text {R}_{\gamma , \theta }\) with \(\gamma \in \left( 0, \bar{\gamma }\right] \) and \(\theta \in \varTheta \) is given by a Langevin Markov kernel and associated with recursion (6). We consider the following assumption on the family of probability distributions \((\pi _{\theta })_{\theta \in \varTheta }\).

L 1

For any \(\theta \in \varTheta \), there exists \(U_{\theta }: \mathbb {R}^d \rightarrow \mathbb {R}\) such that \(\pi _{\theta }\) admits a probability density function proportional to \(x \mapsto \exp [-U_{\theta }(x)]\). In addition \((\theta , x) \mapsto U_{\theta }(x)\) is continuous, \(x \mapsto U_{\theta }(x)\) is differentiable for all \(\theta \in \varTheta \) and there exists \(\texttt {L}\ge 0\) such that for any \(x,y \in \mathbb {R}^d\),

and \(\left\{ \Vert \nabla _x U_{\theta }(0) \Vert \,:\;\theta \in \varTheta \right\} \) is bounded.

In the case where \(\text {K}_{\gamma , \theta } = \text {R}_{\gamma , \theta }\) for any \(\gamma \in \left( 0, \bar{\gamma }\right] \) and \(\theta \in \varTheta \), the first line of (10) can be rewritten for any \(n \in \mathbb {N}\) and \(k \in \lbrace 0, \dots , m_n -1 \rbrace \)

given \((\gamma _n)_{n \in \mathbb {N}} \in \left( 0,\bar{\gamma }\right] ^{\mathbb {N}}\), \((m_n)_{n \in \mathbb {N}} \in \left( \mathbb {N}^*\right) ^{\mathbb {N}}\) and also \((Z_k^n)_{n \in \mathbb {N}, k \in \lbrace 1, \dots , m_n \rbrace }\) a family of i.i.d d-dimensional zero-mean Gaussian random variables with covariance matrix identity. In the following propositions, we show that the above results hold by deriving sufficient conditions under which H1 and H2 are satisfied. Consider now the following additional tail condition on \(U_{\theta }\) which ensures geometric ergodicity of \(\text {R}_{\gamma , \theta }\) for any \(\theta \in \varTheta \) and \(\gamma \in \left( 0,\bar{\gamma }\right] \), with \(\bar{\gamma }> 0\) which will be specified below.

L 2

There exist \(\texttt {m}_1, \texttt {m}_2>0\) and \(\texttt {c}, R_1\ge 0\) such that for any \(\theta \in \varTheta \) and \(x \in \mathbb {R}^d\),

L 3

There exists \(L_{U} \ge 0\) such that for any \(x\in \mathbb {R}^d\) and \(\theta _1, \theta _2 \in \varTheta \), \(\left\| \nabla _x U_{\theta _1}(x) -\nabla _x U_{\theta _2}(x) \right\| \le L_U \Vert \theta _1 - \theta _2 \Vert V(x)^{1/2}\).

The next theorems assert that under L1, L2 and L3, the SOUL algorithm introduced in Section 2 satisfies H1 and H2 and therefore Theorems 3, 4, 5 and 6 can be applied if in addition A1 and A2 hold. Under L2 define for any \(x \in \mathbb {R}^d\)

Theorem 7

Assume L1 and L2. Then, H1 holds with \(V \leftarrow V_{\text {e}}\), \(\bar{\gamma }\leftarrow \min (1,2\texttt {m}_2)\) and \(\varvec{\varPsi }(\gamma ) = D_4 \sqrt{\gamma }\) where \(D_4\) is given in Proposition S15 in De Bortoli et al. (2019).

Proof

The proof is postponed to Section S1.5 in De Bortoli et al. (2019). \(\square \)

Theorem 8

Assume L1, L2, L3 and that for any \(\theta \in \varTheta \) and \(x \in \mathbb {R}^d\), \(\left\| H_{\theta }(x) \right\| \le V_{\text {e}}^{1/4}(x)\). H2 holds with \(V \leftarrow V_{\text {e}}\) and \(\bar{\gamma }\leftarrow \min (1,2\texttt {m}_2)\) and for any \(\gamma _1,\gamma _2 \in \left( 0,\bar{\gamma }\right] \), \(\gamma _2 < \gamma _1\),

where \(D_5\) is given in Proposition S16 in De Bortoli et al. (2019).

Proof

The proof is postponed to Section S1.6 in De Bortoli et al. (2019). \(\square \)

Finally, we discuss the dependency of the complexity of the SOUL algorithm with respect to the dimension of the latent space d in the specific case where \(\{U_{\theta }\,:\;\theta \in \varTheta \}\) satisfies the following assumption.

L 4

There exist \(\texttt {m}_3>0\), \(R_3 \ge 0\) such that for any \(\theta \in \varTheta \) and \(x \in \mathbb {R}^{d}\) with \(\left\| x \right\| \ge R_3\), \(\langle \nabla _x U_{\theta }(x) , x \rangle \ge \texttt {m}_3\left\| x \right\| ^2\), \(U_{\theta }\) is convex and there exist \(C, \varpi _0 \ge 0\) such that

where C and \(\varpi _0\) are independent of the dimension d.

In what follows, we show that under L1, L3 and L4, the constants appearing in H1 and H2 depend polynomially on the dimension of the latent space d. This implies that the complexity of the SOUL algorithm is polynomial with respect to the dimension d since the constants appearing in Theorems 4 and 6 depend polynomially on the constants of H1 and H2.

Theorem 9

Assume L1, L3 and L4. Then H1 and H2 are satisfied with \(V: \ \mathbb {R}^{d} \rightarrow \left[ 1,+\infty \right) \) given for any \(x \in \mathbb {R}^{d}\) by \(V(x) = 1 + \left\| x \right\| ^4\) and \(\varvec{\varPsi }, \varvec{\varLambda }_1, \varvec{\varLambda }_2\) given by Theorems 7 and 8, respectively. Hence, the conclusions of Theorems 4 and 6 hold with \((E_n)_{n \in \mathbb {N}}\) and \((\tilde{E}_n)_{n \in \mathbb {N}}\) given for any \(n \in \mathbb {N}\) by

and

with \(\varpi \in \mathbb {N}\) and \(A\ge 0\) independent from d.

Proof

The proof is postponed to Section S1.7 in De Bortoli et al. (2019) . \(\square \)

4 Numerical results

We now demonstrate the proposed methodology with three experiments that we have chosen to illustrate a variety of scenarios. Section 4.1 presents an application to empirical Bayesian logistic regression, where (1) can be analytically shown to be a convex optimisation problem with an unique solution \(\theta ^{\star }\), and where we benchmark our MLE estimate against the solution obtained by calculating the marginal likelihood \(p(y|\theta )\) over a \(\theta \)-grid by using an harmonic mean estimator. Furthermore, Sect. 4.2 presents a challenging application related to statistical audio compressive sensing analysis, where we use SOUL to estimate a regularisation parameter that controls the degree of sparsity enforced, and where a main difficulty is the high-dimensionality of the latent space (\(d = 2900\)). Finally, Sect. 4.3 presents an application to a high-dimensional empirical Bayesian logistic regression with random effects for which the optimisation problem (1) is not convex. All experiments were carried out on an Intel i9-8950HK@2.90 GHz workstation running MATLAB R2018a.

4.1 Bayesian Logistic Regression

In this first experiment we illustrate the proposed methodology with an empirical Bayesian logistic regression problem (Wakefield 2013; Polson et al. 2013). We observe a set of covariates \(\{v_i\}^{d_y}_{i=1} \in \mathbb {R}^d\), and binary responses \(\{y_i\}^{d_y}_{i=1} \in \{0,1\}\), which we assume to be conditionally independent realisations of a logistic regression model: for any \(i \in \{1,\ldots , d_y\}\), \(y_i\) given \(\beta \) and \(v_i\) has distribution \(\text {Ber}( s(v_i^{{\text {T}}}\beta ))\), where \(\beta \in \mathbb {R}^d\) is the regression coefficient, \(\text {Ber}(\alpha )\) denotes the Bernoulli distribution with parameter \(\alpha \in [0, 1]\) and \(s(u) = \text {e}^u /(1 + \text {e}^u )\) is the cumulative distribution function of the standard logistic distribution. The prior for \(\beta \) is set to be \(\text {N}(\theta \varvec{1}_d,\sigma ^2{\text {I}}_d)\), the d-dimensional Gaussian distribution with mean \(\theta \varvec{1}_d\) and covariance matrix \(\sigma ^2 {\text {I}}_d\), where \(\theta \) is the parameter we seek to estimate, \(\varvec{1}_d = (1,\ldots ,1) \in \mathbb {R}^{d}\), \(\sigma ^2 = 5\) and \({\text {I}}_d\) is the d-dimensional identity matrix. Following an empirical Bayesian approach, the parameter \(\theta \) is computed by maximum marginal likelihood estimation using Algorithm 1 with the marginal likelihood \( p(y|\theta )\) given by

Lemma S18 in De Bortoli et al. (2019) shows that (19) is log-concave with respect to \(\theta \). In addition, using Lebesgue’s dominated convergence theorem A1 is satisfied for any convex and compact set \(\varTheta \) with \(H_{\theta }: \ \beta \mapsto - \nabla _{\theta } \log (p(\beta ,y|\theta ))\). We use the proposed SOUL methodology to estimate \(\theta ^{\star }\) for the Wisconsin Diagnostic Breast Cancer datasetFootnote 1, for which \(d_y = 683\) and \(d=10\), and where we suitably normalise the covariates. In order to assess the quality of our estimation results, we also calculate \(p(y|\theta )\) over a grid of values for \(\theta \) by using a truncated harmonic mean estimator.

To implement Algorithm 1 we derive the log-likelihood function

and obtain the following expressions for the gradients used in the MCMC steps (6) and SA steps (2), respectively

Note that \(\{\beta \mapsto - \log p(\beta |y, \theta )\,:\;\theta \in \varTheta \}\) satisfies L1 and L2. Therefore, since A1 holds and \(\theta \mapsto -\log p(y|\theta )\) is convex we get that Theorems 7 and 8 apply and the conclusions of Theorems 1 and 2 hold. For the MCMC steps, we use a fixed stepsize \(\gamma _n = 8.34 \times 10^{-5}\), and batch size \(m_n=1\), for any \(n \in \mathbb {N}\). On the other hand, we consider for the SA steps, the sequence of stepsizes \(\delta _n=60 n^{-0.8}\), \(\varTheta =\left[ -100,100\right] \) and \(\theta _0=0\). Finally, we first run 100 burn-in iterations with fixed \(\theta _n = \theta _0\) to warm-up the Markov chain, followed by 50 iterations of Algorithm 1 to warm-up the iterates. This procedure is then followed by \(N=10^6\) iterations of Algorithm 1 to compute \(\hat{\theta }_N\).

Figure 1a shows the evolution of the iterates \(\theta _n\) during the first 100 iterations. Observe that the sequence initially oscillates, and then stabilises close to \(\theta ^{\star }\) after approximately 50 iterations. Figure 1b presents the iterates \(\theta _n\) for \(n = 10^5,\ldots ,10^6\). For completeness, Fig. 2 shows the histograms corresponding to the marginal posteriors \(p(\beta _j|y,v,\hat{\theta }_N)\), for \(j = 1,\ldots , 10\), obtained as a by-product of Algorithm 1. In order to verify that the obtained estimate \(\hat{\theta }_N\) is close to the true MLE \(\theta ^{\star }\) we use a truncated harmonic mean estimator (THME) Robert and Wraith (2009) to calculate the marginal likelihood \(p(y|\theta )\) for a range of values of \(\theta \). Although obtaining the THME is usually computationally expensive, it is viable in this particular experiment as \(\beta \) is low-dimensional. Given n samples \((\beta _i)_{i \in \{1,\ldots ,n\}}\) from \(p(\beta |y,\theta )\), we obtain an approximation of \(p(y|\theta )\) by computing

with \(\bar{\beta }=n^{-1}\sum _{k=1}^{n}\beta _k\), and radius \(R \ge 0\) such that \({n^{-1} \sum _{i=1}^{n} \mathbb {1}_{\textsf {A}}(\beta _i) \approx 0.4}\). Using \(n=6 \times 10^5\) samples, we obtain the approximation shown in Fig. 3a, where in addition to the estimated points we also display a quadratic fit (corresponding to a Gaussian fit in linear scale), which we use to obtain an estimate of \(\theta ^{\star }\) (the obtained log-likelihood values are small because the dataset is large (\(d_y = 683\))).

To empirically study the estimation error involved, we replicate the experiment 1000 times. Figure 3 shows the obtained histogram of \(( \hat{\theta }_{n} )_{n \in \mathbb {N}}\), where we observe that all these estimators are very close to the true maximiser \(\theta ^{\star }\). Besides, note that the distribution of the estimation error is close to a Gaussian distribution, as expected for a maximum likelihood estimator. Also, there is a small estimation bias of the order of \(3\%\), which can be attributed to the discretisation error of SDE (5), and potentially to a small error in the estimation of \(\theta ^{\star }\). We conclude this experiment by using SOUL to perform a predictive empirical Bayesian analysis on the binary responses. We split the original dataset into an \(80\%\) training set \((y^{\text {train}},v^{\text {train}})\) of size \(d_{\text {train}}=546\), and a \(20\%\) test set \((y^{\text {test}}, v^{\text {test}})\) of size \(d_{\text {test}}=137\), and use SOUL to draw samples from the predictive distribution \(p(y^{\text {test}} | y^{\text {train}},v^{\text {train}},v^{\text {test}}, \hat{\theta }_N)\). More precisely, we use SOUL to simultaneously calculate \(\hat{\theta }_N\) and simulate from \(p(\beta | y^{\text {train}},v^{\text {train}}, \hat{\theta }_N)\), followed by simulation from \(p(y^{\text {test}}|\beta , y^{\text {train}}, v^{\text {train}}, v^{\text {test}})\). We then estimate the maximum-a-posteriori predictive response \(\hat{y}^{\text {test}}\), and measure prediction accuracy against the test dataset by computing the error \(\epsilon = \sum _{i=1}^{d_{\text {test}}}\left| y^{\text {test}}_i-\hat{y}^{\text {test}}_i \right| /d_{\text {test}}\) and obtain \(\epsilon = 2.2\%\). For comparison, Fig. 4 below reports the error \(\epsilon \) as a function of \(\theta \) (the discontinuities arise because of the highly non-linear nature of the model). Observe that the estimated \(\hat{\theta }_N\) produces a model that has a very good performance in this regard.

Bayesian logistic regression-a Estimated points of the marginal log-likelihood \(\log \hat{p}(y|\theta )\) with quadratic fit (corresponding to a Gaussian fit in linear scale). b Normalised histogram of \(\hat{\theta }_N\) for 1000 repetitions of the experiment. An estimate of \(\theta ^{\star }\), the maximiser of \(\hat{p}(y|\theta )\), is plotted as a reference

4.2 Statistical audio compression

Compressive sensing techniques exploit sparsity properties in the data to estimate signals from fewer samples than required by the Nyquist–Shannon sampling theorem (Candès et al. 2006; Candès and Wakin 2008). Many real-world data admit a sparse representation on some basis or dictionary. Formally, consider an \(\ell \)-dimensional time-discrete signal \( z \in \mathbb {R}^{\ell } \) that is sparse in some dictionary \( \varvec{\varXi }\in \mathbb {R}^{\ell \times d} \), i.e, there exists a latent vector \( x \in \mathbb {R}^d \) such that \( z = \varvec{\varXi }x\) and \( \Vert x\Vert _0 = \sum _{i=1}^d \mathbb {1}_{\mathbb {R}^*}(x_i) \ll \ell \). This prior assumption is be modelled using a smoothed-Laplace distribution (Lingala and Jacob 2012)

where \(h_\lambda \) is the Huber function given for any \(u \in \mathbb {R}\) by

Acquiring z directly would call for measuring \( \ell \) univariate components. Instead, a carefully designed measurement matrix \(\mathbf{M} \in \mathbb {R}^{p \times \ell }\), with \( p \ll \ell \), is used to directly observe a “compressed” signal \(\mathbf{M} z \), which only requires taking p measurements. In addition, measurements are typically noisy which results in an observation \(y \in \mathbb {R}^p\) modeled as \( y= \mathbf{M} z + w\) where we assume that the noise w has distribution \( \text {N}(0,\sigma ^2{\text {I}}_p)\), and therefore the likelihood function is given by

where \(\text {A}= \mathbf{M} \varvec{\varXi }\), leading to the posterior distribution

To recover z from \(y\), we then compute the maximum-a-posteriori estimate

and set \(\hat{ z}_{\text {MAP}}=\varvec{\varXi }\hat{ x}_{\text {MAP}}\).

Following decades of active research, there are now many convex optimisation algorithms that can be used to efficiently solve (22), even when d is very large (Chambolle and Pock 2016; Monga 2017). However, the selection of the value of \(\theta \) in (22) remains a difficult open problem. This parameter controls the degree of sparsity of x and has a strong impact on estimation performance.

A common heuristic within the compressive sensing community is to set \(\theta _{\mathrm{cs}}=0.1 \times \Vert \text {A}^{\top } y\Vert _{\infty }/\sigma ^2\), where for any \(z \in \mathbb {R}^\ell \), \(\left\| z \right\| _{\infty } = \max _{i\in \{1,\ldots ,\ell \}} \left| z_i \right| \), as suggested in Kim et al. (2007) and Figueiredo et al. (2007); however, better results can be obtained by adopting a statistical approach to estimate \(\theta \).

The Bayesian framework offers several strategies for estimating \(\theta \) from the observation \(y\). In this experiment, we adopt an empirical Bayesian approach and use SOUL to compute the MLE \(\theta ^{\star }\), which is challenging given the high-dimensionality of the latent space.

To illustrate this approach, we consider the audio experiment proposed in Balzano et al. (2010) for the “Mary had a little lamb” song. The MIDI-generated audio file z has \( \ell = 319,725 \) samples, but we only have access to a noisy observation vector y with \( p = 456 \) random time points of the audio signal, corrupted by additive white Gaussian noise with \(\sigma =0.015\). The latent signal x has dimension \( d = 2900 \) and is related to z by a dictionary matrix \(\varvec{\varXi }\) whose row vectors correspond to different piano notes lasting a quarter-second longFootnote 2. The parameter \(\lambda \) for the prior (20) is set to \(\lambda =4\times 10^{-5}\). We used the heuristic \(\theta _{\mathrm{cs}}\) as the initial value for \(\theta \) in our algorithm. To solve the optimisation problem (22) we use the Gradient Projection for Sparse Reconstruction (GPSR) algorithm proposed in Figueiredo et al. (2007). We use this solver because it is the one used in the online MATLAB demonstration of Balzano et al. (2010), however, more modern algorithms could be used as well. We implemented Algorithm 1 using a fixed stepsize \(\gamma _n=6.9 \times 10^{-6}\), a fixed batch size \(m_n=1\), \(\delta _n=20 \, n^{-0.8}/d=0.0069\, n^{-0.8}\) and 100 burn-in iterations.

Note that in this problem \(\theta \mapsto -\log p(y|\theta )\) is non-convex whereas \(x \mapsto -\log p(x|y, \theta )\) is convex. Using Lebesgue’s dominated convergence theorem we get that A1 holds for any compact and convex set \(\varTheta \). Note also that \(\{x \mapsto - \log p(x|y, \theta )\,:\;\theta \in \varTheta \}\) satisfies L1 and L2.

The algorithm converged in approximately 500 iterations, which were computed in only 325 milliseconds. Figure 5 (left), shows the first 250 iterations of the sequence \(\theta _n\) and of the weighted average \(\hat{\theta }_{n}\). Again, observe that the iterates oscillate for a few iterations and then quickly stabilise. Finally, to assess the quality of the estimate \(\hat{\theta }_N\), Fig. 5 (right) presents the reconstruction mean squared error as a function of \(\theta \). The error is measured with respect to the reconstructed signal and is given by \(\text {MSE} (\hat{ x}_{\text {MAP}}) = \Vert z^\star -\varvec{\varXi }\hat{x}_{\text {MAP}}\Vert _2^2/\ell \), where \(z^\star \) is the true audio signal. Observe that the estimated value \(\hat{\theta }_N\) is very close to the value that minimises the estimation error, and significantly outperforms the heuristic value \(\theta _{cs}\) commonly used by practitioners.

4.3 Sparse Bayesian logistic regression with random effects

Following on from the Bayesian logistic regression in Sect. 4.1, where \(p(y|\theta )\) is log-concave and hence \(\theta ^{\star }\) unique, we now consider a significantly more challenging sparse Bayesian logistic regression with random effects problem. In this experiment \(p(y|\theta )\) is no longer log-concave, so SOUL can potentially get trapped in local maximisers. Furthermore, the dimension of \(\theta \) in this experiment is very large (\(d_\theta = 1001\)), making the MLE problem even more challenging. This experiment was previously considered by Atchadé et al. (2017) and we replicate their setup.

Let \(\{y_i\}^{d_y}_{i=1} \in \{0,1\}\) be a vector of binary responses which can be modelled as \({d_y}\) conditionally independent realisations of a random effect logistic regression model,

where \( v_i\in \mathbb {R}^p \) are the covariates, \(\beta \in \mathbb {R}^p\) is the regression vector, \(z_i \in \mathbb {R}^d\) are (known) loading vectors, x are random effects and \(\upsigma >0\). In addition, recall that \(\text {Ber}(\alpha )\) denotes the Bernoulli distribution with parameter \(\alpha \in \left[ 0, 1\right] \) and \(s(u) = \text {e}^u /(1 + \text {e}^u )\) is the cumulative distribution function of the standard logistic distribution. The goal is to estimate the unknown parameters \(\theta =(\beta , \upsigma ) \in \mathbb {R}^p \times \left( 0,+\infty \right) \) directly from \(\{y_i\}^{d_y}_{i=1}\), without knowing the value of x, which we assume to follow a standard Gaussian distribution, i.e. \(p(x)=\exp \{-\left\| x \right\| ^2_2/2\}/(2\pi )^{d/2}\). We estimate \(\theta \) by MLE using Algorithm 1 to maximise (1), with marginal likelihood given by

and penalty function \(g(\theta )= (\lambda \delta _0)^{-1} \sum _{j=1}^{d} h_{\lambda \delta _0}(\beta _j)\), where \(h_\lambda \) is the Huber function defined in (21). Using Lebesgue’s dominated convergence theorem we get that A1 holds for any compact and convex set \(\varTheta \).

We follow the procedure described in Atchadé et al. (2017) to generate the observations \(\{y_i\}^{{d_y}}_{i=1}\), with \({d_y} = 500 \), \(p = 1000\) and \(d = 5\)Footnote 3. The vector of regressors \(\beta _{\text {true}}\) is generated from the uniform distribution on [1, 5] and \( 98\% \) of its coefficients are randomly set to zero. The variance \(\upsigma _{\text {true}}\) of the random effect is set to 0.1, and the projection interval for the estimated \(\upsigma \) is \([10^{-5},+\infty )\). Finally, the parameter \(\lambda \) is set to \(\lambda =30\). We emphasise at this point that \(\theta \) is high-dimensional in this experiment (\(d_\varTheta =1001\)), making the estimation problem particularly challenging.

The conditional log-likelihood function is \(\log p(y|x,\theta ) =\sum _{i=1}^{{d_y}}\lbrace y_{i}(v_i^{{\text {T}}} \beta +\upsigma z_i^{{\text {T}}} x)-\log (1+\text {e}^{v_i^{{\text {T}}} \beta +\upsigma z_i^{{\text {T}}} x}) \rbrace \). To implement Algorithm 1 we use the gradients

Note that \(\{x \mapsto - \log p(x|y, \theta )\,:\;\theta \in \varTheta \}\) satisfies L1 and L2. Therefore, since A1 holds we get that Theorems 7 and 8 apply and the conclusions of Theorem S19 in De Bortoli et al. (2019) hold. Finally, the gradient of the penalty function is given by

where \({\text {sign}}\) denotes the sign function, i.e. for any \(s \in \mathbb {R}\), \({\text {sign}}(s) = |s|/s\) if \(s \ne 0\), and \({\text {sign}}(s) = 0\) otherwise.

We use \(\gamma _n=0.01\), \(\delta _n=n^{-0.95}/d=0.2 \times n^{-0.95}\), a fixed batch size \(m_n=1\), \(\beta _0=\varvec{1}_p\) and \(\upsigma _0=1\) as initial values. Moreover, we perform \(10^4\) burn-in iterations with a fixed value of \(\theta _0=(\beta _0,\upsigma _0)\) to warm-up the Markov chain, and further 600 iterations of Algorithm 1 to warm-start the iterates. Following on from this, we run \(N=5\times 10^4\) iterations of Algorithm 1 to compute \(\hat{\theta }_N\). Computing this estimates required 25 seconds in total.

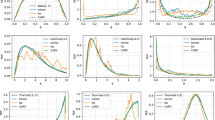

Figure 6 shows the evolution of the iterates throughout iterations, where we used \(\Vert \hat{\beta }_n\Vert _0\) as a summary statistic to track the number of active components. Because the Huber penalty (21) does not enforce exact sparsity on \(\beta \), to estimate the number of active components we only consider values that are larger than a threshold \(\tau \) (we used \(\tau =0.005\)).

From Fig. 6 we observe that \(\hat{\upsigma }_n\) converges to a value that is very close to \(\upsigma _{\text {true}}\), and that the number of active components is also accurately estimated. Moreover, Fig. 7 shows that most active components were correctly identified. We also observe that \(\hat{\beta }_n\) stabilises after approximately 6300 iterations, which correspond to 6300 Monte Carlo samples as \(m_n=1\). This is in close agreement with the results presented in (Atchadé et al. 2017, Figure 5), where they observe stabilization after a similar number of iterations of their highly specialised Polya-Gamma sampler.

It is worth emphasising at this point that Atchadé et al. (2017) considers the non-smooth penalty \(g(\theta )=\lambda \Vert \beta \Vert _1\) instead of the Huber loss. Consequently, instead of using the gradient of g, they resort to the so-called proximal operator of g (Chambolle and Pock 2016). The generalisation of the SOUL methodology proposed in this paper to models that have non-differentiable terms is addressed in Vidal and Pereyra (2018), Vidal et al. (2019).

Notes

Available online: https://archive.ics.uci.edu/ml/datasets/Breast+Cancer+Wisconsin+(Diagnostic).

Each quarter-second sound can have one of 100 possible frequencies and be in 29 different positions in time.

We renamed some symbols for notation consistency. What we denote by \(v_i\), x, \({d_y}\) and d, is denoted in Atchadé et al. (2017) by \(x_i\), \(\mathbf{U} \), N and q, respectively.

References

Ahn, S., Korattikara, A., Welling, M.: Bayesian posterior sampling via stochastic gradient fisher scoring. (2012) arXiv preprint arXiv:1206.6380,

Ahn, S., Shahbaba, B., Welling, M.: Distributed stochastic gradient mcmc. In: International Conference on Machine Learning, pp. 1044–1052, (2014)

Andrieu, C., Moulines, E.: On the ergodicity properties of some adaptive MCMC algorithms. Ann. Appl. Probab. 16(3), 1462–1505 (2006)

Atchadé, Y.F., Fort, G., Moulines, E.: On perturbed proximal gradient algorithms. J. Mach. Learn. Res 18(1), 310–342 (2017)

Aubin, T.: A Course in Differential Geometry. Graduate Studies in Mathematics. AMS, New York (2000)

Balzano, L., Nowak, R., Ellenberg, J.: Compressed sensing audio demonstration. (2010) website http://web.eecs.umich.edu/~girasole/csaudio

Benveniste, A., Métivier, M., Priouret, P.: Adaptive algorithms and stochastic approximations, volume 22 of Applications of Mathematics (New York). Springer-Verlag, Berlin, (1990). Translated from the French by Stephen S. Wilson

Berger, R., Casella, G.: Statistical inference, 2nd edn. Duxbury / Thomson Learning, Pacific Grove, USA (2002)

Bertsekas, D.P.: Nonlinear programming. Journal of the Operational Research Society 48(3), 334–334 (1997)

Boyd, S., Vandenberghe, L.: Convex Optimization. Cambridge University Press, Cambridge (2004)

Candès, E.J. et al.: Compressive sampling. In: Proceedings of the international congress of mathematicians, volume 3, pages 1433–1452. Madrid, Spain, (2006)

Candès, E.J., Wakin, M.B.: An introduction to compressive sampling [a sensing/sampling paradigm that goes against the common knowledge in data acquisition]. IEEE Signal Processing Magazine 25(2), 21–30 (2008)

Carlin, B.P., Louis, T.A.: Empirical Bayes: past, present and future. J. Am. Statist. Assoc. 95(452), 1286–1289 (2000)

Casella, G.: An introduction to empirical Bayes data analysis. Am. Statist. 39(2), 83–87 (1985)

Chambolle, A., Pock, T.: An introduction to continuous optimization for imaging. Acta Numerica 25, 161–319 (2016)

Cheng, X., Bartlett, P.: Convergence of langevin mcmc in kl-divergence. (2017). arXiv preprint arXiv:1705.09048