Abstract

Holonomic function theory has been successfully implemented in a series of recent papers to efficiently calculate the normalizing constant and perform likelihood estimation for the Fisher–Bingham distributions. A key ingredient for establishing the standard holonomic gradient algorithms is the calculation of the Pfaffian equations. So far, these papers either calculate these symbolically or apply certain methods to simplify this process. Here we derive the explicit form of the Pfaffian equations using expressions from Laplace inversion methods. This improves the implementation of the holonomic algorithms for these problems and enables their adjustment to the degenerate cases. As a result, an exact and more dimensionally efficient ODE is implemented for likelihood inference.


1 Introduction

The Fisher–Bingham distribution is defined as the conditional distribution of a general multivariate normal distribution on the unit sphere. In particular, for a p-dimensional multivariate normal distribution with parameters \({\varvec{\mu }}\) and \({\varvec{\varSigma }}\), the corresponding density function with respect to \(d_{\mathcal {S}^{p-1}}(\varvec{x})\), the uniform measure on the \((p-1)\)-dimensional sphere \(\mathcal {S}^{p-1}\), is

where

is the normalizing constant. Since multiplication by any orthogonal matrix induces an isometry of \(\mathcal {S}^{p-1}\),

where \({\varvec{\varDelta }}=\mathrm{diag}(\delta _1^2,\delta _2^2,{\ldots },\delta _p^2)\) and the orthogonal matrix \({\varvec{O}} \in \mathcal {O}(p)\) are obtained from the singular value (spectral) decomposition \({\varvec{\varSigma }}={\varvec{ O^\top \varDelta O}}\). We can also choose \({\varvec{ O}}\) such that the entries of \({\varvec{ \varDelta ^{-1} O \mu }}\) are non-negative, since changing the sign of a row of \({\varvec{O}}\) leaves \({\varvec{ O^\top \varDelta O}}\) unchanged. Hence, without loss of generality, we can assume that the covariance parameter is diagonal, and a more efficient parametrization of dimension 2p can be used for the normalizing constant

with \({\varvec{\theta }}=(\theta _{1}, \theta _{2},\ldots ,\theta _{p})=\mathrm{diag}(\frac{{\varvec{\varDelta }}^{-1}}{2})\), i.e. \(\theta _i=\frac{1}{2 \delta ^2_i}\) and \({\varvec{\gamma }}=(\gamma _{1}, \gamma _{2},\ldots ,\gamma _{p})={\varvec{\varDelta ^{-1} O \mu }}\). Note the slight abuse of notation in writing \(\mathcal {C}(\mathrm{diag}({\varvec{\theta }}),{\varvec{\gamma }})=\mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})\). The special case \({\varvec{\gamma }}=0\) corresponds to the Bingham distributions, studied separately in Wood (1993), Kume and Wood (2007) and Sei and Kume (2015). Although these distributions belong to the exponential family (see e.g. Mardia and Jupp 2000), maximum likelihood estimation ultimately requires numerical routines for approximating the normalizing constant \(\mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})\). The method of Kume and Wood (2005) relies on the saddlepoint approximation, which is known to be close to the exact value, cheap to compute and numerically stable. Recently, however, there has been renewed interest in this problem with the implementation of the holonomic gradient method (HGM). The HGM is exact in theory, since \(\mathcal {C}\) is characterized as the solution of an ODE (see e.g. Nakayama et al. 2011; Hashiguchi et al. 2013; Sei et al. 2013; Koyama 2011; Koyama and Takemura 2016; Koyama et al. 2014 and Koyama et al. 2012).
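The reduction from \(({\varvec{\varSigma }},{\varvec{\mu }})\) to the 2p parameters \(({\varvec{\theta }},{\varvec{\gamma }})\) can be sketched with an eigendecomposition. This is a minimal illustration, not the authors' code. The helper name `fb_parameters` and the example values of `Sigma` and `mu` are hypothetical. The sketch assumes \({\varvec{\varSigma }}\) is symmetric positive definite, so its eigendecomposition coincides with the decomposition used above.

```python
import numpy as np

def fb_parameters(Sigma, mu):
    """Map (Sigma, mu) of the underlying normal to (theta, gamma, O).

    Sketch assuming Sigma = O^T Delta O with Delta = diag(delta_1^2, ..., delta_p^2),
    theta_i = 1 / (2 delta_i^2) and gamma = Delta^{-1} O mu >= 0.
    """
    Sigma = np.asarray(Sigma, dtype=float)
    mu = np.asarray(mu, dtype=float)
    # eigh gives Sigma = V diag(w) V^T, so O = V^T and Delta = diag(w).
    w, V = np.linalg.eigh(Sigma)
    O = V.T
    gamma = (O @ mu) / w
    # Flipping the sign of row i of O keeps O^T Delta O unchanged but flips gamma_i,
    # so every entry of gamma can be made non-negative.
    signs = np.where(gamma < 0, -1.0, 1.0)
    O = signs[:, None] * O
    gamma = signs * gamma
    theta = 1.0 / (2.0 * w)
    return theta, gamma, O

# Example with a hypothetical positive definite Sigma and mean mu.
Sigma = np.array([[2.0, 0.3, 0.0],
                  [0.3, 1.0, 0.2],
                  [0.0, 0.2, 0.5]])
mu = np.array([1.0, -2.0, 0.5])
theta, gamma, O = fb_parameters(Sigma, mu)
```

`numpy.linalg.eigh` returns eigenvalues in ascending order, so \({\varvec{\theta }}\) comes out in descending order here. Any ordering is equally valid for the normalizing constant.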

In particular, the HGM approach generates exact solutions if the corresponding ODE is numerically stable and the dimension of the parameter space is not extremely large. In the relevant literature, numerically unstable ODEs are called stiff (see e.g. Sect. 10.6 in Zarowsky 2004). Koyama et al. (2014) focus on the numerical efficiency of the HGM implementation by expressing the corresponding Pfaffian equations (see Sect. 4) in terms of some elementary matrices \(R_i\) and \(Q_i\). Note that the HGM is applicable not only to \(\mathcal {C}\) but to any holonomic function; see Chapter 6 of Hibi (2013) for more details.

The contribution in this paper is threefold. Firstly, by expanding the Laplace transform in Eq. (1) in partial fractions, we obtain the Pfaffian equations explicitly in terms of only two vector parameters \({\varvec{\theta }}\) and \({\varvec{\gamma }}\), each of length p. This makes the differential structure of these functions more transparent and the implementation of the holonomic algorithm more dimensionally and computationally efficient, since at most 2p parameters are needed for the normalizing constant and there is no need to use symbolic algebra packages for generating the Pfaffians explicitly.

Secondly, by imposing some constraints on \(\theta _i\) and \(\gamma _i\), our approach is easily applied to many important sub-classes within the Fisher–Bingham family (such as the Bingham, Watson and Kent distributions). The general methodology of HGM algorithms does not automatically apply to these situations, because the Pfaffian equations become degenerate. In particular, the corresponding ODE is stiff if some eigenvalues of \({\mathbf {\varDelta }}\) coalesce. These sub-models are exactly the ones compared in model selection, so careful treatment of parameter multiplicities is useful in practice. Our explicit Pfaffian expressions require only minimal adjustments for these degenerate cases. The special case of the Bingham distribution, which arises when all \(\gamma _i\) are zero, is considered separately by Sei and Kume (2015). The approach in this paper is more general and accommodates all possible variations in the parameter space. We can therefore easily perform model selection within the Fisher–Bingham family based on standard likelihood ratio tests. If we only need to evaluate the normalizing constant and its first-order derivatives at these degenerate points, the HGM with respect to the radius parameter can be applied, as suggested by Koyama et al. (2014) and Koyama and Takemura (2016). However, the Pfaffian system in our paper is necessary if we also need higher-order derivatives (e.g. for standard errors of the MLE). The same holds if we want to solve the ODE along a general curve rather than along a radial rescaling of the parameters.

Finally, while many papers focus on the normalizing constant, there has been little interest in estimating the orthogonal component \({\mathbf {O}}\) from real data. For \(p=3\), this problem is tackled in Kent (1982), where a closed-form solution is given for a very useful family of spherical distributions. For general p, however, no such solution is available. We combine the holonomic gradient method for the normalizing constant with a gradient-based optimization over the orthogonal matrices \({\mathbf {O}}\) to compute the maximum likelihood estimator. This method is shown to work well in both simulated and real data examples. Special care is needed, however, in the general setting for the Fisher–Bingham distributions, due to the multimodality of the likelihood function for these members of the curved exponential family.

The paper is organized as follows. We start with general remarks about the Fisher–Bingham normalizing constant, for which we provide a simple univariate integral representation. We then give a brief introduction to the holonomic gradient method, which characterizes the evaluation of \(\mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})\) as the solution of a system of ordinary differential equations. The next section gives the explicit expressions for the Pfaffian equations needed for this ODE in the case of the Fisher–Bingham integral, and specifically addresses degenerate cases with multiplicities in the parameters. We then focus on the implementation of the proposed MLE approach for both degenerate and non-degenerate cases of Fisher–Bingham distributions, so that log-likelihood ratio tests can be used to choose the appropriate model.

2 Laplace inversion representation

2.1 General case

Based on the key result in Proposition 1 from Kume and Wood (2005), one can easily derive that

where \(f_r(1)\) is the density at point 1 of \(r=\sum _{i=1}^p y_i^2\), and the \(y_i\) are independent normal random variables, \(y_i \sim N(\frac{\gamma _i}{2\theta _i}, \frac{1}{2 \theta _i})\), with \(\theta _i=\frac{1}{2 \delta ^2_i}\) and \(\gamma _i=\frac{\mu _i}{\delta ^2_i }>0\). Since the random variable r takes non-negative values, the Laplace transform of its density equals its moment generating function evaluated at minus its argument. In our parametrization it is:

Applying the inverse Laplace transform

for any \(-t_0<\mathrm{min}({\varvec{\theta }})\), implies

where

Equation (1) establishes the general Fisher–Bingham normalizing constant in terms of a univariate complex integral. In particular, it is easily seen from (1) and the definition of \(\mathcal {A}({\varvec{\gamma }}, {\varvec{\theta }}) \) that for any \(c \in \mathbb {R}\)

where \(|{\varvec{\gamma }}|\) is the vector of absolute values of \({\varvec{\gamma }}\). Therefore, without loss of generality we can assume that both vector parameters \({\varvec{\theta }}\) and \({\varvec{\gamma }}\) have non-negative entries.
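For reference, the following sketch shows how a univariate integral of this type follows from the Laplace transform of r. It assumes \(\theta _j>0\) and that \(\mathcal {C}\) is taken with respect to the unnormalized surface measure, so that \(\mathcal {C}({\varvec{0}},{\varvec{0}})\) equals the surface area of \(\mathcal {S}^{p-1}\). The constants in Eq. (1) as printed in the paper may be arranged differently.

$$\begin{aligned} \mathbb {E}\,e^{-s r}&=\prod _{j=1}^p\Big (\frac{\theta _j}{\theta _j+s}\Big )^{1/2}\exp \Big (-\sum _{j=1}^p\frac{s\,\gamma _j^2}{4\theta _j(\theta _j+s)}\Big ),\qquad \mathrm {Re}(s)>-\min ({\varvec{\theta }}),\\ \mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})&=2\pi ^{p/2}\Big (\prod _{j=1}^p\theta _j^{-1/2}\Big )\exp \Big (\sum _{j=1}^p\frac{\gamma _j^2}{4\theta _j}\Big )f_r(1)\\&=\frac{\pi ^{p/2-1}}{i}\int _{t_0-i\infty }^{t_0+i\infty }\prod _{j=1}^p(\theta _j+s)^{-1/2}\exp \Big (\sum _{j=1}^p\frac{\gamma _j^2}{4(\theta _j+s)}\Big )e^{s}\,ds. \end{aligned}$$

The last line follows from \(f_r(1)=\frac{1}{2\pi i}\int _{t_0-i\infty }^{t_0+i\infty }e^{s}\,\mathbb {E}\,e^{-sr}\,ds\) and the identity \(\frac{\gamma _j^2}{4\theta _j}-\frac{s\gamma _j^2}{4\theta _j(\theta _j+s)}=\frac{\gamma _j^2}{4(\theta _j+s)}\). Substituting \(s=u-c\) gives \(\mathcal {C}({\varvec{\theta }}+c{\varvec{1}},{\varvec{\gamma }})=e^{-c}\,\mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})\). Since the integrand depends on \({\varvec{\gamma }}\) only through the \(\gamma _j^2\), changing the sign of any \(\gamma _j\) leaves \(\mathcal {C}\) unchanged.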

2.2 Degenerate cases

Constraints on the parameter values \({\varvec{\theta }}\) and \({\varvec{\gamma }}\) can lead to degeneracy in the corresponding ODE. For statistical inference, however, some model constraints in the Fisher–Bingham distributions are necessary for practical use. Such models induce the following constraints on \({\varvec{\theta }}\) and \({\varvec{\gamma }}\) for the corresponding normalizing constants (cf. Mardia and Jupp 2000, Table 9.2):

-

Bingham distribution is generated if \({\varvec{\gamma }}\) is set to zero.

-

Fisher–Watson if \(\theta _2 = \theta _3 =\cdots = \theta _p\) and \(\gamma _3 = \gamma _4 = \cdots =\gamma _p = 0\)

-

Kent distributions if \(\gamma _2=\gamma _3=\cdots =\gamma _p=0\) and \(\sum _{i=1} ^p\theta _i=p\theta _1\)

-

von Mises–Fisher if \(\theta _1=\theta _2=\cdots =\theta _p\)

-

Bingham–Mardia if \(\theta _2=\theta _3=\cdots =\theta _p\) and \(\gamma _2=\gamma _3=\cdots =\gamma _p=0\)

-

Watson if \(\theta _2=\theta _3=\cdots =\theta _p\) and \(\gamma _1=\gamma _2=\cdots =\gamma _p=0\)

Note that property (2) implies that \(\theta _i\) can be assumed strictly positive. Alternatively, this property implies that we can fix one entry \(\theta _i\) and hence reduce the dimension by one, but we will not pursue this here. For the models mentioned above, degeneracy appears in the corresponding ODE if one or both of the following scenarios occur:

-

(a)

some entries in \({\varvec{\theta }}\) coincide.

-

(b)

some entries in \({\varvec{\gamma }}\) are zero.

In order to accommodate scenario (a), let us assume that we have l distinct values such that each \(\theta _i\) has multiplicity \(n_i\), i.e. \(n_1+n_2+{\ldots }+n_l=p\). Let us index the corresponding \(n_{i}\) entries of \({\varvec{\gamma }}\) as \(\gamma _{1,i},{\ldots },\gamma _{n_i,i}\). From the integral representation of

it is clear that its value depends only on the sums \(\sum _{r=1}^{n_i} \gamma ^2_{r,i}\) and not on the individual values \(\gamma ^2_{r,i}\). This implies that for scenario (b), we can work with \(\sum _{r=1}^{n_i} \gamma ^2_{r,i}=\gamma ^2_i\) and perform the required differentiation only with respect to \(\gamma _{1,i}=\gamma _i=\sqrt{\sum _{r=1}^{n_i} \gamma ^2_{r,i}}\), while the other \(\gamma _{r,i}\) are set to zero. As a result,

and, without loss of generality, we can focus on evaluating (3) with l distinct \(\theta _{i}\); (1) is recovered when \(n_i=1\) for all i. In the remainder of the paper, we focus on evaluating \(\mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})\) as in (3), where \({\varvec{\theta }}\) has l distinct values.
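The reduction to l distinct values of \(\theta _i\) is a simple bookkeeping step. It groups equal \(\theta _i\), records the multiplicities \(n_i\) and pools the matching \(\gamma \) entries into \(\sqrt{\sum _r \gamma _{r,i}^2}\), as argued above. The helper `collapse_multiplicities` and its `tol` argument are hypothetical illustrations. With the default `tol=0.0`, only exactly equal values are merged.

```python
import math

def collapse_multiplicities(theta, gamma, tol=0.0):
    """Group equal entries of theta and pool the matching gamma entries.

    Returns (theta_distinct, n, gamma_pooled), sorted by theta, where n[i] is the
    multiplicity of theta_distinct[i] and gamma_pooled[i] = sqrt(sum of gamma_r^2)
    over that block. Entries within tol of the first value of a block are merged.
    """
    blocks = []  # each block: [theta value, multiplicity, running sum of gamma^2]
    for t, g in sorted(zip(theta, gamma), key=lambda pair: pair[0]):
        if blocks and abs(t - blocks[-1][0]) <= tol:
            blocks[-1][1] += 1
            blocks[-1][2] += g * g
        else:
            blocks.append([t, 1, g * g])
    theta_distinct = [b[0] for b in blocks]
    n = [b[1] for b in blocks]
    gamma_pooled = [math.sqrt(b[2]) for b in blocks]
    return theta_distinct, n, gamma_pooled

# Watson-type example: theta_2 = theta_3 and gamma = 0 gives l = 2 distinct values.
print(collapse_multiplicities([0.0, 2.0, 2.0], [0.0, 0.0, 0.0]))
# ([0.0, 2.0], [1, 2], [0.0, 0.0])
```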

3 Holonomic gradient method

In this section, we briefly review the framework of the holonomic gradient methods. See Nakayama et al. (2011), Hashiguchi et al. (2013), Sei et al. (2013), Koyama (2011), Koyama et al. (2014) and Koyama et al. (2012) for details and further information.

Let \(\varTheta \) be an open subset of the d-dimensional Euclidean space, and denote the partial derivative \(\partial /\partial \alpha _i\) by \(\partial _i\). A function \(c({\varvec{\alpha }})\) of \({\varvec{\alpha }}\in \varTheta \) is called holonomic if the following holds. There must exist a finite-dimensional (say r-dimensional) column vector \(\varvec{g}=\varvec{g}({\varvec{\alpha }})\), whose entries are \(c({\varvec{\alpha }})\) and/or partial derivatives of \(c({\varvec{\alpha }})\) (possibly of higher order), such that \(\varvec{g}\) satisfies

where \(\varvec{P}_i({\varvec{\alpha }})\) is an \(r\times r\) matrix of rational functions of \({\varvec{\alpha }}\). For example, the trigonometric function \(c(\alpha )=\sin \alpha \) is holonomic since it satisfies

where \(\partial =\partial /\partial \alpha \), \(d=1\) and \(r=2\). It is known that the normalizing constants of the von Mises–Fisher, Bingham and Fisher–Bingham distributions are holonomic.

Equation (4) is called the Pfaffian equation of \(\varvec{g}\). It essentially states that the derivatives of \(\varvec{g}({\varvec{\alpha }})\), of any order, are linear combinations of its entries. The coefficients are determined by the Pfaffian matrices and their derivatives. For example, the second-order derivative is

Assume that a numerical value of the vector \(\varvec{g}({\varvec{\alpha }}^{(0)})\) at some point \({\varvec{\alpha }}^{(0)}\in \varTheta \) is given. The holonomic gradient algorithm evaluates \(\varvec{g}({\varvec{\alpha }}^{(1)})\) at any other point \({\varvec{\alpha }}^{(1)}\). Here the term gradient refers to the gradient of \(\varvec{g}({\varvec{\alpha }})\).

Let \(\bar{\varvec{\alpha }}(\tau )\), \(\tau \in [0,1]\), be a smooth curve in \(\varTheta \) such that \(\bar{\varvec{\alpha }}(0)={\varvec{\alpha }}^{(0)}\) and \(\bar{\varvec{\alpha }}(1)={\varvec{\alpha }}^{(1)}\). Denote \(\bar{\varvec{g}}(\tau )=\varvec{g}(\bar{\varvec{\alpha }}(\tau ))\). Then, it is easily shown that \(\bar{\varvec{g}}(\tau )\) is the solution of the ODE

where

In particular, \(\bar{\varvec{g}}(1)=\varvec{g}({\varvec{\alpha }}^{(1)})\).
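For reference, here are two standard consequences of the Pfaffian system (4), obtained from the product and chain rules only. The first line expresses second-order derivatives through the Pfaffian matrices; equality of mixed partials, applied to \(\varvec{g}\), gives the integrability condition on the right. The second line is the ODE along the curve \(\bar{\varvec{\alpha }}(\tau )\), with \(\mathbf {K}(\tau )\) built from the Pfaffian matrices and the velocity of the curve. For the \(\sin \alpha \) example, \(\varvec{P}=\begin{pmatrix}0&1\\-1&0\end{pmatrix}\) and the first identity reduces to \(\partial ^2\varvec{g}=\varvec{P}^2\varvec{g}=-\varvec{g}\).

$$\begin{aligned} \partial _j\partial _i\varvec{g}&=\partial _j\big (\varvec{P}_i\varvec{g}\big )=\big (\partial _j\varvec{P}_i+\varvec{P}_i\varvec{P}_j\big )\varvec{g},\qquad \big (\partial _j\varvec{P}_i+\varvec{P}_i\varvec{P}_j\big )\varvec{g}=\big (\partial _i\varvec{P}_j+\varvec{P}_j\varvec{P}_i\big )\varvec{g},\\ \frac{d\bar{\varvec{g}}(\tau )}{d\tau }&=\sum _{i=1}^{d}\partial _i\varvec{g}\big (\bar{\varvec{\alpha }}(\tau )\big )\frac{d\bar{\alpha }_i(\tau )}{d\tau }=\mathbf {K}(\tau )\,\bar{\varvec{g}}(\tau ),\qquad \mathbf {K}(\tau )=\sum _{i=1}^{d}\varvec{P}_i\big (\bar{\varvec{\alpha }}(\tau )\big )\frac{d\bar{\alpha }_i(\tau )}{d\tau }. \end{aligned}$$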

A natural choice of \(\bar{{\varvec{\alpha }}}(\tau )\) is the segment \(\bar{\varvec{\alpha }}(\tau )=(1-\tau ){\varvec{\alpha }}^{(0)}+\tau {\varvec{\alpha }}^{(1)}\) connecting \({\varvec{\alpha }}^{(0)}\) and \({\varvec{\alpha }}^{(1)}\) with the constant derivative vector \( \frac{d\bar{\alpha }_i(\tau )}{d\tau }={\varvec{\alpha }}^{(1)}_{i}-{\varvec{\alpha }}^{(0)}_{i}\). The holonomic gradient algorithm is described as follows:

-

Input \({\varvec{\alpha }}^{(0)}\), \(\varvec{g}({\varvec{\alpha }}^{(0)})\), \({\varvec{\alpha }}^{(1)}\) and a sufficiently small number \(\delta >0\).

-

Output \(\varvec{g}({\varvec{\alpha }}^{(1)})\).

-

Algorithm

-

1.

Solve the ODE (5) over \(\tau \in [0,1]\) numerically by a Runge–Kutta method so that the solution is attained within a required accuracy.

-

2.

Return \(\bar{\varvec{g}}(1)\).
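The algorithm above can be sketched in a few lines. This is a minimal illustration, assuming the Pfaffian matrices are supplied as Python callables. The function name `hgm` is hypothetical, and a fixed-step classical Runge–Kutta scheme stands in for a library ODE solver. The sketch is checked on the \(\sin \alpha \) example with \(\varvec{g}=(\sin \alpha ,\cos \alpha )\). It is also checked on the hypothetical two-parameter function \(c(\alpha _1,\alpha _2)=e^{\alpha _1\alpha _2}\), for which \(r=1\), \(\varvec{P}_1=\alpha _2\) and \(\varvec{P}_2=\alpha _1\).

```python
import math
import numpy as np

def hgm(pfaffians, alpha0, g0, alpha1, n_steps=200):
    """Holonomic gradient method along the segment from alpha0 to alpha1.

    pfaffians[i](alpha) must return the r x r matrix P_i(alpha) of the Pfaffian
    system d g / d alpha_i = P_i(alpha) g. Along alpha(tau) = (1 - tau) alpha0 + tau alpha1
    we integrate d g / d tau = K(tau) g, K(tau) = sum_i P_i(alpha(tau)) (alpha1_i - alpha0_i),
    with the classical fourth-order Runge-Kutta scheme and a fixed step.
    """
    alpha0 = np.asarray(alpha0, dtype=float)
    alpha1 = np.asarray(alpha1, dtype=float)
    direction = alpha1 - alpha0              # constant d alpha / d tau on the segment

    def K(tau):
        alpha = alpha0 + tau * direction
        return sum(d * P(alpha) for d, P in zip(direction, pfaffians))

    g = np.asarray(g0, dtype=float)
    h = 1.0 / n_steps
    for k in range(n_steps):
        tau = k * h
        k1 = K(tau) @ g
        k2 = K(tau + h / 2) @ (g + h / 2 * k1)
        k3 = K(tau + h / 2) @ (g + h / 2 * k2)
        k4 = K(tau + h) @ (g + h * k3)
        g = g + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
    return g

# Example 1: c(alpha) = sin(alpha) with g = (sin, cos) and P = [[0, 1], [-1, 0]].
P_sin = [lambda a: np.array([[0.0, 1.0], [-1.0, 0.0]])]
g_sin = hgm(P_sin, [0.0], [0.0, 1.0], [math.pi / 2])   # approximately (1, 0)

# Example 2 (hypothetical): c(a1, a2) = exp(a1 * a2), g = (c,), P_1 = [[a2]], P_2 = [[a1]].
P_exp = [lambda a: np.array([[a[1]]]), lambda a: np.array([[a[0]]])]
g_exp = hgm(P_exp, [0.0, 0.0], [1.0], [1.0, 2.0])      # approximately exp(2)
```

A fixed step keeps the sketch short. For stiff problems, such as nearly coalescing \(\theta _i\), an adaptive or implicit solver would be the safer choice.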

Note that standard numerical routines for solving (5) are highly accurate and available in most computing environments. For example, the rk function in the R package deSolve provides the solution to a given accuracy.

As shown later in Sect. 5, the holonomic gradient method is used for maximum likelihood estimation within a gradient-based scheme in which the orthogonal matrix \({\varvec{O}}\) is treated separately from the normalizing constant. As a result, we only need the Pfaffian equations for diagonal covariance matrices, in which case the corresponding ODE has dimension 2l.

4 Explicit Pfaffians and HGM for Fisher–Bingham

The parameters of Sect. 2.2 for the most general Fisher–Bingham case are \({\varvec{\alpha }} =({\varvec{\theta }},{\varvec{\gamma }})\), i.e. \(\mathrm{dim}(\varTheta )=2l\), where l is the number of distinct values of \(\theta _i\). Using properties (2), we can assume here that \(\theta _i\) and \(\gamma _i\) vary freely over non-negative values, with the smallest entry of \({\varvec{\theta }}\) fixed to 0. As a direct consequence of differentiating (1) and the fact that \(\sum _{i=1}^{p}x^{2}_{i}=1\),

This equation implies that partial derivatives \(\frac{\partial \mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})}{\partial \theta _i}\) are sufficient for evaluating \(\mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})\) where the vector \(\varvec{g}\) has length \(r=2l\) and is defined as

where the first-order partial derivatives above are easily seen from (1) or (3) to depend on \({\varvec{\gamma }}\) and \({\varvec{\theta }}\) as

and for \(\gamma _i\ne 0\)

In this case, the ODE (5) seeks the vector curve \({\varvec{g}}({\varvec{\alpha }})\) of dimension 2l. The required normalizing constant is simply minus the sum of the first l components of this vector (the derivatives with respect to \(\theta _i\)), as in (6). The left-hand sides of the Pfaffian equations (4) are \(\frac{\partial \varvec{g}}{\partial \theta _i}\) and \(\frac{\partial \varvec{g}}{\partial \gamma _i}\). Their entries are second-order derivatives of \(\mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})\), such as \(\frac{\partial ^2 \mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})}{\partial \theta _i \partial \gamma _j}\). In other words, the Pfaffian equations (4) state that these second-order derivatives of \(\mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})\) are linear combinations of the first-order ones, with coefficients given by the Pfaffian entries. Therefore, to establish the Pfaffian equations for \(\varvec{g}\) explicitly, we need such relationships between the first- and second-order derivatives of \(\mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})\). They are stated in the following theorem:

Theorem 1

If \(\theta _i \ne \theta _j\) and \(\gamma _i\ne 0\ne \gamma _j\), the Pfaffian equations (4) for the general Fisher–Bingham distribution are generated by

where \(\frac{\partial ^2 \mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})}{\partial \theta _j \partial \gamma _i}\) and \(\frac{\partial ^2 \mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})}{\partial \theta _i \partial \theta _j}\) in (13) and (14) can be given in terms of first-order derivatives using (11) and (10).

The proofs of these identities, given in the “Appendix”, rely on results from partial fractions.

Note also that as the Pfaffian matrices are defined in terms of the pairwise differences \(\theta _i-\theta _j\), one can easily see that the ODE solution for \(\mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})\) satisfies properties (2).
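Properties (2) and identity (6) are easy to check numerically for \(p=3\), where \(\mathcal {C}\) can be computed by brute-force quadrature. The hypothetical helper `fb_quadrature_s2` assumes the unnormalized surface measure and the kernel \(\exp (-\sum _i\theta _i x_i^2+\sum _i\gamma _i x_i)\) in the diagonal parametrization. It uses Gauss–Legendre nodes in \(z=x_3\) and the trapezoidal rule in the azimuth. It returns \(\mathcal {C}\) together with its first-order derivatives, obtained by differentiating under the integral sign. This is only a reference check for small p, not a substitute for the HGM.

```python
import numpy as np

def fb_quadrature_s2(theta, gamma, n_z=60, n_phi=120):
    """Brute-force C(theta, gamma) on S^2 (p = 3) and its first-order derivatives.

    Assumes C = integral over S^2 of exp(-sum theta_i x_i^2 + sum gamma_i x_i) dS
    with the unnormalized surface measure, so C(0, 0) = 4 pi.
    Parametrization: x = (sqrt(1-z^2) cos phi, sqrt(1-z^2) sin phi, z), dS = dz dphi.
    n_phi must be even so that the grid is symmetric under x -> -x.
    """
    theta = np.asarray(theta, dtype=float)
    gamma = np.asarray(gamma, dtype=float)
    z, wz = np.polynomial.legendre.leggauss(n_z)            # Gauss-Legendre nodes on [-1, 1]
    phi = 2.0 * np.pi * np.arange(n_phi) / n_phi             # periodic trapezoidal rule
    Z, PHI = np.meshgrid(z, phi, indexing="ij")
    rho = np.sqrt(1.0 - Z**2)
    x = np.stack([rho * np.cos(PHI), rho * np.sin(PHI), Z])  # shape (3, n_z, n_phi)
    w = wz[:, None] * (2.0 * np.pi / n_phi)                  # weights, shape (n_z, 1)
    kernel = np.exp(-np.tensordot(theta, x**2, axes=1) + np.tensordot(gamma, x, axes=1))
    C = np.sum(w * kernel)
    # Differentiating under the integral sign: d/dtheta_i -> -x_i^2, d/dgamma_i -> x_i.
    dC_dtheta = -np.sum(w * x**2 * kernel, axis=(1, 2))
    dC_dgamma = np.sum(w * x * kernel, axis=(1, 2))
    return C, dC_dtheta, dC_dgamma

theta = np.array([0.5, 1.5, 3.0])
gamma = np.array([1.0, -0.5, 2.0])
C, dC_dtheta, dC_dgamma = fb_quadrature_s2(theta, gamma)
print(C + dC_dtheta.sum())                 # identity (6): zero up to rounding
C_shift, _, _ = fb_quadrature_s2(theta + 0.7, gamma)
print(C_shift - np.exp(-0.7) * C)          # shift property (2): zero up to rounding
```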

As a corollary to the theorem, the differential equation for the well-known von Mises–Fisher distribution is derived from (6) and (15) as:

where \(l=1\), \(n_1=p\) and \({\varvec{\theta }}={\varvec{0}}\). The expression \(\gamma _1^{\frac{p}{2}-1} \mathcal {C}(0,\gamma _1)\) satisfies equation 9.6.1 in Abramowitz and Stegun (1972) for the modified Bessel functions and is consistent with the known expression for these cases (see 9.3.4 in Mardia and Jupp 2000).
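For \(p=3\), the closed form \(\mathcal {C}(0,\gamma _1)=4\pi \sinh (\gamma _1)/\gamma _1\) is available, which gives a simple one-dimensional test of the holonomic approach. This sketch assumes the ODE in the standard form \(\mathcal {C}''+\frac{p-1}{\gamma _1}\mathcal {C}'-\mathcal {C}=0\). That form is equivalent to the modified Bessel equation for \(\gamma _1^{p/2-1}\mathcal {C}(0,\gamma _1)\). The ODE is written as a first-order Pfaffian system for \((\mathcal {C},\partial \mathcal {C}/\partial \gamma _1)\) and integrated with classical Runge–Kutta from \(\gamma _1=1\) to \(\gamma _1=5\). The function names are hypothetical.

```python
import math

def vmf_hgm(p, gamma0, C0, dC0, gamma1, n_steps=400):
    """Integrate C'' + (p - 1)/gamma C' - C = 0 from gamma0 to gamma1 (RK4).

    Pfaffian form: d/dgamma (C, C') = [[0, 1], [1, -(p-1)/gamma]] (C, C').
    """
    def rhs(gam, y):
        C, dC = y
        return (dC, C - (p - 1) / gam * dC)

    h = (gamma1 - gamma0) / n_steps
    y = (C0, dC0)
    for k in range(n_steps):
        gam = gamma0 + k * h
        k1 = rhs(gam, y)
        k2 = rhs(gam + h / 2, (y[0] + h / 2 * k1[0], y[1] + h / 2 * k1[1]))
        k3 = rhs(gam + h / 2, (y[0] + h / 2 * k2[0], y[1] + h / 2 * k2[1]))
        k4 = rhs(gam + h, (y[0] + h * k3[0], y[1] + h * k3[1]))
        y = (y[0] + h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]),
             y[1] + h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]))
    return y

def vmf_closed_form_p3(g):
    """C(0, g) = 4 pi sinh(g) / g on S^2 and its derivative in g."""
    C = 4 * math.pi * math.sinh(g) / g
    dC = 4 * math.pi * (math.cosh(g) / g - math.sinh(g) / g**2)
    return C, dC

C_start, dC_start = vmf_closed_form_p3(1.0)            # starting value at gamma_1 = 1
C_end, dC_end = vmf_hgm(3, 1.0, C_start, dC_start, 5.0)
C_ref, _ = vmf_closed_form_p3(5.0)
print(abs(C_end - C_ref) / C_ref)                      # small relative error
```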

4.1 Two types of Pfaffians

The Pfaffian matrices are of two types, \(\mathbf {P}_{i}\) and \(\mathbf {P}_{i+l}\) for \(i=1,2,\dots ,l\). This is because the vector \(\varvec{g}\) in (7) with parameters \({\varvec{\alpha }} =({\varvec{\theta }},{\varvec{\gamma }})\) has entries \(\varvec{g}_{i}=\frac{\partial \mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})}{\partial \theta _i}\) and \(\varvec{g}_{i+l}=\frac{\partial \mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})}{\partial \gamma _i}\). Each \(\mathbf {P}_{i}\) or \(\mathbf {P}_{i+l}\) is of dimension \(2l\times 2l\), with all but two rows having at most 4 nonzero entries. The explicit expressions are given in the “Appendix”.

For a curve \(\bar{\varvec{\alpha }}\) with constant derivative, the matrix function \(\mathbf {K}\) of (5) will be a linear combination of the 2l Pfaffian matrices.

This implies that when some \(\theta _{i}\) and \(\theta _{j}\) coalesce, the matrix \(\mathbf {K}\) will have very large entries. These come from the factors \(\frac{1}{(\theta _j-\theta _i)^{r}}\), \(r=1,2,3\), in the Pfaffian matrices, and the corresponding ODE may become stiff. These situations are generally addressed by reparametrizing, or by changing the integration curve \(\bar{\varvec{\alpha }}(\tau )\) so that \(\mathbf {K}\) remains manageable along it. For example, the radial integration path suggested in Koyama et al. (2014) and Koyama and Takemura (2016) seems to work well. The default setting in our implementation is based on the same path, so that

starting from a small \(\tau _0\) so that \(\varvec{g}({\varvec{\alpha }}{(\tau _0)})\) is accurately evaluated as a starting point for the ODE. For example, consider the curve above with the close entries \({\varvec{\theta }}=(1, 2.9999, 3, 3.0001)\) and \({\varvec{\gamma }}= (1, 1, 1, 1)\). The method works well here, returning \(\mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})=2.9753553\) within 0.53 seconds. For comparison, the saddlepoint approximation (SPA) gives 2.942742 in 0.001 seconds. This is not surprising. SPA is very fast, whereas the proposed method relies on a potentially expensive step: evaluating the starting value of \(\varvec{g}\) at a sufficiently small \(\tau _0\) so as to guarantee the required accuracy at the target value \(\tau =1\). In general, our method may require a careful choice of both the starting values and the integration path so that the ODE does not run into numerical problems. However, our implementation with the radial curve described above has not failed in the examples that we have considered.

One can easily see that the Pfaffian matrices do not become degenerate even if all \(\gamma _{i}\) become zero (except when \(n_{i}>1\)). Therefore, a possible starting value for the numerical evaluation of a general \(\mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})\) or \(\varvec{g}({\varvec{\theta }},{\varvec{\gamma }})\) is given by the corresponding derivatives of the Bingham normalizing constant, \(\varvec{g}({\varvec{\theta }},{\varvec{\gamma }}={\varvec{0}})\) (evaluated as in Sei and Kume 2015). From this point in \(\mathbb {R}^{2l}\), a second integration curve can be defined that ends at the required \(\varvec{g}({\varvec{\theta }},{\varvec{\gamma }})\). In cases with \(n_{i}>1\), we can use the power series derived by Kume and Walker (2009) and Koyama et al. (2014) to obtain a starting value of \(\varvec{g}({\varvec{\theta }},{\varvec{\gamma }})\).

5 MLE optimization using the gradient approach

If the observed data are collected in a \(p \times n\) matrix \(\mathbf {X}=(x_1, x_2,{\ldots },x_n)\), with \({\mathbf {A}} =\frac{\sum _{i=1}^n x_i x_i^\top }{n}\) and \({\mathbf {B}} =\frac{\sum _{i=1}^n x_i}{n}\), the corresponding likelihood function is

with \({\varvec{\varSigma }}^{-1}={\mathbf {O}}^\top {\varvec{\varDelta }}^{-1} {\mathbf {O}}\), \(\mathcal {C}(\frac{{\varvec{\varSigma }}^{-1}}{2},{\varvec{\varSigma }}^{-1} \mu )=\mathcal {C}(\frac{{\varvec{\varDelta }}^{-1}}{2},{\varvec{\gamma }})\), \({\varvec{\gamma }}= {\varvec{\varDelta }}^{-1} {\mathbf {O}}{\varvec{\mu }}\) and \(\frac{{\varvec{\varDelta }}^{-1}}{2}=\mathrm{diag}({\varvec{\theta }})\), while without loss of generality one can replace \({\varvec{O}}\) with \(-{\varvec{O}}\) as this does not affect \({\mathbf {O}}^{\top }{\varvec{\varDelta }}^{-1} {\mathbf {O}}\) but switches the sign of \({\mathbf {O}}{\mathbf {B}} {\varvec{\gamma }}^{\top }\).

Therefore, maximizing \(\log \mathcal {L}(\frac{{\varvec{\varSigma }}^{-1}}{2},{\varvec{\varSigma }}^{-1} \mu , \mathbf {X})\) is equivalent to minimizing

The values of \({\varvec{\theta }}\) can be shifted so that the smallest becomes 0, and \({\mathbf {O}}\) can be chosen so that \({\varvec{\gamma }}\) has non-negative entries. We can therefore optimize (16) over \({\varvec{\theta }}\ge 0\) with \(\mathrm{min}({\varvec{\theta }})=0\) and \({\varvec{\gamma }}\ge 0\), iteratively updating the parameters so as to increase the likelihood, as follows:

-

1.

for a fixed \({\mathbf {O}}\), we optimize over \({\varvec{\theta }}\) and \({\varvec{\gamma }}\); this is a 2l-dimensional problem in which the HGM solves the ODE for the normalizing constant.

-

2.

by keeping these values of \({\varvec{\theta }}\) and \({\varvec{\gamma }}\) fixed, we can then find the optimal \({\mathbf {O}}\) by minimizing (or at least decreasing) only the part of the objective that does not involve the normalizing constant, \(\mathrm{tr}({\mathbf {A}}{\mathbf {O}}^{\top } \mathrm{diag}({\varvec{\theta }}) {\mathbf {O}}+{\mathbf {O}}{\mathbf {B}} {\varvec{\gamma }}^{\top })\).
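The objective (16) can be sketched as follows. The sketch assumes the density \(\exp (-\varvec{x}^\top \mathbf {O}^\top \mathrm{diag}({\varvec{\theta }})\mathbf {O}\varvec{x}+{\varvec{\gamma }}^\top \mathbf {O}\varvec{x})/\mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})\), which is the conditional-normal density rewritten with \({\varvec{\varSigma }}^{-1}=\mathbf {O}^\top {\varvec{\varDelta }}^{-1}\mathbf {O}\). Under this convention the linear term enters the objective with a minus sign. Replacing \(\mathbf {O}\) by \(-\mathbf {O}\) gives the form with \(+\mathbf {O}\mathbf {B}{\varvec{\gamma }}^\top \) used in the text. The names `fb_objective` and `sufficient_statistics` are hypothetical. `log_C` must be supplied by an evaluator of the normalizing constant, for instance an HGM routine; the value below is only a placeholder. The check confirms that the trace form equals the average negative log-density.

```python
import numpy as np

def sufficient_statistics(X):
    """X is p x n with unit-norm columns; returns A = X X^T / n and B = X 1 / n."""
    n = X.shape[1]
    return X @ X.T / n, X.sum(axis=1) / n

def fb_objective(theta, gamma, O, A, B, log_C):
    """Rescaled negative log-likelihood -log L / n.

    Assumes the density exp(-x^T O^T diag(theta) O x + gamma^T O x) / C(theta, gamma);
    log_C = log C(theta, gamma) must come from a normalizing-constant evaluator.
    """
    quadratic = np.trace(A @ O.T @ np.diag(theta) @ O)
    linear = gamma @ O @ B
    return log_C + quadratic - linear

rng = np.random.default_rng(0)
X = rng.normal(size=(3, 50))
X /= np.linalg.norm(X, axis=0)                  # project the sample onto S^2
A, B = sufficient_statistics(X)
theta = np.array([0.0, 1.0, 2.5])
gamma = np.array([1.5, 0.3, 0.0])
O, _ = np.linalg.qr(rng.normal(size=(3, 3)))     # an arbitrary orthogonal matrix
log_C = 1.234                                    # placeholder, not a real log C
f = fb_objective(theta, gamma, O, A, B, log_C)
# The same quantity, computed directly as the average negative log-density.
direct = np.mean([log_C + x @ O.T @ np.diag(theta) @ O @ x - gamma @ O @ x for x in X.T])
```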

In order to establish a gradient descent approach for the first step, we only need the partial derivatives of \(\log L({\varvec{\theta }}, {\varvec{\gamma }}, {\mathbf {O}})\) as follows:

where \(\frac{\partial {\mathcal {C}}({\varvec{\theta }},{\varvec{\gamma }})}{\partial {\varvec{\theta }}}\frac{1}{{\mathcal {C}}({\varvec{\theta }},{\varvec{\gamma }})}\) and \(\frac{\partial {\mathcal {C}}({\varvec{\theta }},{\varvec{\gamma }})}{\partial {\varvec{\gamma }}}\frac{1}{{\mathcal {C}}({\varvec{\theta }},{\varvec{\gamma }})}\) are the output of our holonomic gradient algorithm implementation for the Pfaffian equations shown earlier. In its general form, the second optimization needs special care, as it is a non-standard optimization problem in \(\mathcal {O}(p)\). We show below an adapted gradient method which addresses this problem and therefore completes the MLE optimization. Two special cases that do not require our optimization in \(\mathcal {O}(p)\) are:

-

Bingham distribution, i.e. \({\varvec{\gamma }}={\varvec{0}}\): here the orthogonal component of the SVD of \({\mathbf {A}}\) is optimal

-

Kent distributions for \(p=3\) where approximate MLE is used and the problem is conveniently reduced to an optimization in \(\mathcal {O}(2)\) after the third column vector of \({\mathbf {O}}\) is chosen independently such that the 3-dimensional vector \({\mathbf {B}}\) coincides with a fixed axis (see Kent 1982, Sect. 4).
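The Bingham special case above can be sketched as follows. By the rearrangement (von Neumann trace) inequality, \(\mathrm{tr}(\mathbf {A}\mathbf {O}^\top \mathrm{diag}({\varvec{\theta }})\mathbf {O})\) is minimized when the rows of \(\mathbf {O}\) are eigenvectors of \(\mathbf {A}\), ordered so that the largest eigenvalue of \(\mathbf {A}\) is paired with the smallest \(\theta _i\). The helper name and the example matrix are hypothetical. Eigenvectors are determined only up to sign, which does not affect the objective when \({\varvec{\gamma }}={\varvec{0}}\).

```python
import numpy as np

def bingham_optimal_rotation(A, theta):
    """Orthogonal O minimising tr(A O^T diag(theta) O) (Bingham case, gamma = 0)."""
    a, V = np.linalg.eigh(A)              # ascending eigenvalues, eigenvectors in columns
    order = np.argsort(theta)             # positions of theta from smallest to largest
    O = np.empty((len(a), len(a)))
    # Row order[k] receives the eigenvector of the k-th largest eigenvalue of A,
    # so the largest eigenvalue is paired with the smallest theta_i.
    O[order] = V[:, ::-1].T
    return O

rng = np.random.default_rng(1)
Q, _ = np.linalg.qr(rng.normal(size=(3, 3)))
A = Q @ np.diag([0.6, 0.3, 0.1]) @ Q.T    # hypothetical A with known eigenvalues
theta = np.array([1.0, 0.0, 3.0])
O = bingham_optimal_rotation(A, theta)
M = O @ A @ O.T                           # diagonal up to rounding
```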

5.1 Optimization in \({{\mathbf {O}}}\)

In particular, we need to find the optimal \(\hat{{\mathbf {O}}}\) such that

In fact, this problem is equivalent to

This is the weighted Procrustes optimization problem considered in Chu and Trendafilov (1998). The authors there adopt an ODE approach to this problem as a simple adaptation of continuous gradient optimization. Below we give the discrete-time gradient descent version, which can be implemented directly within a unified MLE optimization procedure for the Fisher–Bingham family of distributions. Note that, provided we allow the sign of one of the components of \({\varvec{\gamma }}\) to vary in the likelihood optimization, the optimal matrix \({\mathbf {O}}\) can be taken to be a rotation matrix. That is, \({\mathbf {O}}=e^{{\varvec{v}} }\), where \({\varvec{v}}\) is skew-symmetric, i.e. \({\varvec{v}}{+}{\varvec{v}}^\top =0\).

Proposition 1

A necessary condition for \({\mathbf {O}}\) to be an optimal orthogonal matrix is that

is symmetric.

Proof

(see “Appendix”). \(\square \)

In our case, however, we can implement the gradient approach in the orthogonal group by taking as a possible new update for \({\mathbf {O}}\) a rotation along the curve

Clearly, this curve reduces to a single point only if \(\mathcal {A}\) is symmetric, i.e. \({\mathbf {O}}\) is a critical point. We can use this fact as a stopping criterion in our gradient optimization. We proceed in a similar way to obtain the second derivative, and it can be shown that a necessary condition that a particular critical \({\mathbf {O}}\) is a local minimum is

5.2 Algorithm for finding the MLE

We now have all the ingredients to establish our gradient approach for the MLE of the Fisher–Bingham distributions.

Algorithm

For a given initial set of estimates \({\varvec{\theta }}, {\varvec{\gamma }}\) and \({\mathbf {O}}\), we perform the updates as follows:

-

1.

$$\begin{aligned} \hat{{\varvec{\theta }}}={\varvec{\theta }}+ \frac{\partial \log L({\varvec{\theta }}, {\varvec{\gamma }}, {\mathbf {O}})}{\partial {\varvec{\theta }}} \delta _{{\varvec{\theta }}} \end{aligned}$$

where \(\frac{\partial \log L({\varvec{\theta }}, {\varvec{\gamma }}, {\mathbf {O}})}{\partial {\varvec{\theta }}}\) is as in (17) and \(\delta _{{\varvec{\theta }}}\) is a real number such that \(\log L(\hat{{\varvec{\theta }}}, {\varvec{\gamma }}, {\mathbf {O}})>\log L({\varvec{\theta }}, {\varvec{\gamma }}, {\mathbf {O}})\).

-

2.

$$\begin{aligned} \hat{{\varvec{\gamma }}}={\varvec{\gamma }}+ \frac{\partial \log L(\hat{{\varvec{\theta }}}, {\varvec{\gamma }}, {\mathbf {O}})}{\partial {\varvec{\gamma }}} \delta _{{\varvec{\gamma }}} \end{aligned}$$

where \(\frac{\partial \log L(\hat{{\varvec{\theta }}}, {\varvec{\gamma }}, {\mathbf {O}})}{\partial {\varvec{\gamma }}}\) is as in (18) and \(\delta _{{\varvec{\gamma }}}\) is a real number such that \(\log L(\hat{{\varvec{\theta }}}, \hat{{\varvec{\gamma }}}, {\mathbf {O}})>\log L(\hat{{\varvec{\theta }}}, {\varvec{\gamma }}, {\mathbf {O}})\).

-

3.

$$\begin{aligned} \hat{{\mathbf {O}}}=e^{\hat{{\varvec{v}}} t_0}{\mathbf {O}}\end{aligned}$$

where \({\varvec{v}}\) is calculated as in (19) and \(t_0\) is chosen such that \(\log L(\hat{{\varvec{\theta }}}, \hat{\gamma }, \hat{{\mathbf {O}}})>\log L(\hat{{\varvec{\theta }}}, \hat{{\varvec{\gamma }}}, {\mathbf {O}})\).

-

4.

We stop when the derivatives in steps 1 and 2 and \(\hat{{\varvec{v}}}\) are practically zero.
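Step 3 can be sketched as a discrete-time descent on \(\mathcal {O}(p)\). This sketch uses the sign convention of objective (16) with a minus sign on the linear term. Differentiate \(t\mapsto \mathrm{tr}(\mathbf {A}\mathbf {O}_t^\top \mathrm{diag}({\varvec{\theta }})\mathbf {O}_t)-{\varvec{\gamma }}^\top \mathbf {O}_t\mathbf {B}\) at \(t=0\), with \(\mathbf {O}_t=e^{t{\varvec{v}}}\mathbf {O}\) and \(\mathbf {M}=\mathbf {O}\mathbf {A}\mathbf {O}^\top \). The result is \(\mathrm{tr}({\varvec{v}}\mathbf {W})\), where \(\mathbf {W}=\mathbf {M}\mathrm{diag}({\varvec{\theta }})-\mathrm{diag}({\varvec{\theta }})\mathbf {M}-\mathbf {O}\mathbf {B}{\varvec{\gamma }}^\top \). Its skew part \({\varvec{v}}=(\mathbf {W}-\mathbf {W}^\top )/2\) is a descent direction that vanishes exactly when \(\mathbf {W}\) is symmetric. We do not claim that \(\mathbf {W}\) coincides literally with the matrix in Proposition 1 or with (19). The step length \(t_0\) is chosen by Armijo backtracking, and \(e^{t{\varvec{v}}}\) is computed from the eigendecomposition of the Hermitian matrix \(i{\varvec{v}}\). All function names are hypothetical.

```python
import numpy as np

def expm_skew(V):
    """exp(V) for real skew-symmetric V, via the Hermitian matrix iV = U diag(lam) U^*."""
    lam, U = np.linalg.eigh(1j * V)
    return (U @ np.diag(np.exp(-1j * lam)) @ U.conj().T).real

def objective_O(O, A, B, theta, gamma):
    """Part of -log L / n that depends on O (linear term with a minus sign)."""
    return np.trace(A @ O.T @ np.diag(theta) @ O) - gamma @ O @ B

def descent_direction(O, A, B, theta, gamma):
    """Skew-symmetric v with d/dt objective(expm(t v) O) at t = 0 equal to -||v||_F^2."""
    M = O @ A @ O.T
    D = np.diag(theta)
    W = M @ D - D @ M - np.outer(O @ B, gamma)
    return (W - W.T) / 2                          # zero exactly when W is symmetric

def optimise_O(O, A, B, theta, gamma, max_iter=2000, tol=1e-10, c=1e-4):
    """Gradient descent on the orthogonal group, O <- expm(t v) O, with Armijo backtracking."""
    history = [objective_O(O, A, B, theta, gamma)]
    for _ in range(max_iter):
        v = descent_direction(O, A, B, theta, gamma)
        sq_norm = np.sum(v * v)
        if np.sqrt(sq_norm) < tol:                # critical point: W is (numerically) symmetric
            break
        t = 1.0
        while t > 1e-12:
            O_new = expm_skew(t * v) @ O
            f_new = objective_O(O_new, A, B, theta, gamma)
            if f_new <= history[-1] - c * t * sq_norm:   # sufficient decrease
                break
            t /= 2
        else:
            break                                 # no acceptable step length found
        O = O_new
        history.append(f_new)
    return O, history

# Bingham-type example (gamma = 0, B = 0) with hypothetical A and theta;
# the minimum value is 0 * 0.6 + 1 * 0.3 + 3 * 0.1 = 0.6.
rng = np.random.default_rng(2)
Q, _ = np.linalg.qr(rng.normal(size=(3, 3)))
A = Q @ np.diag([0.6, 0.3, 0.1]) @ Q.T
theta = np.array([0.0, 1.0, 3.0])
O0, _ = np.linalg.qr(rng.normal(size=(3, 3)))
O_hat, history = optimise_O(O0, A, np.zeros(3), theta, np.zeros(3))
```

The stopping rule mirrors step 4: the iteration ends once \({\varvec{v}}\) is practically zero, or when no step length gives a sufficient decrease.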

Note, however, that if we want to fit the Bingham distribution, i.e. \({\varvec{\gamma }}\) is assumed to be zero, there is no need to implement step 3 above. The optimal \({\mathbf {O}}\) in this case is simply the one for which \(\mathcal {A}=0\), that is, for which \({\mathbf {O}}{\mathbf {A}} {\mathbf {O}}^\top \) is diagonal.

5.3 Numerical evidence

In Nakayama et al. (2011), the authors illustrate the general methodology of the holonomic gradient method using two data sets: one from astronomy and the other from magnetism. We revisit the first data set in order to confirm that our method gives the same MLE results. We also use our parametrization, which handles the sub-classes of the Fisher–Bingham family, to perform statistical inference for choosing the most appropriate model. The second data set considered here was previously used by Arnold and Jupp (2013), who introduced a statistical model for orthogonal frames. Recordings of three orthogonal axes related to individual earthquake events in New Zealand are grouped into three data sets. Each triplet of orthogonal axes in \(\mathbb {R}^3\) related to a particular earthquake event gives rise to a direction orthogonal to the horizontal plane. These directions can be modelled by Bingham distributions (cf. Arnold and Jupp 2013), so we have three sets of directional data for which Bingham distributions are considered appropriate. A Bayesian approach to fitting Bingham distributions to such data is also considered in Fallaize and Kypraios (2014). We show below that the best modelling choice among the sub-classes of the Fisher–Bingham family is indeed the Bingham distribution.

Astronomy data

For this data set in our parametrization, the components A and B are as follows:

Fitting the Fisher–Bingham distribution to these data, we get the following MLE values

where the values in brackets are the MLEs obtained using the saddlepoint approximation for the normalizing constant. The optimal likelihood value rescaled by \(-n\) as defined in (16) is \(2.457746=\log 11.67846\), which is the same as the value reported in Nakayama et al. (2011). The corresponding quantity for the saddlepoint approximation is 2.463414. We also fitted the Kent distribution to this data set; the MLEs of the corresponding quantities using HGM (and the saddlepoint approximation) are

with 2.465478 and 2.471299 being the corresponding values of function (16) at these optimal points. Since the two models differ by \(8-5=3\) parameters, we can apply the likelihood ratio test with the Kent model as the null hypothesis:

where \(\log L\) is the log-likelihood rescaled by \(-n\) as defined in (16), and \({\varvec{\varTheta }}_{\mathrm{FB}}\) and \({\varvec{\varTheta }}_K\) denote the MLEs for the full Fisher–Bingham and Kent models, respectively. The sample size is \(n=168\), so the likelihood ratio statistic is \(2\times 168\times (2.465478-2.457746)=2.597952\), which, compared with the \(\chi ^2_3\) distribution, suggests that there is not enough evidence to support the full Fisher–Bingham model here. The same conclusion holds for the saddlepoint approximation quantities.
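The arithmetic of the test can be reproduced from the reported values alone. The sketch below assumes, as "rescaled by \(-n\)" indicates, that (16) is the negative log-likelihood divided by n, so the usual statistic \(2(\log L_{\mathrm{FB}}-\log L_K)\) becomes \(2n\) times the difference of the two values of (16); the helper name `lrt_from_rescaled` is our own and the p values come from the \(\chi^2_3\) upper tail.

```python
import math
from scipy.stats import chi2

def lrt_from_rescaled(ell_null, ell_alt, n, df):
    """Likelihood ratio test from values of the rescaled objective (16).

    Assumes ell = -(log-likelihood)/n, so 2*(logL_alt - logL_null) = 2*n*(ell_null - ell_alt).
    Returns the statistic and its chi-square(df) upper-tail p value.
    """
    stat = 2.0 * n * (ell_null - ell_alt)
    return stat, chi2.sf(stat, df)

n = 168          # sample size of the astronomy data
df = 8 - 5       # free parameters: Fisher-Bingham (8) minus Kent (5)

# Values of (16) at the MLEs, as reported in the text.
hgm = lrt_from_rescaled(ell_null=2.465478, ell_alt=2.457746, n=n, df=df)
spa = lrt_from_rescaled(ell_null=2.471299, ell_alt=2.463414, n=n, df=df)
print("check log(11.67846) =", round(math.log(11.67846), 6))
print("HGM:         statistic = %.6f, p value = %.4f" % hgm)
print("saddlepoint: statistic = %.6f, p value = %.4f" % spa)
```

Both statistics lie far below the 5% critical value of \(\chi^2_3\) (about 7.81), so the Kent model is retained whichever method is used for the normalizing constant.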

The planar projections on the horizontal plane of the frame of orthogonal axes (P A T) related to earthquake records. The left plot shows the data for earthquakes in Christchurch prior to 22 February 2001, the middle plot those recorded post 22 February 2001, and the third plot refers to earthquake records in South Island. The point \(+\) in each plot denotes the mode of the Bingham distribution fitted to directions of axis \(\mathbf A \)

Earthquake data

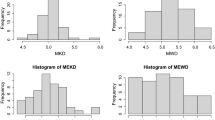

The three axes of interest for an earthquake event are the compressional axis P, the tensional axis T and the null axis A, defined as \(\mathbf A = \mathbf P \times \mathbf T \). For these data sets, the first two axes tend to be horizontal, and therefore the third axis tends to point vertically. These axial data are shown in Fig. 1 and are split into three groups of particular interest. The key assumption in modelling these data sets is that the observed directions of axis A follow a Bingham distribution on the two-dimensional sphere.
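The construction of the null axis and of the plotted Bingham mode can be sketched as follows. This is a minimal sketch on synthetic, hypothetical frames (not the New Zealand data); it relies on the standard fact, also stated in the concluding remarks, that for \(\gamma=0\) the fitted orientation makes the scatter matrix diagonal, so the mode is \(\pm\) the leading eigenvector of \(\sum_i x_i x_i^\top\). The helper names `null_axis`, `bingham_mode` and `near_horizontal` are our own.

```python
import numpy as np

def null_axis(P, T):
    """Null axis A = P x T for each row of the (n x 3) unit-vector arrays P and T."""
    A = np.cross(P, T)
    return A / np.linalg.norm(A, axis=1, keepdims=True)

def bingham_mode(X):
    """Axial mode estimate: leading eigenvector of the scatter matrix of X (n x 3).

    The sign is arbitrary because Bingham data are axial (x and -x are equivalent).
    """
    S = X.T @ X / X.shape[0]
    _, eigvec = np.linalg.eigh(S)          # eigenvalues in ascending order
    return eigvec[:, -1]

def near_horizontal(rng, n, tilt=0.1):
    """Hypothetical unit vectors with a small vertical (third) component."""
    V = np.column_stack([rng.normal(size=n), rng.normal(size=n), tilt * rng.normal(size=n)])
    return V / np.linalg.norm(V, axis=1, keepdims=True)

rng = np.random.default_rng(0)
n = 50
P = near_horizontal(rng, n)
R = near_horizontal(rng, n)
T = R - np.sum(R * P, axis=1, keepdims=True) * P   # Gram-Schmidt: make T orthogonal to P
T /= np.linalg.norm(T, axis=1, keepdims=True)

A = null_axis(P, T)
mode = bingham_mode(A)
print("max |A.P|, |A.T|:", np.abs(np.sum(A * P, axis=1)).max(), np.abs(np.sum(A * T, axis=1)).max())
print("mode of axis A:", mode)                      # close to +/-(0, 0, 1) for these synthetic frames
```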

The MLEs of the Bingham distribution parameters fitted to the three data sets of directions of axis A shown in Fig. 1 are as follows:

We also fitted Fisher–Bingham distributions to these three clusters of directions; the corresponding \(\chi ^2_3\) test statistics (with p values in brackets) are 0.4889717 (0.9213075), 3.885764 (0.2740667) and 1.630983 (0.6523852). These results suggest that the Bingham distribution assumption is reasonable for these data sets. One of the referees correctly pointed out that the Bingham distribution is in fact the appropriate model for axial data: if the observations independently undergo an axial rearrangement, namely sign changes \(x_i \mapsto -x_i\) of individual observations, the likelihood does not change under the Bingham model but does change under the Fisher–Bingham model. Therefore, the model choice performed here only serves to illustrate numerically that our HGM implementation works for a given axial arrangement of these data points; alternative models, such as the matrix Fisher distribution suggested by the referee, could be better modelling strategies for these orthogonal frames.
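The referee's point can be made concrete with a small numerical check. The sketch uses hypothetical points and parameters, and it keeps only the data-dependent part of each log-likelihood, since the normalizing constant does not depend on the observations: a quadratic term \(\sum_i x_i^\top M x_i\) for the Bingham model, plus a linear term \(\sum_i g^\top x_i\) for the Fisher–Bingham model. Flipping the signs of randomly chosen observations leaves only the first unchanged.

```python
import numpy as np

def bingham_term(X, M):
    """Data part of a Bingham log-likelihood: sum_i x_i^T M x_i."""
    return np.einsum("ij,jk,ik->", X, M, X)

def fb_term(X, M, g):
    """Fisher-Bingham data part: the Bingham term plus the linear term sum_i g^T x_i."""
    return bingham_term(X, M) + np.sum(X @ g)

rng = np.random.default_rng(1)
n = 20
X = rng.normal(size=(n, 3))
X /= np.linalg.norm(X, axis=1, keepdims=True)    # hypothetical points on S^2
M = np.diag([-3.0, -1.0, 0.0])                  # hypothetical quadratic parameter
g = np.array([0.5, -1.0, 2.0])                  # hypothetical linear parameter

signs = rng.choice([-1.0, 1.0], size=(n, 1))    # independent flips x_i -> -x_i
signs[0] = -1.0                                 # make sure at least one point is flipped
Xf = signs * X

same_bingham = np.isclose(bingham_term(X, M), bingham_term(Xf, M))
same_fb = np.isclose(fb_term(X, M, g), fb_term(Xf, M, g), rtol=0.0, atol=1e-12)
print("Bingham term unchanged:", same_bingham)
print("Fisher-Bingham term unchanged:", same_fb)
```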

6 Concluding remarks

In this paper, we provide explicitly the Pfaffian system for the normalizing constant of the Fisher–Bingham distributions, including the degenerate cases. Such explicit expressions are not only of theoretical interest but also improve the implementation of the methods currently used for the MLE of these models. We also reduce the dimension of the ODE, since we need to operate in a dimension no larger than twice the number of distinct values among the \(\theta _i\). The standard HGM has so far not accounted for multiplicities among the \(\theta _i\)’s or for \(\gamma _i=0\). We can also perform exact MLE inference by using gradient optimization methods for the optimal orthogonal component \({\mathbf {O}}\), as in weighted Procrustes optimization. Note, however, that the optimization in \({\mathbf {O}}\) shown in Sect. 5.1 is only local, and \(\mathrm{rank}({\mathbf {B}}^{\top } {\varvec{\gamma }})=1\) might imply many optimal solutions. For the Bingham distribution, i.e. the case \({\varvec{\gamma }}=0\), the optimal matrix \({\mathbf {O}}\) does not depend on \({\varvec{\theta }}\), since it is defined by the requirement that \(\sum _{i=1}^n {\mathbf {O}}x_i ({\mathbf {O}}x_i)^{\top } \) be diagonal.

The numerical examples indicate that, when carefully implemented, the method is highly accurate and performs well in real applications. Its implementation can occasionally fail because the corresponding ODE is numerically ill-behaved. This can be addressed by changing the ODE, namely by altering the starting point and/or the integration path, as discussed in the last paragraph of Sect. 4. The default integration path used in our R implementation works well in many tests. As indicated in our first real data example, the MLE based on the saddlepoint approximation is, with some exceptions, not far from our MLE, so one can start the HGM from this solution. This hybrid approach could in principle reduce the region of the numerical search and could be seen as a way of calibrating the saddlepoint approximation. Our proposed method clearly generalizes that of Koyama et al. (2014), since it offers explicit expressions for the Pfaffian equations for all Fisher–Bingham distributions, including those with degeneracies in the parameters. Finally, since the saddlepoint approximation is numerically stable, practically accurate and immediately available, the HGM could be used as a refinement of this approximation.
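The role of the starting point and of the integration path can be illustrated on the simplest holonomic case. This is a toy sketch, not the authors' R implementation: taking \(\theta=0\) and \(\gamma=(\kappa,0,0)\) on \(\mathcal S^2\), and assuming the surface measure of total mass \(4\pi\), the Fisher–Bingham integral reduces to \(f(\kappa)=4\pi\sinh(\kappa)/\kappa\). The vector \((f,f')\) satisfies a one-parameter Pfaffian equation, which is integrated numerically from a starting point \(\kappa_0\) to \(\kappa_1\); in a real HGM the initial vector must be obtained by other means, and here it is taken from the closed form purely for illustration.

```python
import numpy as np
from scipy.integrate import solve_ivp

def pfaffian_rhs(kappa, F):
    """Right-hand side of d/dkappa (f, f') = P(kappa) (f, f').

    For f(kappa) = 4*pi*sinh(kappa)/kappa, (kappa*f)'' = kappa*f gives
    kappa*f'' + 2*f' - kappa*f = 0, i.e. P(kappa) = [[0, 1], [1, -2/kappa]].
    """
    f, df = F
    return [df, f - 2.0 * df / kappa]

def closed_form(kappa):
    """(f, f') in closed form, used only for the initial value and for checking."""
    f = 4.0 * np.pi * np.sinh(kappa) / kappa
    df = 4.0 * np.pi * (np.cosh(kappa) / kappa - np.sinh(kappa) / kappa**2)
    return np.array([f, df])

k_start, k_end = 1.0, 10.0          # starting point and end point of the integration path
sol = solve_ivp(pfaffian_rhs, (k_start, k_end), closed_form(k_start), rtol=1e-10, atol=1e-12)
hgm_value = sol.y[:, -1]
rel_err = np.abs(hgm_value / closed_form(k_end) - 1.0)
print("HGM:", hgm_value, "exact:", closed_form(k_end), "relative error:", rel_err)
```

Changing `k_start` plays the role of changing the starting point; in several dimensions the path between parameter values would also have to be chosen.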

References

Abramowitz, M., Stegun, I.A.: Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables. Dover, New York (1972)

Arnold, R., Jupp, P.E.: Statistics of orthogonal axial frames. Biometrika 100, 571–586 (2013)

Chu, M.T., Trendafilov, N.T.: On a differential equation approach to the weighted orthogonal Procrustes problem. Stat. Comput. 8, 125–133 (1998)

Fallaize, C.J., Kypraios, T.: Exact Bayesian inference for the Bingham distribution. Stat. Comput. (2014)

Hashiguchi, H., Numata, Y., Takayama, N., Takemura, A.: Holonomic gradient method for the distribution function of the largest root of a Wishart matrix. J. Multivar. Anal. 117, 296–312 (2013)

Hibi, T. (ed.): Gröbner Bases: Statistics and Software Systems. Springer, New York (2013)

Kent, J.T.: The Fisher–Bingham distribution on the sphere. J. R. Stat. Soc. Ser. B 44, 71–80 (1982)

Koyama, T.: A Holonomic Ideal Annihilating the Fisher–Bingham Integral. arXiv:1104.1411 (2011)

Koyama, T., Takemura, A.: Holonomic gradient method for distribution function of a weighted sum of noncentral chi-square random variables. Comput. Stat. 31, 1645–1659 (2016)

Koyama, T., Nakayama, H., Nishiyama, K., Takayama, N.: The Holonomic Rank of the Fisher–Bingham System of Differential Equations. arXiv:1205.6144 (2012)

Koyama, T., Nakayama, H., Nishiyama, K., Takayama, N.: Holonomic gradient descent for the Fisher–Bingham distribution on the n-dimensional sphere. Comput. Stat. 29, 661–683 (2014)

Kume, A., Walker, S.G.: On the Fisher–Bingham distribution. Stat. Comput. 19, 167–172 (2009)

Kume, A., Wood, A.T.A.: Saddlepoint approximations for the Bingham and Fisher–Bingham normalising constants. Biometrika 92, 465–476 (2005)

Kume, A., Wood, A.T.A.: On the derivatives of the normalising constant of the Bingham distribution. Stat. Probab. Lett. 77, 832–837 (2007)

Mardia, K.V., Jupp, P.E.: Directional Statistics. Wiley Series in Probability and Statistics. Wiley, Chichester (2000)

Nakayama, H., Nishiyama, K., Noro, M., Ohara, K., Sei, T., Takayama, N., Takemura, A.: Holonomic gradient descent and its application to the Fisher–Bingham integral. Adv. Appl. Math. 47, 639–658 (2011)

Sei, T., Kume, A.: Calculating the normalising constant of the Bingham distribution on the sphere using the holonomic gradient method. Stat. Comput. 25, 321–332 (2015)

Sei, T., Shibata, H., Takemura, A., Ohara, K., Takayama, N.: Properties and applications of Fisher distribution on the rotation group. J. Multivar. Anal. 116, 440–455 (2013)

Wood, A.T.A.: Estimation of the concentration parameters of the Fisher matrix distribution on \( {SO}(3)\) and the Bingham distribution on \(S_q, q\ge 2\). Aust. J. Stat. 35, 69–79 (1993)

Zarowsky, C.J.: An Introduction to Numerical Analysis for Electrical and Computer Engineers. Wiley, London (2004)

Acknowledgements

The authors are very grateful to Richard Arnold and Peter Jupp for providing the earthquake data and to the two anonymous referees for their helpful comments. This work was partially supported by JSPS KAKENHI Grant Nos. JP26108003 and JP26540013. Our special thanks go to Andy Wood for general discussions and encouragement.

Appendix

The proof of Theorem 1 relies heavily on Lemma 1, which is stated after some preliminary remarks.

Remark 1

One can easily notice that

and

and therefore, these elementary functions

in fact represent the first-order derivatives \(\frac{\partial \mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})}{\partial \theta _i}\) and \(\frac{\partial \mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})}{\partial \gamma _i}\).

In what follows, we show, using the theory of partial fractions, that \(\frac{\partial \mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})}{\partial \theta _i}\) and \(\frac{\partial \mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})}{\partial \gamma _i}\) can be used to express the integrals above even for \(r=3,4\), which then yields the expressions for the second-order derivatives. This is the basis of the following methodology for obtaining the Pfaffian equations.

For example, using (8) and (9), the second-order derivatives take the following forms:

For \(i \ne j\)

and for \(i=j\),

Remark 2

If \(i=j\), however, it is clear that nonzero values of \(\gamma _i\) give rise to

in the second-order derivatives, while for \(\gamma _i=0\) such terms vanish.

Remark 3

The second-order derivatives \(\frac{\partial ^2 \mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})}{\partial \theta _i \partial \theta _j}\), \(\frac{\partial ^2 \mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})}{\partial \gamma _i \partial \gamma _j}\) and \(\frac{\partial ^2 \mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})}{\partial \gamma _i \partial \theta _j}\) for \(i\ne j\) can be given in terms of only this pair of basis functions

which, by Remark 1, are expressed in terms of \(\frac{\partial \mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})}{\partial \theta _i }\) and \(\frac{\partial \mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})}{\partial \gamma _i }\). This is easily seen by integrating with respect to \(\mathcal {A}({\varvec{\gamma }}, {\varvec{\theta }}) e^{t} \mathrm{d}t\) and using the following basis decomposition:

Lemma 1

If \(\theta _i \ne \theta _j\) and \(\gamma _i\ne 0 \ne \gamma _j\), then

and

where

and

i.e.

Note that the expressions on the right-hand side for a and b in the statement of the lemma are valid if \(B\ne 0 \ne D\).

Proof of Lemma 1

The identity (26) is a direct consequence of the theory of partial fractions. From (26), we see that

or

and, evaluating this equation at \(t=-\theta _i\) and \(t=-\theta _j\), we have

which establish the explicit expressions for b and d. Differentiating both sides of (29) with respect to t and then substituting \(t=-\theta _i\) and \(t=-\theta _j\) in turn, we obtain the following pair of equations

which confirm the remaining expressions for a and c in the lemma, including the identity \(a=-c\).

The second result of the lemma is a direct consequence of the first, together with the identities \(\int \limits _{\text {i}\mathbb {R}+t_0} \frac{1}{(\theta _i+t)} \mathcal {A}({\varvec{\gamma }}, {\varvec{\theta }}) e^{t} \mathrm{d}t=\frac{2}{\gamma _i}\frac{\partial \mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})}{\partial \gamma _i}\) and \(\int \limits _{\text {i}\mathbb {R}+t_0} \frac{1}{(\theta _i+t)^2} \mathcal {A}({\varvec{\gamma }}, {\varvec{\theta }}) e^{t} \mathrm{d}t= -\frac{4n_i}{\gamma _i^3}\frac{\partial \mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})}{\partial \gamma _i} -\frac{4}{\gamma _i^2}\frac{ \partial \mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})}{\partial \theta _i}\). \(\square \)
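The partial-fraction step used in the proof can be checked numerically. As an illustrative assumption, the sketch takes the rational function with constant numerator, \(1/((\theta_i+t)^2(\theta_j+t)^2)\); the integrand of (26) itself is not reproduced in this section, so the coefficients below only mirror the pattern of the proof: b and d come from evaluating the cleared identity at \(t=-\theta_i\) and \(t=-\theta_j\), a and c from its first derivative at the same points, and \(a=-c\). The values of \(\theta_i,\theta_j\) are hypothetical.

```python
def partial_fraction_coeffs(th_i, th_j):
    """a, b, c, d with 1/((th_i+t)^2 (th_j+t)^2)
    = a/(th_i+t) + b/(th_i+t)^2 + c/(th_j+t) + d/(th_j+t)^2, for th_i != th_j.

    Clearing denominators gives
    1 = a(t+th_i)(t+th_j)^2 + b(t+th_j)^2 + c(t+th_i)^2(t+th_j) + d(t+th_i)^2.
    Evaluating at t = -th_i and t = -th_j gives b and d; differentiating once
    and evaluating at the same points gives a and c, with a = -c.
    """
    delta = th_j - th_i
    b = 1.0 / delta**2
    d = 1.0 / delta**2
    a = -2.0 / delta**3
    c = 2.0 / delta**3
    return a, b, c, d

th_i, th_j = 0.7, 2.3                          # hypothetical distinct values
a, b, c, d = partial_fraction_coeffs(th_i, th_j)
max_rel_err = 0.0
for t in [-4.0, -1.5, 0.0, 1.0, 3.5, 10.0]:    # points away from the poles -th_i, -th_j
    lhs = 1.0 / ((th_i + t) ** 2 * (th_j + t) ** 2)
    rhs = a / (th_i + t) + b / (th_i + t) ** 2 + c / (th_j + t) + d / (th_j + t) ** 2
    max_rel_err = max(max_rel_err, abs(rhs - lhs) / abs(lhs))
print("a =", a, "c =", c, "max relative error:", max_rel_err)
```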

Proof of Theorem 1

Applying Lemma 1 to Eqs. (20), (21) and (22), we obtain the following three identities:

since the corresponding terms are

with

and

since

and

The corresponding cases \(i=j\) are obtained by applying \(\frac{\partial }{\partial \gamma _i }\) to both sides of (6), isolating the term \(\frac{\partial ^2 \mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})}{\partial \theta _i \partial \gamma _i}\) and using (32) and (33):

Similarly, applying \(\frac{\partial }{\partial \theta _j }\) to both sides of (6) and using (31), we have

Finally, the equation for \(\frac{\partial ^2 \mathcal {C}({\varvec{\theta }},{\varvec{\gamma }})}{\partial \gamma _i^2}\) is

which has a singularity at \(\gamma _i=0\) if \(n_i\ge 2\).
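The number l of distinct \(\theta\) values and the multiplicities that index the formulas above and the Pfaffian matrices below can be obtained by a simple grouping step. This is a minimal sketch, assuming (as the singularity condition above suggests) that \(n_i\) denotes the multiplicity of the i-th distinct value of \(\theta\); the helper `group_theta` and its tolerance for declaring two values equal are our own choices. It also reports the bound 2l on the ODE dimension from the concluding remarks, next to 2p, the value of 2l when all \(\theta_i\) are distinct.

```python
def group_theta(theta, tol=1e-10):
    """Distinct values of theta (within tol) and their multiplicities."""
    values, mult = [], []
    for t in sorted(theta):
        if values and abs(t - values[-1]) <= tol:
            mult[-1] += 1                  # same group as the previous value
        else:
            values.append(t)               # start a new group
            mult.append(1)
    return values, mult

theta = [0.0, 1.5, 1.5, 1.5, 4.0]          # hypothetical theta with one triple value (p = 5)
values, mult = group_theta(theta)
l = len(values)
print("distinct values:", values)
print("multiplicities n_i:", mult)
print("ODE dimension at most 2l =", 2 * l, "versus 2p =", 2 * len(theta))
```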

Explicit expressions for the Pfaffians

For \(\mathbf {P}_{i}\), only rows i and \(i+l\) have 2l nonzero entries, while each of the remaining \(2(l-1)\) rows, indexed by \(j\in \{1,2,{\ldots },l |j \ne i\}\) and \(j+l\), has at most 4 nonzero entries, as indicated in (10):

and, for the \(l-1\) rows \(j+l\), using (11) with i and j interchanged, we have only 3 nonzero entries:

The ith row of \(\mathbf {P}_{i}\) can be obtained by rewriting (14):

and therefore, the nonzero entries of \(\mathbf {P}_{i}(i,:)\) are:

for \(j\in \{1,2,{\ldots },l |j \ne i\}\). For the \((i+l)\)th row, note that Eq. (13) implies that

and

Similarly, one can show that, for the second type \(\mathbf {P}_{i+l}\), the only nonzero elements in rows j and \(j+l\), for all \(j \ne i\), are

as seen from (11). For the ith row

and for the (\(i+l\))th row

Proof of Proposition 1

Now, for a given \({\mathbf {O}}\) and some direction \({\varvec{v}}\), we can define a curve in the space of orthogonal matrices starting from \({\mathbf {O}}\), namely \({\mathbf {O}}(t)=e^{{\varvec{v}} t}{\mathbf {O}}\), where \({\varvec{v}}\) is skew-symmetric. Each \({\mathbf {O}}(t)\) is orthogonal because the exponential of a skew-symmetric matrix is orthogonal, and the curve starts from \({\mathbf {O}}\) since \(e^{{\varvec{v}} t}|_{t=0}={\mathbf {I}}\). Since \(\frac{\partial \log L({\varvec{\theta }}, {\mathbf {O}}(t), {\varvec{\gamma }})}{\partial t}=-\frac{\partial \, \mathrm{tr}({\mathbf {A}}{\mathbf {O}}^{\top } \mathrm{diag}({\varvec{\theta }}) {\mathbf {O}}+ {\varvec{\gamma }} {\mathbf {B}}^{\top } {\mathbf {O}}^\top )}{\partial t}\), \(\frac{\partial {\mathbf {O}}(t) }{\partial t}|_{t=0}={\varvec{v}} {\mathbf {O}}\) and \(-{\varvec{v}}^{\top }={\varvec{v}}\), we obtain

This derivative vanishes for every skew-symmetric matrix \({\varvec{v}}\) only if

is symmetric, i.e. \(\mathcal {A}=\mathcal {A}^\top \).
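The stationarity condition can be checked numerically in the Bingham case \(\gamma=0\). For the criterion \(\mathrm{tr}(\mathbf A \mathbf O^\top \mathrm{diag}(\theta)\mathbf O)\), differentiating along \(\mathbf O(t)=e^{vt}\mathbf O\) gives \(\mathrm{tr}(v(M-M^\top))\) with \(M=\mathbf O\mathbf A\mathbf O^\top\mathrm{diag}(\theta)\). This is our own derivation for \(\gamma=0\), not the missing display, and the overall sign of \(\log L\) does not affect stationarity. The derivative vanishes for every skew-symmetric v exactly when M is symmetric; with distinct \(\theta_i\) this means \(\mathbf O\mathbf A\mathbf O^\top\) is diagonal, in line with the concluding remarks. The sketch, with hypothetical data and \(\theta\), compares a finite-difference derivative with this formula.

```python
import numpy as np
from scipy.linalg import expm

def criterion(O, A, theta):
    """Bingham part tr(A O^T diag(theta) O) of the trace term in the text."""
    return np.trace(A @ O.T @ np.diag(theta) @ O)

def directional_derivative(O, A, theta, v, h=1e-6):
    """Central difference of t -> criterion(expm(v t) O) at t = 0."""
    f_plus = criterion(expm(v * h) @ O, A, theta)
    f_minus = criterion(expm(-v * h) @ O, A, theta)
    return (f_plus - f_minus) / (2.0 * h)

rng = np.random.default_rng(2)
X = rng.normal(size=(30, 3)) * np.array([3.0, 1.0, 0.3])
X /= np.linalg.norm(X, axis=1, keepdims=True)      # hypothetical axial data on S^2
A = X.T @ X                                        # scatter matrix sum_i x_i x_i^T
theta = np.array([0.0, 2.0, 5.0])                  # hypothetical distinct concentrations

W = rng.normal(size=(3, 3))
v = W - W.T                                        # random skew-symmetric direction

_, U = np.linalg.eigh(A)
O_diag = U.T                                       # O A O^T is diagonal
O_rand, _ = np.linalg.qr(rng.normal(size=(3, 3)))  # a generic orthogonal matrix

results = {}
for name, O in [("diagonalizing O", O_diag), ("random O", O_rand)]:
    M = O @ A @ O.T @ np.diag(theta)               # stationarity requires M = M^T
    fd = directional_derivative(O, A, theta, v)
    analytic = np.trace(v @ (M - M.T))             # derivative along e^{vt} O at t = 0
    results[name] = (fd, analytic, np.allclose(M, M.T))
    print(name, "finite difference:", fd, "analytic:", analytic, "M symmetric:", results[name][2])
```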

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Kume, A., Sei, T. On the exact maximum likelihood inference of Fisher–Bingham distributions using an adjusted holonomic gradient method. Stat Comput 28, 835–847 (2018). https://doi.org/10.1007/s11222-017-9765-3

DOI: https://doi.org/10.1007/s11222-017-9765-3