Abstract

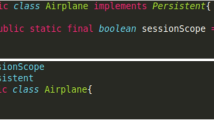

Usability is now regarded as an important quality characteristic to be considered throughout the software development process. A great variety of usability techniques have been proposed so far, mostly intended for the analysis, design, and final testing phases of software projects. However, little or no attention has been paid to the analysis and measurement of usability in the implementation phase, and usability testing is traditionally carried out in advanced stages. Detecting usability flaws during implementation is nevertheless of utmost importance to foresee and prevent problems in the use of the software and to avoid significant cost increases. In this paper, we propose a feasible solution to analyze and measure usability metrics during the implementation phase. Specifically, we have developed a framework featuring code annotations that provides a systematic evaluation of usability throughout the source code. These annotations are interpreted by an annotation processor to obtain valuable information and automatically calculate usability metrics at compile time. In addition, an evaluation with 32 participants has been carried out to demonstrate the effectiveness and efficiency of our approach in comparison with the manual process of analyzing and measuring internal usability metrics. Perceived satisfaction was also evaluated, indicating that our approach can be considered a valuable tool for dealing with usability metrics during the implementation phase.

Acknowledgments

This work was partially supported by the Madrid Research Council (P2018/TCS-4314).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Schramme, M., Macías, J.A. Analysis and measurement of internal usability metrics through code annotations. Software Qual J 27, 1505–1530 (2019). https://doi.org/10.1007/s11219-019-09455-4

DOI: https://doi.org/10.1007/s11219-019-09455-4