Abstract

The aim was to describe biomedical retractions and to analyse those retracted in 2000–2021 due to research misconduct among authors affiliated with European institutions. A cross-sectional study was conducted, using the Retraction Watch database, Journal Citation Reports and PubMed as data sources. Biomedical original papers, reviews, case reports and letters whose corresponding author was affiliated with a European institution, retracted between 01/01/2000 and 30/06/2021, were included. We characterized retraction rates over time and analysed the four countries with the highest number of retractions: Germany, the United Kingdom, Italy and Spain. A total of 2069 publications were identified. Retraction rates increased from 10.7 to 44.8 per 100,000 publications between 2000 and 2020. Research misconduct accounted for most retractions (66.8%). The reasons for misconduct-related retractions shifted over time, from problems of copyright and authorship in 2000 (2.5 per 100,000 publications) to duplication in 2020 (8.6 per 100,000 publications). In 2020, the main reason was fabrication and falsification in the United Kingdom (6.2 per 100,000 publications) and duplication in Spain (13.2 per 100,000 publications). Retractions of papers by authors affiliated with European institutions are increasing and are primarily due to research misconduct. The type of misconduct has changed over time and differs between European countries.

Introduction

The retraction of scientific papers has increased in recent years, with research misconduct, rather than honest research error, now being the primary cause (Campos-Varela & Ruano-Ravina, 2019; Fang et al., 2012; Nath et al., 2006). Although the term “research misconduct” is generally defined as fabrication, falsification, and plagiarism (FFP) (ICMJE, 2022), research integrity guidance is highly heterogeneous on crucial aspects at both the international and the national level (Godecharle et al., 2014), including the definition of misconduct itself (National Academies of Sciences, Engineering, and Medicine, 2017; Resnik, 2019). Broader definitions may include further violations of good research practice, such as duplication, misleading image manipulation, deliberate failure to disclose relationships and activities (including conflicts of interest), and other unethical behavior (Li & Cornelis, 2020; Resnik et al., 2015).

Research misconduct has been identified as a structural problem in research (Grieneisen & Zhang, 2012). In Europe, previous research has shown that a considerable proportion of researchers have engaged in scientific misconduct. An Italian study found that 21.2% of the researchers surveyed admitted to some form of misconduct (Mabou Tagne et al., 2020), and even higher rates were found in studies carried out in Spain (43.3%) (Candal-Pedreira et al., 2023a) and Norway (39.5%) (Kaiser et al., 2021). In a 2009 meta-analysis of surveys of researchers worldwide, 2% acknowledged having fabricated or falsified data and up to 33.7% admitted to other questionable research practices (Fanelli, 2009).

The increase in retractions due to scientific misconduct highlights the need for mechanisms and strategies that are truly effective in preventing, detecting, and even sanctioning these unethical behaviors. In Europe, there is no supranational body that supervises research when misconduct is suspected. Only 14 out of 46 countries have national ethics committees to promote research integrity, and only six of them have the power to investigate, but none can take disciplinary action (Candal-Pedreira et al., 2021). This is all the more relevant given that countries with policies regulating scientific misconduct have been found to have a lower likelihood of having publications retracted (Fanelli et al., 2015).

Moreover, previous research has shown that the authors’ country of origin is a contributing factor to retractions and scientific misconduct. In Portugal and Brazil, 60.1% and 55.9% of retractions, respectively, were due to misconduct, with plagiarism being the primary reason (Candal-Pedreira et al., 2023b). Another study found that fraud or suspected fraud was the main type of misconduct leading to retraction in the USA and Germany, whereas duplication was more common in China and Turkey (Fang et al., 2012). Studies conducted in Brazil (Stavale et al., 2019a, 2019b) and India (Elango et al., 2019) concluded that the most frequent type of scientific misconduct was plagiarism. Amos (2014) found that Italy had the highest ratio of retractions for plagiarism, whereas Finland had the highest ratio of retractions for duplication. With this in mind, it is important to monitor retractions and categorize them by country, since analyzing retractions is a tool for identifying problems related to research misconduct within a particular country.

Previous studies have analysed scientific misconduct in Europe (Marco-Cuenca et al., 2021) or in samples that included European countries (Zhang et al., 2020). Marco-Cuenca et al. analysed only fabrication, falsification and plagiarism in both biomedical and non-biomedical papers, whereas Zhang et al. included only biomedical papers and assigned one retraction reason to each article. Marco-Cuenca et al. (2021) concluded that biomedical research accounts for the majority of retractions due to scientific misconduct. Moreover, fraud in biomedical research can have serious consequences (Stern et al., 2014), because fraudulent results may be incorporated into clinical practice. Retracted articles usually have multiple retraction reasons, and analysing all of them can provide valuable information. However, to the best of our knowledge, no previous study has comprehensively analysed the reasons for retraction in the biomedical field, i.e. taking all of them into account. Therefore, this study analysed retracted biomedical papers by authors affiliated with European institutions from 2000 to 2021 to characterize research misconduct in Europe and identify trends in the countries with the highest number of retractions due to misconduct.

Methods

Study design and data extraction

We conducted a cross-sectional study of papers that had been retracted for any reason from 01/01/2000 through 30/06/2021 and whose corresponding author was affiliated with a European institution, using the Retraction Watch database as our main data source (The Retraction Watch Database). This database has been used in previous research with objectives similar to ours (Marco-Cuenca et al., 2021), and its functioning has been described elsewhere (Candal-Pedreira et al., 2022). In summary, it is the most comprehensive database on retractions, with larger coverage than other databases such as PubMed. Retraction Watch identifies retractions by accessing various sources and assigns causes based on retraction notices, misconduct investigations, and input from PubPeer. The Retraction Watch database was last accessed on 27/09/2021. We included biomedical original articles, reviews, case reports and letters. We excluded expressions of concern and corrections, papers outside the field of biomedical sciences, non-research papers such as commentaries and editorials, and papers not published in English, Spanish or Portuguese.

The country assigned to each included paper was based on the corresponding author’s affiliation. For study purposes, the term “Europe” was construed as encompassing current European Union Member States plus the United Kingdom, Norway, Iceland, Liechtenstein, Serbia, Turkey, Russia, Switzerland, and Belarus.

To restrict the sample to biomedical retractions, we selected those categorized as “Basic Life Sciences (BLS)” and/or “Health Sciences (HSC)” in the “Subject” field of the Retraction Watch database. The “Basic Life Sciences (BLS)” category covers specialized fields such as biology, microbiology, toxicology, environmental sciences, biochemistry, and virology. The “Health Sciences (HSC)” category includes all medical specialties (medical, surgical, and cross-disciplinary specialties such as pathological anatomy and clinical analysis).
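The inclusion criteria described above can be expressed as a single record-level filter. The sketch below is illustrative only: the record layout (dictionary keys such as `retraction_date` and `corresponding_country`), the article-type and language labels, and the assumption that biomedical subjects carry a literal “(BLS)” or “(HSC)” tag in the Subject field are all hypothetical, and country spellings in a real export may differ.

```python
from datetime import date

# Study definition of "Europe": current EU Member States plus nine further countries.
# Spellings are assumptions; real records may use variants (e.g. "Czechia").
EU_MEMBER_STATES = {
    "Austria", "Belgium", "Bulgaria", "Croatia", "Cyprus", "Czech Republic",
    "Denmark", "Estonia", "Finland", "France", "Germany", "Greece", "Hungary",
    "Ireland", "Italy", "Latvia", "Lithuania", "Luxembourg", "Malta",
    "Netherlands", "Poland", "Portugal", "Romania", "Slovakia", "Slovenia",
    "Spain", "Sweden",
}
EUROPE = EU_MEMBER_STATES | {
    "United Kingdom", "Norway", "Iceland", "Liechtenstein", "Serbia",
    "Turkey", "Russia", "Switzerland", "Belarus",
}
# Hypothetical labels for the included article types and publication languages.
INCLUDED_TYPES = {"original article", "review", "case report", "letter"}
INCLUDED_LANGUAGES = {"English", "Spanish", "Portuguese"}
WINDOW_START, WINDOW_END = date(2000, 1, 1), date(2021, 6, 30)


def is_biomedical(subject_field: str) -> bool:
    """True if the subject field carries a BLS or HSC tag (assumed '(BLS)' / '(HSC)' format)."""
    return "(BLS)" in subject_field or "(HSC)" in subject_field


def is_included(record: dict) -> bool:
    """Apply all inclusion criteria to one hypothetical retraction record."""
    return (
        WINDOW_START <= record["retraction_date"] <= WINDOW_END
        and record["corresponding_country"] in EUROPE
        and record["article_type"] in INCLUDED_TYPES
        and record["language"] in INCLUDED_LANGUAGES
        and is_biomedical(record["subject"])
    )


# Hypothetical record retracted in 2015 with a Spanish corresponding author.
example = {
    "retraction_date": date(2015, 3, 2),
    "corresponding_country": "Spain",
    "article_type": "original article",
    "language": "English",
    "subject": "(HSC) Medicine - Oncology;",
}
print(is_included(example))  # True
```

Keeping each criterion as a separate clause makes it easy to count how many records each exclusion rule removes, which is the kind of information a flow diagram such as Fig. 1 reports.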

The following Retraction Watch variables were extracted for each retracted paper included: title of paper; type of paper (original paper, systematic review, meta-analysis, letter, guidelines, case report); date of paper publication and retraction; number of authors; country of corresponding author; corresponding author’s affiliation (university, hospital, research center, health center, industry, independent); and reason for retraction.

In addition, data on the journal of publication (quartile, category, Journal Citation Indicator and impact factor (IF) in the year of publication) were extracted from Journal Citation Reports (Clarivate Analytics).

Reasons for retraction were obtained from the Retraction Watch database and verified against the publishers’ retraction notices; when a notice was not available from the publisher, it was obtained from Retraction Watch. All the reasons listed by Retraction Watch for each paper were taken into account.

To categorize the reasons for retraction, we relied on international documents on scientific integrity and on the practices that different organizations consider to be misconduct or unacceptable/questionable practices (ALLEA, 2023; COPE, 2019). In this study, we considered both the practices included in the traditional definition of research misconduct (fabrication, falsification, and plagiarism) and other practices commonly referred to as unacceptable or questionable. No distinction is made in this study between unacceptable/questionable practices and research misconduct, and we refer to all of them as “research misconduct”. The reasons for retraction were categorized as follows:

- No research misconduct: papers identified as having various types of errors unrelated to research misconduct (all kinds of errors, such as errors in methods or images, cell line contamination, or unreliable data and/or results because of error), as well as retracted papers in which no reason for research misconduct had been explicitly identified.

- Research misconduct, classified into the following categories: (a) falsification/fabrication of images, data and/or results, false peer review, paper mills and manipulation of results; (b) duplication: papers, images or parts of the text published in other journals by the same authors, “salami slicing”, or duplication of data; (c) plagiarism, of complete papers or parts of them, including text and images; (d) problems with authorship and/or affiliation: problems of copyright, dubious authorship, lack of approval by an author or third party, objections by the company or institution, or false authorship or affiliation; (e) unreliable data because of misconduct: unreliable data or images, original data not provided, problems of bias or lack of balance, or problems with data; (f) unreliable results because of misconduct; (g) ethical and legal problems: breach of ethics by the author, conflicts of interest, lack of informed consent, civil or criminal proceedings, or lack of approval by the competent bodies; and (h) any case in which no specific reason was given but the Retraction Watch database stated that the paper had been retracted for “misconduct”.

- Reason not specified: papers retracted without a specific reason stated in the Retraction Watch database or the retraction notice (those categorized as “withdrawn” or “retracted” without further explanation, and those stating “Unreliable data” or “Unreliable results” without any other reason indicating whether or not misconduct was involved).
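The three groups, and in particular the context-dependent handling of “Unreliable data” and “Unreliable results”, can be sketched as a small rule set. The reason labels and mapping below are hypothetical illustrations rather than the exact Retraction Watch vocabulary, and the rule that a paper with any misconduct reason is assigned to the misconduct group is an assumption made for this sketch.

```python
# Hypothetical reason labels mapped to the study's misconduct categories (a)-(h).
MISCONDUCT_REASONS = {
    "Falsification/Fabrication of Data": "(a) falsification/fabrication",
    "Paper Mill": "(a) falsification/fabrication",
    "Fake Peer Review": "(a) falsification/fabrication",
    "Duplication of Article": "(b) duplication",
    "Plagiarism of Text": "(c) plagiarism",
    "False Affiliation": "(d) authorship/affiliation",
    "Lack of Ethical Approval": "(g) ethical/legal",
    "Misconduct": "(h) misconduct, unspecified",
}
ERROR_REASONS = {"Error in Methods", "Error in Image", "Contamination of Cell Lines"}
UNRELIABLE_REASONS = {
    "Unreliable Data": "(e) unreliable data because of misconduct",
    "Unreliable Results": "(f) unreliable results because of misconduct",
}


def categorize(reasons: list[str]) -> tuple[str, list[str]]:
    """Return (paper-level group, category of each reason) for one retracted paper."""
    has_misconduct = any(r in MISCONDUCT_REASONS for r in reasons)
    has_error = any(r in ERROR_REASONS for r in reasons)
    categories = []
    for r in reasons:
        if r in MISCONDUCT_REASONS:
            categories.append(MISCONDUCT_REASONS[r])
        elif r in UNRELIABLE_REASONS:
            # "Unreliable data/results" is classified using the paper's other reasons.
            if has_misconduct:
                categories.append(UNRELIABLE_REASONS[r])
            elif has_error:
                categories.append("no research misconduct")
            else:
                categories.append("reason not specified")
        elif r in ERROR_REASONS:
            categories.append("no research misconduct")
        else:
            categories.append("reason not specified")  # e.g. a bare "Withdrawn"
    # Assumption: any misconduct reason places the whole paper in the misconduct group.
    if has_misconduct:
        group = "research misconduct"
    elif has_error:
        group = "no research misconduct"
    else:
        group = "reason not specified"
    return group, categories


print(categorize(["Duplication of Article", "Unreliable Data"]))
print(categorize(["Unreliable Data"]))
```

Encoding the rules this way makes explicit that the same label can fall into three different groups depending on what else the retraction notice says.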

We excluded two articles whose only reason for retraction was “miscommunication by the author, company, institution or journal”, because they could not be assigned to any of the established groups.

Papers may be associated with more than one reason for retraction; the Retraction Watch database records every reason associated with each paper. We therefore took each of these reasons into account independently and used the total number of reasons as the unit of measure for our analysis.
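Using reasons rather than papers as the unit means that each reason contributes one count and that percentages are taken over the total number of reasons, not the number of papers. A minimal sketch with hypothetical data follows; with the study’s own figures, 542 duplication reasons out of 2466 reasons gives 542/2466 ≈ 22.0%.

```python
from collections import Counter


def reason_distribution(papers: list[list[str]]) -> tuple[dict[str, tuple[int, float]], int]:
    """Count each reason independently; percentages use the total number of reasons."""
    counts = Counter(reason for reasons in papers for reason in reasons)
    total = sum(counts.values())
    distribution = {reason: (n, round(100 * n / total, 1)) for reason, n in counts.most_common()}
    return distribution, total


# Hypothetical: four retracted papers contributing seven reasons in total.
papers = [
    ["duplication", "plagiarism"],
    ["duplication"],
    ["falsification/fabrication", "unreliable data", "duplication"],
    ["misconduct (unspecified)"],
]
distribution, total = reason_distribution(papers)
print(total)                        # 7
print(distribution["duplication"])  # (3, 42.9)
```

Note that duplication accounts for 3 of 7 reasons (42.9%) even though it appears in 3 of only 4 papers; the two denominators answer different questions.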

Statistical analysis

First, we performed a descriptive analysis of biomedical retractions in Europe, broken down by cause of retraction (honest research error and research misconduct). Categorical variables were expressed as absolute and relative frequencies, and quantitative variables as median and interquartile range (25th to 75th percentile). The chi-square test was used to assess differences between groups.
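As a dependency-free sketch of the between-group comparison, the Pearson chi-square test of independence (here without continuity correction) can be computed directly from a contingency table; the p-value uses the closed-form chi-square survival function that exists for integer degrees of freedom. The counts in the example table are hypothetical, and this is not necessarily identical to the settings used in the Stata analysis. With SciPy available, `scipy.stats.chi2_contingency(table, correction=False)` computes the same uncorrected test.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X >= x) of a chi-square distribution with integer df >= 1."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{k=0}^{df/2 - 1} (x/2)^k / k!
        term = total = 1.0
        for k in range(1, df // 2):
            term *= (x / 2) / k
            total += term
        return math.exp(-x / 2) * total
    # Odd df: erfc(sqrt(x/2)) plus a finite sum of correction terms.
    root = math.sqrt(x)
    total = math.erfc(root / math.sqrt(2))
    term = math.sqrt(2 / math.pi) * math.exp(-x / 2) * root
    for j in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * j + 1)
    return total


def chi_square_independence(table: list[list[int]]) -> tuple[float, int, float]:
    """Pearson chi-square test (no continuity correction) on an r x c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(row_totals) - 1) * (len(col_totals) - 1)
    return stat, df, chi2_sf(stat, df)


# Hypothetical counts: rows = misconduct / honest error; columns = Q1 / Q2 / Q3-Q4 journals.
table = [[120, 80, 60], [20, 25, 30]]
stat, df, p = chi_square_independence(table)
print(f"chi2 = {stat:.2f}, df = {df}, p = {p:.4f}")
```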

We then excluded retractions due to honest research error and those in which no specific reason was given, focusing on retractions due to research misconduct. To compare countries and years for a given reason for retraction, we calculated rates by dividing the number of papers retracted for that reason by the total number of papers published in the same country and year. The total number of papers for each country and year was obtained from PubMed, filtering the search by individual country and year of the study period. To characterize the trend in retractions for research misconduct, both for European countries overall and for the countries with the most retractions, a cross-sectional analysis was performed every 5 years. We calculated the number of papers ascribed to each of the main reasons and expressed the results relative to the total number of papers as the common denominator. The Mann–Kendall test was used to analyze trends in retractions in European countries overall, by main reason for retraction. For year-to-year comparisons among countries and by main reason, the trend analysis was limited to years up to 2020, because data for 2021 covered only part of the year.
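The rate definition and the Mann–Kendall trend test can be sketched as follows. The yearly counts and PubMed denominators are hypothetical, and the test shown is the textbook two-sided version (normal approximation, tie-corrected variance, continuity correction), which is an assumption about, not a reproduction of, the routine used in Stata.

```python
import math
from collections import Counter


def rate_per_100k(retracted: int, published: int) -> float:
    """Papers retracted for one reason per 100,000 papers from the same country and year."""
    return retracted * 100_000 / published


def mann_kendall(series: list[float]) -> tuple[int, float, float]:
    """Two-sided Mann-Kendall trend test; returns (S, Z, p) using the normal approximation."""
    n = len(series)
    # S sums the signs of all pairwise later-minus-earlier differences.
    s = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = series[j] - series[i]
            s += (diff > 0) - (diff < 0)
    # Variance of S under the no-trend hypothesis, corrected for tied values.
    tie_term = sum(t * (t - 1) * (2 * t + 5) for t in Counter(series).values())
    var_s = (n * (n - 1) * (2 * n + 5) - tie_term) / 18
    if s > 0:
        z = (s - 1) / math.sqrt(var_s)
    elif s < 0:
        z = (s + 1) / math.sqrt(var_s)
    else:
        z = 0.0
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value from the standard normal
    return s, z, p


# Hypothetical yearly retractions for one reason and PubMed totals for the same years.
retracted = [2, 3, 5, 4, 7, 9]
published = [80_000, 85_000, 90_000, 95_000, 100_000, 105_000]
rates = [rate_per_100k(r, n) for r, n in zip(retracted, published)]
s, z, p = mann_kendall(rates)
print(f"S = {s}, Z = {z:.2f}, p = {p:.3f}")
```

Because the test uses only the signs of pairwise differences, it detects a monotonic trend in the rates without assuming linearity, which suits short annual series such as these.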

We described the trend in misconduct-related causes of retraction across the study period, analyzed the main variations between countries with at least 10 retractions, and examined the main distinguishing patterns according to the corresponding author’s country of affiliation. The Results section provides detailed information for the four countries with the highest number of retractions; data for the remaining countries with at least 10 retractions are available in the supplementary material.
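Identifying the leading misconduct reason for each country in the five-yearly snapshots (2000, 2005, 2010, 2015 and 2020) can be sketched as below. The tuple-based input format, the hypothetical function name `leading_reasons`, and the choice to apply the 10-retraction threshold to all of a country’s retracted papers over the whole period are assumptions for illustration; tied reasons are reported together, as in the Results.

```python
from collections import Counter, defaultdict

SNAPSHOT_YEARS = (2000, 2005, 2010, 2015, 2020)


def leading_reasons(reasons, papers_published, min_retractions=10):
    """
    reasons: iterable of (country, year, paper_id, category), one tuple per retraction reason.
    papers_published: dict mapping (country, year) to the total papers found in PubMed.
    Returns {country: {year: (leading categories, rate per 100,000)}} for snapshot years.
    """
    papers_by_country = defaultdict(set)
    counts = defaultdict(Counter)
    for country, year, paper_id, category in reasons:
        papers_by_country[country].add(paper_id)
        counts[(country, year)][category] += 1

    result = {}
    for country, paper_ids in papers_by_country.items():
        # Assumption: threshold applied to all retracted papers of the country in the period.
        if len(paper_ids) < min_retractions:
            continue
        result[country] = {}
        for year in SNAPSHOT_YEARS:
            year_counts = counts.get((country, year))
            if not year_counts:
                continue
            top = max(year_counts.values())
            leaders = sorted(cat for cat, n in year_counts.items() if n == top)  # keep ties
            rate = top * 100_000 / papers_published[(country, year)]
            result[country][year] = (leaders, rate)
    return result


# Hypothetical data: country "A" has 10 retracted papers, country "B" only one.
reasons = [("A", 2020, i, "duplication") for i in range(6)] + [
    ("A", 2020, 6, "plagiarism"),
    ("A", 2015, 7, "plagiarism"),
    ("A", 2015, 8, "duplication"),
    ("A", 2016, 9, "plagiarism"),
    ("B", 2020, 100, "plagiarism"),
]
published = {("A", 2020): 60_000, ("A", 2015): 50_000}
print(leading_reasons(reasons, published))
```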

All analyses were performed using Microsoft Excel 2016 and Stata v.17.

Ethical aspects

The manuscript was written following the Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) reporting guideline for cross-sectional studies. The study did not require institutional review board approval because it was based on publicly available information and involved no patient records.

Results

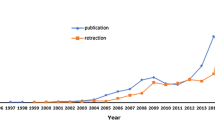

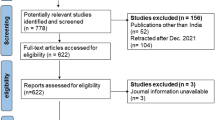

We included 2069 papers retracted from 01/01/2000 to 30/06/2021 whose corresponding authors were affiliated with European institutions, identified in the Retraction Watch database (Fig. 1). The retraction rate was 10.7 per 100,000 publications in 2000 and 44.8 per 100,000 publications in 2020. Overall, 1383 (66.8%) retracted papers involved some type of research misconduct, while 322 (15.6%) were retracted due to honest research error. The two groups differed in type of publication (p < 0.001), journal quartile (p = 0.002), number of categories in which the journal is indexed (p < 0.001) and number of authors (p < 0.001) (Table 1).

Causes for retractions due to research misconduct

Among the 1383 papers retracted because of research misconduct, we found 2466 reasons for retraction (an article may have more than one cause for retraction). Among them, the most frequent reasons were duplication (n = 542, 22.0%) and misconduct in which no subtype was specified (n = 386, 15.7%) (Table 2).

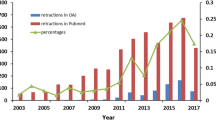

The most common causes of research misconduct also varied over time (Fig. 2). In 2000, the main causes were duplication, problems relating to authorship and affiliation, and ethical and legal problems (2.5 per 100,000 publications each). Duplication was the main cause in 2010, 2015 and 2020 (7.6, 15.3 and 8.6 per 100,000 publications, respectively). Across the period 2000 through 2020, falsification and/or fabrication increased significantly (p = 0.02), from 0.8 to 5.9 per 100,000 publications. Plagiarism also increased significantly (p = 0.03), from 0.8 per 100,000 publications in 2000 to 27.2 per 100,000 publications in 2010, followed by a decline until 2020. In 2020, the main causes of misconduct-related retractions were duplication (8.6 per 100,000 publications) and unreliable data because of misconduct (8.4 per 100,000 publications).

Research misconduct by Country

Four countries accounted for the largest number of retracted papers during the study period: the United Kingdom, Germany, Italy, and Spain (Table 3). In the United Kingdom, falsification and/or fabrication was the main cause of misconduct in 2000, 2005, 2010 and 2020 (9.7, 3.7, 4.6 and 6.2 per 100,000 publications, respectively), whereas in 2015 the main cause was ethical and legal problems (8.8 per 100,000 publications). In Germany, duplication was the main reason for retraction in 2000 (4.3 per 100,000 publications). In 2010 and 2015, 14.1 and 11.2 articles per 100,000 publications, respectively, were retracted because of falsification and/or fabrication, whereas in 2020 this figure fell to 0; in 2020, duplication and problems relating to authorship and affiliation were the main causes (2.1 per 100,000 publications each). In Spain, falsification and/or fabrication and ethical and legal problems were the main causes of retraction in 2005 and 2010, respectively. In subsequent years the main cause was duplication, rising from 5.0 articles per 100,000 publications in 2015 to 13.2 in 2020. In Italy, retractions due to plagiarism reached 16.7 per 100,000 publications in 2010, and in 2020 the main cause of misconduct was duplication, with 15.6 articles per 100,000 publications.

Supplementary Table 1 provides this information for all years of the series for countries with 10 or more retractions.

Discussion

Our study found that research misconduct was the primary cause of paper retraction in Europe from 2000 to 2021, but that the specific reasons varied over time and by country. To our knowledge, this is the first study to systematically analyze research misconduct across European institutions using the expanded definition of misconduct, beyond FFP, adopted in this type of study.

Our findings indicate that retractions due to research misconduct have become more frequent in Europe over the last two decades, even after accounting for the increase in the number of papers published. The reasons for misconduct-related retraction also changed over time in all countries studied. Initially, copyright and authorship issues were the primary causes, but their frequency decreased markedly by the end of the period, possibly due to the implementation of authorship control systems and increased researcher awareness.

Since 2010, duplication has been the main reason for misconduct-related retraction in Europe, although the prevalence of plagiarism and duplication may be underestimated. These results agree with other studies reporting a higher prevalence of plagiarism than of other types of misconduct (Marco-Cuenca et al., 2021; Mousavi & Abdollahi, 2020; Stavale et al., 2019a, 2019b). While the introduction of duplication-detection software in the early 2000s has been credited with increasing the identification of plagiarism (Van Noorden, 2011), its continued use may have contributed to the subsequent decline. In the same vein, lack of access to these tools in low-income countries may explain why copyright and plagiarism issues remain the primary reason for misconduct-related retractions in those regions (Rossouw et al., 2020). Plagiarism control and surveillance systems need to stay one step ahead. Tools for detecting plagiarism and duplication should be used routinely to screen scientific papers, as well as dissertations by university undergraduate and postgraduate students (Bordewijk et al., 2021; Fischhoff et al., 2021; Mousavi & Abdollahi, 2020).

It is a matter of concern that, in addition to duplication, the use of unreliable data has been identified as a growing threat in some countries, such as Italy (Capodici et al., 2022; Perez-Neri et al., 2022; Rapani et al., 2020). The proportion of papers retracted for unreliable data increased sharply in Italy in 2020. This rise may be due to journals using the phrasing “unreliable data” or “invalid data” when they lack the resources to investigate, or to the mass retractions of paper-mill publications that have taken place in recent years (The Retraction Watch Database).

Country-level analysis of the four countries with the highest number of retractions reveals differing retraction patterns that have not been previously described. Falsification and/or fabrication is the main reason in the United Kingdom, whereas in Spain and Italy duplication ranks as the leading cause of research misconduct. This may suggest a difference between southern and northern/central European countries. These results are in line with other studies conducted in European countries. For example, a study undertaken in Scandinavian countries found that the proportion of cases involving fabrication and falsification exceeded the proportion involving plagiarism (Hofmann et al., 2020). Another study conducted in Belgium found a prevalence of 40% for guest authorship and 4% for plagiarism (Godecharle et al., 2018). In Portugal, 12.9% of retractions were due to plagiarism and duplication (Candal-Pedreira et al., 2023b).

The scientific literature offers various explanations for between-country differences in research misconduct. According to Fanelli et al. (2015), authors from Germany, Australia, China, and South Korea are more likely to have their papers retracted than authors from the USA, whereas authors from France and The Netherlands are less likely. This may be due to national-level policies introduced to manage misconduct: countries with such policies and structures to supervise misconduct tend to have fewer retractions. Europe would therefore benefit from a central scientific integrity office to which national offices could refer cases (Candal-Pedreira et al., 2021).

Furthermore, the sociocultural context may also be a determinant of unethical research conduct (Asplund, 2019; Aubert Bonn et al., 2017). It is possible that southern European countries lead in retractions due to duplication and plagiarism because researchers there tend not to perceive plagiarism as a form of research misconduct (Fanelli, 2009). In addition, previous studies have observed that being in the early stages of a research career predicts both retraction and engagement in misconduct (Asplund, 2019; Aubert Bonn et al., 2017; Fanelli et al., 2015; Gopalakrishna et al., 2022). This suggests that education in scientific integrity may be inadequate and should be reinforced, especially for undergraduate and postgraduate students (El Bairi et al., 2024; Ljubenkovic et al., 2021).

The principal strength of this study lies in the sample size achieved by using the Retraction Watch database as its data source (Kocyigit & Akyol, 2022; Marco-Cuenca et al., 2021). This database contains over 50,000 retractions and, thanks to its high coverage, is currently the best source of information on retractions; we therefore expect the number of retracted papers missed by this study to be small. In addition, this study considers all the reasons identified by Retraction Watch as causes of retraction, which in our opinion reduces the risk of underestimating any reason and thus reflects the problem more accurately.

This study also has limitations. Some retracted papers could not be accessed, a frequent limitation in this type of study (Bordewijk et al., 2021; Frias-Navarro et al., 2021; Marco-Cuenca et al., 2021); as recommended by the Committee on Publication Ethics (COPE, 2019), retracted papers should not be removed but rather identified and flagged as retracted. A further limitation is that misconduct was attributed to a country on the basis of the corresponding author’s affiliation, which may overrepresent misconduct associated with European institutions in articles with authors from several countries. Finally, detailed information could not be provided for countries with fewer than 10 retractions because of the limited sample size, which may limit the interpretation of our results for those countries.

In conclusion, this study shows that the main reason for retraction in Europe is research misconduct, and that the causes of such misconduct have changed over the last 20 years. Currently, duplication and unreliable data are the main reasons for misconduct-related retraction in Europe. Furthermore, the causes of research misconduct differ between the European countries with the highest number of retractions. In view of this, the causes of research misconduct should be analyzed individually within the context of each country. Such information is essential for implementing mechanisms of control, surveillance and discipline that ensure scientific research meets the highest quality standards.

Data availability

The data that support the findings of this study are available from Retraction Watch. Restrictions apply to the availability of these data, which were used under contract license for this study.

References

ALLEA. (2023). The European Code of Conduct for Research Integrity (Revised ed.). https://allea.org/wp-content/uploads/2023/06/European-Code-of-Conduct-Revised-Edition-2023.pdf

Amos, K. A. (2014). The ethics of scholarly publishing: Exploring differences in plagiarism and duplicate publication across nations. Journal of the Medical Library Association, 102(2), 87–91. https://doi.org/10.3163/1536-5050.102.2.005

Asplund, K. (2019). [New Swedish legislation on research misconduct from 2020]. Lakartidningen, 116. https://www.ncbi.nlm.nih.gov/pubmed/31846052 (Oredlighet i forskning - regleras i lag fran arsskiftet - Lagen okar rattssakerheten men tacker inte alla omoraliska beteenden i forskningen.)

Aubert Bonn, N., Godecharle, S., & Dierickx, K. (2017). European Universities’ guidance on research integrity and misconduct. Journal of Empirical Research on Human Research Ethics, 12(1), 33–44. https://doi.org/10.1177/1556264616688980

Bordewijk, E. M., Li, W., van Eekelen, R., Wang, R., Showell, M., Mol, B. W., & van Wely, M. (2021). Methods to assess research misconduct in health-related research: A scoping review. Journal of Clinical Epidemiology, 136, 189–202. https://doi.org/10.1016/j.jclinepi.2021.05.012

Campos-Varela, I., & Ruano-Ravina, A. (2019). Misconduct as the main cause for retraction. A descriptive study of retracted publications and their authors. Gaceta Sanitaria, 33(4), 356–360. https://doi.org/10.1016/j.gaceta.2018.01.009

Candal-Pedreira, C., Ghaddar, A., Perez-Rios, M., Varela-Lema, L., Alvarez-Dardet, C., & Ruano-Ravina, A. (2023a). Scientific misconduct: A cross-sectional study of the perceptions, attitudes and experiences of Spanish researchers. Accountability in Research. https://doi.org/10.1080/08989621.2023.2284965

Candal-Pedreira, C., Ross, J. S., Ruano-Ravina, A., Egilman, D. S., Fernandez, E., & Perez-Rios, M. (2022). Retracted papers originating from paper mills: Cross sectional study. BMJ, 379, e071517. https://doi.org/10.1136/bmj-2022-071517

Candal-Pedreira, C., Ruano-Ravina, A., & Perez-Rios, M. (2021). Should the European Union have an office of research integrity? European Journal of Internal Medicine, 94, 1–3. https://doi.org/10.1016/j.ejim.2021.07.009

Candal-Pedreira, C., Ruano-Ravina, A., Rey-Brandariz, J., Mourino, N., Ravara, S., Aguiar, P., & Perez-Rios, M. (2023b). Evolution and characterization of health sciences paper retractions in Brazil and Portugal. Accountability in Research, 30(8), 725–742. https://doi.org/10.1080/08989621.2022.2080549

Capodici, A., Salussolia, A., Sanmarchi, F., Gori, D., & Golinelli, D. (2022). Biased, wrong and counterfeited evidences published during the COVID-19 pandemic, a systematic review of retracted COVID-19 papers. Quality & Quantity. https://doi.org/10.1007/s11135-022-01587-3

Committee on Publication Ethics. (2019). COPE Guidelines: Retraction Guidelines. https://publicationethics.org/retraction-guidelines

El Bairi, K., El Kadmiri, N., & Fourtassi, M. (2024). Exploring scientific misconduct in Morocco based on an analysis of plagiarism perception in a cohort of 1220 researchers and students. Accountability in Research, 31(2), 138–157. https://doi.org/10.1080/08989621.2022.2110866

Elango, B., Kozak, M., & Rajendran, P. (2019). Analysis of retractions in Indian science. Scientometrics, 119(2), 1081–1094. https://doi.org/10.1007/s11192-019-03079-y

Fanelli, D. (2009). How many scientists fabricate and falsify research? A systematic review and meta-analysis of survey data. PLoS ONE, 4(5), e5738. https://doi.org/10.1371/journal.pone.0005738

Fanelli, D., Costas, R., & Lariviere, V. (2015). Misconduct policies, academic culture and career stage, not gender or pressures to publish, affect scientific integrity. PLoS ONE, 10(6), e0127556. https://doi.org/10.1371/journal.pone.0127556

Fang, F. C., Steen, R. G., & Casadevall, A. (2012). Misconduct accounts for the majority of retracted scientific publications. Proceedings of the National Academy of Sciences of the United States of America, 109(42), 17028–17033. https://doi.org/10.1073/PNAS.1212247109

Fischhoff, B., Dewitt, B., Sahlin, N. E., & Davis, A. (2021). A secure procedure for early career scientists to report apparent misconduct. Life Sciences, Society and Policy, 17(1), 2. https://doi.org/10.1186/s40504-020-00110-6

Frias-Navarro, D., Pascual-Soler, M., Perezgonzalez, J., Monterde, I. B. H., & Pascual-Llobell, J. (2021). Spanish scientists’ opinion about science and researcher behavior. The Spanish Journal of Psychology, 24, e7. https://doi.org/10.1017/SJP.2020.59

Godecharle, S., Fieuws, S., Nemery, B., & Dierickx, K. (2018). Scientists still behaving badly? A survey within industry and universities. Science and Engineering Ethics, 24(6), 1697–1717. https://doi.org/10.1007/s11948-017-9957-4

Godecharle, S., Nemery, B., & Dierickx, K. (2014). Heterogeneity in European research integrity guidance: Relying on values or norms? Journal of Empirical Research on Human Research Ethics, 9(3), 79–90. https://doi.org/10.1177/1556264614540594

Gopalakrishna, G., Ter Riet, G., Vink, G., Stoop, I., Wicherts, J. M., & Bouter, L. M. (2022). Prevalence of questionable research practices, research misconduct and their potential explanatory factors: A survey among academic researchers in The Netherlands. PLoS ONE, 17(2), e0263023. https://doi.org/10.1371/journal.pone.0263023

Grieneisen, M. L., & Zhang, M. (2012). A comprehensive survey of retracted articles from the scholarly literature. PLoS ONE, 7(10), e44118. https://doi.org/10.1371/journal.pone.0044118

Hofmann, B., Bredahl Jensen, L., Eriksen, M. B., Helgesson, G., Juth, N., & Holm, S. (2020). Research integrity among PhD students at the faculty of medicine: A comparison of three Scandinavian universities. Journal of Empirical Research on Human Research Ethics, 15(4), 320–329. https://doi.org/10.1177/1556264620929230

ICMJE. (2022). Recommendations for the conduct, reporting, editing, and publication of scholarly work in medical journals. Corrections, retractions, republications and version control.

Kaiser, M., Drivdal, L., Hjellbrekke, J., Ingierd, H., & Rekdal, O. B. (2021). Questionable research practices and misconduct among Norwegian researchers. Science and Engineering Ethics, 28(1), 2. https://doi.org/10.1007/s11948-021-00351-4

Kocyigit, B. F., & Akyol, A. (2022). Analysis of retracted publications in the biomedical literature from Turkey. Journal of Korean Medical Science, 37(18), e142. https://doi.org/10.3346/jkms.2022.37.e142

Li, D., & Cornelis, G. (2020). Defining and handling research misconduct: A comparison between Chinese and European institutional policies. Journal of Empirical Research on Human Research Ethics, 15(4), 302–319. https://doi.org/10.1177/1556264620927628

Ljubenkovic, A. M., Borovecki, A., Curkovic, M., Hofmann, B., & Holm, S. (2021). Survey on the research misconduct and questionable research practices of medical students, PhD students, and supervisors at the Zagreb School of Medicine in Croatia. Journal of Empirical Research on Human Research Ethics, 16(4), 435–449. https://doi.org/10.1177/15562646211033727

Mabou Tagne, A., Cassina, N., Furgiuele, A., Storelli, E., Cosentino, M., & Marino, F. (2020). Perceptions and attitudes about research integrity and misconduct: A survey among young biomedical researchers in Italy. Journal of Academic Ethics, 18(2), 193–205. https://doi.org/10.1007/s10805-020-09359-0

Marco-Cuenca, G., Salvador-Oliván, J., & Arquero-Avilés, R. (2021). Fraud in scientific publications in the European Union. An analysis through their retractions. Scientometrics. https://doi.org/10.1007/s11192-021-03977-0

Mousavi, T., & Abdollahi, M. (2020). A review of the current concerns about misconduct in medical sciences publications and the consequences. DARU Journal of Pharmaceutical Sciences, 28(1), 359–369. https://doi.org/10.1007/s40199-020-00332-1

Nath, S. B., Marcus, S. C., & Druss, B. G. (2006). Retractions in the research literature: Misconduct or mistakes? Medical Journal of Australia, 185(3), 152–154. https://doi.org/10.5694/j.1326-5377.2006.tb00504.x

National Academies of Sciences, Engineering, and Medicine. (2017). Fostering integrity in research. The National Academies Press.

Perez-Neri, I., Pineda, C., & Sandoval, H. (2022). Threats to scholarly research integrity arising from paper mills: A rapid scoping review. Clinical Rheumatology, 41(7), 2241–2248. https://doi.org/10.1007/s10067-022-06198-9

Rapani, A., Lombardi, T., Berton, F., Del Lupo, V., Di Lenarda, R., & Stacchi, C. (2020). Retracted publications and their citation in dental literature: A systematic review. Clinical and Experimental Dental Research, 6(4), 383–390. https://doi.org/10.1002/cre2.292

Resnik, D. B. (2019). Is it time to revise the definition of research misconduct? Accountability in Research, 26(2), 123–137. https://doi.org/10.1080/08989621.2019.1570156

Resnik, D. B., Neal, T., Raymond, A., & Kissling, G. E. (2015). Research misconduct definitions adopted by US research institutions. Accountability in Research, 22(1), 14–21. https://doi.org/10.1080/08989621.2014.891943

Retraction Watch. (n.d.). Retraction Watch: Tracking retractions as a window into the scientific process. https://retractionwatch.com/

Rossouw, T. M., Matsau, L., & van Zyl, C. (2020). An analysis of retracted articles with authors or co-authors from the African Region: Possible implications for training and awareness raising. Journal of Empirical Research on Human Research Ethics, 15(5), 478–493. https://doi.org/10.1177/1556264620955110

Stavale, R., Ferreira, G. I., Galvao, J. A. M., Zicker, F., Novaes, M., Oliveira, C. M., & Guilhem, D. (2019a). Research misconduct in health and life sciences research: A systematic review of retracted literature from Brazilian institutions. PLoS ONE, 14(4), e0214272. https://doi.org/10.1371/journal.pone.0214272

Stavale, R., Ferreira, G. I., Galvao, J. A. M., Zicker, F., Novaes, M. R. C. G., de Oliveira, C. M., & Guilhem, D. (2019b). Research misconduct in health and life sciences research: A systematic review of retracted literature from Brazilian institutions. PLoS ONE. https://doi.org/10.1371/journal.pone.0214272

Stern, A. M., Casadevall, A., Steen, R. G., & Fang, F. C. (2014). Financial costs and personal consequences of research misconduct resulting in retracted publications. eLife, 3, e02956. https://doi.org/10.7554/eLife.02956

Van Noorden, R. (2011). Science publishing: The trouble with retractions. Nature, 478(7367), 26–28. https://doi.org/10.1038/478026a

Zhang, Q., Abraham, J., & Fu, H. (2020). Collaboration and its influence on retraction based on retracted publications during 1978–2017. Scientometrics, 125, 19. https://doi.org/10.1007/s11192-020-03636-w

Acknowledgements

We would like to thank Retraction Watch for making their data available to the research group, and Ivan Oransky and Alison Abritis for their comments on this manuscript.

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature. This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Author information

Contributions

Fabian Freijedo-Farinas was responsible for methodology, data curation, formal analysis, and original draft preparation. Alberto Ruano-Ravina was responsible for conceptualization, methodology, review, editing, and supervision. Mónica Pérez-Ríos was responsible for methodology, review, and editing. Joseph S. Ross was responsible for methodology, review, and editing. Cristina Candal-Pedreira was responsible for conceptualization, methodology, review, and editing.

Ethics declarations

Conflict of interest

Dr. Ross currently receives research support through Yale University from Johnson and Johnson to develop methods of clinical trial data sharing, from the Medical Device Innovation Consortium as part of the National Evaluation System for Health Technology (NEST), from the Food and Drug Administration for the Yale-Mayo Clinic Center for Excellence in Regulatory Science and Innovation (CERSI) program (U01FD005938), from the Agency for Healthcare Research and Quality (R01HS022882), from the National Heart, Lung and Blood Institute of the National Institutes of Health (NIH) (R01HS025164, R01HL144644), and from Arnold Ventures; in addition, Dr. Ross is an expert witness at the request of Relator’s attorneys, the Greene Law Firm, in a qui tam suit alleging violations of the False Claims Act and Anti-Kickback Statute against Biogen Inc. that settled in September 2022. No other authors declared potential conflicts of interest regarding this publication.

Ethical approval

No ethics committee approval was necessary as publicly accessible materials were used without involving human subjects.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Freijedo-Farinas, F., Ruano-Ravina, A., Pérez-Ríos, M. et al. Biomedical retractions due to misconduct in Europe: characterization and trends in the last 20 years. Scientometrics 129, 2867–2882 (2024). https://doi.org/10.1007/s11192-024-04992-7

DOI: https://doi.org/10.1007/s11192-024-04992-7