Abstract

In this study, we present an objective, replicable methodology to identify trendy journals in any consolidated discipline. Trendy journals are those most read by authors who are currently publishing within the scope of the discipline. Trendy journal lists differ from consolidated lists of top core journals; the latter are very stable over time, mainly reflecting reputational factors, whereas the former reveal current influences not yet captured by studies based on bibliometric indicators or expert surveys. We apply our methodology to identify trendy journals among 167 titles indexed in the Web of Science category of the Information Science & Library Science (LIS) research area. Our list of trendy journals represents the most influential journals nowadays in the LIS discipline, challenging to some extent the core LIS journal list and journal category lists ordered by citations (e.g., by the Journal Impact Factor). Our results show that Scientometrics is the journal that bears the most influence on current production when not corrected for journal size and that Quantitative Science Studies—a small, relatively new journal not yet assigned a Journal Impact Factor nor present on any list of core LIS journals—is the journal that has shown the most significant recent influence when controlling for size.

Introduction

Journals are the main channel for disseminating research. Staying abreast of influential journals in a field of knowledge is an ongoing concern for researchers. Lists of such journals provide authors with guidance on reading selection and publishing options. In the LIS discipline, a ranking of influential core journals has been consolidated (Nixon, 2014), which is indicative of the maturity of the discipline.

However, in interdisciplinary fields of knowledge such as LIS, the lists of core journals, while valuable, are incomplete sources of information. This is because the methodologies they are compiled with preclude identifying which journals are truly influential at present and to what extent their influence remains exclusively within the discipline. This is especially important nowadays because recent studies indicate that LIS is under reorientation (Hsiao & Chen, 2020; Jarvelin & Vakkari, 2021; Ma & Lund, 2020). The discipline is witnessing the steadily increasing usage of other influential sources not included in the core journals list, a sign that the hierarchization established by the most recent LIS journal rankings is being questioned. From this evidence emerges our main research question: What are researchers in the LIS discipline currently reading—what is influencing them?

Here, we examine the citations (references) from publications indexed under the LIS category between January 2021 and March 2022 to determine which journals are being read by researchers in the discipline. We assume that the references/cited works have been read and that their reading has not been superficial, i.e., that these references/works have influenced the authors. Our study provides insight into which journals influence the most recently published papers, enables the creation of a ranking of trendy journals, and therefore, challenges the status quo imposed by the top core LIS journals. We also compare our list of trendy journals with other lists and studies to see what our selection of journals adds to existing lists.

Articles currently being published are often based on recently published studies—but not always. Some current articles are based on studies published some time ago. Therefore, we also analyze how the influence of journals evolves according to the time at which their production is published.

In summary, this study attempts to answer the following research questions: Which journals are most influencing the articles that LIS authors are currently publishing? Is our trendy journal list redundant? How does a journal’s influence on current papers change over time?

Literature review

The literature uses different terms to designate the most influential journals of a discipline or subject area: “category journal list”, “core journal list”, “top journals”, “hot journals”, “leading journals”, and “reference journals”, among others. In this section, we review this literature in the context of LIS (see footnote 1).

Category journal list

For a given discipline, the broadest set of influential journals is the category’s journal list. The most commonly used lists are the JCR (Clarivate Analytics) list and the Scopus list. In April 2022, Clarivate listed 86 journals in the Information Science & Library Science research area for the SSCI edition, and 78 for the ESCI edition. Scopus contains 317 journals in the Library and Information Sciences subject area.

These lists reduce the universe of journals (according to Kherde, 2003, there are over 1300 journals in LIS) to a smaller list, but they are still large and often contain journals that, while generally influential (e.g., with a high JIF [Journal Impact Factor] within the category), are not discipline-specific, which calls into question their impact on the discipline. For example, the Journal of Knowledge Management is the 3rd journal by JIF in the current JCR edition, and the 6th by JCI (Journal Citation Indicator), yet some authors do not consider it a LIS journal (e.g., Taylor & Willett, 2017).

Some researchers suggest identifying the most influential LIS journals based on those best positioned by citations in the LIS categories of JCR, Scopus, or Google Scholar. According to Winkler and Kiszl (2020, p. 4), this logic accounts for the fact that “generally speaking, the criterion for choosing the best journals and articles is in many cases—just like with the SJR and the JCR—partially or fully based on their citation metrics.” For example, Ahmad et al. (2019) used ISI- and Scopus-indexed journals to examine the top LIS journals using the bibliometric indicators JIF, ES, SJR, CiteScore, and SNIP and proposed a Journal Quality Index (JQI) to produce a single ranking. Jan and Hussain (2021) identified the top 20 LIS journals from the Google Scholar matrix on the basis of citations. Winkler and Kiszl (2020) compiled their list of 32 LIS journals based on two criteria: those that appeared at least once among the top 5 journals in the top quarter of the SJR quartile list between 2013 and 2017; and those that had an SJR Q1 rating in all five years between 2013 and 2017.

Core journal list

Core journal lists were created in response to the need to have a solid base of journals in a discipline for its academic and professional consolidation. A core journal has been defined as “a scholarly journal that reports original research of such significance to the academic community that the publication is considered indispensable to students, teachers, and researchers in the discipline or subdiscipline. For this reason, it is included in the serials collections of academic libraries supporting curriculum and research in the field” (ODLIS-Online Dictionary for Library and Information Science).

Core journal lists usually are compiled from the journals of a given discipline, using either bibliometric indicators or expert judgments, although other methods are often employed (Nisonger, 2007). Some lists rank the journals according to their importance, while others just list them in no particular order (Nisonger, 2007). When core journals are ranked using a bibliometric indicator or by the number of votes received, those appearing in the first positions are deemed top journals, and it is typical to select the top 10.

The LIS discipline has a long tradition of core journal lists, with as many as 178 rankings or ratings issued in the last century alone (Nisonger, 1999). Nixon (2014) conducted a comprehensive review of the methods used to develop the list of core journals in the LIS discipline. According to her, expert opinion and citation-based approaches are the dominant ones.

One of the most recognized and often replicated opinion-based studies is Kohl and Davis’s (1985). These authors analyzed 31 core LIS journals and surveyed the deans of all North American library schools with accredited programs and the directors of the Association of Research Libraries. Each journal was rated on a 5-point Likert scale in terms of value for tenure and promotion. They observed differences in the rankings achieved for each group and significant variation within each group in a third of the journals. These authors obtained the following list of the top 5 journals identified by both groups: College & Research Libraries; Library Quarterly; Library Trends; Journal of the American Society for Information Science and Technology; Journal of Education for Librarianship; and Journal of Academic Librarianship.

Blake (1996) investigated the prestige of professional journals perceived by the deans and directors of ALA (American Library Association) accredited schools of library and information studies and by the directors of ARL libraries. This author noticed significant differences in the perceptions of these two groups. In his list of top journals, he added new titles to Kohl and Davis’s (1985) top journals, including one that is not considered a LIS journal.

Nisonger and Davis (2005) ranked 71 LIS journals based on dean and director ratings and observed good agreement with the Kohl and Davis (1985) study and an absence of a relationship between the ratings received from the experts and the JCR citation scores. They created an amended list of the 12 best journals by combining the top 10 of both groups (deans and directors).

Manzari’s (2013) study asked US LIS faculty to rank a list of 89 LIS journals based on each journal’s importance to their research and teaching, and to list the five most prestigious journals in which they published for tenure and promotion purposes at their institution. She found three journals that were both in the top 5 of the rankings and among the top 5 responses: Journal of the American Society for Information Science and Technology; Library Quarterly; and Journal of Documentation.

Taylor and Willett (2017) replicated Manzari’s (2013) research and obtained a ranking of 87 LIS journals. These authors observed differences between their ranking, which was based on UK experts, and Manzari’s ranking, based on US experts. Their top 10 journals were Journal of Documentation; Journal of Librarianship and Information Science; Journal of the Association for Information Science and Technology; Journal of Information Science; Information Research; Aslib Journal of Information Management; International Journal of Information Management; Library & Information Science Research; Library Trends; and Journal of Academic Librarianship.

The results of the above expert studies allow us to conclude that:

1. There is some consensus on which LIS journals are the most influential (the top 5 or 10 journals), although there are differences in rankings among different groups of specialists and among experts from different countries.

2. The list of top journals has been stable for more than two decades.

3. Outside of the list of the top journals, there is more discrepancy about the influence of each journal.

Other studies have looked at the list of core journals using citations, and some have confronted this method and the opinion-based method. Among the latter, Kim (1991) conducted a citation analysis of 28 core LIS journals and compared his findings with Kohl and Davis’s (1985) prestige rankings. He used several citation measures while controlling for journal orientation, age, circulation, and index coverage, and found (i) a strong correlation between the two methods’ results, even though the relationship between the impact factor and the deans’ prestige ranking for the practitioner journals vanished once size was taken into account; (ii) that “the discipline citation measures identified a core of top journals that overlapped well with the core listings of the directors and deans for a similar time period” (p. 34); and (iii) that “deans and directors may differ in their weighting of scholarliness and timeliness when rating journal value, especially when the practitioner-research orientation of the journal is considered” (p. 24).

Following Kim’s study, Esteibar and Lancaster (1993) rated LIS journals based on the number of citations from doctoral dissertations and faculty publications, as well as the number of mentions they received in 131 course reading lists at the Graduate School of LIS (the University of Illinois at Urbana-Champaign). Although the top 10 journals in this weighted ranking overlapped heavily with prior citation studies, there are notable differences between the research-based and the teaching-based rankings.

Kherde (2003) examined the citations of articles published in LIS journals between 1996 and 2001 to identify the core journals, noting disparities in the top 10 core journals for Indian and non-Indian researchers.

Via and Schmidle (2007, p. 336) calculated “the frequency with which individual library journals are cited in the bibliographies of a core group of LIS journals that, arguably, comprise the premier journals in the LIS field” and compared it with each journal’s subscription cost to produce a list of journals based on impact and cost. The bibliographies and references of feature articles published in 11 journals on the Nisonger and Davis’s list during the period 2002 to 2005 were examined. The top 10 journals by citation closely overlapped with the leading journals identified in the expert opinion studies. Four journals received half of the citations: Journal of the American Society for Information Science and Technology (24% of total citations); Information Processing & Management (10%); Journal of Documentation (8%); and College & Research Libraries (6%). The top 20 accumulated 80% of the citations, in accordance with the 80–20 rule (Pareto principle).

Blessinger and Frasier (2007) analyzed trends in publication and citation in LIS journals during a ten-year period (1994–2004). The top ten most-cited journals are, in this order: Journal of the American Society for Information Science; College & Research Libraries; Journal of Documentation; Journal of Academic Librarianship; Library Journal; Library & Information Science Research; Library Trends; Library Quarterly; Reference & User Services Quarterly; and Information Processing & Management. The latter is far from the second place in the ranking obtained by Via and Schmidle (2007).

Nixon (2014) created a new ranking of 63 LIS journals based on a mixed methodology (opinions, journal characteristics, and bibliometric indicators) and divided them into three tiers. The following journals make up the first tier: Aslib Proceedings; College & Research Libraries; Collection Management; Government Information Quarterly; Information Technology and Libraries; Journal of Academic Librarianship; Journal of Documentation; Journal of Information Science; Journal of the American Society for Information Science (title changed to Journal of the American Society for Information Science and Technology − JASIST); Journal of the Medical Library Association; Library Collections, Acquisitions, and Technical Services; Library & Information Science Research; Library Journal; Library Quarterly; Library Resources & Technical Services; Library Trends; Libri; and RQ (title changed to Reference & User Services Quarterly).

Using multiple criteria, Walters and Wilder (2015) drew up a list of 31 LIS journals. This ranking is constructed by calculating the percentage of contributions made by fifty top authors. They found that the LIS subject is far from homogeneous due to differences in authorship and subject emphasis. They also identified five journal types according to author contributions: 11 core LIS journals (where faculty and students contribute at least 1.5 times as many articles as the authors in any other group), 10 practice-oriented journals, 1 computer science-oriented journal, 2 management-oriented journals, 2 informetrics journals, and 5 other LIS journals. The core LIS journals are Annual Review of Information Science and Technology; Aslib; Information Research; JASIST; Journal of Documentation; Journal of Information Science; Journal of Librarianship and Information Science; Knowledge Organization; Library & Information Science Research; Library Quarterly; and Libri.

Weerasinghe (2017) examined 803 citations from 46 articles published in the Journal of the University Librarians Association over the period 2010–2015 and identified 13 core LIS journals by applying Bradford’s law of scattering, ordered as follows: Journal of Academic Librarianship; Journal of the University Librarians Association of Sri Lanka; Library Review; College and Research Libraries; Scientometrics; Library Philosophy and Practice; Library Trends; Journal of Marketing; Information Research; Collection Building; New Library World; Sri Lanka Library Review; and Electronic Library.

Vinkler (2019), for his part, identified 11 core journals in scientometrics: Scientometrics; Journal of the American Society for Information Science and Technology; Information Processing and Management; Journal of Informetrics; Journal of Documentation; Journal of Information Science; Annual Review of Information Science and Technology; Research Policy; Library Trends; Research Evaluation; and Libri. He obtained the list from the frequency of papers in journals in the elite publication subgroups of Price medalists. According to this author, leading scientists publish their work with a high potential for impact in the field’s most prestigious journals.

In general, identifying core journals based on citations produces lists similar to those produced by other methods, but there are differences derived from the journals chosen, the years analyzed, and the metrics used.

Hot journal list

The concept of hot journals is usually associated with that of hot papers (articles that have lately earned the most citations), but it can also refer to journals which fall into certain JCR or Scopus categories and focus on specific topics.

Identifying hot journals has usually been done by identifying the papers on a given topic that have been published over several years and then obtaining a list of the journals according to the percentage of papers published by each journal. Examples of this are the studies by Jing et al. (2021), who identify the top 10 hot journals in the field of school travel; Hu and Yin (2022), for business intelligence literature; Wang et al. (2021), for green building research; or Wu et al. (2020), for the domain of travel mode choice. The focus of this research is a topic and not a discipline, so they are outside the interest of our study.

However, within this approach, a notable study in the field of LIS, carried out by Budd (1991), should be highlighted. This author proposed the following research question: what journals can be identified as having articles relevant to academic librarianship? (p. 291). To answer the question, he followed the methodology of the hot journal approach, using the descriptor “academic libraries.” He analyzed 328 articles from 50 journals published between 1984 and 1988, where 40 of these journals had LIS-related titles. The remainder were journals from other disciplines. Among the 40 LIS journals, the top 10 journals with the most articles on academic libraries were: College & Research Libraries; Journal of Academic Librarianship; RQ; Library Journal; International Library Review; Catholic Library World; Library Trends; New Directions for Teaching and Learning; Library & Information Science Research; and Library Quarterly.

Reference journals, leading journals, and trendy journals

To refer to the most influential journals, the literature frequently employs terms such as reference journal or leading journal, in addition to the aforementioned terms. These are high-impact journals in a discipline or a specific area of the field. Regarding their citation impact, they are usually in the Q1 of their journal category but can also be in a different quartile and still be considered a reference journal for a particular subcategory. This is the case of Scientometrics, a reference journal in informetrics (Walters & Wilder, 2015), but currently in Q2 of the JCR in the LIS category.

There is no concept in the literature for the journals that most influence the authors of papers that are currently being published, i.e., before these authors even know how many citations their papers will receive. This is how we understand our concept of trendy journals. By trendy, we mean those journals whose recently published papers (2020 and 2021) have most influenced recently published papers (2021 and 2022). Bibliometric data on such recent citations become available only after a delay (for example, the JIF released in 2022 reported citations in 2021 for items published in 2019 and 2020). Therefore, our definition of trendy refers to what the authors of a given discipline have recently cited, i.e., the influences on their newly published research. Because it can change every year, our list of top trendy journals is less stable than that of top core journals, but it describes a hitherto undiscovered reality.

Methodology for the trendy journal list

This study aims to identify trendy journals in the LIS field by assessing their influence on authors currently publishing through a contextual analysis of their citations. So, while we are interested in knowing what influences the authors, we are more specifically interested in what influences the most influential authors (the “influencers”). For this reason, our methodology introduces a correction factor to overweight the influences of the influencers.

We expect our list to coincide with the lists generated by papers such as Nixon’s (2014) or the JCR rankings, as it is logical that those journals which are influential for their very recently issued papers are core journals with high impact factors in the LIS field. However, we also expect to discover journals with very influential recent papers, which are being read by the most influential authors, but that published rankings may not detect.

We describe our methodology below and in Fig. 1.

Step 1. Selection of journals publishing “the latest”

Our methodology is based on citations (references). We want to know what currently publishing authors are reading (we assume they are reading what they are citing). Because we are particularly interested in the influencers, the references cited in papers published by the most influential authors were given more weight (Vinkler, 2019).

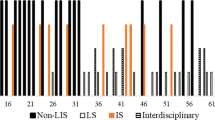

The first step was to establish a discipline for which we wanted to answer the question “What are authors reading?” and to determine the journal set with bibliometric data. In this study, we examined the LIS discipline since this field has a consolidated list of journals with abundant bibliometric information. As Kim (1991) points out, omitting significant journals may distort citation measures, whereas including less significant journals is likely to have little, if any, impact on them. Therefore, in our study, we analyzed a large set of LIS journals. Specifically, we first downloaded all journals containing 2021–2022 articles indexed by Clarivate Analytics in the InCites dataset (including journals indexed in the Emerging Sources Citation Index, ESCI) within the Web of Science category of the Information Science & Library Science research area. We then searched for all publications that appeared in those journals in the same period. Finally, we retained for further analysis the journals that had 100% of their publications indexed in the WoS Information Science & Library Science category. Our final sample comprises 167 journals (see Table 3 further on). It should be noted that all journals indexed in the InCites Dataset (with ESCI documents) within the LIS category were included in the sample.

Step 2. Obtaining recently published papers

The second step was to obtain all the papers published between January 2021 and March 2022 (15 months) and indexed by InCites in the LIS category. Only papers belonging to the following document types were downloaded: Article, Book Chapter, Editorial Material, Letter, Proceedings Paper, and Review. We also downloaded all cited references, i.e., all “influences.” We found 11,415 papers and 561,376 references in total, with 98,829 citing the journals on our list.

Step 3. Filtering and grouping references

We arranged the references into the following groups: references to our journals list; self-citations; references to other journals; and references to other sources. In addition, references were grouped into five time periods, with the last period being used to obtain the trendy journals ranking: 1980–1999; 2000–2009; 2010–2014; 2015–2019; and 2020–2021. Table 1 shows the distribution of references from the 11,415 papers published between January 2021 and March 2022 and indexed in the Information Science & Library Science’s WoS category. The table shows that of the nearly 100,000 citations from more than 11,000 papers, about 13,000 refer to publications that are on our journal list and were released in 2020 and 2021.
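The grouping described in this step can be illustrated with a minimal Python sketch. The record fields (`citing_journal`, `cited_source`, `cited_is_journal`, `year`) and the toy data are hypothetical, and the sketch assumes that a self-citation means a journal citing itself, which the text does not spell out.

```python
from collections import Counter

# Publication-year periods listed in step 3 (inclusive bounds).
PERIODS = [
    (1980, 1999, "1980-1999"),
    (2000, 2009, "2000-2009"),
    (2010, 2014, "2010-2014"),
    (2015, 2019, "2015-2019"),
    (2020, 2021, "2020-2021"),
]


def period_of(year):
    """Return the label of the period containing `year`, or None if outside all periods."""
    for start, end, label in PERIODS:
        if start <= year <= end:
            return label
    return None


def group_of(ref, journal_list):
    """Assign one reference to one of the four groups of step 3.

    Assumption: a self-citation is a reference from a journal to itself.
    """
    if not ref["cited_is_journal"]:
        return "other sources"
    if ref["cited_source"] == ref["citing_journal"]:
        return "self-citations"
    if ref["cited_source"] in journal_list:
        return "references to our journals list"
    return "references to other journals"


# Toy data, invented for illustration only.
journal_list = {"Journal A", "Journal B"}
references = [
    {"citing_journal": "Journal A", "cited_source": "Journal B", "cited_is_journal": True, "year": 2021},
    {"citing_journal": "Journal A", "cited_source": "Journal A", "cited_is_journal": True, "year": 2020},
    {"citing_journal": "Journal B", "cited_source": "Journal X", "cited_is_journal": True, "year": 2012},
    {"citing_journal": "Journal B", "cited_source": "Some Book", "cited_is_journal": False, "year": 1995},
]

# Cross-tabulate group by period, in the spirit of Table 1.
counts = Counter((group_of(r, journal_list), period_of(r["year"])) for r in references)
```

Only the cell for references to the journal list in 2020–2021 feeds the trendy journal ranking; the other cells support the historical comparison.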

In this study, we focused on “references to our list of journals.” We excluded “references to other journals” and “other sources” because our focus is on trendy LIS journals. In the results presented in this study, we also excluded self-citations because they are subject to certain biases (see footnote 2), although we performed all calculations with them and the results do not differ significantly.

Step 4. Identification of authors and assignment of their quality scores

We are interested in knowing what the authors of the most recently published papers are reading and, more specifically, what the authors of the highest level of quality—the influencers—are reading. To identify the authors of these papers, we used only the corresponding author(s) in the case of two or more authors and the author of the paper in the case of a sole signatory.

In a recent paper, Docampo and Safón (2021) justified that, on average, authorial quality can be estimated by the quality of the author’s institution. Since quality is so difficult to measure in our context, we decided to use a restricted concept of quality based on citation performance, which we measured with the Category Normalized Citation Impact (CNCI). Derived from the Crown indicator created by the CWTS at Leiden University, and now used by Clarivate Analytics as a standard impact measure for individual researchers, journals, institutions, and countries, the CNCI is a well-known, widely used bibliometric indicator that normalizes citation counts across fields. For a single document, it is calculated by dividing the number of citing items by the expected citation rate for documents with the same document type, year of publication, and subject area. Averaging the CNCI of all papers published by an institution over a specific period provides a measure that serves as a proxy for the institution’s quality (see, for instance, Moed, 2010, or Potter et al., 2020); as such, it is also used as an indicator by several international academic classifications (e.g., Leiden Ranking, ARWU subject rankings, USNews Ranking).
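As a rough illustration of the indicator, the following Python sketch computes a document-level ratio and averages it over an institution's papers. The function names and the toy numbers are hypothetical; in practice the expected citation rates come from InCites and are not computed by hand.

```python
def document_cnci(citations, expected_citations):
    """Observed citations divided by the expected rate for documents of the
    same document type, publication year, and subject area."""
    if expected_citations <= 0:
        raise ValueError("expected citation rate must be positive")
    return citations / expected_citations


def institution_cnci(documents):
    """Mean document-level CNCI over an institution's papers.

    `documents` is a list of (citations, expected_citations) pairs.
    """
    if not documents:
        raise ValueError("no documents")
    return sum(document_cnci(c, e) for c, e in documents) / len(documents)


# Toy values, invented for illustration only.
papers = [(12, 6.0), (3, 6.0), (0, 4.0)]
example_cnci = institution_cnci(papers)  # mean of 2.0, 0.5 and 0.0
```

A value above 1 indicates citation performance above the expected level for comparable documents, and a value below 1 indicates the opposite.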

The need to assign a CNCI to each paper prompted us to review several credit allocation schemes proposed in the literature, such as “the fractional counting approach; full allocation of publication credit to the first author; full allocation of publication credit to the corresponding author; and allocation of publication credit to the individual authors in a weighted manner, with the first author receiving the largest share of the credit” (Wouters et al., 2015, p. 5).

Since we needed a straightforward and meaningful procedure for assigning a paper to an institution, we had to decide between allocating it to the first author or to the corresponding author. In a multi-author, multi-institution publication, a researcher from the leading institution of the study usually assumes the role of corresponding author. This is the reason for choosing the corresponding author to identify the institution with which a paper is associated. To validate our choice, we analyzed the complete list of institutions with more than 25 publications in the LIS category (2021–2022): on average, the first author belongs to the institution of the corresponding author in 90% of the cases. If we had selected the first author instead of the corresponding one, the changes in the results of our analysis would be immaterial, validating our decision to assign a weight to the papers associated with the corresponding author’s CNCI (in the case of several corresponding authors, we averaged the figures).

Table 2 shows the CNCI of the LIS discipline’s 40 most influential institutions in the period under analysis (January 2021 to March 2022). The CNCI was computed for each institution over the 2011–2019 period to obtain a reliable measure of recent citation impact. Institutions for which InCites does not provide a CNCI measure were assigned the 10th percentile of all equivalent institutions contributing to LIS publications from January 2021 to March 2022: approximately 0.33. The influencers’ score shown in Table 2 (column C) is the geometric mean of the institutional CNCI (column B) and the number of contributions to the LIS discipline from January 2021 to March 2022 (column A), normalized to the maximum of this mean across the institutions. The note in Table 2 states the calculation involved in this normalization procedure.
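The influencers' score described above can be sketched in Python as follows. The institution names and values are invented, the fallback of 0.33 is the approximate figure quoted in the text, and the sketch assumes the normalized maximum equals 1 (the note in Table 2 may use a different scale).

```python
import math

FALLBACK_CNCI = 0.33  # approximate 10th-percentile value quoted in the text


def influencer_scores(institutions):
    """Map institution name -> score normalized to the maximum.

    `institutions` maps name -> (contributions, cnci); cnci is None when
    no CNCI is available, in which case the fallback value is used.
    """
    raw = {}
    for name, (contributions, cnci) in institutions.items():
        if cnci is None:
            cnci = FALLBACK_CNCI
        # Geometric mean of two quantities: square root of their product.
        raw[name] = math.sqrt(contributions * cnci)
    top = max(raw.values())
    return {name: value / top for name, value in raw.items()}


# Toy values, invented for illustration only.
toy = {
    "Institution X": (100, 1.44),
    "Institution Y": (25, 1.00),
    "Institution Z": (12, None),
}
scores = influencer_scores(toy)
```

Using a geometric mean means that an institution needs both volume and citation impact to score highly; a large output with a low CNCI, or the reverse, is damped.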

Step 5. Obtaining the trendy journal scores

To obtain the score of trendy journals in the 2020–2021 period, we used, for each journal i:

(a) The references from papers (p) published during January 2021–March 2022, filtered as described in step 3, \({R}_{ip}\), as well as the total number of references from those papers, \({R}_{p}\), to the papers published in the same period (2020–2021).

(b) And the CNCI scores, \({CNCI}_{p}\), which passed from institutions to authors and from authors to papers, as indicated in step 4.

The trendy journal non-normalized score (NNS) is calculated according to the following equation:
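The equation does not appear in this version of the text. One form consistent with the quantities defined in (a) and (b), given here as an assumption and not as the authors' exact expression, weights each citing paper's share of references to journal i by that paper's CNCI and sums over the citing papers:

\[
{NNS}_{i} = \sum_{p} {CNCI}_{p} \, \frac{{R}_{ip}}{{R}_{p}}
\]

Under this reading, a paper that devotes a larger fraction of its reference list to journal i, or that comes from an institution with a higher CNCI, contributes more to the journal's score.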

Since part of our research question seeks to understand the evolution of a journal’s historical influence on current papers, the score is also calculated for the remaining periods presented in step 3.

Step 6. Obtaining the trendy journal scores with normalized data

A journal’s influence is biased by the number of papers published annually (Kim, 1991). In order to correct for this effect and reduce the handicap of smaller journals, the trendy journal score is normalized by the number of papers published by the journal in the period 2020–2021, \({P}_{i}\), according to the following equation:
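The equation does not appear in this version of the text. Read literally, the sentence above suggests dividing the non-normalized score by the journal's output; the following form is therefore an assumption consistent with that description, not the authors' confirmed expression:

\[
{NS}_{i} = \frac{{NNS}_{i}}{{P}_{i}}
\]

Here \({NNS}_{i}\) is the non-normalized score from step 5 and \({P}_{i}\) is the number of papers journal i published in 2020–2021.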

As in step 5, the normalized score (NS) is also computed for the remaining time periods.

The results of the analysis following the six steps of our methodology are presented in Table 3.
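Steps 5 and 6 can be illustrated with a short Python sketch. It assumes that the non-normalized score sums, over citing papers, the paper's CNCI times the share of its references that go to the journal, and that the normalized score divides this sum by the journal's number of papers; both are readings of the verbal description, and all names and numbers below are hypothetical.

```python
from collections import defaultdict


def trendy_scores(papers, papers_published):
    """Return (nns, ns) dictionaries keyed by journal.

    papers: list of dicts with
        "cnci"       - CNCI assigned to the citing paper (step 4)
        "total_refs" - total number of references in the paper
        "refs_to"    - {journal: filtered references to that journal (step 3)}
    papers_published: {journal: papers published by the journal in the cited period}
    """
    nns = defaultdict(float)
    for paper in papers:
        if paper["total_refs"] == 0:
            continue  # a paper without references contributes to no score
        for journal, count in paper["refs_to"].items():
            nns[journal] += paper["cnci"] * count / paper["total_refs"]
    ns = {journal: score / papers_published[journal] for journal, score in nns.items()}
    return dict(nns), ns


# Toy data, invented for illustration only.
toy_papers = [
    {"cnci": 1.0, "total_refs": 40, "refs_to": {"Journal A": 8, "Journal B": 2}},
    {"cnci": 0.5, "total_refs": 20, "refs_to": {"Journal B": 4}},
]
toy_sizes = {"Journal A": 200, "Journal B": 50}
nns, ns = trendy_scores(toy_papers, toy_sizes)
```

In this toy case the larger Journal A leads on the non-normalized score, while the smaller Journal B leads once size is controlled for, which is the kind of reordering the normalization is meant to expose.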

Discussion

RQ1. Which journals are most influencing the articles that LIS authors are currently publishing?

Table 3 shows all results. The top 10 journals that most influenced papers published between January 2021 and March 2022, i.e., our top trendy journals, were (in order of non-normalized influence):

1. Scientometrics.

2. International Journal of Information Management.

3. Journal of the Association for Information Science and Technology.

4. Quantitative Science Studies.

5. MIS Quarterly.

6. Information & Management.

7. Information Processing & Management.

8. Journal of the Association for Information Systems.

9. Journal of Informetrics.

10. Journal of Academic Librarianship.

A journal’s influence depends on its size, since a journal that publishes many papers is more likely to be read. To correct for this effect, we normalized the data as explained in the methodology. The normalized data produce a new ranking (Table 3, NS column) in which the top 10 match the top 8 of the non-normalized ranking. The new ranking places three highly influential but small journals in the top 10: Information Systems Journal (132 papers published between 2021 and March 2022), Journal of Information Technology (65 papers), and European Journal of Information Systems (153 papers).

RQ2. Are our trendy journal lists redundant?

The second research question assesses the value our lists add to existing lists. In other words, it seeks to answer the following question: “Do our lists capture something that the latest JCR list does not?” From a methodological standpoint, our study is different from previous ones in several key features:

- It analyzes the influence of those journals (through their papers) that do not yet have a calculated impact factor, so it is an “early” list. For example, the JIF of the JCR published in 2022 analyzes the 2020 citations of papers published in 2018 and 2019. We analyzed the 2021 and 2022 papers and their 2020 and 2021 references to establish the list of trendy journals.

- It reduces the journal’s influence to its impact on a particular discipline, overcoming measures such as JIF or SJR.

- It identifies the trendy journals from an extensive list (167 titles), which is unusual since most studies try to identify core journals with smaller lists.

- It considers that not all authors are equally relevant and, thus, influencers are overweighted.

Hence, to the best of our knowledge, our methodology is unprecedented in the literature.

In terms of results, our analysis provides information on current impacts that no other ranking highlights. However, our list is a compilation of contemporary influences, and it is therefore to be expected that it is linked to the core journal lists or the impact factor rankings issued each year. Only 4 of the top trendy journals in our non-normalized and normalized lists are in the top 10 JIF list, and one, Quantitative Science Studies, does not even have a Clarivate Analytics impact factor for 2020. According to our calculations, this journal ranks fourth in influence (non-normalized data) and is the most influential journal in our size-controlled ranking.

The core LIS journal lists assessed in the literature review section show little overlap with our top 10 journals. Table 4 shows the matches. Five journals that are very influential and are on our lists were not taken into account by previous studies: Quantitative Science Studies (136 papers), Journal of the Association for Information Systems (158), Journal of Information Technology (65), Information Systems Journal (132), and European Journal of Information Systems (153). These journals are small, and the first one is very young. Our findings show they are very influential publications that set trends, and thus they are worth paying special attention to. These results provide our list with a great differential value when compared to the core journal lists or those based on the JIF rankings.

Researchers who rank journals using one method frequently correlate their results with those obtained using another method to better understand what their rankings represent (Kim, 1991). The correlations between our scores and the rankings, ratings, and scores of other studies are low or moderate (Table 5), implying that our rankings contribute something that these lists overlook.
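A comparison of this kind can be illustrated with a rank correlation. This section does not say which coefficient underlies Table 5, so the use of Spearman's rho below is an assumption, as are the function names and the toy values. The sketch compares only journals that appear in both lists.

```python
def average_ranks(values):
    """Rank values from 1 (smallest) upward, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the block while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        tied_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = tied_rank
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (nan if either is constant)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return float("nan")
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    return pearson(average_ranks(x), average_ranks(y))


def correlate_shared(ours, theirs):
    """Correlate two journal -> score mappings over the journals they share."""
    shared = sorted(set(ours) & set(theirs))
    return spearman([ours[j] for j in shared], [theirs[j] for j in shared])


# Invented toy scores; only Journals A and B appear in both lists.
ours = {"Journal A": 100.0, "Journal B": 30.0, "Journal C": 5.0}
theirs = {"Journal A": 2.0, "Journal B": 9.0, "Journal D": 1.0}
print(correlate_shared(ours, theirs))
```

Restricting the calculation to shared journals matters because the lists being compared cover different sets of titles.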

To further illustrate the value of our research, the plots in Figs. 2, 3, 4 and 5 compare the non-normalized and normalized scores (on the x-axes) with the JCI (on the y-axes) for journals with a calculated JCI, together with the results of Taylor and Willett’s (2017) and Jan and Hussain’s (2021) studies. The size of the bubbles represents the Taylor and Willett ranking score (Figs. 2 and 3) and the inverse (= 1/rank) of the ranking of Jan and Hussain (Figs. 4 and 5). The dots represent the journals with JCI, NNS, and NS that are not included in the lists of these authors.

NNS vs. Taylor and Willett scores. Notes: Logarithmic scales. The bubbles represent the scores achieved by each journal in the Taylor and Willett (2017) study. The larger the bubble, the higher the score. If a journal has no score, a value close to zero is used to calculate the logarithm and plot it on the chart.

NS vs. Taylor and Willett scores. Notes: Logarithmic scales. The bubbles represent the scores achieved by each journal in the Taylor and Willett (2017) study. The larger the bubble, the higher the score. If a journal has no score, a value close to zero is used to calculate the logarithm and plot it on the chart.

NS vs. Jan and Hussain ranking. Notes: Logarithmic scales. The bubbles represent the inverse of the Jan and Hussain (2021) ranking. If the journal is not in the ranking, a value close to zero is used to calculate the logarithm and plot it on the chart.

Figures 2 and 3 show that the Taylor and Willett core LIS journal ranking identifies some of our top trendy journals (large bubbles with high NNS and NS scores). The figures also show that their ordering does not adequately capture the actual influence of some journals (e.g., Scientometrics gets the highest score in our NNS, but Taylor and Willett’s study assigns this journal a low score, as shown by the small bubble size). Also, their ranking classifies as top journals some journals with unremarkable scores in the JCI rankings and in our NNS and NS lists (bubbles, not dots, in the bottom/left quadrant).

Figures 4 and 5 show the ranking of Jan and Hussain (2021) compared to our NNS and NS lists and the JCI ranking. These figures show that some of the highly influential journals we identified are not in the top positions of these authors’ rankings (there are dots in the high levels of NNS and NS, which means that these journals do not appear in the Jan and Hussain classification). Another remarkable result observed in Figs. 2, 3, 4 and 5 is that many LIS journals with a calculated JCI indicator have no impact on the latest published papers.

RQ3. How does a journal’s influence on current papers change over time?

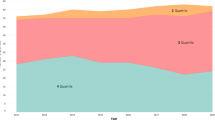

Our third study goal is to determine the extent to which older papers influence current production. Table 2 provided information on the distribution of references by time span, while Fig. 6 shows the influence of the top 10 trendy journals by time span.

Articles from 2021 and 2022 cited papers from different years. Among the references dated 2020 and 2021 (the period we used to identify the trendy journals), Scientometrics was the most influential journal (score = 100, see Table 3) according to NNS [see Eq. (1)], and Quantitative Science Studies according to NS [see Eq. (2)]. We repeated the exercise shown in Table 3 for the remaining periods examined in this study: 1980–1999, 2000–2009, 2010–2014, and 2015–2019. Among the references dated between 2015 and 2019, Scientometrics was also the most influential journal according to NNS, and MIS Quarterly had the most influence according to NS. Among references between 2010 and 2014, MIS Quarterly was also the most influential journal according to NNS, and Journal of the Association for Information Science and Technology had the greatest impact according to NS. According to NNS, MIS Quarterly was the most influential journal for the period 2000–2009 and also for the 1980–1999 period, while according to NS, the journal with the greatest impact for that period was Information Systems Research.

An extremely simplified example may serve to help understand these results better. Taking the NNS as an influence indicator, we could imagine an article published in 2022 with only five references, one dated between 1980 and 1999, one between 2000 and 2009, one between 2010 and 2014, one between 2015 and 2019, and one between 2020 and 2021. In which journals would the papers corresponding to these references have appeared? Two papers would have been published by Scientometrics (one between 2020 and 2021, and the other one between 2015 and 2019), and three would have been MIS Quarterly articles (one appearing between 2010 and 2014, one between 2000 and 2009, and one between 1980 and 1999). Thus, the plots show the huge influence of the MIS Quarterly papers dated up to 2019 on current research; however, in subsequent years (2020 and 2021), other journals set the trend more. It may also be observed how certain journals are gaining importance because their most recent production has more influence on current publications than their earlier ones.
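The same reading of the results can be sketched in code: each reference is assigned to one of the five time spans used in this study, and the journal with the highest score is picked for each span. The function names, journal names, and scores below are hypothetical placeholders, not values from Table 3.

```python
from collections import Counter

# The five reference time spans examined in this study.
PERIODS = [(1980, 1999), (2000, 2009), (2010, 2014), (2015, 2019), (2020, 2021)]


def period_of(year):
    """Return the label of the time span containing a reference year, or None."""
    for start, end in PERIODS:
        if start <= year <= end:
            return f"{start}-{end}"
    return None


def count_refs_by_period(reference_years):
    """Count how many references fall into each time span."""
    labels = (period_of(year) for year in reference_years)
    return Counter(label for label in labels if label is not None)


def leaders_by_period(scores_by_period):
    """Pick the journal with the highest score in each time span."""
    return {
        period: max(scores, key=scores.get)
        for period, scores in scores_by_period.items()
        if scores
    }


# Invented placeholder scores for two hypothetical journals.
scores_by_period = {
    "2015-2019": {"Journal A": 100.0, "Journal B": 64.0},
    "2020-2021": {"Journal A": 71.0, "Journal B": 100.0},
}
print(count_refs_by_period([1995, 2004, 2012, 2017, 2021]))
print(leaders_by_period(scores_by_period))
```

A change of leader between adjacent spans, as in the placeholder data, is the pattern described above for journals whose recent output has more influence than their earlier output.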

Conclusions

Our trendy journal list identifies the most influential journals right now, challenging to some extent the core LIS journal list and journal category lists ordered by citations (e.g., by JIF). The former is stable over time and affected by reputational factors; the latter are subject to delay and do not restrict the influence they measure to LIS researchers, even with indicators such as the JCI. Our list corrects these two problems.

Our methodology shows that journals such as Scientometrics (Q2 in the Journal Citation Reports) notably influence current production when not corrected for journal size. It also shows that a newcomer journal, Quantitative Science Studies, with no calculated JIF and no presence on any core LIS journal list, is the most influential journal for recent production when controlling for journal size.

Trendy journals generate reputational advantages. If they are influential right now, they are likely to remain so in the future because they are gaining, recovering, or maintaining their prominence. Because of this, it is possible to predict that some trendy journals that are not on the core journal list, or not even on the JCR list, will enter these lists; this may be true of journals such as Quantitative Science Studies, especially if it maintains its capacity for influence in the coming years. Our findings provide a complementary view of what to read: each subject prioritizes a core collection of journals, but lists like those generated in this study succeed in identifying the hottest journals in terms of current influence. Anyone who generates our trendy journal list every year should notice more changes in the trendy ranking than in the core journal lists or JIF-based rankings. Therein lies its interest: it is a list of the most influential journals today, and the ranking may change tomorrow. A journal that is trendy today may not be so in a few years, and tomorrow we will want to know what is influencing us then. Many core journals with very influential pasts have now lost steam, something our list corrects for.

According to Newell (1993), a proposal for a new method entails pointing out the novelty of the task, the algorithm, and the justification. In support of the new methodology, we argue for its novelty and simplicity. The choice of LIS as the sample context offers multiple journal rankings with which to make meaningful comparisons. The sample case, with its intermediate and final scoring results, shows the potential of using the proposed methodology in different realms of knowledge. We also present arguments supporting the validity of the choices (journal selection, allocation of weights to publications).

The methodology proposed in this paper is replicable and generalizable, although there are some limitations. On the one hand, certain interdisciplinary fields lack their own academic journals, while some emerging fields still need to develop a strong tradition of publishing in scholarly journals, and they must rely on other dissemination forms, such as conference proceedings or preprint servers. In these cases, our methodology is not applicable.

On the other hand, the field of journal rankings lacks a single, omnidisciplinary generalizable methodology that is widely acknowledged. Nor do we expect ours to be. Different disciplines have different criteria for assessing the quality and impact of journals, and there is an ongoing debate about the most effective metrics for evaluating scientific output and impact. For example, some disciplines place a high value on the JIF as a measure of a journal's influence, while others also consider factors such as the rigor of the peer review process, the quality of the editorial board, and the scope and focus of the journal.

Our methodology is based on objective data that can be found with little difficulty by any researcher in any established field of study where journals are an important vehicle for disseminating ideas. As a result, our methodology is generalizable to other consolidated fields, and it may be replicated in the LIS field, whose data is publicly available. Evidently, the validity of this method is greater in fields where journals are the most effective means of communicating research and where bibliometric analysis uses citations in a consistent, comprehensible manner. In some disciplines, such as the arts, music, film or dance, journals are not the primary means of disseminating research results. Here, our methodology is not very useful, but it is also true that in these disciplines, it is less interesting to know which journals are trendy. Consequently, the more important conventional journals are for a discipline, the more consolidated the discipline is, and the more accessible its bibliometric data is, the wider the generalizability of our methodology.

In contrast to specialized methodologies, generalized methodologies can be applied to a variety of different research domains. Generalized methodologies are flexible and adaptable, and they are often based on general principles or theories that can be applied in a wide range of contexts. Our methodology is based on principles shared by many disciplines, and the techniques are applicable in most of them. Therefore, we think it has great potential for application in various fields of knowledge.

Limitations and further research

A limitation of the study is that we do not know what current authors are reading; however, the observable variable, their citation patterns, gives us useful information about which readings are influencing their research. It is the contiguity, and hence the association, between the two actions (reading and citing) that led us to the metonymy used in the title of our article. Addressing this limitation would require surveying the researcher community, which is beyond the scope of this paper. On the other hand, we assume a restricted meaning of influence. We only acknowledge the influence on current literature when it cites previously published research.

The results shown in this paper are from a controlled exercise. We analyzed 167 LIS journals to identify only the trendy ones in the set. However, as shown in Table 2, the LIS category draws from other journals lacking discipline specificity (in particular, 38% of references in papers published from 2021 until March 2022 are from journals not included in the LIS category). An extension of our study should expand the list to these outlets to identify other trendy journals that are not core LIS journals. Moreover, by weighting the references to a paper using the CNCI of the corresponding author institution, we reinforced the feedback loop between influencers and influence through a particular choice of the proxy variable for institutional quality. In future work, we will explore alternative approaches to identifying a proxy for quality and compare the results with those reported here.

Notes

In 1927, Gross and Gross published a citation-based classification of chemistry journals that is considered the first formal journal rating; since then, there have been thousands of published rankings in all disciplines (Nisonger, 1999). In order to avoid making the literature review section too long, in this paper we have limited the review to journal rankings related to the LIS research area. Docampo and Safón (2021) provide a recent review of the journal rankings literature in the Social Sciences.

It could be that the self-citation was suggested in the paper review process or included by the author to show they have read the papers published by the journal in which they wish to publish. Obviously, many self-citations are legitimate and constitute genuine influences, but it is riskier to include them in the analysis than not.

References

Ahmad, S., Sohail, M., Waris, A., Abdel-Magid, I. M., Pattukuthu, A., & Azad, M. S. (2019). Evaluating journal quality: A review of journal citation indicators and ranking in library and information science core journals. COLLNET Journal of Scientometrics and Information Management, 13(2), 345–363.

Blake, V. L. (1996). The perceived prestige of professional journals, 1995: A replication of the Kohl-Davis study. Education for Information, 14(3), 157–179.

Blessinger, K., & Frasier, M. (2007). Analysis of a decade in library literature: 1994–2004. College & Research Libraries, 68(2), 155–169.

Budd, J. M. (1991). The literature of academic libraries: An analysis (research note). College & Research Libraries, 52(3), 290–295.

Docampo, D., & Safón, V. (2021). Journal ratings: A paper affiliation methodology. Scientometrics, 126(9), 8063–8090.

Esteibar, B. A., & Lancaster, F. W. (1993). Ranking of journals in library and information science by research and teaching relatedness. The Serials Librarian, 23(1–2), 1–10.

Hsiao, T.-M., & Chen, K.-H. (2020). The dynamics of research subfields for library and information science: An investigation based on word bibliographic coupling. Scientometrics, 125(1), 717–737.

Hu, H., & Yin, M. (2022). Evolution of business intelligence: An analysis from the perspective of social network. Tehnički Vjesnik, 29(2), 497–503.

Jan, S. U., & Hussain, A. (2021). Analytical study of the most citied International Research Journals of Library and Information Science. Library Philosophy and Practice, 4983, 1–12.

Jarvelin, K., & Vakkari, P. (2021). LIS research across 50 years: Content analysis of journal articles. Journal of Documentation, 78(7), 65–88.

Jing, P., Pan, K., Yuan, D., Jiang, C., Wang, W., Chen, Y., & Xie, J. (2021). Using bibliometric analysis techniques to understand the recent progress in school travel research, 2001–2021. Journal of Transport & Health, 23, 101265.

Kherde, M. R. (2003). Core journals in the field of library and information science. Annals of Library and Information Studies, 50(1), 18–22.

Kim, M. T. (1991). Ranking of journals in library and information science: A comparison of perceptual and citation-based measures. College & Research Libraries, 52(1), 24–37.

Kohl, D. F., & Davis, Ch. H. (1985). Ratings of Journals by ARL Library Directors and Deans of Library and Information Science Schools. College & Research Libraries, 46, 40–47.

Ma, J., & Lund, B. (2020). The evolution of LIS research topics and methods from 2006 to 2018: a content analysis. Proceeding 83rd Annual Meeting of ASIS&T 2020 (vol. 57, p. e241). https://doi.org/10.1002/pra2.241.

Manzari, L. (2013). Library and information science journal prestige as assessed by library and information science faculty. The Library Quarterly, 83(1), 42–60.

Moed, H. K. (2010). CWTS crown indicator measures citation impact of a research group’s publication oeuvre. Journal of Informetrics, 4(3), 436–438.

Newell, A. (1993). Heuristic programming: Ill-structured problems. In The Soar papers (vol. 1) research on integrated intelligence: 3–54.

Nisonger, T. E. (1999). JASIS and library and information science journal rankings: A review and analysis of the last half-century. Journal of the American Society for Information Science, 50(11), 1004–1019.

Nisonger, T. E. (2007). Journals in the core collection. The Serials Librarian, 51(3–4), 51–73.

Nisonger, T. E., & Davis, C. H. (2005). The perception of library and information science journals by LIS education deans and ARL library directors: A replication of the Kohl-Davis study. College & Research Libraries, 66, 341–377.

Nixon, J. M. (2014). Core journals in library and information science: Developing a methodology for ranking LIS journals. College & Research Libraries, 75(1), 66–90.

Potter, R. W. K., Szomszor, M., & Adams, J. (2020). Interpreting CNCIs on a country-scale: The effect of domestic and international collaboration type. Journal of Informetrics, 14(4), 101075.

Smith, K. (2011). The dawn of a new era? Australian Library & Information Studies (LIS) Researchers Further Ranking of LIS Journals. Australian Academic & Research Libraries, 42(4), 320–341.

Smith, K., & Middleton, M. (2009). Australian library & information studies (LIS) researchers ranking of LIS journals. Australian Academic & Research Libraries, 40(1), 1–21.

Taylor, L., & Willett, P. (2017). Comparison of US and UK rankings of LIS journals. Aslib Journal of Information Management, 69(3), 354–367.

Via, B. J., & Schmidle, D. J. (2007). Investing wisely: Citation rankings as a measure of quality in library and information science journals. Portal: Libraries and the Academy, 7(3), 333–373.

Vinkler, P. (2019). Core journals and elite subsets in scientometrics. Scientometrics, 121(1), 241–259.

Walters, W. H., & Wilder, E. I. (2015). Worldwide contributors to the literature of library and information science: Top authors, 2007–2012. Scientometrics, 103(1), 301–327.

Wang, Q., Zhu, K., Guo, Z., Shen, W., & Kang, X. (2021, April). Research hotspots and tendency of green building based on bibliometric analysis. In IOP Conference Series: Earth and Environmental Science (Vol. 719, No. 2, p. 022032). IOP Publishing.

Weerasinghe, S. (2017). Citation Analysis of Library and Information Science research output for collection development. Journal of the University Librarians Association of Sri Lanka, 20(1), 1–18.

Winkler, B., & Kiszl, P. (2020). Academic libraries as the flagships of publishing trends in LIS: A complex analysis of rankings, citations and topics of research. The Journal of Academic Librarianship, 46(5), 102223.

Wouters, P. et al. (2015). The metric tide: Supplementary report: Literature review. Downloaded from the UK Research and Innovation center on November 28th, 2022. https://re.ukri.org/documents/hefce-documents/metric-tide-lit-review-1/

Wu, L., Wang, W., Jing, P., Chen, Y., Zhan, F., Shi, Y., & Li, T. (2020). Travel mode choice and their impacts on environment—A literature review based on bibliometric and content analysis, 2000–2018. Journal of Cleaner Production, 249, 119391.

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature. Funding was provided by Generalitat Valenciana (Grant No. AICO/2021/309) and Xunta de Galicia.

Ethics declarations

Conflict of interest

The authors state that there is no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Safón, V., Docampo, D. What are you reading? From core journals to trendy journals in the Library and Information Science (LIS) field. Scientometrics 128, 2777–2801 (2023). https://doi.org/10.1007/s11192-023-04673-x

DOI: https://doi.org/10.1007/s11192-023-04673-x