Abstract

The development of network technology and open access has made numerous research results freely available online, thereby facilitating the growth of altmetrics as an emerging evaluation method. However, it is unknown whether the time interval from submission to publication affects the evaluation indicators of articles in the social network environment. We construct a range of time-series indexes that capture the features of these evaluation indicators. We then explore the correlations of acceptance delay, technical delay, and overall delay with indicators of citations, usage, sharing and discussions, and collections obtained from the open access journal platform PLOS. This research also examines two kinds of differences in the correlations between delays and the corresponding indicators: across six subject areas, and across metric quartiles. The results of the Mann–Whitney U test reveal that the length of delays affects the performance of the literature on some indicators. This study indicates that reducing the acceptance time and final publication time of articles can improve the efficiency of knowledge diffusion through the formal academic citation channel. In the context of social network communication, however, an appropriate interval at a particular stage of the publishing process can slightly increase the sharing, discussion, and collection of articles, thereby boosting the influence and attention the literature receives on the internet.

Introduction

Research on the publication delays of journal papers contributes to the disclosure of scientific data about periodicals. It also provides a reference for editorial departments seeking to schedule their review and publication time appropriately. Because of the editorial process, peer review, and issue scheduling, journal papers experience lags of varying length between initial submission and final publication. These lags lead to differences in the time cost of knowledge dissemination.

Publication delays can affect certain evaluation indicators of periodicals and their articles. For instance, the longer the publication delay of journals in a scientific field, the larger the backward shift of the citation distribution curve and the greater the decrease in the impact factors of journals in that field (Yu et al., 2005). In addition, a clear but weak inverse relationship is observed between editorial time and the number of citations of articles in Nature, Science, and Cell (Shen et al., 2015). Papers with shorter publication delays are more likely to become highly cited (Lin et al., 2016). With the development of information and communication technology and open access platforms, an increasing number of articles have become accessible online, extending the reach and efficiency of academic knowledge diffusion to a certain extent. Against this background, several questions about the influence of delays in the publishing process remain unanswered:

- How do publication time lags differ between online accessible articles with different numbers of citations?
- What are the correlations between publication delays and measurement indicators in the social media environment?
- Do these delays vary across metric quartiles and discipline categories?
- Does the length of delays affect the performance of articles on various indicators?

Exploring these questions would help us better understand how time lags in the publishing process affect article metrics in the social network context. The research objectives of this paper are as follows:

- To check for differences in the publication delays of articles with different citation frequencies and subject areas.
- To investigate the correlation between the publication delay and measurement indicators of journal articles in the social network environment, and the correlation discrepancies in different metric quartiles and disciplines.
- To probe the effect of the length of delays during the publication procedure on various literature indicators.

Literature review

The peer-review process constitutes a form of knowledge production (Rigby et al., 2018). However, the publication delay can influence citation frequency, literature aging, and journal impact factors. This delay is the time between the submission of an article and its acceptance or publication. For instance, a longer average publication delay corresponds to a larger average moving range of the aging curve of the scientific literature. Publication delays are therefore one of the main factors contributing to long-term literature aging (Egghe & Rousseau, 2000). A simulation study theoretically revealed an approximately inverse linear relationship between the average publication delay in a field (or discipline) and the impact factor of its journals (Yu et al., 2005). Moreover, the faster the literature of a discipline ages, the greater the influence of publication delays on the impact factor, immediacy index, and cited half-life of journals in that discipline (Yu et al., 2006a, 2006b). The editorial delay of papers published in Nature, Science, and Physical Review Letters also has a strong negative correlation with the probability of being highly cited (Lin et al., 2016). The publication delay of one journal also influences the impact factor of other journals (Shi et al., 2017). When the publication delay of a journal increases, the impact factors of all journals in its inter-citation group decrease, and the ranking of these journals by impact factor may change (Yu et al., 2006a, 2006b). Additionally, Guo et al. (2021) proposed a publication-delay-adjusted impact factor to reduce the negative effect of publication delays on impact factor determination.

Various factors affect the length of delays during the publication process. For instance, the prestige of the research group and the authors' publishing experience can determine the length of editorial delays (Amat, 2008; Yegros & Amat, 2009). In orthopedic surgery journals, each unit increase in impact factor is associated with a 19.1% increase in acceptance delay and a 15.8% decrease in publication delay (Charen et al., 2020). Moreover, the increasing pressure to publish in high-impact-factor journals significantly influences publication time in ophthalmology journals (Skrzypczak et al., 2021). Interestingly, publications by board members have shorter publication delays than those by non-board members (Xu et al., 2021). Additionally, during and after the coronavirus pandemic, the average review duration for Covid-19 papers was about 1.7 to 2.1 times shorter than that for non-Covid-19 articles. This confirms a widespread rapid academic response to Covid-19, in the form of apparently universal support for faster publication of relevant studies (Kousha & Thelwall, 2022).

Publication delays differ across disciplines. For instance, publications in technical science and mathematics have longer delays than those in the natural and life sciences (Luwel & Moed, 1998). Björk and Solomon (2013) found that the time between submission and acceptance varies considerably across journal articles. By contrast, the variance in the time between acceptance and publication can mostly be ascribed to differences in journal characteristics. They also found that the shortest publication delays occur in science, technology, and medicine (STM). The longest occur in the social sciences, the arts and humanities, and business and economics. Meanwhile, the editorial handling time of biomedical journals varies widely, from a few months to almost two years (Andersen et al., 2021).

Along with the growing demand for knowledge sharing, easy access, and knowledge utilization, many database platforms have introduced online publishing. This has given rise to a boom in open access (OA) to journals and articles, so that anyone who wants to download and read researchers' work faces no obstacles. Although the term OA was coined around 2000, scientists had been experimenting with open access journals since the early 1990s (Laakso & Björk, 2013). In 2001, the Budapest Open Access Initiative (BOAI) conference gave the most widely used definition of OA. In short, its core is that OA means unrestricted online access to articles published in academic periodicals. The concept of open access includes a range of components such as readability, copyright, reuse, online publishing, and machine readability. Open access can greatly accelerate scientific discovery and encourage scientific innovation. Further, the advantages of OA have been confirmed in various empirical studies. OA breaks with the traditional subscription model of academic publishing, and OA articles usually receive more citations and more recognition and ultimately have a bigger impact in academia (Koler-Povh et al., 2014; Piwowar et al., 2018; Sotudeh & Estakhr, 2018; Wang et al., 2015). Thus, an increasing number of researchers have turned to OA publishing to make their results more accessible, with the prospect of higher and faster influence (Antelman, 2017; Kristin, 2004). Online issues generally appear ahead of their print versions, and the print versions follow after an average delay of nearly three months (Das & Das, 2006). Therefore, authors can gain significantly from informally posting their manuscripts in online repositories (Amat, 2008).
In addition, the delay between online and print publication may artificially raise a journal's impact factor, and this inflation is particularly large for journals with longer publication delays (Tort et al., 2012).

The boom in open access has given altmetrics an opportunity to thrive. The flexibility and convenience of open access allow academic achievements to circulate freely across different communication platforms and groups. This lays the groundwork for new metrics based on diverse social media. As a modern network interaction tool, social media has built a valuable bridge between scientists and society. Rainie et al. (2015) surveyed members of the American Association for the Advancement of Science (AAAS). Up to 71% of respondents indicated that their area of expertise would attract some, or even considerable, public interest. Scholarly communication on the internet continues to grow. The framework for evaluating the impact of academic papers therefore needs to break free of the traditional assessment system and take online social media and online academic tools into account. Only then can it reflect the broad and rapid impact of scholarship in this emerging ecosystem. In this context, J. Priem put forward the concept of altmetrics by summarizing previous research results (Priem et al., 2010). As a new evaluation method based on social networks and scientific communication activities, altmetrics provides comprehensive and timely feedback on the influence of research works. It does so by tracking activities (collecting, sharing, reposting, commenting, citing, etc.) around all forms of scientific output. Compared with traditional evaluation methods, altmetrics not only enriches the notion of research impact but also adds new dimensions to its evaluation. Studies have shown a strong positive correlation between altmetrics indexes and article visits (Wang et al., 2016, 2017).
It is worth noting that the relationship between altmetrics indexes and citation metrics remains somewhat uncertain. Even so, altmetrics can capture aspects of social influence that differ from the reviewer's perspective (Bornmann, 2014). Moreover, social media metrics are usually deemed positive indicators of public interest in, and attention to, scientific research (Haustein et al., 2015).

Previous studies have mainly explored two topics. The first is the influence of publication delays on citations, literature aging, and journal impact factors. The second is the set of factors affecting the length of publication time lags. Other studies have investigated the size of publication delays in different disciplines. However, few studies have explored the effect of publication delays on article metrics in the internet social context. Therefore, the impact of publication delays on traditional metrics (citations) and social network metrics (usage, sharing and discussions, and collections) warrants investigation.

Methodology

Using online metrics data from the open access journal platform PLOS, we conduct Spearman correlation analyses between publication delays and a series of article indexes. The aim is to understand how publication delays influence the measurement indicators of journal articles in a social network environment. Statistical significance is reported at two levels: ** indicates p < 0.01 and * indicates p < 0.05. We also discuss how publication delays, and their correlations with evaluation indicators, differ across subject areas.
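
A minimal sketch of the correlation step follows. It is written in pure Python so that it has no dependencies, and the text does not say which implementation the authors used. Spearman's rho is computed as the Pearson correlation of average ranks, and the ** / * marks used above are attached. The p-value is an assumption of this sketch. It uses the large-sample normal approximation z = rho·sqrt(n − 1), whereas libraries such as SciPy's `scipy.stats.spearmanr` use a t-distribution. The two agree closely for weak correlations at large sample sizes. All function names are hypothetical.

```python
import math


def average_ranks(values):
    """Rank values 1..n; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # i and j are 0-based positions
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)  # undefined if either variable is constant


def approx_p_value(rho, n):
    """Assumption: two-sided p from the normal approximation z = rho * sqrt(n - 1)."""
    z = abs(rho) * math.sqrt(n - 1)
    return math.erfc(z / math.sqrt(2))


def stars(p):
    """Significance marks used in the text: ** for p < 0.01, * for p < 0.05."""
    if p < 0.01:
        return "**"
    if p < 0.05:
        return "*"
    return ""
```

For example, `spearman([120, 95, 200, 60, 150], [10, 14, 3, 25, 8])` returns −1 up to floating-point rounding, because the two hypothetical lists are in exactly opposite rank order.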

To cover all stages of the publication process, we partition the publication delay of articles into three types:

- acceptance delay: the time span from submission to acceptance;
- technical delay: the time span from acceptance to publication;
- overall delay: the time span from submission to publication.
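
The three delays are plain date differences. The sketch below assumes that the received, accepted, and published dates have already been parsed into `datetime.date` objects. The function name and the example dates are hypothetical. By construction, the overall delay equals the sum of the acceptance and technical delays.

```python
from datetime import date


def publication_delays(received: date, accepted: date, published: date):
    """Return (acceptance, technical, overall) delays in days."""
    acceptance = (accepted - received).days  # submission -> acceptance
    technical = (published - accepted).days  # acceptance -> publication
    overall = (published - received).days    # submission -> publication
    return acceptance, technical, overall


# Hypothetical example dates for one article.
print(publication_delays(date(2018, 1, 10), date(2018, 6, 1), date(2018, 6, 22)))
# prints (142, 21, 163)
```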

Launched by the Public Library of Science in December 2006, PLOS is a highly influential open access journal platform that covers dozens of subject areas, from the natural sciences to the social sciences. PLOS supports full-text downloads of papers. Since March 2009, it has also offered comprehensive article-level metrics (ALM), whose classification is shown in Table 1.

Citations are the number of times a publication has been cited by other publications, a relative metric compiled from multiple sources. The original citation data in this article come from Dimensions, a large linked research information dataset. Citation counts in Dimensions differ from those in Google Scholar, Scopus, and Web of Science and are not directly comparable with other databases. Dimensions also captures references and links from sources beyond classic publication-based citations. In Dimensions, citation relations are either harvested from existing databases such as PubMed Central, Crossref, and Open Citation Data, or extracted directly from the full-text records provided by content publishers. These relations are not limited to journal references; they also include acknowledgments and citations from books, conference proceedings, patents, grants, and clinical trials. Therefore, we use the citation frequencies in Dimensions as the raw citation data of the sample literature.

By contrast, usage, sharing and discussions, and collections are cumulative metrics reflecting social attention. Each is summed across sources such as Mendeley, CiteULike, Facebook, Twitter, Wikipedia, and Reddit. We used the online data in PLOS, powered by Altmetric, as the source dataset for usage, sharing and discussions, and collections.

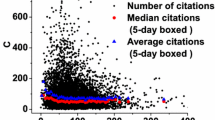

For the empirical sample, at the beginning of 2022 we retrieved from the PLOS platform the articles published in 2015–2019 in six subject areas:

- natural sciences: Biology and life sciences, Chemistry, and Engineering and technology;
- social sciences: Economics, Psychology, and Sociology.

We then ranked the articles in these six subject areas in descending order of citations. Within each field, we divided the articles into four groups by citation quartiles and took the top 200 papers by citations in each group. This yielded a total of 24,000 freely accessible articles. Using a custom Python script, we collected the titles, keywords, and other bibliographic information of these papers, together with their received, accepted, and published dates. We also collected the numbers of citations, usage, sharing and discussions, and collections of these articles (Appendix Fig. 7).
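
The grouping-and-sampling step can be sketched as follows. This is an illustration under stated assumptions, not the authors' script:

- each article is a hypothetical `(article_id, citations)` pair;
- the descending ranking is cut into four equal-size slices to approximate citation quartiles, and ties at slice boundaries are broken by sort order;
- the top 200 articles of each slice are kept.

Labels follow the RQ1 (lowest) to RQ4 (highest) convention used later in the paper. Six subject areas × four groups × 200 papers gives 4,800 articles. The stated total of 24,000 equals 5 × 6 × 4 × 200, which would be consistent with sampling separately for each publication year from 2015 to 2019, although the text does not say so explicitly.

```python
def sample_top_by_quartile(articles, per_group=200):
    """Split articles into four citation groups and keep the top `per_group` of each.

    articles: list of (article_id, citations) pairs (hypothetical input format).
    Returns a dict mapping "RQ1".."RQ4" to article ids; RQ4 holds the most cited.
    Assumption: equal-size slices of the ranking stand in for citation quartiles.
    """
    ranked = sorted(articles, key=lambda a: a[1], reverse=True)
    n = len(ranked)
    groups = {}
    for g in range(4):
        # Slice g of the descending ranking approximates one citation quartile.
        start, end = g * n // 4, (g + 1) * n // 4
        top = ranked[start:end][:per_group]  # slice is already sorted, most cited first
        groups[f"RQ{4 - g}"] = [article_id for article_id, _ in top]
    return groups
```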

Based on the online metrics data provided by PLOS, we constructed time-series-based indexes and explored their correlations with acceptance delay, technical delay, and overall delay. To acquire the time-series metrics data, we also used Python to obtain, for the sample literature from the publication year to 2021:

- the yearly citation frequencies (Appendix Table 10);
- the monthly usage (Appendix Table 11);
- the monthly sharing and discussions (Appendix Table 12).

Construction of time-series indexes

M_k denotes the observed value of an indicator in the kth window period after an article is published. Citation indicators are measured in years, whereas usage and sharing and discussions indicators are measured in months. We built our time-series indexes as follows.

Immediacy Index (MI). MI measures the size of an indicator within a short period after a paper is published and thus represents the promptness of this indicator. The 2019 articles in this study had only two years of citations at the time of data collection. We therefore computed the MI for citations as the average of the citations received in the two years after an article's publication year:

Papers gain views, downloads, reposts, and discussions as soon as they are published, so usage and sharing and discussions metrics respond faster than citations. Therefore, we defined the MI for usage and for sharing and discussions as the average of the corresponding metric values over the first three months after publication, including the month of publication:
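
The MI formulas did not survive in this version of the text, so the following sketch follows the verbal definitions above. It assumes that index 0 of each series is the publication period: the publication year for citations, and the publication month for usage and sharing and discussions. Under that assumption, the citation MI averages indices 1 and 2, and the monthly MI averages indices 0 to 2. Function names are hypothetical.

```python
def immediacy_index(series, start, length):
    """Mean of `length` consecutive values beginning at index `start`.

    Assumption: series[0] is the publication period (year or month).
    """
    window = series[start:start + length]
    if len(window) < length:
        raise ValueError("series is too short for the requested window")
    return sum(window) / length


def citation_mi(yearly_citations):
    # The two years after the publication year: indices 1 and 2.
    return immediacy_index(yearly_citations, start=1, length=2)


def monthly_mi(monthly_counts):
    # Publication month plus the next two months: indices 0, 1, and 2.
    return immediacy_index(monthly_counts, start=0, length=3)
```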

Volatility Index (VI). The more dispersed the metric data, the lower the stability and the greater the volatility of an indicator. Therefore, we used the coefficient of variation (CV), the ratio of the standard deviation to the mean, to represent the degree of fluctuation of metric values. To keep the formula meaningful when the mean equals 0, we added a constant β to the denominator:

$$VI = \frac{s}{\bar{X} + \beta}$$

where $s$ denotes the standard deviation of the metric values and $\bar{X}$ denotes their mean.
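
A short sketch of the VI computation follows. It assumes that the sample standard deviation is used for s and that β takes a small hypothetical default of 1e-6. The text states neither the value of β nor which standard deviation estimator was applied.

```python
import statistics


def volatility_index(series, beta=1e-6):
    """Coefficient of variation with beta added to the denominator: s / (mean + beta).

    Assumptions: sample standard deviation (n - 1 denominator) and a hypothetical
    default beta of 1e-6. At least two observations are required.
    """
    s = statistics.stdev(series)
    mean = statistics.fmean(series)
    return s / (mean + beta)
```

With an all-zero series, both s and the mean are 0, and the β term turns what would be 0/0 into 0.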

Growing Trend Index (GTI). Trend analysis is a quantitative forecasting method that predicts future tendencies by identifying regular changes in historical data. We used the least-squares slope formula to evaluate the growth trend of metrics over the statistical time nodes within a specific time frame. GTI is computed as follows:

The indicators use different time units, and usage and sharing and discussions respond quickly. For the GTI of usage and of sharing and discussions, we therefore set T in formula (4) to the 5 months before 2022. Because publication years differ, the T for the GTI of citations depends on the publication year:

- 2015–2017: the 5 years before 2022;
- 2018: the 4 years before 2022;
- 2019: the 3 years before 2022.
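
The least-squares slope behind GTI, together with the citation window T described above, can be sketched as follows. The sketch assumes that the series ends with the last period before 2022 and regresses the last T observations on t = 1, ..., T. `citation_T` is a hypothetical helper that reproduces the stated choice of 5, 4, and 3 years.

```python
def growing_trend_index(series, T):
    """Least-squares slope of the last T observations regressed on t = 1..T.

    Assumption: the series ends with the last period before 2022.
    """
    y = series[-T:]
    if T < 2 or len(y) != T:
        raise ValueError("need T >= 2 and at least T observations")
    t = range(1, T + 1)
    sum_t = sum(t)
    sum_y = sum(y)
    sum_ty = sum(ti * yi for ti, yi in zip(t, y))
    sum_tt = sum(ti * ti for ti in t)
    return (T * sum_ty - sum_t * sum_y) / (T * sum_tt - sum_t ** 2)


def citation_T(pub_year):
    """Hypothetical helper: 5 years for 2015-2017, 4 for 2018, 3 for 2019."""
    return min(5, 2022 - pub_year)
```

For example, yearly citations that rise by exactly one per year over the window give a GTI of 1.0.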

Peak Velocity Index (PVI). PVI reflects both the time an indicator takes to reach its maximum value and the size of that peak. The following symbols are used:

- M_max: the maximum value of an indicator over the life cycle of a paper;
- T_max: the time at which the indicator peaks;
- T_0: the publication time of the paper, measured in years for citations and in months for usage and sharing and discussions.

We computed PVI as follows:
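
The PVI formula is also missing from this text. The sketch below assumes one plausible form consistent with the description: PVI = M_max / (T_max − T_0 + 1), i.e., the peak value divided by the number of periods elapsed up to and including the peak. The +1 keeps the ratio finite when the peak falls in the publication period. It is an assumption of this sketch, not a documented detail.

```python
def peak_velocity_index(series):
    """Assumed form: M_max / (T_max - T_0 + 1), with series[0] at T_0.

    The +1 is a hypothetical choice that avoids division by zero when the
    indicator peaks in the publication period itself.
    """
    m_max = max(series)
    t_max = series.index(m_max)  # periods elapsed from T_0 to the first peak
    return m_max / (t_max + 1)
```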

Results analysis

Three types of publication delays of articles

We calculated the acceptance delay, technical delay, and overall delay (in days) of articles published from 2015 to 2019 from their received, accepted, and published dates. We summarized these delays in boxplots, shown in Fig. 1. In each box, the upper edge represents the third quartile (Q3), the middle line the median, and the lower edge the first quartile (Q1). Fig. 1 shows the following:

- Acceptance delay: Q3 increases every year from 2015 to 2018, indicating that the time from the editor's receipt of a manuscript to its acceptance was considerably extended. However, acceptance delay decreased in 2019.
- Technical delay: Q3 is below 50 days in every year from 2015 to 2019. The 2015 technical delay has the highest median (38 days), whereas the medians for 2016 to 2019 range from 21 to 22 days. This indicates that publishers became more efficient in processing accepted articles over time.
- Overall delay: the median decreases from 2015 to 2016 and then increases from 2016 to 2018, peaking at 190 days in 2018. It decreases again to 171.5 days in 2019.

Using the quartile method, we divided all sample articles into four groups by citations, from low to high:

- RQ1: from the minimum to the first quartile, Q1;
- RQ2: from Q1 to the second quartile, i.e., the median, Q2;
- RQ3: from Q2 to Q3;
- RQ4: from Q3 to the maximum.
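
Assigning articles to RQ1 to RQ4 can be sketched with the standard library's `statistics.quantiles`. Two details are assumptions. First, the quartile cut points use that function's default "exclusive" method. Second, a value equal to a cut point is placed in the lower group.

```python
import statistics


def quartile_groups(values):
    """Label each value RQ1..RQ4 by the quartile range it falls in (low to high).

    Assumptions: statistics.quantiles' default "exclusive" method, and values
    equal to a cut point go to the lower group.
    """
    q1, q2, q3 = statistics.quantiles(values, n=4)
    labels = []
    for v in values:
        if v <= q1:
            labels.append("RQ1")
        elif v <= q2:
            labels.append("RQ2")
        elif v <= q3:
            labels.append("RQ3")
        else:
            labels.append("RQ4")
    return labels
```

The same function applies unchanged when the grouping variable is usage, sharing and discussions, or collections instead of citations.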

We analyzed the distributions of acceptance delay, technical delay, and overall delay for articles in the different citation ranges, as shown in Fig. 2.

- Acceptance delay: only articles in RQ4 have an upper quartile below 200 days; for the other citation groups (RQ1, RQ2, RQ3), the upper quartiles exceed 200 days.
- Technical delay: as a whole, this delay does not differ significantly across citation groups. However, papers in RQ4 have a larger median technical delay (27 days) than those in the other groups (22 to 23 days). In addition, the maximum technical delay of articles in RQ2, RQ3, and RQ4 is below 400 days, whereas that in RQ1 exceeds 600 days. The upper quartiles in all groups are below 50 days, suggesting that most papers are published within two months of acceptance.
- Overall delay: as the number of citations increases, the upper quartile gradually decreases, showing that most highly cited articles have relatively short publication time lags.

The above analysis (Fig. 2) reveals that more frequently cited articles have relatively shorter acceptance and overall delays than less frequently cited ones. Technical delay, by contrast, shows no remarkable variation across citation groups.

Differences in the three types of delays across six subject areas

To determine whether the three types of delays differ across disciplines, we calculated descriptive statistics of acceptance delay, technical delay, and overall delay for the literature published from 2015 to 2019 (Table 2). The statistics are the maximum, minimum, median, interquartile range (IQR), mean, and standard deviation (STD). The six subject areas are Biology and life sciences, Chemistry, Engineering and technology, Economics, Psychology, and Sociology.
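
The descriptive statistics reported for each discipline can be sketched with the standard library. Two choices are assumptions, because the text specifies neither. The quantiles use the "inclusive" method, which is linear interpolation as in NumPy's default percentile. The STD is the sample standard deviation. The function name is hypothetical.

```python
import statistics


def delay_summary(delays):
    """Max, min, median, IQR, mean, and STD of a list of delays in days.

    Assumptions: "inclusive" (linear-interpolation) quartiles and the sample
    standard deviation; at least two delays are required.
    """
    q1, median, q3 = statistics.quantiles(delays, n=4, method="inclusive")
    return {
        "max": max(delays),
        "min": min(delays),
        "median": median,
        "IQR": q3 - q1,
        "mean": statistics.fmean(delays),
        "STD": statistics.stdev(delays),  # sample standard deviation
    }
```

Applied per discipline (for example, to a dict mapping each subject area to its list of acceptance delays), this yields one row of a table like Table 2.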

As shown in Table 2, the longest acceptance delays in every discipline except Engineering and technology exceed 33 months. The minimum acceptance delay in Biology and life sciences is zero. This means that at least one article in this subject was accepted on the day it was submitted, which does not occur in the other five disciplines. Moreover, the median, IQR, mean, and STD of acceptance delay are all larger for Economics, Psychology, and Sociology than for Biology and life sciences, Chemistry, and Engineering and technology. On the whole, therefore, social science papers take longer to review before acceptance, and acceptance delays vary less in the natural sciences than in the social sciences.

Regarding technical delay, only articles in Economics have technical delays exceeding 20 months. Further, the minimum technical delays for Engineering and technology, Psychology, and Sociology are 4 days shorter than those for Biology and life sciences, Chemistry, and Economics. Overall, the median, IQR, mean, and STD of technical delay show no striking differences across the six disciplines. This suggests that technical delay varies less across disciplines than acceptance delay does. It also indirectly indicates that the length of the overall delay is mainly determined by the period between submission and acceptance.

For overall delay, as with acceptance delay, the maximum for Engineering and technology is smaller than for the other five disciplines, all of which exceed 34 months. Besides, Biology and life sciences is the only discipline with a single-digit minimum overall delay. The median, IQR, mean, and STD of overall delay are smaller for Biology and life sciences, Chemistry, and Engineering and technology than for Economics, Psychology, and Sociology. In particular, the median overall delays of the three natural science disciplines are below 6 months, and their mean overall delays are below 7 months.

In sum, most natural science articles in this study have shorter acceptance and overall delays than social science articles. The variances of these two delays are also smaller in the natural sciences than in the social sciences. These results (Table 2) suggest that the nature of a discipline shapes differences in publication time lag.

Correlations between the three types of delays and a series of measurement indicators

We conducted Spearman correlation analyses to explore the relationships of acceptance delay, technical delay, and overall delay with the measurement indicators of citations, usage, sharing and discussions, and collections. Table 3 reports the correlation coefficients and their confidence intervals. In what follows, TC denotes total counts, C citations, U usage, S sharing and discussions, and Co collections.
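
The text does not say how the confidence intervals in Table 3 were obtained. One common approach, shown here as an assumption, is the Fisher z-transformation: z = atanh(r) with standard error 1/sqrt(n − 3), and the interval is mapped back with tanh. For Spearman's rho, some authors inflate the variance slightly (e.g., 1.06/(n − 3)); this sketch uses the plain form. The function name is hypothetical.

```python
import math
from statistics import NormalDist


def fisher_ci(r, n, level=0.95):
    """Approximate confidence interval for a correlation via Fisher's z-transform.

    Assumption: plain standard error 1 / sqrt(n - 3), without any Spearman-specific
    variance correction.
    """
    z = math.atanh(r)                              # undefined for |r| = 1
    se = 1 / math.sqrt(n - 3)
    crit = NormalDist().inv_cdf(0.5 + level / 2)   # about 1.96 for a 95% interval
    return math.tanh(z - crit * se), math.tanh(z + crit * se)
```

For example, with the reported r = 0.151 and a hypothetical n of 24,000, the 95% interval is approximately 0.139 to 0.163.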

Table 3 shows the following for citation-related indicators:

- TC and MI: both have weak positive correlations with technical delay (r = 0.151 and r = 0.075, respectively). They have weak negative correlations with acceptance delay (r = −0.100 and r = −0.077, respectively) and overall delay (r = −0.066 and r = −0.058, respectively). Thus, a shorter acceptance or overall delay corresponds to a greater TC and MI of citations, whereas a longer technical delay corresponds to slightly greater values.
- VI: it is weakly positively correlated with acceptance delay (r = 0.084) and overall delay (r = 0.053) but negatively correlated with technical delay (r = −0.123). A shorter acceptance or overall delay therefore corresponds to a lower VI of citations, and a longer wait between acceptance and publication also corresponds to a slightly lower VI.
- GTI: it has a weak positive correlation with acceptance delay (r = 0.030) but a weak negative correlation with technical delay (r = −0.056). A longer review time thus corresponds to a slightly higher GTI of citations at the statistical time node, whereas a longer period between acceptance and publication has the opposite association.
- PVI: it has weak negative correlations with acceptance delay (r = −0.068) and overall delay (r = −0.054), meaning that a shorter acceptance or total publication time corresponds to a larger PVI of citations.

For usage-related indicators:

- TC: it is weakly negatively correlated with acceptance delay (r = −0.017), so a shorter acceptance delay corresponds to a slightly higher TC of usage. However, the TC of usage has a weak positive correlation with technical delay (r = 0.130), and its correlation with overall delay is insignificant.
- MI: it has weak negative correlations with acceptance delay (r = −0.049) and overall delay (r = −0.044). Given the definition of MI, a shorter time to acceptance or publication corresponds to slightly more usage in the first three months after publication.
- VI, PVI, and GTI: all three types of delays have weak negative correlations with the VI and PVI of usage and weak positive correlations with its GTI. A shorter time at each stage from submission to publication therefore corresponds to a higher VI and PVI of usage but a lower GTI of usage at the statistical time node.

For the sharing and discussions indicators, Table 3 shows that the TC, MI, and PVI are weakly positively correlated with all three types of delays. A longer time lag during the publication process therefore corresponds to:

- slightly more sharing and discussions overall;
- more sharing and discussions in the first three months after publication;
- a faster rise to their peak.

This result may be related to the fact that some manuscripts are opened for review after editors receive them. Such manuscripts become available to the public earlier but face long delays before their official publication. Furthermore, a shorter acceptance or final publication time corresponds to a slightly higher VI of sharing and discussions. A weak negative correlation is also observed between the GTI of sharing and discussions and overall delay (r = −0.013), suggesting that a shorter total delay corresponds to a slightly higher GTI of sharing and discussions.

The number of collections reflects readers' interest in an article and its potential utility. Table 3 shows that the TC of collections has weak positive correlations with all three types of delays. A longer time between submission and final publication thus corresponds to a larger number of collections.

Interestingly, the confidence intervals for acceptance delay and overall delay overlap for some indicators of citations, usage, sharing and discussions, and collections. This suggests that acceptance delay and overall delay correlate similarly with some measurement indicators. Compared with technical delay, acceptance delay therefore appears to play the dominant role in determining the length of overall delay.

Correlations between the three types of delays and the measurement indicators of articles grouped by metric quartiles

We use the quartile method adopted in the section "Three types of publication delays of articles" to divide the sample into four groups, RQ1, RQ2, RQ3, and RQ4. The grouping is done separately by the numbers of citations, usage, sharing and discussions, and collections, so that we can see how the indicators of articles at different metric levels relate to these delays. Table 4 reports the medians and value ranges of metrics for the groups defined by each metric's quartiles. As Table 4 shows, 'Usage' has by far the largest median and range of values in each group. By comparison, 'Sharing and discussions' has the smallest median and range of values among the four metrics, followed by 'Citations' and 'Collections'. In the social network environment, therefore, usage has a quantitative advantage over the other three metrics. In addition, Figs. 3, 4, 5, and 6 show the Spearman correlation coefficients between the three types of delays and the various indicators. Each figure covers articles grouped by quartiles of one metric: citations, usage, sharing and discussions, and collections, respectively. Solid symbols indicate statistical significance at the 0.01 or 0.05 level, whereas hollow symbols indicate insignificant correlations.

As shown in Fig. 3a, the TC of citations is weakly negatively correlated with acceptance delay in all citation-quartile groups except RQ2, with the strongest negative correlation in RQ1 (r = −0.087). Figure 3b shows a weak negative correlation between the TC of citations and technical delay in RQ1 to RQ3 but a weak positive correlation in RQ4. The TC of citations is also weakly negatively correlated with overall delay in all groups, again most strongly in RQ1 (r = −0.088). From Fig. 3, we can see that: (i) for most articles, shortening the review or total publication time can slightly increase citations, and this effect is more pronounced for articles with few citations; (ii) a shorter technical delay slightly increases citations for less-cited articles but slightly reduces them for highly cited articles.

Regarding the MI and PVI of citations, Fig. 3a shows that both are weakly negatively correlated with acceptance delay in RQ1. Figure 3b shows that they are weakly negatively correlated with technical delay in all citation-quartile groups. In Fig. 3c, the MI and PVI of citations have a significant, weak negative correlation with overall delay only in RQ1. This suggests that: (i) for articles with few citations, shortening the review time can help them receive more citations within two years after publication and reach their peak number of citations within a somewhat shorter period; (ii) shortening the time from acceptance to publication can slightly increase the number of citations within two years after publication and, to a lesser extent, reduce the time needed to reach the maximum number of citations; (iii) for papers with few citations, a shorter total publication time can slightly increase citation frequency within two years after publication and reduce the time needed to reach the peak number of citations.

Figure 3a shows that the VI of citations has a significant, weak positive correlation with acceptance delay in all citation-quartile groups except RQ2, which indicates that, for most articles, a more efficient review phase slightly reduces the VI of citations. Figure 3b shows that the VI of citations is weakly positively correlated with technical delay in RQ1 but weakly negatively correlated in RQ3 and RQ4, with the strongest correlation in RQ4 (r = −0.194). This suggests that, for articles with few citations, a longer technical delay after acceptance increases the fluctuation of their citation counts, whereas for highly cited articles it improves the stability of their citation frequencies across time windows.

From Figs. 3a and c, the GTI of citations is weakly positively correlated with acceptance delay and overall delay in all citation-quartile groups except RQ1. In Fig. 3b, by contrast, the correlation between the GTI of citations and technical delay is weakly negative in every group and strengthens as the number of citations increases. Therefore, for articles with relatively many citations, a longer acceptance or total publication time slightly increases their GTI at the statistical time point, whereas a shorter technical delay slightly increases the GTI of citations regardless of citation frequency.

For the usage-related indicators, Fig. 4a shows that the TC of usage has significant, weak negative correlations with acceptance delay (r = −0.050) and overall delay (r = −0.042) in RQ1, which suggests that, for articles with very low usage, shortening the acceptance or publication time can slightly increase their usage. Meanwhile, Fig. 4b shows that the TC of usage is weakly positively correlated with technical delay in RQ4 (r = 0.084), meaning that, for heavily used articles, a longer period between acceptance and publication corresponds to greater usage.

The MI and PVI of usage show similar patterns of correlation with the three types of delays across the usage-quartile groups. Their correlations with acceptance delay weaken in higher usage quartiles but remain weakly negative. From RQ1 to RQ3, their correlations with technical delay and overall delay also weaken but remain weakly negative; in RQ4, however, the MI and PVI of usage are significantly positively correlated with technical delay. Consequently, shortening the technical delay or total publication time of articles with low usage can increase their MI and PVI of usage, whereas the opposite holds for heavily used articles.

Figures 4a and c show that, overall, the negative correlations of the VI of usage with acceptance delay and overall delay weaken as usage increases. Figure 4b shows that the correlation between the VI of usage and technical delay is significantly negative in RQ1 and RQ2 but significantly positive in RQ4, suggesting that a shorter technical delay increases the fluctuation of usage for articles with low usage but reduces it for heavily used articles. Additionally, the GTI of usage is weakly positively correlated with acceptance and overall delay in all usage-quartile groups except RQ2, indicating that, for most articles, a longer acceptance or total publication period increases the GTI of usage at the statistical time point.

For the indicators related to sharing and discussions, Fig. 5a shows a weak negative correlation between the TC of sharing and discussions and acceptance delay in RQ1, RQ2, and RQ4, and the correlation coefficient gradually decreases as sharing and discussions increase. In Fig. 5b, the TC of sharing and discussions is significantly correlated with technical delay in all groups except RQ2; this correlation is negative in RQ1 but positive in RQ3 and RQ4. As shown in Fig. 5c, the TC of sharing and discussions is weakly negatively correlated with overall delay in RQ1 and RQ2. Thus, for articles with little sharing and discussion, shortening the technical delay or the final publication time can slightly increase their sharing and discussions.

In Fig. 5b, the MI of sharing and discussions is slightly negatively correlated with technical delay from RQ1 to RQ3, meaning that, for less popular papers, shortening the technical delay can slightly increase their immediate sharing and discussions. Further, the VI of sharing and discussions is weakly negatively correlated with acceptance delay and overall delay from RQ1 to RQ3, and these correlations weaken in higher sharing-and-discussion quartiles. Therefore, shortening the review cycle and the total publication lag slightly increases the instability of an article's monthly volume of sharing and discussions, but this effect diminishes as the amount of sharing and discussion increases.

Figures 5a and c reveal that the PVI of sharing and discussions is weakly positively correlated with both acceptance delay and overall delay in RQ1. Consequently, for articles that attract little sharing and discussion, extending the review cycle or total publication time may improve their quality and thus help them reach their peak sharing and discussions on the web within a shorter period.

Another interesting pattern in Fig. 5b is that the TC, MI, and PVI of sharing and discussions are slightly positively correlated with technical delay in RQ4. That is, for heavily discussed articles, a longer technical delay can slightly increase their TC, MI, and PVI of sharing and discussions. This may be because the inherent popularity of these articles, combined with the longer time they are held in the technical stage, generates a larger burst of sharing and discussion as soon as they are published.

As seen in Fig. 6, the TC of collections is weakly negatively correlated with acceptance delay and overall delay in RQ1 and RQ4 but weakly positively correlated with them in RQ2. Therefore, for articles with very few or very many collections, reducing the review time or total publication lag can slightly increase their number of collections. Moreover, reducing the technical delay can slightly increase the collections of articles with few collections but reduce those of articles with many collections.

Correlations between the measurement indicators and the three types of delays in different disciplines

We performed Spearman correlation analyses between each indicator and the three types of delays for each of the six subject areas considered in this study; the results are presented in Tables 5, 6, and 7.
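Tables of this kind usually flag each coefficient by significance level. The sketch below assumes the common convention that `**` marks p < 0.01 and `*` marks p < 0.05 (the text reports values such as r = −0.017**), and it uses the large-sample normal approximation z = r·√(n − 1) for the null distribution of Spearman's rho. Statistical packages often use a t-approximation instead, which gives nearly identical p-values for large n. The function names and example inputs are hypothetical.

```python
import math
from statistics import NormalDist


def spearman_p_value(r: float, n: int) -> float:
    """Two-sided p-value for H0: rho = 0 (large-sample normal approximation).

    Under H0, Spearman's rho has variance 1 / (n - 1), so r * sqrt(n - 1)
    is approximately standard normal when n is large.
    """
    z = abs(r) * math.sqrt(n - 1)
    return 2 * (1 - NormalDist().cdf(z))


def significance_stars(p: float) -> str:
    """Assumed convention: '**' for p < 0.01, '*' for p < 0.05, '' otherwise."""
    if p < 0.01:
        return "**"
    if p < 0.05:
        return "*"
    return ""


def format_by_discipline(results: dict[str, tuple[float, int]]) -> dict[str, str]:
    """Map {discipline: (rho, n)} to table cells such as '-0.017**'."""
    return {
        name: f"{r:.3f}{significance_stars(spearman_p_value(r, n))}"
        for name, (r, n) in results.items()
    }


# Hypothetical coefficients and group sizes, not values from Tables 5-7.
print(format_by_discipline({"A": (0.3, 101), "B": (0.2, 101), "C": (0.1, 101)}))
# {'A': '0.300**', 'B': '0.200*', 'C': '0.100'}
```

Because the test statistic grows with √(n − 1), even very weak coefficients become significant in large disciplines, which is why many of the correlations discussed here are significant yet small.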

As shown in Table 5, the correlation between each measurement indicator and acceptance delay varies with the nature of the discipline. For instance, the TC, MI, and PVI of citations are more strongly correlated with acceptance delay for articles in the social sciences than for those in the natural sciences, and the VI of citations is positively correlated with acceptance delay in all six disciplines. Interestingly, the GTI of citations is weakly positively correlated with acceptance delay for articles in the natural sciences but not for those in the social sciences. Further, the TC of usage is negatively correlated with acceptance delay for articles in all disciplines except Biology and life sciences, Chemistry, and Psychology. The MI, VI, and PVI of usage have stronger negative correlations with acceptance delay for papers in the social sciences than for those in the natural sciences. Only for papers in Engineering and technology is the GTI of usage positively correlated, and the VI of sharing and discussions negatively correlated, with acceptance delay. Moreover, the correlations of acceptance delay with the TC, MI, and PVI of sharing and discussions are weakly positive for articles in the natural sciences but negative or nonsignificant for articles in the social sciences. We also observed a significant positive correlation between acceptance delay and the TC of collections for articles in Biology and life sciences, Chemistry, and Psychology, but not in the other disciplines.

Table 6 shows a significant, weak positive correlation between the TC of citations and technical delay for articles in all six disciplines. Technical delay is also weakly positively correlated with the MI and PVI of citations in all subject areas except Economics. The MI and VI of citations for articles in Biology and life sciences have the highest correlation coefficients with technical delay, indicating that changes in technical delay have a relatively stronger association with these indicators for Biology and life sciences articles. However, the GTI of citations is weakly negatively correlated with technical delay for papers in all disciplines except Biology and life sciences, where no significant correlation is observed.

For the usage-related indicators, the TC of usage is significantly positively correlated with technical delay for articles in all six subject areas. Further, for articles in the natural sciences and Economics, a shorter technical delay corresponds to a slightly greater VI of usage. Moreover, in all subject areas other than Psychology, reducing the technical delay can slightly increase the PVI of usage.

Except for Economics, the TC of sharing and discussions is positively correlated with technical delay in all disciplines. In all subject areas except Biology and life sciences, the MI of sharing and discussions is weakly positively correlated with technical delay. The VI and PVI of sharing and discussions are significantly correlated with technical delay in all disciplines except Economics, with articles in Sociology showing the strongest correlations: the coefficient of technical delay with the VI of sharing and discussions is −0.194, and with the PVI it is 0.258. This suggests that, for Sociology articles, a longer interval between acceptance and publication is associated with a lower VI but a higher PVI of sharing and discussions. In addition, the TC of collections is weakly positively correlated with technical delay in all subject areas.

As seen in Table 7, the TC of citations is weakly negatively correlated with overall delay for articles in all disciplines other than Biology and life sciences and Chemistry. The MI of citations shows no significant correlation with overall delay for articles in Biology and life sciences, a weak positive correlation for articles in Chemistry, and a negative correlation for articles in the other disciplines. Moreover, the VI of citations has no significant correlation with overall delay for articles in Biology and life sciences and Chemistry, but a significant, weak positive correlation for articles in Engineering and technology and the social sciences. Furthermore, the GTI of citations is weakly positively correlated with overall delay for Engineering and technology articles, and reducing the total publication time can increase the PVI of citations for articles in the social sciences.

For articles in Biology and life sciences and Chemistry, a shorter overall delay is associated with a lower TC of usage. By contrast, the TC of usage is negatively correlated with overall delay for Economics and Sociology articles. Further, reducing the overall delay can slightly increase the MI of usage for articles in Engineering and technology, Economics, and Sociology. The VI of usage has no significant correlation with overall delay for articles in Chemistry and Engineering and technology, but a significant, weak negative correlation for articles in the other disciplines. Furthermore, the GTI of usage is weakly positively correlated with overall delay for papers in Biology and life sciences, Engineering and technology, and Psychology. Additionally, a shorter overall delay can help articles in Economics and Sociology obtain a slightly larger PVI of usage.

For Economics articles, none of the indicators of sharing and discussions or collections is significantly correlated with overall delay. The TC, MI, and PVI of sharing and discussions are weakly positively correlated with overall delay for articles in the natural sciences and Psychology and weakly negatively correlated for Sociology articles. The VI of sharing and discussions is weakly negatively correlated with overall delay for articles in Engineering and technology. Additionally, the TC of collections is weakly positively correlated with overall delay for articles in the natural sciences and Psychology, but not for those in Economics and Sociology.

Effect of the length of the three types of delays on the measurement indicators

To explore how the length of each type of delay affects the measurement indicators of articles in the social network environment, we define short and long delays as those below and above the median, respectively (144 days for acceptance delay, 23 days for technical delay, and 174 days for overall delay). Each split divides the sample into two independent groups, so we apply the Mann–Whitney U test to compare the distributions of the indicators between articles with short and long delays of each type; the results are reported in Table 8. A p-value below 0.05 is taken as statistically significant, meaning that the corresponding indicator differs significantly between the short-delay and long-delay groups. Table 9 presents the median of each measurement indicator for articles with short and long delays (rounded to two decimal places). For some indicators, the medians of the two groups in Table 9 are equal; even so, a p-value below the significance threshold still indicates a significant difference between the two groups, because the Mann–Whitney U test compares the groups' entire rank distributions rather than their medians alone.
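The procedure described above can be sketched in pure Python as follows. Several details are assumptions, since the paper does not spell them out: articles whose delay equals the median are excluded from both groups, tied values receive average ranks, and the p-value comes from the normal approximation with the standard tie correction but without a continuity correction (some packages apply one, so their Z and p may differ slightly). The function names and toy data are hypothetical.

```python
import math
from statistics import NormalDist, median


def split_by_median(delays, indicator):
    """Split indicator values into short-delay (< median) and long-delay (> median) groups.

    Assumption: articles whose delay equals the median are left out of both groups.
    """
    m = median(delays)
    short = [v for d, v in zip(delays, indicator) if d < m]
    long_ = [v for d, v in zip(delays, indicator) if d > m]
    return short, long_


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test; returns (U for x, Z, p).

    Normal approximation with tie correction, no continuity correction.
    """
    n1, n2 = len(x), len(y)
    values = list(x) + list(y)
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    tie_term = 0  # sum of t**3 - t over blocks of tied values
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        t = j - i + 1
        tie_term += t ** 3 - t
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # average rank for the tied block
        i = j + 1
    n = n1 + n2
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0:  # every value tied: no evidence of a difference
        return u1, 0.0, 1.0
    z = (u1 - mean_u) / math.sqrt(var_u)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return u1, z, p


# Hypothetical toy data: five articles' delays (days) and one indicator.
short, long_ = split_by_median([100, 120, 144, 200, 260], [9, 7, 5, 4, 2])
u, z, p = mann_whitney_u(short, long_)  # u == 4.0: every short-delay value is larger
```

Because U counts how often values in one group exceed values in the other, two groups with equal medians can still differ significantly, which is the situation noted above for Table 9.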

For acceptance delay, Table 8 shows that the TC of citations differs significantly between the groups with short and long acceptance delays, as do the MI, VI, GTI, and PVI of citations. Moreover, the medians of the TC and PVI of citations are larger for articles with a short acceptance delay than for those with a long acceptance delay (the pseudo-medians of the difference for the TC and PVI of citations are 2.00 and 0.069, respectively), which indicates that most articles with a short acceptance delay are cited more often and have a larger PVI of citations. Conversely, the medians of the VI and GTI of citations are smaller for papers with a short acceptance delay (the pseudo-medians of the difference are −0.039 for the VI and −0.1 for the GTI of citations), suggesting that most articles with a short acceptance delay have a lower VI and GTI of citations than those with a long acceptance delay.
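The text does not define how the "pseudo-median of the difference" is computed. A common estimator reported alongside a Mann–Whitney comparison is the Hodges–Lehmann shift estimate, the median of all pairwise differences between the two groups; the sketch below assumes that definition. Software may compute this estimate by a different numerical route for large samples, so values need not match the reported ones exactly. The function name and example data are hypothetical, chosen to show that this estimate can even differ in sign from the difference between the two group medians.

```python
from statistics import median


def hodges_lehmann_shift(x, y):
    """Median of all pairwise differences x_i - y_j (Hodges-Lehmann estimate).

    Builds len(x) * len(y) differences, so this brute-force version suits
    small samples only.
    """
    return median(a - b for a in x for b in y)


# Hypothetical groups: the group medians differ by +1, yet the estimate is -2.
short_delay = [0, 0, 3, 10, 10]
long_delay = [1, 2, 2, 20, 20]
print(median(short_delay) - median(long_delay))       # 1
print(hodges_lehmann_shift(short_delay, long_delay))  # -2
```

The estimate summarizes how far one whole distribution is shifted relative to the other, so it need not agree with a comparison of the two medians alone.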

As Table 8 shows, articles with short and long acceptance delays do not differ significantly in the TC of usage (Z(A) = −1.364, p(A) = 0.173) or the GTI of sharing and discussions (Z(A) = −0.147, p(A) = 0.883). Table 3, however, shows that acceptance delay is weakly negatively correlated with the TC of usage (r = −0.017**), which suggests that shortening the acceptance delay may increase the TC of usage, although the increase is too small to appear as a significant difference between the two groups. The groups with short and long acceptance delays differ significantly in the MI, VI, and PVI of both usage and sharing and discussions, as well as in the GTI of usage. The medians of the MI, VI, and PVI of usage are greater for articles with a short acceptance delay (the pseudo-medians of the difference for the MI, VI, and PVI of usage are 5.00, 0.061, and 0.067, respectively), indicating that these indicators are larger for articles with a short acceptance delay. Furthermore, the median GTI of usage is smaller for articles with a short acceptance delay (the pseudo-median of the difference is −0.20), which means that articles with a long acceptance delay have a relatively larger GTI of usage.

For technical delay, the TC, MI, VI, GTI, and PVI of citations differ significantly between articles with short and long technical delays. The medians of the TC, MI, and PVI of citations are smaller for articles with a short technical delay (the pseudo-medians of the difference for the TC, MI, and PVI of citations are −3.00, −0.50, and −0.095, respectively), which indicates that these indicators are all greater for articles with a long technical delay. However, the medians of the VI and GTI of citations are greater for articles with a short technical delay (the pseudo-medians of the difference are 0.065 and 0.10, respectively).

For the usage-related indicators, papers with short and long technical delays differ significantly in the TC, MI, VI, and GTI of usage. The medians of the TC and GTI of usage are smaller for articles with a short technical delay (the pseudo-medians of the difference for the TC and GTI of usage are −499 and −0.3, respectively), suggesting that these articles also have relatively lower usage and a smaller GTI of usage. Conversely, the medians of the MI and VI of usage are larger for articles with a short technical delay (the pseudo-median of the difference for the VI of usage is 0.013). Interestingly, the pseudo-median of the difference for the MI of usage is −6.33, which is inconsistent in sign with the comparison of the medians of U(MI). Such a discrepancy is possible because a pseudo-median of the difference, typically estimated from pairwise differences between the two groups, need not share the sign of the difference between the two group medians. A further reason may be that, in Table 6, the MI of usage is significantly correlated with technical delay in only three disciplines, positively in two and negatively in one. Even so, the MI of usage of articles with short technical delays still differs significantly from that of articles with long technical delays. For the indicators of sharing and discussions, only the TC, MI, and PVI differ significantly between the groups with short and long technical delays, and their medians are lower for articles with a short technical delay. Hence, the TC, MI, and PVI of sharing and discussions are relatively larger for articles with a longer technical delay.

For overall delay, articles with short and long overall delays differ significantly in the TC, MI, VI, and PVI of citations. The median TC of citations is larger for articles with a short overall delay (the pseudo-median of the difference is 1.00), which indicates that articles with a shorter publication lag are cited more often. By contrast, the median VI of citations is smaller for papers with a short overall delay (the pseudo-median of the difference is −0.022). For the usage-related indicators, the TC, MI, VI, GTI, and PVI of usage all differ significantly between the groups with short and long overall delays. The medians of the TC and GTI of usage are smaller for papers with a short overall delay (the pseudo-medians of the difference are −84 and −0.30, respectively), whereas the medians of the MI, VI, and PVI of usage are higher (the pseudo-medians of the difference are 5.00, 0.049, and 0.093, respectively). In sum, compared with papers with a long overall delay, those with a short overall delay have a lower TC and GTI of usage but a larger MI, VI, and PVI of usage. Additionally, the TC, MI, VI, and PVI of sharing and discussions differ significantly between articles with short and long overall delays. The median TC of sharing and discussions is smaller for papers with short overall delays than for those with long overall delays, whereas the opposite holds for the VI of sharing and discussions.

Regarding the TC of collections, articles with short acceptance, technical, and overall delays differ significantly from those with long delays of the same type. The medians of the TC of collections are smaller for articles with short acceptance, technical, and overall delays than for those with long delays (the pseudo-medians of the difference are −2.00, −11.00, and −4.00 for the three types of delays, respectively), which suggests that articles with long acceptance, technical, and overall delays tend to be collected more often than those with short delays.

asa denotes articles with a short acceptance delay; ala denotes articles with a long acceptance delay; ast denotes articles with a short technical delay; alt denotes articles with a long technical delay; aso denotes articles with a short overall delay; alo denotes articles with a long overall delay.

Discussions and conclusions

Journal articles go through a series of review and layout processes between initial submission and final publication, which can ultimately affect the efficiency of knowledge dissemination. Exploring the relationship between publication delays and evaluation indicators helps publishers schedule the publication of articles appropriately and can enhance the influence of the literature. However, few studies have examined the effect of publication delay on the evaluation indicators of articles in an internet social context. To fill this gap, we constructed a range of time-series indexes and examined how the review period, the layout cycle, and the total publication lag relate to the indicators of citations, usage, sharing and discussions, and collections, in order to understand the impact of delays in the publication process on the measurement indicators of journal articles in the social network environment.

We used the quartile method to group articles published from 2015 to 2019 in six disciplines on the open access journal platform PLOS by citation frequency. The results show that the acceptance delay and overall delay of most papers increased from 2015 to 2018 but began to decrease in 2019. Most articles published in 2015 had a relatively long technical delay, whereas the efficiency of processing articles from acceptance to publication improved from 2016 to 2019. Our analysis of the three types of delays across citation quartiles reveals that articles with relatively many citations have shorter acceptance and overall delays than those with few citations. In addition, the median acceptance and overall delays of papers in Biology and life sciences, Chemistry, and Engineering and technology are lower than those of articles in Economics, Psychology, and Sociology, which suggests that the nature of a discipline influences the delays in the publication process.

The correlations of the three types of delays with the various indicators also vary. For the citation indicators, acceptance delay and overall delay are weakly negatively correlated with the TC, MI, and PVI of citations but weakly positively correlated with the VI of citations; the results for the indicators of sharing and discussions are the exact opposite. Meanwhile, there are significant but weak negative correlations between the GTI of citations and acceptance delay and between the GTI of sharing and discussions and overall delay. Technical delay, in turn, is weakly positively correlated with the TC, MI, and PVI of both citations and sharing and discussions.

In this study, the significant but weak negative relationship between acceptance delay and the number of citations is consistent with the findings of previous studies. For example, based on papers published in Nature, Science, and Cell from 2005 to 2009, Shen et al. (2015) found that articles with a short acceptance delay received more citations than those with a long acceptance delay. Lin et al. (2016) revealed a weak negative correlation between editorial delay and the number of citations received by papers published in Nature, Science, and Physical Review Letters. Furthermore, across all major Covid-19-related journals, Covid-19 research that was reviewed faster received more Scopus citations, and the correlations between review time and citation counts were mostly stronger for Covid-19 articles than for non-Covid-19 articles (Kousha & Thelwall, 2022).

Several factors may have influenced the associations between publication delays and the evaluation indicators. For instance, our dataset is much larger than those of previous studies in terms of both discipline coverage and volume of literature. Although our sample is drawn from six disciplines in the natural and social sciences, both the correlations calculated on the pooled data from all six subject areas and those calculated separately for each discipline show a weak negative correlation between citations and acceptance delay. One possible explanation for this weak correlation is that reviewers can generally reach faster and more confident decisions on high-quality papers, which may later attract more influence and citations. For the usage-related indicators, acceptance delay is weakly negatively correlated with the TC, MI, VI, and PVI of usage and weakly positively correlated with the GTI of usage. In addition, all three types of delays are weakly positively correlated with the TC of collections. These correlations also differ across metric quartiles and across subject areas. For example, the TC of collections is weakly positively correlated with overall delay for articles in the natural sciences (Biology and life sciences, Chemistry, and Engineering and technology) and Psychology, but not for articles in the other disciplines.

The Mann–Whitney U test results reveal that articles with short and long delays of each type differ significantly on many indicators. For instance, Table 8 shows that, when articles with short and long acceptance delays are compared, the p-values for the TC of citations, sharing and discussions, and collections, and for the MI, VI, GTI, and PVI of citations and usage, are below 0.05, indicating statistically significant differences between the two groups on these indicators. Moreover, most papers with longer acceptance delays are cited less, are collected more, and have a smaller MI of usage and PVI of citations and usage than those with shorter acceptance delays. Overall, the lengths of the three types of delays in the publication process can affect the performance of articles on some indicators in the social network context.

To a certain extent, our results indicate that a shorter publication time is not always preferable in the modern internet communication environment. In general, reducing the acceptance and final publication lag can improve the efficiency of knowledge diffusion through the formal academic citation channel. Nevertheless, an appropriate interval at a particular stage of the publishing process can increase the sharing, discussion, and collection of articles to some extent, thereby boosting the attention they receive on the internet. The difference between the relationships of publication delays with traditional citation indicators and with social network indicators may be attributable to citations accumulating with a considerably longer lag than social network indicators.

This study has several limitations. First, although we examined the correlations of the three types of delays with various measurement indicators for articles in six disciplines, these correlations may not hold for papers in other subject areas or in journals not covered in this work. Second, the reasons behind the observed correlations warrant further investigation. For instance, the weak positive correlations of the three types of delays with the number of sharing and discussions and with collections may arise because some of these articles were already openly available, and even subject to public scrutiny, during the review stage, which extended the interval before final publication. Third, the causal relationship between the length of delays during publication and the measurement indicators of journal articles remains unclear. Potential confounders on the author side include gender, reputation, country, and institution; on the side of the research itself, they include whether the work was reviewed publicly online and whether it addresses a research hotspot in its field. Explaining causality is therefore complicated, and more detailed, in-depth research is needed to explore the lag effect during publication, for example by analyzing papers with citation histories of more than ten years to ensure a longer citation window (Li et al., 2019). Finally, although publication delays vary across disciplines and journal types, studying delays in the publishing process remains important for authors’ reputations, for publishers’ planning, and for the efficiency of knowledge dissemination.

References

Amat, C. B. (2008). Editorial and publication delay of papers submitted to 14 selected food research journals. Influence of online posting. Scientometrics, 74(3), 379–389. https://doi.org/10.1007/s11192-007-1823-8

Andersen, M. Z., Fonnes, S., & Rosenberg, J. (2021). Time from submission to publication varied widely for biomedical journals: A systematic review. Current Medical Research and Opinion, 37(6), 985–993. https://doi.org/10.1080/03007995.2021.1905622

Antelman, K. (2017). Leveraging the growth of open access in library collection decision making. In Proceedings from ACRL 2017: At the helm: Leading transformation.

Björk, B. C., & Solomon, D. (2013). The publishing delay in scholarly peer-reviewed journals. Journal of Informetrics, 7, 914–923. https://doi.org/10.1016/j.joi.2013.09.001

Bornmann, L. (2014). Validity of altmetrics data for measuring societal impact: A study using data from altmetric and F1000Prime. Journal of Informetrics, 8(4), 935–950. https://doi.org/10.1016/j.joi.2014.09.007

Charen, D. A., Maher, N. A., Zubizarreta, N., Poeran, J., Moucha, C. S., & Shemesh, S. (2020). Correction to: Evaluation of publication delays in the orthopedic surgery manuscript review process from 2010 to 2015. Scientometrics, 124(2), 1137–1137. https://doi.org/10.1007/s11192-020-03603-5

Das, A., & Das, P. (2006). Delay between online and offline issue of journals: A critical analysis. Library & Information Science Research, 28(3), 453–459. https://doi.org/10.1016/j.lisr.2006.03.019

Egghe, L., & Rousseau, R. (2000). The influence of publication delays on the observed aging distribution of scientific literature. Journal of the American Society for Information Science, 51, 158–165.

Guo, X., Li, X., & Yu, Y. (2021). Publication delay adjusted impact factor: The effect of publication delay of articles on journal impact factor. Journal of Informetrics, 15(1), 101100. https://doi.org/10.1016/j.joi.2020.101100

Haustein, S., Sugimoto, C., & Larivière, V. (2015). Guest editorial: social media in scholarly communication. Aslib Journal of Information Management. https://doi.org/10.1108/AJIM-03-2015-0047

Koler-Povh, T., Južnič, P., & Turk, G. (2014). Impact of open access on citation of scholarly publications in the field of civil engineering. Scientometrics, 98(2), 1033–1045. https://doi.org/10.1007/s11192-013-1101-x

Kousha, K., & Thelwall, M. (2022). COVID-19 refereeing duration and impact in major medical journals. Quantitative Science Studies, 3(1), 1–17. https://doi.org/10.1162/qss_a_00176

Kristin, A. (2004). Do open-access articles have a greater research impact? College & Research Libraries, 65(5), 372–382. https://doi.org/10.5860/crl.65.5.372

Laakso, M., & Björk, B. C. (2013). Delayed open access: An overlooked high-impact category of openly available scientific literature. Journal of the American Society for Information Science and Technology, 64(7), 1323–1329. https://doi.org/10.1002/asi.22856

Li, L. Y., Min, C., & Sun, J. J. (2019). On the quantification and distribution of citation peaks. Journal of the China Society for Scientific and Technical Information, 38(7), 697–708. https://doi.org/10.3772/j.issn.1000-0135.2019.07.004

Lin, Z., Hou, S., & Wu, J. (2016). The correlation between editorial delay and the ratio of highly cited papers in Nature, Science and Physical Review Letters. Scientometrics, 107(3), 1457–1464. https://doi.org/10.1007/s11192-016-1936-z

Luwel, M., & Moed, H. F. (1998). Publication delays in the science field and their relationship to the ageing of scientific literature. Scientometrics, 41(1), 29–40. https://doi.org/10.1007/BF02457964

Piwowar, H., Priem, J., Larivière, V., Alperin, J. P., Matthias, L., Norlander, B., et al. (2018). The state of OA: A large-scale analysis of the prevalence and impact of Open Access articles. PeerJ, 6, e4375. https://doi.org/10.7717/peerj.4375

Priem, J., Taraborelli, D., Groth, P., & Neylon, C. (2010). Altmetrics: A manifesto. Retrieved October 26, 2010, from http://altmetrics.org/manifesto

Rainie, L., Funk, C., & Anderson, M. (2015). How scientists engage the public. Pew Research Center.

Rigby, J., Cox, D., & Julian, K. (2018). Journal peer review: A bar or bridge? An analysis of a paper’s revision history and turnaround time, and the effect on citation. Scientometrics, 114(3), 1087–1105. https://doi.org/10.1007/s11192-017-2630-5

Shen, S., Rousseau, R., Wang, D., Zhu, D., Liu, H., & Liu, R. (2015). Editorial delay and its relation to subsequent citations: The journals Nature, Science and Cell. Scientometrics, 105(3), 1867–1873. https://doi.org/10.1007/s11192-015-1592-8

Shi, D., Rousseau, R., Yang, L., & Li, J. (2017). A journal’s impact factor is influenced by changes in publication delays of citing journals. Journal of the Association for Information Science and Technology, 68(3), 780–789. https://doi.org/10.1002/asi.23706

Skrzypczak, T., Michałowicz, J., Mamak, M., Jany, A., Skrzypczak, A., Bogusławska, J., et al. (2021). Publication times in ophthalmology journals: The story of accepted manuscripts. Cureus, 13(9), e17738. https://doi.org/10.7759/cureus.17738

Sotudeh, H., & Estakhr, Z. (2018). Sustainability of open access citation advantage: The case of Elsevier’s author-pays hybrid open access journals. Scientometrics, 115(1), 563–576. https://doi.org/10.1007/s11192-018-2663-4

Tort, A., Targino Dias Góis, J. H., & Amaral, O. (2012). Rising publication delays inflate journal impact factors. PLoS ONE, 7, e53374. https://doi.org/10.1371/journal.pone.0053374

Wang, X., Cui, Y., Li, Q., & Guo, X. (2017). Social media attention increases article visits: An investigation on article-level referral data of PeerJ. Frontiers in Research Metrics and Analytics, 2, 11. https://doi.org/10.3389/frma.2017.00011

Wang, X., Fang, Z., & Guo, X. (2016). Tracking the digital footprints to scholarly articles from social media. Scientometrics, 109(2), 1365–1376. https://doi.org/10.1007/s11192-016-2086-z

Wang, X., Liu, C., Mao, W., & Fang, Z. (2015). The open access advantage considering citation, article usage and social media attention. Scientometrics, 103(2), 555–564. https://doi.org/10.1007/s11192-015-1547-0

Xu, S., An, M., & An, X. (2021). Do scientific publications by editorial board members have shorter publication delays and then higher influence? Scientometrics, 126(8), 6697–6713. https://doi.org/10.1007/s11192-021-04067-x

Yegros, A., & Amat, C. (2009). Editorial delay of food research papers is influenced by authors’ experience but not by country of origin of the manuscripts. Scientometrics, 81(2), 367–380. https://doi.org/10.1007/s11192-008-2164-y

Yu, G., Guo, R., & Li, Y.-J. (2006a). The influence of publication delays on three ISI indicators. Scientometrics, 69(3), 511–527. https://doi.org/10.1007/s11192-006-0167-0

Yu, G., Guo, R., & Yu, D.-R. (2006b). The influence of the publication delay on journal rankings according to the impact factor. Scientometrics, 67(2), 201–211. https://doi.org/10.1007/s11192-006-0094-0

Yu, G., Wang, X.-H., & Yu, D.-R. (2005). The influence of publication delays on impact factors. Scientometrics, 64(2), 235–246. https://doi.org/10.1007/s11192-005-0249-4

Acknowledgements

The authors would like to acknowledge support from the National Social Science Foundation of China (Grant No. 21BTQ010).

Funding

National Social Science Foundation of China, 21BTQ010, Jingda Ding

Author information

Contributions

JD was involved in conceptualization, formal analysis, methodology, supervision, review, the preparation of the initial draft, and the editing of the final draft. DH was involved in data collection, formal analysis, methodology, software, and the preparation of the initial draft.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Appendix

See Fig. 7 and Tables 10, 11, and 12.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ding, J., Du, D. A study of the correlation between publication delays and measurement indicators of journal articles in the social network environment—based on online data in PLOS. Scientometrics 128, 1711–1743 (2023). https://doi.org/10.1007/s11192-023-04640-6

DOI: https://doi.org/10.1007/s11192-023-04640-6