Abstract

In course of the organization of Workshop III entitled “Cited References Analysis Using CRExplorer” at the International Conference of the International Society for Scientometrics and Informetrics (ISSI2021), we have prepared three reference publication year spectroscopy (RPYS) analyses: (i) papers published in Journal of Informetrics; (ii) papers regarding the topic altmetrics; and (iii) papers published by Ludo Waltman (we selected this researcher because he received the Derek de Solla Price Memorial Medal during the ISSI2021 conference). The first RPYS analysis has been presented live at the workshop and the second and third RPYS analyses have been left to the participants for undertaking after the workshop. Here, we present the results for all three RPYS analyses. The three analyses have shown quite different seminal papers with a few overlaps. Many of the foundational papers in the field of scientometrics (e.g., distributions of publications and citations, citation network and co-citation analyses, and citation analysis with the aim of impact measurement and research evaluation) were retrieved as seminal papers of the papers published in Journal of Informetrics. Mainly papers with discussions of the deficiencies of citation-based impact measurements and comparisons between altmetrics and citations were retrieved as seminal papers of the topic altmetrics. The RPYS analysis of the paper set published by Ludo Waltman mainly retrieved papers about network analyses, citation relations, and citation impact measurement.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

We organized Workshop III entitled “Cited References Analysis Using CRExplorer” at the International Conference of the International Society for Scientometrics and Informetrics (ISSI2021). We reported about the detailed procedure of this workshop earlier (Haunschild & Bornmann, 2021b). A cited references analysis of the papers published in Journal of Informetrics was presented in an interactive part of this workshop. We could not present two other cited references analyses due to time constraints: One on the topic altmetrics, short for alternative metrics such as Twitter or Mendeley counts (Sugimoto et al., 2017), and one on the papers published by Ludo Waltman. The purpose of this contribution is to put the cited references analysis that was performed during the workshop into writing and present the other two applications of cited references analyses. Furthermore, we intend to present these three applications as examples of current good practice of cited references analyses.

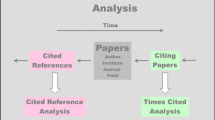

Times cited analyses provide a forward impact perspective from a set of focal papers whereas cited references analyses turn this around and provide a backwards impact perspective. The impact of publications on specific publication sets (e.g., journals, topics, or oeuvres of researchers) can be analyzed using the cited references’ perspective. The focus of citation impact measurement within the cited references’ perspective lies on the selected publication set and not the whole literature database (such as Web of Science, that can be cited in principle). This enables researchers to answer questions, such as: Which publications are seminal papers or historical roots of the analyzed publication set? Bornmann and Marx (2013) proposed thus a specific new form of cited references analysis. This new form was named reference publication year spectroscopy (RPYS, Marx et al., 2014). The most frequent applications of RPYS include the discovery of seminal papers and historical roots of papers published in a journal, in a topic, or by a researcher.

RPYS analyses consist of different steps: In the first step, the publication set under study has to be collected. In the second step, the publication set with cited references is analyzed with regard to the number of cited references in each reference publication year (RPY). In the third and final step, the RPYS results are analyzed (and interpreted). A plot of the RPYs against the number of cited references shows early peaks where historical roots can be found. Different metrics (e.g., in how many citing years were certain references cited very frequently) can be used to further support the analysis of the RPYS results. The program CRExplorer (see www.crexplorer.net) was introduced by Thor et al. (2016a) for simplifying and supporting the latter two stages. Advanced indicators that provide new cited references analysis opportunities were included in the capabilities of CRExplorer later (Thor et al., 2018a, 2018b).

During our workshop, Rüdiger Mutz and Peter Kokol presented RPYS analyses as invited talks. Their analyses can be found in the literature (Barth et al., 2014; Kokol et al., 2021). In this contribution, we present three RPYS analyses: (1) papers published in Journal of Informetrics, (2) papers regarding the topic altmetrics, and (3) papers published by Ludo Waltman (we selected this researcher because he received the Derek de Solla Price Memorial Medal during ISSI2021). The first one has been presented live at the workshop and data sets for the second and third one have been provided to the participants of the workshop. We are happy to share these data sets also with interested readers on a personal basis. These three RPYS analyses represent examples of the frequent uses of RPYS: studying the historical roots and seminal papers of a journal, a research field, or a researcher. We encourage the readers to analyze the data sets themselves to better understand the RPYS procedure.

Data and methods

Data

We use publication metadata from Web of Science (WoS; Birkle et al., 2020) by Clarivate Analytics. The data were downloaded in June 2021. We searched for all papers authored by Ludo Waltman, all papers with the topic (title, abstract, and keywords) altmetrics, and all papers published in Journal of Informetrics. Among the three data sets, the data set regarding the topic altmetrics should be considered most incomplete because not all publications on the topic altmetrics use this term in their title, abstract, or keywords. However, RPYS analyses are rather robust in this respect. A more complete publication set that was retrieved by a more complicated search query should not yield very different results (Haunschild et al., 2019).

Methods

We used CRExplorer (https://crexplorer.net; Thor et al., 2016a, 2016b; Thor et al., 2018a, 2018b) for RPYS analysis. CRExplorer can be used interactively and via the script interface. The script interface has the advantage of enabling the reproducibility of the analysis. The following CRExplorer scripts in Figs. 1, 2, and 3 can be used to reproduce our results after downloading the corresponding publication data.

The first two lines of the CRExplorer scripts in Figs. 1, 2, and 3 import the WoS data. The third line invokes an automatic clustering algorithm. Since cited references data are concerned by variants of the same reference, the unification of variants is an important step in RPYS. We used the clustering algorithm with a Levenshtein threshold of 0.75 accounting for volume and page number. We have had good experience with a Levenshtein threshold of 0.75 in many previous studies: It is a good compromise between clustering references that are different and missing too many references that are the same. DOIs are not used in the clustering of the cited references, since we made the experience that the focus on volume and page number leads to good clustering results (in most of the cases). When books are important in a field or topic, neglecting the page number or manual clustering might be necessary. In the fourth line, the equivalent cited references are merged. The fifth line removes rarely occurring cited references.

Removing rarely occurring references leads to more pronounced RPYS results. We used different removal thresholds for the three data sets due to the different total number of cited references: (1) All cited references occurring less than ten times were removed from the RPYS analysis of the papers published in Journal of Informetrics; (2) all cited references occurring less than five times were removed from the RPYS analysis of the papers regarding the topic altmetrics, and (3) all cited references occurring only once were removed from the RPYS analysis of the papers published by Ludo Waltman. The choice of these thresholds roughly depend on the total number of cited references within the data sets: The higher the number of cited references, the higher the appropriate threshold. When inspecting an unknown data set, one should start by removing the cited references that occur only once. This threshold should be raised until a peak structure is visible in the spectrogram. Early peaks might disappear if too high threshold values are used.

Lines six to eight in the scripts export the results: A CSV file with the remaining cited references is exported in line six. A CSV file with the number of cited references per RPY for plotting the RPYS spectrogram is exported in line seven. A CRE file that can be opened later in CRExplorer is saved in line eight. It is also possible to make multiple exports of different data sets, e.g., the user can export the CSV files with all cited references included. Afterwards the user can remove the singly cited references and export this data set, and subsequently remove all cited references that were cited less than five times and export that data set and so on. The exported CSV files can be inspected in the interactive CRExplorer and the most appropriate result can be analyzed further.

Table 1 shows relevant data regarding cited references and citing publications of the three RPYS analyses. The CSV file that is written in line 7 can be imported in other programs for producing high-quality and customized graphics.

We analyzed the RPYS spectrograms regarding relevant peaks of the five-year median deviation. Tukey’s fences (Tukey, 1977) were used to support the identification of the most important peaks: Important peaks are flagged based on the interquartile range of the median deviations (Thor et al., 2018a, 2018b). The relevant cited references under the peaks can be determined by the N_CR values (number of cited references) and PERC_YR values (proportion of the number of cited references within this RPY, i.e., N_CR/NCR). However, proper selection of the relevant cited references requires some experience. We hope that the selection we made for the three example RPYS analyses provides helpful guidelines for the readers: (i) Cited references with rather high PERC_YR values should be selected because they contribute a significant proportion of the entire peak. (ii) Multiple top cited references of peaks should be selected if they have rather similar N_CR values. If a larger gap occurs in the N_CR values within the peak, cited references above the gap should be selected.

We used the N_TOP10 indicator to discover highly influential cited references of the three publication sets. The advanced indicator N_TOP10 (Thor et al., 2018a, 2018b) counts the number of citing years in which the corresponding cited reference has been cited very frequently (i.e., within the top-10%). We expect that highly influential cited references have values of at least half of the maximal N_TOP10 value, i.e., more than half of the number of different citing years in Table 1. Furthermore, we checked the cited references with the highest N_CR values. Other advanced indicators, for example N_TOP1 (i.e., within the top-1%) and N_TOP0_1 (i.e., within the top-0.1%), are available if needed (Thor et al., 2020).

The RPYS spectrograms were plotted using R (R Core Team, 2018) with the R package ‘BibPlots’ (Haunschild, 2021). As part of the preparation and post-processing of our workshop, the R package ‘BibPlots’ was extended. The new function ‘rpys_bl’ was added for producing RPYS bar-line charts. Such RPYS charts display the number of cited references as a bar-plot with a line-plot overlay for the median deviation. This is the plot style used in the RPYS spectrograms in this paper. In addition to the static plots in this paper, we produced interactive RPYS graphs using the R package ‘dygraphs’ (Vanderkam et al., 2018).

Results

In the following three subsections, we present the results of the three RPYS analyses prepared for the workshop. The first one has been performed during the workshop, and the other ones were left to the participants to try on their own afterwards.

RPYS analysis of the papers published in Journal of Informetrics

Figure 4 presents the spectrogram from the RPYS analysis of the papers published in Journal of Informetrics. The first reference cited at least ten times is Hazen (1914). This cited reference is cited in bibliometrics usually when Hazen percentiles are used, e.g., for citation impact normalization purposes (see for example Bornmann & Haunschild, 2018). The most important peaks in Fig. 4 are marked with a red star, and the RPY is written next to it. An interactive version of Fig. 4 is available at: https://s.gwdg.de/EmA74A. Readers can zoom into specific regions of the RPYS spectrogram using the interactive version. Crosshairs are located at the data points closest to the mouse cursor for improved usability.

available at: https://s.gwdg.de/EmA74A

Spectrogram from the RPYS analysis of the papers published in Journal of Informetrics. An interactive version is

When the mouse cursor hovers over the tooltip flag on top of the bars, a tooltip appears that shows up to five most referenced cited references of that RPY with additional advanced indicators: PERC_YR, N_TOP10, and N_PYEARS (number of different citing years in which this cited reference has been cited). The most important cited references from the marked RPYs are listed in Table 2.

All publications in Table 2 are well-known landmark publications in scientometrics. In CR1, bibliographic coupling has been proposed as a method for grouping publications by M. M. Kessler. CR2 is a classical book of collected lectures given by D. J. de Solla Price entitled “Little Science, Big Science”. This lecture collection discusses science and its place in society. CR3 is the work of D. J. de Solla Price regarding networks of scientific publications and about incidences of references and citations. CR4 is R. K. Merton’s proposal of the Matthew effect of accumulated advantage in science. CR5 is H. Small’s proposal to use co-citations as a measure of relationships between scientific publications. CR6 is R. K. Merton’s book “The sociology of science: Theoretical and empirical investigations” explaining many well-known concepts such as the Matthew effect.

CR7 is the contribution by G. Pinski and F. Narin regarding citation influence of scientific publications on the journal basis. This work constitutes the basis for Google’s PageRank algorithm (Page, 2001). In CR8, D. J. de Solla Price presented the cumulative advantage distribution as an underlying theory for Bradford’s law, Lotka’s law, and Zipf’s law. CR9 is E. Garfield’s discussion on the use of citation analysis to rate scientific performance. CR10 is L. C. Freeman’s review on measures of structural centrality in social networks. In CR11, P. O. Seglen argued against the use of the journal impact factor in research evaluation. In CR12, J. S. Katz and B. R. Martin discussed research collaboration on a conceptual level and point out that co-authorship is not necessarily adequate to describe collaboration. In CR13, S. Brin and L. Page describe a prototype of the Google search engine.

CR14 is S. Redner’s empirical analysis of the citation distribution of papers published in 1981 and indexed by the Institute for Scientific Information (one of the predecessors of Clarivate Analytics). In CR15, J. E. Hirsch proposed the popular although heavily criticized h index for measuring the performance of individual researchers. Based on an analysis of common citation distributions, F. Radicchi proposed a generalized h index in CR16. CR17 is L. Bornmann’s and H.-D. Daniel’s discussion on the question what citation counts are measuring based on their review of studies on citation habits. In CR18, L. Waltman et al. proposed a new crown indicator (mean normalized citation score, MNCS) and presented an analysis of its formal mathematical properties.

Two cited references in Table 2 have the highest possible N_TOP10 value of 15 in our data set. One of them is CR15 (the introduction of the h index), and the other one is a theoretical analysis by L. Egghe of the g index, a modified version of the h index (Egghe, 2006), with N_CR = 109. Thus, Egghe (2006) was cited less than half as often as CR15. Therefore, the peak in 2005 and CR15 itself dominate the neighboring years. It is mainly a matter of choice how detailed RPYS analyses are performed. A more in-depth analysis of the data set might have listed Egghe (2006) in Table 2. However, our aim with this contribution is more to provide guidance how RPYS analyses should be performed than to analyze the example data sets in high detail. The other cited references that surpassed half of the maximal possible N_TOP10 value are also listed in Table 2 (CR16, CR17, and CR18) except for H. F. Moed’s proposal of a new journal impact metric, the source normalized impact per paper, SNIP (Moed, 2010). Only two cited references reached N_CR values above 100: J. Hirsch’s paper with N_CR = 262 (CR15) and Egghe (2006) with N_CR = 109 (not listed in Table 2).

This example shows the importance of looking also into regions of the RPYS spectrogram with high N_CR values even if the median deviation is low. Usually, as in this case, such highly influential cited references are found when sorting the list of cited references by N_CR or N_TOP10 values. We recommend this approach in addition to only looking at extreme peaks. Alternatively, one could inspect each RPY with a sizable N_CR value separately. If many additional cited references are found by these approaches, separate tables should be provided for presenting them. In our case here, only two additional cited references were found by the analyses. Thus, we recommend mentioning those cited references in the text and formally citing them.

RPYS analysis of the papers regarding the topic altmetrics

The spectrogram that results from our RPYS analysis of the papers published regarding the topic altmetrics is shown in Fig. 5. The first reference cited at least five times is Lotka (1926). Based on his analysis of the productivity of chemists and physicists, A. Lotka found that statistically \(1/n^{2}\) scientists are making n contributions to science, i.e., many scientists publish few papers and few scientists publish many papers. This became known as Lotka’s law, see also CR8 in Table 2. Red stars mark the most important peaks in Fig. 5. Additionally, the corresponding RPY is written next to the star. Table 3 lists the most important cited references from the marked RPYs in Fig. 5.

available at: https://s.gwdg.de/zaPCOY

Spectrogram from the RPYS analysis of the papers published regarding the topic altmetrics. An interactive version is

CR19 in Table 3 is actually the same cited reference as CR 17 in Table 2 but we provide a new CR number for this cited reference because the N_CR values in both RPYS analyses differ due to the different data set. CR20 shows one of the main strengths of cited references analyses. CR20 is the altmetrics manifesto by J. Priem, D. Taraborelli, P. Groth, C. Neylon that provided early reasons for the need of additional new metrics. This cited reference is a webpage, i.e., a non-source reference of citation databases like WoS. A topic search alone would not yield this digital document. The RPYS analysis is able to discover such important non-source references. In fact, CR20 is the most cited reference in Table 3. CR21 is a discussion about the relationship between altmetrics and broader impact (e.g., societal impact) and an overview of advantages and downsides of altmetrics by L. Bornmann. CR22 is a status report about the development of altmetrics by Z. Zahedi, R. Costas, and P. Wouters. They analyzed the presence of altmetrics across disciplines and calculated correlations between altmetrics and citations. CR23 is a follow-up study of CR22 that uses a larger data set by the same authors in a slightly different order.

There are 22 cited references that surpass the N_TOP10 threshold for this analysis. Only four (CR20, CR21, CR22, and CR23) of these 22 cited references appeared in Table 3 and were just discussed. In the previous section where we discussed the RPYS analysis of the papers published in the Journal of Informetrics, only two additional cited references were found by analyzing the N_TOP10 values. In situations with many additional cited references like the current one, we recommend listing these additional cited references in another table. Thus, the additional cited references are listed in Table 4.

In CR24, G. Eysenbach studied the suitability of Twitter counts as an early proxy for citation counts. In CR25, M. Thelwall et al. studied the correlations between eleven altmetrics and citations. Actually, CR25 is the most-frequently referenced cited reference in the altmetrics literature. CR26 presents an analysis by S. Haustein et al. of the relationship between tweets and citations in the biomedical literature. CR27 by Li et al. checked the validity of using bookmark counts in online reference managers for research evaluation. In CR28, C. Neylon and S. Wu discussed the need of article-level metrics that accumulate faster than citations thereby paving the way towards altmetrics. In CR29, E. Mohammadi and M. Thelwall compared the number of times papers from different disciplines in Social Sciences and Humanities have been bookmarked in the online reference manager platform Mendeley with the numbers of times the same papers have been cited. In CR30, S. Haustein et al. analyzed the altmetrics activity regarding the papers authored by 57 of the presenters at the 2010 STI conference in Leiden, The Netherlands. CR31 in Table 4 is actually the same cited reference as CR15 in Table 2, but we assigned a different CR number to this cited reference here because the N_CR value is different within the topic of altmetrics from that in the papers published in Journal of Informetrics.

H. Piwowar discussed the implications of a small change of words (applicants are asked to list their “research products” rather than their “publications”) in the US National Science Foundation’s funding guidelines in CR32 in Table 4. In CR33, X. Shuai, A. Pepe, and J. Bollen studied the altmetrics activity regarding selected arXiv preprints. In CR34, J. Priem, P. Groth, and D. Taraborelli promoted the use of altmetrics for tracking, describing, and analyzing various processes in which scholars are involved (e.g., publishing, teaching, reading, and recommending). CR35 by J. Priem and B. M. Hemminger contains the description of different altmetrics and their possible use before the term altmetrics was coined. In CR36, P. Wouters and R. Costas discussed possible use cases of altmetrics on a broad basis. CR37 in Table 4 is the same cited reference as CR17 in Table 2 just with a different N_CR value due to the different set of citing papers.

CR38 in Table 4 by P. Sud and M. Thelwall contains a discussion about the potential applicability of altmetrics for research evaluation. In CR39, S. Haustein, R. Costas, and V. Larivière presented an analysis of incidence of selected altmetrics with respect to different document characteristics, e.g., document type, number of pages, length of title, and scientific discipline. In CR40, J. Priem, H. Piwowar, and B. M. Hemminger presented an analysis of the frequencies in which papers published by the Public Library of Science (PLoS) were mentioned in selected altmetrics sources. In CR41, S. Haustein and T. Siebenlist proposed to use bookmarking data from online reference managers as a proxy for journal readership. All cited references with N_CR values above 100 are included in Table 4.

RPYS analysis of the papers published by Ludo Waltman

The RPYS analysis of the papers published by Ludo Waltman yields the spectrogram that is shown in Fig. 6. The first cited reference that was cited at least twice is the work of Moskowitz (1958) who analyzed redundancy networks. There is only one extreme peak in Fig. 6 that covers two RPYs. Both RPYs are labeled and marked with a red star. There are only three main cited references from these two RPYs, all of them referenced at least ten times. None of the cited references reached the threshold of N_TOP10 = 9 (derived from 17 citing years). The reason might be that we chose the publication output of a still rather young researcher. Therefore, we present the cited references that were referenced at least ten times in Table 5.

available at: https://s.gwdg.de/jdkgRn

Spectrogram from the RPYS analysis of the papers by Ludo Waltman. An interactive version is

In CR42 in Table 5, L. Waltman and N. J. van Eck proposed an algorithm for clustering publications on the basis of direct citation relationships. CR43 by N. J. van Eck and L. Waltman is a survey about the visualization software VOSviewer which is very popular in bibliometrics. CR44 and CR45 in Table 5 are actually the same cited references as CR18 and CR15 in Table 2. In CR46, N. J. van Eck et al. reported that citation habits vary considerably in some medical WoS subject categories. They point out that clinical research is cited less frequently than basic or diagnostic research. CR47 by M. Zitt and H. Small is the proposal of the audience factor, a variant of the journal impact factor with fractional citation weighting.

In CR48 in Table 5, H. P. F. Peters and P. Wouters proposed a new approach of term co-occurrence mapping. A manual check has shown that most of the times, both papers that are related to the DOIs of CR49 have been cited together in the studied data set. These cited reference companions presented (i) a fast algorithm for detecting community structures in networks and (ii) a set of algorithms for finding and evaluating community structures in networks. The similar topics of the two cited references, both published in Physical Review E in 2004, explain why they were frequently cited together. In CR50, J. Lundberg proposed a paper-based field-normalized logarithmized citation score as an alternative to the so called ‘crown indicator’. In CR51, L. Waltman, N. J. van Eck, and E. C. M. Noyons discussed the visualization of similarities (VOS) mapping and clustering technique that was made available to the scientific community within VOSviewer (see CR43).

Discussion and conclusions

In this paper, we have presented three different RPYS analyses that were prepared for and/or presented at the workshop III “Cited References Analysis Using CRExplorer” at the 18th ISSI conference: (i) one analysis of the papers published in a journal (Journal of Informetrics), (ii) one analysis of a topic (altmetrics), and (iii) one analysis of the papers published by a researcher (Ludo Waltman). Those are three different types of publication sets. These differences are (mainly) responsible for the different approaches we took in the RPYS analyses. The proper approach for an RPYS analysis has to be determined by the user based on the inspection of the data set at hand. We provided the three different examples with the aim to help the reader to find the proper approach for performing their own RPYS analyses. The method RPYS is very versatile; there is no simple rule how a particular data set should be analyzed. However, we hope that this contribution serves as a helpful guidance for other researchers.

The seminal publications of the three publication sets were discussed. The seminal papers of Journal of Informetrics can be summarized as many of the foundational papers in the field of scientometrics (e.g., distributions of publications and citations, co-citation and citation network analyses, and citation analysis with the aim of research evaluation and impact measurement). The seminal papers of the topic altmetrics mainly comprise discussions of the deficiencies of citation-based impact measurement and comparisons between altmetrics and citations. The seminal papers of the paper set published by Ludo Waltman are mainly about citation relations, network analyses, and citation impact measurement.

RPYS analyses provide a different perspective than a times cited analysis. One of the main advantages is that also non-source documents can be found if they are included in the indexed list of cited references, like in WoS. Such documents cannot be found using a topic search. In this paper, we demonstrate this advantage based on the altmetrics data set. We expect that this advantage will become more important in the (near) future. Since researchers are increasingly active in writing other texts than classical journal papers or book chapters such as blogs, RPYS is able to reveal the importance of these texts for research in fields, on topics or by researchers. Thus, RPYS is very useful for analyzing the relevance of new text formats for scientific referencing or communication.

One of the advantages of RPYS is that is a very robust method. In our experience, incomplete data sets (i.e., data sets not including all papers on a topic, from a researcher or in a field) yield rather similar results, see for example Haunschild, et al. (2019). A few missing or not relevant publications usually do not distort the general conclusions. However, too many wrong publications in the data set can cause wrong peaks to appear, it is a better strategy to exclude some relevant publications in the data set than to include too many irrelevant ones. However, RPYS is not sensitive to changes in the delineation of the data set.

The main limitation of RPYS is that one is only able to discuss the most-prominent cited references, either those responsible for the main peaks, those with extreme high numbers of cited references, or those with a track record of being highly referenced in many citing years. The latter is very closely related to the method multi-RPYS that “extends the impact of these [RPYS] techniques by first segmenting the data in terms of the publication years of the citing sets, performing the standard RPYS analysis within each set and then rank transforming the de-trended results to compare influential references across the history of the citing set” (Comins & Leydesdorff, 2017, p. 1498).

We invite the readers to use this study as a guide to discover RPYS using the data sets presented here for a more in-depth study of their choice. If readers are more interested in the historical roots and seminal papers of a certain aspect of the topics discussed here, the RPYS data can be inspected with a special focus on such a topic. Successful replication of the analyses presented here can be a good starting point for new RPYS analyses of other topics, journals, and researchers. A good data set for a first RPYS analysis with another publication set would be the publications of one’s own research field, since the RPYS results can be well interpreted then.

The selection of peaks is another limitation of RPYS. Usage of Tukey’s fences is only one of the possible choices for peak selection. Another possible option is the selection based on visual inspection. However, this is a rather subjective option. The interested reader could browse through the other peaks not discussed here using the interactive versions of the RPYS spectrograms to find other possibly relevant seminal publications.

RPYS analyses should be interpreted against the backdrop of knowledge about the scientific topic of the analyzed data set. Experts can reconcile RPYS results with their knowledge of the analyzed field. RPYS results might also substantiate or refine prior knowledge about the field. RPYS analyses can discover surprising cited references that experts would not have guessed in the first place.

References

Barth, A., Marx, W., Bornmann, L., & Mutz, R. (2014). On the origins and the historical roots of the Higgs boson research from a bibliometric perspective. European Physical Journal plus, 129(6), 13. https://doi.org/10.1140/epjp/i2014-14111-6

Birkle, C., Pendlebury, D. A., Schnell, J., & Adams, J. (2020). Web of Science as a data source for research on scientific and scholarly activity. Quantitative Science Studies, 1(1), 363–376. https://doi.org/10.1162/qss_a_00018

Bornmann, L., & Haunschild, R. (2018). Measuring individual performance with comprehensive bibliometric reports as an alternative to h-index values. Journal of Korean Medical Science, 33(18), 5. https://doi.org/10.3346/jkms.2018.33.e138

Bornmann, L., & Marx, W. (2013). The proposal of a broadening of perspective in evaluative bibliometrics by complementing the times cited with a cited reference analysis. Journal of Informetrics, 7(1), 84–88. https://doi.org/10.1016/j.joi.2012.09.003

Comins, J. A., & Leydesdorff, L. (2017). Identification of long-term concept-symbols among citations: Do common intellectual histories structure citation behavior? Journal of the Association for Information Science and Technology, 68(5), 1224–1233. https://doi.org/10.1002/asi.23769

Egghe, L. (2006). Theory and practise of the g-index. Scientometrics, 69(1), 131–152. https://doi.org/10.1007/s11192-006-0144-7

Haunschild, R. (2021). BibPlots: Plot functions for use in bibliometrics. R package version 0.0.8. Retrieved 10 February, 2022, from https://cran.r-project.org/web/packages/BibPlots/index.html

Haunschild, R., & Bornmann, L. (2021a). Reference Publication Year Spectroscopy (RPYS) in practice: Three RPYS analyses in the course of Workshop III "Cited References Analysis Using CRExplorer" at the 18th ISSI conference. Retrieved from https://arxiv.org/abs/2109.00969

Haunschild, R., & Bornmann, L. (2021b). Report on Workshop III "Cited References Analysis Using CRExplorer" at the 18th International Conference of the International Society for Scientometrics and Informetrics (ISSI2021). Retrieved from https://arxiv.org/abs/2108.11309

Haunschild, R., Marx, W., Thor, A., & Bornmann, L. (2019). How to identify the roots of broad research topics and fields? The introduction of RPYS sampling using the example of climate change research. Journal of Information Science, 46(3), 392–405. https://doi.org/10.1177/0165551519837175

Hazen, A. (1914). Storage to be provided in impounding municipal water supply. Transactions of the American Society of Civil Engineers, 77(1), 1539–1640. https://doi.org/10.1061/taceat.0002563

Kokol, P., Zavrsnik, J., & Blazun Vosner, H. (2021). Identifying historical roots in paediatric echocardiography using RPYS. arXiv:2108.00789. Retrieved from https://ui.adsabs.harvard.edu/abs/2021arXiv210800789K

Lotka, A. J. (1926). The frequency distribution of scientific productivity. Journal of the Washington Academy of Sciences, 16(12), 317–323.

Marx, W., Bornmann, L., Barth, A., & Leydesdorff, L. (2014). Detecting the historical roots of research fields by reference publication year spectroscopy (RPYS). Journal of the Association for Information Science and Technology, 65(4), 751–764. https://doi.org/10.1002/asi.23089

Moed, H. F. (2010). Measuring contextual citation impact of scientific journals. Journal of Informetrics, 4(3), 265–277. https://doi.org/10.1016/j.joi.2010.01.002

Moskowitz, F. (1958). The analysis of redundancy networks. Transactions of the American Institute of Electrical Engineers, Part i: Communication and Electronics, 77(5), 627–632. https://doi.org/10.1109/TCE.1958.6372698

Page, L. (2001). US 6285999 B1.

R Core Team. (2018). R: A language and environment for statistical computing (Version 3.5.0). Vienna, Austria: R Foundation for Statistical Computing. Retrieved from https://www.r-project.org/

Sugimoto, C. R., Work, S., Larivière, V., & Haustein, S. (2017). Scholarly use of social media and altmetrics: A review of the literature. Journal of the Association for Information Science and Technology, 68(9), 2037–2062.

Thor, A., Bornmann, L., & Haunschild, R. (2018a). CitedReferencesExplorer (CRExplorer) manual. Retrieved December 19, 2019, from https://andreas-thor.github.io/cre/manual.pdf

Thor, A., Bornmann, L., Haunschild, R., & Leydesdorff, L. (2020). Which are the influential publications in the Web of Science subject categories over a long period of time? CRExplorer software used for big-data analyses in bibliometrics. Journal of Information Science, 47(3), 419–428. https://doi.org/10.1177/0165551520913817

Thor, A., Bornmann, L., Marx, W., & Mutz, R. (2018b). Identifying single influential publications in a research field: New analysis opportunities of the CRExplorer. Scientometrics, 116(1), 591–608. https://doi.org/10.1007/s11192-018-2733-7

Thor, A., Marx, W., Leydesdorff, L., & Bornmann, L. (2016a). Introducing CitedReferencesExplorer (CRExplorer): A program for reference publication year spectroscopy with cited references standardization. Journal of Informetrics, 10(2), 503–515. https://doi.org/10.1016/j.joi.2016.02.005

Thor, A., Marx, W., Leydesdorff, L., & Bornmann, L. (2016b). New features of CitedReferencesExplorer (CRExplorer). Scientometrics, 109(3), 2049–2051. https://doi.org/10.1007/s11192-016-2082-3

Tukey, J. W. (1977). Exploratory data analysis. Addison-Wesley Publishing Company.

Vanderkam, D., Allaire, J. J., Owen, J., Gromer, D., & Thieurmel, B. (2018). dygraphs: Interface to 'dygraphs' interactive time series charting library. R package version 1.1.1.6. Retrieved August 19, 2021, from https://CRAN.R-project.org/package=dygraphs

Acknowledgements

A preprint of this paper that has been used in the ISSI workshop has been made available (Haunschild & Bornmann, 2021a). We thank the anonymous referees for helpful comments that improved our manuscript.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

LB is member of the Price Medal Laureates Board of this journal, and RH is member of the Distinguished Reviewers Board of this journal.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Haunschild, R., Bornmann, L. Reference publication year spectroscopy (RPYS) in practice: a software tutorial. Scientometrics 127, 7253–7271 (2022). https://doi.org/10.1007/s11192-022-04369-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11192-022-04369-8