Abstract

This work applies a factor analysis with VARIMAX rotation to develop a bibliometric indicator, named the Weighted Factor Index, in order to derive a classification of the journals in a given category that offers an alternative to the one provided by the Journal Impact Factor. For this purpose, 16 metrics from three different databases (Web of Science, Scopus and SCImago Journal Rank) are considered. The Weighted Factor Index has the advantage of incorporating and summarizing information from all the indicators; to test its performance, it was applied to rank the journals in the category Information Science & Library Science.


Introduction

The Journal Impact Factor (JIF), introduced by Garfield (1955), is widely considered the reference indicator of a journal's quality and is therefore used as a tool in many evaluation processes. Authors such as Roberts (2017) believe its usefulness is limited and that it should be replaced by more valid and informative indicators. Moreover, Malay (2013) suggests that journal editors might inflate the JIF through coercive self-citation or even by omitting citations to competing journals. To overcome such drawbacks, other bibliometric indicators have been developed, such as the Eigenfactor score, the Article influence score and the Immediacy index, among others.

Nevertheless, the JIF continues to be used, and proof of its importance is that more than 2000 articles have analysed it or included it in their titles. A number of committees for the assessment of research activity in Spain and elsewhere consider publication in first-quartile journals, according to the ranking established by the Journal Citation Reports (JCR) of Web of Science, a priority criterion for positive evaluation in almost all fields of knowledge.

Through the development of mathematical models, variables that influence the JIF can be analysed, both from the standpoint of numerical values (Valderrama et al., 2018a), and in terms of the position that a journal occupies within the ranking according to its JIF (Valderrama et al., 2018b, 2020). Approaches to the explanation and prediction of JIF through statistical regression have been addressed by Park (2015), Qian et al. (2017), Ayaz et al. (2018), Bravo et al. (2018) and Abramo et al. (2019), among others.

The purpose of this work is to define a new metric that summarizes the information contained in various indicators, specifically the main ones provided by three databases: Journal Citation Reports (JIF, 5-year JIF, JIF without self-citations, Eigenfactor score, Article influence score, Immediacy index, total number of citations, citable items, open access papers during 2015–19, number of times that other sources have cited articles from the journal between 2015 and 2019, cited half-life and citing half-life), Scopus (journal’s h-index, CiteScore and Source Normalized Impact per Paper) and SCImago Journal Rank (SJR index).

An antecedent of the bibliometric application of this technique, in a different context, was developed by Bollen et al. (2009), who performed a Principal Component Analysis (PCA) of the rankings produced by 39 existing and proposed measures of scholarly impact, calculated on the basis of both citation and usage log data. More recently, Ortega (2020) used PCA to introduce two groups of weighted altmetric impact indicators, using two metrics for different impact dimensions. Seiler and Wohlrabe (2012) also applied PCA to obtain weights for a set of 27 bibliometric indicators in the field of Economics and, as an application, derived a world ranking of economists. Later, Bornmann et al. (2018) again used PCA to assign weights to a set of 22 indicators and obtain a meta-ranking of economics journals.

The methodology developed in the current contribution relies on Factor Analysis with VARIMAX rotation, retaining three factors that together explain about 82.5% of the total variability. The new indicator proposed, which we call the Weighted Factor Index (WFI), provides an alternative classification of journals to those given by the JIF or by other metrics. It is applied to rank the journals in the JCR category Information Science & Library Science, and the meaning of each factor is interpreted.

Methodology

Factor Analysis is a classic statistical technique, introduced by Spearman (1904), that represents a set of variables as a linear combination of a few underlying, unobservable common factors plus a specific term capturing the part of each original variable that the common factors do not explain. The usual procedure considers orthogonal factors, though they can also be oblique. By selecting a suitable number of factors, the dimension of the initial problem can be reduced. Later, Hotelling (1933) developed a factor extraction method based on principal components. In PCA each component explains a percentage of the initial variance, and there are as many components as initial variables, so by selecting those with the greatest variance, a high percentage of the total variability can be concentrated in a few components. Mathematically, the principal components are obtained by solving an eigenvalue problem for the covariance (or correlation) matrix of the variables; the eigenvalues are the variances of the components.
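The eigenvalue formulation just described can be sketched in Python with NumPy. This is a minimal illustration, not the SPSS procedure used in the study: it assumes the metrics are standardized (so the eigenproblem is solved on the correlation matrix), the function name `principal_components` is hypothetical, and the 73 × 16 data matrix is randomly generated rather than taken from the journals analysed.

```python
import numpy as np


def principal_components(X):
    """PCA of X (rows = journals, columns = metrics) via the correlation matrix.

    Returns the eigenvalues (component variances) in descending order,
    the matching eigenvectors, and the component scores.
    """
    X = np.asarray(X, dtype=float)
    # Standardize each metric so indicators on very different scales
    # (e.g. total cites versus JIF) contribute comparably.
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    R = np.corrcoef(Z, rowvar=False)       # p x p correlation matrix
    eigvals, eigvecs = np.linalg.eigh(R)   # eigh: R is symmetric
    order = np.argsort(eigvals)[::-1]      # largest variance first
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    scores = Z @ eigvecs                   # one column of scores per component
    return eigvals, eigvecs, scores


# Hypothetical data: 73 journals x 16 metrics (random, for illustration only).
rng = np.random.default_rng(0)
X = rng.normal(size=(73, 16))
eigvals, eigvecs, scores = principal_components(X)

share = eigvals / eigvals.sum()            # lambda_i / V for each component
print("cumulative share of the first 3 components:", np.cumsum(share)[:3])
```

Because the eigenvalues of a correlation matrix sum to the number of variables, the total variance here is 16, and the sample variance of each column of `scores` equals the corresponding eigenvalue, with the columns mutually uncorrelated.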

The main problem associated with the factors lies in interpreting their meaning. Each factor is commonly linked to the variables with the highest coefficients, in absolute value, in the linear combination that originates it. However, the factor matrix, which represents the relationship between factors and initial variables, may still leave the factors difficult to interpret. To facilitate interpretation, so-called factor rotations are carried out. They consist of rotating the coordinate axes that represent the factors until they lie as close as possible to the variables that load most heavily on them. The rotation thereby transforms the initial factor matrix into another, called the rotated factor matrix, which is a linear combination of the first and explains the same amount of initial variance, but is easier to interpret.

Ideally, each variable should load heavily on no more than one factor, and each factor should have very high loadings for some variables and very low loadings for the rest. In practice, this situation rarely arises directly, and it is approached by rotating the factors. Although rotation transforms the factor matrix and changes the variance explained by each factor, the communalities are not altered. Unless there is reason to believe that the factors are correlated, the usual technique is orthogonal rotation, the most widely used method being VARIMAX, introduced by Kaiser (1958).
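The property stated above, that an orthogonal rotation redistributes variance among the factors but leaves the communalities unchanged, can be checked with a compact NumPy implementation of VARIMAX based on the SVD iteration commonly found in open-source statistical code. This is a sketch, not SPSS's implementation: the function name `varimax`, its parameters and the random loadings are assumptions for illustration. Kaiser normalization (scaling each row to unit communality before rotating) is included as an option, since SPSS output typically labels its solution "Varimax with Kaiser Normalization".

```python
import numpy as np


def varimax(L, normalize=True, max_iter=500, tol=1e-8):
    """Orthogonal VARIMAX rotation of a p x k loading matrix L.

    Returns the rotated loadings and the k x k orthogonal rotation matrix T.
    With normalize=True, rows are scaled to unit communality (Kaiser
    normalization) before rotating and rescaled afterwards.
    """
    L = np.asarray(L, dtype=float)
    p, k = L.shape
    h = np.sqrt((L ** 2).sum(axis=1)) if normalize else np.ones(p)
    A = L / h[:, None]
    T = np.eye(k)
    crit = 0.0
    for _ in range(max_iter):
        B = A @ T
        # Gradient of the varimax criterion (variance of squared loadings per column).
        G = A.T @ (B ** 3 - B * (B ** 2).sum(axis=0) / p)
        u, s, vt = np.linalg.svd(G)
        T = u @ vt                         # closest orthogonal matrix to G
        crit_old, crit = crit, s.sum()
        if crit_old != 0 and crit < crit_old * (1 + tol):
            break                          # criterion has stopped increasing
    return (A @ T) * h[:, None], T


# Hypothetical unrotated loadings: first 3 principal components of random data.
rng = np.random.default_rng(1)
R = np.corrcoef(rng.normal(size=(73, 16)), rowvar=False)
vals, vecs = np.linalg.eigh(R)
top = np.argsort(vals)[::-1][:3]
L = vecs[:, top] * np.sqrt(vals[top])      # loadings = eigenvector * sqrt(eigenvalue)
L_rot, T = varimax(L)
```

Because `T` is orthogonal, each variable's communality (the row sum of squared loadings) and the total variance explained by the retained factors are identical before and after rotation; only the split of that variance among the factors changes.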

In this work we deal with 16 metrics selected from three databases: Web of Science (WoS), Scopus, and SCImago Journal Rank. From WoS the following metrics are considered:

- Journal Impact Factor (JIF): Number of citations received in the JCR year by items the journal published in the two previous years, divided by the number of citable items it published in those two years
- 5-year JIF: The same as the JIF, but with a window of five years instead of two
- JIF without self-citations: The JIF computed after excluding journal self-citations, that is, citations from the journal to its own articles
- Eigenfactor score: Number of times articles published in the journal in the past five years have been cited in the JCR year, computed by an algorithm under which citations from highly ranked journals carry more weight than those from poorly ranked journals, and excluding self-citations
- Article influence score (AIS): Average influence of a journal's articles over the first five years after publication, again excluding self-citations
- Immediacy index: Average number of times an article is cited in the year it is published
- Total cites: Total number of times the journal has been cited by all journals included in the database in the JCR year
- Times cited 2015–19: Number of times that other sources have cited articles from the journal between 2015 and 2019
- Open access papers 2015–19: Number of articles published in the journal with free online (open) access between 2015 and 2019
- Citable items: Items identified in WoS as an article, review or proceedings paper
- Cited half-life: Median age of the journal's articles that were cited in the JCR year
- Citing half-life: Median age of the articles cited by the journal in the JCR year
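As a worked illustration of the ratio-type definitions above, the sketch below computes a two-year JIF, a five-year JIF and the Immediacy index from per-year counts, assuming the standard JCR form: citations received in the JCR year to items from the preceding window, divided by the citable items published in that window. The counts are invented, the function names are hypothetical, and details of Clarivate's actual procedure (such as which document types count as citable) are ignored.

```python
def impact_factor(cites, items, jcr_year, window=2):
    """JIF-style ratio for a given window of preceding years.

    cites[y]: citations received in jcr_year by items published in year y
    items[y]: citable items published in year y
    """
    years = range(jcr_year - window, jcr_year)   # e.g. 2017 and 2018 for 2019
    return sum(cites[y] for y in years) / sum(items[y] for y in years)


def immediacy_index(cites, items, jcr_year):
    """Citations in jcr_year to items published in jcr_year, per such item."""
    return cites[jcr_year] / items[jcr_year]


# Hypothetical journal: citations received in 2019, keyed by publication year.
cites_2019 = {2019: 30, 2018: 120, 2017: 150, 2016: 100, 2015: 80, 2014: 60}
items = {2019: 60, 2018: 50, 2017: 50, 2016: 45, 2015: 40, 2014: 40}

print(impact_factor(cites_2019, items, 2019))            # (120 + 150) / (50 + 50) = 2.7
print(impact_factor(cites_2019, items, 2019, window=5))  # 510 / 225, about 2.267
print(immediacy_index(cites_2019, items, 2019))          # 30 / 60 = 0.5
```

Subtracting the journal's self-citations from each entry of `cites_2019` before calling the same function would give a JIF without self-citations under the same simplifying assumptions.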

From Scopus:

- h-index of the journal: Maximum value of h such that the journal has published at least h papers, each cited at least h times
- Source Normalized Impact per Paper (SNIP): Average number of citations per paper over three years, normalized by the citation potential of the journal's subject field
- CiteScore: Average number of citations per document published in the journal over a fixed window of years
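The h-index definition above translates directly into code. A minimal sketch, in which the function name and the citation counts are hypothetical:

```python
def h_index(citations):
    """Largest h such that at least h papers have at least h citations each."""
    h = 0
    for rank, c in enumerate(sorted(citations, reverse=True), start=1):
        if c >= rank:
            h = rank          # the top `rank` papers all have >= rank citations
        else:
            break             # counts are sorted, so no larger h is possible
    return h


print(h_index([25, 8, 5, 3, 3]))   # 3
```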

And from SCImago:

- SCImago Journal Rank index (SJR): A measure of a journal's scientific influence that accounts for both the number of citations received and the importance or prestige of the journals those citations come from

The journal’s h-index, CiteScore and SNIP were obtained from Scopus; SJR from SCImago Journal Rank; and the remaining values from JCR. The 87 journals included in the category Information Science and Library Science of the 2019 edition of JCR (Clarivate Analytics, 2020) were considered in this study, although fourteen of them were excluded because some of the metrics were missing, leaving 73 journals.

Let us denote by f1, f2, …, f16 the factors obtained from the 16 bibliometric variables considered, with respective variances (eigenvalues) λ1, λ2, …, λ16, so that

$$V = \lambda_1 + \lambda_2 + \cdots + \lambda_{16}$$

is the total variance. The percentage of variance explained by each factor is given by λi/V; hence, to explain V up to a certain level, the first k factors must be accumulated until (λ1 + λ2 + ··· + λk)/V reaches that level. We then define the Weighted Factor Index (WFI) as:

$$\mathrm{WFI} = \sum_{i=1}^{k} \lambda_i f_i = \lambda_1 f_1 + \lambda_2 f_2 + \cdots + \lambda_k f_k$$

and this will be the tool used to obtain the new ranking of journals within the field. Note that WFI is a sum of uncorrelated random variables, each capturing part of the information from the analysis and weighted by its importance, as measured by its variance.
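A minimal sketch of the two steps described in this paragraph, choosing k from the cumulative share of variance and then forming the variance-weighted sum of factor scores, assuming the factor scores for each journal are already available as a matrix (one row per journal, one column per factor). The function names, the 0.8 threshold in the example and all numbers are hypothetical.

```python
import numpy as np


def n_factors_for(variances, target):
    """Smallest k with (lambda_1 + ... + lambda_k) / V >= target."""
    share = np.cumsum(variances) / np.sum(variances)
    k = int(np.searchsorted(share, target)) + 1
    return min(k, len(share))          # guard against rounding when target = 1


def weighted_factor_index(scores, variances):
    """WFI for each journal: sum over retained factors of lambda_i * f_i."""
    scores = np.asarray(scores, dtype=float)         # n_journals x k
    weights = np.asarray(variances, dtype=float)     # lambda_1 .. lambda_k
    return scores @ weights


lambdas = np.array([7.0, 4.0, 2.0, 1.0, 1.0, 1.0])   # hypothetical variances, V = 16
k = n_factors_for(lambdas, 0.8)                       # 13/16 = 0.8125, so k = 3
scores = np.array([[1.0, 0.5, -1.0],                  # hypothetical factor scores
                   [-0.5, 0.2, 0.4]])
print(k, weighted_factor_index(scores, lambdas[:k]))
```

Since factor scores are centred on zero across the journals of the category, the resulting WFI values are also centred on zero.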

The statistical calculations were carried out using SPSS (version 26) licensed by the University of Granada.

Results

The journals of the category appear in Table A of the Appendix, sorted in descending order of JIF. As a preliminary step to the factor analysis, the main descriptive statistics of the bibliometric indicators were calculated and are included in Table 1. Given the different nature of the indicators, their descriptive statistics take very different values. Because the median is more robust to extreme values than the mean, the differences between indicators are smaller when measured by the median. Perhaps the most interesting aspect is the coefficient of variation, which shows that the indicators representing volume (Eigenfactor score, times cited, number of open access articles and total citations) have a much greater relative dispersion than the rest.
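The contrast between mean and median, and the use of the coefficient of variation (standard deviation divided by the mean) to compare relative dispersion across indicators measured on very different scales, can be illustrated as follows. The values and the function name are hypothetical, and the sample standard deviation (denominator n − 1) is assumed.

```python
import numpy as np


def describe(values):
    """Mean, median, sample standard deviation and coefficient of variation."""
    x = np.asarray(values, dtype=float)
    mean = x.mean()
    sd = x.std(ddof=1)                 # sample standard deviation (n - 1)
    return {"mean": mean, "median": float(np.median(x)), "sd": sd, "cv": sd / mean}


# Hypothetical values for five journals.
total_cites = [120, 150, 180, 200, 9000]   # volume indicator with one extreme journal
jif = [1.1, 1.4, 1.8, 2.0, 3.5]            # ratio indicator

for name, v in [("total cites", total_cites), ("JIF", jif)]:
    d = describe(v)
    print(f"{name}: mean={d['mean']:.2f} median={d['median']:.2f} cv={d['cv']:.2f}")
```

A single extreme journal pulls the mean of the volume indicator far above its median and inflates its coefficient of variation, while the ratio indicator stays comparatively compact.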

After performing the Factor Analysis with VARIMAX rotation, the results shown in Table 2 were obtained. The first three factors accumulate about 82.5% of the total variance, so the analysis can be reduced to three dimensions. The factor weights associated with these factors are shown in Table 3.

We note that the first factor is mainly related to indicators representing averages or ratios of citations, that is, normalized metrics, while the second is associated with variables expressed in terms of volume or quantity. In turn, the third factor represents the half-lives of the citations received and made by the journal.

In view of these results, the indicator we propose, the Weighted Factor Index (WFI), which integrates the information contained in the initial 16 metrics, is given by:

$$\mathrm{WFI} = \lambda_1 f_1 + \lambda_2 f_2 + \lambda_3 f_3 \tag{1}$$

that is, the sum of the three uncorrelated factors that accumulate 82.5% of the total variance, each weighted by its own variance. The distribution of variance among the three factors after orthogonal rotation is quite balanced: the second accumulates approximately half the variance of the first, and the third approximately half that of the second.

Applying the WFI to the journals of Information Science and Library Science yields the values and positions shown in Table 4, where the WFI of a given journal is calculated by substituting that journal's factor values into expression (1). For example, for the International Journal of Information Management the factor values are f1 = 3.043, f2 = 0.916 and f3 = −2.396, giving WFI = 21.997.

Because the values of each factor average zero over the sample individuals, in our case the journals of the category, the sign of a factor for a given journal indicates whether that journal lies above or below the category mean on that factor: positive values mean above average and negative values below. For the same reason, the WFI can take negative values.

Table 5 shows the bivariate Pearson and Spearman correlation coefficients for the complete set of indicators, together with the WFI. In general, the metrics are highly correlated with one another, with some exceptions. Open access papers 2015–19 is significantly correlated, albeit weakly, only with the other indicators associated with the same factor (Eigenfactor score, times cited 2015–19, total cites and citable items), apart from a spurious correlation with cited half-life. Cited half-life, in turn, is significantly correlated with open access and with citing half-life, which belongs to the same factor. In contrast, the new WFI indicator is highly correlated with all the bibliometric indicators except open access and citing half-life. Although many indicators are highly correlated, none of them, as such, measures a dimension that can be labeled as the quality of a journal; therefore, it is not possible to construct an objective list of journals from a theoretical point of view based on these metrics (Bornmann et al., 2018).
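For readers reproducing correlations like those in Table 5 with other software, Spearman's coefficient is Pearson's coefficient computed on ranks, with tied values given the average of the ranks they occupy. A NumPy-only sketch with hypothetical function names and data; `scipy.stats.spearmanr` provides an equivalent, well-tested implementation.

```python
import numpy as np


def average_ranks(x):
    """Ranks 1..n; tied values receive the mean of the ranks they occupy."""
    x = np.asarray(x, dtype=float)
    ranks = np.empty(len(x))
    ranks[np.argsort(x, kind="mergesort")] = np.arange(1, len(x) + 1)
    for v in np.unique(x):
        tied = x == v
        ranks[tied] = ranks[tied].mean()
    return ranks


def pearson(x, y):
    return float(np.corrcoef(x, y)[0, 1])


def spearman(x, y):
    """Pearson correlation of the (average) ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical monotonic but non-linear relationship between two indicators.
a = [1, 2, 3, 4, 5]
b = [1, 4, 9, 16, 25]
print(pearson(a, b), spearman(a, b))   # Spearman equals 1 up to rounding; Pearson is below 1
```

This is why two indicators can agree perfectly on the ordering of journals (Spearman) while still differing in their numerical values (Pearson).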

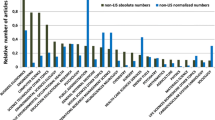

Finally, Table 6 shows the five journals with the highest and lowest values in each of the three factors, together with their positional changes.

Interpretation of results and conclusion

The new index introduced in this work, the Weighted Factor Index (WFI), is partly driven by the JIF and the indicators correlated with it, but it also incorporates the information contained in other metrics, which can be grouped into separate dimensions. In fact, the WFI can be expressed as the sum of three dimensions:

- Factor 1 contains the information related to normalized indicators, that is, those representing an average or a citation rate, such as the JIF and related indices (5-year JIF and JIF without self-citations), AIS, the journal's h-index, the SJR index, the Immediacy index, CiteScore and SNIP. The classic idea of impact can be associated with them.
- Factor 2 represents quantity indicators such as the Eigenfactor score, total cites, citable items, times cited in the period 2015–19, and open access papers published by the journals in the same period. None of them is normalized; they all represent volume.
- Factor 3 represents the long-term citation dimension, since it includes the half-lives of the citations received and made by each journal, two indicators that follow the same aging model described by Brookes (1970). They reflect the opposite of the Immediacy index, included in the first factor, which has the narrowest time window. Both types of indicator are related to the aging of the literature.

In the sum that makes up the WFI, each factor is weighted by its contribution to the total variance, that is, by its corresponding eigenvalue. As seen in Table 2, the weight of the first factor is approximately twice that of the second, which in turn is approximately twice that of the third.

From the reordering provided by the WFI, shown in Table 4, some noteworthy changes are observed for certain journals, although overall the reordering is highly correlated with the JIF, both quantitatively (Pearson coefficient = 0.912) and by rank (Spearman coefficient = 0.945). The greatest differences are mainly associated with the second and third factors. On the one hand, Scientometrics (rising from position 21 to 7), Journal of Health Communication (from 41 to 27) and Qualitative Health Research (from 25 to 12) have high values on the second factor. In the opposite direction, we can mention Learned Publishing (falling from position 26 to 40), which has one of the lowest values in the half-life dimension, along with Journal of Organizational and End User Computing (from 38 to 51) and Malaysian Journal of Library & Information Science (from 46 to 59).
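The positional changes reported here, such as Scientometrics moving from position 21 to 7, are differences between two descending rankings of the same journals. A small sketch with hypothetical function names and values; ties are broken by input order, which is an assumption, since the text does not state how ties were handled.

```python
def positions(values):
    """Rank positions in descending order (1 = highest value); ties keep input order."""
    order = sorted(range(len(values)), key=lambda i: -values[i])
    pos = [0] * len(values)
    for p, i in enumerate(order, start=1):
        pos[i] = p
    return pos


def position_changes(jif, wfi):
    """Positive = the journal climbs when ranked by WFI instead of JIF."""
    return [a - b for a, b in zip(positions(jif), positions(wfi))]


# Hypothetical values for three journals.
jif = [5.0, 4.0, 3.0]
wfi = [10.0, 20.0, -1.0]
print(positions(jif), positions(wfi), position_changes(jif, wfi))
# [1, 2, 3] [2, 1, 3] [-1, 1, 0]
```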

Table 6 lists the five journals with the highest and lowest values in each of the three dimensions. The case of the International Journal of Information Management is worth highlighting. It ranks first by JIF and on the first factor, but last on the third factor owing to its short cited half-life of only 4.6 years, which makes it drop one position in the WFI ranking: it is displaced from first place by MIS Quarterly, owing to the strength of its values on factors 1 and 3. Similarly, the Journal of Strategic Information Systems falls from position 4 to 9, as it also has a relatively low value on the second factor.

Two journals in the lower-middle zone of the ranking change position significantly between the two ranking criteria. The first is Information Research, which occupies the last position on Factor 1 but is fourth on Factor 2, allowing it to rise from position 63 (according to JIF) to 52 (according to WFI). The second is Social Science Information sur les Sciences Sociales, which is among the last five on Factor 1 but second on Factor 2, rising from position 59 by JIF to position 47 by WFI.

Given this interpretation of the factors, an alternative to integrating the three factors into a single index, as proposed in this work, would be to consider only some of them, depending on the purpose of the analysis. For example, to classify journals by citation rate alone, only the first factor would be considered; if the interest lay in the volume of citations, the analysis would be based on the second factor.

An interesting point to consider is the claim that the more articles a journal publishes, the higher its impact factor, with a direct linear relationship between journal production and impact factor (Rousseau & Van Hooydonk, 1996). This point can be debated; in fact, this work combines normalized indicators such as the JIF, the AIS or the Immediacy index (assigned to the first factor) with others that are not normalized, such as the Eigenfactor score, times cited or number of open access papers (assigned to the second factor). Although this may seem an erroneous methodological approach, Factor Analysis itself, as we have seen, takes care of configuring the model so that each factor groups homogeneous indicators. A similar situation arises when estimating a regression model for a given response variable, where the explanatory variables can collect very diverse information and may even be qualitative.

In conclusion, the indicator introduced in this article, the Weighted Factor Index, aggregates the information from various metrics into a single indicator through mutually uncorrelated terms, so that this information does not overlap. Therefore, a more reliable and complete ranking of journals within a given category can be obtained than when each indicator is used in isolation.

Of course, the results obtained in this article correspond to the field of Information Science and Library Science, and may differ when studying other subject areas. Extrapolation to other categories included in the JCR would be interesting and will be approached in subsequent research efforts.

References

Abramo, G., D’Angelo, C. A., & Felici, G. (2019). Predicting long-term publication impact through a combination of early citations and journal impact factor. Journal of Informetrics, 13(1), 32–49. https://doi.org/10.1016/j.joi.2018.11.003

Ayaz, S., Masood, N., & Islam, M. A. (2018). Predicting scientific impact based on h-index. Scientometrics, 114(3), 993–1010. https://doi.org/10.1007/s11192-017-2618-1

Bollen, J., Van de Sompel, H., Hagberg, A., & Chute, R. (2009). A principal component analysis of 39 scientific impact measures. PLoS ONE, 4(6), e6022. https://doi.org/10.1371/journal.pone.0006022

Bornmann, L., Butz, A., & Wohlrabe, K. (2018). What are the top five journals in economics? A new meta-ranking. Applied Economics, 50(6), 659–675. https://doi.org/10.1080/00036846.2017.1332753

Bravo, G., Farjam, M., Grimaldo, F., Birukou, A., & Squazzoni, F. (2018). Hidden connections: Network effects on editorial decisions in four computer science journals. Journal of Informetrics, 12(1), 101–112. https://doi.org/10.1016/j.joi.2017.12.002

Brookes, B. C. (1970). Obsolescence of special library periodicals: Sampling errors and utility contours. Journal of the American Society for Information Science, 21, 320–329.

Clarivate Analytics. (2020). InCites journal citation reports. Retrieved from https://jcr.clarivate.com/JCRHomePageAction.action

Garfield, E. (1955). Citation indexes for science: A new dimension in documentation through association of ideas. Science, 122(3159), 108–111. https://doi.org/10.1126/science.122.3159.108

Hotelling, H. (1933). Analysis of a complex of statistical variables into principal components. Journal of Educational Psychology, 24(6), 417–441. https://doi.org/10.1037/h0071325

Kaiser, H. F. (1958). The varimax criterion for analytic rotation in factor analysis. Psychometrika, 23, 187–200. https://doi.org/10.1007/BF02289233

Malay, D. S. (2013). Impact factors and other measures of a journal’s influence. The Journal of Foot and Ankle Surgery, 52(3), 285–287. https://doi.org/10.1053/j.jfas.2013.03.039

Ortega, J. L. (2020). Proposal of composed altmetric indicators based on prevalence and impact dimensions. Journal of Informetrics, 14(4), Article 101071. https://doi.org/10.1016/j.joi.2020.101071

Park, S. (2015). The R&D logic model: Does it really work? An empirical verification using successive binary logistic regression models. Scientometrics, 105(3), 1399–1439. https://doi.org/10.1007/s11192-015-1764-6

Qian, Y., Rong, W., Jiang, N., Tang, J., & Xiong, Z. (2017). Citation regression analysis of computer science publications in different ranking categories and subfields. Scientometrics, 110(3), 1351–1374. https://doi.org/10.1007/s11192-016-2235-4

Roberts, R. J. (2017). An obituary for the impact factor. Nature, 546(7660), 600. https://doi.org/10.1038/546600e

Rousseau, R., & Van Hooydonk, G. (1996). Journal production and journal impact factors. Journal of the American Society for Information Science, 47(10), 775–780.

Seiler, C., & Wohlrabe, K. (2012). Ranking economists on the basis of many indicators: An alternative approach using RePEc data. Journal of Informetrics, 6(3), 389–402. https://doi.org/10.1016/j.joi.2012.01.007

Spearman, C. (1904). General intelligence objectively determined and measured. American Journal of Psychology, 15(2), 201–293. https://doi.org/10.2307/1412107

Valderrama, P., Escabias, M., Jiménez-Contreras, E., Valderrama, M. J., & Baca, P. (2018a). A mixed longitudinal and cross-sectional model to forecast the journal impact factor in the field of Dentistry. Scientometrics, 116(2), 1203–1212. https://doi.org/10.1007/s11192-018-2801-z

Valderrama, P., Escabias, M., Jiménez-Contreras, E., Rodríguez-Archilla, A., & Valderrama, M. J. (2018b). Proposal of a stochastic model to determine the bibliometric variables influencing the quality of a journal: Application to the field of dentistry. Scientometrics, 115(2), 1087–1095. https://doi.org/10.1007/s11192-018-2707-9

Valderrama, P., Escabias, M., Valderrama, M. J., Jiménez-Contreras, E., & Baca, P. (2020). Influential variables in the journal impact factor in Dentistry journals. Heliyon, 6(3), Article e03575. https://doi.org/10.1016/j.heliyon.2020.e03575

Acknowledgements

Although acknowledgements are often written merely as a courtesy, in our case we sincerely thank two anonymous reviewers for the interest and time they devoted to studying our work, since their suggestions contributed decisively to improving its final version.

Funding

Funding for open access charge: Universidad de Granada / CBUA. This research was funded in part by project PID2020-113961GB-I00 of the Spanish Ministry of Science and Innovation, project A-FQM-66-UGR20 of Vicerectorate for Research and Transfer of the University of Granada (also supported by the FEDER program), and project FQM-307 of the Government of Andalusia (Spain).


Ethics declarations

Conflict of interest

The authors declare no potential conflicts of interest with respect to the research, authorship or publication of this article.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Valderrama, P., Jiménez-Contreras, E., Escabias, M. et al. Introducing a bibliometric index based on factor analysis. Scientometrics 127, 509–522 (2022). https://doi.org/10.1007/s11192-021-04195-4


DOI: https://doi.org/10.1007/s11192-021-04195-4