Abstract

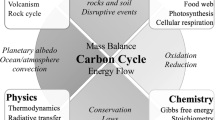

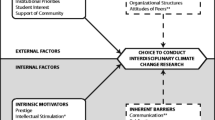

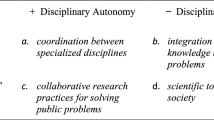

Growing interest in interdisciplinary (ID) understanding has led to the recent development of four ID assessments, none of which had previously been comprehensively validated. Sources of validity evidence for a test include evidence of construct validity, such as the test's internal structure. ID tests may (and should) assess both disciplinary (D) and ID understanding, and their internal structure can be examined to determine whether the theoretical relationship between D and ID knowledge is supported by empirical evidence. In this paper, we present an analysis of the internal structure of ISACC, an ID assessment of carbon cycling, selected because its developers included both disciplinary and ID items and posited a relationship between these two types of knowledge. Responses from 454 high school and college students were analyzed with confirmatory factor analysis (CFA) in Mplus to examine internal structure, focusing on dimensionality, functioning by gender and by race/ethnicity, and reliability. CFA confirmed that the underlying structure of the ISACC best matches a two-factor path model, supporting the developers' theoretical hypothesis that disciplinary understanding influences interdisciplinary understanding. No gender effect was found in the factor structure of the ISACC, indicating that females and males perform similarly. Differences in performance by race/ethnicity resembled those found on other science assessments, pointing to possible ethnic bias in the instrument. The reliability coefficients for the two-factor path model were found to be sufficient. This study highlights the importance of internal structure as a source of validity evidence for test instruments and models a procedure for assessing it.
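The abstract reports that reliability was sufficient but does not say which coefficient was used. As a hedged illustration only, the sketch below computes two coefficients that appear in the reference list: KR-20, which equals coefficient alpha when items are scored 0/1 (Cronbach, 1951; Zimmerman, 1972), and a latent-variable composite reliability (omega) from the standardized loadings of one congeneric factor (Raykov, 2009). The function names `kr20` and `omega`, the toy response matrix, and the loadings are hypothetical and do not come from the ISACC data; the omega formula assumes uncorrelated errors and treats items as continuous, which is a simplification for binary items.

```python
# Hedged sketch: two common reliability coefficients for one factor's items.
# All data below are hypothetical toy values, not ISACC responses or estimates.
from typing import Sequence


def kr20(responses: Sequence[Sequence[int]]) -> float:
    """KR-20, i.e., coefficient alpha for items scored 0/1.

    responses[p][i] is 1 if person p answered item i correctly, else 0.
    """
    n_people = len(responses)
    k = len(responses[0]) if responses else 0
    if n_people < 2 or k < 2:
        raise ValueError("need at least two people and two items")
    # Proportion correct per item; p * (1 - p) is that item's variance.
    p = [sum(row[i] for row in responses) / n_people for i in range(k)]
    item_var_sum = sum(pi * (1 - pi) for pi in p)
    # Total-score variance with the same (population) divisor as p * (1 - p).
    totals = [sum(row) for row in responses]
    mean_total = sum(totals) / n_people
    total_var = sum((t - mean_total) ** 2 for t in totals) / n_people
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


def omega(std_loadings: Sequence[float]) -> float:
    """Composite reliability for one congeneric factor.

    Assumes standardized loadings and uncorrelated errors, so each
    error variance is 1 - loading**2.
    """
    loading_sum = sum(std_loadings)
    error_sum = sum(1 - lam ** 2 for lam in std_loadings)
    return loading_sum ** 2 / (loading_sum ** 2 + error_sum)


if __name__ == "__main__":
    # Hypothetical 5 students x 4 items with a Guttman-like pattern.
    toy = [
        [1, 1, 1, 1],
        [1, 1, 1, 0],
        [1, 1, 0, 0],
        [1, 0, 0, 0],
        [0, 0, 0, 0],
    ]
    print(round(kr20(toy), 2))                      # prints 0.8
    print(round(omega([0.7, 0.7, 0.7, 0.7]), 3))    # prints 0.794
```

For a two-factor model such as the one described above, each coefficient would typically be computed separately for the disciplinary items and for the interdisciplinary items, since both coefficients assume the items measure a single factor.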

References

Abraham, J. (2004). Multidisciplinary explorations: Bridging the gap between engineering and biology. Journal of College Science Teaching, 33(5), 27–31.

American Educational Research Association (AERA), American Psychological Association (APA), & National Council on Measurement in Education (NCME). (2014). Standards for educational and psychological testing. Washington, DC: AERA.

Australian Curriculum, Assessment and Reporting Authority (ACARA). (2016). ACARA STEM connections project report. Retrieved from https://www.australiancurriculum.edu.au/media/3220/stem-connections-report.pdf

Boix Mansilla, V., & Duraisingh, E. D. (2007). Targeted assessment of students' interdisciplinary work: An empirically grounded framework proposed. The Journal of Higher Education, 78(2), 215–237.

Boone, W. J., Staver, J. R., & Yale, M. S. (2014). Rasch analysis in the human sciences. Dordrecht: Springer Netherlands.

Boone, W. J. (2016). Rasch analysis for instrument development: Why, when, and how? CBE—Life Sciences Education, 15(4), rm4.

Chen, F. F., West, S. G., & Sousa, K. H. (2006). A comparison of bifactor and second-order models of quality of life. Multivariate Behavioral Research, 41(2), 189–225.

Chi, M. T. H., & Ceci, S. J. (1987). Content knowledge: Its role, representation, and restructuring in memory development. In H. W. Reese (Ed.), Advances in child development and behavior (Vol. 20, pp. 91–142). New York: Academic Press.

Clark, L. A., & Watson, D. (1995). Constructing validity: Basic issues in objective scale development. Psychological Assessment, 7(3), 309–319.

Cronbach, L. J. (1951). Coefficient alpha and the internal structure of tests. Psychometrika, 16(3), 297–334.

Dimitrov, D. M. (2010). Testing for factorial invariance in the context of construct validation. Measurement and Evaluation in Counseling and Development, 43(2), 121–149.

DiStefano, C., Liu, J., Jiang, N., & Shi, D. (2018). Examination of the weighted root mean square residual: Evidence for trustworthiness? Structural Equation Modeling, 25(3), 453–466.

Doerschuk, P., Bahrim, C., Daniel, J., Kruger, J., Mann, J., & Martin, C. (2016). Closing the gaps and filling the STEM pipeline: A multidisciplinary approach. Journal of Science Education and Technology, 25(4), 682–695.

Education Scotland. (2012). CfE Briefing Interdisciplinary Learning. Retrieved from https://education.gov.scot/Documents/cfe-briefing-4.pdf

Erduran, S., Guilfoyle, L., Park, W., Chan, J., & Fancourt, N. (2019). Argumentation and interdisciplinarity: Reflections from the Oxford argumentation in religion and science project. Disciplinary and Interdisciplinary Science Education Research, 1(8), 1–10.

Garner, J. K., Kaplan, A., Hathcock, S., & Bergey, B. (2020). Concept mapping as a mechanism for assessing science teachers’ cross-disciplinary field-based learning. Journal of Science Teacher Education, 31(1), 8–33.

Gipps, C. V., & Murphy, P. (1994). A fair test? Assessment, achievement and equity. Open University Press.

Hirschfeld, G., & Von Brachel, R. (2014). Improving multiple-group confirmatory factor analysis in R: A tutorial in measurement invariance with continuous and ordinal indicators. Practical Assessment, Research, and Evaluation, 19(1), 7.

Holbrook, J. B. (2013). What is interdisciplinary communication? Reflections on the very idea of disciplinary integration. Synthese, 190, 1865–1879.

Hu, L. T., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling, 6(1), 1–55.

Hurd, P. D. (1991). Why we must transform science education. Educational Leadership, 49(2), 33–35.

Kane, M. T. (2006). Validation. In R. L. Brennan (Ed.), Educational measurement (4th ed., pp. 17–63). Westport: Praeger Publishers.

Khisty, L. L. (1995). Making inequality: Issues of language and meanings in mathematics teaching with Hispanic students. In W. G. Secada, E. Fennema, & L. B. Adajian (Eds.), New directions for equity in mathematics education (pp. 279–297). Cambridge: Cambridge University Press.

Kline, R. B. (2015). Principles and practice of structural equation modeling (4th ed.). New York: Guilford Publications.

Kockelmans, J. J. (1979). Why interdisciplinarity? In J. J. Kockelmans (Ed.), Interdisciplinarity and higher education. University Park: Pennsylvania State University Press.

Lattuca, L. R., Voigt, L. J., & Fath, K. Q. (2004). Does interdisciplinarity promote learning? Theoretical support and researchable questions. The Review of Higher Education, 28(1), 23–48.

LoGerfo, L., Nichols, A., & Reardon, S. F. (2006). Achievement gains in elementary and high school. Washington, DC: Urban Institute.

Lord, F. M., & Novick, M. R. (1968). Statistical theories of mental test scores. Reading, MA: Addison-Wesley.

Marasco, E. A., & Behjat, L. (2013). Developing a cross-disciplinary curriculum for the integration of engineering and design in elementary education. In Proceedings of the 2013 American Society for Engineering Education Annual Conference and Exposition, Atlanta, GA.

Marshall, J., Banner, J., & You, H. (2018). Assessing the effectiveness of sustainability learning. Journal of College Science Teaching, 47(3), 57–67.

Messick, S. (1989). Validity. In R. L. Linn (Ed.), Educational measurement (3rd ed., pp. 13–103). New York: American Council on Education, Macmillan Publishing.

Ministry of Education. (2015). The 2015 revised science curriculum. Report no. 2015–74. Sejong: Ministry of Education.

Moss, P. A. (1992). Shifting conceptions of validity in educational measurement: Implications for performance assessment. Review of Educational Research, 62(3), 229–258.

Musu-Gillette, L., de Brey, C., McFarland, J., Hussar, W., Sonnenberg, W., & Wilkinson-Flicker, S. (2017). Status and trends in the education of racial and ethnic groups 2017 (NCES 2017-051). Washington, DC: U.S. Department of Education, National Center for Education Statistics. Retrieved [Sep 16] from http://nces.ed.gov/pubsearch

Muthén, L. K., & Muthén, B. O. (1998–2018). Mplus user's guide (8th ed.). Los Angeles: Muthén & Muthén.

National Research Council. (2001). Knowing what students know: The science and design of educational assessment (J. W. Pellegrino, N. Chudowsky, & R. Glaser, Eds.). Board on Testing and Assessment, Center for Education, Division of Behavioral and Social Sciences and Education. Washington, DC: National Academy Press.

National Research Council. (2012). A framework for K-12 science education: Practices, crosscutting concepts, and core ideas. Committee on a Conceptual Framework for New K-12 Science Education Standards, Board on Science Education, Division of Behavioral and Social Sciences and Education. Washington, DC: The National Academies Press.

Neurath, O. (1996). Unified science as encyclopedic integration. In S. Sarkar (Ed.), Logical empiricism at its peak: Schlick, Carnap, and Neurath (pp. 309–335). Boston: Harvard University.

NGSS Lead States. (2013). Next generation science standards: For states, by states. Washington, DC: National Academies Press.

Nowacek, R. S. (2005). A discourse-based theory of interdisciplinary connections. The Journal of General Education, 54(3), 171–195.

Raykov, T. (2001). Bias of coefficient α for fixed congeneric measures with correlated errors. Applied Psychological Measurement, 25(1), 69–76.

Raykov, T. (2009). Evaluation of scale reliability for unidimensional measures using latent variable modeling. Measurement and Evaluation in Counseling and Development, 42(3), 223–232.

Reiska, P., Soika, K., & Cañas, A. J. (2018). Using concept mapping to measure changes in interdisciplinary learning during high school. Knowledge Management & E-Learning: An International Journal (KM&EL), 10(1), 1–24.

Rennie, L., Wallace, J., & Venville, G. (2012). Integrating science, technology, engineering, and mathematics: Issues, reflections, and ways forward. In L. Rennie, G. Venville, & J. Wallace (Eds.), Exploring curriculum integration: Why integrate? (pp. 1–11). Routledge.

Schaal, S., Bogner, F. X., & Girwidz, R. (2010). Concept mapping assessment of media assisted learning in interdisciplinary science education. Research in Science Education, 40(3), 339–352.

Schumacker, R. E., & Lomax, R. G. (2010). A beginner's guide to structural equation modeling. New York: Routledge.

Sedlacek, W. E. (1994). Issues in advancing diversity through assessment. Journal of Counseling & Development, 72(5), 549–553.

Shen, J., Liu, O. L., & Sung, S. (2014). Designing interdisciplinary assessments in sciences for college students: An example on osmosis. International Journal of Science Education, 36(11), 1773–1793. https://doi.org/10.1080/09500693.2013.879224

Sireci, S. G. (2007). On validity theory and test validation. Educational Researcher, 36(8), 477–481.

Smith, E. V. (2001). Evidence for the reliability of measures and validity of measure interpretation: A Rasch measurement perspective. Journal of Applied Measurement, 2(3), 281–311.

Stock, P., & Burton, R. J. (2011). Defining terms for integrated (multi-inter-transdisciplinary) sustainability research. Sustainability, 3(8), 1090–1113.

Tate, W. F. (1994). Race retrenchment and reform of school mathematics. Phi Delta Kappan, 75(6), 477–480.

Willingham, J. C., Pair, J. D., & Parrish, J. C. (2016). A framework for cross-disciplinary engineering projects. Science Scope, 39(7), 68.

Wilson, M. (2005). Constructing measures: An item response modeling approach. Lawrence Erlbaum Associates Publishers.

Wolfe, C. R., & Haynes, C. (2003). Assessing interdisciplinary writing. Peer Review, 6(1), 126–169.

Wright, B. D., & Stone, M. H. (1979). Best test design: Rasch measurement. Chicago, IL: Mesa Press.

You, H. S. (2017). Why teach science with an interdisciplinary approach: History, trends, and conceptual frameworks. Journal of Education and Learning, 6(4), 66–77.

You, H. S., Marshall, J. A., & Delgado, C. (2018). Assessing students' disciplinary and interdisciplinary understanding of global carbon cycling. Journal of Research in Science Teaching, 55(3), 377–398.

You, H. S., Marshall, J. A., & Delgado, C. (2019). Toward interdisciplinary learning: Development and validation of an assessment for interdisciplinary understanding of global carbon cycling. Research in Science Education, 1–25.

Yu, C. Y. (2002). Evaluating cutoff criteria of model fit indices for latent variable models with binary and continuous outcomes (Unpublished doctoral dissertation). University of California, Los Angeles, CA.

Zimmerman, D. W. (1972). Test reliability and the Kuder-Richardson formulas: Derivation from probability theory. Educational and Psychological Measurement, 32(4), 939–954.

About this article

Cite this article

You, H.S., Park, S., Marshall, J.A. et al. Interdisciplinary Science Assessment of Carbon Cycling: Construct Validity Evidence Based on Internal Structure. Res Sci Educ 52, 473–492 (2022). https://doi.org/10.1007/s11165-020-09943-9

DOI: https://doi.org/10.1007/s11165-020-09943-9