Abstract

We provide an applied introduction to Bayesian estimation methods for empirical accounting research. To showcase the methods, we compare and contrast the estimation of accruals models via a Bayesian approach with the literature’s standard approach. The standard approach takes a given model of normal accruals for granted and neglects any uncertainty about the model and its parameters. By contrast, our Bayesian approach allows incorporating parameter and model uncertainty into the estimation of normal accruals. This approach can increase power and reduce false positives in tests for opportunistic earnings management as a result of better estimates of normal accruals and more robust inferences. We advocate the greater use of Bayesian methods in accounting research, especially since they can now be easily implemented in popular statistical software packages.


1 Introduction

A central theme in accounting is the measurement of latent constructs (e.g., economic earnings, managerial effort, or abnormal accruals). Such measurement is inherently uncertain (e.g., Barker et al. 2020). To account for the uncertainty, the Bayesian framework provides a formal approach (e.g., Gelman et al. 2013) that is commonly used in accounting theory (e.g., Johnstone 2018). In empirical accounting research, by contrast, the formal Bayesian approach is uncommon, despite its conceptual appeal. [Footnote 1] An important barrier to adoption has been the Bayesian framework’s computational requirements. But as a result of increasing computational power, this barrier has diminished in recent years. A remaining barrier is unfamiliarity.

We provide an applied introduction to key methods of Bayesian estimation relevant for the measurement of latent constructs, including Bayesian regression, Bayesian hierarchical modelling, and Bayesian model averaging. To illustrate the methods, we apply them to a central concern of accounting researchers, investors, and regulators: the detection of accruals-based earnings management (e.g., Healy & Wahlen 1999; Dechow et al. 2010; Lewis 2012). A key challenge in detecting managed abnormal accruals is researchers’ uncertainty about the determinants of normal accruals, absent earnings management (Ball, 2013). Faced with this uncertainty, researchers default to models of normal accruals to detect accruals-based earnings management. Several studies, for example, use a variant of the seminal Jones (1991) model. But reliance on any given model and its estimates risks overstating the confidence of conclusions by producing false positives. The likely false positives are not only troubling to the literature (e.g., Ball 2013) but also concerning to investors allocating scarce capital and regulators allocating enforcement resources (e.g., Lewis 2012). [Footnote 2]

Using the popular Jones (1991) model, we first conceptually discuss and then empirically document how Bayesian methods increase power and reduce false positives in tests of accruals-based earnings management by combining various sources of information (e.g., priors and data), according to their relative (un)certainty. The Jones model provides a useful example because its OLS-based estimation is well known, allowing a contrast with the Bayesian approach. In addition, the Jones model, while widely employed, is strongly criticized for producing false positives (e.g., Dechow et al. 2010; Ball 2013), allowing us to tackle a first-order problem of this important model.

Bayesian estimation per se does not solve all the issues with the Jones model, nor does it eliminate the need for model fixes and alternative models. It instead allows combining and assessing the importance of various fixes and alternatives via Bayesian model averaging, a technique increasingly applied to fundamental questions in economics (for a review, see Steel 2020). Given this feature, we use the basic modified Jones model (Dechow et al. 1995) for the purpose of exposition, but note that the conceptual benefits of the proposed Bayesian estimation only increase with the incorporation of more, and more recent, models used in the literature and practice (Piironen and Vehtari, 2017). In this spirit, we apply our Bayesian approach to an extended set of models, including popular and recent ones (e.g., Dechow et al. 1995; McNichols 2002; Ball & Shivakumar 2006; Collins et al. 2017; Frankel & Sun 2018). The resulting model-averaged estimates of abnormal accruals can readily be used by researchers interested in explaining or controlling for accruals-based earnings management. [Footnote 3]

To conceptually discuss the benefits of our Bayesian approach, we compare and contrast it with the literature’s standard approach to detecting accruals-based earnings management. The standard approach entails three steps. First, researchers choose a model for normal accruals (i.e., accruals explained by fundamental forces), usually the modified Jones model (Dechow et al. 1995). [Footnote 4] Next, they estimate the model’s parameters (i.e., the regression coefficients). To account for heterogeneity in the accruals process, they typically estimate the model separately for different groups of observations, usually industry-years with a minimum number of observations. Finally, researchers use the estimated model parameters to generate a point prediction of normal accruals for a given firm-year. Any deviation from this prediction is classified as abnormal (i.e., accruals potentially affected by managerial discretion). A crucial point is that this approach pushes any uncertainty about the predicted level of normal accruals into the abnormal accruals measure.

The Bayesian approach allows for an explicit accounting for researchers’ prediction uncertainty in abnormal accruals estimation. It can account for the two central components of prediction uncertainty: uncertainty about the parameters of a chosen model (e.g., the coefficient values of the Jones model) and uncertainty about the model to choose. The Bayesian estimation of a given model’s parameters produces a posterior distribution for each of the parameter values. Equipped with these distributions, we can obtain a distribution of normal accruals for each firm-year, instead of merely a point prediction. This distribution, called posterior predictive distribution, incorporates the parameter uncertainty, given a chosen model. Notably, this distribution can be calculated for several variants of the Jones model and alternatives. Using Bayesian averaging techniques, the various models’ accruals predictions can be combined to yield a model-averaged predictive distribution of normal accruals. This distribution explicitly incorporates the uncertainty about the best model (within the set of considered models). We can use this distribution of normal accruals to discern the extent of plausibly abnormal accruals. This extent can be expected to be substantially reduced, compared to the extent implied by the standard approach, which conflates abnormal accruals and prediction uncertainty.

Following the conceptual discussion, we implement the Bayesian approach to empirically assess its benefits. We explicitly consider two model variants in our empirical implementation: models with varying levels of parameter heterogeneity and those with varying extents of controls. With respect to parameter heterogeneity (across groups), we implement a model allowing for parameters varying at the industry-year level (consistent with the literature, e.g., DeFond & Jiambalvo 1994) and a model allowing for firm-level heterogeneity (in the spirit of Owens et al. 2017). We implement the parameter heterogeneity using Bayesian hierarchical modelling, which uses two sources of information about a group’s parameters, that is, within- and across-group data. The less within-group information available for a particular group, the more that group’s coefficient estimates rely on the information provided by other groups. This cross-group learning obviates any ad hoc cutoffs and allows accommodating even firm-level heterogeneity. With respect to the extent of controls, we implement a model with the basic determinants included in the modified Jones model and one model with performance, financing, and volatility controls (in the spirit of prominent model critiques, e.g., Kothari et al. 2005).

Our empirical implementation confirms the conceptual benefits of the Bayesian approach and yields additional insights. First, we find that the firm-level model with extensive controls receives the greatest weight in the Bayesian model-averaging stage. This finding highlights that it is important not only to control for fundamental factors, such as performance, but also to allow for firm-level heterogeneity. To date, firm-level heterogeneity in accruals models has been neglected, because of concerns about the required number of observations (per firm). The Bayesian hierarchical approach circumvents this constraint, making it possible to account for a relevant level of heterogeneity in firms’ accruals processes.

Second, we find that the distribution of plausible normal accruals, after accounting for parameter and (selected) model uncertainty, is quite wide. This finding suggests the large extent of abnormal accruals (e.g., in terms of percentage of total assets) observed in the literature largely reflects parameter and model uncertainty, rather than truly unexpected or abnormal accruals.

Third, we document that the predicted normal (abnormal) accruals of the Bayesian averaged model are more (less) correlated with total accruals than the normal (abnormal) accruals obtained using the literature’s standard approach, even after controlling for performance, financing, and volatility. This finding suggests that combining various models, including models with firm-level heterogeneity, substantially improves our ability to model firms’ accruals. As long as the vast amount of firms’ accruals is driven by factors other than opportunistic reporting incentives, such improved model fit translates into a reduced risk of false positives (e.g., Dechow et al. 2010).

Lastly, we find that, in simulations with randomly assigned true and false earnings management indicators, the Bayesian approach increases the power of earnings management tests and reduces the incidence of false positives, relative to the standard approach. This improvement is particularly evident in cases when accruals processes vary at the firm level, instead of the industry-year level, and when there is high uncertainty (e.g., among young firms with few observations and volatile fundamentals). Notably, our earlier evidence suggests accruals processes indeed primarily vary at the firm, not industry-year, level. Moreover, the literature often uses opportunistic incentive variables correlated with uncertainty. In these cases (misspecified model and limited or noisy data), our simulations suggest that the standard approach can frequently yield false positives, while a Bayesian approach provides more powerful and robust inferences.

In a final step, we extend our Bayesian estimation to incorporate accruals models besides the Jones model. Following a review of the recent literature, we consider industry-year and firm-level variants of the models developed by Dechow et al. (1995), Kothari et al. (2005), Dechow and Dichev (2002), McNichols (2002), Ball and Shivakumar (2006), Collins et al. (2017), and Frankel and Sun (2018). The averaged model gives nonzero weights to all models, documenting the importance of including various empirical models. The greatest weight is put on more recent models, especially Frankel and Sun (2018), and model variants allowing for firm-level heterogeneity, consistent with our previous finding.

We assess the usefulness of the averaged model by examining its ability to explain future performance and popular earnings management indicators (AAERs, restatements, and comment letters). We find that the normal accruals produced by our averaged model explain future performance better than those of the individual (OLS-based) models, while its (absolute) abnormal accruals exhibit a stronger relation with earnings management indicators than those of the individual models. Collectively, these findings indicate the usefulness of the normal and abnormal accruals estimates produced by our averaged model.

Our paper contributes to the accounting literature by illustrating key methods of Bayesian estimation of relevance to empirical accounting research. Bayesian methods, while conceptually appealing, have received limited attention in applied work until recently, because they require computational power and methodological expertise. These barriers to their application, however, are vanishing, as a result of the rapid expansion of computational power and the implementation of ready-made Bayesian methods into popular applied statistics programs (e.g., R and STATA). Taking advantage of these trends, recent work in accounting has adopted select Bayesian methods (e.g., hierarchical modelling) to uncover latent constructs, such as a new earnings quality measure (Du et al. 2020) and investor learning (Zhou, 2021). We add to this emerging stream of the literature by providing a timely illustration of various Bayesian methods, including Bayesian regression, Bayesian hierarchical modelling, and, in particular, Bayesian model averaging. This illustration should help make the new empirical tools more accessible and reduce barriers to adoption. [Footnote 5]

Our paper also contributes to the extensive literature on accruals-based earnings management. We suggest a novel estimation approach to address a central, unaddressed criticism of the literature: researchers’ uncertainty in specifying normal accruals and the corresponding concern about false positives. Our proposed approach provides a natural way to incorporate uncertainty into the prediction of normal accruals derived from accruals models. It improves the literature’s standard approach in two key ways. First, it uses more information and models, leading to better predictions and greater power in tests for (opportunistic) abnormal accruals. Second, it accounts for model and parameter uncertainty, reducing the incidence of false positives.

More broadly, Bayesian estimation promises to enhance the measurement and identification of several other constructs of interest, such as productivity (Syverson, 2011), investment inefficiency (Biddle & Hilary, 2006; Biddle et al. 2009), real earnings management (Roychowdhury, 2006), abnormal R&D activity (Kothari et al. 2015), abnormal tone (Huang et al. 2014), and abnormal readability (Bushee et al. 2018). Each of these constructs, like abnormal accruals, is measured using models predicting normal behavior, which researchers are uncertain about. Using the familiar example of abnormal accruals as an application, we provide a relevant and timely introduction to key methods of Bayesian estimation and explain how they can help researchers with their predictions.

Before delving into the details of our approach, we acknowledge that the presented Bayesian methods, while eliminating several ad hoc choices typically made by researchers (e.g., cutoff values for industry-year observations), are not free from subjectivity. Researchers must specify the distributions representing the beliefs and data. In particular, they must explicitly specify prior beliefs. This feature often invokes criticism. We note that prior beliefs are usually chosen such that the data, not the prior, chiefly determine the posterior belief. In cases of limited data and reasonable expectations about plausible values (e.g., regarding the range of economically plausible coefficient values), however, we actually view the ability to inject information into the prior as an advantage, not a drawback, of Bayesian methods. This feature prevents over-fitting the data and enables cross-study learning and truly cumulative empirical work. [Footnote 6]

2 Concepts

2.1 Prediction problem

Researchers need to know firms’ normal accruals process to discern abnormal deviations, due to managerial discretion and opportunism. However, this sort of knowledge has remained elusive to date. Absent perfect knowledge of the normal process, the modelling of the normal process and measurement of abnormal accruals is akin to a prediction problem. Given researchers’ imperfect knowledge and data, the goal is to produce the best prediction of normal accruals possible. This prediction, however, is naturally uncertain, and the uncertainty should be considered in classifying firms’ accruals as abnormal. In the presence of prediction uncertainty, not every deviation from predicted normal accruals can confidently be classified as abnormal.

2.1.1 Standard approach

The literature’s standard approach to predicting normal accruals proceeds in three steps. Researchers first pick a model specifying the relevant determinants (e.g., change in revenues) and functional forms (e.g., linear model) of normal accruals, typically the modified Jones model:

\[ TA_{i,t} = \beta_{0} InvAt_{i,t-1} + \beta_{1} \triangle CRev_{i,t} + \beta_{2} PPE_{i,t} + \epsilon_{i,t} \tag{1} \]

where \(TA_{i,t}\) is total accruals, \(InvAt_{i,t-1}\) is the inverse of lagged total assets, \(\triangle CRev_{i,t}\) is the change in cash revenues scaled by lagged total assets, and \(PPE_{i,t}\) is property, plant, and equipment scaled by lagged total assets. [Footnote 7] (For detailed variable definitions, see Table 1.)

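The model’s parameters (i.e., coefficients) are treated as unknown but estimable quantities. In the second step, these parameters are estimated via ordinary least squares (OLS). Typically, the parameters (\(\Theta_{g}\)) are estimated at the industry-year level (g), for industry-years with a minimum number of observations, to allow for heterogeneity in the accruals process across industries (j = 1,...,J) and time (t = 1,...,T):

\[ \hat{\Theta}_{g} = \arg\min_{\Theta_{g}} \sum_{(i,t) \in g} \left( TA_{i,t} - \beta_{0,g} InvAt_{i,t-1} - \beta_{1,g} \triangle CRev_{i,t} - \beta_{2,g} PPE_{i,t} \right)^{2}, \quad \Theta_{g} = (\beta_{0,g}, \beta_{1,g}, \beta_{2,g}) \]

In the last step, these parameter estimates are combined with firms’ observed determinants in the way described by the chosen model to generate a prediction of normal accruals for a given firm at a given point in time:

\[ \hat{TA}_{i,t} = \hat{\beta}_{0,g} InvAt_{i,t-1} + \hat{\beta}_{1,g} \triangle CRev_{i,t} + \hat{\beta}_{2,g} PPE_{i,t} \]

These point predictions are expected accruals, conditional on firms’ observed determinants (\(D_{g}\)), the estimated parameters (\(\hat {\Theta }_{g}\)), and the chosen model (M):

\[ \hat{TA}_{i,t} = E\left[ TA_{i,t} | D_{g}, \hat{\Theta}_{g}, M \right] \]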

These predictions do not account for the fact that the model parameters were estimated (parameter uncertainty) and that researchers are uncertain about the true model (model uncertainty). Nevertheless, the literature typically neglects prediction uncertainty (model and parameter uncertainty), takes the predicted accruals of the chosen model as the true accruals, and classifies any deviations of firms’ observed accruals from the predictions as abnormal. As a result, this classification rule can be expected to lead to excessive evidence of managerial discretion and opportunism.
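To make the three steps concrete, the following is a minimal Python sketch of the standard approach on simulated data. The data, the group labels, the `MIN_OBS` cutoff of 10, the coefficient values, and the helper `ols` are all hypothetical choices made for illustration; they are not the sample or code used in this paper.

```python
import random

def ols(X, y):
    """Least-squares coefficients from the normal equations (X'X) b = X'y."""
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(r[a] * r[b] for r in X) for b in range(k)]
         + [sum(r[a] * yi for r, yi in zip(X, y))] for a in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for c in range(k):
        p = max(range(c, k), key=lambda r: abs(A[r][c]))
        A[c], A[p] = A[p], A[c]
        for r in range(k):
            if r != c:
                f = A[r][c] / A[c][c]
                A[r] = [u - f * v for u, v in zip(A[r], A[c])]
    return [A[i][k] / A[i][i] for i in range(k)]

rng = random.Random(0)
MIN_OBS = 10                     # hypothetical industry-year cutoff
TRUE_BETA = (0.5, 0.1, -0.05)    # hypothetical coefficients on InvAt, dCRev, PPE

# Step 1: the model is fixed (modified Jones). Simulate two industry-years.
data = {}
for group, n in [("ind1-2001", 40), ("ind2-2001", 6)]:
    rows = []
    for _ in range(n):
        x = (rng.uniform(0.001, 0.1), rng.gauss(0.05, 0.2), rng.uniform(0.1, 0.9))
        ta = sum(b * v for b, v in zip(TRUE_BETA, x)) + rng.gauss(0, 0.05)
        rows.append((x, ta))
    data[group] = rows

abnormal = {}
for group, rows in data.items():
    if len(rows) < MIN_OBS:
        continue                 # small industry-years are simply dropped
    X = [x for x, _ in rows]
    y = [ta for _, ta in rows]
    beta_hat = ols(X, y)         # Step 2: OLS within the industry-year
    # Step 3: point prediction; the whole residual is labelled "abnormal".
    abnormal[group] = [ta - sum(b * v for b, v in zip(beta_hat, x)) for x, ta in rows]
```

In this sketch, the six-observation industry-year yields no estimates at all, and every residual in the larger group is treated as abnormal even though, by construction, the simulated data contain only noise.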

2.1.2 Bayesian approach

Our proposed Bayesian approach to predicting normal accruals attempts to explicitly incorporate model and parameter uncertainty to reduce the incidence of false positives.

Bayes

Bayesian statistics provide a convenient way to incorporate uncertainty about firms’ accruals processes in predicting normal accruals. Researchers’ beliefs about unknown quantities (e.g., models, parameters, or normal accruals) can be represented by distributions. As researchers examine data (on firms’ observed accruals and their determinants), their initial beliefs change following Bayes’s rule. For example, researchers’ beliefs about the coefficient values of the modified Jones model after examining the data are a weighted average of researchers’ beliefs before examining the data (prior distribution) and the data (likelihood), where the relative weights depend on the precision of the prior belief vis-à-vis the amount and quality of the data:

\[ p(\Theta_{g} | D_{g}, M) \propto p(D_{g} | \Theta_{g}, M) \, p(\Theta_{g} | M) \]

In the following three steps, we illustrate how Bayesian methods and tools can be applied to the estimation of the modified Jones model to better predict normal accruals and explicitly account for multiple sources of uncertainty.

Parameter uncertainty

In a first step, we show how uncertainty surrounding the model’s parameters (i.e., coefficients) is incorporated into the normal accruals prediction. For this purpose, we take the modified Jones model as given and simply estimate its parameters using Bayesian, instead of OLS, regressions. For the Bayesian regression estimation, we must specify distributions describing accruals and our priors about reasonable coefficient values (\(p(\Theta_{g}|M)\)).

The Bayesian estimation yields posterior distributions for the coefficient values (\(p(\Theta_{g}|D_{g},M)\)), informed by the data (\(D_{g}\)). The wider these distributions, the less precise are the coefficient values. The coefficient distributions serve as a key input into the prediction of normal accruals. Just as in the standard approach, we predict firms’ normal accruals by combining the firms’ observed determinants with the respective coefficient values. In contrast to the standard approach, however, we do not simply use one point estimate per coefficient but instead calculate predicted accruals by weighting all plausible coefficient values with the respective probabilities assigned by the posterior coefficient distribution (\(p(\Theta_{g}|D_{g},M)\)). As a result, we obtain a full distribution of predicted normal accruals for a given firm at a given time, the so-called posterior predictive distribution (\(p(\hat {TA} | D_{g}, M)\)):

\[ p(\hat{TA} | D_{g}, M) = \int p(\hat{TA} | \Theta_{g}, M) \, p(\Theta_{g} | D_{g}, M) \, d\Theta_{g} \]

This predictive distribution incorporates uncertainty about the true parameters of the model into the prediction of normal accruals. Unlike the OLS point prediction, the predictive distribution does not take the coefficient estimates as given.
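The effect of parameter uncertainty on the prediction can be illustrated with a deliberately simplified Python sketch. It assumes a single standardized regressor, a known noise standard deviation, and a conjugate normal prior, so that the posterior has a closed form; these simplifications, the simulated data, and all names are assumptions for illustration and differ from the MCMC-based estimation of the full model.

```python
import math
import random
import statistics

rng = random.Random(1)
SIGMA = 1.0      # noise sd, assumed known in this sketch
PRIOR_SD = 2.5   # assumed prior sd of the coefficient (prior mean zero)

# Hypothetical standardized data for one small industry-year.
x = [rng.gauss(0, 1) for _ in range(8)]
y = [0.4 * xi + rng.gauss(0, SIGMA) for xi in x]

# Conjugate update: the posterior of beta is Normal(post_mean, post_sd).
post_prec = 1 / PRIOR_SD**2 + sum(xi * xi for xi in x) / SIGMA**2
post_mean = (sum(xi * yi for xi, yi in zip(x, y)) / SIGMA**2) / post_prec
post_sd = math.sqrt(1 / post_prec)

def predictive_draws(x_new, n=20000):
    """Draw beta from its posterior, then accruals given that beta."""
    return [rng.gauss(rng.gauss(post_mean, post_sd) * x_new, SIGMA) for _ in range(n)]

x_new = 2.0
draws = predictive_draws(x_new)
# Closed-form predictive sd: noise plus parameter uncertainty scaled by x_new.
analytic_sd = math.sqrt(SIGMA**2 + x_new**2 * post_sd**2)
simulated_sd = statistics.pstdev(draws)
```

The predictive standard deviation exceeds the noise standard deviation `SIGMA` because it also carries the uncertainty about the coefficient, and this extra component grows the further `x_new` lies from zero.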

Heterogeneity

In a next step, we illustrate how one can efficiently incorporate heterogeneity in parameters across groups into the normal accruals prediction. For this purpose, we again take the modified Jones model as given and estimate its parameters using Bayesian regressions. In addition, we now explicitly model the heterogeneity in parameters across groups using Bayesian hierarchical modelling, instead of merely estimating our coefficients within groups with a given minimum number of observations.

The hierarchical model accounts for the fact that, in a hierarchical setting, the data really contains two sources of information: the within-group data and across-group variation. It splits the model parameters into group-specific parameters (e.g., industry-year-specific coefficients) and hyper-parameters (ψ) governing the distribution of the group-specific parameters across groups:

\[ p(\Theta_{1},...,\Theta_{G}, \psi | D, M) \propto p(\psi | M) \prod_{g=1}^{G} p(D_{g} | \Theta_{g}, M) \, p(\Theta_{g} | \psi, M) \]

The hierarchical model uses the entire sample (\(D\) instead of \(D_{g}\)) to estimate all the parameters jointly. If there are plenty of observations in a given industry-year, the group-specific coefficient is chiefly determined by these observations. If instead there are only limited observations in a given industry-year, the group-specific coefficient borrows information from the other industry-year coefficients. (This information is contained in the across-group hyper-parameters.) This approach eliminates the need to define ad hoc cutoffs of minimum required observations and discard useful information/data (e.g., cross-group information and information in groups with fewer observations than defined by the cutoff).
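The borrowing of information can be sketched with the textbook normal-normal case in Python. The sketch assumes that the hyper-parameters (the across-group mean `mu` and standard deviation `tau`) and the within-group noise `sigma` are known, whereas the hierarchical model estimates them jointly with the group coefficients; the function name and all numbers are hypothetical.

```python
def partial_pool(group_mean, n, sigma, mu, tau):
    """Posterior mean of a group-level parameter with a Normal(mu, tau) across-group prior.

    Returns the pooled estimate and the weight placed on the group's own data.
    """
    data_precision = n / sigma**2      # information within the group
    prior_precision = 1 / tau**2       # information from the other groups
    w = data_precision / (data_precision + prior_precision)
    return w * group_mean + (1 - w) * mu, w

# Two industry-years with the same raw estimate (0.8) but different sample sizes.
est_large, w_large = partial_pool(group_mean=0.8, n=50, sigma=1.0, mu=0.2, tau=0.5)
est_small, w_small = partial_pool(group_mean=0.8, n=3, sigma=1.0, mu=0.2, tau=0.5)
```

With these inputs, the three-observation group places a weight of 3/7 on its own data and is pulled strongly toward the across-group mean, while the fifty-observation group stays close to its own estimate. No minimum-observation cutoff is needed, because small groups are shrunk rather than dropped.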

Model uncertainty

In a last step, we illustrate how, using Bayesian model averaging, one can combine different models of normal accruals to achieve better predictions and incorporate model uncertainty into the predictive distribution. Just as with parameters, we can have a prior belief about the plausible models and update this belief based on the data. The resulting posterior distribution of plausible models can be used to weight the predictions of distinct models. These model probabilities can be used to average the model-specific predictive distributions of normal accruals and obtain an averaged predictive distribution of normal accruals [Footnote 8]:

\[ p(\hat{TA} | D) = \sum_{k} p(\hat{TA} | D, M_{k}) \, p(M_{k} | D) \]

where \(k\) indexes the candidate models.

This averaged distribution uses information from all candidate models with nonzero probabilities. Importantly, it incorporates researchers’ uncertainty about the true model among the set of candidate models. Unlike the OLS point prediction (\(\hat {TA} | D_{g}, {\Theta }_{g}, M\)), the predictive distribution \(p(\hat {TA} | D)\) does not take one ad hoc model for granted anymore. [Footnote 9]
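A short Python sketch shows what averaging predictive distributions means in practice. It assumes that each candidate model's predictive distribution for one firm-year is normal and that the model weights are already given; the two models, their moments, and the weights 0.7 and 0.3 are hypothetical.

```python
import math
import random

def mixture_moments(models):
    """Mean and sd of a weighted mixture of normals given (weight, mean, sd) triples."""
    mean = sum(w * m for w, m, s in models)
    var = sum(w * (s**2 + m**2) for w, m, s in models) - mean**2
    return mean, math.sqrt(var)

def mixture_draw(models, rng):
    """Pick a model with probability equal to its weight, then draw from it."""
    u, acc = rng.random(), 0.0
    for w, m, s in models:
        acc += w
        if u <= acc:
            return rng.gauss(m, s)
    return rng.gauss(models[-1][1], models[-1][2])  # guard against rounding in the weights

# (weight, predictive mean, predictive sd) for one firm-year; all values hypothetical.
models = [(0.7, 0.02, 0.05), (0.3, -0.01, 0.08)]
avg_mean, avg_sd = mixture_moments(models)

rng = random.Random(2)
draws = [mixture_draw(models, rng) for _ in range(20000)]
```

The variance of the averaged distribution contains both the within-model predictive variances and the disagreement between the models' means, which is how model uncertainty enters the prediction.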

2.2 Measurement problem

We can use the model-averaged predictive distribution of normal accruals in various ways to classify firms’ accruals as abnormal. For example, we can calculate a dichotomous variable indicating when firms’ observed accruals fall outside of, for example, the 98% credible interval of the predictive distribution. We can also calculate a continuous variable using scoring rules (i.e., loss functions). The logarithmic scoring rule, for example, closely relates to information theory. It translates the predictive probability assigned to firms’ observed accruals into a measure of loss or cost to a decision-maker who relies on the prediction. The negative of the logarithmic scoring function hence can be viewed as a measure of researchers’ confidence that observed accruals are indeed abnormal. [Footnote 10] Alternatively, and similar to the standard approach, we can take the difference between the observed accruals and the mean of the posterior predictive distribution as a point prediction of abnormal accruals but then apply an adjustment for the corresponding prediction uncertainty.
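The first two classification rules can be sketched in Python as follows. The sketch assumes that predictive draws for a firm-year are available and, for the score, that the predictive distribution is approximately normal; the function names and the simulated standardized draws are hypothetical.

```python
import math
import random

def outside_interval(draws, observed, level=0.98):
    """True if `observed` lies outside the central credible interval of the draws."""
    s = sorted(draws)
    tail = (1 - level) / 2
    lo = s[int(tail * (len(s) - 1))]
    hi = s[int((1 - tail) * (len(s) - 1))]
    return observed < lo or observed > hi

def neg_log_score(observed, mean, sd):
    """Negative log predictive density of `observed` under a normal approximation."""
    z = (observed - mean) / sd
    return 0.5 * z * z + math.log(sd) + 0.5 * math.log(2 * math.pi)

rng = random.Random(5)
draws = [rng.gauss(0.0, 1.0) for _ in range(20000)]  # hypothetical standardized predictive draws

flag_typical = outside_interval(draws, observed=0.0)
flag_extreme = outside_interval(draws, observed=4.0)
score_typical = neg_log_score(0.0, mean=0.0, sd=1.0)
score_extreme = neg_log_score(4.0, mean=0.0, sd=1.0)
```

The dichotomous rule flags only the extreme observation, while the negative log score is continuous and rises as the observed accruals become less probable under the predictive distribution.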

2.3 Testing problem

We can use moments of the predictive distribution of normal accruals to test for abnormal accruals due to managerial opportunism in a Bayesian measurement-error model. In the measurement-error model, we can, for example, use not only the mean but also the standard deviation of the predictive distribution to infer opportunistic accruals. In particular, we can consider abnormal accruals as a latent construct:

\[ DA^{*}_{i,t} = TA_{i,t} - NDA^{*}_{i,t} \]

which can only be measured with error, because we do not observe the normal (or nondiscretionary) accruals (\(NDA^{*}_{i,t}\)).

To measure abnormal accruals (\(DA_{i,t}\)), we take the difference between firms’ observed accruals (\(TA_{i,t}\)) and the expected value of the predictive distribution of normal accruals (\(NDA_{i,t}=\hat {TA}_{i,t}| D\)), closely following the literature’s approach:

\[ DA_{i,t} = TA_{i,t} - NDA_{i,t} \]

As our prediction of normal accruals is uncertain, so is our abnormal accruals measure. To reflect the uncertainty, we can model our abnormal accruals measure as normally distributed with the latent abnormal accruals value (\(DA^{*}_{i,t}\)) as its mean and the extent of measurement error (\(\sigma_{NDA,i,t}\)) as its standard deviation. [Footnote 11] We proxy for the extent of error in our measurement by using the standard deviation of the predictive distribution of normal accruals (\(p\left (TA_{i,t}| D\right )\)).

Equipped with our measure of abnormal accruals and its extent of measurement error, we can estimate the Bayesian measurement-error model regressing measured abnormal accruals on variables for managerial opportunism (\(X_{i,t}\)). [Footnote 12]

In estimating the impact of managerial opportunism, this model takes into account that some abnormal accruals are less precisely measured than others (i.e., more uncertain). The model weights observations according to the prediction uncertainty for \(DA_{i,t}\). This precision-based weighting of abnormal accruals will shrink the influence of volatile firms and firms with limited information. Notably, these firms typically contribute to the many (potentially false) positives in tests for managerial opportunism (e.g., Hribar and Collins 2002; Kothari et al. 2005). [Footnote 13]
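The precision-based weighting can be illustrated with a simplified Python sketch. It assumes a linear model for latent abnormal accruals with a normal residual of known standard deviation `SIGMA`; integrating out the latent value then gives measured abnormal accruals a variance of `SIGMA**2 + s_nda**2`, so that, under flat priors, the estimate of the opportunism coefficient reduces to precision-weighted least squares. This is a stand-in for intuition, not the full Bayesian measurement-error model, and all names and values are hypothetical.

```python
import random

rng = random.Random(7)
ALPHA, GAMMA, SIGMA = 0.0, 0.05, 0.05   # hypothetical true values; SIGMA treated as known

# Simulate (opportunism indicator, measured abnormal accruals, measurement-error sd).
rows = []
for _ in range(5000):
    x = 1.0 if rng.random() < 0.5 else 0.0
    s_nda = rng.uniform(0.02, 0.5)                 # sd of the predictive distribution
    da_latent = ALPHA + GAMMA * x + rng.gauss(0, SIGMA)
    da = da_latent + rng.gauss(0, s_nda)           # latent value measured with error
    rows.append((x, da, s_nda))

def precision_weighted_slope(rows, sigma):
    """Slope of DA on X with weights 1 / (sigma^2 + s_nda^2)."""
    w = [1.0 / (sigma**2 + s**2) for _, _, s in rows]
    sw = sum(w)
    xbar = sum(wi * x for wi, (x, _, _) in zip(w, rows)) / sw
    ybar = sum(wi * y for wi, (_, y, _) in zip(w, rows)) / sw
    num = sum(wi * (x - xbar) * (y - ybar) for wi, (x, y, _) in zip(w, rows))
    den = sum(wi * (x - xbar) ** 2 for wi, (x, _, _) in zip(w, rows))
    return num / den

gamma_hat = precision_weighted_slope(rows, SIGMA)
```

Observations with a wide predictive distribution receive little weight, which is the mechanism by which volatile firms and firms with limited information lose influence on the estimated effect.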

3 Data

We closely follow the literature in constructing the sample and variables underlying our tests. We use Compustat North America data from 1988 until 2017. For the estimation of the Jones model and its basic variants (Section 4), we exclude financial firms (three-digit SIC code between 600 and 699) and observations with lagged total assets smaller than $10 million, leverage (debt over total assets) larger than one, or total asset growth of 200% or more (to exclude M&A activity). We restrict the sample to observations with nonmissing values for all required variables. We truncate all continuous variables at the first and 99th percentiles. Table 1 summarizes the data. Panel A provides the definition of our variables (following Dechow et al. 1995). Panel B presents descriptive statistics for the variables.

For our application of the Bayesian approach to an extended set of accruals models (Section 5), we additionally require nonmissing values for all the variables specified by the various models (listed in Table 6). These additional requirements (e.g., availability of book-to-market information) further restrict the sample size. As before, we truncate all continuous variables at the first and 99th percentile.

For validation tests (Section 5), we use earnings management indicators provided by Dechow et al. (2011) and Audit Analytics. In our first earnings management test, we employ an indicator (\(I_{AAER}\)) taking the value of one for firm-years subject to upward earnings management, according to an Accounting and Auditing Enforcement Release (AAER) of the SEC (Dechow et al. 2011) (and zero otherwise). In our second test, we use an indicator (\(I_{REST}\)) taking the value of one for firm-years with a restatement due to accounting rule (GAAP/FASB) application failure, excluding disclosure issues (and zero otherwise) (Du et al. 2020). In our third test, we use an indicator (\(I_{CL}\)) taking the value of one for firm-years with an SEC comment letter pertaining to a 10-K or 10-Q in the particular year (and zero otherwise) (Du et al. 2020). [Footnote 14]

4 Estimation

4.1 Predicting normal accruals

4.1.1 Bayesian approach

Specification

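The Bayesian version of the industry-year-level modified Jones model (with g = {j,t}) takes the following form:

\[ TA_{i,t} \sim \text{Normal}(\mu_{i,t}, \Sigma), \quad \mu_{i,t} = \beta_{0,j,t} InvAt_{i,t-1} + \beta_{1,j,t} \triangle CRev_{i,t} + \beta_{2,j,t} PPE_{i,t} \]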

Similar to the OLS model in Eq. 1, we model total accruals (TA) as a linear function of (inverse) total assets, changes in revenues, and PP&E, plus some noise. For the Bayesian estimation, we need to specify the distribution of the noise and priors over the model parameters. Following standard Bayesian practice for linear regressions, we model the noise of the accruals model as normally distributed, with mean zero and unknown standard deviation Σ. In specifying the priors, we follow guiding principles advocated by leading Bayesian statisticians (e.g., McElreath 2020; van de Schoot et al. 2021). We choose priors that satisfy three criteria. First, they should down-weight unreasonable parameter ranges. Second, they should make minimal assumptions about the prior shape. Third, they should be easy to reason about. [Footnote 15]

Following the first criterion, we choose weakly informative priors for the unknown standard deviation Σ and the industry-year-level coefficients, \(\beta_{0,j,t}\), \(\beta_{1,j,t}\), and \(\beta_{2,j,t}\). In contrast to diffuse priors, ours down-weight unreasonable parameter values, reducing overfitting concerns. Following the second criterion, we choose an exponential distribution for the shape of the unknown standard deviation and normal distributions for the shape of the coefficient priors. Following the maximum entropy argument (e.g., Jaynes 2003; McElreath 2020), these distributions encode maximum uncertainty about the shapes of the priors. The exponential distribution, for example, is the maximum uncertainty distribution if all we are willing to specify is the “average deviation.” Similarly, the normal distribution is the maximum uncertainty distribution if all we are willing to specify are the mean and standard deviation. Lastly, following the third criterion, we standardize total accruals and all determinants to have means of zero and standard deviations of one. This standardization (and the choice of low-parameter distributions) aids the accessibility of the model estimation by simplifying the reasoning about the priors.

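In sum, we specify our priors as follows:

\[ \Sigma \sim \text{Exponential}(1), \quad \beta_{k,j,t} \sim \text{Normal}(0, 2.5) \quad \text{for } k = 0, 1, 2 \]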

The prior for Σ implies that, before seeing the data, we expect the average deviation of the residual to be one. As we standardize our dependent variable, this parameterization allows the residuals to explain most if not all the variation in the dependent variable. Accordingly, it is a conservative but still weakly informative prior (which respects the nonnegativity constraint for values of the unknown standard deviation). Similarly, the prior choices for the coefficients imply that, before seeing the data, we expect that the true (standardized) coefficients lie within the range of −5 to +5 with a 95% probability. This choice essentially says that we view it as unlikely that a one-standard-deviation increase in InvAt, △CRev, or PPE is associated with a change in accruals of more than five times its standard deviation. These priors are weakly informative because only economically implausible and statistically rare coefficients of magnitudes outside the −5 to +5 range are down-weighted.
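The stated prior implications are easy to verify numerically. The Python sketch below assumes a Normal prior with mean zero and standard deviation 2.5 for the coefficients, which is the value implied by a ±5 range at roughly 95% probability, and an Exponential prior with rate one for Σ; both parameter values are inferences from the description above and are labelled as assumptions in the code.

```python
import math

PRIOR_SD = 2.5  # assumed coefficient prior sd, inferred from the stated -5 to +5 range
RATE = 1.0      # assumed rate of the exponential prior on the residual sd

def normal_central_mass(bound, sd):
    """P(-bound < beta < bound) for beta ~ Normal(0, sd)."""
    return math.erf(bound / (sd * math.sqrt(2)))

mass = normal_central_mass(5.0, PRIOR_SD)   # prior probability of a coefficient within +/-5
exp_mean = 1.0 / RATE                       # prior mean of the residual sd
print(round(mass, 4), exp_mean)             # prints: 0.9545 1.0
```

Under these assumptions, about 95% of the prior mass lies within the −5 to +5 range and the prior mean of the residual standard deviation is one, in line with the description of the priors.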

We specify and estimate this model using STAN software (Carpenter et al. 2017). The benefit of this software is that it can be called from standard statistical software packages (e.g., R and STATA) and employs various forms of Markov Chain Monte Carlo (MCMC) algorithms in the background to draw the posterior distributions.Footnote 16 Accordingly, our Bayesian approach merely requires a conceptual understanding of Bayesian statistics and concepts. It does not require knowledge of new programming languages or expertise in sampling algorithms.

For illustrative purposes, we briefly introduce the conceptual idea behind MCMC sampling algorithms, provide details on necessary estimation choices, and discuss post-estimation diagnostics in the following. In our Bayesian estimation, MCMC algorithms are needed because the posterior parameter distributions of our complex models do not correspond to closed-form expressions of known distributions. In the absence of such distributions, the algorithms allow us to numerically approximate the posterior distributions by generating a large sample of parameter values. To obtain this sample, MCMC algorithms perform two operations to ensure that the parameter values are sampled in a way that approximates the underlying posterior of interest. First, starting from the current parameter values (\(\Theta^{(s)}\)), they propose a new set of parameter values (\(\Theta^{*}\)) by stepping randomly in a new direction in parameter space. Second, they decide whether to accept the proposed step. The algorithms accept the proposed values (\(\Theta^{*}\)) into the series (\(\{\Theta^{(1)},...,\Theta^{(s)}\}\)) if the ratio of the probabilities of the proposed vis-à-vis the current parameter values, \(p(y|\Theta^{*})p(\Theta^{*}) / [p(y|\Theta^{(s)})p(\Theta^{(s)})]\), is higher than a random draw between 0 and 1. Otherwise, they add the current value (\(\Theta^{(s)}\)) to the series again. As the series or chain grows, the resulting distribution of parameter values converges to the desired parameter distribution. (For a detailed introduction to sampling algorithms, see Gelman et al. (2013).)
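The propose-and-accept logic can be sketched in a few lines. The example below is a plain random-walk Metropolis sampler for a single parameter, not the Hamiltonian algorithm used in our estimation. The toy model (a normal mean with known unit variance and a Normal(0, 2.5) prior), the step size, and all names are assumptions chosen for illustration.

```python
import math
import random

PRIOR_SD = 2.5  # assumed prior scale for this illustration


def log_posterior(theta, data):
    """Unnormalized log posterior: Normal(theta, 1) likelihood, Normal(0, PRIOR_SD) prior."""
    log_likelihood = sum(-0.5 * (y - theta) ** 2 for y in data)
    log_prior = -0.5 * (theta / PRIOR_SD) ** 2
    return log_likelihood + log_prior


def metropolis(data, n_iter=5000, step=0.5, seed=1):
    """Random-walk Metropolis sampler for a single parameter."""
    rng = random.Random(seed)
    theta = 0.0                                   # starting value
    current_lp = log_posterior(theta, data)
    chain = []
    for _ in range(n_iter):
        proposal = theta + rng.gauss(0.0, step)   # step randomly in parameter space
        proposal_lp = log_posterior(proposal, data)
        # Accept if the posterior ratio exceeds a uniform draw (compared on the log scale).
        log_u = math.log(1.0 - rng.random())      # 1 - U lies in (0, 1], so the log is defined
        if log_u < proposal_lp - current_lp:
            theta, current_lp = proposal, proposal_lp
        chain.append(theta)                       # a rejected step repeats the current value
    return chain


data_rng = random.Random(0)
data = [data_rng.gauss(1.0, 1.0) for _ in range(50)]   # simulated toy data
chain = metropolis(data)
draws = chain[1000:]                                   # discard burn-in
posterior_mean_mcmc = sum(draws) / len(draws)
# Closed-form posterior mean of this conjugate toy model, used only as a check.
posterior_mean_exact = sum(data) / (len(data) + 1.0 / PRIOR_SD ** 2)
print(f"MCMC: {posterior_mean_mcmc:.3f}, exact: {posterior_mean_exact:.3f}")
```

Because this toy model has a closed-form posterior, the sampled mean can be compared with the exact value. No such benchmark exists for the hierarchical models in the text, which is why the convergence diagnostics matter there.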

MCMC algorithms differ in how they implement the generation of proposal values and the acceptance decision. In our estimation, we use the Hamiltonian MCMC algorithm, the default algorithm in STAN. The algorithm uses Hamiltonian motion dynamics to determine the next proposed value. For complex models, it tends to be more efficient than previous algorithms (e.g., Gibbs or Metropolis-Hastings) as the proposed values tend to have a higher acceptance rate (Betancourt 2018). To parameterize the algorithm (e.g., step size of proposal values), we rely on the default settings in STAN. The STAN implementation auto-tunes the algorithm parameterization to achieve appropriate mixing (e.g., a high acceptance rate of proposal values) via machine learning tools (e.g., using trees to determine the next move).

We estimate all models using two chains with 3,500 iterations each. We discard the first 1,000 iterations of each chain as our burn-in period. Using multiple chains and discarding the initial values are best practices that reduce the dependence of a given series on its starting value.

After the estimation, we examine the chains for convergence and efficiency (i.e., their mixing properties). The chains should not be stuck at any particular value for too long and should move through all possible values several times. We visually inspect the chains of the key model parameters using trace plots, which plot the series of accepted values across the iterations. In addition, we examine the R-hat convergence diagnostic and effective sample size (Gelman and Rubin 1992). We make sure that our choices of the burn-in period and number of iterations result in R-hat values of less than 1.05, which indicates sufficient mixing and ensures an effective sample size of at least 100 samples per chain (Stan Development Team, 2018).Footnote 17
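To make the R-hat diagnostic concrete, the sketch below computes the classic (non-split) Gelman and Rubin (1992) statistic from the between-chain and within-chain variances. This is a simplified illustration: STAN reports a refined variant, and the simulated chains and function names are hypothetical.

```python
import random
import statistics


def r_hat(chains):
    """Classic potential scale reduction factor for equally long chains."""
    n = len(chains[0])
    chain_means = [statistics.fmean(c) for c in chains]
    within = statistics.fmean([statistics.variance(c) for c in chains])
    between = n * statistics.variance(chain_means)
    pooled = (n - 1) / n * within + between / n   # pooled estimate of the posterior variance
    return (pooled / within) ** 0.5


rng = random.Random(42)
n_draws = 2500  # 3,500 iterations minus 1,000 burn-in iterations per chain

# Two chains exploring the same distribution: R-hat should be close to 1.
mixed = [[rng.gauss(0.0, 1.0) for _ in range(n_draws)] for _ in range(2)]
# Two chains stuck in different regions: R-hat should clearly exceed 1.05.
stuck = [[rng.gauss(0.0, 1.0) for _ in range(n_draws)],
         [rng.gauss(1.0, 1.0) for _ in range(n_draws)]]

r_hat_mixed = r_hat(mixed)
r_hat_stuck = r_hat(stuck)
print(f"R-hat (well mixed): {r_hat_mixed:.3f}")
print(f"R-hat (not mixed):  {r_hat_stuck:.3f}")
```

Values near one indicate that the chains agree with each other; values above the 1.05 threshold signal that the chains have not yet converged to a common distribution.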

Results

The model estimation produces posterior distributions of the model parameters. Equipped with these distributions, we can generate the posterior predictive distribution of accruals. Figure 1 showcases an example of a predictive distribution for a given firm in a given year. The peak of the distribution represents the most likely accruals value, according to the model. The width of the distribution reflects parameter uncertainty. The figure highlights the importance of accounting for uncertainty in the accruals prediction. The OLS estimate (marked by a thin black line) and the observed accruals level (marked by a dashed line), while differing from each other by around 4% of the standard deviation of accruals, are close to the center of the predictive distribution. Accordingly, neither the observed accruals level nor the difference between the OLS estimate and the observed accruals level appears unusual or significant, given the extent of prediction uncertainty.

Example of Posterior Predictive Distribution. The figure illustrates the posterior predictive distribution. The light-gray area shows the posterior predictive distribution for one illustrative firm-year observation from a Bayesian hierarchical version of the modified Jones model of the form \(TA_{i,t} = a_{1,j,t} InvAt_{i,t} + a_{2,j,t} \triangle CRev_{i,t} + a_{3,j,t} PPE_{i,t}\). The subscripts j,t denote industry-year-specific coefficients for the three variables. Variable descriptions can be found in Table 1. The dark-gray areas mark accruals values that lie beyond the first and 99th percentile and are thus very unlikely according to the model. More generally, the width of the posterior reflects the model’s uncertainty regarding which value of nondiscretionary accruals to expect.

4.1.2 Heterogeneity

Specification

We augment the industry-year-level model in Eq. 12 by introducing a hierarchical parameter structure featuring hyper-parameters governing the relation of industry-year-level coefficients across industry-years.Footnote 18 To this end, we first modify the priors for the coefficients in Eq. 13 to be:

The coefficient priors are now functions of new hyper-parameters (μ,ρ,σ). The hyper-parameters determine the plausible distribution of coefficients across industry-year groups. μ reflects the expected average coefficient across industry-years, σ determines the range of plausible coefficients across industry-years, and ρ allows for cross-coefficient learning. Importantly, and unlike before, the hyper-parameters μ and σ are not fixed anymore. Their posterior distributions are estimated from the data, jointly with the industry-year-specific coefficients. Just as the coefficients, these hyper-parameters need (hyper-)priors. We specify these priors as follows:

The parameters of the hyper-priors determine the plausible values of the hyper-parameters, before seeing the data. μd is the prior for the average across all group-specific coefficients βd,g for determinant d (with d = 0,1,2 in the modified Jones model). σd is the prior for the amount of variation in βd,g across groups. We chose relatively weak priors to have nearly all the shrinkage (i.e., the disciplining of group-specific coefficient estimates) come from the data.Footnote 19 The prior for the correlation between coefficients ρ is a special multivariate prior for correlations that honors the dependencies between correlations (Lewandowski et al. 2009).Footnote 20 LKJcorr(2) defines a weakly informative prior that is skeptical of extreme correlations.

We estimate this hierarchical model using the entire dataset. The hierarchical modelling eliminates the need to estimate parameters separately for industry-years and facilitates information sharing across industry-year groups. (For further detail on the prior definition and choice of the Bayesian hierarchical model, please refer to the ??.)

Results

The model estimation produces posterior distributions of the model parameters. Figure 2 illustrates that, compared to the corresponding OLS estimates, the (average) coefficient estimates obtained using the hierarchical model are less dispersed. The hierarchical modelling, in particular, shrinks extreme coefficients observed for industry-year groups with little information (i.e., few observations) toward the typical coefficient values observed across industry-year groups. Because this shrinkage is driven by the amount of available information and by cross-group learning, it eliminates the need to rely on ad hoc cutoffs (e.g., for the number of required observations), opens the possibility of estimating even firm-level models, and can be expected to lead to more plausible coefficient estimates and accruals predictions.
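The intuition behind this shrinkage can be illustrated with a deliberately simplified case: a single group-level mean with known noise and known across-group variation, for which the posterior mean is a precision-weighted average of the group's own estimate and the cross-group average. The hierarchical model in the text estimates these quantities jointly and for several correlated coefficients, so the sketch below (with hypothetical names and numbers) conveys only the mechanism.

```python
def partial_pooling_mean(group_estimate, n_obs, grand_mean,
                         noise_sd=1.0, group_sd=0.5):
    """Posterior mean of a group parameter in a normal-normal model with known variances."""
    data_precision = n_obs / noise_sd ** 2     # information in the group's own data
    prior_precision = 1.0 / group_sd ** 2      # information from the cross-group distribution
    weight = data_precision / (data_precision + prior_precision)
    return weight * group_estimate + (1.0 - weight) * grand_mean


# Same extreme group estimate, observed in a small and in a large industry-year.
small_group = partial_pooling_mean(group_estimate=3.0, n_obs=5, grand_mean=0.2)
large_group = partial_pooling_mean(group_estimate=3.0, n_obs=200, grand_mean=0.2)
print(f"Shrunk estimate with   5 observations: {small_group:.3f}")
print(f"Shrunk estimate with 200 observations: {large_group:.3f}")
```

The extreme estimate from the small group is pulled strongly toward the cross-group average, whereas the same estimate from the large group is left almost unchanged. No minimum-observation cutoff is needed to obtain this behavior.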

Comparison of Industry-Year Coefficient Estimates. The figure plots industry-year coefficient estimates (\(b_{1,j,t}\), \(b_{2,j,t}\), \(b_{3,j,t}\)) of the modified Jones model by industry-year sample size. The modified Jones model used is of the form \(TA_{i,t} = b_{1,j,t} InvAt_{i,t} + b_{2,j,t} \triangle CRev_{i,t} + b_{3,j,t} PPE_{i,t}\). Variable descriptions can be found in Table 1. The three plots on the left of the figure show OLS estimates, whereas the plots on the right show posterior means for each coefficient from a Bayesian hierarchical model. The grey line highlights the requirement of a minimum of 20 observations in an industry-year that is common in the literature. Comparing the OLS (left) and Bayes (right) plots for each coefficient illustrates the automatic regularization inherent in Bayesian hierarchical models. No significant differences are apparent for industry-years with large sample sizes (e.g., greater than 200 observations). By contrast, the dispersion of OLS estimates is much larger for small industry-year samples (e.g., fewer than 100 observations).

4.1.3 Model uncertainty

Specification

We incorporate model uncertainty in our estimation and prediction following Yao et al. (2018). They propose a Bayesian model-averaging approach for the realistic case where researchers can specify a set of (K) candidate models but do not expect any of those models to be the one true model. Their approach combines the posterior predictive distributions of the various candidate models via model weights, \(\hat {w}_{k}\). These weights, which are akin to the posterior probabilities of the various models, provide a discrete distribution over the set of candidate models. They are derived from the models’ out-of-sample prediction performance, using approximate leave-one-out-prediction accuracy. For each model, we multiply its weight with its predictive distribution to obtain a weighted-averaged posterior predictive distribution that fits the data best (in the sense of the Kullback–Leibler divergence scoring rule):Footnote 21
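In the notation of Yao et al. (2018), the combined distribution can be written as \(\hat{p}(\tilde{y} \mid y) = \sum_{k=1}^{K} \hat{w}_{k}\, p(\tilde{y} \mid y, M_{k})\), with nonnegative weights that sum to one. The sketch below illustrates how such weights can be obtained in a toy example. It is not the implementation used for our estimates: it replaces the optimizer of Yao et al. (2018) with a simple fixed-point (EM-type) update that maximizes the same log score, and it evaluates two hypothetical candidate densities directly on simulated data rather than via approximate leave-one-out cross-validation.

```python
import random
from statistics import NormalDist


def stacking_weights(pointwise_density, n_iter=500):
    """Weights maximizing the summed log of the weighted predictive densities.

    pointwise_density[i][k] is the predictive density of observation i under model k.
    """
    n_obs = len(pointwise_density)
    n_models = len(pointwise_density[0])
    weights = [1.0 / n_models] * n_models
    for _ in range(n_iter):
        updated = [0.0] * n_models
        for row in pointwise_density:
            mixture = sum(w * p for w, p in zip(weights, row))
            for k, p in enumerate(row):
                updated[k] += weights[k] * p / mixture
        weights = [u / n_obs for u in updated]   # weights stay on the simplex
    return weights


rng = random.Random(3)
observations = [rng.gauss(0.0, 1.0) for _ in range(200)]   # simulated hold-out data

# Two hypothetical candidate models: one close to the data, one far from it.
candidates = [NormalDist(0.0, 1.0), NormalDist(3.0, 1.0)]
density_table = [[m.pdf(y) for m in candidates] for y in observations]

weights = stacking_weights(density_table)
print([round(w, 3) for w in weights])
```

The candidate that predicts the held-out observations better receives almost all of the weight, which mirrors the logic by which models with better out-of-sample performance dominate the averaged predictive distribution.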

In our implementation, we use six candidate models (K = 6). The first is the hierarchical modified Jones model with industry-year-specific coefficients. The second includes three additional determinants capturing firms’ operating and financing characteristics (e.g., in the spirit of Kothari et al. (2005) and Hribar and Nichols (2007)): return on assets (RoA), leverage (Lev), and revenue volatility (SdRev).

The third model resembles the extended (second) model but includes an industry-year-specific intercept, instead of an industry-year-specific coefficient for the inverse of total assets. Models four to six are versions of the first three models with coefficients varying at the firm, instead of the industry-year, level.

For illustrative purposes, we focus on a narrow set of candidate models, representing slight deviations from the modified Jones model. Our implemented approach accordingly only captures uncertainty about the models within the set of candidate models. It neglects any uncertainty about and information in models excluded from the candidate set. To incorporate model uncertainty more fully and obtain the best normal-accruals prediction, we apply our Bayesian approach to an extended set of models, including some of the most popular and recent ones in a final step (Section 5).Footnote 22

Results

We fit all six candidate models to the data and compute their weights. Panel A of Fig. 3 shows the weights for each. The extended firm-level model (model 5) receives the highest weight, followed by the extended firm-level model with firm-level intercepts (instead of inverse total asset coefficients) (model 6). By contrast, the industry-year-level models receive only little weight. Only the extended industry-year model with industry-year intercepts (model 3) receives a nonnegligible weight.

Illustration of Stacking Weights. Panel A shows stacking weights for six models: a hierarchical modified Jones model with industry-year-specific coefficients (Model 1); a hierarchical modified Jones model with industry-year-specific coefficients and three additional determinants, RoA, Lev, and SdRev (Model 2); a version of model 2 with an industry-year specific intercept instead of the inverse of total assets covariate (Model 3); a hierarchical modified Jones model with firm-specific coefficients (Model 4); a hierarchical modified Jones model with firm-specific coefficients and three additional determinants, RoA, Lev, and SdRev (Model 5); a version of model 5 with a firm-specific intercept, instead of the inverse of total assets covariate (Model 6). The stacking weights are based on approximations of leave-one-out cross-validation performance of the models. Models with better out-of-sample performance receive more weight. The left plot of panel B presents the posterior predictive distributions (PPDs) of six models for an exemplary firm-year observation. The right part shows the combined, weighted posterior predictive distribution of all six models. The weights used are the stacking weights shown in panel A

The weights make three important points. First, the dominance of the firm-level models highlights that firm-level heterogeneity in accruals processes is more important in explaining the data than industry-year-level heterogeneity (which is consistent with Owens et al. (2017)). Second, the dominance of the extended models highlights the importance of controlling for performance and related determinants (which is consistent with Kothari et al. (2005)). Third, the nonnegligible weight on the industry-year intercept model suggests that time trends in accruals (not necessarily their processes (i.e., coefficients)) appear important to account for (e.g., via industry-year fixed effects).

Panel B of Fig. 3 illustrates, for a given company in a given year, how the model-specific predictive distributions combine to generate one model-averaged posterior predictive distribution of normal accruals. The left graph depicts the model-specific distributions. The predictive distributions of the industry-year-level models are centered around lower values than the firm-level distributions and exhibit narrower ranges. The narrower range reflects that the industry-year-level coefficients are estimated with greater precision than the firm-level coefficients. The model weights, however, suggest that this greater precision comes at the cost of significant bias, compared to the firm-level models. As a result, the model-averaged distribution, in the right graph, more closely resembles the firm-level predictive distribution in that it exhibits a relatively wide range and is centered more to the right (compared to the industry-year-level distributions). Notably, the model-averaged distribution is narrower than the firm-level distributions, because it uses information and features from several models. Accordingly, the model-averaged predictive distribution is not only less biased than the individual distributions but also more precise than the widest individual (firm-level) distribution.

4.2 Measuring abnormal accruals

Specification

Our Bayesian approach allows for various ways of defining and measuring abnormal accruals (see Section 2.2). In this section, we compare point predictions between OLS and the Bayesian approach (Table 2). In line with the literature, we measure abnormal accruals (\(DA_{i,t}\)) as the difference between observed accruals (\(TA_{i,t}\)) and the expected (mean) normal accruals (\(NDA_{i,t} = \hat{TA}_{i,t} \mid D\)):

\(DA_{i,t} = TA_{i,t} - NDA_{i,t}\)

We descriptively assess the quality of the measurement of normal and abnormal accruals by correlating these measures, calculated using the literature’s standard approach and our Bayesian approach, with the observed accruals levels. The OLS-based normal and abnormal accruals are estimated using the predictions of the industry-year-level modified Jones model, including performance, leverage, and volatility controls (model 2). The Bayes-based normal and abnormal accruals are estimated using the model-averaged predictive distribution.
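Given draws from a posterior predictive distribution, the Bayes-based point measures follow directly. The minimal sketch below uses four made-up draws for one firm-year; the function name and all numbers are hypothetical. The standard deviation of the draws is included because it summarizes the prediction uncertainty attached to the point estimate.

```python
import statistics


def abnormal_accruals(observed_ta, predictive_draws):
    """Return (normal accruals, abnormal accruals, predictive sd) for one firm-year."""
    nda = statistics.fmean(predictive_draws)        # expected (mean) normal accruals
    da = observed_ta - nda                          # abnormal accruals
    uncertainty = statistics.stdev(predictive_draws)
    return nda, da, uncertainty


# Hypothetical draws of standardized normal accruals for a single firm-year.
draws = [0.1, 0.2, 0.3, 0.4]
nda, da, uncertainty = abnormal_accruals(observed_ta=0.5, predictive_draws=draws)
print(f"NDA = {nda:.2f}, DA = {da:.2f}, predictive sd = {uncertainty:.3f}")
```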

Results

Table 3 presents univariate correlations of observed accruals with OLS-based and Bayes-based predicted normal accruals and measured abnormal accruals. The correlation between observed accruals and OLS-based normal accruals is a modest 0.56.Footnote 23 By contrast, the correlation between observed accruals and Bayes-based normal accruals is 0.83. The reverse pattern (mechanically) emerges for the correlations of observed accruals with OLS- vis-à-vis Bayes-based abnormal accruals. Figure 4 illustrates the relation between actual, normal, and abnormal accruals using scatter plots. The upper graphs document that the Bayes-based normal accruals better predict observed accruals than the OLS-based normal accruals do. Importantly, the Bayes-based abnormal accruals in the lower graphs are largely unrelated to observed accruals, whereas the OLS-based abnormal accruals are strongly positively associated with observed accruals.

Comparison of Prediction Accuracy. The figure plots total accruals against predicted accruals and abnormal accruals (residuals). The predictions (and residuals) on the left are based on the extended modified Jones model (including performance, leverage, and volatility controls) fitted separately by industry-year using OLS. The predictions (and residuals) on the right are based on the posterior means of our averaged Bayesian models. One observation (TA = 78.30) was omitted from the plots for the sake of presentation

Under the plausible assumption that variation in observed accruals is primarily driven by first-order economic factors (e.g., business model differences), instead of second-order reporting incentives, the above patterns highlight that our Bayesian estimates better capture the normal structure of firms’ accruals and hence allow identifying abnormal deviations. Notably, this substantially better fit is achieved simply by using some basic variations of a weak and often criticized model.

4.3 Testing for abnormal accruals

Specification

We test for (opportunistic) abnormal accruals using a measurement-error model (see Section 2.3). Using simulations, we compare the power to detect abnormal accruals and the frequency of false positives of our proposed Bayesian approach with the standard approach.Footnote 24 In our simulations, we are particularly interested in the impact of model misspecification and uncertainty on test power and false positives.

To investigate the impact of misspecification, we simulate three distinct data generating processes (DGP) for normal accruals. The three processes generate total accruals following the modified Jones model, that is, as a linear function of the inverse of total assets (InvAt), change in cash revenues (△CRev), and property, plant, and equipment (PPE). They differ only in the level of coefficient heterogeneity. The first process assumes that coefficients only vary by industry-year (consistent with the standard approach in the literature). The second assumes that coefficients vary by both industry-year and firm. The third assumes that coefficients only vary by firm.

We use the entire sample of 139,241 observations to simulate the three series of total accruals resulting from the three distinct processes. We combine firms’ observed modified Jones model determinants with the process-specific coefficients to generate three simulated series of total accruals. We obtain the industry-year- or firm-level coefficients for the modified Jones model determinants via random draws from multivariate normal distributions (calibrated using previous posterior coefficient distributions). Equipped with these coefficients, we generate the simulated accruals for the distinct industry-year- and the firm-level processes by multiplying the modified Jones model determinants (observed for a given firm in a given year) with the respective industry-year- or firm-level coefficients and adding normally distributed noise. To generate the mixed industry-year and firm-level process, we add one-half of the simulated accruals from the industry-year-level process to one-half of the simulated accruals from the firm-level process (i.e., we assign weights of 0.5 to both models to arrive at the model-averaged process).
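The construction of the three simulated series can be sketched on a tiny hypothetical panel. The example below departs from our procedure in several labeled ways: it uses simulated rather than observed determinants, a single industry, independent normal draws instead of calibrated multivariate normal draws, and arbitrary coefficient means and noise levels.

```python
import random

rng = random.Random(11)
N_FIRMS, N_YEARS = 4, 3   # tiny hypothetical panel with a single industry

# Hypothetical standardized determinants (InvAt, change in CRev, PPE) per firm-year.
determinants = {(f, t): [rng.gauss(0.0, 1.0) for _ in range(3)]
                for f in range(N_FIRMS) for t in range(N_YEARS)}


def draw_coefficients(groups, means=(0.1, 0.3, -0.2), sd=0.2):
    """Draw one coefficient vector per group (independent normals for simplicity)."""
    return {g: [rng.gauss(m, sd) for m in means] for g in groups}


def simulate_accruals(coefs, row, noise_sd=0.5):
    """Linear combination of determinants plus normally distributed noise."""
    return sum(b * x for b, x in zip(coefs, row)) + rng.gauss(0.0, noise_sd)


year_coefs = draw_coefficients(range(N_YEARS))   # industry-year-level process
firm_coefs = draw_coefficients(range(N_FIRMS))   # firm-level process

ta_industry_year = {k: simulate_accruals(year_coefs[k[1]], row)
                    for k, row in determinants.items()}
ta_firm = {k: simulate_accruals(firm_coefs[k[0]], row)
           for k, row in determinants.items()}
# Mixed process: equal weights of 0.5 on the two simulated series.
ta_mixed = {k: 0.5 * ta_industry_year[k] + 0.5 * ta_firm[k] for k in determinants}

print(len(ta_mixed), "simulated firm-years per process")
```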

For each of the three accruals series, we fit an industry-year-level modified Jones model using OLS, resulting in three OLS predictions of normal accruals (\(NDA^{DGP}_{OLS, i,t}\), where DGP is either Industry-Year, Industry-Year + Firm, or Firm). For the industry-year-level process, the OLS model is correctly specified. For the mixed industry-year and firm-level process and the purely firm-level process, by contrast, the OLS model is increasingly misspecified. We define OLS abnormal accruals as the difference between observed and predicted accruals using the industry-year-level modified Jones model estimated via OLS.

For the Bayesian estimation, we essentially assume there are two distinct candidate models: an industry-year-level model and a firm-level model. We fit both hierarchical models to each of the three accruals series. We combine the respective posterior predictive distributions of the two candidate models using model averaging. We define Bayes abnormal accruals as the difference between observed and predicted (mean) accruals measured as the mean of the model-averaged posterior predictive distribution.Footnote 25

To compare test power across the Bayesian and the standard approach, we randomly add earnings management to the simulated accruals series following the example of Kothari et al. (2005). We proceed as follows. We first randomly select 20,000 observations from the entire sample. We next randomly add a constant earnings management amount to 5% of the selected sample observations.Footnote 26 We calibrate the earnings management amount at 5% of the standard deviation of the simulated total accruals. We finally run regressions of abnormal accruals on a true earnings management indicator (taking the value of one for all firm-years with earnings management and zero otherwise). For the OLS version, we simply regress the OLS abnormal accruals on the earnings management indicator (EM):

where \(DA^{DGP}_{OLS,i,t} = TA^{DGP}_{i,t} - NDA^{DGP}_{OLS, i,t}\).

For the Bayes version, we implement a measurement-error model where we regress the Bayes abnormal accruals as a noisy measure of observed abnormal accruals (\(DA^{*DGP}_{BAY,i,t}\)) on the earnings management indicator. We measure the level of noise in the Bayes abnormal accruals as the standard deviation of the model-averaged posterior predictive distribution (σDA,i,t):

For both the OLS and Bayes regressions, we record whether the coefficient on the earnings management indicator is statistically significant at the 5% level (using the 95% confidence interval for the OLS version and the 95% credible interval for the Bayes version).

To compare false positives across the Bayesian and the standard approach, we slightly adjust the steps above. We keep the previously added earnings management (to have the same sample for both tests) and assign a new but random earnings management indicator. This random indicator falsely indicates earnings management.

We repeat the steps for the test power and false positives simulation 500 times. The fraction of rejections of the true earnings management indicator provides a measure of power of the OLS and Bayes approaches to detect true earnings management for the three different accruals processes. The fraction of rejections of the false earnings management indicator provides a measure of false positives generated by the OLS and Bayes approaches for the three different accruals processes.
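The logic of the power and false-positive simulations can be sketched for the simplest case, in which abnormal accruals are pure noise plus a constant added to a random 5% of observations. The sketch is not a replication of our simulations: it skips the accruals models altogether, uses a two-sample z-type test in place of the regressions, and, so that it runs quickly, uses fewer and smaller samples and a much larger earnings management amount. All names and parameter values are hypothetical.

```python
import random
import statistics


def rejects_null(abnormal, indicator, critical=1.96):
    """Test whether mean abnormal accruals differ between flagged and unflagged observations."""
    treated = [d for d, e in zip(abnormal, indicator) if e == 1]
    control = [d for d, e in zip(abnormal, indicator) if e == 0]
    diff = statistics.fmean(treated) - statistics.fmean(control)
    se = (statistics.variance(treated) / len(treated)
          + statistics.variance(control) / len(control)) ** 0.5
    return abs(diff / se) > critical


def simulate(n_reps=100, n_obs=2000, share=0.05, em_amount=0.5, seed=7):
    """Return (power, false positive rate) across repeated simulated samples."""
    rng = random.Random(seed)
    n_treated = int(share * n_obs)
    true_hits = false_hits = 0
    for _ in range(n_reps):
        noise = [rng.gauss(0.0, 1.0) for _ in range(n_obs)]
        true_em = [1] * n_treated + [0] * (n_obs - n_treated)
        rng.shuffle(true_em)
        managed = [d + em_amount * e for d, e in zip(noise, true_em)]
        # Placebo indicator: same share of flagged observations, assigned independently.
        placebo_em = true_em[:]
        rng.shuffle(placebo_em)
        true_hits += rejects_null(managed, true_em)
        false_hits += rejects_null(managed, placebo_em)
    return true_hits / n_reps, false_hits / n_reps


power, false_positive_rate = simulate()
print(f"Power: {power:.2f}, false positive rate: {false_positive_rate:.2f}")
```

In this idealized setting, the rejection rate for the true indicator approximates test power, and the rejection rate for the placebo indicator should stay near the nominal 5% level.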

To investigate the impact of uncertainty on power and false positives, we augment our simulations with subsample tests. We test for power and false positives separately in samples with high versus low uncertainty or measurement error:

We use two measures of uncertainty. Our first splits the sample into observations from firms with a short time series (HIGH) versus those with a long time series (LOW). Fifty percent of the firms in our sample have a time series of six years or less. We use this median as the cutoff for the split. We expect that predicted and abnormal accruals of firms with a short time series contain more measurement error, especially in the Bayesian firm-level version. Our second measure splits the sample into observations with high (HIGH) versus low (LOW) posterior uncertainty of the Bayesian predictions. We label observations with a posterior uncertainty (\(\sigma ^{DGP}_{DA, i, t}\)) in the fourth quartile of the \(\sigma ^{DGP}_{DA, i, t}\) distribution as high uncertainty observations.Footnote 27

Results

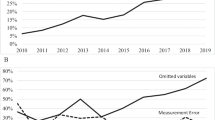

Table 4 summarizes the rates at which the null of no earnings management is rejected for the OLS and Bayesian versions across the different accruals processes and sample splits using a two-tailed 95% confidence/credible interval. In Panel A, we observe that the OLS and Bayesian approaches exhibit about the same amount of power to detect true earnings management (59% versus 60%) for the industry-year process. By contrast, we observe substantially higher power to detect earnings management for the Bayesian vis-à-vis the OLS approach for the averaged and the firm-level processes. Notably, power decreases for the OLS approach to 43% and 34% for the averaged process and firm-level process, respectively, whereas it increases for the Bayesian approach to 61% and 92% (Fig. 5).

Main Simulation Results. The figure shows rejection rates for two tests of earnings management using simulated data. Statistics are based on 500 draws from the three data generating processes (DGP): industry-year, averaged, and firm. Each draw samples 20,000 observations and randomly adds earnings management of 5% of the standard deviation of the simulated accruals to 5% of the observations. For each sample, the null of no earnings management is tested. The left figure shows tests using an earnings management dummy that correctly identifies managed earnings. The right figure shows tests using a randomly assigned dummy (for observations with a posterior standard deviation greater than the third quartile of the sample).

These patterns suggest that model misspecification significantly reduces the power of the OLS approach, especially if the researcher’s model is an industry-year model when the true model is a firm-level model. The Bayes approach does not suffer from a loss of power due to misspecification. It adapts its model weights across the different processes to best fit the true process. Table 5 shows that the Bayesian approach relies (almost) completely on the industry-year-level predictions for the industry-year process and on the firm-level predictions for the firm-level process. For the averaged process, it puts a weight of 23% on the industry-year-level predictions and 77% on the firm-level predictions. These weights differ from the underlying 50%-50% mix of the averaged process. The greater weight on the firm-level predictions likely reflects that the firm-level model already accounts for the across-industry heterogeneity of the industry-year process. Thus only the year-to-year variation by industry remains to be explained by the industry-year model. Interestingly, the Bayesian approach not only avoids a loss of power due to misspecification but even gains power, especially for the firm-level process. One reason for this increase might be that there are more firms than industry-years in the sample. Accordingly, the parameters governing the across-firm distribution of coefficients can be estimated more precisely than the parameters governing the across-industry-year distribution of coefficients.

With respect to false positives, we observe that the OLS and Bayesian approaches exhibit about the same rate of false positives (close to the expected 5% level) for all processes in Panel A. Accordingly, misspecification per se does not, on average, appear to substantially increase false positives for the OLS approach. Once we partition observations in our simulated samples by low versus high uncertainty, however, we observe that model misspecification notably increases the rate of false positives. In Panel B, we observe that, for the subsample of firms with few firm-year observations, the false positive rate of the OLS approach increases from 5.6% to 7.2% and 13.2%, as we go from the industry-year process to the averaged and firm-level processes. By contrast, the false positive rate of the Bayesian approach decreases from 5.2% to 3.6% and 2.4%, as we go from the industry-year process to the averaged and firm-level processes. In Panel C, we observe the same pattern for the subsample of firms with the highest (posterior) uncertainty (Fig. 5).Footnote 28

These patterns suggest that the combination of model misspecification and uncertainty can lead to substantially increased false positive rates for the OLS approach. By contrast, greater uncertainty leads the Bayesian approach to down-weight its evidence, resulting in fewer (false) rejections.

Taken together, our simulation results support the conceptual merits of the Bayesian approach over the standard approach. The former approach appears to exhibit more power and fewer false positives as a result of its inclusion of model and parameter uncertainty. Notably, our simulations support concerns (e.g., Ball 2013) that the standard approach may produce many false positives, due to model misspecification in combination with treatment indicators correlated with uncertainty. Our simulation documents that the power of the literature’s standard approach to detecting earnings management is low, especially for firm-level accruals processes and subsamples characterized by high uncertainty. By contrast, the rate of false positives is highest in these cases. These patterns are noteworthy, as our Bayesian model-averaging approach suggests that observed accruals processes in the data indeed are better described by firm-level than industry-year-level heterogeneity. Moreover, earnings management (treatment) indicators used in the literature are often correlated with uncertainty (e.g., younger and volatile firms). This combination of model misspecification and selection on high uncertainty subsamples should be expected to favor false positives.

In the ??, we document that our inferences from the simulation are robust to using alternative forms of earnings management and data generating processes. We, for example, show that the Bayesian approach also provides greater power to detect earnings management and produces fewer false positives in subsamples with high uncertainty when modeling earnings management as a deterministic function of earnings (e.g., Einhorn and Ziv 2020; Bertomeu et al. 2021; McClure & Zakolyukina 2021), instead of a random constant. Similar patterns obtain when modeling earnings management as random noise (e.g., Fischer & Verrecchia 2000; Dye & Sridhar 2004; Beyer et al. 2019) and using absolute abnormal accruals as an outcome. Lastly, we show that, in subsamples with high uncertainty, the Bayesian approach produces fewer false positives, even if the underlying data generating process is not perfectly captured by its candidate models (or a mix thereof). These patterns highlight that the benefits of the Bayesian approach extend above and beyond the controlled environment of our stylized simulation.

5 Application

Specification

In a final step, we apply our Bayesian approach to an extended set of models, including some of the most popular and recent accruals models. Following a review of the literature, we consider variants of the models developed by Jones (1991), Dechow et al. (1995), Kothari et al. (2005), Dechow and Dichev (2002), McNichols (2002), Ball and Shivakumar (2006), Collins et al. (2017), and Frankel and Sun (2018).Footnote 29 As before, we estimate industry-year and firm-level variants of these models. Equipped with the estimates of these model variants, we calculate the weights assigned to each of the variants and the corresponding averaged model.
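The weighting step can be sketched as follows, assuming the weights are chosen to maximize the out-of-sample log score of the model mixture (stacking of predictive distributions, in the spirit of Yao et al. 2018). The function name `stacking_weights`, the input layout, and all numbers are hypothetical and serve illustration only. The EM-style fixed-point iteration is a simple stand-in for a constrained optimizer and is not necessarily the routine used in our estimation.

```python
import numpy as np


def stacking_weights(lpd, n_iter=2000, tol=1e-10):
    """Simplex weights that maximize the summed log score of the mixture.

    lpd: array of shape (n_obs, n_models); lpd[i, k] is the log predictive
    density that model k assigns to held-out observation i.
    """
    lpd = np.asarray(lpd, dtype=float)
    n_models = lpd.shape[1]
    # Rescale each row by its maximum for numerical stability; the row-wise
    # constant cancels in the update below.
    dens = np.exp(lpd - lpd.max(axis=1, keepdims=True))
    w = np.full(n_models, 1.0 / n_models)  # start from equal weights
    for _ in range(n_iter):
        mix = dens @ w  # mixture density per observation
        w_new = w * (dens / mix[:, None]).mean(axis=0)
        converged = np.max(np.abs(w_new - w)) < tol
        w = w_new
        if converged:
            break
    return w / w.sum()


# Hypothetical held-out densities for four firm-years under three models.
lpd = np.log(np.array([
    [0.60, 0.30, 0.10],
    [0.50, 0.40, 0.20],
    [0.20, 0.70, 0.10],
    [0.55, 0.35, 0.15],
]))
weights = stacking_weights(lpd)

# Hypothetical posterior-mean normal accruals (rows: firm-years, cols: models).
normal_accruals = np.array([
    [0.020, 0.035, -0.010],
    [-0.015, -0.005, 0.010],
    [0.040, 0.050, 0.000],
    [0.005, 0.010, 0.030],
])
# Mean of the averaged predictive distribution for each firm-year.
averaged_mean = normal_accruals @ weights
print(weights.round(3), averaged_mean.round(4))
```

A model that assigns a lower density than a competitor to every held-out observation, like the third model here, is driven toward zero weight. This mirrors how a model subsumed by its refinements can receive almost no weight.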

Results

Table 6 reports the individual model specifications and their weights. The basic modified Jones model receives almost no weight. This pattern is consistent with subsequent refinements (e.g., Kothari et al. 2005) not only improving but essentially subsuming the basic model. It echoes our earlier finding that an extended Jones model, which controls for firm characteristics, such as performance, dominates the basic Jones model. All other models receive a nonnegligible weight for at least one of their respective variants (industry-year or firm-level). This pattern highlights the usefulness of considering a broad set of models. The greatest weights are assigned to firm-level variants of two models: the McNichols (2002) model, one of the most popular models, which combines the Jones (1991) and the Dechow and Dichev (2002) models, and the Frankel and Sun (2018) model, the most recent one. Consistent with our earlier results, these patterns reiterate the need to account for firm-level heterogeneity. They also document that the combination of two seminal models (Jones 1991; Dechow & Dichev 2002) helps in modelling accruals and that recent work by Frankel and Sun (2018) substantially improves this modelling.

We assess the usefulness of the averaged model by examining its ability to explain future performance and earnings management indicators (AAERs, restatements, and comment letters). Following Frankel and Sun (2018), Table 7 reports the results from regressions of future performance on current performance, constructed by combining current cash flow from operations and normal accruals predicted by the respective accruals models. We find that the current performance measure based on our averaged model’s (mean) normal accruals better explains future performance than current performance measures constructed using the normal accruals produced by the individual models.Footnote 30 The averaged model achieves an \(R^{2}\) of 54% (column 1), whereas the individual models exhibit explanatory power ranging from 45% to 48% (columns 2 to 8). The results suggest the averaged model significantly improves over and above the individual models’ ability to capture the structure of normal accruals.
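The logic of this comparison can be illustrated with a minimal simulation, assuming a stylized setting in which future performance depends on current cash flow plus true normal accruals and two candidate models measure normal accruals with different amounts of noise. All variable names, parameter values, and the data generating process are hypothetical; the sketch does not reproduce Table 7.

```python
import numpy as np


def r_squared(y, x):
    """R-squared from an OLS regression of y on a constant and x."""
    X = np.column_stack([np.ones_like(x), x])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return 1.0 - resid.var() / y.var()


rng = np.random.default_rng(0)
n = 5000

# Simulated (not actual) firm-year data.
cfo = rng.normal(0.08, 0.10, n)                   # cash flow from operations
true_normal_accruals = rng.normal(-0.03, 0.05, n)
future_perf = 0.7 * (cfo + true_normal_accruals) + rng.normal(0.0, 0.05, n)

# Two hypothetical estimates of normal accruals with different noise levels.
na_precise = true_normal_accruals + rng.normal(0.0, 0.01, n)
na_noisy = true_normal_accruals + rng.normal(0.0, 0.05, n)

# Current performance = cash flow from operations + estimated normal accruals.
r2_precise = r_squared(future_perf, cfo + na_precise)
r2_noisy = r_squared(future_perf, cfo + na_noisy)
print(f"R2 (precise): {r2_precise:.3f}, R2 (noisy): {r2_noisy:.3f}")
```

In this stylized setting, the performance measure built from the less noisy estimate explains more of the variation in future performance, which is the sense in which a higher explanatory power indicates a better estimate of normal accruals.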

Table 8 reports the results from regressions of indicators for (income-increasing) AAERs on abnormal accruals. We find that abnormal accruals are positively and statistically significantly associated with AAERs, irrespective of the accruals model used to estimate abnormal accruals. Compared to the abnormal accruals produced by the individual models, the ones produced by the averaged model exhibit a higher coefficient magnitude. The averaged model produces a slope coefficient of 0.035, suggesting a one standard-deviation increase of abnormal accruals is associated with a 3.5% increase in the likelihood of a financial reporting enforcement action. The slope coefficients of the individual models, by contrast, range from 0.018 to 0.031. These results are consistent with the averaged model producing less noisy measures of abnormal accruals.Footnote 31

Tables 9 and 10 report the results from regressions of indicators for restatements (related to accounting rule misapplications) and comment letters on absolute abnormal accruals. Following Dechow et al. (2011) and Du et al. (2020), we use absolute abnormal accruals to explain restatements and comment letters, because these incidences, unlike the income-increasing AAERs, capture both income-increasing and -decreasing reporting abnormalities. We find that the absolute abnormal accruals produced by our averaged model are positively and statistically significantly associated with both restatements (Table 9) and comment letters (Table 10). The absolute abnormal accruals produced by the individual models, by contrast, exhibit economically lower and statistically insignificant associations. These results are consistent with the averaged model exhibiting greater power to detect earnings management than any of the individual models.Footnote 32 Notably, the averaged model appears to increase power, especially for absolute abnormal accruals. Absolute abnormal accruals measures tend to suffer particularly from errors in the estimation of abnormal accruals (Hribar and Nichols, 2007).

6 Conclusion

We provide an applied introduction to key methods of Bayesian estimation of relevance to empirical accounting research. To illustrate the methods, we apply them to an issue of central concern to the literature and practice: the detection of accruals-based earnings management.

Our proposed Bayesian approach explicitly incorporates uncertainty about parameters and models in the estimation of normal (or unmanaged) accruals and tests for abnormal (or suspect) accruals. We document that the Bayesian approach significantly reduces the extent of accruals that can confidently be classified as abnormal and lowers the incidence of false positives in tests for earnings management. Our approach even increases the power to detect earnings management (i.e., reduces false negatives). This improvement arises because the approach allows for firm-level heterogeneity in accruals processes via hierarchical modelling. Firm-level heterogeneity appears to be key for improving the modelling of normal accruals and detection of abnormal accruals.

We illustrate the Bayesian approach with the help of the popular and familiar modified Jones model to ease comparison with the literature’s standard estimation approach. Our proposed approach, however, is not model specific. On the contrary, it can combine several models, such as the modified Jones model and other popular and more recent models (e.g., Dechow & Dichev 2002; Frankel & Sun 2018), to obtain superior normal and abnormal accruals estimates. Moreover, it can be applied to the problem of estimating normal levels of a host of variables of interest (e.g., productivity, investment, tone, or readability), not just accruals. Given its broad applicability, conceptual appeal, and increasing computational feasibility, we expect the Bayesian tools explained here to be widely adopted and to contribute to more credible inferences in the accounting literature.

Notes

Ball (2013) voices concerns that limited knowledge of the determinants of accruals results in bias and false positives. To address the issue, several studies have proposed improvements to the Jones model (e.g., Kothari et al. 2005; Hribar & Collins 2002) or new models (e.g., Dechow & Dichev 2002; Gerakos & Kovrijnykh 2013; Bloomfield et al. 2017; Beyer et al. 2019; Nikolaev 2018; Du et al. 2020). The issue of false positives, arising from the neglect of model and parameter uncertainty, by contrast, remains largely unaddressed.

To facilitate the adoption of our approach, we provide the code for our Bayesian estimation publicly. We also provide a dataset containing the means and standard deviations of abnormal accruals produced by our averaged model for each firm-year in our sample.

Following the literature, we use the modified Jones model, which includes the change in cash revenues, instead of the change in total revenues, as a determinant of accruals, in all of our analyses.

We view the Bayesian methods as additional tools for accounting researchers, instead of a substitute for familiar frequentist methods (including recent machine-learning techniques). Indeed, several features of our Bayesian approach can be mimicked by frequentist methods (e.g., regularization and model averaging). We focus on the Bayesian approach because it provides a coherent framework, which nests various methods to account for uncertainty about models and their parameters.

In the online appendix, we document the robustness of our inferences to alternative prior choices by varying the informativeness and the functional forms of our priors.

In line with the original Jones (1991) model, we include the inverse of total assets, instead of a constant. In later sections, we also consider models with (group-specific) constants, instead of (group-specific) coefficients on the inverse of total assets.

For notational brevity, we use \(p(\hat{TA} \mid D, M) \propto \int p(\hat{TA} \mid D, \Theta, M)\, p(\Theta \mid D, M)\, d\Theta\) as a short form of the hierarchical model where ψ is already integrated out.

Bayesian model averaging relates to two ad hoc approaches to addressing model uncertainty common in the earnings management literature: showing robustness across various models (e.g., Sletten et al. 2018) and using a combined score (e.g., Leuz et al. 2003). Unlike these approaches, Bayesian model averaging explicitly accounts for differences in the predictive ability of the distinct models via the model weights. This theoretically motivated and data-driven weighting increases power to detect earnings management, relative to ad hoc judgments about which models to report and how to combine earnings management indicators.

The logarithmic scoring rule uses (the negative of) the logarithm of the posterior probability assigned by the model to the observed accruals value. This rule can be linked to information theory and is commonly used in Bayesian inference. Absent an explicit economic model, it, however, is just one among many possible rules (i.e., it is a purely statistical construct).

To implement the measurement-error model, we assume that the unobservable measurement error (\(NDA^{*}_{i,t} - NDA_{i,t}\)) follows a normal distribution. This is a simplifying assumption, as the actual distribution is a mixture of unknown form. It is motivated by two observations. First, we observe that the predictive distributions of abnormal accruals appear to approximate normal distributions. Second, we note that the normal distribution is the maximum uncertainty distribution if all we know is an expected value and a standard deviation. Hence our simplifying assumption follows the maximum uncertainty principle that governs our choice of priors (Section 4).

Figure A4 in the online appendix illustrates the measurement-error regression framework.

For the above two-step approach, it is important to include the determinants used in the normal-accruals estimation (the first step) into the earnings-management regression (the second step) to avoid omitted variable bias, if the incentive variables are correlated with the determinants of normal accruals (Chen et al. 2018). Alternatively, one can use a one-step estimation, regressing total accruals on both their normal determinants and the incentive variables.

We follow Du et al. (2020) in requiring the filing date of the form (10-K or 10-Q) commented on by the SEC to fall within a 365-calendar-day interval ending 100 days after the fiscal year-end.

A popular alternative approach to choosing priors is to consider conjugate priors that, in combination with the likelihood, result in known posterior distributions. The benefit of conjugacy is that it reduces the computational burden. Its drawback is that it may impose undesirable restrictions on the shape of the priors.

We provide our code publicly to ease the adoption of our approach.

In line with the literature, we allow for coefficient heterogeneity. The Bayesian hierarchical modelling approach could also be used to allow heterogeneity in the residual variance (Σ).

“Shrinkage” in Bayesian estimation refers to the impact of (weakly) informative priors on posterior coefficient estimates. Priors pull (or shrink) the coefficient estimates obtained from the data toward the priors. This pull is a form of regularization, which addresses issues with outliers in the data (see Leone, Minutti-Meza, and Wasley (2019) for a discussion of outliers in accounting research).

The elements of a correlation matrix cannot vary independently of one another. For example, if the correlation between PPE and InvAt is large, then this influences the correlations between PPE and ΔCRev and between InvAt and ΔCRev. The LKJ prior accounts for this dependence (Lewandowski et al. 2009). Its use for multi-level models is advocated in most software packages. See, for example, the Stan manual (Stan Development Team, 2018).

The approach combines models based on their out-of-sample fit, reducing concerns about over-fitting (e.g., selecting more complex models due to better in-sample fit as a result of more parameters). For a detailed discussion and implementation of the Bayesian model-averaging approach, see Yao et al. (2018).

In determining the relevant candidate models, including their determinants and level of heterogeneity, we advocate the use of theory, institutional knowledge, and prior literature. For proper inferences, we suggest accounting for first-order determinants of accruals, especially if these determinants are correlated with proxies for opportunistic reporting incentives (e.g., Belloni et al. 2013). As a result of the bias-variance trade-off, we note that this approach may result in a lack of power to detect earnings management. This lack of power (or independent treatment variation), however, does not justify the omission of important correlated factors. Instead, it suggests that, given the data and the existence of confounding influences, we cannot confidently infer whether earnings are managed opportunistically.

Without performance, leverage, and volatility determinants, the industry-year OLS model exhibits a correlation with observed accruals as low as 0.30.