Abstract

An increasing number of research projects and infrastructure services involve pooling data across different survey programs. Creating a homogenous integrated dataset from heterogeneous source data is the domain of ex-post harmonization. The harmonization process involves various considerations. However, chief among them is whether two survey measurement instruments have captured the same concept. This issue of conceptual comparability is a fundamental precondition for pooling different source variables to form a harmonized target variable. Our paper explores this issue with a mixed-methods approach. On the one hand, we use psychometric latent variable modeling by presenting several single-item wordings for social trust to respondents and then performing factor analytic procedures. On the other hand, we complement and contrast these quantitative findings with qualitative findings gained with an open-ended web probe. The combined approach gave valuable insights into the conceptual comparability of the eleven social-trust-related single-item wordings. For example, we find that negative, distrust-related wordings and positive, trust-related wordings should not be pooled into an integrated variable. However, the paper will also illustrate and discuss why it is easier to disprove conceptual comparability than fully prove it.

1 Introduction

Secondary research reusing data collected by large-scale survey programs is an established practice in the quantitative social sciences. However, while many research projects use data from a single survey program, an increasing number of projects pool data from different survey programs instead (May et al. 2021; Schulz et al. 2021; Slomczynski and Tomescu-Dubrow 2018). Running analyses on such pooled data requires much preparatory work to create an integrated, homogenous dataset. This is the domain of ex-post harmonization, a developing field of methodology that seeks to assess and increase the comparability of existing data. The challenges here are unlike those of ex-ante harmonization, where comparability was intended and planned for before data collection. Instead, ex-post harmonization has to take the data and all the survey design decisions that have been made as given (Granda et al. 2010).

Ex-post harmonization must overcome many obstacles before we can place trust in the comparability of our pooled and harmonized data. This paper focuses on one particular challenge: How can we assess if different single-item measurement instruments capture the same concept? This challenge is especially pronounced with concepts that cannot be directly observed: So-called latent constructs. Examples are subjective estimates, attitudes, values, or emotions. Pragmatically speaking, we are often caught between two pitfalls. On the one hand, there is always the risk that even similarly worded questions capture markedly different substantive concepts. On the other hand, we also risk discarding data sources with different wordings that would have measured the same construct. In this paper, we want to extend the range of options for ex-post harmonization practitioners by exploring a mixed-methods approach. Quantitatively, we draw upon perspectives and techniques from psychometry: The concept of congenericity and the modeling framework of factor analysis (Price 2017; Raykov and Marcoulides 2011). Qualitatively, we use open-ended cognitive probing questions to complement the quantitative findings (Neuert et al. 2021).

As an example, we formed items based on the question wordings used in general social survey programs to capture social trust. We conducted a study using a non-probability sample collected via an online access panel (N = 2171). In the study, respondents saw eleven items with different question wordings in a randomly shuffled question battery and answered them using the same response scale. Afterward, we randomly picked one of the items and asked respondents in an open question why they chose their answer. We also asked how easy or hard it was to decide on an answer and how well the question fits into the theme of the other questions in the battery.

As we aim to demonstrate, this hybrid approach allows for more informed decisions on which questions to add to our harmonized dataset and which to discard as not sufficiently comparable. In the theory section below, we justify looking at the comparability of single-item questions through the lens of factor analyses and psychometric models. We will outline what factor analyses can tell us and where they fall short for our purposes. We then discuss the benefits of adding open-ended probing questions (probes) to complement the factor analytic approach. The methods section details the design and materials used in our empirical example of single-item measures for social trust. In the results section, we present the results from both the quantitative factor analytic approach and the qualitative insights gained from web probing. In the discussion, we interpret these findings and offer guidance on how to apply similar designs in other ex-post harmonization projects.

2 Theoretical background

Our interest lies in assessing the comparability of two survey measurements from different sources (e.g., different measurement instruments, survey programs, survey modes, points in time, or countries). Specifically, we look at the comparability of single-item survey measurement instruments intended to capture latent constructs such as attitudes, values, interests, or, in our example, social trust. This context means we face several interlinked challenges, exacerbated by both the nature of latent constructs and the limitations of single-item instruments.

To approach these challenges, we find it helpful to divide them into three issues: Conceptual comparability, comparable reliabilities, and comparable units of measurement (Singh 2022). As we will see, these issues are all the more pressing if we want to harmonize single-item instruments in contrast to psychometric multi-item scales. Conceptual comparability means that we should only combine variables from different sources that measure the same concept. However, this is far from an easy task, especially when we try to measure latent constructs. In this paper, we attempt to formalize this idea of comparable constructs by borrowing the concept of congenericity from psychometry (Price 2017).

The other two issues are beyond the scope of this paper. However, we want to stress that they are also relevant for successful ex-post harmonization. The second issue, comparable reliabilities, means that measurements should have similar reliabilities before combining them into a single, ex-post harmonized variable. Different reliabilities are problematic because lower reliability reduces correlations with that measure through attenuation (Charles 2005). Combining source variables with different reliabilities into a single target variable thus introduces heterogeneity into our combined dataset and may skew analyses. If the reliability of all source measurements can be estimated, then we can mitigate the issue via statistical corrections such as the correction for attenuation (Charles 2005). However, with single-item instruments, reliability estimation is not trivial since we cannot apply the convenient measures of internal consistency that we would use for multi-item scales (e.g., Cronbach’s Alpha or McDonald’s Omega; Dunn et al. 2014). Nonetheless, several options exist, such as test–retest reliability, multi-trait multi-method models (Tourangeau 2020), the Survey Quality Predictor (Saris 2013), or novel approaches such as comparative attenuation (Singh and Repke 2023).
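To illustrate why unequal reliabilities introduce heterogeneity, the following minimal sketch applies the classical correction for attenuation (observed correlation divided by the square root of the product of the two reliabilities). The function name and all numerical values are hypothetical and not taken from our data.

```python
import math


def disattenuate(r_xy: float, rel_x: float, rel_y: float) -> float:
    """Classical correction for attenuation:
    observed correlation divided by sqrt(rel_x * rel_y)."""
    if not (0 < rel_x <= 1 and 0 < rel_y <= 1):
        raise ValueError("reliabilities must lie in (0, 1]")
    return r_xy / math.sqrt(rel_x * rel_y)


# Hypothetical example: the same true correlation of 0.50 is observed with
# two instruments of different reliability (criterion reliability 0.80).
true_r = 0.50
observed_a = true_r * math.sqrt(0.80 * 0.80)  # instrument A, reliability 0.80
observed_b = true_r * math.sqrt(0.50 * 0.80)  # instrument B, reliability 0.50

# After correction, both instruments point to the same underlying correlation.
corrected_a = disattenuate(observed_a, 0.80, 0.80)
corrected_b = disattenuate(observed_b, 0.50, 0.80)
```

In this constructed example, the less reliable instrument yields the visibly lower observed correlation, although both instruments capture the same underlying relationship.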

The third issue is the comparability of measurement units (or comparable scaling). Many concepts in the social sciences, and especially latent constructs, do not have natural or even conventional units. In other words, the numerical response scores measured by different instruments do not necessarily imply the same construct intensity (such as the same level of social trust). However, many methods exist to establish comparable measurement units by transforming measured scores afterward. Such methods are often discussed in the psychometric literature, where different versions of diagnostic tests must be made comparable (Kolen and Brennan 2014). Terms for such harmonization techniques include linking, aligning, calibrating, or equating (Kolen and Brennan 2014). Another body of literature on the comparability of measurement units comes from medicine and its integrative data analysis efforts (Fortier et al. 2017; Hussong et al. 2013; Siddique et al. 2015). The problem is that most methods have strong requirements regarding the data they need on the instruments (e.g., respondents answering both instruments; Siddique et al. 2015) or regarding the instruments themselves (e.g., requiring multi-item instruments that share some common items; Fortier et al. 2017; Kolen and Brennan 2014). However, some methods can be applied to single-item instruments with less strict data requirements (e.g., observed-score equating; Kolen and Brennan 2014; Singh 2022).
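As a rough illustration of what observed-score equating involves, the sketch below implements linear equating, one of the simplest variants: source scores are rescaled so that their mean and standard deviation match those of the target instrument. It assumes that both instruments were answered by randomly equivalent samples from the same population. The data and the function name are hypothetical, and the cited works may use other variants of observed-score equating.

```python
import numpy as np


def linear_equate(x, source_scores, target_scores):
    """Map scores x from the source instrument onto the target instrument's
    scale by matching means and standard deviations (linear equating)."""
    mu_s, sd_s = np.mean(source_scores), np.std(source_scores, ddof=1)
    mu_t, sd_t = np.mean(target_scores), np.std(target_scores, ddof=1)
    return mu_t + (sd_t / sd_s) * (np.asarray(x, dtype=float) - mu_s)


# Hypothetical data: a four-point and a ten-point item answered by two
# randomly equivalent samples (assumption of the random-groups design).
rng = np.random.default_rng(1)
four_point = rng.integers(1, 5, size=1000)   # values 1..4
ten_point = rng.integers(1, 11, size=1000)   # values 1..10

# Four-point responses expressed in the units of the ten-point item.
equated = linear_equate(four_point, four_point, ten_point)
```

Note that such a transformation only aligns units; it presupposes that both items measure the same concept in the first place.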

2.1 Conceptual comparability

As we have seen, ex-post harmonization poses several challenges. However, to our mind, conceptual comparability is the most central issue because if two instruments measure different concepts, ex-post harmonization might not be possible at all. Consequently, we must carefully assess if different single items measure the same concept (Singh 2022). Meanwhile, psychometric applications face a similar challenge: We often present collections of single items to respondents and then check if they all measure the same concept or, in other words, if they are congeneric indicators of the same construct (Price 2017). The goal in psychometry is to gain a justification to use this collection of single items as a coherent multi-item scale for a specific construct. It seems promising to explore if we can transfer concepts and methods from psychometry to our sociometric case: Harmonizing a set of single-item measures where we need to establish whether they measure the same concept.

Justification for EFA and CFA usage

In the following, we will justify our belief that psychometric validation methods can be transferred to the ex-post harmonization of single-item instruments. Specifically, we will borrow statistical procedures such as exploratory factor analyses (EFAs) and confirmatory factor analyses (CFAs) that are usually applied to ensure that a multi-item measurement instrument works as intended (Price 2017). Such methods also reveal valuable information on the separate items, but again, to ensure that they play their role within the ensemble of the multi-item instrument. However, in our case, the goal is to address whether single items measure the same construct even if applied in separate contexts. Still, we argue that collecting data on all harmonization candidates from the same respondents and applying factor analytic techniques is helpful. To justify this standpoint, we look at the issue from two sides. First, we consider if such techniques help us identify instances where items measure different concepts. Second, we consider if a failure to find evidence for different concepts proves that all single items measure the same concept. However, such psychometric statistical methods also have shortcomings. Consequently, we will try to complement the psychometric approach with open-ended probing questions about respondents’ reasoning, which we will analyze with qualitative methods.

The first perspective focuses on opportunities to falsify our assumption that all candidate question wordings capture the same concept. This is where the parallel to the traditional psychometric application is strongest, and we are confident that applying EFA or CFA techniques can be helpful for the ex-post harmonization of single-item instruments. Factor analyses can help us identify several interesting issues. For example, we might find that we prefer a solution with several factors instead of all candidate items measuring the same construct (Brown 2015). This might mean we can identify candidate items to discard. It might also mean that if we find a clear, substantive explanation for the factor structure, we can differentiate items as measures of distinct (sub-)constructs. In short, few will contest that EFA and CFA approaches provide opportunities to find relevant construct comparability issues even if we are only interested in single items.

The second perspective, however, is less certain. That is because it is easier to find reliable evidence against conceptual comparability than it is to build a case for conceptual comparability. If a CFA draws a single-factor solution into doubt, we have gained clear evidence against comparability. However, even if the CFA demonstrates a single-factor solution, we cannot directly infer that there are no conceptual comparability issues. CFAs can be blind to systematic biases affecting only a specific item (Raykov and Marcoulides 2011). We must delve deeper into how factor analyses work to understand the issue better. Factor analyses are founded on the intercorrelations between the items we feed into the analyses. Factors are, in fact, nothing else than instances where several items covary markedly. If a set of items all reflect the same concept, then this results in a strong covariance of all those items.

On the level of items, the factor analysis decomposes the variance of responses to that item into common variance and unique variance (Raykov and Marcoulides 2011). Common variance is response variance explained by the extracted factor(s). In other words, this is the portion of variance where items covary due to their shared underlying latent source(s), i.e., due to a shared construct they capture. The unique variance is the residual variance not explained by the factor(s). Unique variance (i.e., the residual terms in a factor analysis) can then be conceptually decomposed into random measurement error and specific variance (Price 2017). Random measurement error means that responses vary unsystematically and is conceptually the inverse of what we think of as reliability (Raykov and Marcoulides 2011). Specific variance, in contrast, is a systematic (i.e., non-random) response variance likely caused by the item being correlated to another concept or methodological influence that is not captured by the factors (Price 2017).
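This decomposition can be written compactly. The notation is introduced here for illustration only and assumes a single factor \(\xi\) and mutually uncorrelated components: \(\lambda_i\) is the loading of item \(i\), \(\tau_i\) its intercept, \(s_i\) its specific component, and \(e_i\) its random measurement error.

\[
x_i = \tau_i + \lambda_i \xi + s_i + e_i, \qquad
\operatorname{Var}(x_i) = \underbrace{\lambda_i^2 \operatorname{Var}(\xi)}_{\text{common variance}} + \underbrace{\operatorname{Var}(s_i) + \operatorname{Var}(e_i)}_{\text{unique variance}}
\]

A factor model estimates only the sum \(\theta_i = \operatorname{Var}(s_i) + \operatorname{Var}(e_i)\) for each item, not its two parts.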

Specific variance is a vexing problem because we cannot easily empirically distinguish between random error and specific variance. In a factor model, we only have the residual, which combines the two components in one value (Price 2017). We can somewhat address the problem from within the factor analytic framework. Specifically, we can look for correlated residuals. If the residuals were only composed of random error, they would be uncorrelated. However, suppose residuals of different items are correlated. In that case, this potentially implies that they have some form of latent influence in common that is not captured by the extracted factor(s) (Brown 2015). This might mean that another factor exists that influences some items in particular. This might result from other substantive influences, or it might be a methodological artifact if some subset of items shares some question design characteristic. If our goal was a working psychometric multi-item scale, we could extract additional factors or perhaps correlate the error terms of some item pairs. However, our interest lies in assessing single-item instruments. If we find a solution with more than one factor to be superior, we might be forced to discard some instruments or only pool and harmonize instruments within each factor.
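The logic of inspecting residual correlations can be sketched with simulated data. The example below is an illustration under stated assumptions, not the analysis code of this study: six hypothetical items load on one construct, items 5 and 6 additionally share a method influence, and a one-factor iterated principal axis factoring serves as a simple stand-in for a full CFA.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 5000

# Hypothetical data: six items load on one construct; items 5 and 6
# additionally share a method influence the single factor cannot capture.
construct = rng.standard_normal(n)
method = rng.standard_normal(n)
items = np.empty((n, 6))
for j in range(6):
    shared = 0.5 * method if j >= 4 else 0.0
    unique_sd = np.sqrt(1 - 0.49 - (0.25 if j >= 4 else 0.0))
    items[:, j] = 0.7 * construct + shared + unique_sd * rng.standard_normal(n)

R = np.corrcoef(items, rowvar=False)

# One-factor iterated principal axis factoring (stand-in for a CFA).
communalities = 1 - 1 / np.diag(np.linalg.inv(R))  # squared multiple correlations
for _ in range(200):
    reduced = R.copy()
    np.fill_diagonal(reduced, communalities)
    eigvals, eigvecs = np.linalg.eigh(reduced)  # eigenvalues in ascending order
    loadings = np.sqrt(eigvals[-1]) * eigvecs[:, -1]
    communalities = loadings ** 2

# Residual correlations: observed minus model-implied, off-diagonal only.
residuals = R - np.outer(loadings, loadings)
np.fill_diagonal(residuals, 0.0)
i, j = np.unravel_index(np.argmax(np.abs(residuals)), residuals.shape)
worst_pair = tuple(sorted((int(i) + 1, int(j) + 1)))  # 1-based item numbers
```

In this constructed case, the largest residual correlation belongs to the item pair that shares the method influence, which is the kind of pattern that would prompt a closer look at the two wordings.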

Unfortunately, it is also possible that some foreign influence (e.g., another concept, a methodological artifact) influences only one item, for example, because that specific item can be misunderstood in a substantively different way by respondents. Such instances of measurement contamination are indistinguishable from random error for the factor analysis. After all, factor analyses require intercorrelations, and when only one item is influenced, the contamination leaves no trace in the correlations between items. This is less of an issue for psychometry, where we can extract factors, meaning we discard the items’ uniqueness and only retain their commonality. In our case, which focuses on single-item instruments used separately in the source data, this cannot be done. Variance tied to the concept, random error variance, and specific error variance are inextricably entangled in single-item instruments. Still, we do not want to overstate the issue. Establishing a well-fitting factor model with adequate factor loadings for each item already goes a long way to show that all items predominantly measure the same concept. Furthermore, the issue itself is not a problem caused by harmonization. It is a common limitation of all single-item instruments, which we nonetheless routinely use in the social sciences and many applied fields of psychology (Matthews et al. 2022).
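A small simulation makes this blind spot concrete. It is a sketch with hypothetical values: two candidate items have the same loading on the target construct and the same total unique variance, but for one of them most of that unique variance stems from a foreign concept rather than random error.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 200_000

trust = rng.standard_normal(n)      # target construct
foreign = rng.standard_normal(n)    # hypothetical contaminating concept


def noise(sd):
    """Independent random error with the given standard deviation."""
    return sd * rng.standard_normal(n)


# Three clean reference items plus two candidates with identical loadings
# and identical total unique variance (0.51).
reference = np.column_stack(
    [0.7 * trust + noise(np.sqrt(0.51)) for _ in range(3)]
)
clean = 0.7 * trust + noise(np.sqrt(0.51))
contaminated = 0.7 * trust + np.sqrt(0.30) * foreign + noise(np.sqrt(0.21))


def mean_corr_with_reference(item):
    """Average correlation of one item with the three reference items."""
    return np.mean(
        [np.corrcoef(item, reference[:, k])[0, 1] for k in range(3)]
    )


r_clean = mean_corr_with_reference(clean)
r_contaminated = mean_corr_with_reference(contaminated)
r_foreign = np.corrcoef(contaminated, foreign)[0, 1]
```

Both candidates correlate almost identically with the reference items, so a factor analysis of the item set alone cannot tell them apart. The contamination only becomes visible once a measure of the foreign concept is available.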

Nonetheless, we might consider complementing factor analytic methods with other strategies to mitigate this remaining risk. If potential contaminating influences are known from the literature, adding measures for those concepts to the study is possible. This way, we can easily quantify to which extent these alternative concepts bias specific items. However, our paper applies a qualitative approach to complement our quantitative factor analytic strategy. This has the advantage that we give ourselves the opportunity to find contaminating influences that we had not thought of ourselves. Specifically, we ask respondents to explain their answer to a randomly selected item of the set of candidate items. This approach can help us identify alternative concepts and contaminating influences, such as potential ways to misunderstand the question. Of course, qualitative approaches cannot quantify their respective impact, but they explore bias sources that the researchers would not have thought of otherwise. However, we want to stress that this paper is not intended to pit different methods against each other. Instead, establishing conceptual comparability, much like establishing validity, requires integrating different approaches and perspectives (Price 2017).

2.2 Study design and analysis strategies

Next, we want to present our specific empirical example focusing on candidate measures of social trust. As a guiding definition for selecting items, we looked for items that capture “people’s estimates of the trustworthiness of generalized others” (Robinson and Jackson 2001, p. 119). We chose social trust as a target concept because it is a central concept in many research topics in the social sciences. It is also a realistic harmonization candidate because many surveys collect data on the concept. Social trust is also a concept with competing ideas regarding its definition and dimensionality. Social trust is often measured with a general trust question (Justwan et al. 2018), which aligns with the idea that trust and distrust are opposites on the same continuum or dimension (Van De Walle and Six 2020). However, trust and distrust can also be seen as separate concepts. There is the idea that citizens can exhibit high trust in government or society while still maintaining vigilance (Van De Walle and Six 2020). Vice versa, citizens might also have low levels of trust while not being distrustful. This might happen if citizens harbor neither routine positive expectations about interactions with others (low trust) nor routine negative expectations (low distrust; Van De Walle and Six 2020). Lastly, social trust is an ideal concept to illustrate the need for assessing conceptual comparability. The question wordings we found that may capture social trust are similar enough to seem promising but different enough to make the need for empirical work obvious. It is entirely plausible that “most people can be trusted” captures a concept very similar to “people can generally be trusted.” However, what about “people mostly try to be helpful”? Or negative wordings, such as “one can’t be careful enough when dealing with other people”? The question of positive versus negative wordings is especially pressing since many instruments feature positive (i.e., trust) and negative (i.e., distrust) wordings as endpoints of the same response scale. If trust and distrust represent separate constructs, then this would make such composite questions double-barreled items with all the associated problems for respondents and interpretability (Elson 2017).

Consequently, we collected a non-probability sample via an online access panel. In this online survey, we presented eleven wordings from different survey programs that might capture social trust to all respondents. This forms the basis for our factor analytic tests on whether these eleven wordings evoke the same concept in our respondents. We then complement this approach with probing questions. For our qualitative approach, we randomly chose one of the eleven items for each respondent. We then reminded respondents of the item wording and their response to it and asked them to explain why they chose this answer. Out of exploratory interest, we also asked two closed-ended probing questions: One on how easy or hard it was to decide on an answer and one on how well or badly the item fit the perceived topic of the other items.

2.2.1 Analyses

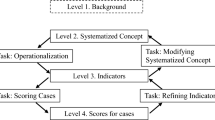

Here, we lay out the factor analytic approaches we can apply in our study. For each approach, we explain its role within our ex-post harmonization case of testing the conceptual comparability of single-item wordings. The first analysis approach is to test directly if all items measure a single factor. This is, after all, our initial, hopeful assumption that guided us to select these harmonization candidates. We can use a CFA with a simple congeneric model to that end. Stricter measurement models exist, but we are not interested in creating a robust psychometric multi-item scale. The model simply posits that all items load onto a single factor and that their residuals are uncorrelated. Should the model show a tenable fit, we have gained confidence that the items are conceptually comparable. However, the size of factor loadings and residuals should still be considered. Fit indices are omnibus measures that may well mask problems at the level of individual items or item pairs.
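In formal terms, the congeneric single-factor model can be sketched as follows. The notation is introduced here for illustration: \(x_i\) is the response to item \(i\), \(\tau_i\) its intercept, \(\lambda_i\) its loading on the factor \(\xi\) with variance \(\phi\), and \(\delta_i\) its residual with variance \(\theta_i\).

\[
x_i = \tau_i + \lambda_i \xi + \delta_i, \qquad
\operatorname{Cov}(\xi, \delta_i) = 0, \qquad
\operatorname{Cov}(\delta_i, \delta_j) = 0 \ \text{for } i \neq j
\]

\[
\Sigma = \phi \, \boldsymbol{\lambda} \boldsymbol{\lambda}^{\top} + \Theta, \qquad
\Theta = \operatorname{diag}(\theta_1, \dots, \theta_{11})
\]

The congeneric model leaves all loadings and residual variances free. Stricter models add equality constraints: (essentially) tau-equivalent models require equal loadings, \(\lambda_i = \lambda\) for all \(i\), and parallel models additionally require equal residual variances, \(\theta_i = \theta\).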

If a congeneric model fails, we could perform an EFA. This approach might give us an idea of how many underlying concepts we are dealing with and which items most closely represent one concept or another. A related bonus is that in an EFA, items can load, albeit to a different degree, on all extracted factors at once. This helps us identify ambiguous, hybrid items. EFAs have many researcher degrees of freedom, however. Chief among them is the number of factors to extract. We will demonstrate traditional decision strategies in the results section. We can also specify models that account for several factors based on the EFA results or theoretical considerations. If such a model holds, it would mean that harmonization within each factor may be permissible. Still, we should perhaps refrain from pooling data of items that load onto different factors.
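Two widely used decision aids for the number of factors are the eigenvalue-greater-than-one rule and parallel analysis. The sketch below illustrates both on simulated data and is not the analysis code of this study. The data-generating values are hypothetical, and the parallel analysis shown here is the principal-component variant, which compares eigenvalues of the observed correlation matrix with those of uncorrelated random data.

```python
import numpy as np

rng = np.random.default_rng(2021)
n, p = 2000, 6

# Hypothetical data: two negatively correlated factors, three items each.
factors = rng.multivariate_normal([0, 0], [[1.0, -0.3], [-0.3, 1.0]], size=n)
loadings = np.zeros((p, 2))
loadings[:3, 0] = 0.7
loadings[3:, 1] = 0.7
data = factors @ loadings.T + np.sqrt(0.51) * rng.standard_normal((n, p))


def sorted_eigenvalues(x):
    """Eigenvalues of the correlation matrix, largest first."""
    return np.sort(np.linalg.eigvalsh(np.corrcoef(x, rowvar=False)))[::-1]


observed = sorted_eigenvalues(data)

# Eigenvalue-greater-than-one rule.
kaiser_count = int(np.sum(observed > 1.0))

# Parallel analysis: compare against the 95th percentile of eigenvalues
# obtained from uncorrelated random data of the same shape.
random_eigs = np.array(
    [sorted_eigenvalues(rng.standard_normal((n, p))) for _ in range(200)]
)
threshold = np.percentile(random_eigs, 95, axis=0)
exceeds = observed > threshold
# Count only the leading run of eigenvalues that exceed their threshold.
parallel_count = p if exceeds.all() else int(np.argmin(exceeds))
```

Both rules are heuristics. With real survey data they can disagree, which is one reason why the choice of the number of factors remains a researcher degree of freedom.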

To gain qualitative insights into whether respondents share the same understanding of the implemented items and whether this corresponds to the intention of the researcher, in our case, measuring social trust, we apply a technique originating from cognitive interviewing (Willis 2005), which is called web probing (Behr et al. 2013). Cognitive interviews are a qualitative method in which survey questions are administered to respondents, followed by so-called probing questions or probes. Traditionally, the method has been used as a pretesting method (Miller 2014). The probes are intended to retrieve additional verbal information about the survey question and response (Beatty and Willis 2007). A major goal of asking probes is to evaluate the comprehensibility and validity of a given survey item, i.e., whether respondents interpret the item in different ways or in the same way but not as intended by the researcher (Collins 2003). When using web probing, probes are not administered to respondents by an interviewer but instead implemented into a web survey, usually as open-ended questions (Behr et al. 2012; Neuert et al. 2021). Combined with a quantitative design, this additional information from respondents makes it possible to understand interpretive variation in more detail.

3 Method

3.1 Sample

We conducted an online survey with a non-probability sample collected in an online access panel with N = 2171 (respondi AG 2021). We applied sex, age, and education quotas to encourage a demographically diverse sample. The sample comprised 50.2% women and 49.7% men; two respondents reported their sex as “divers” (Germany’s official designation for intersex). Respondents’ mean age was \(\overline{x}\) = 41.7 (SD = 15.2). About half of the respondents (54.3%) had achieved some form of college entry qualification. Responses to the social trust items had no omissions, meaning that the total sample of N = 2171 will be the basis for our analyses.

It is generally considered good practice to use independent samples for exploratory and confirmatory factor analyses (Brown 2015). In our case, we will randomly split our sample into two equally large subsamples. In the results section, we will thus speak of the exploratory sample and the confirmatory sample. In the exploratory sample, we will perform our first, naïve single-factor CFA and the EFA. It may seem confusing that we fit a CFA model in the exploratory sample, but we use the single-factor CFA as a pragmatic shot in the dark. In some cases, this first step might already lead to a tenable model, and ex-post harmonization practitioners will welcome a reduction of their workload. In our case, however, a plausible two-factor pattern will emerge, which we will test again in the confirmatory sample. Concerning the qualitative web probes, each respondent answered all items but only saw one probe related to one randomly selected item to keep the burden low and to avoid any wear-out effects from repeated exposure to a probe. This effectively resulted in eleven random samples of \(n_i \approx 197\) each.
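The two randomization steps can be sketched as follows. The sketch is illustrative rather than a record of the actual survey software logic: the seed and variable names are hypothetical, and it assumes a simple independent random draw of the probed item for each respondent.

```python
import numpy as np

rng = np.random.default_rng(2171)  # hypothetical seed
n_respondents, n_items = 2171, 11

# Random split into an exploratory and a confirmatory half. With an odd N,
# the two halves differ in size by one respondent.
shuffled = rng.permutation(n_respondents)
half = n_respondents // 2
exploratory_ids = shuffled[:half]
confirmatory_ids = shuffled[half:]

# Independently draw one probed item (1 to 11) per respondent.
probed_item = rng.integers(1, n_items + 1, size=n_respondents)
group_sizes = np.bincount(probed_item, minlength=n_items + 1)[1:]
```

Because the probed item is drawn independently of the sample split, each of the eleven probe groups contains respondents from both halves, with an expected size of 2171 / 11, or roughly 197 respondents.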

3.2 Procedure

Our study was part of a larger survey. Respondents first saw the welcome page with information on the survey and data protection. Then, respondents saw a set of pages with basic sociodemographic questions on sex, age, education, and occupation. Next, participants saw two items related to political interest and three items related to political efficacy. These items are not relevant to our present paper. Then, participants were presented with the eleven candidate social trust items we examine in this paper. The items were presented in a question matrix, as is common for multi-item scales. The order of items was randomized for each respondent. Next, respondents saw further questions relating to one randomly selected item from the set of eleven items. Specifically, they answered an open-ended question on why they chose their answer and then two closed-ended questions on how easy or hard it was to decide on an answer and on how well or badly the item fitted with the perceived topic of the other items on that page. The study then continued with questions not intended for this paper, specifically questions on participants' subjective health and their reproductive choices. Respondents were unable to go back to previous survey pages or to change the responses they had given. Thus, later content cannot have influenced the responses relevant to this paper. Lastly, respondents were thanked and referred back to the environment of the online access panel.

3.3 Materials

3.3.1 Candidate social trust items

We collected example wordings from five German survey programs and the German language versions of different international survey programs. This collection is not a comprehensive list of social trust-related questions in German surveys. Still, it certainly represents plausible examples of the kind of items we might encounter in ex-post harmonization. Table 1 below shows the eleven items and the source survey. The column “wording” differentiates items that are positively worded in line with trust and items that are negatively worded in line with distrust. We also sorted the table by positive and negative wording because this differentiation will play a central role in the results section.

Below, we detail the sources for each item. Please note that some items were originally the endpoints of the same scale. As we will see, however, the wordings used in the same scale are often quite different and merit being separated into their own items.

Items 1 and 5 were derived from a single-item measurement instrument used in the ALLBUS survey (GESIS 2017). The two items were presented as two options, and respondents were asked to choose between the two. Additionally, they could choose “it depends” as another closed-ended option or give a detailed answer in an open-ended “other” option. While response scales vary, other surveys used almost identical wordings, such as the EVS (e.g., in 2008; EVS 2022) and the GESIS Panel (e.g., in 2014; GESIS 2022).

Items 2 and 6 are also the endpoints of the same scale used in the German questionnaire of the ISSP wave of 2010 from the topic module “environment” (ISSP Research Group 2019). Respondents could choose either option or gradations between them on a five-point scale.

Items 3 and 9 are again derived from the endpoints of a scale used in the EVS in 2008 (EVS 2022). This time, respondents could grade their answers on a ten-point scale, where only the endpoints are labeled.

Items 4, 10, and 11 were taken from the SOEP, for example, in 2018 (SOEP 2019). The three items were presented as separate items of a single multi-item battery. Respondents could express their level of agreement or disagreement on a four-point scale.

Items 7 and 8 are from the ISSP wave 2006 on the topic module “role of government” (ISSP Research Group 2021). Both were separate single-item measures with a five-point Likert-scale response scale, allowing respondents to agree or disagree with the statement.

3.3.2 Item battery

We presented the eleven derived items on the same questionnaire page in random order in a multi-item battery. Since we wanted to focus on the question wording, we used the same Likert-style response scale for all items: A five-point scale with the options “do not agree at all” (“Stimme überhaupt nicht zu”), “do not agree” (“Stimme nicht zu”), “neither/nor” (“Weder/noch”), “agree” (“Stimme zu”), and “fully agree” (“Stimme voll und ganz zu”).

3.3.3 Open-ended probing question

After answering the eleven questions, respondents received one probing question targeting one randomly selected item. The open-ended probing question started with an introduction: “We would like to gather some additional information about one of the statements you saw on the previous page.” Below, respondents were reminded of the item wording and the response option they had chosen for that item. The following category-selection probe read, “Please explain your answer in more detail. Why did you choose this answer?” Respondents then could type their answers into a text input field. Notably, we probed each respondent on only one randomly selected item to decrease respondent burden and probe non-response (Lenzner and Neuert 2017; Meitinger and Behr 2016). We were also wary of respondents conflating their associations and interpretations across items when probed on several of them in quick succession. However, one drawback of this approach is that, unlike with the factor analytic sample, we cannot split the sample in two to cross-validate our qualitative analyses.

3.3.4 Coding procedure and coding scheme

Responses to the category-selection probe were coded using a coding scheme representing the core themes and main associations respondents had in mind when answering the respective item. The second author and a student assistant developed the coding scheme jointly. The categories were developed from theory and directly from the answers given in response to the probe (Naef and Schupp 2009). After coding a subsample of responses, the categories were refined before all responses were coded. The coding scheme distinguishes between substantive responses and responses containing no substantively codable content. Non-substantive responses include complete nonresponse, no useful answers, refusals, and responses insufficient for substantive coding. Generally, open-ended probes suffer from comparably high rates of non-substantive responses (Meitinger and Behr 2016; Neuert et al. 2021). In our study, the share of non-substantive responses was 17.6%, corresponding to the level of non-substantive responses in other studies using web probing (Lenzner and Neuert 2017; Meitinger and Behr 2016).

The coding scheme contained 17 substantive codes at the most detailed level, which were condensed into seven core categories (social trust, distrust, selfishness, helpfulness, exploitation, fairness, and generalizability) and ten peripheral categories (see Online Resource 1 for the coding scheme). The core category social trust includes responses referring to general interpersonal trust, particularized trust, defined as trust in people known, or a general belief in the benevolence of human nature (moralistic trust). Distrust consists primarily of answers expressing a lack of trust in people. Occasionally, distrust in political institutions, officials, or the political system as a whole was also mentioned. The categories selfishness and helpfulness can be seen as opposites and contain selfishness/lack of helpfulness and perceived helpfulness, whereby helpfulness can be further subdivided into help towards known and unknown people (particularized helpfulness). The second pair of opposites is labeled exploitation versus fairness. Exploitation was coded when a response referred to behaviour such as taking unfair advantage or unfair interactions, while fairness referred to the opposite, e.g., a belief in people generally acting fairly. The category generalizability was assigned when a respondent answered that the statement given was too general or that there are people and there are people. Peripheral codes based on the responses to the category-selection probe included caution (being careful when dealing with other people), reciprocity (expectation and experience that favors will be returned), confidence in one's knowledge of human nature, external factors (context or specific situations), and own life experiences mentioned without detailed information. A definition of all categories with several example responses can be found in Online Resource 1.

3.3.5 Close-ended probing questions

Below the open-ended question, two close-ended probing questions followed. Firstly, respondents were asked a difficulty probe, which read: “How easy or difficult was it to decide on your response ‘[chosen response]’?” (“Wie leicht oder schwer ist Ihnen die Entscheidung für Ihre Antwort ‚[chosen response]‘ gefallen?”). [Chosen response] was replaced by the response option respondents had selected for the respective item. The response options were “very easy” (“sehr leicht”), “rather easy” (“eher leicht”), “rather difficult” (“eher schwer”), and “very difficult” (“sehr schwer”).

Secondly, respondents were asked the probe question “How good or bad did the statement fit with the topics of the other statements on the previous page?” (“Wie gut oder schlecht hat die Aussage zum Thema der anderen Aussagen auf der letzten Seite gepasst?”). The response options were “very well” (“sehr gut”), “rather well” (“eher gut”), “rather bad” (“eher schlecht”), and “very bad” (“sehr schlecht”).

3.4 Software

All data transformations and quantitative analyses were conducted in R (R Core Team 2021) using RStudio (RStudio Team 2022). All original datasets were in SPSS format and read into R using the haven package (Wickham 2017). The tidyverse package collection (Wickham 2017) was used for data transformation and visualization. Confirmatory factor analyses were conducted using the lavaan package (Rosseel 2012). The exploratory factor analysis was conducted using the psych package (Revelle 2022). Gini coefficients were calculated using the ineq package (Zeileis and Kleiber 2014).

4 Results

In the results section, we will approach the conceptual comparability of the eleven items first quantitatively and then qualitatively. We test our initial assumption that all eleven items measure the same concept with a single-factor CFA model. We then perform an EFA to explore alternative factor structures. Both analyses use the exploratory sample. Next, we confirm the factor structure suggested by the EFA in another CFA, this time with two factors. This analysis uses the confirmatory sample. Then, we examine what this factor solution does not capture by exploring correlated residuals. Then, we move on to the probing questions, starting with the two close-ended probing questions. Lastly, we lay out what we can learn from the open-ended probing question using a qualitative approach.

4.1 Single-factor CFA

If our assumption is correct that all eleven question wordings capture a single concept, then we should be able to find a tenable model that has only one factor on which all items load. Specifically, we first fit a congeneric CFA model with one factor and uncorrelated residuals. To make the model identifiable, we constrained the factor variance to 1. We used a robust maximum likelihood estimator. Since these analyses represent a first, tentative test, we performed them in the exploratory sample, which encompasses a randomly selected half of our respondents.

Model fit indicators suggest that a congeneric model with only one factor is not tenable: χ2 (44, N = 1085) = 1614.62, p < 0.001, RMSEA = 0.181, CFI = 0.675, SRMR = 0.137. For comparison, Hu and Bentler (1999) recommended CFI > 0.96, RMSEA ≤ 0.06, and SRMR ≤ 0.08 as criteria for a good model fit. Please note that we use such criteria as a heuristic. Formally, the criteria were designed for assessing the fit of psychometric multi-item scales. If we look into the factor loadings, we find that the bad model fit is unsurprising. We find several low factor loadings, meaning that the responses to those items do not predict the overall factor score of the respondent well. Specifically, the first four items, all positively worded, do not seem to fit the factor we extracted well. The loadings (\(\lambda \)) of the first four items are rather low, with values within a range of \(.29\le \left|\lambda \right|\le .49\). Table 2 gives all factor loadings in direct comparison to the loadings from the other models that we will describe in the following sections.
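The degrees of freedom and the RMSEA reported above can be checked by hand. The following Python sketch is only an illustration and not part of the original R analysis: the function names are hypothetical, and it uses the conventional RMSEA point estimate without the scaling corrections that a robust estimator may apply, so small deviations from software output are possible.

```python
import math

def cfa_degrees_of_freedom(n_items, n_free_parameters):
    # Number of unique variances and covariances among the observed items.
    n_moments = n_items * (n_items + 1) // 2
    return n_moments - n_free_parameters

def rmsea(chi_square, df, n_obs):
    # Conventional point estimate (assumption: no robust scaling correction).
    return math.sqrt(max(chi_square - df, 0.0) / (df * (n_obs - 1)))

# One factor with its variance fixed to 1:
# 11 free loadings + 11 free residual variances = 22 parameters.
df_one_factor = cfa_degrees_of_freedom(n_items=11, n_free_parameters=22)
rmsea_one_factor = rmsea(chi_square=1614.62, df=df_one_factor, n_obs=1085)
print(df_one_factor, round(rmsea_one_factor, 3))
```

With the values from the text, this yields 44 degrees of freedom and an RMSEA of about 0.181, in line with the reported figures.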

4.2 EFA

Since we cannot uphold our assumption of a single factor, we apply an EFA to find alternative factor solutions. First, we perform a parallel analysis to get an idea of how many factors are needed to adequately capture the eleven items. Figure 1 gives an overview of several commonly used decision strategies. The Guttman rule (or Kaiser rule) suggests extracting all factors with eigenvalues greater than 1 (Warne and Larsen 2014). In Fig. 1, we would thus choose a two-factor solution, because two blue triangles lie above the horizontal black line at eigenvalue 1. Next, we look at the shape of the blue line to perform Cattell’s scree plot analysis. EFAs characteristically produce plots like the one in Fig. 1, where some factors form a steep downward slope until they meet a gently sloped set of factors that are likely random artifacts. Again, we are inclined to extract two factors. Lastly, we can compare the blue line to the red lines, which represent the parallel analysis. In essence, the red lines show which factor eigenvalues to expect in randomly simulated data (Revelle 2022). Formally, we might consider extracting three factors based on the parallel analysis (Warne and Larsen 2014). However, the third factor is very close to a randomly simulated solution and would offer very little explained variance. We therefore chose to proceed with two factors.

We thus ran an EFA with two extracted factors and used the oblique direct oblimin rotation method. In plain English, we calculated a solution for the two factors which retain the most variance of all items. By specifying an oblique rotation method, we allow the two factors to correlate. This is a plausible assumption given that all items address similar topics. We skip any considerations of overall model fit, because we will formally examine the factor solution using a CFA in the next section. Instead, we look towards the factor loadings. Again, the full set of loadings is presented in Table 2. However, the pattern of loadings is rather clear. We apply the recommendation of Howard (2016) to assess if items load cleanly on only one factor: Items should load on their primary factor with |λ| > 0.40, they should load onto alternative factors with |λ| < 0.30, and the difference of loadings between primary and alternative factors should be |Δλ| > 0.20. Based on this, the positively worded first four items load on one factor, and the seven negatively worded items from item 5 onward load onto the other factor.
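The three loading criteria attributed to Howard (2016) above can be written as a small check. This Python sketch is illustrative only: the function name and the example loadings are hypothetical and are not taken from Table 2.

```python
def loads_cleanly(loadings, primary_min=0.40, cross_max=0.30, gap_min=0.20):
    """Return True if one item's loadings satisfy all three criteria."""
    absolute = sorted((abs(value) for value in loadings), reverse=True)
    primary = absolute[0]
    strongest_alternative = max(absolute[1:], default=0.0)
    return (
        primary > primary_min                            # |λ| > 0.40 on the primary factor
        and strongest_alternative < cross_max            # |λ| < 0.30 on alternative factors
        and primary - strongest_alternative > gap_min    # |Δλ| > 0.20
    )

# Hypothetical loadings on (factor 1, factor 2), made up for illustration.
example_items = {
    "clean item": (0.72, -0.05),
    "cross-loading item": (0.55, 0.41),
    "weak item": (0.35, 0.02),
}
for label, loadings in example_items.items():
    print(label, loads_cleanly(loadings))
```

Only the first hypothetical item passes. The second fails the cross-loading criterion and the third fails the primary loading criterion.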

4.3 Two-factor CFA

Next, we validate this new factor structure in a CFA conducted in the confirmatory sample. The primary difference to the EFA model we just saw is that items are now forced to load onto only one factor. As Table 2 illustrates, we now constrain items to load onto the factor on which they loaded most strongly in the EFA. Specifically, we ran another congeneric CFA model, identical to the one earlier except this time with two factors. Items 1 through 4 now only load on the first factor, which we call “trust”, and items 5 through 11 now only load on a second factor, which we call “distrust”. Please note that factor structures do not directly imply substantive meaning. However, the names help us remember that one factor captures the items with a positive, trust-related wording and the other captures the items with a negative, distrust-related wording.

The model fit greatly improved, even though it does not quite reach acceptable levels for a psychometric scale: χ2 (43, N = 1086) = 409.82, p < 0.001, RMSEA = 0.087, CFI = 0.930, SRMR = 0.059. To reiterate: Hu and Bentler (1999) recommend CFI > 0.96, RMSEA ≤ 0.06, and SRMR ≤ 0.08 as indices of a good model fit. Still, we can be rather certain now that we should distinguish between question wordings that focus on trust and question wordings that focus on distrust. Looking towards the loadings, we see acceptable loadings for the trust factor (0.61 ≤|λ|≤ 0.81) and equally acceptable loadings for the distrust factor (0.60 ≤|λ|≤ 0.81). The trust and distrust factors are also negatively correlated with r = − 0.42.

While we gained insights into which wordings not to combine in ex-post harmonization, we still cannot be certain if we can combine data measured with the wordings within each factor. Thus, we take a look at the residuals, that is, the variance of the items not explained by their respective factor. As a basis, we use the two-factor CFA model from the last section.

The first approach might be to select items with low residuals. Since high factor loadings imply lower residuals, we could thus decide only to select items with higher loadings for harmonization. In our case, we might select item 1 (λ = 0.81) and item 4 (λ = 0.85) amongst the trust items as well as item 5 (λ = 0.81) and item 8 (λ = 0.78) amongst the distrust items. We could also decide only to use items with similar loadings. After all, this would make it more likely that those items measure the concept equally reliably. However, all these approaches cannot address the main issue that residuals encompass both random error as well as specific variance. In other words, the items may covary with concepts outside those captured by our two factors.

However, factor analyses provide some ways of addressing this issue. Specifically, we look into whether the residuals of specific items were correlated with the residuals of other items. If so, this implies that two items share some common covariance aside from our factor solution. If we select residual correlations with an absolute value of |r| > 0.1 (Kline 2016), we find eight item pairs, listed in Table 3. Two are within a factor, whereas the remaining six are residual correlations across factors. We will discuss each in turn. However, please note that all these correlations are markedly weaker than the factor loadings of each item. In other words, we should not overemphasize these residual correlations.

First, the correlated residuals within factors. Those imply that pairs of items share some systematic correlation that is not captured by the factor. Still, such correlated residuals do not offer substantive explanations. However, we can use such tools to consider if different item pairs share some additional meaning that is not common across all other items for that concept. The residual correlation between item 2 (“Most people would try to be fair if they got the chance”) and item 3 (“People mostly try to be helpful”) may well be because both hint at human prosociality. These are the two items where the wording refers to intention, not behavior. In contrast, the other two items in the trust factor speak of trust in general. However, such ad-hoc explanations for residual correlations are often not as clear. Item 6 (“Most people would try to take advantage if they got the chance.”) and item 10 (“Nowadays you cannot trust anybody anymore.”), for example, do not share a clear alternative meaning. They are also not dissimilar to other items loading on the distrust factor.

Second, there are many residual correlations across the two factors. Notably, almost all involve items 2 and 3, which already exhibited correlated residuals within their factor. It is also interesting to note that the residual correlations across the factors that involve items 2 and 3 are all positive, whereas the factors themselves are correlated negatively. Items 4 and 10, meanwhile, have negatively correlated residuals in line with the factor correlation.
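The screening of residual correlations described in this section amounts to a simple filter on |r| followed by a within-factor or across-factor label. The Python sketch below is a hedged illustration with hypothetical names. Only the two within-factor values (r = 0.11) are reported in the paper; all other values are made-up placeholders and do not reproduce Table 3.

```python
TRUST_ITEMS = {1, 2, 3, 4}  # all other items (5 to 11) belong to the distrust factor

def flag_residual_correlations(residual_correlations, threshold=0.1):
    """Keep item pairs with |r| above the threshold and label their location."""
    flagged = []
    for (item_a, item_b), r in sorted(residual_correlations.items()):
        if abs(r) <= threshold:
            continue
        same_factor = (item_a in TRUST_ITEMS) == (item_b in TRUST_ITEMS)
        flagged.append((item_a, item_b, r, "within" if same_factor else "across"))
    return flagged

residuals = {
    (2, 3): 0.11,    # reported in the paper
    (6, 10): 0.11,   # reported in the paper
    (2, 7): 0.12,    # hypothetical placeholder
    (4, 10): -0.13,  # hypothetical placeholder
    (1, 5): 0.04,    # hypothetical placeholder, below the threshold
}
for row in flag_residual_correlations(residuals):
    print(row)
```

Using the absolute value matters here, because residual correlations across factors can be negative as well as positive.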

4.4 Close-ended probing questions

Let us now turn to the close-ended probing questions and the insights they offer. Table 4 shows the perceived difficulty of deciding on a response and the perceived fit of the respective item with the topic of the other items. Both response scales ranged from 1 to 4, where a higher value meant higher difficulty or worse topic fit, respectively.

At first glance, there were no items that stand out as particularly easy or difficult nor as particularly well or ill-fitting. On average, respondents tended towards rather easy and rather well-fitting. If we differentiate by trust and distrust items, we find that trust items were rated as more difficult (M = 2.01; SD = 0.69) than distrust items (M = 1.87, SD = 0.69), t(1686.98) = 4.65, p ≤ 0.001. However, this mean difference only amounts to a small Cohen’s d = 0.21. We also find that trust items were rated as less well fitting with the other items (M = 2.00, SD = 0.60) than distrust items (M = 1.94, SD = 0.58), t(1686.98) = 4.65, p ≤ 0.001. However, this amounts to an even smaller Cohen’s d = 0.11. This latter finding is also unsurprising, given that there were four trust items and seven distrust items.
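The effect sizes above can be approximated from the reported means and standard deviations. This Python sketch is an illustration under stated assumptions, not the authors' computation: the function name is hypothetical, and the pooled standard deviation weights both groups equally because the group sizes are not given in this section.

```python
import math

def cohens_d(mean_a, sd_a, mean_b, sd_b):
    # Pooled SD as the root mean of both variances (assumes equal weighting).
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd

# Trust items versus distrust items, using the rounded values from the text.
d_difficulty = cohens_d(2.01, 0.69, 1.87, 0.69)
d_topic_fit = cohens_d(2.00, 0.60, 1.94, 0.58)
print(round(d_difficulty, 2), round(d_topic_fit, 2))
```

The results land close to the reported effect sizes. Small deviations are to be expected, since the inputs are rounded and the actual group sizes are ignored.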

We also asked ourselves if subjective perceptions of response difficulty are reflected in the factor loadings of the items. The reasoning is that response difficulties might increase random error, which would, in turn, decrease factor loadings. However, we find no significant correlation between the absolute factor loadings of each item and respondents’ perceived response difficulty, r = − 0.02, p = 0.308.

4.5 Open-ended probing questions

Next, we analyze the open-ended probing questions. Please keep in mind that the findings can be seen from two perspectives. First, they can be interpreted on their own by what they tell us about which items to harmonize and which to keep separate. Second, they can be interpreted in contrast to the factor analytic findings. To start, let us again assume that all items reflected a single concept, which means we would expect similar associations across question wordings. The qualitative answers the respondents gave reveal that they have various associations and themes in mind, which clearly differ between the items (see Fig. 2).

Fig. 2 Category frequencies of the open-ended probing question. Note. Shows all items' relative frequencies (in percent) of category codes. The plot is split into items with positive and negative wording. The highest frequency (or frequencies in case of a tie) is marked with an asterisk. The leftmost column, \(\overline{f }\), represents the average frequency of that category across all items.

Overall, the most frequent category on average was social trust (\(\overline{f }=25.5\%\)). The second most frequent category was selfishness (\(\overline{f }=17.2\%\)), with frequent mentions (> 10%) in items 3, 8, 9, and 11. The third most frequent category was generalizability (\(\overline{f }=11.8\%\)). This category merits special notice, since it represents respondents feeling an item statement was too general. Items 3, 7, and 11 stood out as very seldom (< 10%) perceived as too general. The antonym of trust, distrust, was the fourth most frequently coded category (\(\overline{f }=24.8\%\)).

We also see that the categories do not align with the factor analytic findings. Only half the positively worded items have social trust as the most frequent category. And for negatively worded items, four out of seven items nonetheless have social trust as the most frequent category, while only one item has distrust as the most frequent category. This shows that the open-ended probe reveals novel information about the harmonization candidate items.

The open-ended probe points to several items that might be contaminated by other concepts besides social trust or distrust. The clearest examples are item 3, with 47.3% of respondents linking it to helpfulness, and item 9, with 67.3% of respondents linking it to selfishness. Given the item wording, both interpretations are quite plausible (cf. Table 1). There are also other instances where a category beyond social trust or distrust is the most frequently reported for specific items: fairness for item 2 (23.0%) as well as selfishness (21.9%) and exploitation (21.9%) for item 8.

We can also interpret the findings regarding how (un-)ambiguous respondents’ thoughts were on each item. To this end, we can look at how equally or unequally responses are distributed across the categories by looking at the Gini index across the frequencies (Giorgi and Gigliarano 2017). The Gini index is bounded between zero and 1, where \(G=0\) implies a perfectly even distribution and \(G=1\) a completely uneven distribution (i.e., all respondents falling into only one category). Table 5 lists these \(G\) values for all items. Items are sorted from left to right in ascending order of inequality. The column \(\overline{f }\) represents the Gini index of the average category frequencies. It becomes apparent that some items resulted in more ambiguous interpretations, with responses more evenly spread across categories, such as items 6, 2, and 8. On the other side of the spectrum, we can identify items where respondents were consistent in their explanations, such as items 7, 3, and 9.
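To make the index concrete, the following Python sketch computes a Gini index over category frequencies. It is an illustration only: the paper used the ineq package in R, the function name here is hypothetical, and the example frequencies are invented. One assumption deserves a loud label: without a finite-sample correction, the index for k categories cannot exceed (k − 1)/k, so the upper bound of 1 is only reached with the correction switched on. The text does not state which variant was used.

```python
def gini(frequencies, finite_sample_correction=False):
    """Gini index of non-negative category frequencies."""
    values = sorted(frequencies)
    n = len(values)
    total = sum(values)
    if n < 2 or total <= 0:
        raise ValueError("need at least two categories and a positive total")
    # Rank-weighted sum over the ascending frequencies.
    weighted = sum((2 * rank - n - 1) * value
                   for rank, value in enumerate(values, start=1))
    denominator = (n - 1 if finite_sample_correction else n) * total
    return weighted / denominator

# Invented frequencies (in percent) across four categories.
even = [25, 25, 25, 25]      # every category mentioned equally often
one_sided = [0, 0, 0, 100]   # all responses fall into one category
print(gini(even), gini(one_sided), gini(one_sided, finite_sample_correction=True))
```

An evenly spread item yields 0, whereas a fully one-sided item yields 0.75 without and 1 with the correction in this four-category example.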

A general pattern also was that respondents’ associations and the wording of the respective item often coincided. However, the same term may carry different nuances. To illustrate, we take a closer look at social trust. Previous research shows that trust measures differ depending on whether respondents interpret the item as meaning strangers or known people (Naef and Schupp 2009). Comparing the items shows that across all codes summarized as trust, particularized trust was most frequently assigned in item 7 (83.5%) and item 10 (73.9%). Table 6 shows trust category frequencies for all items.

5 Discussion

5.1 Summary

In this paper, we tackled a common and crucial challenge in ex-post harmonization: Deciding which source variables were measured with enough conceptual comparability so that we can justify pooling them to form harmonized variables. To that end, we explored using factor analytic methods to establish conceptual comparability. In parallel, we also applied close-ended and open-ended web-probing questions, which we used to contrast and complement the factor analytic findings. Our empirical example centered around eleven items with wordings related to social trust taken from several survey programs. The factor analytic results clearly established that we should be wary of combining items with positive trust wording and items with negative distrust wording. However, a look at the residuals and the qualitative analysis of the web-probing responses makes us cautious about combining items within these two factors. In the following, we will discuss these findings and their implications. We begin by drawing conclusions about our concrete empirical example. Then we will discuss limitations and draw lessons useful for ex-post harmonization practitioners. Lastly, we will anchor our interpretation conundrum in a larger epistemological context.

5.2 Coming to a concrete decision

In this section, we apply our empirical findings to make decisions regarding the conceptual comparability of the concrete items for social trust. However, there are many researcher degrees of freedom. Depending on the needs of their ex-post harmonization project and the conventions of their respective discipline, different researchers might make different decisions. We will address this in Sect. 5.3.

Turning to our empirical example, we have made substantial headway towards assessing conceptual comparability. The results of our factor analyses strongly suggest that data measured with positive social trust wordings and data measured with negative social distrust wordings should not be pooled into a single harmonized variable. While all eleven items share a common covariance, there seems to be a systematic difference between positively and negatively worded items. Combining variables across these differences means contaminating our harmonized variable with different specific item variances. Our hopeful assumption that all wordings reflect the same concept did not hold.

However, while the factor analysis helped us uncover what not to combine, it did not clearly recommend what we can combine. Factor analyses infer latent concepts that influence responses from the intercorrelations of items. As such, they are somewhat blind to respondent interpretations unique to an item. We can establish the portion of item response variance that is not linked to a factor, but that residual comprises the random measurement error and specific variance (Price 2017). To some degree, we can explore substantive errors related across items by looking at correlated residuals. Within each factor, we only find small residual correlations: r = 0.11 between items 2 and 3 and r = 0.11 between items 6 and 10. Based on the factor analyses alone, we might say these correlated residuals are barely higher than the r > 0.1 threshold (Kline 2016) and small enough to ignore. Alternatively, we might pool all items within a factor except 2, 3, 6, and 10.

If we look at the qualitative findings from the open-ended probing questions, we find that most items do indeed seem to evoke associations with social trust or distrust. At the same time, we do not find a clear split between negatively and positively worded items. For example, most negatively worded items still evoke more trust than distrust-related associations. This shows that complementing the factor analytic approach with an open-ended probe provided additional insights. This deviation between factor analysis and qualitative analysis also reminds us that quantitative factor patterns have no inherent substantive interpretation. Based on the factor analysis alone, we might have been tempted to explain both factors substantively as items capturing social trust and social distrust. However, it is equally possible that the two factors arise from a methodological effect. Respondents often treat positively and negatively worded items differently, so much so that a second method factor arises (Weijters et al. 2013).

Aside from the recommendation to only pool items within each factor, we have also gained some guidance on which items we might want to examine further. We found, for example, that items 2 and 3, as well as items 6 and 10 had nontrivial residual correlations, implying that they capture specific variance not linked to their respective factor. The qualitative analysis, meanwhile, implied that items 3 and 9 likely capture helpfulness and selfishness, respectively. Taken to its extreme, we might choose only to harmonize and combine items 1 and 4 as measures for social trust. However, such a decision must always be weighed against the data sources we discard alongside each discarded item. If social trust is a central variable, discarding one source item might mean excluding decades of survey data with thousands of observations per wave from our harmonization project.

5.3 Alternative decisions

Given the complex trade-offs, harmonization practitioners might come to different decisions in the case that we have presented.

(1) There is the possibility to overrule the findings of a factor analysis for theoretical reasons. Trust and distrust were, and often still are, treated as opposites on the same continuum (Van De Walle and Six 2020) and measured with a general trust question (Justwan et al. 2018). Our one-factor CFA also reveals that all items, trust and distrust, share at least some common variance. However, if we combine trust and distrust items into a single, harmonized variable, we should carefully consider if our research questions are sensitive to the difference between trust and distrust or not. For example, if we want to predict the level of vigilance that citizens display regarding their country’s political institutions, we might find that trust items predict vigilance less strongly than distrust items. While distrust certainly induces constant vigilance, we might well find that not only respondents with low trust levels, but also those with high trust levels exhibit vigilance. As Lennard (2008; p. 326) puts it: “vigilance does not require an attitude of distrust towards our legislators and the vigilance we display in constraining our legislators is not inconsistent with trusting them”. However, for other concepts, trust and distrust items may work equally well.

(2) We might reject the two-factor structure and combine trust and distrust if we believe the effect is merely a methodological artifact. Reverse-keyed (or negative) items sometimes exhibit common variance solely due to respondents answering reverse-keyed items slightly differently (Weijters et al. 2013). This issue would still pose a problem for comparability because it means some items are contaminated by an additional source of (method) variance and others are not. However, this is less of an issue than different items representing genuinely different constructs if the method's influence is not tied to the substantive variables we are interested in.

(3) We might include all items but add a methodological control variable to all subsequent analyses with the harmonized dataset (Slomczynski and Tomescu-Dubrow 2018). This variable would be a nominal, dichotomous variable denoting whether an observation was measured with a trust or distrust item. Suppose we interact this variable with other substantive variables in a regression or multi-level model, for example. In that case, we might get some idea if the difference between trust and distrust plays a crucial role in our research. However, this is only helpful if trust and distrust items are not systematically tied to specific research populations (Singh 2021). For example, if all surveys from one country use trust wordings and all surveys from another country use distrust wordings, then the control variable would be contaminated by true population differences between the two countries.
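As a rough illustration of option (3): in an ordinary least squares regression in which a dichotomous wording indicator is fully interacted with one predictor, the interaction coefficient equals the difference between the slopes estimated separately for each wording group. The Python sketch below uses this equivalence. All names and data are hypothetical toy values, not results from the paper, and a real harmonization project would more likely fit a multi-level model in dedicated software.

```python
def ols_slope(x_values, y_values):
    # Slope of a simple least squares regression of y on x.
    n = len(x_values)
    mean_x = sum(x_values) / n
    mean_y = sum(y_values) / n
    covariance = sum((x - mean_x) * (y - mean_y)
                     for x, y in zip(x_values, y_values))
    variance = sum((x - mean_x) ** 2 for x in x_values)
    return covariance / variance

def wording_interaction(rows):
    """rows: (harmonized score, outcome, wording), wording is 'trust' or 'distrust'."""
    slopes = {}
    for wording in ("trust", "distrust"):
        xs = [x for x, _, w in rows if w == wording]
        ys = [y for _, y, w in rows if w == wording]
        slopes[wording] = ols_slope(xs, ys)
    # Equals the interaction coefficient of a fully interacted OLS model.
    return slopes["distrust"] - slopes["trust"], slopes

# Toy data: the outcome rises by 0.5 per unit for trust wordings
# and by 0.8 per unit for distrust wordings.
toy_rows = [(x, 1.0 + 0.5 * x, "trust") for x in range(1, 6)]
toy_rows += [(x, 2.0 + 0.8 * x, "distrust") for x in range(1, 6)]
interaction, group_slopes = wording_interaction(toy_rows)
print(round(interaction, 3), group_slopes)
```

An interaction close to zero would suggest that the wording type does not change the relationship of interest. The caveat about wordings being confounded with populations applies to this sketch as well.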

(4) Researchers might also choose to heed the factorial structure (i.e., distinguishing between trust and distrust items) but to tolerate residual correlations and qualitative contaminations (e.g., helpfulness and selfishness). After all, the items seem adequate indicators for their respective factor. While the contamination of items by item uniqueness is always possible, it can be argued that this is an issue we routinely accept in the social sciences when we field single-item instruments in surveys.

5.4 Limitations

Before we discuss more general implications, we point out some limitations of our paper. First, we made the deliberate choice to collect data in a non-probability online access panel. Harmonization practitioners often have few resources to conduct additional method experiments, and thus can seldom afford probabilistic samples to explore harmonization issues systematically. We argue that much can be learned about comparability in non-probability samples, but we also acknowledge that the (in-)comparability of question wordings is population dependent. Factor analytic patterns that hold in one (non-probability) population may not always hold the same way in our target population. Also, with online access panels, the analyses are based on people who can be reached online and have sufficient literacy skills, as they have signed up for taking part in surveys. Lastly, a non-probability sample also means that our factor analytic coefficients (e.g., the loadings in our EFA and CFA models) are not formally generalizable to the general population. However, previous research on using non-probability online samples to validate psychometric scales makes us optimistic that our findings would replicate in principle, if not in exact proportions (e.g., Meyerson and Tryon 2003).

Second, we implicitly assume that respondents treat items when seen together the same as when presented separately. After all, our design is meant to allow inferences about source surveys where respondents only saw one item in isolation. However, it is reasonable to assume that respondents may contrast the wording of different items when responding to a multi-item scale. Still, we argue that the assumption of independent responses is very established in psychometric practice. For example, it is common practice to shorten scales or to only present a selection of items to respondents (Price 2017). Furthermore, commonly used test theory models assume independent responses to items even in multi-item scales (Raykov and Marcoulides 2011). We also randomized the order in which the items were presented, which should mitigate consistency or contrast effects between specific items.

Third, our open-ended web probing technique was rather limited. We only asked a single open-ended question, and each respondent was only asked about a single item. This obviously falls short of the insights a full cognitive pretest might provide. The role of the open-ended probe in our study was to provide complementary insights in a cost-effective way. Thus, our study should not be mistaken for a comparison of quantitative and qualitative approaches.

Fourth, some of the item wordings in our study originate from the same item, where the positive and negative wording formed the ends of a bipolar continuum. The way our study was designed, we cannot really say if those are comparable with solely positively or solely negatively worded items. In fact, our results seem to suggest that survey instruments which use a bipolar distrust-trust scheme may be double-barreled items with the associated drawbacks, even when used in a single-source setting.

Fifth, we acknowledge that the insights gained from the qualitative approach target the German context. The general meaning of trust might differ across countries or time, and respondents from other countries or cultural groups might associate culture-specific interpretations with this concept. Besides context-specific associations, differences in understanding might also indicate issues with the items' translation, which might threaten comparability (referred to as item bias; van de Vijver and Leung 2021). However, applying multi-item analysis techniques, such as CFAs, already paves the way for statistical tests of international comparability via formal measurement invariance testing (e.g., MGCFAs; Leitgöb et al. 2022). Additionally, web probing studies are generally well-suited to assess comparability in cross-cultural contexts (Behr et al. 2020). However, there are then some specific aspects to consider. For instance, code scheme development in cross-cultural surveys should take answers from all countries or cultural groups into account to prevent biased coding schemes (Behr et al. 2020). Given the labor-intensive analyses of probe answers, the application is also restricted to a selection of countries or contexts.

Sixth, we would like to point out that assessing congenericity using factor analyses is only one possible angle to assess conceptual comparability. While it is common practice in psychometry to assess the validity of a test based on its internal (covariate) structure with CFAs and the like, it is equally common to assess the construct and criterion validity of a test by correlating it with other variables (Price 2017). And indeed, we can extend this idea of construct validity towards assessing comparability by correlating two different measurement instruments with the same set of validation concepts (Westen and Rosenthal). However, such approaches are not alternatives to what we have presented in this paper. They are instead complementary approaches and are usually used together in psychometry (Price 2017). In fact, validity (and thus, to our mind, also conceptual comparability) is routinely inferred by integrating insights from different validation techniques into a nuanced judgment (Price 2017).

5.5 General implications

We hope that ex-post harmonization practitioners can derive some inspiration for their projects from our study. With a simple, time- and cost-efficient research design, we gained valuable insight into the conceptual comparability of a whole set of candidate question wordings at once. Applying such an approach may not be feasible for every concept of interest in a harmonization project. Still, it can certainly illuminate selected concepts that are central to a project's research goals. It may also help researchers reach a decision in cases where they are uncertain what to pool and what to discard. We feel that both components, presenting the items together in a battery for factor analytic methods and asking open-ended web probes, have added unique and helpful insights into the comparability of these question wordings.

We do acknowledge that our design still left some questions open. The problem of potentially contaminated items persists, that is, items that may capture substantive concepts other than the one we are interested in. In practice, we would thus suggest complementing the design in this paper with a construct and criterion validation approach. This means that we should correlate our items with at least some measures that represent related concepts (i.e., convergent validity), potentially contaminating concepts we do not want to measure (i.e., divergent validity), and lastly concepts that are important outcomes in the context of our subject domain (i.e., criterion validity). We can field measures for validation concepts in the same initial study when the literature gives clear guidance on which concepts to include. Alternatively, we can use the insights gained from web probing to select some candidates for contaminating concepts and then field a second, much shorter study where we have already excluded many items that we deem incomparable. As a third option, we can look for such measures of potential contaminants in our source survey datasets. This is not as clean as fielding a methodological experiment, and it depends on serendipity whether the right concepts were asked in several sources. However, it might save cost and time.
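To make the suggested validation step more tangible, the following is a minimal sketch in Python of how item wordings could be correlated with convergent, divergent, and criterion measures. It is not part of our analyses. All data are simulated, and the variable names (`item_clean`, `item_contaminated`, `convergent`, `divergent`, `criterion`) as well as the helper `validation_profile` are hypothetical and chosen purely for illustration.

```python
import numpy as np


def validation_profile(items, validators):
    """Return Pearson correlations of each item (rows) with each
    validation measure (columns)."""
    items = np.asarray(items, dtype=float)
    validators = np.asarray(validators, dtype=float)
    n_items = items.shape[1]
    # Correlate all columns at once, then keep the item-by-validator block.
    full = np.corrcoef(np.hstack([items, validators]), rowvar=False)
    return full[:n_items, n_items:]


# Simulated respondents (assumption: two independent latent concepts).
rng = np.random.default_rng(42)
n = 2000
trust = rng.normal(size=n)        # concept of interest
contaminant = rng.normal(size=n)  # concept we do not want to measure

# Two hypothetical item wordings: one clean, one partly contaminated.
item_clean = trust + rng.normal(scale=0.8, size=n)
item_contaminated = 0.6 * trust + 0.6 * contaminant + rng.normal(scale=0.8, size=n)

# Hypothetical validation measures.
convergent = trust + rng.normal(size=n)         # related concept
divergent = contaminant + rng.normal(size=n)    # potential contaminant
criterion = 0.5 * trust + rng.normal(size=n)    # substantive outcome

items = np.column_stack([item_clean, item_contaminated])
validators = np.column_stack([convergent, divergent, criterion])
profile = validation_profile(items, validators)

for name, row in zip(["item_clean", "item_contaminated"], profile):
    print(name, np.round(row, 2))
```

In such a table, two wordings with similar correlation profiles across all validation measures would be better candidates for pooling than wordings where one correlates noticeably with the contaminating concept. How large a difference is tolerable remains a substantive judgment.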

Lastly, we see the need for further research on the quantitative consequences of different comparability violations on the research we conduct using integrated datasets. How comparable is comparable enough? And if we need to accept trade-offs between different comparability issues, how can we make optimal decisions? In the context of conceptual comparability, we saw a recurring theme in our study. It is easier to find evidence for potential comparability problems than it is to establish comparability. At the same time, we do science a disservice if we apply too strict a standard. The problem is similar to the type I and type II error issues in statistical hypothesis testing. Yes, we incur a scientific cost in the form of bias if we pool data that are not perfectly comparable. But we also incur a scientific (opportunity) cost if we discard data sources that are not perfectly comparable but comparable enough for our research goals. What is comparable enough strongly depends on the research question addressed. Methodological research is needed on suitable approaches for assessing and improving comparability, on the potential consequences of comparability violations, and on strategies for optimizing such methodological trade-offs. This would allow informed decisions on how to weigh competing evidence from different approaches. Ideally, research in this field would lead to robust guidelines or even standards for ex-post harmonization practitioners in the social sciences.
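One way to illustrate this trade-off, offered here only as a stylized sketch and not as a result of our study, is to compare the mean squared error of an estimated mean with and without pooling. The sketch assumes a comparable source A with `n_a` respondents, a second source B with `n_b` respondents whose measurements are shifted by a constant `delta`, equal error variance `sigma**2` in both sources, and a pooled estimate that is the simple mean over all respondents. All function and parameter names are hypothetical.

```python
def mse_single(sigma, n_a):
    """MSE of the mean when only the comparable source A is used
    (unbiased, so the MSE equals the sampling variance)."""
    return sigma**2 / n_a


def mse_pooled(sigma, n_a, n_b, delta):
    """MSE of the simple pooled mean when source B is shifted by delta."""
    n = n_a + n_b
    bias = delta * n_b / n    # the shift is diluted by B's share of cases
    variance = sigma**2 / n   # more cases reduce sampling variance
    return bias**2 + variance


def pooling_helps(sigma, n_a, n_b, delta):
    """True if pooling yields a lower MSE than using source A alone."""
    return mse_pooled(sigma, n_a, n_b, delta) < mse_single(sigma, n_a)


# A small shift is outweighed by the gain in precision; a large one is not.
print(pooling_helps(sigma=1.0, n_a=500, n_b=500, delta=0.05))
print(pooling_helps(sigma=1.0, n_a=500, n_b=500, delta=0.5))
```

Under these simplifying assumptions, discarding source B is the costlier choice whenever the shift is small relative to the sampling error. Real harmonization decisions involve more than a constant shift, so the sketch only conveys the logic of weighing bias against lost information.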

Finally, it is worth pointing out that sociometric measurement instruments are not usually designed from a psychometric perspective, and the views on sociological concepts versus psychometric constructs and their associated measurement assumptions may differ. Ex-post harmonization practitioners must make careful, informed decisions about how to approach comparability, including being mindful of the implicit decisions inherent in certain methods.

References

Beatty, P.C., Willis, G.B.: Research synthesis: the practice of cognitive interviewing. Public Opin. Q. 71, 287–311 (2007). https://doi.org/10.1093/poq/nfm006

Behr, D., Braun, M., Kaczmirek, L., Bandilla, W.: Testing the validity of gender ideology items by implementing probing questions in web surveys. Field Methods 25, 124–141 (2013). https://doi.org/10.1177/1525822X12462525

Behr, D., Kaczmirek, L., Bandilla, W., Braun, M.: Asking probing questions in web surveys: Which factors have an impact on the quality of responses? Soc. Sci. Comput. Rev. 30, 487–498 (2012). https://doi.org/10.1177/0894439311435305

Behr, D., Meitinger, K., Braun, M., Kaczmirek, L.: Cross-national web probing: an overview of its methodology and its use in cross-national studies. In: Beatty, P.C., Collins, D., Kaye, L., Padilla, J.-L., Willis, G.B., Wilmot, A. (eds.) Advances in questionnaire design, development, evaluation and testing, pp. 521–543. Wiley, Hoboken (2020)

Brown, T.A.: Confirmatory Factor Analysis for Applied Research. The Guilford Press, New York (2015)

Charles, E.P.: The correction for attenuation due to measurement error: clarifying concepts and creating confidence sets. Psychol. Methods 10, 206–226 (2005). https://doi.org/10.1037/1082-989X.10.2.206

Collins, D.: Pretesting survey instruments: an overview of cognitive methods. Qual. Life Res. 12, 229–238 (2003). https://doi.org/10.1023/a:1023254226592

Dunn, T.J., Baguley, T., Brunsden, V.: From alpha to omega: a practical solution to the pervasive problem of internal consistency estimation. Br. J. Psychol. 105, 399–412 (2014). https://doi.org/10.1111/bjop.12046

Elson, M.: Question wording and item formulation. In: Matthes, J., Davis, C.S., Potter, R.F. (eds.) The international encyclopedia of communication research methods, pp. 1–8. Wiley, Hoboken (2017)

EVS: European Values Study 2008: Integrated Dataset (EVS 2008) (2022). https://doi.org/10.4232/1.13841

Fortier, I., Raina, P., Van den Heuvel, E.R., Griffith, L.E., Craig, C., Saliba, M., Doiron, D., Stolk, R.P., Knoppers, B.M., Ferretti, V., Granda, P., Burton, P.: Maelstrom research guidelines for rigorous retrospective data harmonization. Int. J. Epidemiol. 46, 103–105 (2017). https://doi.org/10.1093/ije/dyw075

GESIS: ALLBUS/GGSS 2016 (Allgemeine Bevölkerungsumfrage der Sozialwissenschaften/German General Social Survey 2016) (2017). https://doi.org/10.4232/1.12796

GESIS: GESIS Panel - Standard Edition (2022). https://doi.org/10.4232/1.14007

Giorgi, G.M., Gigliarano, C.: The Gini concentration index: a review of the inference literature. J. Econ. Surv. 31, 1130–1148 (2017). https://doi.org/10.1111/joes.12185

Howard, M.C.: A review of exploratory factor analysis decisions and overview of current practices: What we are doing and how can we improve? Int. J. Hum. Comput. Interact. 32, 51–62 (2016). https://doi.org/10.1080/10447318.2015.1087664

Hu, L., Bentler, P.M.: Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct. Equ. Model. Multidiscip. J. 6, 1–55 (1999). https://doi.org/10.1080/10705519909540118

Hussong, A.M., Curran, P.J., Bauer, D.J.: Integrative data analysis in clinical psychology research. Annu. Rev. Clin. Psychol. 9, 61–89 (2013). https://doi.org/10.1146/annurev-clinpsy-050212-185522

ISSP Research Group: International Social Survey Programme: Environment III - ISSP 2010 (2019). https://doi.org/10.4232/1.13271

ISSP Research Group: International Social Survey Programme: Role of Government IV - ISSP 2006 (2021). https://doi.org/10.4232/1.13707

Justwan, F., Bakker, R., Berejikian, J.D.: Measuring social trust and trusting the measure. Soc. Sci. J. 55, 149–159 (2018). https://doi.org/10.1016/j.soscij.2017.10.001

Kline, R.B.: Principles and Practice of Structural Equation Modeling. The Guilford Press, New York (2016)

Kolen, M.J., Brennan, R.L.: Test Equating, Scaling, and Linking. Springer, New York (2014)

Leitgöb, H., Seddig, D., Asparouhov, T., Behr, D., Davidov, E., De Roover, K., Jak, S., Meitinger, K., Menold, N., Muthén, B., Rudnev, M., Schmidt, P., van de Schoot, R.: Measurement invariance in the social sciences: Historical development, methodological challenges, state of the art, and future perspectives. Soc. Sci. Res. (2022). https://doi.org/10.1016/j.ssresearch.2022.102805

Lenard, P.T.: Trust your compatriots, but count your change: the roles of trust, mistrust and distrust in democracy. Polit. Stud. 56, 312–332 (2008). https://doi.org/10.1111/j.1467-9248.2007.00693.x

Lenzner, T., Neuert, C.E.: Pretesting survey questions via web probing: Does it produce similar results to face-to-face cognitive interviewing? Surv. Pract. 10, 1–11 (2017). https://doi.org/10.29115/SP-2017-0020

Matthews, R.A., Pineault, L., Hong, Y.-H.: Normalizing the use of single-item measures: validation of the single-item compendium for organizational psychology. J. Bus. Psychol. 37, 639–673 (2022). https://doi.org/10.1007/s10869-022-09813-3

May, A., Werhan, K., Bechert, I., Quandt, M., Schnabel, A., Behrens, K.: ONBound-Harmonization User Guide (Stata/SPSS), Version 1.1. GESIS Paper (2021). https://doi.org/10.21241/SSOAR.72442

Meitinger, K., Behr, D.: Comparing cognitive interviewing and online probing: Do they find similar results? Field Methods 28, 363–380 (2016). https://doi.org/10.1177/1525822X15625866

Meyerson, P., Tryon, W.W.: Validating Internet research: a test of the psychometric equivalence of Internet and in-person samples. Behav. Res. Methods Instrum. Comput. 35, 614–620 (2003). https://doi.org/10.3758/BF03195541

Miller, K.: Introduction. In: Miller, K., Chepp, V., Willson, S., Padilla, J.-L. (eds.) Cognitive Interviewing Methodology, pp. 1–6. Wiley, New York (2014)

Naef, M., Schupp, J.: Measuring trust: experiments and surveys in contrast and combination. SSRN Electron. J. (2009). https://doi.org/10.2139/ssrn.1367375

Neuert, C.E., Meitinger, K., Behr, D.: Open-ended versus closed probes: assessing different formats of web probing. Sociol. Methods Res. (2021). https://doi.org/10.1177/00491241211031271

Granda, P., Wolf, C., Hadorn, R.: Harmonizing survey data. In: Harkness, J.A., Braun, M., Edwards, B., Johnson, T.P., Lyberg, L.E., Mohler, P.P., Pennell, B.-E., Smith, T.W. (eds.) Survey Methods In Multinational, Multiregional, And Multicultural Contexts, pp. 315–332. Wiley, Hoboken (2010)

Price, L.R.: Psychometric Methods: Theory into Practice. The Guilford Press, New York (2017)

R Core Team: R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria (2021)

Raykov, T., Marcoulides, G.A.: Introduction to psychometric theory. Routledge, New York (2011)

respondi AG: Access Panel, https://www.respondi.com/access-panel

Revelle, W.: psych: Procedures for psychological, psychometric, and personality research, https://CRAN.R-project.org/package=psych (2022)

Robinson, R.V., Jackson, E.F.: Is trust in others declining in America? An age–period–cohort analysis. Soc. Sci. Res. 30, 117–145 (2001). https://doi.org/10.1006/ssre.2000.0692

Rosseel, Y.: lavaan: an R package for structural equation modeling. J. Stat. Softw. (2012). https://doi.org/10.18637/jss.v048.i02

RStudio Team: RStudio: integrated development for R. http://www.rstudio.com/ (2022)

Saris, W.E.: The prediction of question quality: the SQP 2.0 software. In: Understanding research infrastructures in the social sciences, pp 135–144 (2013)

Schulz, S., Weiß, B., Sterl, S., Haensch, A.-C., Schmid, L., May, A.: HaSpaD - Datenhandbuch (September 2021). GESIS Paper (2021). https://doi.org/10.21241/SSOAR.75134

Siddique, J., Reiter, J.P., Brincks, A., Gibbons, R.D., Crespi, C.M., Brown, C.H.: Multiple imputation for harmonizing longitudinal non-commensurate measures in individual participant data meta-analysis. Stat. Med. 34, 3399–3414 (2015). https://doi.org/10.1002/sim.6562

Singh, R.K.: Harmonizing single-question instruments for latent constructs with equating using political interest as an example. Surv Res Methods 16(3), 353–369 (2022). https://doi.org/10.18148/srm/2022.v16i3.7916