Abstract

This study confirms a local trade-off between information and disturbance in quantum measurements. It is represented by the correlation between the changes in these two quantities when the measurement is slightly modified. The correlation indicates that when the measurement is modified to increase the obtained information, the disturbance also increases in most cases. However, the information can be increased while decreasing the disturbance because the correlation is not necessarily perfect. For measurements having imperfect correlations, this paper discusses a general scheme that raises the amount of information while decreasing the disturbance.

1 Introduction

An interesting topic in the quantum theory of measurements is the trade-off between information and disturbance. In general, when a measurement provides much information about the state of a system, it causes a large disturbance. This trade-off has been studied using various measures of information and disturbance [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18]. Most studies focused on optimal measurements, which saturate the upper bound of the information for a given disturbance. An optimal measurement providing more information always causes a larger disturbance in the system.

By contrast, even for general measurements, a trade-off can be considered locally. Consider the neighborhood of a given measurement in the space of measurements. Most neighboring measurements provide more information with larger disturbance or less information with smaller disturbance. This means that the information and disturbance of neighboring measurements are correlated to some extent. According to this correlation, when the given measurement is slightly modified to increase the obtained information, it usually increases the disturbance in the system. This is a kind of trade-off between information and disturbance. It is local in that it pays attention to the neighborhood of the given measurement.

However, this trade-off is not strict because the correlation is imperfect. A minority of neighboring measurements show the opposite trend. In particular, unless the given measurement is optimal, there always exist neighboring measurements that provide more information with less disturbance. Hence, the given measurement can be improved to increase the information gain while decreasing the disturbance. The degree to which the correlation is imperfect indicates how much the measurement can be improved.

This local trade-off has not been discussed previously. Non-optimal measurements are of little interest in quantum information theory. However, in quantum measurement theory, the trade-off can be used to understand the mathematical structure of measurements: the correlation clarifies how a measurement is connected to its neighbors, giving a geometry on the measurement space. Moreover, the trade-off can be used in quantum information processing experiments to improve realized measurements, because the correlation indicates how much they can be improved and in which direction.

This paper mathematically formulates the local trade-off between information and disturbance when a measurement is slightly modified. Suppose that the measurement is performed on a quantum system in a completely unknown state. The obtained information and resulting disturbance are represented as functions of the same parameters. For these functions, their directions of steepest ascent and descent are derived from their gradient vectors. The angles between these directions quantify the correlation between the changes in information and disturbance. They also quantify the improvability in a general scheme to enhance the measurement.

The remainder of this paper is organized as follows. Section 2 reviews the information and disturbance for a single outcome of a quantum measurement. Section 3 derives the steepest-ascent and -descent directions of the information and disturbance. Section 4 calculates the angles between these steepest directions. Section 5 examines the correlation between the information and disturbance. Section 6 discusses a general measurement-improvement scheme. Section 7 summarizes our results.

2 Preliminaries

To keep this paper self-contained, we recall the information and disturbance for a single outcome of a quantum measurement [19]. Suppose that a quantum system is known to be in a pure state \(| \psi (a) \rangle \) with probability p(a), where \(a=1,\ldots ,N\). To identify the actual state of the system, we perform a quantum measurement on it.

A quantum measurement is described by a set of measurement operators \(\{\hat{M}_m\}\) satisfying [20]

\[ \sum _m \hat{M}_m^\dagger \hat{M}_m=\hat{I}, \tag{1} \]

where \(\hat{I}\) is the identity operator and the index m denotes an outcome. For a system in state \(| \psi (a) \rangle \), outcome m is obtained with probability

\[ p(m|a)=\langle \psi (a) | \hat{M}_m^\dagger \hat{M}_m | \psi (a) \rangle . \tag{2} \]

After a measurement yielding outcome m, the system’s state changes from \(| \psi (a) \rangle \) to

\[ | \psi (m,a) \rangle =\frac{\hat{M}_m | \psi (a) \rangle }{\sqrt{p(m|a)}}. \tag{3} \]

Note that this measurement maps a pure state to a pure state, not to a mixed state. This type of measurement is called an ideal measurement [21].

The outcome m provides information on the system’s state. More specifically, from the outcome m, one can naturally estimate the system’s state as \(| \psi (a'_m) \rangle \), where \(a'_m\) is the value of a that maximizes p(m|a). The quality of this estimate is evaluated by the estimation fidelity

\[ G(m)=\sum _a p(a|m)\, \bigl| \langle \psi (a'_m) | \psi (a) \rangle \bigr| ^2, \tag{4} \]

where we have used the conditional probability that the system is in state \(| \psi (a) \rangle \) given outcome m:

\[ p(a|m)=\frac{p(m|a)\,p(a)}{p(m)}. \tag{5} \]

The total probability of m is

\[ p(m)=\sum _a p(m|a)\,p(a). \tag{6} \]

The estimation fidelity G(m) quantifies the information provided by outcome m.

When a measurement yields outcome m, it changes the system’s state from \(| \psi (a) \rangle \) to \(| \psi (m,a) \rangle \) given by Eq. (3). This disturbance to the system can be quantified by either the size or the reversibility of the state change. The size of the state change can be evaluated by the operation fidelity

\[ F(m)=\sum _a p(a|m)\, \bigl| \langle \psi (a) | \psi (m,a) \rangle \bigr| ^2. \tag{7} \]

In contrast, the reversibility of the state change can be evaluated on the basis of a reversing measurement [22, 23]. The reversing measurement restores the system’s state from \(| \psi (m,a) \rangle \) to \(| \psi (a) \rangle \) when it yields a successful outcome. Using its maximum probability of success [24], the reversibility of the state change is evaluated by the physical reversibility

The operation fidelity F(m) and the physical reversibility R(m) quantify the disturbance caused by obtaining outcome m. Both measures decrease as the disturbance increases.

To explicitly calculate G(m), F(m), and R(m), we assume that the state to be measured is completely unknown. That is, the set of possible states \(\{| \psi (a) \rangle \}\) consists of all pure states of the system, and p(a) is uniform according to a normalized invariant measure over the pure states. In this case, the information and disturbance are functions of the singular values \(\{\lambda _{mi}\}\) of \(\hat{M}_m\) [19]. The singular value \(\lambda _{mi}\) is the square root of the probability of obtaining outcome m when the system is in the ith eigenstate of the positive operator-valued measure (POVM) element \(\hat{E}_m=\hat{M}_m^\dagger \hat{M}_m\) [20]. Therefore, a measurement with outcome m can be conveniently expressed by the d-dimensional vector

\[ \varvec{\lambda }_m=\left( \lambda _{m1},\lambda _{m2},\ldots ,\lambda _{md}\right) , \tag{9} \]

where d is the Hilbert space dimension of the system. By definition, the singular values are nonnegative. Moreover, by Eq. (1), they cannot exceed 1. For simplicity, the singular values are sorted in descending order:

\[ 1\ge \lambda _{m1}\ge \lambda _{m2}\ge \cdots \ge \lambda _{md}\ge 0, \tag{10} \]

where \(\lambda _{m1}\ne 0\).

In terms of the singular values, G(m), F(m), and R(m) are, respectively, written as [19]

where

Note that Eqs. (11)–(13) are invariant under rescaling of the singular values by a positive constant c,

\[ \lambda _{mi}\rightarrow c\,\lambda _{mi}\quad (i=1,\ldots ,d), \tag{15} \]

and under rearrangement of all singular values except \(\lambda _{m1}\) and \(\lambda _{md}\). The value \(\lambda _{m1}\) is excluded from the rearrangement because Eq. (11) for G(m) requires it to be the maximum singular value, and \(\lambda _{md}\) is excluded because Eq. (13) for R(m) requires it to be the minimum one.
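As a minimal sketch, assume that Eqs. (11)–(13) take the forms \(G(m)=[1+\lambda _{m1}^2/\sigma _m^2]/(d+1)\), \(F(m)=[1+\tau _m^2/\sigma _m^2]/(d+1)\), and \(R(m)=d\lambda _{md}^2/\sigma _m^2\), with \(\sigma _m^2=\sum _i\lambda _{mi}^2\) and \(\tau _m=\sum _i\lambda _{mi}\). These forms are our reading of Ref. [19], and \(\sigma _m\), \(\tau _m\) are our own notation, so both should be checked against Eq. (14). The Python below evaluates them for the identity operation and the rank-1 projective measurement and checks the two invariances just stated.

```python
import math

# ASSUMED closed forms (our reading of Ref. [19]; verify against Eqs. (11)-(14)).
# lam holds the singular values in descending order:
# lam[0] = lambda_m1 (maximum), lam[-1] = lambda_md (minimum).


def sigma2(lam):
    """sigma_m^2: sum of the squared singular values."""
    return sum(x * x for x in lam)


def info_G(lam):
    """Estimation fidelity G(m)."""
    return (1.0 + lam[0] ** 2 / sigma2(lam)) / (len(lam) + 1)


def fidelity_F(lam):
    """Operation fidelity F(m); tau_m is the sum of the singular values."""
    tau = sum(lam)
    return (1.0 + tau ** 2 / sigma2(lam)) / (len(lam) + 1)


def reversibility_R(lam):
    """Physical reversibility R(m)."""
    return len(lam) * lam[-1] ** 2 / sigma2(lam)


d = 4
identity = [1.0] * d                  # identity operation p^(d)_d
projector = [1.0] + [0.0] * (d - 1)   # rank-1 projective measurement p^(d)_1
print(info_G(identity), fidelity_F(identity), reversibility_R(identity))
# -> 0.25 1.0 1.0   (minimum G, maximum F and R)
print(info_G(projector), fidelity_F(projector), reversibility_R(projector))
# -> 0.4 0.4 0.0    (maximum G, minimum F and R)

# Invariance under rescaling by c > 0 and under permuting the middle entries.
lam = [0.9, 0.6, 0.4, 0.2]
for variant in ([0.5 * x for x in lam], [0.9, 0.4, 0.6, 0.2]):
    for fn in (info_G, fidelity_F, reversibility_R):
        assert math.isclose(fn(lam), fn(variant))
```

Under these assumed forms the identity gives \(G(m)=1/d\), i.e. 0.25 for \(d=4\), which matches the left end of the G(m) axis used in Fig. 4.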

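Fundamental measurements are represented by the following vectors [25]:

\[ \varvec{p}^{(d)}_{r}=c\,(\underbrace{1,\ldots ,1}_{r},\underbrace{0,\ldots ,0}_{d-r}), \tag{16} \]

\[ \varvec{m}^{(d)}_{k,l}(\lambda )=c\,(\underbrace{1,\ldots ,1}_{k},\underbrace{\lambda ,\ldots ,\lambda }_{l},\underbrace{0,\ldots ,0}_{d-k-l}), \tag{17} \]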

where c is a proportionality factor from the rescaling invariance in Eq. (15) and \(\lambda \) is a parameter satisfying \(0\le \lambda \le 1\). In particular, \(\varvec{p}^{(d)}_{1}\) represents the projective measurement of rank 1, achieving the maximum G(m) with the minimum F(m) and R(m). Conversely, \(\varvec{p}^{(d)}_{d}\) represents the identity operation, achieving the minimum G(m) with the maximum F(m) and R(m). Moreover, the measurements represented by \(\varvec{m}^{(d)}_{1,d-1}(\lambda )\) are the optimal measurements, saturating the upper bounds of G(m) for given F(m) or R(m) [25].
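The vectors of Eqs. (16) and (17) are easy to tabulate. The sketch below uses hypothetical helpers `p_vec` and `m_vec` with \(c=1\), together with the closed forms for Eqs. (11)–(13) that are spelled out in its comments; those forms are our reading of Ref. [19], not a transcription of the equations. It follows the optimal family \(\varvec{m}^{(4)}_{1,3}(\lambda )\) from \(\lambda =0\), where it equals \(\varvec{p}^{(4)}_{1}\), to \(\lambda =1\), where it equals \(\varvec{p}^{(4)}_{4}\), and checks that G(m) decreases while F(m) and R(m) increase along it.

```python
# ASSUMED closed forms for Eqs. (11)-(13) (our reading of Ref. [19]; verify):
#   G = (1 + lam_1^2 / s2) / (d + 1)
#   F = (1 + tau^2 / s2) / (d + 1)
#   R = d * lam_d^2 / s2
# with s2 = sum of squared singular values and tau = sum of singular values.
def G(lam):
    return (1 + lam[0] ** 2 / sum(x * x for x in lam)) / (len(lam) + 1)


def F(lam):
    return (1 + sum(lam) ** 2 / sum(x * x for x in lam)) / (len(lam) + 1)


def R(lam):
    return len(lam) * lam[-1] ** 2 / sum(x * x for x in lam)


def p_vec(d, r, c=1.0):
    """Hypothetical helper for p^(d)_r: r entries equal to c, then d - r zeros."""
    return [c] * r + [0.0] * (d - r)


def m_vec(d, k, l, lam, c=1.0):
    """Hypothetical helper for m^(d)_{k,l}(lam): k entries c, l entries c*lam, then zeros."""
    return [c] * k + [c * lam] * l + [0.0] * (d - k - l)


d = 4
grid = [i / 10 for i in range(11)]                # lambda = 0.0, 0.1, ..., 1.0
family = [m_vec(d, 1, d - 1, t) for t in grid]    # optimal family m^(4)_{1,3}
Gs = [G(v) for v in family]
Fs = [F(v) for v in family]
Rs = [R(v) for v in family]

# Endpoints: lambda = 0 gives p^(4)_1 and lambda = 1 gives p^(4)_4.
assert family[0] == p_vec(d, 1) and family[-1] == p_vec(d, d)
# Along the optimal family, G falls while F and R rise.
assert all(a > b for a, b in zip(Gs, Gs[1:]))
assert all(a < b for a, b in zip(Fs, Fs[1:]))
assert all(a < b for a, b in zip(Rs, Rs[1:]))
```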

3 Steepest directions

Herein, we find the directions of steepest ascent and descent of G(m), F(m), and R(m). Under the conditions of Eq. (10), these directions are not necessarily parallel or antiparallel to the gradient vectors of G(m), F(m), and R(m). To see this, consider modifying a measurement \(\varvec{\lambda }_m\) by an infinitesimal vector \(\varvec{\varepsilon }_m\) as

\[ \varvec{\lambda }'_m=\varvec{\lambda }_m+\varvec{\varepsilon }_m. \tag{18} \]

However, \(\varvec{\varepsilon }_m\) cannot be arbitrary: after the modification, \(\varvec{\lambda }'_m\) must also satisfy the conditions of Eq. (10).

Measurement and its modification. The points A and B denote measurements, and the attached arrows indicate some possible modifications. The gray region shows the forbidden region \(\lambda _{m(i-1)}<\lambda _{mi}\). The vector \(\varvec{e}_{i-1}-\varvec{e}_i\) is normal to its boundary \(\lambda _{m(i-1)}=\lambda _{mi}\)

Figure 1 sketches this situation. Measurement A can accept any modification, but measurement B cannot, because some modifications would move it into the gray region forbidden by Eq. (10). As long as the modification is infinitesimal, such a violation can occur only when the measurement lies on one of the boundaries. Therefore, \(\varvec{\varepsilon }_m\) is restricted only when some of the inequalities in Eq. (10) hold with equality for \(\varvec{\lambda }_m\).

However, not all inequalities in Eq. (10) are relevant. The relevant inequalities are \(\lambda _{md}\ge 0\), \(\lambda _{m1}\ge \lambda _{mi}\), and \(\lambda _{mi}\ge \lambda _{md}\). The other inequalities can be ignored by rescaling and rearranging \(\varvec{\lambda }'_m\). For example, if \(\lambda '_{m1}>1\), \(\varvec{\lambda }'_m\) is rescaled by Eq. (15) to satisfy \(\lambda '_{m1}\le 1\), and if \(\lambda '_{m2}<\lambda '_{m3}\), \(\lambda '_{m2}\) and \(\lambda '_{m3}\) are interchanged to satisfy \(\lambda '_{m2}>\lambda '_{m3}\). Note that these operations do not affect G(m), F(m), and R(m). To preserve the three relevant inequalities, \(\varvec{\varepsilon }_m\) should satisfy

\[ \varvec{\varepsilon }_m\cdot \varvec{e}_d\ge 0\quad \text{if } \lambda _{md}=0, \tag{19} \]

\[ \varvec{\varepsilon }_m\cdot \left( \varvec{e}_1-\varvec{e}_i\right) \ge 0\quad \text{if } \lambda _{m1}=\lambda _{mi}, \tag{20} \]

\[ \varvec{\varepsilon }_m\cdot \left( \varvec{e}_i-\varvec{e}_d\right) \ge 0\quad \text{if } \lambda _{mi}=\lambda _{md}, \tag{21} \]

where \(\varvec{e}_i\) is the unit vector along the ith axis. These conditions follow because \(\varvec{e}_d\), \(\varvec{e}_1-\varvec{e}_i\), and \(\varvec{e}_i-\varvec{e}_d\) are normal to the boundaries \(\lambda _{md}=0\), \(\lambda _{m1}=\lambda _{mi}\), and \(\lambda _{mi}=\lambda _{md}\), respectively, and point into the allowed region (Fig. 1).
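The admissibility conditions can be checked mechanically. The sketch below (hypothetical function names; exact ties are detected with a small tolerance) tests whether a modification \(\varvec{\varepsilon }_m\) respects Eqs. (19)–(21) and then brings \(\varvec{\lambda }'_m\) back to the form of Eq. (10) by rescaling and re-sorting. Eq. (21) can be switched off when R(m) is not used, as in Sect. 5; in that case we apply Eq. (19) to every zero singular value, since any of them may become \(\lambda '_{md}\) after re-sorting. This last choice is our interpretation, not a statement from the text.

```python
def _tied(a, b, tol=1e-12):
    """Treat two singular values as equal within a small tolerance."""
    return abs(a - b) <= tol


def is_admissible(lam, eps, use_R=True, tol=1e-12):
    """Check Eqs. (19)-(21) for a sorted vector lam and a modification eps.

    With use_R=False, Eq. (21) is skipped and Eq. (19) is applied to every
    zero singular value (any of them may end up last after re-sorting).
    """
    d = len(lam)
    # Eq. (19): a zero singular value must not become negative.
    zero_idx = [d - 1] if use_R else range(d)
    for i in zero_idx:
        if _tied(lam[i], 0.0) and eps[i] < -tol:
            return False
    # Eq. (20): lam_1 must remain the maximum.
    for i in range(1, d):
        if _tied(lam[0], lam[i]) and eps[0] - eps[i] < -tol:
            return False
    # Eq. (21): lam_d must remain the minimum (needed only when R(m) is used).
    if use_R:
        for i in range(d - 1):
            if _tied(lam[i], lam[-1]) and eps[i] - eps[-1] < -tol:
                return False
    return True


def canonicalize(lam_new):
    """Rescale (Eq. (15)) so the largest entry is 1, then sort in descending order."""
    top = max(lam_new)
    return sorted((x / top for x in lam_new), reverse=True)


lam = [1.0, 1.0, 0.5, 0.0]                              # n_1 = 2, n_0 = 1
print(is_admissible(lam, [0.01, -0.01, 0.0, 0.0]))      # True: allowed
print(is_admissible(lam, [-0.01, 0.01, 0.0, 0.0]))      # False: violates Eq. (20)
print(is_admissible(lam, [0.0, 0.0, 0.0, -0.01]))       # False: violates Eq. (19)
print(canonicalize([1.01, 0.7, 0.72, 0.1]))
```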

Under the conditions of Eqs. (19)–(21), we consider the steepest directions of G(m), F(m), and R(m). As shown in Appendix A, they are derived from the gradient vectors \(\varvec{\nabla } G(m)\), \(\varvec{\nabla } F(m)\), and \(\varvec{\nabla } R(m)\) given in Eqs. (70)–(72). Three unit vectors are obtained for each function, as follows.

The first vector is a unit vector in the gradient direction. For G(m), F(m), and R(m), it is given by

respectively, where

Directions of gradient and steepest ascent on boundary. The plane denotes the boundary \(\lambda _{m(d-1)}=\lambda _{md}\) with the normal vector \(\varvec{e}_{d-1}-\varvec{e}_d\), and the region above it is the forbidden region \(\lambda _{m(d-1)}<\lambda _{md}\). The vectors \(\varvec{r}_m\) and \(\varvec{r}^{(+)}_m\) are the unit vectors of R(m) in the gradient and steepest-ascent directions, respectively, for a measurement on the boundary. The vectors \(\varvec{g}_m\) and \(\varvec{g}^{(+)}_m\) are unit vectors of G(m). The angle between \(\varvec{r}^{(+)}_m\) and \(\varvec{r}_m\) is \(\theta _r\)

The second vector is a unit vector in the steepest-ascent direction. For G(m), F(m), and R(m), it is given by

respectively, where \(n_d\) is the degeneracy of the minimum singular value. If the minimum singular value is degenerate, i.e., \(n_d>1\) (e.g., \(\lambda _{m(d-1)}=\lambda _{md}\) with \(n_d=2\)), \(\varvec{r}^{(+)}_m\) differs from \(\varvec{r}_m\). In this case, \(\varvec{r}_m\) points from the boundary \(\lambda _{m(d-1)}=\lambda _{md}\) into the forbidden region \(\lambda _{m(d-1)}<\lambda _{md}\), as illustrated in Fig. 2, violating the condition of Eq. (21). The steepest-ascent direction \(\varvec{r}^{(+)}_m\) is then obtained by projecting \(\varvec{r}_m\) onto the boundary and normalizing the projected vector to unit length (see Appendix A). The difference is quantified by the angle \(\theta _r\) between \(\varvec{r}^{(+)}_m\) and \(\varvec{r}_m\),

The third vector is a unit vector in the steepest-descent direction. For G(m), F(m), and R(m), it is given by

respectively, where \(n_1\) is the degeneracy of the maximum singular value and \(n_0\) is that of the singular value 0. If the maximum singular value is degenerate, i.e., \(n_1>1\) (e.g., \(\lambda _{m1}=\lambda _{m2}\) with \(n_1=2\)), \(\varvec{g}^{(-)}_m\) differs from \(-\varvec{g}_m\). The difference is quantified by the angle \(\theta _g\) between \(\varvec{g}^{(-)}_m\) and \(-\varvec{g}_m\),

Similarly, if some singular values are 0, i.e., \(n_0>0\) (e.g., \(\lambda _{md}=0\) with \(n_0=1\)), \(\varvec{f}^{(-)}_m\) differs from \(-\varvec{f}_m\). The difference is quantified by the angle \(\theta _f\) between \(\varvec{f}^{(-)}_m\) and \(-\varvec{f}_m\),

In this case, \(\varvec{r}^{(-)}_m\) is not \(-\varvec{r}_m\) but a zero vector \(\varvec{0}\).
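For R(m), the projection described above has a simple form: all components tied with the minimum singular value must change together, so projecting onto the boundary replaces those components by their average. The sketch below assumes \(G(m)=[1+\lambda _{m1}^2/\sigma _m^2]/(d+1)\) and \(R(m)=d\lambda _{md}^2/\sigma _m^2\) with \(\sigma _m^2=\sum _i\lambda _{mi}^2\) (our reading of Ref. [19]); the helper names are hypothetical, and \(\varvec{0}/0=\varvec{0}\) is used for zero vectors. For the optimal measurement \(\varvec{m}^{(4)}_{1,3}(0.5)\), where \(n_d=3\), it finds a nonzero \(\theta _r\) and a projected \(\varvec{r}^{(+)}_m\) that is antiparallel to \(\varvec{g}_m\).

```python
import math

# ASSUMED closed forms (our reading of Ref. [19]; verify against Eqs. (11) and (13)):
#   G = (1 + lam_1^2 / s2) / (d + 1),   R = d * lam_d^2 / s2,   s2 = sum(lam_i^2).


def s2(lam):
    return sum(x * x for x in lam)


def grad_G(lam):
    """Analytic gradient of the assumed G(m)."""
    d, l1, q = len(lam), lam[0], s2(lam)
    return [2 * l1 / ((d + 1) * q ** 2) * ((q if i == 0 else 0.0) - l1 * x)
            for i, x in enumerate(lam)]


def grad_R(lam):
    """Analytic gradient of the assumed R(m)."""
    d, ld, q = len(lam), lam[-1], s2(lam)
    return [2 * d * ld / q ** 2 * ((q if i == d - 1 else 0.0) - ld * x)
            for i, x in enumerate(lam)]


def unit(v, tol=1e-12):
    """Normalize v, with the convention 0/0 = 0 for a (numerically) zero vector."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n > tol else [0.0] * len(v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def project_min_block(v, lam, tol=1e-12):
    """Orthogonal projection onto the boundary on which all entries tied with
    lam_d move together: replace those components of v by their average."""
    block = [i for i, x in enumerate(lam) if abs(x - lam[-1]) <= tol]
    mean = sum(v[i] for i in block) / len(block)
    return [mean if i in block else x for i, x in enumerate(v)]


lam = [1.0, 0.5, 0.5, 0.5]                  # optimal m^(4)_{1,3}(0.5), n_d = 3
g = unit(grad_G(lam))
r = unit(grad_R(lam))
r_plus = unit(project_min_block(r, lam))
theta_r = math.acos(max(-1.0, min(1.0, dot(r, r_plus))))
print(math.degrees(theta_r))     # cos(theta_r) = sqrt(2)/3 by hand, about 61.9 degrees
print(dot(g, r_plus))            # antiparallel: -1 up to rounding
```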

The above equations give \(\varvec{0}/0\) or 0/0, when a zero vector is normalized. For example, when \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{1}\), Eq. (22) gives \(\varvec{g}_m=\varvec{0}/0\) because \(\varvec{\nabla } G(m)=\varvec{0}\) from Eq. (70) in Appendix A. Unfortunately, the limit of \(\varvec{g}_m\) as \(\varvec{\lambda }_m\rightarrow \varvec{p}^{(d)}_{1}\) does not exist. In such cases, we simply assume \(\varvec{0}/0=\varvec{0}\) and \(0/0=0\). Specifically,

4 Angles

In this section, we consider the angle between the steepest directions of the information and disturbance. This angle concerns the local trade-off between the information and disturbance when a measurement is slightly modified. Two information–disturbance pairs are discussed herein: G(m) versus F(m) and G(m) versus R(m).

Relation between angle and trade-off. For the information G(m) and the disturbance F(m) or R(m), a measurement is modified to increase the information a efficiently, b as much as possible, and c while decreasing the disturbance. The vectors \(\varvec{g}^{(+)}_m\), \(\varvec{f}^{(+)}_m\), and \(\varvec{r}^{(+)}_m\) are unit vectors in the steepest-ascent directions of G(m), F(m), and R(m), respectively. The vector \(\varvec{\varepsilon }_m\) is a measurement modification. In panel c, the line \(S_I\) denotes the constant-information surface of G(m), whereas the line \(S_D\) denotes the constant-disturbance surface of F(m) or R(m)

Figure 3 illustrates the relation between the angle and the trade-off. Suppose that the measurement is modified by \(\varvec{\varepsilon }_m\) as in Eq. (18). To increase the obtained information efficiently, \(\varvec{\varepsilon }_m\) should point in a direction close to \(\varvec{g}^{(+)}_m\), as shown in Fig. 3a. In this case, however, \(\varvec{\varepsilon }_m\) points roughly opposite to \(\varvec{f}^{(+)}_m\) or \(\varvec{r}^{(+)}_m\), because the angle between \(\varvec{g}^{(+)}_m\) and \(\varvec{f}^{(+)}_m\) and that between \(\varvec{g}^{(+)}_m\) and \(\varvec{r}^{(+)}_m\) are usually obtuse, as shown later. Such a modification therefore usually increases the disturbance as a trade-off, since a decrease in F(m) or R(m) means an increase in disturbance. The wider the angle, the stronger the trade-off.

For G(m) versus F(m), the angle between \(\varvec{g}^{(+)}_m\) and \(\varvec{f}^{(+)}_m\) is expressed by their dot product \(C^{(++)}_{GF}=\varvec{g}^{(+)}_m\cdot \varvec{f}^{(+)}_m\). This dot product is the cosine of the angle because the two vectors are normalized. Its value is determined as

from Eqs. (22), (23), (26), and (27). Note that by assuming \(0/0=0\), \(C^{(++)}_{GF}=0\) if \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{1}\) or \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{d}\). The last inequality of Eq. (38) can be proven as

As \(C^{(++)}_{GF}\le 0\), the angle between \(\varvec{g}^{(+)}_m\) and \(\varvec{f}^{(+)}_m\) is either right or obtuse. The maximum value \(C^{(++)}_{GF}=0\) is achieved at \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{r}\), whereas the minimum value \(C^{(++)}_{GF}=-1\) is achieved at optimal measurements \(\varvec{\lambda }_m=\varvec{m}^{(d)}_{1,d-1}(\lambda )\).
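The sign of \(C^{(++)}_{GF}\) can be checked numerically. Assuming \(G(m)=[1+\lambda _{m1}^2/\sigma _m^2]/(d+1)\) and \(F(m)=[1+\tau _m^2/\sigma _m^2]/(d+1)\) (our reading of Ref. [19]) and taking \(\varvec{g}^{(+)}_m=\varvec{g}_m\), \(\varvec{f}^{(+)}_m=\varvec{f}_m\), as the text states when it compares \(C^{(++)}_{GF}\) with \(C_{GF}\), the product \(\varvec{\nabla } G(m)\cdot \varvec{\nabla } F(m)\) is a positive multiple of \(\sigma _m^2(\sigma _m^2-\tau _m\lambda _{m1})\), which cannot be positive because \(\lambda _{m1}\ge \lambda _{mi}\) implies \(\tau _m\lambda _{m1}\ge \sigma _m^2\). The sketch samples random sorted vectors and also checks the two extreme cases named above.

```python
import math
import random

# ASSUMED closed forms (our reading of Ref. [19]; verify against Eqs. (11)-(12)):
#   G = (1 + lam_1^2/s2)/(d+1),  F = (1 + tau^2/s2)/(d+1),  s2 = sum(lam_i^2).


def s2(lam):
    return sum(x * x for x in lam)


def grad_G(lam):
    d, l1, q = len(lam), lam[0], s2(lam)
    return [2 * l1 / ((d + 1) * q ** 2) * ((q if i == 0 else 0.0) - l1 * x)
            for i, x in enumerate(lam)]


def grad_F(lam):
    d, tau, q = len(lam), sum(lam), s2(lam)
    return [2 * tau / ((d + 1) * q ** 2) * (q - tau * x) for x in lam]


def unit(v, tol=1e-12):
    """Normalize v, with the convention 0/0 = 0."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n > tol else [0.0] * len(v)


def C_GF(lam):
    """Cosine of the angle between g_m and f_m (= C++_GF under the assumptions above)."""
    return sum(a * b for a, b in zip(unit(grad_G(lam)), unit(grad_F(lam))))


rng = random.Random(0)
samples = [sorted((rng.uniform(0.01, 1.0) for _ in range(4)), reverse=True)
           for _ in range(1000)]
worst = max(C_GF(lam) for lam in samples)
print(worst)                                          # never positive (up to rounding)
print(C_GF([1.0, 1.0, 0.0, 0.0]))                     # p^(4)_2: right angle
print([C_GF([1.0] + [t] * 3) for t in (0.2, 0.5, 0.8)])  # m^(4)_{1,3}(t): -1
```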

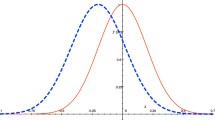

Ranges of angles between a \(\varvec{g}^{(+)}_m\) and \(\varvec{f}^{(+)}_m\), and b \(\varvec{g}^{(+)}_m\) and \(\varvec{r}^{(+)}_m\). The gray regions show the possible ranges of \(C^{(++)}_{GF}=\varvec{g}^{(+)}_m\cdot \varvec{f}^{(+)}_m\) and \(C^{(++)}_{GR}=\varvec{g}^{(+)}_m\cdot \varvec{r}^{(+)}_m\) as functions of G(m) in \(d=4\). The point \(P_r\) denotes \(\varvec{p}^{(d)}_{r}\), the line (k, l) denotes \(\varvec{m}^{(d)}_{k,l}(\lambda )\), and the dotted line \(L_n\) in panel b denotes the limits as measurements having \(n_d=n\) approach the optimal ones

Figure 4a shows the possible range of \(C^{(++)}_{GF}\) as a function of G(m) in \(d=4\). The possible range is determined similarly to Appendix A of Ref. [25]. The point \(P_r\) denotes \(\varvec{p}^{(d)}_{r}\), and the line (k, l) denotes \(\varvec{m}^{(d)}_{k,l}(\lambda )\) for \(0<\lambda <1\). \(C^{(++)}_{GF}\) does not have unique limits as \(\varvec{\lambda }_m\rightarrow \varvec{p}^{(d)}_{1}\) and \(\varvec{\lambda }_m\rightarrow \varvec{p}^{(d)}_{d}\), where \(C^{(++)}_{GF}=0\) by Eqs. (35) and (36). For example, although both \(\varvec{m}^{(4)}_{3,1}(\lambda )\) and \(\varvec{m}^{(4)}_{2,2}(\lambda )\) become \(\varvec{p}^{(4)}_{4}\) at \(\lambda =1\), they give different limits of \(C^{(++)}_{GF}\) as \(\lambda \rightarrow 1\). Accordingly, in Fig. 4a, the corresponding lines (3, 1) and (2, 2) do not meet at their left ends, where \(\lambda \rightarrow 1\) and \(G(m)=0.25\).
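The non-uniqueness of the limit at \(\varvec{p}^{(4)}_{4}\) can be reproduced with the same kind of assumed closed forms, \(G(m)=[1+\lambda _{m1}^2/\sigma _m^2]/(d+1)\) and \(F(m)=[1+\tau _m^2/\sigma _m^2]/(d+1)\) (our reading of Ref. [19]). The sketch evaluates \(C^{(++)}_{GF}=\varvec{g}_m\cdot \varvec{f}_m\) just below \(\lambda =1\) on the two lines; worked by hand under these assumptions, the limits are \(-1/3\) for (3, 1) and \(-1/\sqrt{3}\) for (2, 2), whereas the value at \(\lambda =1\) itself is 0 by the \(0/0=0\) convention. The helper `m_vec` is hypothetical.

```python
import math

# ASSUMED closed forms (our reading of Ref. [19]; verify against Eqs. (11)-(12)):
#   G = (1 + lam_1^2/s2)/(d+1),  F = (1 + tau^2/s2)/(d+1),  s2 = sum(lam_i^2).


def s2(lam):
    return sum(x * x for x in lam)


def grad_G(lam):
    d, l1, q = len(lam), lam[0], s2(lam)
    return [2 * l1 / ((d + 1) * q ** 2) * ((q if i == 0 else 0.0) - l1 * x)
            for i, x in enumerate(lam)]


def grad_F(lam):
    d, tau, q = len(lam), sum(lam), s2(lam)
    return [2 * tau / ((d + 1) * q ** 2) * (q - tau * x) for x in lam]


def unit(v, tol=1e-12):
    """Normalize v, with the convention 0/0 = 0."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n > tol else [0.0] * len(v)


def C_GF(lam):
    return sum(a * b for a, b in zip(unit(grad_G(lam)), unit(grad_F(lam))))


def m_vec(k, l, t, d=4):
    """Hypothetical helper for m^(d)_{k,l}(t) with c = 1."""
    return [1.0] * k + [t] * l + [0.0] * (d - k - l)


t = 1 - 1e-6                        # just below lambda = 1
near_31 = C_GF(m_vec(3, 1, t))      # approaches -1/3
near_22 = C_GF(m_vec(2, 2, t))      # approaches -1/sqrt(3)
at_p4 = C_GF(m_vec(3, 1, 1.0))      # exactly p^(4)_4: 0 by convention
print(near_31, near_22, at_p4)
```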

Similarly, for G(m) versus R(m), the cosine of the angle between \(\varvec{g}^{(+)}_m\) and \(\varvec{r}^{(+)}_m\) is given by \(C^{(++)}_{GR}=\varvec{g}^{(+)}_m\cdot \varvec{r}^{(+)}_m\), determined as

from Eqs. (22), (26), and (28). Note that by assuming \(0/0=0\), \(C^{(++)}_{GR}=0\) if \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{1}\) or \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{d}\), where \(n_d=d\) when \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{d}\). The angle between \(\varvec{g}^{(+)}_m\) and \(\varvec{r}^{(+)}_m\) is also either right or obtuse. The maximum value \(C^{(++)}_{GR}=0\) is achieved at \(\lambda _{md}=0\) or \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{d}\), whereas the minimum value \(C^{(++)}_{GR}=-1\) is achieved at optimal measurements \(\varvec{\lambda }_m=\varvec{m}^{(d)}_{1,d-1}(\lambda )\) using \(n_d=d-1\).

Figure 4b shows the possible range of \(C^{(++)}_{GR}\) as a function of G(m) in \(d=4\). The dotted line \(L_n\) denotes the limits of \(C^{(++)}_{GR}\) as measurements having \(n_d=n\) approach the optimal ones, that is, the values obtained for \(\varvec{\lambda }_m=\varvec{m}^{(d)}_{1,d-1}(\lambda )\) but using \(n_d=n\) instead of \(n_d=d-1\). This line is an open boundary because \(C^{(++)}_{GR}\) jumps to \(-1\) at \(\varvec{\lambda }_m=\varvec{m}^{(d)}_{1,d-1}(\lambda )\). The lines (k, l) and \(L_n\) divide the region into overlapping subregions according to \(n_d\). As with \(C^{(++)}_{GF}\), \(C^{(++)}_{GR}\) does not have unique limits as \(\varvec{\lambda }_m\rightarrow \varvec{p}^{(d)}_{1}\) and \(\varvec{\lambda }_m\rightarrow \varvec{p}^{(d)}_{d}\), where \(C^{(++)}_{GR}=0\) by Eqs. (35) and (36).

The above angles are compared with the angles between the gradient vectors, \(C_{GF}=\varvec{g}_m\cdot \varvec{f}_m\) and \(C_{GR}=\varvec{g}_m\cdot \varvec{r}_m\). From Eqs. (26) and (27),

However, \(\varvec{r}_m\) is not equal to \(\varvec{r}^{(+)}_m\) if \(n_d>1\), as in Eqs. (24) and (28). Hence, \(C_{GR}\) is different from \(C^{(++)}_{GR}\), given by

From Eq. (29), \(C^{(++)}_{GR}\) and \(C_{GR}\) are related as

if \(\varvec{\lambda }_m\ne \varvec{p}^{(d)}_{d}\). However, if \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{d}\), \(C^{(++)}_{GR}=\cos \theta _r=0\) but \(C_{GR}=-1/(d-1)\).

No measurement can achieve \(C_{GR}=-1\). Even the optimal measurements \(\varvec{m}^{(d)}_{1,d-1}(\lambda )\) give \(C_{GR}>-1\), with a value that depends on \(\lambda \). As Fig. 2 illustrates, if \(n_d>1\), as for the optimal measurements, \(\varvec{g}_m\) lies on the boundary \(\lambda _{m(d-1)}=\lambda _{md}\) because \(\left( \varvec{e}_{d-1}-\varvec{e}_d\right) \cdot \varvec{g}_m=0\), whereas \(\varvec{r}_m\) does not. Therefore, \(\varvec{r}_m\) cannot be antiparallel to \(\varvec{g}_m\). In contrast, \(\varvec{r}^{(+)}_m\) can be, because it is obtained by projecting \(\varvec{r}_m\) onto the boundary.
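These statements can also be checked numerically. Under the assumed closed forms \(G(m)=[1+\lambda _{m1}^2/\sigma _m^2]/(d+1)\) and \(R(m)=d\lambda _{md}^2/\sigma _m^2\) (our reading of Ref. [19]), and with \(\varvec{g}^{(+)}_m=\varvec{g}_m\), the sketch builds \(\varvec{r}^{(+)}_m\) by averaging the components tied with \(\lambda _{md}\) (the projection onto the boundary described in Sect. 3). Because \(\varvec{g}_m\) is unchanged by that projection, \(\varvec{g}_m\cdot \varvec{r}^{(+)}_m=\varvec{g}_m\cdot \varvec{r}_m/\cos \theta _r\); this identity is our own derivation from the definitions, offered as a consistency check on Eq. (43), not a transcription of it. The helper names are hypothetical.

```python
import math

# ASSUMED closed forms (our reading of Ref. [19]; verify against Eqs. (11) and (13)):
#   G = (1 + lam_1^2 / s2) / (d + 1),   R = d * lam_d^2 / s2,   s2 = sum(lam_i^2).


def s2(lam):
    return sum(x * x for x in lam)


def grad_G(lam):
    d, l1, q = len(lam), lam[0], s2(lam)
    return [2 * l1 / ((d + 1) * q ** 2) * ((q if i == 0 else 0.0) - l1 * x)
            for i, x in enumerate(lam)]


def grad_R(lam):
    d, ld, q = len(lam), lam[-1], s2(lam)
    return [2 * d * ld / q ** 2 * ((q if i == d - 1 else 0.0) - ld * x)
            for i, x in enumerate(lam)]


def unit(v, tol=1e-12):
    """Normalize v, with the convention 0/0 = 0 for a (numerically) zero vector."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n > tol else [0.0] * len(v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def project_min_block(v, lam, tol=1e-12):
    """Average the components of v that are tied with the minimum of lam."""
    block = [i for i, x in enumerate(lam) if abs(x - lam[-1]) <= tol]
    mean = sum(v[i] for i in block) / len(block)
    return [mean if i in block else x for i, x in enumerate(v)]


def cosines(lam):
    """Return g_m, C_GR = g.r, C++_GR = g.r_plus, and cos(theta_r) = r.r_plus."""
    g = unit(grad_G(lam))
    r = unit(grad_R(lam))
    r_plus = unit(project_min_block(r, lam))
    return g, dot(g, r), dot(g, r_plus), dot(r, r_plus)


g, c_gr, c_pp, cos_r = cosines([1.0, 0.8, 0.4, 0.4])       # n_d = 2
print(c_gr, c_pp, c_gr / cos_r)                             # last two agree
_, c_gr_opt, c_pp_opt, _ = cosines([1.0, 0.5, 0.5, 0.5])    # m^(4)_{1,3}(0.5)
print(c_gr_opt, c_pp_opt)                                   # C_GR > -1, C++_GR = -1
_, c_gr_pd, c_pp_pd, cos_pd = cosines([1.0] * 4)            # identity p^(4)_4
print(c_gr_pd, c_pp_pd, cos_pd)                             # -1/(d-1), 0, 0
```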

Using \(C^{(++)}_{GF}\) and \(C^{(++)}_{GR}\), we now discuss an example of a local trade-off between information and disturbance. When a measurement \(\varvec{\lambda }_m\) is modified by \(\varvec{\varepsilon }_m\) as in Eq. (18), G(m), F(m), and R(m) change, respectively, as follows:

As an example, \(\varvec{\varepsilon }_m\) is set to \(\varepsilon \varvec{g}^{(+)}_m\) with a positive infinitesimal \(\varepsilon \) to increase G(m) as far as possible, as shown in Fig. 3b. In this case, G(m) increases by

However, F(m) decreases as

where

is \(\Delta F(m)\) when F(m) is increased as far as possible by \(\varvec{\varepsilon }_m=\varepsilon \varvec{f}^{(+)}_m\). Similarly, R(m) decreases as

from Eq. (43), where

is \(\Delta R(m)\) when R(m) is increased as far as possible by \(\varvec{\varepsilon }_m=\varepsilon \varvec{r}^{(+)}_m\) (not by \(\varvec{\varepsilon }_m=\varepsilon \varvec{r}_m\)). Note that \(\varvec{\varepsilon }_m=\varepsilon \varvec{r}_m\) is forbidden under the condition of Eq. (21). Equation (48) shows a local trade-off between G(m) and F(m), and Eq. (50) shows that between G(m) and R(m).
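Assuming, as Eqs. (44)–(46) suggest, that the first-order changes are \(\Delta G(m)=\varvec{\varepsilon }_m\cdot \varvec{\nabla } G(m)\) and likewise for F(m) and R(m), the modification \(\varvec{\varepsilon }_m=\varepsilon \varvec{g}^{(+)}_m\) should give \(\Delta G(m)\approx \varepsilon \Vert \varvec{\nabla } G(m)\Vert \), \(\Delta F(m)\approx \varepsilon \Vert \varvec{\nabla } F(m)\Vert C^{(++)}_{GF}\), and \(\Delta R(m)\approx \varepsilon \Vert \varvec{\nabla } R(m)\Vert C^{(++)}_{GR}\). The sketch below checks these against finite differences, using the closed forms for G(m), F(m), and R(m) assumed in its comments (our reading of Ref. [19]), for a nondegenerate measurement, where \(\varvec{g}^{(+)}_m=\varvec{g}_m\), \(\varvec{f}^{(+)}_m=\varvec{f}_m\), and \(\varvec{r}^{(+)}_m=\varvec{r}_m\).

```python
import math

# ASSUMED closed forms for Eqs. (11)-(13) (our reading of Ref. [19]; verify):
#   G = (1 + lam_1^2/s2)/(d+1), F = (1 + tau^2/s2)/(d+1), R = d*lam_d^2/s2.


def s2(lam):
    return sum(x * x for x in lam)


def G(lam):
    return (1 + lam[0] ** 2 / s2(lam)) / (len(lam) + 1)


def F(lam):
    return (1 + sum(lam) ** 2 / s2(lam)) / (len(lam) + 1)


def R(lam):
    return len(lam) * lam[-1] ** 2 / s2(lam)


def grad_G(lam):
    d, l1, q = len(lam), lam[0], s2(lam)
    return [2 * l1 / ((d + 1) * q ** 2) * ((q if i == 0 else 0.0) - l1 * x)
            for i, x in enumerate(lam)]


def grad_F(lam):
    d, tau, q = len(lam), sum(lam), s2(lam)
    return [2 * tau / ((d + 1) * q ** 2) * (q - tau * x) for x in lam]


def grad_R(lam):
    d, ld, q = len(lam), lam[-1], s2(lam)
    return [2 * d * ld / q ** 2 * ((q if i == d - 1 else 0.0) - ld * x)
            for i, x in enumerate(lam)]


def norm(v):
    return math.sqrt(sum(x * x for x in v))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


lam = [0.9, 0.6, 0.4, 0.2]        # n_1 = n_d = 1, n_0 = 0, so g+ = g and r+ = r
eps = 1e-6
gG, gF, gR = grad_G(lam), grad_F(lam), grad_R(lam)
g = [x / norm(gG) for x in gG]                     # steepest-ascent direction of G
c_gf = dot(g, gF) / norm(gF)                       # C++_GF
c_gr = dot(g, gR) / norm(gR)                       # C++_GR
moved = [a + eps * b for a, b in zip(lam, g)]      # lambda'_m = lambda_m + eps * g

ratios = [
    (G(moved) - G(lam)) / (eps * norm(gG)),
    (F(moved) - F(lam)) / (eps * norm(gF) * c_gf),
    (R(moved) - R(lam)) / (eps * norm(gR) * c_gr),
]
print(ratios)        # each ratio should be close to 1
print(c_gf, c_gr)    # both negative: F and R fall while G rises
```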

These trade-offs, described by \(C^{(++)}_{GF}\) and \(C^{(++)}_{GR}\), are special cases obtained for \(\varvec{\varepsilon }_m=\varepsilon \varvec{g}^{(+)}_m\). To describe the entire local trade-off, the angles between the other steepest directions, such as \(C^{(--)}_{GF}=\varvec{g}^{(-)}_m\cdot \varvec{f}^{(-)}_m\), are also needed, as will be shown in the next section. The remaining angles are summarized in Appendix B.

5 Correlation

To describe the entire local trade-off, we consider the correlation between the information and disturbance changes. The two changes are plotted on an information–disturbance plane for various measurement modifications. The points plotted are distributed in a region characterized by four different angles between the steepest directions of the information and disturbance.

For G(m) versus F(m), when a measurement \(\varvec{\lambda }_m\) is modified by \(\varvec{\varepsilon }_m\), the changes of the information and the disturbance are given by Eqs. (44) and (45), respectively. They are normalized as

to make it easier to compare different measurements.

Correlation between changes in G(m) and F(m). For six different measurements of \(d=4\), the normalized changes \(\Delta g_m\) and \(\Delta f_m\) are plotted using 250 random \(\varvec{\varepsilon }_m\). The ellipse \(\Sigma _{GF}\), characterized by \(C_{GF}\), encloses the region that would be obtained if the \(\varvec{\varepsilon }_m\) were unconstrained, whereas the boundary \(\Gamma _{GF}\) encloses the region obtained when the \(\varvec{\varepsilon }_m\) are constrained by Eqs. (19) and (20). The points \(G^\pm \) and \(F^\pm \) are given by \(\varvec{\varepsilon }_m=\varepsilon \varvec{g}^{(\pm )}_m\) and \(\varvec{\varepsilon }_m=\varepsilon \varvec{f}^{(\pm )}_m\), respectively

For various \(\varvec{\varepsilon }_m\), \(\Delta g_m\) and \(\Delta f_m\) are plotted on a plane. The modification \(\varvec{\varepsilon }_m\) should satisfy the conditions of Eqs. (19) and (20). However, there is no need to impose the condition of Eq. (21) on \(\varvec{\varepsilon }_m\) for the case of G(m) versus F(m). This is because as long as R(m) is not used, the inequality \(\lambda _{mi}\ge \lambda _{md}\) in Eq. (10) can also be ignored by rearranging \(\varvec{\lambda }'_m\). Figure 5 shows the plotted graphs for six measurements of \(d=4\). The points were generated by 250 random \(\varvec{\varepsilon }_m\) normalized as \(\left\| \varvec{\varepsilon }_m \right\| =0.01\).

If \(n_1=1\) and \(n_0=0\), the measurement \(\varvec{\lambda }_m\) is away from the relevant boundaries \(\lambda _{m1}=\lambda _{mi}\) and \(\lambda _{md}=0\), like the measurement A in Fig. 1. Therefore, it can accept any \(\varvec{\varepsilon }_m\) without constraint from Eqs. (19) and (20). Figure 5a shows this case. The plotted points lie inside the ellipse \(\Sigma _{GF}\) generated by

where \(0 \le \phi < 2\pi \). The ellipse \(\Sigma _{GF}\) is described by

with an angle of \(-45^\circ \). The shape of \(\Sigma _{GF}\) is characterized by \(C_{GF}\), circular when \(C_{GF}=0\), linear (with slope \(-1\)) when \(C_{GF}=-1\) (Fig. 5b), and elliptical (as described above) otherwise.
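The shape of \(\Sigma _{GF}\) follows from elementary geometry. Assuming that, to first order, the normalized changes are \(\Delta g_m=\hat{\varvec{\varepsilon }}_m\cdot \varvec{g}_m\) and \(\Delta f_m=\hat{\varvec{\varepsilon }}_m\cdot \varvec{f}_m\) with \(\hat{\varvec{\varepsilon }}_m=\varvec{\varepsilon }_m/\varepsilon \) (our assumption about the normalization used above), letting \(\hat{\varvec{\varepsilon }}_m\) sweep the unit circle in the plane spanned by \(\varvec{g}_m\) and \(\varvec{f}_m\) traces the curve \(x^2-2Cxy+y^2=1-C^2\) with \(C=C_{GF}\). Its semi-axes are \(\sqrt{1-C}\) along the \(-45^\circ \) direction and \(\sqrt{1+C}\) along \(+45^\circ \), giving a circle for \(C=0\) and a segment of slope \(-1\) for \(C=-1\). The sketch checks this for \(C=-0.6\), the value quoted for Fig. 5a; `ellipse_points` is a hypothetical helper.

```python
import math


def ellipse_points(C, n=3600):
    """Points (dg, df) for unit eps in span{g, f}, where g.f = C and |C| < 1.

    With e2 = (f - C g)/sqrt(1 - C^2), a unit vector orthogonal to g,
    eps_hat = cos(phi) g + sin(phi) e2 gives
    eps_hat.g = cos(phi) and eps_hat.f = C cos(phi) + sqrt(1 - C^2) sin(phi).
    """
    s = math.sqrt(1 - C * C)
    pts = []
    for k in range(n):
        phi = 2 * math.pi * k / n
        pts.append((math.cos(phi), C * math.cos(phi) + s * math.sin(phi)))
    return pts


C = -0.6
pts = ellipse_points(C)
residual = max(abs(x * x - 2 * C * x * y + y * y - (1 - C * C)) for x, y in pts)
far = max(pts, key=lambda p: p[0] ** 2 + p[1] ** 2)
near = min(pts, key=lambda p: p[0] ** 2 + p[1] ** 2)
print(residual)                                  # zero up to rounding
print(math.hypot(*far), math.hypot(*near))       # semi-axes sqrt(1.6), sqrt(0.4)
print(far)                                       # lies on y = -x (tilt of -45 degrees)
```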

The points \(G^\pm \) and \(F^\pm \) correspond to \(\varvec{\varepsilon }_m=\varepsilon \varvec{g}^{(\pm )}_m\) and \(\varvec{\varepsilon }_m=\varepsilon \varvec{f}^{(\pm )}_m\), respectively. Their coordinates are given by

where \(C^{(-+)}_{GF}=\varvec{g}^{(-)}_m\cdot \varvec{f}^{(+)}_m\ge 0\) and \(C^{(+-)}_{GF}=\varvec{g}^{(+)}_m\cdot \varvec{f}^{(-)}_m\ge 0\) are given by Eqs. (87) and (88) in Appendix B. Note that by Eq. (35), \(\varvec{g}^{(+)}_m\cdot \varvec{g}_m=0\) when \(n_0=d-1\) and by Eq. (36), \(\varvec{f}^{(+)}_m\cdot \varvec{f}_m =0\) when \(n_1=d\). The point \(G^+\) is the case discussed in the preceding section.

The tilted \(\Sigma _{GF}\) indicates that \(\Delta g_m\) and \(\Delta f_m\) are negatively correlated. When \(n_1=1\) and \(n_0=0\), \(C_{GF}\) can be related to the Pearson correlation coefficient using isotropic modifications. That is, let \(\varvec{\varepsilon }^{(n)}_m\) be a modification normalized to \(\varepsilon \) for \(n=1,2,\ldots ,N_p\). They are assumed to be isotropic as

where \(\varepsilon ^{(n)}_{mi}\) is the ith component of \(\varvec{\varepsilon }^{(n)}_m\). When the points are generated using \(\{\varvec{\varepsilon }^{(n)}_m\}\), their correlation coefficient is equal to \(C_{GF}\). The perfect negative correlation \(C_{GF}=-1\) is achieved by the optimal measurements \(\varvec{\lambda }_m=\varvec{m}^{(d)}_{1,d-1}(\lambda )\), as shown in Fig. 5b. Conversely, the non-correlated case \(C_{GF}=0\) cannot be achieved when \(n_1=1\) and \(n_0=0\).
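The statement about the Pearson coefficient is easy to check by simulation. As a minimal sketch, assuming \(G(m)=[1+\lambda _{m1}^2/\sigma _m^2]/(d+1)\) and \(F(m)=[1+\tau _m^2/\sigma _m^2]/(d+1)\) (our reading of Ref. [19]) and first-order changes \(\Delta g_m=\hat{\varvec{\varepsilon }}_m\cdot \varvec{g}_m\), \(\Delta f_m=\hat{\varvec{\varepsilon }}_m\cdot \varvec{f}_m\), the code draws isotropic unit modifications (normalized Gaussian vectors, one convenient way to satisfy the isotropy assumption, not necessarily the one used for the figures) for a measurement with \(n_1=1\) and \(n_0=0\), and compares the sample correlation with \(C_{GF}\).

```python
import math
import random

# ASSUMED closed forms (our reading of Ref. [19]; verify against Eqs. (11)-(12)):
#   G = (1 + lam_1^2/s2)/(d+1),  F = (1 + tau^2/s2)/(d+1),  s2 = sum(lam_i^2).


def s2(lam):
    return sum(x * x for x in lam)


def grad_G(lam):
    d, l1, q = len(lam), lam[0], s2(lam)
    return [2 * l1 / ((d + 1) * q ** 2) * ((q if i == 0 else 0.0) - l1 * x)
            for i, x in enumerate(lam)]


def grad_F(lam):
    d, tau, q = len(lam), sum(lam), s2(lam)
    return [2 * tau / ((d + 1) * q ** 2) * (q - tau * x) for x in lam]


def unit(v, tol=1e-12):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n > tol else [0.0] * len(v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def pearson(xs, ys):
    """Sample Pearson correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


lam = [0.9, 0.6, 0.4, 0.2]                  # n_1 = 1 and n_0 = 0
g, f = unit(grad_G(lam)), unit(grad_F(lam))
C = dot(g, f)                               # C_GF

rng = random.Random(1)
xs, ys = [], []
for _ in range(20000):
    e_hat = unit([rng.gauss(0.0, 1.0) for _ in lam])   # isotropic unit modification
    xs.append(dot(e_hat, g))                # first-order normalized change in G
    ys.append(dot(e_hat, f))                # first-order normalized change in F

r = pearson(xs, ys)
print(round(C, 3), round(r, 3))             # the two should nearly agree
```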

In contrast, if \(n_1>1\) or \(n_0>0\), the measurement \(\varvec{\lambda }_m\) lies on the relevant boundary \(\lambda _{m1}=\lambda _{mi}\) or \(\lambda _{md}=0\), like measurement B in Fig. 1. Some \(\varvec{\varepsilon }_m\)’s are then prohibited by Eqs. (19) and (20). Therefore, the plotted points are distributed only in a subregion of the region enclosed by \(\Sigma _{GF}\). This case is shown in Fig. 5c for \(n_1=2\), Fig. 5d for \(n_0=1\), and Fig. 5e for \(n_1=2\) and \(n_0=1\). Although \(G^+\) and \(F^+\) are always on \(\Sigma _{GF}\), \(G^-\) is not on \(\Sigma _{GF}\) if \(n_1>1\), and \(F^-\) is not on \(\Sigma _{GF}\) if \(n_0>0\).
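The restriction to a subregion can be illustrated with rejection sampling. The sketch below uses hypothetical helper names, the closed forms for G(m) and F(m) assumed in its comments (our reading of Ref. [19]), and first-order normalized changes \(\Delta g_m=\hat{\varvec{\varepsilon }}_m\cdot \varvec{g}_m\), \(\Delta f_m=\hat{\varvec{\varepsilon }}_m\cdot \varvec{f}_m\). It draws 250 random modifications with \(\Vert \varvec{\varepsilon }_m\Vert =0.01\) for a measurement with \(n_1=2\) and \(n_0=0\), keeps only those satisfying Eq. (20), and confirms that every retained point lies inside \(\Sigma _{GF}\), i.e., satisfies \(x^2-2C_{GF}xy+y^2\le 1-C_{GF}^2\). Because \(-\varvec{g}_m\) violates Eq. (20) here, the point of \(\Sigma _{GF}\) reached by \(-\varvec{g}_m\) is excluded.

```python
import math
import random

# ASSUMED closed forms (our reading of Ref. [19]; verify against Eqs. (11)-(12)):
#   G = (1 + lam_1^2/s2)/(d+1),  F = (1 + tau^2/s2)/(d+1),  s2 = sum(lam_i^2).


def s2(lam):
    return sum(x * x for x in lam)


def grad_G(lam):
    d, l1, q = len(lam), lam[0], s2(lam)
    return [2 * l1 / ((d + 1) * q ** 2) * ((q if i == 0 else 0.0) - l1 * x)
            for i, x in enumerate(lam)]


def grad_F(lam):
    d, tau, q = len(lam), sum(lam), s2(lam)
    return [2 * tau / ((d + 1) * q ** 2) * (q - tau * x) for x in lam]


def unit(v, tol=1e-12):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n > tol else [0.0] * len(v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


lam = [1.0, 1.0, 0.5, 0.2]                  # n_1 = 2 and n_0 = 0 (Eq. (20) active)
g, f = unit(grad_G(lam)), unit(grad_F(lam))
C = dot(g, f)                               # C_GF, the ellipse parameter

rng = random.Random(2)
points = []
while len(points) < 250:
    e_hat = unit([rng.gauss(0.0, 1.0) for _ in lam])
    eps = [0.01 * x for x in e_hat]         # ||eps_m|| = 0.01
    if eps[0] - eps[1] < 0:                 # Eq. (20) with lam_m1 = lam_m2
        continue                            # rejected: would break the ordering
    points.append((dot(e_hat, g), dot(e_hat, f)))

inside = all(x * x - 2 * C * x * y + y * y <= 1 - C * C + 1e-12 for x, y in points)
print(len(points), inside, round(C, 3))
```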

The boundary \(\Gamma _{GF}\) of the subregion consists of four curves connecting the four points \(G^+\), \(F^+\), \(G^-\), and \(F^-\). These curves are generated by

where \(0 \le \varphi < \pi /2\). The equations of these curves are provided in Appendix C. They are elliptical arcs characterized by \(C^{(++)}_{GF}\), \(-C^{(-+)}_{GF}\), \(C^{(--)}_{GF}\), and \(-C^{(+-)}_{GF}\), where \(C^{(--)}_{GF}=\varvec{g}^{(-)}_m\cdot \varvec{f}^{(-)}_m\le 0\) is given by Eq. (89) in Appendix B.

Therefore, the correlation between \(\Delta g_m\) and \(\Delta f_m\) can be represented by the four coefficients \(\{C^{(++)}_{GF},-C^{(-+)}_{GF},C^{(--)}_{GF},-C^{(+-)}_{GF}\}\). If \(n_1=1\) and \(n_0=0\), the four coefficients are equal; for example, they are \(\{-0.60,-0.60,-0.60,-0.60\}\) in Fig. 5a and \(\{-1,-1,-1,-1\}\) in Fig. 5b. Otherwise, the four coefficients differ. In Fig. 5c, they are \(\{-0.26,-0.79,-0.79,-0.26\}\), which indicates that \(\Gamma _{GF}\) is flatter to the left of the line between \(F^+\) and \(F^-\) than to the right. In Fig. 5d, they are \(\{-0.37,-0.37,-0.87,-0.87\}\), which indicates that \(\Gamma _{GF}\) is flatter below the line between \(G^+\) and \(G^-\) than above it. In Fig. 5e, \(\Gamma _{GF}\) is linear between \(G^-\) and \(F^-\), as indicated by \(\{-0.13,-0.43,-1,-0.32\}\).
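Given the steepest directions, the four coefficients are plain dot products. The sketch below illustrates this under stated assumptions and is not the construction of Appendix B: it uses the closed forms for G(m) and F(m) assumed in its comments (our reading of Ref. [19]), takes \(\varvec{g}^{(+)}_m=\varvec{g}_m\) and \(\varvec{f}^{(+)}_m=\varvec{f}_m\), and obtains \(\varvec{g}^{(-)}_m\) and \(\varvec{f}^{(-)}_m\) by projecting \(-\varvec{\nabla } G(m)\) and \(-\varvec{\nabla } F(m)\) onto the directions allowed by Eqs. (19) and (20), which here means averaging the components tied with \(\lambda _{m1}\) and clipping negative components at zero singular values. For the hypothetical test vectors it gives four equal values when \(n_1=1\) and \(n_0=0\), the pattern \(\{a,b,b,a\}\) when only \(n_1>1\), and \(\{a,a,b,b\}\) when only \(n_0>0\), the same patterns as the values quoted for Figs. 5c and 5d.

```python
import math

# ASSUMED closed forms (our reading of Ref. [19]; verify against Eqs. (11)-(12)):
#   G = (1 + lam_1^2/s2)/(d+1),  F = (1 + tau^2/s2)/(d+1),  s2 = sum(lam_i^2).


def s2(lam):
    return sum(x * x for x in lam)


def grad_G(lam):
    d, l1, q = len(lam), lam[0], s2(lam)
    return [2 * l1 / ((d + 1) * q ** 2) * ((q if i == 0 else 0.0) - l1 * x)
            for i, x in enumerate(lam)]


def grad_F(lam):
    d, tau, q = len(lam), sum(lam), s2(lam)
    return [2 * tau / ((d + 1) * q ** 2) * (q - tau * x) for x in lam]


def unit(v, tol=1e-12):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n > tol else [0.0] * len(v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def g_minus(lam, tol=1e-12):
    """-grad G projected so that entries tied with lam_1 move together (Eq. (20))."""
    v = [-x for x in grad_G(lam)]
    top = [i for i, x in enumerate(lam) if abs(x - lam[0]) <= tol]
    mean = sum(v[i] for i in top) / len(top)
    return unit([mean if i in top else x for i, x in enumerate(v)])


def f_minus(lam, tol=1e-12):
    """-grad F with negative components at zero singular values removed (Eq. (19))."""
    v = [-x for x in grad_F(lam)]
    return unit([max(x, 0.0) if abs(lam_i) <= tol else x for lam_i, x in zip(lam, v)])


def coefficients(lam):
    """{C++, -C-+, C--, -C+-} for G(m) versus F(m)."""
    g, f = unit(grad_G(lam)), unit(grad_F(lam))
    gm, fm = g_minus(lam), f_minus(lam)
    return [dot(g, f), -dot(gm, f), dot(gm, fm), -dot(g, fm)]


for lam in ([0.9, 0.6, 0.4, 0.2], [1.0, 1.0, 0.5, 0.2], [1.0, 0.6, 0.3, 0.0]):
    print(lam, [round(c, 3) for c in coefficients(lam)])
```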

Unfortunately, the case of \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{r}\) is anomalous in the sense that some of \(C^{(\pm \pm )}_{GF}\) fail to characterize \(\Gamma _{GF}\). If \(r\ne 1\) and \(r\ne d\), \(\Sigma _{GF}\) is a circle from \(C_{GF}=0\). However, \(\Gamma _{GF}\) is its first-quadrant quarter, as shown in Fig. 5f, although the coefficients are \(\{0,0,0,0\}\). If \(r=1\) or \(r=d\), those \(\Sigma _{GF}\) and \(\Gamma _{GF}\) collapse to lines although \(C_{GF}=0\). The lines are vertical if \(r=1\) and horizontal if \(r=d\). The anomalous case occurs because some of the steepest directions are zero vectors, as explained in Appendix C.

Correlation between changes in G(m) and R(m). For six different measurements of \(d=4\), the normalized changes \(\Delta g_m\) and \(\Delta r_m\) are plotted using 250 random \(\varvec{\varepsilon }_m\). The ellipse \(\Sigma _{GR}\), characterized by \(C_{GR}\), encloses the region that would be obtained if the \(\varvec{\varepsilon }_m\) were unconstrained, whereas the boundary \(\Gamma _{GR}\) encloses the region obtained when the \(\varvec{\varepsilon }_m\) are constrained by Eqs. (19)–(21). The points \(G^\pm \) and \(R^\pm \) are given by \(\varvec{\varepsilon }_m=\varepsilon \varvec{g}^{(\pm )}_m\) and \(\varvec{\varepsilon }_m=\varepsilon \varvec{r}^{(\pm )}_m\), respectively

Similarly, for G(m) versus R(m), the change of R(m) in Eq. (46) is normalized as

For various \(\varvec{\varepsilon }_m\), \(\Delta g_m\) and \(\Delta r_m\) are plotted on a plane. The modification \(\varvec{\varepsilon }_m\) should satisfy the conditions of Eqs. (19)–(21). Figure 6 shows the plotted graphs for six measurements of \(d=4\). The points were generated similarly to the case of G(m) versus F(m).

If \(n_1=n_d=1\) and \(n_0=0\), the measurement \(\varvec{\lambda }_m\) is away from the relevant boundaries \(\lambda _{m1}=\lambda _{mi}\), \(\lambda _{mi}=\lambda _{md}\), and \(\lambda _{md}=0\). It can accept any \(\varvec{\varepsilon }_m\) without constraint from Eqs. (19)–(21). Figure 6a shows this case. The plotted points lie inside the ellipse \(\Sigma _{GR}\) described by

with an angle of \(-45^\circ \). The shape of \(\Sigma _{GR}\) is characterized by \(C_{GR}\), although \(\Sigma _{GR}\) cannot be linear because \(C_{GR}>-1\). The points \(G^\pm \) and \(R^\pm \), corresponding to \(\varvec{\varepsilon }_m=\varepsilon \varvec{g}^{(\pm )}_m\) and \(\varvec{\varepsilon }_m=\varepsilon \varvec{r}^{(\pm )}_m\), are given by

where \(C^{(+-)}_{GR}=\varvec{g}^{(+)}_m\cdot \varvec{r}^{(-)}_m\ge 0\) and \(C^{(--)}_{GR}=\varvec{g}^{(-)}_m\cdot \varvec{r}^{(-)}_m\le 0\) are given by Eqs. (92) and (93) in Appendix B.

In contrast, unless \(n_1=n_d=1\) and \(n_0=0\), the measurement \(\varvec{\lambda }_m\) lies on the relevant boundaries. Because the \(\varvec{\varepsilon }_m\)’s are constrained by Eqs. (19)–(21), the plotted points are distributed only in a subregion of the region enclosed by \(\Sigma _{GR}\). This case is shown in Fig. 6b for \(n_d=2\), Fig. 6c for \(n_1=n_d=2\), and Fig. 6d for an optimal measurement with \(n_d=3\). Although \(G^+\) is always on \(\Sigma _{GR}\), \(R^+\) is not on \(\Sigma _{GR}\) if \(n_d>1\), \(G^-\) is not on \(\Sigma _{GR}\) if \(n_1>1\), and \(R^-\) is not on \(\Sigma _{GR}\) if \(n_0>0\). The boundary \(\Gamma _{GR}\) of the subregion consists of four elliptical arcs connecting the four points \(G^+\), \(R^+\), \(G^-\), and \(R^-\), as described in Appendix C. The arcs are characterized by \(C^{(++)}_{GR}\), \(-C^{(-+)}_{GR}\), \(C^{(--)}_{GR}\), and \(-C^{(+-)}_{GR}\), where \(C^{(-+)}_{GR}=\varvec{g}^{(-)}_m\cdot \varvec{r}^{(+)}_m\ge 0\) is given by Eq. (91) in Appendix B.

The correlation between \(\Delta g_m\) and \(\Delta r_m\) can be represented by the four coefficients \(\{C^{(++)}_{GR},-C^{(-+)}_{GR},C^{(--)}_{GR},-C^{(+-)}_{GR}\}\). If \(n_1=n_d=1\) and \(n_0=0\), the four coefficients are equal; for example, they are \(\{-0.17,-0.17,-0.17,-0.17\}\) in Fig. 6a. Otherwise, they are not all equal. In Fig. 6b, they are \(\{-0.58,-0.58,-0.38,-0.38\}\), which indicates that \(\Gamma _{GR}\) is flatter above the line between \(G^+\) and \(G^-\) than below it. In Fig. 6c, \(R^+\) coincides with \(G^-\), as indicated by \(\{-0.41,-1,-0.67,-0.27\}\). In Fig. 6d, for an optimal measurement, \(\Gamma _{GR}\) is linear between \(G^+\) and \(R^+\), as indicated by \(\{-1,-1,-0.47,-0.47\}\).

The cases of \(\lambda _{md}=0\) and \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{d}\) are anomalous, as explained in Appendix C. In the case of \(\lambda _{md}=0\), if \(\varvec{\lambda }_m\ne \varvec{p}^{(d)}_{1}\), \(\Sigma _{GR}\) is a circle because \(C_{GR}=0\), but if \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{1}\), it collapses to a vertical line. If \(\varvec{\lambda }_m\ne \varvec{p}^{(d)}_{r}\), \(\Gamma _{GR}\) consists of untilted elliptical arcs, given by Eq. (98) in the first quadrant and Eq. (99) in the second quadrant, and of horizontal lines in the other quadrants (Fig. 6e), even though all four coefficients are 0. Moreover, \(\Gamma _{GR}\) collapses to a vertical line in the second quadrant if \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{r}\), and likewise in the first quadrant if \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{1}\). In contrast, in the case of \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{d}\), \(\Gamma _{GR}\) bounds an elliptical sector of \(\Sigma _{GR}\), as shown in Fig. 6f, even though the coefficients are \(\{0,0,0,-1/(d-1)\}\).

Fig. 7 Allowed regions of (a) G(m) versus F(m) and (b) G(m) versus R(m). The point \(P_r\) denotes \(\varvec{p}^{(d)}_{r}\), and the line (k, l) denotes \(\varvec{m}^{(d)}_{k,l}(\lambda )\). The six points a to f in panel (a) correspond to the measurements in Fig. 5, and those in panel (b) correspond to the measurements in Fig. 6. The lines \(E_f\) and \(E_r\) show the measurement transitions displayed in Fig. 8 of Sect. 6.

The local relations shown in Figs. 5 and 6 are consistent with the global ones given by the allowed regions of information versus disturbance. Figure 7a shows the allowed region of G(m) versus F(m) [25]. Figure 5f reproduces the neighborhood of point \(P_2\). Moreover, Fig. 5b reproduces the upper boundary (1, 3) around point b, except for its lower neighborhood, which stems from the higher-order terms in \(\varvec{\varepsilon }_m\), and Fig. 5e reproduces the lower boundary (2, 1) around point e. The slopes \(\Delta f_m/\Delta g_m=-1\) in Fig. 5b and \(\Delta f_m/\Delta g_m=-\cos \theta _f/\cos \theta _g\) in Fig. 5e agree with the boundary slope dF(m)/dG(m) in Ref. [26] via Eqs. (73) and (74) in Appendix A. Similarly, as seen from Fig. 7b, which shows the allowed region of G(m) versus R(m) [25], panels d, e, and f of Fig. 6 reproduce the upper boundary (1, 3) around point d, the lower boundary \(R(m)=0\) around point e, and the neighborhood of point \(P_4\), respectively. However, when \(\lambda _{md}=0\), as in Fig. 6e, a nonzero \(\Delta r_m\) does not imply a first-order change \(\Delta R(m)\), because \(\left\| \varvec{\nabla } R(m) \right\| =0\) by Eq. (75) in Appendix A.

6 Improvability

Finally, we exploit the imperfection of the correlation to improve the measurement. Using a general scheme, we modify the measurement so that it extracts more information while causing less disturbance. The improvability of the measurement is quantified by the angle between the steepest-ascent direction of the information G(m) and that of F(m) or R(m).

To improve a measurement \(\varvec{\lambda }_m\), the modification \(\varvec{\varepsilon }_m\) should be chosen such that \(\Delta G(m)>0\) and, at the same time, \(\Delta F(m)>0\) or \(\Delta R(m)>0\); because F(m) and R(m) grow as the disturbance shrinks, this means more information with less disturbance. The condition for \(\varvec{\varepsilon }_m\) is illustrated in Fig. 3c. The line \(S_I\) orthogonal to \(\varvec{g}^{(+)}_m\) represents the surface on which G(m) is constant, whereas the line \(S_D\) orthogonal to \(\varvec{f}^{(+)}_m\) or \(\varvec{r}^{(+)}_m\) represents the surface on which F(m) or R(m) is constant. If \(\varvec{\varepsilon }_m\) points into the gray region, the measurement is improved. Such an \(\varvec{\varepsilon }_m\) always exists unless \(\varvec{g}^{(+)}_m\) is antiparallel to \(\varvec{f}^{(+)}_m\) or \(\varvec{r}^{(+)}_m\).

For G(m) versus F(m), the best choice of \(\varvec{\varepsilon }_m\) is

as shown in Fig. 3c. This increases both G(m) and F(m) symmetrically,

from Eqs. (47) and (49). Thus, the improvability of the measurement can be defined by \(1+C^{(++)}_{GF}\). The optimal measurements \(\varvec{\lambda }_m=\varvec{m}^{(d)}_{1,d-1}(\lambda )\) are not improvable because \(C^{(++)}_{GF}=-1\), i.e., \(\varvec{g}^{(+)}_m\) is antiparallel to \(\varvec{f}^{(+)}_m\). Indeed, when \(C^{(++)}_{GF}=-1\), no modification \(\varvec{\varepsilon }_m\) achieves both \(\Delta G(m)>0\) and \(\Delta F(m)>0\), as shown in Fig. 5b.
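The quantities in this paragraph are straightforward to evaluate. The Python sketch below is illustrative and assumes \(G(m)=(\sigma _m^2+\lambda _{m1}^2)/[(d+1)\sigma _m^2]\) and \(F(m)=(\sigma _m^2+\tau _m^2)/[(d+1)\sigma _m^2]\), with \(\sigma _m^2=\sum _i\lambda _{mi}^2\) and \(\tau _m=\sum _i\lambda _{mi}\), as the forms of Eqs. (11) and (12). It also reads Eq. (63) as the unit bisector \((\varvec{g}^{(+)}_m+\varvec{f}^{(+)}_m)/\Vert \varvec{g}^{(+)}_m+\varvec{f}^{(+)}_m\Vert \), which raises both normalized changes by the same amount \(\sqrt{(1+C^{(++)}_{GF})/2}\); this reading is our assumption. Following Appendix A, \(\varvec{g}^{(+)}_m=\varvec{g}_m\) and \(\varvec{f}^{(+)}_m=\varvec{f}_m\).

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def unit(v):
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]


def g_unit(lam):
    """Unit gradient of the assumed G(m) = (sigma^2 + lam_1^2) / ((d + 1) sigma^2)."""
    s2, l1 = dot(lam, lam), lam[0]
    return unit([l1 * (s2 - l1 * l1)] + [-l1 * l1 * x for x in lam[1:]])


def f_unit(lam):
    """Unit gradient of the assumed F(m) = (sigma^2 + tau^2) / ((d + 1) sigma^2)."""
    s2, tau = dot(lam, lam), sum(lam)
    return unit([s2 - tau * x for x in lam])


def improvability_gf(lam):
    """Return 1 + C_GF^(++), g_m, f_m, and the unit bisector (None if not improvable)."""
    g, f = g_unit(lam), f_unit(lam)  # g^(+) = g_m and f^(+) = f_m (Appendix A)
    imp = 1.0 + dot(g, f)
    e = unit([x + y for x, y in zip(g, f)]) if imp > 1e-12 else None
    return imp, g, f, e


imp, g, f, e = improvability_gf([0.8, 0.7, 0.4, 0.0])
print(f"1 + C_GF^(++) = {imp:.3f}")
print(f"g.e = {dot(g, e):.4f}, f.e = {dot(f, e):.4f}, "
      f"sqrt((1 + C)/2) = {math.sqrt(imp / 2):.4f}")
```

For \(\varvec{\lambda }_m=(0.8,0.7,0.4,0)\), the measurement used in the example below, this sketch gives an improvability of about 0.77. The bisector is undefined exactly when \(C^{(++)}_{GF}=-1\), which is the non-improvable case; the function names are hypothetical.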

The improvement can be repeated until the measurement becomes optimal, i.e., until \(C^{(++)}_{GF}=-1\). For example, consider improving the measurement \(\varvec{\lambda }_m=\left( 0.8,0.7,0.4,0\right) \) for \(d=4\) by iterating Eq. (63) with \(\varepsilon =0.05\). Figure 8a shows the transitions of \(\{\lambda _{mi}\}\) and \(1+C^{(++)}_{GF}\) as functions of \(N_m\), where \(N_m\) is the number of modifications. As \(N_m\) increases, \(C^{(++)}_{GF}\) decreases monotonically to \(-1\) and remains constant thereafter. The resulting measurement \(\varvec{\lambda }_m=\left( 0.93,0.39,0.39,0.39\right) \) is optimal. As a result, G(m) increases from 0.30 to 0.33 and F(m) from 0.76 to 0.87. The transitions of G(m) and F(m) are shown by the line \(E_f\) in Fig. 7a.
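A minimal sketch of such an iteration follows, assuming \(G(m)=(\sigma _m^2+\lambda _{m1}^2)/[(d+1)\sigma _m^2]\) and \(F(m)=(\sigma _m^2+\tau _m^2)/[(d+1)\sigma _m^2]\) for Eqs. (11) and (12) and reading Eq. (63) as the unit bisector of \(\varvec{g}_m\) and \(\varvec{f}_m\). Two safeguards are our own additions rather than the procedure used for Fig. 8a: after each step the singular values are re-sorted and clipped at zero, and the step is halved until both G(m) and F(m) actually increase. Because of these choices, the trajectory and end point should be compared with Fig. 8a only qualitatively.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def unit(v):
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]


def G(lam):  # assumed form of Eq. (11); lam sorted in descending order
    s2 = dot(lam, lam)
    return (s2 + lam[0] ** 2) / ((len(lam) + 1) * s2)


def F(lam):  # assumed form of Eq. (12)
    s2 = dot(lam, lam)
    return (s2 + sum(lam) ** 2) / ((len(lam) + 1) * s2)


def g_unit(lam):
    s2, l1 = dot(lam, lam), lam[0]
    return unit([l1 * (s2 - l1 * l1)] + [-l1 * l1 * x for x in lam[1:]])


def f_unit(lam):
    s2, tau = dot(lam, lam), sum(lam)
    return unit([s2 - tau * x for x in lam])


def improve_gf(lam, eps=0.05, tol=1e-6, max_steps=500):
    """Repeat bisector steps; return the final lam and the (G, F) history."""
    lam = sorted(lam, reverse=True)
    history = [(G(lam), F(lam))]
    for _ in range(max_steps):
        g, f = g_unit(lam), f_unit(lam)
        if 1.0 + dot(g, f) < tol:  # g_m and f_m (nearly) antiparallel: optimal
            break
        e = unit([x + y for x, y in zip(g, f)])
        step = eps
        while step > 1e-9:
            trial = sorted((max(x + step * y, 0.0) for x, y in zip(lam, e)), reverse=True)
            if G(trial) > G(lam) and F(trial) > F(lam):
                break
            step /= 2  # our safeguard: shrink until both fidelities increase
        else:
            break  # no improving step of usable size is left
        lam = trial
        history.append((G(lam), F(lam)))
    return lam, history


lam, history = improve_gf([0.8, 0.7, 0.4, 0.0])
print("final lambda:", [round(x, 3) for x in lam])
print(f"G: {history[0][0]:.3f} -> {history[-1][0]:.3f}")
print(f"F: {history[0][1]:.3f} -> {history[-1][1]:.3f}")
```

The function name `improve_gf` and the parameters `tol` and `max_steps` are hypothetical; `eps=0.05` mirrors the step size quoted in the text.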

Similarly, for G(m) versus R(m), the best choice of \(\varvec{\varepsilon }_m\) is

This increases both G(m) and R(m) symmetrically,

from Eqs. (47) and (51). In the case of G(m) versus R(m), the improvability can be defined by \(1+C^{(++)}_{GR}\). As expected, the optimal measurements \(\varvec{\lambda }_m=\varvec{m}^{(d)}_{1,d-1}(\lambda )\) are not improvable because \(C^{(++)}_{GR}=-1\). Indeed, when \(C^{(++)}_{GR}=-1\), no modification \(\varvec{\varepsilon }_m\) achieves both \(\Delta G(m)>0\) and \(\Delta R(m)>0\), as shown in Fig. 6d.

The improvement can be repeated until the measurement becomes optimal, i.e., until \(C^{(++)}_{GR}=-1\). For example, consider improving the same measurement as in the previous example by iterating Eq. (66) with \(\varepsilon =0.01\). Figure 8b shows the transitions of \(\{\lambda _{mi}\}\) and \(1+C^{(++)}_{GR}\) as functions of \(N_m\). As \(N_m\) increases, \(C^{(++)}_{GR}\) decreases monotonically to \(-1\), with a discontinuous drop at each change in \(n_d\). It remains constant once it reaches \(-1\) with \(n_d=d-1\). During the simulation, \(\varvec{\lambda }_m\) was checked against Eq. (10) after each modification. If \(\lambda _{m1}>1\), \(\varvec{\lambda }_m\) was rescaled to \(\lambda _{m1}=1\) by Eq. (15) (for \(N_m\ge 52\)). If \(\lambda _{m(4-n_d)}<\lambda _{m4}\), the last modification was redone with a temporarily reduced \(\varepsilon \) such that \(\lambda _{m(4-n_d)}=\lambda _{m4}\), thereby updating \(n_d\) (at \(N_m=33\) and 55). The resulting measurement \(\varvec{\lambda }_m=\left( 1,0.31,0.31,0.31\right) \) is optimal. As a result, G(m) increases from 0.30 to 0.35 and R(m) from 0 to 0.30. The transitions of G(m) and R(m) are shown by the line \(E_r\) in Fig. 7b.
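The G(m) versus R(m) iteration can be sketched similarly, assuming \(G(m)=(\sigma _m^2+\lambda _{m1}^2)/[(d+1)\sigma _m^2]\) and \(R(m)=d\lambda _{md}^2/\sigma _m^2\) for Eqs. (11) and (13) and reading Eq. (66) as the unit bisector of \(\varvec{g}_m\) and \(\varvec{r}^{(+)}_m\). In this sketch, \(\varvec{r}^{(+)}_m\) is obtained by averaging the gradient direction \(\sigma _m^2\varvec{e}_d-\lambda _{md}\varvec{\lambda }_m\) over the \(n_d\)-fold degenerate minimum (our implementation of the projections in Appendix A; at \(\lambda _{md}=0\) this direction reduces to \(\varvec{e}_d\)). The two bookkeeping rules described above are mimicked: a step that would push \(\lambda _{m(d-n_d)}\) below \(\lambda _{md}\) is shortened so that the two meet, which raises \(n_d\), and \(\varvec{\lambda }_m\) is rescaled to \(\lambda _{m1}=1\) whenever \(\lambda _{m1}>1\). The step-halving guard is again our own addition.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def unit(v):
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]


def G(lam):  # assumed form of Eq. (11); lam sorted in descending order
    s2 = dot(lam, lam)
    return (s2 + lam[0] ** 2) / ((len(lam) + 1) * s2)


def R(lam):  # assumed form of Eq. (13)
    return len(lam) * lam[-1] ** 2 / dot(lam, lam)


def g_unit(lam):
    s2, l1 = dot(lam, lam), lam[0]
    return unit([l1 * (s2 - l1 * l1)] + [-l1 * l1 * x for x in lam[1:]])


def r_plus(lam, nd):
    """Gradient direction of R averaged over the nd-fold minimum, normalized."""
    s2, ld = dot(lam, lam), lam[-1]
    v = [-ld * x for x in lam]
    v[-1] += s2  # sigma^2 e_d - lam_d * lam (the e_d direction when lam_d = 0)
    v[-nd:] = [sum(v[-nd:]) / nd] * nd
    return unit(v)


def improve_gr(lam, eps=0.01, tol=1e-6, max_steps=2000):
    lam = sorted(lam, reverse=True)
    history = [(G(lam), R(lam))]
    for _ in range(max_steps):
        nd = sum(1 for x in lam if x - lam[-1] <= 1e-12)  # degeneracy n_d
        g, r = g_unit(lam), r_plus(lam, nd)
        if 1.0 + dot(g, r) < tol:
            break
        e = unit([x + y for x, y in zip(g, r)])
        k = len(lam) - nd - 1  # index just above the degenerate minimum
        step = eps
        if k >= 0 and e[-1] > e[k]:
            # Shorten the step so that lam_k meets the minimum instead of crossing it.
            step = min(step, (lam[k] - lam[-1]) / (e[-1] - e[k]))
        while step > 1e-12:
            trial = [max(x + step * y, 0.0) for x, y in zip(lam, e)]
            if k >= 0 and abs(trial[k] - trial[-1]) < 1e-12:
                trial[k] = trial[-1]  # merge: n_d grows by one
            trial.sort(reverse=True)
            if trial[0] > 1.0:  # rescale to lam_1 = 1; G and R are unchanged
                top = trial[0]
                trial = [x / top for x in trial]
            if G(trial) > G(lam) and R(trial) > R(lam):
                break
            step /= 2  # our safeguard: shrink until both quantities increase
        else:
            break
        lam = trial
        history.append((G(lam), R(lam)))
    return lam, history


lam, history = improve_gr([0.8, 0.7, 0.4, 0.0])
print("final lambda:", [round(x, 3) for x in lam])
print(f"G: {history[0][0]:.3f} -> {history[-1][0]:.3f}")
print(f"R: {history[0][1]:.3f} -> {history[-1][1]:.3f}")
```

Here `improve_gr`, `r_plus`, and their parameters are hypothetical names; the step-size control differs from the paper's, so the printed end point is meant for qualitative comparison with Fig. 8b.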

The modifications in Eqs. (63) and (66) slightly change the probability of outcome m, \(p(m)=\sigma _m^2/d\), defined in Eq. (6). By Eqs. (84)–(86) in Appendix A, this probability does not change to first order in \(\varvec{\varepsilon }_m\). However, p(m) increases through the second-order term in \(\varvec{\varepsilon }_m\) and decreases when \(\varvec{\lambda }_m\) is rescaled by Eq. (15). In the examples above, p(m) increases from 0.32 to 0.33 in Fig. 8a and changes by less than 0.01 in Fig. 8b.
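The quoted values can be recomputed from the rounded singular values. This sketch assumes \(p(m)=\sigma _m^2/d\) from Eq. (6) together with the forms of G(m), F(m), and R(m) given in the code comments (our assumed versions of Eqs. (11)–(13)). Because the singular values in the text are rounded to two decimals, agreement within about 0.01 is all that should be expected.

```python
def summary(lam):
    """p(m), G(m), F(m), R(m) for singular values sorted in descending order.

    Assumed forms: p = sigma^2 / d, G = (sigma^2 + lam_1^2) / ((d + 1) sigma^2),
    F = (sigma^2 + tau^2) / ((d + 1) sigma^2), R = d lam_d^2 / sigma^2.
    """
    d = len(lam)
    s2 = sum(x * x for x in lam)
    return {
        "p": s2 / d,
        "G": (s2 + lam[0] ** 2) / ((d + 1) * s2),
        "F": (s2 + sum(lam) ** 2) / ((d + 1) * s2),
        "R": d * lam[-1] ** 2 / s2,
    }


cases = {
    "initial": [0.8, 0.7, 0.4, 0.0],
    "Fig. 8a final": [0.93, 0.39, 0.39, 0.39],
    "Fig. 8b final": [1.0, 0.31, 0.31, 0.31],
}
results = {name: summary(lam) for name, lam in cases.items()}
for name, vals in results.items():
    print(f"{name:14s}", "  ".join(f"{k}={v:.3f}" for k, v in vals.items()))
```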

As a caveat, the projective measurement \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{1}\) and the identity operation \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{d}\) are exceptions. They are singular points of \(C^{(++)}_{GF}\) and \(C^{(++)}_{GR}\), at which we have set \(C^{(++)}_{GF}=C^{(++)}_{GR}=0\). Therefore, their improvabilities are calculated to be 1. However, they cannot be improved, because the projective measurement already attains the maximum of G(m), and the identity operation already attains the maxima of F(m) and R(m).

Fig. 8 Transitions of the measurement under iterated modifications for (a) G(m) versus F(m) and (b) G(m) versus R(m). For the initial measurement \(\varvec{\lambda }_m=\left( 0.8,0.7,0.4,0\right) \) with \(d=4\), the singular values \(\{\lambda _{mi}\}\) and the improvability \(1+C^{(++)}_{GF}\) or \(1+C^{(++)}_{GR}\) are shown as functions of the number of modifications \(N_m\).

From the above, the improvability of a measurement can be quantified by \(1+C^{(++)}_{GF}\) or \(1+C^{(++)}_{GR}\). The measurement can be improved as long as the improvability is nonzero, and the larger the improvability, the more effectively the measurement can be improved. Interestingly, the improvability decreases in any measurement–improvement process. This law of improvability decrease is proven in Appendix D.

7 Summary and discussion

We have discussed the local trade-off between information and disturbance in quantum measurements: when a measurement is slightly modified to extract more information, the disturbance to the system usually increases. The measurement was described by the singular values \(\varvec{\lambda }_m\) of a measurement operator \(\hat{M}_m\). As functions of these singular values, the information was quantified by the estimation fidelity G(m), whereas the disturbance was quantified by the operation fidelity F(m) and by the physical reversibility R(m). We investigated the local trade-offs between G(m) and F(m) and between G(m) and R(m).

In the local trade-off, the directions of steepest ascent and descent of the information and disturbance play an important role. For G(m), F(m), and R(m), the unit vectors in the directions of steepest ascent and descent, \(\varvec{g}^{(\pm )}_m\), \(\varvec{f}^{(\pm )}_m\), and \(\varvec{r}^{(\pm )}_m\), were derived from the unit gradient vectors \(\varvec{g}_m\), \(\varvec{f}_m\), and \(\varvec{r}_m\). Using these vectors, the trade-off was expressed as the correlation between the changes in information and disturbance. The correlation was represented by the cosines of the angles between the steepest directions, \(C^{(\pm \pm )}_{GF}=\varvec{g}^{(\pm )}_m\cdot \varvec{f}^{(\pm )}_m\) or \(C^{(\pm \pm )}_{GR}=\varvec{g}^{(\pm )}_m\cdot \varvec{r}^{(\pm )}_m\). Moreover, by exploiting the imperfection of the correlation, the measurement was modified to increase the information gain while reducing the disturbance. The improvability was quantified by \(1+C^{(++)}_{GF}\) or \(1+C^{(++)}_{GR}\).

The main difference between R(m) and F(m) is that the steepest-ascent direction \(\varvec{r}^{(+)}_m\) differs from the gradient direction \(\varvec{r}_m\) when the minimum singular value is degenerate. This leads to several differences in the angle, correlation, and improvability. For example, the range of the cosine \(C^{(++)}_{GR}\) is divided into subranges according to the degeneracy. In the correlation, the first elliptical sector, characterized by \(C^{(++)}_{GR}\), is compressed. The improvability \(1+C^{(++)}_{GR}\) decreases discontinuously at each change in the degeneracy during iterated modifications.

Compared with G(m), F(m), and R(m), their averages over outcomes, G, F, and R [2, 15], are difficult to analyze in the same way. Because they are given by \(G=\sum _m p(m)G(m)\) and so on, they are functions of \(N_o d\) singular values when the number of outcomes is \(N_o\). Therefore, their gradient vectors cannot be defined when \(N_o\) is not fixed. Moreover, a measurement modification is difficult to define, because, by Eq. (1), the singular values for different outcomes are not independent of each other. Our analysis assumes that the information and disturbance are characterized by a fixed number of the same independent parameters. This suggests that other information–disturbance pairs having these properties could be analyzed similarly.

The above results are general and fundamental to the quantum theory of measurements. They apply to any single-outcome process of an arbitrary measurement. The correlation implies a trade-off relation within the neighborhood of the measurement, which provides theorists with a framework for developing quantum measurement theories. The improvability tells how much a measurement can be improved and how to do so, which provides a hint for experimentalists seeking to improve their experiments. The results in this paper can broaden our perspectives on quantum measurements and are potentially useful for quantum information processing and communication.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

[1] Fuchs, C.A., Peres, A.: Quantum-state disturbance versus information gain: uncertainty relations for quantum information. Phys. Rev. A 53, 2038–2045 (1996)

[2] Banaszek, K.: Fidelity balance in quantum operations. Phys. Rev. Lett. 86, 1366–1369 (2001)

[3] Fuchs, C.A., Jacobs, K.: Information-tradeoff relations for finite-strength quantum measurements. Phys. Rev. A 63, 062305 (2001)

[4] Banaszek, K., Devetak, I.: Fidelity trade-off for finite ensembles of identically prepared qubits. Phys. Rev. A 64, 052307 (2001)

[5] Barnum, H.: Information–disturbance tradeoff in quantum measurement on the uniform ensemble. arXiv:quant-ph/0205155 (2002)

[6] D’Ariano, G.M.: On the Heisenberg principle, namely on the information–disturbance trade-off in a quantum measurement. Fortschr. Phys. 51, 318–330 (2003)

[7] Ozawa, M.: Uncertainty relations for noise and disturbance in generalized quantum measurements. Ann. Phys. (NY) 311, 350–416 (2004)

[8] Genoni, M.G., Paris, M.G.A.: Optimal quantum repeaters for qubits and qudits. Phys. Rev. A 71, 052307 (2005)

[9] Mišta, L., Jr., Fiurášek, J., Filip, R.: Optimal partial estimation of multiple phases. Phys. Rev. A 72, 012311 (2005)

[10] Maccone, L.: Information–disturbance tradeoff in quantum measurements. Phys. Rev. A 73, 042307 (2006)

[11] Sacchi, M.F.: Information–disturbance tradeoff in estimating a maximally entangled state. Phys. Rev. Lett. 96, 220502 (2006)

[12] Buscemi, F., Sacchi, M.F.: Information–disturbance trade-off in quantum-state discrimination. Phys. Rev. A 74, 052320 (2006)

[13] Banaszek, K.: Information gain versus state disturbance for a single qubit. Open Syst. Inf. Dyn. 13, 1–16 (2006)

[14] Buscemi, F., Hayashi, M., Horodecki, M.: Global information balance in quantum measurements. Phys. Rev. Lett. 100, 210504 (2008)

[15] Cheong, Y.W., Lee, S.W.: Balance between information gain and reversibility in weak measurement. Phys. Rev. Lett. 109, 150402 (2012)

[16] Ren, X.J., Fan, H.: Single-outcome information gain of qubit measurements on different state ensembles. J. Phys. A Math. Theor. 47, 305302 (2014)

[17] Fan, L., Ge, W., Nha, H., Zubairy, M.S.: Trade-off between information gain and fidelity under weak measurements. Phys. Rev. A 92, 022114 (2015)

[18] Shitara, T., Kuramochi, Y., Ueda, M.: Trade-off relation between information and disturbance in quantum measurement. Phys. Rev. A 93, 032134 (2016)

[19] Terashima, H.: Information, fidelity, and reversibility in general quantum measurements. Phys. Rev. A 93, 022104 (2016)

[20] Nielsen, M.A., Chuang, I.L.: Quantum Computation and Quantum Information. Cambridge University Press, Cambridge (2000)

[21] Nielsen, M.A., Caves, C.M.: Reversible quantum operations and their application to teleportation. Phys. Rev. A 55, 2547–2556 (1997)

[22] Ueda, M., Imoto, N., Nagaoka, H.: Logical reversibility in quantum measurement: general theory and specific examples. Phys. Rev. A 53, 3808–3817 (1996)

[23] Ueda, M.: Logical reversibility and physical reversibility in quantum measurement. In: Lim, S.C., Abd-Shukor, R., Kwek, K.H. (eds.) Frontiers in Quantum Physics, pp. 136–144. Springer, Singapore (1998)

[24] Koashi, M., Ueda, M.: Reversing measurement and probabilistic quantum error correction. Phys. Rev. Lett. 82, 2598–2601 (1999)

[25] Terashima, H.: Allowed region and optimal measurement for information versus disturbance in quantum measurements. Quantum Inf. Process. 16, 250 (2017)

[26] Terashima, H.: Derivative of the disturbance with respect to information from quantum measurements. Quantum Inf. Process. 18, 63 (2019)


Appendices

Appendix A: Derivations of vectors

Herein, we outline the derivations of the unit vectors in the gradient, steepest-ascent, and steepest-descent directions of G(m), F(m), and R(m) under the conditions of Eqs. (19)–(21).

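First, we calculate the unit vectors in the gradient directions \(\varvec{g}_m\), \(\varvec{f}_m\), and \(\varvec{r}_m\). The gradient vector of a function f of \(\varvec{\lambda }_m\) is defined by \(\varvec{\nabla } f=\sum _{i=1}^{d}\left( \partial f/\partial \lambda _{mi}\right) \varvec{e}_i\), where \(\varvec{e}_i\) is the unit vector along the \(\lambda _{mi}\) axis.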

From Eqs. (11)–(13), the gradient vectors of G(m), F(m), and R(m) are, respectively, given by

where \(\varvec{l}_{n}\) is defined by Eq. (25). Their respective magnitudes are given by

By dividing the gradient vectors by their magnitudes, the unit vectors in the gradient directions are given as Eqs. (22)–(24).
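As a consistency check on the gradients, closed forms derived from assumed expressions for Eqs. (11)–(13), namely \(G(m)=(\sigma _m^2+\lambda _{m1}^2)/[(d+1)\sigma _m^2]\), \(F(m)=(\sigma _m^2+\tau _m^2)/[(d+1)\sigma _m^2]\), and \(R(m)=d\lambda _{md}^2/\sigma _m^2\), can be compared with central finite differences. These closed forms are our own derivation, not a transcription of the displayed gradient equations, and the vector \(\varvec{l}_{n}\) of Eq. (25) is not used; \(\lambda _{m1}\) and \(\lambda _{md}\) are simply the first and last entries of the list.

```python
def G(lam):  # assumed Eq. (11); lam[0] plays the role of lam_1
    d, s2 = len(lam), sum(x * x for x in lam)
    return (s2 + lam[0] ** 2) / ((d + 1) * s2)


def F(lam):  # assumed Eq. (12)
    d, s2 = len(lam), sum(x * x for x in lam)
    return (s2 + sum(lam) ** 2) / ((d + 1) * s2)


def R(lam):  # assumed Eq. (13); lam[-1] plays the role of lam_d
    d, s2 = len(lam), sum(x * x for x in lam)
    return d * lam[-1] ** 2 / s2


def analytic_gradients(lam):
    """Closed-form gradients of the assumed G, F, R (our derivation)."""
    d, s2, tau = len(lam), sum(x * x for x in lam), sum(lam)
    l1, ld = lam[0], lam[-1]
    k = 2.0 / ((d + 1) * s2 ** 2)
    grad_g = [k * (l1 * (s2 - l1 * l1) if i == 0 else -l1 * l1 * x)
              for i, x in enumerate(lam)]
    grad_f = [k * tau * (s2 - tau * x) for x in lam]
    grad_r = [2.0 * d * ld / s2 ** 2 * ((s2 if i == d - 1 else 0.0) - ld * x)
              for i, x in enumerate(lam)]
    return grad_g, grad_f, grad_r


def numeric_gradient(fn, lam, h=1e-6):
    """Central finite differences, one coordinate at a time."""
    grad = []
    for i in range(len(lam)):
        up, dn = list(lam), list(lam)
        up[i] += h
        dn[i] -= h
        grad.append((fn(up) - fn(dn)) / (2 * h))
    return grad


lam = [1.0, 0.8, 0.5, 0.3]  # hypothetical measurement without degeneracy
errors = [max(abs(a - b) for a, b in zip(exact, numeric_gradient(fn, lam)))
          for fn, exact in zip((G, F, R), analytic_gradients(lam))]
print("max |analytic - numeric| for G, F, R:", [f"{err:.1e}" for err in errors])
```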

Fig. 9 Gradient vector of R(m) on the boundary. The vector \(\varvec{r}_m\) is the unit gradient vector of R(m) for a measurement on the boundary \(\lambda _{m(d-1)}=\lambda _{md}\). The gray region is the forbidden region \(\lambda _{m(d-1)}<\lambda _{md}\), and the vector \(\varvec{e}_{d-1}-\varvec{e}_d\) is normal to the boundary. Projecting \(\varvec{r}_m\) onto the boundary yields the vector \(\varvec{r}'_{m}\).

Next, we consider the unit vectors in the steepest-ascent directions \(\varvec{g}^{(+)}_m\), \(\varvec{f}^{(+)}_m\), and \(\varvec{r}^{(+)}_m\). Under the conditions of Eqs. (19)–(21), they are not necessarily equal to \(\varvec{g}_m\), \(\varvec{f}_m\), and \(\varvec{r}_m\). Both \(\varvec{\varepsilon }_m=\varepsilon \varvec{g}_m\) and \(\varvec{\varepsilon }_m=\varepsilon \varvec{f}_m\), with a positive infinitesimal \(\varepsilon \), satisfy these conditions. Hence, \(\varvec{g}^{(+)}_m=\varvec{g}_m\) and \(\varvec{f}^{(+)}_m=\varvec{f}_m\), as given in Eqs. (26) and (27). However, \(\varvec{\varepsilon }_m=\varepsilon \varvec{r}_m\) violates the condition of Eq. (21) if the minimum singular value is degenerate:

where \(n_d\) is the degeneracy of the minimum singular value. For example, if \(\lambda _{m(d-1)}=\lambda _{md}\) and \(i=d-1\), the condition of Eq. (21) is violated as

In this case, \(\varvec{r}_m\) points from the boundary \(\lambda _{m(d-1)}=\lambda _{md}\) into the forbidden region \(\lambda _{m(d-1)}<\lambda _{md}\) (Fig. 9). In the forbidden region, \(\varvec{\lambda }'_m= \varvec{\lambda }_m +\varvec{\varepsilon }_m\) must be rearranged so that \(\lambda '_{md}\) is again the smallest singular value, which means that R(m) decreases rather than increases. For example, when \(d=3\) and \(\varvec{\lambda }_m=\left( 1,1/2,1/2\right) \), we have \(\varvec{r}_m=\left( -2,-1,5\right) /\sqrt{30}\), so \(\varvec{\varepsilon }_m=\varepsilon \varvec{r}_m\) generates \(\varvec{\lambda }'_m=\left( 1-2\varepsilon /\sqrt{30},1/2-\varepsilon /\sqrt{30},1/2+5\varepsilon /\sqrt{30}\right) \), which is rearranged as \(\left( 1-2\varepsilon /\sqrt{30},1/2+5\varepsilon /\sqrt{30},1/2-\varepsilon /\sqrt{30}\right) \).

Thus, R(m) decreases from 1/2 to \(\left( 1/2\right) -\left( 2\varepsilon /\sqrt{30}\right) \). Hence, \(\varvec{r}_m\) does not point in the steepest-ascent direction of R(m) if \(\lambda _{m(d-1)}=\lambda _{md}\).
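This worked example can be confirmed numerically. The sketch assumes \(R(m)=d\lambda _{md}^2/\sigma _m^2\) for Eq. (13), computes \(\varvec{r}_m\) as the normalized direction \(\sigma _m^2\varvec{e}_d-\lambda _{md}\varvec{\lambda }_m\), takes one small step \(\varepsilon =10^{-4}\) along it from \(\varvec{\lambda }_m=(1,1/2,1/2)\), re-sorts the result so that the last entry is the minimum, and compares the finite-difference slope of R(m) with \(-2/\sqrt{30}\).

```python
import math


def R(lam):
    """Assumed R(m) = d lam_d^2 / sigma^2 with lam_d the smallest singular value."""
    lam = sorted(lam, reverse=True)  # rearrange so that the last entry is the minimum
    return len(lam) * lam[-1] ** 2 / sum(x * x for x in lam)


def r_unit(lam):
    """Unit gradient r_m, computed as if lam_d were not degenerate."""
    s2, ld = sum(x * x for x in lam), lam[-1]
    v = [-ld * x for x in lam]
    v[-1] += s2  # direction sigma^2 e_d - lam_d * lam
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


lam = [1.0, 0.5, 0.5]  # d = 3, doubly degenerate minimum
r = r_unit(lam)
eps = 1e-4
moved = [x + eps * y for x, y in zip(lam, r)]
slope = (R(moved) - R(lam)) / eps
print("r_m * sqrt(30) =", [round(x * math.sqrt(30), 6) for x in r])
print(f"dR/deps = {slope:.4f}  (predicted -2/sqrt(30) = {-2 / math.sqrt(30):.4f})")
```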

If \(\lambda _{m(d-1)}=\lambda _{md}\), the steepest-ascent direction of R(m) is given by the vector \(\varvec{r}'_{m}\) obtained by projecting \(\varvec{r}_m\) onto the boundary, as shown in Fig. 9. Using the unit normal vector of the boundary,

the projected vector is given by

which satisfies the condition of Eq. (21) when \(i=d-1\). However, if \(\lambda _{m(d-2)}=\lambda _{md}\), the condition of Eq. (21) is violated when \(i=d-2\). In that case, \(\varvec{r}'_{m}\) is again projected onto the boundary \(\lambda _{m(d-2)}=\lambda _{md}\) using the unit normal vector

In general, if the degeneracy of the minimum singular value is \(n_d\), \(\varvec{r}_m\) should be projected \((n_d-1)\) times to satisfy the condition of Eq. (21) for all i. After normalizing the projected vector, the unit vector in the steepest-ascent direction of R(m) is determined as Eq. (28).
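In our reading, projecting onto all the boundaries \(\lambda _{mi}=\lambda _{md}\) of the degenerate block amounts to the orthogonal projection onto the subspace in which the last \(n_d\) components are equal, i.e., replacing those components by their mean. The sketch below computes \(\varvec{r}^{(+)}_m\) that way under the assumed \(R(m)=d\lambda _{md}^2/\sigma _m^2\); that this coincides with Eq. (28) is our assumption. For \(\varvec{\lambda }_m=(1,1/2,1/2)\), it gives \(\varvec{r}^{(+)}_m=(-1,1,1)/\sqrt{3}\), along which R(m) increases while the degeneracy is preserved, and it returns the zero vector at the identity operation.

```python
import math


def r_plus(lam, tol=1e-12):
    """Steepest-ascent unit vector of the assumed R(m) = d lam_d^2 / sigma^2.

    The gradient direction sigma^2 e_d - lam_d * lam is projected onto the
    subspace where the n_d smallest entries stay equal, i.e. those entries are
    replaced by their mean. Returns the zero vector if nothing is left
    (lam proportional to p_d).
    """
    lam = sorted(lam, reverse=True)
    s2, ld = sum(x * x for x in lam), lam[-1]
    nd = sum(1 for x in lam if x - ld <= tol)  # degeneracy of the minimum
    v = [-ld * x for x in lam]
    v[-1] += s2
    mean = sum(v[-nd:]) / nd
    v[-nd:] = [mean] * nd
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n > tol else [0.0] * len(v)


def R(lam):
    lam = sorted(lam, reverse=True)
    return len(lam) * lam[-1] ** 2 / sum(x * x for x in lam)


lam = [1.0, 0.5, 0.5]
rp = r_plus(lam)
step = [x + 1e-4 * y for x, y in zip(lam, rp)]
print("r^(+)_m * sqrt(3) =", [round(x * math.sqrt(3), 6) for x in rp])
print(f"R: {R(lam):.6f} -> {R(step):.6f}")
```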

Finally, we consider the unit vectors in the steepest-descent directions \(\varvec{g}^{(-)}_m\), \(\varvec{f}^{(-)}_m\), and \(\varvec{r}^{(-)}_m\). They are not necessarily equal to \(-\varvec{g}_m\), \(-\varvec{f}_m\), and \(-\varvec{r}_m\) under the conditions of Eqs. (19)–(21). For example, \(\varvec{\varepsilon }_m=-\varepsilon \varvec{g}_m\) violates the condition of Eq. (20) if the maximum singular value degenerates:

where \(n_1\) is the degeneracy of the maximum singular value. By projecting and normalizing \(-\varvec{g}_m\), the unit vector in the steepest-descent direction of G(m) is given as Eq. (30). Similarly, \(\varvec{\varepsilon }_m=-\varepsilon \varvec{f}_m\) violates the condition of Eq. (19) if some singular values are 0:

where \(n_0\) is the degeneracy of the singular value 0; \(n_0=0\) if \(\lambda _{md}\ne 0\), and \(n_0=n_d\) if \(\lambda _{md}=0\). By projecting and normalizing \(-\varvec{f}_m\), the unit vector in the steepest-descent direction of F(m) is given as Eq. (31). Moreover, \(\varvec{\varepsilon }_m=-\varepsilon \varvec{r}_m\) violates the condition of Eq. (19) if \(\lambda _{md}=0\), because \(-\varvec{r}_m=-\varvec{e}_d\). As the projection of \(-\varvec{e}_d\) onto the boundary \(\lambda _{md}=0\) is the zero vector \(\varvec{0}\), the steepest-descent vector \(\varvec{r}^{(-)}_m\) of R(m) is \(-\varvec{r}_m\) if \(\lambda _{md}\ne 0\) but \(\varvec{0}\) if \(\lambda _{md}=0\). This can be written equivalently as Eq. (32), because \(n_0= 0\) if \(\lambda _{md}\ne 0\), and \(n_0\ne 0\) if \(\lambda _{md}=0\).

All of the derived vectors are orthogonal to \(\varvec{\lambda }_m\):

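This originates from the invariance of G(m), F(m), and R(m) under the rescaling operation in Eq. (15): because each of them is unchanged when \(\varvec{\lambda }_m\) is multiplied by a positive constant, its derivative along \(\varvec{\lambda }_m\) vanishes, i.e., \(\varvec{\lambda }_m\cdot \varvec{\nabla }G(m)=\varvec{\lambda }_m\cdot \varvec{\nabla }F(m)=\varvec{\lambda }_m\cdot \varvec{\nabla }R(m)=0\). The projections preserve this orthogonality because the normal vectors of the boundaries, such as \(\varvec{e}_{d-1}-\varvec{e}_d\) on \(\lambda _{m(d-1)}=\lambda _{md}\), are themselves orthogonal to \(\varvec{\lambda }_m\) there.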
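The orthogonality of the gradients to \(\varvec{\lambda }_m\) is Euler's relation for functions that are homogeneous of degree zero, and it is easy to confirm numerically. The sketch uses closed-form gradients that we derived from the assumed \(G(m)=(\sigma _m^2+\lambda _{m1}^2)/[(d+1)\sigma _m^2]\), \(F(m)=(\sigma _m^2+\tau _m^2)/[(d+1)\sigma _m^2]\), and \(R(m)=d\lambda _{md}^2/\sigma _m^2\), evaluated at a hypothetical random measurement with \(d=5\), and checks that \(\varvec{\lambda }_m\cdot \varvec{\nabla }f\) vanishes to rounding error.

```python
import random


def gradients(lam):
    """Closed-form gradients of the assumed G(m), F(m), R(m); lam sorted descending."""
    d, s2, tau = len(lam), sum(x * x for x in lam), sum(lam)
    l1, ld = lam[0], lam[-1]
    k = 2.0 / ((d + 1) * s2 ** 2)
    return {
        "G": [k * (l1 * (s2 - l1 * l1) if i == 0 else -l1 * l1 * x)
              for i, x in enumerate(lam)],
        "F": [k * tau * (s2 - tau * x) for x in lam],
        "R": [2.0 * d * ld / s2 ** 2 * ((s2 if i == d - 1 else 0.0) - ld * x)
              for i, x in enumerate(lam)],
    }


rng = random.Random(0)
lam = sorted((rng.uniform(0.1, 1.0) for _ in range(5)), reverse=True)  # hypothetical, d = 5
# Invariance under rescaling (degree-0 homogeneity) implies lam . grad = 0.
residuals = {name: sum(x * y for x, y in zip(lam, grad))
             for name, grad in gradients(lam).items()}
print({name: f"{value:.1e}" for name, value in residuals.items()})
```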

Appendix B: Formulas for angles

Herein, we show the angles between the steepest directions of the information and disturbance. Two information–disturbance pairs are discussed: G(m) versus F(m) and G(m) versus R(m).

For G(m) versus F(m), the cosines of the angles are defined by \(C^{(\pm \pm )}_{GF}=\varvec{g}^{(\pm )}_m\cdot \varvec{f}^{(\pm )}_m\) and \(C_{GF}=\varvec{g}_m\cdot \varvec{f}_m\). \(C^{(++)}_{GF}\) and \(C_{GF}\) are given by Eqs. (38) and (41), respectively. The remaining angles are

Therefore, the angle between \(\varvec{g}^{(-)}_m\) and \(\varvec{f}^{(+)}_m\) and that between \(\varvec{g}^{(+)}_m\) and \(\varvec{f}^{(-)}_m\) are acute or right, whereas the angle between \(\varvec{g}^{(-)}_m\) and \(\varvec{f}^{(-)}_m\) is right or obtuse. Using Eqs. (33) and (34), all the cosines are related as follows:

Similarly, for G(m) versus R(m), the cosines of the angles are defined by \(C^{(\pm \pm )}_{GR}=\varvec{g}^{(\pm )}_m\cdot \varvec{r}^{(\pm )}_m\) and \(C_{GR}=\varvec{g}_m\cdot \varvec{r}_m\). \(C^{(++)}_{GR}\) and \(C_{GR}\) are given by Eqs. (40) and (42), respectively. The remaining angles are

The angle between \(\varvec{g}^{(-)}_m\) and \(\varvec{r}^{(+)}_m\) and that between \(\varvec{g}^{(+)}_m\) and \(\varvec{r}^{(-)}_m\) are acute or right, whereas the angle between \(\varvec{g}^{(-)}_m\) and \(\varvec{r}^{(-)}_m\) is right or obtuse. Using Eqs. (29) and (33), all the cosines are related as follows:

if \(\varvec{\lambda }_m\ne \varvec{p}^{(d)}_{d}\). In contrast, if \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{d}\), the third equality in Eq. (94) does not hold, because \(C^{(++)}_{GR}=C^{(-+)}_{GR}=C^{(--)}_{GR}=0\) but \(-C^{(+-)}_{GR}=C_{GR}=-1/(d-1)\).

Appendix C: Equations of arcs

Herein, we describe the equations of the boundary that encloses the normalized changes in the information and disturbance. The boundary consists of four elliptical arcs characterized by the cosines of the angles between the steepest directions.

For G(m) versus F(m), we first consider the normal case \(\varvec{\lambda }_m\ne \varvec{p}^{(d)}_{r}\). The boundary \(\Gamma _{GF}\) is generated by Eq. (59). Between \(G^+\) and \(F^+\), it coincides with the ellipse \(\Sigma _{GF}\) from Eqs. (26) and (27). Therefore, the arc in this interval is described by Eq. (55), characterized by \(C^{(++)}_{GF}\) from Eq. (41). However, between \(F^+\) and \(G^-\), \(\Gamma _{GF}\) is an elliptical arc described by

where we have used \(\Delta g'_m=\Delta g_m/\cos \theta _g\) and Eq. (90). This ellipse is obtained from \(\Sigma _{GF}\) by replacing \(C_{GF}\) with \(-C^{(-+)}_{GF}=C_{GF}/\cos \theta _g\) and horizontally compressing by a factor of \(1/\cos \theta _g\). The compression merely ensures that the arc connects with the adjacent arcs at \(F^+\) and \(G^-\). Thus, the arc in this interval is characterized by \(-C^{(-+)}_{GF}\). When \(C^{(-+)}_{GF}=0\), the ellipse in Eq. (95) is untilted (with axes \(\cos \theta _g\) and 1), but when \(C^{(-+)}_{GF}=1\), it collapses to a line (with slope \(-1/\cos \theta _g\)). In the latter case, \(F^+\) coincides with \(G^-\), which means that the arc in this interval shrinks to a point.

Moreover, \(\Gamma _{GF}\) is an elliptical arc described by

with \(\Delta f'_m=\Delta f_m/\cos \theta _f\) between \(G^-\) and \(F^-\), and is an elliptical arc described by

between \(F^-\) and \(G^+\). The arcs in these intervals are characterized by \(C^{(--)}_{GF}\) and \(-C^{(+-)}_{GF}\), respectively. When \(C^{(--)}_{GF}=-1\), \(\Gamma _{GF}\) is linear between \(G^-\) and \(F^-\), as shown in Fig. 5e, and when \(C^{(+-)}_{GF}=1\), \(F^-\) coincides with \(G^+\).

In contrast, the case of \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{r}\) is anomalous in the sense that some of the \(C^{(\pm \pm )}_{GF}\) fail to characterize \(\Gamma _{GF}\). This is because \(\varvec{g}^{(-)}_m=\varvec{f}^{(-)}_m = \varvec{0}\) from Eq. (37). Except for the first arc, the elliptical arcs generated by Eq. (59) collapse to lines or points, even though the corresponding \(C^{(\pm \pm )}_{GF}\) are 0. In addition, \(\varvec{g}_m=\varvec{g}^{(+)}_m = \varvec{0}\) if \(r=1\) from Eq. (35), and \(\varvec{f}_m=\varvec{f}^{(+)}_m= \varvec{0}\) if \(r=d\) from Eq. (36). These also collapse the first arc generated by Eq. (59) and \(\Sigma _{GF}\) generated by Eq. (54) to vertical or horizontal lines. The explicit shape of \(\Gamma _{GF}\) in the anomalous case is described in the main text.

Similarly, for G(m) versus R(m), we first consider the normal case, \(\lambda _{md}\ne 0\) and \(\varvec{\lambda }_m\ne \varvec{p}^{(d)}_{d}\). The boundary \(\Gamma _{GR}\) is generated by an equation similar to Eq. (59), but with \(\varvec{r}^{(\pm )}_m\) instead of \(\varvec{f}^{(\pm )}_m\). Between \(G^+\) and \(R^+\), \(\Gamma _{GR}\) is an elliptical arc described by

where we have used \(\Delta r'_m=\Delta r_m/\cos \theta _r\) and Eq. (94). This ellipse is obtained from \(\Sigma _{GR}\) by replacing \(C_{GR}\) with \(C^{(++)}_{GR}=C_{GR}/\cos \theta _r\) and vertically compressing by a factor of \(1/\cos \theta _r\). Thus, the arc in this interval is characterized by \(C^{(++)}_{GR}\). When \(C^{(++)}_{GR}=0\), the ellipse in Eq. (98) is untilted (with axes 1 and \(\cos \theta _r\)), but when \(C^{(++)}_{GR}=-1\), it collapses to a line (with slope \(-\cos \theta _r\)). In the latter case, \(\Gamma _{GR}\) is linear between \(G^+\) and \(R^+\), as shown in Fig. 6d.

Moreover, \(\Gamma _{GR}\) is an elliptical arc described by

between \(R^+\) and \(G^-\) and is an elliptical arc described by

between \(G^-\) and \(R^-\). The arcs in these intervals are characterized by \(-C^{(-+)}_{GR}\) and \(C^{(--)}_{GR}\), respectively. For example, when \(C^{(-+)}_{GR}=1\), \(R^+\) coincides with \(G^-\), as shown in Fig. 6c, and when \(C^{(--)}_{GR}=-1\), \(\Gamma _{GR}\) is linear between \(G^-\) and \(R^-\). Finally, \(\Gamma _{GR}\) coincides with \(\Sigma _{GR}\) between \(R^-\) and \(G^+\). The arc in this interval is described by Eq. (61), characterized by \(-C^{(+-)}_{GR}\) from Eq. (92).

However, the cases of \(\lambda _{md}=0\) and \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{d}\) are anomalous. In the case of \(\lambda _{md}=0\), \(\varvec{r}^{(-)}_m = \varvec{0}\) from Eq. (32). This collapses the third and fourth arcs of \(\Gamma _{GR}\) to horizontal lines. Moreover, \(\varvec{g}^{(-)}_m = \varvec{0}\) if \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{r}\) from Eq. (37), which collapses the second arc to a vertical line. In addition, \(\varvec{g}_m=\varvec{g}^{(+)}_m = \varvec{0}\) if \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{1}\) from Eq. (35), which collapses both the first arc and \(\Sigma _{GR}\) to vertical lines. In contrast, in the case of \(\varvec{\lambda }_m=\varvec{p}^{(d)}_{d}\), \(\varvec{r}^{(+)}_m =\varvec{g}^{(-)}_m = \varvec{0}\) from Eqs. (36) and (37). The first and third arcs of \(\Gamma _{GR}\) collapse to line segments whose tilt is set by \(C^{(+-)}_{GR}\ne 0\), and the second arc collapses to a point. The explicit shapes of \(\Gamma _{GR}\) in the anomalous cases are described in the main text.

Appendix D: Law of improvability decrease

Herein, we outline the proof of the law of improvability decrease. It states that the improvability decreases in any measurement–improvement process. That is, an improved measurement is always less improvable than the original measurement.

For G(m) versus F(m), suppose that a measurement \(\varvec{\lambda }_m\) is modified by an arbitrary \(\varvec{\varepsilon }_m\). This modification changes \(\lambda _{m1}\), \(\tau _m\), and \(\sigma _m^2\) by \(\Delta \lambda _{m1}\), \(\Delta \tau _m\), and \(\Delta \sigma _m^2\), respectively. To first order in these changes, \(\Delta G(m)\) and \(\Delta F(m)\) are expanded as

\(\Delta C^{(++)}_{GF}\) can be expanded similarly. By eliminating \(\Delta \lambda _{m1}\), \(\Delta \tau _m\), and \(\Delta \sigma _m^2\) from \(\Delta C^{(++)}_{GF}\) using Eqs. (101) and (102), \(\Delta C^{(++)}_{GF}\) is related to \(\Delta G(m)\) and \(\Delta F(m)\) as

This means that \(\Delta C^{(++)}_{GF}<0\) if \(\Delta G(m)>0\) and \(\Delta F(m)>0\), proving the law of improvability decrease.
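The law can be illustrated, though of course not proven, by a single small improving step. This sketch assumes \(G(m)=(\sigma _m^2+\lambda _{m1}^2)/[(d+1)\sigma _m^2]\) and \(F(m)=(\sigma _m^2+\tau _m^2)/[(d+1)\sigma _m^2]\) for Eqs. (11) and (12), takes a step of size \(10^{-3}\) along the unit bisector of \(\varvec{g}_m\) and \(\varvec{f}_m\) from \(\varvec{\lambda }_m=(0.8,0.7,0.4,0)\), and reports the resulting changes in G(m), F(m), and \(C^{(++)}_{GF}\).

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def unit(v):
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]


def G(lam):  # assumed form of Eq. (11); lam sorted in descending order
    s2 = dot(lam, lam)
    return (s2 + lam[0] ** 2) / ((len(lam) + 1) * s2)


def F(lam):  # assumed form of Eq. (12)
    s2 = dot(lam, lam)
    return (s2 + sum(lam) ** 2) / ((len(lam) + 1) * s2)


def c_gf(lam):
    """C_GF^(++) = g_m . f_m for the assumed G(m) and F(m), plus the unit vectors."""
    s2, l1, tau = dot(lam, lam), lam[0], sum(lam)
    g = unit([l1 * (s2 - l1 * l1)] + [-l1 * l1 * x for x in lam[1:]])
    f = unit([s2 - tau * x for x in lam])
    return dot(g, f), g, f


lam = [0.8, 0.7, 0.4, 0.0]
c0, g, f = c_gf(lam)
e = unit([x + y for x, y in zip(g, f)])  # a direction that raises both G and F
new = [x + 1e-3 * y for x, y in zip(lam, e)]
dG, dF, dC = G(new) - G(lam), F(new) - F(lam), c_gf(new)[0] - c0
print(f"dG = {dG:.2e}, dF = {dF:.2e}, dC = {dC:.2e}")
```

Both fidelities increase while \(C^{(++)}_{GF}\) decreases, as the law requires; other starting points and other improving directions can be tried in the same way.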

In contrast, for G(m) versus R(m), it suffices to consider modifications \(\varvec{\varepsilon }_m\) that do not change \(n_d\). This is because when \(n_d\) increases as the measurement reaches a boundary, \(C^{(++)}_{GR}\) decreases, whereas when \(n_d\) decreases as the measurement leaves a boundary, G(m) and R(m) cannot increase simultaneously (Fig. 2). In terms of \(\Delta \lambda _{m1}\), \(\Delta \lambda _{md}\), and \(\Delta \sigma _m^2\), \(\Delta R(m)\) is expanded as

\(\Delta C^{(++)}_{GR}\) can be expanded similarly. By eliminating \(\Delta \lambda _{m1}\), \(\Delta \lambda _{md}\), and \(\Delta \sigma _m^2\) from \(\Delta C^{(++)}_{GR}\) using Eqs. (101) and (104), \(\Delta C^{(++)}_{GR}\) is related to \(\Delta G(m)\) and \(\Delta R(m)\) as

This means that \(\Delta C^{(++)}_{GR}<0\) if \(\Delta G(m)>0\) and \(\Delta R(m)>0\), proving the law of improvability decrease.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Terashima, H. Local trade-off between information and disturbance in quantum measurements. Quantum Inf Process 21, 138 (2022). https://doi.org/10.1007/s11128-022-03480-2
