Abstract

As the Mobility as a Service (MaaS) concept attracts more interest, there is growing demand to understand MaaS subscription plans. There is a gap in knowledge on how the plans should be created and what transport modes and features they should include in order to cater for the heterogeneous mobility preferences of all socio-demographic user groups. This paper presents the design of a survey including a stated preference (SP) experiment that captures the complex decision-making process of purchasing MaaS products. Respondents are presented with repeated choices between four hypothetical MaaS plans, of which three are fixed plans and one is a menu option. This approach allows for testing people’s preferences and willingness to pay for flexibility. The attributes of the plans include transport modes and amounts, mode-specific features (e.g. 10-min taxi guarantee), transferability (how much of the leftover mode attributes can be transferred to the next month), special bonuses (e.g. a free dinner for two) and the price of the plan. The SP was tested with a number of focus groups. Insights from two data collection applications are detailed, first as part of a web-based survey, then incorporated into a smartphone-based prompted recall travel survey. The design presented in this paper can be adapted to other areas and can provide valuable insights for MaaS product design and pricing.

Introduction

In the past year, the Mobility as a Service (MaaS) concept has been gaining wider recognition in the mobility sector. Many countries (e.g. Finland, UK, Australia, Singapore, Canada) have stated their intentions to introduce this new service model, which restructures the mobility distribution chain to include a MaaS operator who satisfies users’ every transportation need through a single digital platform (Kamargianni 2015; Datson 2016; MaaS Australia). One of the truly revolutionary elements of MaaS is that it aims to offer travellers the option to buy monthly mobility plans that include a certain amount of each transportation service and are personalised to each user (Hietanen 2016). These MaaS mobility plans are analogous to “TV + phone + broadband” subscription plans in the telecommunication sector, where customers can purchase services in a prepaid, one-stop-shop manner. Depending on the local environment, MaaS plans could include not only the various public transport options, which in many cities are already offered as monthly subscriptions, but also private transport options such as taxi, car sharing, bike sharing, car rental and long-distance rail.

Due to the novelty of the concept, there is still a vast gap in knowledge about the ideal design of the mobility plans. As travellers’ needs are immensely heterogeneous, the plans need to cater for the differing preferences of all user groups. Several potential designs have been presented through the few pilots, projects and thought pieces to date (Sochor et al. 2015; Hietanen 2016; Kamargianni et al. 2016a), but there is no quantifiable evidence or consensus about which approach would be best. Further, with careful design, MaaS mobility plans can be used as a travel demand management tool to assist in the shift towards more sustainable travel (Matyas and Kamargianni 2018). Finding subscription plan designs that both cater to user preferences and support the shift away from private vehicle dominance is not a straightforward task. Before attempting large-scale MaaS applications, it is crucial to establish some key points about user preferences and behaviour. This a priori information can then guide the development and best practices of MaaS plan design.

Against this background, in-depth analysis of preferences and behaviour under various MaaS subscription plan designs is clearly needed. However, this requires new data that are currently unavailable. This paper therefore presents a survey and a stated preference (SP) experiment designed to investigate the decision-making process behind MaaS plan choices. The SP experiments are based on repeated discrete choices between mobility plans, in which respondents make tradeoffs between the various characteristics and elements of each plan. The case study city in this paper is London; however, the presented survey could be used as a basis for MaaS surveys in other geographic areas. More importantly, the SP design of this survey can be applied to other MaaS market research, while the findings from the focus groups provide insights to the research community regarding how respondents interpret the information presented in the experiment. The findings presented in this paper can provide in-depth insights into how MaaS plans should be designed and can be of high value to transport operators and the wider industry.

The paper is structured as follows. The “Stated preference for service packages” section provides a short review of service packages in other sectors and the stated preference methodologies used to study them. The “Mobility tools and MaaS plans” section briefly presents background on mobility tools and current approaches to MaaS subscription plans, and sets out the core objectives of the SP design. The “London mobility survey” section describes the MaaS SP design, including the process by which the attributes and attribute levels were chosen and generated, the scenarios and the final design. The “Application and data collection” section presents initial insights into the application of the design, covering both the basic design and an extension that integrates the survey with a smartphone-based prompted recall travel survey. The “Conclusions” section concludes the paper and describes the next steps.

Stated preference for service packages

Stated preference techniques are frequently used to gather information about products and services that are not yet available in the market (Louviere et al. 2000). In discrete choice SP experiments, respondents choose between hypothetical alternatives defined by a set of attributes, where each attribute can take two or more levels. Each alternative differs in its combination of attribute levels. The advantage of SP over revealed preference data is that it allows researchers to manipulate the attributes of the choice options and thereby, with great speed and statistical efficiency, explore the effect of attribute changes that could not otherwise be observed (Bonnel et al. 2009). This characteristic makes SP an ideal technique when there is much yet to discover about a new product, such as MaaS plans. However, caution is needed: the number of hypothetical combinations grows exponentially with the number of attributes and levels, so a full factorial design quickly becomes too large to present, and the choice tasks risk becoming too demanding for respondents.
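As a rough illustration of this growth, the size of a full factorial design is the product of the number of levels of each attribute. The sketch below uses hypothetical level counts, not the levels of this study's design; it only shows why full factorials are rarely presented and why fractional or efficient designs are typically used instead.

```python
from math import prod


def full_factorial_size(levels_per_attribute):
    """Return the number of distinct profiles in a full factorial design.

    Every level of every attribute is crossed with every level of every other
    attribute, so the count is the product of the level counts.
    """
    return prod(levels_per_attribute)


# Hypothetical level counts for five plan attributes (illustrative only),
# e.g. public transport, bike sharing, taxi, car sharing and price.
levels = [3, 2, 4, 4, 4]
print(full_factorial_size(levels))        # 384

# Adding a single four-level attribute multiplies the design size by four.
print(full_factorial_size(levels + [4]))  # 1536
```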

MaaS plans are a case of product bundling, which is the practice of marketing two or more products or services in a package for a special price (Guiltinan 1987). In SP situations such as these, an alternative is a composite good made up of a bundle of related components or features (Dellaert 1995; Ben-Akiva and Gershenfeld 1998). To analyse predetermined bundles, studies prompt respondents to state their preferred choices among the proposed bundles (Fojcik and Proff 2014; Hamilton and Koukova 2008; Madden et al. 2002). For example, Madden et al. (2002) examined broadband-delivered entertainment subscription packages based on an experiment where respondents were asked to choose from a list of package options. In some cases, respondents are first shown individual products and then the same products in a package to determine how packaging can change demand. Examples include Hamilton and Koukova (2008), who analyse students’ perceptions of the relative importance of bundle elements; Fojcik and Proff (2014) and Sheng and Pan (2009), who both test how bundling could increase the diffusion of a new product; and Janiszewski and Cunha (2004), who focus on price discounts in the evaluation of bundles. However, testing respondents’ preferences for individual product elements is not always necessary: a widely referenced study by Yadav (1995) examines students’ choices of magazine subscription bundles based on pairwise comparisons of bundles.
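Treating each plan as a composite good suggests one simple way to analyse such choices, assumed here purely for illustration: a linear-additive utility over the plan's components and price, fed into a multinomial logit (MNL) model. The attribute names and coefficient values below are hypothetical placeholders, not specifications or results from this study; in practice the coefficients would be estimated from the SP responses, and interactions between components may be needed.

```python
import math

# Hypothetical taste coefficients, for illustration only (not estimates).
BETAS = {
    "unlimited_pt": 1.2,        # dummy: plan includes unlimited public transport
    "bike_sharing": 0.3,        # dummy: plan includes bike sharing
    "taxi_miles": 0.02,         # per mile of taxi included in the plan
    "car_sharing_hours": 0.05,  # per hour of car sharing included in the plan
    "price": -0.015,            # per GBP of monthly plan price
}


def bundle_utility(plan):
    """Linear-additive utility: each component contributes independently."""
    return sum(beta * plan.get(name, 0.0) for name, beta in BETAS.items())


def mnl_probabilities(plans):
    """Multinomial logit choice probabilities over a set of plans."""
    utilities = [bundle_utility(p) for p in plans]
    top = max(utilities)  # subtract the maximum for numerical stability
    weights = [math.exp(u - top) for u in utilities]
    total = sum(weights)
    return [w / total for w in weights]


# Four hypothetical plans shown in one choice task.
plans = [
    {"unlimited_pt": 1, "taxi_miles": 20, "price": 150},
    {"unlimited_pt": 1, "taxi_miles": 20, "price": 120},
    {"bike_sharing": 1, "car_sharing_hours": 10, "price": 60},
    {"unlimited_pt": 1, "bike_sharing": 1, "car_sharing_hours": 5, "price": 140},
]
for plan, p in zip(plans, mnl_probabilities(plans)):
    print(plan, round(p, 3))
```

Because the utility is additive, two plans that differ only in price differ in utility by exactly the price coefficient times the price gap, which is what makes willingness-to-pay ratios straightforward to derive from such a model.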

In classic SP situations, the choice task is designed for each person by creating alternatives from combinations of attribute levels drawn from the same finite list, and the respondent’s task is simply to pick their preferred option. However, this approach alone would not be sufficient for us to address the issue of flexible/customisable MaaS subscriptions. Studies of customisable/flexible product bundles, which are found in the marketing literature, mainly resort to menu-based survey designs to determine consumers’ preferences. Menu-based designs allow respondents to choose their own preferred attribute levels (Bharati and Chaudhury 2004; Kamakura and Kwak 2002). In some cases, the experiment is designed to include both predetermined bundles and the choice to customise the elements within the bundle (Ben-Akiva and Gershenfeld 1998; Liechty et al. 2001; Moore 2010). For example, Ben-Akiva and Gershenfeld (1998) present 3 fixed packages and 12 individual features to respondents in a study about custom calling product bundles. Respondents can choose among: (1) one fixed package, (2) one fixed package and any number of extra features, (3) any number of features, (4) none. Stated Adaptation (SA) experiments, such as the one described by Erath and Axhausen (2010), are also useful for understanding how people would customise their own bundles. In that study, respondents are asked to choose their preferred bundle of mobility tools (e.g. car choice, public transport season ticket) given new prices for mobility costs. Another interesting approach is the Priority Evaluator (PE) technique, in which respondents are asked to create their own products or services subject to a budget constraint. The capped budget forces respondents to make tradeoffs and decide which attributes they would like to include. Examples include Permain (1989), who uses a PE to examine preferences for railway station features in Britain; Axhausen and Jäggi (2010), who use a PE to understand how people would invest in energy efficiency; and Turrentine and Kurani (2007), who use a PE to examine the design of respondents’ next vehicle purchase.
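The option count for the Ben-Akiva and Gershenfeld (1998) menu structure can be worked out directly, which shows how much more flexibility a menu captures than fixed bundles. The sketch assumes that any subset of the 12 features can be chosen and that at most one package is taken; under those assumptions, 3 packages and 12 features already yield 16,384 distinct choices, compared with 4 (three packages or none) in a fixed-bundle-only design.

```python
def menu_choice_space(n_packages, n_features):
    """Count the options in a 'fixed packages plus optional features' menu.

    Assumes any subset of the features may be combined and that at most one
    package can be chosen, giving the four option types described above.
    """
    nonempty_feature_sets = 2 ** n_features - 1
    counts = {
        "package_only": n_packages,                                   # (1)
        "package_plus_features": n_packages * nonempty_feature_sets,  # (2)
        "features_only": nonempty_feature_sets,                       # (3)
        "none": 1,                                                    # (4)
    }
    return counts, sum(counts.values())


counts, total = menu_choice_space(n_packages=3, n_features=12)
print(counts)
print(total)  # 16384, i.e. (3 + 1) * 2**12
```

The total factorises as (packages + "no package") times every feature subset, which is why each added feature doubles the choice space.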

A growing trend in stated preference research is to make respondents’ choice situations as realistic as possible (Hensher and Greene 2003; Train and Wilson 2008; Rose and Hess 2009; Huang et al. 2015). This is especially useful when attribute levels can take a wide range of values, some of which may not be relevant to a given respondent. In personal mobility choices, this is particularly true of how much different individuals use each transport mode. As such, context-aware SP experiments are now frequently applied. Respondents’ specific experiences can be incorporated in three ways: (1) by presenting the status quo as an option without detailing the attributes or attribute levels; (2) by including the status quo with all of its information; and (3) by presenting alternatives and levels that are based on the respondents’ real-life experiences (Rose and Hess 2009). This last type, pivot-style SP experiments, uses the existing knowledge base of the respondent when creating experiments and is what we utilise in our SP design (Rose and Hess 2009; Train and Wilson 2008). Most such pivot-based experiments use preliminary information collected through an RP survey for their reference alternative; applications include Hensher (2004), Masiero and Hensher (2011) and van Cranenburgh et al. (2014).

Mobility tools and MaaS plans

Mobility tools are anything that helps fulfil the mobility needs of individuals, such as private vehicles, public transport season tickets and car sharing memberships. Owning a mobility tool represents a commitment to using a transport mode or modes, and it usually carries a number of benefits for the user. A frequently studied mobility tool is the season ticket, which offers travellers several advantages, including not having to purchase individual tickets or worry about being fined, and shorter boarding and queueing times (Wittmer and Riegler 2014; White 1981). The decision of which mobility tool to purchase determines later mode choices and shapes travel behaviour (Le Vine et al. 2011). Mobility tool choices are also interdependent and can have significant effects on the usage of other modes (Becker et al. 2017; Vovsha and Petersen 2009). For example, public transport season tickets have been shown to significantly increase public transport patronage while decreasing car use. When the inter-modal travelcard was introduced in London, car use decreased by 9%, while public transport trips (bus and underground combined) increased by 7% (White 1984). The same substitution between cars and season tickets has been found in German studies, where researchers found that as the number of season tickets increases, car ownership decreases (Scott and Axhausen 2006; Beige and Axhausen 2008). Further, car owners are less likely to subscribe to season tickets, while season ticket holders are more likely to become car sharing members (Simma and Axhausen 2001; Becker et al. 2017).

Turning to MaaS plans, these are also mobility tools, only multimodal ones (combining both public and private transport modes). Although MaaS has recently caught the eye of many experts, its complexity means there are still no full, wide-scale applications. As there is so little evidence about MaaS plans, other combined mobility services can serve as inspiration for possible elements to include in them. One of the key questions is which modes and services could be introduced. A recent survey of integrated mobility schemes around the world found that the core services they tend to include are public transport, rail, taxi, car sharing, car rental and on-demand transport (Kamargianni et al. 2016a). These should all be contenders for inclusion in MaaS plans (supply-side considerations are clearly very important, but are outside the scope of this paper).

The first complete MaaS field trial was the Ubigo project carried out in Gothenburg, Sweden (Sochor et al. 2015). Here, public transport, car sharing, taxi, bike sharing and car rental were offered to users as subscription plans. Households subscribed to monthly plans including a personalised combination of, and credit for, the various travel services. The prepaid, tailored monthly plans were specified in time or distance for each mode, and the combined subscription was cheaper than buying each element individually. Credit could be topped up or rolled over, and subscriptions could be modified. A mobility broker handled everything for the users to make it a seamless experience. This project led to the first analysis of subscriptions and mode use under MaaS. However, as this was an actual trial, different provision structures, prices and so on could not be tested.

Another MaaS development is the product of MaaS Global, the Whim app. Until recently, their publications indicated that they supported fixed plans for a number of sociodemographic groups (families, students etc.; Hietanen 2016). The proposed approach included prespecified amounts of certain transport modes in each plan, determined by the needs of each group. It also included some more innovative ideas, such as ‘guaranteed 15-min pick up by taxi’, ‘child seats provided in cars’, and the inclusion of shared taxis. These concepts indicate that tailoring mobility plans is a much more holistic idea and should comprise more than just changing the amount of each transport mode in a plan (e.g. miles of taxi or hours of car sharing). However, MaaS Global has recently released a new product design for Whim, which indicates a slightly different approach. The new subscription options are segmented not by sociodemographic group but by the size of each plan. All of them have local public transport as their core, plus a certain number of points that can be used freely among other modes (taxi and car sharing). There is also a pay-as-you-go option offering complete flexibility. Some ideas even include adding bonuses to increase ridership of sustainable modes (Patel 2016). This MaaS application is a prime example of how the service itself and the market around it are still constantly evolving.

To conclude, most elements of MaaS subscription plans are not yet settled. However, some key features are selected to give focus and objectives to this study. First, it is unknown which transport modes each group of travellers would like in their plans and what they would be willing to pay for these to be included. This is also important when considering MaaS as a travel demand management tool, as shared and sustainable modes (e.g. bike sharing) may only be successful in plans under certain conditions. Second, it is not clear whether individuals prefer fixed plans (predefined bundles), flexible menus or no bundles at all, with pay-as-you-go options. This preference may even differ for each user group and city context. Finally, additional features and bonuses have been raised as good additions to plans. It is not yet clear how important these are to the various groups and whether they can help convince users to purchase a MaaS plan. In addition, it is important to gain a better understanding of people’s attitudes and perceptions towards MaaS and the potential effect MaaS plans can have on mode usage. These are all key questions, as answering them will assist in creating tailored product design and pricing that can aid the shift away from a private vehicle dominated era.

London mobility survey

The MaaS stated preference experiment is part of a wider survey, the London Mobility Survey (LMS) that has been designed by MaaSLab (www.maaslab.org) at UCL Energy Institute and aims to gather in-depth data on travel behavior and new mobility services. LMS includes two main sections: (1) a revealed preference questionnaire about individual characteristics and current mobility habits and (2) the MaaS questionnaire including the stated preference experiment and additional questions about attitudes and perceptions as well as the potential impact of MaaS.

The survey itself is a web-based, self-administered questionnaire. Being web-based, it enables large sample sizes with relative ease and low marginal costs. It also allows complex questionnaire design using features such as conditional branching (skip logic), meaning that questions can be adapted dynamically based on the respondent’s answers to previous questions. However, it cannot represent the general population, as people who are computer illiterate or do not have internet access will not be represented. In our case this is not a problem, as the main target audience for MaaS is smartphone users (who are assumed to also be computer literate), who will therefore be able to use MaaS services (MaaS is based on smartphone technology involving dynamic, real-time journey planning and electronic ticketing and payment; for further details please refer to Matyas and Kamargianni 2017). As the survey is self-administered, special care must be taken with the wording of questions, as there is no feedback from a trained interviewer (Lavrakas 2008). Hover-over pop-ups were used to help respondents understand questions and concepts; these were included in places where the focus groups indicated that further explanation would be helpful. Both coded and open-ended questions were used; however, open-ended questions were kept to a minimum (and made non-mandatory) as they can increase dropout rates (Crawford et al. 2001). Radio buttons prevent multiple answers when only one is called for, and item non-response was minimised by making the important questions mandatory.
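Conditional branching can be thought of as attaching a display rule to each question, evaluated against earlier answers. The LMS itself was built in Ruby on Rails; the Python sketch below is only a language-neutral illustration, and the question ids, wording and rule structure are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Answers = Dict[str, str]


@dataclass
class Question:
    qid: str
    text: str
    # Predicate on earlier answers; None means the question is always shown.
    show_if: Optional[Callable[[Answers], bool]] = None


# Hypothetical branch: membership questions only follow awareness.
QUESTIONS = [
    Question("cs_aware", "Have you heard of car sharing (car clubs)?"),
    Question("cs_member", "Are you a member of a car sharing scheme?",
             show_if=lambda a: a.get("cs_aware") == "yes"),
    Question("cs_fee", "How much is your monthly car sharing fee?",
             show_if=lambda a: a.get("cs_member") == "yes"),
]


def applicable_questions(questions: List[Question], answers: Answers) -> List[str]:
    """Return the ids of questions to display given the answers so far."""
    return [q.qid for q in questions if q.show_if is None or q.show_if(answers)]


print(applicable_questions(QUESTIONS, {"cs_aware": "no"}))
print(applicable_questions(QUESTIONS, {"cs_aware": "yes", "cs_member": "yes"}))
```

Keeping the rules as data next to each question makes it easy to check, for instance, that no mandatory question depends on an answer the respondent was never shown.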

The survey was deployed as a web application using the Ruby on Rails (http://rubyonrails.org/) open-source framework, based on the Ruby programming language. The application was built to be responsive and cross-platform so that users across multiple browsers, devices and operating systems could access it easily. We opted to build the web application from scratch in Rails, rather than use an existing survey development tool, to gain more flexibility and customisation when creating the SP experiment. The data from completed surveys are automatically verified and stored on secure servers in a MySQL (https://www.mysql.com/) database. MySQL was chosen because it supports a relational database structure and is a robust, open-source system that can handle numerous concurrent users. The database structure was designed in the form needed for choice modelling exercises, to enable seamless export into other bespoke analytical tools.
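The sketch below illustrates what a choice-modelling-friendly relational layout might look like, and how a long-format export (one row per alternative per choice task, with a 0/1 chosen indicator) can be produced with a single join. It uses Python's built-in `sqlite3` module as a self-contained stand-in for MySQL; the table and column names are hypothetical and are not the LMS schema.

```python
import sqlite3

# Hypothetical schema: respondents, their choice tasks, and the alternatives shown.
SCHEMA = """
CREATE TABLE respondent (id INTEGER PRIMARY KEY, age_band TEXT);
CREATE TABLE choice_task (
    id INTEGER PRIMARY KEY,
    respondent_id INTEGER REFERENCES respondent(id),
    task_no INTEGER,
    chosen_alt INTEGER
);
CREATE TABLE alternative (
    task_id INTEGER REFERENCES choice_task(id),
    alt_no INTEGER,
    price REAL,
    taxi_miles REAL,
    PRIMARY KEY (task_id, alt_no)
);
"""

# One row per alternative per task, flagging the alternative that was chosen.
LONG_FORMAT_QUERY = """
SELECT t.respondent_id, t.task_no, a.alt_no, a.price, a.taxi_miles,
       CASE WHEN a.alt_no = t.chosen_alt THEN 1 ELSE 0 END AS chosen
FROM choice_task t JOIN alternative a ON a.task_id = t.id
ORDER BY t.respondent_id, t.task_no, a.alt_no
"""


def export_long_format(conn):
    """Return the long-format rows expected by many choice modelling tools."""
    return conn.execute(LONG_FORMAT_QUERY).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.execute("INSERT INTO respondent VALUES (1, '25-34')")
conn.execute("INSERT INTO choice_task VALUES (1, 1, 1, 2)")  # alternative 2 chosen
conn.executemany(
    "INSERT INTO alternative VALUES (?, ?, ?, ?)",
    [(1, 1, 120.0, 10.0), (1, 2, 95.0, 0.0), (1, 3, 150.0, 25.0), (1, 4, 80.0, 5.0)],
)
for row in export_long_format(conn):
    print(row)
```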

Revealed preferences questionnaire

Focusing first on the revealed preference survey, information is collected on respondents’ socio-demographic characteristics, current mobility tool ownership and mobility habits. These are important not only for later modelling, to allow for market segmentation, but also because some elements of the SP depend on these responses (context dependence). Prior analysis was conducted on London’s traditional household travel demand survey, the London Travel Demand Survey (LTDS), to evaluate the information that currently exists about travel demand in the city and possible gaps that LMS could fill. The more fundamental questions are matched with those in LTDS to allow later comparison between the two data sets. The categories of questions included in LMS are presented in Table 1.

First, questions on the socio-demographic characteristics of the respondent are included. These are necessary for segmentation and will assist with generalising the results to the wider population. Residential location was only asked at ward level (the first 3 characters of a 6–8-character postcode) and was made optional, to ensure the anonymity and privacy of the respondent. Next, private mobility information is requested. After identifying the information about vehicle costs that is missing from LTDS, special attention was paid to including detailed questions about parking, fuel and other vehicle-related monthly household expenditures.

The following section includes questions on shared mobility and taxi. We were able to add this section and collect RP data on new modes and services because the London market is quite mature, and awareness and penetration of these services are high enough to yield valuable insights about them. Questions on awareness of and membership in car sharing schemes (car clubs in the UK), and, for members, on subscription status and monthly usage fees, are all included. In these questions, car sharing was explained as a service where “you can rent a vehicle to drive for a short period of time, usually hourly”, to ensure that it is not confused with car rental or ride sharing. These were all conditional questions, shown only based on answers to earlier questions. Prior examination of the local car sharing supply aided the development of questions that offer company-based lists of existing subscriptions, with single-answer radio buttons to click (rather than asking respondents to remember their subscriptions). Questions on bike sharing (Santander Cycles in London) were designed in a similar manner. Finally, questions about taxi use close this section. London has a wide variety of taxi services, including the traditional black cab, which can be hailed on the street, black cabs ordered via a dedicated smartphone app (e.g. the Hailo app), Uber, shared taxis (e.g. Uberpool) and countless minicab companies (which, unlike black cabs, can only be booked in advance). Questions here are broken down by these taxi types to allow comparisons of the uptake and popularity of the different types, and focus mainly on usage and costs.

Next, public transport pass ownership and usage are investigated. As London’s pricing scheme is very intricate, respondents with a travel pass were asked several questions about its duration, zonal coverage, discounts and cost. Further, questions about contactless payment (Apple Pay, contactless cards etc.) were included, as use of these can indicate openness to innovative payment methods, which are an important element of MaaS. Finally, questions about disability and related mode usage were also included (with a ‘prefer not to answer’ option), which fed into later elements of the survey.

The final two sections of the survey were about journey planners and attitudes towards various elements of new mobility services and vehicles. Journey planners have become an important element of people’s travel behaviour, and the importance of including them in studies should not be underestimated. As such, questions that help understand their use, such as frequency of use and the transport modes journey planners are used for, are included in LMS. Statements on respondents’ attitudes and perceptions towards the usefulness of, and dependence on, journey planners are also added, with 7-point Likert scale answer options. Continuing with attitudes and perceptions, statements on ‘innovation adoption’ specific to transport are shown. These can later be used to construct latent factors that can help explain openness to MaaS. The last section of the RP survey focused solely on car ownership and car sharing. Statements about the necessity of car ownership and the respondent’s views on current and future car ownership were presented. These, as well as the subsequent questions on car sharing schemes (both company-based and peer-to-peer), were split depending on whether or not the respondent has a vehicle (conditional questions).

The presented RP questions can be adapted to any area and can be lengthened/shortened based on the needs of each study. In the London case study, these questions were of interest to the industry and the researchers, which is why they were all included. However, some elements are vital as they feed the SP part of the survey. These will be pointed out below.

MaaS SP design: attributes and levels

Turning now to the SP, there are many considerations to take into account when choosing attributes. First, the factors included should create a realistic choice for the respondent. Second, the number of attributes presented in each experiment should be small enough that the respondent can comprehend the task and make appropriate tradeoffs (Hensher 2006). Using the MaaS developments discussed above as a basis, attributes fall under two categories: transport mode specific attributes and non-mode specific attributes. The former include the actual modes contained in the plans as well as the additional features available for each mode, such as minivan access in car sharing or a 10-min cab guarantee (see Table 2). The latter include characteristics of the plans such as price and the transferability of unused elements to the next month. The attributes and levels were determined through a priori reasoning and evaluation of the local environment of the case study city. It was noted early in the design process that there is a significant flexibility-complexity tradeoff: while it would be desirable to include and test all possible modes, service options and innovative concepts, this would be too complex a cognitive task for respondents, especially since the whole concept of MaaS is new and needs to be understood.
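The transferability attribute can be read as the share of a mode's unused monthly allowance that carries over. The sketch below assumes transferability is expressed as such a share and applied to each mode separately; the function names and the 50% example are hypothetical and do not reproduce the levels used in the SP.

```python
def rolled_over(included, used, transfer_share):
    """Units of a mode carried into next month under a transferability rule.

    included: units of the mode in the plan (e.g. taxi miles, car sharing hours)
    used: units consumed this month
    transfer_share: fraction of the unused amount that may be carried over (0 to 1)
    """
    if not 0.0 <= transfer_share <= 1.0:
        raise ValueError("transfer_share must lie between 0 and 1")
    unused = max(included - used, 0.0)  # over-use does not create negative credit
    return unused * transfer_share


def next_month_allowance(included, used, transfer_share):
    """Plan allowance plus whatever is transferred from the current month."""
    return included + rolled_over(included, used, transfer_share)


# 30 taxi miles included, 18 used, half of the remainder transferable.
print(rolled_over(30, 18, 0.5))           # 6.0
print(next_month_allowance(30, 18, 0.5))  # 36.0
```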

We assumed that, if introduced, MaaS would include only existing transport modes and would thus be built from the current service provision of the case study city. A dataset was created covering all non-private modes of the city, with characteristics for each mode and supplier such as their business models, pricing structures and subscription possibilities, booking and payment options (if applicable) and ICT availability (booking apps etc.). This dataset was used to determine the attributes used in the SP.

Transport mode specific attributes and levels

Public transport Public transport in London includes bus, tube, overground, Docklands Light Railway (DLR), tram, rail and riverboat. Given the large number of public transport options in the city, presenting all of them individually would overcomplicate the public transport attribute. As these modes are already integrated through the Oyster card ticketing and payment system, they were aggregated and presented as “public transport” in the SP. Three public transport levels were chosen: none, unlimited bus and unlimited public transport. These follow the bus pass and Oyster travelcard (unlimited travel) options currently available in the city. The unlimited public transport level involved an additional complication: London travelcards (unlimited public transport) have a zonal aspect. Thus, the level had to be ‘unlimited public transport in your zones’, where the zones were fed from earlier elements of the survey. This tailoring was crucial, as prices differ greatly depending on which zones are included. If the respondent stated in the RP survey that they had a travelcard, the travelcard zones were fed through from there. Two further elements also had to be fed from the RP survey: eligibility for discounts or free travel, and disabilities. Both were included as questions in the RP survey and fed into the public transport levels and the associated price levels (discussed below).

Bike sharing In London, there is one main bike sharing scheme, Santander Cycles (formerly Barclays Bikes). The two levels are none and unlimited access for 30 min of use at a time, which matches the current operation of the service. An additional feature allowed the bike sharing rental time to be increased to 60 min at a time. As with public transport, if the respondent stated in the RP survey that they have a disability that prevents them from cycling, this mode was excluded.

Taxi London has a vast number of taxi services, including the London black cab, ride-hailing services (e.g. Uber) and hundreds of minicab companies. A base dataset was created including a selection of these, containing information about their availability, business models and pricing. After evaluating the extent of taxi provision, the decision was made to group all of these together to reduce the complexity of the task. Before determining the levels for the taxi attribute, an analysis was conducted on data from the London Travel Demand Survey (LTDS) to get an idea of the distances people tend to travel by taxi. It quickly became clear that there are huge differences in these amounts and that it is very easy to set levels far from what respondents would like to see (this was also tested with focus groups). To create better, tailored levels, which improve the information gained through the SP, a pivot-style design was used. Information from the RP survey about taxi distance travelled in an average month was used as the baseline from which the attribute levels were pivoted. This approach had to be slightly altered, as respondents with low or zero taxi use would have little or no variation (or even negative values) in their levels. Based on test simulations, 10 miles per month was chosen as the threshold below which pivoting was not used and fixed attribute levels were adopted instead. There are also some additional feature attributes for taxi, of which at most one was presented per alternative. These attributes are presented in Table 2.
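The pivoting rule described above can be sketched as follows. Only the 10-mile threshold comes from the text; the percentage pivots and the fixed fallback levels are hypothetical placeholders. Multiplicative pivots are used here so that levels cannot go negative; with additive pivots (e.g. the reference minus a fixed number of miles), a low reference could produce negative values, which is one reason a fallback is needed.

```python
PIVOT_THRESHOLD_MILES = 10.0  # from the text: below this, no pivoting

# Hypothetical values for illustration only.
PIVOT_SHARES = (-0.25, 0.0, 0.25, 0.5)  # changes relative to the reference
FIXED_LEVELS = (0.0, 10.0, 20.0, 40.0)  # used for low or zero taxi users


def taxi_levels(reported_monthly_miles):
    """Return taxi-mile levels for one respondent's SP alternatives.

    Respondents below the threshold get fixed levels, because pivoting around a
    very small reference would give little or no variation between levels.
    """
    if reported_monthly_miles < PIVOT_THRESHOLD_MILES:
        return list(FIXED_LEVELS)
    return [round(reported_monthly_miles * (1 + s), 1) for s in PIVOT_SHARES]


print(taxi_levels(4))   # [0.0, 10.0, 20.0, 40.0]
print(taxi_levels(40))  # [30.0, 40.0, 50.0, 60.0]
```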

Car Sharing (Car Clubs) By car sharing (called car clubs in the UK) we mean short-term car rental services, where users can pay by the day, by the hour or in some cases even by the minute. When mapping out the offerings, differences in their setups were identified. There are two main types of car sharing services available in London: company-owned fleets and peer-to-peer car sharing (or peer-to-peer car rental). We decided to focus on the first type, as the business models of the latter tend to be based on individual agreements between the supplier and the customer and would thus be difficult to include in MaaS plans. Six main car sharing operators (based on fleet size) were identified, each with different geographical coverage. As in the taxi case, pivoting was used here, except for people who do not use car sharing at all, who were assigned predetermined levels. A report on car clubs in London stated that 80% of car club members use a car club car fewer than 6 times a year and that the average hire lasts 6.9 h (Steer Davies Gleave 2016). As these values are quite low, the levels for car sharing were also kept low. Respondents without a driving licence, or with a disability preventing them from driving, were not shown this attribute. Car sharing also has some additional features, which can provide insights not only into MaaS plans but also into the characteristics that would encourage more people to use this mode.
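The exclusion rules spread across the mode descriptions (a disability for public transport and bike sharing; licence possession and disability for car sharing) can be gathered into a single filter. This is a sketch only: the field names are hypothetical, and the exact disability questions asked in the RP survey are not given here.

```python
from dataclasses import dataclass


@dataclass
class RPAnswers:
    """Hypothetical subset of RP survey answers used to filter modes."""
    has_driving_licence: bool
    cannot_cycle: bool = False                 # disability preventing cycling
    cannot_drive: bool = False                 # disability preventing driving
    cannot_use_public_transport: bool = False  # disability preventing PT use


def eligible_modes(rp: RPAnswers) -> list[str]:
    """Modes that may appear in this respondent's SP plans."""
    modes = []
    if not rp.cannot_use_public_transport:
        modes.append("public_transport")
    if not rp.cannot_cycle:
        modes.append("bike_sharing")
    # The text describes no exclusion rule for taxi.
    modes.append("taxi")
    if rp.has_driving_licence and not rp.cannot_drive:
        modes.append("car_sharing")
    return modes


# No licence and unable to cycle: only public transport and taxi remain.
print(eligible_modes(RPAnswers(has_driving_licence=False, cannot_cycle=True)))
```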

Other modes Other modes, such as car rental, ride sharing and demand responsive transport, were also considered but were excluded from the final design. Car rental was excluded because we wanted to focus on short, urban trips, and for urban trips in London car rental is very similar to car sharing. However, it is less flexible for seamless door-to-door mobility (which is the aim of MaaS), since car rental points are usually in specific locations and users need to travel there to collect and return the cars. Car sharing is much more flexible; even with round-trip car sharing, the pick-up points are much more dispersed around the city, allowing for more options. With newer models, such as free-floating car sharing, which already exists in parts of London, users have complete flexibility. To include longer-term hire, the SP includes daily car sharing attribute levels in addition to the usual hourly levels. That said, in other cities/areas where car sharing is not available, car rental could be included instead. Ride sharing falls under the same category as peer-to-peer car sharing, in that it is based on individual agreements between the customer and the supplier. Further, peer-to-peer and demand responsive services are not well known in the case study area, and in an SP experiment they would need to be explained in much more detail for respondents to understand what they were being asked to choose. It should be noted that these modes could be an integral part of MaaS schemes and future SP experiments should aim to include them.

It has to be pointed out that these SP plan designs focus on the features of taxi and car sharing. The reason is that these modes are provided by private companies that can offer several innovative features to enhance the customer experience. These features can be important elements in customising the MaaS user experience.

Non-mode specific attributes and levels

The non-mode specific attributes included in the SP are the cost of the plan, transferability and special prizes, presented in Table 2 above.

Regarding price, only the total cost of each plan was presented, not the individual price of each element. This was done so that respondents would evaluate their willingness to pay for all the elements included in the plans, rather than try to compare individual unit prices. It is also closer to how these plans would be shown in a real market setting. To determine the prices presented to the respondents, each mode-specific attribute had a 'base price' established from the dataset of all non-private modes in the city. The price of the presented plan is pivoted around the sum of the base prices of the included modes. The base value for each mode was chosen after detailed evaluation of the current market offerings of transport service providers. For modes where only a single provider exists (or one provider dominates), that provider's price was taken. These modes are public transport and bike sharing (the authors acknowledge that there is now more than one bike sharing service in the city; however, when the survey was designed and carried out, the others were not yet available). The public transport fare system in London is very intricate. For bus passes, discount levels need to be taken into account, while for Travelcards (season tickets) there is also a zonal dimension. As such, the base price for public transport used Transport for London's price table but was tailored to each respondent based on their answers in the RP survey. For bike sharing, Santander Cycles only offers annual subscriptions, not monthly ones. Based on research into historical prices (when other subscriptions were also offered), a base price of £25 was decided upon. Taxi and car sharing are the two modes with multiple service providers. For both, the payment models of the top 6–10 providers were collected and averaged to obtain the base prices. The resulting base price for taxi is £4/mile. For car sharing, the base price depends on the amount included in the plan: for under 8 h of usage, it is a fixed cost of £10 plus a usage fee of £6.5/hour; for over 8 h of usage, the fixed cost is £20 with £5 for each hour and £45 for each day. Even though this calculation method is quite complex, we wanted to mimic market prices as closely as possible to obtain realistic values. The base price of each plan is the sum of the base prices of its transport modes. These sums are then multiplied by a cost attribute level (see Table 2) to arrive at the plan costs in each SP scenario.
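The pricing rule can be sketched as a sum of per-mode base prices scaled by a cost level. The taxi, bike sharing and car sharing tariffs are taken from the text; the public transport base price is passed in because it was tailored per respondent from TfL's fare table, and the handling of exactly 8 h, the example travelcard price and the cost level values are assumptions for illustration.

```python
TAXI_GBP_PER_MILE = 4.0            # from the text
BIKE_SHARING_GBP_PER_MONTH = 25.0  # from the text


def car_sharing_base_price(hours: float, days: int = 0) -> float:
    """Car sharing base price (GBP) using the two tariffs described in the text."""
    if hours == 0 and days == 0:
        return 0.0  # car sharing not included in the plan
    # Assumption: the 'under 8 h' tariff applies only to plans with no full
    # days and fewer than 8 hours; everything else uses the 'over 8 h' tariff.
    if days == 0 and hours < 8:
        return 10.0 + 6.5 * hours
    return 20.0 + 5.0 * hours + 45.0 * days


def plan_price(pt_base_gbp: float, bike_included: bool, taxi_miles: float,
               car_hours: float, car_days: int, cost_level: float) -> float:
    """Presented plan price: sum of mode base prices times a cost level.

    pt_base_gbp stands in for the respondent-specific TfL fare and
    cost_level for one of the Table 2 multipliers; both are inputs here.
    """
    base = (pt_base_gbp
            + (BIKE_SHARING_GBP_PER_MONTH if bike_included else 0.0)
            + TAXI_GBP_PER_MILE * taxi_miles
            + car_sharing_base_price(car_hours, car_days))
    return round(base * cost_level, 2)


# Hypothetical plan: £150 travelcard, bike sharing, 10 taxi miles, 5 h car sharing.
print(plan_price(150.0, True, 10, 5, 0, 1.0))  # 257.5
```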

Transferability refers to whether credit left over from one month can be transferred to the next. It has two levels: “none of your credits can be transferred to the next month” and “all of your credits can be transferred to the next month”. The special present attribute was included to see whether such incentives can motivate people to subscribe to certain plans. The hypothesis is that if someone subscribes to a plan that includes public and shared modes they may not have used before, there is a chance they will try them and start using them. Transferability and the special present were not included in the menu, as the focus groups made it clear that the menus had to be kept very simple, or respondents would not comprehend the task and would simply click through at random.

MaaS SP design: scenario design testing and presentation

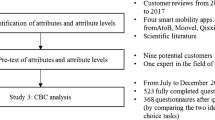

Detailed focus groups, coupled with the review of transport services described above, led to a design that is both relevant for MaaS plan research and understood by respondents. A flowchart of the design activities is presented in Fig. 1. First, the local transport environment was mapped out, including transport modes, their business models and pricing structures. Next, the number and type of modes to be included in the survey were narrowed down based on a priori reasoning to keep the survey understandable to respondents. The remaining modes were then narrowed down or grouped based on their business models, and only models that are feasible and easily understandable were kept. Once a final group of modes with a narrow segment of business models was selected, a mock-up of the SP was drawn up. Various design and presentation options were tested and refined in three waves of focus groups. The groups included individuals from a range of socio-demographic backgrounds. The first and third focus groups were smaller (5 individuals each), while the second consisted of two groups of around 10 individuals. These took the format of email feedback, personal interviews or a combination of both, covering the preferred design, the information presented and the wording. Initially, various SP presentation designs were tested, including placing each plan on a separate page followed by a final page asking respondents to indicate their preference. Even though some people preferred this as it was easier to understand, overall it significantly lengthened the survey and was therefore less favoured than showing all the plan options on one page.

The final design is as follows. To introduce respondents to the SP exercise, a description of the Mobility as a Service concept was shown. An example of this can be seen in Fig. 2. As the concept is new and unknown to the wider community, it had to be explained in easily understood terms. The difficulty lay in doing this briefly and concisely while making sure all the key MaaS characteristics were included. All three focus groups provided extensive feedback on a number of wording options, all of which was taken into account to arrive at the description presented in Fig. 2. The wording throughout the SP was put into context: everything was phrased using London terminology and analogies, after earlier wordings did not resonate with respondents. The description was phrased from the point of view of the journey planner function, as this is one of the most relatable features of the service. This approach greatly increased respondents' overall understanding of MaaS as a concept and of how it would be relevant to a user.

Moving on to the SP pages, in each scenario task (page) the respondents were presented with a single choice between 4 different hypothetical plans: three fixed plans and one menu option where users can determine which modes, and how much of each, they would like (Stated Adaptation element). These were presented side by side, but only one of them could be chosen. Thus, the outcome of a choice is either one of the three fixed plans or any combination of the individual attributes in the menu option. The menu option is presented alongside the others to allow analysis of the flexibility-complexity tradeoffs. Further, the flexibility of the menu option is priced, meaning that the price attribute of the menu is always greater than that of all the other plans. This approach was chosen to allow analysis of people's willingness to pay for flexibility within MaaS plans.

A number of conditions were imposed on each page in order to focus on the research objectives of this study. First, at most two of the mode-specific attributes could take the 'none' level. This had to be imposed because the whole point of MaaS plans is that they offer users a combination of transport modes, and if there is only one mode in the plan, this aim is lost. This meant that if, for example, a respondent did not have a driving licence and had a disability that prevented them from cycling, the other two modes (public transport and taxi) had to be included. Another element we wanted to test was how the additional features affected the choice of plans. Hence, a distinction was made between two types of plans: basic plans, which did not have any additional features, and premium plans, which did. However, even in the premium plans only one additional feature per mode was allowed, in order to decrease the cognitive load on respondents. In the first two scenarios there were two basic plans and one premium plan; in the last two scenarios there were two premium plans and one basic plan. The respondents were not aware of this distinction. Further, some special features were limited to the premium plans (as presented in Table 2).
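The page conditions above can be expressed as a validity check applied to each generated choice task. This is an illustrative sketch, not the survey's actual code: the `Plan` structure and mode names are hypothetical, and it assumes a mode that is missing from a plan counts as the 'none' level.

```python
from dataclasses import dataclass, field

MODES = ("public_transport", "bike_sharing", "taxi", "car_sharing")


@dataclass
class Plan:
    # Amount of each mode in the plan; a missing mode or 0 is the 'none' level.
    levels: dict[str, float]
    # Additional features attached to each mode (empty for a basic plan).
    features: dict[str, list[str]] = field(default_factory=dict)

    @property
    def is_premium(self) -> bool:
        return any(self.features.values())


def plan_ok(plan: Plan) -> bool:
    """At most two 'none' modes and at most one additional feature per mode."""
    n_none = sum(1 for m in MODES if plan.levels.get(m, 0) == 0)
    return n_none <= 2 and all(len(f) <= 1 for f in plan.features.values())


def page_ok(fixed_plans: list[Plan], task_index: int) -> bool:
    """Tasks 0-1 need one premium fixed plan; tasks 2-3 need two."""
    expected_premium = 1 if task_index < 2 else 2
    n_premium = sum(p.is_premium for p in fixed_plans)
    return (len(fixed_plans) == 3
            and n_premium == expected_premium
            and all(plan_ok(p) for p in fixed_plans))


basic = Plan({"public_transport": 1, "taxi": 20})
premium = Plan({"public_transport": 1, "taxi": 20}, {"taxi": ["10-min guarantee"]})
print(page_ok([basic, basic, premium], task_index=0))  # True
```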

An example of the stated preference experiment presented to the users is depicted in Fig. 3. Icons for the travel modes, hover-over explanations and colours were used to make the presentation visually engaging for respondents. Pictorial representations make users' perceptions of modes more homogeneous and make the task more interesting and easier to understand (Morikawa 1989). During the focus groups, understanding and acceptance of the information increased as these elements were added to the design. The mode icons were kept the same as those used in the activity diary (tracking/verification) section of the survey that the users had filled out the week before.

Each respondent was presented with four SP tasks, in which levels were chosen based on a cleaned random experimental design. According to Walker et al. (2015), a random design performs as well as any other design and, like all designs, performs even better when it is cleaned to remove choice tasks in which one alternative clearly dominates the others (so that there is no real tradeoff for respondents). Hence, a condition was imposed on the scenarios so that each is internally consistent while making sense with regard to the research topic: if the sum of the base prices of plan A is greater than the sum of the base prices of plan B, then the presented price of plan A must also be greater than that of plan B. This method helps minimise the chance of strictly dominating alternatives, which would be problematic as they may lead to substantially biased estimates (Bliemer et al. 2014).
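One way to enforce the consistency condition is rejection sampling: draw cost levels at random, as in a random design, and discard any draw in which a plan with a higher base price ends up with a lower or equal presented price. The text does not say how the condition was enforced, so this is an assumed implementation, and the cost levels are placeholders for those in Table 2.

```python
import random
from itertools import combinations


def order_preserved(base_prices: list[float], shown_prices: list[float]) -> bool:
    """True if every strict base-price ordering also holds for the shown prices."""
    for i, j in combinations(range(len(base_prices)), 2):
        if base_prices[i] > base_prices[j] and not shown_prices[i] > shown_prices[j]:
            return False
        if base_prices[j] > base_prices[i] and not shown_prices[j] > shown_prices[i]:
            return False
    return True


def draw_prices(base_prices: list[float], cost_levels: list[float],
                rng: random.Random, max_tries: int = 1000) -> list[float]:
    """Randomly draw one cost level per plan, rejecting inconsistent draws."""
    for _ in range(max_tries):
        shown = [round(b * rng.choice(cost_levels), 2) for b in base_prices]
        if order_preserved(base_prices, shown):
            return shown
    raise RuntimeError("no consistent draw found")


rng = random.Random(1)
print(draw_prices([180.0, 220.0, 260.0], [0.8, 0.9, 1.0, 1.1, 1.2], rng))
```

Plans with equal base prices impose no ordering constraint here, which is a further assumption.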

In addition, it is important to acknowledge the differences between the first three (fixed) bundles and the fourth, customisable menu. The literature has widely examined the idea that, from both a producer and a customer point of view, there is value in allowing users to create their own products (Fogliatto et al. 2012). On the supply side, a premium price is charged to offset the potential additional costs of customisation (Piller et al. 2004; Chen and Wang 2007; Zhang and Tseng 2007). On the demand side, the additional value consumers gain from being able to design their own products the way they want increases their willingness to pay (Fiore et al. 2004; Schreier 2006; Franke et al. 2010). We wanted to test whether this holds for MaaS plans, so the price level for the ‘create your own’ menu option was set higher in each experiment.
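The menu premium can be sketched as choosing the menu's cost level strictly above the highest level drawn for the three fixed plans in the same task, then applying it to whatever the respondent selects. This is one possible reading of "the price level was set higher": it constrains the multiplier, not the final price, and the candidate levels are hypothetical.

```python
import random


def menu_cost_level(fixed_levels: list[float], candidate_levels: list[float],
                    rng: random.Random) -> float:
    """Pick a menu cost level strictly above every fixed plan's level."""
    ceiling = max(fixed_levels)
    above = sorted(c for c in candidate_levels if c > ceiling)
    if not above:
        raise ValueError("no candidate level exceeds the fixed-plan levels")
    return rng.choice(above)


def menu_price(selection_base_gbp: float, level: float) -> float:
    """Price of the respondent's own selection under the menu cost level."""
    return round(selection_base_gbp * level, 2)


rng = random.Random(0)
level = menu_cost_level([0.9, 1.0, 1.1], [0.8, 0.9, 1.0, 1.1, 1.2, 1.3], rng)
print(level, menu_price(200.0, level))
```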

In each SP task, respondents were asked to state their preference among the four offered plan options. After each SP task, they were asked whether they would actually buy their chosen plan if it were available today. The response options were: (1) Yes, I would definitely buy this plan, (2) Yes, I would consider buying this plan, (3) No, but I would use MaaS as pay-as-you-go, and (4) No, I would not use MaaS at all. This additional question was important to better understand respondents' willingness to purchase MaaS plans. The pay-as-you-go option was included to capture those individuals who like the concept of an integrated service with a single payment and ticketing option but would not want to commit to monthly plans. However, as respondents were given no further information about how much the pay-as-you-go option would cost them, these responses cannot be used for detailed analysis. That would require a separate SP designed just for the pay-as-you-go option, which would have significantly increased the length of the survey and the burden on respondents.

The option to use MaaS as pay-as-you-go is also provided, as it is important to differentiate between people who would not want to subscribe to their chosen plan and those who are not interested in MaaS at all.

MaaS impact and attitudes

The final element of the London Mobility Survey consists of concluding questions about individuals' attitudes and perceptions towards MaaS, as well as the expected impact of MaaS on their mode choices. These are divided into questions related to the specific MaaS plan the respondent chose in each SP task and questions related to MaaS in general.

After each SP task, respondents indicate whether they believe their chosen plan would increase, decrease or have no impact on their current use of each transport mode. The responses are presented by transport mode, each with three radio buttons to choose from. The transport modes are shown as icons, the same ones used in the SP, to ease understanding. This question was presented to every user, regardless of whether they indicated that they would be interested in purchasing their chosen plan (meaning that analysis of these responses should be done with caution).

The questions relating to MaaS in general are presented on a separate page after all the SP tasks are complete. The first half of these questions relate to respondents' attitudes towards certain elements of MaaS; these are all statements with 7-point Likert scale responses. The second half focuses on the impact that MaaS could have on private car and public transport use. Respondents who are car users are asked to indicate the top 3 ways that MaaS would impact their private vehicle use, and public transport users are asked the same question about their public transport use.

Lessons learned for MaaS SP design process

Designing SP experiments is always challenging, especially when they involve services not yet available in the market. In the case of MaaS, this is further complicated because the MaaS concept is still immature and MaaS products are constantly evolving (see the “Mobility tools and MaaS plans” section for a review of existing MaaS products). The discussion above presents the main principles of bundling; however, the ideal design for future SPs and real-life applications may change as the concept matures.

To arrive at the SP design presented above, we tested several designs and visualisation approaches. Some important learning points should be highlighted to help guide further research. First, we initially tested SP designs where the price of each service was presented as well as the plan price. However, this was not well received by respondents in our focus groups and personal interviews. Several individuals stated that this was “too much effort” for them to read and understand, and that they would rather just have the plan price, as is currently the case when they purchase telecommunication plans. As the MaaS concept is new, the focus group participants were more comfortable with plans that were shown similarly to services they are familiar with.

Second, we also wanted to test the concept of being able to use MaaS in various geographic areas (i.e. in different areas or zones of Greater London and in other cities: the concept of MaaS roaming). However, this additional attribute placed too much extra burden on respondents and drew attention away from the core MaaS product.

Third, the number of modes presented and their levels had to be changed several times throughout the design process. Even though MaaS plans could include other modes and service designs, including these creates too much of a burden for respondents. From the focus groups, we found that including fewer modes increased comprehension of the overall idea of MaaS plans. It should be noted that the survey was carried out at a time when most respondents were unaware of the MaaS concept. Once the service matures and awareness increases, it may be possible to test more complex plans. Until then, however, simpler plans are preferable.

Fourth, a number of presentation methods were tested during the focus groups. These include: (1) presenting each plan on an individual page, followed by a final summary page where respondents were asked to choose; (2) presenting the plans in a table format, with the modes in one column and the prices in another; and (3) including the pay-as-you-go option in the experiment itself as a 5th choice. Regarding (1), a couple of focus group members liked this approach, as it was clear and easy to understand. However, the overwhelming feedback was that presenting the plans page by page took too long and that they did not want to click so many times. This approach would probably result in increased survey abandonment. Options (2) and (3) were better received as they were shorter; however, they were still less preferred than presenting the plans similarly to the way respondents are used to seeing plans in real life (the design we eventually chose). Interestingly, option (3) caused some unnecessary confusion, and presenting the pay-as-you-go option next to the plans drew away too much attention. As such, we decided to include the purchasing choice as a separate question, so that respondents could focus solely on their preferences for MaaS plans during the SP. As the MaaS market matures, other MaaS SPs may also be worth exploring; for example, a base MaaS plan with add-ons may be a viable approach.

Application and data collection

In the following section, two versions of the survey are discussed. The first is a ‘Basic application’, where the design detailed above is implemented through a web-based survey. Here, the respondents are recruited to fill out the RP survey followed by the SP on an online platform. The second, the ‘Extended application’, takes advantage of developments in smartphone-based survey tools and builds the MaaS survey as an extension to a smartphone-based prompted recall travel diary, utilising the additional information attainable from such surveys.

Basic application

Sample and completion

Data was collected in April 2017 via an opinion panel. Only people living in London over the age of 18 were eligible. Participants were incentivised with the “currency points” used by the market research company; these points accrue over time and can be cashed in for vouchers. Unfortunately, we were not able to obtain the exact monetary value of the points or the overall number of people who were invited to participate in the survey. However, the requested 1000 completed surveys were reached within 3 days, after which the survey link was deactivated.

1374 people started the survey (that is, they had a unique identifier). Of those who started, 24% provided incomplete data: 11% abandoned the survey without answering any questions, a further 8% started the RP survey but did not finish it, and a further 5% completed the RP survey but did not finish the SP. These rates are summarised in Table 3. In total, 1046 individuals completed all elements of the London Mobility Survey, resulting in 4184 SP observations. Based on the information we have, the AAPOR minimum response rate is 1046/1374 = 76% (AAPOR 2015).
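The headline completion figures follow directly from the two reported counts. Note that the denominator is the number of people who started the survey, because the number of panel members invited is unknown; a full AAPOR response rate would also count invited non-starters.

```python
STARTED = 1374            # respondents with a unique identifier
COMPLETED = 1046          # completed every element of the LMS
TASKS_PER_RESPONDENT = 4  # SP choice tasks per respondent

response_rate = COMPLETED / STARTED
incomplete_share = (STARTED - COMPLETED) / STARTED
sp_observations = COMPLETED * TASKS_PER_RESPONDENT

print(f"response rate: {response_rate:.0%}")     # response rate: 76%
print(f"incomplete: {incomplete_share:.0%}")     # incomplete: 24%
print(f"SP observations: {sp_observations}")     # SP observations: 4184
```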

The characteristics of those who completed the survey are presented in Table 4. The statistics are compared with the 2011 Census and information available from the Greater London Authority (ONS 2011; GLA 2015). For the Census data, only those over 18 were taken into account to make them comparable to the LMS values. Comparing the two columns shows that the LMS sample is not representative of the wider London population. For example, White ethnic groups are significantly over-represented in the sample, while Black and Asian ethnic groups are under-represented. Caution should therefore be exercised when interpreting results from the current sample, or the sample should be weighted if the results are to be generalised.

First, it is worth looking at a snapshot of the tradeoffs between the menu and the fixed plans. The 1046 individuals who finished the survey completed a total of 4184 choice tasks. Respondents considered the menu in 23% (959) of the tasks. We were only able to capture respondents who adjusted the toggles and left them set (rather than putting them back to zero); as such, the 23% is probably an underestimate of the share of tasks in which this option was actually considered. In total, 443 individuals (42% of respondents) considered the menu in at least one choice task. Breaking this down further, 103 of these people (23%) considered the menu in all four choice tasks, 61 (14%) in three, 85 (19%) in two and 194 (44%) in only one. Regarding actual choices, the ‘create your own’ plan was eventually chosen in only 16.6% of all cases, while in most cases the choice was one of the three fixed plans. Overall, 305 individuals (29% of respondents) chose the menu in at least one of the tasks. Looking at this in more detail, 79 (26%) chose the menu in all four tasks, 46 (15%) in three, 63 (21%) in two, and 117 (38%) in one of the choice tasks.
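The per-person breakdowns can be cross-checked against the task-level totals: weighting each group by the number of tasks involved reproduces the 443 individuals and 959 tasks for menu consideration, and implies that the menu was chosen in 697 tasks (about 16.7% of 4184), in line with the reported 16.6%. A small script using only the counts reported above:

```python
RESPONDENTS = 1046
TASKS = 4184

# Number of individuals by how many of their four tasks involved the menu.
considered = {4: 103, 3: 61, 2: 85, 1: 194}
chosen = {4: 79, 3: 46, 2: 63, 1: 117}


def summarise(dist: dict[int, int]) -> tuple[int, int]:
    """Return (individuals with at least one such task, total tasks implied)."""
    people = sum(dist.values())
    tasks = sum(k * n for k, n in dist.items())
    return people, tasks


for label, dist in (("considered", considered), ("chosen", chosen)):
    people, tasks = summarise(dist)
    print(f"menu {label}: {people} people ({people / RESPONDENTS:.0%}), "
          f"{tasks} tasks ({tasks / TASKS:.1%})")
```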

Next, the response burden is examined to evaluate the complexity of the tasks respondents faced. The response burden can be calculated using the rating scheme of Axhausen et al. (2015), which assigns a numerical value to each question type based on the complexity of the task. The values range from 1 for simple yes–no questions to 6 for complex open-ended questions. Stated choice questions are also assigned values, based on the number of questions, alternatives and variables in each scenario. In their study, Axhausen and colleagues assigned response burden points to 52 surveys, with results ranging from 48 to 3300 points (mean 484.2, s.d. 515.2, median 330). The range of response burdens is very large, as their sample contained both very detailed and very short surveys. The LMS received between 173 and 267 points under the same scheme; the range is due to the skip logic for certain questions. Compared with the 52 response burdens presented in Axhausen et al., the LMS would fall within the 30th percentile.

In addition, survey completion times are examined as a proxy for survey length and to gain further insight into the burden on respondents. This is important because longer questionnaires cause lower engagement and fatigue, which can decrease response quality and lead to survey abandonment (Galesic and Bosnjak 2009; Callegaro et al. 2015). This is especially true for web-based surveys, where participants are used to the fast-paced nature of the Web. Some studies (e.g. Crawford, Couper and Lamias 2001) indicate that questionnaires should take no longer than 10 min; however, the general consensus is that the maximum length is around 20 min (Gunn 2002; Callegaro et al. 2015).

Table 5 presents the completion times for the RP survey. Most people completed it in between 4 and 8 min, and the average response time was 7 min 19 s. 18.4% of respondents completed the survey in under 4 min, while 7.2% took over 12 min. Those who took over 12 min are likely to have taken a break or to have engaged in other activities simultaneously; in a few extreme cases, completion took over 60 min (0.5% of cases). As many questions were only shown if the respondent had a private vehicle (skip logic), those who did not have a vehicle had fewer questions. This is reflected in the completion times (Table 6): the mean completion time for those with vehicles is almost twice that of those without. Further, not all questions were mandatory, which also contributes to the spread in completion times, although in most cases the item non-response for the non-mandatory questions is under 10%.

Turning to the SP, we similarly examine the response times. There has recently been scepticism about online SPs, especially those using recruited panels, as there is a panel attrition effect whereby respondents are more likely to run through the survey without truly contemplating the information (Olsen 2009; Campbell et al. 2013). Studies show that this results in reduced data quality. For example, Börger (2016) reported that longer response times decrease choice randomness (unless these response times are extreme). In Börger's study, the mean completion time for the full set of 6 choice tasks was 3 min 4 s. However, each choice task only had 2 alternatives and a no-change option, which is much simpler than the MaaS bundle-plus-menu SP described above. It is also interesting to examine what happens to response times from one choice task to the next. Studies observe that as respondents progress through choice situations, their response times tend to fall and become more consistent (Brown et al. 2008; Vista, Rosenberger and Collins 2009). This is due to a number of reasons, including learning, the use of heuristics and attempts to finish the survey as quickly as possible.

The response times for the LMS SP are presented in Table 7. The average completion time for the whole SP (all 4 choice situations) is 5 min 22 s. However, the standard deviation is extremely high due to a few extreme outliers. As such, a second average of 3 min 55 s is presented, which excludes those who probably stopped for a longer period of time between choice tasks before continuing. Putting this together with the RP survey response times, we can conclude that the survey took between 10 and 15 min for most respondents, which is not too long compared with the guidance in the literature.
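The second average amounts to a mean computed after dropping respondents whose total SP time suggests a long break. The cut-off used for Table 7 is not reported, so the 30-min threshold and the sample times below are purely illustrative and are not LMS data.

```python
from statistics import mean


def sp_time_summary(total_seconds: list[float],
                    pause_threshold_s: float) -> tuple[float, float, int]:
    """Mean SP time with and without respondents presumed to have paused.

    Returns (mean of all, mean excluding times above the threshold,
    number of respondents excluded).
    """
    kept = [t for t in total_seconds if t <= pause_threshold_s]
    return mean(total_seconds), mean(kept), len(total_seconds) - len(kept)


# Made-up totals in seconds: four uninterrupted respondents and one long pause.
print(sp_time_summary([200, 230, 250, 260, 7200], pause_threshold_s=1800))
```

A single long pause pulls the unfiltered mean far above the typical completion time, which is why the filtered mean is the more informative figure for survey length.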

It is also worth examining whether considering the menu (create your own) option influences response times in each SP task. Out of the 4184 choice tasks in total, the menu was considered in 959 (23% of cases). Table 8 presents the response times per choice task based on whether or not the menu was considered. If a respondent tried to create their own plan (adjusted the toggles) but did not choose it in the end, that choice task is included in the “Menu considered” column. As expected, the mean response time for choice tasks where the menu was considered is higher (78.15 s) than for those where it was not (70.66 s), although the difference between the two is smaller than anticipated. Interestingly, the standard deviation for those who did not consider the menu is higher than for those who did. A reason for this may be that individuals who tried the menu were more involved in, and committed to, the survey, and were thus less likely to abandon it for longer periods before continuing.

Finally, a number of interesting observations can be made by breaking down the response times for each SP page. Firstly, when descriptive statistics are calculated on the whole sample, very high standard deviations are observed. Looking at the figures in Table 8, this is especially true for the first choice situation and even more so for the second. The extremely high standard deviation for the second choice task is caused by a handful of people who abandoned the survey for days before completing it. For the first choice task, 228 respondents spent more than 3 min on the task and 68 spent more than 5 min, which is much more than for the later choice tasks. The main reason is that this was the first time respondents saw such an experiment, so they needed more time to process it. Some of these long times may also result from respondents looking at the SP page, doing other activities and coming back to it later. The number of respondents who ‘paused’ the survey decreases significantly for the last 3 choice tasks.

In line with the literature, LMS SP response times decreased as respondents worked through the choice tasks. Regardless of whether outliers were taken into account, response times decreased significantly across the 4 pages (Table 9). The results also show that while the mean response time for the second page is over 52% lower than for page 1, the same rate of decrease does not hold for later pages; the response time declines at a decreasing rate.

Extended application: smartphone based LMS

With the increase in smartphone penetration over the last decade, researchers have increasingly used smartphones to collect detailed and precise mobility information about individuals (e.g. Vacca and Meloni 2015; Carrel et al. 2017). Data from such collection tools can provide valuable insights into people's mobility habits and decision-making processes. To better understand MaaS and its possible effects, it is valuable to know in greater detail how people's current mobility habits influence their MaaS preferences. As such, the SP experiment and the whole survey have also been implemented as an extension to a smartphone-based prompted recall travel survey that was adapted for the purposes of our study (FMS; Cottrill et al. 2013; Kamargianni et al. 2016b). Having access to a state-of-the-art smartphone-based travel survey provided a great opportunity to enhance the quality and quantity of data collected and available for analysis. An overview of the steps of this version of the London Mobility Survey is as follows (for more details, see Kamargianni and Dimakopoulos 2018):

- Step 1: Create your account;
- Step 2: Answer the pre-questionnaire;
- Step 3: Download the app, log in, and start tracking and validating your activities;
- Step 4: After a week of tracking go to the post survey to check your statistics and complete the MaaS SP.

After filling out the revealed preference (RP) survey about basic socio-demographic information and details about their current mobility tool choices, respondents are tracked via a smartphone app for a 7-day period. During the tracking period, they are reminded to verify their travel and non-travel activities and are asked to answer additional questions about their experiences (completed either on the web interface or on their smartphones). Both these questions and the questions in the RP survey focus heavily on usage of and attitudes towards the various characteristics of shared modes and innovative services. As the case study area is Greater London, all elements of the survey are adapted to fit the local environment. Once the 7 days of tracking are complete, the stated preference survey (dubbed the post-survey) becomes available. First, the RP data are aggregated and users are presented with a summary record of their mobility behaviour (aggregated to a month), broken down by transport mode and including information about travel cost, time, distance and number of trips. Travel cost was estimated using a combination of sources. Responses from the RP survey and the validation provided most of the information. In addition, Transport for London open APIs (Application Programming Interfaces) were connected to the back end of the survey to collect precise information about public transport fares (Kamargianni et al. 2016b). This mobility record (MR) is an important element in building the SP survey; an example can be seen in Fig. 4. Note that the legend was not shown in the actual survey; hover-over explanations of the modes were used instead.
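The text does not state how the 7 days of tracked, validated trips are scaled up to the monthly mobility record. One plausible approach, sketched below purely as an assumption, sums trips, distance, time and cost per mode over the tracked week and multiplies by the average number of weeks in a month (365.25 / 12 / 7, roughly 4.35). The `Trip` record, its field names and the example costs are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

# Assumption: weekly totals are scaled by the average number of weeks per month.
WEEKS_PER_MONTH = 365.25 / 12 / 7

@dataclass
class Trip:
    mode: str            # e.g. "walk", "bus", "tube", "car"
    distance_km: float
    duration_min: float
    cost_gbp: float      # from RP answers, validation or fare look-ups

def monthly_record(week_trips):
    """Aggregate one week of validated trips into a per-mode monthly record."""
    totals = defaultdict(lambda: {"trips": 0.0, "distance_km": 0.0,
                                  "time_min": 0.0, "cost_gbp": 0.0})
    for t in week_trips:
        m = totals[t.mode]
        m["trips"] += 1
        m["distance_km"] += t.distance_km
        m["time_min"] += t.duration_min
        m["cost_gbp"] += t.cost_gbp
    # Scale weekly totals to a month; modes never used simply do not appear.
    return {mode: {k: v * WEEKS_PER_MONTH for k, v in vals.items()}
            for mode, vals in totals.items()}

# Illustrative week for a respondent who walks, takes the bus and drives.
week = [
    Trip("bus", 3.2, 18, 1.50),
    Trip("bus", 3.1, 20, 1.50),
    Trip("walk", 0.8, 10, 0.0),
    Trip("car", 12.0, 30, 4.00),
]
record = monthly_record(week)
```

Modes the respondent never used are absent from the result, which matches the empty entries for unused modes in a mobility record such as Fig. 4; a display layer would round the scaled values.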

The MR shows the distance, time, number of trips and costs for each transport mode the individual uses. The data used to create this is a combination of RP survey responses, tracking and verification information as well as data gained from integrating external APIs into the system. The example seen in Fig. 4 is for a respondent who only walks, takes the bus, the tube and drives his personal vehicle; which is why the other transport modes have no values. The MR encourages the respondent to start thinking about their overall travel on a monthly basis as well as the concept of multimodality. Through our focus groups, we discovered that subjects found it very exciting to see their travel broken down as such and were very surprised by their statistics. The MR can be a great incentive for respondents to continue with the survey, especially if it is made interactive (not a current feature). For example, respondents could select on dials what breakdown they would like to see, such as weekday-weekend or hours of the day.

The order in which the modes were presented remained consistent across the mobility records and all the plans in each scenario. This way, respondents became familiar with the mode icons and could easily grasp the main plan concept without having to spend too much time on each page. We preferred this approach because MaaS is a new concept and we wanted to reduce the effort required of participants.

Even though in this version of the London Mobility Survey the SP is an extension of a smartphone-based travel survey, the SP element is only available on the computer-based online interface so that all the plans can be seen next to each other. Alongside the plans, a short version of the respondent’s personal MR was presented to give context (see Fig. 5). This is similar to a status quo alternative, even though the respondent cannot actually choose it. However, after being asked their preference among the four plans, respondents are asked whether they would buy their chosen plan if it were available today (the option to use MaaS on a pay-as-you-go basis is also available). Here, they can use their MR as a reference, knowing that if they did not buy the MaaS plan, they would be keeping their status quo. The remainder of this version is the same as the web-based version of the survey, which will allow later comparison between the different collection methods.

Response rates for advanced survey

The first two waves of data were collected from November to early December 2016, and the third in February 2017. The data were collected using Exterion Media’s community panel. During these three waves, 111 surveys were fully completed, meaning that the RP survey, 7 full days of validation and the SP were all finished. The waves were intentionally kept small so that all the elements could be adequately monitored and adjusted if necessary. We note that the sample size is smaller than that of the basic survey, but the purpose of these waves was to test an extension of the basic LMS using advanced, contemporary survey methods. The survey completion rate of 33% is quite high considering the length and involvement required from respondents (completion rates for each of the steps can be seen in Table 10). The main dropout point was the user validation of trips, which indicates an area for future development. As a reward for participation, respondents were entered into small-scale lotteries (approx. £20/prize) at 3 stages of the survey. Respondents were told the number of prizes drawn, but not their odds of winning. To aid completion, respondents also received personalised push notification reminders once a day, tailored to their progress in the survey (this only worked for those who reached the app download step).

Modelling considerations

The data resulting from the SP experiment differ from those of traditional single choice experiments. In the traditional bundling literature, only a small number of individual bundles are presented for respondents to choose from (e.g. Fojcik and Proff 2014; Hamilton and Koukova 2008; Madden et al. 2002). When customisable products are studied, either standalone menus are used (e.g. Kamakura and Kwak 2002) or fixed bundles are given that can be extended with items chosen from a menu (e.g. Ben-Akiva and Gershenfeld 1998). The MaaS SP includes not only fixed bundles of products but also a menu option, and these are mutually exclusive. This means that the choice set includes the three fixed bundles and all the possible combinations of the menu option, resulting in a very large choice set (over 26,000 alternatives). At this scale, enumerating the full choice set for modelling is computationally infeasible. There are two possible ways around this. First, sampling of alternatives can be used to reduce computational demands (e.g. Ben-Akiva and Gershenfeld 1998; Zhou and Kockelman 2008; Guevara and Ben-Akiva 2013). However, this method comes with some caveats. Most relevant to the MaaS SP data is that one of the requirements of the positive conditioning property set out by McFadden (1978), under which consistent estimates can be obtained, is that each choice observation be treated independently (Daly et al. 2014). The data used in this study violate this requirement, as there are repeated observations from the same individual that should not be treated independently. The second approach to dealing with large choice sets is aggregation of alternatives. This method uses aggregate categories of alternatives to estimate models with greatly reduced dimensionality (e.g. Kim et al. 2003; Pinjari et al. 2008).
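For readers unfamiliar with sampling of alternatives, the sketch below shows its simplest variant: the chosen alternative plus a uniform random sample of non-chosen alternatives, followed by a multinomial logit log-probability computed on that subset. Under uniform sampling the sampling-correction term is identical for every alternative in the subset and cancels, which is why no correction appears in the code. As argued above, this approach is not used in this study because the repeated observations violate the independence requirement; the sketch only illustrates the technique, and the integer alternative IDs and the `utility` function are hypothetical.

```python
import math
import random

def sample_choice_set(chosen, n_alternatives, k, rng):
    """Return the chosen alternative plus k-1 distinct, uniformly sampled others.

    Alternatives are identified by integers 0..n_alternatives-1.
    """
    # Draw from 0..n-2 and shift IDs at or above `chosen` up by one,
    # so the chosen alternative can never be drawn a second time.
    draws = rng.sample(range(n_alternatives - 1), k - 1)
    others = [a + 1 if a >= chosen else a for a in draws]
    return [chosen] + others

def mnl_log_prob(utility, chosen, choice_set):
    """log P(chosen | choice_set) for an MNL; uniform sampling needs no correction."""
    v = [utility(a) for a in choice_set]
    v_max = max(v)  # log-sum-exp for numerical stability
    log_denom = v_max + math.log(sum(math.exp(x - v_max) for x in v))
    return utility(chosen) - log_denom

rng = random.Random(42)
subset = sample_choice_set(chosen=1234, n_alternatives=26_000, k=20, rng=rng)
lp = mnl_log_prob(lambda a: 0.0, 1234, subset)  # equal utilities give log(1/20)
```

The remapping trick avoids building a 26,000-element list of non-chosen alternatives for every observation while still drawing them uniformly and without replacement.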

To provide some descriptive results using the aggregation of alternatives approach, we build an initial model. The alternatives from the menu are aggregated based on the types of modes they include. As there are four modes (public transport, bike sharing, car sharing and taxi), there are hypothetically 2⁴ = 16 possible combinations. However, the experiment required respondents to choose at least 2 modes, and since some combinations were never chosen, only 10 categories remained. Together with the three fixed plans, this resulted in a choice set of 13 alternatives. A mixed multinomial logit (MMNL) model with panel effects is used (Tsirimpa et al. 2007; Kamargianni 2015). Due to the repeated nature of the data, the panel (agent) effect should be captured with individual-specific error terms. This allows tastes to be constant across replications for the same respondent (intra-respondent taste homogeneity) while varying across respondents (inter-respondent taste heterogeneity) (Hess and Rose 2007).
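The aggregation step can be made concrete by enumerating the mode subsets. The sketch below reproduces the arithmetic in the text: 2⁴ = 16 subsets of the four modes, of which 11 contain at least two modes; the categories entering the model are those among these 11 that were actually chosen. The mode labels, the `fixed_1` to `fixed_3` names and the {mode: amount} representation of a menu choice are hypothetical.

```python
from itertools import combinations

MODES = ("public_transport", "bike_sharing", "car_sharing", "taxi")

# All 2**4 = 16 subsets of the four modes, and those allowed in the menu (>= 2 modes).
ALL_SUBSETS = [frozenset(c) for r in range(len(MODES) + 1)
               for c in combinations(MODES, r)]
MENU_CATEGORIES = [s for s in ALL_SUBSETS if len(s) >= 2]

def categorise_menu_choice(amounts):
    """Map a menu choice {mode: amount} to its aggregate category (set of modes)."""
    category = frozenset(m for m, q in amounts.items() if q > 0)
    if len(category) < 2:
        raise ValueError("a menu choice must include at least two modes")
    return category

def build_choice_set(observed_menu_choices, n_fixed=3):
    """Fixed plans plus only those menu categories that appear in the data."""
    observed = {categorise_menu_choice(a) for a in observed_menu_choices}
    menu = [c for c in MENU_CATEGORIES if c in observed]
    return [f"fixed_{i}" for i in range(1, n_fixed + 1)] + menu

# Hypothetical menu choices; amounts are illustrative units per mode.
choices = [
    {"public_transport": 1, "taxi": 50, "bike_sharing": 0},
    {"public_transport": 1, "bike_sharing": 60},
    {"taxi": 30, "public_transport": 1},  # same category as the first choice
]
choice_set = build_choice_set(choices)
```

In this toy example only two menu categories are observed, so the choice set has 3 + 2 = 5 alternatives; with the actual data the same procedure yields 3 + 10 = 13.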

The model results are presented in Table 11. Only the plan cost and the core modes are included in this initial model. Looking at the plan characteristics, as expected, the cost coefficient is negative and statistically significant at the 99% confidence level: as plans become more expensive, people prefer them less. Regarding the transport modes in the plans, all of them are statistically significant, but only the public transport options have positive coefficients. This shows that the type and amount of transport modes in the plans are important to users and should be carefully considered in the design of plans. Both the bus pass and the travelcard (the London public transport pass that allows travel on all modes) have positive coefficients, meaning that people strongly prefer plans that include one of these (in the SP design, the two were mutually exclusive). This result is expected, as the public transport system in London has very good coverage and is very popular among the city’s residents. All of the remaining mode coefficients are negative, meaning that people tend to prefer plans less when these modes are included. This may seem alarming at first to those questioning MaaS plans; however, the result is not unexpected. It is well known that travel is a habitual behaviour, and it has been shown many times that much of travel behaviour is driven by pure repetition and habit rather than by conscious deliberation (Schlich and Axhausen 2004; Klockner and Matthies 2004; Friedrichsmeier et al. 2013; Polydoropoulou et al. 2013). This also suggests that people will most likely prefer only those modes in their plans that they use frequently, that is, public transport. However, this should not be interpreted as an argument against MaaS plans. Just because individuals do not prefer a certain mode in their plan does not necessarily mean they will not buy or use it (this is a direction for further research). Finally, we obtain a highly significant coefficient for the standard deviation of the random panel effect, σpanel, which means that this model captures intrinsic correlations among observations of the same individual. In further modelling exercises, nested models or error components structures should be used to accommodate differences in error variances between alternatives (the three fixed bundles are expected to be correlated).
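The text does not give the exact specification of the panel effect, so the sketch below assumes one common form: a single normally distributed, individual-specific error component σpanel·ηn added to every alternative except a reference alternative (index 0), with the same draw of ηn reused across all of a respondent's choice tasks. The log-likelihood is simulated by averaging, over pseudo-random draws, the product of the logit probabilities of each respondent's sequence of choices. Function names and the data layout are hypothetical; an actual estimation would maximise this function with an optimiser and would typically use quasi-random (e.g. Halton) draws.

```python
import math
import random

def logit_probs(v):
    """Numerically stable multinomial logit probabilities."""
    v_max = max(v)
    e = [math.exp(x - v_max) for x in v]
    s = sum(e)
    return [x / s for x in e]

def panel_log_likelihood(beta, sigma_panel, data, n_draws=200, seed=0):
    """Simulated log-likelihood of an MMNL with an individual-specific error component.

    data maps respondent id -> list of (X, chosen) choice tasks, where X[j] is the
    attribute vector of alternative j and chosen is the index of the chosen plan.
    Assumption: sigma_panel * eta_n enters every alternative except index 0.
    """
    rng = random.Random(seed)  # fixed seed: identical draws on every call
    ll = 0.0
    for tasks in data.values():
        simulated = 0.0
        for _ in range(n_draws):
            eta = rng.gauss(0.0, 1.0)  # one draw per respondent, shared by all tasks
            seq_prob = 1.0
            for X, chosen in tasks:
                v = [sum(b * x for b, x in zip(beta, xj))
                     + (sigma_panel * eta if j > 0 else 0.0)
                     for j, xj in enumerate(X)]
                seq_prob *= logit_probs(v)[chosen]
            simulated += seq_prob
        ll += math.log(simulated / n_draws)
    return ll

# Hypothetical data: one attribute (plan cost, GBP/100) for three plans, two tasks each.
data = {
    "r1": [([[1.0], [1.5], [2.0]], 0), ([[0.8], [1.2], [1.9]], 0)],
    "r2": [([[1.1], [1.4], [2.2]], 1), ([[0.9], [1.3], [2.1]], 2)],
}
ll_mnl = panel_log_likelihood([0.0], 0.0, data)   # equal utilities, no panel effect
ll_mmnl = panel_log_likelihood([-1.0], 0.8, data)
```

Multiplying probabilities across a respondent's tasks before averaging over draws is what makes the error component a panel effect: the likelihood is computed per person rather than per observation, so repeated choices are not treated as independent.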

Conclusions

Mobility as a Service has become a widely discussed topic in the transportation field. One of its unique elements is the MaaS monthly subscription plan. However, the current state of the art has not reached a conclusion as to which MaaS plan approaches and designs work best. Important questions that currently remain unclear include:

- What modes and features does each user group want in their plans and what is their willingness to pay for these features?
- Do users prefer predefined bundles, customisable plans or pay-as-you-go options?

Further, from an operator and authority point of view, it is crucial to understand what certain MaaS product designs would mean for modal split and potential shifts in mode choice. The presented survey design aims to provide data that can help gain insights into all these questions.

It is important to reiterate that although the presented London Mobility Survey, including the MaaS SP, is tailored to London, it can easily be adapted to other areas. Any local travel behaviour survey is a good basis for the RP survey. In the London case, we customised it based on LTDS, the benchmark travel demand survey in the area. Although there were a number of challenges to overcome (see “Lessons learned for MaaS SP design process” section), the pieces of information necessary to customise the SP are as follows. First, details are needed about the local or regional (depending on the scope of the study) transport providers, including their structure, pricing and any existing cooperation, e.g. combined offers among multiple operators. Base prices need to be determined for the modes (per unit, denominated in either time or distance), which can then be used as a base for pivoting the SP prices. Where several operators exist, the price of the operator most likely to take part in a MaaS scheme can be used or, where this is not clear, average values can be taken. Next, a list of additional features of interest to the area or the researchers is useful (but not essential). Finally, any special combinations of specific policy interest to the area should be identified. All the designs, and especially the wording of the MaaS explanation, need to be tailored to the local environment so that they can easily be understood by respondents (e.g. in London we drew parallels with the Oyster card, as it is something respondents are familiar with).
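The price-pivoting step can be sketched as follows. The text only states that per-unit base prices (per unit of time or distance) are pivoted to generate the SP prices, and that either the likely MaaS operator's price or an average across operators can serve as the base; the operator names, the ±20% pivot levels and the plan-pricing rule (amount times unit price, then pivoted) below are hypothetical choices for illustration.

```python
from statistics import mean

def base_unit_price(operator_prices, likely_operator=None):
    """Per-unit base price for one mode.

    Uses the price of the operator most likely to join a MaaS scheme if known,
    otherwise the average across operators.
    """
    if likely_operator is not None:
        return operator_prices[likely_operator]
    return mean(operator_prices.values())

def plan_price_levels(amounts, unit_prices, pivots=(-0.2, 0.0, 0.2)):
    """SP price levels for a plan: base cost of its contents pivoted by each factor."""
    base_cost = sum(q * unit_prices[mode] for mode, q in amounts.items())
    return [round(base_cost * (1 + p), 2) for p in pivots]

# Hypothetical per-minute car sharing prices from two operators, and a taxi price per km.
car_sharing = base_unit_price({"operator_a": 0.30, "operator_b": 0.40})
unit_prices = {"car_sharing": car_sharing, "taxi": 2.0}
levels = plan_price_levels({"car_sharing": 100, "taxi": 20}, unit_prices)
```

Here the averaged car sharing price is used because no likely operator is specified; passing `likely_operator` switches to that operator's price instead.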

To our knowledge, this research provides the first systematic approach to testing people’s preferences towards different Mobility as a Service plan designs using stated preference experiments. The data from this survey can provide valuable insights for decision makers and transport authorities looking to establish MaaS schemes. Since several research projects on MaaS have started, we hope that the details, challenges and approaches presented and discussed in this paper will help inform MaaS developments in all areas of the world.