Abstract

For Hamiltonian systems with non-canonical structure matrices, a new family of fourth-order energy-preserving integrators is presented. The integrators take the form of a combination of Runge–Kutta methods and continuous-stage Runge–Kutta methods and feature a set of free parameters that offer greater flexibility and efficiency. Specifically, we demonstrate that, by carefully choosing these free parameters, a simplified Newton iteration applied to the integrators of order four can be parallelized. This can yield integrators that are more efficient than existing fourth-order energy-preserving integrators.


1 Introduction

In this paper, we are concerned with the numerical integration of a system of ordinary differential equations (ODEs) of the form

\[ \dot{y} = S(y)\nabla H(y), \quad y(0) = y_0, \tag{1.1} \]

where \(y:[0,T)\rightarrow \mathbb {R}^d\) is a dependent variable, \(S:\mathbb {R}^d \rightarrow \mathbb {R}^{d\times d}\) is a skew-symmetric matrix function, and \(H:\mathbb {R}^d \rightarrow \mathbb {R}\) is a real-valued function, which we call the energy. The two functions S and H are assumed to be sufficiently regular. Along the solution to (1.1), the function H is constant:

\[ \frac{\mathrm{d}}{\mathrm{d}t} H(y) = \nabla H(y)^\textsf{T}\dot{y} = \nabla H(y)^\textsf{T} S(y)\nabla H(y) = 0, \]

where the dot stands for differentiation with respect to t. Conversely, a system of ODEs having a first integral can always be written in the form (1.1) with an appropriate skew-symmetric matrix function S(y) [14], although S(y) is not unique in general. When S(y) is constant and, in particular, \(S = J^{-1}\) with

\[ J = \begin{bmatrix} O & I \\ -I & O \end{bmatrix}, \]

the system is called a Hamiltonian system and the corresponding H is often referred to as the Hamiltonian. In more general cases, where S depends on y, the system is called a Poisson system if the Poisson bracket \(\{F,G\}(y) = \nabla F(y)^\textsf{T} S(y)\nabla G(y)\) satisfies the Jacobi identity (see, e.g., [8, Chapter VII.2]). In this paper, we call any system of the form (1.1) a Poisson system, even if the Poisson bracket does not satisfy the Jacobi identity; this terminology is used for convenience only. Furthermore, depending on the structure of S(y), a system of the form (1.1) may exhibit rich geometric properties, such as symplecticity.

This paper focuses on energy-preserving integrators, which form a typical class of geometric numerical integrators [8]. In this paper, an energy-preserving integrator refers to a one-step method \(y_0\mapsto y_1\), where \(y_1\approx y(h)\), such that \(H(y_1)=H(y_0)\). For such a method, \(H(y_n)=H(y_0)\) holds for all n, even if the step size h is controlled adaptively.

The projection method, while conceptually straightforward, encompasses a variety of approaches for projecting solutions onto the appropriate manifold. The effectiveness of this method, particularly regarding long-term behavior, may depend on both the selected projection technique and the underlying integrator [8, Chapter IV.4]. Therefore, caution is advised when employing the projection method (Footnote 1). A more sophisticated and systematic approach is the discrete gradient method, which was first formulated by Gonzalez [6] (see also McLachlan, Quispel, and Robidoux [9]), although a similar idea had been known for quite some time. The discrete gradient method usually produces a second-order energy-preserving integrator. The average vector field (AVF) method, proposed by Quispel and McLaren [15], is a special case of the discrete gradient method; it is a B-series method and is of order two when S is a constant skew-symmetric matrix. Over the past decade, extensions of the AVF method to higher order have been studied extensively. For constant S, Hairer proposed the AVF collocation method [7], and Brugnano, Iavernaro, and Trigiante proposed the Hamiltonian boundary value method [3]. These methods are based on so-called continuous-stage Runge–Kutta methods. Also worth mentioning is a relatively recent work [5], which establishes a general order theory for discrete gradient methods.

Roughly speaking, the computational cost of the AVF collocation method is almost the same as that of the Gauss method of the same order. Miyatake and Butcher [12] characterize the condition for a continuous-stage Runge–Kutta (CSRK) method to be energy-preserving in terms of the symmetry of an \(s\times s\) matrix M defining the method, and show that the order conditions can also be characterized in terms of \(M^{-1}\). This characterization in terms of M is useful in that one can construct an integrator of a prescribed order with some degrees of freedom. By tuning the remaining parameters, one can, for example, construct parallelizable integrators.

Considerable effort has also been devoted to developing energy-preserving methods for Poisson systems, and the aforementioned methods have been extended to this general class. For example, the AVF collocation method has been extended to Poisson systems by introducing a new class of integration methods, which generalizes the CSRK method to partitioned systems [4] (see [1, 2] for the extension of the Hamiltonian boundary value method to Poisson systems). We refer to this class of integration methods as partitioned continuous-stage Runge–Kutta (PCSRK) methods. The s-degree PCSRK method (Footnote 2) is characterized by the \(s\times s\) matrices \(M_i\) (\(i=1,\dots ,s\)) and the nodes \(c_i\) (\(i=1,\dots ,s\)). The results given in [11] show that a PCSRK method is energy-preserving if all \(M_i\) are symmetric, but it is not clear whether the order conditions can be concisely characterized in terms of the matrices \(M_i\) and nodes \(c_i\), as is done for CSRK methods. This task is not trivial, for several reasons. For example, recall that for constant S, the order conditions are characterized in terms of the inverse of M; however, for Poisson systems, although the highest order of the s-degree PCSRK methods is 2s [4], the corresponding \(M_i\)’s are singular. Thus, one cannot expect the order conditions to be characterized in terms of the inverses of the \(M_i\)’s. Other difficulties are discussed in Remark 3.1.

In view of the above background, we focus only on fourth-order methods and address the following issues.

- A fourth-order PCSRK method with \(s=2\) exists [4], and it is unique if the degree is restricted to \(s=2\). In this paper, we set \(s=3\) and characterize the conditions for the method to be of order 4 in terms of \(M_1,M_2,M_3\) and \(c_1,c_2,c_3\). The key idea is to require that the PCSRK method reduce to the CSRK method discussed in [12] when S is constant and that the method be symmetric, and then to simplify the order conditions for the bi-colored rooted trees with three vertices and a black root. This yields a three-degree PCSRK method with some degrees of freedom, and the remaining parameters can be chosen from other perspectives.

- As discussed in [12], an advantage of a numerical method with some degrees of freedom is that more efficient variants can be sought. In general, the larger the degree of a CSRK method, the more expensive the computation becomes. However, this is not always the case; for example, if the matrix M has a specific eigenstructure, the method can be implemented on a parallel architecture at almost the same cost as for \(s=1\), though the memory usage grows. We show that a similar structure holds for PCSRK methods.

- Based on the above two points, we develop three-degree PCSRK integrators with some degrees of freedom that are energy-preserving for Poisson systems, of order four, and efficiently implementable on a parallel architecture. For Hamiltonian systems, the proposed integrators reduce to those developed in [12], which have similar properties.

The paper is organized as follows. In Section 2, after reviewing energy-preserving CSRK methods for constant S and their properties, we also discuss the formulation of energy-preserving PCSRK methods for general cases. We develop a family of energy-preserving PCSRK integrators in Section 3. We discuss the implementation issues and optimal parameter choices in Section 4. Concluding remarks are given in Section 5.

2 Preliminaries

2.1 Hamiltonian systems and CSRK methods

Let us consider the system

\[ \dot{y} = S\nabla H(y), \tag{2.1} \]

where S is a constant skew-symmetric matrix, but not necessarily \(J^{-1}\). Hamiltonian systems are a typical example of this class. The average vector field (AVF) method reads

\[ \frac{y_1 - y_0}{h} = \int_0^1 f\big((1-\xi)y_0 + \xi y_1\big)\,\mathrm{d}\xi, \tag{2.2} \]

where \(f(y) = S \nabla H(y)\). This method is of second order and energy-preserving, i.e., \(H(y_1)=H(y_0)\). It can be regarded as a continuous-stage Runge–Kutta method.
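The energy preservation of the AVF method can be checked numerically with a short Python sketch. The test problem (a quartic oscillator with \(y=(q,p)\) and \(H(q,p) = p^2/2 + q^4/4\)), the 4-point Gauss–Legendre rule for the integral, and the fixed-point solver are assumptions made for this illustration only. Because the integrand is a cubic polynomial in \(\xi\) here, the quadrature is exact, so the energy error should stay at the level of the iteration tolerance and rounding errors.

```python
import numpy as np

# Hypothetical test problem: quartic oscillator, y = (q, p), H = p^2/2 + q^4/4
S = np.array([[0.0, 1.0], [-1.0, 0.0]])  # constant skew-symmetric matrix

def grad_H(y):
    q, p = y
    return np.array([q**3, p])

def energy(y):
    q, p = y
    return 0.5 * p**2 + 0.25 * q**4

# 4-point Gauss-Legendre rule mapped from [-1, 1] to [0, 1]
_x, _w = np.polynomial.legendre.leggauss(4)
XI, WTS = 0.5 * (_x + 1.0), 0.5 * _w

def avf_step(y0, h, tol=1e-14, maxit=100):
    """One AVF step: y1 = y0 + h * S @ int_0^1 grad_H((1 - xi) y0 + xi y1) dxi."""
    y1 = y0.copy()
    for _ in range(maxit):
        # average of grad_H along the straight segment from y0 to the current y1
        avg_grad = sum(w * grad_H((1.0 - x) * y0 + x * y1) for x, w in zip(XI, WTS))
        y_new = y0 + h * (S @ avg_grad)
        if np.max(np.abs(y_new - y1)) < tol:
            return y_new
        y1 = y_new
    return y1  # fixed-point iteration did not reach tol within maxit

y = np.array([1.0, 0.0])
H0 = energy(y)
for _ in range(1000):
    y = avf_step(y, 0.1)
print(abs(energy(y) - H0))  # tiny: limited by tol and rounding
```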

Definition 2.1

(CSRK methods) Let \(A_{\tau ,\zeta }\) be a polynomial in \(\tau \) and \(\zeta \). Assume that \(A_{0,\zeta }=0\). The polynomial degree of \(A_{\tau ,\zeta }\) in \(\tau \) is denoted by s. Let \(B_\zeta \) be defined by \(B_\zeta = A_{1,\zeta }\). Define an s-degree polynomial \(Y_\tau \) (\(\tau \in [0,1]\)) and \(y_1\) such that they satisfy

\[ Y_\tau = y_0 + h\int_0^1 A_{\tau,\zeta}\, f(Y_\zeta)\,\mathrm{d}\zeta, \qquad y_1 = y_0 + h\int_0^1 B_{\zeta}\, f(Y_\zeta)\,\mathrm{d}\zeta. \]

The one-step method \(y_0\mapsto y_1\) is called an s-degree continuous-stage Runge–Kutta (CSRK) method.

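The above definition does not specify the degree of \(A_{\tau ,\zeta }\) in \(\zeta \), but let us focus on \(A_{\tau ,\zeta }\) that is a polynomial of degree s in \(\tau \) and \(s-1\) in \(\zeta \). Such an \(A_{\tau ,\zeta }\) will be written as

\[ A_{\tau,\zeta} = \begin{bmatrix} \tau & \frac{\tau^2}{2} & \cdots & \frac{\tau^s}{s} \end{bmatrix} M \begin{bmatrix} 1 & \zeta & \cdots & \zeta^{s-1} \end{bmatrix}^\textsf{T} \]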

with a constant matrix \(M\in \mathbb {R}^{s\times s}\) so that the polynomial is identified with the matrix M. When \(s=1\) and \(M=1\), the method reduces to the AVF method.

A sufficient condition for energy preservation can be characterized in terms of M.

Theorem 2.1

([10, 12], see also [17]) When applied to (2.1), a CSRK method is energy-preserving if M is symmetric.

The symmetry of M means that \((\partial /\partial \tau )A_{\tau ,\zeta }\) is symmetric in \(\tau \) and \(\zeta \). The condition is also necessary under a mild assumption [12].

Several characterizations of the order conditions in terms of M are given in [12]. We note that the discussion there is based on the simplifying assumptions (see also [7]). A CSRK method is energy-preserving and of order at least \(p=2\eta \) if the symmetric matrix \(M\in \mathbb {R}^{s\times s}\) satisfies

\[ M \begin{bmatrix} \frac{1}{k} & \frac{1}{k+1} & \cdots & \frac{1}{k+s-1} \end{bmatrix}^\textsf{T} = i_k, \qquad k = 1,\dots,\eta, \]

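where \(i_k\) denotes the k-th column of the \(s\times s\) identity matrix. If we choose \(\eta = s\), the method with

\[ \big(M^{-1}\big)_{ij} = \frac{1}{i+j-1}, \qquad i,j = 1,\dots,s \]

(i.e., \(M^{-1}\) is the \(s\times s\) Hilbert matrix)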

is of order 2s and coincides with the AVF collocation method of order 2s [7]. For example, when \(s=2\), we have

\[ M = \begin{bmatrix} 4 & -6 \\ -6 & 12 \end{bmatrix}. \]
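The link between the order conditions and the Hilbert matrix can be verified in exact rational arithmetic. The following Python sketch forms M as the inverse of the \(s\times s\) Hilbert matrix and tests \(M[1/k, 1/(k+1), \dots, 1/(k+s-1)]^\textsf{T} = i_k\) for \(k=1,\dots,\eta\); the helper names `hilbert`, `inverse`, and `satisfies_order_conditions` are hypothetical, introduced only for this check.

```python
from fractions import Fraction

def hilbert(s):
    """s x s Hilbert matrix, entries 1/(i + j - 1) for 1-based i, j, as exact fractions."""
    return [[Fraction(1, i + j + 1) for j in range(s)] for i in range(s)]

def inverse(A):
    """Exact inverse of a nonsingular matrix of Fractions by Gauss-Jordan elimination."""
    n = len(A)
    aug = [list(row) + [Fraction(int(i == j)) for j in range(n)] for i, row in enumerate(A)]
    for col in range(n):
        piv = next(r for r in range(col, n) if aug[r][col] != 0)  # nonzero pivot
        aug[col], aug[piv] = aug[piv], aug[col]
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def satisfies_order_conditions(M, eta):
    """Check M [1/k, 1/(k+1), ..., 1/(k+s-1)]^T = i_k for k = 1, ..., eta."""
    s = len(M)
    for k in range(1, eta + 1):
        h_k = [Fraction(1, k + m) for m in range(s)]
        Mh = [sum(M[r][m] * h_k[m] for m in range(s)) for r in range(s)]
        if Mh != [int(r == k - 1) for r in range(s)]:
            return False
    return True

M = inverse(hilbert(2))  # equals [[4, -6], [-6, 12]]
print(satisfies_order_conditions(M, 2))  # True
```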

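As another illustrative example, when \(s=3\), the above characterization indicates that the method with

\[ M = \begin{bmatrix} 1 & \frac{1}{2} & \frac{1}{3} \\ \frac{1}{2} & \frac{1}{3} & \frac{1}{4} \\ \frac{1}{3} & \frac{1}{4} & \alpha \end{bmatrix}^{-1} \tag{2.4} \]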

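is of order four (\(\eta =2\)), except for \(\alpha = 1/5\), where it reduces to the sixth-order AVF collocation method. In this way, one can construct high-order energy-preserving integrators with some degrees of freedom. When \(\alpha \ne 7/36\) (the matrix in (2.4) is singular at \(\alpha = 7/36\)), by introducing a new parameter \(\tilde{\alpha } = 1/(36\alpha - 7)\), the matrix M can be expressed more explicitly as

\[ M = \begin{bmatrix} 4 & -6 & 0 \\ -6 & 12 & 0 \\ 0 & 0 & 0 \end{bmatrix} + \tilde{\alpha} \begin{bmatrix} 1 & -6 & 6 \\ -6 & 36 & -36 \\ 6 & -36 & 36 \end{bmatrix}. \]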
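For \(s=3\), one can check in exact arithmetic that the one-parameter family \(M = \begin{bmatrix} 4 & -6 & 0\\ -6 & 12 & 0\\ 0 & 0 & 0\end{bmatrix} + \tilde{\alpha}\, v v^\textsf{T}\) with \(v = [1,\,-6,\,6]^\textsf{T}\) satisfies the conditions for \(\eta = 2\) for every \(\tilde{\alpha}\), is the inverse of \(\begin{bmatrix}1&1/2&1/3\\1/2&1/3&1/4\\1/3&1/4&\alpha\end{bmatrix}\) with \(\alpha = (1/\tilde{\alpha} + 7)/36\), and satisfies the \(k=3\) condition only for \(\tilde{\alpha}=5\). The sketch below is a minimal check under these formulas; all function names are hypothetical.

```python
from fractions import Fraction

def M_family(alpha_tilde):
    """M = M_base + alpha_tilde * v v^T with v = [1, -6, 6]^T (s = 3)."""
    at = Fraction(alpha_tilde)
    base = [[4, -6, 0], [-6, 12, 0], [0, 0, 0]]
    v = [1, -6, 6]
    return [[base[i][j] + at * v[i] * v[j] for j in range(3)] for i in range(3)]

def h_vec(k):
    """h_k = [1/k, 1/(k+1), 1/(k+2)]^T."""
    return [Fraction(1, k + m) for m in range(3)]

def matvec(A, x):
    return [sum(A[i][j] * x[j] for j in range(3)) for i in range(3)]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def inverse_of_M(alpha):
    """The matrix whose inverse is M, with free entry alpha (singular at alpha = 7/36)."""
    a = Fraction(alpha)
    return [[Fraction(1), Fraction(1, 2), Fraction(1, 3)],
            [Fraction(1, 2), Fraction(1, 3), Fraction(1, 4)],
            [Fraction(1, 3), Fraction(1, 4), a]]

def alpha_of(alpha_tilde):
    """Invert alpha_tilde = 1 / (36 alpha - 7), valid for alpha_tilde != 0."""
    return (1 / Fraction(alpha_tilde) + 7) / 36

M = M_family(-234)
identity = matmul(M, inverse_of_M(alpha_of(-234)))  # [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
```

Working with `Fraction` avoids any rounding, so the equalities can be tested exactly rather than up to a tolerance.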

2.2 Poisson systems and PCSRK methods

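Note that the AVF method (2.2) is not energy-preserving when applied to (1.1) with a non-constant S(y). A straightforward modification

\[ \frac{y_1 - y_0}{h} = S\Big(\frac{y_0+y_1}{2}\Big)\int_0^1 \nabla H\big((1-\xi)y_0 + \xi y_1\big)\,\mathrm{d}\xi \tag{2.5} \]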

is energy-preserving, symmetric, and thus of order two. Here, S(y) is discretized by the midpoint rule to make the method symmetric. Other choices, such as \(S(y_0)\) and \(S(y_1)\), still guarantee energy preservation, though the resulting integrator is of order one. It should be noted that in (2.5) the \(\nabla H(y)\) term is discretized in a CSRK manner, whereas S(y) is discretized in a standard RK manner. This observation leads to the following class of numerical integrators applied to the partitioned system

\[ \dot{y} = S(z)\nabla H(y), \qquad \dot{z} = S(z)\nabla H(y). \]

Definition 2.2

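(PCSRK methods) Let \(A_{i,\tau ,j,\zeta }\) \((j=1,\dots ,s)\) be a polynomial in \(\tau \) and \(\zeta \) with the property \(A_{i,0,j,\zeta }=0\). \(A_{i,\tau ,j,\zeta }\) is assumed to be independent of i; thus, it is often denoted by \(A_{\tau ,j,\zeta }\). \(\hat{A}_{i,j,\zeta }\) is defined by \(A_{c_i,j,\zeta }\) with s distinct nodes \(0\le c_1< \dots < c_s\le 1\). Let \(B_{j,\zeta } = \hat{B}_{j,\zeta } = A_{1,j,\zeta }\). Define an s-degree polynomial \(Y_\tau \) (\(\tau \in [0,1]\)), \(Z_1,\dots ,Z_s\), \(y_1\), and \(z_1\) such that they satisfy

\[ \begin{aligned} Y_\tau &= y_0 + h\sum_{j=1}^{s}\int_0^1 A_{\tau,j,\zeta}\, S(Z_j)\nabla H(Y_\zeta)\,\mathrm{d}\zeta, \\ Z_i &= z_0 + h\sum_{j=1}^{s}\int_0^1 \hat{A}_{i,j,\zeta}\, S(Z_j)\nabla H(Y_\zeta)\,\mathrm{d}\zeta, \quad i=1,\dots,s, \\ y_1 &= y_0 + h\sum_{j=1}^{s}\int_0^1 B_{j,\zeta}\, S(Z_j)\nabla H(Y_\zeta)\,\mathrm{d}\zeta, \\ z_1 &= z_0 + h\sum_{j=1}^{s}\int_0^1 \hat{B}_{j,\zeta}\, S(Z_j)\nabla H(Y_\zeta)\,\mathrm{d}\zeta, \end{aligned} \]

with \(y_0=z_0\). The one-step method \(y_0\mapsto y_1\) is called an s-degree partitioned CSRK (PCSRK) method.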

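We note that, by definition, \(Z_i = Y_{c_i}\) and \(y_1 = z_1\); thus, the scheme can be written in the more compact form

\[ Y_\tau = y_0 + h\sum_{j=1}^{s}\int_0^1 A_{\tau,j,\zeta}\, S(Y_{c_j})\nabla H(Y_\zeta)\,\mathrm{d}\zeta, \tag{2.6} \]

\[ y_1 = y_0 + h\sum_{j=1}^{s}\int_0^1 B_{j,\zeta}\, S(Y_{c_j})\nabla H(Y_\zeta)\,\mathrm{d}\zeta. \tag{2.7} \]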

The expression in Definition 2.2 is useful for discussing the order conditions.

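As \(A_{i,\tau ,j,\zeta }\) depends on \(\tau \), j, and \(\zeta \) but not on i, it is convenient to express it as

\[ A_{\tau,j,\zeta} = \begin{bmatrix} \tau & \frac{\tau^2}{2} & \cdots & \frac{\tau^s}{s} \end{bmatrix} M_j \begin{bmatrix} 1 & \zeta & \cdots & \zeta^{s-1} \end{bmatrix}^\textsf{T} \]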

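by using constant matrices \(M_j\in \mathbb {R}^{s\times s}\) for \(j=1,\dots ,s\). For example, the fourth-order 2-degree method proposed by Cohen and Hairer [4] is given by

\[ M_1 = \begin{bmatrix} 2+\sqrt{3} & -3-\sqrt{3} \\ -3-\sqrt{3} & 6 \end{bmatrix}, \qquad M_2 = \begin{bmatrix} 2-\sqrt{3} & -3+\sqrt{3} \\ -3+\sqrt{3} & 6 \end{bmatrix}, \]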

and \(c_1,c_2 = 1/2 \mp \sqrt{3}/6\). We observe that

\[ M_1 + M_2 = \begin{bmatrix} 4 & -6 \\ -6 & 12 \end{bmatrix}, \]

which coincides with M in (2.3). Thus, the method reduces to the AVF collocation method [7] when S is constant. It should be noted that both \(M_1\) and \(M_2\) are singular, while \(M_1+M_2\) is nonsingular. This indicates that one cannot expect the \(M_i\) to be characterized as inverses of some matrices.

Sufficient conditions of PCSRK methods to be energy-preserving are characterized in terms of \(A_{i,\tau ,j,\zeta }\), or equivalently, the \(M_i\) matrices.

Theorem 2.2

([11]) When applied to (1.1), a PCSRK method is energy-preserving if all \(M_i\)’s are symmetric.
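As a minimal illustration of Theorem 2.2 in the simplest case \(s=1\) with \(M_1 = 1\) and \(c_1 = 1/2\), the PCSRK method reduces to the midpoint-modified AVF method \(y_1 = y_0 + h\,S\big(\tfrac{y_0+y_1}{2}\big)\int_0^1 \nabla H((1-\xi)y_0+\xi y_1)\,\mathrm{d}\xi\). The Python sketch below applies it to Euler's free rigid body, with \(S(y)\) the cross-product matrix (\(S(y)x = y\times x\)) and \(H(y) = \tfrac12\sum_i y_i^2/I_i\). The moments of inertia, the 4-point Gauss–Legendre rule (exact here because \(\nabla H\) is linear, but kept so that a non-quadratic H can be substituted), and the fixed-point solver are illustrative assumptions, not choices made in the text.

```python
import numpy as np

# Hypothetical test problem: Euler's free rigid body, S(y) x = y x (cross product)
I_MOM = np.array([2.0, 1.0, 2.0 / 3.0])  # illustrative moments of inertia

def S(y):
    """Skew-symmetric structure matrix that depends on y."""
    return np.array([[0.0, -y[2], y[1]],
                     [y[2], 0.0, -y[0]],
                     [-y[1], y[0], 0.0]])

def grad_H(y):
    return y / I_MOM

def energy(y):
    return 0.5 * np.sum(y**2 / I_MOM)

_x, _w = np.polynomial.legendre.leggauss(4)
XI, WTS = 0.5 * (_x + 1.0), 0.5 * _w  # Gauss-Legendre rule on [0, 1]

def midpoint_avf_step(y0, h, tol=1e-14, maxit=100):
    """y1 = y0 + h S((y0 + y1)/2) int_0^1 grad_H((1 - xi) y0 + xi y1) dxi."""
    y1 = y0.copy()
    for _ in range(maxit):
        avg_grad = sum(w * grad_H((1.0 - x) * y0 + x * y1) for x, w in zip(XI, WTS))
        # S is evaluated at the midpoint, as in the s = 1 PCSRK method
        y_new = y0 + h * (S(0.5 * (y0 + y1)) @ avg_grad)
        if np.max(np.abs(y_new - y1)) < tol:
            return y_new
        y1 = y_new
    return y1

y = np.array([np.cos(1.1), 0.0, np.sin(1.1)])
H0 = energy(y)
for _ in range(1000):
    y = midpoint_avf_step(y, 0.1)
print(abs(energy(y) - H0))  # tiny: limited by tol and rounding
```

Energy is conserved because the increment is \(h\,S(\cdot)\) applied to the averaged gradient, and a skew-symmetric matrix gives a zero quadratic form regardless of where it is evaluated.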

The conditions for symmetry are also characterized as follows.

Theorem 2.3

(cf. [4])

A PCSRK method is symmetric if \(A_{i,1-\tau ,s+1-j,1-\zeta } + A_{i,\tau ,j,\zeta } = B_{j,\zeta }\) and \(c_{s+1-i}=1-c_i\).

Order conditions will be discussed in the next section.

3 A family of fourth-order energy-preserving integrators

In this section, we derive a family of fourth-order energy-preserving PCSRK methods. Specifically, we set \(s=3\) and aim to derive a 3-degree PCSRK integrator with some free parameters.

We begin with a few remarks on order conditions. In the formulation of the PCSRK method (Definition 2.2), it is not necessary to calculate \(z_1\), because only \(y_1\) is required as an output to proceed with the subsequent steps. The expression for \(y_1\) is given as a P-series:

\[ y_1 = y_0 + \sum_{\tau \in TP_y} \frac{h^{|\tau|}}{\sigma(\tau)}\,\phi(\tau)\,F(\tau)(y_0, z_0), \]

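where \(\phi \), \(\sigma \), and F are the elementary weights, symmetry coefficients, and elementary differentials, respectively. The symbol \(TP_y\) denotes the set of bi-colored trees with black roots, i.e.,

\[ TP_y = \{\bullet_y\} \cup \big\{[u_1,\dots,u_m]_y : u_1,\dots,u_m \in TP_y \cup TP_z\big\}, \]

where \(\bullet_y\) is the single black vertex and \(TP_z\) is the analogous set of trees with white roots.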

For more details about the order conditions with bi-colored trees, refer to [8].

A PCSRK method is of order p if it satisfies

\[ \phi(\tau) = e(\tau) \quad \text{for all } \tau \in TP_y \text{ with } |\tau| \le p, \]

where \(|\tau |\) denotes the order of \(\tau \), i.e., the number of vertices of \(\tau \). Here, \(e(\tau )\) for mono-colored trees is defined by

\[ e(\bullet) = 1, \qquad e([\tau_1,\dots,\tau_m]) = \frac{e(\tau_1)\cdots e(\tau_m)}{|\tau|}, \]

and \(e(\tau )\) for a bi-colored tree takes the same value as for the mono-colored tree obtained by ignoring the colors.
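The recursion for \(e(\tau)\) is easy to mechanize. In the following Python sketch, a rooted tree is represented as a pair (color, children); this representation and the function names are hypothetical choices for illustration. The color is carried along but does not affect the value, in line with the convention for bi-colored trees.

```python
from fractions import Fraction
from math import prod

# A rooted tree is (color, children): color is "b" (black) or "w" (white),
# children is a tuple of trees. Hypothetical representation for illustration.

def order(tree):
    """|t|: number of vertices of the tree."""
    _, children = tree
    return 1 + sum(order(child) for child in children)

def e(tree):
    """e(t) = e(t_1) ... e(t_m) / |t|; vertex colors do not affect the value."""
    _, children = tree
    return prod((e(child) for child in children), start=Fraction(1)) / order(tree)

leaf_b, leaf_w = ("b", ()), ("w", ())
bushy = ("b", (leaf_b, leaf_w))       # black root with two leaves
tall = ("b", (("w", (leaf_b,)),))     # black root -> white vertex -> black leaf
print(e(bushy), e(tall))  # 1/3 1/6
```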

Remark 3.1

Since checking all the order conditions is an immense task, one approach to avoid checking every condition relies on the simplifying assumptions. For the PCSRK methods, the simplifying assumptions are given as follows:

where

As discussed in [4], a method satisfying \(B(\rho ), C(\eta ), \hat{C}(\eta ), D(\xi )\), and \(\hat{D}(\xi )\) is of order at least \(p=\min (\rho ,2\eta +2,\xi + \eta + 1)\).

Utilizing simplifying assumptions is effective for deriving energy-preserving CSRK methods with some degrees of freedom for Hamiltonian systems. However, there is a subtle yet crucial difference between CSRK methods applied to Hamiltonian systems and PCSRK methods applied to Poisson systems. For Hamiltonian systems, if a CSRK method is energy-preserving, i.e., \(M=M^\textsf{T}\), then B(1) implies that \(B(\rho )\) is satisfied for all \(\rho =1,2,\dots \). In other words, a consistent energy-preserving CSRK method automatically guarantees \(B(\rho )\). This is a very important property, which further simplifies the discussions using simplifying assumptions. However, this is not the case for Poisson systems.

Now, we set \(s=3\), and our strategy is as follows. First, as a sufficient condition for the method to be energy-preserving and of order 4 when S is constant, we require that, in that case, the method coincide with the 3-degree energy-preserving CSRK method (2.4). We also impose the sufficient conditions for energy preservation (Theorem 2.2) and symmetry (Theorem 2.3). The remaining task is to ensure the order conditions for the trees with three vertices and to characterize \(M_1,M_2,M_3\) and \(c_1,c_2,c_3\) satisfying all these requirements as simply as possible.

As a sufficient condition, we require that

\[ M_1 + M_2 + M_3 = M, \tag{3.1} \]

where M is the matrix given in (2.4).

In addition to this, we require that \(M_1,M_2,M_3\) are symmetric, and the method itself is symmetric. Under the assumption that \(M_1,M_2,M_3\) are symmetric, Theorem 2.3 indicates that the method is symmetric if

and \(c_2 = 1/2\), \(c_1 + c_3 = 1\). Note that \(M_2\) can be arbitrary as long as it is symmetric.

To ensure that the method is of order 4, the method needs to satisfy the order conditions for all bi-colored trees with a black root and at most four vertices. Note that the order conditions for the trees having only black vertices are automatically satisfied, because assuming (3.1) means that the method is already of order at least 4 when applied to a Hamiltonian system. More precisely, the order conditions for the trees of order up to 4 that have only black nodes are automatically satisfied.

The assumptions of the following proposition aid in constructing the intended integrators.

Proposition 3.1

Assume that a 3-degree PCSRK method satisfies

Then, when applied to Poisson systems, the method is energy-preserving, symmetric, and of order at least 4.

Proof

From Theorem 2.2, the condition (3.2) ensures energy preservation. The condition (3.3) guarantees that the method is of order at least 4 when applied to cases where S is constant, which implies that, for Poisson systems, the order conditions for the trees with only black vertices are automatically satisfied. The conditions (3.4) and (3.5) make the method symmetric, so that only trees with three vertices must be taken into account to guarantee that the method is of order at least 4. Let \(\phi \) denote the elementary weights. It remains to show that \(\phi (\tau ) = e(\tau )\) holds for the bi-colored trees with three vertices, a black root, and at least one white vertex. We notice from (3.2) and (3.3) that \(B_{i,\tau } = [1, \ \tau , \ \tau ^2] M_i [1, \ 1/2, \ 1/3]^\textsf{T}\), \(C_{i,\tau } = \tau \).

- From (3.6), we see that

- From (3.7), we see that

- From (3.6) and \(\sum _{i=1}^3 B_{i,\tau }=1\), we see that

- From (3.3) and (3.7), we see that

- From (3.6) and (3.7), we see that

\(\square \)

The condition (3.6) comprises three equations. However, they are not independent under (3.3), (3.4), and (3.5): they actually impose only a single constraint. We explain this in detail below.

Using \(M=M_1+M_2+M_3\) and (3.5), we observe that

Applying (3.3) and (3.4), which yields \(M[1,\, 1/2,\, 1/3]^\textsf{T}= [1,\, 0,\, 0]^\textsf{T}\), we obtain

Here, we used

As a result, the condition (3.6) can be rewritten as

and the first and second constraints (rows) are evidently compatible. Consequently, we only need to consider

The remaining task is to determine or characterize \(M_1, M_2, M_3\) and \(c_1,c_2,c_3\) so that they satisfy the conditions (3.2)–(3.7). The condition (3.7) can be rewritten as

It is found that the symmetric matrix \(M_3\) satisfying (3.8) and (3.9) can be expressed as

We can choose \(c_1<1/2\) arbitrarily as long as \(c_1\ne 0\), and \(\gamma _1,\gamma _2,\gamma _3,\gamma _4\) are arbitrary real numbers. Accordingly, \(M_1\) is determined from (3.4). Thus, we can regard \(c_1\), \(\gamma _1,\gamma _2,\gamma _3,\gamma _4\), and \(\alpha \) in (3.3) as free parameters of the fourth-order methods.

Remark 3.2

Constructing PCSRK methods of degree greater than three that are energy-preserving and of order at least four remains a challenge. Of course, formulating the conditions corresponding to (3.2)–(3.7) is relatively straightforward. However, special attention must be paid to the (3.6)-type condition because

This equation no longer represents a single constraint. For example, when \(s=4\) or 5, the condition implies two independent constraints. This condition corresponds to the simplifying assumption \(C(\eta )\) with \(k=2\) and \(l=1\), which allows for a reduction in the number of order conditions. Specifically, in the above derivation, \(C(\eta )\) with \(k=2\) and \(l=1\) constitutes a single constraint, which simplifies the calculation. When \(s>3\), it is crucial to consider carefully whether to employ the assumption or to treat the order conditions for the corresponding trees independently. The advantage of imposing (3.10) lies in the fact that the condition for \(M_i\) remains linear.

The author believes that a similar approach might help us construct higher-order methods. However, in order to streamline the derivation process, simplifying assumptions or their variants should be incorporated. Some challenges to address include expressing these assumptions in terms of the \(M_i\) matrices.

This paper focuses on the derivation of 3-degree fourth-order methods because of their apparent practical utility.

Remark 3.3

The discussion presented above pertains to the case where \(C_{i,\tau } = \tau \). However, more general cases can also be examined, as has been discussed for Hamiltonian systems [12]. Further exploration of these cases is not pursued here due to the cumbersome nature of the presentation and the limited practical advantages they appear to offer compared to the methods derived above.

Remark 3.4

A function C(y) is referred to as a Casimir function if \(\nabla C(y)^\textsf{T}S(y)=0\) holds for all y. When the Casimir takes the quadratic form \(C(y) = y^\textsf{T}A y\) with a symmetric constant matrix A, the s-degree PCSRK method of order 2s [4] preserves the Casimir exactly. However, the newly introduced fourth-order integrators do not preserve the Casimir in general. This limitation may be considered a potential drawback of the new family.

4 Parameter selection

This section addresses implementation concerns. We begin by outlining a strategy for implementing PCSRK methods in a general context and then proceed to investigate parallelizable integrators. As shown below, the parallelizability is characterized in terms of only \(\alpha \). We also investigate the choice of other parameters.

4.1 Solving the nonlinear equations system

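Let us express \(Y_\tau \) as

\[ Y_\tau = \sum_{i=0}^{s} l_i(\tau)\, Y_{c_i}, \]

where \(l_i (\tau )\) is defined by

\[ l_i(\tau) = \prod_{\substack{j=0 \\ j\ne i}}^{s} \frac{\tau - c_j}{c_i - c_j}, \]

with \(c_0 = 0\). Note that \(Y_{c_0} = y_0\) and \(Y_{c_i} = Z_i\). We now regard (2.6) and (2.7) as a system of nonlinear equations in terms of \(Y_{c_1},\dots ,Y_{c_s}\):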

It is not necessary to evaluate (2.6) at \(c_1,\dots ,c_s\); any s distinct points would do. However, we use \(c_1,\dots ,c_s\) to keep the presentation simple. Let

Then, we are concerned with solving

Note here that

In the first “\(\approx \)”, the term involving \(\nabla _{Y_{c_j}} S(Y_{c_i})\) is omitted to avoid tensors; the following discussion remains valid even if this term is retained. Thus, we have

where

and

denotes an approximate Jacobian matrix. Therefore, a simplified Newton-like method gives the iteration formula

4.2 Parallelizable integrators

As is the case with implicit Runge–Kutta methods and continuous-stage Runge–Kutta methods, if the matrix E has only real, distinct eigenvalues, the linear system (4.2) of size sN can be solved efficiently on parallel architectures. Indeed, if all eigenvalues \(\lambda_1,\dots,\lambda_s\) of E are real and distinct, there exists a nonsingular matrix T such that

\[ T^{-1} E T = \Lambda = \operatorname{diag}(\lambda_1,\dots,\lambda_s). \]

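Let \(Q = (I_{sN} - h E \otimes J_0)\) and define \(\overline{Q}:= (T^{-1}\otimes I_N) Q (T\otimes I_N)\). Then, it follows that

\[ \overline{Q} = I_{sN} - h\Lambda \otimes J_0 = \begin{bmatrix} I_N - h\lambda_1 J_0 & & \\ & \ddots & \\ & & I_N - h\lambda_s J_0 \end{bmatrix}, \]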

which is block diagonal. Therefore, \(\rho ^l\) can be calculated based on

The key point is that the linear system for \(\overline{\rho }^l\) of size sN decouples into s independent linear systems of size N. Thus, the most computationally demanding part of updating \(Y_l\) can be carried out in parallel. Since T is a 3-by-3 matrix, the explicit computation of \(T^{-1}\) and the multiplication of a vector by \(T^{-1}\otimes I_N\) are inexpensive.
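The block-diagonalization argument can be checked on a small example. The NumPy sketch below assumes a hypothetical \(s\times s\) matrix E with real, distinct eigenvalues standing in for the matrix E in (4.1), a generic \(N\times N\) matrix \(J_0\) standing in for the approximate Jacobian, and a hypothetical nonlinear function G for the simplified Newton loop; the names `make_block_solver` and `simplified_newton` are likewise illustrative. Only the small matrix T is inverted explicitly, and the s systems of size N are independent, so each could be assigned to its own core.

```python
import numpy as np

def make_block_solver(E, J0, h):
    """Return a solver for (I_{sN} - h E kron J0) x = rhs via E = T diag(lam) T^{-1}.

    Assumes E (s x s) has real, distinct eigenvalues; J0 (N x N) is the frozen
    approximate Jacobian. The decomposition is computed once and reused.
    """
    s, N = E.shape[0], J0.shape[0]
    lam, T = np.linalg.eig(E)
    if not np.all(np.isreal(lam)):
        raise ValueError("this real-arithmetic sketch needs real eigenvalues of E")
    lam, T = np.real(lam), np.real(T)
    T_inv = np.linalg.inv(T)  # only an s x s inverse
    blocks = [np.eye(N) - h * lam[i] * J0 for i in range(s)]

    def solve(rhs):
        # (T^{-1} kron I_N) rhs, with rhs stored block-wise as an s x N array
        rhs_bar = T_inv @ rhs.reshape(s, N)
        # s independent N x N systems; each could be solved on a separate core
        x_bar = np.stack([np.linalg.solve(blocks[i], rhs_bar[i]) for i in range(s)])
        # back-transform: x = (T kron I_N) x_bar
        return (T @ x_bar).reshape(s * N)

    return solve


def simplified_newton(G, Y0, solve, tol=1e-12, maxit=50):
    """Iterate Y <- Y + rho with Q rho = -G(Y); Q stays fixed (simplified Newton)."""
    Y = np.array(Y0, dtype=float)
    for _ in range(maxit):
        rho = solve(-G(Y))
        Y = Y + rho
        if np.linalg.norm(rho) < tol:
            break
    return Y


# Usage with hypothetical data (s = 3, N = 4)
rng = np.random.default_rng(0)
E = np.array([[1.0, 0.2, 0.0], [0.1, 2.0, 0.3], [0.0, 0.1, 3.0]])
J0 = 0.5 * rng.standard_normal((4, 4))
h = 0.1
b = rng.standard_normal(12)
Q = np.eye(12) - h * np.kron(E, J0)
solve = make_block_solver(E, J0, h)
print(np.allclose(Q @ solve(b), b))  # True
```

Freezing Q means the eigen-decomposition and the s block matrices are set up once per step, which is what makes the per-iteration cost essentially that of s independent size-N solves.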

We note that E is identical to the matrix appearing in the study of energy-preserving methods for Hamiltonian systems [12].

Theorem 4.1

([12]) The eigenvalues of the matrix E defined in (4.1) are independent of the \(c_i\) values.

This theorem indicates that parallelizability does not depend on the \(c_i\) values: if the method is parallelizable when applied to Hamiltonian systems, it is also parallelizable when applied to Poisson systems.

For the method satisfying the assumptions in Proposition 3.1, according to [12, Section 5.3.1], the corresponding E has real and distinct eigenvalues if

4.3 Discussion for the accuracy

For parallelizable fourth-order integrators, the most computationally demanding aspect is solving linear systems of size d. In contrast, for the energy-preserving 2-degree PCSRK method, one needs to solve a linear system of size 2d. When dealing with dense coefficient matrices, direct solvers are often preferred for solving such linear systems. The computational complexity of direct solvers is proportional to the cube of the size of the linear systems.

Under this cost model, we assume that our parallelizable integrators are eight times faster per step than the 2-degree PCSRK method. We note, however, that this is not always the case, as actual computational costs can vary significantly depending on the specific problem and the choice of linear solver.

We compare the coefficients of elementary differentials for trees of order 5 in the P-series expansions of the exact solution, the 2-degree PCSRK method (AVF(4)), and the proposed method. Table 1 compares the coefficients for the trees whose vertices are all black. For both AVF(4) and the proposed method, the coefficients for some of these trees differ from those of the exact solution.

However, the proposed method exhibits an interesting property for the coefficients for the bi-colored tree. Tables 2, 3, 4, 5, 6, 7, 8, 9, and 10 show the coefficients for bi-colored trees with black roots with the following quantities:

We note that for AVF(4), even for trees of a particular shape, some coefficients for the trees with white nodes differ from the exact ones. In contrast, for the proposed method, if the parameters satisfy

all coefficients are exact. These conditions can be satisfied simultaneously by choosing parameters that satisfy

For the proposed method, the only coefficients that cannot coincide with the exact ones when \(\tilde{\alpha }\) lies in the range (4.3) are those for bi-colored trees of a particular shape that are expressed in terms of (C) or (D).

We also note that, apart from constructing efficient fourth-order integrators, setting \(\tilde{\alpha }=5\) allows all the coefficients for the trees with five vertices to be exact; since the method is symmetric, it is then of order six. Moreover, the condition (4.4) indicates that one degree of freedom remains. Therefore, 3-degree sixth-order integrators are not unique, which suggests that there exists a family of s-degree energy-preserving integrators of order 2s (for \(s=1\) and 2, such integrators are unique).

4.4 Numerical verification

As a simple test confirming the order of accuracy and the effect of the choice of parameters for the proposed method, we employ the Lotka–Volterra system

For the numerical experiment, the parameters were set to \(a=-2\), \(b=-1\), \(c=-0.5\), \(\nu =1\), \(\mu = 2\), and the initial values \(y_0 = (1.0,1.9,0.5)\). In the numerical experiment, a high-order quadrature with the tolerance \(10^{-12}\) is used to approximate the integrals appearing in the integrator.

The integrator indeed preserves the Hamiltonian H(y). For instance, with the step size \(h=0.05\), the deviation of the numerical Hamiltonian from its initial value grows as the integration proceeds, owing to rounding errors and the use of quadrature; however, at \(t=10\), this error remains below \(10^{-12}\). This highlights the energy preservation, especially in comparison with the error in the Casimir invariant at the same time, which exceeds 0.01.

We now check that the proposed integrator is actually of order 4. We set \(\gamma _1,\dots ,\gamma _4\) to

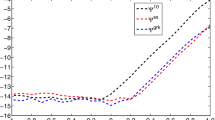

This choice corresponds to the 6th-order integrator presented in [4] when \(\tilde{\alpha }=5\). In Fig. 1, the error behavior of the proposed method with this choice of parameters and \(\tilde{\alpha } = -234\) is compared with that of the 2nd- and 4th-order integrators proposed in [4]. The figure confirms that the proposed integrator attains 4th-order accuracy. Although the error of the proposed method is substantially larger than that of AVF(4), the proposed method could still be more efficient if each step is computed more than twice as fast as with AVF(4).

Remark 4.1

We discuss the efficiency of our proposed method in more detail. To achieve a comparable level of accuracy, the proposed method was observed to require roughly half the step size of AVF(4). Hence, if the proposed method computes one time step at least twice as fast as AVF(4), it can be considered more efficient.

We now compare the two methods more concretely. Consider a scenario where the linear system of size 2d, required for AVF(4), is solved using a direct method on a single core, whereas the three linear systems of size d for the proposed method are solved using the same direct method, potentially on three cores in parallel. Assuming that the simplified Newton iterations for both methods require nearly the same number of iterations to converge, the proposed method is approximately eight times faster, because the computational cost of a direct solver typically scales with the cube of the system size.
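Under the cubic cost model assumed in this remark (ignoring constants, memory traffic, and the small transformations with T), the per-iteration speed-up can be written out explicitly. This is a back-of-the-envelope estimate, not a measured result:

```latex
\frac{\text{cost of one AVF(4) linear solve}}{\text{cost of three size-}d\text{ solves on three cores}}
  \approx \frac{(2d)^3}{d^3} = 8,
\qquad
\frac{\text{cost of one AVF(4) linear solve}}{\text{cost of three size-}d\text{ solves on one core}}
  \approx \frac{(2d)^3}{3\,d^3} = \frac{8}{3}.
```

Under this model, even a purely sequential implementation would exceed the break-even factor of 2 discussed above, although real timings depend on the solver and the hardware.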

However, it is important to note that such estimations are contingent upon the specifics of the problem and the selection of linear solvers. In the case of AVF(4), further parallelization might be achievable. Nonetheless, most techniques for parallelization can also be applied to the proposed method. Therefore, expecting the proposed method to compute a single step at least twice as fast as AVF(4) appears to be a reasonable assumption in most instances, particularly for large-scale problems.

Fig. 1 Error at \(t=1\) of numerical solutions for the Lotka–Volterra system. The proposed method with the parameters (4.5) and \(\tilde{\alpha } = -234\) is compared with the 2nd- and 4th-order methods proposed in [4]. Dashed and dotted lines show the slopes for 2nd- and 4th-order convergence, respectively.

5 Concluding remarks

In this paper, we have presented a family of fourth-order energy-preserving integrators based on partitioned CSRK methods. The integrators can be implemented on a parallel architecture if one of the parameters is chosen to satisfy a certain inequality. Actual implementations must be tailored to the problem at hand, and we anticipate that, owing to their parallelizability, these integrators will be substantially more efficient on large-scale problems. A more detailed evaluation of their performance in large-scale settings is left for future research, similar to the study carried out for CSRK methods in [16].

Availability of data and materials

Not applicable

Code availability

Notes

It is worth noting that the projection concept retains its utility. Additionally, we refer to [13], which shows the equivalence between projection methods and the discrete gradient methods mentioned below.

The degree plays a similar role to the stages of (partitioned) Runge–Kutta methods.

References

Amodio, P., Brugnano, L., Iavernaro, F.: Arbitrarily high-order energy-conserving methods for Poisson problems. Numer. Algor. (2022). https://doi.org/10.1007/s11075-022-01285-z

Brugnano, L., Calvo, M., Montijano, J.I., Rández, L.: Energy-preserving methods for Poisson systems. J. Comput. Appl. Math. 236(16), 3890–3904 (2012). https://doi.org/10.1016/j.cam.2012.02.033

Brugnano, L., Iavernaro, F., Trigiante, D.: Hamiltonian boundary value methods (energy preserving discrete line integral methods). JNAIAM. J. Numer. Anal. Ind. Appl. Math. 5(1–2), 17–37 (2010)

Cohen, D., Hairer, E.: Linear energy-preserving integrators for Poisson systems. BIT 51(1), 91–101 (2011). https://doi.org/10.1007/s10543-011-0310-z

Eidnes, S.: Order theory for discrete gradient methods. BIT 62(4), 1207–1255 (2022). https://doi.org/10.1007/s10543-022-00909-z

Gonzalez, O.: Time integration and discrete Hamiltonian systems. J. Nonlinear Sci. 6, 449–467 (1996). https://doi.org/10.1007/s003329900018

Hairer, E.: Energy-preserving variant of collocation methods. JNAIAM. J. Numer. Anal. Ind. Appl. Math. 5, 73–84 (2010)

Hairer, E., Lubich, C., Wanner, G.: Geometric numerical integration: structure-preserving algorithms for ordinary differential equations, 2nd edn. Springer-Verlag, Berlin (2006)

McLachlan, R.I., Quispel, G.R.W., Robidoux, N.: Geometric integration using discrete gradients. R. Soc. Lond. Philos. Trans. Ser. A Math. Phys. Eng. Sci. 357, 1021–1045 (1999). https://doi.org/10.1098/rsta.1999.0363

Miyatake, Y.: An energy-preserving exponentially-fitted continuous stage Runge-Kutta method for Hamiltonian systems. BIT 54(3), 777–799 (2014). https://doi.org/10.1007/s10543-014-0474-4

Miyatake, Y.: A derivation of energy-preserving exponentially-fitted integrators for Poisson systems. Comput. Phys. Commun. 187, 156–161 (2015). https://doi.org/10.1016/j.cpc.2014.11.003

Miyatake, Y., Butcher, J.C.: A characterization of energy-preserving methods and the construction of parallel integrators for Hamiltonian systems. SIAM J. Numer. Anal. 54, 1993–2013 (2016). https://doi.org/10.1137/15M1020861

Norton, R.A., McLaren, D.I., Quispel, G.R.W., Stern, A., Zanna, A.: Projection methods and discrete gradient methods for preserving first integrals of ODEs. Discrete Contin. Dyn. Syst. 35(5), 2079–2098 (2015). https://doi.org/10.3934/dcds.2015.35.2079

Quispel, G., Capel, H.: Solving ODEs numerically while preserving a first integral. Phys. Lett. A 218(3–6), 223–228 (1996). https://doi.org/10.1016/0375-9601(96)00403-3

Quispel, G.R.W., McLaren, D.I.: A new class of energy-preserving numerical integration methods. J. Phys. A 41, 045206 (2008). https://doi.org/10.1088/1751-8113/41/4/045206

Sakai, T., Kudo, S., Imachi, H., Miyatake, Y., Hoshi, T., Yamamoto, Y.: A parallelizable energy-preserving integrator MB4 and its application to quantum-mechanical wavepacket dynamics. Jpn. J. Ind. Appl. Math. 38(1), 105–123 (2021). https://doi.org/10.1007/s13160-020-00430-2

Tang, W., Sun, Y.: Construction of Runge-Kutta type methods for solving ordinary differential equations. Appl. Math. Comput. 234, 179–191 (2014). https://doi.org/10.1016/j.amc.2014.02.042

Acknowledgements

The author is grateful for various comments by anonymous referees.

Funding

Open Access funding provided by Osaka University. This work was partially supported by JST Grant No. JPMJPR2129 and JSPS Grant Nos. 20H01822, 20H00581, and 21K18301.

Author information

Contributions

Y.M. as the sole author is responsible for the research results, numerical experiments, and main manuscript.

Ethics declarations

Ethics approval

The author certifies that this manuscript has been submitted to only one journal at this time.

Consent for publication

The author provides consent for publication.

Conflict of interest

The author declares no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A. Coefficients of the elementary differentials

We present the coefficients of the elementary differentials used in Section 4.3. The Julia code used to verify these coefficients is available on GitHub at https://github.com/yutomiyatake/EP_fourth_Poisson.
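As a rough analogy only, the classical B-series order conditions for a Runge–Kutta method \((A, b)\) applied to \(\dot y = f(y)\) can be checked in the same spirit: the elementary weight \(\Phi(t)\) of each rooted tree \(t\) must equal the exact-solution coefficient \(1/\gamma(t)\). The Python sketch below does this for the eight trees of order at most four. It does not cover the trees with a black root, the P-series, or the CSRK part treated in this appendix, and the function names are hypothetical.

```python
import numpy as np


def order_conditions(A, b):
    """Map each rooted tree (bracket notation) to (Phi(t), 1/gamma(t)).

    Classical RK B-series only; assumes the row-sum condition c = A 1.
    """
    c = A.sum(axis=1)
    return {
        "tau":           (b.sum(),           1 / 1),
        "[tau]":         (b @ c,             1 / 2),
        "[tau,tau]":     (b @ c**2,          1 / 3),
        "[[tau]]":       (b @ A @ c,         1 / 6),
        "[tau,tau,tau]": (b @ c**3,          1 / 4),
        "[tau,[tau]]":   (b @ (c * (A @ c)), 1 / 8),
        "[[tau,tau]]":   (b @ A @ c**2,      1 / 12),
        "[[[tau]]]":     (b @ A @ A @ c,     1 / 24),
    }


def max_defect(A, b):
    """Largest |Phi(t) - 1/gamma(t)| over all trees of order <= 4."""
    return max(abs(phi - exact) for phi, exact in order_conditions(A, b).values())


if __name__ == "__main__":
    # Two-stage Gauss method (order 4): defects should be at rounding level.
    r = np.sqrt(3) / 6
    A_gauss = np.array([[1 / 4, 1 / 4 - r], [1 / 4 + r, 1 / 4]])
    b_gauss = np.array([1 / 2, 1 / 2])
    print(max_defect(A_gauss, b_gauss))
```

A method has order at least four exactly when all eight defects vanish; a verification of P-series coefficients follows the same pattern but over a larger set of trees.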

[Tables: coefficients of the elementary differentials with the black root for the P-series expansions of the exact solution, the fourth-order AVF collocation method, and the proposed method]

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Miyatake, Y. A new family of fourth-order energy-preserving integrators. Numer Algor 96, 1269–1293 (2024). https://doi.org/10.1007/s11075-024-01824-w

DOI: https://doi.org/10.1007/s11075-024-01824-w