Abstract

Large-scale optimization algorithms frequently require sparse Hessian matrices that are not readily available. Existing methods for approximating large sparse Hessian matrices have limitations. To overcome these, we propose a novel approach that reformulates the problem as the solution of a large linear least squares problem. The least squares problem is sparse but can include a number of rows that contain significantly more entries than other rows and are regarded as dense. We exploit recent work on solving such problems using either the normal equations or an augmented system to derive a robust approach for computing approximate sparse Hessian matrices. Example sparse Hessians from the CUTEst test problem collection for optimization illustrate the effectiveness and robustness of the new method.

1 Introduction

Consider the large sparse optimization problem

\[ \min_{x \in \mathbb{R}^n} f(x), \]

where f(x) is a sufficiently smooth function of n variables. The solution x may be required to satisfy additional conditions (for instance, the components of x must be nonnegative), in which case the optimization problem is said to be constrained; otherwise, it is unconstrained. Whilst the gradient \(g(x) \mathrel {\mathop :}=\nabla f(x)\) is often readily available, the Hessian matrix \(H(x) \mathrel {\mathop :}=\nabla ^2 f(x)\) is frequently difficult to provide. For example, the backward mode of automatic differentiation enables the gradient of a nonlinear function to be computed at a cost that is a small multiple of that of evaluating f(x), but the cost of evaluating H(x) using differencing techniques is \(\mathcal {O}(n)\) times that of f(x). This is unfortunate because there are important theoretical and practical benefits in having access to the Hessian matrix. The explosion of interest in machine learning and data science algorithms that involve optimizing a function has further emphasised the need for good approximations to Hessian matrices.

Interest in methods for building approximations to H(x) dates back to the 1960s. The focus at that time was on problems involving a small number of variables and consequently on small dense Hessian matrices. Extensions to the sparse case were not successful because either the formulae used generated dense matrices that were impractical for large problems, or imposing sparsity led to potential numerical instability in the approximation algorithms [7, 28,29,30]. Attention subsequently turned to limited-memory strategies [20, Chapter 7]. These did not seek to reproduce the Hessian matrix but to incorporate the curvature observed at a number of previous iterates. No attempt was made to impose sparsity.

Our interest is in large-scale problems for which it is essential that sparsity is exploited. The proposed new method formulates the problem as a large-scale linear least squares (LS) problem. In general, this LS problem is sparse but, if the Hessian matrix contains one or more rows with a large number of entries, then the LS matrix has some rows that are regarded as dense. These dense rows make the problem more challenging. Methods for tackling sparse-dense LS problems have been considered, for example, in [3, 5, 9, 23, 24, 26, 27]. Exploiting the work of Scott and Tůma [23, 26], we propose using sparse direct linear equation solvers combined with an iterative method. Recent software from the HSL Mathematical Software Library [16] is used to perform numerical experiments.

The paper is organised as follows. In Section 2, we introduce our proposed new LS formulation. Sparse direct methods for solving this LS problem are considered in Section 3, with an emphasis on the sparse-dense case. In Section 4, we report the results of numerical experiments that illustrate the potential of the new method to be used for approximating large sparse Hessian matrices in practice. Finally, concluding comments are given in Section 5.

2 Least squares formulation

Consider the twice differentiable function f(x) of n variables x, whose gradient g(x) is known. The challenge is to build approximations \(B^{(k)} = \{b^{(k)}_{ij}\}\) of the Hessian matrix H(x) at a sequence of given iterates \(x^{(k)}\). \(H(x^{(k)})\) is an \(n \times n\) symmetric matrix and we assume that its sparsity pattern (the locations of the nonzero entries) is known. The approach we propose is based on using the data from a sequence of \(m \ge 1\) previous steps to estimate \(B^{(k)}\). The idea of using recent difference pairs

was initially proposed by Fletcher, Grothey and Leyffer [8]. Their aim was to construct \(B^{(k)}\) that best satisfies the multiple secant conditions given by

They did this by solving, for each k, the convex quadratic programming problem

Here, if \(W = \{w_{ij}\}\) is a matrix then \(\Vert W \Vert _F^2\) denotes its squared Frobenius norm and \(\mathcal {S}(W) \mathrel {\mathop :}=\{ (i,j) : w_{ij} \ne 0 \}\) is its sparsity pattern. Solving the so-called Constrained Procrustes Problem (2.3) results in an estimate of the Hessian matrix that is symmetric and whose sparsity is preserved, although positive-definiteness is not guaranteed. Consequently, this technique is useful inside a trust region method where positive-definiteness of the Hessian matrix is not a requirement. Problem (2.3) can be solved using existing well-developed optimization techniques, but for large problems these are prohibitively expensive. Instead, we propose stacking the (unknown) nonzero entries in the upper triangular part of \(B^{(k)}\) row-by-row above each other in a vector \(z^{(k)}\) of size equal to the number of nonzero entries in the upper triangular part of \(B^{(k)}\) (equivalently, the entries in the lower triangular part are stacked column-by-column). In this way, if \(nz(B^{(k)})\) denotes the number of nonzero entries in the upper triangular part of \(B^{(k)}\), we redefine the problem as a large sparse linear system of equations of size \(mn \times nz(B^{(k)})\) given by

\[ A^{(k)} z^{(k)} = c^{(k)}. \tag{2.4} \]

Here the matrix \(A^{(k)}\) and the vector \(c^{(k)}\) are known and depend on the secant conditions (2.2). To illustrate this formulation, consider the following two simple examples.

Example 1

Let \(n=3\) and consider the approximate Hessian matrix with \(nz(B^{(k)}) = 4\)

For \(m=2\), the \( 6 \times 4\) linear system (2.4) is

Example 2

Let \(n=4\) and consider the approximate Hessian matrix with \(nz(B^{(k)}) = 6\)

For \(m=2\), the \( 8 \times 6\) linear system (2.4) is

The matrix \(A^{(k)}\) is rectangular with its row dimension dependent on m (the number of secant directions) while the column dimension and the number of entries in each row depend on \(\mathcal {S}(H(x^{(k)}))\). An important and attractive feature of this formulation is that it naturally imposes symmetry on \(B^{(k)}\).
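The assembly of the system matrix and right-hand side from the secant pairs can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names `build_secant_ls` and `unpack_symmetric`, the small test matrix `H`, the steps `S`, and the dense storage are all assumptions made for the example, and the rows are ordered secant pair by secant pair, which may differ from the ordering used in the examples above (row ordering does not change the least squares solution).

```python
import numpy as np

def build_secant_ls(pattern, S, Y):
    """Assemble A (mn x N) and c (mn) from the secant conditions B s = y.

    pattern : upper-triangular nonzero positions (i, j), i <= j, row by row
    S, Y    : n x m arrays whose columns are the steps and gradient differences
    """
    n, m = S.shape
    A = np.zeros((m * n, len(pattern)))
    c = np.zeros(m * n)
    for l in range(m):
        for col, (i, j) in enumerate(pattern):
            # b_ij multiplies s_j in equation i ...
            A[l * n + i, col] += S[j, l]
            # ... and, by symmetry, multiplies s_i in equation j
            if i != j:
                A[l * n + j, col] += S[i, l]
        c[l * n:(l + 1) * n] = Y[:, l]
    return A, c

def unpack_symmetric(pattern, z, n):
    """Scatter the stacked unknowns z back into a symmetric n x n matrix."""
    B = np.zeros((n, n))
    for (i, j), value in zip(pattern, z):
        B[i, j] = B[j, i] = value
    return B

# Hypothetical 3 x 3 Hessian with 4 nonzeros in its upper triangle
H = np.array([[2.0, 1.0, 0.0],
              [1.0, 3.0, 0.0],
              [0.0, 0.0, 4.0]])
pattern = [(0, 0), (0, 1), (1, 1), (2, 2)]
S = np.array([[1.0, 0.5],
              [-1.0, 2.0],
              [0.5, 1.0]])          # m = 2 steps
Y = H @ S                            # exact gradient differences for a fixed H

A, c = build_secant_ls(pattern, S, Y)
z, *_ = np.linalg.lstsq(A, c, rcond=None)
B = unpack_symmetric(pattern, z, n=3)
```

Because each off-diagonal unknown appears once in the stacked vector but contributes to two rows of the system, symmetry of the recovered matrix is built in rather than imposed as a constraint.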

There may be null rows present in the system (2.4); these result from linear terms in the objective and/or constraints of the optimization problem. All null rows are removed prior to solving the system. Whether the resulting LS system is over- or under-determined depends on m and the density of \(\mathcal {S}(H(x^{(k)}))\). If it is over-determined then the equations will be inconsistent in general. In this case, we compute the least squares solution, that is, \(z^{(k)}\) that minimizes

\[ \Vert A^{(k)} z^{(k)} - c^{(k)} \Vert _2^2. \tag{2.5} \]

If the system is under-determined then there are either infinitely many solutions or, if the equations are inconsistent, none. In this case, the \(z^{(k)}\) that minimizes the regularized problem

is computed. In this study, we focus on choosing m so that the system is over-determined (strictly speaking it is sufficient for the system to be well-determined).

Although most rows of \(A^{(k)}\) are sparse, some can be significantly denser than the others. This occurs if the Hessian matrix has one or more rows with many entries, which can be the case in some nonlinear optimization problems where the objective and/or constraints involve all (or many) of the variables. In particular, if \(B^{(k)}\) has a row with \(n_1 \le n\) entries then \(A^{(k)}\) has m rows with \(n_1\) entries (see Example 2 with \(n_1 = n = 4\)). If \(n_1\) is large (compared to the number of entries in the other rows of \(A^{(k)}\)), we refer to the problem as a sparse-dense LS problem.

For simplicity of exposition, in the remainder of this paper, we omit the superscript (k). When we wish to emphasise the dependence on the secant parameter, we use the notation A(m). We also denote the number of entries in the upper triangular part of \(B^{(k)}\) by N and set \(mn = M\).

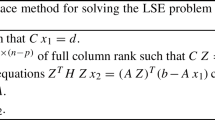

3 Solving large-scale least squares problems

Solving large-scale linear least squares problems is well known to be significantly harder than solving large square linear systems of equations; sparse-dense LS problems are particularly challenging. In 2017, a review by Gould and Scott [12] reported on the performance of different software packages when employed to solve an extensive set of large LS problems arising from a range of practical applications. Direct methods for solving such systems are characterized by computing a matrix factorization in such a way that the problem is transformed into one that involves solving systems of equations with factor matrices that are easy and inexpensive to solve. Direct methods obtain the solution in a finite and fixed number of steps that is independent of A and c. Due to rounding errors the computed solution is generally not equal to the exact one, but if a direct method is well implemented, the resulting software is extremely robust and can be used as a “black box solver”, with the user not needing any detailed knowledge or understanding of what is going on within the box. By contrast, an iterative method generally involves an unknown number of steps and its performance is highly problem dependent. A major advantage of iterative methods is that they require much less memory than direct methods, for which the memory requirements generally increase rapidly with problem size. Thus for very large problems, iterative methods are needed. For these to be effective, preconditioning is required. Gould and Scott highlighted some of the weaknesses of existing preconditioners for LS problems and demonstrated the specific need for new approaches together with software designed for solving sparse-dense LS problems. This led us to look at developing new ideas for preconditioners [2] and to work on algorithms that can handle sparse-dense problems [23, 24, 25, 26, 27]. These include direct solvers and LS preconditioners and, importantly, combining direct and iterative techniques.

In this paper, the sizes of the systems we are interested in allow us to focus on sparse direct methods and, for sparse-dense problems, we use them within an iterative method. We consider using both the normal equations and the larger but sparser augmented system formulation.

3.1 Direct methods for sparse LS problems

Solving (2.5) is mathematically equivalent to solving the \(N \times N\) normal equations

\[ C z = A^T c, \qquad C = A^T A, \tag{3.1} \]

where, if A has full column rank, the normal matrix C is symmetric and positive definite. Thus, standard methods for solving such systems can be employed. In particular, a Cholesky factorization \(C = LL^T\), where the factor L is a lower triangular matrix, can be computed. The 2-norm condition number of the normal matrix is

\[ \kappa _2(C) = \frac{\lambda _1(C)}{\lambda _N(C)}, \]

where \(\lambda _1(C)\) and \(\lambda _N(C)\) are its largest and smallest eigenvalues, respectively. As the condition number of C is the square of that of A, an accurate solution may be difficult to compute if A is poorly conditioned. If A is not full rank, the Cholesky factorization of C breaks down; near rank degeneracy can cause similar numerical problems in finite precision arithmetic.
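The normal equations route and the squaring of the condition number can be checked on a small dense example. The sketch below is illustrative only: it uses NumPy's dense Cholesky routine and a random matrix as stand-ins for the sparse solvers and test problems considered in this paper.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.standard_normal((8, 3))      # small full-column-rank LS matrix
c = rng.standard_normal(8)

# Normal equations: C z = A^T c with C = A^T A = L L^T
C = A.T @ A
L = np.linalg.cholesky(C)            # lower triangular factor
w = np.linalg.solve(L, A.T @ c)      # forward substitution step
z = np.linalg.solve(L.T, w)          # back substitution step

# Reference solution computed without forming C
z_ref, *_ = np.linalg.lstsq(A, c, rcond=None)

cond_A = np.linalg.cond(A)           # 2-norm condition number of A
cond_C = np.linalg.cond(C)           # equals cond_A**2 up to rounding
```

For a well-conditioned matrix such as this one the two solutions agree to working precision; the loss of accuracy from squaring the condition number only becomes visible when A is poorly conditioned.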

Observe that if P is any permutation matrix, then

\[ (PA)^T (PA) = A^T P^T P A = A^T A = C, \]

so that the normal matrix is independent of the ordering of the rows of A. Hence for our Hessian approximations, C does not depend on the ordering of the secant conditions. However, the ordering of the rows and columns of C influences the sparsity of its factors. Many direct solvers offer an initial ordering phase that chooses an appropriate permutation to limit fill-in of the factors; otherwise, an ordering package such as METIS [17] (nested dissection ordering) or the HSL routine HSL_MC69 (which offers minimum degree and approximate minimum degree orderings) can be employed to preorder C [16].

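An alternative approach is to use the much larger but sparser \((M+N)\times (M+N)\) augmented system

\[ \begin{pmatrix} I & A \\ A^T & 0 \end{pmatrix} \begin{pmatrix} r \\ z \end{pmatrix} = \begin{pmatrix} c \\ 0 \end{pmatrix}, \tag{3.2} \]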

where \(r = c - Az\) is the residual vector. This is a symmetric indefinite system (commonly called a saddle point system) and therefore, if there is sufficient memory available, a sparse direct solver that incorporates numerical pivoting for stability can be used. Well-known and widely-available codes that compute an \(LDL^T\) factorization in which L is unit lower triangular and D is block diagonal with blocks of size 1 and 2 include MA57 [6], HSL_MA97 [14, 15], MUMPS [18] and WSMP [32]. Again, preordering of the rows of the augmented system is key to limiting the density of the L factor and hence the memory requirements and the operation counts. Numerical results for direct solvers applied to both the normal equations and augmented system approaches are given in [22]. The reported experiments indicated that neither approach is consistently the best in terms of speed and/or the size of the computed factors.
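A small dense sketch of the augmented system may help fix the block structure. It assumes the arrangement with the identity in the leading block and the unknowns ordered as (r, z); a general dense solve stands in for the pivoted sparse \(LDL^T\) factorization, and the random data are purely illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
M, N = 8, 3
A = rng.standard_normal((M, N))
c = rng.standard_normal(M)

# Symmetric indefinite (saddle point) matrix of order M + N
K = np.block([[np.eye(M), A],
              [A.T, np.zeros((N, N))]])
rhs = np.concatenate([c, np.zeros(N)])

sol = np.linalg.solve(K, rhs)
r, z = sol[:M], sol[M:]              # residual and LS solution

z_ref, *_ = np.linalg.lstsq(A, c, rcond=None)
```

The first block row states r + Az = c and the second states A^T r = 0, which together are the optimality conditions of the least squares problem.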

Prescaling A can also be important for the success of the solver. In general, in place of (3.1) we solve

where S is a diagonal scaling matrix. For example, S could be chosen so that the 2-norm of each column of the scaled matrix AS is equal to unity. Similarly, in place of (3.2), we solve

To simplify notation, we omit S from the following discussion (but it is used in all numerical experiments).
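The column scaling mentioned above, in which each column of AS has unit 2-norm, can be sketched as follows. The variable names and the badly scaled random test matrix are assumptions made for illustration; the scaled unknown is called `w` here and the original unknown is recovered as z = S w.

```python
import numpy as np

rng = np.random.default_rng(0)
# Columns of very different magnitude (hypothetical test data)
A = rng.standard_normal((8, 3)) * np.array([1.0, 50.0, 0.02])
c = rng.standard_normal(8)

s = 1.0 / np.linalg.norm(A, axis=0)  # diagonal of the scaling matrix S
AS = A * s                           # scaled matrix A S with unit column norms

# Scaled normal equations (AS)^T (AS) w = (AS)^T c, then z = S w
w = np.linalg.solve(AS.T @ AS, AS.T @ c)
z = s * w

z_ref, *_ = np.linalg.lstsq(A, c, rcond=None)
cond_before = np.linalg.cond(A)
cond_after = np.linalg.cond(AS)
```

In this example the scaling reduces the condition number substantially, although column scaling cannot be expected to repair every source of ill-conditioning.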

Methods based on the QR factorization of A are also possible. These can be more stable for ill-conditioned problems but they can also be prohibitively expensive for large-scale problems. A recent computational study of QR methods for solving sparse least squares problems is given in [27].

3.2 Influence of m on the normal matrix

Assume that A(m) is sparse with full column rank. The rows of A(m) can be permuted so that

where each \(A_j\) is of order \(n \times N\) and \(\mathcal {S}(A_j) = \mathcal {S}(A_{j+1})\), \( 1 \le j < m\). In Example 1, \(A_1\) comprises rows 1, 3 and 5 and \(A_2\) rows 2, 4 and 6. It follows that the \(N \times N\) normal matrix is

\[ C(m) = A(m)^T A(m) = \sum _{j=1}^{m} A_j^T A_j = \sum _{j=1}^{m} C_j, \]

where the \(C_j\) are independent and each has the same sparsity pattern. The \(C_j\) can be computed in parallel and then summed to obtain C(m). Thus increasing m has a limited effect on the work required to form C(m).

Writing \(C(m+1) = C(m) + A_{m+1}^T A_{m+1} \), it follows from the Courant-Fischer theorem that if the eigenvalues \(\{\lambda _i(C(m))\}\) and \(\{\lambda _i(C(m+1))\}\) (\(1 \le i \le N\)) are in decreasing order then the extreme eigenvalues satisfy

\[ \lambda _1(C(m+1)) \ge \lambda _1(C(m)) \quad \text{and} \quad \lambda _N(C(m+1)) \ge \lambda _N(C(m)). \]

That is, as m increases the eigenvalues of the corresponding normal matrix move away from zero. There is, however, no guarantee that the conditioning of the normal matrix improves.
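The monotone behaviour of the extreme eigenvalues can be observed numerically. In the sketch below the blocks are small dense random matrices, which is an assumption made for brevity (in the Hessian setting the blocks are sparse and share a sparsity pattern); only the additive structure of C(m) matters for the check.

```python
import numpy as np

rng = np.random.default_rng(0)
n, N, m_max = 4, 6, 5
blocks = [rng.standard_normal((n, N)) for _ in range(m_max)]

C = np.zeros((N, N))
extremes = []                        # (smallest, largest) eigenvalue of C(m)
for A_j in blocks:
    C = C + A_j.T @ A_j              # C(m) = C(m-1) + A_m^T A_m
    eig = np.linalg.eigvalsh(C)      # eigenvalues in ascending order
    extremes.append((eig[0], eig[-1]))

smallest = [lo for lo, _ in extremes]
largest = [hi for _, hi in extremes]
```

With n = 4 and N = 6 the matrix C(1) is singular, so the smallest eigenvalue starts at zero (up to rounding) and becomes positive once enough blocks have been added. Both extremes are nondecreasing, but their ratio need not decrease.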

3.3 Solving sparse-dense LS problems

Observe that if one or more rows of A contain a significant number of entries, then the normal matrix C is effectively dense and factorizing it is impractical for large problems. Indeed, a direct solver will fail because of insufficient memory and if an incomplete factorization of C is employed as a preconditioner for an iterative method, the error in the factorization can be so large as to prohibit its effectiveness as a preconditioner. Dense rows do not prevent the use of a general-purpose sparse indefinite direct solver to solve the augmented system (3.2), but this fails to take advantage of the block structure and the need for pivoting for numerical stability inhibits the exploitation of parallelism. Obtaining robust preconditioners for such systems has been the subject of substantial research (see, for instance, [4, 21, 31] and the references therein), but this remains a challenge.

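Assume the M rows of the (permuted) LS system matrix A are split into two parts with a conformal splitting of the right-hand side vector c as follows

\[ A = \begin{pmatrix} A_s \\ A_d \end{pmatrix}, \quad A_s \in \mathbb{R}^{m_s \times N}, \quad A_d \in \mathbb{R}^{m_d \times N}, \quad c = \begin{pmatrix} c_s \\ c_d \end{pmatrix}, \quad c_s \in \mathbb{R}^{m_s}, \quad c_d \in \mathbb{R}^{m_d}, \tag{3.3} \]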

with \(M = m_s + m_d\), \(m_s\ge N\) and \(m_s \gg m_d\). Problem (2.5) becomes

Splitting can be used to tackle sparse-dense problems in which A contains \(m_d \ge 1\) rows that have many more entries than the other rows (in our Hessian matrix approximations, \(m_d\) is a multiple of m). These “dense” rows comprise \(A_d\). In Example 2, the last \(m_d = m = 2\) rows of \(A^{(k)}\) arise from the last row of \(B^{(k)}\), which is dense and so these rows are dense (with n entries). Another possible motivation for splitting the rows is to accommodate appending a set of additional rows, which are not necessarily dense, to A. For example, if the number of secant conditions is increased to \(m +m_1\) then \(A_d\) corresponds to the extra \(m_d= m_1n\) rows and we are then interested in approaches that avoid recomputing everything from scratch.

Using (3.3), the normal equations are given by

These can be solved using the equivalent \((N+m_d) \times (N+m_d)\) blocked linear system

If \(A_s\) has full column rank and all its rows are sparse, then the reduced normal matrix \(C_s\) is symmetric positive definite and sparse. Let \(C_s = (P_s L_s) (P_s L_s)^T\) be its sparse Cholesky factorization, where the sparse factor \(L_s\) is lower triangular and \(P_s\) is a permutation matrix that is chosen to limit the number of entries in \(L_s\). We then have the signed Cholesky factorization

where \(B_d\) is the solution of the triangular system

and \(L_d\) is the Cholesky factor of the \(m_d \times m_d\) (negative) Schur complement

Assuming \(m_d\) is small, \(L_d\) can be computed using dense linear algebra and most of the work is in computing the sparse factorization of \(C_s\). Thus, if the rows in \(A_d\) change (whether or not they are dense), this approach provides an inexpensive updating strategy.
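The block elimination behind this approach can be sketched with dense linear algebra. The code below is an illustration under stated assumptions rather than the factorization used by any particular solver: the permutation \(P_s\) is omitted, `B_d` is stored as an N by m_d array (the orientation is a choice made here), the auxiliary unknown is taken to be y = A_d z, and the data are random.

```python
import numpy as np

rng = np.random.default_rng(1)
N, m_s, m_d = 5, 12, 2
A_s = rng.standard_normal((m_s, N))  # "sparse" block (dense here for brevity)
A_d = rng.standard_normal((m_d, N))  # "dense" rows
c_s = rng.standard_normal(m_s)
c_d = rng.standard_normal(m_d)

# Factorize the reduced normal matrix C_s = A_s^T A_s = L_s L_s^T
L_s = np.linalg.cholesky(A_s.T @ A_s)
b = A_s.T @ c_s + A_d.T @ c_d        # right-hand side of the normal equations

B_d = np.linalg.solve(L_s, A_d.T)    # L_s B_d = A_d^T
u = np.linalg.solve(L_s, b)          # L_s u = b

# Small dense Schur complement I + B_d^T B_d and its Cholesky factor
L_d = np.linalg.cholesky(np.eye(m_d) + B_d.T @ B_d)
y = np.linalg.solve(L_d.T, np.linalg.solve(L_d, B_d.T @ u))

# Back substitution with the sparse factor
z = np.linalg.solve(L_s.T, u - B_d @ y)

z_ref, *_ = np.linalg.lstsq(np.vstack([A_s, A_d]),
                            np.concatenate([c_s, c_d]), rcond=None)
```

Only `B_d`, `L_d` and the two small solves depend on the rows in `A_d`, so changing those rows leaves the factor `L_s` untouched.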

In practice, \(A_s\) can contain null columns. This is illustrated by Example 2, in which \(A_s\) comprises the first 6 rows; column 6 of \(A_s\) is null. In this case, \(A_s\) is rank-deficient and \(C_s\) is positive semidefinite and a Cholesky factorization breaks down. Even if \(C_s\) has no null columns, it can be singular or highly ill conditioned. There are a number of ways to overcome this, including removing the null columns explicitly [24] or employing matrix stretching [26]. A more straightforward approach is to use regularization in which the Cholesky factorization of the globally shifted matrix \( C_s(\alpha ) = A_s^T A_s + \alpha I \) is computed. For any \(\alpha >0\), \( C_s(\alpha )\) is positive definite and increasing \(\alpha \) improves its conditioning. However, the value of the least-squares objective computed using \( C_s(\alpha )\) may differ from the optimum for the original LS problem (with the difference increasing with \(\alpha \)). We can seek to recover the required LS solution by employing an iterative LS solver such as CGLS, LSQR or LSMR with

used as the preconditioner (see, for example, [26]). From the identity

we obtain

It follows that \(y = (C_s(\alpha ) + A_d^T A_d)^{-1}z\) can be computed from the solution of the system

Using (3.6) with \(C_s(\alpha )\) in place of \(C_s\), the steps needed to solve (3.8) (that is, to apply the preconditioner) are given in Algorithm 1.

Algorithm 1 Application of the preconditioner (3.7).
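The role of the shifted preconditioner can be illustrated with a textbook preconditioned conjugate gradient iteration applied to the normal equations (a CGLS-type method). This is a sketch under several assumptions: the function `pcgls` and its parameters are hypothetical names, the preconditioner \(C_s(\alpha ) + A_d^T A_d\) is formed explicitly and applied with a dense solve rather than through the factorization steps of Algorithm 1, and the shift value is chosen for the example only.

```python
import numpy as np

def pcgls(A, c, apply_Minv, tol=1e-12, maxit=50):
    """Preconditioned CG on A^T A z = A^T c without forming A^T A."""
    z = np.zeros(A.shape[1])
    r = c.copy()                     # LS residual c - A z
    g = A.T @ r                      # residual of the normal equations
    g0 = np.linalg.norm(g)
    h = apply_Minv(g)
    p = h.copy()
    gamma = g @ h
    iters = 0
    while iters < maxit and np.linalg.norm(g) > tol * g0:
        q = A @ p
        step = gamma / (q @ q)
        z += step * p
        r -= step * q
        g = A.T @ r
        h = apply_Minv(g)
        gamma_new = g @ h
        p = h + (gamma_new / gamma) * p
        gamma = gamma_new
        iters += 1
    return z, iters

rng = np.random.default_rng(2)
N, m_s, m_d = 5, 12, 2
A_s = rng.standard_normal((m_s, N))
A_s[:, -1] = 0.0                     # null column: C_s is singular
A_d = rng.standard_normal((m_d, N))
A = np.vstack([A_s, A_d])
c = rng.standard_normal(m_s + m_d)

alpha = 1e-8                         # illustrative shift
M_pre = A_s.T @ A_s + alpha * np.eye(N) + A_d.T @ A_d
z, iters = pcgls(A, c, lambda v: np.linalg.solve(M_pre, v))

z_ref, *_ = np.linalg.lstsq(A, c, rcond=None)
```

Because the preconditioner differs from the true normal matrix only by the small shift, the iteration recovers the solution of the unshifted problem in very few steps.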

An alternative to shifting \(C_s\) is to use the splitting (3.3) with the augmented system (3.2) to obtain

where

Eliminating \(r_s\) reduces the problem to a 2-block system of order \((N+m_d)\times (N+m_d)\) of the form

Either K or \(K_r\) can be factorized using a sparse symmetric indefinite solver. The former has the advantage of not requiring the explicit computation of the reduced normal matrix \(C_s\) while the latter is a smaller system that corresponds to choosing the first \(m_s\) pivots in the factorization of K in the natural order.

4 Numerical experiments

The problems used in our experiments all come from the CUTEst test collection [11]; they are listed in Table 1. Algorithm 1 of [26] with the density parameter set to 0.05 is used to identify rows of the least squares matrix that we treat as dense; \(n_d\) is the number of such rows. The table includes the minimum number \(m_{min}\) of secant equations for the corresponding least squares matrix \(A^{(k)}\) in equation (2.4) to be overdetermined (excluding null rows). In practice, there may be situations, particularly during the earlier iterations of an optimization algorithm, where there are insufficient past iterations to enable m to be as large as in our experiments. In the current study, we do not consider this initialisation phase but assume throughout that we can use any \(m \ge m_\text {min}\).

The characteristics of the machine used to perform the experiments are given in Table 2. Eight processor cores are used for our reported results and timings are elapsed times in seconds.

All experiments (with the exception of the conditioning results given in Table 3) are performed in double precision arithmetic using the Fortran linear least squares solver HSL_MA85 from the HSL Mathematical Software Library [16]. This package is designed for large-scale problems that may contain some dense rows. It solves the system (3.5) or (3.9) (or (3.10) if there are some dense rows) using the sparse direct linear equation solver HSL_MA87 or HSL_MA97 respectively [13,14,15]. Both HSL_MA87 and HSL_MA97 employ OpenMP for parallelism and exploit high level BLAS routines. HSL_MA87 uses a DAG-based algorithm to compute the Cholesky factorization of sparse symmetric positive definite matrices. For problems with dense rows, if \(A_s\) contains null columns then the shift \(\alpha \) is set to \(10^{-12}\) and HSL_MA85 uses the factors of \(C_s(\alpha )\) computed by HSL_MA87 to precondition the iterative solver CGLS (as discussed in Section 3.3). For problems with no dense rows, HSL_MA85 may use iterative refinement to improve the LS solution. For the augmented system approach, HSL_MA85 uses the multifrontal code HSL_MA97. It incorporates numerical pivoting within an \(LDL^T\) factorization. GMRES may be used within HSL_MA85 to improve the solution, with the factors computed by HSL_MA97 used as a preconditioner. HSL_MA85 includes options for scaling the least squares problem and for ordering the linear systems to limit the number of entries in the factors and the operations needed to perform the factorizations. In our experiments, we use equilibration scaling and nested dissection ordering and the convergence tolerances used by HSL_MA85 are set to \(\texttt{delta1} = 10^{-10}\), \(\texttt{delta2} = 10^{-8}\) and \(\mathtt{delta\_gmres} = 10^{-10}\). Further details of the package HSL_MA85 and the options it offers are given in the user documentation available at https://www.hsl.rl.ac.uk/catalogue/hsl_ma85.html.

For the purposes of verifying the results obtained using our least squares approach, we assume the Hessian matrix \(H=\{h_{ij}\}\) is known and report the relative componentwise error

where \(B=\{b_{ij}\}\) is the computed approximation of H. We also report the norm of the least squares residual \(\Vert r\Vert _2 = \Vert Az - c\Vert _2\).

4.1 Fixed Hessian matrix, general steps

While our ultimate goal is to provide useful, evolving Hessian matrix approximations for nonlinear functions, we start by testing whether the proposed new LS methods can compute good approximations in the simple case in which the Hessian matrix is fixed. That is, \(H(x^{(k)}) = H\) for all k. To do this, we consider the (unconstrained) quadratic programming problem

involving a scalar c, vector g and symmetric matrix H (note that here \(H = \nabla ^2 f(x)\) for all x). This problem underlies much of unconstrained optimization, with f and g often representing function and gradient values of a Taylor approximation to a nonlinear function f(x) evaluated at suitable x, and H being an approximation to its Hessian matrix. This H is the matrix we seek to approximate.

We also want to test problems with constraints; these can involve dense rows. Thus, we consider the more general problem

Here \(c_q\), \(g_q\) and \(H_q\) are the values, gradients and approximations to Hessian matrices of a given set of \(n_c\) nonlinear constraints. In this case, if \(\mu _q\) are Lagrange multipliers, the quadratic Lagrangian function

for which the Hessian matrix

is fundamental to many constrained optimization algorithms. We want to approximate the matrix \(H_L\).

Our interest is in investigating how the proposed new LS approximation methods perform in practice. To do so, we consider idealised instances of problems (4.2) and (4.3) in which the Hessian matrices H and \(H_L\) are fixed (they are independent of the iteration k). The method we use to generate our test Hessian matrices is described in Appendix A. For unconstrained (respectively, constrained) CUTEst problems, having generated a fixed Hessian matrix H (respectively, \(H_L\)), we randomly generate \(s^{(l)} \in (-1,1)\) and then compute \(y^{(l)} = H s^{(l)}\) (respectively, \(y^{(l)} = H_L s^{(l)}\)) for \(l = 1,\dotsc ,m\).

4.1.1 Varying the secant parameter

Table 3 presents estimates of the extremal eigenvalues for a subset of the test problems. These values were computed using the Matlab function eigs. They illustrate how the conditioning of the normal matrix improves as the secant parameter m increases. Choosing the minimum value \(m_{min}\) for (2.4) to be overdetermined (excluding null rows) can result in the system being close to singular (or even singular) in machine precision. Based on our experiments, we advocate choosing m to be at least \(m_{min} + 5\). However, for some problems a larger value is needed. In particular, we use \(m= m_{min} + 10\) for our experiments involving BQPGAUSS, and for TWIRIMD1 we use \(m = 70\). Note that it is possible to construct artificial examples for which increasing m leads to growth in the condition number, but we did not encounter this behaviour in practice.

For the subset of problems in Table 3, Table 4 shows the effects of varying the secant parameter m on the performance of the LS solver. Results are reported for both the normal equation and the augmented system approaches (with the modifications of Section 3.3 used for the sparse-dense problems BQPGAUSS, CAR2 and ORTHREGE). For the normal equation formulation, the work involved in computing the Cholesky factors is independent of m but the computed solution, residual and \(rel\_err\) depend on m. The size of the augmented system increases with m, but this may not mean an increase in the number of entries in the factors or in the operation count. This can occur if for smaller m the problem is ill-conditioned because then the indefinite factorization involves more work to retain numerical stability. The number of delayed pivots (reported as ndelay) is an indication of this (for larger m, ndelay is zero, or close to zero). We note that the quality of the results measured using the residual and relative error is similar for both the normal equations and augmented system approaches.

4.1.2 The importance of exploiting dense rows

For problems with one or more dense rows, Tables 5 and 6 illustrate the importance of exploiting these rows when solving the least squares problem (2.5). \(m_d = 0\) means that all the rows (including those that are dense) are treated by the solver HSL_MA85 as sparse. As expected, this leads to much denser factors that are more expensive to compute. For the normal equation formulation the increases are particularly large. For example, for problem ORTHREGE, if dense rows are exploited the normal matrix formulation requires 1.42E+05 flops and the solution time is 0.122 seconds but if all the rows are treated as sparse, the flops needed are 1.73E+11 and the time increases to 4.587 seconds.

When dense rows are exploited, the normal equations can be significantly faster than using the augmented system (for example, for problems BQPGAUSS and CAR2). This is because the Cholesky factorization is faster than an \(LDL^T\) factorization that has the overhead of pivoting for numerical stability.

In the remainder of the paper, all experiments on problems containing dense rows exploit those rows.

4.1.3 Results for problems with no dense rows

Table 7 reports results for the problems that have no dense rows. Again, both the normal equation and augmented system formulations are successful and generally of comparable quality.

4.2 Fixed Hessian matrix, nearly-dependent steps

Having confirmed that under idealized circumstances we can recover good approximations to Hessian matrices using our least squares approaches, we now consider two more realistic scenarios. In the first, we recognise that algorithms may produce steps that lie close to low-dimensional subspaces rather than uniformly in \(R^n\). For example, it is well known that the iterates generated by the steepest-descent method tend to lie predominantly in a subspace spanned by the eigenvectors corresponding to the two largest eigenvalues of the Hessian [1, 19]. Our aim is thus to assess the ability to approximate a Hessian matrix when the step directions \(s^{(l)}\) are not well distributed.

To this end, we repeat our experiments for the fixed H and \(H_L\) except we now generate the \(s^{(l)}\), \(l=1,\dotsc ,m\) as follows. For a chosen \(d < m\), we compute \(s^{(l)} \in (-1,1)\) randomly as before for \(l = 1,\ldots ,d\). Then for some small \(0 < \epsilon \ll 1\) and \(l = d+1,\dotsc ,m\), we set \(s^{(l)} = s^{(l-d)} + \epsilon \rho \), where \(\rho \in (-1,1)\) is a pseudo random number. This is intended to simulate optimization steps \(s^{(l)}\) that lie in subspaces of effective (but not exact) dimension d. In our experiments, \(\epsilon = 10^{-5}\) and \(d = 0.8m\). The results are given in Table 8. As the conditioning gets worse with nearly-dependent steps, for some of the problems we found that to obtain a \(rel\_err\) of \(O(10^{-9})\) or less a larger secant parameter was required; the values used are reported in column 2 of the table. For example, for problem BQPGAUSS, we used \(m = 20\), compared to the previous value of 15. With appropriate m, we again see that both the normal equation and augmented system formulations are successful in obtaining high quality approximate Hessian matrices.
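The construction of nearly-dependent steps described above can be sketched as follows. The dimensions are hypothetical, and the perturbation \(\rho \) is interpreted here as an independent pseudo random value for each component of the step, which is an assumption about the intended construction.

```python
import numpy as np

rng = np.random.default_rng(0)
n, m, eps = 50, 20, 1e-5
d = (4 * m) // 5                     # d = 0.8 m independent directions

S = np.empty((n, m))
S[:, :d] = rng.uniform(-1.0, 1.0, size=(n, d))
for l in range(d, m):
    # Each remaining step is a tiny perturbation of an earlier one
    S[:, l] = S[:, l - d] + eps * rng.uniform(-1.0, 1.0, size=n)

sing = np.linalg.svd(S, compute_uv=False)
effective_dim = int(np.sum(sing > 1e-3 * sing[0]))
```

The singular values of S split into d values of moderate size and m - d values of order \(\epsilon \), which is the sense in which the steps span a subspace of effective dimension d.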

4.3 Varying the Hessian matrix

In practice it is unlikely that the Hessian matrix is fixed, and thus exact reproduction from gradient differences is unlikely. In particular, from Taylor’s theorem

for objective functions with gradients g(x) and locally Lipschitz Hessian matrices H(x). If there are m steps \(s^{(l)}\), then

where \(S = (s^{(1)},\ldots ,s^{(m)}), \; Y = (y^{(1)},\ldots ,y^{(m)}), \; y^{(l)} = g(x+s^{(l)}) - g(x)\) and \(\Vert E \Vert = O(\Vert S \Vert ^2)\). Thus if \( B = Y S^{-1}\) then

and B is a good approximation to H(x) provided \(\Vert S \Vert \) and \(\Vert S^{-1} \Vert \) are modest.

We simulate this for \(l=1,\dotsc ,m\) by generating \(s^{(l)}\) as in Section 4.2 and then generating a perturbed \(y^{(l)} = H s^{(l)} + \epsilon \rho \) (or \(y^{(l)} = H_L s^{(l)} + \epsilon \rho \)) for small \(0 < \epsilon \ll 1\) and pseudo random \(\rho \in (-1,1)\). We no longer expect to reproduce H exactly (as the LS problem no longer has a zero residual), but our hope is to observe errors in \(\Vert H - B \Vert \) of order approximately \(\epsilon \). In our experiments we set \(\epsilon = 10^{-5}\). Because only \(y^{(l)}\) is perturbed, the least-squares matrix and the factorizations of the normal matrix and the augmented system matrix are unchanged. Thus, in Table 9 we only report the least-squares residual \(\Vert r\Vert _2\) and the relative error \(rel\_err\) given by (4.1). With the prescribed convergence tolerances for the solvers, for each problem the normal equation and augmented system formulations return the same residuals and relative errors. We see that \(rel\_err\) is now consistently \(O(10^{-4})\) or less.

5 Concluding remarks and future directions

In this paper, we have considered the problem of approximating sparse Hessian matrices. We have proposed a novel approach that uses the secant conditions and then solves a large sparse linear LS problem. Solving this is challenging because the LS system matrix can contain dense rows (for example, when the underlying optimization problem has constraints that involve many variables) and it can be poorly conditioned. In our experiments, we found that increasing the number m of secant equations improves the conditioning of the LS problem. For many of our tests, a sufficient value of m was not much larger than the minimum value \(m_\text {min}\) that ensures the LS problem is overdetermined (typically \(m_\text {min} +5\)). However, when we generated test problems with deliberately worse conditioning, larger m were needed to retain approximately the same level of accuracy in the computed Hessian matrix.

Existing methods for solving sparse-dense LS problems can be used and if these employ sparse direct solvers, then our numerical tests found them to be robust. Normal equation and augmented system approaches were tested. Both benefit significantly from special handling of the dense rows. In this case, we found there was no consistent winner between the two approaches but we observe that the former has a faster factorization time because it avoids pivoting. However, the incorporation of pivoting by the latter potentially makes it more reliable and refinement of the computed solution using an iterative solver is often not needed, resulting in a fast solve phase and competitive overall solution time.

The main weakness of our new approach for approximating Hessians is the size of the LS system matrix, which has a row dimension of mn and a column dimension equal to the number of entries in the Hessian matrix. Thus, although we were able to solve all our CUTEst test examples with a direct solver, the LS approach can be expensive in terms of time and memory requirements for large problems, and for even larger LS problems a preconditioned iterative solver will be needed. There is currently a lack of efficient, robust preconditioners for sparse-dense LS, although recent work of Al Daas, Jolivet and Scott [2] is promising. This lack of preconditioners hinders the development of “black box” software for computing Hessian matrices using our new approach. Consequently, our future plan is to design and implement alternative strategies that again use the secant equations but seek to employ more efficient methods of solution.

Data Availability

The CUTEst test collection is available from https://github.com/ralna/CUTEst

References

Akaike, H.: On a successive transformation of probability distribution and its application to the analysis of the optimum gradient method. Ann. Inst. Stat. Math. 11(1), 1–16 (1959)

Al Daas, H., Jolivet, P., Scott, J.A.: A robust algebraic domain decomposition preconditioner for sparse normal equations. SIAM J. Sci. Comput. 44(3) (2022). https://doi.org/10.1137/21M1434891

Avron, H., Ng, E., Toledo, S.: Using perturbed QR factorizations to solve linear least-squares problems. SIAM J. Matrix Anal. Appl. 31(2), 674–693 (2009)

Benzi, M., Golub, G.H., Liesen, J.: Numerical solution of saddle point problems. Acta Numer 14, 1–137 (2005)

Björck, Å.: A general updating algorithm for constrained linear least squares problems. SIAM J. Sci. Stat. Comput. 5(2), 394–402 (1984)

Duff, I.S.: MA57-a code for the solution of sparse symmetric definite and indefinite systems. ACM Trans. Math. Soft. 30, 118–154 (2004)

Fletcher, R.: An optimal positive definite update for sparse Hessian matrices. SIAM J. Optimization. 5(1), 192–217 (1995)

Fletcher, R., Grothey, A., Leyffer, S.: Computing sparse Hessian and Jacobian approximations with optimal hereditary properties. In A.R. Conn L.T. Biegler, T.F. Coleman and F.N. Santosa, editors, Large-scale optimization with applications, Part II: Optimal Design and Control, volume 93 of IMA Volumes in Mathematics and its Applications, pages 37–52, Berlin, 1997. Springer

George, A., Heath, M.T.: Solution of sparse linear least squares problems using Givens rotations. Linear Algebra Appl. 34, 69–83 (1980)

Gould, N.I.M., Orban, D., Toint, Ph.L.: GALAHAD-a library of thread-safe Fortran 90 packages for large-scale nonlinear optimization. ACM Trans. Math. Soft. 29(4), 353–372 (2003)

Gould, N. I. M., Orban, D., Toint, Ph. L.: CUTEst: a constrained and unconstrained testing environment with safe threads for mathematical optimization. Optim. Meth. Soft. (2014)

Gould, N. I. M., Scott, J. A.: The state–of–the–art of preconditioners for sparse linear least–squares problems. ACM Trans. Math. Soft. 43(4),36:1–36:35 (2017)

Hogg, J.D., Reid, J.K., Scott, J.A.: Design of a multicore sparse Cholesky factorization using DAGs. SIAM J. Sci. Comput. 32(6), 3627–3649 (2010)

Hogg, J. D., Scott, J. A.: HSL MA97: a bit–compatible multifrontal code for sparse symmetric systems. Technical Report RAL-TR-2011-024, STFC-Rutherford Appleton Lab. (2011)

Hogg, J.D., Scott, J.A.: New parallel sparse direct solvers for multicore architectures. Algorithms. 6, 702–725 (2013)

HSL. A collection of Fortran codes for large-scale scientific computation, http://www.hsl.rl.ac.uk Accessed 2023

METIS. A family of multilevel partitioning algorithms, https://github.com/KarypisLab Accessed 2022

MUMPS. A parallel sparse direct solver. Version 5.5.0, http://mumps.enseeiht.fr/ Accessed 2022

Nocedal, J., Sartenaer, A., Zhu, C.: On the behavior of the gradient norm in the steepest descent method. Comput. Optim. Appl. 22, 5–35 (2002)

Nocedal, J., Wright, S.: Numerical optimization. Springer (2006)

Rozložník, M.: Saddle-point problems and their iterative solution. Nečas Center Series. Birkhäuser/Springer, Cham (2018)

Scott, J.A.: On using Cholesky-based factorizations and regularization for solving rank-deficient linear least-squares problems. SIAM J. Sci. Comput. 39(4), C319–C339 (2017)

Scott, J.A., Tůma, M.: Solving mixed sparse-dense linear least-squares problems by preconditioned iterative methods. SIAM J. Sci. Comput. 39(6), A2422–A2437 (2017)

Scott, J.A., Tůma, M.: A Schur complement approach to preconditioning sparse least-squares problems with some dense rows. Numerical Algorithms. 79(4), 1147–1168 (2018)

Scott, J.A., Tůma, M.: Sparse stretching for solving sparse-dense linear least-squares problems. SIAM J. Sci. Comput. 41, A1604–A1625 (2019)

Scott, J. A., Tůma, M.: Strengths and limitations of stretching for least-squares problems with some dense rows. ACM Trans. Math. Soft. 47(1),1:1–25 (2021)

Scott, J. A., Tůma, M.: A computational study of using black-box QR solvers for large-scale sparse-dense linear least squares problems. ACM Trans. Math. Soft., 48(1):5,1–24 (2022)

Sorensen, D.C.: An example concerning quasi-Newton estimates of a sparse Hessian. SIGNUM Newsletter. 16(2), 8–10 (1981)

Toint, Ph.L.: On sparse and symmetric matrix updating subject to a linear equation. Math. Comp. 31(140), 954–961 (1977)

Toint, Ph.L.: Some numerical results using a sparse matrix updating formula in unconstrained optimization. Math. Comp. 32(143), 839–851 (1978)

Wathen, A.J.: Preconditioning. Acta Numer 24, 329–376 (2015)

WSMP. Watson Sparse Matrix Package (Version 20.12) (2020). http://researcher.watson.ibm.com/researcher/view_group.php?id=1426

Acknowledgements

We are grateful to the reviewer for his/her constructive feedback.

Funding

All authors were supported by EPSRC grant number EP/X032485/1.

Author information

Contributions

All authors contributed equally to this study.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Ethical Approval

Not applicable.

Software Availability

The HSL software is available from https://www.hsl.rl.ac.uk/ and the GALAHAD software is available from https://www.galahad.rl.ac.uk/

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A: Generation of Hessian matrices using CUTEst

Here we describe how the unconstrained and constrained fixed Hessians H and \(H_L\) used in the numerical experiments in Section 4 are generated.

For each unconstrained CUTEst test example we evaluate its Hessian matrix \(H^{cutest}(x)\) at a point \(x^{pert}\) that is a random perturbation of the CUTEst starting point \(x^{start}\) and set \(H = H^{cutest}(x^{pert})\). Specifically, if \(x^{start}_i\) (\(1 \le i \le n\)) is the initial value for component i of \(x^{start}\), with lower and upper bounds \(x_i^l\) and \(x_i^u\), then

Here \(\rho \in (0,1)\) is the pseudo random number returned by the call rand(seed,.true.,rho), where rand is from the optimization package GALAHAD [10] and the default seed is used.

For the constrained examples, we evaluate the Hessian of the Lagrangian matrix \(H^{cutest}_L(x,\mu )\) at a random perturbation \(x^{pert}\) of \(x^{start}\) (as above) and randomly generated Lagrange multipliers \(\mu _q^{rand} \in (-1,1)\) (\(1 \le q \le n_c\)), with component i of \(\mu ^{rand}\) returned by \(\mathtt{rand(seed,.false.,mu(i))}\). We then set \(H_L = H^{cutest}_L(x^{pert},\mu ^{rand})\).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Fowkes, J.M., Gould, N.I.M. & Scott, J.A. Approximating sparse Hessian matrices using large-scale linear least squares. Numer Algor (2023). https://doi.org/10.1007/s11075-023-01681-z

DOI: https://doi.org/10.1007/s11075-023-01681-z