Abstract

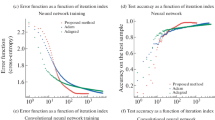

In this paper, we propose SGEM, stochastic gradient with energy and momentum, to solve a class of general non-convex stochastic optimization problems. It is based on the AEGD (adaptive gradient descent with energy) method introduced by Liu and Tian (Numerical Algebra, Control and Optimization, 2023). SGEM incorporates both energy and momentum so as to inherit the advantages of each. We show that SGEM features an unconditional energy stability property and provide a positive lower threshold for the energy variable. We further derive energy-dependent convergence rates in the general non-convex stochastic setting, as well as a regret bound in the online convex setting. Our experimental results show that SGEM converges faster than AEGD and generalizes better than, or at least as well as, SGDM in training some deep neural networks.

Data availability

The data that support the findings of this study are publicly available online at https://www.cs.toronto.edu/~kriz/cifar.html and https://www.image-net.org/.

Notes

Code is available at https://github.com/txping/SGEM

References

Allen-Zhu, Z.: Katyusha: the first direct acceleration of stochastic gradient methods. J. Mach. Learn. Res. 18(221), 1–51 (2018)

Bottou, L.: Stochastic gradient descent tricks. In: Neural Networks: Tricks of the Trade, reloaded ed., Lecture Notes in Computer Science (LNCS), vol. 7700. Springer (2012)

Bottou, L., Curtis, F.E., Nocedal, J.: Optimization methods for large-scale machine learning. SIAM Rev. 60(2), 223–311 (2018)

Chen, X., Liu, S., Sun, R., Hong, M.: On the convergence of a class of Adam-type algorithms for non-convex optimization. International conference on learning representations (2019)

Defazio, A., Bach, F., Lacoste-Julien, S.: SAGA: a fast incremental gradient method with support for non-strongly convex composite objectives. Adv. Neural. Inf. Proc. Syst. 27 (2014)

Duchi, J., Hazan, E., Singer, Y.: Adaptive subgradient methods for online learning and stochastic optimization. J. Mach. Learn. Res. 12, 2121–2159 (2011)

Goodfellow, I., Bengio, Y., Courville, A.: Deep learning. MIT Press (2016)

Hazan, E.: Introduction to online convex optimization. arXiv:1909.05207 (2019)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 770–778. (2016)

Huang, G., Liu, Z., Van Der Maaten, L., Weinberger, K.Q.: Densely connected convolutional networks. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 2261–2269. (2017)

Jin, C., Netrapalli, P., Jordan, M.I.: Accelerated gradient descent escapes saddle points faster than gradient descent. In: Proceedings of the 31st Conference on learning theory, vol. 75, pp. 1042–1085. (2018)

Johnson, R., Zhang, T.: Accelerating stochastic gradient descent using predictive variance reduction. Adv. Neural Inf. Proc. Syst. 26 (2013)

Karimi, H., Nutini, J., Schmidt, M.: Linear convergence of gradient and proximal-gradient methods under the Polyak-Łojasiewicz condition. 9851(09), 795–811 (2016)

Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. arXiv:1412.6980 (2017)

Krizhevsky, A., Hinton, G.: Learning multiple layers of features from tiny images. University of Toronto (2009)

LeCun, Y., Bengio, Y., Hinton, G.: Deep learning. Nature 521, 436–444 (2015)

Lei, L., Ju, C., Chen, J., Jordan, M.I.: Non-convex finite-sum optimization via SCSG methods. Adv. Neural Inf. Proc. Syst. 30 (2017)

Li, X., Orabona, F.: On the convergence of stochastic gradient descent with adaptive stepsizes. In: Proceedings of the 22nd International conference on artificial intelligence and statistics, proceedings of machine learning research, vol. 89, pp. 983–992. (2019)

Liu, H., Tian, X.: AEGD: adaptive gradient descent with energy. Numerical Algebra, Control and Optimization. https://doi.org/10.3934/naco.2023015 (2023)

Liu, H., Tian, X.: Dynamic behavior for a gradient algorithm with energy and momentum. arXiv:2203.12199 (2022)

Liu, L., Jiang, H., He, P., Chen, W., Liu, X., Gao, J., Han, J.: On the variance of the adaptive learning rate and beyond. International conference on learning representations (2020)

Liu, Y., Gao, Y., Yin, W.: An improved analysis of stochastic gradient descent with momentum. NeurIPS (2020)

Luo, L., Xiong, Y., Liu, Y.: Adaptive gradient methods with dynamic bound of learning rate. International conference on learning representations (2019)

Osher, S., Wang, B., Yin, P., Luo, X., Barekat, F., Pham, M., Lin, A.: Laplacian smoothing gradient descent. arXiv:1806.06317 (2019)

Polyak, B.T.: Some methods of speeding up the convergence of iterative methods. Ž. Vyčisl. Mat i Mat. Fiz. 4, 791–803 (1964)

Qian, N.: On the momentum term in gradient descent learning algorithms. Neural Networks 12(1), 145–151 (1999)

Reddi, S., Kale, S., Kumar, S.: On the convergence of Adam and beyond. International conference on learning representations (2018)

Robbins, H., Monro, S.: A stochastic approximation method. Ann. Math. Stat. 22, 400–407 (1951)

Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., Huang, Z., Karpathy, A., Khosla, A., Bernstein, M., Berg, A.C., Fei-Fei, L.: ImageNet large scale visual recognition challenge. International Journal of Computer Vision (IJCV) 115(3), 211–252 (2015)

Shapiro, A., Wardi, Y.: Convergence analysis of gradient descent stochastic algorithms. J. Optim. Theory Appl. 91(2), 439–454 (1996)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. arXiv:1409.1556 (2015)

Sutskever, I., Martens, J., Dahl, G., Hinton, G.: On the importance of initialization and momentum in deep learning. In: Proceedings of the 30th International conference on machine learning, vol. 28, pp. 1139–1147 (2013)

Tieleman, T., Hinton, G.: RMSprop: divide the gradient by a running average of its recent magnitude. COURSERA: Neural Netw. Mach. Learn. 4(2), 26–31 (2012)

Wilson, A.C., Roelofs, R., Stern, M., Srebro, N., Recht, B.: The marginal value of adaptive gradient methods in machine learning. arXiv:1705.08292 (2018)

Yan, Y., Yang, T., Li, Z., Lin, Q., Yang, Y.: A unified analysis of stochastic momentum methods for deep learning. In: Proceedings of the 27th International joint conference on artificial intelligence, pp. 2955–2961 (2018)

Yang, X.: Linear, first and second-order, unconditionally energy stable numerical schemes for the phase field model of homopolymer blends. J. Comput. Phys. 327, 294–316 (2016)

Yu, H., Jin, R., Yang, S.: On the linear speedup analysis of communication efficient momentum SGD for distributed non-convex optimization. In: Proceedings of the 36th International conference on machine learning vol. 97, pp. 7184–7193 (2019)

Zaheer, M., Reddi, S., Sachan, D., Kale, S., Kumar, S.: Adaptive methods for nonconvex optimization. Advances in Neural Information Processing Systems (S. Bengio, H. Wallach, H. Larochelle, K. Grauman, N. Cesa-Bianchi, and R. Garnett, eds.), vol. 31, Curran Associates, Inc., (2018)

Zhao, J., Wang, Q., Yang, X.: Numerical approximations for a phase field dendritic crystal growth model based on the invariant energy quadratization approach. Int. J. Numer. Methods Eng. 110, 279–300 (2017)

Zhuang, J., Tang, T., Ding, Y., Tatikonda, S.C., Dvornek, N., Papademetris, X., Duncan, J.: AdaBelief Optimizer: adapting stepsizes by the belief in observed gradients. Adv. Neural Inf. Process. Syst. 33, 18795–18806 (2020)

Zinkevich, M.: Online convex programming and generalized infinitesimal gradient ascent. ICML, pp. 928–935 (2003)

Zou, F., Shen, L., Jie, Z., Zhang, W., Liu, W.: A sufficient condition for convergences of Adam and RMSProp. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 11119–11127 (2019)

Funding

This work was supported by the National Science Foundation under Grant DMS1812666.

Author information

Contributions

The authors have equal contributions.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Appendices

Appendix 1. Proof of Theorem 2

For the proofs of Theorems 2 and 3, we introduce the notation

$$\begin{aligned} {\tilde{F}}_t:=\sqrt{f(\theta _t;\xi _t)+c}. \end{aligned}$$

The initial data for \(r_i\) is taken as \(r_{1, i}={\tilde{F}}_1\). We also denote the update rule presented in Algorithm 1 as

where \(r_{t+1}\) is viewed as an \(n\times n\) diagonal matrix with diagonal entries \(r_{t+1,1},\ldots ,r_{t+1,i},\ldots ,r_{t+1,n}\).
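To make the notation concrete, here is a minimal Python sketch of one element-wise SGEM-style step. The formulas inside `sgem_step` are an assumption reconstructed from relations quoted in this appendix (\(m_t=\beta m_{t-1}+(1-\beta )g_t\), \(m_t=2(1-\beta ^t)\tilde{F}_tv_t\), \(r_{t+1,i}\le r_{t,i}\)). Algorithm 1 itself is not reproduced here, so the energy update \(r_{t+1,i}=r_{t,i}/(1+2\eta v_{t,i}^2)\), the parameter step \(\theta _{t+1}=\theta _t-2\eta r_{t+1}v_t\), the toy quadratic objective, and the names `sgem_step` and `run_toy` are all hypothetical.

```python
import math


def sgem_step(theta, m, r, grad, loss, t, eta=0.1, beta=0.9, c=1.0):
    """One element-wise SGEM-style step (assumed form, not the authors' code)."""
    f_tilde = math.sqrt(loss + c)  # tilde F_t = sqrt(f(theta_t; xi_t) + c)
    # Momentum: m_t = beta * m_{t-1} + (1 - beta) * g_t
    m = [beta * mi + (1 - beta) * gi for mi, gi in zip(m, grad)]
    # v_t chosen so that m_t = 2 (1 - beta^t) tilde F_t v_t
    v = [mi / (2 * (1 - beta ** t) * f_tilde) for mi in m]
    # Assumed energy update: r_{t+1,i} = r_{t,i} / (1 + 2 eta v_{t,i}^2)
    r = [ri / (1 + 2 * eta * vi * vi) for ri, vi in zip(r, v)]
    # Assumed parameter update: theta_{t+1} = theta_t - 2 eta r_{t+1} v_t
    theta = [th - 2 * eta * ri * vi for th, ri, vi in zip(theta, r, v)]
    return theta, m, r, v


def run_toy(T=50, eta=0.1, c=1.0):
    """Run the sketch on the toy objective f(theta) = 0.5 * ||theta||^2."""
    theta = [1.0, -2.0]
    m = [0.0, 0.0]
    r1 = math.sqrt(0.5 * sum(x * x for x in theta) + c)  # r_{1,i} = tilde F_1
    r = [r1, r1]
    history = [list(r)]
    energy_sum = 0.0  # accumulates sum_t v_t^T r_{t+1} v_t
    for t in range(1, T + 1):
        loss = 0.5 * sum(x * x for x in theta)
        grad = list(theta)  # exact gradient of the toy objective
        theta, m, r, v = sgem_step(theta, m, r, grad, loss, t, eta=eta, c=c)
        energy_sum += sum(ri * vi * vi for ri, vi in zip(r, v))
        history.append(list(r))
    return theta, history, energy_sum, r1


theta, history, energy_sum, r1 = run_toy()
print("final energy:", history[-1])
print("sum_t v^T r v:", energy_sum, "bound n*r1/(2*eta):", 2 * r1 / (2 * 0.1))
```

Under the assumed energy update, each \(r_{t,i}\) is positive and non-increasing whatever the step size, which is the behaviour the lemmas below rely on.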

Lemma 1

Under the assumptions in Theorem 2, we have for all \(t\in [T]\),

(i) \(\Vert \nabla f(\theta _t)\Vert _\infty \le G_\infty \).

(ii) \(\mathbb {E}[({\tilde{F}}_t)^2]= F^2(\theta _t)=f(\theta _t)+c\).

(iii) \(\mathbb {E}[{\tilde{F}}_t]\le F(\theta _t)\). In particular, \(\mathbb {E}[r_{1,i}]= \mathbb {E}[{\tilde{F}}_1]\le F(\theta _1)\) for all \(i\in [n]\).

(iv) \(\sigma ^2_g=\mathbb {E}[\Vert g_t-\nabla f(\theta _t)\Vert ^2]\le G^2_\infty \) and \(\sigma ^2_f=\mathbb {E}[|f(\theta _t;\xi _t)- f(\theta _t)|^2]\le B^2.\)

(v) \(\mathbb {E}[|F(\theta _t)-{\tilde{F}}_t|]\le \frac{1}{2\sqrt{a}}\sigma _f\).

(vi) \(\mathbb {E}[\Vert \nabla F(\theta _t)-\frac{g_t}{2\tilde{F}_t}\Vert ^2]\le \frac{G^2_\infty }{8a^3}\sigma ^2_f+\frac{1}{2a}\sigma ^2_g.\)

Proof

(i) By the assumption \(\Vert g_t\Vert _\infty \le G_\infty \), we have

$$\begin{aligned} \Vert \nabla f(\theta _t)\Vert _\infty =\Vert \mathbb {E}[g_t]\Vert _\infty \le \mathbb {E}[\Vert g_t\Vert _\infty ]\le G_\infty . \end{aligned}$$

(ii) This follows from the unbiased sampling

$$\begin{aligned} f(\theta _t)=\mathbb {E}_{\xi _t}[ f(\theta _t; \xi _t)]. \end{aligned}$$

(iii) By Jensen's inequality, we have

$$\begin{aligned} \mathbb {E}[\tilde{F}_t] \le \sqrt{\mathbb {E}[\tilde{F}_t^2]}=\sqrt{F(\theta _t)^2}=F(\theta _t). \end{aligned}$$

(iv) By the assumptions \(\Vert g_t\Vert _\infty \le G_\infty \) and \(f(\theta _t;\xi _t)+c<B\), we have

$$\begin{aligned} \sigma ^2_g=\mathbb {E}[\Vert g_t-\nabla f(\theta _t)\Vert ^2] = \mathbb {E}[\Vert g_t\Vert ^2] - \Vert \nabla f(\theta _t)\Vert ^2\le G^2_\infty , \end{aligned}$$

$$\begin{aligned} \sigma ^2_f=\mathbb {E}[\Vert f(\theta _t;\xi _t)-f(\theta _t)\Vert ^2] = \mathbb {E}[\Vert f(\theta _t;\xi _t)\Vert ^2] - \Vert f(\theta _t)\Vert ^2\le B^2. \end{aligned}$$

(v) By the assumption \(0<a\le f(\theta _t;\xi _t)+c=\tilde{F}_t^2\), we have

$$\begin{aligned} \quad \mathbb {E}[|F(\theta _t)-\tilde{F}_t|] \le \mathbb {E}\Bigg [\bigg |\frac{f(\theta _t)-f(\theta _t;\xi _t)}{F(\theta _t)+\tilde{F}_t}\bigg |\Bigg ] \le \frac{1}{2\sqrt{a}}\mathbb {E}[|f(\theta _t)-f(\theta _t;\xi _t)|] \le \frac{1}{2\sqrt{a}}\sigma _f. \end{aligned}$$

(vi) By the definition of \(F(\theta )\), we have

$$\begin{aligned} \Vert \nabla F(\theta _t)-\frac{g_t}{2\tilde{F}_t}\Vert ^2= & {} \bigg \Vert \frac{\nabla f(\theta _t)}{2F(\theta _t)}-\frac{g_t}{2\tilde{F}_t}\bigg \Vert ^2\\= & {} \frac{1}{4}\bigg \Vert \frac{\nabla f(\theta _t)(\tilde{F}_t-F(\theta _t)) }{F(\theta _t)\tilde{F}_t}+\frac{\nabla f(\theta _t)-g_t}{\tilde{F}_t}\bigg \Vert ^2\\\le & {} \frac{1}{2} \bigg \Vert \frac{\nabla f(\theta _t)(\tilde{F}_t-F(\theta _t)) }{F(\theta _t)\tilde{F}_t}\bigg \Vert ^2 + \frac{1}{2}\bigg \Vert \frac{\nabla f(\theta _t)-g_t}{\tilde{F}_t}\bigg \Vert ^2\\\le & {} \frac{G^2_\infty }{2a^{2}}|\tilde{F}_t-F(\theta _t)|^2+\frac{1}{2a}\Vert \nabla f(\theta _t)-g_t\Vert ^2, \end{aligned}$$

where both the gradient bound and the assumption \(0<a\le f(\theta _t;\xi _t)+c=\tilde{F}^2_t\) are used. Taking the expectation gives

$$\begin{aligned} \mathbb {E}[\Vert \nabla F(\theta _t)-\frac{g_t}{2\tilde{F}_t}\Vert ^2]\le \frac{G^2_\infty }{2a^{2}}\mathbb {E}[|\tilde{F}_t-F(\theta _t)|^2]+\frac{1}{2a}\mathbb {E}[\Vert \nabla f(\theta _t)-g_t\Vert ^2]. \end{aligned}$$

Similar to the proof of (v), we have

$$\begin{aligned} \mathbb {E}[|\tilde{F}_t-F(\theta _t)|^2]\le \frac{1}{4a}\sigma ^2_f. \end{aligned}$$

This together with the variance assumption for \(g_t\) gives

$$\begin{aligned} \mathbb {E}[\Vert \nabla F(\theta _t)-\frac{g_t}{2\tilde{F}_t}\Vert ^2]\le \frac{G^2_\infty }{8a^3}\sigma ^2_f+\frac{1}{2a}\sigma ^2_g. \end{aligned}$$
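Items (iii) and (v) of Lemma 1 can be checked numerically on a small example. The sketch below is only an illustration: the toy loss \(f(\theta ;\xi )=(\theta -\xi )^2\), the uniform distribution over a finite sample list, the choice \(c=1\), and the function name `lemma1_quantities` are all assumptions, not taken from the paper. Expectations are computed exactly as averages over the finite sample set.

```python
import math


def lemma1_quantities(theta, samples, c=1.0):
    """Exact expectations for xi uniform on `samples` (hypothetical toy setup)."""
    losses = [(theta - xi) ** 2 for xi in samples]      # f(theta; xi)
    n = len(losses)
    f_mean = sum(losses) / n                            # f(theta) = E[f(theta; xi)]
    big_f = math.sqrt(f_mean + c)                       # F(theta)
    f_tilde = [math.sqrt(loss + c) for loss in losses]  # tilde F for each sample
    a = min(loss + c for loss in losses)                # 0 < a <= f(theta; xi) + c
    sigma_f = math.sqrt(sum((loss - f_mean) ** 2 for loss in losses) / n)
    return {
        "F": big_f,
        "mean_F_tilde": sum(f_tilde) / n,                          # E[tilde F]
        "mean_abs_gap": sum(abs(big_f - ft) for ft in f_tilde) / n,  # E|F - tilde F|
        "bound_v": sigma_f / (2 * math.sqrt(a)),                   # sigma_f / (2 sqrt(a))
    }


q = lemma1_quantities(theta=0.5, samples=[-1.0, 0.0, 2.0, 3.0])
print("(iii) E[tilde F] <= F:", q["mean_F_tilde"], "<=", q["F"])
print("(v)   E|F - tilde F| <= sigma_f/(2 sqrt(a)):", q["mean_abs_gap"], "<=", q["bound_v"])
```

Here `a` is taken as the smallest value of \(f(\theta ;\xi )+c\) over the sample set, which is the tightest constant compatible with the assumption in (v).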

Lemma 2

For any \(T\ge 1\), we have

(i) \(\mathbb {E}\Big [\sum _{t=1}^{T} v_t^\top r_{t+1} v_t\Big ] \le \frac{n F(\theta _1)}{2\eta }\).

(ii) \(\mathbb {E}\Big [\sum _{t=1}^{T} m_{t-1}^\top r_{t+1} m_{t-1}\Big ]\le \mathbb {E}\Big [\sum _{t=1}^{T} m_t^\top r_{t+1} m_t\Big ] \le \frac{2Bn F(\theta _1)}{\eta }\).

(iii) \(\mathbb {E}\Big [\sum _{t=1}^{T}\Vert r_{t+1}m_t\Vert ^2\Big ] \le \frac{2Bn F^2(\theta _1)}{\eta }\).

(iv) \(\mathbb {E}\Big [\sum _{t=1}^{T} g_t^\top r_{t+1} g_t\Big ] \le \frac{8Bn F(\theta _1)}{(1-\beta )^2\eta }\).

(v) \(\mathbb {E}\Big [\sum _{t=1}^{T}\Vert r_{t+1}g_t\Vert ^2\Big ] \le \frac{8Bn F^2(\theta _1)}{(1-\beta )^2\eta }\).

Proof

From Algorithm 1 line 5, we have

Summing over t from 1 to T gives

from which we get

Taking expectation and using (iii) in Lemma 1 gives (i). Recalling that \(m_t=2(1-\beta ^t)\tilde{F}_tv_t\) and \(\tilde{F}_t\le \sqrt{B}\), we further get

Using \(r_{t+1,i}\le r_{t,i}\) and \(m_{0,i}=0\), we also have

Connecting the above two inequalities and taking expectation gives (ii). Using \(r_{t+1,i}\le r_{1,i}\), the above inequality further implies

Taking expectation and using (ii) in Lemma 1 gives (iii). By \(m_t=\beta m_{t-1}+(1-\beta )g_t\), we have

Here, the third inequality is by \((a+b)^2\le 2a^2+2b^2\); (A.3) and \(0<\beta <1\) are used in the fourth inequality. Taking expectation and using (iii) in Lemma 1 gives (iv). Similarly to the derivation of (ii), we have

Taking expectation and using (ii) in Lemma 1 gives (v).\(\square \)
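As a hedged illustration of how (i) and (ii) can arise, suppose line 5 of Algorithm 1 is the element-wise energy update \(r_{t+1,i}=r_{t,i}/(1+2\eta v_{t,i}^2)\). This form is an assumption, since the algorithm is not reproduced in this appendix, but it is consistent with the monotonicity \(r_{t+1,i}\le r_{t,i}\) and with the constants in the stated bounds. Under that assumption, and using \(r_{1,i}=\tilde{F}_1\) and \(r_{T+1,i}>0\),

$$\begin{aligned} r_{t,i}-r_{t+1,i}&=2\eta r_{t+1,i}v_{t,i}^2,\\ 2\eta \sum _{t=1}^{T}r_{t+1,i}v_{t,i}^2&=r_{1,i}-r_{T+1,i}\le r_{1,i}=\tilde{F}_1,\\ \sum _{t=1}^{T}v_t^\top r_{t+1}v_t&=\sum _{i=1}^{n}\sum _{t=1}^{T}r_{t+1,i}v_{t,i}^2\le \frac{n\tilde{F}_1}{2\eta }. \end{aligned}$$

Taking expectation with \(\mathbb {E}[\tilde{F}_1]\le F(\theta _1)\) recovers the bound in (i). For (ii), the relation \(m_t=2(1-\beta ^t)\tilde{F}_tv_t\) together with \(\tilde{F}_t^2\le B\) and \(0<1-\beta ^t<1\) gives

$$\begin{aligned} \sum _{t=1}^{T}m_t^\top r_{t+1}m_t=\sum _{t=1}^{T}4(1-\beta ^t)^2\tilde{F}_t^2\, v_t^\top r_{t+1}v_t\le 4B\sum _{t=1}^{T}v_t^\top r_{t+1}v_t\le \frac{2Bn\tilde{F}_1}{\eta }, \end{aligned}$$

which matches the right-hand side of (ii) after taking expectation.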

First, note that by (iv) in Lemma 1, \(\max \{\sigma _g,\sigma _f\}\le \max \{G_\infty ,B\}\).

Recall that \(F(\theta )=\sqrt{f(\theta )+c}\); then for any \(x, y\in \{\theta _t\}_{t=0}^T\) we have

One may check that

These together with the L-smoothness of f lead to

where

This confirms the \(L_F\)-smoothness of F, which yields

Summing the above over t from 1 to T and taking the expectation gives

where

Below, we bound \(S_1, S_2, S_3, S_4\) separately. To bound \(S_1\), we first note that

from which we get

For \(S_2\), we have

where the fourth inequality is by the Cauchy-Schwarz inequality, and the last inequality is by Lemma 1 (i) (ii).

For \(S_3\), by the Cauchy-Schwarz inequality, we have

where the last inequality is by (vi) in Lemma 1 and (4.2) in Theorem 1.

For \(S_4\), also by (4.2) in Theorem 1, we have

With the above bounds on \(S_1, S_2, S_3, S_4\), (A.4) can be rearranged as

where (iii) in Lemma 1 was used. Hence,

where \(\sigma =\max \{\sigma _f,\sigma _g\}\) and

In the case \(\sigma =0\), we obtain the stated estimate in Theorem 2.
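The \(L_F\)-smoothness step used in the proof above can be sketched as follows. This is a reconstruction under stated assumptions, not the paper's exact computation: we assume \(F\ge \sqrt{a}\) and \(\Vert \nabla f\Vert \le G\) hold at the points involved and along the segment between them, where \(G\) is a generic gradient bound (a hypothetical symbol, for instance a multiple of \(G_\infty \)). The resulting constant therefore need not coincide with the \(L_F\) of the paper. Since \(\nabla F=\nabla f/(2F)\),

$$\begin{aligned} \nabla F(x)-\nabla F(y)&=\frac{\nabla f(x)-\nabla f(y)}{2F(x)}+\frac{\nabla f(y)\,(F(y)-F(x))}{2F(x)F(y)},\\ |F(y)-F(x)|&\le \sup \Vert \nabla F\Vert \,\Vert x-y\Vert \le \frac{G}{2\sqrt{a}}\Vert x-y\Vert ,\\ \Vert \nabla F(x)-\nabla F(y)\Vert &\le \frac{L}{2\sqrt{a}}\Vert x-y\Vert +\frac{G}{2a}\cdot \frac{G}{2\sqrt{a}}\Vert x-y\Vert =\frac{1}{2\sqrt{a}}\Big (L+\frac{G^2}{2a}\Big )\Vert x-y\Vert . \end{aligned}$$

A Lipschitz gradient with constant \(L_F\) then yields the usual descent inequality

$$\begin{aligned} F(\theta _{t+1})\le F(\theta _t)+\nabla F(\theta _t)^\top (\theta _{t+1}-\theta _t)+\frac{L_F}{2}\Vert \theta _{t+1}-\theta _t\Vert ^2. \end{aligned}$$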

Appendix 2. Proof of Theorem 3

The upper bound on \(\sigma _g\) is given by (iv) in Lemma 1. Since f is L-smooth, we have

Denoting \(\eta _t=\eta /\tilde{F}_t\), the second term in the RHS of (B.1) can be expressed as

We further bound the second term and third term in the RHS of (B.2), respectively. For the second term, we note that \(|\frac{1-\beta }{1-\beta ^t}|\le 1\) and

The third inequality holds because for a positive diagonal matrix A, \(x^\top Ay\le \Vert x\Vert _\infty \Vert A\Vert _{1,1}\Vert y\Vert _\infty \), where \(\Vert A\Vert _{1,1}=\sum _{i}a_{ii}\). The last inequality follows from the result \(r_{t+1,i}\le r_{t,i}\) for \(i\in [n]\), the assumption \(\Vert g_t\Vert _\infty \le G_\infty \), \(\tilde{F}_t\ge \sqrt{a}\), and (i) in Lemma 1.
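For completeness, the diagonal-matrix inequality quoted above follows in one line from bounding each coordinate by its largest absolute value, using \(a_{ii}\ge 0\):

$$\begin{aligned} x^\top Ay=\sum _{i}a_{ii}x_iy_i\le \sum _{i}a_{ii}|x_i||y_i|\le \Vert x\Vert _\infty \Vert y\Vert _\infty \sum _{i}a_{ii}=\Vert x\Vert _\infty \Vert A\Vert _{1,1}\Vert y\Vert _\infty . \end{aligned}$$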

For the third term in the RHS of (B.2), we note that

in which

where the last inequality is because for a positive diagonal matrix A, \(x^\top Ay\le \frac{1}{2}x^\top Ax+\frac{1}{2}y^\top Ay\). Substituting (B.3) and (B.4) into (B.2), we get

With (B.5), we take a conditional expectation of (B.1) with respect to \((\theta )\) and rearrange to get

where the assumption \(\mathbb {E}_{\xi _t}[g_t]=\nabla f(\theta _t)\) is used in the first equality. Since \(\xi _1,\ldots ,\xi _T\) are independent random variables, we set \(\mathbb {E}=\mathbb {E}_{\xi _1}\mathbb {E}_{\xi _2}\cdots \mathbb {E}_{\xi _T}\) and sum (B.6) over t from 1 to T to get

Below, we bound each term in (B.7) separately. By the Cauchy-Schwarz inequality, we get

where Lemma 1 (ii) and the bounded variance assumption were used. We replace \(m_{t-1}\) in () by \(g_t\) and use Lemma 1 (v) to get

By (4.2), the last term in (B.7) is bounded above by

Substituting Lemma 1 (i) (iii), (B.10), (B.9), () into (B.7), we get

Note that the left hand side is bounded from below by

where we used \(|\frac{1-\beta }{1-\beta ^t}|\ge 1-\beta \) and \(\eta _t\ge \eta /\sqrt{B}\). Thus, we have

where

By the Hölder inequality, we have for any \(\alpha \in (0, 1)\),

Taking \(X=\Delta :=\sum _{t=1}^{T}\Vert \nabla f(\theta _t)\Vert ^2\) and \(Y=\min _i r_{T,i}\), we obtain

Using (B.12), and lower bounding \(\mathbb {E}[\Delta ^\alpha ]\) by \(T^{\alpha }\mathbb {E}[\min _{1\le t\le T}\Vert \nabla f(\theta _t)\Vert ^{2\alpha }]\), we obtain

Taking \(\alpha =1-\epsilon \) then yields the stated bound.
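The Hölder step in this proof is most likely of the following standard form, given here as a sketch for nonnegative \(X\) and positive \(Y\); the exact statement used in the paper is not reproduced in this appendix. Writing \(X^\alpha =(XY)^\alpha Y^{-\alpha }\) and applying the Hölder inequality with conjugate exponents \(p=1/\alpha \) and \(q=1/(1-\alpha )\) gives

$$\begin{aligned} \mathbb {E}[X^\alpha ]=\mathbb {E}[(XY)^\alpha Y^{-\alpha }]\le \big (\mathbb {E}[XY]\big )^{\alpha }\Big (\mathbb {E}\big [Y^{-\frac{\alpha }{1-\alpha }}\big ]\Big )^{1-\alpha }. \end{aligned}$$

With \(X=\Delta \) and \(Y=\min _i r_{T,i}\), the first factor is controlled by an energy-weighted gradient sum and the second depends only on the energy variable, which is how the energy-dependent rate arises.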

Appendix 3. Proof of Theorem 4

Using the same argument as for (iv) in Lemma 2, we have

With this estimate and the convexity of \(f_t\), the regret can be bounded by

where the fourth inequality is by the Cauchy-Schwarz inequality, and the assumption \(\Vert x-y\Vert _\infty \le D_\infty \) for all \(x,y\in \mathcal {F}\) is used in the last inequality.
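To illustrate how convexity and the diameter bound enter, the first steps of a regret estimate can be sketched as below. This is not the paper's full chain, whose intermediate steps involving \(r_{t+1}\) are not reproduced here. The sketch assumes the regret is \(R(T)=\sum _{t=1}^{T}f_t(\theta _t)-\min _{\theta \in \mathcal {F}}\sum _{t=1}^{T}f_t(\theta )\), that \(\theta ^*\) attains the minimum, and that \(g_t=\nabla f_t(\theta _t)\):

$$\begin{aligned} R(T)=\sum _{t=1}^{T}\big (f_t(\theta _t)-f_t(\theta ^*)\big )\le \sum _{t=1}^{T}g_t^\top (\theta _t-\theta ^*)\le \sum _{t=1}^{T}\Vert g_t\Vert _1\Vert \theta _t-\theta ^*\Vert _\infty \le D_\infty \sum _{t=1}^{T}\Vert g_t\Vert _1. \end{aligned}$$

The first inequality is the convexity of \(f_t\), and the last uses \(\Vert x-y\Vert _\infty \le D_\infty \) for all \(x,y\in \mathcal {F}\).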

About this article

Cite this article

Liu, H., Tian, X. SGEM: stochastic gradient with energy and momentum. Numer Algor 95, 1583–1610 (2024). https://doi.org/10.1007/s11075-023-01621-x
