Abstract

Iterative substructuring Domain Decomposition (DD) methods have been extensively studied, and they are usually associated with nonoverlapping decompositions. It is less known that classical overlapping DD methods can also be formulated in substructured form, i.e., as iterative methods acting on variables defined exclusively on the interfaces of the overlapping domain decomposition. We call such formulations substructured domain decomposition methods. We introduce here a substructured version of Restricted Additive Schwarz (RAS) which we call SRAS. We show that RAS and SRAS are equivalent when used as iterative solvers, as they produce the same iterates, while they are substantially different when used as preconditioners for GMRES. We link the volume and substructured Krylov spaces and show that the iterates are different by deriving the least squares problems solved at each GMRES iteration. When used as iterative solvers, SRAS presents computational advantages over RAS, as it avoids computations with matrices and vectors at the volume level. When used as preconditioners, SRAS has the further advantage of allowing GMRES to store smaller vectors and perform orthogonalization in a lower dimensional space. We then consider nonlinear problems, and we introduce SRASPEN (Substructured Restricted Additive Schwarz Preconditioned Exact Newton), where SRAS is used as a preconditioner for Newton’s method. In contrast to the linear case, we prove that Newton’s method applied to the preconditioned volume and substructured formulation produces the same iterates in the nonlinear case. Next, we introduce two-level versions of nonlinear SRAS and SRASPEN. Finally, we validate our theoretical results with numerical experiments.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

We consider a boundary value problem posed in a Lipschitz domain \({\varOmega }\subset \mathbb {R}^{d}\), \(d\in \left \{1,2,3\right \}\),

We assume that (1) admits a unique solution in some Hilbert space \(\mathcal {V}\). If the boundary value problem is linear, a discretization of (1) with Nv degrees of freedom leads to a linear system

where \(A\in \mathbb {R}^{N_{v}\times N_{v}}\), \(\mathbf {u}\in V(\cong \mathbb {R}^{N_{v}})\), and f ∈ V. If the boundary value problem is nonlinear, we obtain a nonlinear system

where \(F:V\rightarrow V\) is a nonlinear function and u ∈ V. Several numerical methods have been proposed in the last decades for the efficient solution of such boundary value problems, e.g., multigrid methods [21, 37] and domain decomposition (DD) methods [34, 36]. We will focus on DD methods, which are usually divided into two distinct classes, that is overlapping methods, which include the AS (Additive Schwarz) and RAS (Restricted Additive Schwarz) methods [6, 36], and nonoverlapping methods such as FETI (Finite Element Tearing and Interconnect) and Neumann-Neumann methods [14, 26, 32]. Concerning nonlinear problems, DD methods can be applied either as nonlinear iterative methods, that is by just solving nonlinear problems in each subdomain and then exchanging information between subdomains as in the linear case [2, 29, 30], or as preconditioners to solve the Jacobian linear system inside Newton’s iteration. In the latter case, the term Newton-Krylov-DD is employed, where DD is replaced by the domain decomposition preconditioner used [23].

An alternative is to use a DD method as a preconditioner for Newton’s method. Preconditioning a nonlinear system F(u) = 0 means that we aim to replace the original nonlinear system with a new nonlinear system, still having the same solution, but for which the nonlinearities are more balanced and Newton’s method converges faster [3, 17]. Seminal contributions in nonlinear preconditioning have been made by Cai and Keyes in [3, 4], where they introduced ASPIN (Additive Schwarz Preconditioned Inexact Newton). The development of good preconditioners is not an easy task even in the linear case. One useful strategy is to study efficient iterative methods, and then to use the associated preconditioners in combination with Krylov methods [17]. The same logical path paved the way to the development of RASPEN (Restricted Additive Schwarz Preconditioned Exact Newton) in [13], which in short applies Newton’s method to the fixed point equation defined by the nonlinear RAS iteration at convergence. Extensions of this idea to Dirichlet-Neumann are presented in [7]. In [22], the authors describe and analyze the scalability of the two-level variants of the aforementioned methods (ASPIN and RASPEN). In particular, they discuss several approaches of adding the coarse space correction, and a numerical comparison of all these methods is reported for different types of coarse spaces. All these methods are left preconditioners. Right preconditioners are usually based on the concept of nonlinear elimination, presented in [27], and they are very efficient as shown in [5, 19, 20, 31]. Right nonlinear preconditioners based on FETI-DP (Finite Element Tearing and Interconnecting—Dual-Primal) and BDDC (Balancing Domain Decomposition by Constraint) have been shown to be very effective (see, e.g., [24, 25]). While left preconditioners aim to transform the original nonlinear function into a better behaved one, right preconditioners aim to provide a better initial guess for the next outer Newton iteration.

Nonoverlapping methods are sometimes called substructuring methods (a term borrowed from Przemieniecki’s work [33]), as in these methods the unknowns in the interior of the nonoverlapping domains are eliminated through static condensation so that one needs to solve a smaller system involving only the degrees of freedom on the interfaces between the nonoverlapping subdomains [36]. However, it is also possible to write an overlapping method, such as Lions’ Parallel Schwarz Method (PSM) [28], which is equivalent to RAS [16], in substructured form, even though this approach is much less common in the literature. For a two subdomain decomposition, a substructuring procedure applied to the PSM is carried out in [15, Section 5], [18, Section 3.4] and [10]. In [10, 11], the authors introduced a substructured formulation of the PSM at the continuous level for decompositions with many subdomains and crosspoints, and further studied ad hoc spectral and geometric two-level methods. In this particular framework, the substructured unknowns are now the degrees of freedom located on the portions of a subdomain boundary that lie in the interior of another subdomain; that is where the overlapping DD method takes the information to compute the new iterate. We emphasize that, at a given iteration n, any iterative DD method (overlapping or nonoverlapping) needs only a few values of un to compute the new approximation un+ 1. The major part of un is useless.

In this manuscript, we define a substructured version of RAS, that is we define an iterative scheme based on RAS which acts only over unknowns that are located on the portions of a subdomain boundary that lie in the interior of another subdomain. We study in detail the effects that such a substructuring procedure has on RAS when the latter is applied either as an iterative solver or as a preconditioner to solve linear and nonlinear boundary value problems. Does the substructured iterative version converge faster than the volume one? Is the convergence of GMRES affected by substructuring? What about nonlinear problems when instead of preconditioned GMRES we rely on preconditioned Newton? We prove that substructuring does not influence the convergence of the iterative methods both in the linear and nonlinear case, by showing that at each iteration, the restriction on the interfaces of the volume iterates coincides with the iterates of the substructured iterative method. Nevertheless, we discuss in Section 3.1 and corroborate by numerical experiments that a substructured formulation presents computational advantages. The equivalence of iterates does not hold anymore when considering preconditioned GMRES. Specifically, our study shows that GMRES should be applied to the substructured system, since it is computationally less expensive, requiring to perform orthogonalization on a much smaller space, and thus needs also less memory. In contrast to the linear case, we prove that, surprisingly, the nonlinear preconditioners RASPEN and SRASPEN (Substructured RASPEN) for Newton produce the same iterates once these are restricted to the interfaces. However, SRASPEN has again more favorable properties when assembling and solving the Jacobian matrices at each Newton iteration. Finally, we also extend the work in [10, 11] defining substructured two-level methods to the nonlinear case, where both smoother and coarse correction are defined directly on the interfaces between subdomains.

This paper is organized as follows: we introduce in Section 2 the mathematical setting with the domain, subdomains and operators defined on them. In Section 3, devoted to the linear case, we study the effects of substructuring on RAS and on GMRES applied to the preconditioned system. In Section 4, we extend our analysis to nonlinear boundary value problems. Section 5 contains two-level substructured methods for the nonlinear problems. Finally, Section 6 presents numerical tests to corroborate the framework proposed.

2 Notation

Let us decompose the domain Ω into N nonoverlapping subdomains Ωj, that is \({\varOmega } = \bigcup _{j \in \mathcal {J}} {\varOmega }_{j}\) with \(\mathcal {J}:=\{1,2,\dots ,N\}\).

The nonoverlapping subdomains Ωj are then enlarged to obtain subdomains \({\varOmega }^{\prime }_{j}\) which form an overlapping decomposition of Ω. For each subdomain \({\varOmega }^{\prime }_{j}\), we define Vj as the restriction of V to \({\varOmega }^{\prime }_{j}\), that is Vj collects the degrees of freedom on \({\varOmega }^{\prime }_{j}\). Further, we introduce the classical restriction and prolongation operators \(R_{j}:V\rightarrow V_{j}\), \(P_{j}:V_{j}\rightarrow V\), and the restricted prolongation operators \(\widetilde {P}_{j}:V_{j}\rightarrow V\). We assume that these operators satisfy

where \(I_{V_{j}}\) is the identity on Vj and I is the identity on V.

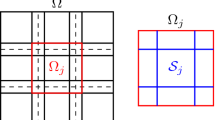

We now define the substructured skeleton. In the following, we use the notation introduced in [9]. For any \(j \in \mathcal {J}\), we define the set of neighboring indices \(N_{j} :=\{ \ell \in \mathcal {J} : {\varOmega }^{\prime }_{j} \cap \partial {\varOmega }^{\prime }_{\ell } \neq \emptyset \}\). Given a \(j \in \mathcal {J}\), we introduce the substructure of \({\varOmega }^{\prime }_{j}\) defined as \(S_{j} := \bigcup _{\ell \in N_{j}} \bigl (\partial {\varOmega }^{\prime }_{\ell } \cap {\varOmega }^{\prime }_{j}\bigr )\), that is the union of all the portions of \(\partial {\varOmega }^{\prime }_{\ell }\) with ℓ ∈ Nj. The substructure of the whole domain Ω is defined as \(S:=\bigcup _{j \in \mathcal {J}}\overline {S_{j}}\). A graphical representation of S is given in Fig. 1 for a decomposition of a square into nine subdomains. We now introduce the space \(\overline {V}\) as the trace space of V onto the substructure S. Associated with \(\overline {V}\), we consider the restriction operator \(\overline {R}:V\rightarrow \overline {V}\) and a prolongation operator \(\overline {P}:\overline {V}\rightarrow V\). The restriction operator \(\overline {R}\) takes an element v ∈ V and restricts it to the skeleton S. The prolongation operator \(\overline {P}\) extends an element \(v\in \overline {V}\) to the global space V. In our numerical experiments, \(\overline {P}\) extends an element \(v_{S}\in \overline {V}\) by zero in Ω ∖ S. However, we can consider several different prolongation operators. How this extension is done is not crucial as we will use \(\overline {P}\) inside a domain decomposition algorithm, and thus, only the values on the skeleton S will play a role. Hence, as of now, we will need only one assumption on the restriction and prolongation operator, namely

where \(\overline {I}\) is the identity over \(\overline {V}\).

The domain Ω is divided into nine nonoverlapping subdomains (left). The center panel shows how the diagonal nonoverlapping subdomains are enlarged to form overlapping subdomains. On the right, we denote the unknowns represented in \(\overline {V}\) (blue line) and the unknowns of a coarse space of \(\overline {V}\) (red crosses)

3 The linear case

In this section, we focus on the linear problem Au = f. After defining a substructured variant of RAS called SRAS, we prove the equivalence between RAS and SRAS. Then, we study in detail how GMRES performs if applied to the volume preconditioned system or the substructured system.

3.1 Linear iterative methods

To introduce our analysis, we recall the classical definition of RAS to solve the linear system (2). RAS starts from an approximation u0 and computes for \(n=1,2,\dots \),

where Aj := RjAPj, that is, we use exact local solvers. Let us now rewrite the iteration (6) in an equivalent form using the hypothesis in (4) and the definition of Aj,

We emphasize that \(\left (P_{j}R_{j}-I\right )\mathbf {u}^{n-1}\) contains non-zero elements only outside subdomain \({\varOmega }^{\prime }_{j}\), and in particular the terms \(A\left (P_{j}R_{j}-I\right )\mathbf {u}^{n-1}\) represent precisely the boundary conditions for \({\varOmega }^{\prime }_{j}\) given the old approximation un− 1. This observation suggests that RAS, like most domain decomposition methods, can be written in a substructured form. Indeed, despite iteration (7) being written in volume form, involving the entire vector un− 1, only very few elements of un− 1 are needed to compute the new approximation un. A substructured method iterates only on those values of u which are really needed at each iteration, avoiding thus superfluous operations on the whole volume vector u (e.g., the volume residual computation f − Aun− 1 and the summation with the old iterate un− 1 in the RAS method (6)). For further details about a substructured formulation of the parallel Schwarz method at the continuous level, we refer to [18] for the two subdomain case, and [10, 11] for a general decomposition into several subdomains with crosspoints.

In Section 2, we introduced the substructured space \(\overline {V}\) geometrically, but we can also provide an algebraic characterization using the RAS operators Rj and Pj. We consider

that is, \(\mathcal {K}\) is the set of indices such that the canonical vectors ek represent a Dirichlet boundary condition at least for a subdomain, and its complement \(\mathcal {K}^{c}:=\left \{1,\dots ,N_{v}\right \}\setminus \mathcal {K}\). The cardinality of \(\mathcal {K}\) is \(|\mathcal {K}|=:\overline {N}\). We can thus introduce

Finally \(\overline {R}\) is the Boolean restriction operator, mapping a vector of \(\mathbb {R}^{N_{v}}\) onto a vector of \(\mathbb {R}^{\overline {N}}\), keeping only the indices in \(\mathcal {K}\). Hence, \(\overline {V}:=\text {Im}\overline {R}(\cong \mathbb {R}^{\overline {N}})\) and \(\overline {P}=\overline {R}^{\top }\).

To define SRAS, we need one more assumption on the restriction and prolongation operators, namely

where M− 1 is the preconditioner for RAS, formally defined as

Heuristically, this assumption means that the operator \(\overline {P}\overline {R}\) preserves all the information needed by GRAS (defined in (7)) to compute correctly the values of the new iterate on the skeleton S. Indeed a direct calculation shows that (8) is equivalent to the condition

Given a substructured approximation \(\mathbf {v}^{0}\in \overline {V}\), for \(n=1,2,\dots \), we define SRAS as

RAS and SRAS are tightly linked, but when are they equivalent? Clearly, we must impose some conditions on \(\overline {P}\) and \(\overline {R}\). The next theorem shows that assumption (8) is in fact sufficient for equivalence.

Theorem 1 (Equivalence between RAS and SRAS)

Assume that the operators \(\overline {R}\) and \(\overline {P}\) satisfy (8). Given an initial guess u0 ∈ V and its substructured restriction \(\mathbf {v}^{0}:=\overline {R} \mathbf {u}^{0}\in \overline {V}\), define the sequences \(\left \{ \mathbf {u}^{n}\right \}\) and \(\left \{\mathbf {v}^{n}\right \}\) such that

Then, \(\overline {R}\mathbf {u}^{n}=\mathbf {v}^{n}\) for every iteration n ≥ 1.

Proof

We prove this statement for n = 1 by a direct calculation. Taking the restriction of u1 we have

where we used assumption (8), and the definition of v0 and GSRAS. For a general n, the proof is obtained by induction. □

Remark 1 (Implementation of SRAS)

In (10), we have introduced the substructured operator GSRAS directly through the volume operator GRAS. This definition is very useful from the theoretical point of view as it permits to link the volume and substructured methods and facilitates the theoretical analysis. However, we stress that one should not implement GSRAS by directly calling the volume routine GRAS onto a vector \(\overline {P}\mathbf {v}\). Doing so, one would lose all the computational advantages as computations on the volume vector \(\overline {P}v\) would be performed. There are two strategies to implement a fully substructured SRAS method. The first one is to implement a routine that, for each \(j=1,\dots ,N\), extracts from v those values which lie on the boundary of \({\varOmega }^{\prime }_{j}\) and rescale them appropriately as boundary conditions for the local subdomain solve. A second possibility arises from (10) and (7), by observing that

where \(\mathbf {b}:=\overline {R}{\sum }_{j=1}^{N}\widetilde {P}_{j}A_{j}^{-1}R_{j}f\). One can then pre-assemble the matrices \(\overline {R}_{j}:=R_{j}(-A(I-P_{j}R_{j})\overline {P}\in \mathbb {R}^{M_{j},\overline {N}}\), where Mj is the number of degrees of freedom in \({\varOmega }_{j}^{\prime }\). The matrices \(\overline {R}_{j}\) are very sparse and their goal is just to extract the values needed for the j th subdomain solve from vn− 1. Similarly \(\overline {P}_{j}:=\overline {R}\widetilde {P}_{j}\in \mathbb {R}^{\overline {N},M_{j}}\) weights a subdomain solution with the partition of unity and maps it to the substructured vector. We finally obtain the equivalent iterative method

where no computations are performed with matrices or vectors at the volume level, except for the subdomain solves.

3.2 Linear preconditioners for GMRES

It is well known that any stationary iterative method should be used in practice as a preconditioner for a Krylov method, since the Krylov method finds in general a much better residual polynomial with certain optimality properties, compared to the residual polynomial of the stationary iteration (see, e.g., [8]). The preconditioner associated with RAS is M− 1 and is defined in (9). The preconditioned volume system then reads

To discover the preconditioner associated with SRAS, we consider the fixed point limit of (10),

where in the second line we used the identity \(\overline {R}\overline {P}=\overline {I}_{S}\). We thus consider the preconditioned substructured system

Observe that as A = M − N, (15) can be written as \((\overline {I}-\overline {G})\mathbf {v}=\mathbf {b}\), where \(\overline {G}:=\overline {R}M^{-1}N\overline {P}\) and \(\mathbf {b}:=\overline {R}M^{-1}f\), thus recovering the classical form of the substructured PSM (see [10, 11, 18]).

It is then natural to ask how a Krylov method like GMRES performs if applied to (13), compared to (15). Let us consider an initial guess in volume u0, its restriction \(\mathbf {v}^{0}:=\overline {R}\mathbf {u}^{0}\) and the initial residuals r0 := M− 1(f − Au0), \(\overline {\mathbf {r}}^{0}:=\overline {R}M^{-1}(\mathbf {f}-A\overline {P}\mathbf {v}^{0})\). Then GMRES applied to the preconditioned systems (13) and (15) looks for solutions in the affine Krylov spaces

where k ≥ 1. The two Krylov spaces are tightly linked, as Theorem 2 below will show. To prove it, we need the following Lemma.

Lemma 1

If the restriction and prolongation operators \(\overline {R}\) and \(\overline {P}\) satisfy (8) then for k ≥ 1,

Proof

Multiplying equation (8) from the right by M− 1A we get

where in the second equality we have used once more (8). Using induction, one gets for every k ≥ 1,

and this completes the proof. □

Theorem 2 (Relation between RAS and SRAS Krylov subspaces)

Let us consider operators \(\overline {R}\) and \(\overline {P}\) satisfying (8), an initial guess u0 ∈ V, its restriction \(\mathbf {v}^{0}:=\overline {R}\mathbf {u}^{0}\in \overline {V}\) and the residuals r0 := M− 1(f − Au0), \(\overline {\mathbf {r}}^{0}:=\overline {R}M^{-1}(\mathbf {f}-A\overline {P}\mathbf {v}^{0})\). Then for every k ≥ 1, we have

Proof

First, due to (8) we have

Let us now show the first inclusion. If \(\mathbf {v}\in \overline {R}\left (\mathbf {u}^{0} + \mathcal {K}_{k}(M^{-1}A,\mathbf {r}^{0})\right )\), then \(\mathbf {v}= \overline {R} \mathbf {u}^{0}+ \overline {R}{\sum }_{j=0}^{k-1} \gamma _{j} \left (M^{-1}A\right )^{j}\mathbf {r}^{0},\) for some coefficients γj. Using Lemma 1, we can rewrite v as

and thus \( \overline {R}\left (\mathbf {u}^{0} + \mathcal {K}_{k}(M^{-1}A,\mathbf {r}^{0})\right ) \subset \mathbf {v}^{0}+\mathcal {K}_{k}(\overline {R}M^{-1}A\overline {P},\overline {\mathbf {r}}^{0})\). Similarly if \(\mathbf {w}\in \mathbf {v}^{0}+\mathcal {K}_{k}(\overline {R}M^{-1}A\overline {P},\overline {\mathbf {r}}^{0})\) then

thus \(\mathbf {w}\in \overline {R}\left (\mathbf {u}^{0} + \mathcal {K}_{k}(M^{-1}A,\mathbf {r}^{0})\right )\) and we achieve the desired relation (18). □

Theorem 2 shows that the restriction to the substructure of the affine volume Krylov space of RAS coincides with the affine substructured Krylov space of SRAS. One could then wonder if the restrictions of the iterates of GMRES applied to the preconditioned volume system (13) coincide with the iterates of GMRES applied to the preconditioned substructured system (15). However, this does not turn out to be true. Nevertheless, we can further link the action of GMRES on these two preconditioned systems.

It is well known, (see, e.g., [35, Section 6.5.1]), that GMRES applied to (13) and (15) generates a sequence of iterates \(\left \{ \mathbf {u}^{k}\right \}_{k}\) and \(\left \{\mathbf {v}^{k}\right \}_{k}\) such that

and

The iterates uk and vk can be characterized using orthogonal and Hessenberg matrices obtained with the Arnoldi iteration. In particular, the k th iteration of Arnoldi provides orthogonal matrices Qk,Qk+ 1 and a Hessenberg matrix Hk such that M− 1AQk = Qk+ 1Hk, and the columns of Qk form an orthonormal basis for the Krylov subspace \(\mathcal {K}_{k}(M^{-1}A,\mathbf {r}^{0})\). Using these matrices, one writes uk as uk = u0 + Qka, where \(\mathbf {a} \in \mathbb {R}^{k}\) is the solution of the least squares problem

and e1 is the canonical vector of \(\mathbb {R}^{k+1}\). Similarly, one characterizes the vector vk as \(\mathbf {v}^{k}=\mathbf {v}^{0}+\overline {Q}_{k}\mathbf {y}\) such that

where \(\overline {Q}_{k}, \overline {H}_{k}\) are the orthogonal and Hessenberg matrices obtained through the Arnoldi method applied to the matrix \(\overline {R}M^{-1}A\overline {P}\).

The next theorem provides a link between the volume least square problem (19) and the substructured one (20).

Theorem 3

Under the hypothesis of Theorem 2, the k th iterate of GMRES applied to (15) is equal to \(\mathbf {v}^{k}=\mathbf {v}^{0}+\overline {Q}_{k}\mathbf {y}=\mathbf {v}^{0}+\overline {R}Q_{k}\mathbf {t}\), where y satisfies (22) while

Proof

It is clear that \(\mathbf {v}^{k}=\mathbf {v}^{0}+\overline {Q}_{k}\mathbf {y}=\mathbf {v}^{0}+\overline {R}Q_{k}\mathbf {t}\) as the first equality follows from standard GMRES literature (see, e.g., [35, Section 6.5.1]). The second equality follows from Theorem 2 as we have shown that \(\mathbf {v}^{0}+\mathcal {K}_{k}(\overline {R}M^{-1}A\overline {P},\overline {\mathbf {r}}^{0})=\overline {R}(\mathbf {u}^{0}+\mathcal {K}_{k}(M^{-1}A,\mathbf {r}^{0}))\). Thus, the columns of \(\overline {R}Q_{k}\) form an orthonormal basis of \(\mathcal {K}_{k}(\overline {R}M^{-1}A\overline {P},\overline {\mathbf {r}}^{0})\) and hence, vk can be expressed as a linear combination of the columns of \(\overline {R}Q_{k}\) with coefficients in the vector \(\mathbf {t}\in \mathbb {R}^{k}\) plus v0. We are then left to show (23). We have

Using the relation Im\((\overline {R}Q_{k})=\)Im\((\overline {Q}_{k})\), Lemma 1, the Arnoldi relation M− 1AQk = Qk+ 1Hk and that r0 coincides with the first column of Qk except for a normalization constant, we conclude

and this completes the proof. □

Few comments are in order here. First, GMRES applied to (15) converges in maximum \(\overline {N}\) iterations as the preconditioned matrix \(\overline {R}M^{-1}A\overline {P}\) has size \(\overline {N}\times \overline {N}\). Second, Theorem 2 states that \(\overline {R}(\mathbf {u}^{0}+\mathcal {K}_{\overline {N}}(M^{-1}A,\mathbf {r}^{0}))\) already contains the exact substructured solution, that is the exact substructured solution lies in the restriction of the volume Krylov space after \(\overline {N}\) iterations. Theoretically, if one could get the exact substructured solution from \(\overline {R}(\mathbf {u}_{0}+\mathcal {K}_{\overline {N}}(M^{-1}A,\mathbf {r}^{0}))\), then \(\overline {N}\) iterations of GMRES applied to (13), plus an harmonic extension of the substructured data into the subdomains, would be sufficient to get the exact volume solution.

On the other hand, we can say a bit more analyzing the structure of M− 1A. Using the splitting A = M − N, we have M− 1A = I − M− 1N. A direct calculation states \(\mathcal {K}_{k}(M^{-1}A,\mathbf {r}^{0})=\mathcal {K}_{k}(M^{-1}N,\mathbf {r}^{0})\) by using the relation

that is the Krylov space generated by M− 1A is equal to the Krylov space generated by the RAS iteration matrix for error equation. We denote this linear operator with \(G^{\text {RAS}}_{0}\) which is defined as in (7) with f = 0. We now consider the orthogonal complement \(\widehat {V}^{\perp }:=(\text {span}\left \{\mathbf {e}_{k}\right \}_{k\in \mathcal {K}})^{\perp }=\text {span}\left \{\mathbf {e}_{i}\right \}_{i\in \mathcal {K}^{c}}\), and \(\dim (\widehat {V}^{\perp })=N_{v}-\overline {N}\). Since for every \(\mathbf {v}\in \widehat {V}^{\perp }\), it holds that RjA(I − PjRj)v = 0, we can conclude that \(\widehat {V}^{\perp } \subset \ker \left (G_{0}^{\text {RAS}}\right )\).

Using the rank-nullity theorem, we obtain

hence GMRES applied to the preconditioned volume system encounters a lucky Arnoldi breakdown after at most \(\overline {N}+1\) iterations (in exact arithmetic). This rank argument can be used for the substructured preconditioned system as well. Indeed as \(\overline {R}M^{-1}A\overline {P}=\overline {I}-\overline {R}M^{-1}N\overline {P}\), the substructured Krylov space is generated by the matrix \(\overline {R}M^{-1}N\overline {P}\), whose rank is equal to the rank of M− 1N, that is the rank of \(G_{0}^{\text {RAS}}\).

Heuristically, choosing a zero initial guess, r0 := M− 1f corresponds to a solution of subdomains problem with the correct right-hand side, but with zero Dirichlet boundary conditions along the interfaces of each subdomain. Thus, GMRES applied to (13) needs only to find the correct boundary conditions for each subdomain, and this can be achieved in at most \(\overline {N}\) iterations as Theorem 2 shows.

Finally, we remark that each GMRES iteration on (15) is computationally less expensive than a GMRES iteration on (13) as the orthogonalization of the Arnoldi method is carried out in a much smaller space. From the memory point of view, this implies that GMRES needs to store shorter vectors. Thus, a saturation of the memory is less likely, and restarted versions of GMRES may be avoided.

4 The nonlinear case

In this section, we study iterative and preconditioned domain decomposition methods to solve the nonlinear system (3).

4.1 Nonlinear iterative methods

RAS can be generalized to solve the nonlinear (3). To show this, we introduce the solution operators Gj which are defined through

where the operators Rj and Pj are defined in Section 2. Nonlinear RAS for N subdomains then reads

It is possible to show that (26) reduces to (7) if F(u) is a linear function: assuming that F(u) = Au −f, (25) becomes

which implies \(G_{j}\left (\mathbf {u}^{n-1}\right )=A_{j}^{-1}R_{j}\left (\mathbf {f}-A\left (I-P_{j}R_{j}\right )\mathbf {u}^{n-1}\right )\), and thus, (26) reduces to (7).

Similarly to the linear case, we introduce the nonlinear SRAS. Defining

we obtain the nonlinear substructured iteration

which is the nonlinear counterpart of (10).

The same calculations of Theorem 1 allow one to obtain an equivalence result between nonlinear RAS and nonlinear SRAS.

Theorem 4 (Equivalence between nonlinear RAS and SRAS)

Assume that the operators \(\overline {R}\) and \(\overline {P}\) satisfy \(\overline {R}{\sum }_{j\in \mathcal {J}}\widetilde {P}_{j} G_{j}(\mathbf {u})=\overline {R}{\sum }_{j\in \mathcal {J}}\widetilde {P}_{j} G_{j}(\overline {P}\overline {R}\mathbf {u})\). Let us consider an initial guess u0 ∈ V and its substructured restriction \(\mathbf {v}^{0}:=\overline {R} \mathbf {u}^{0}\in \overline {V}\), and define the sequences \(\left \{ \mathbf {u}^{n}\right \}\), \(\left \{\mathbf {v}^{n}\right \}\) such that

Then for every n ≥ 1, \(\overline {R}\mathbf {u}^{n}=\mathbf {v}^{n}\).

4.2 Nonlinear preconditioners for Newton’s method

In [13], it was proposed to use the fixed point equation of nonlinear RAS as a preconditioner for Newton’s method, in a spirit that goes back to [3, 4]. This method has been called RASPEN (Restricted Additive Schwarz Preconditioned Exact Newton) and it consists in applying Newton’s method to the fixed point equation of nonlinear RAS, that is,

For a comprehensive discussion of this method, we refer to [13]. As done in (14) for the linear case, we now introduce a substructured variant of RASPEN and we call it SRASPEN (Substructured Restricted Additive Schwarz Preconditioned Exact Newton). SRASPEN is obtained by applying Newton’s method to the fixed point equation of nonlinear SRAS, that is,

One can verify that the above equation \(\overline {\mathcal {F}}(\mathbf {v})=0\) can also be written as

This formulation of SRASPEN provides its relation with RASPEN and simplifies the task of computing the Jacobian of SRASPEN.

4.2.1 Computation of the Jacobian and implementation details

To apply Newton’s method, we need to compute the Jacobian of SRASPEN. Let \(J_{\mathcal {F}}(\mathbf {w})\) and \(J_{\overline {\mathcal {F}}}(\mathbf {w})\) denote the action of the Jacobian of RASPEN and SRASPEN on a vector w. Since these methods are closely related, indeed \(\overline {\mathcal {F}}(\mathbf {v})=\overline {R}\mathcal {F}(\overline {P}\mathbf {v})\), we can immediately compute the Jacobian of \(\overline {\mathcal {F}}\) once we have the Jacobian of \(\mathcal {F}\), using the chain rule, \(J_{\overline {\mathcal {F}}}(\mathbf {v})=\overline {R}J_{\mathcal {F}}(\overline {P}\mathbf {v})\overline {P}\). The Jacobian of \(\mathcal {F}\) has been derived in [13] and we report here the main steps for the sake of completeness. Differentiating (29) with respect to u leads to

Recall that the local inverse operators \(G_{j}:V\rightarrow V_{j}\) are defined in (25) as the solutions of RjF(PjGj(u) + (I − PjRj)u) = 0. Differentiating this relation yields

where u(j) := PjGj(u) + (I − PjRj)u is the volume solution vector in subdomain j and J is the Jacobian of the original nonlinear function F. Combining the above (31)–(32) and defining \(\widetilde {\mathbf {u}}^{(j)}:=P_{j}G_{j}(\overline {P}\mathbf {v})+(I-P_{j}R_{j})\overline {P}\mathbf {v}\), we get

and

where we used the assumptions \({\sum }_{j\in \mathcal {J}} \widetilde {P}_{j}R_{j}=\overline {I}\) and \(\overline {R}\overline {P}=I_{S}\). We remark that to assemble \(J_{\mathcal {F}}(\mathbf {u})\) or to compute its action on a given vector, one needs to calculate \(J\left (\mathbf {u}^{(j)}\right )\), that is, evaluate the Jacobian of the original nonlinear function F on the subdomain solutions u(j). The subdomain solutions u(j) are obtained evaluating \(\mathcal {F}(\mathbf {u})\), that is performing one step of RAS with initial guess equal to u. A smart implementation can use the local Jacobian matrices \(R_{j} J\left (\mathbf {u}^{(j)}\right )P_{j}\) that are already computed by the inner Newton solvers while solving the nonlinear problem on each subdomain, and hence no extra cost is required to assemble this term. Further, the matrices \(R_{j} J\left (\mathbf {u}^{(j)}\right )\) are different from the local Jacobian matrices at very few columns corresponding to the degrees of freedom on the interfaces, and thus, it suffices to only modify those specific entries. In a non-optimized implementation, one can also directly evaluate the Jacobian of F on the subdomain solutions u(j), without relying on already computed quantities. Concerning \(J_{\overline {\mathcal {F}}}(\mathbf {v})\), we emphasize that \(\widetilde {\mathbf {u}}^{(j)}\) is the volume subdomain solution obtained by substructured RAS starting from a substructured function v. Thus, like u(j), \(\widetilde {\mathbf {u}}^{(j)}\) is readily available in Newton’s iteration after evaluating the function \(\overline {\mathcal {F}}\).

From the computational point of view, SRASPEN has several advantages over RASPEN. From (33) and (34), we note that \(J_{\overline {\mathcal {F}}}\) is a matrix of dimension \(\overline {N}\times \overline {N}\) where \(\overline {N}\) is the number of unknowns on S, and thus is a much smaller matrix than \(J_{\mathcal {F}}\), whose size is Nv × Nv, with Nv the number of unknowns in volume. On the one hand, if one prefers to assemble the Jacobian matrix, either because one wants to use a direct solver or because one wants to recycle the Jacobian for several iterations, then SRASPEN dramatically reduces the cost of the assembly of the Jacobian matrix. On the other hand, we remark that (33) and (34) have the same structure of the volume and substructured preconditioned matrices (13) and (15), by just identifying \(M^{-1}={\sum }_{j\in \mathcal {J}} \widetilde {P}_{j}\left (R_{j} J\left (\mathbf {u}^{(j)}\right )P_{j}\right )^{-1}\). Similarly to Remark 1 in the linear case, we can have a fully substructured formulation, by writing

where \(\overline {P}_{j}:=\overline {R}\widetilde {P}_{j}\) and \(\overline {R}_{j}:=R_{j}J(\widetilde {u}^{j})\overline {P}\). It follows that if one prefers to use a Krylov method such as GMRES, then according to the discussion in Section 3.2, SRASPEN better exploits the properties of the underlying domain decomposition method, and saves computational time by permitting to perform the orthogonalization in a much smaller space. Further implementation details and a more extensive comparison are available in the numerical Section 6.

4.2.2 Convergence analysis of RASPEN and SRASPEN

Theorem 4 gives an equivalence between nonlinear RAS and nonlinear SRAS. Are RASPEN and SRASPEN equivalent? Does Newton’s method behave differently if applied to the volume or to the substructured fixed point equation, like it happens with GMRES (see Section 3.2)? In this section, we aim to answer these questions by discussing the convergence properties of the exact Newton’s method applied to \(\mathcal {F}\) and \(\overline {\mathcal {F}}\).

Let us recall that, given two approximations u0 and v0, the exact Newton’s method computes for n ≥ 1,

where \(J_{\mathcal {F}}\left (\mathbf {u}^{n-1}\right )\) and \(J_{\overline {\mathcal {F}}}\left (\mathbf {v}^{n-1}\right )\) are the Jacobian matrices respectively of \(\mathcal {F}\) and \(\overline {\mathcal {F}}\) evaluated at un− 1 and vn− 1. In this paragraph, we do not need a precise expression for \(J_{\mathcal {F}}\) and \(J_{\overline {\mathcal {F}}}\). However we recall that, the definition \(\overline {\mathcal {F}}(\mathbf {v})=\overline {R}\mathcal {F}(\overline {P}\mathbf {v})\) and the chain rule derivation provides us the relation \(J_{\overline {\mathcal {F}}}(\mathbf {v})=\overline {R}J_{\mathcal {F}}(\overline {P}\mathbf {v})\overline {P}\). If the operators \(\overline {R}\) and \(\overline {P}\) were square matrices, we would immediately obtain that RASPEN and SRASPEN are equivalent, due to the affine invariance theory for Newton’s method [12]. However, in our case, \(\overline {R}\) and \(\overline {P}\) are rectangular matrices and they map between spaces of different dimensions. Nevertheless, in the following theorem, we show that RASPEN and SRASPEN provide the same iterates restricted to the interfaces under further assumptions on \(\overline {R}\) and \(\overline {P}\), which is a direct generalization of (8) to the nonlinear case.

Theorem 5 (Equivalence between RASPEN and SRASPEN)

Assume that the operators \(\overline {R}\) and \(\overline {P}\) satisfy

Given an initial guess u0 ∈ V and its substructured restriction \(\mathbf {v}^{0}:=\overline {R} \mathbf {u}^{0}\in \overline {V}\), define the sequences \(\left \{ \mathbf {u}^{n}\right \}\) and \(\left \{\mathbf {v}^{n}\right \}\) such that

Then for every n ≥ 1, \(\overline {R}\mathbf {u}^{n}=\mathbf {v}^{n}\).

Proof

We first prove the equality \(\overline {R}\mathbf {u}^{1}=\mathbf {v}^{1}\) by direct calculations. Taking the restriction of the RASPEN iteration, we obtain

Now, due to the definition of \(\overline {\mathcal {F}}\) and of v0, and to the assumption (35), we have

Further, taking the Jacobian of assumption (35), we have \(\overline {R} J_{\mathcal {F}}(\mathbf {u}^{0})=J_{\overline {\mathcal {F}}} (\overline {R}\mathbf {u}^{0})\overline {R}\), which simplifies by taking the inverse of the Jacobians to

Finally substituting relations (37) and (38) into (36) leads to

and the general case is obtained by induction. □

5 Two-level nonlinear methods

RAS and SRAS can be generalized to two-level iterative schemes. This has already been treated in detail for the linear case in [10, 11]. In this section, we introduce two-level variants for nonlinear RAS and SRAS, and also for the associated RASPEN and SRASPEN.

5.1 Two-level iterative methods

To define a two-level method, we introduce a coarse space V0 ⊂ V, a restriction operator \(R_{0}:V\rightarrow V_{0}\) and an interpolation operator \(P_{0}:V_{0}\rightarrow V\). The nonlinear system F can be projected onto the coarse space V0, defining the coarse nonlinear function \(F_{0}\left (\mathbf {u}_{0}\right ):=R_{0}F\left (P_{0}\mathbf {u}_{0}\right )\), for every u0 ∈ V0. Due to this definition, it follows immediately that \(J_{F_{0}}\left (\mathbf {u}_{0}\right )=R_{0} J_{F}\left (P_{0}\mathbf {u}_{0}\right )P_{0}\), ∀u0 ∈ V0. To compute a coarse correction we rely on the FAS approach [1]. Given a current approximation u, the coarse correction C0(u) is computed as the solution of

Two-level nonlinear RAS is described by Algorithm 1 and it consists of a coarse correction followed by one iteration of nonlinear RAS (see [22] for different approaches).We now focus on its substructured counterpart. We introduce a coarse substructured space \(\overline {V}_{0}\subset \overline {V}\), a restriction operator \(\overline {R}_{0}:\overline {V}\rightarrow \overline {V}_{0}\) and a prolongation operator \(\overline {P}_{0}:\overline {V}_{0}\rightarrow \overline {V}\). We define the coarse substructured function as

From the definition it follows that \(J_{\overline {\mathcal {F}}_{0}}(\mathbf {v}_{0})=\overline {R}_{0}J_{\overline {\mathcal {F}}}(\overline {P}_{0}\mathbf {v}_{0})\overline {P}_{0}\), \(\forall \mathbf {v}_{0}\in \overline {V}_{0}\). There is a profound difference between two-level nonlinear RAS and two-level nonlinear SRAS: in the first one (Algorithm 1), the coarse function is obtained restricting the original nonlinear system F(u) = 0 onto a coarse mesh. In the substructured version, the coarse substructured function is defined restricting the fixed point equation of nonlinear SRAS to \(\overline {V}_{0}\). That is, the coarse substructured function corresponds to a coarse version of SRASPEN. Hence, we remark that this algorithm is the nonlinear counterpart of the linear two-level algorithm described in [10, 11]. Two-level nonlinear SRAS is then defined in Algorithm 2.

As in the linear case, numerical experiments will show that two-level iterative nonlinear SRAS exhibits faster convergence in terms of iteration counts compared to two-level nonlinear RAS. However, we remark that evaluating F0 is rather cheap, while evaluating \(\overline {\mathcal {F}}_{0}\) could be quite expensive as it requires to perform subdomain solves on the fine mesh. One possible improvement is to approximate \(\overline {\mathcal {F}}_{0}\) replacing \(\overline {\mathcal {F}}\) in its definition with another function which performs subdomain solves on a coarse mesh. Further, we emphasize that a prerequisite of any domain decomposition method is that the subdomain solves are cheap to compute in a high performance parallel implementation, so that in such a setting evaluating \(\overline {\mathcal {F}}_{0}\) needs to be cheap as well.

5.2 Two-level preconditioners for Newton’s method

Once we have defined the two-level iterative methods, we are ready to introduce the two-level versions of RASPEN and SRASPEN. The fixed point equation of two-level nonlinear RAS is

where we have introduced the correction operators Cj(u) := Gj(u) − Rju. Thus, two-level RASPEN defined in [13] consists in applying Newton’s method to the fixed point (41).

Similarly, the fixed point equation of two-level nonlinear SRAS is

where the correction operators \(\overline {C}_{j}\) are defined as \(\overline {C}_{j}(\mathbf {v}):=\overline {G}_{j}(\mathbf {v})-\overline {R}\widetilde {P}_{j}R_{j}\overline {P}\mathbf {v}\). Two-level SRASPEN consists in applying Newton’s method to the fixed point (42).

6 Numerical results

We discuss three different examples in this section to illustrate our theoretical results. In the first example, we consider a linear problem where we study the performance of GMRES when it is applied to the preconditioned volume system and the preconditioned substructured system. In the next two examples, we present numerical results in order to compare Newton’s method, NKRAS [5], nonlinear RAS, nonlinear SRAS, RASPEN, and SRASPEN for the solution of a one-dimensional Forchheimer equation and for a two-dimensional nonlinear diffusion equation.

6.1 Linear example

We consider the diffusion equation −Δu = f, with source term f ≡ 1 and homogeneous boundary conditions inside the unit cube Ω := (0,1)3 decomposed into N equally sized bricks with overlap, each discretized with 27000 degrees of freedom. The size of the overlap is δ := 4 × h. In Table 1, we study the computational effort and memory required by GMRES when applied to the preconditioned volume system (13) (GMRES-RAS) and to the preconditioned substructured system (15) (GMRES-SRAS). We let the number of subdomains grow, while keeping their sizes constant, that is the global problem becomes larger as N increases. We report the computational times to reach a relative residual smaller than 10− 8, and the number of gigabytes required to store the orthogonal matrices of the Arnoldi iteration, both for the volume and substructured implementations. The subdomain solves are performed in a serial fashion, we precompute the Cholesky factorizations for the subdomain matrices Aj, and for SRAS we use the fixed point equation related to formulation (12).

Table 1 shows that GMRES applied to the preconditioned substructured system is faster in terms of computational time compared to the volume implementation. This advantage becomes more evident as the global problem becomes larger. We emphasize that GMRES required the same number of iterations to reach the tolerance for both methods in all cases considered. Thus, the faster time to solution of GMRES-SRAS is due to the smaller number of floating point operations that GMRES-SRAS has to perform, since the orthogonalization steps are performed in a much smaller space, and SRAS avoids unnecessary volume computations. Furthermore, GMRES-SRAS significantly outperforms GMRES-RAS in terms of memory requirements; in this particular case, GMRES-SRAS computes and stores orthogonal matrices which are about seven times smaller than the ones used by GMRES-RAS.

6.2 Forchheimer equation in 1D

Forchheimer equation is an extension of the Darcy equation for high flow rates, where the linear relation between the flow velocity and the gradient flow does not hold anymore. In a one-dimensional domain Ω := (0,1), the Forchheimer model is

where \(u_{L},u_{R}\in \mathbb {R}\), λ(x) is a positive and bounded permeability field and \(q(y):=\text {sign}(y)\frac {-1+\sqrt {1+4\gamma |y|}}{2\gamma }\), with γ > 0. To discretize (43), we use the finite volume scheme described in detail in [13]. In our numerical experiments, we set \(\lambda (x)=2+\cos \limits (5\pi x)\), \(f(x)=50\sin \limits (5\pi x)e^{x}\), γ = 1, u(0) = 1 and u(1) = e1. The solution field u(x) and the force field f(x) are shown in Fig. 2.

We then study the convergence behavior of our different methods. Figure 3 shows how the relative error decays for the different methods and for a decomposition into 20 subdomains (left panel) and 50 subdomains (right panel). The initial guess is equal to zero for all these methods.

Both plots in Fig. 3 show that the convergence rate of iterative nonlinear RAS and nonlinear SRAS is the same and very slow. As expected, NKRAS with line search converges better than Newton’s method and further RASPEN and SRASPEN converge in the same number of outer Newton iterations as they produce the same iterates. Moreover, it seems that the convergence of RASPEN and SRASPEN is not affected by the number of subdomains. However, these plots do not tell the whole story, as one should focus not only on the number of iterations but also on the cost of each iteration. To compare the cost of an iteration of RASPEN and SRASPEN, we have to distinguish two cases, that is, if one solves the Jacobian system directly or with some Krylov methods, e.g., GMRES. First, suppose that we want to solve the Jacobian system with a direct method and thus we need to assemble and store the Jacobians. From the expressions in equation (34) we remark that the assembly of the Jacobian of RASPEN requires N × Nv subdomain solves, where N is the number of subdomains and Nv is the number of unknowns in volume. On the other hand, the assembly of the Jacobian of SRASPEN requires \(N\times \overline {N}\) solves, where \(\overline {N}\) is the number of unknowns on the substructures and \(\overline {N}\ll N_{v}\). Thus, while the assembly of \(J_{\mathcal {F}}\) is prohibitive, it can still be affordable to assemble \(J_{\overline {\mathcal {F}}}\). Further, the direct solution of the Jacobian system is feasible as \(J_{\overline {\mathcal {F}}}\) has size \(\overline {N}\times \overline {N}\). Suppose now that we solve the Jacobian systems with GMRES. Let us indicate with I(k) and IS(k) the number of GMRES iterations to solve the volume and substructured Jacobian systems at the k th outer Newton iteration. Each GMRES iteration requires N subdomain solves which can be performed in parallel. In our numerical experiment, we have observed that generally IS(k) ≤ I(k), with I(k) − IS(k) ≈ 0,1,2, that is GMRES requires the same number of iterations or slightly less to solve the substructured Jacobian system compared to the volume one.

To better compare these two methods, we follow [13] and introduce the quantity L(n) which counts the number of subdomain solves performed by these two methods till iteration n, taking into account the advantages of a parallel implementation. We set \(L(n)={\sum }_{k=1}^{n} L_{in}^{k} + I(k)\), where \(L_{in}^{k}\) is the maximum over the subdomains of the number of Newton iterations required to solve the local subdomain problems at iteration k. The number of linear solves performed by GMRES should be I(k) × N, but as the N linear solves can be performed in parallel, the total cost of GMRES corresponds approximately to I(k) linear solves. Figure 4 shows the error decay as a function of L(n). We note that the two methods require approximately the same computational cost and SRASPEN is slightly faster.

For the decomposition into 50 subdomains, RASPEN requires on average 91.5 GMRES iterations per Newton iteration, while SRASPEN requires an average of 90.87 iterations. The size of the substructured space \(\overline {V}\) is \(\overline {N}=98\). For the decomposition into 20 subdomains, RASPEN requires an average of 40 GMRES iterations per Newton iteration, while SRASPEN needs 38 iterations. The size of \(\overline {V}\) is \(\overline {N}=38\), which means that GMRES reaches the given tolerance of 10− 12 after exactly \(\overline {N}\) steps, which is the size of the substructured Jacobian. Under these circumstances, it can be convenient to actually assemble \(J_{\overline {\mathcal {F}}}\), as it requires \(\overline {N}\times N\) subdomain solves which is the total cost of GMRES. Furthermore, the \(\overline {N}\times N\) subdomain solves are embarrassingly parallel, while the \(\overline {N}\times N\) solves of GMRES can be parallelized in the spatial direction, but not in the iterative one. As future work, we believe it will be interesting to study the convergence of a Quasi-Newton method based on SRASPEN, where one assembles the Jacobian substructured matrix after every few outer Newton iterations, reducing the overall computational cost.

As a final remark, we specify that Fig. 4 has been obtained setting a zero initial guess for the nonlinear subdomain problems. However, at the iteration k of RASPEN one can use the subdomain restriction of the updated volume solution, that is \(R_{j} \mathbf {u}^{k-1}\), which has been obtained by solving the volume Jacobian system at iteration k − 1, and is thus generally a better initial guess for the next iteration. On the other hand in SRASPEN, one could use the subdomain solutions computed at iteration k − 1, i.e., \(\mathbf {u}_{i}^{k-1}\), as initial guess for the nonlinear subdomain problems, as the substructured Jacobian system corrects only the substructured values. Numerical experiments showed that with this particular choice of initial guess for the nonlinear subdomain problems, SRASPEN requires generally more Newton iterations to solve the local problems. In this setting, there is not a method that is constantly faster than the other as it depends on a delicate trade-off between the better GMRES performance and the need to perform more Newton iterations for the nonlinear local problems in SRASPEN.

6.3 Nonlinear diffusion

In this subsection we consider the nonlinear diffusion problem on a square domain Ω := (0,1)2,

where the right-hand side f is chosen such that \(u(x)=\sin \limits (\pi x)\sin \limits (\pi y)\) is the exact solution. We start all these methods with an initial guess u0(x) = 105, so that we start far away from the exact solution, and hence Newton’s method exhibits a long plateau before quadratic convergence begins.

Figure 5 shows the convergence behavior for the different methods as function of the number of iterations and the number of linear solves. The average number of GMRES iterations is 8.1667 for both RASPEN and SRASPEN for the four subdomain decomposition. For a decomposition into 25 subdomains, the average number of GMRES iterations is 19.14 for RASPEN and 19.57 for SRASPEN. We remark that as the number of subdomains increases, GMRES needs more iterations to solve the Jacobian system. This is consistent with the interpretation of (34) as a Jacobian matrix \(J\left (\mathbf {u}^{(j)}\right )\) preconditioned by the additive operator \({\sum }_{j\in \mathcal {J}} \left (R_{j} J\left (\mathbf {u}^{(j)}\right )P_{j}\right )^{-1}\); We expect this preconditioner not to be scalable since it does not involve a coarse correction. In Table 2 we compare the computational time in seconds to reach a tolerance of 10− 8 by RASPEN and SRASPEN. SRASPEN is faster due to the less expensive GMRES iteration which is inherited by the linear analysis (see Table 1).

We conclude this section by showing the convergence behavior for the two-level variants of nonlinear RAS, nonlinear SRAS, RASPEN, and SRASPEN. We use a coarse grid in volume taking half of the points in x and y, and a coarse substructured grid taking half of the unknowns as depicted in Fig. 1. The interpolation and restriction operators \(P_{0},R_{0},\overline {P}_{0}\) and \(\overline {R}_{0}\) are the classical linear interpolation and fully weighting restriction operators defined in Section 5. From Fig. 6, we note that two-level nonlinear SRAS is much faster than two-level nonlinear RAS, and this observation is in agreement with the linear case treated in [10, 11]. Since the two-level iterative methods are not equivalent, we also remark that two-level SRASPEN shows a better performance than two-level RASPEN in terms of iteration count. As the one-level smoother is the same in all methods, the better convergence of the substructured methods implies that the coarse equation involving \(\overline {\mathcal {F}}_{0}\) provides a much better coarse correction than the classical volume one involving F0.

Relative error decay versus the number of iterations for Newton’s method, iterative two-level nonlinear RAS and SRAS, and the two-level variants of RASPEN and SRASPEN. The left figure refers to a decomposition into 4 subdomains, while the right figure refers to a decomposition into 16 subdomains. The mesh size is h = 0.012 and the overlap is 4h

Even though the two-level substructured methods are faster in terms of iteration count, the solution of the FAS problem involving \(\overline {\mathcal {F}}_{0}=\overline {R}_{0}\overline {\mathcal {F}}(\overline {P}_{0}(\mathbf {v}_{0}))\) is rather expensive as it requires to evaluate twice the substructured function \(\overline {\mathcal {F}}\) (each evaluation requires subdomain solves) to compute the right-hand side, to solve a Jacobian system involving \(J_{\overline {\mathcal {F}}_{0}}\), and to evaluate \(\overline {\mathcal {F}}\) on the iterates, which again require the solution of subdomain problems. Unless one has a fully parallel implementation available, the coarse correction involving \(\overline {\mathcal {F}}_{0}\) is doomed to represent a bottleneck.

7 Conclusions

We presented an analysis of the effects of substructuring on RAS when it is applied as an iterative solver and as a preconditioner. We proved that iterative RAS and iterative SRAS converge at the same rate, both in the linear and nonlinear case. For the nonlinear case, we showed that the preconditioned methods, namely RASPEN and SRASPEN, also have the same rate of convergence as they produce the same iterates once these are restricted to the interfaces. Surprisingly, the equivalence between volume and substructured RAS breaks down when they are considered as preconditioners for Krylov methods. We showed that the Krylov spaces are equivalent, once the volume one is restricted to the substructure, however we obtained that the iterates are different by carefully deriving the least squares problems solved by GMRES. Our analysis shows that GMRES should be applied to the substructured system as it converges similarly when applied to the volume formulation, but needs much less memory. This allows us to state that, while nonlinear RASPEN and SRASPEN produce the same iterates, SRASPEN has advantages when solving the Jacobian system, either because the use of a direct solve is feasible or because the Krylov method can work at the substructured level. Finally, we introduced substructured two-level nonlinear SRAS and SRASPEN, and showed numerically that these methods have better convergence properties than their volume counterparts in terms of iteration count, although they are quite expensive in the present form per iteration. Future efforts will be in the direction of approximating \(\overline {\mathcal {F}}_{0}\), by replacing the function \(\overline {\mathcal {F}}\), which is defined on a fine mesh, with an approximation on a very coarse mesh, thus reducing the overall cost of the substructured coarse correction, or by using spectral coarse spaces.

References

Brandt, A., Livne, O.E.: Multigrid techniques. Society for industrial and applied mathematics (2011)

Cai, X.C., Dryja, M.: Domain decomposition methods for monotone nonlinear elliptic problems. Contemp. Math. 180 (1994)

Cai, X.C., Keyes, D.E.: Nonlinearly preconditioned inexact Newton algorithms. SIAM J. Sci. Comput. 24(1), 183–200 (2002)

Cai, X.C., Keyes, D.E., Young, D.P.: A nonlinear additive Schwarz preconditioned inexact Newton method for shocked duct flow. In: Proceedings of the 13th International Conference on Domain Decomposition Methods (2001)

Cai, X.C., Li, X.: Inexact Newton methods with restricted additive Schwarz based nonlinear elimination for problems with high local nonlinearity. SIAM J. Sci. Comput. 33(2), 746–762 (2011)

Cai, X.C., Sarkis, M.: A restricted additive Schwarz preconditioner for general sparse linear systems. SIAM J. Sci. Comput. 21(2), 792–797 (1999)

Chaouqui, F., Gander, M.J., Kumbhar, P.M., Vanzan, T.: On the nonlinear Dirichlet-Neumann method and preconditioner for Newton’s method. Accepted in Domain Decomposition Methods in Science and Engineering XXVI (2021)

Ciaramella, G., Gander, M.J.: Iterative methods and preconditioners for systems of linear equations. SIAM (2022)

Ciaramella, G., Hassan, M., Stamm, B.: On the scalability of the Schwarz method. SMAI J. Comput. Math. 6, 33–68 (2020)

Ciaramella, G., Vanzan, T.: Substructured two-grid and multi-grid domain decomposition methods. preprint available at https://infoscience.epfl.ch/record/288182?ln=en, submitted (2021)

Ciaramella, G., Vanzan, T.: Spectral substructured two-level domain decomposition methods. arXiv:1908.05537v3 submitted (2021)

Deuflhard, P.: Newton methods for nonlinear problems: affine invariance and adaptive algorithms. Springer series in computational mathematics. Springer, Berlin (2010)

Dolean, V., Gander, M.J., Kheriji, W., Kwok, F., Masson, R.: Nonlinear preconditioning: How to use a nonlinear Schwarz method to precondition Newton’s method. SIAM J. Sci. Comput. 38(6), A3357–A3380 (2016)

Farhat, C., Roux, F.X.: A method of finite element tearing and interconnecting and its parallel solution algorithm. Int. J. Numer. Methods Eng. 32(6), 1205–1227 (1991)

Gander, M.J.: Optimized Schwarz methods. SIAM J. Numer. Anal. 44(2), 699–731 (2006)

Gander, M.J.: Schwarz methods over the course of time. Electron. Trans. Numer. Ana. 31, 228–255 (2008)

Gander, M.J.: On the Origins of Linear and Non-Linear Preconditioning. In: Lee, C.O., Cai, X.C., Keyes, D.E., Kim, H.H., Klawonn, A., Park, E.J., Widlund, O.B. (eds.) Domain Decomposition Methods in Science and Engineering XXIII, pp 153–161. Springer International Publishing, Cham (2017)

Gander, M.J., Halpern, L.: Méthodes De Décomposition De Domaines – Notions De Base. Editions TI. Techniques de l’Ingénieur, France (2012)

Gong, S., Cai, X.C.: A nonlinear elimination preconditioned Newton method with applications in arterial wall simulation. In: International Conference on Domain Decomposition Methods, pp 353–361. Springer (2017)

Gong, S., Cai, X.C.: A nonlinear elimination preconditioned inexact Newton method for heterogeneous hyperelasticity. SIAM J. Sci. Comput. 41(5), S390–S408 (2019)

Hackbusch, W.: Multi-Grid Methods and applications. Springer series in computational mathematics. Springer, Berlin (2013)

Heinlein, A., Lanser, M.: Additive and hybrid nonlinear two-level Schwarz methods and energy minimizing coarse spaces for unstructured grids. SIAM J. Sci. Comput. 42(4), A2461–A2488 (2020)

Klawonn, A., Lanser, M., Niehoff, B., Radtke, P., Rheinbach, O.: Newton-Krylov-FETI-DP with Adaptive Coarse Spaces. In: Lee, C. O., Cai, X. C., Keyes, D. E., Kim, H. H., Klawonn, A., Park, E. J., Widlund, O. B. (eds.) Domain Decomposition Methods in Science and Engineering XXIII, pp 197–205. Springer International Publishing, Cham (2017)

Klawonn, A., Lanser, M., Rheinbach, O.: Nonlinear FETI-DP and BDDC methods. SIAM J. Sci. Comput. 36(2), A737–A765 (2014)

Klawonn, A., Lanser, M., Rheinbach, O., Uran, M.: Nonlinear FETI-DP and BDDC methods: a unified framework and parallel results. SIAM J. Sci. Comput. 39(6), C417–C451 (2017)

Klawonn, A., Widlund, O.: FETI and Neumann-Neumann iterative substructuring methods: connections and new results. Communications on Pure and Applied Mathematics: A Journal Issued by the Courant Institute of Mathematical Sciences 54(1), 57–90 (2001)

Lanzkron, P.J., Rose, D.J., Wilkes, J.T.: An analysis of approximate nonlinear elimination. SIAM J. Sci. Comput. 17(2), 538–559 (1996)

Lions, P.L.: On the Schwarz Alternating Method. I. In: First International Symposium on Domain Decomposition Methods for Partial Differential Equations, vol. 1, p 42 (1988)

Lui, S.H.: On Schwarz alternating methods for nonlinear elliptic PDEs. SIAM J. Sci. Comput. 21(4), 1506–1523 (1999)

Lui, S.H.: On monotone iteration and Schwarz methods for nonlinear parabolic PDEs. J. Comput. Appl. Math. 161(2), 449–468 (2003)

Luo, L., Cai, X.C., Yan, Z., Xu, L., Keyes, D.E.: A multilayer nonlinear elimination preconditioned inexact Newton method for steady-state incompressible flow problems in three dimensions. SIAM J. Sci. Comput. 42(6), B1404–B1428 (2020)

Mandel, J., Brezina, M.: Balancing domain decomposition for problems with large jumps in coefficients. Math. Comput. 65(216), 1387–1401 (1996)

Przemieniecki, J.S.: Matrix structural analysis of substructures. AIAA J. 1(1), 138–147 (1963)

Quarteroni, A., Valli, A.: Domain decomposition methods for partial differential equations. Numerical mathematics and scientific computation. Oxford Science Publications, Oxford (1999)

Saad, Y.: Iterative methods for sparse linear systems. SIAM (2003)

Toselli, A., Widlund, O.: Domain Decomposition Methods: Algorithms and Theory Series in Computational Mathematics, vol. 34. Springer, New York (2005)

Trottenberg, U., Oosterlee, C., Schuller, A.: Multigrid. Elsevier science (2000)

Acknowledgements

The last author acknowledges Gabriele Ciaramella for several insightful discussions on domain decomposition methods.

Funding

Open access funding provided by EPFL Lausanne. The third author received financial support from the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) Project-ID 258734477-SFB 1173.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chaouqui, F., Gander, M.J., Kumbhar, P.M. et al. Linear and nonlinear substructured Restricted Additive Schwarz iterations and preconditioning. Numer Algor 91, 81–107 (2022). https://doi.org/10.1007/s11075-022-01255-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-022-01255-5