Abstract

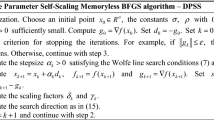

The memory-less SR1 and the memory-less BFGS methods are presented together with their numerical performances for solving a set of 800 unconstrained optimization problems with the number of variables in the range [1000, 10,000]. By memory-less quasi-Newton methods, we understand the quasi-Newton methods which are initialized by the unity matrix at every iteration. In these algorithms, the stepsize is computed by the Wolfe line search conditions. The convergence of the memory-less SR1 method is proved under the classical assumptions. Comparison between the memory-less SR1 and the memory-less BFGS method shows that memory-less BFGS is more efficient and more robust than the memory-less SR1. Comparison between memory-less SR1 and BFGS from CONMIN in implementation of Shanno and Phua shows that memory-less SR1 method is more efficient and more robust than BFGS method from CONMIN, one of the best implementation of BFGS. Additionally, comparison of memory-less SR1 and memory-less BFGS versus steepest descent shows that both these memory-less algorithms are more efficient and more robust. Performances of these algorithms for solving five applications from MINPACK-2 collection, each of them with 40,000 variables, are also presented. For solving these applications, the memory-less BFGS is more efficient than the memory-less SR1. It seems that the accuracy of the Hessian approximations along the iterations in quasi-Newton methods is not as crucial in these methods as it is believed.

Similar content being viewed by others

References

Wolfe, P.: Convergence conditions for ascent methods. SIAM Rev. 11, 226–235 (1969)

Wolfe, P.: Convergence conditions for ascent methods. II: Some corrections. SIAM Review. 13, 185–188 (1971)

Broyden, C.G.: Quasi-newton methods and their applications to function minimization. Math. Comput. 21(99), 368–381 (1967)

Davidon, W.C.: Variable metric method for minimization. (Research and Development Report ANL-5990. Argonne National Laboratories.) (1959)

Fiacco, A.V., McCormick, GP.: Nonlinear programming: sequential unconstrained minimization techniques. Research Analysis Corporation, McLean Virginia. Republished in 1990 by SIAM, Philadelphia (1968)

Davidon, W.C.: Variable metric method for minimization. SIAM J. Optim. 1(1), 1–17 (1991)

Fletcher, R., Powell, M.J.D.: A Rapidly Convergent Descent Method for Minimization, Computer Journal, 163–168 (1963)

Powell, M.J.D.: A new algorithm for unconstrained optimization. In: Rosen, J.B., Mangasarian, O.L., Ritter, K. (eds.) Nonlinear Programming, pp. 31–66. Academic Press, New York (1970)

Broyden, C.G.: The convergence of a class of double-rank minimization algorithms. I. General considerations. Journal of the Institute of Mathematics and Its Applications. 6, 76–90 (1970)

Fletcher, R.: A new approach to variable metric algorithms. Comput. J. 13, 317–322 (1970)

Goldfarb, D.: A family of variable metric method derived by variation mean. Math. Comput. 23, 23–26 (1970)

Shanno, D.F.: Conditioning of quasi-Newton methods for function minimization. Math. Comput. 24, 647–656 (1970)

Huang, H.Y.: Unified approach to quadratically convergent algorithms for functions minimization. J. Optim. Theory Appl. 5(6), 405–423 (1970)

Andrei, N.: Accelerated adaptive Perry conjugate gradient algorithms based on the selfscaling memoryless BFGS update. J. Comput. Appl. Math. 325, 149–164 (2017)

Babaie-Kafaki, S.: A modified scaling parameter for the memory-less BFGS updating formula. Numerical Algorithms. 72, 425–433 (2016)

Leong, W.J., Hassan, M.A.: Scaled memory-less symmetric rank one method for large-scale optimization. Appl. Math. Comput. 218, 413–418 (2011)

Modarres, F., Hassan, M.A., Leong, W.J.: Memory-less modified symmetric rank one method for large-scale unconstrained optimization. Am. J. Appl. Sci. 6, 2054–2059 (2009)

Moyi, A.U., Leong, W.J.: A sufficient descent three-term conjugate gradient method via symmetric rank-one update for large-scale optimization. Optimization. 65, 121–143 (2016)

Nakayama, S., Narushima, Y., Yabe, H.: A memoryless symmetric rank-one method with sufficient descent property for unconstrained optimization. J. Oper. Res. Soc. Jpn. 61, 53–70 (2018)

Nakayama, S., Narushima, Y., Yabe, H.: Memory-less quasi-Newton methods based on spectral-scaling Broyden family for unconstrained optimization. Journal of Industrial and Management Optimization. 15, 1773–1793 (2019)

Yao, S., Ning, L.: An adaptive three-term conjugate gradient method based on selfscaling memoryless BFGS matrix. J. Comput. Appl. Math. 332, 72–85 (2018)

Shanno, D.F.: CONMIN – a Fortran subroutine for minimizing an unconstrained nonlinear scalar valued function of a vector variable x either by the BFGS variable metric algorithm or by a Beale restarted conjugate gradient algorithm. Private communication, October. 17, (1983)

Shanno, D.F., Phua, K.H.: Algorithm 500. Minimization of unconstrained multivariable functions. ACM Transactions on Mathematical Software. 2, 87–94 (1976)

Averick, B.M., Carter, R.G., Moré, J.J., Xue, G.L.: The MINPACK-2 Test Problem Collection, Mathematics and Computer Science Division, Argonne National Laboratory, Preprint MCS-P153–0692 (1992)

Conn, A.R., Gould, N.I.M., Toint, P.L.: Convergence of quasi-newton matrices generated by the symmetric rank one update. Math. Program. 50(1–3), 177–195 (1991)

Khalfan, H.F., Byrd, R.H., Schnabel, R.B.: A theoretical and experimental study of the symmetric rank-one update. SIAM J. Optimization. 3(1), 1–24 (1993)

Kelley, C.T., Sachs, E.W.: Local convergence of the symmetric rank one iteration. Comput. Optim. Appl. 9, 43–63 (1998)

Benson, H.Y., Shanno, D.F.: Cubic regularization in symmetric rank-1 quasi-Newton methods. Math. Progr. Comput. 10, 457–486 (2018)

Chen, H., Lam, W.H., Chan, S.C.: On the convergence analysis of cubic regularized symmetric rank-1 quasi-Newton method and the incremental version in the application of large-scale problems. IEEE Access. 7, 114042–114059 (2019)

Dai, Y.H., Liao, L.Z.: New conjugate conditions and related nonlinear conjugate gradient methods. Applied Mathematics & Optimization. 43, 87–101 (2001)

Nocedal, J.: Theory of algorithms for unconstrained optimization. Acta Numerica. 1, 199–242 (1992)

Nocedal, J., Wright, S.J.: Numerical optimization. In: Springer Series in Operations Research. Springer Science+Business Media, New York, Second edition (2006)

Shanno, D.F.: Conjugate gradient methods with inexact searches. Math. Oper. Res. 3, 244–256 (1978a)

Shanno, D.F.: On the convergence of a new conjugate gradient algorithm. SIAM J. Numer. Anal. 15, 1247–1257 (1978b)

Andrei, N.: An acceleration of gradient descent algorithm with backtracking for unconstrained optimization. Numerical Algorithms. 42(1), 63–73 (2006)

Andrei, N.: Acceleration of conjugate gradient algorithms for unconstrained optimization. Appl. Math. Comput. 213(2), 361–369 (2009)

Andrei, N.: Nonlinear conjugate gradient methods for unconstrained optimization. Springer Optimization and Its Applications 158 (2020)

Bongartz, I., Conn, A.R., Gould, N.I.M., Toint, P.L.: CUTE: constrained and unconstrained testing environments. ACM Trans. Math. Softw. 21, 123–160 (1995)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91, 201–213 (2002)

Glowinski, R.: Numerical methods for nonlinear variational problems, Springer-Verlag, Berlin (1984)

Cimatti, G.: On a problem of the theory of lubrication governed by a variational inequality. Appl. Math. Optim. 3, 227–242 (1977)

Goodman, J., Kohn, R., Reyna, L.: Numerical study of a relaxed variational problem from optimal design. Comput. Methods Appl. Mech. Eng. 57, 107–127 (1986)

Aris, R.: The mathematical theory of diffusion and reaction in permeable catalysts. Oxford. (1975)

Bebernes, J., Eberly, D.: Mahematical problems from combustion theory, in: Applied Mathematical Sciences, vol. 83, Springer-Verlag (1989)

Nitsche, J.C.C.: Lectures on minimal surfaces, Vol. 1, Cambridge University Press (1989)

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Andrei, N. A note on memory-less SR1 and memory-less BFGS methods for large-scale unconstrained optimization. Numer Algor 90, 223–240 (2022). https://doi.org/10.1007/s11075-021-01185-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-021-01185-8