Abstract

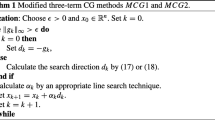

We propose a modified structured secant relation that gives a more accurate approximation of the second-order curvature information of the least squares objective function. Then, using this relation and an approach introduced by Andrei, we propose three scaled nonlinear conjugate gradient methods for nonlinear least squares problems. An attractive feature of one of the proposed methods is that it satisfies the sufficient descent condition regardless of the line search and of the convexity of the objective function. We establish that all three proposed algorithms are globally convergent: one under the assumption that the Jacobian matrix has full column rank on the level set, and the other two without this assumption. Numerical experiments on a collection of zero-residual and nonzero-residual test problems, compared using Dolan–Moré performance profiles, show that the advantage of the proposed algorithms is more pronounced on nonzero-residual and on large problems.
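The abstract does not state the modified structured secant relation or the three search directions, so the following is only a minimal NumPy sketch of the ingredients it names, under stated assumptions. It combines a scaled memoryless BFGS direction in the spirit of Andrei's approach (spectral scaling `theta = s's / y_hat's`) with the classical structured secant vector `y_hat = J_{k+1}^T J_{k+1} s + (J_{k+1} - J_k)^T r_{k+1}` of Dennis, Gay and Welsch, not the authors' modified relation. The Rosenbrock residual, the Armijo backtracking line search, the restart threshold and the function names `residual`, `jacobian`, `objective`, `scaled_direction` and `solve` are hypothetical choices made for illustration.

```python
import numpy as np


def residual(x):
    # Hypothetical test problem (assumption): Rosenbrock written as least squares,
    # f(x) = 0.5 * ||r(x)||^2 with r(x) = [10 (x2 - x1^2), 1 - x1].
    return np.array([10.0 * (x[1] - x[0] ** 2), 1.0 - x[0]])


def jacobian(x):
    # Jacobian of residual(x).
    return np.array([[-20.0 * x[0], 10.0], [-1.0, 0.0]])


def objective(x):
    r = residual(x)
    return 0.5 * r @ r


def scaled_direction(g, s, y_hat):
    """Return d = -H g, where H is the BFGS update of theta * I built from
    the pair (s, y_hat), with spectral scaling theta = s's / y_hat's.
    Requires y_hat's > 0, which makes H positive definite."""
    ys = y_hat @ s
    theta = (s @ s) / ys
    gs, gy, yy = g @ s, g @ y_hat, y_hat @ y_hat
    return (-theta * g
            + theta * (gs * y_hat + gy * s) / ys
            - (1.0 + theta * yy / ys) * (gs / ys) * s)


def solve(x0, max_iter=500, tol=1e-8):
    x = np.asarray(x0, dtype=float)
    r, J = residual(x), jacobian(x)
    g = J.T @ r  # gradient of f = 0.5 ||r||^2
    d = -g
    for _ in range(max_iter):
        if np.linalg.norm(g) < tol:
            break
        # Armijo backtracking (assumption); d is a descent direction here.
        f, gd, alpha = 0.5 * r @ r, g @ d, 1.0
        while objective(x + alpha * d) > f + 1e-4 * alpha * gd and alpha > 1e-12:
            alpha *= 0.5
        s = alpha * d
        x_new = x + s
        r_new, J_new = residual(x_new), jacobian(x_new)
        g_new = J_new.T @ r_new
        # Classical structured secant vector, not the paper's modified one:
        # y_hat = J_{k+1}^T J_{k+1} s + (J_{k+1} - J_k)^T r_{k+1}.
        y_hat = J_new.T @ (J_new @ s) + (J_new - J).T @ r_new
        if y_hat @ s > 1e-12 * (s @ s):
            d = scaled_direction(g_new, s, y_hat)
        else:
            d = -g_new  # restart with steepest descent
        x, r, J, g = x_new, r_new, J_new, g_new
    return x
```

For example, `solve(np.array([-1.2, 1.0]))` runs the sketch from the standard Rosenbrock starting point. Whenever `y_hat @ s > 0`, the implicit matrix `H` is positive definite, so `scaled_direction` returns a descent direction; otherwise the sketch restarts with `-g`. This plain descent guarantee is weaker than the line-search-independent sufficient descent property claimed for one of the proposed methods.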


References

Amini, K., Ghorbani Rizi, A.: A new structured quasi-Newton algorithm using partial information on Hessian. J. Comput. Appl. Math. 234, 805–811 (2010)

Al-Baali, M., Fletcher, R.: Variational methods for non-linear least squares. J. Oper. Res. Soc. 36, 405–421 (1985)

Andrei, N.: Scaled memoryless BFGS preconditioned conjugate gradient algorithm for unconstrained optimization. Optim. Methods Softw. 22, 561–571 (2007)

Andrei, N.: Accelerated scaled memoryless BFGS preconditioned conjugate gradient algorithm for unconstrained optimization. Eur. J. Oper. Res. 204, 410–420 (2010)

Babaie-Kafaki, S.: A note on the global convergence theorem of the scaled conjugate gradient algorithms proposed by Andrei. Comput. Optim. Appl. 52, 409–414 (2012)

Babaie-Kafaki, S., Ghanbari, R., Mahdavi-Amiri, N.: Two new conjugate gradient methods based on modified secant relations. J. Comput. Appl. Math. 234, 1374–1386 (2010)

Barzilai, J., Borwein, J.M.: Two-point step size gradient methods. IMA J. Numer. Anal. 8, 141–148 (1988)

Birgin, E., Martínez, J.M.: A spectral conjugate gradient method for unconstrained optimization. Appl. Math. Optim. 43, 117–128 (2001)

Broyden, C.G.: A class of methods for solving nonlinear simultaneous equations. Math. Comput. 19, 577–593 (1965)

Chen, L., Deng, N., Zhang, J.: A modified quasi-Newton method for structured optimization with partial information on the Hessian. Comput. Optim. Appl. 35, 5–18 (2006)

Dai, Y.H., Liao, L.Z.: New conjugacy conditions and related nonlinear conjugate gradient methods. Appl. Math. Optim. 43, 87–101 (2001)

Dennis, J.E., Gay, D.M., Welsch, R.E.: An adaptive nonlinear least-squares algorithm. ACM Trans. Math. Softw. 7, 348–368 (1981)

Dennis, J.E., Martínez, H.J., Tapia, R.A.: Convergence theory for the structured BFGS secant method with an application to nonlinear least squares. J. Optim. Theory Appl. 61, 161–178 (1989)

Dennis, J.E., Moré, J.J.: A characterization of superlinear convergence and its application to quasi-Newton methods. Math. Comput. 28, 549–560 (1974)

Dennis, J.E., Moré, J.J.: Quasi-Newton methods, motivation and theory. SIAM Rev. 19, 46–89 (1977)

Dennis, J.E., Walker, H.F.: Convergence theorems for least-change secant update methods. SIAM J. Numer. Anal. 18, 949–987 (1981)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91, 201–213 (2002)

Engels, J.R., Martínez, H.J.: Local and superlinear convergence for partially known quasi-Newton methods. SIAM J. Optim. 1, 42–56 (1991)

Fan, J., Yuan, Y.: On the quadratic convergence of the Levenberg-Marquardt method without nonsingularity assumption. Computing 74, 23–39 (2005)

Fletcher, R.: On the Barzilai-Borwein method. In: Optimization and Control with Applications, pp. 235–256. Springer, Boston (2005)

Fletcher, R., Reeves, C.M.: Function minimization by conjugate gradients. Comput. J. 7, 149–154 (1964)

Ford, J.A., Narushima, Y., Yabe, H.: Multi-step nonlinear conjugate gradient methods for unconstrained minimization. Comput. Optim. Appl. 40, 191–216 (2008)

Hager, W.W., Zhang, H.: A survey of nonlinear conjugate gradient methods. Pac. J. Optim. 2, 35–58 (2006)

Hager, W.W., Zhang, H.: A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 16, 170–192 (2005)

Hager, W.W., Zhang, H.: Algorithm 851: CG_DESCENT, a conjugate gradient method with guaranteed descent. ACM Trans. Math. Softw. 32, 113–137 (2006)

Huschens, J.: On the use of product structure in secant methods for nonlinear least squares problems. SIAM J. Optim. 4, 108–129 (1994)

Kobayashi, M., Narushima, Y., Yabe, H.: Nonlinear conjugate gradient methods with structured secant condition for nonlinear least squares problems. J. Comput. Appl. Math. 234, 375–397 (2010)

Li, G., Tang, C., Wei, Z.: New conjugacy condition and related new conjugate gradient methods for unconstrained optimization. J. Comput. Appl. Math. 202, 523–539 (2007)

Lukšan, L., Vlček, J.: Sparse and partially separable test problems for unconstrained and equality constrained optimization. Technical Report No. 767, Institute of Computer Science, Academy of Sciences of the Czech Republic (1999)

Nocedal, J., Wright, S.J.: Numerical Optimization. Springer, New York (2006)

Polak, E., Ribière, G.: Note sur la convergence de méthodes de directions conjuguées. Rev. Française Informat. Recherche Opérationnelle 16, 35–43 (1969)

Polyak, B.: The conjugate gradient method in extreme problems. USSR Comput. Math. Math. Phys. 9, 94–112 (1969)

Raydan, M.: The Barzilai and Borwein gradient method for the large scale unconstrained minimization problem. SIAM J. Optim. 7, 26–33 (1997)

Shanno, D.F.: Conjugate gradient methods with inexact searches. Math. Oper. Res. 3, 244–256 (1978)

Shanno, D.F., Phua, K.H.: Matrix conditioning and nonlinear optimization. Math. Program. 14, 149–160 (1978)

Shanno, D.F., Phua, K.H.: Algorithm 500: Minimization of unconstrained multivariate functions. ACM Trans. Math. Softw. 2, 87–94 (1976)

Sugiki, K., Narushima, Y., Yabe, H.: Globally convergent three-term conjugate gradient methods that use secant conditions and generate descent search directions for unconstrained optimization. J. Optim. Theory Appl. 153, 733–757 (2012)

Wei, Z., Li, G., Qi, L.: New quasi-Newton methods for unconstrained optimization problems. Appl. Math. Comput. 175, 1156–1188 (2006)

Yabe, H., Takano, M.: Global convergence properties of nonlinear conjugate gradient methods with modified secant relation. Comput. Optim. Appl. 28, 203–225 (2004)

Yabe, H., Yamaki, N.: Local and superlinear convergence of structured quasi-Newton methods for nonlinear optimization. J. Oper. Res. Soc. Jpn. 39, 541–557 (1996)

Yuan, G., Wei, Z.: Convergence analysis of a modified BFGS method on convex minimizations. Comput. Optim. Appl. 47, 237–255 (2010)

Zhang, J.Z., Deng, N.Y., Chen, L.H.: New quasi-Newton equation and related methods for unconstrained optimization. J. Optim. Theory Appl. 102, 147–167 (1999)

Zhang, J.Z., Xu, C.X.: Properties and numerical performance of quasi-Newton methods with modified quasi-Newton equation. J. Comput. Appl. Math. 137, 269–278 (2001)

Zhou, W.: On the convergence of the modified Levenberg-Marquardt method with a nonmonotone second order Armijo type line search. J. Comput. Appl. Math. 239, 152–161 (2013)

Acknowledgements

The first author thanks Yazd University and the second author thanks Sharif University of Technology for supporting this work.

Cite this article

Dehghani, R., Mahdavi-Amiri, N. Scaled nonlinear conjugate gradient methods for nonlinear least squares problems. Numer Algor 82, 1–20 (2019). https://doi.org/10.1007/s11075-018-0591-2

Keywords

- Nonlinear least squares

- Scaled nonlinear conjugate gradient

- Structured secant relation

- Global convergence