Abstract

We present a fast method for nonlinear data-driven model reduction of dynamical systems onto their slowest nonresonant spectral submanifolds (SSMs). While the recently proposed reduced-order modeling method SSMLearn uses implicit optimization to fit a spectral submanifold to data and reduce the dynamics to a normal form, here, we reformulate these tasks as explicit problems under certain simplifying assumptions. In addition, we provide a novel method for timelag selection when delay-embedding signals from multimodal systems. We show that our alternative approach to data-driven SSM construction yields accurate and sparse rigorous models for essentially nonlinear (or non-linearizable) dynamics on both numerical and experimental datasets. Aside from a major reduction in complexity, our new method allows an increase in the training data dimensionality by several orders of magnitude. This promises to extend data-driven, SSM-based modeling to problems with hundreds of thousands of degrees of freedom.



1 Introduction

Nonlinear dynamical systems are omnipresent in nature and engineering. Examples include beam and plate buckling [1], turbulent fluid flows [24], vibrations in jointed structures [33], sloshing in fluid tanks [54], and even traffic jams [40]. As computational resources have grown, so has the interest in data-driven methods, which take input data from experiments or simulations and return a reduced model of the underlying system dynamics. To date, however, no rigorous method has been accepted as a standard for nonlinear system identification and reduced modeling.

Model simplicity (or parsimony) is vital for interpretability, control, and response prediction for mechanical devices [31]. This has motivated reduction methods based partially or fully on linearization of the underlying dynamics, such as the proper orthogonal decomposition [3, 38] and the dynamic mode decomposition (DMD) [48, 49]. Specifically, DMD obtains the best fit of a linear dynamical system to the data in an equation-free manner [32], often utilizing delay embedding to secure sufficiently many observables [17].

Many nonlinear mechanical systems, however, exhibit phenomena that cannot occur in linear systems, such as coexisting isolated steady states, and therefore cannot be captured by linear models [41]. We refer to such phenomena as non-linearizable. Machine learning methods can potentially capture such phenomena [7, 14, 23, 39], but tend to provide models that lack interpretability and perform poorly outside their training range [37].

Here, we propose using spectral submanifolds (SSMs) to obtain sparse models of non-linearizable phenomena. An SSM is the smoothest nonlinear continuation of a nonresonant spectral subspace emanating from a steady state, both in autonomous systems and in systems with periodic or quasiperiodic forcing. SSMs are unique, attracting, and persistent invariant manifolds in the phase space, which lend themselves well to model reduction [22].

A concept related to SSMs is a nonlinear normal mode (NNM), which was originally defined as a periodic motion of a nonlinear system [30, 45, 55]. A later definition of NNMs [50] targets invariant manifolds tangent to spectral subspaces of the linearized system without focusing on the unique, smoothest such manifold. In the sense of this latter NNM definition, SSMs are special NNMs with precisely known smoothness and uniqueness properties. They can be constructed over an arbitrary number of linearized modes, which may also be in resonance with each other. Recently, it has been proven that two-dimensional SSMs converge to conservative NNMs composed of periodic orbits when the damping in the system tends to zero [36]. Closely linked to SSMs, invariant spectral foliations are the basis of another rigorous approach for extending linear modal analysis to nonlinear systems [51].

Spectral submanifolds can be rigorously constructed for mechanical systems defined by differential equations [43]. Given a Taylor expansion of a set of autonomous ODEs, the coefficients of an SSM can be efficiently computed up to any order and dimension [26]. This methodology has been used for model reduction of systems with hundreds of thousands of degrees of freedom, accurately predicting responses to small harmonic forcing [25, 27, 42], as well as the bifurcations of those responses [34, 35].

These developments have motivated applications of SSM theory to locate reduced-order models from data. As a first step, Ref. [52] fitted a multivariate polynomial to the sampling map and computed SSMs on the resulting Taylor expanded dynamics. This yielded good reconstructions of the backbone curves in a clamped-clamped beam experiment. Typically, however, due to the rapid growth in the number of terms, a polynomial expansion of the flow map in the full observable space is sensitive to overfitting and quickly becomes intractable in higher-dimensional systems.

Recently, another data-driven SSM identification method was proposed to alleviate the overfitting of the sampling map by separating the model reduction into two steps: manifold identification and reduced dynamics fitting [10]. As a result, the polynomial fit of the dynamics takes place only on the SSM, so the number of terms no longer grows with the data dimensionality. First, the data is embedded in a user-defined observable space. Then a polynomial representation of the SSM is fitted to the data, and the data dimensionality is reduced by projection onto the tangent space of the SSM. Finally, nonlinear optimization is used to compute a transformation from the reduced coordinates to a normal form that maximizes sparsity while retaining essential nonlinearities [21].

While data-driven SSM-based model reduction has been successfully applied to both numerical and experimental data for fluid problems, structural dynamics, and fluid-structure interaction [10, 11, 29], the required implicit optimization can be computationally demanding for high-dimensional systems. This paper introduces an alternative method that explicitly computes an SSM followed by a classic normal form transformation in the reduced coordinates. Our key assumption is that the tangent space of the SSM at a fixed point can be found by singular value decomposition (SVD). This enables a major simplification and speedup at the expense of giving up the more general applicability of the method in Ref. [10]. As a consequence, our proposed method is faster than previous data-driven SSM-based model reduction, both to implement and to execute. We do not undertake any formal speed comparison with other methods here.

After reduction to the computed SSM and numerical fitting of the reduced dynamics, we compute the normal form of the reduced dynamics analytically. This is in contrast to Ref. [10], which fits the normal form directly to data simultaneously at all required orders. The aims of our new approach are accessibility to practitioners, major speedup for rapid prototyping, analysis of higher-dimensional observable spaces, and insight into the differences between numerically and analytically computed normal forms.

The structure of this paper is as follows. First, Sect. 2 gives a brief introduction to SSM theory, summarizes SSM-based data-driven modeling, and details our new method. In Sect. 3, we discuss how to select an observable space using delay embedding and use SSM-reduced models for forced response prediction. In Sect. 4, we apply our newly derived SSM-based model reduction method to experiments from a sloshing tank, simulations of a von Kármán beam, and experiments on an internally resonant beam. Finally, in Sect. 5, we draw conclusions from the examples, suggest additional applications, and outline possible further enhancements to dynamics-based machine learning.

2 Model order reduction on spectral submanifolds

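We consider nonlinear autonomous dynamical systems of class \({\mathscr {C}}^{l}\), \(l\in \{\mathbb {N}^+,\ \infty ,\ a\}\), with a denoting analyticity, in the form

\[\dot{\varvec{x}} = \varvec{A}\varvec{x} + \varvec{f}(\varvec{x}), \qquad \varvec{x}\in \mathbb {R}^p, \qquad \varvec{f}(\varvec{x}) = \mathscr {O}\big (|\varvec{x}|^2\big ). \tag{1}\]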

We assume \(\varvec{A}\in \mathbb {R}^{p\times p}\) is diagonalizable and the real parts of its eigenvalues are either all strictly negative or all strictly positive. Note that this condition requires the origin to be a hyperbolic fixed point, which excludes rigid body modes from the analysis. We denote by \(\mathscr {V}_d{}\) the slowest d-dimensional spectral subspace of \(\varvec{A}\), i.e., the span of the eigenvectors corresponding to the d eigenvalues with real part closest to zero. When all eigenvalues have negative real parts, the dynamics of the linearized system will converge to \(\mathscr {V}_d{}\) in forward time.
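To make the notion of the slowest spectral subspace concrete, the minimal NumPy sketch below (our own illustration, not part of SSMTool or fastSSM) selects the d eigenvalues of \(\varvec{A}\) with real parts closest to zero and returns a real orthonormal basis of \(\mathscr {V}_d\). It assumes, as above, that \(\varvec{A}\) is diagonalizable, and additionally that d does not split a complex-conjugate pair; the function name is hypothetical.

```python
import numpy as np

def slowest_spectral_subspace(A, d):
    """Return the d slowest eigenvalues of A and a real orthonormal basis of V_d.

    'Slowest' means smallest |Re(lambda)|. Assumes A is diagonalizable and
    that d does not split a complex-conjugate eigenvalue pair.
    """
    eigvals, eigvecs = np.linalg.eig(A)
    idx = np.argsort(np.abs(eigvals.real))[:d]
    vecs = eigvecs[:, idx]
    # Real and imaginary parts of the selected eigenvectors span the same
    # real d-dimensional subspace; an SVD extracts an orthonormal basis of it.
    U, _, _ = np.linalg.svd(np.hstack([vecs.real, vecs.imag]), full_matrices=False)
    return eigvals[idx], U[:, :d]

# Two uncoupled damped oscillators, state (x1, x2, v1, v2); mode 1 is slowest.
K = np.diag([1.0, 9.0])
C = np.diag([0.02, 0.3])
A = np.block([[np.zeros((2, 2)), np.eye(2)], [-K, -C]])
lam, V = slowest_spectral_subspace(A, 2)
print(lam.real)  # both approximately -0.01
```

Here \(\mathscr {V}_2\) is the (x1, v1) plane of the first oscillator, whose eigenvalues have real part -0.01 versus -0.15 for the second one.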

If the eigenvalues corresponding to \(\mathscr {V}_d{}\) are non-resonant with the remaining \(p-d\) eigenvalues of \(\varvec{A}\), \(\mathscr {V}_d\) admits a unique smoothest, invariant, nonlinear continuation \(\mathscr {M}\) under addition of the higher-order terms [9]. We refer to \(\mathscr {M}\) as a spectral submanifold (SSM) [11, 22]. In the case of a resonance between \(\mathscr {V}_d\) and the rest of the spectrum of \(\varvec{A}\), we can include the resonant modal subspace in \(\mathscr {V}_d\) and thus obtain a higher-dimensional SSM. If all eigenvalues of \(\varvec{A}\) have negative real parts, \(\mathscr {M}\) will be an attracting slow manifold for system (1), just as \(\mathscr {V}_d\) is for the linear part of (1).

A numerical package, SSMTool, has been developed for the computation of SSMs from arbitrary finite-dimensional nonlinear systems [25, 26]. More recently, a data-driven method was developed to compute SSMs purely from observables of the dynamical system [10, 11]. In the following, we first review the literature on data-driven reduced-order modeling on SSMs, and then propose a simplified approach that is applicable under further assumptions.

2.1 Learning spectral submanifolds from data

We seek a parametrization of \(\mathscr {M}\) from trajectories in an observable space, which may be the full phase space or a suitable embedding, as described in Sect. 3.1. The data-driven methods we will put forward consist of two steps: manifold fitting and normal form computation.

We first outline the method recently proposed by Ref. [10], as implemented in the algorithm SSMLearn [12]. In that approach, one computes a parametrization of the SSM as a multivariate polynomial

with the reduced coordinates \(\varvec{\xi }= \varvec{V}^\top \varvec{y}\) and the matrix \(\varvec{V} \in \mathbb {R}^{p\times d}\) containing d orthonormal vectors spanning the spectral subspace \(\mathscr {V}_d{}\). Here, \(d_{i}\) denotes the number of d-variate monomials at order i. Throughout this paper, the superscript \((\cdot )^{l:r}\) denotes a vector of all monomials at orders l through r. For example, if \(\varvec{x} = [x_1, x_2]^\top \), then \(\varvec{x}^{1:2} = [x_1,\ x_2,\ x_1^2,\ x_1x_2,\ x_2^2]^\top \).

Minimization of the distance of the parametrized SSM from the training data points \(\varvec{y}_j\) yields the optimal coefficient matrix \(\varvec{M}^\star \in \mathbb {R}^{p\times d_{1:m}}\), with \(d_{1:m}\) denoting the number of d-variate monomials from orders 1 up to m, as

subject to the constraints

Next, one computes the reduced dynamics \(\dot{\varvec{\xi }}=\varvec{R}\varvec{\xi }^{1:r}\) on the SSM in polynomial form, with coefficients in the matrix \(\varvec{R}\in \mathbb {R}^{d\times d_{1:r}}\), by minimizing

Finally, one computes the normal form of the reduced dynamics of \(\varvec{\xi }\) on the SSM [21]. This amounts to seeking a nonlinear transformation \(\varvec{t}^{-1}: \varvec{\xi }\mapsto \varvec{z}\) to new coordinates \(\varvec{z} \in \mathbb {C}^d\), that reduces the number of coefficients in \(\varvec{R}\) to a minimum set \(\varvec{N} \in \mathbb {C}^{d\times d_{1:n}}\). The transformation and normalized dynamics are given by

where \(\varvec{H}\in \mathbb {C}^{d\times d_{1:n}}\), and \(\varvec{W} \in \mathbb {C}^{d\times d}\) is the matrix of eigenvectors of the linear part \(\varvec{R}_1 = \varvec{W} \varvec{\Lambda }\varvec{W}^{-1}\) of the reduced dynamics. \(\varvec{N}\) is potentially a very sparse matrix, simplifying the dynamics to \(\varvec{n}(\varvec{z})\). The locations of nonzero elements in \(\varvec{N}\) are determined by any approximate inner resonances between the eigenvalues in \(\varvec{\Lambda }\) [10]. An example is given in (17).

The transformation to the normal form on the SSM is computed by minimization of the conjugacy error

Data-driven model reduction to SSMs has been successfully applied to both numerical and experimental datasets [10, 11, 29]. Yet, for very high-dimensional systems, the implicit optimization problems (3) and (7) may be intractable. Further, their solution would benefit from a simplified approach. Motivated by these challenges, in the remainder of this paper we discuss alternative, explicit formulations of the SSM geometry and its reduced normal form, which are possible under additional assumptions detailed below.

2.2 Fast spectral submanifold identification

In order to turn the SSM identification into an explicit problem, we assume that the tangent space of the SSM at the origin can be approximately obtained by a singular value decomposition (SVD) of the data. This is typically satisfied sufficiently close to an equilibrium point. Moreover, since the main variance in the data tends to occur along the tangent space of the SSM, the span of the first d principal directions given by SVD produces a good candidate for this subspace. A third motivation for our main assumption is that the image of \(\mathscr {M}\) in a delay-embedded space tends to be flat, even if \(\mathscr {M}\) is strongly curved in the full phase space [10]. An overview of the fast SSM construction method exploiting this simplification is shown in Fig. 1.

Our simplified data-driven SSM construction consists of two main parts: (I) fitting an SSM with SVD and polynomial regression, and (II) computing a normal form on the manifold by analytical solution of the cohomological equations. The obtained reduced model can then be used for (III) prediction of the autonomous system evolution or of the response of the forced system.

Specifically, we approximate the tangent space \(\mathscr {V}_d{}\) by d-dimensional truncated SVD on the snapshot matrix \(\varvec{Y} \in \mathbb {R}^{p\times N}\) as

\[\varvec{Y} \approx \varvec{U}_d\, \varvec{S}_d\, \varvec{{{\hat{V}}}}_d^\top , \tag{8}\]

where \(\varvec{U}_d \in \mathbb {R}^{p\times d}\), \(\varvec{S}_d \in \mathbb {R}^{d\times d}\), \(\varvec{{{\hat{V}}}}_d \in \mathbb {R}^{N\times d}\). Defining the tangent space approximation as

\[\varvec{V} = \varvec{U}_d, \tag{9}\]

we can project the trajectories available from data onto this approximate subspace to obtain d reduced coordinates \(\varvec{\xi }\) as

\[\varvec{\xi } = \varvec{V}^\top \varvec{y}. \tag{10}\]

We denote by \(\varvec{\Xi }\in \mathbb {R}^{d\times N}\) the projection of \(\varvec{Y}\) onto \(\varvec{V}\). Numerically, it is beneficial to first normalize each column of \(\varvec{V}\) with the maximum absolute value of the corresponding row in \(\varvec{{{\hat{V}}}}_d\).
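The tangent-space step above amounts to a few lines of linear algebra. The NumPy sketch below is our own illustration rather than the fastSSM script of "Appendix A.1"; it omits the optional column normalization just mentioned and deliberately does not mean-center the data, since the SSM is anchored at the fixed point at the origin.

```python
import numpy as np

def tangent_space_svd(Y, d):
    """Approximate the SSM tangent space from a snapshot matrix Y (p x N).

    Returns V = U_d (p x d, orthonormal columns) and the reduced coordinates
    Xi = V^T Y (d x N). No mean-centering: the SSM emanates from the origin.
    """
    U, S, Vh = np.linalg.svd(Y, full_matrices=False)
    V = U[:, :d]
    Xi = V.T @ Y  # equals diag(S[:d]) @ Vh[:d], i.e. S_d Vhat_d^T
    return V, Xi

# Synthetic check: snapshots lying exactly in a random 2D plane of R^5.
rng = np.random.default_rng(0)
plane = np.linalg.qr(rng.standard_normal((5, 2)))[0]
Y = plane @ rng.standard_normal((2, 200))
V, Xi = tangent_space_svd(Y, 2)
```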

This first step is equivalent to proper orthogonal decomposition [3, 38], but our main interest in this reduced subspace is its use for fitting a nonlinear parametrization of the SSM. The manifold parametrization coefficients \(\varvec{M}\in \mathbb {R}^{p\times d_{1:m}}\) are obtained by polynomial regression:

\[\varvec{M} = \varvec{Y}\big (\varvec{\Xi }^{1:m}\big )^\dagger , \tag{11}\]

with \((\cdot )^\dagger \) denoting the Moore–Penrose pseudo-inverse.
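The regression for \(\varvec{M}\) only requires the monomial vectors \(\varvec{\xi }^{1:m}\) evaluated at every snapshot. A minimal sketch follows; the helper names are hypothetical, and the graded ordering of monomials within each degree is our own choice, which may differ from the ordering used in SSMLearn or SSMTool.

```python
import itertools
import numpy as np

def monomial_exponents(d, lo, hi):
    """Exponents of all d-variate monomials of degree lo..hi, grouped by degree."""
    exps = []
    for deg in range(lo, hi + 1):
        for combo in itertools.combinations_with_replacement(range(d), deg):
            e = [0] * d
            for i in combo:
                e[i] += 1
            exps.append(tuple(e))
    return exps

def monomials(Xi, lo, hi):
    """Evaluate Xi^{lo:hi} column-wise; Xi is d x N, the result is d_{lo:hi} x N."""
    exps = monomial_exponents(Xi.shape[0], lo, hi)
    return np.array([np.prod(Xi ** np.array(e)[:, None], axis=0) for e in exps])

def fit_manifold(Y, Xi, m):
    """Least-squares coefficients M = Y (Xi^{1:m})^+ so that y ≈ M xi^{1:m}."""
    return Y @ np.linalg.pinv(monomials(Xi, 1, m))

# Snapshots on the curved surface y3 = xi1^2 - 0.5 xi1 xi2 in R^3.
rng = np.random.default_rng(1)
Xi = rng.uniform(-0.5, 0.5, size=(2, 100))
Y = np.vstack([Xi[0], Xi[1], Xi[0] ** 2 - 0.5 * Xi[0] * Xi[1]])
M = fit_manifold(Y, Xi, 2)  # columns ordered xi1, xi2, xi1^2, xi1 xi2, xi2^2
```

For a 2D SSM of order 7, as in the sloshing example below, each snapshot contributes \(d_{1:7} = 35\) monomials.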

The reduced dynamics are approximated by an \(\mathscr {O}(r)\) polynomial regression, with the coefficient matrix \(\varvec{R} \in \mathbb {R}^{d\times d_{1:r}}\), as

\[\varvec{R} = \dot{\varvec{\Xi }}\big (\varvec{\Xi }^{1:r}\big )^\dagger , \tag{12}\]

where the columns of \(\dot{\varvec{\Xi }}\) are the time derivatives \(\dot{\varvec{\xi }}\) at the snapshots. These derivatives are computed numerically, and since their accuracy directly affects model accuracy, we use a central finite difference scheme including 4 adjacent points in each time direction [20].
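A central difference with 4 points on each side corresponds to the standard nine-point, eighth-order accurate stencil for the first derivative. The sketch below assumes uniform sampling and simply drops the first and last 4 snapshots instead of using one-sided stencils; the scheme cited as [20] may treat the boundaries differently. The reduced coordinates must be trimmed in the same way before the regression for \(\varvec{R}\).

```python
import numpy as np

# Nine-point central-difference weights (offsets -4..4) for the first
# derivative; the scheme is eighth-order accurate on a uniform grid.
FD_WEIGHTS = np.array([1/280, -4/105, 1/5, -4/5, 0.0, 4/5, -1/5, 4/105, -1/280])

def central_derivative(X, dt):
    """Time derivative of each row of X (d x N) sampled with step dt.

    Returns a d x (N - 8) array for the interior snapshots 4..N-5; the first
    and last 4 snapshots are dropped instead of using one-sided stencils.
    """
    X = np.atleast_2d(X)
    n_out = X.shape[1] - 8
    dX = np.zeros((X.shape[0], n_out))
    for j, w in enumerate(FD_WEIGHTS):
        dX += w * X[:, j:j + n_out]
    return dX / dt

t = np.arange(0.0, 10.0, 0.01)
dX = central_derivative(np.sin(t), 0.01)
# Pair dX with the equally trimmed coordinates, e.g. Xi[:, 4:-4].
```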

Next, diagonalizing the linear part \(\varvec{R}_1 = \varvec{W}\varvec{\Lambda }\varvec{W}^{-1}\), we again apply regression to rewrite \(\varvec{R}\) in the form \(\varvec{G}\in \mathbb {C}^{d\times d_{1:r}}\) with the eigenvalues in the linear part \(\varvec{G}_1=\varvec{\Lambda }\) and modal coordinates \(\varvec{\zeta }= \varvec{W}^{-1}\varvec{\xi }\), such that

\[\dot{\varvec{\zeta }} = \varvec{G}\varvec{\zeta }^{1:r}. \tag{13}\]

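To compute the normal form of the SSM-reduced dynamics (13), we seek a near-identity polynomial transformation \(\varvec{t}: \varvec{z} \mapsto \varvec{\zeta }\) with coefficients \(\varvec{T} \in \mathbb {C}^{d\times d_{1:n}}\) from new coordinates \(\varvec{z} \in \mathbb {C}^d\) such that

\[\varvec{\zeta } = \varvec{t}(\varvec{z}) = \varvec{T}\varvec{z}^{1:n}, \qquad \dot{\varvec{z}} = \varvec{n}(\varvec{z}) = \varvec{N}\varvec{z}^{1:n}. \tag{14}\]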

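We enforce conjugacy between the normal form and reduced dynamics by plugging (14) into (13):

\[\varvec{T}\,\textrm{D}\big (\varvec{z}^{1:n}\big )\,\varvec{N}\varvec{z}^{1:n} = \varvec{G}\big (\varvec{T}\varvec{z}^{1:n}\big )^{1:r}, \tag{15}\]

where \(\textrm{D}\) denotes the Jacobian with respect to \(\varvec{z}\).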

We can compute \(\varvec{T}\) and \(\varvec{N}\) by solving (15) recursively at increasing orders. See Ref. [25] for details on the computations.
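The diagonalization of \(\varvec{R}_1\) and the regression for \(\varvec{G}\) described above can be sketched in NumPy as follows. This is a hypothetical helper for illustration only: the monomials of \(\varvec{\zeta }\) are formed from both complex-conjugate coordinates, and the check uses exactly linear data, so the recovered \(\varvec{G}\) should have \(\varvec{\Lambda }\) as its linear part and vanishing nonlinear coefficients.

```python
import itertools
import numpy as np

def monomials(Z, lo, hi):
    """Rows of Z^{lo:hi}: all products of rows of Z with total degree lo..hi."""
    rows = []
    for deg in range(lo, hi + 1):
        for combo in itertools.combinations_with_replacement(range(Z.shape[0]), deg):
            rows.append(np.prod(Z[list(combo), :], axis=0))
    return np.array(rows)

def modal_regression(R1, Xi, dXi, r):
    """Diagonalize R1 = W Lambda W^{-1}, map data to zeta = W^{-1} xi and
    fit zeta_dot = G zeta^{1:r} by least squares."""
    lam, W = np.linalg.eig(R1)
    W_inv = np.linalg.inv(W)
    Zeta, dZeta = W_inv @ Xi, W_inv @ dXi
    G = dZeta @ np.linalg.pinv(monomials(Zeta, 1, r))
    return lam, W, G

# Linear test data: xi_dot = R1 xi for a weakly damped oscillator.
R1 = np.array([[-0.05, 1.0], [-1.0, -0.05]])
rng = np.random.default_rng(2)
Xi = 0.1 * rng.standard_normal((2, 50))
lam, W, G = modal_regression(R1, Xi, R1 @ Xi, r=3)
```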

The simplest non-trivial normal form arises on a 2D manifold emanating from a spectral subspace corresponding to two complex conjugate eigenvalues \((\lambda ,\bar{\lambda })\) with small real part. We denote the coordinates in the normal form \((z_1,z_2)=(z,\bar{z})\). The cubic normal form, with \(\gamma \in \mathbb {C}\), is

\[\dot{z} = \lambda z + \gamma z^2\bar{z}, \qquad \dot{\bar{z}} = \bar{\lambda }\bar{z} + \bar{\gamma }z\bar{z}^2, \tag{16}\]

for which we obtain

Solving (15) yields

where

and \(G_i\) refers to element i of the top row in \(\varvec{G}\) [52].
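For readers who want to check the cubic coefficient independently, the sketch below uses the textbook second-order near-identity transformation. It is written in our own notation: g_jk denotes the coefficient of \(\zeta ^j\bar{\zeta }^k\) in the first row of \(\varvec{G}\) (its mapping to the indices \(G_i\) depends on the monomial ordering), and \(z^2\bar{z}\) is treated as resonant, consistent with (16). The function name is hypothetical, and the expressions may differ in presentation from those above.

```python
def cubic_normal_form(lam, g20, g11, g02, g21):
    """Cubic coefficient gamma of z_dot = lam z + gamma z^2 conj(z).

    Input dynamics: zeta_dot = lam zeta + g20 zeta^2 + g11 zeta conj(zeta)
    + g02 conj(zeta)^2 + g21 zeta^2 conj(zeta) + (other cubic terms).
    Quadratic terms are removed by the near-identity transformation
    zeta = z + h20 z^2 + h11 z conj(z) + h02 conj(z)^2.
    """
    lam_c = lam.conjugate()
    h20 = g20 / lam                 # h20 (2 lam - lam) = g20
    h11 = g11 / lam_c               # h11 (lam + lam_c - lam) = g11
    h02 = g02 / (2 * lam_c - lam)   # h02 (2 lam_c - lam) = g02
    # z^2 conj(z) terms generated when the quadratic terms are evaluated on
    # the transformation, plus the original cubic coefficient g21
    gamma = (g21 + 2 * g20 * h11 + g11 * (h20 + h11.conjugate())
             + 2 * g02 * h02.conjugate())
    return gamma, (h20, h11, h02)

lam = 2j
g20, g11, g02, g21 = 0.3 + 0.1j, -0.2 + 0.5j, 0.1 - 0.4j, 0.05 + 0j
gamma, (h20, h11, h02) = cubic_normal_form(lam, g20, g11, g02, g21)
```

For \(\lambda = i\omega \), this expression reduces to \(g_{21} + (i/\omega )(g_{20}g_{11} - |g_{11}|^2 - \tfrac{2}{3}|g_{02}|^2)\), the familiar cubic coefficient of the Hopf normal form written without factorial scaling.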

We refer to the implementation of this simplified method for cubic-order, 2D normal form computations as fastSSM. The full script is written out in “Appendix A.1”. In fastSSM, the orders of the reduced dynamics and of the normal form are fixed at \(r=3\) and \(n=3\), while the manifold order m remains a free parameter.

While a cubic-order normal form suffices to model a number of datasets accurately [10, 11], stronger nonlinearities require higher orders. In the following, we refer to the extension of the manifold fitting and normal form computation to arbitrary dimension and order as fastSSM\(^+\). This implementation solves (15) by automatically applying SSMTool to the coefficients of \(\varvec{R}\). The full code is written out in “Appendix A.2”. In principle, we are free to choose any orders m, r, and n, but to avoid overfitting we must limit the manifold order m and the reduced dynamics order r. As an objective function for choosing these parameters, we minimize the mean error of the predictions of the training trajectories, as defined in (32). The normal form order n, in turn, must be large enough that the truncated higher-order terms of the SSM-reduced dynamics remain negligible.

Unlike (3) and (7), all computations in our proposed method are explicit. We therefore expect this approach to be faster than SSMLearn, generally at the cost of larger modeling errors. Note also that in this simplified approach, the normal form on the SSM is computed analytically from a polynomial fit of the data, rather than fitted numerically.

In practice, we must also approximate the inverse of \(\varvec{t}\) to transform initial conditions to the normal form before integration. The simplest and most accurate option is to solve for each initial condition numerically, but a general inverse map can also be computed. In the fastSSM algorithm, we use an explicit 3rd-order polynomial expansion of the inverse of \(\varvec{t}\). In contrast, in the fastSSM\(^+\) algorithm for higher SSM dimensions and normal form orders, we fit a polynomial map by regression on the training data.
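Because \(\varvec{t}\) is near-identity, the per-initial-condition inversion can be as simple as a fixed-point iteration. The sketch below is our own illustration for a 2D normal form whose transformation is truncated at quadratic order, so that the pair \((z,\bar{z})\) can be handled with a single complex number; the coefficients are hypothetical, and the iteration converges only for sufficiently small amplitudes.

```python
def invert_near_identity(zeta, h20, h11, h02, tol=1e-14, max_iter=200):
    """Solve zeta = z + h20 z^2 + h11 z conj(z) + h02 conj(z)^2 for complex z.

    Fixed-point iteration z <- zeta - (nonlinear part of t)(z); converges
    when the nonlinear part is a contraction, i.e. for small amplitudes.
    """
    zeta = complex(zeta)
    z = zeta  # the linear part of t is the identity, so zeta is a good first guess
    for _ in range(max_iter):
        zc = z.conjugate()
        z_new = zeta - (h20 * z * z + h11 * z * zc + h02 * zc * zc)
        if abs(z_new - z) < tol:
            return z_new
        z = z_new
    raise RuntimeError("fixed-point iteration did not converge")

h20, h11, h02 = 0.2 + 0.1j, -0.1j, 0.05   # hypothetical coefficients
zeta0 = 0.1 + 0.05j
z0 = invert_near_identity(zeta0, h20, h11, h02)
```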

Finally, we note that the simplified SSM computation described here requires the data to lie sufficiently close to the SSM. We achieve this by removing any initial transients from the training dataset, as identified by a spectral analysis on the input signal [11]. Since the SSM is unique and attracting, this procedure ensures that we train on relevant data.

3 Pre- and post-processing of the data

We present here considerations for the delay embedding of observable functions to facilitate SSM identification. We also show how to use the obtained normal form dynamics to predict the forced response of the full system.

3.1 Embedding spectral submanifolds in the observable space

In practice, observing trajectories \(\varvec{x}(t)\) in the full phase space is often intractable for high-dimensional systems. An experimentalist might only observe a scalar quantity \(s(t) = \zeta (\varvec{x}(t))\), say, the displacement or acceleration at one point in a vibrating structure. Nevertheless, if the observable function \(\zeta : \mathbb {R}^{n_\textrm{full}} \rightarrow \mathbb {R}\) is generic, it can be used to reconstruct an invariant manifold of the system in an observable space via delay embedding.

To this end, we collect p measurements separated by a timelag \(\tau >0\) to form a vector \(\varvec{y}\) in an observable space \(\mathbb {R}^p\) as

\[\varvec{y}(t) = \big [ s(t),\ s(t+\tau ),\ \ldots ,\ s\big (t+(p-1)\tau \big )\big ]^\top .\]

Takens’ embedding theorem implies that if \(\varvec{x}(t)\) lies on an invariant d-dimensional manifold \(\mathscr {M}\) in the original phase space, then \(\varvec{y}(t)\) lies on a diffeomorphic copy of \(\mathscr {M}\) in \(\mathbb {R}^p\) with probability one, if \(p\ge 2d+1\) and \(\zeta (\varvec{x})\) is a generic observable [53]. This result also extends to generic multivariate functions \(\varvec{\zeta }: \mathbb {R}^{n_\textrm{full}} \rightarrow \mathbb {R}^{n_\textrm{obs}}\) as long as the total observable space dimension exceeds 2d [15, 47]. Even with a high-dimensional observable, delay embedding can still be useful to diversify the data and to reveal information about the time derivative of the signal, as in our sloshing example below [11].

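The timelag \(\tau \) is typically a multiple of the sampling time or timestep \(\Delta t\) of the available dataset. The default choice is \(\tau =\Delta t\), but one can also increase the timelag to \(\tau =k\Delta t\), \(k\in \mathbb {N}_+\). Delay-embedding an observed time series \([s(t_1), \ldots , s(t_N)]\) thus yields the snapshot matrix

\[\varvec{Y} = \begin{bmatrix} s(t_1) & s(t_2) & \cdots & s(t_{N-(p-1)k}) \\ s(t_{1+k}) & s(t_{2+k}) & \cdots & s(t_{N-(p-2)k}) \\ \vdots & \vdots & & \vdots \\ s(t_{1+(p-1)k}) & s(t_{2+(p-1)k}) & \cdots & s(t_N) \end{bmatrix}.\]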

Takens’ embedding theorem requires \(p\ge 2d+1\); the minimal choice \(k=1\), \(p=2d+1\) typically suffices for SSM identification when \(d=2\).
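Building the delay-embedded snapshot matrix is a simple indexing exercise. In this sketch (function name hypothetical), a multichannel observable is stacked delay by delay, which is one possible convention; SSMLearn's own embedding routine may order the rows differently.

```python
import numpy as np

def delay_embed(s, p, k=1):
    """Delay-embed a signal sampled with step dt, using timelag tau = k*dt.

    s may be 1D (N,) or multichannel (n_obs, N). Rows are ordered delay by
    delay: [s(t_j); s(t_j + tau); ...; s(t_j + (p-1) tau)], giving a
    (p * n_obs) x (N - (p-1) k) snapshot matrix.
    """
    s = np.atleast_2d(np.asarray(s))
    n_cols = s.shape[1] - (p - 1) * k
    if n_cols <= 0:
        raise ValueError("signal too short for this embedding dimension and lag")
    return np.vstack([s[:, i * k: i * k + n_cols] for i in range(p)])

Y = delay_embed(np.arange(10), p=3, k=2)
print(Y[:, 0], Y[:, -1])  # [0 2 4] [5 7 9]
```

With the 1531 surface points plus \({\hat{X}}\) of the sloshing example and \(p=10\), this stacking yields the \(15\,320\) rows quoted in Sect. 4.1.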

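If we observe a smooth scalar signal s(t), then for small \(\tau \), we have

\[s(t+j\tau ) \approx s(t) + j\tau \dot{s}(t), \qquad j = 0, 1, \ldots , p-1.\]

Delay-embedding s(t) then yields

\[\varvec{y}(t) \approx s(t)\begin{bmatrix}1\\ 1\\ \vdots \\ 1\end{bmatrix} + \tau \dot{s}(t)\begin{bmatrix}0\\ 1\\ \vdots \\ p-1\end{bmatrix}. \tag{21}\]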

As noted in Ref. [10], if the embedding window \((p-1)\tau \) is short compared with the timescale over which \({\dot{s}}\) varies, then the diffeomorphic copy of \(\mathscr {M}\) in the observable space is approximately a plane spanned by the two constant vectors appearing in (21).

For multimodal signals, however, p and k must be increased to distinguish between the modes in the observable space. The modal subspaces of the full phase space have corresponding planes in the observable space, which must be identified to provide reduced coordinates for the SSM. A low delay embedding dimension and an overly short timelag can make these planes close to parallel, which complicates their identification. Instead, we want to pick the timelag and embedding dimension such that the images of the modal subspaces in the observable space are close to orthogonal.

To illustrate this, we consider an observed superposition of two harmonics \(s(t) = a\cos (\omega _1 t+\psi _1) + b\cos (\omega _2 t+\psi _2)\), a reasonable model of a typical signal close to the origin. Using a trigonometric identity, we can rewrite

The delay-embedded observable vector is therefore

where

The trajectories will reside on a 4-dimensional hyperplane given by the constant matrix \(\varvec{V}\) of delay-embedded harmonic functions. Picking \(\tau \) and p too small complicates manifold identification, because the columns of \(\varvec{V}\) are close to linearly dependent. Instead, we choose a timelag such that these planes are as close to orthogonal as possible. Based on these considerations, we select a timelag \(\tau = k\Delta t\) such that \(\omega _2 \tau \approx \frac{\pi }{2}\), where \(\omega _2\) is the second eigenfrequency of the observed system. This argument generalizes to any number of modes, although the choice of k becomes more complicated. The embedding dimension p is set to at least \(2d+1\). Further increases to p can facilitate identification of a manifold but also complicate its geometry far from the origin.
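The orthogonality argument can be checked numerically. Expanding \(s(t+j\tau ) = a\cos (\omega _1 t+\psi _1)\cos (j\omega _1\tau ) - a\sin (\omega _1 t+\psi _1)\sin (j\omega _1\tau ) + \ldots \) shows that the columns of \(\varvec{V}\) can be taken as \(\cos (j\omega _\ell \tau )\) and \(-\sin (j\omega _\ell \tau )\), \(j=0,\ldots ,p-1\); this is our own way of writing the matrix and may differ from the form above by a change of basis. The sketch below (hypothetical helper names, illustrative frequencies) compares the largest pairwise cosine between these columns for \(\tau =\Delta t\) and for the lag chosen via \(\omega _2\tau \approx \pi /2\).

```python
import numpy as np

def harmonic_basis(omegas, tau, p):
    """Columns cos(j w tau) and -sin(j w tau), j = 0..p-1, for each frequency w."""
    j = np.arange(p)[:, None]
    cols = []
    for w in omegas:
        cols += [np.cos(j * w * tau), -np.sin(j * w * tau)]
    return np.hstack(cols)

def max_cosine(V):
    """Largest |cos(angle)| between distinct columns of V (0 means orthogonal)."""
    Vn = V / np.linalg.norm(V, axis=0)
    C = np.abs(Vn.T @ Vn)
    np.fill_diagonal(C, 0.0)
    return C.max()

def select_lag(omega2, dt):
    """Integer k >= 1 for which omega2 * k * dt is closest to pi/2."""
    return max(1, int(round(np.pi / (2 * omega2 * dt))))

dt, p = 0.005, 9                       # p = 2d + 1 for d = 4 (two modes)
omegas = [2 * np.pi * 1.0, 2 * np.pi * 3.0]
k = select_lag(omegas[1], dt)
short_lag = max_cosine(harmonic_basis(omegas, dt, p))
chosen_lag = max_cosine(harmonic_basis(omegas, k * dt, p))
```

With these numbers, \(\tau =\Delta t\) leaves some columns nearly parallel, whereas the selected lag \(k=17\) keeps all pairwise cosines well below one.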

3.2 Backbone curves and forced response

If all eigenvalues of the reduced dynamics are complex conjugate pairs, we can rewrite the normal form on the SSM in polar coordinates \((\varvec{\rho }, \varvec{\theta })\) with \(z_\ell = \rho _\ell \textrm{e}^{i\theta _\ell }\), \(z_{\ell +1} = \rho _\ell \textrm{e}^{-i\theta _\ell }\). This yields the SSM-reduced dynamics in the form

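If the imaginary parts of all eigenvalue pairs are non-resonant with each other, then the functions \(c_\ell \) and \(\omega _\ell \) depend only on \(\varvec{\rho }\) [43]. For example, the cubic 2D normal form (16) in polar coordinates reads

\[\dot{\rho } = \big (\textrm{Re}\,\lambda + \textrm{Re}\,\gamma \,\rho ^2\big )\rho , \qquad \dot{\theta } = \textrm{Im}\,\lambda + \textrm{Im}\,\gamma \,\rho ^2.\]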

For the general 2D case, we define an amplitude as

where \(\alpha : \mathbb {R}^p \rightarrow \mathbb {R}\) is a function mapping from the observable space to some amplitude of a particular degree of freedom or the norm of total displacements. The backbone curve of that amplitude is then defined as the parametrized curve

which is broadly used in the field of nonlinear vibrations to illustrate the overall effect of nonlinearities in the system.
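For the cubic normal form, the instantaneous damping and frequency along the backbone follow directly from the polar form: substituting \(z=\rho \textrm{e}^{i\theta }\) into \(\dot{z} = \lambda z + \gamma z^2\bar{z}\) gives \(c(\rho ) = \textrm{Re}\,\lambda + \textrm{Re}\,\gamma \,\rho ^2\) and \(\omega (\rho ) = \textrm{Im}\,\lambda + \textrm{Im}\,\gamma \,\rho ^2\). The sketch below evaluates these on an amplitude grid. Plotting against a physical amplitude would additionally require the manifold parametrization and the map \(\alpha \), which are omitted; the \(\lambda \) and \(\gamma \) values are illustrative only.

```python
import numpy as np

def cubic_backbone(lam, gamma, rho):
    """Instantaneous damping c(rho) and frequency omega(rho) of the cubic
    normal form, from rho_dot = c(rho) rho and theta_dot = omega(rho)."""
    rho = np.asarray(rho, dtype=float)
    c = lam.real + gamma.real * rho ** 2
    omega = lam.imag + gamma.imag * rho ** 2
    return c, omega

# Softening frequency and amplitude-dependent damping (illustrative values)
c, omega = cubic_backbone(-0.1 + 2.0j, -0.5 - 0.3j, [0.0, 0.5, 1.0])
```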

Next, following Refs. [6, 44], we use the data-driven normal form on the SSM to predict the response of the system under additional, time-periodic external forcing. This amounts to adding a forcing term with amplitude f and frequency \(\Omega \) to the general 2D normal form to obtain

where we have introduced the phase difference \(\psi = \theta - \Omega t\). The forced response curve (FRC) is then defined as the bifurcation curve of the fixed points \((\rho _0,\psi _0)\) of (29) under varying \(\Omega \). Squaring and adding the equations in (29) yields a parametrization of the FRC for the forcing frequency \(\Omega \) and the phase lag \(\psi _0\) in the form

Since the relation between the experimental forcing and the normal form forcing f is unknown, a calibration of each FRC to at least one observation of a forced response is necessary. Following Refs. [10, 11], we achieve this by prescribing a single intersection point on the FRC in the frequency-amplitude plane. We use the calibration frequency \(\Omega _\textrm{cal}\) and amplitude \(u_\textrm{cal}\) of the maximal experimental response at each forcing level. The mapping to an equivalent point \(\varvec{y}_\textrm{cal}\) in the observable space can then be approximated by delay-embedding a cosine signal of amplitude \(u_\textrm{cal}\) and frequency \(\Omega _\textrm{cal}\). We compute the calibration amplitude \(\rho _\textrm{cal}\) by projecting \(\varvec{y}_\textrm{cal}\) onto the manifold and transforming to the normal form. The forcing f is then computed from the relationship

Finally, under periodic forcing, the SSM parametrization becomes time-dependent with the addition of a small periodic term. We ignore this contribution here to simplify the analysis, but note that this small non-autonomous correction can be exactly computed if needed [10, 11].
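Assuming the forced polar normal form (29) has the common structure \(\dot{\rho } = c(\rho )\rho + f\sin \psi \), \(\rho \dot{\psi } = (\omega (\rho )-\Omega )\rho + f\cos \psi \) (sign conventions vary, but they do not affect the squared relation), its fixed points satisfy \(\rho ^2[c(\rho )^2 + (\omega (\rho )-\Omega )^2] = f^2\). Solving for \(\Omega \) gives the two branches of the FRC, and evaluating the same relation at \((\Omega _\textrm{cal}, \rho _\textrm{cal})\) gives the calibrated forcing. The following sketch is our own, with hypothetical function names and illustrative coefficients.

```python
import numpy as np

def frc_branches(c, omega, f, rho):
    """Forcing frequencies on the FRC from rho^2 [c^2 + (omega - Omega)^2] = f^2.

    c, omega: callables returning the normal-form damping and frequency.
    Only amplitudes rho > 0 with f >= rho |c(rho)| lie on the FRC.
    Returns (rho, Omega_lower, Omega_upper).
    """
    rho = np.asarray(rho, dtype=float)
    disc = (f / rho) ** 2 - c(rho) ** 2
    on_curve = disc >= 0
    root = np.sqrt(disc[on_curve])
    w = omega(rho[on_curve])
    return rho[on_curve], w - root, w + root

def calibrate_forcing(c, omega, rho_cal, Omega_cal):
    """Forcing amplitude f for which the FRC passes through (Omega_cal, rho_cal)."""
    return rho_cal * np.sqrt(c(rho_cal) ** 2 + (omega(rho_cal) - Omega_cal) ** 2)

def c(r):
    return -0.1 - 0.5 * r ** 2      # illustrative cubic damping

def omega(r):
    return 2.0 - 0.3 * r ** 2       # illustrative softening frequency

f = calibrate_forcing(c, omega, rho_cal=0.2, Omega_cal=1.95)
rho, Om_lo, Om_hi = frc_branches(c, omega, f, np.linspace(0.01, 0.3, 300))
```

The two branches meet where \(f = \rho |c(\rho )|\), which marks the maximal forced response amplitude.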

4 Examples

We now apply the two variants of our simplified SSM computation to three datasets: sloshing experiments in a water tank, simulation of a clamped-clamped von Kármán beam, and experiments on an internally resonant beam.

We quantify the quality of our SSM-reduced models with the normalized mean trajectory error (NMTE) [10]. Given a test trajectory with N snapshots \(\varvec{y}(t_j)\) and the model-based reconstruction \(\hat{\varvec{y}}(t_j)\) obtained by integrating the normal form dynamics and mapping back to the observable space, the NMTE is defined as

4.1 Tank sloshing

A tank partially filled with a liquid responds nonlinearly to horizontal harmonic excitation [54]. Stronger fluid oscillation gives rise to more shearing against the tank wall, so that the damping of the system increases nonlinearly with the amplitude [19]. In addition, the instantaneous frequency decreases at higher amplitudes. Both phenomena are crucial for predicting the forced response amplitude of the liquid. The industrial applications for sloshing models are numerous, ranging from the transportation of fluids in trucks [13] and container ships [19] to the design of fuel tanks for spacecraft [2, 16].

In Ref. [10], SSMLearn was applied to experimental sloshing data from Ref. [8]. With nonlinear models of both frequency and damping, the forced response of the first oscillation mode was accurately predicted from unforced, decaying center-of-mass signals. Here, we apply the fastSSM algorithm to a much higher-dimensional observable space that includes finely resolved surface profile data.

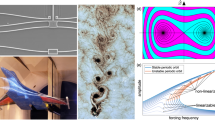

The experiments described in Ref. [8] were performed in a rectangular tank of width \(w=500\) mm and depth \(l=50\) mm, partially filled with water up to a height of 400 mm. The tank was mounted on a moving platform excited harmonically by a motor at different frequencies \(\Omega \) and amplitudes A. A camera was mounted on the moving platform, and the surface profile \(\varvec{h}\) was detected via image processing at a sampling time of \(\Delta t = 0.033\) s. Figure 2 displays the experimental setup.

From the surface profile, the sloshing amplitude can be quantified by computing the horizontal position \({\hat{X}}\) of the liquid’s center of mass at each time, normalized by the tank width. \({\hat{X}}\) is a physically meaningful quantity, relevant for engineering applications and robust against small image evaluation errors. The tank was excited at each tested frequency until a steady state was reached, after which the driving was turned off. The oscillation amplitude then decayed along the backbone curve defined in (28).

Here we append the \({\hat{X}}\) signal with the measurements of the surface elevation \(\varvec{h}\) at \(1\,531\) points. We delay-embed these signals using 10 subsequent measurements to create a \(15\,320\)-dimensional observable space, in which a 2D, 7th-order SSM, shown in Fig. 3a, is identified. We fit the reduced dynamics on the SSM up to 3rd order, and then compute its 3rd-order normal form as

The backbone curves obtained from this normal form are shown in Figure 3b.

Fig. 3 a A visualization of the identified 2D SSM, with the wall surface amplitude on the vertical axis. b The computed instantaneous damping and frequency show significant nonlinearity. c Prediction of the forced response amplitude and d phase lag compared with experimental results for three different forcing amplitudes. e The computational cost of manifold fitting is orders of magnitude lower with fastSSM than with SSMLearn, and scales better with higher dimensionality. f fastSSM\(^+\) normal form computation is also faster, although SSMLearn tends to require a lower order for convergence.

In Fig. 3c and d, we compute forced response curves for the center of mass position, \({\hat{X}}\), and compare them to its experimentally measured values obtained along frequency sweeps from both directions. We find the response prediction to be accurate. In particular, the nonlinear damping term of the normal form helps capture the width of the forced response curve at higher amplitudes.

We also use the normal form (33) to predict the development of the decaying full surface profile \(\varvec{h}\) in Fig. 4. Here, we take the initial surface profile and transform it to an initial condition in the normal form coordinates. We integrate the normal form from this initial condition to predict its development in the observable space. We observe that the prediction is in close agreement with experiments, yielding a total NMTE of 2.05% over the entire phase space.
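The NMTE values reported here can be evaluated with a few lines of NumPy. The sketch assumes the normalization used in SSMLearn [10], where the mean snapshot error is divided by the largest norm among the test snapshots; consult (32) for the exact definition used in this paper.

```python
import numpy as np

def nmte(Y, Y_hat):
    """Normalized mean trajectory error between a test trajectory Y and its
    model reconstruction Y_hat (both p x N, one snapshot per column).
    Assumes normalization by the largest snapshot norm of Y."""
    Y, Y_hat = np.asarray(Y, dtype=float), np.asarray(Y_hat, dtype=float)
    errors = np.linalg.norm(Y - Y_hat, axis=0)
    return errors.mean() / np.linalg.norm(Y, axis=0).max()

print(nmte([[3.0, 0.0], [4.0, 0.0]], [[0.0, 0.0], [0.0, 0.0]]))  # 0.5
```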

Fig. 4 Snapshots of the fastSSM prediction of the surface profile decay. The initial condition is transformed to the SSM-reduced system, which is integrated to produce a 2D trajectory in normal form coordinates. By mapping the trajectory back to the observable space, we can predict the full surface profile and observe close agreement with the experiments in phase, amplitude, and shape.

Finally, we compare execution times for fastSSM and SSMLearn when they are trained on the surface profile data. These computations were performed in MATLAB R2020b on an iMac with a 2.3 GHz 18-core Intel Xeon W processor and 128 GB of RAM.

In Fig. 3e, the computational effort of fitting a 2D SSM is plotted against the dimensionality of the training data, i.e., the number of included surface points multiplied by the delay embedding dimension 10. Due to its explicit coefficient fitting, fastSSM achieves a major speedup and enables the analysis of significantly higher-dimensional data than SSMLearn. Since our proposed SSM fitting method is based on SVD and polynomial regression, we expect the computational effort to grow linearly with the input data dimensionality. This expectation indeed holds approximately for the highest-dimensional training datasets in the present example.
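The explicit fitting step can be sketched under the simplifying assumption stated in the Conclusions. The SSM tangent space is taken as the span of the leading left singular vectors of the (delay-embedded) data matrix, and the reduced coordinates are projections onto that basis. The nonlinear part of the parametrization then follows from ordinary least squares on monomials of the reduced coordinates. This NumPy sketch is ours, not the fastSSM MATLAB code, and the function names and the monomial basis are illustrative. For a fixed number of snapshots, both the thin SVD and the regression cost grow linearly with the number of rows (the observable dimension), which is the scaling argued above.

```python
import itertools
import numpy as np

def monomials(eta, min_deg, max_deg):
    """Rows are all monomials of eta (shape d x N) with degree in [min_deg, max_deg]."""
    d = eta.shape[0]
    rows = []
    for deg in range(min_deg, max_deg + 1):
        for combo in itertools.combinations_with_replacement(range(d), deg):
            rows.append(np.prod(eta[list(combo), :], axis=0))
    return np.vstack(rows)

def fit_ssm_explicit(Y, dim, order):
    """Explicit SSM fit: Y (n x N) ~ V @ eta + W @ monomials(eta, 2, order).

    V: leading `dim` left singular vectors (assumed tangent space).
    eta = V.T @ Y: reduced coordinates.
    W: nonlinear coefficients from linear least squares.
    """
    U, _, _ = np.linalg.svd(Y, full_matrices=False)   # thin SVD
    V = U[:, :dim]
    eta = V.T @ Y
    residual = Y - V @ eta        # data component normal to the tangent space
    if order < 2:
        return V, np.zeros((Y.shape[0], 0)), eta
    Phi = monomials(eta, 2, order)                    # (n_monomials x N)
    W_T, *_ = np.linalg.lstsq(Phi.T, residual.T, rcond=None)
    return V, W_T.T, eta

# Synthetic data on the quadratic surface y3 = 0.5*y1*y2 (hypothetical example)
g = np.linspace(-1.0, 1.0, 21)
x1, x2 = (a.ravel() for a in np.meshgrid(g, g))
Y = np.vstack([x1, x2, 0.5 * x1 * x2])
V, W, eta = fit_ssm_explicit(Y, dim=2, order=2)
Y_hat = V @ eta + W @ monomials(eta, 2, 2)
```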

After reduction to the SSM, both SSMLearn and fastSSM compute the \(\mathscr {O}(3)\) normal form in less than a second. To compare the computational effort for higher-order normal forms, we apply fastSSM\(^+\). Figure 3f shows the time required to compute a 2D normal form after fitting the SSM to the sloshing data. At a given order, fastSSM\(^+\) is on average 15 times faster than SSMLearn. While both methods are fast in this example, the difference becomes significant at higher dimensions. Note, however, that in strongly nonlinear cases, an analytical expansion may require a higher-order normal form to converge, so the practical difference may be smaller. This difference in the convergence of normal forms is examined more closely in the next example.

4.2 von Kármán beam

We consider data from numerical simulations of a finite-element model of a clamped-clamped von Kármán nonlinear beam [27]. Each node has three degrees of freedom: axial deformation \(u_0\), transverse deflection \(w_0\), and rotation \(w_0'\). The nonlinear von Kármán axial strain approximation is

\[ \varepsilon _{11} = u_0' + \frac{1}{2}\left( w_0'\right) ^2 - z\,w_0'', \]

where \(z\) is the distance from the neutral axis of the beam, and the second term sets this model apart from the linear Euler–Bernoulli beam. The axial stress is modeled as

\[ \sigma _{11} = E\,\varepsilon _{11} + \kappa \,\dot{\varepsilon }_{11}, \]

where \(E\) is Young’s modulus and \(\kappa \) is the material rate of viscous damping.

After a convergence analysis, we set the number of elements to 12, which results in a 33-degree-of-freedom mechanical system, i.e., a 66-dimensional phase space. As the initial condition, we use the static deflection under a 14 kN transverse point load at the midpoint, with the load removed at the start of the simulation. Our observable is the midpoint displacement, and the objective is to reconstruct the SSM and its dynamics in the observable space by delay-embedding this signal. A sketch of the system is shown in Fig. 5a.
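The dimension count follows from three degrees of freedom per node and fully clamped end nodes. The short arithmetic check below assumes a uniform mesh of N elements with N + 1 nodes, with all three degrees of freedom removed at both end nodes. The helper name is hypothetical.

```python
def beam_dofs(n_elements, dofs_per_node=3, clamped_nodes=2):
    """Free DOFs of a 1D beam mesh: (n_elements + 1) nodes minus clamped nodes."""
    n_nodes = n_elements + 1
    return (n_nodes - clamped_nodes) * dofs_per_node

n_dof = beam_dofs(12)
phase_dim = 2 * n_dof  # positions and velocities
print(n_dof, phase_dim)  # prints 33 66
```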

a The observable is the midpoint displacement u(t) of a 12-element von Kármán clamped-clamped beam. b From an \(\mathscr {O}(5)\) reduced dynamics model, the fastSSM\(^+\) algorithm computes a normal form that predicts the displacement decay accurately. c Prediction of the forced response amplitude. d The 2D SSM visualized in a 3D representation of the observable space. Above 20 mm deflection, increasing the order of e fastSSM\(^{+}\) and f SSMTool no longer improves the backbone curve approximation. Higher amplitudes lie beyond the radius of convergence of the SSM Taylor expansion. SSMLearn, however, continues to approximate the backbone curve well beyond this limit

We set \(E=70\) GPa, \(\kappa =1.0\times 10^6\,\hbox {Pa}\cdot \hbox {s}\), length 1000 mm, width 50 mm, and thickness 20 mm. The sampling time is \(\Delta t = 0.0955\) s. To satisfy the conditions of Takens’ embedding theorem, we set the delay embedding dimension to \(p=5\). The maximum displacement in the training data is 15.9 mm.
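Delay embedding stacks p time-shifted copies of the scalar observable into one vector. The minimal sketch below assumes a uniformly sampled signal and a timelag equal to an integer multiple `lag` of \(\Delta t\). For a d-dimensional SSM, the Takens condition \(p \ge 2d+1\) gives \(p = 5\) here (\(d = 2\)). The function names are illustrative and are not part of fastSSM.

```python
import numpy as np

def delay_embed(signal, p, lag=1):
    """Return the (p x M) delay-embedding matrix of a 1D signal.

    Column j is [s(j), s(j + lag), ..., s(j + (p - 1) * lag)].
    """
    s = np.asarray(signal, dtype=float).ravel()
    m = s.size - (p - 1) * lag
    if m <= 0:
        raise ValueError("signal too short for this embedding")
    return np.vstack([s[i * lag : i * lag + m] for i in range(p)])

def takens_min_dim(ssm_dim):
    """Minimal embedding dimension 2d + 1 from Takens' theorem."""
    return 2 * ssm_dim + 1

p = takens_min_dim(2)            # 5, as used for the beam midpoint signal
Y = delay_embed(np.arange(10.0), p)
print(Y.shape)                   # prints (5, 6)
```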

A cubic normal form is insufficient to describe the higher-amplitude oscillations, which prompts us to compute higher orders with SSMTool. With a 1st-order SSM and 5th-order SSM-reduced dynamics, we obtain an 11th-order normal form

which yields an NMTE of 0.63% on the training data (Fig. 5b).

We compute the FRC and verify it against a frequency sweep obtained by numerical integration in Fig. 5c. The autonomous SSM model obtained from our new fastSSM\(^+\) algorithm predicts the forced response accurately, even under strong nonlinearities. Figure 5d shows a representation of the SSM geometry, which, as predicted in Sect. 3.1, is almost flat due to the small timelag.

There is, however, a limit to the range of validity of the normal form (36), and so the forced response prediction is not guaranteed to be accurate at arbitrarily high amplitudes. To explore this limitation, we increase the initial point load to 35 kN, which yields a maximum displacement of 24.4 mm in the training data. In Fig. 5e, we plot backbone curves computed numerically with SSMLearn and analytically at increasing orders with fastSSM\(^+\). For reference, we also compute an approximation of the instantaneous frequency with Peak Finding and Fitting (PFF) from Ref. [28]. Above approximately 20 mm transverse displacement of the beam midpoint, increasing the order of the normal form no longer improves the model, as the radius of analyticity appears to have been reached. By contrast, the SSMLearn model remains valid far beyond this limit. This is a clear advantage of the numerical normal form approach over analytical computations.

Taking this observation further, Fig. 5f shows a similar convergence study for increasing orders of the SSMTool computation on the full finite-element system of equations. The SSMLearn backbone curve agrees with the PFF estimate over a larger amplitude range than the one over which the analytical normal form converges. This shows that data-driven reduced-order modeling can surpass analytical methods in terms of range of validity, even when the full system is known.
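The loss of accuracy at higher orders is the usual behavior of a truncated Taylor series outside its radius of convergence. The toy example below does not use the beam model. It uses the hypothetical function \(g(r) = 1/(1 + (r/R)^2)\), whose Taylor series about \(r = 0\) has radius of convergence \(R\) because of the poles at \(r = \pm iR\). Inside the radius, adding terms improves the approximation. Outside it, adding terms makes the approximation worse. The values R = 20 and r = 24 only echo the magnitudes discussed above.

```python
def g(r, R):
    """Toy function with poles at r = +/- iR."""
    return 1.0 / (1.0 + (r / R) ** 2)

def taylor_g(r, R, order):
    """Taylor polynomial of g about r = 0, keeping terms of degree <= order."""
    x = -((r / R) ** 2)
    return sum(x ** k for k in range(order // 2 + 1))

R = 20.0  # hypothetical radius of convergence
orders = (3, 7, 11)
for r in (10.0, 24.0):  # one point inside, one outside the radius
    errs = [abs(taylor_g(r, R, n) - g(r, R)) for n in orders]
    print(r, ["%.2e" % e for e in errs])
```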

4.3 Resonant beam experiments

Our final dataset comprises velocity measurements from an internally resonant beam structure detailed in Ref. [11]. It consists of an internal beam connected by three bolts to the left midpoint of an external C-shaped beam, which is clamped to ground at its rightmost edges (Fig. 6a). The bolts give rise to nonlinear frictional slip [5, 18], and the beam lengths are tuned so that the system has a 1:2 resonance between its two slowest out-of-plane bending eigenfrequencies, measured to be 122.4 Hz and 243.4 Hz. Vibrations are induced with an impulse hammer at three different impact locations, and the transient out-of-plane velocity of the inner beam tip is sampled at 5120 Hz. By varying the impact location and force to diversify the data, we obtained 12 different trajectories. Frequency analysis shows that only the two slowest eigenfrequencies are present in the signals, so we aim to identify a 4D internally resonant SSM in an observable space for our model reduction.

a Sketch of the experimental setup for the resonant beam tester [11]. b The test trajectories embedded in the delay-embedded observable space, in which we fit the SSM. c, d The reconstruction from the obtained 4D normal form dynamics agrees with the decay of measured velocities. e Plotting the modal amplitudes \(\rho \) against one another shows that \(\rho _2\) dies out more quickly than \(\rho _1\), but, due to modal interaction, the decay rates vary. f The instantaneous damping of the second mode is at times positive, indicating modal energy interchange

With more than one mode present in the data, we must choose the delay embedding parameters judiciously. In the experiment, the sampling time is \(\Delta t = 0.0001953\) s. With \(\omega _2=2\pi \times 243.4\) rad/s, the method in Sect. 3.1 yields \(\tau = 5\Delta t\). This timelag already results in a good reconstruction at the minimum Takens dimension, \(p=9\). Nevertheless, increasing the embedding dimension further increases modal orthogonality and thus improves trajectory reconstruction. Motivated by this, we select a delay embedding dimension of \(p=12\), as the reconstructions improve only marginally beyond this number. We show a representation of the test trajectories in this observable space in Fig. 6b.
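As a quick arithmetic check of the numbers quoted above, consider \(\Delta t = 0.0001953\) s (sampling at 5120 Hz) and \(f_2 = 243.4\) Hz. One period of the faster mode then spans about 21 samples, and \(\tau = 5\Delta t\) is close to a quarter of that period. The selection rule used below ("a quarter period of the fastest mode, rounded to whole samples") is our assumption for illustration and may differ from the criterion derived in Sect. 3.1. The minimal Takens dimension for the 4D SSM is \(2 \cdot 4 + 1 = 9\).

```python
import math

dt = 0.0001953             # sampling time [s]
f2 = 243.4                 # faster eigenfrequency [Hz]
omega2 = 2 * math.pi * f2  # [rad/s]

samples_per_period = (2 * math.pi / omega2) / dt
# Assumed rule (illustrative only): quarter period of the fastest mode, in whole samples
lag = max(1, round(samples_per_period / 4))

ssm_dim = 4
p_min = 2 * ssm_dim + 1    # Takens: p >= 2d + 1

print(f"{samples_per_period:.1f} samples/period, lag = {lag}*dt, p_min = {p_min}")
# prints 21.0 samples/period, lag = 5*dt, p_min = 9
```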

We select three trajectories as the test set (one for each hammer impact location) and use the remaining nine trajectories for training. We set the order of expansion for the SSM to \(m=3\) and the SSM-reduced dynamics order to \(r=3\), and obtain the 3rd-order normal form. The results depend on the order of the reduced dynamics but are insensitive to the orders of computation for the SSM and for the normal form on it. Our fastSSM\(^+\) algorithm detects an internal resonance from the data, and we obtain the normal form

where the automatically fitted trigonometric terms involving \(\psi =2\theta _{1}-\theta _{2}\) account for the phase-dependence induced by the internal resonance. The reconstruction of the first test trajectory obtained by integrating the normal form is shown in Fig. 6c, with a zoomed-in version in Fig. 6d. The NMTE on the test set computed in the first coordinate is 1.28%.
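The internal resonance can be recognized from the linear frequencies alone. The sketch below is a hypothetical check and does not reproduce the detection logic of fastSSM\(^+\). It searches for low-order integer relations \(k_1\omega_1 + k_2\omega_2 \approx 0\). For the measured 122.4 Hz and 243.4 Hz, it reports only the 1:2 relation \(2\omega_1 - \omega_2 \approx 0\), which corresponds to the phase combination \(\psi = 2\theta_1 - \theta_2\). The order bound and the 2% relative tolerance are arbitrary illustrative choices.

```python
from itertools import product

def near_resonances(freqs, max_order=3, rel_tol=0.02):
    """Integer vectors k with 1 <= sum|k_i| <= max_order and first nonzero entry > 0
    such that |sum_i k_i * f_i| <= rel_tol * max(freqs). Purely illustrative."""
    n = len(freqs)
    scale = max(freqs)
    hits = []
    for k in product(range(-max_order, max_order + 1), repeat=n):
        order = sum(abs(ki) for ki in k)
        if order == 0 or order > max_order:
            continue
        first = next(ki for ki in k if ki != 0)
        if first < 0:  # skip sign-flipped duplicates of the same relation
            continue
        mismatch = sum(ki * fi for ki, fi in zip(k, freqs))
        if abs(mismatch) <= rel_tol * scale:
            hits.append((k, mismatch))
    return sorted(hits, key=lambda h: sum(abs(ki) for ki in h[0]))

print(near_resonances([122.4, 243.4]))  # one hit: k = (2, -1), mismatch about 1.4 Hz
```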

Figure 6e plots the first modal amplitude, \(\rho _1\), against the second one, \(\rho _2\), for each simulated trajectory. The second mode clearly decays faster and trajectories are eventually dominated by the slower mode \(\rho _1\). However, due to the modal coupling terms in the normal form, the decay is not monotonic. Rather, there is a wealth of different decaying patterns depending on initial conditions.

Finally, Fig. 6f shows the instantaneous damping \({\dot{\rho }}_1/\rho _1\) and \({\dot{\rho }}_2/\rho _2\) for the reconstructions corresponding to the third impact location. For the slow mode, we observe strong nonlinearity as the instantaneous damping varies dramatically. The fluctuations are even larger for the fast mode. At times, its instantaneous damping reaches positive values, which indicates that energy is transferred from the slow to the fast mode, as previously observed in Refs. [4, 46]. Overall, the model obtained with our fastSSM\(^+\) algorithm agrees with the one returned by SSMLearn in Ref. [11].
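Instantaneous damping is the logarithmic rate of change of a modal amplitude, \(\dot\rho/\rho = \mathrm{d}(\ln\rho)/\mathrm{d}t\). Given a sampled amplitude history, it can be estimated by finite differences, as sketched below. Positive values flag intervals in which the mode gains energy. The synthetic amplitude signal and the use of `numpy.gradient` (second-order central differences in the interior) are illustrative choices. When the normal form is available, \(\dot\rho\) can instead be evaluated directly from its right-hand side.

```python
import numpy as np

def instantaneous_damping(t, rho):
    """Estimate rho_dot / rho = d(log rho)/dt from samples of a positive amplitude."""
    rho = np.asarray(rho, dtype=float)
    if np.any(rho <= 0):
        raise ValueError("amplitudes must be positive")
    return np.gradient(np.log(rho), t)

# Synthetic amplitude: exponential decay with a slow modulation (hypothetical data)
t = np.linspace(0.0, 1.0, 2001)
rho2 = np.exp(-3.0 * t) * (1.0 + 0.5 * np.sin(2 * np.pi * 4 * t))
damp2 = instantaneous_damping(t, rho2)
gaining = damp2 > 0   # samples where the mode receives energy
```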

5 Conclusions

We have introduced a fast alternative to a recent data-driven reduction method for nonlinear dynamical systems. Our approach is inspired by the SSMLearn method [10, 11], but formulates the fitting of an SSM as an explicit problem by assuming that its tangent space can be obtained by singular value decomposition of the data. Furthermore, we compute the normal form on the manifold explicitly and recursively, rather than solving an implicit minimization problem.

Our approach is based on rigorous existence and uniqueness results for SSMs constructed near hyperbolic fixed points of smooth dynamical systems. In addition, the spectral subspace over which the SSM is constructed must contain either stable directions or unstable directions, but not a mixture of the two. These assumptions formally exclude applications of our methods near non-hyperbolic fixed points, over spectral subspaces of mixed stability type, and to systems with non-smooth features such as hysteretic phenomena and impacts. Our ongoing work seeks to extend SSM theory in a way that overcomes these limitations.

We have applied our alternative model-reduction method to three datasets: tank sloshing experiments, a nonlinear beam finite-element simulation, and experiments on an internally resonant mechanical structure. In all three problems, we obtained a model that accurately predicted the decay of the autonomous system. In addition, we have demonstrated that a forcing term can be added to the autonomous model to predict the forced response amplitude and phase with high accuracy. When trained on the resonant beam experimental data, our method automatically detected the internal resonance and generated a model that could predict energy repartition among the modes.

The assumptions made for our new method drastically speed up model identification on SSMs from data, increase the dimensionality of the observable spaces we can tackle, and significantly simplify the numerical implementation. Compared with the previous method, however, we sacrifice some model accuracy. Perhaps more significantly, we have found large differences in the domains of convergence of the normal forms, in favor of SSMLearn.

Specifically, we demonstrated on our simulated beam problem that the numerical normal form has a considerably larger range of validity than the analytical normal forms of the full system and, consequently, than those of our new data-driven SSM construction. In other words, data-driven analysis can outperform analytical methods in terms of model validity, even when the full equations of the system are known. This suggests that a plausible approach to obtaining a reduced-order model of a finite-element structure would be to simulate the system and train on a small number of trajectories, rather than formulating the full equation system and computing SSMs analytically. This idea is a subject of our ongoing work.

Data availability

The data supporting the findings of this work can be openly accessed at www.github.com/haller-group/SSMLearn/tree/main/fastSSM.

Change history

21 February 2023

A Correction to this paper has been published: https://doi.org/10.1007/s11071-022-08151-6

References

Abramian, A., Virot, E., Lozano, E., Rubinstein, S., Schneider, T.: Nondestructive prediction of the buckling load of imperfect shells. Phys. Rev. Lett. 125, 225504 (2020)

Abramson, H.N. (ed.): The Dynamic Behavior of Liquids in Moving Containers: With Applications to Space Vehicle Technology. NASA SP-106. Scientific and Technical Information Division, National Aeronautics and Space Administration, Washington, DC (1966)

Awrejcewicz, J., Krys’ko, V.A., Vakakis, A.F.: Order Reduction by Proper Orthogonal Decomposition (POD) Analysis, pp. 279–320. Springer, Berlin (2004)

Balachandran, B., Nayfeh, A., Pappa, R., Smith, S.: Identification of nonlinear interactions in structures. J. Guid. Control Dyn. 17, 257–262 (1994). https://doi.org/10.2514/3.21191

Brake, M.: The Mechanics of Jointed Structures: Recent Research and Open Challenges for Developing Predictive Models for Structural Dynamics. Springer International Publishing, Berlin (2018)

Breunung, T., Haller, G.: Explicit backbone curves from spectral submanifolds of forced-damped nonlinear mechanical systems. Proc. R. Soc. A 474, 20180083 (2018)

Brunton, S.L., Proctor, J.L., Kutz, J.N.: Discovering governing equations from data by sparse identification of nonlinear dynamical systems. Proc. Natl. Acad. Sci. 113(15), 3932–3937 (2016)

Bäuerlein, B., Avila, K.: Phase lag predicts nonlinear response maxima in liquid-sloshing experiments. J. Fluid Mech. 925, A22 (2021). https://doi.org/10.1017/jfm.2021.576

Cabré, X., Fontich, E., de la Llave, R.: The parameterization method for invariant manifolds I: manifolds associated to non-resonant subspaces. Indiana Univ. Math. J. 52(2), 283–328 (2003)

Cenedese, M., Axås, J., Bäuerlein, B., Avila, K., Haller, G.: Data-driven modeling and prediction of non-linearizable dynamics via spectral submanifolds. Nat. Commun. 13(1), 1–13 (2022). https://doi.org/10.1038/s41467-022-28518-y

Cenedese, M., Axås, J., Yang, H., Eriten, M., Haller, G.: Data-driven nonlinear model reduction to spectral submanifolds in mechanical systems. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 380(2229), 20210194 (2022). https://doi.org/10.1098/rsta.2021.0194

Cenedese, M., Axås, J., Haller, G.: SSMLearn (2021). http://www.georgehaller.com

Cheli, F., D’Alessandro, V., Premoli, A., Sabbioni, E.: Simulation of sloshing in tank trucks. Int. J. Heavy Veh. Syst. 20, 1–16 (2013). https://doi.org/10.1504/IJHVS.2013.051099

Daniel, T., Casenave, F., Akkari, N., Ryckelynck, D.: Model order reduction assisted by deep neural networks (rom-net). Adv. Model. Simul. Eng. Sci. 7, 105786 (2020)

Deyle, E.R., Sugihara, G.: Generalized theorems for nonlinear state space reconstruction. PLOS ONE 6(3), e18295 (2011). https://doi.org/10.1371/journal.pone.0018295

Dodge, F.: The New Dynamic Behavior of Liquids in Moving Containers. Southwest Research Institute, San Antonio (2000)

Dylewsky, D., Kaiser, E., Brunton, S.L., Kutz, J.N.: Principal component trajectories for modeling spectrally continuous dynamics as forced linear systems. Phys. Rev. E 105, 015312 (2022). https://doi.org/10.1103/PhysRevE.105.015312

Eriten, M., Kurt, M., Luo, G., McFarland, D., Bergman, L., Vakakis, A.: Nonlinear system identification of frictional effects in a beam with a bolted joint connection. Mech. Syst. Signal Process. 39(1), 245–264 (2013). https://doi.org/10.1016/j.ymssp.2013.03.003

Faltinsen, O., Timokha, A.: Sloshing. Cambridge University Press, Cambridge (2009)

Fornberg, B.: Generation of finite difference formulas on arbitrarily spaced grids. Math. Comput. 51(184), 699–706 (1988). https://doi.org/10.1090/s0025-5718-1988-0935077-0

Guckenheimer, J., Holmes, P.: Nonlinear Oscillations, Dynamical Systems, and Bifurcations of Vector Fields. Springer, New York (1983)

Haller, G., Ponsioen, S.: Nonlinear normal modes and spectral submanifolds: existence, uniqueness and use in model reduction. Nonlinear Dyn. 86(3), 1493–1534 (2016)

Hartman, D., Mestha, L.K.: A deep learning framework for model reduction of dynamical systems. In: 2017 IEEE Conference on Control Technology and Applications (CCTA), pp. 1917–1922 (2017)

Holmes, P.J., Lumley, J.L., Berkooz, G., Rowley, C.W.: Turbulence, coherent structures, dynamical systems and symmetry. In: Cambridge Monographs on Mechanics, 2nd edn. Cambridge University Press (2012)

Jain, S., Haller, G.: How to compute invariant manifolds and their reduced dynamics in high-dimensional finite element models. Nonlinear Dyn. 107(2), 1417–1450 (2021). https://doi.org/10.1007/s11071-021-06957-4

Jain, S., Thurner, T., Li, M., Haller, G.: SSMTool: Computation of invariant manifolds and their reduced dynamics in high-dimensional mechanics problems. (2021). https://doi.org/10.5281/zenodo.4614201. http://www.georgehaller.com

Jain, S., Tiso, P., Haller, G.: Exact nonlinear model reduction for a von Kármán beam: slow-fast decomposition and spectral submanifolds. J. Sound Vib. 423, 195–211 (2018)

Jin, M., Chen, W., Brake, M.R.W., Song, H.: Identification of instantaneous frequency and damping from transient decay data. J. Vib. Acoust. 142(5), 051111 (2020). https://doi.org/10.1115/1.4047416

Kaszás, B., Cenedese, M., Haller, G.: Dynamics-based machine learning of transitions in Couette flow. Phys. Rev. Fluids (2022) (In press)

Kerschen, G., Peeters, M., Golinval, J., Vakakis, A.: Nonlinear normal modes, Part I: A useful framework for the structural dynamicist. Mech. Syst. Signal Process. 23(1), 170–194 (2009). https://doi.org/10.1016/j.ymssp.2008.04.002

Kutz, J.N., Brunton, S.L.: Parsimony as the ultimate regularizer for physics-informed machine learning. Nonlinear Dyn. 107(3), 1801–1817 (2022). https://doi.org/10.1007/s11071-021-07118-3

Kutz, J.N., Brunton, S.L., Brunton, B.W., Proctor, J.L.: Dynamic Mode Decomposition. SIAM, Philadelphia, PA (2016)

Lacayo, R., Pesaresi, L., Groß, J., Fochler, D., Armand, J., Salles, L., Schwingshackl, C., Allen, M., Brake, M.: Nonlinear modeling of structures with bolted joints: a comparison of two approaches based on a time-domain and frequency-domain solver. Mech. Syst. Signal Process. 114, 413–438 (2019). https://doi.org/10.1016/j.ymssp.2018.05.033

Li, M., Haller, G.: Nonlinear analysis of forced mechanical systems with internal resonance using spectral submanifolds—Part II: Bifurcation and quasi-periodic response. Nonlinear Dyn. (2022). https://doi.org/10.1007/s11071-022-07476-6

Li, M., Jain, S., Haller, G.: Nonlinear analysis of forced mechanical systems with internal resonance using spectral submanifolds—Part I: Periodic response and forced response curve. Nonlinear Dyn. (2022)

de la Llave, R., Kogelbauer, F.: Global persistence of Lyapunov subcenter manifolds as spectral submanifolds under dissipative perturbations. SIAM J. Appl. Dyn. Sys. 18(4), 2099–2142 (2019)

Loiseau, J.C., Brunton, S.L., Noack, B.R.: From the POD-Galerkin Method to Sparse Manifold Models, pp. 279–320. De Gruyter, Berlin (2020)

Lumley, J.L.: The structure of inhomogeneous turbulent flows. In: Atmospheric Turbulence and Radio Wave Propagation, pp. 166–177 (1967)

Lusch, B., Kutz, J.N., Brunton, S.L.: Deep learning for universal linear embeddings of nonlinear dynamics. Nat. Commun. 9, 4950 (2018)

Orosz, G., Stépán, G.: Subcritical Hopf bifurcations in a car-following model with reaction-time delay. Proc. R. Soc. A 462(2073), 2643–2670 (2006)

Page, J., Kerswell, R.: Koopman mode expansions between simple invariant solutions. J. Fluid Mech. 879, 1–27 (2019)

Ponsioen, S., Jain, S., Haller, G.: Model reduction to spectral submanifolds and forced-response calculation in high-dimensional mechanical systems. J. Sound Vib. 488, 115640 (2020)

Ponsioen, S., Pedergnana, T., Haller, G.: Automated computation of autonomous spectral submanifolds for nonlinear modal analysis. J. Sound Vib. 420, 269–295 (2018)

Ponsioen, S., Pedergnana, T., Haller, G.: Analytic prediction of isolated forced response curves from spectral submanifolds. Nonlinear Dyn. 98, 2755–2773 (2019)

Rosenberg, R.M.: The normal modes of nonlinear n-degree-of-freedom systems. J. Appl. Mech. 29(1), 7–14 (1962). https://doi.org/10.1115/1.3636501

Sapsis, T., Quinn, D., Vakakis, A., Bergman, L.: Effective stiffening and damping enhancement of structures with strongly nonlinear local attachments. J. Vib. Acoust. Stress Reliab. Des. 134(1), 011016 (2012). https://doi.org/10.1115/1.4005005

Sauer, T., Yorke, J., Casdagli, M.: Embedology. J. Stat. Phys. 65, 579–616 (1991)

Schmid, P.: Dynamic mode decomposition of numerical and experimental data. J. Fluid Mech. 656, 5–28 (2010)

Schmid, P.J.: Dynamic mode decomposition and its variants. Annu. Rev. Fluid Mech. 54(1), 225–254 (2022). https://doi.org/10.1146/annurev-fluid-030121-015835

Shaw, S., Pierre, C.: Normal modes for non-linear vibratory systems. J. Sound Vib. 164(1), 85–124 (1993)

Szalai, R.: Invariant spectral foliations with applications to model order reduction and synthesis. Nonlinear Dyn. 101, 2645–2669 (2020)

Szalai, R., Ehrhardt, D., Haller, G.: Nonlinear model identification and spectral submanifolds for multi-degree-of-freedom mechanical vibrations. Proc. R. Soc. A 473(2202), 20160759 (2017)

Takens, F.: Detecting strange attractors in turbulence. In: Rand, D., Young, L. (eds.) Dynamical Systems and Turbulence, Warwick, 1980, pp. 366–381. Springer, Berlin (1981)

Taylor, G.: An experimental study of standing waves. Proc. R. Soc. Lond. Ser. A Math. Phys. Sci. 218(1132), 44–59 (1953)

Vakakis, A.: Non-linear normal modes (NNMs) and their applications in vibration theory: an overview. Mech. Syst. Signal Process. 11(1), 3–22 (1997). https://doi.org/10.1006/mssp.1996.9999

Funding

Open access funding provided by Swiss Federal Institute of Technology Zurich.

Author information


Contributions

JA and GH designed the research. JA carried out the research. JA and MC developed the software and analyzed the examples. JA wrote the paper. GH led the research team.


Ethics declarations

Competing interests

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Electronic supplementary material: Supplementary file 1 (MP4, 5421 KB).

A Code


A.1 fastSSM

The fastSSM code requires no external packages and can be applied to data out of the box. This implementation is limited to finding 2D SSMs and computing their 3rd-order normal form. For strong nonlinearities or higher-dimensional SSMs, we recommend installing SSMTool and running fastSSM\(^+\). fastSSM is also available at www.github.com/haller-group/SSMLearn/tree/main/fastSSM.

A.2 fastSSM\(^+\)

This implementation applies SSMTool to extend normal form computations to arbitrary dimension and order. The fastSSM\(^+\) code is also available at www.github.com/haller-group/SSMLearn/tree/main/fastSSM. It requires an installation of SSMTool [26].

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Axås, J., Cenedese, M. & Haller, G. Fast data-driven model reduction for nonlinear dynamical systems. Nonlinear Dyn 111, 7941–7957 (2023). https://doi.org/10.1007/s11071-022-08014-0


DOI: https://doi.org/10.1007/s11071-022-08014-0