Abstract

In many practical situations, it is impossible to measure the individual trajectories generated by an unknown chaotic system, but we can observe the evolution of probability density functions generated by such a system. The paper proposes for the first time a matrix-based approach to solve the generalized inverse Frobenius–Perron problem, that is, to reconstruct an unknown one-dimensional chaotic transformation, based on a temporal sequence of probability density functions generated by the transformation. Numerical examples are used to demonstrate the applicability of the proposed approach and evaluate its robustness with respect to constantly applied stochastic perturbations.


1 Introduction

One-dimensional chaotic maps describe many dynamical processes that generate evolving densities of states and are encountered in engineering, biology, physics and economics [1]. Examples include particle formation in emulsion polymerization [2], papermaking systems [3], bursty packet traffic in computer networks [4, 5] and cellular uplink load in WCDMA systems [6]. A major challenge is to infer the chaotic map that describes the evolution of an unknown chaotic system solely from experimental observations.

Starting with the seminal papers of Farmer and Sidorowich [7], Casdagli [8] and Abarbanel et al. [9], the problem of inferring dynamical models of chaotic systems directly from time series data has been addressed by many authors using neural networks [10], polynomial [11] or wavelet models [12].

In many practical applications, it is more convenient to observe experimentally the evolution of the probability density functions generated by such systems than individual point trajectories. For example, the particle image velocimetry (PIV) method of flow visualization [13] allows individual tracer particles to be identified in each image, but not tracked between images. In biology, flow cytometry is routinely used to measure the expression of membrane proteins of individual cells in a population [14]. However, it is impossible to track the cells between subsequent analyses.

The problem of inferring an unknown chaotic map given the invariant density generated by the map is known as the inverse Frobenius–Perron problem (IFPP). Friedman and Boyarsky [15] treated this inverse problem for a very restrictive class of piecewise constant density functions, using graph-theoretical methods. Ershov and Malinetskii [16] proposed a numerical algorithm for constructing a one-dimensional unimodal transformation with a given invariant density. The results were generalized by Góra and Boyarsky [17], who introduced a matrix method for constructing a 3-band transformation under which an arbitrary given piecewise constant density is invariant. Diakonos and Schmelcher [18] considered the inverse problem for a class of symmetric maps that have symmetric Beta invariant densities and showed that, under the given symmetry constraints, the problem has a unique solution. A generalization of this approach, which deals with a broader class of continuous unimodal maps for which each branch covers the complete interval and which allows asymmetric Beta densities, is proposed in [19]. Huang presented approaches to constructing smooth chaotic transformations in closed form [20, 21] and multi-branch complete chaotic maps [22] with given invariant densities. Boyarsky and Góra [23] studied the problem of representing the irreversible dynamics of chaotic maps by a reversible deterministic process. Baranovsky and Daems [24] considered the problem of synthesizing one-dimensional piecewise linear Markov maps with a prescribed autocorrelation function; the desired invariant density is then obtained by a suitable coordinate transformation. An alternative stochastic optimization approach is proposed in [25] to synthesize smooth unimodal maps with a given invariant density and autocorrelation function.
An analytical approach to solving the IFPP for two specific types of one-dimensional symmetric maps, given an analytic form of the invariant density, was introduced in [26]. A method for constructing chaotic maps with arbitrary piecewise constant invariant densities and arbitrary mixing properties using positive matrix theory was proposed in [5]. The approach has been exploited to synthesize dynamical systems that share the same invariant density but have desired characteristics, such as the Lyapunov exponent and mixing properties [27], and to analyse and design communication networks based on TCP-like congestion control mechanisms [28]. An extension of this work to randomly switched chaotic maps is studied in [29], which also shows how the method can be extended to higher dimensions and used to encode images. In [30], the inverse problem is formulated as the problem of stabilizing a target distribution using an open-loop perturbation approach. To characterize the patterns of activity in the olfactory bulb, an optimization approach was proposed to infer the elements of the Frobenius–Perron matrices that encode the invariant density functions of interspike intervals corresponding to different odours [31].

All existing methods can be used to construct a map with a given invariant density. A limitation of these approaches is that the solution to the inverse problem is not unique: typically, many transformations, exhibiting a wide variety of dynamical behaviours, share the same invariant density. Therefore, the reconstructed map does not necessarily exhibit the same dynamics as the underlying system, even though it preserves the required invariant density. Additional constraints and model validity tests have to be used to ensure that the reconstructed map captures the dynamical properties of the underlying system (Lyapunov exponents, fixed points, etc.) and predicts its evolution. This is of paramount importance in many practical applications, ranging from modelling and control of particulate processes [48], characterizing the formation and evolution of persistent spatial structures in chaotic fluid mixing [32], characterizing the chaotic behaviour of electrical circuits [33] and chaotic signal processing [34, 35], to analysing and interpreting cellular heterogeneity [36, 37] and identifying molecular conformations [38]. Potthast and Roland [39] solved the Frobenius–Perron equation describing the evolution of uniform probability distributions under the action of nonlinear dynamical automata that implement Turing machines. In this context, the realization of nonlinear dynamical automata that describe cognitive processes based on experimental data can be formulated as an inverse Frobenius–Perron problem.

Another major limitation of the existing matrix-based reconstruction algorithms is the assumption that the Markov partition is known in advance. In general, no a priori information about the unknown map is available, so the partition identification problem has to be solved as part of the reconstruction method.

This paper proposes a systematic method for determining an unknown chaotic map given sequences of density functions estimated from data. In other words, the inverse problem studied in this paper is that of determining the map that exhibits the same transient as well as asymptotic dynamics as the underlying system that generated the data. The proposed methodology involves the identification of the Markov partition, estimation of the Frobenius–Perron matrix and the reconstruction of the underlying map.

To our knowledge, our approach provides for the first time a solution to the problem of inferring, from sequences of density functions, a broad class of one-dimensional transformations that admit an invariant density when the Markov partition is not known in advance.

This paper is organized as follows: in Sect. 2, we give a brief introduction to the inverse Frobenius–Perron problem. The methodology for reconstructing piecewise-linear semi-Markov transformations from sequences of densities is presented and demonstrated using a numerical simulation example in Sect. 3. An extension of the method to continuous nonlinear transformations is introduced and demonstrated numerically in Sect. 4. Conclusions are presented in Sect. 5.

2 The inverse Frobenius–Perron problem

Let \(I = [a, b]\), let \({\mathfrak {B}}\) be the Borel \(\sigma \)-algebra of subsets of \(I\), and let \(\mu \) denote the normalized Lebesgue measure on \(I\). Let \(S:I\rightarrow I\) be a measurable, non-singular transformation, that is, \(S^{-1}(A)\in {\mathfrak {B}}\) for any \(A\in {\mathfrak {B}}\) and \(\mu (S^{-1}(A))=0\) for all \(A\in {\mathfrak {B}}\) with \(\mu (A)=0\). If \(x_{n}\) is a random variable on \(I\) having the probability density function \(f_{n}\in {\mathfrak {D}}(I,{\mathfrak {B}},\mu )\), \({\mathfrak {D}}=\{f\in L^{1}(I,{\mathfrak {B}},\mu ):f\ge 0,\left\| f \right\| _{1}=1\}\), such that

$$\hbox {Prob}\{x_{n}\in A\}=\int _{A}f_{n}\,d\mu ,\quad A\in {\mathfrak {B}},$$

then \(x_{n+1}\) given by

$$x_{n+1}=S(x_{n})$$

is distributed according to the probability density function \(f_{n+1} =P_{S}f_{n}\), where \(P_{S}:L^{1}(I)\rightarrow L^{1}(I)\), defined by

$$\int _{A}P_{S}f\,d\mu =\int _{S^{-1}(A)}f\,d\mu ,\quad A\in {\mathfrak {B}},$$

is the Frobenius–Perron operator [40] associated with the transformation \(S\). For \(I=[a,b]\), \(P_{S}\) can be written explicitly as

$$P_{S}f(x)=\frac{d}{dx}\int _{S^{-1}([a,x])}f\,d\mu .$$
The Frobenius–Perron operator of a non-singular transformation \(S\) is a Markov operator [41].
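To make the operator concrete, the following minimal sketch (an illustration, not part of the proposed method) pushes samples drawn from a density \(f\) through a known map and histograms the images, which estimates \(P_{S}f\). The doubling map \(S(x)=2x \bmod 1\) and the density \(f(x)=2x\) are assumed examples chosen because the exact image is easy to write down: \(P_{S}f(x)=[f(x/2)+f((x+1)/2)]/2=x+1/2\).

```python
import numpy as np


def doubling_map(x):
    """Assumed example transformation S(x) = 2x mod 1 on [0, 1]."""
    return np.mod(2.0 * x, 1.0)


def push_forward_histogram(samples, transform, n_bins=20, interval=(0.0, 1.0)):
    """Histogram estimate of P_S f from samples x ~ f: the images S(x) are distributed as P_S f."""
    density, edges = np.histogram(transform(samples), bins=n_bins, range=interval, density=True)
    return 0.5 * (edges[:-1] + edges[1:]), density


rng = np.random.default_rng(0)
x0 = np.sqrt(rng.uniform(size=200_000))     # samples of f(x) = 2x (inverse CDF of x^2)
centres, estimate = push_forward_histogram(x0, doubling_map)
exact = centres + 0.5                       # P_S f(x) = [f(x/2) + f((x + 1)/2)] / 2 = x + 1/2
print(np.max(np.abs(estimate - exact)))     # small: only sampling error remains
```

Because \(x+1/2\) is linear, its average over each bin equals its value at the bin centre, so the discrepancy printed above reflects sampling noise rather than binning bias.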

Definition 1

A linear operator \(P_{S}:L^{1}\rightarrow L^{1}\) satisfying

(a) \(P_{S}f_{n}\ge 0\) for \(f_{n}\ge 0\), \(f_{n}\in L^{1}\);

(b) \(\left\| {P_{S}}\right\| _{L^{1}} \le 1\), and \(\left\| {P_{S}f_{n}}\right\| _{L^{1}} =\left\| {f_{n}}\right\| _{L^{1}}\) for \(f_{n}\ge 0\), \(f_{n}\in L^{1}\),

is called a Markov operator.

Definition 2

A Markov operator \(P_{S}:L^{1}\rightarrow L^{1}\) is called strongly constrictive if there exists a compact set \({\mathcal {F}} \subset L^{1}\) such that, for any \(f\in {\mathfrak {D}}\), \(\mathop {\lim }\nolimits _{n\rightarrow \infty } \hbox {dist}(P_{S}^{n}f,{\mathcal {F}})=0\), where \(\hbox {dist}(P_{S}^{n}f,{\mathcal {F}})=\inf _{g\in {\mathcal {F}}} \left\| {g-P_{S}^{n}f}\right\| _{L^{1}}\).

If \(P_{S}\) is strongly constrictive, then, according to the spectral decomposition theorem [40], there exist a sequence of densities \(f_{1},\ldots ,f_{r}\) and a sequence of bounded linear functionals \(g_{1},\ldots ,g_{r}\) such that

$$P_{S}^{n}f=\sum _{i=1}^{r}g_{i}(f)\,f_{\alpha ^{n}(i)}+Q_{n}f,\qquad \lim _{n\rightarrow \infty }\left\| {Q_{n}f}\right\| _{L^{1}}=0,\quad f\in L^{1},$$

where \(P_{S}^{n}\) is the \(n\)th iterate of \(P_{S}\), the densities \(f_{1},\ldots ,f_{r}\) have mutually disjoint supports (\(f_{i}f_{j}=0\) for \(i\ne j\)), \(P_{S}f_{i}=f_{\alpha (i)}\), \(i=1,\ldots ,r\), \(\{\alpha (1),\ldots ,\alpha (r)\}\) is a permutation of the integers \(\{1,\ldots ,r\}\) and \(\alpha ^{n}\) denotes its \(n\)-fold composition. In particular, \(P_{S}\) has an invariant density \(f^{{*}}\), which satisfies \(f^{{*}}=P_{S}f^{{*}}\), and if \(r=1\), then \(P_{S}^{n}f\) converges to \(f^{{*}}\) for every \(f\in {\mathfrak {D}}\).

Let \({\mathfrak {R}} =\{R_{1}, R_{2}, \ldots , R_{N}\}\) be a partition of \(I\) into intervals such that \(\hbox {int}(R_{i})\cap \hbox {int}(R_{j})=\varnothing \) if \(i\ne j\). A transformation \(S\) is piecewise monotonic with respect to \({\mathfrak {R}}\) if

$$S(x)=S_{i}(x),\quad x\in R_{i},\ i=1,\ldots ,N,$$

where \(S_{i}\) is the monotonic segment of \(S\) on the interval \(R_{i}\).

The inverse Frobenius–Perron problem is usually formulated [17] as the problem of determining the point transformation \(S\) such that the dynamical system \(x_{n+1} =S(x_{n})\) has a given invariant probability density function \(f^{{*}}\). In general, the problem does not have a unique solution.

The generalized inverse problem addressed in this paper is that of inferring the point transformation which generated a sequence of density functions and has a given invariant density function. Specifically, let \(\{x_{0,i}^{j}\}_{i,j=1}^{\theta ,K}\) and \(\{x_{1,i}^{j}\}_{i,j=1}^{\theta ,K}\) be two sets of initial and final states observed in \(K\) separate experiments, where \(x_{1,i}^{j}=S(x_{0,i}^{j})\), \(i=1,\ldots ,\theta \), \(j=1,\ldots ,K\), and \(S:I\rightarrow I\) is an unknown, non-singular point transformation. We assume that, for practical reasons, we cannot associate an initial state \(x_{0,i}^{j}\) with its image \(x_{1,i}^{j}\), but we can estimate the probability density functions \(f_{0}^{j}\) and \(f_{1}^{j}\) associated with the initial and final states, \(\{x_{0,i}^{j}\}_{i=1}^{\theta }\) and \(\{x_{1,i}^{j}\}_{i=1}^{\theta }\), respectively. Moreover, let \(f^{{*}}\) be the observed invariant density of the system. The inverse problem is to determine \(S:I\rightarrow I\) such that \(f_{1}^{j}=P_{S}f_{0}^{j}\) for \(j=1,\ldots ,K\) and \(f^{{*}}=P_{S}f^{{*}}\).

3 A solution to the IFPP for piecewise-linear semi-Markov transformations

This section presents a method for solving the IFPP for a class of piecewise monotonic and expanding transformations called \({\mathfrak {R}}\)-semi-Markov [17], where

$${\mathfrak {R}} =\{R_{1},\ldots ,R_{N}\}=\{[c_{0},c_{1}],(c_{1},c_{2}],\ldots ,(c_{N-1},c_{N}]\}$$

is a partition of \(I=[a,b]\), \(c_{0}=a\), \(c_{N}=b\).

Definition 3

A transformation \(S:I\rightarrow I\) is said to be semi-Markov with respect to the partition \({\mathfrak {R}}\) (or \({\mathfrak {R}}\)-semi-Markov) if there exist disjoint intervals \(Q_{k}^{(i)}\) such that \(R_{i}=\cup _{k=1}^{p(i)} Q_{k}^{(i)}\), \(i=1,\ldots ,N\), the restriction of \(S\) to \(Q_{k}^{(i)}\), denoted \(\left. S\right| _{Q_{k}^{(i)}}\), is monotonic and \(S(Q_{k}^{(i)})\in {\mathfrak {R}} \) [17].

Each restriction \(\left. S\right| _{Q_{k}^{(i)}}\) is a homeomorphism from \(Q_{k}^{(i)}\) onto an interval of \({\mathfrak {R}}\), so that \(S\) maps \(R_{i}\) onto a union of intervals of \({\mathfrak {R}}\):

$$S(R_{i})=\bigcup _{k=1}^{p(i)}S(Q_{k}^{(i)})=\bigcup _{k=1}^{p(i)}R_{r(i,k)},$$

where \(R_{r(i,k)} =S(Q_{k}^{(i)})\in {\mathfrak {R}} \), \(Q_{k}^{(i)} =[q_{k-1}^{(i)} ,q_{k}^{(i)}]\), \(i=1,\ldots ,N\), \(k=1,\ldots ,p(i)\), and \(p(i)\) denotes the number of disjoint subintervals \(Q_{k}^{(i)}\) corresponding to \(R_{i}\).

The following theorem [17] establishes an important property of such transformations, namely that their invariant densities are piecewise constant over \({\mathfrak {R}}\).

Theorem 1

If \(S\) is an \({\mathfrak {R}}\)-semi-Markov, piecewise-linear and expanding transformation, i.e. \(\left. S\right| _{Q_{k}^{(i)}}\) is linear with slope of absolute value greater than 1, \(k=1,\ldots ,p(i)\), \(i=1, \ldots , N\), then any \(S\)-invariant density is constant on the intervals of \({\mathfrak {R}}\).

If \(f=\sum \limits _{i=1}^{N}{h_{i}\chi _{R_{i}}}\), i.e. \(f\in H({\mathfrak {R}})\), where \(H({\mathfrak {R}})\) denotes the space of piecewise constant functions defined over the partition \({\mathfrak {R}}\), then \(P_{S}f\in H({\mathfrak {R}})\), and the coefficient vector of \(P_{S}f\) is \(h^{f}M_{S}\), where \(M_{S}=(m_{i,j})_{1\le i,\;j\le N}\) (the matrix induced by \(S\)) is given by

$$m_{i,j}=\begin{cases}\dfrac{\lambda (Q_{k}^{(i)})}{\lambda (R_{j})}, & \text{if } S(Q_{k}^{(i)})=R_{j} \text{ for some } k,\\ 0, & \text{otherwise},\end{cases}$$

\(\lambda (\cdot )\) denotes the length of an interval, and \(h^{f}=[h_{1},h_{2},\ldots ,h_{N}]\) is the row vector of coefficients of \(f\).
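The induced matrix can be assembled directly from a description of the branches. The sketch below is a hedged illustration, not the authors' code: the helper `induced_matrix` and its `branches` data structure are hypothetical, the entries follow \(m_{i,j}=\lambda (Q_{k}^{(i)})/\lambda (R_{j})\) (the reciprocal of the branch slope), and the product \(h^{f}M_{S}\) uses the row-vector convention \({{\mathbf {w}}}^{1}={{\mathbf {w}}}^{0}{{\mathbf {M}}}\) of Proposition 2. The doubling map is an assumed test case.

```python
import numpy as np


def induced_matrix(cuts, branches):
    """Matrix M_S of a piecewise-linear R-semi-Markov map.

    cuts     -- c_0 < c_1 < ... < c_N, so that R_i = [c_{i-1}, c_i]
    branches -- hypothetical list of (i, q_left, q_right, j): the subinterval
                Q = [q_left, q_right] of R_i is mapped linearly onto R_j (1-based i, j)
    """
    lengths = np.diff(np.asarray(cuts, dtype=float))   # lambda(R_1), ..., lambda(R_N)
    m = np.zeros((len(lengths), len(lengths)))
    for i, q_left, q_right, j in branches:
        # m_{i,j} = lambda(Q) / lambda(R_j): the reciprocal of the branch slope
        m[i - 1, j - 1] = (q_right - q_left) / lengths[j - 1]
    return m


# Doubling map S(x) = 2x mod 1 on R = {[0, 0.5], (0.5, 1]}.
cuts = [0.0, 0.5, 1.0]
branches = [(1, 0.0, 0.25, 1), (1, 0.25, 0.5, 2), (2, 0.5, 0.75, 1), (2, 0.75, 1.0, 2)]
M = induced_matrix(cuts, branches)       # every entry equals 0.5
h = np.array([2.0, 0.0])                 # f = 2 chi_{R_1}, the uniform density on [0, 0.5]
print(h @ M)                             # [1. 1.]: P_S f is the uniform density on [0, 1]
```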

Let \(S\) be an unknown piecewise-linear \({\mathfrak {R}}\)-semi-Markov transformation and \(\{f_{t,\;i} \}_{t,\;i=1}^{T,\;K}\) be a sequence of probability density functions generated by the unknown map \(S\), given a set of initial density functions \(\{f_{0,\;i} \}_{i=1}^{K}\). Assuming that the invariant density function \(f^{{*}}\) of the Frobenius–Perron operator associated with the unknown transformation \(S\) can be estimated from experimental data, the proposed identification approach can be summarized as follows:

a. Given the samples, construct a uniform partition \(C\) and an initial piecewise constant density estimate \(f_{C}^{*}\) of the true invariant density \(f^{{*}}\) which maximizes a penalized log-likelihood function.

b. Select a sub-partition \(C_{d}(\overline{l}_{j})\) of \(C\).

c. Estimate the matrix representation of the Frobenius–Perron operator over the partition \(C_{d}(\overline{l}_{j})\) based on the observed sequences of densities generated by \(S\).

d. Construct the piecewise-linear map \(\hat{{S}}^{(\overline{l}_{j})}\) corresponding to the matrix representation.

e. Compute the piecewise constant invariant density \(f_{C_{d}(\overline{l}_{j})}^{*}\) associated with the identified transformation \(\hat{{S}}^{(\overline{l}_{j})}\) and evaluate the performance criterion.

f. Repeat steps (b) to (e) to identify the partition and map that minimize the performance criterion.

3.1 Identification of the Markov partition

Let \(f^{*}\in H({\mathfrak {R}})\) be the invariant density associated with an \({\mathfrak {R}}\)-semi-Markov transformation \(S\), and let \(\{x_{i}^{*}\}_{i=1}^{\theta }\) be a finite number of independent samples drawn from \(f^{*}\). The aim is to determine an orthogonal basis set \(\{\chi _{R_{i}} (x)\}_{i=1}^{N}\) such that

$$f^{*}(x)=\sum _{i=1}^{N}h_{i}\chi _{R_{i}}(x),$$

where \(\chi _{R_{i}} (x)\) is the indicator function of \(R_{i}\) and \(h_{i}\) are the expansion coefficients given by

$$h_{i}=\frac{1}{\lambda (R_{i})}\int _{R_{i}}f^{*}(x)\,dx,$$

where \(\lambda (R_{i})\) denotes the length of the interval \(R_{i}\).

We start by constructing a uniform partition \(\Delta \) with \({N}^{'}\) intervals that maximizes the following penalized log-likelihood function [42]

where \(1\le {N}^{'}\le \theta /{\log \theta }\), \(D_{i}=\sum \limits _{j=1}^{\theta } {\chi _{\Delta _{i}} (x_{j}^{*})}\), and

The coefficients \({h}^{'}_{i}\) of the regular histogram are given by

$${h}^{'}_{i}=\frac{{N}^{'}D_{i}}{(b-a)\,\theta },\quad i=1,\ldots ,{N}^{'}.$$
Let \(C=\{c_{1},\ldots ,c_{{N}^{'}-1} \}\) be the strictly increasing sequence of cut points of the resulting uniform partition \(\Delta =\{\Delta _{i}\}_{i=1}^{N^{\prime }}\). Let \(L=\{l_{j}\}_{j=1}^{N^{\prime }-1}\), where \(l_{j}={{N}^{'}\cdot \left| {{h}^{'}_{j+1} -{h}^{'}_{j}}\right| }/{(b-a)}\) measures the jump of the histogram at the cut point \(c_{j}\), and let \(\overline{L} =\{\overline{l}_{j}\}_{j=1}^{{N}^{''}}\), \(0\le {N}^{''}\le {N}^{'}-1\), be the strictly increasing sequence formed by the distinct elements of \(L\).
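The quantities just defined can be sketched with NumPy as follows. This is an illustration under stated assumptions rather than the authors' implementation: the number of bins \({N}^{'}\) is passed in directly, because the penalty term of the likelihood criterion is not reproduced here, and the hypothetical helper `partition_for_threshold` reads the cut-point selection \(C_{d}(\overline{l}_{j})\) described next as keeping the cut points whose jump satisfies \(l_{k}\ge \overline{l}_{j}\). The jump values in the usage example are hypothetical; they only mimic the values reported for numerical example 1.

```python
import math

import numpy as np


def max_bins(theta):
    """Upper bound floor(theta / log(theta)) on the number of regular bins."""
    return math.floor(theta / math.log(theta))


def regular_histogram(samples, n_bins, a=0.0, b=1.0):
    """Heights h'_i = N' D_i / ((b - a) theta) of the regular histogram with N' = n_bins."""
    counts, edges = np.histogram(samples, bins=n_bins, range=(a, b))
    return n_bins * counts / ((b - a) * len(samples)), edges


def jumps_and_thresholds(heights, a=0.0, b=1.0):
    """Jumps l_j = N' |h'_{j+1} - h'_j| / (b - a) and their distinct values in increasing order."""
    jumps = len(heights) * np.abs(np.diff(heights)) / (b - a)
    return jumps, np.unique(jumps)


def partition_for_threshold(cut_points, jumps, threshold, a=0.0, b=1.0):
    """Boundaries of the partition that keeps only the cut points with l_k >= threshold."""
    keep = np.asarray(jumps) >= threshold
    return np.concatenate(([a], np.asarray(cut_points)[keep], [b]))


print(max_bins(5000))  # 587, as in numerical example 1

rng = np.random.default_rng(1)
heights, edges = regular_histogram(rng.uniform(size=5000), n_bins=10)
jumps, thresholds = jumps_and_thresholds(heights)

# Hypothetical jumps at C = {0.1, ..., 0.9}: only those at 0.4, 0.5 and 0.8 are large.
cut_points = np.linspace(0.1, 0.9, 9)
example_jumps = np.array([0.12, 0.18, 0.22, 14.32, 15.74, 0.34, 0.40, 4.72, 0.82])
print(partition_for_threshold(cut_points, example_jumps, threshold=4.72))  # boundaries 0, 0.4, 0.5, 0.8, 1
```

In the full search of step (f), each threshold in `thresholds` would produce one candidate partition, to be scored with the cost function of Eq. (14), which this sketch does not implement.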

The final Markov partition \({\mathfrak {R}}\) is determined by solving

where \(C_{d}(\overline{l}_{j})=\{c_{d_{1}(\overline{l}_{j})} ,\ldots ,c_{d_{\rho }(\overline{l}_{j})} \}\) is the subsequence of \(C\) consisting of the cut points at which the histogram jump is at least the selected threshold \(\overline{l}_{j}\in \overline{L}\); that is, \(d_{1}(\overline{l}_{j})<\cdots <d_{\rho }(\overline{l}_{j})\) are the indices \(k\) for which \(l_{k}\ge \overline{l}_{j}\). In Eq. (14), \(f_{C_{d}(\overline{l}_{j})}^{*}\) denotes the piecewise constant invariant density associated with the transformation \(\hat{{S}}^{(\overline{l}_{j})}\) identified over the partition

$$\{[a,c_{d_{1}(\overline{l}_{j})}],(c_{d_{1}(\overline{l}_{j})},c_{d_{2}(\overline{l}_{j})}],\ldots ,(c_{d_{\rho }(\overline{l}_{j})},b]\}.$$
3.2 Identification of the Frobenius–Perron matrix

Let \({\mathfrak {R}} =\{R_{1},R_{2},\ldots ,R_{N}\}=\{[a,c_{1}],(c_{1},c_{2}],\ldots ,(c_{N-1} ,b]\}\) be a candidate Markov partition and \(\{f_{t,i} \}_{t,\;i=1}^{T,\;K}\) be the piecewise constant densities on \({\mathfrak {R}}\), which are estimated from the samples.

Let \(f_{0}(x)\) be an initial density function that is piecewise constant on the partition \({\mathfrak {R}}\),

$$f_{0}(x)=\sum _{i=1}^{N}w_{0,i}\chi _{R_{i}}(x),$$

where the coefficients satisfy \(\sum \limits _{i=1}^{N}{w_{0,i} \lambda (R_{i})=1}\).

Let \(X_{0}=\{x_{0,j} \}_{j=1}^{\theta } \) be the set of initial conditions obtained by sampling \(f_{0}(x)\), and let

$$X_{t}=\{x_{t,j}\}_{j=1}^{\theta }$$

be the set of states obtained by applying the transformation \(S\) \(t\) times, such that \(x_{t,j} =S^{t}(x_{0,k})\) for some \(x_{0,k} \in X_{0}\), \(j=1,\ldots ,\theta \).

The density function associated with the states \(X_{t}\) is given by

$$f_{t}(x)=\sum _{i=1}^{N}w_{t,i}\chi _{R_{i}}(x),$$

where the coefficients are \(w_{t,i} =\frac{1}{\lambda (R_{i})\cdot \theta }\sum \limits _{j=1}^{\theta } {\chi _{R_{i}} (x_{t,j})}\). Let \({{\mathbf {w}}}^{f_{t}}=[w_{t,1} ,\ldots ,w_{t,N} ]\) be the vector defining \(f_{t}(x)\), \(t=0,\ldots ,T\), where typically \(T\ge N\). In practice, the observed \(f_{t}(x)\), \(t=0,\ldots ,T\), are approximations of the true density functions, inferred from experimental observations.
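A minimal sketch of this coefficient estimate, assuming NumPy; `piecewise_constant_coefficients` is a hypothetical helper name. Note that `np.histogram` uses half-open bins \([c_{i-1},c_{i})\), with the last bin closed, rather than the \((c_{i-1},c_{i}]\) convention of the text; for continuously distributed states a sample falls exactly on a cut point with probability zero.

```python
import numpy as np


def piecewise_constant_coefficients(states, cuts):
    """w_i = (number of states in R_i) / (lambda(R_i) * theta) on the partition given by cuts."""
    cuts = np.asarray(cuts, dtype=float)
    counts, _ = np.histogram(states, bins=cuts)
    return counts / (np.diff(cuts) * len(states))


rng = np.random.default_rng(2)
cuts = [0.0, 0.4, 0.5, 0.8, 1.0]           # non-uniform partition, as in numerical example 1
w = piecewise_constant_coefficients(rng.uniform(size=10_000), cuts)
print(np.dot(w, np.diff(cuts)))            # 1.0 up to rounding: sum_i w_i lambda(R_i) = 1
```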

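It follows that

$${{\mathbf {W}}}_{1}={{\mathbf {W}}}_{0}{{\mathbf {M}}},$$

where

$${{\mathbf {W}}}_{0}=\begin{bmatrix}{{\mathbf {w}}}^{f_{0}}\\ \vdots \\ {{\mathbf {w}}}^{f_{T-1}}\end{bmatrix}$$

and

$${{\mathbf {W}}}_{1}=\begin{bmatrix}{{\mathbf {w}}}^{f_{1}}\\ \vdots \\ {{\mathbf {w}}}^{f_{T}}\end{bmatrix}.$$

The matrix M is obtained as the solution to the constrained optimization problem

$$\hat{{{\mathbf {M}}}}=\arg \min _{{{\mathbf {M}}}}\left\| {{{\mathbf {W}}}_{1}-{{\mathbf {W}}}_{0}{{\mathbf {M}}}}\right\| _{F}^{2}$$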
subject to

where \(||\cdot ||_{F}\) denotes the Frobenius norm.

The matrix \(\Phi ={{\mathbf {W}}}_{0}^{T}{{\mathbf {W}}}_{0}\) has to be non-singular for the solution to be unique.
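A minimal sketch of the estimation step, assuming that the rows of \({{\mathbf {W}}}_{0}\) and \({{\mathbf {W}}}_{1}\) hold the coefficient vectors of \(f_{0},\ldots ,f_{T-1}\) and \(f_{1},\ldots ,f_{T}\), so that \({{\mathbf {W}}}_{1}\approx {{\mathbf {W}}}_{0}{{\mathbf {M}}}\). It solves the unconstrained least-squares problem with `numpy.linalg.lstsq` and refuses to proceed when \(\Phi \) is singular; the constraints of the optimization problem are not enforced here, so in practice the estimate would still need to be checked (for example, for negative entries). The helper name and the 3 × 3 test matrix are hypothetical.

```python
import numpy as np


def estimate_fp_matrix(w_sequence):
    """Unconstrained least-squares estimate of M from coefficient vectors w^{f_0}, ..., w^{f_T}.

    Rows of W0 are w^{f_0}, ..., w^{f_{T-1}} and rows of W1 are w^{f_1}, ..., w^{f_T},
    so that W1 ~ W0 @ M. The constraints of the optimization problem are NOT imposed.
    """
    w = np.asarray(w_sequence, dtype=float)
    w0, w1 = w[:-1], w[1:]
    phi = w0.T @ w0
    if np.linalg.matrix_rank(phi) < phi.shape[0]:
        raise ValueError("W0^T W0 is singular, so the least-squares solution is not unique")
    m_hat, *_ = np.linalg.lstsq(w0, w1, rcond=None)
    return m_hat


# Synthetic check on a uniform 3-interval partition of [0, 1] with a known, hypothetical M.
m_true = np.array([[0.5, 0.5, 0.0],
                   [0.0, 0.5, 0.5],
                   [0.5, 0.0, 0.5]])
w_t = np.array([3.0, 0.0, 0.0])          # f_0 = 3 chi_{[0, 1/3]}
sequence = [w_t]
for _ in range(6):                       # f_1, ..., f_6 via w^{f_{t+1}} = w^{f_t} M
    w_t = w_t @ m_true
    sequence.append(w_t)
print(np.allclose(estimate_fp_matrix(sequence), m_true))  # True
```

With exact, noise-free densities the least-squares solution reproduces the generating matrix; with densities estimated from samples it returns the best fit in the Frobenius norm.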

Proposition 1

Given a sequence of piecewise constant density functions \(f_{0},\ldots , f_{T}\) generated by a piecewise-linear \({\mathfrak {R}}\)-semi-Markov transformation \(S(x)\), the matrix \(\Phi ={{\mathbf {W}}}_{0}^{T}{{\mathbf {W}}}_{0}\) is non-singular only if \(f_{N-2} (x)\ne f^{*}(x)\).

Proof

If \(f_{N-2} (x)=f^{*}(x)\), then \(f_{t}(x)=f^{*}(x)\) for \(t=N-1,\ldots ,T\); that is, the matrix \({{\mathbf {W}}}_{0}\) has at most \(N-2\) rows that are distinct from \(f^{*}(x)\).

Using the Cauchy–Binet formula, the determinant of \(\Phi \) can be written as

$$\det (\Phi )=\sum _{K\in \binom{[T]}{N}}\det \left( {{\mathbf {W}}}_{0,K,[N]}\right) ^{2},$$

where \([T]\) denotes the set \(\{1,\ldots ,T\}\), \(\binom{[T]}{N}\) denotes the set of subsets of size \(N\) of \([T]\), and \({{\mathbf {W}}}_{0,K,[N]}\) is the \(N\times N\) matrix whose rows are the rows of \({{\mathbf {W}}}_{0}\) at the indices given in \(K\). Since \({{\mathbf {W}}}_{0}\) has at most \(N-2\) rows that are distinct from \(f^{*}(x)\), every \({{\mathbf {W}}}_{0,K,[N]}\) has at least two identical rows; hence, \(\det ({{\mathbf {W}}}_{0,K,[N]})=0\) for any \(K\in \binom{[T]}{N}\). Consequently, \(\det ({{\mathbf {W}}}_{0}^{T}{{\mathbf {W}}}_{0})=0\), which concludes the proof. \(\square \)
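The determinant argument can be checked numerically. The sketch below (NumPy and `itertools`; the matrix sizes are arbitrary choices for illustration) compares both sides of the Cauchy–Binet formula for a random \(5\times 3\) matrix, then keeps \(N-2=1\) row and replaces the others by a common vector standing in for the coefficients of \(f^{*}\), which makes \(\det ({{\mathbf {W}}}_{0}^{T}{{\mathbf {W}}}_{0})\) vanish.

```python
import itertools

import numpy as np


def gram_det_cauchy_binet(w0):
    """det(W0^T W0) computed as the sum over N-row subsets K of det(W0[K, :])^2."""
    t, n = w0.shape
    return sum(np.linalg.det(w0[list(k), :]) ** 2
               for k in itertools.combinations(range(t), n))


rng = np.random.default_rng(3)
w0 = rng.uniform(size=(5, 3))                              # T = 5 rows, N = 3 columns
print(np.isclose(gram_det_cauchy_binet(w0), np.linalg.det(w0.T @ w0)))  # True

# Keep N - 2 = 1 row and replace the others by a common vector standing in for f*:
# every 3 x 3 minor then has two equal rows, so det(W0^T W0) = 0.
f_star = np.ones(3)
w0_degenerate = np.vstack([w0[:1], np.tile(f_star, (4, 1))])
print(np.isclose(np.linalg.det(w0_degenerate.T @ w0_degenerate), 0.0))   # True
```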

Proposition 2

A \({\mathfrak {R}}\)-semi-Markov, piecewise-linear and expanding transformation S can be uniquely identified given N linearly independent, piecewise constant densities \(f_{0}^{i}\in H({\mathfrak {R}})\) and their images \(f_{1}^{i}\in H({\mathfrak {R}})\) under the transformation.

Proof

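Let

$$f_{0}^{i}(x)=\sum _{j=1}^{N}w_{i,j}^{0}\chi _{R_{j}}(x),\quad i=1,\ldots ,N.$$

Since \(\{f_{0}^{i}\}_{i=1}^{N}\) are linearly independent, the vectors \(\{{{\mathbf {w}}}_{i}^{0}\}_{i=1}^{N}\), \({{\mathbf {w}}}_{i}^{0}=[w_{i,1}^{0},\ldots ,w_{i,N}^{0}]\), are also linearly independent. Moreover, given that \(S\) is \({\mathfrak {R}}\)-semi-Markov, piecewise linear and expanding, we have

$$f_{1}^{i}(x)=P_{S}f_{0}^{i}(x)=\sum _{j=1}^{N}w_{i,j}^{1}\chi _{R_{j}}(x),$$

where \({{\mathbf {w}}}_{i}^{1}=[w_{i,1}^{1},\ldots ,w_{i,N}^{1}]={{\mathbf {w}}}_{i}^{0}{{\mathbf {M}}},\,i=1,\ldots ,N\). Alternatively, this can be written as

$${{{\mathbf {W}}}}^{'}_{1}={{{\mathbf {W}}}}^{'}_{0}{{\mathbf {M}}},$$

where

$${{{\mathbf {W}}}}^{'}_{0}=\begin{bmatrix}{{\mathbf {w}}}_{1}^{0}\\ \vdots \\ {{\mathbf {w}}}_{N}^{0}\end{bmatrix}$$

and

$${{{\mathbf {W}}}}^{'}_{1}=\begin{bmatrix}{{\mathbf {w}}}_{1}^{1}\\ \vdots \\ {{\mathbf {w}}}_{N}^{1}\end{bmatrix}.$$

Since \({{{\mathbf {W}}}}^{'}_{0}\) is non-singular, the Frobenius–Perron matrix M is given by

$${{\mathbf {M}}}=\left( {{{\mathbf {W}}}}^{'}_{0}\right) ^{-1}{{{\mathbf {W}}}}^{'}_{1}.$$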
The derivative of \(\left. S\right| _{Q_{k}^{(i)}}\) is \(1/{m_{i,j}}\), where \(R_{j}=S(Q_{k}^{(i)})\), and the length of \(Q_{k}^{(i)}\) is given by

$$\lambda (Q_{k}^{(i)})=q_{k}^{(i)}-q_{k-1}^{(i)}=m_{i,j}\,\lambda (R_{j}),$$

which allows computing \(q_{k}^{(i)}\) iteratively for each interval \(R_{i}\), starting with \(q_{0}^{(i)} =c_{i-1}\). Assuming that each branch \(\left. S\right| _{Q_{k}^{(i)}}\) is monotonically increasing, the piecewise-linear semi-Markov mapping is given by

$$S(x)=\frac{1}{m_{i,j}}\left( x-q_{k-1}^{(i)}\right) +c_{j-1},\quad x\in Q_{k}^{(i)},$$

for \(k=1,\ldots ,p(i)\), where \(j\) is the index of the image \(R_{j}\) of \(Q_{k}^{(i)}\), i.e. \(S(Q_{k}^{(i)})=R_{j}\), \(i=1,\ldots ,N\), \(j=1,\ldots ,N\), and \(m_{i,j} \ne 0\). \(\square \)
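The construction in the proof translates into a short routine. The sketch below is a hedged illustration with a hypothetical helper name: it assumes increasing branches, as in the proof, and it assumes that the subintervals \(Q_{k}^{(i)}\) are laid out from left to right in increasing order of the image index \(j\), a choice the proof leaves open. Each \(Q_{k}^{(i)}\) has length \(m_{i,j}\lambda (R_{j})\) and is mapped onto \(R_{j}\) with slope \(1/m_{i,j}\). Indices in the code are 0-based, so `cuts[j]` is the left end point \(c_{j-1}\) of \(R_{j}\).

```python
import numpy as np


def build_piecewise_linear_map(m, cuts):
    """Return a vectorised S(x) for the increasing piecewise-linear map encoded by m.

    R_i = [c_{i-1}, c_i] is split from left to right into subintervals Q_k^{(i)} of length
    m_{i,j} * lambda(R_j), one for each nonzero m_{i,j} taken in increasing order of j,
    and Q_k^{(i)} is mapped increasingly onto R_j with slope 1 / m_{i,j}.
    """
    m = np.asarray(m, dtype=float)
    cuts = np.asarray(cuts, dtype=float)
    lengths = np.diff(cuts)
    pieces = []  # (q_{k-1}, q_k, j, slope) for every branch
    for i in range(len(lengths)):
        q_left = cuts[i]  # q_0^{(i)} = c_{i-1}
        for j in np.flatnonzero(m[i]):
            q_right = q_left + m[i, j] * lengths[j]
            pieces.append((q_left, q_right, j, 1.0 / m[i, j]))
            q_left = q_right

    def s(x):
        x = np.atleast_1d(np.asarray(x, dtype=float))
        y = np.full_like(x, np.nan)  # NaN marks points not covered by any branch
        for q_left, q_right, j, slope in pieces:
            inside = (x >= q_left) & (x <= q_right)
            y[inside] = cuts[j] + slope * (x[inside] - q_left)
        return y

    return s


# Doubling map on R = {[0, 0.5], (0.5, 1]}: every entry of M equals 0.5.
s_hat = build_piecewise_linear_map([[0.5, 0.5], [0.5, 0.5]], [0.0, 0.5, 1.0])
print(s_hat([0.1, 0.3, 0.7, 0.9]))  # approximately [0.2, 0.6, 0.4, 0.8]
```

At a point shared by two branches (a discontinuity of \(S\)), the routine returns the value of the branch listed last.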

The map is constructed as depicted in Fig. 1.

In practice, we can choose the piecewise constant probability density functions \(f_{0}^{j}(x)=\frac{1}{\lambda (R_{j})}\chi _{R_{j}} (x)\), \(j=1,\ldots ,N\). These are sampled in order to generate \(N\) sets of initial conditions

$$X_{0}^{i}=\{x_{0,k}^{i}\}_{k=1}^{\theta },\quad i=1,\ldots ,N,$$

that will be used in the experiments. For each set of initial conditions \(X_{0}^{i}\), we measure a corresponding set of final states

$$X_{1}^{i}=\{x_{1,j}^{i}\}_{j=1}^{\theta },$$

where \(x_{1,j}^{i}=S(x_{0,k}^{i})\) for some \(x_{0,k}^{i}\in X_{0}^{i}\). The density function \(f_{1}^{i}\) associated with the set \(X_{1}^{i}\) of final states is given by

$$f_{1}^{i}(x)=\sum _{j=1}^{N}v_{i,j}\chi _{R_{j}}(x),$$

where \(v_{i,j} =\frac{1}{\lambda (R_{j})\cdot \theta }\sum \limits _{k=1}^{\theta } {\chi _{R_{j}} (x_{1,k}^{i})}\).
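With these indicator initial densities, \({{{\mathbf {W}}}}^{'}_{0}=\hbox {diag}(1/\lambda (R_{1}),\ldots ,1/\lambda (R_{N}))\), so the identification reduces to histogramming the final states of each experiment. The end-to-end sketch below is a hedged simulation of that design: the doubling map merely stands in for the unknown system, the helper names are hypothetical, and the final states are shuffled to mimic the fact that their pairing with the initial states is not observed.

```python
import numpy as np


def doubling_map(x):
    """Stand-in for the unknown system, used only to generate data: S(x) = 2x mod 1."""
    return np.mod(2.0 * x, 1.0)


def identify_from_indicator_experiments(system, cuts, theta, rng):
    """Estimate M from N experiments with initial densities f_0^i = chi_{R_i} / lambda(R_i).

    Only the unlabelled final states of each experiment are used. With these initial
    densities W0' = diag(1 / lambda(R_i)), so M = W0'^{-1} W1' (Proposition 2).
    """
    cuts = np.asarray(cuts, dtype=float)
    lengths = np.diff(cuts)
    n = len(lengths)
    w0 = np.diag(1.0 / lengths)
    w1 = np.empty((n, n))
    for i in range(n):
        x0 = rng.uniform(cuts[i], cuts[i + 1], size=theta)  # sample f_0^i
        x1 = rng.permutation(system(x0))                     # pairing with x0 is discarded
        counts, _ = np.histogram(x1, bins=cuts)
        w1[i] = counts / (lengths * theta)                   # v_{i,j}
    return np.linalg.solve(w0, w1)


rng = np.random.default_rng(4)
m_hat = identify_from_indicator_experiments(doubling_map, [0.0, 0.5, 1.0], 20_000, rng)
print(np.round(m_hat, 2))  # close to 0.5 in every entry
```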

Remark

We only need to generate initial conditions for the densities that correspond to the finest uniform partition, i.e. \(N={N}^{'}\). Coarser partitions are obtained by merging adjacent intervals, for example \(R_{j}\) and \(R_{j+1}\), leading to the new partition \(\{\overline{R}_{1},\ldots ,\overline{R}_{N-1} \}\). The initial and final states corresponding to the merged interval \(\overline{R}_{j}=R_{j}\cup R_{j+1}\) are then \(\overline{X}_{0}^{j}=X_{0}^{j}\cup X_{0}^{j+1}\) and \(\overline{X}_{1}^{j}=X_{1}^{j}\cup X_{1}^{j+1}\), respectively, each containing \(2\theta \) states. The corresponding initial and final densities are \(\overline{f}_{0}^{j}(x)=\frac{1}{\lambda (\overline{R}_{j})}\chi _{\overline{R}_{j}} (x)\) and \(\overline{f}_{1}^{j}(x)=\sum \limits _{i=1}^{N-1} \frac{1}{2\theta \,\lambda (\overline{R}_{i})}\Big (\sum \limits _{y\in \overline{X}_{1}^{j}} {\chi _{\overline{R}_{i}} (y)}\Big ) \chi _{\overline{R}_{i}} (x)\), respectively.

In general, initial density functions are not piecewise constant over the partition \({\mathfrak {R}}\). Let \({\mathfrak {R}}_{Q}=\{Q_{1}^{(1)} ,\ldots ,Q_{p(1)}^{(1)} ,\ldots ,Q_{p(N)}^{(N)} \}=\{Q_{1},\ldots ,Q_{N_{Q}} \}\) be the partition formed by the subintervals \(Q_{k}^{(i)}\), where \(R_{i}=\cup _{k=1}^{p(i)} Q_{k}^{(i)}\), \(i=1,\ldots ,N\), and \(N_{Q}=\sum \limits _{i=1}^{N}{p(i)}\), and let \(H({\mathfrak {R}}_{Q})=\hbox {span}\{\chi _{Q_{k}^{(i)}} \}\). For \(f\in L^{2}\supset H({\mathfrak {R}}_{Q})\), let \(P^{N_{Q}}:L^{2}\rightarrow H({\mathfrak {R}}_{Q})\) be the orthogonal projection operator and \(Z^{N_{Q}}=\hbox {Id}-P^{N_{Q}}\), so that \(f=P^{N_{Q}}f+Z^{N_{Q}}f=f_{p}+f_{z}\).
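On each cell \(Q\), the orthogonal \(L^{2}\) projection onto piecewise constant functions is simply the mean value \(\frac{1}{\lambda (Q)}\int _{Q}f\), so \(f_{z}=f-f_{p}\) has zero mean on every cell. A minimal sketch, assuming NumPy, a hypothetical helper name and the composite midpoint rule for the cell integrals:

```python
import numpy as np


def project_onto_cells(f, edges, points_per_cell=1000):
    """Coefficients of the orthogonal L^2 projection of f onto span{chi_Q : Q a cell}.

    On each cell Q the projection is the mean value (1 / lambda(Q)) * integral_Q f,
    approximated here with the composite midpoint rule.
    """
    edges = np.asarray(edges, dtype=float)
    coefficients = np.empty(len(edges) - 1)
    for k, (left, right) in enumerate(zip(edges[:-1], edges[1:])):
        h = (right - left) / points_per_cell
        midpoints = left + h * (np.arange(points_per_cell) + 0.5)
        coefficients[k] = np.mean(f(midpoints))
    return coefficients


# f(x) = 2x on [0, 1] with hypothetical cells Q = {[0, 0.25], (0.25, 0.5], (0.5, 1]}.
edges = [0.0, 0.25, 0.5, 1.0]
f_p = project_onto_cells(lambda x: 2.0 * x, edges)
print(f_p)  # approximately [0.25, 0.75, 1.5]
```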

Theorem 2

An \({\mathfrak {R}}\)-semi-Markov, piecewise-linear and expanding transformation, where \(R_{i}=\cup _{k=1}^{p(i)} Q_{k}^{(i)}\), \(i=1,\ldots ,N\), can be uniquely identified given a set of initial densities \(\{f_{0}^{i}\}_{i=1}^{N_{Q}}\), \(N_{Q}=\sum \limits _{i=1}^{N}{p(i)}\), and their images \(\{f_{1}^{i}\}_{i=1}^{N_{Q}}\) under the transformation, if \(\{P^{N_{Q}}f_{0}^{i}\}_{i=1}^{N_{Q}}\) are linearly independent.

Proof

The Frobenius–Perron operator associated with \(S\) is given by

It follows that

where \(|S^{\prime }(\left. S\right| _{Q_{k}^{(i)}}^{-1} (x))|\in \{\beta _{1},\ldots ,\beta _{N_{Q}} \}\) are the absolute values of the slopes of the \(N_{Q}\) linear branches of \(S\).

Then,

Hence,

Alternatively, (27) can be written as

where \({{\mathbf {M}}}_{Q}={{\mathbf {W}}}_{0}^{-1} {{\mathbf {W}}}_{1}=\{m_{i,j} \}_{i,j=1}^{N_{Q}}\) is the Frobenius–Perron matrix that corresponds to a unique piecewise linear and expanding transformation \(S\) given by

for \(k=1,\ldots ,p(i)\), \(j\) is the index of image \(R_{j}\) of \(Q_{k}^{(i)}\); i.e. \(S(Q_{k}^{(i)})=R_{j}\), \(i=1,\ldots ,N\), \(j=1,\ldots ,N\), \(s(1)=0\) and \(s(i)=s(i-1)+p(i-1)\) for \(i>1\). \(\square \)

3.3 Numerical example 1

The applicability of the proposed algorithm is demonstrated using numerical simulation. Consider the following piecewise-linear and expanding transformation \(S:[0,1]\rightarrow [0,1]\)

for \(i=1,\ldots ,4\), \(j=1,\ldots ,4\), defined on the partition \({\mathfrak {R}}=\{R_{i}\}_{i=1}^{4}=\{[0,0.4], \,(0.4,0.5],\,(0.5,0.8], \,(0.8,1]\}\), where

The graph of \(S\) is shown in Fig. 2.

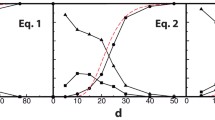

A set of initial states \(X_{0}=\{x_{0,j}\}_{j=1}^{\theta } \), \(\theta =5\times 10^{3}\), generated by sampling from the uniform probability density function \(f_{0} (x)=\chi _{\left[ {0,1}\right] } (x)\), was iterated using \(S\) to generate a corresponding set of final states \(X_{T} =\{x_{T,j} \}_{j=1}^{\theta }\), where \(T=20{,}000\). The data set \(X_{T}\) was used to determine the uniform partition \(\Delta \) with \({N}^{'}\) intervals, \(1\le {N}^{'}\le \left\lfloor {\theta /\log \theta }\right\rfloor =587\), which maximizes the penalized log-likelihood function in equation (10). In this example, \({N}^{'}=10\), i.e. \(C=\{0.1,\ldots ,0.9\}\), and the estimated invariant density \(f_{C}^{*} (x)\) with respect to the 10-interval partition is shown in Fig. 3. The sequence \(L=\{l_{j}\}_{j=1}^{9}\), \(l_{j}=10|h^{\prime }_{j+1} -h^{\prime }_{j}|\), is shown in Fig. 4.

In this example, \(\overline{L} =\{\bar{{l}}_{j}\}_{j=1}^{9}=\{0.12, \;0.18,\;0.22,\;0.34,\;0.40,\;0.82,\;4.72,\;14.32, \;15.74\}\) and the minimum of

is obtained for \(\overline{l}_{7}=4.72\), as shown in Fig. 5. This corresponds to the final Markov partition \({\mathfrak {R}} =\left\{ {R_{1},R_{2},R_{3},R_{4}}\right\} \), where \(R_{1}=[0{,}0.4]\), \(R_{2}=(0.4{,}0.5]\), \(R_{3}=(0.5{,}0.8]\) and \(R_{4}=(0.8{,}1]\). Figure 6 shows the initial density functions used to generate the set of the initial conditions and the final density functions estimated from the corresponding final states for \(T=1\).

Fig. 5 Numerical example 1: the value of the cost function given in Eq. (14) for each threshold \(\bar{{l}}_{j},\ j=1,\ldots ,9\).

For the identified partition, the estimated Frobenius–Perron matrix is

The corresponding identified mapping \(\hat{{S}}\) is shown in Fig. 7.

The estimated coefficients of the identified piecewise-linear semi-Markov transformation \(\left. {\hat{{S}}}\right| _{R_{i}} (x)=\hat{{\alpha }}_{i,j} x+\hat{{\beta }}_{i,j}\) are

To evaluate the performance of the reconstruction algorithm, we computed the absolute percentage error

for \(x\in X=\{0.01,\;0.02,\;\ldots ,\;0.99\}\). As shown in Fig. 8, the absolute percentage error between the identified and the original map is less than 2%. Furthermore, Fig. 9 shows the true invariant density \(f^{{*}}\) associated with \(S\) superimposed on the invariant density \(\hat{{f}}^{{*}}\) associated with the identified map \(\hat{{S}}\) shown in Fig. 7.

In practical situations, the observed dynamics are affected by noise. Given the process

$$x_{n+1}=S(x_{n})+\zeta _{n},$$

where \(S:{\mathbb {R}}\rightarrow {\mathbb {R}}\) is a measurable transformation and \(\{\zeta _{n}\}\) is a sequence of independent random variables with density \(g\), it can be shown [41] that the evolution of densities for this process is described by the Markov operator \(\bar{{P}}:L^{1}\rightarrow L^{1}\) defined by

$$\bar{{P}}f(x)=\int _{{\mathbb {R}}}f(y)\,g(x-S(y))\,dy.$$
Furthermore, if \(\bar{{P}}\) is constrictive then \(\bar{{P}}\) has a unique invariant density \(f^{*}\) and the sequence \(\{\bar{{P}}^{n}f\}\) is asymptotically stable for every \(f\in D\) [41].
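The integral operator \(\bar{{P}}\) can be discretized directly. The minimal sketch below assumes a hypothetical contracting map \(S(y)=y/2\) and Gaussian noise with standard deviation 0.1, chosen only for illustration, approximates the integral by a Riemann sum, and truncates the real line to \([-2,2]\), which is harmless here because almost no probability mass leaves that window. The check confirms that \(\bar{{P}}\) approximately preserves total probability, as a Markov operator should.

```python
import numpy as np


def noisy_transfer(f_values, grid, s, g):
    """Riemann-sum discretisation of (P_bar f)(x) = integral f(y) g(x - S(y)) dy on a uniform grid."""
    dy = grid[1] - grid[0]
    kernel = g(grid[:, None] - s(grid)[None, :])  # kernel[a, b] = g(x_a - S(y_b))
    return kernel @ f_values * dy


def gaussian(z, sigma=0.1):
    """Density of N(0, sigma^2)."""
    return np.exp(-0.5 * (z / sigma) ** 2) / (sigma * np.sqrt(2.0 * np.pi))


grid = np.linspace(-2.0, 2.0, 801)                      # truncation of the real line
f0 = ((grid >= 0.0) & (grid <= 1.0)).astype(float)     # uniform density on [0, 1]
f1 = noisy_transfer(f0, grid, s=lambda y: 0.5 * y, g=gaussian)
mass = f1.sum() * (grid[1] - grid[0])
print(mass)  # close to 1: P_bar preserves total probability
```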

To study how noise affects the performance of our algorithm, we considered the process

where \(S:[0,1]\rightarrow [0,1]\) is a measurable transformation that has a unique invariant density \(f^{*}\), \(\{\zeta _{n}\}\) is i.i.d. with density \(g\) and \(\varepsilon \) is a known noise level. This leads to an integral operator \(P_{\varepsilon }\) which has a unique invariant density \(f_{\varepsilon }^{*}\) [41]. It can be shown that \(\mathop {\lim }\limits _{\varepsilon \rightarrow 0} \left\| {P_{\varepsilon } f-Pf}\right\| =0\) for all \(f\in D\) and that, for \(0<\varepsilon <\varepsilon _{0}\), if \(\mathop {\lim }\limits _{\varepsilon \rightarrow 0} f_{\varepsilon }^{*}\) exists, then the limit is \(f^{*}\).

To evaluate the performance of the proposed algorithm in the presence of noise, we assumed \(\zeta \sim \hbox {N}(0,1)\) and reconstructed the map for different values of \(\varepsilon \). We computed the mean absolute percentage error (MAPE) between \(S\) and \(\hat{{S}}\),

$$\hbox {MAPE}=\frac{100}{\theta _{\delta S}}\sum _{i=1}^{\theta _{\delta S}}\frac{\left| S(x_{i})-\hat{{S}}(x_{i})\right| }{\left| S(x_{i})\right| }\,\%,$$

where \(\{x_{i}\}_{i=1}^{\theta _{\delta S}} =\{0.01,\ldots ,0.99\}\), \(\theta _{\delta S} =99\).
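A minimal sketch of the error measure on the 99-point evaluation grid, assuming NumPy. The two maps in the usage example are hypothetical stand-ins, not the maps of numerical example 1; note that the MAPE is undefined wherever \(S(x_{i})=0\), which the chosen grid and test map avoid.

```python
import numpy as np


def mape(s_true, s_hat, x):
    """Mean absolute percentage error (%) between two maps on the evaluation points x."""
    y_true = s_true(x)
    return 100.0 * np.mean(np.abs(y_true - s_hat(x)) / np.abs(y_true))


x = np.arange(1, 100) / 100.0                  # {0.01, ..., 0.99}, theta_dS = 99 points
s_true = lambda x: 4.0 * x * (1.0 - x)         # hypothetical "true" map, nonzero on x
s_hat = lambda x: 1.01 * s_true(x)             # hypothetical estimate with a 1 % scale error
print(mape(s_true, s_hat, x))  # approximately 1.0
```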

The results, summarized in Table 1, demonstrate that the algorithm is robust with respect to constantly applied stochastic perturbations. Remarkably, the approximation errors remain relatively small even for noise levels at which reconstructing the map from time series data would be almost impossible [11, 43].

4 Extension to general nonlinear transformations

The approach to reconstructing piecewise-linear and expanding transformations from densities can be extended to more general nonlinear maps. Ulam [44] conjectured that for one-dimensional systems, the infinite-dimensional Frobenius–Perron operator can be approximated arbitrarily well by a finite-dimensional Markov transformation defined over a uniform partition of the interval of interest. The conjecture was proven by Li [45] who also provided a rigorous numerical algorithm for constructing the finite-dimensional operator when the one-dimensional transformation \(S\) is known. Here, the aim is to construct from data a piecewise-linear semi-Markov transformation \(\hat{{S}}\) which approximates the original map \(S\).
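When \(S\) is known, Ulam's construction can be sketched in a few lines. The version below is a hedged Monte Carlo variant, not Li's rigorous algorithm: it estimates \(P_{ij}\approx \lambda (R_{i}\cap S^{-1}(R_{j}))/\lambda (R_{i})\) on a uniform partition of \([0,1]\) by sampling points in each cell, then reads off a piecewise constant stationary density from the left eigenvector for the eigenvalue 1. For a piecewise-linear semi-Markov map on a uniform partition this matrix coincides with the matrix \(m_{i,j}=\lambda (Q_{k}^{(i)})/\lambda (R_{j})\) used in Sect. 3. The logistic map is used only as a familiar test case; it is not expanding, because \(S^{\prime }(1/2)=0\).

```python
import numpy as np


def ulam_matrix(s, n_cells, samples_per_cell=2000, rng=None):
    """Monte Carlo Ulam matrix P_ij ~ lambda(R_i intersect S^{-1}(R_j)) / lambda(R_i)
    on a uniform partition of [0, 1]; assumes S maps [0, 1] into [0, 1]."""
    rng = np.random.default_rng() if rng is None else rng
    edges = np.linspace(0.0, 1.0, n_cells + 1)
    p = np.zeros((n_cells, n_cells))
    for i in range(n_cells):
        x = rng.uniform(edges[i], edges[i + 1], size=samples_per_cell)
        counts, _ = np.histogram(s(x), bins=edges)
        p[i] = counts / samples_per_cell
    return p


def stationary_density(p):
    """Piecewise constant density from the left eigenvector of P for the eigenvalue closest to 1."""
    n_cells = p.shape[0]
    eigenvalues, eigenvectors = np.linalg.eig(p.T)
    v = np.real(eigenvectors[:, np.argmin(np.abs(eigenvalues - 1.0))])
    return n_cells * v / v.sum()  # normalised so that sum_i h_i / n_cells = 1


logistic = lambda x: 4.0 * x * (1.0 - x)
p = ulam_matrix(logistic, n_cells=50, rng=np.random.default_rng(5))
h = stationary_density(p)
print(h[0], h[25])  # large near the end points, small in the middle (arcsine-like shape)
```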

The main assumptions are that (a) \(S:I\rightarrow I\) is continuous, \(I=[a,b]\); (b) the Frobenius–Perron operator \(P_{S}:L^{1}\rightarrow L^{1}\) associated with the transformation has a unique stationary density \(f^{{*}}\); and (c) \(P_{S}^{n}f\rightarrow f^{{*}}\) for every \(f\in D\), i.e. the sequence \(\{P_{S}^{n}\}\) is asymptotically stable.

Asymptotic stability of \(\{P_{S}^{n}\}\) has been established for certain classes of piecewise \(C^{2}\) maps. For example, we have the following result [41].

Theorem 3

If \(S:[0,1]\rightarrow [0,1]\) is a piecewise monotonic transformation satisfying the conditions

a. there is a partition \(0=c_{0}<c_{1}<\ldots <c_{N-1}<c_{N}=1\) such that the restriction of \(S\) to each interval \(R_{i}=(c_{i-1},c_{i})\) is a \(C^{2}\) function;

b. \(S(R_{i})=(0,1)\);

c. \(|S^{\prime }(x)|>1\) for \(x\ne c_{i}\);

d. there is a finite constant \(\psi \) such that

$$\begin{aligned} -S^{\prime \prime }(x)/\left[ S^{\prime }(x)\right] ^{2}\le \psi , \quad x\ne c_{i},\; i=1,\ldots ,N-1, \end{aligned}\tag{51}$$

then \(\{P_{S}^{n}\}\) is asymptotically stable.

By using a change of variables, it is sometimes possible to extend the applicability of the above theorem to more general transformations, such as the logistic map [41], which do not satisfy the restrictive conditions on the derivatives of \(S\).
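The change of variables for the logistic map \(S(x)=4x(1-x)\) can be checked numerically. With \(h(y)=\sin^{2}(\pi y/2)\), one has \(S\circ h=h\circ T\), where \(T\) is the tent map. \(T\) satisfies conditions a to d of Theorem 3: each branch is linear with \(|T'|=2\) and maps onto \((0,1)\). Its invariant density is uniform, so the invariant density of \(S\) is \(1/h'(h^{-1}(x))=1/(\pi\sqrt{x(1-x)})\). The sketch below uses numpy only, and the function names are illustrative. It checks both identities on a grid.

```python
import numpy as np

def logistic(x):
    return 4.0 * x * (1.0 - x)

def tent(y):
    return np.where(y < 0.5, 2.0 * y, 2.0 - 2.0 * y)

def h(y):
    """Change of variables x = h(y) = sin^2(pi*y/2), an increasing homeomorphism of [0, 1]."""
    return np.sin(0.5 * np.pi * y) ** 2

# Conjugacy S(h(y)) = h(T(y)): S and T have the same dynamics up to the coordinate change h
y = np.linspace(0.0, 1.0, 10001)
err = np.max(np.abs(logistic(h(y)) - h(tent(y))))
print(f"max |S(h(y)) - h(T(y))| = {err:.1e}")

# The uniform invariant density of T is carried to f*(x) = 1 / h'(h^{-1}(x)) for S
yy = np.linspace(0.01, 0.99, 99)
xx = h(yy)
h_prime = 0.5 * np.pi * np.sin(np.pi * yy)           # h'(y) = (pi/2) sin(pi y)
f_star = 1.0 / (np.pi * np.sqrt(xx * (1.0 - xx)))
print(np.allclose(1.0 / h_prime, f_star))             # True
```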

4.1 Identification of the Markov partition

Although the invariant density \(f^{{*}}\in D\) of a nonlinear transformation is, in general, not piecewise constant, the approach used in Sect. 3 to determine the Markov partition for piecewise-linear transformations is also used here to determine the optimal partition for the piecewise-linear approximation of the unknown nonlinear map.

4.2 Identification of the Frobenius–Perron matrix

For the identified Markov partition \({\mathfrak {R}}\), a tentative Frobenius–Perron matrix can be identified using the approaches described in Sect. 3.
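The optimization problem of Sect. 3 is not restated here. As a rough sketch only, assume that each density is represented as a row vector of its heights on the cells of \(\mathfrak{R}\). Assume also that one application of the transformation acts as \(f_1=f_0\mathbf{M}\), with \(\sum_j m_{i,j}\lambda(R_j)=\lambda(R_i)\) so that total mass is preserved. Stacking \(K\ge N\) density pairs then gives an overdetermined linear system, which ordinary least squares solves. Clipping small or negative entries is a crude substitute for a constrained formulation. All names and numbers below are hypothetical.

```python
import numpy as np

def estimate_fp_matrix(F0, F1, tol=1e-10):
    """Least-squares estimate of M from K density pairs stacked as rows, F1 ~ F0 @ M.

    F0, F1: (K, N) arrays of density heights on the N cells of the partition.
    Entries below tol (including negative ones) are then set to zero: a crude
    stand-in for a properly constrained (nonnegative) solver.
    """
    M, *_ = np.linalg.lstsq(F0, F1, rcond=None)
    M[M < tol] = 0.0
    return M

# Synthetic check on a hypothetical 5-cell irregular partition
rng = np.random.default_rng(0)
lam = np.array([0.1, 0.2, 0.3, 0.15, 0.25])           # cell lengths lambda(R_j)
W = rng.random((5, 5)) * (rng.random((5, 5)) > 0.4)   # sparse nonnegative pattern
W[np.arange(5), np.arange(5)] += 0.1                  # keep every row nonzero
M_true = W * (lam / (W @ lam))[:, None]               # enforce sum_j m_ij lambda_j = lambda_i
F0 = rng.random((20, 5))
F0 /= (F0 @ lam)[:, None]                             # each row is a density: sum_j f_j lambda_j = 1
F1 = F0 @ M_true                                      # densities after one application of S
M_hat = estimate_fp_matrix(F0, F1)
print(np.max(np.abs(M_hat - M_true)))                 # close to machine precision
```

With noise-free synthetic data and linearly independent initial densities, the estimate recovers the matrix. With densities estimated from samples, the residual is no longer zero and the constraints matter.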

Let the obtained Frobenius–Perron matrix be denoted by \(\hat{{{{\mathbf {M}}}}}=(\hat{{m}}_{i,j})_{1\le i,j\le N}\). The indices of the contiguous nonzero entries on the \(i\)-th row are denoted by \(r^{i}=\{r_{s}^{i},r_{s}^{i}+1,\ldots ,r_{e}^{i}\}\), and \(r_{m}^{i}\in r^{i}\) denotes the column index at which \(\hat{{m}}_{i,j}\lambda (R_{j})\) attains its maximum, i.e. \(\hat{{m}}_{i,r_{m}^{i}} \lambda (R_{r_{m}^{i}})=\max \{\hat{{m}}_{i,j} \lambda (R_{j})\}_{j=1}^{N}\). Since \(S\) is continuous, \(\cup _{k=1}^{p(i)} R_{r(i,k)}\) is a connected interval, where \(R_{r(i,k)} =S(Q_{k}^{(i)})\in {\mathfrak {R}}\), \(i=1,\ldots ,N\), \(k=1,\ldots ,p(i)\); thus, \(p(i)=r_{e}^{i}-r_{s}^{i}+1\) and \(\{r(i,k)\}_{k=1}^{p(i)} =r^{i}\). Here, \(r(i,k)\in \{1,\ldots ,N\}\) are the column indices of the nonzero entries on the \(i\)-th row of the Frobenius–Perron matrix, which satisfy

for \(i=1,\ldots ,N,\,j=1,\ldots ,p(i)-1\). This means that \(m_{i,r(i,k)} >0\) for \(k=1,\ldots ,p(i)\), so that the solution to the optimization problem satisfies

and \(m_{i,j} =0\) if \(j\ne r(i,k),k=1,\ldots ,p(i)\).
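The index bookkeeping above can be sketched as follows, using 0-based indices. It covers the run \(r^{i}\), the maximizing column \(r_m^i\) and \(p(i)\). Discarding entries outside the run that contains \(r_m^i\) and then rescaling the row is an assumption of this illustration, motivated by the continuity argument. It is not the solution of the optimization problem, whose equations are not reproduced here. Both function names are hypothetical.

```python
import numpy as np

def row_structure(m_row, lam, tol=1e-6):
    """Index bookkeeping for one row of M (0-based column indices).

    Returns (r_s, r_e, r_m, p): r_m maximises m_ij * lambda(R_j); [r_s, r_e] is the
    contiguous run of entries above tol that contains r_m; p = r_e - r_s + 1.
    """
    weighted = np.asarray(m_row, dtype=float) * lam
    r_m = int(np.argmax(weighted))
    above = weighted > tol
    r_s = r_m
    while r_s > 0 and above[r_s - 1]:
        r_s -= 1
    r_e = r_m
    while r_e < len(weighted) - 1 and above[r_e + 1]:
        r_e += 1
    return r_s, r_e, r_m, r_e - r_s + 1

def enforce_contiguity(M, lam, tol=1e-6):
    """Hypothetical clean-up: keep only the run around r_m in each row, then rescale
    the row so that sum_j m_ij * lambda(R_j) = lambda(R_i) again."""
    M = np.array(M, dtype=float)  # work on a copy
    for i in range(M.shape[0]):
        r_s, r_e, _, _ = row_structure(M[i], lam, tol)
        M[i, :r_s] = 0.0
        M[i, r_e + 1:] = 0.0
        M[i] *= lam[i] / (M[i] @ lam)
    return M

lam = np.full(8, 0.125)
print(row_structure([0, 0.02, 0, 0.3, 0.5, 0.2, 0, 0.01], lam))  # (3, 5, 4, 3)
```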

4.3 Reconstruction of the transformation from the Frobenius–Perron matrix

The method for constructing a piecewise-linear approximation \(\hat{{S}}(x)\) over the partition \({\mathfrak {R}}\) is augmented to take into account the facts that the underlying transformation is continuous and that, on each interval of the partition, \(S|_{R_{i}}\) is either monotonically increasing or monotonically decreasing. The positive entries of the Frobenius–Perron matrix are used to calculate the absolute value of the slope of \(\hat{{S}}|_{Q_{k}^{(i)}}\) as \(|(\hat{{S}}|_{Q_{k}^{(i)}})^{\prime }|=1/{m_{i,j}}\), where \(j=r(i,k)\). A simple algorithm was derived to decide whether the slope of \(\hat{{S}}\) on the interval \(R_{i}\) is positive or negative.

Let \(I_{i}=[c_{r(i,1)-1} ,c_{r(i,p(i))}]\), \(i=1,\ldots ,N\), be the image of the interval \(R_{i}\) under the transformation \(\hat{{S}}\) that induces the identified Frobenius–Perron matrix \(\mathbf {M}\). Here, \(c_{r(i,1)-1}\) is the starting point of \(R_{r(i,1)}\), the image of the subinterval \(Q_{1}^{(i)}\), with \(c_{0}=a\) if \(r(i,1)=1\), and \(c_{r(i,p(i))}\) is the end point of \(R_{r(i,p(i))}\), the image of the subinterval \(Q_{p(i)}^{(i)}\). As before, \(\{r(i,k)\}_{k=1}^{p(i)}\) denote the column indices of the nonzero entries in the \(i\)-th row of \(\mathbf {M}\).

Let \(\overline{c}_{i}=\frac{1}{2}(c_{r(i,1)-1}+c_{r(i,p(i))})\) be the midpoint of the image \(I_{i}\). The sign \(\sigma (i)\) of \(\{\left. {{\hat{{S}}}^{'}(x)}\right| _{Q_{k}^{(i)}} \}_{k=1}^{p(i)}\) is given by

for \(i=2,\ldots ,N\) and \(\sigma (1)=\sigma (2)\).

Given that the absolute value of the slope of \(\left. \hat{{S}}\right| _{Q_{k}^{(i)}}\) is \(1/{m_{i,j}}\), the end point \(q_{k}^{(i)}\) of the subinterval \(Q_{k}^{(i)}\) within \(R_{i}\) is given by

where \(k=1,\ldots ,p(i)-1\) and \(q_{p(i)}^{(i)} =c_{i}\).

The piecewise-linear semi-Markov transformation for each subinterval \(Q_{j}^{(i)}\) is given by

for \(i=1,\ldots ,N,\,j=1,\ldots ,N,\,k=1,\ldots ,p(i)-1\), \(m_{i,j} \ne 0\).
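The displayed formulas for \(q_k^{(i)}\) and \(\hat{S}\) are not reproduced in this version of the text. The following minimal sketch is therefore built only from the properties stated above:

- \(\hat{S}\) maps each \(Q_k^{(i)}\) linearly onto a cell of \(\mathfrak{R}\).
- The slope there has absolute value \(1/m_{i,j}\), so \(\lambda(Q_k^{(i)})=m_{i,j}\lambda(R_j)\).
- The images on each \(R_i\) form a connected interval.

The orientations \(\sigma(i)\) are passed in rather than computed with the sign rule above. The ordering of subintervals for \(\sigma(i)=-1\) is our convention. Rows are assumed to satisfy \(\sum_j m_{i,j}\lambda(R_j)=\lambda(R_i)\). The name `semi_markov_map` is hypothetical.

```python
import numpy as np

def semi_markov_map(c, M, sigma):
    """Piecewise-linear semi-Markov map built from a Frobenius-Perron matrix.

    c: breakpoints c_0 < ... < c_N of the partition (cell j = [c[j], c[j+1]], 0-based).
    M: (N, N) matrix whose nonzero entries in each row are contiguous.
    sigma: +1 / -1 orientation of the map on each cell (assumed to be given).
    On cell i, the subinterval mapped onto cell j has length m_ij * lambda(R_j),
    so the slope there has absolute value 1 / m_ij.
    """
    c = np.asarray(c, dtype=float)
    M = np.asarray(M, dtype=float)
    N = len(c) - 1
    lam = np.diff(c)
    knots = []
    for i in range(N):
        js = np.flatnonzero(M[i] > 0)       # image cells, in increasing order
        if sigma[i] < 0:
            js = js[::-1]                   # decreasing branch: highest image cell first
        lengths = M[i, js] * lam[js]        # lambda(Q_k^(i)) = m_ij * lambda(R_j)
        xs = c[i] + np.concatenate(([0.0], np.cumsum(lengths)))
        xs[-1] = c[i + 1]                   # absorb rounding so the pieces tile cell i
        if sigma[i] > 0:
            ys = np.concatenate(([c[js[0]]], c[js + 1]))
        else:
            ys = np.concatenate(([c[js[0] + 1]], c[js]))
        knots.append((xs, ys))

    def S_hat(x):
        x = np.atleast_1d(np.asarray(x, dtype=float))
        cell = np.clip(np.searchsorted(c, x, side="right") - 1, 0, N - 1)
        out = np.empty_like(x)
        for i, (xs, ys) in enumerate(knots):
            sel = cell == i
            out[sel] = np.interp(x[sel], xs, ys)  # linear between consecutive knots
        return out

    return S_hat

# Tent map on the two-cell partition {[0, 0.5], [0.5, 1]}: every entry equals 1/2
S_hat = semi_markov_map([0.0, 0.5, 1.0], np.full((2, 2), 0.5), sigma=[+1, -1])
print(S_hat([0.1, 0.3, 0.6, 0.9]))  # approx. [0.2 0.6 0.8 0.2]
```

The same function reproduces the tent map exactly for a four-cell partition. This uses breakpoints \(\{0, 0.25, 0.5, 0.75, 1\}\), nonzero entries all equal to 1/2 and \(\sigma=(+1,+1,-1,-1)\).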

The construction of the piecewise-linear semi-Markov transformation to approximate the original continuous nonlinear map is depicted in Fig. 10.

A smooth version of the estimated transformation can be obtained by fitting a polynomial smoothing spline.

4.4 Numerical example 2

The extended reconstruction algorithm is demonstrated using the quadratic (logistic) map (Fig. 11)

$$\begin{aligned} S(x)=4x(1-x),\quad x\in [0,1]. \end{aligned}$$

It can be shown that \(\{P_{S}^{n}\}\) associated with this transformation is asymptotically stable [41].

A set of initial states \(X_{0} =\{x_{0,j} \}_{j=1}^{\theta }\), \(\theta =5\times 10^{3}\), generated by sampling from the uniform probability density function \(f_{0} (x)=\chi _{\left[ {0,1}\right] } (x)\), was iterated using \(S\) to generate a corresponding set of final states \(X_{T} =\{x_{T,j} \}_{j=1}^{\theta }\), where \(T=30{,}000\). The data set \(X_{T}\) was used to search for a uniform partition \(\Delta \) with \({N}^{'}\) intervals, \(1\le N^{\prime }\le \left\lfloor {\theta /\log \theta }\right\rfloor =587\), that maximizes the penalized log-likelihood function in Eq. (12); in this case, the maximum is attained at \({N}^{'}=145\). The estimated invariant density \(f_{C}^{*} (x)\) with respect to the 145-interval partition is shown in Fig. 12. In this example, the longest strictly monotone subsequence \(\overline{L}\) of \(L=\{l_{j}\}_{j=1}^{144}\), \(l_{j}=145|h^{\prime }_{j+1} -h^{\prime }_{j}|\), has 52 elements, and the minimization of

is achieved for \(\overline{l}_{20} =0.1560\), as shown in Fig. 13. This corresponds to a final Markov partition with 72 intervals. The invariant density on the irregular partition \({\mathfrak {R}}\) with 72 intervals is shown in Fig. 14.
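Eq. (12) and the minimized criterion are not reproduced here, so the following is only a sketch under stated assumptions:

- The penalized log-likelihood is assumed to take the Birgé–Rozenholc form for regular histograms, \(\sum_j N_j\log(DN_j/n)-(D-1+(\log D)^{2.5})\), one of the criteria discussed by Rozenholc et al. [42]. The search range \(1\le D\le\lfloor n/\log n\rfloor\) gives 587 for \(n=5000\), as in the text.
- \(h'_j\) is taken to be the histogram height of cell \(j\).
- The data are illustrative. The samples are drawn directly from the arcsine density rather than by iterating the map 30,000 times, so the printed values are not expected to reproduce 145 or 52.

```python
import numpy as np
from bisect import bisect_left

def penalized_loglik(x, D):
    """Birge-Rozenholc penalized log-likelihood of the D-bin regular histogram of x on [0, 1]."""
    n = len(x)
    counts = np.bincount(np.minimum((x * D).astype(int), D - 1), minlength=D)
    nz = counts[counts > 0]
    return np.sum(nz * np.log(D * nz / n)) - (D - 1 + np.log(D) ** 2.5)

def best_regular_partition(x):
    """Number of bins N' in 1..floor(n / log n) maximising the penalized log-likelihood."""
    D_max = int(np.floor(len(x) / np.log(len(x))))
    scores = [penalized_loglik(x, D) for D in range(1, D_max + 1)]
    return int(np.argmax(scores)) + 1, D_max

def jumps(x, D):
    """l_j = D * |h_{j+1} - h_j|, with h_j the D-bin histogram heights (assumed meaning of h')."""
    counts = np.bincount(np.minimum((x * D).astype(int), D - 1), minlength=D)
    h = D * counts / len(x)
    return D * np.abs(np.diff(h))

def longest_strictly_increasing(seq):
    """Patience-sorting O(n log n) longest strictly increasing subsequence."""
    tails, tail_idx, prev = [], [], [-1] * len(seq)
    for k, v in enumerate(seq):
        pos = bisect_left(tails, v)  # bisect_left: equal values do not extend a run
        if pos > 0:
            prev[k] = tail_idx[pos - 1]
        if pos == len(tails):
            tails.append(v)
            tail_idx.append(k)
        else:
            tails[pos] = v
            tail_idx[pos] = k
    out, k = [], (tail_idx[-1] if tail_idx else -1)
    while k != -1:
        out.append(seq[k])
        k = prev[k]
    return out[::-1]

def longest_strictly_monotone(seq):
    """Longer of the longest strictly increasing and strictly decreasing subsequences."""
    inc = longest_strictly_increasing(list(seq))
    dec = [-v for v in longest_strictly_increasing([-v for v in seq])]
    return inc if len(inc) >= len(dec) else dec

# Illustrative data (not the paper's): n = 5000 exact samples from the arcsine density
rng = np.random.default_rng(0)
x = np.sin(0.5 * np.pi * rng.random(5000)) ** 2
N_best, N_max = best_regular_partition(x)
L_bar = longest_strictly_monotone(jumps(x, N_best))
print(N_max, N_best, len(L_bar))
```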

To identify the Frobenius–Perron matrix, 100 sets of initial states \(X_{0}^{i}=\{x_{0,j}^{i}\}_{j=1}^{\theta } \), \(i=1,\ldots ,100\), \(\theta =5\times 10^{3}\), were generated by randomly sampling 100 density functions (see Appendix). The initial states \(X_{0}^{i}\) and their images \(X_{1}^{i}\) under the transformation \(S\) were used to estimate the initial and final density functions on \({\mathfrak {R}}\). Examples of initial and final densities are shown in Fig. 15.
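Estimating the initial and final densities on an irregular partition from samples amounts to a histogram whose counts are divided by the cell lengths. In the sketch below, the partition, the Beta parameters and the number of density pairs are hypothetical stand-ins: the Appendix densities are not reproduced here. The map is the logistic map of this example.

```python
import numpy as np

def density_on_partition(samples, c):
    """Histogram density heights on the partition with breakpoints c[0] < ... < c[N].

    h_j = (#samples in R_j) / (n * lambda(R_j)), so sum_j h_j * lambda(R_j) is the
    fraction of samples inside [c[0], c[N]] (np.histogram closes the last cell on the right).
    """
    c = np.asarray(c, dtype=float)
    counts, _ = np.histogram(samples, bins=c)
    return counts / (len(samples) * np.diff(c))

def logistic(x):
    return 4.0 * x * (1.0 - x)

rng = np.random.default_rng(1)
c = np.array([0.0, 0.05, 0.15, 0.3, 0.5, 0.7, 0.85, 0.95, 1.0])  # hypothetical irregular partition
theta = 5000
beta_params = [(2, 5), (5, 2), (0.5, 0.5), (3, 3), (1, 4),
               (4, 1), (2, 2), (1, 1), (6, 3), (3, 6)]          # hypothetical Beta(a, b) initial densities

F0, F1 = [], []
for a, b in beta_params:
    X0 = rng.beta(a, b, size=theta)     # initial states X_0^i
    X1 = logistic(X0)                   # their images X_1^i = S(X_0^i)
    F0.append(density_on_partition(X0, c))
    F1.append(density_on_partition(X1, c))
F0, F1 = np.array(F0), np.array(F1)     # (K, N) arrays, one density per row
print(F0.shape, F1.shape)
```

Stacking the rows in this way yields the data matrices from which a Frobenius–Perron matrix can then be estimated.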

The constructed piecewise-linear semi-Markov transformation with respect to the partition \({\mathfrak {R}}\) is shown in Fig. 16. The smoothed map, obtained by fitting a cubic spline (smoothing parameter: 0.999), is shown in Fig. 17. The relative approximation error is shown in Fig. 18.

The estimated invariant density on \({\mathfrak {R}}\), obtained by iterating the smoothed map 20,000 times starting from the initial states \(X_{0}\), is compared in Fig. 19 with the true invariant density [41] \(f^{{*}}(x)=1/{\left( {\pi \sqrt{x(1-x)}}\right) }\).
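To compare a histogram on a partition with \(f^{*}\), it is convenient to average \(f^{*}\) over each cell. This can be done with the closed-form distribution function \(F(x)=(2/\pi)\arcsin\sqrt{x}\); indeed \(F'(x)=1/(\pi\sqrt{x(1-x)})\). The sketch below uses a hypothetical uniform 10-cell partition. The samples are drawn by inverse-CDF sampling, \(x=\sin^{2}(\pi u/2)\), rather than by iterating a reconstructed map.

```python
import numpy as np

def arcsine_cell_density(c):
    """Average of f*(x) = 1/(pi sqrt(x(1-x))) over each cell [c_j, c_{j+1}].

    Uses F(x) = (2/pi) arcsin(sqrt(x)), so the value for cell j is
    (F(c_{j+1}) - F(c_j)) / (c_{j+1} - c_j), directly comparable with a histogram.
    """
    c = np.asarray(c, dtype=float)
    F = (2.0 / np.pi) * np.arcsin(np.sqrt(c))
    return np.diff(F) / np.diff(c)

c = np.linspace(0.0, 1.0, 11)                         # hypothetical uniform 10-cell partition
g = arcsine_cell_density(c)

rng = np.random.default_rng(2)
x = np.sin(0.5 * np.pi * rng.random(200_000)) ** 2    # inverse-CDF samples from f*
hist, _ = np.histogram(x, bins=c, density=True)
err = np.max(np.abs(hist - g))
print(np.round(g, 3))
print(f"max |histogram - cell average| = {err:.3f}")
```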

The performance of the algorithm for different noise levels was also evaluated; the results are summarized in Table 2. As can be seen, the approximation error remains relatively low (\(<\)5 %) even for noise levels (\(>\)50 %) that normally cause severe problems for reconstruction algorithms based on time series data.

5 Conclusions

This paper has addressed in a systematic manner the problem of inferring one-dimensional chaotic maps from sequences of probability density functions. Compared with previous solutions to the inverse Frobenius–Perron problem, we have derived sufficient conditions and rigorously demonstrated that the proposed approach can uniquely identify the transformation that describes the underlying chaotic dynamics. In particular, the reconstructed maps exhibit the same dynamics as the original systems and can therefore be used to carry out stability analysis, determine invariant sets and manipulate the dynamical behaviour of the underlying system of interest. The applicability of the proposed methodology and its performance for different levels of noise were demonstrated using numerical simulations involving a piecewise-linear expanding transformation as well as a continuous one-dimensional nonlinear transformation.

One of the reasons for developing the method presented in this paper was to characterize the heterogeneity of human embryonic stem cell (hESC) cultures and to develop efficient protocols for controlling their differentiation. In essence, sorted sub-populations of stem cells expressing different levels of particular cell-surface markers can over time reconstitute the equilibrium distribution of the parent population [46]. Using flow cytometry, it is possible to follow the evolution of the initial density function of the sorted cell fraction, by sampling and re-plating cells, over a number of days. The sequence of density functions generated in this process can then be used to infer the underlying transformation that governs the process, which can help elucidate the existence of cellular substates [47] that, potentially, correspond to the unstable fixed points of the reconstructed map. We have designed the experiments and started generating data by using flow cytometry to sort out cells with different initial densities. These are re-plated and re-analysed in subsequent days to generate sequences of density functions that are required to solve the generalized inverse problem.

The method could be extended to higher-dimensional maps, but this is not necessarily straightforward. The main limitation is the lack of rigorous theoretical results for two- and higher-dimensional maps: while for one-dimensional maps there is a complete and elegant theoretical framework, for higher-dimensional maps key results are available only for some special cases. A possible solution is to convert the \(N\)-dimensional problem into a 1-D problem [29] and estimate the corresponding F–P matrix using the approach introduced in this paper. The main challenge is then solving the inverse Ulam problem, i.e. constructing the transformation from the estimated F–P matrix. As noted in [30], for higher-dimensional systems Ulam’s conjecture has been proven only for some special cases [48–51]. The method we intend to explore for constructing a transformation that approximates the original high-dimensional map is the one introduced by Bollt [30].

References

Ott, E.: Chaos in Dynamical Systems. Cambridge University Press, Cambridge (1993)

Coen, E.M., Gilbert, R.G., Morrison, B.R., Leube, H., Peach, S.: Modelling particle size distributions and secondary particle formation in emulsion polymerisation. Polymer 39(26), 7099–7112 (1998). doi:10.1016/S0032-3861(98)00255-9

Wang, H., Baki, H., Kabore, P.: Control of bounded dynamic stochastic distributions using square root models: an applicability study in papermaking systems. Trans. Inst. Meas. Control 23(1), 51–68 (2001)

Mondragón, R.J.C.: A model of packet traffic using a random wall model. Int. J. Bifurc. Chaos 09(07), 1381–1392 (1999). doi:10.1142/S021812749900095X

Rogers, A., Shorten, R., Heffernan, D.M.: Synthesizing chaotic maps with prescribed invariant densities. Phys. Lett. A 330(6), 435–441 (2004). doi:10.1016/j.physleta.2004.08.022

Wigren, T.: Soft uplink load estimation in WCDMA. IEEE Trans. Veh. Technol. 58(2), 760–772 (2009). doi:10.1109/tvt.2008.926210

Farmer, J.D., Sidorowich, J.J.: Predicting chaotic time series. Phys. Rev. Lett. 59(8), 845–848 (1987)

Casdagli, M.: Nonlinear prediction of chaotic time series. Physica D 35(3), 335–356 (1989). doi:10.1016/0167-2789(89)90074-2

Abarbanel, H.D.I., Brown, R., Kadtke, J.B.: Prediction and system identification in chaotic nonlinear systems: time series with broadband spectra. Phys. Lett. A 138(8), 401–408 (1989). doi:10.1016/0375-9601(89)90839-6

Principe, J.C., Rathie, A., Kuo, J.-M.: Prediction of chaotic time series with neural networks and the issue of dynamic modeling. Int. J. Bifurc. Chaos 02(04), 989–996 (1992). doi:10.1142/S0218127492000598

Aguirre, L.A., Billings, S.A.: Identification of models for chaotic systems from noisy data: implications for performance and nonlinear filtering. Physica D 85(1–2), 239–258 (1995). doi:10.1016/0167-2789(95)00116-L

Billings, S.A., Coca, D.: Discrete wavelet models for identification and qualitative analysis of chaotic systems. Int. J. Bifurc. Chaos 09(07), 1263–1284 (1999). doi:10.1142/S0218127499000894

Lueptow, R., Akonur, A., Shinbrot, T.: PIV for granular flows. Exp. Fluids 28(2), 183–186 (2000)

Wu, J., Tzanakakis, E.S.: Deconstructing stem cell population heterogeneity: single-cell analysis and modeling approaches. Biotechnol. Adv. 31(7), 1047–1062 (2013). doi:10.1016/j.biotechadv.2013.09.001

Friedman, N., Boyarsky, A.: Construction of Ergodic transformations. Adv. Math. 45(3), 213–254 (1982)

Ershov, S.V., Malinetskii, G.G.: The solution of the inverse problem for the Perron–Frobenius equation. USSR Comput. Math. Math. Phys. 28(5), 136–141 (1988)

Góra, P., Boyarsky, A.: A matrix solution to the inverse Perron–Frobenius problem. Proc. Am. Math. Soc. 118(2), 409–414 (1993)

Diakonos, F.K., Schmelcher, P.: On the construction of one-dimensional iterative maps from the invariant density: the dynamical route to the beta distribution. Phys. Lett. A 211(4), 199–203 (1996)

Pingel, D., Schmelcher, P., Diakonos, F.K.: Theory and examples of the inverse Frobenius–Perron problem for complete chaotic maps. Chaos 9(2), 357–366 (1999)

Huang, W.: Constructing chaotic transformations with closed functional forms. Discret. Dyn. Nat. Soc. 2006, 1–16 (2006)

Huang, W.: On the complete chaotic maps that preserve prescribed absolutely continuous invariant densities. In: Topics on Chaotic Systems: Selected Papers from CHAOS 2008 International Conference, pp. 166–173 (2009)

Huang, W.: Constructing multi-branches complete chaotic maps that preserve specified invariant density. Discret. Dyn. Nat. Soc. 2009, 14 (2009). doi:10.1155/2009/378761

Boyarsky, A., Góra, P.: An irreversible process represented by a reversible one. Int. J. Bifurc. Chaos 18(07), 2059–2061 (2008)

Baranovsky, A., Daems, D.: Design of one-dimensional chaotic maps with prescribed statistical properties. Int. J. Bifurc. Chaos 5(6), 1585–1598 (1995)

Diakonos, F.K., Pingel, D., Schmelcher, P.: A stochastic approach to the construction of one-dimensional chaotic maps with prescribed statistical properties. Phys. Lett. A 264(2–3), 162–170 (1999). doi:10.1016/S0375-9601(99)00775-6

Koga, S.: The inverse problem of Flobenius–Perron equations in 1D difference systems-1D map idealization. Prog. Theor. Phys. 86(5), 991–1002 (1991)

Rogers, A., Shorten, R., Heffernan, D.M.: A novel matrix approach for controlling the invariant densities of chaotic maps. Chaos Solitons Fractals 35(1), 161–175 (2008). doi:10.1016/j.chaos.2006.05.017

Berman, A., Shorten, R., Leith, D.: Positive matrices associated with synchronised communication networks. Linear Algebra Appl. 393, 47–54 (2004). doi:10.1016/j.laa.2004.07.016

Rogers, A., Shorten, R., Heffernan, D.M., Naughton, D.: Synthesis of piecewise-linear chaotic maps: invariant densities, autocorrelations, and switching. Int. J. Bifurc. Chaos 18(8), 2169–2189 (2008)

Bollt, E.M.: Controlling chaos and the inverse Frobenius–Perron problem: global stabilization of arbitrary invariant measures. Int. J. Bifurc. Chaos 10(5), 1033–1050 (2000)

Lozowski, A.G., Lysetskiy, M., Zurada, J.M.: Signal processing with temporal sequences in olfactory systems. IEEE Trans. Neural Netw. 15(5), 1268–1275 (2004). doi:10.1109/tnn.2004.832730

Pikovsky, A., Popovych, O.: Persistent patterns in deterministic mixing flows. Europhys. Lett. 61(5), 625 (2003)

van Wyk, M.A., Ding, J.: Stochastic analysis of electrical circuits. In: Chaos in Circuits and Systems, pp. 215–236. World Scientific, Singapore (2002)

Isabelle, S.H., Wornell, G.W.: Statistical analysis and spectral estimation techniques for one-dimensional chaotic signals. IEEE Trans. Signal Process. 45(6), 1495–1506 (1997)

Götz, M., Abel, A., Schwarz, W.: What is the use of Frobenius–Perron operator for chaotic signal processing? In: Proceedings of NDES. Citeseer (1997)

Altschuler, S.J., Wu, L.F.: Cellular heterogeneity: do differences make a difference? Cell 141(4), 559–563 (2010). doi:10.1016/j.cell.2010.04.033

MacArthur, B.D., Lemischka, I.R.: Statistical mechanics of pluripotency. Cell 154(3), 484–489 (2013). doi:10.1016/j.cell.2013.07.024

Schütte, C., Huisinga, W., Deuflhard, P.: Transfer operator approach to conformational dynamics in biomolecular systems. In: Fiedler, B. (ed.) Ergodic Theory, Analysis, and Efficient Simulation of Dynamical Systems, pp. 191–223. Springer, Berlin (2001)

Potthast, R.: Implementing Turing Machines in Dynamic Field Architectures. arXiv preprint arXiv:1204.5462 (2012)

Boyarsky, A., Góra, P.: Laws of Chaos: Invariant Measures and Dynamical Systems in One Dimension. Probability and its Applications. Birkhäuser, Boston, MA (1997)

Lasota, A., Mackey, M.C.: Chaos, Fractals, and Noise: Stochastic Aspects of Dynamics, 2nd edn. Springer, New York (1994)

Rozenholc, Y., Mildenberger, T., Gather, U.: Combining regular and irregular histograms by penalized likelihood. Comput. Stat. Data Anal. 54(12), 3313–3323 (2010). doi:10.1016/j.csda.2010.04.021

Aguirre, L.A., Billings, S.A.: Retrieving dynamical invariants from chaotic data using NARMAX models. Int. J. Bifurc. Chaos 05(02), 449–474 (1995). doi:10.1142/S0218127495000363

Ulam, S.M.: A Collection of Mathematical Problems: Interscience Tracts in Pure and Applied Mathematics, vol. 8. Interscience, New York (1960)

Li, T.-Y.: Finite approximation for the Frobenius–Perron operator. A solution to Ulam’s conjecture. J. Approx. Theory 17(2), 177–186 (1976). doi:10.1016/0021-9045(76)90037-X

Chang, H.H., Hemberg, M., Barahona, M., Ingber, D.E., Huang, S.: Transcriptome-wide noise controls lineage choice in mammalian progenitor cells. Nature 453(7194), 544–547 (2008)

Enver, T., Pera, M., Peterson, C., Andrews, P.W.: Stem cell states, fates, and the rules of attraction. Cell Stem Cell 4(5), 387–397 (2009)

Froyland, G.: Finite approximation of Sinai–Bowen–Ruelle measures for Anosov systems in two dimensions. Random Comput. Dyn. 3(4), 251–264 (1995)

Froyland, G.: Computing physical invariant measures. In: International Symposium on Nonlinear Theory and its Applications, Japan, Research Society of Nonlinear Theory and its Applications (IEICE), pp. 1129–1132 (1997)

Boyarsky, A., Lou, Y.: Approximating measures invariant under higher-dimensional chaotic transformations. J. Approx. Theory 65(2), 231–244 (1991)

Ding, J., Zhou, A.H.: Piecewise linear Markov approximations of Frobenius–Perron operators associated with multi-dimensional transformations. Nonlinear Anal. Theory Methods Appl. 25(4), 399–408 (1995)

Acknowledgments

X. N. gratefully acknowledges the support from the Department of Automatic Control and Systems Engineering at the University of Sheffield and China Scholarship Council. D. C. gratefully acknowledges the support from MRC, BBSRC and the Human Frontier Science Program.


Appendix: initial states for example 2


The 100 sets of initial states used in example 2 are obtained by sampling the following density functions:

where \(\hbox {B}(\cdot ,\;\cdot )\) is the beta function.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Nie, X., Coca, D. Reconstruction of one-dimensional chaotic maps from sequences of probability density functions. Nonlinear Dyn 80, 1373–1390 (2015). https://doi.org/10.1007/s11071-015-1949-9


DOI: https://doi.org/10.1007/s11071-015-1949-9