Abstract

Traditional deep learning methods require a large amount of labeled data for model training, which is laborious and costly in real word. Few-shot learning (FSL) aims to recognize novel classes with only a small number of labeled samples to address these challenges. We focus on metric-based few-shot learning with improvements in both feature extraction and metric method. In our work, we propose the Pluralistic Attention Network (PANet), a novel attention-oriented framework, involving both a local encoded intra-attention(LEIA) module and a global encoded reciprocal attention(GERA) module. The LEIA is designed to capture comprehensive local feature dependencies within every single sample. The GERA concentrates on the correlation between two samples and learns the discriminability of representations obtained from the LEIA. The two modules are complementary to each other and ensure the feature information within and between images can be fully utilized. Furthermore, we also design a dual-centralization (DC) cosine similarity to eliminate the disparity of data distribution in different dimensions and enhance the metric accuracy between support and query samples. Our method is thoroughly evaluated with extensive experiments, and the results demonstrate that with the contribution of each component, our model can achieve high-performance on four widely used few-shot classification benchmarks of miniImageNet, tieredImageNet, CUB-200-2011 and CIFAR-FS.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

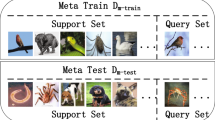

In recent years, deep neural networks have achieved excellent performance on various challenging tasks, such as image classification [1, 2], semantic segmentation [3, 4], object detection [5, 6], text recognition [7, 8] and voice recognition [9, 10]. However, such powerful performance relies heavily on large-scale labeled samples and preparing sufficient human-annotated datasets is often laborious and expensive in practice. To alleviate the performance of the model in the case of limited samples, the GAN-based methods [11, 12] generate new data through model learning to expand the number of samples of the original dataset. Domain adaptation methods [13,14,15], on the other hand, enhance the model’s transferability by aligning or adjusting the data distributions between the source and target domains. Few-shot learning (FSL), inspired by human learning, can easily absorb new concepts from only few examples. FSL aims to recognize a set of novel classes (query set) with few labeled data utilizing the knowledge learned from a set of base classes (support set) with abundant labeled samples[16].

Metric-based few-shot learning method is a simple and effective solution [17,18,19] which can achieve promising performance. The metric-based method usually contains a feature encoder and a metric function. The feature encoder is used to map samples into feature vector. The metric function is applied to compute the similarity between the query sets and the support sets. Then the query sample can be accurately categorized based on the nearest neighbor principle. In order to improve the accuracy of metric-based methods, the existing approaches attempt to train a well-performing feature encoder that matches with a fixed metric, such as MatchingNet [20], or directly to learn an appropriate metric function via a CNN model, such as RelationNet [18]. Attention mechanism can divert the focus of the model to the most important regions of an image and disregard irrelevant parts [21], which shows great advantages in feature extraction stage. The primary challenge in the few-shot learning problem is the scarcity of samples, and the effective utilization of limited sample features is of paramount concern. In the image classification tasks, global features can provide insights into the commonalities and overall differences between classes. Furthermore, by capturing local features that are relevant to specific objects or categories, the model can become less sensitive to irrelevant information, thereby enhancing the distinctiveness of images and facilitating the discrimination between different categories. Attention-based architectures have been widely applied for FSL, such as cross attention network [22], self-attention relation network [23] and adaptive attention [24]. However, only one or two kinds of attentions are involved in these methods which has restricted the extraction of feature information to limited dimensions.

In this paper, we propose PANet, a two-phase attention-oriented method to address this challenge. As illustrated in Fig. 1, it mainly consists of three modules: feature embedding module, local encoded intra-attention(LEIA) module and global encoded reciprocal attention(GERA) module. We combine the encode regional spatial [25] and adjacent channel attention together in a sequential way to form the LEIA, which aims to capture comprehensive local feature dependencies in every single sample and prepare informative inputs for the GERA module. The GERA module incorporates global attention maps of both the support and query images to locate the most relevant regions in support-query image pairs, thus enhancing the discriminability of representations obtained from the LEIA module. The two modules are mutually complementary from two different aspects and ensure the feature information within or between images can be both fully utilized. In addition, we also propose a dual-centralization (DC) cosine similarity method which adopts centralizing operations during the cosine similarity calculation. DC can eliminate the disparity of data distribution in different dimensions and enhance the accuracy and reliability of the metric. Our model achieves new state-of-the-art performances. We also conduct a series of ablation experiments and the results demonstrate that each key component in our proposed model plays a critical role. Our contributions can be summarized as follows:

-

We focus on adjacent channel attention and design a novel local encoded intra-attention module, which can learn local feature dependencies between samples along both channel and spatial dimensions.

-

We propose a novel global encoded reciprocal attention module for few-shot image classification, which captures discriminative global features by interactively learning spatial and channel attention between the support and query samples.

-

We design a dual-centralization (DC) cosine similarity metric, which performs twice centering operations on different dimensions of the feature vector to alleviate the disparity in data distribution and improve the reliability of cosine similarity.

Overall framework of our method. The PANet contains three modules: feature embedding module, local encoded intra-attention(LEIA) module and global encoded reciprocal attention(GERA) module. The feature embedding module generates the feature maps for the input images. The LEIA module refines regional spatial and adjacent channel attention upon feature maps. The GERA module computes the encoded reciprocal spatial and channel attention between the support and query representations

2 Related Work

2.1 Few-Shot Learning

Few-shot learning (FSL) aims to learn a classifier from a set of base classes with abundant labeled samples, then adapt to a set of novel classes with few labeled data [26]. Existing studies on FSL can be mainly divided into two categories. Metric-based methods aim to learn a good feature encoder and an appropriate metric function. The feature encoder provides reliable feature maps for the metric function to categorize novel samples according to the nearest neighbor principles. For example, ProtoNet [17] utilizes CNN to extract features and minimizes the euclidean distance between the query samples and the category prototype of support samples to make the correct classification. DeepEMD [27] also uses CNN as the feature encoder, which employs the earth mover distance to compare the discriminative representations composed of local features for image classification tasks. In the work of [28], a cycle optimization metric network is proposed to explore the commutative relationship between support and query samples, achieving performance improvement through a forward-backward network framework guided by the cycle-consistency loss. Optimization-based methods can quickly adapt to novel tasks in a few optimization steps. For example, MAML [29] manages to seek a good initialization that can be quickly adapted to new tasks with fine-tuning. MetaOptNet [30] proposes to formulate the use of linear classifiers as convex learning problems and exploit gradient-based techniques to obtain the suitable parameters of the feature encoder that generalizes well across tasks.

2.2 Few-Shot Learning on Image Classification

Few-shot image classification is a common and important task in few-shot learning, which primarily focuses on the challenge of classifying new categories with only a limited number of samples. Tseng et al. [31] design a feature transformation layer that enhances image features through affine transformations to simulate various feature distributions in different domains during the training phase. This allows the model to capture variations in feature distributions across different domains, resulting in strong generalization capabilities for cross-domain few-shot image classification tasks. Li et al. [32] employ a weight imprinting strategy and design a knowledge transfer mechanism to extensively exploit prior knowledge for inferring information about new categories. They also use a fine-tuning strategy during the training phase to simulate various feature distributions in different domains, reducing the impact of changes in the data domain on the model. Cao et al. [33] propose COMET, which learns feature embeddings of concepts through an independent concept learner and compares them with concept prototypes. COMET effectively summarizes information about concept dimensions, assigns a concept importance score to each dimension, and enhances the accuracy of fine-grained few-shot image classification. Huang et al. [34] design a novel Low-Rank Pairwise Alignment Bilinear Network (LRPABN) to capture subtle differences between support samples and query samples in fine-grained images to learn an effective distance metric.

2.3 Attention Mechanism

Attention mechanism is widely used in computer vision tasks. Intra-attention pays more attention to the internal connections of the input data. The queries, keys, and values in intra-attention come from the same data source, and are all obtained by the same matrix through different linear transformations. Shaw et al. [35] extend the intra-attention mechanism to efficiently consider representations of the relative positions between sequence elements. Yang et al. [36] model localness for intra-attention networks to enhance the ability of capturing useful local information. Pr et al. [37] design a simple intra-attention layer that performs global attention across all pixels, it also reduces training parameters while maintaining network performance. Reciprocal attention mechanism was first proposed for video question answering task [38] and then has been widely used in various computer vision tasks. For example, Lu et al. [39] utilize co-attention in video object segmentation between frames sampled from a video sequence. In few-shot learning, reciprocal attention focuses on features of both the query and support images at the same time, especially the most relevant regions in support-query image pairs. Hsieh et al. [40] deal with one-shot object detection task based on co-attention mechanism. Hou et al. [22] develop a meta-learner to compute the cross attention between support and query feature maps. Inspired by these works, we design a brand new adjacent channel attention that extracts adjacent channel groups of the central channel to generate attention maps. We also embed relative positional encoding into adjacent channel attention to boost feature representation by exploiting positional information. Then we combine the regional spatial and adjacent channel attention together to form the local encoded intra-attention module, which can capture more comprehensive and meaningful local feature information along two principal dimensions.

3 Methodology

3.1 Problem Definition

FSL aims to classify new categories utilizing few labeled samples. To alleviate the overfitting problem with such a small number of samples, we adopt the meta-learning framework with episodic training strategy. We denote the training set and the testing set in the form of image-label pairs, \({D_{train}=\{X_{tra},Y_{tra}}\}\) and \(D_{test}=\{X_{tst},Y_{tst}\}\), respectively, \(Y_{tra} \cap Y_{tst} = \emptyset \). Both \(D_{train}\) and \(D_{test}\) consist of multiple episodes. The episodes used in training simulate the test process, which can maintain the consistency of the training and testing stage. As for each episode, also called N-way K-shot task, is formed by randomly extracting N classes and K labeled images per class as the support set \(S=\left\{ x_{s}^{i}, y_{s}^{i}\right\} _{i=1}^{N\times K}\). Then randomly select r samples for each class in the remaining images of N classes as the query set \(Q=\left\{ x_{q}^{j}, y_{q}^{j}\right\} _{j=1}^{N\times r}\). We iteratively sample a N-way K-shot episode from \(D_{train}\) to learn a good model. The model learned in this way can be trained with a small number of support samples to achieve rapid identification of query samples for new tasks.

3.2 Overall Framework

As shown in Fig. 1, PANet mainly consists of three components: feature embedding module, local encoded intra-attention(LEIA) module and global encoded reciprocal attention(GERA) module. Given a pair of support and query images as input, the feature encoder can generate the base feature maps, \(F_s\) and \(F_q\in \mathbb {R} ^{C\times H\times W}\), where C denotes the channel size and \(H\times W\) denotes the spatial size of each feature map. Then the LEIA module sequentially focuses on important regions of base feature maps in space and channel dimensions to learn the regional spatial attention representations \(P_s\), \(P_q\in \mathbb {R} ^{C\times H\times W}\) and the adjacent channel attention representations \(D_s\), \(D_q\in \mathbb {R} ^{C\times H\times W}\). Following the LEIA module, the GERA module highlights the relevant regions and computes reciprocal spatial and channel attention maps \(A_{s}^{sp}\) and \(A_{q}^{sp}\), \(A_{s}^{ch}\) and \(A_{q}^{ch}\) between the pair of image representations, where superscript sp denotes the spatial dimension, ch denotes the channel dimension. Then the attended features are aggregated to produce the global attention representations, \(G_s\) and \(G_q\). The above process is conducted to all support images in parallel, and the query sample can be categorized as the class of its nearest support embedding according to the dual-centralization cosine similarity.

3.3 Local Encoded Intra-Attention(LEIA) Module

The LEIA module is designed to capture comprehensive local features of an image, involving both the encoded regional spatial attention and the adjacent channel attention. In our LEIA module, \(F_s\) and \(F_q\) sequentially pass through regional spatial attention and adjacent channel attention module to obtain local encoded intra-attention representations, \(D_s\) and \(D_q\). \(D_s\) and \(D_q\) contain both regional spatial information and adjacent channel information, improving the quality of feature representation. The Figs. 2 and 3 describe the two main components of LEIA module.

3.3.1 Regional Spatial Attention

Following the structure of stand-alone self-attention [37], our module also can capture the regional spatial information in feature representation. Figure 2 illustrates the detailed operation of regional spatial attention. We first apply unfold operation to extract the local region \(L\in \mathbb {R} ^{C\times k\times k}\) with spatial extent k centered around every pixel \(f\in \mathbb {R} ^{C\times 1\times 1}\) in feature maps \(F\in \mathbb {R} ^{C\times H\times W}\). Then we encode the position information into every local region and calculate the regional spatial attention exerted on every single pixel. Finally, we obtain the pixel \(p\in \mathbb {R} ^{C\times 1\times 1}\) with regional spatial attention and all the pixels with regional spatial attention can be represented as \(P\in \mathbb {R} ^{C\times H\times W}\).

Given a pixel \(f_{(i,j)}\in \mathbb {R} ^{C\times 1\times 1}\) and its local region of pixels in positions \((x,y)\in L_k\left( i,j \right) \), \(p_{(i,j)}\) can be computed as:

where the query \(q_{(i,j)}=W_{Q} f_{(i,j)}\), keys \(k_{(x,y)}=W_{K} f_{(x,y)}\), and values \(v_{(x,y)}=W_{V} f_{(x,y)}\) are linear transformations of the center pixel \(f_{(i,j)}\) and its neighbor pixels \(f_{(x,y)}\) in local region L. \(W_{Q}\), \(W_{K}\), \(W_{V}\) are all independent linear transformation layers. A row offset embedding \(e_{x-i}\in \mathbb {R} ^{\frac{C}{2}\times H\times 1}\) and a column offset embedding \(e_{y-j}\in \mathbb {R} ^{\frac{C}{2}\times 1\times W}\) are joined to form the relative positional embedding \(e_{(x-i, y-j)}\). The \(e_{(x-i, y-j)}\) is utilized to encode position information for \(f_{(x,y)}\). The computation in Eq. 1 is applied for every pixel \(f_{(i, j)}\) and the output \(p_{(i,j)}\) is concatenated together to generate the regional spatial attention representation P.

3.3.2 Adjacent Channel Attention

We propose a novel channel attention module to learn the latent relationships between channels of feature maps. Given the regional spatial attention representation P as input, we use pooling operation to aggregate spatial information and also apply unfold operation to extract the adjacent group \(J\in \mathbb {R} ^{k\times 1\times 1}\) with extent k centered around every channel. Then we compute the adjacent channel attention exerted on every single channel and adopt aggregation to acquire the \(d\in \mathbb {R} ^{1\times 1\times 1}\) with adjacent channel information. Finally, all the learned channels are combined together to form the adjacent channel attention representation \(D\in \mathbb {R} ^{C\times H\times W}\). Figure 3 shows the details process of our adjacent channel attention. Given a channel \(p_i\) and its neighbor channel \(p_{x}\) in adjacent group J where \(x\in J_{k}\left( i\right) \), the output \(d_{i}\) is computed as follows:

where \(\sigma \) is a Sigmoid function. We use offset \(e_{x-i}\in \mathbb {R} ^{k\times 1\times 1}\) to represent the relative channel embedding which can be utilized to encode position information for \(p_x\). The query \(q_i\), keys \(k_x\) and values \(v_x\) in Eq. 2 are denoted below:

Where \(W_Q\), \(W_K\) and \(W_V\) indicate independent linear transform operation. To compute the channel attention efficiently, we apply both the average-pooling and max-pooling to aggregate spatial information.

3.4 Global Encoded Reciprocal Attention(GERA) Module

We design a global encoded reciprocal attention(GERA) module to capture discriminative global features by interactively learning spatial and channel attention between the support and query samples. Figure 4 describes the structure of GERA module. The GERA module first transforms the local representations \(D_{s}\) and \(D_{q}\) to transformation representations \(T_{s}\) and \(T_{q}\). Then the module encodes the position information and provides the intermediate representations \(I_{s}\) and \(I_{q}\). After that, the module performs cross operation between the support and query representations and obtains the reciprocal attention maps \(A_{s}^{sp}\), \(A_{q}^{sp}\), \(A_{s}^{ch}\) and \(A_{q}^{ch}\) in both the spatial and channel dimensions. Finally, the global attention representations \(G_s\) and \(G_q\) are acquired by multiplying attention maps with the input representations of the GERA module in a sequential manner. The detailed computation is presented below.

The linear transformation for the local attention representations \(D_{s}\) and \(D_{q}\) can be represented as:

where \(W_{s}\) and \(W_{q}\) represent independent linear transform operation. \(T_{s}\) and \(T_{q}\in \mathbb {R} ^{C\times H\times W}\) denote the transformation representations. To better utilize the position information, we apply the relative position embedding \(E_{s}\) and \(E_{q}\) to encode \(T_{s}\) and \(T_{q}\):

where \(\oplus \) denotes the element addition. \(I_s\) and \(I_q\in \mathbb {R} ^{C\times H\times W}\) are intermediate representations. Then the reciprocal attention maps are calculated in turn:

Here, \(\otimes \) denotes matrix multiplication, superscript sp denotes the spatial dimension, \(A_{s}^{sp}\) and \(A_{q}^{sp}\in \mathbb {R} ^{(H\times W)\times (H\times W)}\) denote reciprocal spatial attention maps.

where \(\odot \) denotes the element multiplication. GP is the global pooling operation involving both the average-pooling and max-pooling. Superscript ch denotes the channel dimension. \(A_{s}^{ch}\) and \(A_{q}^{ch}\in \mathbb {R} ^{C\times 1\times 1}\) represent reciprocal channel attention maps. Finally, the global attention representations \(G_{s}\) and \(G_{q}\in \mathbb {R} ^{C\times H\times W}\) can be gained by the following calculation:

\(G_{s}\) and \(G_{q}\) are the ultimate representations of PANet owned with pluralistic attentions: regional spatial attention, adjacent channel attention, global spatial attention and global channel attention. These attentions are complementary to each other and conspicuously enhance the distinctiveness of features obtained from both the support and query samples.

3.5 Dual-centralization (DC) Cosine Similarity

In this section, we design a new metric approach to compute the similarity between the support and query representations. We first aggregate the spatial information of \(G_s\) and \(G_q\) using the global pooling operation and get final embeddings s and \(q\in \mathbb {R} ^{C}\). Then we perform dual-centralization on different dimensions of these embeddings to enhance the reliability of cosine similarity. The complete calculation process is as follows:

where N is the total number of support embeddings, M is the total number of query embeddings, and C is the total number of channels. The first centralization is applied among all the support or query embeddings. The second centralization is applied to the channel dimension of each embedding. After two centralizing operations, we get the adjusted embeddings \(s^{\prime }\) and \(q^{\prime }\in \mathbb {R} ^{C}\). Before calculating the similarity, we average the K support embeddings for each class to obtain a set of prototype embeddings \(\overline{s^{\prime }}\):

Then the cosine similarity is utilized to metric the distance between prototype and query embeddings. The similarity score logit is defined as:

where \(sim<\cdot>\) means the cosine similarity. By introducing two centralizing operations, our DC cosine similarity alleviates the disparity of data distribution on different dimensions, which makes the similarity metric more accurate and reliable compared with the general cosine similarity.

3.6 Loss Function

In the training process, the total loss L includes the global classification loss \(L_{global}\) and the episode loss \(L_{episode}\). The \(L_{global}\) learns inter-class relationships across the entire data distribution space by training a global classifier. The global classifier adopts a fully-connected layer followed by softmax to correctly classify query samples among overall I categories in the training set. And the \(L_{global}\) is computed as:

where \(f_q\in \mathbb {R} ^{C}\) is the average-pooled base representations. \([w_{1}^{\top }, w_{2}^{\top }, \cdots , w_{I}^{\top }]\) and \([b_1, b_2, \cdots , b_I]\) are weights and biases of the fully-connected layer, respectively. \(L_{episode}\) learns in each episode within few samples per class, which aims to calculate the similarity between query and support samples to realize the recognition of query samples. The \(L_{episode}\) is calculated as:

where \(logit_{n}=sim<\overline{s_{n}^{\prime }}\, \ q_{n}^{\prime }>\) is the similarity score between the prototype of the \(n_{th}\) class and the query sample from the \(n_{th}\) class, \(logit_{n^{\prime }}\) is similar. N is the total number of the classes in a N-way K-shot episode.

The overall loss function is defined as:

where \(\lambda \) is an adjusting hyperparameter that balances the effects of different loss terms. The model can be trained by optimizing L with gradient descent algorithm. The training process of our model is summarized in Algorithm 1.

4 Experiment

In this section, we evaluate our proposed PANet on four standard benchmarks (miniImageNet, CUB-200-2011, CIFAR-FS and tieredImageNet) and compare the results with recent state-of-the-art FSL methods on both 5-way 1-shot and 5-way 5-shot tasks. To verify the effectiveness of major components, we also perform a series of ablation studies and comparison experiments with other attention modules.

4.1 Dataset

miniImageNet is a subset of ImageNet [41]. It consists of 100 categories and each category includes 600 images. Following the instruction of [42], we split the dataset into train, val and test with 64, 16 and 20 classes respectively.

tieredImageNet is another subset of ImageNet. It consists of 608 categories and each category contains 1200 images. Following the setup provided by [43], the dataset is divided into 351 classes for training, 97 classes for validation and 160 classes for testing respectively.

CUB-200-2011 is a fine-grained bird classification dataset [44]. It comprises 11788 images from 200 classes. As suggested in [45], CUB is separated into 100, 50 and 50 categories for training, validation and testing respectively.

CIFAR-FS is a subset of CIFAR-100 [46]. It contains 600 classes and each class includes 100 images. Following the data preparation from [30], we divide the dataset into 64 train categories, 16 validation categories and 20 testing categories.

4.2 Implementation Details

Following the recent FSL works [47, 48], we employ ResNet12 as the backbone of our model and the input image size is set to 84\(\times \)84. During the training stage, the proposed PANet as well as the backbone with a fully-connected layer are trained parallel in a single stage which is different from the commonly used two-stage framework (pretraining followed by episodic meta-training) [19, 27]. Note that the fully-connected layer only provides auxiliary updates for the backbone in the training phase and it will be out of function during the validation and test period. We test 15 query samples for each class in an episode and adopt the average classification accuracy with 95% confidence intervals of randomly sampled 10,000 test episodes as the final results. We employ the stochastic gradient descent (SGD) as the optimizer with weight decay of 0.0005 and momentum of 0.9. The spatial and channel extent k are both set to 5. We train the model for 90 epochs with initial learning rate 0.1 and use a decay factor of 0.05 at the 60th, 70th and 80th epoch. All experiments are implemented by PyTorch toolkit.

4.3 Comparison with State-of-the-Art Methods

To evaluate the performance of our model, we conduct a series of comparison experiments on four standard FSL benchmarks in both 5-way 1-shot and 5-way 5-shot settings. As shown in Table 1, we conduct experiments on two classic datasets, miniImageNet and tieredImageNet. The results explicitly indicate that our model achieves the new state-of-the-art performance compared to other methods, especially in some with complex structure or larger backbone (WRN-28-10 [50, 52], ResNet34 [56], ViT [63], etc.). For example, our method outperforms the FewTRUE [63] 1.58% (1-shot), 1.21% (5-shot) on miniImageNet and 1.73% (1-shot), 1.49% (5-shot) on tieredImageNet. In addition, under the same backbone (ResNet12), compared with the recent method DeepEMDv2 [64], the performance gains of our method is 0.83% and 1.59% on miniImageNet, 0.40% and 0.94% on tieredImageNet. We also validate the performance of our model on CIFAR-FS dataset, as shown in Table 2. Compared with different backbones, our method is still competitive on few-shot classification tasks. It can be seen that our method achieves a 0.73% improvement on the 1-shot task and nearly 2% improvement on the 5-shot task compared to the state-of-the-art methods.

CUB-200-2021 is a fine-grained datasets, and it is more challenging to use it for few-shot image classification tasks. It can be found in Table 3, our method outperforms BaseTransformer [67] 0.70% on 1-shot task and outperforms RENet [58] 0.93% on 5-shot task. The comparison of these results convincingly prove that extracting comprehensive features of a single image and enhancing the transferability between the support and query images can acquire outstanding performance in few-shot classification tasks.

4.4 Comparison with Other Attention Modules

We conduct comparison experiments with other conventional attention modules to verify the advantage of our PANet. The experiments are performed on miniImageNet and CIFAR-FS in 5-way 1-shot setting. We compared our PANet and sub-attention modules (LEIA and GERA) with both single attention methods that only uses one type attention module and multi attention methods that involves several different attention modules. As can be seen in Table 4, the majority of multi attention modules outperform our enhanced baseline, while the single attention modules performs almost as well as our enhanced baseline. GLAM[73] and RENet[58] reach nearly identical accuracies on miniImageNet compared with our two sub-attention modules. However, PANet can achieve much better performance compared with other attention modules by combining LEIA and GERA. We also calculated the parameter count of the model in different attention type in Table 4. Compared with single-attention models, PANet contains more attention mechanisms, therefore requiring more parameters to achieve higher classification accuracy. Among multi-attention models, PANet exhibits a significant performance advantage with a relatively similar parameter count. The comparative results underscore the feasibility of our method.

4.5 Ablation Study

Analyze the effects of each component in our proposed method. In this work, we mainly propose three components: local encoded intra-attention(LEIA) module, global encoded reciprocal attention(GERA) module and dual-centralization (DC) cosine similarity. To investigate the effects of each component, we conduct thorough ablation experiments on miniImageNet and CIFAR-FS datasets in 5-way 1-shot setting. We adopt the ResNet12 with general cosine similarity as the baseline. Then we conduct ablation experiments to analyze the impact of the three components on the model. We first evaluate the effect of DC on the model and then carry out the following ablation experiments on the basis of enhanced baseline (baseline with DC). The detailed results in Table 5 demonstrate that each key component makes pivotal contribution to the final state-of-the-art performance. Our baseline can achieve 65.62\(\%\) on miniImageNet and 72.51\(\%\) on CIFAR-FS. We replace the general cosine similarity with our DC, the accuracy can increase by 1.13\(\%\) and 1.55\(\%\) respectively compared with the baseline. On this basis, we added LEIA to achieve 1.16\(\%\) and 0.86\(\%\) performance gains compared to the enhanced baseline. Then we only use GERA, which can achieve the same effect as LEIA, and has a performance improvement compared to the enhanced baseline. Finally, we achieve higher performance gains by combining LEIA and GERA together, improving more than 2\(\%\) compared with the enhanced baseline on miniImageNet and about 3\(\%\) over the the enhanced baseline on CIFAR-FS.

Moreover, we also analyze the training loss and classification accuracy on the miniImageNet in the 5-way 1-shot setting. Figure 5 shows the training loss of the model under different module combinations. For different combinations, the training loss of the model tends to converge after about 60 epochs. It also can be found that, PANet (DC+LEIA+GERA) has a lower loss compared with other methods, which shows that the combination of the three modules is more conducive to the optimization of the network. Figure 6 depicts the accuracy curves of the baseline and our different models, we can clearly see that our models have faster growth rate and higher accuracy values. Similarly, PANet achieves the highest classification accuracy. The ablation experiments strongly suggest that our proposed PANet is an effective method for FSL.

Arrangement of attention modules. In this experiment, we explore the effect of the arrangement of different submodules in LEIA on its performance. We compare five different ways of arranging submodules of LEIA: (1) regional spatial attention only, (2) adjacent channel attention only, (3) sequential local spatial-channel attention, (4) sequential local channel-spatial attention, and (5) parallel use of both attention modules. Since each module focuses on different dimensions, the order may affect the overall performance. Figure 7 summarizes the 5-way 1-shot experimental results on different attention arranging methods. From the results, we can find that capturing local attention sequentially achieves higher accuracy than doing in parallel on these four datasets. In addition, the order of channel attention and spatial attention also has a great impact on the performance of the model. It can be found that the combination order of spaces and channels can optimize the overall performance of the model. The combination of two attention methods outperforms the spatial or channel attention methods alone, which shows that adopting two kinds of attention is effective, and the optimal permutation strategy further improves the performance.

The choices of the hyperparameter. To select appropriate adjusting hyperparameters for balancing loss terms in Eq. 20, we conduct a series of comparison experiments on miniImageNet, tieredImageNet, CUB-200-2011 and CIFAR-FS. Figure 8a shows the 5-way 1-shot and 5-way 5-shot accuracies on the test set of miniImageNet, we can find that \(\lambda = 0.25\) is the best among different adjusting hyperparameters for both 5-way 1-shot and 5-way 5-shot settings. Different datasets have different statistics, and the optimal value of \(\lambda \) may be different on multiple datasets. According to experiment results shown from Fig. 8b–d, we can conclude that the choice of an optimal hyperparameter is closely concerned with the sort of dataset and not so relevant with the settings(5-way 1-shot or 5-way 5-shot). For tieredImageNet, we get the best performance on the test sets when the hyperparameter \(\lambda =0.25\). For CIFAR-FS, \(\lambda = 0.5\) is the optimum choice. The model achieves higher accuracy when choosing \(\lambda = 1.5\) on CUB-200-2011.

4.6 Feature Visualization Analysis

In this section, we provide visualization results to further understand the impact of our method. We apply Grad-cam [74] to PANet to visualize the model learning important regions in images. We randomly select two categories of images from the miniImageNet dataset and each category includes two images, then we feed them into the trained PANet. Figure 9 shows the learned weights visualized after the images pass through different attention modules in the PANet. The LEIA module includes regional spatial attention and adjacent channel attention, with the ability to focus and capture local features, making it able to highlight and emphasize targets in images than baseline methods. The GERA model demonstrates its capability to deactivate irrelevant features and locate the common region in the support-query image pair, indicating its proficiency in capturing these global features that are crucial for understanding the broader context and semantic relationships between objects or regions. With the subsequent integration of GERA module, the model exhibits a more accurate understanding of the overall localization of target objects. The highlighted regions in the heatmap increasingly overlap with the regions in the image containing the target objects. Both local and global features are valuable in image classification tasks, and the LEIA and GERA modules have been designed to leverage and exploit these features for accurate support-query image pair analysis.

Heatmaps of each module from PANet. Here we select two categories of four images for visualization. F is the output of the feature extractor, representing the base features; D is the output of LEIA module, representing the learned local features; G is the output of GREA module, representing the learned global attention features; subscripts s and q denote the support and query image, respectively

Comparison with other attention models. We compared four different types of attention mechanisms, SE represents the single channel attention; Local represents the single spatial attention; CAN represents the cross attention; CBAM represents the multi-attention. PANet is our proposed method which involves pluralistic attention mechanisms

We also conducted comparative experiments with other attention models in Fig. 10. For the same input image, we compare it through different attention model and visualize it through the Grad-CAM method. The visualization results show that our proposed PANet can capture broader and more accurate features compared with other attention models.

Figure 11 is t-SNE visualization of the baseline, LEIA, GERA and PANet. We randomly sample 500 images from five test classes of miniImageNet and acquire the embeddings of all images using the trained models in 5-way 1-shot and 5-way 5-shot settings, separately. Then we apply t-SNE to reduce the dimensions of these embeddings into 2D and utilize different colors to represent different categories. From Fig. 11a, we can observe that the class boundaries are fuzzy for the embeddings produced by the baseline. Moreover, samples of the same category are scattered and samples of different categories are overlapped. By adding the LEIA, different classes get roughly precise boundaries in Fig. 11b. In addition, samples of the same category in Fig. 11c become more compact by applying the GERA. As shown in Fig. 11d, with the joint effect of two modules, our proposed method can generate embeddings with more gathering clusters and discriminative class boundaries. In the 5-way 5-shot setting, the visualization is better due to more training samples of the model. From Fig. 11e–h, we can find that the clusters are more compact and the category distinctions are more evident compared with the corresponding 5-way 1-shot visualization results.

5 Conclusion

In this work, we have designed a novel attention framework (PANet) for few-shot classification task, which involves pluralistic attention mechanism including local attention, global attention, spatial attention, channel attention, intra-attention and reciprocal attention. Our model can obtain discriminative representations by extracting comprehensive features of every single image with local encoded intra-attention(LEIA) module and capturing the most relevant regions of support-query sample pairs with global encoded reciprocal attention(GERA) module. Besides, with the contribution of dual-centralization (DC) cosine similarity, the distance between the support and query representations can be computed accurately. The experiment results demonstrate that PANet achieves new state-of-the-art performance on four standard FSL benchmarks and every essential component in the framework plays a crucial role. In future research, we plan to apply our method to other tasks, like semantic segmentation and object detection.

Data availability statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Collier M, Mustafa B, Kokiopoulou E, Jenatton R, Berent J (2021) Correlated input-dependent label noise in large-scale image classification. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 1551–1560

Sellami A, Tabbone S (2022) Deep neural networks-based relevant latent representation learning for hyperspectral image classification. Pattern Recogn 121:108224

Zhou B, Krähenbühl P (2022) Cross-view transformers for real-time map-view semantic segmentation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 13760–13769

Strudel R, Garcia R, Laptev I, Schmid C (2021) Segmenter: transformer for semantic segmentation. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 7262–7272

Wang C-Y, Bochkovskiy A, Liao H-YM (2023) Yolov7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 7464–7475

Li S, Liu Y, Zhang Y, Luo Y, Liu J (2023) Adaptive generation of weakly supervised semantic segmentation for object detection. Neural Process Lett 55(1):657–670

Valy D, Verleysen M, Chhun S, Burie J-C (2018) Character and text recognition of khmer historical palm leaf manuscripts. In: 2018 16th international conference on frontiers in handwriting recognition (ICFHR), pp 13–18. IEEE

Huang M, Liu Y, Peng Z, Liu C, Lin D, Zhu S, Yuan N, Ding K, Jin L (2022) Swintextspotter: Scene text spotting via better synergy between text detection and text recognition. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 4593–4603

Mu X, Lu J, Watta P, Hassoun MH (2009) Weighted voting-based ensemble classifiers with application to human face recognition and voice recognition. In: 2009 international joint conference on neural networks, pp 2168–2171. IEEE

Khotimah K, Santoso AB, Ma’arif M, Azhiimah AN, Suprianto B, Sumbawati MS, Rijanto T (2020) Validation of voice recognition in various google voice languages using voice recognition module v3 based on microcontroller. In: 2020 third international conference on vocational education and electrical engineering (ICVEE), pp 1–6. IEEE

Huang S-W, Lin C-T, Chen S-P, Wu Y-Y, Hsu P-H, Lai S-H (2018) Auggan: cross domain adaptation with gan-based data augmentation. In: Proceedings of the European conference on computer vision (ECCV), pp 718–731

Kim J-H, Hwang Y (2022) Gan-based synthetic data augmentation for infrared small target detection. IEEE Trans Geosci Remote Sens 60:1–12

Long M, Cao Y, Wang J, Jordan M (2015) Learning transferable features with deep adaptation networks. In: International conference on machine learning, pp 97–105. PMLR

Deng W, Liao Q, Zhao L, Guo D, Kuang G, Hu D, Liu L (2021) Joint clustering and discriminative feature alignment for unsupervised domain adaptation. IEEE Trans Image Process 30:7842–7855

Kang G, Jiang L, Yang Y, Hauptmann AG (2019) Contrastive adaptation network for unsupervised domain adaptation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 4893–4902

He Y, Zang C, Zeng P, Dong Q, Liu D, Liu Y (2023) Convolutional shrinkage neural networks based model-agnostic meta-learning for few-shot learning. Neural Process Lett 55(1):505–518

Snell J, Swersky K, Zemel R (2017) Prototypical networks for few-shot learning. Adv Neural Inf Process Syst 30

Sung F, Yang Y, Zhang L, Xiang T, Torr PH, Hospedales TM (2018) Learning to compare: Relation network for few-shot learning. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1199–1208

Ye H-J, Hu H, Zhan D-C, Sha F (2020) Few-shot learning via embedding adaptation with set-to-set functions. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 8808–8817

Vinyals O, Blundell C, Lillicrap T, Wierstra D et al (2016) Matching networks for one shot learning. Adv Neural Inf Process Syst 29

Guo M-H, Xu T-X, Liu J-J, Liu Z-N, Jiang P-T, Mu T-J, Zhang S-H, Martin RR, Cheng M-M, Hu S-M (2022) Attention mechanisms in computer vision: a survey. Comput Vis Med 8:331–368

Hou R, Chang H, Ma B, Shan S, Chen X (2019) Cross attention network for few-shot classification. Adv Neural Inf Process Syst 32

Hui B, Zhu P, Hu Q, Wang Q (2019) Self-attention relation network for few-shot learning. In: 2019 IEEE international conference on multimedia & expo workshops (ICMEW), pp 198–203. IEEE

Jiang Z, Kang B, Zhou K, Feng J (2020) Few-shot classification via adaptive attention. arXiv preprint arXiv:2008.02465

Wang X, Girshick R, Gupta A, He K (2018) Non-local neural networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 7794–7803

Wang Y, Yao Q, Kwok JT, Ni LM (2020) Generalizing from a few examples: a survey on few-shot learning. ACM Comput Surv 53(3):1–34

Zhang C, Cai Y, Lin G, Shen C (2020) Deepemd: Few-shot image classification with differentiable earth mover’s distance and structured classifiers. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 12203–12213

Liu Q, Cao W, He Z (2023) Cycle optimization metric learning for few-shot classification. Pattern Recogn 139:109468

Finn C, Abbeel P, Levine S (2017) Model-agnostic meta-learning for fast adaptation of deep networks. In: International conference on machine learning, pp 1126–1135. PMLR

Lee K, Maji S, Ravichandran A, Soatto S (2019) Meta-learning with differentiable convex optimization. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 10657–10665

Tseng H-Y, Lee H-Y, Huang J-B, Yang M-H (2019) Cross-domain few-shot classification via learned feature-wise transformation. In: International conference on learning representations

Li M, Wang R, Yang J, Xue L, Hu M (2021) Multi-domain few-shot image recognition with knowledge transfer. Neurocomputing 442:64–72

Cao K, Brbic M, Leskovec J (2020) Concept learners for few-shot learning. In: International conference on learning representations

Huang H, Zhang J, Zhang J, Xu J, Wu Q (2020) Low-rank pairwise alignment bilinear network for few-shot fine-grained image classification. IEEE Trans Multimed 23:1666–1680

Shaw P, Uszkoreit J, Vaswani A (2018) Self-attention with relative position representations. arXiv preprint arXiv:1803.02155

Yang B, Tu Z, Wong DF, Meng F, Chao LS, Zhang T (2018) Modeling localness for self-attention networks. In: Proceedings of the 2018 conference on empirical methods in natural language processing, pp 4449–4458

Ramachandran P, Parmar N, Vaswani A, Bello I, Levskaya A, Shlens J (2019) Stand-alone self-attention in vision models. Adv Neural Inf Process Syst 32

Lu J, Yang J, Batra D, Parikh D (2016) Hierarchical question-image co-attention for visual question answering. Adv Neural Inf Process Syst 29

Lu X, Wang W, Ma C, Shen J, Shao L, Porikli F (2019) See more, know more: Unsupervised video object segmentation with co-attention siamese networks. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 3623–3632

Hsieh T-I, Lo Y-C, Chen H-T, Liu T-L (2019) One-shot object detection with co-attention and co-excitation. Adv Neural Inf Process Syst 32

Deng J, Dong W, Socher R, Li L-J, Li K, Fei-Fei L (2009) Imagenet: a large-scale hierarchical image database. In: 2009 IEEE conference on computer vision and pattern recognition, pp 248–255. IEEE

Ravi S, Larochelle H (2016) Optimization as a model for few-shot learning

Ren M, Triantafillou E, Ravi S, Snell J, Swersky K, Tenenbaum JB, Larochelle H, Zemel RS (2018) Meta-learning for semi-supervised few-shot classification. arXiv preprint arXiv:1803.00676

Wah C, Branson S, Welinder P, Perona P, Belongie S (2011) The caltech-ucsd birds-200-2011 dataset

Zhang B, Li X, Ye Y, Huang Z, Zhang L (2021) Prototype completion with primitive knowledge for few-shot learning. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 3754–3762

Krizhevsky A, Hinton G, et al (2009) Learning multiple layers of features from tiny images

Rizve MN, Khan S, Khan FS, Shah M (2021) Exploring complementary strengths of invariant and equivariant representations for few-shot learning. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 10836–10846

Liu Y, Zhang W, Xiang C, Zheng T, Cai D, He X (2022) Learning to affiliate: Mutual centralized learning for few-shot classification. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 14411–14420

Oreshkin B, Rodríguez López P, Lacoste A (2018) Tadam: task dependent adaptive metric for improved few-shot learning. Adv Neural Inf Process Syst 31

Qiao S, Liu C, Shen W, Yuille AL (2018) Few-shot image recognition by predicting parameters from activations. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 7229–7238

Sun Q, Liu Y, Chua T-S, Schiele B (2019) Meta-transfer learning for few-shot learning. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 403–412

Rusu AA, Rao D, Sygnowski J, Vinyals O, Pascanu R, Osindero S, Hadsell R (2018) Meta-learning with latent embedding optimization. In: International conference on learning representations

Chen W-Y, Liu Y-C, Kira Z, Wang Y-CF, Huang J-B (2019) A closer look at few-shot classification. In: International conference on learning representations

Liu Y, Lee J, Park M, Kim S, Yang E, Hwang S, Yang Y (2019) Learning to propagate labels: Transductive propagation network for few-shot learning. In: 7th international conference on learning representations, ICLR 2019

Li H, Eigen D, Dodge S, Zeiler M, Wang X (2019) Finding task-relevant features for few-shot learning by category traversal. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 1–10

Mangla P, Kumari N, Sinha A, Singh M, Krishnamurthy B, Balasubramanian VN (2020) Charting the right manifold: Manifold mixup for few-shot learning. In: Proceedings of the IEEE/CVF winter conference on applications of computer vision, pp 2218–2227

Fei N, Lu Z, Xiang T, Huang S (2020) Melr: Meta-learning via modeling episode-level relationships for few-shot learning. In: International conference on learning representations

Kang D, Kwon H, Min J, Cho M (2021) Relational embedding for few-shot classification. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 8822–8833

Xu C, Fu Y, Liu C, Wang C, Li J, Huang F, Zhang L, Xue X (2021) Learning dynamic alignment via meta-filter for few-shot learning. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 5182–5191

Zhao J, Yang Y, Lin X, Yang J, He L (2021) Looking wider for better adaptive representation in few-shot learning. In: Proceedings of the AAAI conference on artificial intelligence, vol 35, pp 10981–10989

Luo X, Wei L, Wen L, Yang J, Xie L, Xu Z, Tian Q (2021) Rectifying the shortcut learning of background for few-shot learning. Adv Neural Inf Process Syst 34:13073–13085

Xie J, Long F, Lv J, Wang Q, Li P (2022) Joint distribution matters: deep Brownian distance covariance for few-shot classification. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 7972–7981

Hiller M, Ma R, Harandi M, Drummond T (2022) Rethinking generalization in few-shot classification. Adv Neural Inf Process Syst 35:3582–3595

Zhang C, Cai Y, Lin G, Shen C (2022) Deepemd: differentiable earth mover’s distance for few-shot learning. IEEE Trans Pattern Anal Mach Intell 45:5632–5648

Liu Q, Chen Y, Cao W (2023) Dual-domain reciprocal learning design for few-shot image classification. Neural Comput Appl, pp 1–14

Gidaris S, Bursuc A, Komodakis N, Pérez P, Cord M (2019) Boosting few-shot visual learning with self-supervision. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 8059–8068

Maniparambil M, McGuinness K, O’Connor N (2022) Basetransformers: attention over base data-points for one shot learning. arXiv preprint arXiv:2210.02476

Hu J, Shen L, Sun G (2018) Squeeze-and-excitation networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 7132–7141

Huang S, Wang Q, Zhang S, Yan S, He X (2019) Dynamic context correspondence network for semantic alignment. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 2010–2019

Wang Q, Wu B, Zhu P, Li P, Zuo W, Hu Q (2020) Eca-net: Efficient channel attention for deep convolutional neural networks. In: 2020 IEEE/CVF conference on computer vision and pattern recognition (CVPR), pp 11531–11539

Fu J, Liu J, Tian H, Li Y, Bao Y, Fang Z, Lu H (2019) Dual attention network for scene segmentation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 3146–3154

Woo S, Park J, Lee J-Y, Kweon IS (2018) Cbam: Convolutional block attention module. In: Proceedings of the european conference on computer vision (ECCV), pp 3–19

Song CH, Han HJ, Avrithis Y (2022) All the attention you need: Global-local, spatial-channel attention for image retrieval. In: Proceedings of the IEEE/CVF winter conference on applications of computer vision, pp 2754–2763

Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D (2017) Grad-cam: Visual explanations from deep networks via gradient-based localization. In: Proceedings of the IEEE international conference on computer vision, pp 618–626

Acknowledgements

This work is supported by National Natural Science Foundation of China, No.61771322, No.61971290, Shenzhen foundation for basic research JCYJ20220531100814033, and the Shenzhen Stabilit Support General Project (Category A) 20200826104014001. This work is also supported by Center for Computational Science and Engineering at Southern University of Science and Technology.

Author information

Authors and Affiliations

Contributions

Wenming Cao, Tianyuan Li and Qifan Liu wrote the main manuscript text and Zhiquan He. prepared figures and data. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that there is no Conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cao, W., Li, T., Liu, Q. et al. PANet: Pluralistic Attention Network for Few-Shot Image Classification. Neural Process Lett 56, 209 (2024). https://doi.org/10.1007/s11063-024-11638-5

Accepted:

Published:

DOI: https://doi.org/10.1007/s11063-024-11638-5