Abstract

Monocular depth estimation (MDE) has made great progress with the development of convolutional neural networks (CNNs). However, these approaches suffer from essential shortsightedness due to the utilization of insufficient feature-based reasoning. To this end, we propose an effective parallel CNNs and Transformer model for MDE via dual attention (PCTDepth). Specifically, we use two stream backbones to extract features, where ResNet and Swin Transformer are utilized to obtain local detail features and global long-range dependencies, respectively. Furthermore, a hierarchical fusion module (HFM) is designed to actively exchange beneficial information for the complementation of each representation during the intermediate fusion. Finally, a dual attention module is incorporated for each fused feature in the decoder stage to improve the accuracy of the model by enhancing inter-channel correlations and focusing on relevant spatial locations. Comprehensive experiments on the KITTI dataset demonstrate that the proposed model consistently outperforms the other state-of-the-art methods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Monocular depth estimation (MDE) refers to the task of extracting distance information between objects in a scene and a camera using only a single image. Accurate depth estimation is a critical step for enabling three-dimensional reconstruction and a range of downstream tasks. In particular, a high-quality depth map prediction can serve as a useful prior in RGB image processing, making it applicable in various academic and industrial applications, including Simultaneous Localization and Mapping (SLAM) [1], autonomous driving [2], scene reconstruction [3], object detection [4], semantic segmentation [5], and other domains.

In general, there are two primary methods for obtaining depth information: depth sensor-based or image-based depth estimation [6,7,8,9,10,11]. The former is to use depth sensors, such as Kinect, Velodyne Lidar, and ZED binocular cameras. Although depth sensors have been widely used in different scenarios, they suffer from expensive prices, high-energy consumption, and less structured information. Most images are captured using ordinary cameras, which only provide color information about the scene. As a result, image-based depth estimation has become the focus of research [12,13,14,15,16], which involves using single or multiple visible light image sequences of the same scene to estimate depth. Image-based depth estimation can be classified as monocular depth estimation (MDE), binocular depth estimation (BDE), and multi-view depth estimation (MVDE). However, BDE and MVDE pose significant challenges, such as computational requirements, memory usage, and reliance on different camera parameters. In contrast, MDE using a single visible light image to estimate depth is a low-cost and accessible technique for depth estimation. Therefore, MDE has become an essential technique for depth estimation in various applications.

MDE is an ill-posed problem because multiple 3D scenes can be projected into the same 2D image. Early works [6, 17,18,19] try to solve this problem by calculating the depth value per pixel in a given image using hand-crafted features, such as texture, shadow, and geometric constraints. Besides, some methods [6, 11, 20] attempt to employ the Markov Random Fields (MRFs) or Condition Random Fields (CRFs) to transform the depth estimation into energy optimization, finding a depth configuration that best matches the depth of the actual scene. Although traditional algorithms can generate promising prediction results in simple scenarios, they struggle to effectively solve complex scenes due to issues such as occlusion, changes in lighting, and texture loss.

To address these challenges, deep learning-based neural networks (such as VGG, ResNet, PVT, and Transformer), thanks to the great success of using Convolutional Neural Networks (CNNs) [7], have been widely adopted in MDE tasks [13, 21, 22] and have made significant progress. The deep learning-based method generally uses continuous regression to recover a monocular depth map by minimizing the error between the actual depth of the ground and the predicted depth. By incorporating the fusion of features related to object appearance, geometry, semantics, spatial relations, and other factors, deep learning-based methods can solve the ill-posed problem of MDE more effectively. For instance, Song et al. [23] used Laplacian pyramids in the decoder stage to fully use the underlying properties of well-encoded features. Typically, the encoder-decoder structure is usually used in MDE, where the encoder gradually extracts multi-scale features, and the decoder restores the details of objects using multi-level up-sampling and residual connection for high-resolution prediction. However, the continuous down-sampling in CNNs-based depth estimation may cause the loss of some essential feature information, which is unrecoverable in the decoder stage. To tackle this, many methods have focused on improving the decoder [12, 13, 23,24,25] to obtain more intensive feature maps and prevent the loss of some essential information in continuous up-sampling. Although these methods show improvement in model performance, CNNs-based approaches still suffer from limited receptive fields and less global representation. Therefore, it is crucial to obtain dense long-range dependencies in the encoder stage to achieve accurate depth estimation.

In response to these problems, researchers have incorporated the Transformer into MDE [21, 26], as the Transformer has demonstrated success in handling global dependencies in other tasks. However, the pure Transformer model lacks the ability to model local information due to the absence of spatial inductive bias. To achieve more satisfactory results, some methods have started to combine Transformer with CNNs [13, 22, 26,27,28] to leverage the strengths of both approaches. This combination allows for better performance in MDE tasks by effectively modeling both local and global information. Most existing works in the design of fusion structure use a serial structure [13, 22, 26], as illustrated in Fig. 1b, to further process the features obtained from CNNs or Transformer, thereby enhancing some of the features and achieving some performance improvement. However, if the features acquired from the previous stage are not accurate enough, it may affect the subsequent features. Alternatively, fewer methods adopt a parallel strategy [27, 28], as shown in Fig. 1a, to obtain the last layer of features for fusion through a parallel backbone network to improve the effectiveness and performance of the model. Unfortunately, this may bring the problem of insufficient fusion. Therefore, how the two features can be effectively fused is a major challenge in applying the combination of Transformer and CNN to depth estimation. Secondly, the combination of Transformer and CNN can have a significant performance improvement, but the number of parameters this brings is also considerable, while the downstream tasks of depth estimation (e.g., autonomous driving, SLAM, etc.) require smaller parameters as well as better performance, which implies that a better balance between the parameters and the performance is needed when designing the network mechanism.

In this paper, we propose a novel layer parallel network structure, PCTDepth, as presented in Fig. 1c, to fuse the feature maps of different layers of two backbone networks to increase the diversity of features. Different from most existing MDE methods using a single feature encoder with a single backbone to extract depth cues, our PCTDepth adopts a two-stream structure to parallelly extract local and global cues via CNNs and Transformer networks. The advantages of this are twofold: (1), it significantly increases the feature space of depth information, and (2) it takes advantage of the complementary effects of local and global information. Specifically, we first use parallel ResNet and Swin Transformer as encoders to extract local adjacent information features and long-distance dependencies, respectively. Through the parallel fusion of ResNet and Transformer, dense local and global information can be obtained at the encoder stage to avoid the loss of important feature information due to repeated down-sampling operations. Secondly, we construct an efficient hierarchical fusion module (HFM) to promote the effective fusion of hierarchical outputs from the ResNet and the Transformer. Finally, we incorporate a dual attention module (DAM) in the decoder stage, which splits the fused features into two parts for processing to adjust the channel weights and weight features at different spatial locations, and thus gradually reconstruct the depth map from coarse to fine scales.

The main contributions of this work are summarized as follows:

-

We propose a parallel architecture PCTDepth that combines CNNs and Transformer for MDE, which makes it easy for the model to acquire dense features in the encoder phase, preparing the building blocks for the next fusion step.

-

We develop an efficient hierarchical fusion module (HFM) that facilitates the seamless integration of long-range dependencies and local detail information, thus complementing each other.

-

We design a dual attention module (DAM) module that splits the fused features into two parts, which can dynamically adjust the importance of channels and spatial dimensions in the feature map for different levels of features using an attention mechanism to improve the accuracy of the model.

2 Related Work

2.1 Supervised Monocular Depth Estimation

Supervised monocular depth estimation trains the model using the ground truth depth map and applies it to estimate the corresponding depth map from a single image. Liu et al. [9] used continuous CRFs to optimize the depth map based on the regional similarity of the images. Saxena et al. [20] were the first to propose a method based on supervised learning to predict depth from local features, which was then refined through MRFs by combining global context information to improve the local prediction. On this basis, Saxena et al. [6] designed multi-scale MRFs and assumed that all scenes are horizontally aligned with the ground, which used the predicted depth map to reconstruct the scene structure. Liu et al. [8] formulated MDE as a discrete-continuous optimization problem and obtained its solution using particle belief propagation. It can be seen that early works were mainly based on geometric models, which are usually only useful in specific scenarios.

Later, with the great success of CNNs in various tasks, the most recent methods based on CNNs [12, 13, 23, 29,30,31,32] for MDE currently dominate the task. Eigen et al. [7] were the first to introduce deep learning to depth estimation, which utilized a two-branch strategy to initially predict global information for the entire image followed by adjusting the predicted local information for the image. Based on the above work, the Eigen team [29] proposed a unified multi-scale network framework that utilized a deeper VGG-based network as the basic network, where the third fine-scale network was employed to further add details and improve resolution. Yin et al. [14] introduced virtual normal to solve the generalization problem of MDE while maintaining as much geometric information as possible, using three randomly selected points in the reconstructed 3D space as geometric constraints. In addition, some approaches [25, 33] treated the task as an ordinal regression problem, where a multi-scale network was used to obtain multi-level information. Considering the nature of the scene from far to near, Cao et al. [10] first treated the MDE problem as a classification problem at the pixel level. Lee et al. [12] proposed multi-scale local planar guidance to guide the densely encoded features. Wang et al. [24] proposed a semantic divide-and-conquer strategy that combines deep networks with shallow models to reduce MDE tasks to individual semantic fragments. Although many methods have attempted to improve the decoder and have shown promising results, current depth estimation methods with convolution operations at the encoder stage have limited ability to capture long-distance relationships between objects due to their restricted receptive field. To overcome this limitation, we propose a strategy that combines the Transformer and CNNs layer through parallel fusion, which enhances the ability of the encoder to acquire dense feature information.

2.2 Transformer

Because of the Transformer’s excellent performance in natural language processing (NLP), many researchers have started to introduce the Transformer into the field of computer vision, overcoming the limitations of RNNs and significantly improving the performance of the models. Vaswani et al. [34] designed a self-attentive mechanism with a multilayer perceptron (MLP) to overcome the limitations (inability to parallelize, low training efficiency, and short memory length) of previous RNNs in NLP. Dosovitskiy et al. [35] were the first to use Vision Transformer (ViT) to solve image classification tasks, and the success of ViT in image classification tasks accelerated the introduction of Transformer to other tasks [36, 37]. The standard Transformer still has challenges in terms of large resolution and varying scales when used directly in computer vision. In response to these challenges, Liu et al. [38] proposed Swin Transformer, whose representation is computed with Shifted windows that can achieve global modeling capability.

However, due to its lack of spatial inductive bias, pure Transformers cannot effectively recover detailed features. Therefore, only a few researchers have attempted to use Transformers for monocular depth estimation tasks [13, 21, 22, 26]. Bhat et al. [13] first introduced the Transformer to MDE by proposing the AdaBins network, which used a baseline encoder-decoder architecture followed by a mini-ViT that divides the depth range into bins. Ranftl et al. [21] proposed a DPT network using ViT as an encoder to obtain a global receptive field at different stages with an additional convolutional decoder for dense prediction. Yang et al. [22] embedded ViT in the middle of the entire network, first exploiting the inductive bias of ResNet in modeling spatial correlations and later exploiting the power of Transformer in modeling global relationships. These methods [13, 22, 26] use a serial strategy to obtain higher-level feature representations to further improve performance. However, this makes it possible for some useful feature information to be lost when passing features between each network, thus affecting the quality of the fused features. Therefore, a few methods [27, 28] adopt a parallel structure, such as [28], which employs three encoders to obtain helpful information operating at different spatial resolutions, and then integrates these pieces of information using a multi-scale fusion block. However, this approach may suffer from insufficient feature fusion and semantic information. In contrast to previous methods, we propose an efficient hierarchical fusion module (HFM) that facilitates the seamless integration of long-range dependencies and local detail information, thus complementing each other.

Overview of our proposed network architecture. DAM: dual attention module, HFM: hierarchical fusion module. First, we use the Swin Transformer blocks and ResNet blocks of the encoder to obtain the features, respectively. Then, the proposed HFM is used to combine the features of different levels and resolutions of Swin Transformer and ResNet. Finally, the original resolution is restored for dense prediction by up-sampling and convolution operations with the help of DAM module

2.3 Attention Mechanism

Attention methods have been used with great success in many computer vision tasks, including image classification, object detection, and semantic segmentation. For pixel-level prediction, Chen et al. [39] first described an attention model to incorporate multi-scale features learned by FCN for semantic segmentation. Later, Hu et al. [40] introduced a Squeeze-and-Excitation (SE) block that adaptively recalibrates channel-wise feature responses by explicitly modeling interdependencies between channels. Based on the SE block, Zhang et al. [41] improved the Squeeze block and designed EncNet, a network equipped with a channel attention mechanism to model the global environment. Wang et al. [42] improved the Excitation block and designed ECANet, which introduced a one-dimensional convolution operation in the channel dimension to enhance the model’s representation capability while maintaining computational efficiency. Woo et al. [43] extended it and proposed the CBAM module, which introduces an intermediate feature map and uses the multiplication of the attention map and the input feature map for adaptive feature refinement. Wang et al. [44] proposed non-local operations as a generic family of building blocks for capturing long-range dependencies. Fu et al. [45] proposed a dual-attention network for scene segmentation by using two independent attention modules to model the semantic dependencies associated with the spatial and channel dimensions. Recently, Huynh et al. [46] designed a novel attention mechanism that incorporates a non-local coplanarity constraint to the network for the MDE task. As attention mechanisms have been demonstrated to improve model performance, an increasing number of methods [31, 47,48,49] have adopted them. We adopt a dual attention module (DAM) module to split the fusion features obtained from the HFM module into two parts to further improve the accuracy by dynamically adjusting the importance of the channel and spatial dimensions in the feature maps with different attentions.

3 Methodology

3.1 Overall Architecture

Our overall architecture is shown in Fig. 2, which incorporates the popular dense prediction encoder-decoder architecture, with the input RGB map \(\ I \rightarrow \textit{R}^{H\times W \times 3}\) and the output depth map\(\ D \rightarrow \textit{R}^{H\times W \times 1}\). We adopt a dual-branch network architecture that includes a Swin Transformer branch and a ResNet branch, which are used to obtain the feature maps at different levels and resolutions. Then, the proposed hierarchical fusion module (HFM) is used to combine the features from both branches to capture global and local information simultaneously. Finally, our dual attention module (DAM) is used to recover the original resolution for dense prediction by performing up-sampling and convolution operations. Our hybrid architecture allows us to exploit the Transformer’s ability to capture global contextual information and the CNNs’ ability to efficiently acquire local information efficiently, which provides a promising strategy for achieving satisfactory results.

3.2 Encoder

Dense prediction tasks commonly utilize encoder-decoder architectures, such as in monocular depth estimation tasks [50,51,52,53,54,55]. The backbone, also known as the encoder, is responsible for extracting image-rich features, while the decoder incorporates these features to generate a final dense prediction. Over the years, several backbone networks have been proposed in the field of computer vision, including ResNet and the widely-used Swin Transformer [38, 50], which is known for its hierarchical nature, computational efficiency, and superior performance. To enhance the diversity and expressiveness of the features and provide a better depiction of the image content, we adopt two backbone networks to simultaneously extract local features and global dependencies in parallel. The overall architecture of the model is depicted in Fig. 2.

3.3 HFM Module

We introduce an efficient parallel hierarchical interaction fusion feature module (HFM), depicted in Fig. 3. Unlike Hwang et al. [27], which utilizes residual blocks to improve local features, our objective in developing HFM is to comprehensively integrate local detailed features from the ResNet branch and global features from the Transformer branch using adaptive feature alignment. The module generates four fused features \(\{F_{i}\}_{i=1}^{4}\) with a channel number of 64, reducing model complexity, enhancing computational efficiency, and preventing overfitting. We obtain the position relationship between the vectors in the Transformer and ResNet branch to get the feature \(\{x_{st}^{i}\}_{i=1}^{4}\) and \(\{x_{res}^{i}\}_{i=1}^{4}\). The feature \(\{x_{st}^{i}\}_{i=1}^{4}\), after passing through Block1, is first processed by a 3\(\times \)3 convolution operation to extract more distinctive features. Then, an up-sampling operation is performed to increase the feature resolution, expand the receptive field, and prepare for the subsequent feature fusion. As convolution and up-sampling operations can lead to some information loss, we utilize an adaptive feature alignment structure to obtain\(\ F_{t}\ \), which reduces information loss to a certain extent and enhances feature representation ability. The specific formula is as follows.

where \(\sigma \) indicates Sigmoid activation function. In this step, we manipulate the Transformer branch to help the model understand more clearly the relationships between different regions in the image.

The ResNet branch feature information is processed in a similar way. After passing through Block2, the feature \(\{x_{res}^{i}\}_{i=1}^{4}\) undergoes global average pooling (GAP) to reduce the dimensionality of the feature maps. This step compresses multiple feature maps into a single feature vector, reducing the risk of overfitting. In this step, we manipulate the ResNet branch to enhance the model’s perception of different features, improving its performance.

where \(\sigma \) indicates Softmax activation function.

Then, we concatenate the processed features \(F_{t}\) and \(F_{r}\) from the two branches to achieve the fusion of local and global information.

The resulting feature is further optimized using a pre-activation block which consists of BN, ReLU, and Conv (BRC). A typical convolution block consists of Conv, BN, and ReLU, discarding most of the negative values of the non-linear property of the ReLU activation in the last step. We mitigate this problem by using BRC to obtain the intermediate fusion feature \(F_{mid}\). Then, the optimized feature \(F_{mid}\) is concatenated with the initial features to preserve the detailed information and prevent information loss.

where Cat denotes concatenation operation. Finally, the concatenated feature is optimized again, resulting in the fused feature \(\{F_{i}\}_{i=1}^{4}\).

3.4 Decoder of DAM Attention Module

In the decoder stage, we obtain the fused features \(\{F_{i}\}_{i=1}^{4}\) of Swin Transformer and ResNet from the HFM module at resolution size [H/2, H/4, H/8, H/16]. To improve the accuracy of the model, we use the dual attention module to handle high-level semantic features and low-level features separately, as shown in Fig. 4. By using channel attention (CA) to process high-level features, the model is able to better capture global semantic information. Meanwhile, using spatial attention (SA) to process low-level features helps to improve the model’s sensitivity to local details.

To leverage high-level semantic features \(F_{3}\) and \(F_{4}\), we use the CA block to dynamically adjust channel weights. Firstly, we use GAP and GMP(global max pooling) to focus on important features in high-level representations. Secondly, we use concatenation operations and convolution operations to exploit correlations between channels and enhance the expressive power of relevant feature channels.

where Conv indicates a convolution operation with 3\(\times \)3 kernel, \(k\in \{3, 4\}\). The \(\sigma \) indicates Sigmoid activation function.

Conversely, for shallow-level features \(F_{1}\) and \(F_{2}\), here \(q\in \{1, 2\}\). we use the SA block to extract global features from the input feature map, and learn weight coefficients for different spatial locations to emphasize the features at different spatial locations. This enhances the model’s ability to focus on various spatial locations and improve its accuracy.

Afterward, the feature \(F_{4}^{c}\) undergoes an up-sampling operation to restore the image resolution to H/8. Next, we concatenate the feature \(F_{4}^{c}\) with \(F_{3}^{c}\), and perform another up-sampling operation. This process is repeated until it is fused with the multi-level fusion feature to get \(F^{'}\), as shown in equation (7) below.

where \(F_{i}^{'}\) denotes the \(i^{th}\) level of fused features. After obtaining the fused feature \(F_{1}^{'}\) from equation (7), we use it as input to equation (8).

where \(Last\_layer\) indicates two convolution operation with 3\(\times \)3 kernel and a ReLU activation function. Finally,the last prediction depth \(D^{pre}\) is obtained by applying the Sigmoid activation function.

3.5 Loss Function

Following previous work [12, 23], we also use the scale-invariant loss (SI) proposed by Eigen et al. [7] to supervise the training, which calculates the distance between the predicted output depth \({\hat{d}}_{i}\) and the ground truth depth map \(d_{i}\). The equation of SI loss is as follows:

where \(g_{i}=log{\hat{d}}_{i}-log{d}_{i}\), \(\lambda \)=0.5. T denotes the number of pixels having valid ground truth values.

4 Experiments

4.1 Datasets

KITTI [60] is a large-scale outdoor dataset that contains RGB and depth image pairs from autonomous driving scenes. Depth maps are generated by accumulating LiDAR measurements from the entire sequence. We use the KITTI dataset to validate the performance of the proposed model on the monocular depth estimation task. The test set and training set are divided according to the criteria proposed by Eigen et al. [7]. We use 23K images from 32 scenes for training, 697 images from the remaining 29 scenes for testing, and a maximum value of 80 m for evaluation.

4.2 Evaluation Metrics

We follow the standard evaluation scheme in previous work [10] and used the following quantitative evaluation metrics in our experiments, including mean absolute relative error (Abs Rel), mean squared relative error (Sq Rel), root mean squared error (RMSE), root mean squared log error (RMSE log) and the accuracy under the threshold (\(\delta _{i}<1.25^{i}\), i = 1, 2, 3). These error metrics are defined as:

where \(d_{i}^{*}\) and \(d_{i}\) are the ground-truth depth and predicted depth at pixel i, respectively, and T is the total number of pixels of the test images.

4.3 Implementation Details

We use the PyTorch framework to implement our proposed architecture and the experiments are performed on 2 NVIDIA GTX A4000 GPUs. The images in the KITTI [60] dataset are cropped to a size of 320 \(\times \) 320. For training, we use the one-cycle learning rate strategy of the Adam optimizer. The entire training is divided into two parts, with the learning rate increasing from 3e-5 to 1e-4 in the first half and decreasing from 1e-4 to 3e-5 in the second half. We set the batch size to 12 and the model converges at around 25 epochs.

4.4 Comparison to the State-of-the-Art

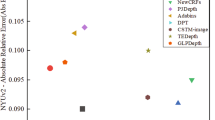

We compare our PCTDepth with the state-of-the-art methods on the KITTI dataset. We present the results from both quantitative and qualitative perspectives, which are illustrated in Table 1 and Fig. 5, respectively. The proposed PCTDepth is trained on the input range and tested on the depth values in [0 m, 80 m]. As can be seen from Table 1, PCTDepth outperforms existing depth estimation methods in terms of both error and correctness metrics, except for the Sq Rel error with \(\delta _{2}\) threshold, where we achieve a marginally lower score than AdaBins [13] by 0.002 and 0.001. The RMSE and RMSE log metrics measure the squared difference between the estimated depth and its corresponding GT, which amplifies the error in the apparently erroneous estimate. The lower the RMSE or RMSE log, the better the scene structure is recovered. As can be seen from Table 1, the proposed PCTDepth achieves the best results for both the RMSE and RMSE log metrics. Our RMSE result is 3.3% lower than the second-best result, and the RMSE log result is even 10.2% lower than the second-best. Additionally, our \(\delta _{1}<1.25\) is 0.009 higher than that of the TransDepth [22] which is the serial structure of CNNs and Transformers in the current latest method.

As shown in Fig. 5, our proposed PCTDepth can accurately estimate depth maps for complex urban scenes, including fine objects such as railings and road signs, as well as dynamic objects such as cars and pedestrians. For fine objects (\(1^{st}\),\(2^{nd}\), and \(5^{th}\) rows), our method produces depth maps with more complete details and smoother boundaries compared with other state-of-the-art methods, such as TransDepth [22], BTS [12], AdaBins [13], and DPT [21]. Moreover, for the cars and pedestrians (\(3^{rd}\) and \(4^{th}\) rows), our estimated depth maps better reflect the surface information of the multiple overlapping cars and people, with more complete and smoother contours. These qualitative results demonstrate the effectiveness of our proposed PCTDepth in recovering the scene structure of urban environments.

4.5 Ablation Study

This section aims to validate the effectiveness of our method by conducting ablation studies to assess the individual contributions of each module on the KITTI dataset. As shown in Table 2, the Base, B, H, and DAM represent ResNet34 combined with Swin Transformer, pre-activation block, hierarchical fusion module, and dual attention module, respectively. Table 2 demonstrates that the parallel strategy of combining ResNet with Transformer can significantly reduce errors, resulting in a Abs Rel error of 0.055. The use of the HFM fusion module can reduce various errors and increase the accuracy under the threshold. The DAM attention module can improve the \(\delta _{3}\) (\(\delta <1.25^{3}\)) metric to a state-of-the-art level of 0.999.

4.5.1 Verify the Effectiveness of the CNNs-Transformer Architecture

To verify the effectiveness of our proposed parallel network structure, we conduct ablation experiments on Transformer branches and CNNs branches, as shown in Table 3. We compared the effect of combining different ResNet networks with the Transformer on the KITTI dataset. R and Swin represent ResNet and Swin Transformer, respectively. The experiments show that the error of either the CNNs network alone or the Transformer network alone is greater than the combination of the two. Comparing the different ResNet networks, we observe that the combination of Swin Transformer and ResNet34 yields the best results. Additionally, Fig. 6 shows the results of our qualitative analysis, which can validate the effectiveness of our proposed hierarchical interaction parallel fusion architecture.

4.5.2 Verify the Effectiveness of the HFM Module

The comparison between depth maps generated with and without the HFM module is illustrated in Fig. 7. The results show that the module-free approach can capture large targets such as cars and railings, but the generated depth map’s boundary is more blurred. However, small targets like utility poles and street signs are difficult to capture, or not captured at all. In contrast, the HFM module not only captures the shape and size feature information of large objects, but also has good control of small target details such as utility poles. To verify the effectiveness of the HFM fusion module in terms of target size and edge clarity, we compare three objects: cars (large), billboards (medium), and railings (small). The experiments show that adding the HFM module reduces the depth loss by 6.81% compared to a parallel architecture using only Swin Transformer combined with CNNs (baseline). Additionally, using the Attention module, the depth loss is reduced by 7.62% compared to the baseline. These results are shown in Table 4.

4.5.3 Verify the Effectiveness of the DAM Module

In the decoder stage, we designed three attention module schemes as shown in Fig. 8 and Table 5. We compared the three attention module design options presented in Fig. 8a–c, with and without the attention module.

As can be seen from Table 5, all three strategies have improved the indicators. However, overall, the strategy (c) directly using the CA and SA of our design has a significant improvement for all indicators.

4.6 FLOPs, Params, and Epochs

In addition to the performance evaluation, we also compare the FLOPs and the number of parameters of some of the state-of-the-art methods to reduce parameters and FLOPs as much as possible without significantly reducing accuracy. We use the torchstat module in PyTorch to calculate the FLOPs and parameters of the model, which help analyze the complexity of the model. FLOPs (floating-point operations) refer to the number of floating-point operations and can be used to measure the complexity of an algorithm or model, as shown in Table 6. Our method has slightly higher FLOPs than DPT [21] and DenseDepth [61], as well as slightly higher Parameters than DenseDepth [61]. Although DPT [21] surpasses our method in terms of parameters, our parameters and FLOPs are significantly lower than those of the methods we compared against.

As shown in Table 7, we also compare the number of training epochs in which the model tended to converge during the training stage. Compared to some of the current methods, our approach achieves model convergence in fewer epochs.

5 Conclusion

In this paper, we propose a new parallel CNNs-Transformer hierarchical interactive fusion architecture with dual attention to complete the extraction of dense features in the encoder stage and avoid the absence of edge and detail features in the decoder stage. Specifically, we introduce an efficient HFM module and a DAM module to help achieve an effective fusion of Transformer global features with CNNs local features and to better resume high resolution. We validate the effectiveness of our proposed architecture on the KITTI dataset, and the results show that our approach has a competitive advantage over the state-of-the-art results. Although complex models may offer superior performance, they require significant computational resources, leading to increased training and inference times and costs. Therefore, we aim to explore model pruning and lightweight network design to address these limitations in our future research and achieve the goal of easy deployment to practical applications.

References

Tiwari L, Ji P, Tran QH, Zhuang B, Anand S, Chandraker M (2020) Pseudo rgb-d for self-improving monocular slam and depth prediction. In: Proceedings of the European conference on computer vision, pp 437–455

Yuan Z, Song X, Bai L, Wang Z, Ouyang W (2022) Temporal-channel transformer for 3d lidar-based video object detection for autonomous driving. IEEE Trans Circuits Syst Video Technol 32(4):2068–2078

Liu H, Tang X, Shen S (2020) Depth-map completion for large indoor scene reconstruction. Pattern Recognit 99:107112

Zhang S, Wen L, Lei Z, Li SZ (2021) Refinedet++: single-shot refinement neural network for object detection. IEEE Trans Circuits Syst Video Technol 31(2):674–687

Bi X, Chen D, Huang H, Wang S, Zhang H (2023) Combining pixel-level and structure-level adaptation for semantic segmentation. Neural Process Lett 1:1–16

Saxena A, Sun M, Ng AY (2008) Make3d: learning 3d scene structure from a single still image. IEEE Trans Pattern Anal Mach Intell 31(5):824–840

Eigen D, Puhrsch C, Fergus R (2014) Depth map prediction from a single image using a multi-scale deep network. Adv Neural Inf Process Syst 27:2366–2374

Liu M, Salzmann M, He X (2014) Discrete-continuous depth estimation from a single image. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 716–723

Liu F, Shen C, Lin G (2015) Deep convolutional neural fields for depth estimation from a single image. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 5162–5170

Cao Y, Wu Z, Shen C (2017) Estimating depth from monocular images as classification using deep fully convolutional residual networks. IEEE Trans Circuits Syst Video Technol 28(11):3174–3182

Wang X, Hou C, Pu L, Hou Y (2015) A depth estimating method from a single image using foe crf. Multimed Tools Appl 74:9491–9506

Lee JH, Han MK, Ko DW, Suh IH (2019) From big to small: Multi-scale local planar guidance for monocular depth estimation. arXiv preprint arXiv:1907.10326

Bhat SF, Alhashim I, Wonka P (2021) Adabins: Depth estimation using adaptive bins. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4009–4018

Yin W, Liu Y, Shen C, Yan Y (2019) Enforcing geometric constraints of virtual normal for depth prediction. In: Proceedings of the IEEE international conference on computer vision, pp 5684–5693

Wang P, Shen X, Lin Z, Cohen S, Price B, Yuille AL (2015) Towards unified depth and semantic prediction from a single image. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 2800–2809

Hu J, Ozay M, Zhang Y, Okatani T (2019) Revisiting single image depth estimation: Toward higher resolution maps with accurate object boundaries. In: Proceedings of the IEEE winter conference on applications of computer vision, pp 1043–1051

Aloimonos J (1988) Shape from texture. Biol Cybern 58(5):345–360

Battiato S, Capra A, Curti S, La Cascia M (2004) 3d stereoscopic image pairs by depth-map generation. In: Proceedings of the 2nd international symposium on 3D data processing, visualization and transmission, pp 124–131

Malik AS, Choi T-S (2008) A novel algorithm for estimation of depth map using image focus for 3d shape recovery in the presence of noise. Pattern Recogn 41(7):2200–2225

Saxena A, Chung S, Ng A (2005) Learning depth from single monocular images. Adv Neural Inf Process Syst 18:1161–1168

Ranftl R, Bochkovskiy A, Koltun V (2021) Vision transformers for dense prediction. In: Proceedings of the IEEE international conference on computer vision, pp 12179–12188

Yang G, Tang H, Ding M, Sebe N, Ricci E (2021) Transformer-based attention networks for continuous pixel-wise prediction. In: Proceedings of the IEEE international conference on computer vision, pp 16269–16279

Song M, Lim S, Kim W (2021) Monocular depth estimation using laplacian pyramid-based depth residuals. IEEE Trans Circuits Syst Video Technol 31(11):4381–4393

Wang L, Zhang J, Wang O, Lin Z, Lu H (2020) Sdc-depth: semantic divide-and-conquer network for monocular depth estimation. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 541–550

Meng X, Fan C, Ming Y, Yu H (2022) Cornet: context-based ordinal regression network for monocular depth estimation. IEEE Trans Circuits Syst Video Technol 32(7):4841–4853

Kim D, Ga W, Ahn P, Joo D, Chun S, Kim J (2022) Global-local path networks for monocular depth estimation with vertical cutdepth. arXiv preprint arXiv:2201.07436

Hwang S-J, Park S-J, Baek J-H, Kim B (2022) Self-supervised monocular depth estimation using hybrid transformer encoder. IEEE Sens J 22(19):18762–18770

Tomar SS, Suin M, Rajagopalan A (2023) Hybrid transformer based feature fusion for self-supervised monocular depth estimation. In: Proceedings of the European conference on computer vision workshops, pp 308–326

Eigen D, Fergus R (2015) Predicting depth, surface normals and semantic labels with a common multi-scale convolutional architecture. In: Proceedings of the IEEE international conference on computer vision, pp 2650–2658

Laina I, Rupprecht C, Belagiannis V, Tombari F, Navab N (2016) Deeper depth prediction with fully convolutional residual networks. In: Proceedings of the 14th international conference on 3D vision, pp 239–248

Lee S, Lee J, Kim B, Yi E, Kim J (2021) Patch-wise attention network for monocular depth estimation. In: Proceedings of the AAAI conference on artificial intelligence, pp 1873–1881

Xiang X, Kong X, Qiu Y, Zhang K, Lv N (2021) Self-supervised monocular trained depth estimation using triplet attention and funnel activation. Neural Process Lett 53(6):4489–4506

Fu H, Gong M, Wang C, Batmanghelich K, Tao D (2018) Deep ordinal regression network for monocular depth estimation. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 2002–2011

Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, Kaiser Ł, Polosukhin I (2017) Attention is all you need. Adv Neural Inf Process Syst 30:5998–6008

Dosovitskiy A, Beyer L, Kolesnikov A, Weissenborn D, Zhai X, Unterthiner T, Dehghani M, Minderer M, Heigold G, Gelly S (2020) An image is worth 16x16 words: transformers for image recognition at scale. arXiv preprint arXiv:2010.11929

Zheng S, Lu J, Zhao H, Zhu X, Luo Z, Wang Y, Fu Y, Feng J, Xiang T, Torr PH (2021) Rethinking semantic segmentation from a sequence-to-sequence perspective with transformers. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 6881–6890

Xie E, Wang W, Yu Z, Anandkumar A, Alvarez JM, Luo P (2021) Segformer: simple and efficient design for semantic segmentation with transformers. Adv Neural Inf Process Syst 34:12077–12090

Liu Z, Lin Y, Cao Y, Hu H, Wei Y, Zhang Z, Lin S, Guo B (2021) Swin transformer: Hierarchical vision transformer using shifted windows. In: Proceedings of the IEEE international conference on computer vision, pp 10012–10022

Chen LC, Yang Y, Wang J, Xu W, Yuille AL (2016) Attention to scale: Scale-aware semantic image segmentation. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 3640–3649

Hu J, Shen L, Sun G (2018) Squeeze-and-excitation networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 7132–7141

Zhang H, Dana K, Shi J, Zhang Z, Wang X, Tyagi A, Agrawal A (2018) Context encoding for semantic segmentation. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 7151–7160

Wang Q, Wu B, Zhu P, Li P, Zuo W, Hu Q (2020) Eca-net: Efficient channel attention for deep convolutional neural networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 11534–11542

Woo S, Park J, Lee JY, Kweon IS (2018) Cbam: Convolutional block attention module. In: Proceedings of the European conference on computer vision, pp 3–19

Wang X, Girshick R, Gupta A, He K (2018) Non-local neural networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 7794–7803

Fu J, Liu J, Tian H, Li Y, Bao Y, Fang Z, Lu H (2019) Dual attention network for scene segmentation. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 3146–3154

Huynh L, Nguyen-Ha P, Matas J, Rahtu E, Heikkilä J (2020) Guiding monocular depth estimation using depth-attention volume. In: Proceedings of the European conference on computer vision, pp 581–597

Lee M, Hwang S, Park C, Lee S (2022) Edgeconv with attention module for monocular depth estimation. In: Proceedings of the IEEE winter conference on applications of computer vision, pp 2858–2867

Song X, Li W, Zhou D, Dai Y, Fang J, Li H, Zhang L (2021) Mlda-net: multi-level dual attention-based network for self-supervised monocular depth estimation. IEEE Trans Image Process 30:4691–4705

Wang Z, Dai X, Guo Z, Huang C, Zhang H (2022) Unsupervised monocular depth estimation with channel and spatial attention. IEEE Trans Neural Networks and Learn Syst 1:1–11

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 770–778

Cao Y, Zhao T, Xian K, Shen C, Cao Z, Xu S (2020) Monocular depth estimation with augmented ordinal depth relationships. IEEE Trans Circuits Syst Video Technol 30(8):2674–2682

Xu D, Ricci E, Ouyang W, Wang X, Sebe N (2017) Multi-scale continuous crfs as sequential deep networks for monocular depth estimation. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 5354–5362

Gan Y, Xu X, Sun W, Lin L (2018) Monocular depth estimation with affinity, vertical pooling, and label enhancement. In: Proceedings of the European conference on computer vision, pp 224–239

Johnston A, Carneiro G (2020) Self-supervised monocular trained depth estimation using self-attention and discrete disparity volume. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4756–4765

Xu D, Alameda-Pineda X, Ouyang W, Ricci E, Wang X, Sebe N (2020) Probabilistic graph attention network with conditional kernels for pixel-wise prediction. IEEE Trans Pattern Anal Mach Intell 44(5):2673–2688

Liu F, Shen C, Lin G, Reid I (2015) Learning depth from single monocular images using deep convolutional neural fields. EEE Trans Pattern Anal Mach Intell 38(10):2024–2039

Godard C, Mac Aodha O, Brostow GJ (2017) Unsupervised monocular depth estimation with left-right consistency. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 270–279

Kuznietsov Y, Stuckler J, Leibe B (2017) Semi-supervised deep learning for monocular depth map prediction. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 6647–6655

Patil V, Sakaridis C, Liniger A, Van Gool L (2022) P3depth: monocular depth estimation with a piecewise planarity prior. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1610–1621

Geiger A, Lenz P, Stiller C, Urtasun R (2013) Vision meets robotics: the kitti dataset. Int J Robot Res 32(11):1231–1237

Alhashim I, Wonka P (2018) High quality monocular depth estimation via transfer learning. arXiv preprint arXiv:1812.11941

Chen X, Chen X, Zha ZJ (2019) Structure-aware residual pyramid network for monocular depth estimation. arXiv preprint arXiv:1907.06023

Chen Y, Zhao H, Hu Z, Peng J (2021) Attention-based context aggregation network for monocular depth estimation. Int J Mach Learn Cybern 12:1583–1596

Acknowledgements

This work was supported by the National Natural Science Foundation of China (62102003), Anhui Postdoctoral Science Foundation (2022B623), Natural Science Foundation of Anhui Province (2108085QF258), the University Synergy Innovation Program of Anhui Province (GXXT-2021-006, GXXT-2022-038), the Institute of Energy, Hefei Comprehensive National Science Center under (21KZS217), Central guiding local technology development special funds (202107d06020001), University-level general projects of Anhui University of science and technology (xjyb2020-04), Anhui University of Science and Technology Graduate Innovation Fund (2022CX2117).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xia, C., Duan, X., Gao, X. et al. PCTDepth: Exploiting Parallel CNNs and Transformer via Dual Attention for Monocular Depth Estimation. Neural Process Lett 56, 73 (2024). https://doi.org/10.1007/s11063-024-11524-0

Accepted:

Published:

DOI: https://doi.org/10.1007/s11063-024-11524-0