Abstract

The learning process and hyper-parameter optimization of artificial neural networks (ANNs) and deep learning (DL) architectures is considered one of the most challenging machine learning problems. Several past studies have used gradient-based back propagation methods to train DL architectures. However, gradient-based methods have major drawbacks such as stucking at local minimums in multi-objective cost functions, expensive execution time due to calculating gradient information with thousands of iterations and needing the cost functions to be continuous. Since training the ANNs and DLs is an NP-hard optimization problem, their structure and parameters optimization using the meta-heuristic (MH) algorithms has been considerably raised. MH algorithms can accurately formulate the optimal estimation of DL components (such as hyper-parameter, weights, number of layers, number of neurons, learning rate, etc.). This paper provides a comprehensive review of the optimization of ANNs and DLs using MH algorithms. In this paper, we have reviewed the latest developments in the use of MH algorithms in the DL and ANN methods, presented their disadvantages and advantages, and pointed out some research directions to fill the gaps between MHs and DL methods. Moreover, it has been explained that the evolutionary hybrid architecture still has limited applicability in the literature. Also, this paper classifies the latest MH algorithms in the literature to demonstrate their effectiveness in DL and ANN training for various applications. Most researchers tend to extend novel hybrid algorithms by combining MHs to optimize the hyper-parameters of DLs and ANNs. The development of hybrid MHs helps improving algorithms performance and capable of solving complex optimization problems. In general, the optimal performance of the MHs should be able to achieve a suitable trade-off between exploration and exploitation features. Hence, this paper tries to summarize various MH algorithms in terms of the convergence trend, exploration, exploitation, and the ability to avoid local minima. The integration of MH with DLs is expected to accelerate the training process in the coming few years. However, relevant publications in this way are still rare.

Similar content being viewed by others

1 Introduction

Artificial Intelligence (AI) was first introduced in the ideas and hypotheses of Gottfried Leibniz [1]. In 1943, McCulloch and Pitts proposed an evolutionary model of the human brain that began research on the artificial neural network (ANN) [2]. ANNs can learn and recognize and solve a wide range of complex problems. Today, ANNs and deep learning (DL) techniques are the most popular and main methods of machine learning (ML) algorithms [3,4,5,6,7,8,9,10]. Figure 1 compares the accuracy of a typical machine learning algorithm and a deep neural network (DNN). As can be seen, if sufficient data and computational power are available, DL techniques perform better (in terms of accuracy) than conventional machine learning approaches [2].

Comparison of the accuracy of a typical machine learning algorithm and a deep neural network [2]

Since 2006, DL has become a popular topic in machine learning. Its position in AI and data science has been shown in Fig. 2 [10]. DL techniques are superior to traditional ML algorithms due to data availability and systems processing power development [10, 11]. In smaller databases and simple applications, traditional ML algorithms perform better because they are easier to implement. This is one of the most important reasons that neural networks and DL techniques had not grown much in the early years [1, 2, 12]. With the advent of the Big Data era, much faster data collection, storage, updating, and management advances have become possible. In addition, the development of GPU has made efficient processing in large data sets. These dramatic advances have led to recent advances in DL techniques [2, 10]. Additionally, reducing the computation time and increasing the convergence process have increased the popularity of these algorithms [3, 4]. Moreover, the position of DL and ANNs in the taxonomy of artificial intelligence approaches has been shown in Fig. 3.

The position of deep learning in artificial intelligence and data science [10]

ANNs have been used in various applications, including function approximation [13, 14], classification [15,16,17,18,19,20], feature selection [21, 22], medical image registration [6], pattern recognition [23,24,25,26], data mining [27], signal processing [28], Nonlinear system identification [29, 30], speech processing [31], etc. In addition, different DL methods have been used in various applications, including classification [32,33,34,35,36], prediction [37,38,39], Phoneme recognition [40], hand-written digit recognition [41,42,43,44,45,46], etc.

Given the importance of using ANNs and DL methods in various applications, identifying weaknesses and improving these algorithms is one of the current issues in machine learning. The learning process of ANNs and DL architectures is considered one of the most difficult machines learning challenges. Over the past two decades, optimizing the structure and parameters of ANNs and DLs has been one of the main interests of researchers [8,9,10]. Optimization of ANNs and DLs is often considered from several aspects: optimization of weights, hyper-parameters, network structure, activation nodes, learning parameters, learning algorithm, learning environment, etc. [9].

Optimizing weights, biases, and hyper-parameters is one of the most important parts of neural networks and DL architectures. In fact, ANNs and DLs are distinguished by two pillars of structure and learning algorithm. In many past studies, gradient-based methods have been used for architecture training. However, due to the limitations of gradient-based algorithms, the need to use optimization algorithms has been identified [8,9,10]. For example, in back propagation (BP) learning algorithm, the goal of learning is to optimize the weights and thresholds of the network to minimize the cost function.

In gradient-based learning algorithms, the cost function must be derivative to use BP. This is also one of the weaknesses of gradient-based learning algorithms. Because, in many cases, the activation function (and the cost function) is not derivative. Sigmoid activation functions are commonly used in these algorithms. In the literature, several gradient-based methods, such as Back Propagation (BP) and Levenberg Marquardt (LM) methods, have been developed to teach neural network-based systems [29]. But gradient-based methods have the following major drawbacks.

-

For multi-objective cost functions, they may be stuck at local minimums.

-

The execution time of these algorithms is very expensive due to the calculation of gradient information with thousands of iterations.

-

If there are several local minimums in the problem search space, the learning algorithm reaches error = 0 in the first local minimum. As a result, the learning algorithm converges in the first local minimum and will not achieve the optimal solution. MH algorithms easily escape the local minimum using exploitation and exploration and are a good alternative for gradient-based algorithms.

-

In gradient-based learning algorithms, the cost function must be derivative. As a result, the cost function must be continuous. This is also one of the weaknesses of gradient-based learning algorithms. Because, in many cases, the activation function is not derivative. For example, if a step function were used instead of the sigmoid function, all backward calculations in gradient-based learning algorithms would be useless.

At first, Conjugate Gradient Algorithm [47], Newton's Method [48], Stochastic Gradient Descent (SGD) [49], and Adaptive Moment Estimation (Adam) [50] were developed to improve gradient-based learning algorithms, which have better generalizability and convergence than the BP algorithm. However, these methods' neural networks and DL architectures are considered "black boxes" [8]. Because it cannot be interpreted with human intuition. Evolutionary and swarm intelligence algorithms have provided a generalized and optimal network [51,52,53,54].

Since training the ANNs and DLs is an NP-hard optimization problem, their structure and parameters optimization using the meta-heuristic (MH) algorithms has been considerably raised. As an optimization problem, MH algorithms formulate the optimal estimation of DL components (such as hyper-parameter, weights, number of layers/neurons, learning rate) [8]. The existence of multiple objectives in optimizing ANNs and DLs, such as error minimization, network generalization, and model simplification, has increased the need for multi-objective MH algorithms. Using MH algorithms to optimize ANNs and DL architectures is still challenging, and more research is needed. Using MH algorithms to train DLs improves the learning process. This increases the accuracy of the algorithm and reduces its execution time.

The rest of the paper is organized as follows: Sect. 2 shows the research methodology. In Sect. 3, first the concept of deep learning models is discussed, then some well-known and state-of-the-art competitive meta-heuristic algorithms are introduced. In Sect. 4, a comprehensive review of the training ANNs and DLs using MH algorithms has been collected. In Sect. 5, the analysis of statistical results from the literature review, challenges and future perspectives are reviewed. Finally, in Sect. 6, the conclusion of this paper is presented.

2 Methodology

This paper has used 440 papers from different journals and publishers in the field of training ANNs and DL architectures (by MH algorithm) for a systematic literature review. First, 627 papers were reviewed, and after reading all the papers, 440 papers entered the next stage. This study systematically searched Google Scholar, Web of Science, and Scopus databases to find related papers. In particular, a thorough search was conducted in Elsevier, IEEE, Springer, Taylor & Francis, John Wiley & Sons, MDPI, Tech Science Press, and other journals. Some conference papers were also selected. In addition, we searched for papers sources to find missing papers. In this paper, only the papers published in English were selected. The following keyword combinations have been used to search for papers:

‘Deep learning’, ‘Artificial neural networks’, ‘Meta-heuristics’, ‘Parameters optimization’, ‘Optimized, ‘Training’, ‘Learning algorithm’, ‘Deep Autoencoder’, ‘Adaptive Network Fuzzy Inference System’, ‘Convolutional Neural Network’, ‘Deep Boltzmann Machine’, ‘Deep Belief Network’, ‘Deep Neural Networks’, ‘Evolutionary Deep Networks’, ‘Feed Forward Neural Network’, ‘Generative Adversarial Network’, ‘Long Short-Term Memory’, ‘Machine Learning’, ‘Radial Basis Function Neural Network’, ‘Recurrent Neural Network’, ‘Artificial Bee Colony’, ‘Ant Colony Optimization’, ‘Artificial Intelligence’, ‘Bat Algorithm’, ‘Biogeography-Based Optimization’, ‘Chimp Optimization Algorithm’, ‘Cuckoo Search’, ‘Differential Evolution’, ‘Evolutionary Algorithm’, ‘Evolutionary Computation’, ‘Evolutionary Deep Learning’, ‘Evolution Strategy’, ‘Firefly Algorithm’, ‘Genetic Algorithm’, ‘Gravitational Search Algorithm’, ‘Grasshopper Optimization Algorithm’, ‘Grey Wolf Optimizer’, ‘Harmony Search’, ‘Jaya Algorithm’, ‘Memetic Evolution Algorithm’, ‘Multi-objective Optimization’, ‘Non-dominated Sorting Genetic Algorithm’, ‘Particle Swarm Optimization’, ‘Quantum-Based Algorithm’, ‘Simulated Annealing’, ‘Swarm Intelligence’, ‘Trajectory-Based Optimization’, ‘Tabu Search’, and etc.

In this paper, we have tried to collect and discuss all research from the beginning of 1988 to 2022 (September), and therefore 627 articles were selected. The bibliometric tool in this paper was such that first, all papers' titles and the abstract quality of journals based on JCR were reviewed. After this initial review, 187 papers were deleted. Then, the papers that entered the next phase were thoroughly reviewed, and all the discussions and challenges related to this literature review were presented in the next sections.

After analyzing the candidate papers, we found that optimizing the parameters of artificial neural networks and deep learning architectures is a major challenge, and meta-heuristic algorithms are a promising way to solve this challenge. We also noticed that by the mid-2022, there would be a big gap in collecting all papers in this field. Finally, the research questions that need to be answered are as follows:

-

(1)

Why is the optimization of ANNs and DL parameters important?

-

(2)

Which MH algorithms are more used to optimize ANNs and DL architectures?

-

(3)

Which of the ANN and DL parameters are optimized by meta-heuristic algorithms?

-

(4)

Which applications (and dataset) are solved by DLs optimized by meta-heuristic algorithms?

-

(5)

Which ANN and DL architectures are optimized by meta-heuristic algorithms?

-

(6)

What is the effect of using meta-heuristic algorithms to optimize ANNs and DL architectures?

-

(7)

What is the effect of improving meta-heuristic algorithms (and combination of MHs) to optimize ANNs and DL architectures?

3 Background

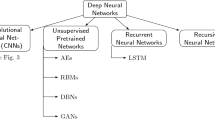

In the late 1990s, two events created a new challenge in neural networks that marks the beginning of DL today. Long short-term memory (LSTM) was introduced by Hochreiter and Schmidhuber in 1997 and is still one of the most popular DL architectures [55]. In 1998, LeCun et al. developed the first convolutional neural network (CNN), LeNet-5, which yielded significant results in the MNIST dataset [56]. Neither CNN nor LSTM attracted the attention of the large AI community at the time. The last event in the return of deep neural networks (DNNs) was a paper by Hinton et al. in 2006 that introduced deep belief networks (DBN) and produced far better results in the MNIST dataset [57, 58]. After this paper, the renaming of deep neural networks to DL was completed, and a new era in the history of AI began. Figure 4 shows common DL architectures, which are: Long short-term memory (LSTM), Convolutional Neural Networks (CNNs), Deep Belief Networks (DBN), Recurrent Neural Networks (RNN), Deep Boltzmann Machines (DBM), Deep Auto Encoder (DAE), and Deep Neural Networks (DNN).

Much more research is needed to train and optimize the parameters and structure of ANNs and DL architectures. The learning process of ANNs and DLs is one of the most difficult machines learning challenges and has recently attracted the attention of many researchers [8, 10]. Figure 5 shows an example of the evolutionary deep learning architecture (PSO-DCNN) for classification problem.

In recent years, MH algorithms have emerged as a promising method for training ANNs and DLs. The term MH was first introduced in 1986 by Glover [59]. MH methods have become very popular in the last two decades. In designing the MH algorithm, two contradictory criteria are considered: Exploration in the search space and exploitation of the best solutions. In exploration, unsearched areas are visited to ensure that all areas of the search space are searched uniformly. Potential areas are explored more fully in exploitation to find a better solution. Unlike exact methods, MHs solve large-scale problems in a reasonable time. Figure 6 shows the different types of MHs, which include four main categories.

Since a few decades ago, a few nature-inspired meta-heuristic algorithms, such as genetic algorithm (GA) [60], ant colony optimization (ACO) [61], particle swarm optimization (PSO) [62], simulated annealing (SA) [63], and differential evolution (DE) [64] have been introduced and used for different optimization problems. Afterward, many studies concentrated on the improvement or adaptation of these MH algorithms for new applications. Other researchers tried to introduce new meta-heuristic algorithms by taking inspiration from nature. Some newer algorithms such as the grey wolf optimization (gwo) [65], black widow optimization (BWO) [66], chimp optimization algorithm (ChOA) [67], red fox optimization (RFO) [68], and gannet optimization algorithm (GOA) [69] are the results of such efforts. Table 1 presents general information about some of the more popular algorithms. In the following, five well-known algorithms called particle swarm optimization (PSO), genetic algorithm (GA), artificial bee colony (ABC), differential evolution (DE), biogeography-based optimization (BBO), and two state-of-the-art competitive algorithms called grey wolf optimization (GWO), and chimp optimization algorithm (ChOA) are introduced.

3.1 Genetic Algorithm (GA)

Genetic algorithm is an exploratory search inspired by Charles Darwin’s theory of natural evolution, first introduced by Holland in 1975 [60]. This algorithm reflects the natural selection process in which the best individuals for reproduction are selected to produce offspring. This algorithm repeatedly changes the population of individual solutions. In each generation, GA randomly selects individuals from the current population and uses them as parents to produce offspring for the next generation. Over successive generations, the population "evolves" toward an optimal solution. Four phases are considered in a GA.

-

Initial Population This process begins with a group of chromosomes called a population. Each chromosome is a solution to the problem you want to solve. A chromosome is characterized by a set of variables called genes.

-

Selection Two pairs of chromosomes (parents) are selected based on their fitness scores. Chromosomes with high fitness have more chance to be selected for reproduction.

-

Crossover This operator is the most significant step in a GA algorithm. For each pair of parents to be mated, a crossover point is randomly selected from within the genes. Offspring are created by exchanging the genes of parents. The crossover operator is applied to improve the exploitation of algorithm. This operator actually searches the space around a chromosome.

-

Mutation In some newly formed offspring, some of their genes can be subjected to a mutation. The mutation operator is applied to enhance exploration.

Today in many applications, GA is used to train the deep learning architectures such as convolutional neural network (GA-CNN). In this proposed architectures, GA optimizes the weights and biases of the CNN. In the following, GA modeling for this problem is presented. For GA modeling, one of the main tasks is to define a solution in the form of a chromosome. Figure 7 shows the definition of a chromosome in GA.

Figure 8 shows the single point crossover operator of standard GA. As can be seen, in a single-point crossover, only two chromosomes are combined. Figure 9 illustrates the mutation process of GA.

3.2 Differential Evolution (DE)

Differential evolution (DE) is a global optimization algorithm developed by Storn and Price in the year 1997 [64]. Similar to other popular approaches, such as genetic algorithm and evolutionary algorithm, the differential evolution starts with an initial population of candidate solutions. These candidate solutions are iteratively improved by introducing crossover, mutation, and selection into the population, and retaining the fittest candidate solutions. Due to its several competitive advantages, DE is one of the most popular MH algorithm used by researchers and practitioners to tackle a diverse set of real-world applications. First, the implementation of DE is simpler than most other MHs. This feature enables those practitioners who may not have strong coding skills to make simple adjustments to the DE coding to solve problems. Second, despite its simplicity, DE can show a more promising optimization ability than other MHs in solving different types of optimization problems such as nonlinearity and multimodality. Third, various DE algorithms have appeared as the top three best-performing optimizers in most CEC competitions since 2005. Figure 10 shows the flowchart of the DE algorithm.

3.3 Particle Swarm Optimization (PSO)

Particle Swarm Optimization (PSO) algorithm is one of the most important intelligent optimization algorithms in the field of Swarm Intelligence. This algorithm was introduced by Kennedy and Eberhart in 1995, inspired by the social behavior of animals such as fish and birds that live together in small and large groups. PSO is suitable for a wide range of continuous and discrete problems and has performed very well in different optimization problems [62].

In PSO, all possible solutions are mapped to corresponded particles, and every particle is assigned an initial velocity that deputes a position change. For calculating the next velocity of the particles in the solution space, an optimization function is utilized. Particle velocity is made of three main movements: a) the percentage of the previous movement's continuation, b) the movement toward the best personal experience, and c) the movement toward the best global experience. Equations (1) and (2) are respectively expressing the update of velocity and position of the particles.

3.4 Artificial Bee Colony (ABC)

Artificial bee colony (ABC) is a swarm based meta-heuristic algorithm that was introduced by Karaboga in 2005. ABC was inspired by the intelligent search behavior of honey bees [78]. In ABC algorithm, the colony contains three types of artificial bees (Fig. 11):

-

Scout bees Solutions that are randomly generated to discover new spaces are called scout bees. Scout bees are responsible for exploring the search space.

-

Employed bees A number of scout bees with good fitness function become employed bees. Employed bees are responsible for advertising quality food sources.

-

Onlooker bees The onlooker bees are responsible for searching the neighborhood for employed bees. Onlooker bees receive information about food sources and search around these sources. The role of these bees is both exploitation and exploration of algorithm.

In ABC, scout bees randomly discover a population of initial solution vectors and then repeatedly improve them by onlooker and employed bees (using neighbor search method to move towards better solutions while eliminating poor solutions). In general, ABC uses two main methods (neighbor search and random search) to get the optimal answer: Random search by scout and onlooker bees and neighbor search by employed and onlooker bees. In ABC, each candidate answer indicates the position of food source, and the quality of the nectar is used as a fitness function. In this algorithm, first, all initial populations are explored by scout bees. Scout bees with best fitness functions are selected as the employed bees. Employed bees exploit the solution positions and then onlooker bees are created. The higher the quality of the employed bee, the more onlooker bees will be created around it. The onlooker bee also select new food positions (using the employed bee information) and exploit around these positions. In the next step, random scout bees are created to find new random food positions. ABC algorithm can be formulated as Eq. (3)-(5).

where.

\({P}_{i}\) = Probability of selecting employed bees by onlooker bees.

\({fit}_{i}\) = Fitness function of the \({i}^{th}\) solution.

\({V}_{ij}\) = Onlooker bee.

\({X}_{L}^{j}\) = Scout bees.

\({X}_{min}^{j}\) = Low limit of search space.

\({X}_{max}^{j}\) = High limit of search space, \(SN\) = Number of employed bees.

\(i\) \(\in \){1, 2, …, SN}.

\(j\) = Dimension \(\in \) {1, 2, …, D}.

\(k\) = Onlooker bee number.

\({\varphi }_{ij}\) is the random number \(\in [0, 1]\)

\(L\) = Scout bee number.

3.5 Biogeography-Based Optimization (BBO)

Biographical-based optimization is a population-based evolutionary algorithm first proposed by Dan Simon in 2008 [83]. The answer in BBO is called habitat and habitat is considered as a vector of its habitant. In addition, the value of each habitat is defined by the habitat suitability index (HSI). The high value of HSI shows high fitness function of habitat. Three main operators of BBO include migration, mutation and elitism. In BBO, each habitat has its own emigration rate, immigration rate, and mutation rate. The emigration (\({\mu }_{j}\left(k\right)\)) rate and immigration rate (\({\lambda }_{j}\left(k\right)\)) are defined as Eq. (6) and Eq. (7).

In which, \(k(j)\) represents the rank of the jth habitat after sorting accordance to their HSI and \(N\) is the highest rank in the total habitat (population size). The rank \(k(j)\) is related to the habitat suitability index (fitness function). In addition, \(E\) represents the highest emigration rate and \(I\) represents the highest immigration rate. Migration, mutation and elitism are the main operators of this algorithm. By assuming \(H_{i}\) as the host habitat and \(H_{j}\) as the guest habitat, the migration process for the standard BBO will be as the Eq. (8):

According to the Eq. (8), the host habitat (selected based on the immigration rate and roulette wheel method) receives information only from the guest habitat (selected based on the emigration rate and roulette wheel method) and itself.

3.6 Grey Wolf Optimization (GWO)

GWO is a swarm-based MH algorithm inspired by the the gray wolf’s hunting policies [65]. GWO divide the population into four levels: alpha, beta, delta, and omega. Alphas are the leaders that make decisions about living, hunting, and moving wolfs, while the beta act as an advisor to the alpha. The delta is responsible for warning when there is danger and protecting the pack, providing food and caring for sick or injured wolves. In the end, Omega is the last wolve that has to obey leaders. They follow four phases: hunting, searching, encircling, and then attacking the prey. GWO is one of the state-of-the-art competitive MH algorithm, which has attracted great attention of researchers. GWO is simple to set parameters, flexible and has a good trade-off between exploration and exploitation.

3.7 Chimp optimization Algorithm (ChOA)

ChOA algorithms is one of the new MH algorithm introduced by Khishe and Mosavi in 2020. ChOA is inspired by the chimps’ movement in group hunting and their sexual motivations [67]. In the ChOA, prey hunting is utilized to reach the optimal solution in the optimization problem. ChOA divides hunting into four main phases: driving, blocking, chasing, and attacking. In the first, ChOA is initialized by the generating a random chimps’ population. Chimps are then randomly classified into four groups: attacker, chaser, barrier, and driver. In order to model driving and chasing the prey, Eqs. (9)–(13) have been proposed.

where, \({{\varvec{X}}}_{prey}\) is the prey position vector, \({{\varvec{X}}}_{chimp}\) denote the chimp position vector, \(t\) present the current iteration, \({\varvec{a}},\boldsymbol{ }{\varvec{c}} and {\varvec{m}}\) are the coefficient vectors, \({\varvec{f}}\) is the dynamic vector \(\in [0, 2.5]\), \({{\varvec{r}}}_{1} and {{\varvec{r}}}_{2}\) are the random vectors \(\in [0, 1]\), and \({\varvec{m}}\) denote a chaotic vector.

The chimps first detect the prey’s position in the hunting step using driver, blocker, and chaser chimps. In the exploitation process, the hunting process is done by attackers. For this purpose, the prey’s position is estimated by the attacker, barrier, chaser, and driver chimps, and other chimps update their position through the prey. This process is formulated as Eqs. (14)–(16).

where, \({{\varvec{X}}}_{Attacher}\) denotes the best search agent, \({{\varvec{X}}}_{Barrier}\) is the second-best search agent, \({{\varvec{X}}}_{Chaser}\) presents the third-best search agent, \({{\varvec{X}}}_{Driver}\) is the fourth-best search agent, and \({\varvec{X}} (t+1)\) is the updated position of each chimp.

Also, to set up the exploration process, \({\varvec{a}}\) parameter is applied such that \({\varvec{a}} >1\) and \({\varvec{a}} <-1\) is the cause of diverging chimps and preys. As well, \({\varvec{a}}\) parameter with the values between + 1 and − 1, help the chimps and preys to be converged and will lead to improved exploitation. In addition, \({\varvec{c}}\) parameter helps the algorithm to have the exploration process. Finally, all chimps attack their prey to achieve social rights (sexual incentive) after prey hunting regardless of their duties. In order to formulate social behavior, chaotic maps are used as Eq. (17).

3.8 Memetic Algorithms (Hybridization)

It is complicated to find the best possible solution in the search space in large-scale optimization problems. Moreover, changing algorithm variables does not have much influence on the algorithm convergence. Therefore, for massive dataset with high complexity, even if the researchers have determined accurate initial parameters, the algorithm will not be able to perform adequate exploration and exploitation. Consequently, to achieve comprehensive global and local searches, we need to apply powerful operators to make better exploration and exploitation. MH algorithms can be combined with others and overcome this problem by using the advantages and operators of other algorithms [125]. Despite promising results achieved by MHs over the past years, many successful attempts have been made that do not pursue a single inspiration from nature but compound various MHs exploiting their complementarity. This is particularly important for challenging optimization applications where combination methods show promising performance, leading to further intensification of the research. Generally, High-level hybridization of MHs is achieved by running algorithms in a sequence where all factors changed by one MH are transferred to the other algorithm [125]. According to the literature review, most hybridization models are designed for specific optimization problem, including clustering, feature selection, and image segmentation. Since modelling a hybrid model that would be able to improve more than one MH is challenging, available solutions mostly use two competitive algorithms to an optimization problem. In recent decades, researchers have utilized a combination of algorithms to improve the performance of the optimization process.

3.9 Modification of MH (Devoted Local Search and Manipulating the Solutions Space)

The increasing discovery of alternative methods to solve optimization problems makes it necessary to parallelize and modify available algorithms. Achieving a suitable solution using a MH algorithms may need a long runtime, iterations, or population. The first one is to use the neighborhood search method in order to minimize the exploration of the solution space. In addition, powerful CPU can affect the convergence speed of the MH algorithm and therefore work more efficiently. In the proposed neighborhood search approach, smaller populations called groups may formed. Suppose the number of computer cores is specified at the beginning of the algorithm. In comparison with the standard version of MH algorithms, an initial population consisting of N individuals is generated randomly. From this population, suitable individuals are selected. Each individual in population will be the best adapted solution in the smaller group that will be created under his leadership. The second proposed approach involves manipulating the solutions space to minimize the number of calculations. In this proposition, the multi-threading approach plays a big role because dividing the space and selecting the best areas does not cost extra. In addition, the third proposed approach is the combination of the previous two methods. While the proposed approach of parallelization and manipulation of solution space improves the performance of classical algorithms, they are so flexible that can be improved with different ideas. In addition, it achieves better results in different applications [126].

4 Review of the Training DL and AANs by MH Algorithms

This section provides an overview of the optimization of neural networks and DL architectures using MH algorithms. The review of papers is divided into two parts: ANN optimization and DL optimization.

4.1 Review1: Training the AANs by MH Algorithms

This section provides a comprehensive overview of the optimization of different types of ANNs using MH algorithms. Optimization of ANNs is often considered from several aspects: optimization of weights, hyper-parameters, network structure, activation nodes, learning parameters, learning algorithm, learning environment, etc.

Eberhart and Kennedy [62] used the PSO algorithm to optimize the weights of an MLPNN. The proposed architecture performed very well on a benchmark data set. Storn and Price [64] used a differential evolution algorithm to optimize the weights of an FFNN. Experiments on the nonlinear optimization problem indicated the superiority of the proposed DE-FFNN algorithm. PSO algorithm was used by Chunkai et al. [127] to optimize the weights and architecture of MLPNN. This hybrid approach was introduced to model the quality estimation of a product. The results showed that the performance of PSO-MLPNN is better than other algorithms. Li et al. [128] used the genetic algorithm to train the parameters and weights of an ANN. The proposed architecture (GA-ANN) showed good performance for the pollutant emissions problem.

Leung et al. [129] used the improved genetic algorithm (IGA) to optimize the architecture and weights of an ANN. This study compared the proposed architecture (IGA-ANN) with other architectures and presented better results. Meissner et al. [130] used an improved PSO algorithm to optimize the number of neurons, parameters, and weights of an ANN. The developed architecture showed good results in benchmark datasets. Geethanjali et al. [131] used the PSO algorithm to train the ANN (MLFFNN). The results showed that the PSO- MLFFNN architecture was more accurate and faster than the BP- MLFFNN architecture. Yu et al. [132] used PSO and DPSO algorithms to optimize the architecture and parameters (weight and bias) of a three-layer FFANN network. The proposed algorithm was named ESPNet. A self-adaptive evolutionary strategy was used to improve PSO and DPSO. Experimental results from two real-world problems show that ESPNet can generate compact neural networks with good generalizability.

Khayat et al. [133] used GA and PSO algorithms to optimize the weights of a SOFNN. The results showed that the optimized SOFNN architecture based on GA and PSO performs well. Lin and Hsieh [134] used the improved PSO algorithm to optimize the weights of a three-layer neural network. The proposed approach provided good performance for the classification data. Cruz-Ramírez et al. [135] used the Pareto Memetic Differential Evolution Algorithm (MPDA) to optimize the structure and weights of a neural network. The proposed approach performed well in benchmark problems. Subudhi and Jena [29] used the combination of the memetic differential evolution (MDE) algorithm and BP algorithm (DEBP) to train a multilayer neural network to identify a nonlinear system. DEBP performance was compared with six other algorithms such as Back Propagation (BP), Genetic Algorithm (GA), PSO, DE, Back Propagation genetic algorithm (GABP), and Back Propagation Particle Swarm Optimization (PSOBP). The results of different algorithms showed that the proposed DEBP has better identification compared to other cases.

Malviya and Pratihar [136] used PSO, BP, and two clustering algorithms (including Fuzzy C-means) to train the RBFNN and MLFFNN networks for the MIG welding process problem. In this research, connection weights and learning parameters are optimized. Zhao and Qian [137] used the CPSO algorithm to optimize the weights and architecture of a three-layer FFNN. The performance of CPSO-FFNN was compared with the existing architectures in the research literature, and the results showed the superiority of the proposed architecture. Green II et al. [138] used the CFO algorithm to optimize the weights of an ANN. The performance of the CFO was compared with the PSO algorithm, which shows the superiority of CFO-NN.

Vasumathi and Moorthi [139] used the PSO algorithm to optimize the weights of an ANN. The results showed that the proposed PSO-ANN architecture performs well in the harmonic estimation problem. Yaghini et al. [140] used a combination of the improved particle swarm optimization (IOPSO) and the BP algorithm to train an ANN. The developed architecture was implemented on eight benchmark datasets. IOPSO-BPA-ANN also performed better than the other 10 algorithms. Dragoi et al. [141] used the differential evolutionary self-adaptation algorithm (SADE) to optimize the weights, architecture, and learning parameters of an ANN. The developed approach for the aerobic fermentation process was proposed and presented good results. Ismail et al. [142] used a combination of PSO and BP algorithms to train the product unit neural network (PUNN). The PSO-BP-PUNN architecture performed better than the PSO-PUNN and BP-PUNN architectures.

Das et al. [143] used the PSO algorithm to train ANN. In this study, all four parameters of weight, number of layers, number of neurons and learning parameters were optimized simultaneously. According to the results, the PSO-ANN architecture performed better than other architectures in the literature. Mirjalili et al. [144] used the BBO algorithm to optimize the weights of an MLPNN for classification and function approximation problems. They compared the BBO algorithm with five other metaheuristic algorithms and the BP and ELM algorithms. BBO results were better than other algorithms in terms of accuracy and convergence speed. Jaddi et al. [145] used the improvement of the bat algorithm to optimize an ANN. Where both the ANN structure and the network weights are optimized. Statistical analysis showed that the bat algorithm with Ring and Master-Slave strategies for the classification problem performed better than other methods in the literature.

Jaddi et al. [146] used the improved bat algorithm (MBA) to optimize the weights, architecture, and active neurons of an ANN. The hybrid algorithm showed high performance in six classification problems, two-time series problems and one real-world problem. González et al. [147] used the fuzzy gravitational search algorithm (FGSA) to train a neural network's modules, layers and nodes. The proposed FGSA-NN architecture was implemented for the pattern recognition problem and provided acceptable results. Gaxiola et al. [148] used particle swarm optimization and a genetic algorithm to optimize the weights of type-2 fuzzy inference systems. The developed architectures were implemented on time series benchmark datasets. According to the results, NNT2FWGA and NNT2FWPSO algorithms performed better than NNT2FW. Karaboga and Kaya [149] used the hybrid artificial bee colony algorithm (aABC) to train ANFIS. The performance of aABC-ANFIS was compared with 14 other architectures on four nonlinear dynamic systems, which showed its superiority in accuracy.

Jafrasteh and Fathianpour [150] used an improved artificial bee colony algorithm (SPABC) to train the LLRBF neural network. The results of the proposed algorithm were compared with six other MH algorithms that show the superiority of SPABC-LLRBFNN. Khishe et al. [19] used the improved migration model of the biogeography-based optimization to optimize the weights and biases of an MLPNN. They developed the exponential-logarithmic migration model to improve BBO performance. Additionally, the performance of the proposed algorithm was compared with six other MH algorithms for sonar data classification, which showed the superiority of IBBO-MLPNN. Ganjefar and Tofighi [151] used a combination of GA and GD algorithms to train an ANN. The proposed HGAGD-NN approach has yielded good results for several benchmark problems.

Aljarah et al. [152] used the whale optimization algorithm (WOA) to train the weights of an MLPNN. They implemented the proposed WOA-MLP algorithm on 20 benchmark problems, which produced better accuracy and speed than the BP, GA, PSO, ACO, DE, ES, and PBIL algorithms. Heidari et al. [153] used the grasshopper optimization algorithm (GOA) to train an MLPNN. The performance of GOA-MLPNN was evaluated with eight other algorithms on five medical identification classification datasets. Finally, the proposed GOA-MLPNN algorithm gave better results in different criteria. Hadavandi et al. [154] proposed an MLPNN simulator based on the gray wolf optimizer (GWO) to predict the tensile strength of Siro-Spun yarn. The gray wolf optimizer algorithm was applied to train the neural network weights. Finally, proposed hybrid architecture GWO-MLPNN performed better than a traditional learning-based neural network (BP-MLPNN).

Haznedar and Kalinli [155] used the SA algorithm to train an ANFIS. The SA-ANFIS architecture was compared with GA, BP algorithms and various architectures from the research literature, which showed the superiority of SA-ANFIS. Pham et al. [156] used biogeography-based optimization to optimize the weights and parameters of an MLPNN to predict the soil composition coefficient. This study used BP-MLPNN, RBFNN, Gaussian Process (GP) and SVR algorithms to compare with BBO-MLPNN. According to the results, the BBO-MLPNN algorithm excelled in three criteria: RMSE, MAE and correlation coefficient. Han et al. [157] used the improved mutation model of the DE algorithm to optimize the neural network. The DE-BPNN model has been implemented to predict the performance of pre-cooling systems, which has yielded far better results than other networks.

Rojas-Delgado et al. [158] used particle swarm optimization (PSO), firefly algorithm (FA), and cuckoo search (CS) to train the ANN. The various neural network architectures trained by meta-heuristic algorithms were implemented on six benchmark problems that performed very well compared to traditional methods. Khishe and Mosavi [159] used the chimp optimization algorithm to optimize the weights and biases of an MLPNN. In that study, the performance of the MLPNN-ChOA algorithm was compared with the performance of IMA, GWO and a hybrid algorithm on the underwater acoustic dataset classification problem, which showed the superiority of the MLPNN-ChOA. Wang et al. [160] used the PSO and CA algorithms to optimize the neural network weights. The combined particle swarm optimization (HPSO) algorithm was first developed in that research. The HPSO algorithm was combined with CA, and finally, the HPSO-CA algorithm was implemented for network training (HPSO-CA-ANN). The developed algorithm and five other MH algorithms were implemented on 15 benchmark datasets that performed better than the others.

Al-Majidi et al. [161] used the PSO algorithm to optimize the weights and architecture of FFNN. The results showed that the optimized FFNN architecture based on the PSO accurately predicts the maximum power point. Ertuğrul [54] used the differential evolution algorithm (DE) to optimize the nodes and learning parameters of RaANN. The results showed that the differential evolution algorithm for 48 synthetic datasets performed better than other methods. Ansari et al. [162] used the magnetic optimization algorithm (MOA) & PSO to optimize the weights of the back-propagation neural network. According to the results, the proposed approach (MOA-BBNN) performed well in the bankruptcy prediction problem.

Zhang et al., [163] used the chicken swarm optimization (CSO) algorithm to optimize the weights, biases, and number of layers of the Elman neural network (ENN). According to the results, the proposed hybrid approach (CSO-ENN) performed well in the Air pollution forecasting. Also, the performance of the proposed hybrid architecture has been better than other algorithms. Li et al., [164] used the biogeography-based optimization (BBO) algorithm to optimize the weights of MLPNN for medical image classification. The results showed that the proposed hybrid architecture (BBO-MLPNN) performs better than the other original architectures.

Table 2 summarizes the above research as well as many other studies. As can be seen, for each research, the author's name, year of publication, type of neural network, optimized components in the network, type of MH algorithm used, application and data set used are listed. In the following, for a more comprehensive review, some statistical analysis of the research collected in Table 2 is presented.

4.1.1 Investigation of Optimized Components in ANNs

As an optimization problem, MH algorithms formulate the optimal estimation of ANN components (such as weights, number of layers, number of neurons, learning rate, etc.). This section examines the abundance of MH use for optimized components in neural networks (according to the papers in Table 2). Figure 12 shows the relative abundance of research on optimized components in ANNs using MH algorithms.

As shown in Fig. 12, in 221 studies (69%), weights and biases have been adjusted using MH algorithms, which shows a high percentage. In 47 studies (14%), the number of neurons in the layers has been adjusted using MH algorithms. Moreover, in 22 studies (7%), the number of layers in the neural network has been adjusted. Finally, in 31 studies (10%), learning parameters, learning algorithms or activation functions have been adjusted. Figure 13 also shows the relative abundance of research in the simultaneous optimization of two components of ANNs.

As can be seen in Fig. 13, in 15 studies, weights and layers have been adjusted simultaneously. In 28 studies, weights and neurons; in 15 studies, weights and learning parameters; in 14 studies, the number of layers and neurons; in 6 studies, the number of layers and learning parameters; and in 14 studies, the number of neurons and learning parameters have been adjusted simultaneously. Figure 14 shows the relative abundance of research in the simultaneous optimization of three components of ANNs. As can be seen, in 6 studies, weights, the number of neurons and learning parameters have been adjusted simultaneously. In 7 studies, weights, number of layers and number of neurons; in 2 studies, weights, number of layers and learning parameters; in 5 studies, number of layers, number of neurons and learning parameters were adjusted simultaneously. According to Table 2, in only one study [143], all four neural network components were adjusted simultaneously. Therefore, little research has been done in this area.

4.1.2 Investigation of Meta-Heuristic Algorithms Used in Ann's Optimization

According to Table 2, many MH algorithms have been developed to optimize neural networks. Figure 15 shows the MH algorithms used to optimize ANNs. PSO, 76 implementations and GA, 47 implementations, was the most used MH algorithms. GWO, DE, SA, ABC, GSA, WOA, BBO, and FOA algorithms are also in the next ranks. Most researchers tend to extend novel hybrid algorithms by combining MHs to optimize the hyper-parameters of ANNs. The development of hybrid MHs helps improving algorithms performance and capable of solving complex optimization problems. According to the results of Table 2, many researches have used the modification and hybridization of meta-heuristic algorithms to optimize neural network parameters. Also, the performance of the proposed hybrid MH algorithms have been better than others.

4.1.3 Checking the Number of Papers Published in Journals and Years

In this section, the papers in Table 2 are categorized according to the type of journals and the year of their publication. Figure 16 shows the percentage of papers published in various journals (based on Table 2). As shown, 74 papers (44%) in Elsevier, 30 papers (21%) in Springer, 27 papers (13%) in IEEE, 16 papers (8%) in Taylor & Francis, 13 papers (6%) in John Wiley & Sons, and 14 papers (8%) in other journals have been published regarding the use of MH for ANNs.

Papers published in journals (based on Table 2)

Figure 17 also indicates the changes in the number of papers published in different years about the use of MH for Training ANNs. Between 1988 and 2002, few papers were developed for neural network optimization. From 2003 to 2010, neural network optimization received a little more attention from researchers, and the number of papers in this field increased. But from 2011 to 2022, many researchers have worked on neural network optimization. Especially since 2021, the number of these papers has been increasing. This implies that this problem is still a challenge and many problems need to be resolved.

4.1.4 Applications of Hybrid MH-NNs

In this section, the application of the papers in Table 2 is evaluated. Figure 18 shows the application of the papers regarding the use of MH for ANNs. 77 papers in benchmark problem (Classification, prediction, time series, optimization, system identification), 53 papers in electrical engineering, signal processing and energy systems, 34 papers in civil engineering, 18 papers in mechanical engineering, 16 papers in biomedical and chemical engineering, 15 papers in medical image classification and medical diseases diagnosis, 8 papers in environmental management, 8 papers in economy and product quality, and 19 papers in other applications have been published regarding the use of MH for ANNs.

As can be seen, most of the MH-ANNs were implemented on benchmark problems and datasets. The optimal solutions of the benchmark problems are known. Therefore, they are a very good criterion for evaluating algorithms. Also, many evolutionary ANNs have been implemented in electrical engineering, civil engineering, mechanical engineering, and medical image classification applications. The results of these papers show that the proposed hybrid ANNs architectures perform better than others. Therefore, it can be said that evolutionary artificial neural networks (MH-ANNs) are promising methods in these applications.

4.1.5 Contributions of Different Continents in Using the Hybrid MH-NN Models

Figure 19 shows the distribution of studied papers according to the affiliation of the authors for each continent. As can be seen, Asia has the largest portion of contributions in the world with the maximum number of papers from China, Korea, and India, while America has the lowest contributions.

4.2 Review2: Training the DL Architectures by MH Algorithms

One of the weaknesses of DL architectures is finding the optimal value of algorithm parameters. This section provides a comprehensive overview of optimizing different DL architectures using MH algorithms. Optimization of DL architectures is often considered from several aspects: optimization of weights, hyper-parameters, network structure, activation nodes, learning parameters, learning algorithm, learning environment, etc. [9].

Ku et al. [367] used the genetic algorithm to optimize the weights of an RNN. The proposed approach (GA-RNN) was compared with Lamarckian and Baldwinian mechanisms, which indicated better results (convergence speed and accuracy). Blanco et al. [368] used the genetic algorithm (GA) to improve the performance of an RNN. The results indicated that the proposed algorithm solves the time complexity well. Delgado et al. [369] used multi-objective SPEA2 and NSGA_II algorithms to optimize the topology and structure of an RNN. The proposed architectures performed well for the time series problem. Bayer et al. [370] used the NSGA_II to train an LSTM architecture. The results showed that the proposed network performs well in learning sequences.

Lin and Lee [371] used the improved PSO algorithm to optimize the weights of an RFNN. The results indicated that the IPSO algorithm for controlling nonlinear systems performed better than other methods (traditional PSO and GA). Subrahmanya and Shin [372] used the combination of PSO and CMA-ES algorithms to optimize the structure and weights of an RNN. According to the results, the proposed architecture (HMH-RNN) indicated good performance. Hsieh et al. [373] used the artificial bee colony (ABC) algorithm to optimize the weights of an RNN. According to experiments, the proposed approach indicates good capital market performance and can be implemented in a trading system to predict stock prices and maximize profits.

David and Greental [41] used combined gradient-based learning and genetic algorithm strategy to train a deep neural network. The proposed architecture performed very well in the benchmark data set. Shinozaki and Watanabe [40] used GA and CMA-ES algorithms to optimize the structure and parameters of a DNN. The results demonstrated that the proposed algorithm is suitable for adjusting neural network parameters. Sheikhan et al. [374] used the GSA binary algorithm to optimize the structure and weights of an RNN network. The proposed algorithm (BGSA-RNN) was compared with gradient-based and PSO algorithms, which provided significant results. A combination of evolutionary algorithm and DBN network was used by Chen et al. [375] for image classification. The results indicated that the execution time decreases rapidly.

Real et al. [376] used an evolutionary algorithm for convolutional neural network (CNN) training to classify CIFAR-10 and CIFAR-100 datasets. The findings implied that the proposed approach could provide competitive results in two popular datasets. Tang et al. [377] used the PSO algorithm to optimize the weights of a DSNN. The proposed algorithm performed very well in feature extraction problems and EEG signal detection. Song et al. [378] used improved biogeography-based optimization (IBBO) to optimize the parameters and weights of DDEA. The results indicated that the proposed approach (IBBO-DDEA) for gastrointestinal complications prediction performed better than other methods (such as ANN and other common architectures).

Da Silva et al. [379] used the PSO algorithm to optimize the hyper-parameters of a convolutional neural network. Experiments on a CAD system indicated an improvement in the accuracy of the proposed algorithm. The WWO algorithm was used by Zhou et al. [380] to optimize the structure and weights of a DNN. Experiments on several benchmark datasets indicated that the proposed WWO-DNN approach performs better than the gradient-based methods. Shi et al. [381] used the PSO algorithm to optimize the number of neurons in the hidden layers of a deep neural network. Experimental results demonstrated that the detection rate in the proposed algorithm was improved by 9.4% and 8.8% compared to conventional DNN and support vector machine (SVM). In addition, another experiment compared to the genetic algorithm (GA) proved that the proposed particle swarm optimization (PSO) is more effective in deep neural network (DNN) optimization. Hong et al. [382] used the genetic algorithm (GA) to optimize the parameters and hyper-parameters of the CNN. Experimental results for the price forecasting problem showed that the proposed GA-CNN always offers higher forecasting accuracy and lower error rates than other forecasting methods.

Guo et al. [383] used a distributed particle swarm optimization (DPSO) algorithm to optimize the hyper-parameters of convolutional neural network (CNN). Experiments on the image classification dataset indicated that the proposed DPSO method improved the performance of the CNN model while reducing computational time compared to traditional algorithms. ZahediNasab and Mohseni [384] used the genetic algorithm (GA) to optimize the deep neural network (DNN) activation function. Experiments on the medical classification and MNIST datasets showed the proposed approach's superiority. It was also stated that selecting an appropriate adaptive activation function plays an important role in the quality of a deep neural network. Jallal et al. [385] used an improved PSO algorithm for DNN training to improve the prediction accuracy of a solar tracker. The DNN-RODDPSO algorithm performed better than the standard algorithms in the literature. Elmasry et al. [386] used the PSO algorithm to optimize the hyper-parameters of three DL algorithms called DNN, LSTM-RNN and DBN. Experiments on the network intrusion detection problem proposed that these three developed architectures performed better than conventional architectures.

Kan et al. [387] used the adaptive particle swarm optimization (APSO) algorithm to optimize the weights and biases of the convolutional neural network (CNN). According to the results, the proposed hybrid approach (APSO-CNN) performed well in IoT network intrusion detection. Also, the performance of the proposed hybrid architecture has been better than other algorithms. Kanna and Santhi, [388] used the black widow optimization (BWO) algorithm to optimize the weights of CNN-LSTM for intrusion detection systems. The results showed that the proposed hybrid architecture (BWO-CNN-LSTM) performs better than the other original architectures. Ragab et al. [389] used enhanced gravitational search optimization (EGSO) algorithm to optimize the weights and biases of the convolutional neural network (CNN). According to the results, the proposed hybrid approach (EGSO-CNN) performed well in COVID-19 diagnosis problem. Also, the performance of the proposed hybrid architecture has been better than other algorithms.

Table 3 summarizes the above research as well as many other studies. As can be seen, for each research, the author name, year of publication, type of DL, optimized components, type of MH algorithm used, application and data set used are listed. In the following, for a more comprehensive review, some statistical analysis of the research collected in Table 3 is presented.

4.2.1 Investigation of optimized components in DL architectures

As an optimization problem, MH algorithms formulate the optimal estimation of DL components (such as hyper-parameter, weights, number of layers, number of neurons, learning rate, etc.). This section examines the abundance of MH use for optimized components in DL architectures (according to the papers in Table 3). Figure 20 represents the relative abundance of research on optimized components in DLs using MH algorithms. As demonstrated in Fig. 20, in 61 studies (20%), weights and biases have been adjusted using MH algorithms. In 76 studies (26%), the number of layers and neurons in the layers have been adjusted using MH algorithms. Moreover, in 114 studies (38%), hyper-parameters in DL architectures have been adjusted. Finally, in 47 studies (16%), learning parameters, learning algorithms or activation functions have been adjusted.

Figure 21 also indicates the relative abundance of research in the simultaneous optimization of two components of DLs. As can be seen in Fig. 21, in 14 studies, weights and layers, and neurons were adjusted simultaneously. In 12 studies, weights and hyper-parameter; in 4 studies, weights and learning parameters; in 40 studies, the number of layers and number of neurons and hyper-parameter; in 31 studies, the number of layers and number of neurons and learning parameters, and in 31 studies hyper-parameter and learning parameters have been adjusted simultaneously. Figure 22 also represents the relative abundance of research in the simultaneous optimization of three DL components (according to Table 3).

As can be seen, in 3 studies, weights, the number of layers and number of neurons and the hyper-parameter were adjusted simultaneously. In 3 studies, weights, number of layers and number of neurons and learning parameters; in 2 studies, weights, hyper-parameter and learning parameters; in 18 studies, hyper-parameter, number of layers and number of neurons and learning parameters were adjusted simultaneously. According to Table 3, in only 2 studies, all four DL components were adjusted simultaneously. Therefore, very little research has been done in this area (simultaneous optimization of three/four components).

4.2.2 Investigation of Meta-Heuristic Algorithms Used in DL's Optimization

According to Table 3, many MH algorithms have been developed to optimize DL architectures. Figure 23 represents the MH algorithms used to optimize DLs. PSO with 48 implementations and GA with 27 implementations were the most used algorithms. EA, GWO, FA, WOA, ABC, ACO, HS, NSGA_II, CMA-ES, and GOA algorithms are also in the next ranks.

4.2.3 Investigating the Abundance of MHs Used for Different Types of DL Architectures

Some of the popular DL architectures are Long short-term memory (LSTM), Convolutional Neural Networks (CNNs), Deep Belief Networks (DBN), Recurrent Neural Networks (RNN), Deep Boltzmann Machines (DBM), Deep Auto Encoder (DAE), and Deep Neural Networks (DNN). In this section, the abundance of MHs used for different DL architectures is investigated (Fig. 24). CNN with 96 implementations, LSTM with 37 implementations, and DBN with 24 implementations were the most used DL architectures, which are set using MH algorithms. DNN, RNN, DAE, DBM, GAN, DSNN, DAR, and EDEN architectures are also in the next ranks.

4.2.4 Checking the Number of Papers Published in Journals and Years

In this section, the papers in Table 3 are categorized according to the type of journals and the year of their publication. Figure 25 demonstrates the percentage of papers published in various journals (based on Table 3). As indicated, 71 papers (37%) in Elsevier, 39 papers (20%) in Springer, 25 papers (13%) in IEEE, 6 papers (3%) in Taylor & Francis, and 17 papers (9%) In John Wiley & Sons, and 35 papers (18%) in other journals have been published regarding the use of MH for DL architectures.

Papers published in journals (based on Table 3)

Figure 26 also represents the changes in the number of papers published in different years about the use of MH for Training DLs. Between 1988 and 2016, few papers were developed for DL optimization. From 2017 to 2020, DL optimization received a little more attention from researchers, and the number of papers in this field increased. But from 2021 to 2022, many researchers have worked on DL optimization. This problem is still a challenge, and many problems need to be resolved.

4.2.5 Applications of DLs

In this section, the application of the papers in Table 3 is evaluated. Figure 27 shows the application of the papers regarding the use of MH for DLs. 48 papers in medical image classification and medical diseases diagnosis, 46 papers in Benchmark problem (Classification, prediction, time series, optimization, recognition, system identification), 44 papers in electrical engineering, signal processing and energy systems, 23 papers in civil engineering and environmental management, 8 papers in mechanical engineering, 3 papers in biomedical and chemical engineering, 4 papers in economy and product quality, and 17 papers in other applications have been published regarding the use of MH for ANNs.

As can be seen, most of the DLs were implemented on medical image classification and benchmark problems (such as MNIST, CIFAR-10, Caltech, CINIC-10, and EMNIST datasets). According to Table 3, evolutionary CNN architectures have been used in many medical image classification applications. The results of these papers show that the proposed hybrid DL architectures perform better than others. Therefore, the combination of MH and CNNs methods can be useful for medical applications.

4.2.6 Contributions of Different Continents in Using the Hybrid MH-DL Models

Figure 28 shows the distribution of studied papers according to the affiliation of the authors for each continent. As can be seen, Asia has the largest portion of contributions in the world, while America has the lowest contributions.

5 Discussion, Statistical Results, Limitations, and Future Challenges

5.1 Discussion and Statistical Results of Tables 2 and 3

As can be seen from the results of Tables 2 and 3, neural network optimization has been considered by researchers from the past to the present. But the optimization of DL parameters has recently been considered, and more research is needed in this field. The main reason is that the DL concept has been seriously pursued since 2008. Therefore, many challenges and more research are needed in this field. The existence of many parameters in DL architectures has led to the use of MH algorithms to optimize them. According to Table 3, DL optimization has been considered by researchers since 2015.

According to the literature review, well-known MH algorithms such as GA and PSO have been used for training the NN and DL. But according to the No Free Lunch (NFL) theorem, each problem has its characteristics, and different algorithms must be tested to solve it [540]. According to the NFL theorem, it is very difficult to find a comprehensive MH algorithm to solve various problems [541]. Therefore, an MH algorithm may not be suitable for optimizing the NN and DL parameters. However, it works well in solving some problems. In addition, the only way to determine the convergence of the MH algorithm is through its experimental evaluations. Because MH algorithms search the problem space (based on their operators), it is difficult to choose the MH algorithm as the best method for a particular problem. Therefore, it is necessary to use different algorithms to optimize the NN and DL parameters.

In many research studies on optimization problems [18, 19, 542, 543], improving common versions of MH algorithms (and combination of algorithm) has increased exploitation and exploration power. In some recent research [66, 67, 120], new MH algorithms have been introduced, which have performed better than the old algorithms in many optimization problems. According to the literature review (Tables 2 and 3), in most research, common algorithms (such as PSO and GA) have been used to optimize NN and DL. Therefore, the development of old MH algorithms, as well as novel MH algorithms for optimizing NN and DL parameters, is a new challenge, which can be seen in recent papers in Tables 2 and 3.

It is complicated to find the best possible solution in the search space in large-scale optimization problems. Moreover, changing algorithm variables does not have much influence on the algorithm convergence. Therefore, for massive dataset with high complexity, even if the researchers have determined accurate initial parameters, the algorithm will not be able to perform adequate exploration and exploitation. Consequently, to achieve comprehensive global and local searches, we need to apply powerful operators to make better exploration and exploitation. MH algorithms can be combined with others and overcome this problem by using the advantages and operators of other algorithms. In recent decades, researchers have utilized a combination of algorithms to improve the performance of the optimization process. The weakness of an algorithm can be compensated by the operation of other algorithms.

Most researchers tend to extend novel hybrid algorithms by combining MHs to optimize the hyper-parameters of DLs and ANNs. The development of hybrid MHs helps improving algorithms performance and capable of solving complex optimization problems. According to the results, many researches have used the modification and hybridization of meta-heuristic algorithms to optimize ANN and DL parameters. Also, the performance of the proposed hybrid MH algorithms have been better than others.

In general, the optimal performance of the MHs should be able to achieve a suitable trade-off between exploration and exploitation features. The exploration operator can explore the search space more efficiently and perform a global search to avoid getting stuck in local minimum, but it may encounter slow convergence. On other hand, the exploitation operator leads to very high convergence rates, but may be trapped in a local minimum. Among the existing MH algorithms, some of them are better in convergence trend (exploitation) while others have more ability to avoid getting trapped in local optimum (exploration). Table 4 indicates the comparison of different MH algorithms in terms of their ability of finding global optimum, convergence trend, exploitation ability, exploration ability, parameter setting, and implementation. As can be seen, grey wolf optimizer, black widow optimization, chimp optimization algorithm, differential evolution, red fox optimization, capuchin search algorithm, and gannet optimization algorithm perform well in most properties and their operators can be used to improve other architectures. This framework is useful for researchers for their applications in improved hybrid algorithm.

According to the statistical results of Table 2, in only one study, the simultaneous optimization of all components (weights, number of layers, number of neurons and learning functions/parameters) of neural networks has been investigated. Also, in two study, the simultaneous optimization of all components (weights, number of layers and neurons, hyper-parameter, and learning functions/parameters) of DLs has been investigated. However, there is no research on training DL (simultaneous optimization of all components). So researchers in the future can optimize all components simultaneously to improve network performance. This is a challenge for both neural networks and DL architectures. In addition, in neural networks, in most cases, the weight of the network is optimized. But in DL architectures, weight, hyper-parameter, and network structure are optimized equally. Since optimizing ANN and DL architectures is a complex and multi-objective problem (MOO), using multi-objective MH algorithms or developing new multi-objective MH algorithms is also challenging. While in very few papers, multi-objective MH algorithms have been used to optimize ANN and DL parameters (as represented in Tables 2 and 3).

In optimizing DL algorithms, CNN architecture is more trained. According to the NFL theorem for MH algorithms, implementing all DL algorithms for various problems is also challenging. In fact, different DL architectures need to be implemented for different problems and their experimental results evaluated. Therefore, optimizing other DL architectures can be considered to solve various problems in the future. Table 5 also indicates the advantages and disadvantages of compared techniques.

5.2 Limitations of Deep Learning

Notwithstanding the positive outcomes of the reviewed papers, there are still some challenges and limitations related to deep learning and DL methods that should be addressed.

-

Over-fitting problem in a deep neural network Many parameters relate to unseen datasets in some complex applications. This can cause a difference in the error caused by the training dataset and the new unseen dataset.

-

Hyper-parameters optimization DL architectures have several hyper-parameters, for example, learning rate, number of hidden layers, number of neurons in each hidden layer, number of convolution and max-pooling layers, and so on. Most often these hyper-parameters are adjusted by trial and error method. MH algorithms formulate the optimal estimation of DL components (such as hyper-parameter, weights, number of layers, number of neurons, learning rate, etc.).

-

Computing Power Required High computing power is required to tackle a real-world problem using DL models. Therefore, experts are trying to develop high-performance multi-core GPUs and similar processing units such as TPUs in the future.

-

Gradient-based learning The learning process of DL architectures is considered one of the most challenging machine learning problems. Several past studies have used gradient-based methods to train DL architectures. However, gradient-based methods have major drawbacks such as stucking at local minimums in multi-objective cost functions, expensive execution time due to calculating gradient information with thousands of iterations and needing the cost functions to be continuous. Since training the ANNs and DLs is an NP-hard optimization problem, their structure and parameters optimization using the meta-heuristic algorithms has been considerably raised.

-

Dataset unavailability for various applications DL requires a large amount of training dataset. The classification accuracy of the DL architectures is highly dependent on the quality and size of the dataset. However, unavailability of the dataset is one the biggest barrier in the success of DL architectures.

-

Determining the type of DL architecture to solve a particular problem Many studies have used different DL architectures to solve engineering and medical problems. However, there is no explanation for how these architectures are chosen to solve specific problems.

-

Heterogeneity in image dataset The nature of data varies from hardware to hardware and thus, there are many variations in images due to sensors and other factors. In addition, the wide range of medical applications requires the combination of several different datasets for learning and accuracy of algorithms.

-

Architecture Implementation Cost Feature extraction can be done in advance and then the proper methods can be implemented. The purpose of this process is to reduce the computing runtime (training) and computing power required.

-

Lack of results of different DL architectures on benchmark database The lack of results of different DL architectures is still a challenge in solving many benchmark database or benchmark engineering problems. For example, in some studies [544, 545], the authors have used different DL architectures and compared the results with the decision tree.

-

Reasonable Computing Time Some applications with many variables in some deep learning methods, (such as DNN) have high dimensions, which poses a challenge for these models to obtain an accurate DNN in a reasonable execution time.

-

One-Shot Learning DL architectures require a lot of training data to provide high-quality results. For example, the Image-Net database contains more than a million images, and the DL architecture often requires thousands of instances to classify them correctly. Human does not need thousands of bicycle images to learn a picture of a bicycle. When a bicycle is shown to a child, they can often recognize another bicycle, even in different models, shapes, and colors.

-

Imbalanced data In this problem, one or more classes may have very few representatives in the training process. MH algorithms can be used to deal with such problems.

-

Theoretical backbone Unlike decision trees, SVMs, and other machine learning architectures, most of the DL methods are yet to possess a strong theoretical backbone.

5.3 Future Work

While deep learning models have been successfully applied in various application fields, there are future works and challenges that require to be addressed. Scientists and researchers should do more research and work to overcome the challenges facing the future of deep learning. In addition, more DL techniques and inspirations are needed to develop new DL architectures. New techniques will be necessary for complex applications. In addition, DL architectures can take advantage of various sub-domains of swarm intelligence and evolutionary computation that are still unexplored. In this section, according to the literature review, some relevant perspectives for future work are listed.

-

Design of DL methods Deep learning is used as an efficient method to deal with big data problem. Furthermore, DL method has get great success with a large number of unlabeled data. However, rather strong techniques are required when a limited training data is available. Therefore, it is important to consider designing DL techniques from multiple training datasets in the future.

-

DL and mobile devices The idea of DL chips has attracted the attention of many researchers. Deep learning techniques can be implemented in mobile devices with low-power energy.

-

Transfer Learning The learning architecture in the human brain has evolved over millions of years and has been transferred from generation to generation. Humans transfer part of their learning as an experience to future generations. In addition, humans constantly learn about different tasks that help them learn specific tasks faster. For this reason, learning different problems is achieved by making basic and easy settings. Developing the concept of transfer learning in DL is one of the challenges in this field and can be a new field of work for researchers in the future. Transfer learning reduces training time and the use of previous learning experiences in new tasks.

-

DL and Reinforcement Learning (RL) RL mainly involves goal-oriented algorithms that learn how to achieve a complex goal. Recently, the combination of DL and RL methods has attracted the attention of researchers. These methods have led to several applications such as self-driving cars and AlphaGo. Future works can focus on exploring MH algorithms in optimizing learning methods in deep RL.

-

Unsupervised Learning-Based DL Because having labeled data is usually costly, the next generation of DL techniques is more semi-supervised and unsupervised. Here, clustering concepts and algorithms can be used to improve the performance of DL algorithms.

-

Stability of DL Stability analysis of DL is considered an important problem in this field due to its numerous advantages for different applications. Therefore, we should focus on some problems such as stability analysis, state estimation, and synchronization for DLs.

-

Dimensionality reduction This problem is one of the most prevalent challenges needed to be addressed since the number of the features from deep learning method can be huge. This problem weakens the performance of the algorithm, since most of these features are redundant. To address this problem in the future, various MHs can be combined with DL models. MH algorithms first select the optimal features and then transfer them to a DL model.

-

Developing more challenging evolutionary DL models There are many papers in this field (EvoDL), but not much paper has been undertaken to evolve Generative Adversarial Network (GAN) by using MH algorithms. In addition, MH-based optimization algorithms may also be explored to evolve DL extensions of non-iterative learning paradigms.

-

Energy-efficient Learning Problem In most cases, DL architectures that work on big data are inefficient in energy consumption. On the other hand, the human brain requires very little energy to learning and often does not perform accurate calculations (estimates). This energy is enough to learn about many problems and can add to the power of generalization. Therefore, in the future, DL architectures must be designed to be energy efficient.

-