Abstract

Improving the accuracy and robustness of predictive models is an important goal in time series prediction (TSP). Ensembles that combine the predictions of multiple models have shown a strong capability to enhance forecasting precision. Nevertheless, a static ensemble does not perform well in all circumstances. This work proposes six novel dynamic ensemble selection (DES) algorithms for TSP: one DES algorithm based on Predictor Accuracy over Local Region (DES-PALR), two DES algorithms based on the Consensus of Predictors (DES-CP), and three Dynamic Validation Set determination algorithms. The first dynamic validation set determination algorithm is based on the similarity between the Predictive value of the test sample and the Objective values of the training samples. The second is based on the similarity between the Newly constituted sample for the test sample and All the training samples. The third is based on the similarity between the Output profile of the test sample and the Output profile of each training sample. Together, these algorithms realize dynamic ensemble selection for TSP. Experimental results on twelve benchmark time series datasets demonstrate that the proposed DES algorithms substantially improve predictive performance compared with current state-of-the-art prediction algorithms and static ensemble selection techniques.
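The abstract describes local-accuracy and consensus-based selection only at a high level. The sketch below illustrates the general idea for one-step-ahead forecasting; it is not the authors' DES-PALR or DES-CP implementation. Several choices are assumptions not taken from the paper: the local region is the k nearest validation windows by Euclidean distance, measured either on the raw lag vectors or on the base predictors' output profiles; local competence is mean absolute error; the m most competent predictors are averaged; and "consensus" is approximated by closeness to the median of all predictions. The function names (`local_region`, `des_local_accuracy`, `des_consensus`) and the three toy predictors are hypothetical.

```python
import math
import statistics
from typing import Callable, List, Sequence, Tuple

# A base predictor maps a window of lagged values to a one-step-ahead forecast.
Predictor = Callable[[Sequence[float]], float]


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def output_profile(predictors: List[Predictor], x: Sequence[float]) -> List[float]:
    """Vector of every base predictor's output for the window x."""
    return [p(x) for p in predictors]


def local_region(x, X_val, predictors, k, use_output_profile=False) -> List[int]:
    """Indices of the k validation windows most similar to the test window x.

    Similarity is measured on the raw lag vectors by default, or on the
    predictors' output profiles when use_output_profile is True (assumption).
    """
    if use_output_profile:
        query = output_profile(predictors, x)
        keys = [output_profile(predictors, xv) for xv in X_val]
    else:
        query, keys = x, X_val
    order = sorted(range(len(X_val)), key=lambda i: euclidean(query, keys[i]))
    return order[:k]


def des_local_accuracy(x, X_val, y_val, predictors, k=5, m=2,
                       use_output_profile=False) -> Tuple[float, List[int]]:
    """Average the m predictors with the lowest MAE over the local region."""
    region = local_region(x, X_val, predictors, k, use_output_profile)
    local_mae = [
        sum(abs(p(X_val[i]) - y_val[i]) for i in region) / len(region)
        for p in predictors
    ]
    chosen = sorted(range(len(predictors)), key=lambda j: local_mae[j])[:m]
    return sum(predictors[j](x) for j in chosen) / len(chosen), chosen


def des_consensus(x, predictors, m=2) -> Tuple[float, List[int]]:
    """Average the m predictors whose outputs lie closest to the ensemble median."""
    preds = output_profile(predictors, x)
    center = statistics.median(preds)
    chosen = sorted(range(len(preds)), key=lambda j: abs(preds[j] - center))[:m]
    return sum(preds[j] for j in chosen) / len(chosen), chosen


# Toy example: a linear trend, windows of 3 lags, three hypothetical base predictors.
series = [float(t) for t in range(20)]
X_val = [series[i:i + 3] for i in range(len(series) - 3)]
y_val = [series[i + 3] for i in range(len(series) - 3)]
predictors: List[Predictor] = [
    lambda w: w[-1],                 # naive: repeat the last value
    lambda w: sum(w) / len(w),       # window mean
    lambda w: 2 * w[-1] - w[-2],     # linear extrapolation
]
x_test = [20.0, 21.0, 22.0]

print(des_local_accuracy(x_test, X_val, y_val, predictors, k=5, m=1))  # (23.0, [2])
print(des_consensus(x_test, predictors, m=1))                          # (22.0, [0])
```

On this linear-trend toy series, the linear-extrapolation predictor has zero local error, so local-accuracy selection with m=1 returns 23.0, whereas the median-based consensus picks the naive last-value predictor and returns 22.0. The contrast shows why the choice of competence criterion matters.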

References

Hamilton JD (1994) Time series analysis. Princeton University Press, Princeton

Brockwell PJ, Davis RA (2009) Introduction to time series and forecasting. Springer, Berlin

Pulido ME, Melin P (2012) Optimization of type-2 fuzzy integration in ensemble neural networks for predicting the Dow Jones time series. In: Fuzzy information processing society, pp 1–6

Palivonaite R, Ragulskis M (2016) Short-term time series algebraic forecasting with internal smoothing. Neurocomputing 171:854–865

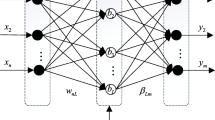

Ma Z, Dai Q (2016) Selected an stacking ELMs for time series prediction. Neural Process Lett 44:1–26

Balkin SD, Ord JK (2000) Automatic neural network modeling for univariate time series. Int J Forecast 16:509–515

Giordano F, La Rocca M, Perna C (2007) Forecasting nonlinear time series with neural network sieve bootstrap. Comput Stat Data Anal 51:3871–3884

Jain A, Kumar AM (2007) Hybrid neural network models for hydrologic time series forecasting. Appl Soft Comput 7:585–592

Lapedes AS, Farber RF (1987) Nonlinear signal processing using neural networks: prediction and system modeling. In: 1. IEEE international conference on neural networks

Chakraborty K, Mehrotra K, Mohan CK, Ranka S (1992) Original contribution: forecasting the behavior of multivariate time series using neural networks. Neural Netw 5:961–970

Chatfield C, Weigend AS (1994) Time series prediction: forecasting the future and understanding the past: Neil A. Gershenfeld and Andreas S. Weigend, 1994, ‘The future of time series’, in: A.S. Weigend and N.A. Gershenfeld, eds., (Addison-Wesley, Reading, MA), 1–70. Int J Forecast 10:161–163

Adhikari R (2015) A neural network based linear ensemble framework for time series forecasting. Neurocomputing 157:231–242

Pelikan E, Groot CD, Wurtz D (1992) Power consumption in West-Bohemia: improved forecasts with decorrelating connectionist networks. Neural Netw World 2:701–712

Britto AS, Sabourin R, Oliveira LES (2014) Dynamic selection of classifiers—a comprehensive review. Pattern Recognit 47:3665–3680

Kittler J, Hatef M, Duin RPW, Matas J (1998) On combining classifiers. IEEE Trans Pattern Anal Mach Intell 20:226–239

Adhikari R, Verma G, Khandelwal I (2014) A model ranking based selective ensemble approach for time series forecasting. In: International conference on intelligent computing, communication and convergence, pp 14–21

Cruz RMO, Sabourin R, Cavalcanti GDC, Ren TI (2015) META-DES: a dynamic ensemble selection framework using meta-learning. Pattern Recognit 48:1925–1935

Gheyas IA, Smith LS (2011) A novel neural network ensemble architecture for time series forecasting. Neurocomputing 74:3855–3864

Kourentzes N, Barrow DK, Crone SF (2014) Neural network ensemble operators for time series forecasting. Expert Syst Appl Int J 41:4235–4244

Donate JP, Cortez P, Sánchez GG, Miguel ASD (2013) Time series forecasting using a weighted cross-validation evolutionary artificial neural network ensemble. Neurocomputing 109:27–32

Krikunov AV, Kovalchuk SV (2015) Dynamic selection of ensemble members in multi-model hydrometeorological ensemble forecasting. Procedia Comput Sci 66:220–227

Adhikari R, Verma G (2016) Time series forecasting through a dynamic weighted ensemble approach. Springer, New Delhi

Kolter JZ, Maloof MA (2007) Dynamic weighted majority: an ensemble method for drifting concepts. J Mach Learn Res 8:2755–2790

Woods K, Kegelmeyer WP, Bowyer K (1997) Combination of multiple classifiers using local accuracy estimates. IEEE Trans Pattern Anal Mach Intell 19:405–410

Smits PC (2002) Multiple classifier systems for supervised remote sensing image classification based on dynamic classifier selection. IEEE Trans Geosci Remote Sens 40(4):801–813

Kuncheva LI (2000) Clustering-and-selection model for classifier combination. In: International conference on knowledge-based intelligent engineering systems and allied technologies. Proceedings, vol 1, pp 185–188

Kuncheva LI, Bezdek JC, Duin RPW (2001) Decision templates for multiple classifier fusion: an experimental comparison. Pattern Recognit 34:299–314

Zhou ZH, Wu JX, Jiang Y, Chen SF (2001) Genetic algorithm based selective neural network ensemble. In: International joint conference on artificial intelligence, pp 797–802

Santos EMD, Sabourin R, Maupin P (2008) A dynamic overproduce-and-choose strategy for the selection of classifier ensembles. Pattern Recognit 41:2993–3009

Huang GB, Zhu QY, Siew CK (2006) Extreme learning machine: theory and applications. Neurocomputing 70:489–501

Zhao G, Shen Z, Miao C, Gay R (2008) Enhanced Extreme learning machine with stacked generalization. In: International joint conference on neural networks, pp 1191–1198

Huang GB (2003) Learning capability and storage capacity of two-hidden-layer feedforward networks. IEEE Trans Neural Netw 14:274–281

Huang GB, Zhu QY, Siew CK (2004) Extreme learning machine: a new learning scheme of feedforward neural networks. In: IEEE international joint conference on neural networks, vol 2, pp 985–990

Zhou Z-H, Wu J, Tang W (2002) Ensembling neural networks: many could be better than all. Artif Intell 137:239–263

Ko AHR, Sabourin R, Britto AS (2008) From dynamic classifier selection to dynamic ensemble selection. Pattern Recognit 41:1718–1731

Kuncheva LI (2002) Switching between selection and fusion in combining classifiers: an experiment. IEEE Trans Syst Man Cybern B Cybern 32:146–156

Hartigan JA, Wong MA (1979) Algorithm AS 136: a k-means clustering algorithm. Appl Stat 28:100–108

Wang S, Qi L, Yu P, Peng X (2011) CLS-SVM: a local modeling method for time series forecasting. Chin J Sci Instrum 32:1824–1829

Paterlini S, Minerva T (2003) Evolutionary approaches for cluster analysis. Soft Computing Applications. Physica-Verlag, HD

Bezdek JC, Pal NR (1998) Some new indexes of cluster validity. IEEE Trans Syst Man Cybern B Cybern 28:301–315

Dos Santos EM, Sabourin R, Maupin P (2006) Single and multi-objective genetic algorithms for the selection of ensemble of classifiers. In: International joint conference on neural networks, IJCNN, pp 3070–3077

Partalas I, Tsoumakas G, Vlahavas I (2008) Focused ensemble selection: a diversity-based method for greedy ensemble selection. In: European Conference on Artificial Intelligence (ECAI). IOS Press

Goldberg DE (1989) Genetic algorithms in search, optimization, and machine learning. Addison-Wesley Pub. Co, Boston

Hyndman R (ed) Time Series Data Library. https://datamarket.com/data/list/

Root-mean-square deviation. https://en.wikipedia.org/wiki/Root-mean-square_deviation

Mean absolute error. https://en.wikipedia.org/wiki/Mean_absolute_error

Acknowledgements

This work is supported by the National Natural Science Foundation of China under Grant No. 61473150.

About this article

Cite this article

Yao, C., Dai, Q. & Song, G. Several Novel Dynamic Ensemble Selection Algorithms for Time Series Prediction. Neural Process Lett 50, 1789–1829 (2019). https://doi.org/10.1007/s11063-018-9957-7

DOI: https://doi.org/10.1007/s11063-018-9957-7