Abstract

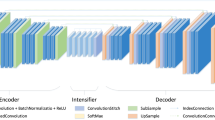

Automatic extraction of open-pit mine (OM) areas from very high-resolution (VHR) remote sensing images is important for mineral resource management and monitoring. However, satisfactory results on this task remain scarce because OM environments are highly heterogeneous. Based on DeepLabv3+, this study proposes a semantic segmentation network, named OM-DeepLab, for extracting OMs from VHR images. First, Xception is employed as the backbone network to extract deep features from the input image patch. Second, Atrous Spatial Pyramid Pooling (ASPP) is attached to capture semantic features at multiple scales, and an attention mechanism is embedded after the ASPP to strengthen the learning of important information. Together, these components form the encoder. Third, to retain detailed information during feature sampling, a low-level feature multi-scale fusion (LFMF) module is proposed to form the decoder, which connects shallow features with the high-level features derived from the encoder. For OM extraction from large-scale VHR images, a novel model prediction method is presented. The proposed network is then used to obtain the initial OM extraction. Finally, post-processing that includes an object-based Conditional Random Field (CRF) is performed to refine the OM extraction results. To validate the proposed method, an OM dataset containing 136 OM areas from a government department of mine supervision was used in the experiments, and a pixel-level \(F1{\text{-}}score\) of 0.912 was achieved. In addition, to evaluate the effectiveness of the algorithm in practical applications, a large-scale VHR image covering 736 km² was used, yielding a pixel-level \(F1{\text{-}}score\), object-level \(FalseAlarm\), and object-level \(MissingAlarm\) of 0.854, 0.132, and 0.042, respectively. This study is of practical value for automatically extracting OMs from VHR images and supports OM management and monitoring.
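The abstract reports a pixel-level F1-score and object-level FalseAlarm and MissingAlarm rates but does not define them. The sketch below shows one common way to compute such metrics from binary masks (1 = open-pit mine, 0 = background). It is not the authors' evaluation code: it assumes that objects are 4-connected components, that a predicted or reference object counts as "matched" if it shares at least one pixel with an object in the other mask (the paper may use a different rule, such as an overlap threshold), and that FalseAlarm and MissingAlarm are the unmatched fractions of predicted and reference objects, respectively. The function names `pixel_f1`, `label_objects`, and `object_alarms` are hypothetical.

```python
import numpy as np
from collections import deque


def pixel_f1(pred, ref):
    """Pixel-level F1 = 2TP / (2TP + FP + FN) for binary masks.

    Assumption: two empty masks are treated as a perfect match (F1 = 1.0).
    """
    pred = pred.astype(bool)
    ref = ref.astype(bool)
    tp = np.count_nonzero(pred & ref)
    fp = np.count_nonzero(pred & ~ref)
    fn = np.count_nonzero(~pred & ref)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 1.0


def label_objects(mask):
    """Label 4-connected components with breadth-first search.

    Returns (labels, count); labels uses 0 for background and 1..count for objects.
    """
    mask = mask.astype(bool)
    labels = np.zeros(mask.shape, dtype=np.int32)
    rows, cols = mask.shape
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r, c] and labels[r, c] == 0:
                count += 1
                labels[r, c] = count
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny, nx] and labels[ny, nx] == 0):
                            labels[ny, nx] = count
                            queue.append((ny, nx))
    return labels, count


def object_alarms(pred, ref):
    """Return (FalseAlarm, MissingAlarm) under the any-overlap matching assumption."""
    pred_labels, n_pred = label_objects(pred)
    ref_labels, n_ref = label_objects(ref)
    pred_any = pred_labels > 0
    ref_any = ref_labels > 0
    # Predicted objects that touch no reference pixel are false alarms.
    false_objs = sum(1 for k in range(1, n_pred + 1)
                     if not np.any(ref_any[pred_labels == k]))
    # Reference objects that touch no predicted pixel are missed.
    missed_objs = sum(1 for k in range(1, n_ref + 1)
                      if not np.any(pred_any[ref_labels == k]))
    false_alarm = false_objs / n_pred if n_pred else 0.0
    missing_alarm = missed_objs / n_ref if n_ref else 0.0
    return false_alarm, missing_alarm


# Toy example: two reference mines (A, B); the prediction finds A, misses B,
# and adds two spurious objects.
ref = np.zeros((6, 6), dtype=np.uint8)
ref[0:2, 0:2] = 1   # reference mine A (4 px)
ref[4:6, 4:6] = 1   # reference mine B (4 px)

pred = np.zeros_like(ref)
pred[0:2, 0:3] = 1  # covers A plus 2 extra pixels
pred[4, 0:2] = 1    # spurious object 1
pred[2, 5] = 1      # spurious object 2

f1 = pixel_f1(pred, ref)
fa, ma = object_alarms(pred, ref)
print(round(f1, 3), round(fa, 3), round(ma, 3))  # → 0.471 0.667 0.5
```

In the toy example, TP = 4, FP = 5 and FN = 4, so F1 = 8/17; two of the three predicted objects are unmatched and one of the two reference objects is missed. For large images, `scipy.ndimage.label` performs the same 4-connected labelling far faster than the pure-Python loop used here for self-containment.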

Funding

This study was funded by the China Postdoctoral Science Foundation (No. 2021M703511), the National Natural Science Foundation of China (No. 42271480), the Fundamental Research Funds for the Central Universities (Nos. 2022XJDC02, 2022JCCXDC04) and the Yueqi Young Scholars Program of China University of Mining and Technology at Beijing (No. 800015Z1189).

Ethics declarations

Conflict of Interest

No potential conflict of interest was reported by the authors.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Du, S., Xing, J., Li, J. et al. Open-Pit Mine Extraction from Very High-Resolution Remote Sensing Images Using OM-DeepLab. Nat Resour Res 31, 3173–3194 (2022). https://doi.org/10.1007/s11053-022-10114-y

DOI: https://doi.org/10.1007/s11053-022-10114-y