Abstract

We argue that some properties of sign language grammar have counterparts in non-signers’ intuitions about gestures, including ones that are probably very uncommon. Thus despite the intrinsic limitations of gestures compared to full-fledged sign languages, they might access some of the same rules. While gesture research often focuses on co-speech gestures, we investigate pro-speech gestures, which fully replace spoken words and thus often make an at-issue semantic contribution, like signs. We argue that gestural loci can emulate several properties of sign language loci (= positions in signing space that realize discourse referents): there can be an arbitrary number of them, with a distinction between speaker-, addressee- and third person-denoting loci. They may be free or bound, and they may be used to realize ‘donkey’ anaphora. Some gestural verbs include loci in their realization, and for this reason they resemble some ‘agreement verbs’ found in sign language (Schlenker and Chemla 2018). As in sign language, gestural loci can have rich iconic uses, with high loci used for tall individuals. Turning to plurality, we argue that repetition-based gestural nouns replicate several properties of repetition-based plurals in ASL (Schlenker and Lamberton 2019): unpunctuated repetitions provide vague information about quantities, punctuated repetitions are often semantically precise, and rich iconic information can be provided in both cases depending on the arrangement of the repetitions, an observation that extends to some mass terms. We further suggest that gestural verbs can give rise to repetition-based pluractional readings, like their sign language counterparts (Kuhn 2015a, 2015b; Kuhn and Aristodemo 2017). Following Strickland et al. (2015), we also argue that a distinction between telic and atelic sign language verbs, involving the existence of sharp boundaries, can be replicated with gestural verbs. 
Finally, turning to attitude and action reports, we suggest (following in part Lillo-Martin 2012) that Role Shift, which serves to adopt another agent’s perspective in sign language, has gestural counterparts. (An Appendix discusses possible gestural counterparts of ‘Locative Shift,’ a sign language operation in which one may co-opt a location-denoting locus to refer to an individual found at that location.)

Notes

See Abner et al. (2015a) for an introduction to gestures from a linguistic perspective.

We write ‘often lead to ungrammaticality’ rather than ‘always lead to ungrammaticality’ because a pro-speech gesture may replace a modifier; since modifiers can usually be omitted, the same would hold of the corresponding pro-speech gesture.

Following Schlenker (to appear a), we treat as iconic those sign language rules that come with a requirement that the denotations of an expression E preserve some properties of the form of E. For instance, the verb GROW can be realized more or less quickly to indicate that the growth process was more or less quick, hence an iconic component, to the effect, roughly, that the faster the realization of the sign, the faster the growth process. (Note that if a lexical sign happens to resemble its denotation, but no interpretive rule makes reference to the preservation of some geometric properties, it does not count as ‘iconic’ in this sense, although it may result from ‘fossilized’ iconicity, so to speak.)

To facilitate cross-reference, video numbers are provided in each gestural example in the text as well as in the Supplementary Materials.

Schlenker (2018b) seeks to derive parts of the typology from pragmatic considerations; we need not be concerned with this derivation here.

One should not infer that pro- and post-speech gestures are necessarily accompanied by onomatopoeia. This is not the case in (i), which involves a silent gesture: the speaker’s eyes are gradually closed as his head is tilted forward and downward, thus representing a person falling asleep.

(i) In two minutes, our Chair will [silent gesture: falling asleep]. (Schlenker 2018b)

Some related French data were discussed with French-speaking colleagues but are not reported here.

The model is a second language learner of ASL and LSF (French Sign Language). He did not provide judgments on the videos he recorded, as these might have been influenced by his knowledge of sign language.

One of them, Consultant 2, had one week of instruction in French Sign Language (LSF).

With apologies to the readers, some of our examples refer to objectionable situations.

A fourth possibility, briefly mentioned by Schlenker and Chemla (2018), is that certain inconspicuous aspects of gestures and possibly signs, having to do with orientation, can be disregarded under ellipsis. This theoretical direction has not been fully explored yet.

Kendon (2004) writes in particular: “Gestures understood as pointing are commonly done with the hands, but they may also be done with the head, by certain movements of the eyes, by protruding the lips…, by a movement of the elbow, in some circumstances even with the foot…” (For an experimental study of the informational contribution of co-speech pointing relative to its linguistic context, see for instance So et al. 2009, 2013.)

An anonymous reviewer suggests that “using a location other than the actual current location of the addressee for indexing could be possible when a different guise is relevant.” We do not have data on this point, but note that this result would be expected on the basis of some spoken language data, as noted in Schlenker (2003), where a non-standard guise for a speaker-denoting pronoun licenses the use of third person features:

“Consider a situation in which the identity of a person seen in a mirror is in doubt. I could very well utter the following: He looks like me … Mmh, he must be me, in fact he is me!”

Example (3) in the Supplementary Materials (Video 3861) seeks to assess what happens when an arbitrary locus is established to refer to a non-speech-act participant present in the context. Specifically, a head movement (combined with eyegaze) indicates that the speaker intends the expression this guy to refer to someone on his left, but simultaneously, a mismatched open hand on the right co-occurs with this guy to indicate locus assignment. Thus the sentence tests whether a locus on the right can refer to a salient person on the left. The result is deviant, but more work is needed to determine whether this is because of the particular realization we chose, or because of a sign language-like prohibition against using arbitrary loci to refer to entities that are present in the context.

In this connection, an anonymous reviewer finds “that pointing to a location when referring to the addressee seems possible in the case <in which> the addressee is virtually located in different places,” as in (i). This should be tested, and could indirectly bear on the availability of Locative Shift for second person pronouns, as discussed in Appendix II.

(i) I have the choice to visit [you in France], [you in England], or [John in Croatia]. You know who I am going to visit? [pointing gesture].

This remark was explicitly made by a consultant with respect to a related video [Video 3867].

Amir Anvari (p.c.) has been independently working on the issue of Condition B violations with pro-speech pointing.

But was used in a. and b., and was used in c.

Thanks to an anonymous reviewer for correcting an earlier version of this discussion.

When testing these examples, it could be important to take into account the possibility of ‘neutral’ uses of gestures, in a central position or towards the speaker’s dominant side. For a right-handed speaker, if [one plane] appears on the right, using [gesture] to describe that same plane should be quite odd. But moving instead from the non-dominant to the dominant side might conceivably be more acceptable due to a neutral, non-located use of the gesture, produced close to the speaker’s dominant side.

Note that our main clause has two conjuncts because a consultant noted that a main clause with only [gesture] he blesses [gesture] introduces what seems to be an unjustified asymmetry between the two bishop-denoted loci; the second conjunct was intended to restore the symmetry.

Although there is, in our judgment, no doubt at all that the sentence entails that the second bishop blesses the first bishop, we do not report the inference because our survey contained an infelicity in the statement of the relevant question: “Who does the other bishop bless? (i) himself (ii) the other bishop.” Without context, ‘the other bishop’ is ambiguous in this case; Consultant 3 commented on this and stated that they understood the expression to refer to the first bishop (this is the only possibility that makes pragmatic sense given that option (i) is: himself).

Special thanks to Salvador Mascarenhas (p.c.) for discussion of these and related points.

In addition, a reflexive pronoun would be needed to express the ‘self-blessing’ reading (because of Condition B of Binding Theory).

We leave for future research an investigation of singular donkey pronouns with split antecedents, also discussed in Schlenker (2011b).

Consultant 3 commented on the realization of the fingers, writing: ‘It’s a little strange with the fingers together’ (see the Supplementary Materials). Note that it’s not the position of the fingers but their shape that the consultant objected to. There might be a sign language influence in the finger shape that we adopted, and this should be corrected in the future (the fact that Consultant 3 is a (non-signing) linguist with prior knowledge of some sign language research seems to have helped them disregard this gestural infelicity).

A paradigm somewhat similar to (47), but with hit [gesture] replacing [gesture], was explored in (22) in the Supplementary Materials (Videos 3979 and 3989). We obtained the same comparative results as in (47)a,b,c: (a) high pointing for an individual situated high was acceptable; (b) low pointing was degraded; but (c) the latter effect was obviated under ellipsis. However, all scores were lower, with (a) = 4, (b) = 1.3, (c) = 3.7. Consultant 1 commented about (a) that “it’s not clear why the pointing gestures were necessary at all.” It is hard to draw conclusions at this point, and more work is needed on this issue.

Thanks to an anonymous reviewer for urging that this point be clarified.

This section is a summary of some aspects of Schlenker and Lamberton (2019).

This condition does not apply when a numeral co-occurs with a punctuated repetition. Number is then given by the numeral, while the unpunctuated nature of the repetition indicates that the objects were spread out.

Coppola et al. (2013) describe punctuated repetitions in homesigners as “series of discrete movements, each referring to an entity or action in the vignette. Each movement was clearly articulated and easily segmentable from the rest of the movements.” By contrast, unpunctuated repetitions “were movements produced in rapid succession with no clear break between them. Although the pauses between these iterations were much smaller than those separating the components of Punctuated Movements, they were identifiable and could be easily counted. These movements could be produced in a single space, but more often were produced in multiple spatial locations.”

Schlenker and Lamberton (2019) checked in their last judgment task that these sentences do not trigger any inference to the effect that if there are trophies, they should be arranged in a particular way. This was to ascertain that there is no ‘projection’ outside of the conditional of the inference pertaining to the arrangement of the relevant objects. This test matters because if the iconic conditions behaved like co-speech gestures as analyzed in Schlenker (2015, 2018a), one would expect an inference to the effect that if there are trophies, they are arranged in a linear/triangular fashion.

We note for completeness that Schlenker (to appear b) argues that repetition-based gestural plurals share crucial semantic properties with standard English plurals when combined with anaphora. Specifically, they give rise to (vague) universal readings in positive environments, as in: Ann found her presents (implying that she found all or almost all). But in negative environments, they give rise to existential readings: Ann didn’t find her presents doesn’t mean that she didn’t find all, but rather that she didn’t find any. These results in the gestural domain are confirmed experimentally in Tieu et al. (2019).

The iterations are hard to count, and the first onomatopoeia pf was produced with a slight pause, which probably explains the inference drawn by one of the consultants (= ‘once, followed by several quick slaps’).

It is worth noting that Kuhn and Aristodemo discuss two types of repetitions in LSF. Besides the one-handed repetition -rep, discussed here, they analyze a two-handed alternating repetition -alt, which comes with further constraints: the plural events that satisfy the condition must have subevents with different thematic arguments. It would be interesting to investigate in future research whether the distinction between -rep and -alt has counterparts in pro-speech gestures.

Schlenker (to appear a) proposes instead that Wilbur’s finding should be recast within a theory of iconic meaning. The point is that sign language telic verbs can be modulated in such a way that the entire development of the sign, rather than just its endpoints, provides information about the precise development of the denoted action.

One question we attempted to test in our survey but do not report on here (because the results are too inconsistent and complex) pertains to the interaction of eyebrow raising (as a potential focus-marking device) and pro-speech gestures. See the Supplementary Materials for the data we obtained, and Dohen (2005) and Dohen and Loevenbruck (2009) for the use of eyebrow raising as a co-speech facial expression marking focus.

This point is worth emphasizing, for while it is obvious to competent linguists that sign languages are full-fledged—and extremely interesting—languages, and that they have a crucial role to play in the development of deaf children (e.g. Mellon et al. 2015), there are still attempts in some countries to assimilate them to mere gestural codes.

We make no claim about the preferential temporal order in which loci are introduced; alphabetization just refers to the geometric position of loci with respect to the dominant vs. non-dominant side, not to their temporal order of appearance.

See Schlenker (2018d) for earlier speculations on the existence of Locative Shift in gestures.

The model interchangeably used ‘work with’ and ‘get along with’ in different sentences; similarly for ‘I’ll’ vs. ‘I am going to.’

The model interchangeably used ‘if later I need’ and ‘if I later need’ depending on the sentence.

References

Abner, Natasha, Kensy Cooperrider, and Susan Goldin-Meadow. 2015a. Gesture for linguists: A handy primer. Language and Linguistics Compass 9(11): 437–449.

Abner, Natasha, Savithry Namboodiripad, Elisabet Spaepen, and Susan Goldin-Meadow. 2015b. Morphology in child homesign: Evidence from number marking. Slides from a talk given at the 2015 Annual Meeting of the Linguistic Society of America (LSA), Portland.

Beaver, David. 2001. Presupposition and assertion in dynamic semantics. Stanford: CSLI Publications.

Brasoveanu, Adrian. 2006. Structured nominal and modal reference. PhD diss., Rutgers, The State University of New Jersey.

Brasoveanu, Adrian. 2010. Decomposing modal quantification. Journal of Semantics 27(4): 437–527.

Clark, Herbert. 1996. Using language. Cambridge: Cambridge University Press.

Clark, Herbert. 2016. Depicting as a method of communication. Psychological Review 123(3): 324–347.

Coppola, Marie, Elisabet Spaepen, and Susan Goldin-Meadow. 2013. Communicating about quantity without a language model: Number devices in homesign grammar. Cognitive Psychology 67: 1–25.

Cormier, Kearsy, Adam Schembri, and Bencie Woll. 2013. Pronouns and pointing in sign languages. Lingua 137: 230–247.

Davidson, Kathryn. 2015. Quotation, demonstration, and iconicity. Linguistics & Philosophy 38(6): 477–520.

Dohen, Marion. 2005. Deixis prosodique multisensorielle: Production et perception audiovisuelle de la Focalisation contrastive en français. PhD diss., Institut National Polytechnique de Grenoble.

Dohen, Marion, and Hélène Loevenbruck. 2009. Interaction of audition and vision for the perception of prosodic contrastive focus. Language & Speech 52(2–3): 177–206.

Ebert, Cornelia, and Christian Ebert. 2014. Gestures, demonstratives, and the attributive/referential distinction. Handout of a talk given at Semantics and Philosophy in Europe (SPE) 7. Berlin, June 28, 2014.

Ebert, Cornelia. 2018. A comparison of sign language with speech plus gesture. Theoretical Linguistics 44(3–4): 239–249.

Elbourne, Paul. 2005. Situations and individuals. Cambridge: MIT Press.

Emmorey, Karen. 2002. Language, cognition, and the brain: Insights from sign language research. Mahwah: Erlbaum.

Emmorey, Karen, and Brenda Falgier. 2004. Conceptual locations and pronominal reference in American Sign Language. Journal of Psycholinguistic Research 33(4): 321–331.

Evans, Gareth. 1977. Pronouns, quantifiers and relative clauses. Canadian Journal of Philosophy 7(3): 467–536.

Feldstein, Emily. 2015. The development of grammatical number and space: Reconsidering evidence from child language and homesign through adult gesture. Ms., Harvard University.

Fricke, Ellen. 2008. Grundlagen einer multimodalen Grammatik des Deutschen: Syntaktische Strukturen und Funktionen. Habilitation treatise. Frankfurt (Oder): European University Viadrina.

Geach, Peter. 1962. Reference and generality. Ithaca: Cornell University Press.

Giorgolo, Gianluca. 2010. Space and time in our hands. PhD diss., Uil-OTS, Universiteit Utrecht.

Goldin-Meadow, Susan. 2003. The resilience of language. New York: Taylor & Francis.

Goldin-Meadow, Susan, Wing Chee So, Asli Özyürek, and Carolyn Mylander. 2008. The natural order of events: How speakers of different languages represent events nonverbally. Proceedings of the National Academy of Sciences 105(27): 9163–9168.

Goldin-Meadow, Susan, and Diane Brentari. 2017. Gesture, sign and language: The coming of age of sign language and gesture studies. Behavioral and Brain Sciences 40, e46. https://doi.org/10.1017/S0140525X15001247.

Heim, Irene. 1982. The semantics of definite and indefinite noun phrases. PhD diss., University of Massachusetts, Amherst.

Heim, Irene. 1990. E-type pronouns and donkey anaphora. Linguistics and Philosophy 13: 137–177.

Jouitteau, Mélanie. 2004. Gestures as expletives, multichannel syntax. In West Coast Conference on Formal Linguistics (WCCFL) 23, eds. Vineeta Chand, Ann Kelleher, Angelo J. Rodríguez, and Benjamin Schmeiser, 422–435. Somerville: Cascadilla.

Kamp, Hans. 1981. A theory of truth and semantic representation. In Formal methods in the study of language, eds. Jeroen Groenendijk, Theo Janssen, and Martin Stokhof, 277–322. Amsterdam: University of Amsterdam.

Kamp, Hans, and Uwe Reyle. 1993. From discourse to logic. Dordrecht: Kluwer.

Kegl, Judy. 2004. ASL Syntax: Research in progress and proposed research. Sign Language & Linguistics 7(2). Reprint of an MIT manuscript written in 1977.

Kendon, Adam. 2004. Gesture: Visible action as utterance. New York: Cambridge University Press.

Koulidobrova, Elena. 2011. SELF: Intensifier and ‘long distance’ effects in American Sign Language (ASL). Ms., University of Connecticut.

Koulidobrova, Elena. 2018. Counting nouns in ASL. Ms., Central Connecticut State University. http://ling.auf.net/lingbuzz/003871.

Kuhn, Jeremy. 2015a. ASL loci: Variables or features? Journal of Semantics 33(3): 449–491. https://doi.org/10.1093/jos/ffv005.

Kuhn, Jeremy. 2015b. Cross-categorial singular and plural reference in sign language. PhD diss., New York University.

Kuhn, Jeremy, and Valentina Aristodemo. 2017. Pluractionality, iconicity, and scope in French Sign Language. Semantics & Pragmatics 10: Article 6. https://doi.org/10.3765/sp.10.6.

Ladewig, Silva. 2011. Syntactic and semantic integration of gestures into speech: Structural, cognitive, and conceptual aspects. PhD diss., European University Viadrina, Frankfurt (Oder).

Lascarides, Alex, and Matthew Stone. 2009. A formal semantic analysis of gesture. Journal of Semantics 26(3): 393–449.

Liddell, Scott. 2003. Grammar, gesture, and meaning in American Sign Language. Cambridge: Cambridge University Press.

Lillo-Martin, Diane. 2012. Utterance reports and constructed action in sign and spoken languages. In Sign language: An international handbook, eds. Roland Pfau, Markus Steinbach, and Bencie Woll, 365–387. Amsterdam: Mouton de Gruyter.

Lillo-Martin, Diane, and Edward S. Klima. 1990. Pointing out differences: ASL pronouns in syntactic theory. In Theoretical issues in Sign Language research, Volume 1: Linguistics, eds. Susan Fischer and Patricia Siple, 191–210. Chicago: The University of Chicago Press.

Lillo-Martin, Diane, and Richard Meier. 2011. On the linguistic status of ‘agreement’ in sign languages. Theoretical Linguistics 37(3–4): 95–141.

Malaia, Evie, and Ronnie B. Wilbur. 2012. Kinematic signatures of telic and atelic events in ASL predicates. Language and Speech 55: 407–421.

McNeill, David. 2005. Gesture and thought. Chicago: University of Chicago Press.

Mellon, Nancy, John Niparko, Christian Rathmann, Gaurav Mathur, Tom Humphries, Donna Jo Napoli, Theresa Handley, Sasha Scambler, and John Lantos. 2015. Should all deaf children learn sign language? Pediatrics 136(1): 170–176.

Nouwen, Rick. 2003. Plural pronominal anaphora in context. Number 84 in Netherlands Graduate School of Linguistics Dissertations. LOT, Utrecht.

Ortega, Gerardo, Annika Schiefner, and Asli Özyürek. 2017. Speakers’ gestures predict the meaning and perception of iconicity in signs. In 39th Annual Conference of the Cognitive Science Society (CogSci) 2017, 889–894.

Ortega-Santos, Ivan. 2016. A formal analysis of lip-pointing in Latin-American Spanish. Isogloss 2: 113–128.

Padden, Carol. 1988. Grammatical theory and signed languages. In Linguistics: The Cambridge survey, ed. Frederick J. Newmeyer. Vol. 2, 250–266.

Perniss, Pamela, and Asli Özyürek. 2015. Visible cohesion: A comparison of reference tracking in sign, speech, and co-speech gesture. Topics in Cognitive Science 7: 36–60.

Perniss, Pamela, Asli Özyürek, and Gary Morgan. 2015. The influence of the visual modality on language structure and conventionalization: Insights from sign language and gesture. Topics in Cognitive Science 7: 2–11.

Pfau, Roland, and Markus Steinbach. 2006. Pluralization in sign and in speech: A cross-modal typological study. Linguistic Typology 10: 49–135.

Pfau, Roland, Martin Salzmann, and Markus Steinbach. 2018. The syntax of sign language agreement: Common ingredients, but unusual recipe. Glossa 3(1): 107. https://doi.org/10.5334/gjgl.511.

Quer, Josep. 2005. Context shift and indexical variables in sign languages. In Semantic and Linguistic Theory (SALT) 15. Ithaca: CLC Publications.

Quer, Josep. 2013. Attitude ascriptions in sign languages and role shift. In 13th Meeting of the Texas Linguistics Society, ed. Leah C. Geer, 12–28. Austin: Texas Linguistics Forum.

Quinto-Pozos, David, and Fey Parrill. 2015. Signers and co-speech gesturers adopt similar strategies for portraying viewpoint in narratives. Topics in Cognitive Science 7: 12–35.

Rothstein, Susan. 2004. Structuring events: A study in the semantics of lexical aspect. Oxford: Blackwell.

Sandler, Wendy, and Diane Lillo-Martin. 2006. Sign language and linguistic universals. Cambridge: Cambridge University Press.

Schembri, Adam, Kearsy Cormier, and Jordan Fenlon. 2018. Indicating verbs as typologically unique constructions: Reconsidering verb ‘agreement’ in sign languages. Glossa 3(1): 89. https://doi.org/10.5334/gjgl.468.

Schlenker, Philippe. 2003. A plea for monsters. Linguistics & Philosophy 26: 29–120.

Schlenker, Philippe. 2008. Be articulate: A pragmatic theory of presupposition projection. Target article. Theoretical Linguistics 34(3): 157–212.

Schlenker, Philippe. 2009. Local contexts. Semantics & Pragmatics 2(3): 1–78. https://doi.org/10.3765/sp.2.3.

Schlenker, Philippe. 2011a. Indexicality and De Se reports. In Semantics: An international handbook of natural language meaning, eds. Claudia Maienborn, Klaus von Heusinger, and Paul Portner. Vol. 2, Article 61, 1561–1604. Berlin: Mouton de Gruyter.

Schlenker, Philippe. 2011b. Donkey anaphora: The view from sign language (ASL and LSF). Linguistics and Philosophy 34(4): 341–395.

Schlenker, Philippe. 2011. Iconic agreement. Theoretical Linguistics 37(3–4): 223–234.

Schlenker, Philippe. 2013. Temporal and modal anaphora in sign language (ASL). Natural Language and Linguistic Theory 31(1): 207–234.

Schlenker, Philippe. 2014. Iconic features. Natural Language Semantics 22(4): 299–356.

Schlenker, Philippe. 2015. Gestural presuppositions (squib). Snippets (Issue 30). https://doi.org/10.7358/snip-2015-030-schl.

Schlenker, Philippe. 2016a. Featural variables. Natural Language and Linguistic Theory 34(3): 1067–1088. https://doi.org/10.1007/s11049-015-9323-7.

Schlenker, Philippe. 2016b. Logical visibility and iconicity in sign language semantics: Theoretical perspectives. Ms., Institut Jean-Nicod and New York University.

Schlenker, Philippe. 2017a. Sign language and the foundations of anaphora. Annual Review of Linguistics 3: 149–177.

Schlenker, Philippe. 2017b. Super monsters I: Attitude and action role shift in sign language. Semantics & Pragmatics 10.

Schlenker, Philippe. 2017c. Super monsters II: Role shift, iconicity and quotation in sign language. Semantics & Pragmatics 10.

Schlenker, Philippe. 2018a. Gesture projection and cosuppositions. Linguistics & Philosophy 41(3): 295–365.

Schlenker, Philippe. 2018b. Iconic pragmatics. Natural Language & Linguistic Theory 36(3): 877–936.

Schlenker, Philippe. 2018c. Visible meaning: Sign language and the foundations of semantics. Theoretical Linguistics 44(3–4): 123–208.

Schlenker, Philippe. 2018d. Locative shift. Glossa 3(1): 115. http://doi.org/10.5334/gjgl.561.

Schlenker, Philippe. 2018e. Sign language semantics: Problems and prospects. Theoretical Linguistics 44(3–4): 295–353.

Schlenker, Philippe. To appear b. Gestural semantics: Replicating the typology of linguistic inferences with pro- and post-speech gestures. Natural Language & Linguistic Theory.

Schlenker, Philippe, and Emmanuel Chemla. 2018. Gestural agreement. Natural Language & Linguistic Theory 36(2): 587–625. https://doi.org/10.1007/s11049-017-9378-8.

Schlenker, Philippe, and Jonathan Lamberton. 2019. Iconic plurality. Linguistics & Philosophy 42: 45–108. https://doi.org/10.1007/s10988-018-9236-0.

Schlenker, Philippe, Jonathan Lamberton, and Mirko Santoro. 2013. Iconic variables. Linguistics & Philosophy 36(2): 91–149.

Schlenker, Philippe, and Gaurav Mathur. 2013. A strong crossover effect in ASL (squib). Snippets 27: 16–18.

Slama-Cazacu, Tatiana. 1976. Nonverbal components in message sequence: “Mixed syntax.” In Language and man: Anthropological issues, eds. William Charles McCormack and Stephen Wurm, 217–227. The Hague: Mouton.

So, Wing Chee, Sotaro Kita, and Susan Goldin-Meadow. 2009. Using the hands to identify who does what to whom: Speech and gesture go hand-in-hand. Cognitive Science 33: 115–125.

So, Wing Chee, Sotaro Kita, and Susan Goldin-Meadow. 2013. When do speakers use gestures to specify who does what to whom? The role of language proficiency and type of gestures in narratives. Journal of Psycholinguistic Research 42: 581–594. https://doi.org/10.1007/s10936-012-9230-6.

Sprouse, Jon, and Diogo Almeida. 2012. Assessing the reliability of textbook data in syntax: Adger’s Core Syntax. Journal of Linguistics 48: 609–652.

Sprouse, Jon, and Diogo Almeida. 2013. The empirical status of data in syntax: A reply to Gibson and Fedorenko. Language and Cognitive Processes 28: 222–228. https://doi.org/10.1080/01690965.2012.703782.

Sprouse, Jon, Carson T. Schütze, and Diogo Almeida. 2013. A comparison of informal and formal acceptability judgments using a random sample from Linguistic Inquiry 2001–2010. Lingua 134: 219–248. https://doi.org/10.1016/j.lingua.2013.07.002.

Streeck, Jürgen. 2002. Grammars, words, and embodied meanings: On the evolution and uses of so and like. Journal of Communication 52: 581–596.

Strickland, Brent, Carlo Geraci, Emmanuel Chemla, Philippe Schlenker, Meltem Kelepir, and Richard Pfau. 2015. Event representations constrain the structure of language: Sign language as a window into universally accessible linguistic biases. Proceedings of the National Academy of Sciences (PNAS) 112. https://doi.org/10.1073/pnas.1423080112.

Tieu, Lyn, Robert Pasternak, Philippe Schlenker, and Emmanuel Chemla. 2017. Co-speech gesture projection: Evidence from truth-value judgment and picture selection tasks. Glossa 2(1): 102.

Tieu, Lyn, Robert Pasternak, Philippe Schlenker, and Emmanuel Chemla. 2018. Co-speech gesture projection: Evidence from inferential judgments. Glossa 3(1): 109. http://doi.org/10.5334/gjgl.580.

Tieu, Lyn, Philippe Schlenker, and Emmanuel Chemla. 2019. Linguistic inferences without words: Replicating the inferential typology with gestures. Proceedings of the National Academy of Sciences (PNAS). https://doi.org/10.1073/pnas.1821018116.

van den Berg, Martin. 1996. Some aspects of the internal structure of discourse: The dynamics of nominal anaphora. PhD diss., ILLC, Universiteit van Amsterdam.

van Hoek, Karen. 1992. Conceptual spaces and pronominal reference in American Sign Language. Nordic Journal of Linguistics 15: 183–199.

Wilbur, Ronnie. 2003. Representations of telicity in ASL. Chicago Linguistic Society 39: 354–368.

Wilbur, Ronnie. 2008. Complex predicates involving events, time and aspect: Is this why sign languages look so similar? In Signs of the time, ed. Joseph Quer, 217–250. Hamburg: Signum.

Acknowledgements

Special thanks to Emmanuel Chemla and Lyn Tieu for detailed discussion of almost all of this piece, and to Brian Buccola, Rob Pasternak, Salvador Mascarenhas and Lyn Tieu for extraordinarily patient help with judgments. Jeremy Kuhn’s help in the last stage of this project was priceless; many thanks to him for allowing videos to be linked to the article. I am also grateful to Cornelia Ebert and Clemens Mayr for discussion of some aspects of the data and analysis, and to Adam Schembri for urging that signs be compared to gestures. Earlier exchanges with Susan Goldin-Meadow proved particularly helpful. I benefited from comments of the participants in the Week of Signs and Gestures in Stuttgart (June 2017). I also received excellent comments and criticisms from several anonymous referees for Natural Language & Linguistic Theory. References were prepared by Lucie Ravaux, and she also anonymized the videos linked to this paper. For a companion paper on gestural semantics, see Schlenker (to appear b).


Grant acknowledgments: The research leading to these results received funding from the European Research Council under the European Union’s Seventh Framework Programme (FP/2007-2013) / ERC grant agreement N°324115–FRONTSEM (PI: Schlenker), and also under the European Union’s Horizon 2020 Research and Innovation Programme (ERC grant agreement No 788077, Orisem, PI: Schlenker). Research was conducted at Institut d’Etudes Cognitives, Ecole Normale Supérieure—PSL Research University. Institut d’Etudes Cognitives is supported by grant FrontCog ANR-17-EURE-0017.


Appendices

Appendix I: Transcription conventions

❑ Sign language transcription conventions

In the following, sign language sentences are glossed in capital letters, as is standard. Expressions of the form WORD–i, WORDi and […EXPRESSION…]i indicate that the relevant expression is associated with the locus (= position in signing space) i. A suffixed locus, as in WORD–i, indicates that the association is effected by modulating the sign so that it points towards locus i (this is different from adding a pointing sign IX-i to a word); a subscripted locus, as in WORDi or […EXPRESSION…]i, indicates that the relevant expression is signed in position i. Locus names are assigned from right to left from the signer’s perspective; thus when loci a, b, c are mentioned, a appears on the signer’s right, c on the left, and b somewhere in between. IX (for ‘index’) is a pointing sign towards a locus, while POSS is possessive; they are glossed as IX-i and POSS-i if they point towards (or ‘index’) locus i; the numbers 1 and 2 correspond to the positions of the signer and addressee respectively. IX-i is a standard way of realizing a pronoun corresponding to locus i, but it can also serve to establish a locus rather than to retrieve one. Agreement verbs include loci in their realization: for instance, the verb a-ASK-1 starts out from locus a and targets the first person locus 1; it means that the third person individual denoted by a asks the signer something. IX-arc-i refers to a plural pronoun indexing locus i, as it involves an arc motion towards i rather than a simple pointing sign.
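The locus conventions above are regular enough to be read mechanically. As a minimal sketch (not part of the original conventions), the Python below decomposes hyphenated glosses such as IX-b, IX-arc-b or a-ASK-1 into a sign, an optional source locus and a target locus. The names `Gloss`, `parse_gloss` and `person` are hypothetical; we assume that loci are single lowercase letters or the digits 1 and 2, and that glosses use plain hyphens rather than the dashes sometimes found in print.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Assumption: a locus is one lowercase letter (third person) or
# the digit 1 (signer) or 2 (addressee).
LOCUS = r"(?:[a-z]|[12])"


@dataclass
class Gloss:
    sign: str              # the sign itself, e.g. "ASK" or "IX"
    source: Optional[str]  # locus the sign starts from (agreement verbs only)
    target: Optional[str]  # locus the sign points towards, if any
    plural: bool = False   # True for arc-shaped plural pronouns (IX-arc-i)


def parse_gloss(token: str) -> Gloss:
    """Hypothetical parser for the hyphenated gloss conventions."""
    # Agreement verb: source-VERB-target, e.g. "a-ASK-1" or "1-HELP-b"
    m = re.fullmatch(rf"({LOCUS})-([A-Z]+)-({LOCUS})", token)
    if m:
        return Gloss(sign=m.group(2), source=m.group(1), target=m.group(3))
    # Plural pronoun with an arc motion towards the locus: "IX-arc-b"
    m = re.fullmatch(rf"IX-arc-({LOCUS})", token)
    if m:
        return Gloss(sign="IX", source=None, target=m.group(1), plural=True)
    # Pointing sign, possessive or suffixed word: "IX-a", "POSS-2"
    m = re.fullmatch(rf"([A-Z]+)-({LOCUS})", token)
    if m:
        return Gloss(sign=m.group(1), source=None, target=m.group(2))
    # Plain sign with no locus, e.g. "JOHN"
    return Gloss(sign=token, source=None, target=None)


def person(locus: Optional[str]) -> Optional[str]:
    """Map a locus to grammatical person: 1, 2, or a third person letter."""
    if locus is None:
        return None
    return {"1": "first", "2": "second"}.get(locus, "third")
```

For instance, `parse_gloss("1-HELP-b")` yields HELP with source 1 (the signer) and target b (a third person locus), mirroring the reading of a-ASK-1 given above.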

The suffix -rep is used for unpunctuated repetitions; in such cases -rep3, -rep4, -rep≥4, -rep5, … indicate that there are 3, 4, at least 4, 5, … iterations. When relevant, we add a subscript indicating the shape of the repetition, e.g. \(\text{-rep3}_{\text{horizontal}}\) for a horizontal repetition (whether in a straight line or as a horizontal arc) and \(\text{-rep3}_{\text{triangle}}\) for a triangular-shaped repetition. The suffix -cont is used for continuous repetitions, and subscripts may be used as well to indicate the shape of the movement, as in \(\text{-cont}_{\text{horizontal}}\) or \(\text{-cont}_{\text{triangle}}\). Punctuated repetitions of an expression WORD are encoded as [WORD WORD WORD] if they involve three iterations of that expression; \([\text{WORD WORD WORD}]_{\text{horizontal}}\) and \([\text{WORD WORD WORD}]_{\text{triangle}}\) provide information about the shape of the repetition.
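The repetition conventions can likewise be captured by a small record type. The sketch below is illustrative only: `Repetition`, `parse_suffix` and `parse_punctuated` are hypothetical names, and since the shape is a subscript in print, we assume an ASCII rendering in which the shape follows an underscore (e.g. -rep3_horizontal). We also assume that punctuated repetitions repeat a single-word expression.

```python
import re
from typing import NamedTuple, Optional


class Repetition(NamedTuple):
    kind: str                  # "unpunctuated", "continuous" or "punctuated"
    iterations: Optional[int]  # number of iterations, if specified
    at_least: bool             # True for lower bounds such as "-rep≥4"
    shape: Optional[str]       # e.g. "horizontal" or "triangle"


# Assumed ASCII rendering: "-rep3", "-rep≥ 4", "-rep3_horizontal", "-cont_triangle"
SUFFIX = re.compile(r"-(rep|cont)(≥\s?)?(\d+)?(?:_(\w+))?")


def parse_suffix(suffix: str) -> Repetition:
    """Parse an unpunctuated (-rep) or continuous (-cont) repetition suffix."""
    m = SUFFIX.fullmatch(suffix)
    if m is None:
        raise ValueError(f"not a repetition suffix: {suffix!r}")
    kind = "unpunctuated" if m.group(1) == "rep" else "continuous"
    count = int(m.group(3)) if m.group(3) else None
    return Repetition(kind, count, m.group(2) is not None, m.group(4))


def parse_punctuated(gloss: str) -> Repetition:
    """Parse "[WORD WORD WORD]" or "[WORD WORD WORD]_triangle"."""
    m = re.fullmatch(r"\[([^\]]+)\](?:_(\w+))?", gloss)
    if m is None:
        raise ValueError(f"not a punctuated repetition: {gloss!r}")
    words = m.group(1).split()
    # Assumes a single-word expression repeated several times.
    if len(set(words)) != 1:
        raise ValueError("expected iterations of one and the same expression")
    return Repetition("punctuated", len(words), False, m.group(2))
```

The point of the record type is that the three repetition types differ only in `kind`, while the count (exact or lower-bounded) and the shape are orthogonal pieces of information, as in the conventions above.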

Unless otherwise noted, non-manuals are not transcribed; the exception is when they appear in the original publications from which the sentences are cited. In that case, \(\hat{\ }\) above a word or expression indicates that it was realized with raised eyebrows. Further conventions are introduced in the text as they become relevant.

❑ Spoken language transcription conventions

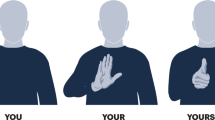

Glossing conventions for gestures were chosen to be reminiscent of sign language: here too, we use capital letters to gloss elements that are produced manually. This choice is not meant to suggest that signs are gestures, or conversely (Footnote 39).

For legibility, we use a non-standard font to transcribe gestures. A gesture that co-occurs with a spoken word (= a co-speech gesture) is written in capital letters or as a picture (or both) preceding the expression it modifies (in some cases, we have added a link to a video to illustrate some gestures). The modified spoken expression will be boldfaced, and enclosed in square brackets if it contains several words.

Examples: John […] punished his enemy.

A gesture that replaces a spoken word (= a pro-speech gesture) is written in capital letters or as a picture, or both.

Examples: My enemy, I will […].

As in sign language, pointing gestures are alphabetized from the dominant to the non-dominant side from the speaker’s perspective (Footnote 40). […] encodes pointing with a finger towards position a, while […] encodes pointing with an open hand, palm up, towards position a. A gestural verb involving slapping was glossed as […] if it was realized towards the addressee, and as […] if it was realized towards a third person position, which we’ll also call a ‘locus’ for terminological simplicity. Refining the notation, we will write ([…]) if we think that this form is both a second person and a neutral form, usable in all persons. We will use the notation […] to refer to pointing towards gestural locus a.

Appendix II: Locative shift in signs and in gestures

This Appendix is devoted to a puzzling property of ASL (and LSF): in some cases, a location-denoting locus can be co-opted to refer to an individual found at that location. We summarize the main findings and ask whether they extend to gestural loci. As we will see, judgments in our survey vary across consultants, and an experimental investigation might be called for (Footnote 41).

❑ Basic Locative Shift in sign language

In ASL (and LSF), one may sometimes re-use a locus initially associated with a spatial location to denote an individual found at that location (Padden 1988; van Hoek 1992; Emmorey 2002; Emmorey and Falgier 2004; Schlenker 2013, 2018d). This phenomenon, sometimes called ‘Locative Shift,’ is illustrated in (67). In (67)a(i), locus b, associated with JOHN, appears as the object agreement marker of HELP, whereas in (67)a(ii) loci a and c, associated with FRENCH CITY and AMERICAN CITY respectively, are used to refer to John-in-the-French-city and John-in-the-American-city. The latter sentence exemplifies Locative Shift, which in this case is preferred (in other examples, it is optional). The operation is constrained, however: indexical loci (here illustrated with a second person locus) usually resist Locative Shift, as illustrated in (67)b(ii).

(67)

a. JOHN IX-b WORK [IX-a FRENCH CITY]a SAME WORK [IX-c AMERICA CITY]c.

(i) No Locative Shift

4.2 IX-a IX-1 1-HELP-b. IX-c IX-1 NOT 1-HELP-b.

(ii) Locative Shift

6 IX-a IX-1 1-HELP-a. IX-c IX-1 NOT 1-HELP-c.

‘John does business in a French city and he does business in an American city. There [= in the French city] I help him. There [= in the American city] I don’t help him.’

b. IX-2 WORK [IX-a FRENCH CITY]a SAME IX-2 WORK [IX-c AMERICA CITY]c.

(i) No Locative Shift

6.3 IX-a IX-1 1-HELP-2. IX-c IX-1 NOT 1-HELP-2.

(ii) Locative Shift

2.3 IX-a IX-1 1-HELP-a. IX-c IX-1 NOT 1-HELP-c.

‘You do business in a French city and you do business in an American city. There [= in the French city] I help you. There [= in the American city] I don’t help you.’

(ASL, 8, 1; 3 judgments; Schlenker 2011c)

❑ Basic Locative Shift in gestures

A basic case of Locative Shift with pro-speech gestures is illustrated in (68)c: instead of pointing towards the gestural locus introduced with John, the speaker points towards the gestural locus introduced with New York, but uses it to refer to John-in-New-York, so to speak.

(68)

Since […] John can’t seem to work/get along with […] you, I’ll/I am going to have him transferred to […] New York. And later, if I need to downsize, you know who I’ll fire? (Footnote 42)

a. 6.3 […]. (= the addressee)

b. 4.7 […]. (= John)

c. 4.3 […]. (= John)

(Video 3991 https://youtu.be/j6lerTXyde8)

(69) Acceptability ratings for (68) (Video 3991)

| | a. | b. | c. |
|---|---|---|---|
| Consultant 1 | 5 | 2 | 5 |
| Consultant 2 | 7 | 6 | 1 |
| Consultant 3 | 7 | 6 | 7 |

Averages obscure some of the patterns in this case. As the individual scores in (69) show, Consultant 1 obligatorily applies Locative Shift, Consultant 2 prohibits it, and Consultant 3 allows it.
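The arithmetic behind this point can be made explicit. The sketch below (our illustration; the function names are hypothetical) recomputes the averages reported in (68) from the individual ratings in (69), then computes, for each consultant, the rating of the locative-shifted option (c) minus that of the unshifted option (b).

```python
from statistics import mean

# Individual ratings from (69), for conditions a, b, c of (68).
ratings_69 = {
    "Consultant 1": {"a": 5, "b": 2, "c": 5},
    "Consultant 2": {"a": 7, "b": 6, "c": 1},
    "Consultant 3": {"a": 7, "b": 6, "c": 7},
}


def condition_means(table):
    """Average rating per condition, rounded to one decimal as in (68)."""
    conditions = next(iter(table.values())).keys()
    return {c: round(mean(row[c] for row in table.values()), 1) for c in conditions}


def shift_preference(table, plain, shifted):
    """Per-consultant difference: shifted minus unshifted rating.

    Positive values favor Locative Shift; negative values disfavor it.
    """
    return {name: row[shifted] - row[plain] for name, row in table.items()}
```

Here `condition_means(ratings_69)` gives 6.3, 4.7 and 4.3, the averages shown in (68), while `shift_preference(ratings_69, "b", "c")` gives 3, -5 and 1: the differences point in opposite directions across consultants, which is exactly what the intermediate averages for b and c hide.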

When it is denied that John will be in New York, pointing towards the gestural locus associated with New York to refer to John entirely fails (for all consultants), as seen in (70).

(70)

Since […] John can’t seem to work with […] you, I won’t have him transferred to […] New York. And if later I need/I later (Footnote 43) need to downsize, you know who I’ll fire?

a. 6.3 […]. (= the addressee)

b. 6.3 […]. (= John)

c. 1 […].

(Video 3993 https://youtu.be/i1WfHT0Vx28)

Do we replicate in gestures the sign language finding that second person loci resist Locative Shift? The lower score in (71)c suggests that this is indeed the case, but given the complexity of the ratings in the baseline in (69), we must discuss individual judgments, provided in (72).

(71)

Since […] you can’t seem to work with […] John, I’ll have you transferred to […] New York. And if later I need to downsize, you know who I’ll fire?

a. 6.5 […]. (= the addressee)

b. 6.3 […]. (= John)

c. 3.8 […]. (= the addressee (?))

(Video 3997 https://youtu.be/cGj_sT78v_8)

(72) Acceptability ratings for (71) (Video 3997)

| | a. | b. | c. |
|---|---|---|---|
| Consultant 1 | 5.5 | 5 | 4.5 |
| Consultant 2 | 7 | 7 | 1 |
| Consultant 3 | 7 | 7 | 6 |

Let us look at the individual scores for (71)c. Consultant 1, who obligatorily applied Locative Shift in (69), accepts the non-locative-shifted option in (71)a (score: 5.5) and slightly disprefers the locative-shifted option in (71)c (score: 4.5). Consultant 2 prohibited Locative Shift in (69) and continues to prohibit it here (score: 1). From the perspective of the sign language prohibition against locative-shifted indexical pronouns, it is surprising that Consultant 3 accepts Locative Shift (score: 6). Still, Consultant 3’s pattern of preference is reversed: they preferred to apply Locative Shift to third person pointing in (69) (scores: 7 with Locative Shift, 6 without), whereas with second person pointing they prefer not to apply it (scores: 6 with Locative Shift, 7 without).
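The reversal can be checked directly against the two tables. In this sketch (our illustration; `preference` and `reversals` are hypothetical names), the unshifted option is condition b in (68) (third person pointing) and condition a in (71) (second person pointing); in both examples the locative-shifted option is condition c.

```python
# Individual ratings copied from (69) and (72).
ratings_69 = {
    "Consultant 1": {"a": 5, "b": 2, "c": 5},
    "Consultant 2": {"a": 7, "b": 6, "c": 1},
    "Consultant 3": {"a": 7, "b": 6, "c": 7},
}
ratings_72 = {
    "Consultant 1": {"a": 5.5, "b": 5, "c": 4.5},
    "Consultant 2": {"a": 7, "b": 7, "c": 1},
    "Consultant 3": {"a": 7, "b": 7, "c": 6},
}


def preference(row, plain, shifted):
    """Rating of the shifted option minus rating of the unshifted one.

    Positive: Locative Shift preferred; negative: dispreferred.
    """
    return row[shifted] - row[plain]


def reversals(third_person, second_person):
    """Consultants who prefer Locative Shift with third person pointing
    (b vs. c in (68)) but disprefer it with second person pointing
    (a vs. c in (71))."""
    result = []
    for name, row in third_person.items():
        p3 = preference(row, "b", "c")
        p2 = preference(second_person[name], "a", "c")
        if p3 > 0 and p2 < 0:
            result.append(name)
    return result
```

Applied to the two tables, `reversals(ratings_69, ratings_72)` returns Consultant 1 and Consultant 3, matching the discussion above; Consultant 2 is excluded because they reject Locative Shift in both cases.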

We conclude that (i) Locative Shift has a counterpart with gestural loci, but (ii) it’s not clear whether gestural Locative Shift can apply to indexical pronouns or, as argued for ASL, is preferably avoided in such cases. Experimental work would be helpful in this area.

❑ Theoretical consequences

Two facts about the sign language data need to be explained. (i) Why can location-denoting loci be co-opted to denote individuals found at these locations? (ii) Why is this operation degraded when the speaker or addressee (and possibly other deictic elements) are denoted? The first question also arises for our gestural data; the second might or might not.

The question in (i) was answered in Schlenker (2018d), by way of the hypothesis in (73). In a nutshell, it states that a locus a denoting an individual s(a) and a locus b denoting a location (or more generally a situation) s(b) can be combined to form a complex expression \(a ^{b}\) which (i) denotes the stage of individual s(a) found in situation s(b), and (ii) is spelled out as b. The key in this analysis is that locative-shifted loci have more fine-grained meanings than standard loci and denote individuals at situations rather than just individuals or just situations.


(73)

Hypothesis about Locative Shift (Schlenker 2018d)

a. Syntax

If a locus a denotes an individual s(a) and a locus b denotes a spatial, temporal or modal situation s(b), then under some pragmatic conditions, the locus b can also spell out the complex expression \(a ^{b}\).

b. Semantics

Evaluated under an assignment s, \(a ^{b}\) denotes the stage of individual s(a) found in situation s(b).
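To make the two clauses of (73) concrete, here is a toy model under stated assumptions: an assignment s is a dictionary from locus names to individuals or situations, and a stage is represented simply as an (individual, situation) pair. The class names are hypothetical, and the "pragmatic conditions" under which the complex expression is licensed are not modeled.

```python
from dataclasses import dataclass
from typing import Dict, Union


@dataclass(frozen=True)
class Individual:
    name: str


@dataclass(frozen=True)
class Situation:
    name: str  # a spatial, temporal or modal situation


@dataclass(frozen=True)
class Stage:
    # Assumption: the stage of an individual in a situation is modeled as a pair.
    individual: Individual
    situation: Situation


@dataclass(frozen=True)
class ComplexLocus:
    """The complex expression a^b: a denotes an individual, b a situation."""
    a: str
    b: str

    def spell_out(self) -> str:
        # (73)a: the complex expression is realized as locus b.
        return self.b


Assignment = Dict[str, Union[Individual, Situation]]


def denote(locus: Union[str, ComplexLocus], s: Assignment) -> Union[Individual, Situation, Stage]:
    """Evaluate a simple or complex locus under the assignment s."""
    if isinstance(locus, ComplexLocus):
        ind, sit = s[locus.a], s[locus.b]
        if not isinstance(ind, Individual) or not isinstance(sit, Situation):
            raise TypeError("a must denote an individual and b a situation")
        # (73)b: the stage of s(a) found in s(b).
        return Stage(ind, sit)
    return s[locus]
```

With the loci of (67)a, where b denotes John and a, c denote the French and the American city, the complex locus built from b and a is spelled out as a and denotes John-in-the-French-city, while the one built from b and c denotes a different stage of the same individual.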

As for the question in (ii), the answer might lie in the observation, made in connection with (9)a, that in sign language and gestures alike there is a strong preference to refer to elements present in the context by pointing at them (but see Schlenker 2018d for further discussion). The same principle might explain why one cannot easily introduce or retrieve an arbitrary locus to refer to the addressee in (9). It would also lead us to expect that Locative Shift cannot target indexical pronouns in gestures either.


Cite this article

Schlenker, P. Gestural grammar. Nat Lang Linguist Theory 38, 887–936 (2020). https://doi.org/10.1007/s11049-019-09460-z


[…]. (Schlenker […] [you in France], […] [you in England], or […] [John in Croatia]. You know who I am going to visit? […].

[…] [one plane] appears on the right, using […] to describe that same plane should be quite odd. But moving instead from the non-dominant to the dominant side might conceivably be more acceptable due to a neutral, non-located use of the gesture, produced close to the speaker’s dominant side.

[…] he blesses […] introduces what seems to be an unjustified asymmetry between the two bishop-denoting loci; the second conjunct was intended to restore the symmetry.

[…] replacing […], was explored in (22) in the Supplementary Materials (Videos 3979 and 3989). We obtained the same comparative results as in (47)a,b,c: (a) high pointing for an individual situated high was acceptable; (b) low pointing was degraded; but (c) the latter effect was obviated under ellipsis. However, all scores were lower, with (a) = 4, (b) = 1.3, (c) = 3.7. Consultant 1 commented about (a) that “it’s not clear why the pointing gestures were necessary at all.” It is hard to draw conclusions at this point, and more work is needed on this issue.
