Abstract

Coronavirus disease 2019 (COVID-19) spread swiftly throughout the globe, causing widespread infection in many countries and regions, and was declared a pandemic by the World Health Organization (WHO) in 2020. Computer algorithms and models can support the rapid and accurate identification and classification of COVID-19 from medical tests such as computed tomography (CT), X-ray, and electrocardiography (ECG). In this paper, a COVID-19 electrocardiography classification model based on grey wolf optimization and a support vector machine is presented. A public online ECG dataset with two classes (COVID-19 and Normal) was investigated. The proposed model consists of three phases: feature extraction based on Resnet50, feature selection based on grey wolf optimization, and classification based on the support vector machine. The experimental trials show that the proposed model achieves the highest accuracy among models that use different feature extraction and selection methods, such as Alexnet and the whale optimization algorithm. The proposed model achieves a testing accuracy of 99.1%, whereas related work that used hexaxial feature mapping and deep learning achieved 96.20%, an improvement of 2.9 percentage points. The achieved testing accuracy and the other performance metrics (Precision, Recall, and F1 Score) support the finding that the proposed model, in addition to achieving the highest accuracy, requires less training time than related works that use the same dataset, because it selects a small number of features.

1 Introduction

Since the first cases were identified in 2019, coronavirus disease 2019 (COVID-19) has spread rapidly around the world, causing widespread infection in several countries and regions, and was declared a public health emergency of international concern in January 2020 [1]. COVID-19 is caused by Severe Acute Respiratory Syndrome Coronavirus 2 (SARS-CoV-2) infection and can cause a wide range of symptoms, including fever, cough, loss of taste, and even death [2]. However, not all COVID-19 patients are symptomatic, and there is a proportion of asymptomatic but infectious patients, which makes it difficult to interrupt the spread of the virus by isolating COVID-19 patients. Therefore, an effective and rapid method of COVID-19 diagnosis is essential for the control of COVID-19.

Computer science research has also addressed many other COVID-19-related challenges, for instance, tracking the geographical transmission of COVID-19 from real-time tweets [3], investigating the relevance of new technologies in the battle against the pandemic [4], evaluating the impact of COVID-19 on the electrical and oil industries [5], classifying potential coronavirus treatments on a single human cell [6], and detecting face masks [7].

Currently, two of the most widely used diagnostic methods for COVID-19 are Reverse Transcription Polymerase Chain Reaction (RT-PCR) for viral testing of samples obtained from nasopharyngeal swabs and diagnostic analysis of infection in chest radiographs [8]. However, both of these methods require specialized facilities to obtain samples and are difficult to scale up, i.e., to identify patients from large populations rapidly.

Computer-aided diagnosis (CAD) systems based on deep learning are also among the most active research fields and are currently applied to a wide range of diagnostic tasks for various diseases [9, 10]. Several deep learning-based CAD systems have been proposed for COVID-19, and numerous studies on the classification of COVID-19 CT and X-ray images are presented in [11,12,13,14].

Wang, Zhang [2] used wavelet entropy extracted from chest CT images as features and trained a feedforward neural network with a self-adaptive Jaya algorithm to classify these features for COVID-19 diagnosis. Zhou, Wang [15] proposed a weakly supervised method for localising COVID-19 lung infections with limited data and used it to generate pseudo-data that enhance supervised segmentation. Yu, Wang [16] built a COVID-19 CAD system based on transfer learning: a pre-trained GoogleNet extracts features from chest radiographs, and its last two layers are replaced to classify these features. However, these methods rely mostly on medical radiography, which makes it hard to screen large populations rapidly.

In recent years, Internet of Things (IoT) devices have been used in a wide range of areas of human life and industrial production, and the sensor components in smart wearable IoT devices are becoming increasingly diverse. Novel smart wearable devices are increasingly able to capture vital health data, such as electrocardiogram (ECG) and electroencephalogram (EEG) signals. Researchers worldwide are actively exploring the utility of these biosignals in healthcare applications; for example, the work presented in [17] uses EEG signals to contribute to areas such as manufacturing robotic arms for disabled people. Although COVID-19 mainly affects the respiratory system, depending on its severity it can also affect other systems, particularly the cardiovascular system. Several attempts have therefore combined ECG data from IoT devices with deep learning techniques to build ECG-based COVID-19 CAD systems, with promising performance. Ozdemir, Ozdemir [18] used the Gray-Level Co-Occurrence Matrix to extract features from ECG signals, represented them as two-dimensional color images, and then extracted and classified features from these images with a CNN for COVID-19 diagnosis, achieving an accuracy of 93.00% and an F1-Score of 93.20%. Nainwal, Malik [19] used an eight-layer CNN to classify ECG images, achieving an accuracy of 98.11%, a sensitivity of 98.6%, and a specificity of 96.40%.

Irmak [20] conducted multiple experiments on ECG trace images generated from ECG signals using a CNN with 20 learnable layers. An accuracy of 83.05% was achieved for the multi-class task with the classes COVID-19, abnormal heartbeats, myocardial infarction, and normal, and 86.55% for the multi-class task with the classes COVID-19, abnormal heartbeats, and myocardial infarction. Attallah [21] extracted features from two different layers (high-level and low-level) of each of five deep learning architectures, processed the high-level features with the discrete wavelet transform, and integrated them with the low-level features. The integrated features were classified with an ensemble classifier, achieving accuracies of 91.73% and 98.8% in the multi-class and binary COVID-19 classification tasks, respectively.

This research presents a COVID-19 electrocardiography classification model based on grey wolf optimization and a support vector machine. The model is tested and compared with models that use different optimization algorithms and deep learning models, as well as with related works that use the same dataset. The main contributions of this research are as follows:

1. A feature-selection layer based on Grey Wolf Optimization and Whale Optimization, with K-nearest Neighbour as the fitness function, is proposed (GWO-KNN F Layer and WO-KNN F Layer).

2. Two models (COECG-Alexnet-GWO-SVM and COECG-Resnet-WO-SVM) based on Alexnet and Resnet50 as feature extractors are proposed for COVID-19 ECG classification.

3. Resnet50 is a better feature extractor than Alexnet in terms of testing accuracy, as it produces more meaningful and discriminative features.

4. The SVM is selected as the main classifier of the proposed model because it achieved the highest accuracy in the experimental trials.

5. COECG-Resnet-GWO-SVM outperforms COECG-Resnet-WO-SVM on the performance metrics.

6. COECG-Resnet-GWO-SVM outperforms related works in terms of testing accuracy and performance metrics, achieving a testing accuracy of 99.1%. It improves on related work that uses hexaxial feature mapping and deep learning by 2.9 percentage points, and on related works that use bi-layers of deep features integration and Resnet18 by 0.3 to 0.43 percentage points.

The rest of this paper is organized as follows. Sect. 2 reviews recent research in the field. Section 3 explains the background of the proposed model and its components. Section 4 presents the dataset characteristics. Section 5 describes the proposed model. Section 6 presents the experimental setup and results. Section 7 presents the conclusion and future work.

2 Related work

The rapid and precise diagnosis of COVID-19 remains a critical challenge that has spurred numerous advancements in medical diagnostics, particularly through the analysis of electrocardiogram (ECG) data. This section examines a series of innovative approaches that harness deep learning and machine learning technologies to enhance ECG-based COVID-19 detection.

Recent studies have leveraged deep convolutional neural networks (CNNs) to differentiate COVID-19 from other cardiovascular diseases using ECG trace images. For instance, a novel CNN model achieved classification accuracies of 98.8% in binary and 91.73% in multi-class scenarios, illustrating the potential of deep learning models in clinical diagnostics [22]. Building upon these developments, other researchers have explored hybrid approaches that combine machine learning algorithms, such as SVM and Random Forests, with deep learning features extracted from ECG signals. This integration not only enhanced diagnostic accuracy, reaching up to 98.2% in binary classification, but also improved the interpretability of ECG data, which is crucial for clinical decision-making [23].

Focusing specifically on predictive modelling, the ML-ECG-COVID study developed a suite of machine-learning models that processed ECG signals to predict COVID-19 infection. By integrating time, frequency, and time–frequency domain features, this approach achieved notable accuracy and sensitivity rates, highlighting its utility for early detection and management of the disease [24]. Complementing this, the ECG-BiCoNet project introduced a novel pipeline that utilised bi-layers of deep features integration from ECG data, enhancing the capability to diagnose COVID-19 with an impressive degree of precision [21].

The application of pre-trained CNN models such as ResNet50 has shown significant promise in enhancing the analysis of ECG images. These models are particularly effective in handling complex datasets and have achieved classification accuracies up to 97.83% in multi-class scenarios, showcasing their ability to discern subtle patterns in ECG data that are indicative of COVID-19 [20]. Similarly, another study employing DenseNet201 provided insights into the diagnostic capabilities of deep learning architectures, further advancing the medical imaging and diagnostics field for COVID-19 and other cardiac conditions [25].

Moreover, innovative ECG representation techniques like Hexaxial feature mapping have been developed to enhance the visual interpretation of ECG data for CNN processing. This method transforms 12-lead ECGs into 2D colourful images, improving the diagnostic performance of CNNs with accuracies reaching 96.20%. Such innovative approaches not only facilitate the application of CNNs but also enhance their diagnostic effectiveness, proving especially beneficial in scenarios where quick and accurate diagnosis is essential [18].

A noteworthy advancement in the field is COV-ECGNET, which explored the accessibility of ECG analysis in low-resource settings. This study pioneered the use of deep convolutional neural network models to detect COVID-19 from ECG trace images that can be captured with common smartphones. It used a series of deep learning architectures, including ResNet18, ResNet50, MobileNetv2, and DenseNet201, to classify ECG images into categories such as normal, COVID-19, and other cardiovascular diseases (CVDs). The methodology was tailored to operate efficiently on platforms with limited computational power, such as mobile devices, making it highly accessible and scalable. MobileNetv2 demonstrated strong speed and accuracy in real-time settings, which is critical for timely decision-making in clinical environments, while DenseNet201 and InceptionV3 showed the best accuracy across the different classification schemes [26]. Table 1 provides a comparative analysis of other similar related works in terms of approach, accuracy, advantages, and disadvantages.

These studies have achieved promising performance in applying deep learning and machine learning techniques to diagnose COVID-19 from ECG data. However, there is a notable gap in optimizing the selection of the most important features, which could reduce the computational burden while preserving diagnostic performance. The review shows that most existing models address either the efficacy of feature extraction or the depth of the neural network architecture, each in isolation, and the strategic role of feature selection with metaheuristic optimization algorithms such as Grey Wolf Optimization (GWO) remains largely unexplored. This gap points to an opportunity to improve both the speed and the efficiency of COVID-19 ECG classification systems with minimal impact on diagnostic accuracy. The proposed COECG-Resnet-GWO-SVM model addresses this gap by using GWO for optimal feature selection, with the aim of reducing the model's overall complexity and increasing its efficiency.

3 Background

The proposed model consists of three phases: feature extraction, feature selection, and classification. Feature extraction is based on deep transfer learning (DTL), whose core is the convolutional neural network (CNN), presented in subsect. 3.1. Feature selection is based on the Grey Wolf Optimization Algorithm (GWOA), introduced in subsect. 3.2. The integration of optimization algorithms with CNNs has been demonstrated in various studies, such as [27], which used antlion optimization with a CNN for plant leaf recognition, and [28], which used binary particle swarm optimization with a decision tree (BPSO-DT) together with a CNN for cancer RNA sequence analysis and classification. The third phase, classification, is based on the Support Vector Machine (SVM), described in subsect. 3.3. The justifications for using these algorithms and models are elaborated in Sections 5 and 6.

3.1 Convolutional neural network

As one of the most popular deep learning-based image feature extraction methods, Convolutional Neural Networks (CNNs) [29] can extract image features effectively while taking the spatial structure of the input images into account.

Generally, a CNN consists of an input layer, convolution layers with activation functions, pooling layers, and an output layer. The convolution layers are the core components for feature extraction. In a convolution layer, several small weight matrices (kernels) of pre-configured size slide over the input image from left to right and top to bottom. At each position, a convolution operation is performed between the kernels and the region of the input image channels they cover, extracting features from the input image. Without padding, the center of a kernel never reaches the pixels at the edges of the input image during the entire sliding process, so the information located there is lost in the convolution layer.

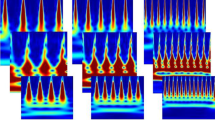

Padding [30] is a hyperparameter of convolution layers that addresses this issue. Typically, there are two padding modes, VALID and SAME. In SAME mode, the input image is padded with zero-valued pixels so that the kernel sliding starts at the top-left corner and ends at the bottom-right corner, with the kernel centered on every input pixel, including those at the edges; with a stride of 1, the output therefore has the same size as the input. In VALID mode, no padding is performed, so the output of the convolution is smaller than the input. VALID padding reduces the computational complexity compared with SAME padding, but the information at the edges of the input images is lost. The padding mode is normally chosen according to how important the information at the edges of the input images is to the task. Fig. 1 illustrates the convolution process with padding in the SAME and VALID modes.
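The sliding-window convolution and the two padding modes described above can be sketched in a few lines of NumPy. This is a minimal single-channel, stride-1 illustration; the function `conv2d` and the toy inputs are hypothetical, and the experiments in this paper were run in MATLAB, not Python. Like most CNN libraries, the sketch computes cross-correlation, i.e., the kernel is not flipped.

```python
import numpy as np


def conv2d(image: np.ndarray, kernel: np.ndarray, padding: str = "valid") -> np.ndarray:
    """Single-channel 2D convolution with stride 1 (cross-correlation, as in CNNs)."""
    kh, kw = kernel.shape
    if padding == "same":
        # Zero-pad so the kernel can be centered on every input pixel, including the edges.
        # Symmetric padding like this assumes an odd kernel size.
        ph, pw = kh // 2, kw // 2
        image = np.pad(image, ((ph, ph), (pw, pw)), mode="constant", constant_values=0)
    elif padding != "valid":
        raise ValueError("padding must be 'valid' or 'same'")
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    # Slide the kernel left to right, top to bottom, taking a weighted sum at each position.
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


image = np.arange(25, dtype=float).reshape(5, 5)  # toy 5x5 "image"
kernel = np.ones((3, 3))                          # toy 3x3 kernel
out_valid = conv2d(image, kernel, "valid")
out_same = conv2d(image, kernel, "same")
print(out_valid.shape, out_same.shape)  # (3, 3) (5, 5)
```

With a 3×3 kernel, VALID mode shrinks the 5×5 input to 3×3, while SAME mode keeps it at 5×5; the corner value in SAME mode sums only the four real pixels under the kernel, because the rest of the window covers zero padding.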

Although convolution layers can reduce the feature dimensions, the computational resources required by CNN-based image classification tasks are non-negligible. Therefore, most convolution layers in CNNs are followed by activation functions and max pooling layers; the pooling layers further reduce the dimension of the convolved features and thus the computational requirements.

Activation functions [31] in CNNs play the same role as in traditional neural networks (e.g., multi-layer perceptrons): they take the feature maps output by a network layer, transform them element-wise, and pass the result to the next layer. Most activation functions are nonlinear, which lets the network model complex, nonlinear relationships so that it can be applied to more complex tasks. Some of them, such as the Rectified Linear Unit (ReLU), also filter out weak responses by setting negative values to zero and, because their gradient does not saturate for positive inputs, help mitigate the vanishing-gradient problem. Equation (1) shows the calculation of ReLU, a widely used activation function, for an input x.

$$\mathrm{ReLU}(x)=\max(0,x)$$(1)
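A minimal NumPy sketch of ReLU as given in Eq. (1), applied element-wise to a hypothetical 2×2 feature map. Note that ReLU does not change the size of the feature map; it only zeroes out negative responses.

```python
import numpy as np


def relu(x: np.ndarray) -> np.ndarray:
    """Rectified Linear Unit: max(0, x), applied element-wise."""
    return np.maximum(0.0, x)


feature_map = np.array([[-2.0, 0.5], [3.0, -0.1]])  # hypothetical feature map
activated = relu(feature_map)
print(activated)  # negative responses become 0; positive ones pass through unchanged
```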

Pooling layers [32] are another way in which CNNs down-sample feature maps, by summarising the presence of features in patches of the feature maps. The two most common types are max pooling and mean pooling: the former outputs the maximum value in each patch of the feature map, and the latter outputs the mean of all values in the patch. Figure 2 illustrates examples of max pooling and mean pooling.

In Fig. 2, the grid on the left is a dummy input feature map, and the two grids on the right are dummy output feature maps. The two arrows represent the two pooling methods, max pooling and mean pooling, and the green blocks form a patch of the input feature map. Max pooling outputs 78, the maximum value in the patch, while mean pooling outputs 39, the mean of all values in the patch.
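Max and mean pooling with non-overlapping 2×2 windows can be sketched in NumPy as follows. The 4×4 input is a made-up example, not the feature map shown in Fig. 2, and `pool2d` is a hypothetical helper; real CNN pooling layers also support configurable strides and overlapping windows, which are omitted here.

```python
import numpy as np


def pool2d(fmap: np.ndarray, size: int = 2, mode: str = "max") -> np.ndarray:
    """Non-overlapping pooling (stride == window size) over a 2D feature map."""
    h, w = fmap.shape[0] // size, fmap.shape[1] // size
    # Crop to a multiple of the window size and view the map as (h, size, w, size) patches.
    patches = fmap[:h * size, :w * size].reshape(h, size, w, size)
    if mode == "max":
        return patches.max(axis=(1, 3))   # keep the strongest response in each patch
    if mode == "mean":
        return patches.mean(axis=(1, 3))  # average all responses in each patch
    raise ValueError("mode must be 'max' or 'mean'")


fmap = np.array([[1, 3, 2, 4],
                 [5, 7, 6, 8],
                 [9, 2, 1, 0],
                 [4, 6, 3, 5]], dtype=float)
max_out = pool2d(fmap, mode="max")    # [[7, 8], [9, 5]]
mean_out = pool2d(fmap, mode="mean")  # [[4, 5], [5.25, 2.25]]
```

Both outputs are a quarter of the input size, which is the down-sampling effect that reduces the computation of the following layers.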

With the above techniques, CNN can extract features from image data while keeping the structure information. Many variants of CNN with various architectures have emerged for image-processing tasks in various domains. Our research is based on two typical CNN architectures, AlexNet and Residual Neural Network (ResNet).

AlexNet [33], one of the most representative CNNs, won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) in 2012 with a top-5 error rate of 15.3%. It has 60 million parameters and 650,000 neurons and mainly consists of five convolution layers with ReLU activation functions, three max-pooling layers after the first, second, and fifth convolution layers, and three fully connected layers.

ResNet [34] won ILSVRC 2015 with a 152-layer network, a very high depth even for deep learning models at that time. This depth is made possible by ResNet's key building block, the residual learning unit, which adds an identity shortcut connection around a stack of layers. The residual unit mitigates the negative effects of increasing network depth, such as exploding and vanishing gradients, and made ResNet a milestone in the development of CNNs.
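The core idea of the residual learning unit, adding the block's input back to its output through an identity shortcut, can be sketched as follows. This is a deliberately simplified, hypothetical version: it uses two dense weight matrices in place of convolution layers and omits batch normalization, so it illustrates the shortcut rather than reproducing ResNet's actual blocks.

```python
import numpy as np


def relu(v: np.ndarray) -> np.ndarray:
    return np.maximum(0.0, v)


def residual_unit(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Simplified residual unit: y = ReLU(F(x) + x), where F(x) = W2 @ ReLU(W1 @ x)."""
    fx = w2 @ relu(w1 @ x)  # residual mapping learned by the stacked layers
    return relu(fx + x)     # identity shortcut adds the input back before the activation


rng = np.random.default_rng(0)
x = np.array([1.0, -2.0, 3.0])
w_zero = np.zeros((3, 3))
# With all-zero weights, F(x) = 0 and the unit reduces to ReLU(x): the input still flows through.
y_identity = residual_unit(x, w_zero, w_zero)  # [1, 0, 3]
y_random = residual_unit(x, rng.normal(size=(3, 3)), rng.normal(size=(3, 3)))
```

Because the shortcut carries x unchanged, a unit whose stacked layers learn F(x) ≈ 0 approximately passes its input through, and gradients have a direct path back to earlier layers; this is the intuition behind why residual units ease the training of very deep networks.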

3.2 Grey wolf optimization

Grey Wolf Optimization (GWO) [35] is inspired by, and simulates, the leadership hierarchy and hunting mechanism of grey wolf packs. The grey wolf is a pack-dwelling canid at the top of the food chain. The pack adheres to a strict social dominance hierarchy: the alpha wolf makes decisions for the entire pack, the beta wolf assists the alpha in making decisions, and the delta wolf follows the alpha and beta wolves and dominates the omega wolves. At the beginning of each iteration, GWO builds this social hierarchy by assigning the three positions with the best fitness values to \(\alpha\), \(\beta\), and \(\delta\), corresponding to the pack's alpha, beta, and delta wolves. All other positions correspond to the omega (\(\omega\)) wolves.

When a grey wolf pack hunts, all wolves gradually approach and encircle the prey. GWO assumes that \(\alpha\), \(\beta\), and \(\delta\) have the best knowledge of the optimal position and lets every \(\omega\) follow them towards the optimal solution. The procedure has three steps: (1) calculating the distance between each \(\omega\) and \(\alpha\), \(\beta\), and \(\delta\); (2) defining the direction and stride lengths of \(\omega\) towards \(\alpha\), \(\beta\), and \(\delta\); and (3) updating each \(\omega\)’s position. Equation (2) shows the calculation of the distances between \(\omega\) and \(\alpha\), \(\beta\), and \(\delta\).

$$D_{\alpha}=\left|C_{\alpha}\cdot X_{\alpha}-X_{\omega}\right|,\quad D_{\beta}=\left|C_{\beta}\cdot X_{\beta}-X_{\omega}\right|,\quad D_{\delta}=\left|C_{\delta}\cdot X_{\delta}-X_{\omega}\right|$$(2)

where \({D}_{\alpha }\), \({D}_{\beta }\), and \({D}_{\delta }\) represent the distances between \(\omega\) and \(\alpha\), \(\beta\), and \(\delta\), respectively; \({X}_{\alpha }\), \({X}_{\beta }\), \({X}_{\delta }\), and \({X}_{\omega }\) represent the positions of \(\alpha\), \(\beta\), \(\delta\), and \(\omega\), respectively; and \({C}_{\alpha }\), \({C}_{\beta }\), and \({C}_{\delta }\) are coefficient vectors calculated by Eq. (3).

$$C_{k}=2r_{1},\quad k\in\{\alpha,\beta,\delta\}$$(3)

where \({r}_{1}\) is a random vector with components in \(\left[0, 1\right]\), drawn independently for each of \({C}_{\alpha }\), \({C}_{\beta }\), and \({C}_{\delta }\). After calculating the distance between each \(\omega\) and \(\alpha\), \(\beta\), and \(\delta\), the direction and stride lengths of \(\omega\) towards \(\alpha\), \(\beta\), and \(\delta\) are calculated by Eq. (4).

$$L_{k}=A_{k}\cdot D_{k},\quad k\in\{\alpha,\beta,\delta\}$$(4)

where \({L}_{\alpha }\), \({L}_{\beta }\), and \({L}_{\delta }\) represent the direction and stride lengths of \(\omega\) towards \(\alpha\), \(\beta\), and \(\delta\), respectively, and \({A}_{\alpha }\), \({A}_{\beta }\), and \({A}_{\delta }\) are coefficient vectors calculated by Eq. (5).

$$A_{k}=2a\cdot r_{2}-a,\quad k\in\{\alpha,\beta,\delta\}$$(5)

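where \(a\) is the convergence factor, which decreases linearly from 2 to 0 over the iterations (e.g., \(a=2-2t/T\) at iteration \(t\) of \(T\)), and \({r}_{2}\) is a random vector with components in \([0, 1]\). The updated position of \(\omega\) is finally defined by Eq. (6).

$$X_{\omega}(t+1)=\frac{\left(X_{\alpha}-L_{\alpha}\right)+\left(X_{\beta}-L_{\beta}\right)+\left(X_{\delta}-L_{\delta}\right)}{3}$$(6)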
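Equations (2) to (6) translate directly into a short optimization loop. The NumPy sketch below is an illustrative continuous GWO, not the authors' MATLAB implementation; the function names, population size, iteration count, search bounds, and the sphere test function are all assumptions. As in common GWO implementations, every wolf (not only the \(\omega\) wolves) is moved towards the current leaders, and the best position found so far is kept.

```python
import numpy as np


def gwo_minimize(fitness, dim, n_wolves=20, max_iter=100, lb=-10.0, ub=10.0, seed=0):
    """Minimal continuous Grey Wolf Optimizer following Eqs. (2)-(6)."""
    rng = np.random.default_rng(seed)
    X = rng.uniform(lb, ub, size=(n_wolves, dim))  # initial wolf positions
    best_pos, best_score = None, np.inf
    for t in range(max_iter):
        scores = np.array([fitness(x) for x in X])
        order = np.argsort(scores)
        if scores[order[0]] < best_score:
            best_score, best_pos = scores[order[0]], X[order[0]].copy()
        leaders = X[order[:3]].copy()              # alpha, beta, delta
        a = 2.0 - 2.0 * t / max_iter                # convergence factor: 2 -> 0
        new_X = np.zeros_like(X)
        for X_k in leaders:
            C = 2.0 * rng.random(X.shape)          # Eq. (3)
            A = 2.0 * a * rng.random(X.shape) - a  # Eq. (5)
            D = np.abs(C * X_k - X)                # Eq. (2)
            L = A * D                              # Eq. (4)
            new_X += X_k - L                       # one term of Eq. (6)
        X = np.clip(new_X / 3.0, lb, ub)           # Eq. (6), kept inside the bounds
    return best_pos, best_score


def sphere(x):
    return float(np.sum(x ** 2))  # minimum 0 at the origin


best_pos, best_score = gwo_minimize(sphere, dim=5)
print(best_score)
```

Large \(|A|\) early on (when \(a\) is close to 2) lets wolves overshoot the leaders and explore; as \(a\) shrinks, \(|A|<1\) pulls the pack towards the leaders and the search exploits the best region found.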

3.3 Support vector machine

A Support Vector Machine (SVM) [36] is a supervised classification model. SVMs treat data points as \(p\)-dimensional vectors and try to separate them using a \((p-1)\)-dimensional hyperplane. The most important property of SVM is that it finds the hyperplane with the largest margin between the samples of the two classes.

Assume a linearly separable binary dataset with \(N\) samples, \(T=\{\left({{\varvec{x}}}_{1},{y}_{1}\right), \left({{\varvec{x}}}_{2}, {y}_{2}\right), \cdots , ({{\varvec{x}}}_{N},{y}_{N})\}\), where \({{\varvec{x}}}_{i}\) is a vector of the data features of the \(i\)th sample and \({y}_{i}\in\{-1,+1\}\) is its label. With an appropriate choice of the weight vector \(w\) and bias \(b\), a hyperplane \(w\cdot {\varvec{x}}+b=0\) divides the dataset into the two classes. The distance \({d}_{i}\) between the hyperplane and the \(i\)th sample is calculated by Eq. (7).

$$d_{i}=\frac{\left|w\cdot {\varvec{x}}_{i}+b\right|}{\left\|w\right\|}$$(7)

In this case, the objective of SVM is to find the \(w\) and \(b\) that maximize the smallest distance \({d}_{i}\) (the margin), i.e., the hyperplane that is as far as possible from the nearest samples of both classes.
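A small worked example of Eq. (7) and of the SVM objective: for a hypothetical four-point dataset, the sketch below computes the margin (the smallest distance of any sample to the hyperplane, signed so that it is positive only when every sample is on its correct side) for two hyperplanes that both separate the classes. The points and hyperplanes are made up for illustration.

```python
import numpy as np


def distances(w: np.ndarray, b: float, X: np.ndarray) -> np.ndarray:
    """Eq. (7): distance of every sample x_i to the hyperplane w . x + b = 0."""
    return np.abs(X @ w + b) / np.linalg.norm(w)


def margin(w: np.ndarray, b: float, X: np.ndarray, y: np.ndarray) -> float:
    """Smallest signed distance; positive only if every sample lies on its own class's side."""
    return float(np.min(y * (X @ w + b)) / np.linalg.norm(w))


X = np.array([[0.0, 0.0], [0.0, 1.0], [2.0, 0.0], [2.0, 1.0]])
y = np.array([-1, -1, 1, 1])

# Candidate 1: the vertical line x1 = 1, halfway between the classes.
w1, b1 = np.array([1.0, 0.0]), -1.0
# Candidate 2: a tilted line that also separates the classes.
w2, b2 = np.array([1.0, 0.5]), -1.25

print(distances(w1, b1, X))                        # every sample is 1.0 away
print(margin(w1, b1, X, y), margin(w2, b2, X, y))  # 1.0 vs about 0.67
```

An SVM would prefer the first hyperplane because its margin is larger; SVM training searches over all \(w\) and \(b\) for the hyperplane with the largest such margin.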

4 Dataset characteristics

The dataset used in this research was presented in [37]. The dataset consisted of 1937 unique patient records gathered using ECG Device 'EDAN SERIES-3' deployed in Cardiac Care and Isolation Units across Pakistan. The acquired ECG imagery data were analyzed manually by medical academics utilizing the Telehealth ECG diagnosis system, under the supervision of senior medical practitioners with expertise in ECG interpretation [37]. A sample of dataset images is presented in Fig. 3.

A subset of the dataset was selected in this research. The focus of this research is to classify whether the patient has been infected with COVID-19 or not. Two classes were selected from this dataset, which included COVID-19 and Normal ECG images. The number of COVID-19 ECG images was 250 and the number of Normal ECG images was 859. The total number of selected ECG images from the original dataset was 1109 images.

5 The proposed model method and phases

The proposed model consists of three phases, as presented in Fig. 4. The first phase is feature extraction using Resnet50. The second phase is feature selection based on the Grey Wolf Optimization Algorithm (GWOA). The third phase is training and testing based on a Support Vector Machine (SVM).

Data input

The proposed method begins by feeding the ECG dataset into the model. The dataset consists of 1109 images in two classes (Normal and COVID-19).

Feature extraction phase

This phase uses the pre-trained convolutional neural network (CNN) ResNet50, a powerful deep learning architecture that is particularly adept at extracting features from complex data, especially images. Here, the pre-trained ResNet50 acts as a feature extractor and produces 2048 features for every image. The output of this phase is the set of extracted features.

Feature selection phase

The extracted features represent the most significant characteristics that ResNet50 identifies in the data. However, depending on the complexity of the data, the number of extracted features can be large, and some of them may be redundant or irrelevant to the specific classification task. The feature selection algorithm (GWOA) therefore selects a subset of 142 of the 2048 features, and the same subset is used for every image. We modified the GWOA algorithm to use the K-Nearest Neighbour (KNN) algorithm as its fitness function: candidate feature subsets are evaluated by the accuracy that KNN achieves with them, and features are eliminated one by one, so that the GWOA favours feature combinations that lead to higher KNN classification accuracy. The output of this phase is a feature matrix of 1109 images × 142 features.
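The wrapper idea described above, GWO searching over feature subsets with a KNN-based fitness, can be sketched as follows. This is a hypothetical NumPy illustration, not the authors' modified MATLAB GWOA. The following are all assumptions of the sketch: turning wolf positions into feature masks by thresholding at 0.5, the fitness weighting (0.99 × KNN error + 0.01 × fraction of selected features, a common choice in wrapper feature selection), k = 5, the hold-out validation split, and the synthetic data standing in for the 2048 ResNet50 features.

```python
import numpy as np


def knn_error(X_tr, y_tr, X_va, y_va, k=5):
    """Validation error of a k-NN classifier (0/1 labels, Euclidean distance, odd k)."""
    d = np.linalg.norm(X_va[:, None, :] - X_tr[None, :, :], axis=2)
    nearest = np.argsort(d, axis=1)[:, :k]
    pred = (y_tr[nearest].mean(axis=1) > 0.5).astype(int)  # majority vote
    return float(np.mean(pred != y_va))


def subset_fitness(mask, data, weight=0.99):
    """Lower is better: mostly k-NN error, plus a small penalty on subset size."""
    if not mask.any():
        return 1.0  # an empty subset cannot classify anything
    X_tr, y_tr, X_va, y_va = data
    err = knn_error(X_tr[:, mask], y_tr, X_va[:, mask], y_va)
    return weight * err + (1 - weight) * mask.sum() / mask.size


def gwo_select(data, n_features, n_wolves=10, max_iter=30, seed=0):
    """GWO over continuous positions in [0, 1]; a feature is selected when its position > 0.5."""
    rng = np.random.default_rng(seed)
    X = rng.random((n_wolves, n_features))
    best_mask, best_fit = None, np.inf
    for t in range(max_iter):
        masks = X > 0.5
        fits = np.array([subset_fitness(m, data) for m in masks])
        order = np.argsort(fits)
        if fits[order[0]] < best_fit:
            best_fit, best_mask = fits[order[0]], masks[order[0]].copy()
        leaders = X[order[:3]].copy()               # alpha, beta, delta
        a = 2.0 - 2.0 * t / max_iter
        new_X = np.zeros_like(X)
        for X_k in leaders:
            C = 2.0 * rng.random(X.shape)
            A = 2.0 * a * rng.random(X.shape) - a
            new_X += X_k - A * np.abs(C * X_k - X)  # Eqs. (2)-(6)
        X = np.clip(new_X / 3.0, 0.0, 1.0)
    return best_mask, best_fit


# Synthetic stand-in for deep features: only features 0-2 depend on the class label.
rng = np.random.default_rng(1)
y = rng.integers(0, 2, 300)
feats = rng.normal(size=(300, 20))
feats[:, :3] += 4.0 * y[:, None]
data = (feats[:240], y[:240], feats[240:], y[240:])

best_mask, best_fit = gwo_select(data, n_features=20)
print("selected features:", np.flatnonzero(best_mask), "fitness:", round(best_fit, 3))
```

In the paper's setting, the same kind of search would run on the 2048-dimensional ResNet50 features, and the resulting mask (142 features in the reported experiment) would be applied to both the training and the testing features before the SVM is trained.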

Training and testing phase

The dataset is then divided into two distinct sets: a training set and a testing set. The training set comprises the larger portion of the data (around 80%) and is used to train the model and identify patterns in the data. The remaining data (around 20%) form the testing set, which is used to evaluate the model's performance on unseen data. Training and testing are based on an SVM classifier. The performance metrics are calculated at the end of this phase to measure the performance of the proposed model and are presented in the experimental results section.
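A minimal sketch of an 80/20 split using the class counts from Section 4 (250 COVID-19 and 859 Normal images). Stratifying the split by class, so that both sets keep the original class ratio, is an assumption of this sketch, since the paper only states the 80/20 proportion; the helper `stratified_split` is hypothetical.

```python
import numpy as np


def stratified_split(labels: np.ndarray, train_frac: float = 0.8, seed: int = 0):
    """Shuffle each class separately and put train_frac of it into the training set."""
    rng = np.random.default_rng(seed)
    train_idx, test_idx = [], []
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        n_train = int(round(train_frac * idx.size))
        train_idx.extend(idx[:n_train])
        test_idx.extend(idx[n_train:])
    return np.array(train_idx), np.array(test_idx)


labels = np.array([1] * 250 + [0] * 859)  # 1 = COVID-19, 0 = Normal (counts from Section 4)
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))  # 887 222
```

The resulting index arrays would then be used to slice the selected-feature matrix (1109 × 142) into the training and testing parts on which the SVM is fitted and evaluated.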

Resnet50 was selected as the feature extractor based on the results of the experimental trials, in which it was compared with Alexnet and achieved the higher testing accuracy. Likewise, GWOA was compared with Whale Optimization (WO) and achieved the higher testing accuracy when the proposed model used it as the feature selector. Following the same design philosophy, the SVM was selected as the main classifier of the proposed model because it achieved the highest testing accuracy compared with the other classifiers.

6 Experimental results

All experiments were performed on a computer server equipped with 32 GB of RAM, an Nvidia Tesla T4 GPU (16 GB), and a Core i5 processor (3.75 GHz). The experiments were implemented in MATLAB 2021a with GPU acceleration. During the experiments, the following specifications were used:

- Two pretrained deep transfer learning models (Alexnet and Resnet50) were used both as feature extractors and as pretrained deep learning classification models.

- Two optimization algorithms were selected for feature selection (Grey Wolf Optimization Algorithm and Whale Optimization Algorithm).

- Two classifiers were tested (Support Vector Machine and fully connected Neural Networks).

- The dataset was divided into two parts [38]: 80% of the data for the training process and 20% for the testing process.

- Data Augmentation [39, 40] was applied to the training data.

- The deep transfer learning models were also used as end-to-end classification models, under the same experimental setup, for comparison with the proposed model.

- The training time and the number of features were recorded during the experiments.

- Testing accuracy, Precision, Recall, and F1 Score [43] were selected as performance metrics, given by Equations (8) to (11), along with the time consumed during training.

$$\text{Testing Accuracy}=\frac{\mathrm{TPos}+\mathrm{TNeg}}{\left(\mathrm{TPos}+\mathrm{FPos}\right)+\left(\mathrm{TNeg}+\mathrm{FNeg}\right)}$$(8)

$$\mathrm{Precision}=\frac{\mathrm{TPos}}{\mathrm{TPos}+\mathrm{FPos}}$$(9)

$$\mathrm{Recall}=\frac{\mathrm{TPos}}{\mathrm{TPos}+\mathrm{FNeg}}$$(10)

$$\text{F1 Score}=2\times\frac{\mathrm{Precision}\times\mathrm{Recall}}{\mathrm{Precision}+\mathrm{Recall}}$$(11)

where TPos is the number of true positive samples, TNeg the number of true negative samples, FPos the number of false positive samples, and FNeg the number of false negative samples in the confusion matrix.
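Equations (8) to (11) can be computed directly from the four confusion-matrix counts. The counts below are hypothetical, chosen only for illustration, and do not correspond to any result reported in this paper; treating COVID-19 as the positive class is also an assumption of the sketch.

```python
def classification_metrics(tpos: int, tneg: int, fpos: int, fneg: int):
    """Testing accuracy, Precision, Recall, and F1 Score, following Eqs. (8)-(11)."""
    accuracy = (tpos + tneg) / ((tpos + fpos) + (tneg + fneg))  # Eq. (8)
    precision = tpos / (tpos + fpos)                            # Eq. (9)
    recall = tpos / (tpos + fneg)                               # Eq. (10)
    f1 = 2 * precision * recall / (precision + recall)          # Eq. (11)
    return accuracy, precision, recall, f1


# Hypothetical counts for illustration only (COVID-19 treated as the positive class).
acc, prec, rec, f1 = classification_metrics(tpos=45, tneg=169, fpos=3, fneg=5)
print(f"accuracy={acc:.4f} precision={prec:.4f} recall={rec:.4f} f1={f1:.4f}")
```

With these counts, the F1 Score also equals 2·TPos / (2·TPos + FPos + FNeg) = 90/98, an equivalent way of writing Eq. (11).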

6.1 Alexnet as a feature extractor and as a pretrained deep learning model

The first experiment used Alexnet as a feature extractor, GWOA and WOA as feature selectors, and SVM as the classifier. The obtained results (testing accuracy, Precision, Recall, F1 Score, number of features, and training time) were measured and compared with those of Alexnet used as a DTL model. Testing accuracy, Precision, Recall, and F1 Score were calculated from the confusion matrices presented in Fig. 5 (a), Fig. 6 (a), and Fig. 7, while Fig. 5 (b) and Fig. 6 (b) illustrate the progress of the fitness function during feature selection with GWOA and WOA, respectively.

The confusion matrices show that Alexnet with GWOA and the SVM classifier achieved the highest testing accuracy, 98.2%, while Alexnet with WOA and the SVM classifier achieved 97.7%. Both confusion matrices, along with the confusion matrix of Alexnet as a DTL model, are used to calculate the performance metrics. The progress of the fitness function shows that GWOA reached its minimum value after the fourth iteration with 885 selected features, while WOA reached its minimum value after iteration 37 with 45 selected features.

The testing accuracy of Alexnet as a DTL model was 94.6%, which is lower than the testing accuracy achieved with either GWOA or WOA as the feature selector and SVM as the classifier. Table 2 presents the performance metrics calculated from these confusion matrices.

Table 2 shows that Alexnet with GWOA and the SVM classifier achieved the highest testing accuracy, Precision, Recall, and F1 Score, with values of 0.982, 0.9655, 0.9881, and 0.9767, respectively. Table 3 presents the training time and the number of features for every model. WOA had the minimum number of features (46) and the minimum training time, 20.88 s, while GWOA had 885 features and a training time of 108.368 s. Training time therefore increases with the number of features; however, more features do not imply better accuracy. Alexnet as a DTL model used the largest number of features but did not achieve the highest testing accuracy.

6.2 Resnet50 as a feature extractor and as a pretrained deep learning model

The second experiment used Resnet50 as a feature extractor, with GWOA or WOA as the feature selector and SVM as the classifier. The obtained results (testing accuracy, Precision, Recall, F1 Score, number of features, and training time) were measured and compared with those of Resnet50 used directly as a DTL model. As in the first experiment, testing accuracy, Precision, Recall, and F1 Score were calculated from the confusion matrices presented in Fig. 8 (a), Fig. 9 (a), and Fig. 10, while Fig. 8 (b) and Fig. 9 (b) show the progress of the fitness function during the feature selection process for GWOA and WOA, respectively.
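The fitness function whose progress is plotted in Fig. 8 (b) and Fig. 9 (b) is not defined in this section. A weighting commonly used in wrapper feature selection with GWO and WOA, assumed here rather than taken from the paper, combines the classification error with the fraction of selected features: `fitness = alpha * error + (1 - alpha) * selected / total`, with `alpha` close to 1. The sketch below implements that assumed form; `error_rate` is a hypothetical caller-supplied function, for instance the validation error of an SVM trained on the masked features.

```python
from typing import Callable, Sequence


def wrapper_fitness(mask: Sequence[int],
                    error_rate: Callable[[Sequence[int]], float],
                    alpha: float = 0.99) -> float:
    """Assumed wrapper fitness: alpha * error + (1 - alpha) * selected / total.

    Lower is better. `error_rate` is a hypothetical callable that trains and
    validates a classifier (e.g. an SVM) on the features where mask == 1.
    """
    n_selected = sum(mask)
    if n_selected == 0:
        return 1.0  # an empty subset cannot classify: give it the worst score
    selected_ratio = n_selected / len(mask)
    return alpha * error_rate(mask) + (1.0 - alpha) * selected_ratio
```

With equal classification error, the smaller subset receives the lower (better) fitness, so under this weighting the optimizer keeps removing features until doing so starts to cost accuracy.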

The confusion matrices show that Resnet50 with GWOA and the SVM classifier achieved the highest testing accuracy, 99.1%, while Resnet50 with WOA and the SVM classifier achieved 98.6%. Both confusion matrices, along with the confusion matrix of Resnet50 as a DTL model, are used to calculate the performance metrics. The progress of the fitness function shows that GWOA reached its minimum value after 68 iterations, with 142 selected features, whereas WOA reached its minimum value after iteration 32, with 51 selected features.

The testing accuracy of Resnet50 as a DTL model was 94.6%, which is lower than the testing accuracy achieved with either GWOA or WOA as the feature selector and SVM as the classifier. Table 4 presents the performance metrics calculated from these confusion matrices.

Table 4 shows that Resnet50 with GWOA and the SVM classifier achieved the highest testing accuracy, Precision, Recall, and F1 Score, with values of 0.991, 0.9904, 0.9942, and 0.9873, respectively. Table 5 presents the training time and the number of features for every model. WOA had the minimum number of features (51) and the minimum training time, 15.03 s, while GWOA had 142 features and a training time of 29.36 s. As in the first experiment, training time increases with the number of features, but more features do not imply better accuracy. Resnet50 as a DTL model used the largest number of features but did not achieve the highest testing accuracy.

6.3 Results discussion

The previous subsections show that GWOA achieved the highest testing accuracy and performance metrics whether Alexnet or Resnet50 was used as the feature extractor. The proposed models with GWOA as the feature selector and SVM as the classifier achieved a testing accuracy of 98.2% with Alexnet and 99.1% with Resnet50.

Regarding training time and the number of features, WOA achieved the lowest training time: 20.88 s with 46 features when using Alexnet, and 15.03 s with 51 features when using Resnet50. Although WOA with SVM had the shortest training time and the fewest selected features, it did not achieve the highest testing accuracy or performance metrics. Training time is a secondary concern here, because training happens once and does not affect the time of the classification process. Therefore, Resnet50 as the feature extractor, GWOA as the feature selector, and SVM as the classifier were selected as the main proposed model, since this combination achieved the highest testing accuracy and performance metrics compared with the Alexnet-based models and with Alexnet and Resnet50 used as DTL models.
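The argument that training time is a one-off cost can be illustrated with a linear SVM. The sketch below trains one with the Pegasos sub-gradient method, a swapped-in solver chosen because it fits in plain Python; the kernel, solver, and regularization used in the paper are not stated in this section, and `train_linear_svm`, `predict`, `lam`, and `epochs` are hypothetical names. Training loops over all samples for several epochs, whereas classifying a new ECG feature vector costs one dot product over the selected features. (For a kernel SVM, prediction cost instead grows with the number of support vectors.)

```python
import random
from typing import List, Sequence


def train_linear_svm(X: Sequence[Sequence[float]], y: Sequence[int],
                     lam: float = 0.01, epochs: int = 20, seed: int = 0) -> List[float]:
    """Pegasos sub-gradient training of a linear SVM; labels must be +1 or -1.

    The bias is regularised together with the weights, a common simplification.
    """
    rng = random.Random(seed)
    w = [0.0] * (len(X[0]) + 1)  # last weight acts on a constant 1.0 feature (bias)
    t = 0
    for _ in range(epochs):
        for i in rng.sample(range(len(X)), len(X)):  # one shuffled pass per epoch
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size
            x = list(X[i]) + [1.0]
            margin = y[i] * sum(wj * xj for wj, xj in zip(w, x))
            w = [(1.0 - eta * lam) * wj for wj in w]  # shrink: gradient of the L2 term
            if margin < 1.0:  # hinge loss active: move towards the sample
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, x)]
    return w


def predict(w: Sequence[float], x: Sequence[float]) -> int:
    # Classification cost: one dot product over the selected features.
    score = sum(wj * xj for wj, xj in zip(w, list(x) + [1.0]))
    return 1 if score >= 0.0 else -1
```

In this setting `X` would hold the GWO-selected feature vectors, with COVID-19 encoded as +1 and Normal as -1 (an assumed encoding); fewer selected features shorten both the per-step training cost and the prediction dot product.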

The next step is to compare the proposed model with related works that used the same dataset. Table 6 presents this comparison.

Table 6 shows that the proposed model achieved the highest testing accuracy among the compared works, 99.1%, as well as the highest Recall, 99.42%. The model presented in [21] achieved higher Precision and F1 Score than the proposed model, by 0.76% and 0.07%, respectively. However, testing accuracy is considered one of the most important metrics for judging a model, and the proposed model outperforms all related works in testing accuracy, with the shortest training time and the fewest features compared with the related works that used deep learning models.

7 Conclusion

Since the first cases were identified in 2019, coronavirus disease 2019 (COVID-19) has spread rapidly throughout the world, causing widespread illness in many countries and regions; it was declared a public health emergency of international concern in January 2020 and a pandemic later that year. There was a need to fight this pandemic by every available means, and Computer Aided Diagnosis (CAD) systems can contribute by helping doctors identify the virus quickly. In this paper, a COVID-19 electrocardiography classification model based on grey wolf optimization and support vector machine was presented. The proposed model used a publicly available dataset, from which two classes of ECG images (COVID-19 and Normal) were selected. The model consisted of three phases: the first was feature extraction based on Resnet50, the second was feature selection based on grey wolf optimization, and the third was classification based on the support vector machine. Experimental trials showed that the proposed model achieved the highest testing accuracy, 99.1%, compared with models that used Alexnet as a feature extractor and/or the whale optimization algorithm as a feature selector. It also surpassed Alexnet and Resnet50 when these networks were used directly as classifiers. The performance metrics (Precision, Recall, and F1 Score) supported the research findings, and the proposed model outperformed the related works that used the same dataset in terms of overall testing accuracy.

Data availability

The data that support the findings of this study are publicly available online [37].

References

Wang S-H, Nayak DR, Guttery DS, Zhang X, Zhang Y-D (2021) COVID-19 classification by CCSHNet with deep fusion using transfer learning and discriminant correlation analysis. Inf Fusion 68:131–148

Wang W, Zhang X, Wang S-H, Zhang Y-D (2022) Covid-19 diagnosis by WE-SAJ. Syst Sci Control Eng 10(1):325–335

Ahmed K, Abdelghafar S, Salama A, Khalifa NEM, Darwish A, Hassanien AE (2021) Tracking of COVID-19 geographical infections on real-time tweets. Digital Transformation and Emerging Technologies for Fighting COVID-19 Pandemic: Innovative Approaches, pp 297–310

Abd El-Aziz AA, Khalifa NEM, Darwish A, Hassanien AE (2021) The role of emerging technologies for combating COVID-19 pandemic. Digital Transformation and Emerging Technologies for Fighting COVID-19 Pandemic: Innovative Approaches, pp 21–41

Abd El-Aziz AA, Khalifa NEM, Hassanien AE (2021) Exploring the impacts of COVID-19 on oil and electricity industry. The Global Environmental Effects During and Beyond COVID-19: Intelligent Computing Solutions, pp 149–161

Khalifa NE, Manogaran G, Taha MHN, Loey M (2021) The classification of possible Coronavirus treatments on a single human cell using deep learning and machine learning approaches. J Theor Appl Inf Technol 99:21

Khalifa NE, Loey M, Chakrabortty RK, Taha MHN (2022) Within the protection of COVID-19 spreading: a face mask detection model based on the neutrosophic RGB with deep transfer learning. Neutrosophic Sets Syst 50(1):18

van Kasteren PB et al (2020) Comparison of seven commercial RT-PCR diagnostic kits for COVID-19. J Clin Virol 128:104412

Huang C, Wang W, Zhang X, Wang SH, Zhang YD (2022) Tuberculosis diagnosis using deep transferred EfficientNet. IEEE/ACM Trans Comput Biol Bioinform 20(5):2639–2646

Zhou Q, Zhang X, Zhang Y-D (2021) Ensemble learning with attention-based multiple instance pooling for classification of SPT. IEEE Trans Circuits Syst II Express Briefs 69(3):1927–1931

Khalifa NEM, Taha MHN, Chakrabortty RK, Loey M (2022) Covid-19 chest X-rays classification through the fusion of deep transfer learning and machine learning methods. In: Proceedings of 7th International Conference on Harmony Search, Soft Computing and Applications: ICHSA 2022. Singapore: Springer Nature Singapore, pp 1–11

Khalifa NEM, Smarandache F, Manogaran G, Loey M (2021) A study of the neutrosophic set significance on deep transfer learning models: an experimental case on a limited covid-19 chest x-ray dataset. Cogn Comput 1–10

Khalifa NEM, Manogaran G, Taha MHN, Loey M (2022) A deep learning semantic segmentation architecture for COVID-19 lesions discovery in limited chest CT datasets. Expert Syst 39(6):e12742

Khalifa NEM, Taha MHN, Hassanien AE, Taha SHN (2020) The detection of covid-19 in ct medical images: A deep learning approach. Big Data Analytics and Artificial Intelligence against COVID-19: Innovation Vision and Approach, pp 73–90

Zhou Q, Wang S, Zhang X, Zhang YD (2022) WVALE: weak variational autoencoder for localisation and enhancement of COVID-19 lung infections. Comput Methods Prog Biomed 221:106883

Yu X, Wang SH, Zhang X, Zhang YD (2020) Detection of COVID-19 by GoogLeNet-COD. In: Intelligent Computing Theories and Application: 16th International Conference, ICIC 2020, Bari, Italy, October 2–5, 2020, Proceedings, Part I 16. Springer International Publishing, pp 499–509

Rastegar H, Giveki D, Choubin M (2024) EEG signals classification using a new radial basis function neural network and jellyfish meta-heuristic algorithm. Evol Intell 17(2):1197–1208

Ozdemir MA, Ozdemir GD, Guren O (2021) Classification of COVID-19 electrocardiograms by using hexaxial feature mapping and deep learning. BMC Med Inform Decis Mak 21(1):1–20

Nainwal A, Malik GK, Jangra A (2021) Convolution Neural Network Based COVID-19 Screening Model. Adv Syst Sci Appl 21(3):31–39

Irmak E (2022) COVID-19 disease diagnosis from paper-based ECG trace image data using a novel convolutional neural network model. Phys Eng Sci Med 45(1):167–179

Attallah O (2022) ECG-BiCoNet: An ECG-based pipeline for COVID-19 diagnosis using Bi-Layers of deep features integration. Comput Biol Med 142:105210

Bassiouni MM, Hegazy I, Rizk N, El-Dahshan E-SA, Salem AM (2022) Automated Detection of COVID-19 Using Deep Learning Approaches with Paper-Based ECG Reports. Circuits Syst Signal Process 41(10):5535–5577. https://doi.org/10.1007/s00034-022-02035-1

Attallah O (2022) An Intelligent ECG-Based Tool for Diagnosing COVID-19 via Ensemble Deep Learning Techniques. Biosensors 12(5):299. https://doi.org/10.3390/bios12050299

Irungu J, Oladunni T, Grizzle AC, Denis M, Savadkoohi M, Ososanya E (2023) ML-ECG-COVID: A Machine Learning-Electrocardiogram Signal Processing Technique for COVID-19 Predictive Modeling. IEEE Access 11:135994–136014. https://doi.org/10.1109/ACCESS.2023.3335384

Gomes JC, de Santana MA, Masood AI, de Lima CL, dos Santos WP (2023) COVID-19’s influence on cardiac function: a machine learning perspective on ECG analysis. Med Biol Eng Comput 61(5):1057–1081. https://doi.org/10.1007/s11517-023-02773-7

Rahman T et al (2022) COV-ECGNET: COVID-19 detection using ECG trace images with deep convolutional neural network. Health Inf Sci Syst 10(1):1–16

Giveki D, Zaheri A, Allahyari N (2024) Designing CNNs with optimal architectures using antlion optimization for plant leaf recognition. Multimed Tools Appl 1–30

Khalifa NEM, Taha MHN, Ali DE, Slowik A, Hassanien AE (2020) Artificial Intelligence Technique for Gene Expression by Tumor RNA-Seq Data: A Novel Optimized Deep Learning Approach. IEEE Access 8:22874–22883

O'Shea K, Nash R (2015) An introduction to convolutional neural networks. arXiv preprint arXiv:1511.08458

Dwarampudi M, Reddy NV (2019) Effects of padding on LSTMs and CNNs. arXiv preprint arXiv:1903.07288

Nwankpa C, Ijomah W, Gachagan A, Marshall S (2018) Activation functions: Comparison of trends in practice and research for deep learning. arXiv preprint arXiv:1811.03378

Bailer C, Habtegebrial T, Stricker D (2018) Fast feature extraction with CNNs with pooling layers. arXiv preprint arXiv:1805.03096

Yuan ZW, Zhang J (2016) Feature extraction and image retrieval based on AlexNet. In: Eighth International Conference on Digital Image Processing (ICDIP 2016). SPIE 10033:65–69

Zhou T, Huo B, Lu H, Ren H (2020) Research on residual neural network and its application on medical image processing. Acta Electronica Sin 48(7):1436

Mirjalili S, Mirjalili SM, Lewis A (2014) Grey wolf optimizer. Adv Eng Softw 69:46–61

Noble WS (2006) What is a support vector machine? Nat Biotechnol 24(12):1565–1567

Khan AH, Hussain M, Malik MK (2021) ECG Images dataset of Cardiac and COVID-19 Patients. Data Brief 34:106762

Jena PR, Majhi R, Kalli R, Managi S, Majhi B (2021) Impact of COVID-19 on GDP of major economies: Application of the artificial neural network forecaster. Econ Anal Policy 69:324–339. https://doi.org/10.1016/j.eap.2020.12.013

Perez L, Wang J (2017) The effectiveness of data augmentation in image classification using deep learning. arXiv preprint arXiv:1712.04621

Khalifa NE, Loey M, Mirjalili S (2022) A comprehensive survey of recent trends in deep learning for digital images augmentation. Artif Intell Rev 55(3):2351–2377

Prechelt L (2002) Early stopping-but when? In: Neural Networks: Tricks of the trade. Berlin, Heidelberg: Springer Berlin Heidelberg, pp 55–69

Li M, Zhang T, Chen Y, Smola AJ (2014) Efficient mini-batch training for stochastic optimization. In: Proceedings of the 20th ACM SIGKDD international conference on Knowledge discovery and data mining, pp 661–670

Goutte C, Gaussier E (2005) A probabilistic interpretation of precision, recall and F-score, with implication for evaluation. In: European conference on information retrieval. Berlin, Heidelberg: Springer Berlin Heidelberg, pp 345–359

Acknowledgements

The authors of this study would like to express their gratitude to the Google Cloud Platform (GCP), whose grant of processing-power credits supported the execution of the experiments carried out in this study.

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB). This research received no external funding.

Author information


Contributions

All authors contributed to the study's conception and design. The first draft of the manuscript was written by Nour Eldeen Khalifa and Wei Wang; Ahmed A. Mawgoud and Yu-Dong Zhang revised the first version. All authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.


Ethics declarations

Ethical approval

This material is the authors' original work, which has not been previously published elsewhere. The paper is not currently being considered for publication elsewhere.

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Khalifa, N.E., Wang, W., Mawgoud, A.A. et al. COECG-resnet-GWO-SVM: an optimized COVID-19 electrocardiography classification model based on resnet50, grey wolf optimization and support vector machine. Multimed Tools Appl (2024). https://doi.org/10.1007/s11042-024-19733-4


DOI: https://doi.org/10.1007/s11042-024-19733-4