Abstract

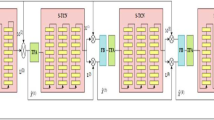

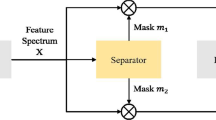

Recent speech enhancement (SE) methods incorporate attention mechanisms to learn long-term dependencies in speech signals. Deep convolutional networks (DCNs) equipped with self-attention (SA) and transformers have shown promising results in SE. While self-attention networks excel at extracting significant long-sequence contextual information, they may not concentrate effectively on subtle aspects of speech signals, such as temporal or spectral continuity, spectral structure, and timbre. To tackle this problem, we propose a novel speech enhancement model based on adaptive attention. The proposed model places local and global attention modules between a convolutional encoder and a convolutional decoder. The local attention module (LAM) integrates channel and spatial attention, which makes the model pay more attention to local details in the speech block, specifically the frame-level features. Utterance-level features are explored through a self-attention mechanism in the global attention module (GAM). Unlike existing transformers, the feed-forward network of the GAM is improved by introducing a 1D-Conv layer and a bi-directional long short-term memory (Bi-LSTM) layer for extracting global features, so that the network can model long-sequence context more effectively. Moreover, a CNN module is added to the GAM so that short-term noise can be reduced more effectively, exploiting the ability of CNNs to extract local information. The proposed model thus stands apart from current speech enhancement techniques that rely solely on self-attention networks: it models the speech signal with two different attention networks simultaneously, considering both local detail and global contextual information, and thereby extracts useful information from the speech signal more effectively.
The effectiveness of the proposed model is assessed using both objective measures (PESQ and STOI) and subjective tests (signal distortion (CSIG), background distortion (CBAK), and overall quality (COVL)) on two distinct datasets: the Voice Bank-Demand dataset and the LibriSpeech dataset. The experimental findings demonstrate that our model outperforms the competing baselines on both datasets.
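
The abstract does not give layer-level details, so the following is only a minimal NumPy sketch of the two kinds of attention it names, under stated assumptions. The local part is written in the style of a generic channel-then-spatial attention block, with the spatial gate simplified to a weighted mix of two pooled maps instead of a convolution. The global part is plain single-head scaled dot-product self-attention over frames. The 1D-Conv, Bi-LSTM, and CNN components of the GAM are omitted. All function names, tensor shapes, and the random weights are hypothetical and are not taken from the paper.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def channel_attention(x, w1, w2):
    """Gate each channel of x (C, T, F) using pooled channel descriptors.

    Assumption: a shared two-layer MLP (w1, w2) is applied to the
    average-pooled and max-pooled descriptors, then summed.
    """
    avg = x.mean(axis=(1, 2))                      # (C,)
    mx = x.max(axis=(1, 2))                        # (C,)
    mlp = lambda v: w2 @ np.maximum(w1 @ v, 0.0)   # ReLU bottleneck
    gate = sigmoid(mlp(avg) + mlp(mx))             # (C,), values in (0, 1)
    return x * gate[:, None, None]


def spatial_attention(x, w_avg, w_max):
    """Gate each time-frequency position of x (C, T, F).

    Assumption: two scalar weights mix the channel-pooled maps; a real
    block would typically use a small 2D convolution here.
    """
    avg = x.mean(axis=0)                           # (T, F)
    mx = x.max(axis=0)                             # (T, F)
    gate = sigmoid(w_avg * avg + w_max * mx)       # (T, F)
    return x * gate[None, :, :]


def self_attention(seq, wq, wk, wv):
    """Single-head scaled dot-product self-attention over frames (T, D)."""
    q, k, v = seq @ wq, seq @ wk, seq @ wv
    scores = q @ k.T / np.sqrt(k.shape[-1])        # (T, T)
    weights = softmax(scores, axis=-1)             # each row sums to 1
    return weights @ v, weights


def demo(seed=0):
    rng = np.random.default_rng(seed)
    C, T, F = 8, 20, 16                            # hypothetical sizes
    x = rng.standard_normal((C, T, F))             # stand-in encoder output

    # Local attention: channel gate followed by spatial gate.
    w1 = rng.standard_normal((C // 2, C)) * 0.1
    w2 = rng.standard_normal((C, C // 2)) * 0.1
    local = spatial_attention(channel_attention(x, w1, w2), 0.5, 0.5)

    # Global attention: treat each frame as one vector of size C * F.
    D = C * F
    seq = local.transpose(1, 0, 2).reshape(T, D)
    wq, wk, wv = (rng.standard_normal((D, D)) / np.sqrt(D) for _ in range(3))
    out, weights = self_attention(seq, wq, wk, wv)
    return x, local, out, weights


if __name__ == "__main__":
    x, local, out, weights = demo()
    print(local.shape, out.shape, weights.shape)
```

In this sketch the local gates only rescale individual channels and time-frequency positions, while every output frame of the self-attention step is a weighted combination of all frames in the utterance, which is the local versus global distinction the abstract draws.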

Funding

No Funding.

Ethics declarations

Conflict of Interest

The authors declared that they have no conflict of interest in this work.

About this article

Cite this article

Parisae, V., Bhavanam, S.N. Adaptive attention mechanism for single channel speech enhancement. Multimed Tools Appl (2024). https://doi.org/10.1007/s11042-024-19076-0

DOI: https://doi.org/10.1007/s11042-024-19076-0